3 Monte Carlo Simulation and other random thoughts

Many seasoned estimating practitioners will tell you that a Range Estimate is always better than a single-point deterministic estimate. (We have a better chance of being right, or less chance of being wrong, if we are one of those ‘glass is half-empty’ people!)

If we create a Range Estimate (or 3-Point Estimate) using Monte Carlo Simulation we are in effect estimating with random numbers (… and I’ve lost count of the number of Project Managers who have said, ‘Isn’t that how you estimators usually do it?’).

A word (or two) from the wise?

'The generation of random numbers is too important to be left to chance.'

Robert R. Coveyou

American Research

Mathematician

Oak Ridge National Laboratory

1915-1996

There is, of course, a theory and structure to support the use of Monte Carlo Simulation; after all, as Robert Coveyou is reported to have commented (Peterson, 1997), we wouldn’t want its output to be a completely chance encounter, would we?

3.1 Monte Carlo Simulation: Who, what, why, where, when and how

3.1.1 Origins of Monte Carlo Simulation: Myth and mirth

Based purely on its name, it is often assumed that the technique was invented by, or for use in, the gambling industry in Monte Carlo in order to minimise the odds of the ‘house’ losing, except on an occasional chance basis … after all someone’s good luck is their good public relations publicity. However, there is a link, albeit a somewhat more tenuous one, between Monte Carlo Simulation and gambling.

It was ‘invented’ as a viable numerical technique as part of the Manhattan Project which was an international research programme tasked with the development of nuclear weapons by the USA, UK and Canada (Metropolis & Ulam, 1949). (So why, wasn’t it called ‘Manhattan Simulation’ or the ‘Big Bang Theory Simulation’? You may well ask ... but it just wasn’t.) The phrase ‘Monte Carlo Simulation’ was coined as a codename by Nicholas Metropolis in recognition that the uncle of its inventor, colleague Stanislaw Ulam, used to frequent the casinos in Monaco hoping to chance his luck. (The name ‘Metropolis’ always conjures up images of Superman for me, but maybe that’s just the comic in me wanting to come out? In this context we could regard Stanislaw Ulam as a super hero.)

The Manhattan Project needed a repeatable mathematical model to solve complex differential equations that could not be solved by conventional deterministic mathematical techniques. Monte Carlo Simulation gave them a viable probabilistic technique. The rest is probably history.

The fundamental principle behind Monte Carlo Simulation is that if we can describe each input variable to a system or scenario by a probability distribution (i.e. the probability that the variable takes a particular value in a specified range) then we can model the likely outcome of several independent or dependent variables acting together.

3.1.2 Relevance to estimators and planners

Let me count the ways 1, 2, 3, 4 .... Err, 12, 13, 14, 15… dah! I’ve lost count, but I think I got up to ‘umpteen’.

It has been used extensively in the preparation of this book to demonstrate likely outcomes of certain worked examples and empirical results.

We can use it to model:

- The range of possible outcomes, and their probabilities, of particular events or combination of events where the mathematics could be computed by someone that way inclined, … and not all estimators have or indeed need that skill or knowledge

- The range of possible outcomes, and their probabilities, of particular events or combination of events, where the mathematics are either nigh on impossible to compute, or are just totally impractical from a time perspective, even for an estimator endowed with those advanced mathematical skills. (Why swim the English Channel when you can catch a ferry or a train? It may not feel as rewarding but it’s a lot quicker and probably less risky too.) The original use in the Manhattan Project is the prime example of this

- We can use it to model assumptions to test out how likely or realistic certain technical or programmatic assumptions might be, or how sensitive their impact may be for cost or schedule, for example

- … and, of course, to model risk, opportunity and uncertainty in cost and/or schedule to generate 3-Point Estimates of Cost Outturn and Forecast Completion Dates, which definitely falls into the second group above

Over the next few sub-sections, we’ll take a look at a few examples to demonstrate its potential use before we delve deeper into cost and schedule variability in the rest of the chapter.

Note: Whilst we can build and run a Monte Carlo Model in Microsoft Excel, it is often only suitable for relatively simple models with a limited number of independent input variables. Even then, we will find that with 10,000 iterations, such models are memory-hungry, creating huge files and slower refresh times. There are a number of dedicated software applications that will do all the hard work for us; some of these are directly compatible and interactive or even integrated with Microsoft Excel; some are not.

3.1.3 Key principle: Input variables with an uncertain future

All variables inherently have uncertain future values, otherwise we would call them Constants. We can describe the range of potential values by means of an appropriate probability distribution, of which there are scores, if not hundreds of different ones. We discussed a few of these in Volume II Chapter 4. We could (and we will) refer to this range of potential values as ‘Uncertainty’. As is often the case, there is no universal source of truth about the use of the term ‘Uncertainty’ so let’s define what we mean by it in this context.

Definition 3.1 Uncertainty

Uncertainty is an expression of the lack of sureness around a variable’s eventual value, and is frequently quantified in terms of a range of potential values with an optimistic or lower end bound and a pessimistic or upper end bound

Here we have avoided using the definitive expressions of ‘minimum’ and ‘maximum’ in expressing a practical and reasonable range of uncertainty. In other words, we can often describe situations which are possible but which also fall outside the realm of reasonableness. However, for more pragmatic reasons when we input these uncertainty ranges into a Monte Carlo Simulation tool, we may often use statistical distributions which have absolute minima and maxima.

There is often a view expressed of either the ‘Most Likely’ (Mode) value or the ‘Expected’ value (Arithmetic Mean) within that range, although in the case of a Uniform Distribution a ‘Most Likely’ value is something of an oxymoron as all values are equally likely (or unlikely if we have lots of them, and whether we are a glass half-full or half-empty personality).

Each variable will have either a fixed number of discrete values it can take depending on circumstances, or an infinite number of values from a continuous range. If we were to pick an infinite number of values at random from a single distribution, we would get a representation of the distribution to which they belong. However, choosing an infinite number of things at random is not practical, so we’ll have to stick with just having a large number of them instead!

For instance, if we tossed a coin a thousand times, or a thousand coins once each, then we would expect that around 500 would be ‘Heads’ and similarly around 500 would be ‘Tails’. (We’ll discount the unlikely chance of the odd one or two balancing on its edge.) This would reflect a 1 in 2 chance of getting either.

If we rolled a conventional die 6,000 times, we would expect approximately 1,000 of each of the faces numbered 1 to 6 to turn uppermost. This represents a discrete Uniform Distribution of the integers from 1 to 6.

If we divided the adult population of a major city into genders and ethnic groups we would expect that the heights of the people in each group to be approximately Normally Distributed.

So how can we exploit this property of large random numbers being representative of the whole? The answer is through Monte Carlo Simulation.

Let’s consider a simple example of two conventional dice. Let’s say we roll the two dice and add their values. In this case we can very simply compute the probability distribution for the range of potential values. Figure 3.1 summarises them. We have only one possible way of scoring 2 (1+1), and one way of scoring 12 (6+6), but we have six different ways of scoring 7:

1+6, 2+5, 3+4, 4+3, 5+2, 6+1

So, let’s see what happens when we roll two dice at random 36 times. In a perfect world each combination would occur once and once only giving us the distribution above, but we just know that that is not going to happen, don’t we? In fact we have tried this twice, with completely different results as shown in Figure 3.2. (Not what we would call very convincing, is it?) The line depicts the theoretical or true distribution derived in Figure 3.1; the histogram depicts our 36 random samples. The random selections look exactly that – random!

Figure 3.1 Probability Distribution for the Sum of the Values of Two Dice

If we did it a third time and added all the random samples together so that we had 108 samples, we might get a slightly better result as we have in the left hand graph of Figure 3.3, but it’s still not totally convincing. If we carried on and got 1,080 sample rolls of two dice, we would get something more akin to the right hand graph of Figure 3.3, which whilst it is not perfect fit, it is much more believable as evidence supporting the hypothesis that by taking a large number of random samples we will get a distribution that is more representative of the true distribution. So, let’s continue that theme of increasing the number of iterations tenfold, and then repeat it all again just to make sure it was not a fluke result. We show both sets of results in Figure 3.4.

Figure 3.2 Sum of the Values of Two Dice Based on 36 Random Rolls (Twice)

Figure 3.3 Sum of the Values of Two Dice Based on 108 and 1,080 Random Rolls

The two results are slightly different (for instance, look at the Summed Value of 6; in the left hand graph, the number of occurrence is slightly under 1,500 whereas it is slightly over that in the right hand graph.) However, we would probably agree that the two graphs are consistent with each other, and that they are both reasonably good representations of the true distribution, which is depicted by the line graph. In the grand scheme of estimating this difference is insignificant; it’s another case of Accuracy being more important than Precision (Volume I Chapter 4). For completeness, we have shown the results of our two simulation runs of 10,800 random double dice rolls in Table 3.1, from which we can determine the cumulative probability or percentage frequency of occurrence. If we plot these in Figure 3.5, we can barely see the difference between the three lines. (We might do if we have a magnifying glass handy.)

What we have demonstrated here is the basic principles and procedure that underpin Monte Carlo Simulation:

Figure 3.4 Sum of the Values of Two Dice Based on 10,800 Random Rolls

Table 3.1 Sum of the Values of Two Dice Based on 10,800 Random Rolls (Twice)

Figure 3.5 Cumulative Frequency of the Sum of Two Dice Being less than or Equal to the Value Shown

- Define the probability distribution of each variable in a model

- Select a value at random from each variable

- Compute the model value using these random choices

- Repeat the procedure multiple times

- Count the number of times each particular output total occurs, and convert it to a percentage of the total

- Stability in random sampling comes with an increased number of samples

Monte Carlo Simulation will model the likely output distribution for us.

3.1.4 Common pitfalls to avoid

One common mistake made by estimators and other number jugglers new to Monte Carlo Simulation is that they tend to use too small a sample size, i.e. too few iterations. In this case ‘size does matter – bigger is better!’ In any small sample size, it is always possible that we could get a freak or fluke result, the probability of which is very, very small (like winning the lottery that for some reason, has always eluded me!). Someone has to win it, but it is even more unlikely that someone will win the jackpot twice (although not impossible).

The stability that comes with large sample sizes was demonstrated in the last example where we had stability of the output when we used 10,800 iterations. (We used that number because it was a multiple of 36; there is no other hidden significance.)

Another common mistake people make is that they think that Monte Carlo Simulation is summing up complex probability distributions. This is quite understandable as the procedure is often described as taking a random number from a distribution AND another random number from another distribution AND … etc. Furthermore, the word ‘AND’ is normally associated mathematically with the additive operator PLUS. In the sense of Monte Carlo Simulations we should really say that AND should be interpreted as AND IN COMBINATION WITH, or DEPENDENT ON, the results of other things happening. In that sense we should describe it as the product of complex probability distributions, or perhaps liken it to Microsoft Excel’s SUMPRODUCT function.

The net result is that Monte Carlo Simulation will always bunch higher probability values together around the Arithmetic Mean, narrowing the range of potential output values (recall our Measures of Central Tendency in Volume II Chapter 2), and ‘dropping off’ the very unlikely results of two or more extremely rare events occurring together.

Let’s consider this in relation to 5 dice rolled together. The sum of the values could be as low as 5 or as high as 30, but these have only a 0.01286% chance each of occurring (1/6 x 1/6 x 1/6 x 1/6 x 1/6). In other words, on average we would only expect to get 5 1s or 5 6s, once in 10,000 tries. So, it’s not actually impossible … just very improbable.

Without computing the probability of every possible sum of values between 5 and 30, Monte Carlo will show us that we can reasonably expect a value between 11 and 24 with

For the Formula-phobes: Monte Carlo Simulation narrows in on more likely values

It is a not uncommon mistake that people take the range of possible outcomes as being between the sum of the ‘best case’ values and the sum of the worst-case values. In an absolute sense, this is true, but the probability of getting either value at the bounds of the range is highly unlikely.

For instance, the chance of throwing a 6 on a die is 1 in 6; the chance of getting a double 6 is 1 in 36, or 1 in 6 multiplied by 1 in 6.

As we saw in Figure 3.1 each combination of the two dice have that same probability of 1/36 of happening together, but there are six ways that we can score 7 meaning that the probability of scoring 7 is 6/36 or 1/6. So, we have to multiply the probabilities of each random event occurring and sum the number of instances that we get the same net result. The chance of two or more rare events occurring together is very small, we are more likely to get a mixture of events: some good, some bad. If we increase the number of dice, the number of physical combinations increases, and the chances of getting all very low or very high values in total get more unlikely, and gets more challenging to compute manually. However, we can simulate this with Monte Carlo without the need for all the mathematical or statistical number juggling. Remember …

Not all the good things in life happen together, and neither do all the bad things … it might just feel that way sometimes.

90% Confidence. Here we have set up a simple model that generates a random integer between 1 and 6 (inclusive) using Microsoft Excel’s RANDBETWEEN(bottom, top) function. We can then sum the 5 values to simulate the random roll of 5 dice. We can run this as many times as we like using a new row in Excel for every ‘roll of the dice’. Using the function COUNTIF(range, criteria) we can then count the number of times we get every possible value between 5 and 30. We show an example in Figure 3.6 based on 10,000 simulation iterations.

For the Formula-phobes: 90% Confidence Interval

It might not be obvious from Figure 3.6 why the 90% Confidence Interval is between values 11 and 24. Unless we specify otherwise, the 90% Confidence Interval is taken to be the symmetrical interval between the 5% and 95% Confidence Levels. In our example:

- There are 283 values recorded with a score of 11 which accounts for a Confidence Level > 3.1% and ≤ 5.9%, implying that the 5% level must be 11

- There are 248 values recorded with a score of 24 which accounts for Confidence Level > 94.4% and ≤ 96.9%, implying that the 95% level must be 24

In fact, in this example the range 11 to 24 accounts for some 93.8% of all potential outcomes.

3.1.5 Is our Monte Carlo output normal?

If we look at the Probability Density Function for the range of possible outcomes, we will notice that it is very ‘Normalesque’ i.e. Bell-shaped (Figure 3.7) whereas when we were looking at only two dice (Figure 3.1), the output was distinctly triangular in shape.

This is no fluke; it is an early indication of phenomena described by the Central Limit Theorem and the Weak and Strong Laws of Large Numbers for independent identically distributed random samples. (Don’t worry, I’m not going to drag you through those particular proofs; there’s plenty of serious textbooks and internet sources that will take you through those particular delights.)

However, although this tendency towards a Normal Distribution is quite normal, it is not always the result!

Figure 3.6 Cumulative Distribution of the Sum of the Values of Five dice Based on 10,000 Random Rolls

Figure 3.7 Probability Mass Function for a 5 Dice Score cf. Normal Distribution

Caveat augur

Whilst modelling uncertainty in a system of multiple variables can often appear to be Normally Distributed, do not make the mistake of assuming that it always will be. It depends on what we are modelling.

For instance, let’s look at a case where the output is not Normal. Consider a continuous variable such as the height of school children. Now we know that the heights of adult males are Normally Distributed, as are the heights of adult females. The same can be inferred also for children of any given age. To all intents and purposes, we can identify three variables:

- Gender – which for reasons of simplicity we will assume to be a discrete uniform distribution, i.e. 50:50 chance of being male or female (rather than a slightly less imprecise split of 49:51)

- Age – which we will also assume to be a discrete uniform distribution. In reality this would be a continuous variable but again for pragmatic reasons of example simplicity we will round these to whole years (albeit children of a younger age are more likely to be precise and say they are or whatever). We are assuming that class sizes are approximately the same within a school

- Height for a given Gender and Age – we will assume to be Normally Distributed as per the above

Now let’s visit a virtual primary school and sample the heights of all its virtual pupils. No, let’s visit all the virtual primary schools in our virtual city and see what distribution we should assume for the height of a school child selected at random.

The child selected could be a Girl or a Boy, and be any age between 5 years and 11 (assuming the normal convention of rounding ages down to the last birthday).

Many people’s first intuitive reaction is that it would be a Normal Distribution, which is quite understandable, but unfortunately it is also quite wrong. (If you were one of those take heart in the fact that you are part of a significant majority.)

When they think about it they usually realise that at the lower age range their heights will be Normally Distributed; same too at the upper age range, and … everywhere between the two. Maybe it’s a flat-topped distribution with Queen Anne Legs at the leading and trailing edges (i.e. slightly S-Curved)?

However, although that is a lot closer to reality, it is still not quite right. Figure 3.8 illustrates an actual result from such random sampling … the table top is rounded and sloping up from left to right, more like a tortoise. For the cynics amongst us (and what estimator isn’t endowed with that virtue?) who think perhaps that we have a biased sample of ages, Figure 3.9 shows that the selection by age is Uniformly Distributed and as such is consistent with being randomly selected.

Figure 3.8 Height Distribution of Randomly Selected School Children Aged 5–11

Figure 3.9 Age Distribution of Randomly Selected School Children Aged 5–11

Let’s analyse what is happening here. There is little significant difference between the heights of girls and boys at primary school age. Furthermore, the standard deviation of their height distributions at each nominal age of 5 through to 11 is also fairly consistent at around 5.5% to 5.6%. (Standard Deviation based on the spread of heights between the Mean Nominal Age and the 2nd Percentile six months younger and the 98th Percentile six months older). These give us the percentile height growth charts shown in Figure 3.10, based on those published by Royal College of Paediatrics and Child Health (RCPCHa,b, 2012). (It is noted that there are gender differences but these are relatively insignificant for this age range … perhaps that’s a hint of where this discussion is going.)

We have calculated the theoretical distribution line in Figure 3.8 using the sum of a Normal Distribution for each nominal age group for girls and boys using the 50th Percentile (50% Confidence Level) from Figure 3.10. The 96% Confidence Interval between 2% and 98% has been assumed to be 4 Standard Deviations (offset by 6 months on either side). Figure 3.11 shows the individual Normal Distributions which then summate to the overall theoretical distribution (assuming the same number of boys and girls in each and every age group). We will have noticed that the age distributions get wider and shorter … but their areas remain the same; this is due to the standard deviation increasing in an absolute sense as it is a relatively constant percentage of the mean. The net result is that there is an increased probability of children of any given height, and the characteristic, albeit slight sloping table top.

Figure 3.10 Percentile Height School Children Ages 5–11 Source: Copyright © 2009 Royal College of Paediatrics and Child Health

Figure 3.11 Height Distribution of Randomly Selected School Children Aged 5–11

Now let’s extend the analysis to the end of secondary school and 6th form college, nominally aged 18. Let’s look at all girls first in the age range from 5 to 18 (Figure 3.12). Following on from the primary years we get a short plateauing on the number of schoolgirls over an increasing height, before it rises dramatically to a peak around 163.5 cm.

Figure 3.12 Height Distribution of Randomly Selected Schoolgirls Aged 5–18

Figure 3.13 explains why this happens. After age 13, girls’ growth rate slows down and effectively reaches a peak between the ages 16 and 18. This gives us more girls at the taller height range. Figure 3.14 shows the primary and secondary age groups separately just for clarity. Whereas primary school aged children were more of a rounded mound with a slight slope, the secondary school aged girls are ‘more normal’ with a small negative skew.

Now let’s turn our attention to the boys through primary and secondary school including 6th form college (aged 5–18). It’s time for an honesty session now… how many of us expected it to be the Bactrian Camel Distribution shown in Figure 3.15?

No, the dip is nothing to do with teenage boys having a tendency to slouch. Figure 3.16 provides us with the answer; it is in the anomalous growth of boys through puberty, where we see a slowdown in growth after age 11 (in comparison to girls – right hand graph) before a surge in growth in the middle teen years, finally achieving full height maturity at 18 or later.

As a consequence of this growth pattern we get:

- Less boys in the 140–160 cm height range as they accelerate through this range

- The slowdown immediately prior to this growth surge causes an increased number of boys in the height range 135–140 cm in comparison to girls.

- After age 16, boys’ growth rate slows again, giving a more Normal Distribution around 175 cm as they reach full expected growth potential

- For completeness, we have shown primary and secondary school aged boys separately in Figure 3.17

Figure 3.13 Percentile Growth Charts for UK Girls Aged 5–18 Source: Copyright © 2009 Royal College of Paediatrics and Child Health

Overall across all schoolchildren aged 5.18, we get the distribution in Figure 3.18. Collectively from this we might conclude the following:

- Overall randomly selected children’s height is not Normally Distributed

- Teenage boys are Statistical Anomalies, but the parents amongst us will know that already and be nodding wisely

- Collectively, girls become Normal before boys! (My wife would concur with that, but I detect an element of bias in her thinking)

More importantly …

Figure 3.14 Height Distribution of Randomly Selected Schoolgirls Aged 5–18

Figure 3.15 Height Distribution of Randomly Selected Schoolboys Aged 5–18

Figure 3.16 Percentile Growth Charts for UK Boys Aged 5–18 Source: Copyright © 2009 Royal College of Paediatrics and Child Health

Figure 3.17 Height Distribution of Randomly Selected Schoolboys Aged 5–18

Figure 3.18 Height Distribution of Randomly Selected Schoolchildren Aged 5–18

- Monte Carlo Simulation is a very useful tool to understand the nature of the impact of multiple variables, and that the output is not necessarily Normally Distributed, or even one that we might intuitively expect

- Don’t guess the output; it’s surprising how wrong we can be

3.1.6 Monte Carlo Simulation: A model of accurate imprecision

When we are building a Monte Carlo Simulation Model, there is no need to specify input parameters to a high degree of precision, other than where it provides that all-important TRACEability link to the Basis of Estimate.

Input variables can be in any measurement scale or currency, but at some point, there has to be a conversion in the model to allow an output to be expressed in a single currency. In this scenario, the term currency is not restricted to financial or monetary values, but could be physical or time-based values:

- These conversion factors could be fixed scale factors (such as Imperial to metric dimensions) in which case there is no variability to consider

- The conversion factors could themselves be variables with associated probability distributions such as Currency Exchange Rates or Escalation Rates

Don’t waste time and energy or spend sleepless nights on unnecessary imprecision. Take account of the range of the input variables’ scales in relation to the whole model.

In terms of input parameters for the input variable distributions, such as the Minimum (or Optimistic), Maximum (or Pessimistic) or Mode (Most Likely) values, we do not need to use precise values; accurate rounded values are good enough.

For example, the cumulative probability of getting a value that is less than or equal to £ 1,234.56789 k in a sample distribution cannot be distinguished from a statistical significance point of view from getting the value £ 1,235 k.

Using the rounded value as an input will not change the validity of the output.

However, resist the temptation to opt for the easy life of a plus or minus a fixed percentage of the Most Likely (see the next section) unless there are compelling reasons that can be captured and justified in the Basis of Estimate; remember the principles of TRACEability (Transparent, Repeatable, Appropriate, Credible and Experientially-based). Remember that input data often has a natural skew.

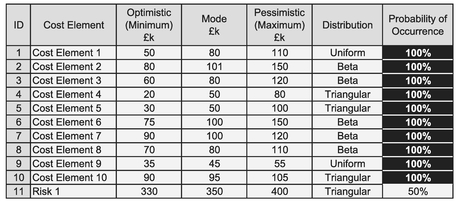

For an example of this ‘accurate imprecision’ let’s turn our attention to one of the most common, perhaps popular, uses of Monte Carlo Simulation in the world of estimating and scheduling ... modelling uncertainty across a range of input values. In Table 3.2 we have shown ten cost variables each with its own defined distribution. For reference, we have summated the Minimum and Maximum values across the variables, along with the sum of their modes and means.

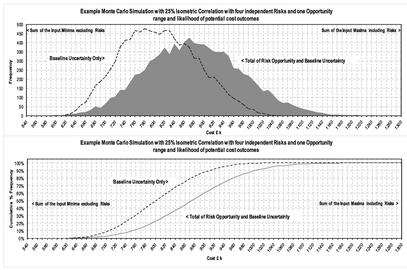

If we run the model with 10,000 iterations, we will get an output very similar to that shown in Figures 3.19 and 3.20. Every time we run the model, even with this number of iterations, we will get precisely different but very consistent results. In other words, Monte Carlo Simulation is a technique to use to get accurate results with acceptable levels of imprecision. For instance, if we were to re-run the model, the basic shape of the Probability Density Function (PDF) graph in Figure 3.19 will remain the same, but all the individual mini-spikes will change (as shown in the upper half of Figure 3.21). In contrast, the Cumulative Distribution Function (CDF) S-Curve of the probability of the true outcome being less than or equal to the value depicted will hardly change at all if we re-run the model, as illustrated in the lower half of Figure 3.21. (We’re talking of ‘thickness of a line’ differences only here.)

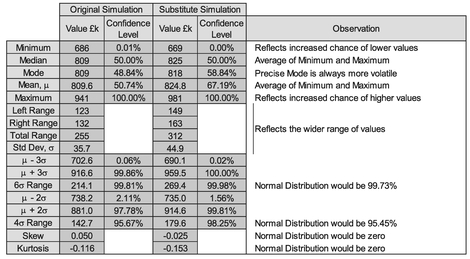

Table 3.3 emphasises the consistency by comparing some of the key output statistics from these two simulations using common input data.

From the common input data in our earlier Table 3.2, the output results in Table 3.3 and either result in Figure 3.21 we can observe the following:

Table 3.2 Example Monte Carlo Simulation of Ten Independently Distributed Cost Variables

Figure 3.19 Sample Monte Carlo Simulation PDF Output Based on 10,000 Iterations

Figure 3.20 Sample Monte Carlo Simulation CDF Output Based on 10,000 Iterations

Figure 3.21 Comparison of Two Monte Carlo Simulations of the Same Cost Model

Table 3.3 Comparison of Summary Output Statistics Between Two Corresponding Simulations

- With the exception of the two variables with Uniform Distributions, all the other input variables have positively skewed distributions (i.e. the Mode is closer to the Minimum than the Maximum (Table 3.2)

- The output distribution of potential cost outcomes is almost symmetrical as suggested by the relatively low absolute values of the Skewness statistic (Table 3.3)

- The output distribution is significantly narrower than that we would get by simply summing the minima and the maxima of the input distributions (Figure 3.21)

- Consistent with a symmetrical distribution, the rounded Mean and Median of the Output are the same and are equal to the sum of the input Means (Tables 3.2 and 3.3)

- The precise ‘local output Mode’ is closer to the Mean and Median than the sum of the input Modes, further suggesting a symmetrical distribution (Table 3.3). Note: on a different simulation run of 10,000 iterations, the local mode will always drift slightly in value due to changes in chance occurrences

- The Mean +/- thr ee times the Standard Deviation gives a Confidence Interval of 99.84% which is not incompatible with that of a Normal Distribution at 99.73% (Table 3.3)

- If we measured the Confidence Interval at +/- one Standard Deviation or +/two Standard Deviations around the Mean we would get 67.59% and 95.77% respectively. This also compares favourably with the Normal Distribution intervals of 68.27% and 95.45%

- The Excess K urtosis being reasonably close to zero is also consistent with that of an approximately Normal Distribution

However, if only life was as simple as this …

Caveat augur

This model assumes that all the input variable costs are independent of each other, and whilst this may seem a reasonable assumption, it is often not true in the absolute sense. For example, overrunning design activities may cause manufacturing activities to overrun also, but manufacturing as a function is quite capable of overrunning without any help from design!

Within any system of variables there is likely to be a degree of loose dependence between ostensibly independent variables. They share common system objectives and drivers.

We will return to the topic of partially correlated input variables in Section 3.2.

3.1.7 What if we don't know what the true Input Distribution Functions are?

At some stage we will have to make a decision about the choice of input distribution. This is where Monte Carlo Simulation can be forgiving to some extent. The tendency of models to converge towards the central range of values will happen regardless of the distributions used so long as we get the basic shape correct, i.e. we don’t want to use negatively skewed distributions when they should be positively skewed, but even then, Monte Carlo has the ‘magnanimity’ to be somewhat forgiving.

However, as a Rule of Thumb, distributions of independent variables of cost or time are more likely to be positively rather than negatively skewed. We cannot rule out symmetrical distributions if the input is at a sufficiently high level in terms of systems integration.

If we were to model the basic activities that result in the higher level integrated system then, as we have already seen, they would naturally converge to a symmetrical distribution that can be approximated to a Normal Distribution. Neither can we rule out negatively skewed distributions of other variables that might be constituent elements of a cost or schedule calculation such as the performance we might expect to achieve against some standard, norm or benchmark, but for basic cost and schedule, we should expect these to be positively skewed.

For the Formula-phobes: Why are cost and time likely to be positively skewed?

Suppose we have to cross the precinct in town to get to the Bank, a distance of some 100m.

We might assume that at a reasonable average walking pace of around four miles per hour, it will take us around a minute. Let’s call that our Most Likely Time.

The best we could expect to achieve if we were world class athletes, would be around ten seconds, giving us what we might consider to be an absolute minimum. (So, that's not going to happen in my case, before anyone else says it.)

The worst time we could reasonably expect, could be considerably more than our Most Likely Time. The town centre may be very busy and we may have to weave our way around other people, and we’re in no rush, we can take as long as we like, do a bit of window shopping on route … and then there’s the age factor for some of us, and … in these shoes?!

The net result is that our capacity to take longer is greater than our capacity to do it more quickly. We have an absolute bound at the lower end (it must be greater than zero), but it’s relatively unbounded at the top end (life expectancy permitting!).

Caveat augur

The natural tendency for positive skewness in input variables of time and cost is a valid consideration at the lowest detail level. If we are using higher level system cost or time summaries, these are more likely to be Normally Distributed … as we are about to discover.

If we were to include these remote, more extreme values in our Monte Carlo analysis, they are unlikely to occur by random selection, and if one did, it would be a bit of a ‘one-off’ that we would ignore in relation to the range of realistic values we could reasonably expect. That though may lead us to wonder whether it really matters what distributions we input to our Monte Carlo Model. Let’s consider now what might those same basic distribution shapes be that we might ‘get away with’ substituting (summarised in Table 3.4), and see how they perform. Note that we can mirror the positively skewed distributions in this table to give us their negatively skewed equivalents should the eventuality arise.

Note: If we do know the likely distribution because we have actual data that has been calibrated to a particular situation, then we should use it; why go for the rough and ready option? Remember the spirit of TRACEability (Transparent, Repeatable, Appropriate, Credible and Evidence-based).

Table 3.4 Example Distributions that Might be Substituted by Other Distributions of a Similar Shape

More often though, we won’t have the luxury of a calibrated distribution and we will have to rely on informed judgement, and that might mean having to take a sanity check. For instance, if we are considering using a Beta Distribution but we don’t know the precise parameter values (i.e. it has not been calibrated to actual data) then we might like to consider plotting our distribution first using our ‘best guess’ at the parameter values (i.e. alpha, beta, AND the Start and End points.) If the realistic range of the distribution does not match our intentions or expectations then we can modify our input parameters, or use a more simplistic Triangular Distribution.

If you are concerned that we may be missing valid but extreme values by using a Triangular Distribution, then we can always consider modelling these as Risks (see Section 3.3.1) but as we will see it may not be the best use of our time.

Before we look at the effects on making substitutions, let’s examine the thought processes and see what adjustments we might need to make in our thinking.

- If the only things of which we are confident are the lower and upper bounds, i.e. that the Optimistic and Pessimistic values cover the range of values that are likely to occur in reality, then we should use a Uniform Distribution because we are saying in effect that no single value has a higher chance of arising than any other … to the best of our knowledge.

- If we are comfortable with our Optimistic value and that the Pessimistic value covers what might be reasonably expected, but excludes the extreme values or doomsday scenarios covered by the long trailing leg of a Beta Distribution, then provided that we are also confident that one value is more likely than any other, then we can be reasonably safe in using a Triangular Distribution

- If we are reasonably confident in our Optimistic and Most Likely values but are also sure that our Pessimistic value is really an extreme or true ‘worst case’ scenario, then we should either use a Beta Distribution … or truncate the trailing leg and use a Triangular Distribution with a more reasonable pessimistic upper bound. Table 3.5 illustrate how close we can approximate a range of positively and negatively skewed Beta Distributions by Triangular Distributions

From a practical point of view, if we know the Beta Distribution shape, (and therefore its parameters) then why would we not use them anyway? There is no need to approximate them just for the sake of it (who needs extra work?). However, suppose we believe that we have a reasonable view of the realistic lower and upper bounds but that we want to make sure that we have included the more extreme values in either the leading or trailing legs of a wider Beta Distribution, how can we use our Triangular approximation to get the equivalent Beta Distribution?

However, whilst we can create Triangular Distributions which have the same key properties as Beta Distributions such that the Modes and Means match, the relationship is too intertwined and complex to be of any practical use, as illustrated by the Formula-phile call-out. We could of course find the best fit solution using Microsoft Excel’s Solver and performing a Least Squares algorithm but that again begs the question of why we are looking at an approximation if we already know what we think the true distribution is? We are far better in many instances asking ourselves what the reasonable lower and upper bounds of a distribution are and using those with a Triangular Distribution.

Table 3.5 Beta and Triangular Distributions with Similar Shapes

For the Formula-philes: Substituting the Beta Distribution with a Triangular Distribution

Consider a Triangular Distribution with a start point B and an end point E and a mode,. Consider also a Beta Distribution with parameters α and β, a start point S and finish point F such that its mode, M̂, coincides with that of the Triangular Distribution. Let the two distributions have a common mean, M̄ also.

… which expresses the range of the ‘equivalent’ Beta Distribution in relation to a Triangular Distribution with the same Mean and Mode. We just need to know the sum of the two Beta Distribution parameters, and to have an idea of the Beta Distribution Start Point if it is Positively Skewed.

… not really the snappiest or most practical of conversion formulae, is it?

I can see that some of us are not convinced. Let’s see what happens when we start switching distributions. Using the data from our previous Table 3.2 and plotting the five Beta Distributions, we might accept that the Triangular Distribution in Figure 3.22 are not unreasonable approximations.

Now let’s see what happens if we make these Triangular substitutions from Figure 3.22 in our Model Carlo Model. Figure 3.23 illustrates the result on the right hand side in comparison with the original data from Figure 3.19 reproduced on the left. Now let’s play a game of ‘Spot the Difference’ between the left and right hand graphs. Apart from the exact positions of the individual ‘stalagmites’ in the upper graph, it is hard to tell any real difference in the lower cumulative graph.

Table 3.6 summarises and compares some of the key statistics. Note that in this instance we are using the same set of random numbers to generate the Monte Carlo Simulations as we did in the original simulation; they’re just ‘driving’ different distributions. (Just in case you thought it might make a difference by fluke chance.)

Now let’s try something inappropriate (no, not in that way, behave); let’s forget about making the adjustment to truncate the Beta Distribution Maximum and simply replace it with a Triangular Distribution; we’ve done just that in Figure 3.24 and Table 3.7. This time there is a marked difference with the central and right areas of the output

Figure 3.22 Approximation of 5 Beta Distributions by 5 Triangular Distributions

Figure 3.23 Comparison of Two Monte Carlo Simulations with Appropriate Substituted Distributions

Table 3.6 Comparison of Two Monte Carlo Simulations with Appropriate Substituted Distributions

Figure 3.24 Comparison of Two Monte Carlo Simulations with Inappropriate Substituted Distributions (1)

distribution moving more to the right; the left hand ‘start’ area is largely unchanged. Overall, however, the basic output shape is still largely ‘Normalesque’ with its symmetrical bell-shape, leading us to re-affirm perhaps that system level costs are likely to be Normally Distributed rather than positively skewed.

However, if we had simply replaced all the distributions with Uniform Distributions on the grounds that we weren’t confident in the Most Likely value, we would have widened the entire output distribution even more, but on both sides, but yet it would have still remained basically Normalesque in shape, as illustrated in Figure 3.25 and Table 3.8.

Table 3.7 Comparison of Two Monte Carlo Simulations with Inappropriate Substituted Distributions (1)

Figure 3.25 Comparison of Two Monte Carlo Simulations with Inappropriate Substituted Distributions (2)

Table 3.8 Comparison of Two Monte Carlo Simulations with Inappropriate Substituted Distributions (2)

We can conclude from this that there is no way of telling from the output distribution whether we have chosen the right input variable’s distributions of uncertainty as it will always be generally Normalesque, i.e. bell-shaped and symmetrical, where we are aggregating costs or time. (This may not be the case when we use Monte Carlo in other ways, for instance when we consider risk and opportunity ... we’ll be coming to those soon.)

In order to make an appropriate choice of distribution where we don’t know the true distribution then we should try to keep it simple, and ask ourselves the checklist questions in Table 3.9. For those of us who prefer diagrams, these are shown in Figures 3.26 and 3.27 for basic input levels, and system level input variables.

In terms of our ‘best guess’ at Beta Distribution shape parameters (alpha and beta), we can choose them based on the ratio of the Mode’s relative position in the range between the Optimistic and Pessimistic values. (The alpha and beta parameters will always be greater than one.) Typical values are shown in Table 3.10, but as Figure 3.28 illustrates, several Beta Distributions can be fit to pass through the same Start, Mode and Finish points. However, the peakier the distribution is (i.e. greater values of parameters alpha and beta combined), then the more likely it is that the minimum and maximum values will be extreme values and therefore may not be representative of the Optimistic and Pessimistic values intended.

Table 3.9 Making an Appropriate Informed Choice of Distribution for Monte Carlo Simulation

Caveat augur

These checklists decision and flow diagrams are aimed at modelling cost and schedule estimates and should not be construed as being applicable to all estimating and modelling scenarios, where other specific distributions may be more appropriate.

Figure 3.26 Making an appropriate informed choice of Distribution for Monte Carlo Simulation (1)

Figure 3.27 Making an Appropriate Informed Choice of Distribution for Monte Carlo Simulation (2)

Figure 3.28 Three Example Beta Distributions with Common start, Mode and Finish Points

Table 3.10 Choosing Beta Distribution Parameters for Monte Carlo Simulation Based on the Mode

For the Formula-philes: Selecting Beta Distribution parameters based on the Mode

Consider a Beta Distribution with parameters α and β, a start point S and finish point F and a mode, M̂.

… which expresses the Mode as a proportion of the Range of a Beta Distribution as the ratio of the alpha parameter less one in relation to the sum of the two parameters less one each.

In conclusion, if we don’t know what an actual input distribution is, but we have a reasonable idea of the Optimistic, Most Likely and Pessimistic values, then Monte Carlo Simulation will be quite forgiving if we opt for a rather simplistic triangular distribution instead of a Normal or Beta Distribution. It will not, however, be quite so accommodating to our fallibilities if we were to substitute a uniform distribution by a triangular distribution, or use a uniform distribution where the true distribution has a distinct Most Likely value.

3.2 Monte Carlo Simulation and correlation

3.2.1 Independent random uncertain events – How real is that?

If only using Monte Carlo Simulation for modelling cost or schedule was always that simple, i.e. that all the variables were independent of each other. We should really ask ourselves whether our supposedly independent events are in fact truly and wholly independent of each other.

For instance, let’s consider a Design and Manufacture project:

- If the Engineering Department comes up with a design that is difficult to make, then there will be a knock-on cost to Manufacturing

- If Engineering is late in completing their design, or makes late changes at the customer’s request, there is likely to be a knock-on effect to Manufacturing’s cost or schedule

- If Engineering produces a design on time that is easy to make, with no late customer changes, then that doesn’t mean that Manufacturing’s costs will be guaranteed Manufacturing is just as capable as Engineering of making their own mistakes and incurring additional costs

- Changes to the design of systems to incorporate customer changes, or in response to test results, may have an impact on other parts of the engineered design, previously frozen

- Programme Management in the meantime is monitoring all the above and their costs may increase if they have to intervene in order to recover performance issues

In essence there is a ‘soft link’ between various parts of an organisation working together to produce a common output. We can express the degree to which the performance of different parts of the organisation, or the ripple effect of design changes through the product’s work breakdown structure are interlinked by partial correlation. We may recall covering this in Volume II Chapter 5 with the aid of the Correlation Chicken, Punch and Judy and the rather more sophisticated Copulas.

To see what difference this makes let’s consider our previous example from Section 3.1.6 and impose 100% correlation between the ten cost elements in Table 3.2.

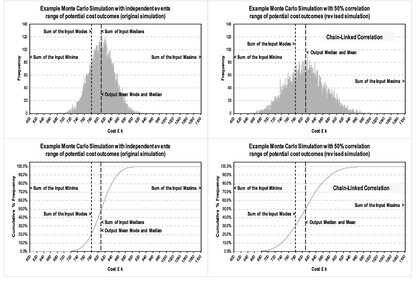

Of course, this is an extreme example and it is an unlikely and unreasonable assumption that all the input cost variables are perfectly positively correlated with one another, i.e. all the minima align and all the maxima align, as do all the corresponding confidence levels in-between. However, it serves us at present to illustrate the power of correlation, giving us the result on the right hand side of Figure 3.29; the left hand side represents the independent events view which we generated previously in Figure 3.19. Table 3.11 summarises and compares the key statistics from the two simulations.

The difference in the results is quite staggering (apart from looking like hedgehog roadkill! Note: No real hedgehogs were harmed in the making of this analogy, and I hope you weren’t eating), but when we analyse what we have told our Monte Carlo Model to do, it is quite rational. When we define tasks within a model to be perfectly (i.e. 100%) correlated, we are forcing values to match and team up in the order of their values. This is referred to as Rank Order Correlation, which as we know from Volume II Chapter 5 is not the same generally as Linear Correlation.

This gives us three notable features:

Figure 3.29 Monte Carlo Simulation–Comparison Between Independent and 100% Correlated Tasks

Table 3.11 Comparison of Monte Carlo Simulations with 100% and 0% (Independent) Correlated Events

- It reduces the frequency of central values and increase occurrences of values in the leading and trailing edges … data is squashed down in the middle and displaced out to the lower and upper extremes, i.e. all the low level values are forced to happen together, and the same for all the high level values

- The Monte Carlo Output will be less ‘Normalesque’ in shape and instead will take on the underlying skewness of the input data

- The Monte Carlo minimum and maximum values now coincide with the sum of the input minima and the sum of the input maxima respectively

From a pragmatic perspective the likelihood of our getting a situation where all (or some might say ‘any’) input variables are 100% correlated is rather slim, perhaps even more improbable than a situation in which we have full independence across multiple input variables. In reality, it is more likely that we will get a situation where there is a more of an ‘elastic’ link between the input variables; in other words, the input variables may be partially correlated. Based on the two extremes of our original model (which assumed completely independent events or tasks), and our last example in which we assumed that all the tasks were 100% Correlated, then intuitively we might expect that if all tasks are 50% Correlation with each other, then the range of potential outcomes will be somewhere between these two extremes

For the Formula-phobes: Impact of correlating inputs

The impact of correlating distribution values together is to move them down and out towards the edges. Let’s consider just two input variables. If they are independent, there is a reduced chance of both Optimistic values occurring together, or both Pessimistic values occurring together. As a consequence, we are more likely to get middle range values.

If we set the two variables to be 100% correlated with each other, then all the low-end values will occur together, as will all the high-end values.

There will be no mixing of low and high values which reduces the number of times values occur in the middle range.

of 0% and 100% correlation. Whether it is ‘halfway’ or not remains to be seen, but to some extent it depends on how we construct the model and apply the correlation relationship.

3.2.2 Modelling semi-independent uncertain events (bees and hedgehogs)

Does it really matter if we ignore any slight tendency for interaction between variable values? According to the considered opinion and research of the renowned cost estimating expert Stephen Book (1997), the answer would be a definite ‘Yes’. Whilst he acknowledges that establishing the true correlation between variables is very difficult, he advises that any ‘reasonable’ non-zero correlation would be better (i.e. closer to reality) than ignoring correlation, and by default, assuming it was zero.

As we have already demonstrated, if we have a sufficient (not necessarily large) number of independent cost variables, then the output uncertainty range can be approximated by a Normal Distribution, and that we can measure the variance or standard deviation of that output distribution. Book (1997, 1999) demonstrated that if we were to take a 30-variable model and assume a relatively low level of correlation of 20% across the board, then we are likely to underestimate the standard deviation by about 60% in comparison with a model that assumes total independence between variables. As a consequence, the range of potential outcomes will be understated by a similar amount. Book also showed that the degree of underestimation of the output range increases rapidly with small levels of correlation, but that rate of underestimation growth slows for larger levels of correlation (the curve would arch up and over to the right if we were to draw a graph of correlation on the horizontal access and percentage underestimation on the vertical scale).

Based on this work by Book, Smart (2009) postulated that a background correlation of 20%, or possibly 30%, is a better estimate of the underlying correlation between variables than 0% or 100%, and that the level of background correlation increases as we roll-up the Work Breakdown Structure from Hardware-to-Hardware level (at 20%), Hardware-to-Systems (at 40%) and finally Systems-to-Systems Level integration (at 100%).

Let’s explore some of the options we have to model this partial correlation.

However, in order to demonstrate what is happening in the background with partially correlated distributions, a more simplistic logic using a relatively small number of variables may be sufficient to illustrate some key messages on what to do, and what not do, with our Commercial Off-the-Shelf toolset.

Caveat augur

Whilst it is possible to create a Monte Carlo Simulation Engine in Microsoft Excel with partially correlated variables, it is not recommended that we do this without the aid of a dedicated Monte Carlo Add-in, or instead that we use a discrete application that will interact seamlessly with Microsoft Excel. Doing it exclusively in Microsoft Excel creates very large files, and the logic is more likely to be imperfect and over-simplified.

Unless we skipped over Volume II Chapter 5 as a load of mumbo-jumbo mathematics, we might recall that we defined Correlation as a measure of linearity or linear dependence between paired values, whereas Rank Correlation links the order of values together. From a probability distribution perspective, the latter is far more intuitive than the former in terms of how we link and describe the relationship between two variables with wildly different distributions. This is illustrated in Figure 3.30, in which the left hand graph shows two independent Beta Distributions. By forcing a 1:1 horizontal mapping of the Confidence Levels between the two, we can force a 100% Rank Correlation, but as shown in the right hand graph, this is clearly not a Linear Correlation.

Notes:

- If we took more mapping points between the two distributions then the Linear Correlation would increase as there would be proportionately more points in the central area than in the two extreme tails, giving us a closer approximation to a straight line … but we’ll never get a perfect 100% Linear Correlation.

- The degree of curvature is accentuated in this example as we have chosen oppositely skewed distributions. If the two distributions had had the same positive or negative direction of skew, then the degree of Linear Correlation would increase also.

- For these reasons, we can feel more relaxed about enforcing degrees of correlation between two distributions based on their Rank Correlation rather than Linear Correlation.

Figure 3.30 Enforcing a 100% Rank Correlation Between Two Beta Distributions

Mapping the distribution Confidence levels together in this way seems like a really good idea until we start to think how should we do it when the distributions are only partially correlated? What we want to get is a loose tying together that allows both variables to vary independently of each other but also apply some restrictions so that if we were to produce a scatter diagram of points selected at random between two distributions it would look like our Correlation Chicken example from Volume II Chapter 5. We have already seen in that chapter that the Controlling Partner Technique works well to ‘push’ a level of correlation between an independent and dependent variable, but it doesn’t work with semi-independent variables without distorting the underlying input distributions

Iman and Conover (1982) showed that it is possible to impose a partial rank correlation between two variables which maintains the marginal input distributions. Book (1999) and Vose (2008, pp.356–358) inform us that most commercially available Monte Carlo Simulation toolsets use Rank Order Correlation based on the seminal work of Iman and Conover ... but not all; some Monte Carlo toolsets available in the market can provide a range of more sophisticated options using mathematical Copulas to enable partial correlation of two or more variables (Vose, 2008, p.367). Here, in order to keep the explanations and examples relatively simple, we will only be considering the relatively simple Normal Copula as we discussed and illustrated in Volume II Chapter 5.

In Monte Carlo Analysis, when we pick a random number in the range [0,1] we are in essence choosing a Confidence Level for the Distribution in question. The Cumulative Distribution Function of a Standard Continuous Uniform Distribution is a linear function between 0 and 1 on both scales. In Volume II Chapter 5 we discussed ways in which we can enforce a level of linear correlation between two variables that are linearly dependent on each other. However, when we start to consider multiple variables, we must start to consider the interaction between other variables and their consequential knock-on effects. Let’s look at two basic models of applying 50% correlation (as an example) to a number of variables:

- We could define that each variable is 50% correlated with the previous variable (in a pre-defined sequence). We will call this ‘Chain-Linking’

- We could identify a ‘master’ or ‘hub’ variable with which all other variables are correlated at the 50% level. Let’s call this ‘Hub-Linking’

A Standard Continuous Uniform Distribution is one that can take any value between a minimum of 0 and a maximum of 1with equal probability

Conceptually, these are not the same as we can see from Figure 3.31, and neither of them are perfect in a logical sense, but they serve to illustrate the issues generated. In both we are defining a ‘master variable’ to which all others can be related in terms of their degree of correlation. In practice, we may prefer a more democratic approach where all semi-independent variables are considered equal, but we may also argue that some variables may be more important as say cost drivers than others (which brings to mind thoughts of Orwell’s Animal Farm (1945) in which, to paraphrase the pigs, it was declared that there was a distinct hierarchy amongst animals when it came to interpreting what was meant by equality!).

Figure 3.31 Conceptual Models for Correlating Multiple Variables

3.2.3 Chain-Linked Correlation models

Let’s begin by examining the Chain-Linked model applied to the ten element cost data example we have been using in this chapter. If we specify that each Cost Item in our Monte Carlo Model is 50% correlated to the previous Cost Item using a Normal Copula, then we get the result in Figure 3.32, which shows a widening and flattening of the output in the right hand graph in comparison with the independent events output in the left hand graph. Reassuringly, the widening and flattening is less than that for the 100% Correlated example we showed earlier in Figure 3.29.

In Figure 3.33 we show the first link in the chain, in which we have allowed Cost Item 1 to be considered as the free-ranging chicken, and Cost Item 2 to be the correlated chicken (or chick) whose movements are linked by its correlation to the free-ranging chicken. The left-hand graph depicts the Correlated Random Number generated by the Copula (notice the characteristic clustering or ‘pinch-points’ in the lower left and upper right where the values of similar rank order have congregated … although not exclusively as we can see by the thinly spread values in the top left and bottom right corners). This pattern then maps directly onto the two variables’ distribution functions, giving us the range of potential paired values shown in the right hand graph of Figure 3.33, which looks more like the Correlated Chicken analogy from Volume II Chapter 5.

Figure 3.34 highlights that despite the imposition of a 50% correlation between the two variables, the input distributions have not been compromised (in this case Uniform and PERT-Beta for Cost Items 1 and 2 respectively). As Cost Item 1 is the Lead Variable, the values selected at random are identical to the case of independent events, whereas for Cost Item 2, the randomly selected values differ in detail but not in their basic distribution, which remains a PERT-Beta Distribution.

Figure 3.32 Example of 50% Chain-Linked Correlation Using a Normal Copula

Figure 3.33 Chain-Linked Output Correlation for Cost Items 1 and 2

The net effect on the sum of the two variables is to increase the likelihood of two lower values or two higher values occurring together, and therefore decrease the chances of a middle range value to compensate, as shown in Figure 3.35.

This pattern continues through each successive pairing of Chain-Linked variables.

Figure 3.34 Impact of Chain-Linked Correlation on ‘Controlled’ Variable Value

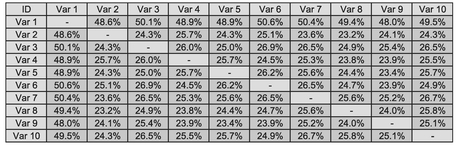

In Table 3.12 we have extracted the Correlation Matrix which shows that indeed each pair in the chain (just to the right or left of the diagonal, is around the 50% correlation we specified as an input (which must be re-assuring that we have done it right). Normally, the diagonal would say 100% correlated for every variable with itself. For improved clarity, we have suppressed these here.) Perhaps what is more interesting is that if we step one more place away from the diagonal to read the Correlation between alternate links in the chain (e.g. first and third or second and fourth) we get a value around 25%. Some of us may be thinking that this is half the value of the chain, but it is actually the product of the adjacent pairs, in this case 50% of 50%.

As we look further away from the diagonal the level of ‘consequential’ correlation diminishes with no obvious pattern due to the random variations. However, there is a pattern, albeit a little convoluted; it’s the Geometric Mean of the products of the preceding correlation pairs (above and to the right if we are below the diagonal, (or below and to the left if we are above the diagonal) as illustrated in Figure 3.36, for a chain of five variables in four decreasing correlation pairs (60%, 50%, 40%, 30%).

Commercially available Monte Carlo Simulation applications are likely to create an error message in these circumstances.

It would also seem to be perfectly reasonable to expect that if we were to reverse the chain sequence then we would get the same Monte Carlo Output. However, in our

Figure 3.35 Impact of Chain-Linked Correlation on Sum of Variable Values

Table 3.12 Monte Carlo Output Correlation with 50% Chain-Linked Input Correlation

Figure 3.36 Generation of a Chain-Linked Correlation Matrix

Resist the temptation to close the chain and create a circular correlation loop. This is akin to creating a circular reference in Microsoft Excel, in which one calculation is ultimately dependent on itself.

Commercially available Monte Carlo Simulation applications are likely to create an error message in these circumstances.

example if we were to reverse the linking and use Variable 10 as the lead variable, we would get the results in Figure 3.37 and Table 3.13, which is very consistent with but not identical to that which we had in Figure 3.32. This is due to the fact that we are allowing a different variable to be the lead and the difference is within the variation we can expect between any two Monte Carlo Simulation iterations. The reason for this difference is that we are holding a different set of random values fixed for the purposes of the comparison.

Figure 3.37 Example of 50% Chain-Linked Correlation Where the Chain Sequence has been Reversed

There may be a natural sequence of events that would suggest which should be our lead variable. However, as we will have spotted from this example, chain-linking correlated variables in this way does not give us that background correlation that Smart (2009) recommended as a starting point for cost modelling. Perhaps we will get better luck with a Hub-Linked Model?

Table 3.13 Output Correlation Matrix where the Chain-Link Sequence has been Reversed

3.2.4 Hub-Linked Correlation models

Now let’s consider Hub-Linking all the Cost Items to Cost Item 1 (the Hub or Lead Variable). As we can see from the hedgehog plot on the right in comparison with the Mohican haircut on the left of Figure 3.38, Hub-Linked Correlation also widens the range of potential output values in comparison with independent events. A quick look back at Figure 3.32 tells us that this appears to create a wider range of potential outcomes than the Chain-Linked model example.

Figure 3.38 Example of 50% Hub-Linked Correlation

Table 3.14 reproduces the Cross-Variable Correlation Matrix (again with the diagonal 100% values suppressed for clarity). Here we can see that each variable is close to being 50% correlated with Hub Variable 1, but the consequence is that all other variable pairings are around 25% correlated (i.e. the square of the Hub’s Correlation; we might like to consider this to be the ‘background correlation’).

Notes:

- 1) We instructed the model to use a 50% Rank Correlation. Whilst the corresponding Linear Correlation will be very similar in value it will be slightly different. If we want the Output Linear Correlation to be closer to the 50% Level we may have to alter the Rank Correlation by small degree, say 51%.

- 2) If we were to use an input Rank Correlation of 40%, the background correlation would be in the region of 16%

Let’s just dig a little deeper to get an insight into what is happening here by looking at the interaction between individual pairs of values. Cost Items 1 and 2 behave in exactly the same way as they did for Chain-Linking where Cost Item 1 was taken as the Lead Variable in the Chain (see previous Figures 3.33, 3.34 and 3.35). We would get a similar set of pictures if we look at what is happening between each successive value and the Hub Variable. If we compare the consequential interaction of Cost Items 2 and 3, we will get the ‘swarm of bees’ in Figure 3.39. We would get a similar position if we compared other combinations of variable values as they are all just one step away from a Hub-Correlated pair … very similar to being two steps removed from the Chain-Link diagonal.

Table 3.14 Monte Carlo Output Correlation with 50% Hub-Linked Input Correlation

However, you may be disappointed, but probably not surprised, that if we change the Hub Variable we will get a different result, as illustrated in Figure 3.40 in comparison with previous Figure 3.38. (In this latter case, we used Cost Item 10.) On the brighter side, there is still a broader range of potential model outcomes than we would get with independent events. However, it does mean that we would need to be careful in picking our Hub variable. In the spirit of TRACEability (Transparent, Repeatable, Appropriate, Credible and Experientially-based), we should have a sound logical reason, or better still evidence, of why we have chosen that particular variable as the driver for all the others.

Figure 3.39 Consequential Hub-Linked Correlation for Cost Items 2 and 3

Figure 3.40 Example of 50% Hub-Linked Correlation Using a Different Hub Variable

Again, using a simple Normal bi-variate Copula as we have here to drive correlation through our model using a specified Lead or Hub-variable, does not give us a constant or Background Isometric correlation across all variables … but it does get us a lot closer than the Chain-Linked model.

3.2.5 Using a Hub-Linked model to drive a background isometric correlation

Suppose that we find that our chosen Monte Carlo application works as it does for a Hub-Linked model, and we want to get a background Correlation of a particular value, here’s a little cheat that can work. Again, for comparison we’ll look at achieving a background correlation of around 50%. We’ll reverse engineer the fact that the Hub-Linked model above created a consequential correlation equal to the square of the Hub-based Correlation.

- Let’s create a dummy variable with a uniform distribution but a zero value (or next to nothing, so that its inclusion is irrelevant from an accuracy perspective)

- Use this dummy variable as the Hub variable to which all others are correlated … but at a Correlation level equal to the square root of the background correlation we want. In this case we will use 70.71% as the square root of 50%

When we run our simulation, we get an output similar to Figure 3.41 with a Correlation Matrix as Table 3.15.

Just to wrap this up, let’s have a look in Figure 3.42 at what our background Isometric Correlation of 25% looks like using this little cheat:

- 25% is midway in the starting correlation of 20% to 30% as recommended by Smart (2009), which in turn was based on the on observations made by Book (1997, 1999)

- The Square Root of 25% is 50%, which is what we will use as the correlation level for our Dummy Hub variable

3.2.6 Which way should we go?

The answer is ‘Do not build it ourselves in Microsoft Excel.’ The files are likely to be large, unwieldly and limited in potential functionality. We are much better finding a Commercial Off-The-Shelf (COTS) Monte Carlo Simulation tool which meets our needs. There are a number in the market that are or operate as Microsoft Excel Add-Ins, working seamlessly in supplementing Excel without the need for worrying whether our calculations are correct. There are other COTS Monte Carlo Simulation tools that are not integrated with Microsoft Excel but which can still be used by cutting and pasting data between them.

How these various toolsets ‘push’ correlation through the model is usually hidden under that rather vague term ‘proprietary information’ but in the views of Book (1999) and Vose (2008) many of them use the algorithms published by Iman and Conover (1982). We should run a few tests to see if we get the outputs we expect:

Figure 3.41 Using Hub-Linked Correlation with a Dummy Variable to Derive Isometric Correlation

Table 3.15 Monte Carlo Output Correlation with 50% Isometric Hub-Linked Input Correlation

- Some toolsets allow us to view the Output Correlation Matrix. Does it give us what we expect?

- If a Correlation Matrix is not a standard output, can we generate the correlation between any pairs of variables generated within the model, and plot them on a scatter diagram?

Figure 3.42 Using Hub-Linked Correlation with a Dummy Variable to Derive 25% Isometric Correlation

- Try to replicate the models in this book to see whether we get outputs that are compatible with the Chain-Linked, Hub-Linked or Background Isometric Models.

- Check what happens if we reverse a Chain-Linked model. Does it maintain a consistent output?

Let’s compare our three models of Correlation and draw out the differences (Table 3.16). Based on the three Correlation Matrices we have created we would expect that there was a progressive movement of each model away from total independence to total correlation … and not to be disappointed, we have that. (Don’t tell me you were all thinking that I was going to disappoint you?) The range and standard deviation of the potential outcomes all increase relative to the model to the left. The Mean and the Median remain relatively unfazed by all the correlation going on around them. The 4σ range of a Normal Distribution would yield a Confidence Interval of 95.45%. Our models get less and less ‘Nor-malesque’ as we move from left to right, becoming slightly more positively skewed (Skew > 0) and less peaky or more platykurtic (Excess Kurtosis < 0) than a Normal Distribution.

Of one thing we can be sure, whatever model of correlation we choose it will only ever be an approximation to reality, as a result we should choose a model that we can support rationally under the spirit of TRACEability. With this in mind we may want to consider defining the level of Correlation as an Uncertainty Variable itself and model it accordingly. (We could do this, but are we then not at risk of failing to make an informed judgement and passing the probability buck to a ‘black box’?)

Table 3.16 Comparison of Chain-Linked, Hub-Linked and Background Isometric Correlation Models

3.2.7 A word of warning about negative correlation in Monte Carlo Simulation

Until now we have only been considering positive correlation in relation to distributions, i.e. situations where they have a tendency to pull each other in the same direction (low with low, high with high.) We can also in theory use negative correlation in which the distributions push each other in opposite directions (low with high, high with low.)

As a general rule, ‘Don’t try this at home’, unless you have a compelling reason!

It is true that negative correlation will have the opposite effect to positive correlation where we have only two variables, forcing a Monte Carlo Simulation inwards and upwards, but it causes major logical dilemmas where we have multiple variables as we inevitably will have in the majority of cases.

Caveat augur

Think very carefully before using negative correlation, and if used, use them sparingly.

Chain-Correlation between pairs of variables push consequential correlation onto adjacent pairs of variables based on the product of the parent variables’ correlations. This may create unexpected results when using negative correlation.

We can illustrate the issues it causes with an extreme example, if we revisit our Chain-Linked Model but impose a 50% Negative Correlation between consecutive overlapping pairs, (Var 1 with Var 2, Var 2 with Var 3 etc.), giving us an alternating pattern of positive and negative correlated pairs, similar to Table 3.17.

Table 3.17 Monte Carlo Output Correlation with 50% Negative Chain-Linked Input Correlation

3.3 Modelling and analysis of Risk, Opportunity and Uncertainty

In Section 3.1.3 we defined ‘Uncertainty’ to be an expression of the lack of sureness around a variable’s value that is frequently quantified as a distribution or range of potential values with an optimistic or lower end bound and a pessimistic or upper end bound.

In principle all tasks and activities will have an uncertainty range around their cost and/or the duration or timescale. Some of the cause of the uncertainty can be due to a lack of complete definition of the task or activity to be performed, but it also includes allowance for a lack of sure knowledge in terms of the level of performance applied to complete the task or activity.

Some tasks or activities may have zero uncertainty, for example a fixed price quotation from a supplier. This doesn’t mean that there is no uncertainty, just that the uncertainty is being managed by the supplier and a level of uncertainty has been baked into the quotation.

There will be some tasks or activities that may or may not have to be addressed, over and above the baseline project assumptions; these are Risk and Opportunities. In some circles Risk is sometimes defined as the continuum stretching between a negative ‘Threat’ and a positive ‘Opportunity’, but if we were to consult authoritative sources such as the Oxford English Dictionary (Stevenson & Waite, 2011), the more usual interpretation has a negative rather than a positive connotation. As we can surmise, this is another of those annoying cases where there is no universally accepted definition in industry. For our purposes in the context of this discussion, we shall define three terms as follows:

Definition 3.2 Risk

A Risk is an event or set of circumstances that may or may not occur, but if it does occur a Risk will have a detrimental effect on our plans, impacting negatively on the cost, quality, schedule, scope compliance and/or reputation of our project or organisation.

Definition 3.3 Opportunity

An Opportunity is an event or set of circumstances that may or may not occur, but if it does occur, an Opportunity will have a beneficial effect on our plans, impacting positively on the cost, quality, schedule, scope compliance and/or reputation of our project or organisation.

Definition 3.4 Probability of Occurrence

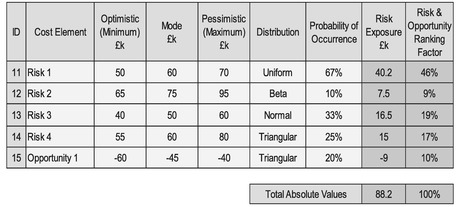

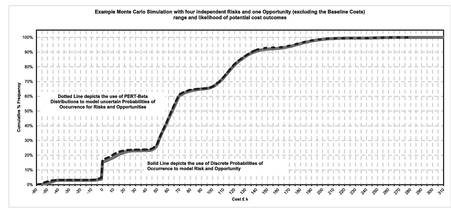

A Probability of Occurrence is a quantification of the likelihood that an associated Risk or Opportunity will occur with its consequential effects.T