- Scala for Machine Learning Second Edition

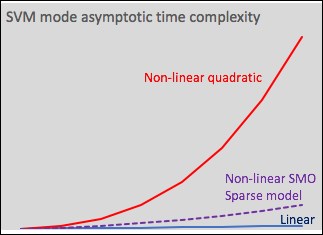

As with most discriminative models, the performance of the support vector machine depends on the optimizer selected to maximize the margin during training. Let's look at the time complexity for different configurations and applications of SVM:

- A linear model (SVM without kernel) has an asymptotic time complexity O(N) for training N labeled observations

- Nonlinear models with quadratic kernel methods (formulated as a quadratic programming problem) have an asymptotic time complexity of O(N³)

- An algorithm that uses sequential minimal optimization techniques, such as index caching or elimination of null values (as in LIBSVM), has an asymptotic time complexity of O(N²), with a worst-case scenario (quadratic optimization) of O(N³)

- Sparse problems for very large training sets (N > 10,000) also have an asymptotic time complexity of O(N²):

Figure: Asymptotic time complexity for various SVM implementations
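To make these growth rates concrete, here is a minimal sketch that computes how much the training cost grows when the training set becomes ten times larger. It considers only the asymptotic term and ignores constant factors, so it says nothing about actual run times. The names `SvmComplexity`, `growthRates`, and `growthFactor` are hypothetical and do not come from the book's source code or from LIBSVM.

```scala
// Illustrative only: relative growth of training cost, ignoring constant factors.
// All names here are hypothetical and are not part of any SVM library.
object SvmComplexity {
  // Asymptotic cost term for each configuration discussed in the list above
  val growthRates: Seq[(String, Double => Double)] = Seq(
    "linear SVM, O(N)" -> ((n: Double) => n),
    "SMO or sparse, O(N^2)" -> ((n: Double) => n * n),
    "quadratic programming, O(N^3)" -> ((n: Double) => n * n * n)
  )

  // Factor by which the cost term grows when the training set
  // grows from n observations to scale * n observations
  def growthFactor(f: Double => Double, n: Double, scale: Double): Double =
    f(scale * n) / f(n)

  def main(args: Array[String]): Unit =
    growthRates.foreach { case (label, f) =>
      // Compare 1,000 observations with 10,000 observations
      println(f"$label%-32s x${growthFactor(f, 1000.0, 10.0)}%.0f")
    }
}
```

Under these assumptions, a tenfold increase in N multiplies the cost term by 10 for the linear model, by 100 for the O(N²) cases, and by 1,000 for the O(N³) case, which is why the choice of optimizer matters for large training sets.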

The time and space complexity of the kernelized support vector machine has been the subject of recent research [12:16] [12:17].
