Chapter 7. Lessons About Process Improvement

You might recall that the first sentence in this book was: “I’ve never known anyone who could truthfully say, ‘I am building software today as well as software could ever be built.’” Unless you can legitimately make this claim, you should always look for better ways to conduct your software projects. That’s what software process improvement (SPI) is all about.

Software Process Improvement: What and Why

The objective of SPI is to reduce the cost of developing and maintaining software. It’s not to generate a shelf full of processes and procedures. It’s not to comply with the dictates of the currently most fashionable process improvement model or project management framework. Process improvement is a means to an end, the end being superior business results, however you define that. Your goal might be to deliver products faster, incur less rework, better satisfy customer needs, reduce support costs, or all of the above. Something needs to change in the way your teams work so you can achieve the goal. That change is software process improvement.

Whenever you apply a new technique to make your project work more efficient and effective, you’re practicing process improvement. Each time your team holds a retrospective to get ideas for doing their work better the next time, they’re practicing process improvement. Beginning in the late 1980s, many organizations undertook systematic improvement efforts across their departments and project teams, with varying degrees of success. These approaches often followed an established SPI model, such as the Capability Maturity Model for Software or CMM (Paulk, et al., 1995) or its successor, the Capability Maturity Model Integration or CMMI (Chrissis, Konrad, and Shrum, 2003). Thousands of organizations worldwide, particularly government suppliers in the United States, still conduct formal CMMI process capability appraisals each year (CMMI Institute, 2017). Many companies have found that a systematic SPI approach helped them achieve superior results.

In part, the agile development movement was a reaction to the often comprehensive processes embodied in many maturity model-driven SPI efforts. In fact, some early agile approaches were called lightweight methodologies. The twelfth principle behind the Agile Manifesto states, “At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behavior accordingly” (Agile Alliance, 2021c). That’s the essence of process improvement for individuals, teams, and organizations.

Don’t Fear the Process

The word process has a negative connotation in some circles. Sometimes people don’t realize that they already have a process for software development, even if it’s ill-defined or undocumented. Some developers are afraid that having to follow defined procedures will cramp their style or stifle their creativity. Managers might fear that following a defined process will slow the project down. Sure, it’s possible to adopt inappropriate processes and follow them in a dogmatic fashion that doesn’t add value or allow for variations in projects and people. But this is not a requirement! When it works properly, organizations succeed because of their processes, not in spite of them.

Sensible and appropriate processes help software organizations be successful consistently, not just when the right people unite to pull off a difficult project through heroic efforts. Process and creativity are not incompatible. I follow a process for writing books, but that process doesn’t constrain the words I put on the page in the least. My process is a structure that saves me time, keeps my work organized, and lets me continuously track progress toward my goal of completing a good book on schedule. When I can rely on an existing process, I can concentrate my mental resources on the problem at hand instead of on how to manage the effort.

Despite its conceptual simplicity, SPI is challenging. It’s not easy to get people to acknowledge shortcomings in their current ways of working. With project work always pressing, how do you coax teams to spend the time it takes to identify and address improvement areas? Persuading managers to invest in future strategic benefits when they have looming deadlines is an uphill battle. And it’s a challenge to alter an organization’s culture, yet SPI involves culture change along with modifications in technical and management practices.

Making SPI Stick

Many SPI programs fail to yield effective and sustained results. Big, shiny new change initiatives are introduced with fanfare, but they quietly disappear with no announcement or retrospective. The organization abandons the effort and tries something different later on. I think you get only two failed attempts at strategic improvement before people conclude that the organization isn’t serious about change. After two failures, few team members will take the next change initiative seriously.

Successful process improvement takes time. Organizations need to stick with it long enough to reap the benefits. Almost any systematic improvement approach can yield better results. If you bail out partway through the process, though, after you’ve invested in assessment and learning but before the changes pay off, you’ll lose your investment. Because large-scale process change is not quick, learn to take satisfaction from small victories. Try to spot improvements you can put into place quickly that will address known problems, as well as the longer-term, systemic changes.

Consistency in management leadership also is essential. One of my consulting clients was frustrated. His organization had been making excellent progress on a CMM-based improvement strategy for some time. Then a new senior manager decided to go in a different direction. He abandoned the CMM effort and switched to an ISO 9001-based quality management system approach. Those who had worked diligently on the CMM strategy were discouraged when the fruits of all their hard work were discarded. Flailing on SPI activities can disillusion those practitioners who are genuinely interested in doing better. Unless the organization has a compelling need to comply with a specific standard, such as for certification purposes, any framework for developing high-quality processes should be acceptable.

When I began a new job leading the SPI program in a large organization, I met a woman who had held the corresponding position for a year in a similar organization in the same company. I asked my counterpart what attitude the developers in her division held toward the program. She thought for a moment and then replied, “They’re just waiting for it to go away.” If SPI is viewed simply as the latest management fad, most practitioners will just ride it out, trying to get their real work done despite the distraction. That’s not a formula for successful change.

This chapter presents ten lessons I’ve learned in many years of software process improvement work in my organizations and those of my consulting clients. Perhaps they’ll help your SPI initiative to succeed.

First Steps: Software Process Improvement

I suggest you spend a few minutes on the following activities before reading the process improvement-related lessons in this chapter. As you read the lessons, contemplate to what extent each of them applies to your organization or project team.

1. What business outcomes have you not yet achieved that might indicate the need for some improved software development or management processes?

2. How successful has your organization been in the past with its SPI initiatives? If you’ve had some success, what actions and attitudes made those efforts pay off? Did you get better results by applying an established improvement model or with homegrown approaches?

3. Identify any shortcomings or problems in how your organization works to improve its software development and management processes.

4. State the impacts that each problem has on your ability to identify, design, implement, and roll out better processes and practices. How do the problems impede your ability to continuously improve the way you build software or your success in product delivery?

5. For each problem from Step #3, identify the root causes that contribute to the problem. Problems, impacts, and root causes can blur together, so try to tease them apart and see their connections. You might find multiple root causes that contribute to the same problem, as well as several problems that arise from a single root cause.

6. As you read this chapter, list any practices that would be useful to your team.

Lesson #51. Watch out for “Management by Businessweek.”

Frustration with disappointing results is a powerful motivation for trying a different approach. However, you need to be confident that any new strategy you adopt has a good chance of solving your problem. Organizations sometimes turn to the latest buzzword solution, the hot new thing in software development, as the magic elixir for their software challenges.

A manager might read about a promising—but possibly overhyped—methodology and insist that his organization adopt it immediately to cure their ills. I’ve heard this phenomenon called “management by Businessweek.” Perhaps a developer is enthused after hearing a conference presentation about a new way of working and wants his team to try it. The drive to improve is laudable, but you need to direct that energy toward the right problem and assess how well a potential solution fits your culture before adopting it.

Over the years, people have leaped onto the bandwagons of countless new software engineering and management paradigms, methodologies, and frameworks. Among others, we’ve gone through:

• Structured systems analysis and design

• Object-oriented programming

• Information engineering

• Software factory

• Rapid application development

• Spiral model

• Dynamic systems development method

• Test-driven development

• Rational Unified Process

• DevOps

More recently, agile software development in numerous variations—Extreme Programming, Adaptive Software Development, Feature-Driven Development, Scrum, Lean, Kanban, and Scaled Agile Framework, among others—has exemplified this pursuit of ideal solutions.

Alas, as Frederick P. Brooks, Jr., (1995) eloquently informed us, there are no silver bullets: “There is no single development, in either technology or management technique, which by itself promises even one order-of-magnitude improvement within a decade in productivity, in reliability, in simplicity.” All of the approaches listed above have their merits and limitations; all must be applied to appropriate problems by properly prepared teams and managers. I’ll use a hypothetical new software development approach called Method-9 as an example for this discussion.

First Problem, Then Solution

The articles and books that its inventors and early adopters wrote about Method-9 praised its benefits. Some companies are drawn to Method-9 because they want their products to satisfy customer needs better. Maybe you want to deliver useful software faster (and who doesn’t?). Method-9 can get you there. Perhaps you wish to reduce the defects that annoy customers and drain the team’s time with rework (again, who doesn’t?). Method-9 to the rescue! This is the essence of process improvement: setting goals, identifying barriers, and choosing techniques you believe will address them.

Before you settle on any new development approach, though, ask yourself, “What’s preventing us from achieving those better results today?” (Wiegers, 2019f). If you want to deliver useful products faster, what’s slowing you down? If your goal is fewer defects and less rework, why do your products contain too many bugs today? If your ambition is to respond faster to changing needs, what’s standing in your way?

In other words, if Method-9 is the answer—at least according to that article you read—what was the question?

I suspect that not all organizations perform a careful root cause analysis before they latch on to what sounds like a promising solution. Setting improvement goals is a great start, but you must also understand the current obstacles to achieving those goals. You need to treat real causes, not symptoms. If you don’t understand those problems, choosing any new approach is just a hopeful shot in the dark.

A Root Cause Example

Suppose you want to deliver software products that meet the customers’ needs better than in the past. You’ve read that Method-9 teams include a role called the Vision Guru, who’s responsible for ensuring the product achieves the desired outcome. “Perfect!” you think. “The Vision Guru will make sure we build the right thing. Happy customers are guaranteed.” Problem solved, right? Maybe, but I suggest that, before making any wholesale process changes, your team should understand why your products don’t thrill your customers already.

Root cause analysis is a process of thinking backward, asking “why” several times until you get to issues that you can target with thoughtfully selected improvement actions. The first contributing cause suggested might not be directly actionable; nor might it be the ultimate root cause. Therefore, addressing that initial cause won’t solve the problem. You need to ask “why” another time or two to ensure that you’re getting to the tips of the analysis tree.

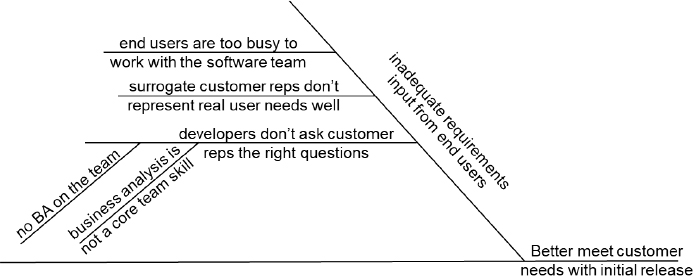

Figure 7.1 shows a portion of a fishbone diagram—also called an Ishikawa or cause-and-effect diagram—which is a convenient way to work through a root cause analysis. The only tools you need are a few interested stakeholders, a whiteboard, and some markers. Let’s walk through that diagram.

Figure 7.1 Root cause analysis often is depicted as a fishbone diagram.

Your goal is to better meet customer needs with the initial release of your software products. Write that goal on a long horizontal line. Alternatively, you could phrase it as a problem statement: “Initial product release does not meet customer needs.” In either case, that long horizontal line—the backbone in the fishbone diagram—represents your target issue.

Next, ask your group, “Why are we not already meeting our customer needs?” Now the analysis begins. One possible answer is that the team doesn’t get adequate input to the requirements from end users—a common situation. Write that cause on a diagonal line coming off the goal statement line. That’s a good start, but you need a deeper understanding to know how to solve the problem. You ask, “Why not?”

One member of the group says, “We’ve tried to talk to real users, but their managers say they’re too busy to work with the software team.” Someone else complains that the surrogate customer representatives who work with the team don’t do a good job of presenting the ultimate users’ real needs. Write those second-level causes on horizontal lines coming off the parent problem’s diagonal line.

A third participant points out that the developers who attempt to elicit requirements aren’t skilled at asking customer reps the right questions. Then comes the usual follow-up question: “Why not?” There could be multiple reasons, including a lack of education or interest in requirements on the developers’ part. It might be that business analysis is neither a core team skill nor a dedicated team role. Each cause goes onto a new diagonal line attached to its parent.

Now you’re getting to actionable barriers that stand between your current team’s performance and where you all want to be. Continue this layered analysis until the participants agree that they understand why they aren’t already achieving the desired results. The diagram might get messy; consider writing the causes on sticky notes so you can shuffle them around as the exploration continues. I’ve found this technique to be remarkably efficient at focusing the participants’ thinking and quickly reaching a clear understanding of the situation.
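For readers who like to see the structure spelled out, the layered analysis above can be sketched as a small tree in Python. This is only an illustration, not part of the technique itself: the `Cause` class and `leaf_causes` function are hypothetical names, and the nesting of causes reflects one reading of the walkthrough rather than an exact copy of Figure 7.1. The leaves of the tree are the "tips" where asking "why" stopped, which are the candidates for improvement actions.

```python
from dataclasses import dataclass, field


@dataclass
class Cause:
    """One line on the fishbone: a cause plus the deeper causes behind it."""
    text: str
    children: list = field(default_factory=list)  # list of Cause objects


def leaf_causes(node):
    """Yield the causes that have no deeper 'why' recorded beneath them."""
    if not node.children:
        yield node.text
    for child in node.children:
        yield from leaf_causes(child)


# Assumed nesting, based on the discussion in the text.
backbone = Cause("Initial product release does not meet customer needs", [
    Cause("Team gets inadequate requirements input from end users", [
        Cause("Users' managers say users are too busy to work with the team"),
        Cause("Surrogate customer reps don't present users' real needs well"),
        Cause("Developers aren't skilled at asking the right questions", [
            Cause("Lack of education or interest in requirements"),
            Cause("Business analysis is neither a core skill nor a dedicated role"),
        ]),
    ]),
])

for cause in leaf_causes(backbone):
    print("Actionable candidate:", cause)
```

Whether a leaf is truly actionable is still a judgment call for the group; the sketch only shows where the questioning ended.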

Diagnosis Leads to Cure

In subsequent brainstorming sessions, team members can explore practical solutions to control those root causes. Then you are well on your way toward achieving superior performance. You might conclude that adding experienced BAs to your teams could be more valuable than adopting Method-9 with its Vision Guru. Or maybe the combination of the two will prove to be the secret sauce. You just don’t know until you think it through.

As you contemplate whether a new development method will work for you, look past the hype and the fads. Understand the prerequisites and risks associated with the new approach, and then balance those against a realistic appraisal of the potential payoff. Good questions to explore include these:

• Will your team need training, tools, or consulting assistance to get started and sustain progress?

• Would the solution’s cost yield a high return on investment?

• What possible cultural impacts would the transition have on your team, your customers, and their respective organizations and businesses?

• How ugly could the learning curve be?

The insights from a root cause analysis can point you toward better practices to address each problem you discover. Without exploring the barriers between where you are today and your goals, don’t be surprised if the problems are still there after you switch to a different development strategy. Try a root cause analysis instead of chasing after the Hottest New Thing someone read in a headline.

Root cause analysis takes less time than you might fear. It’s a sound investment in focusing your improvement efforts effectively. Any doctor will tell you that it’s essential to understand the disease before prescribing a treatment.

Lesson #52. Ask not, “What’s in it for me?” Ask, “What’s in it for us?”

When people are asked to use a new development approach, follow a different procedure, or undertake an unexpected task, their instinctive reaction is to wonder, “What’s in it for me?” That’s a natural human reaction, but it’s not quite the proper question. The right question is, “What’s in it for us?” The us in this question could refer to the rest of the team, the IT organization, the company, or even humanity as a whole—anyone beyond the individual. Effective change initiatives must consider the team’s collective results, not just the impact on each individual’s productivity, effectiveness, or comfort level. The people involved with leading an improvement effort should be able to answer the question “What’s in it for us?” persuasively. If there’s some important value in it for us, then there’s also something in it for me, considering that I am part of the us.

Asking a busy project team member to do something extra, like reviewing a colleague’s work, might not seem to provide any immediate benefit for that individual. However, that effort could collectively save the team more time than the individual invested, thereby providing a net positive contribution to the project. Enlisting colleagues to examine some of your requirements or code for errors does consume their time. A code review could involve two or three hours of work per participant. Those are hours that the reviewers aren’t spending on their own responsibilities. However, an effective review reveals defects, and we’ve seen that it’s always cheaper to correct defects earlier rather than later.

The Team Payoff

To illustrate the idea of team versus individual payoffs, let’s work through a hypothetical example to see how a peer review can yield a substantial collective benefit. Suppose that my team’s BA, Ari, has written several pages of requirements, accompanied by some visual analysis models and a state table. Ari asks two other colleagues and me to review her requirements. The four of us each spend one hour examining the material before a team review meeting, which also lasts an hour:

Preparation effort = 1 hour/reviewer * 4 reviewers = 4 hours

Review meeting effort = 1 hour/reviewer * 4 reviewers = 4 hours

Total review effort = 4 hours + 4 hours = 8 hours

Let’s assume that our review discovers 24 defects of varying severity and that it takes Ari an average of 5 minutes to correct each one:

Actual rework effort = 24 defects * 0.0833 hour/defect = 2 hours

Now, imagine that Ari didn’t solicit this review. Those defects would remain in the requirements set, only to be discovered later in the development cycle. Ari would still need to correct the requirements, and other team members would have to redo any designs, code, tests, and documentation based on the erroneous requirements. This work could easily take ten times as long as quickly fixing the bad requirements. The rework cost could be far greater if those defects made it into the final product to be discovered by customers. This ten-fold effort multiplication factor lets us estimate the potential rework had Ari and company not held the review:

Potential rework effort = 24 defects * 0.833 hour/defect = 20 hours

Thus, this hypothetical requirements review prevented perhaps 18 hours of late-stage rework:

Rework effort prevented = 20 hours potential rework – 2 hours actual rework = 18 hours

This simple analysis suggests a minimum return on investment from the review of 225 percent:

ROI from peer review = 18 avoided rework hours / 8 hours review effort = 2.25

That’s a tangible benefit for the project team as a whole. There’s something in it for us, even if there isn’t something in it for each participant.
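If you want to try this arithmetic with your own numbers, a minimal Python sketch follows. The function name `review_roi` and its parameters are hypothetical, chosen for this illustration. The tenfold late-rework multiplier is the same assumption used in the example above, not a measured value, so substitute your own data where you have it.

```python
def review_roi(reviewers, prep_hours, meeting_hours,
               defects, fix_minutes_now, late_multiplier):
    """Return (review effort, rework prevented, ROI ratio); efforts in hours."""
    # Every participant spends time preparing and attending the meeting.
    review_effort = reviewers * (prep_hours + meeting_hours)
    # Cost of fixing the defects right after the review.
    actual_rework = defects * fix_minutes_now / 60
    # Assumed cost if the same defects were found later in development.
    potential_rework = actual_rework * late_multiplier
    rework_prevented = potential_rework - actual_rework
    return review_effort, rework_prevented, rework_prevented / review_effort


# The numbers from Ari's requirements review.
effort, prevented, roi = review_roi(
    reviewers=4, prep_hours=1, meeting_hours=1,
    defects=24, fix_minutes_now=5, late_multiplier=10,
)
print(f"{effort:.0f} h review effort, {prevented:.0f} h rework prevented, "
      f"ROI {roi:.2f}")
# prints: 8 h review effort, 18 h rework prevented, ROI 2.25
```

Changing `late_multiplier` or the defect count shows quickly how sensitive the payoff is to those assumptions.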

Numerous companies that have measured the benefits of performing the type of rigorous peer review called inspection have reported results more dramatic than this example. For instance, Hewlett-Packard measured a return on investment of ten to one from their inspection program (Grady and Van Slack, 1994). IBM found that an hour of inspection on average saved eighty-two hours of rework effort compared to finding defects in the released product (Holland, 1999). As with all technical practices, your mileage may vary, but few single software engineering practices can potentially yield a 10-fold return on investment.

The Personal Payoff

Suppose you explain this analysis to a reluctant team member who doesn’t see what’s in it for him if he spends his valuable time reviewing your work. He might buy your reasoning and recognize that his two hours of review time could contribute to a significant payoff for the team. However, he still sees nothing in it for himself. How can you convince him otherwise? As a frequent reviewer myself, I’ve found many benefits from the time I spent examining someone else’s work, including these:

• I learn something every time I look over a colleague’s shoulder. They might be using some coding technique I’m not familiar with, or perhaps they’ve found a way to communicate requirements that’s better than my method.

• I better understand certain aspects of the project, which could help me perform my part of the work better. It also helps to ensure against critical knowledge loss when a particular developer leaves the organization.

• A review disseminates knowledge across a project team, which enhances the whole team’s performance. It’s reasonable to expect all team members to share their knowledge with their colleagues; reviews are one way to do that.

Are these benefits worth the hours I invested in reviewing someone else’s work? Maybe not. But there’s yet another payoff. At some point, I’ll ask some colleagues to review my work. As a software developer and as an author, I’ve learned the great value of having a diverse group of colleagues examine my work. The errors they find and their improvement suggestions invariably help me create a superior product.

Review input from others makes me aware of the kinds of errors I make. This knowledge, in turn, helps me do a better job from the outset on all my future deliverables. The bottom line is that my participation in a collective quality-improvement activity always pays off for both my colleagues and me.

Take One for the Team

The next time a colleague or a manager asks you to do something on the project that doesn’t appear to benefit you personally, think past your self-interest. Employees have a responsibility to conform to established team and company development practices. It’s fair to ask, “What’s in it for us if I do this?” The burden is on the requester to explain how your contribution will benefit the team collectively. Then it’s up to you to contribute to the team’s mutual success.

Lesson #53. The best motivation for changing how people work is pain.

When I was traveling on a consulting job in December of 2000, I slipped on some ice-covered steps and fell, badly injuring my right shoulder. It was the most severe pain I’ve ever experienced. Three days later, I finally got home and saw a doctor, who informed me that I had a rotator cuff tear. A physical therapist gave me exercises to do at home. The pain powerfully motivated me to do the exercises and try to recover quickly, especially because I’m very dominantly right-handed—my left arm is primarily for visual symmetry.

As with individuals, pain is a powerful change motivator for teams and organizations. I’m not talking about artificial, externally induced pain, such as managers or customers who demand the impossible, but rather the very real pain the team experiences from its current ways of working. One way to encourage people to change is to explain how green the grass will be when they reach CMMI Level 5 or are fully Scrum-Lean-Kanban-agilified. However, a more compelling motivation to get people moving is to point out that the grass behind them is on fire. Process improvement should emphasize reducing project pain by first extinguishing, and then preventing, fires.

Process improvement activities aren’t that much fun. They’re a distraction from the project work that interests team members the most and delivers business value. Change efforts can feel like eternally pushing a boulder uphill, as so many factors oppose making sustained organizational alterations. To motivate people to engage in the change initiative, the pain-reduction promise must outweigh the discomfort of the effort itself. And at some point, those involved must feel the pain relief, or they won’t get on board the next time. Find the influencers in your organization and their pain points, connect those to the change goals, and you should have a strong foundation on which to build the change effort.

Pain Hurts!

I once led SPI activities in a fast-moving group that built a large corporation’s websites. They were overwhelmed with requests for new projects and enhancements to their existing sites. They also had a configuration management problem, using two web servers that had largely duplicate—but not precisely the same—content. Everyone in this group accepted my recommendations for introducing a practical change request system and tuning up their configuration management practices. They saw how much confusion and wasted effort their current practices caused. The processes we put into place helped considerably to reduce the configuration management pain level.

How would you define “pain” in your organization? What recurrent problems do your projects experience? If you can identify those, you can focus improvement efforts where you know they’ll yield the greatest rewards. Common examples of project pain include these:

• Failing to meet planned delivery schedules.

• Releasing products with excessive defects.

• Failing to keep up with change requests.

• Creating systems that aren’t easily extended without significant rework.

• Dealing with system failures that force the on-call support person to work in the middle of the night.

• Delivering products that don’t adequately meet customer needs.

• Dealing with managers who lack a sufficient understanding of current technology issues and software development approaches.

• Suffering from risks that weren’t identified or mitigated.

The purpose of any process assessment activity (a team discussion, a project retrospective, or an appraisal by an outside consultant) is to identify these problem areas. Then you can determine the root causes of the problems and select actions to address them. As a consultant, I rarely give my clients surprising observations. They usually know where their problems lie, but they haven’t yet taken the time to confront and resolve the resulting pain. Outside perspectives can help us see problems more clearly and motivate action.

Invisible Pain

Long ago, I was the customer working with a developer, Jean, to create a database and a simple query interface. We didn’t have written requirements, just frequent discussions about the system’s capabilities. At one point, Jean’s manager called me, a little angry. “You have to stop changing the requirements so much,” he demanded. “Jean can’t make any progress because you request so many changes.”

This problem was news to me. Jean had never indicated that our uncertainty about requirements was a problem. The difficulty that my approach caused Jean wasn’t visible to me. Had she or her manager explained their preferred process at the outset, I would have been happy to follow it. Jean and I agreed on a more structured approach for specifying my needs from then on, and we made better progress.

This experience taught me that problems that affect certain project participants might not be apparent to others. This highlights the need for clear communication of expectations and issues among all the stakeholders. It also revealed an important corollary to this section’s lesson: It’s hard to sell a better mousetrap to people who don’t know they have mice.

If you aren’t aware of your current practices’ negative impacts, you probably won’t be receptive to suggestions for change. Any proposed change would appear to be a solution in search of a problem. Thus, an important aspect of SPI is identifying the causes and costs of process-related problems and then communicating those to the affected people. That knowledge might encourage all those involved to do something different.

Sometimes you can stimulate this awareness in small ways. I break the students in my training classes into small groups to discuss problems their project teams currently experience. Those discussions are most fruitful when the group members represent different project viewpoints: BA, project manager, developer, customer, tester, marketing staff, and so forth. After one such discussion, a customer representative shared an eye-opening insight. “I have more sympathy for the developers now,” she said. That sympathy is a good starting point toward better ways of working that benefit everyone.

Lesson #54. When steering an organization toward new ways of working, use gentle pressure, relentlessly applied.

When I was in the SPI business some years ago, a joke circulated through our community:

Q: How many process improvement leaders does it take to change a light bulb?

A: Only one, but the light bulb must be willing to change.

I’ve heard that therapists have an analogous joke.

There’s truth in that bit of humor. No one can truly change how someone else thinks, behaves, or works. You can only employ mechanisms that motivate them to behave in some different way. You can explain why making some change is to their—and other people’s—advantage and hope they accept your reasoning. You can even threaten or punish people if they don’t get on board, though that’s not a recommended SPI motivational technique. Ultimately, though, it’s up to each individual to decide that they’re willing to operate differently in the future.

Steering

Effectively steering a software organization—be it a small group or an entire company—toward new ways of working requires the change leaders to exert continuous, gentle pressure in the desired direction (The Mann Group, 2019). To establish the desired direction, the change initiative’s goals must be well defined, clearly communicated, and clearly beneficial for the organization’s business success. It’s hard for any organization or individual to make quick, radical changes. Incremental change is less disruptive and more readily threaded into everyone’s daily routine. It takes time for individuals to absorb both different practices and a different mindset.

Leaders must try to get buy-in from an emotional perspective, as well. Everyone involved needs to understand how they can contribute to the effort’s success. Seek out early adopters who are receptive and can serve as champions who advocate for the change with their peers. People who implement and are affected by the change must feel as though their voices are heard and that the change isn’t being shoved down their throats.

If you’re a change leader, here are some ways to gently—yet relentlessly—apply pressure to keep the organization moving toward its destination.

• Define the change initiative’s objectives, the motivation for it, and the key results being sought. Vague targets like becoming “world-class” or “performance leaders” aren’t helpful.

• Set realistic, meaningful goals and expectations. Team members will resist demands to dramatically and instantaneously change how they work. They might go through the motions, but that doesn’t mean they’re sincerely complying with the initiative or that they acknowledge its value.

• Treat the change initiative as a project with activities, deliverables, milestones, and responsibilities. Oh, and don’t forget resources. You need to provide the necessary time so people can work on improvement activities in parallel with their project commitments.

• Aim for some small, early wins to show that the change initiative is already beginning to yield results. Make clear what benefits those wins provide to the organization. Teams that successfully pilot new practices blaze the trail for the others.

• Keep the change initiative visible. Make it a regular part of status meetings and reports. Track the SPI effort’s progress toward its objectives along with regular project tracking activities.

• Hold people who agree to perform certain SPI activities accountable for their commitments. If project work always takes exclusive priority, the improvement actions will be neglected.

• Publicly tout even small successes as evidence that the new ways of working are paying off. Keep the practices visible with this low-key, positive pressure until they become ingrained in team members’ individual work styles and organizational operations.

• Select metrics—key performance indicators—that will demonstrate progress toward the objectives and the impact those changes have on project performance. The latter are lagging indicators. You initiate a change activity because you’re confident the new ways will yield better results. It takes time for those new practices to have an impact on projects, though. Communicating positive metric trends can help sustain commitment from the participants.

• Provide any needed training in the new ways of working. Observe whether team members are applying the training, but accept the reality of the learning curve. It takes time for people to put what they’ve learned into effective practice.

Training is an investment in future performance. At a year-in-review meeting with nearly 2000 people, the director of a corporate research laboratory reported as an achievement that a large number of scientists had taken design-of-experiments training. That’s not an achievement—it’s an investment. I wish management had followed up with the scientists several months later to see what they were doing better because of that training. Management didn’t check to see what return they got from their training investment.

Managing Upward

Process changes affect managers as well as technical team members. Managing upward is a valuable skill for any change leader. If you’re a change leader, coach your managers regarding what to say publicly, the behaviors and results to look for, and the outcomes to either reward or correct.

I once had a consulting client who was adept at managing upward. Linda had figured out how to present the value of the change project to each manager in terms that would resonate with them and gain their backing. She knew how to guide her managers to publicly reinforce the importance of the change initiative she led. Linda was skilled at navigating the organizational politics to align key leaders behind the change project without becoming entangled in the politics herself. She had mastered a delicate dance.

One organization with a successful SPI program documented the attitudes and behaviors they expected managers to exhibit in the future. Figure 7.2 shows just a few of their expectations. Whether you’re aiming for a higher maturity level in a process framework such as CMMI or implementing agile development across an entire organization, managers need to understand how their own actions and expectations must change. Managers who lead by example, practicing the new ways of working themselves and publicly reinforcing them, send a continuous, positive signal to everyone else.

Figure 7.2 One organization defined some expectations of management behavior associated with improved software processes.

My father once told me that if I were ever in a big crowd of people all trying to get through the same door, I should just keep my feet moving, and I would get there. I’ve tried this technique—it works. The same is true with software process improvement. Keep your feet moving in the desired direction, and you’ll make steady progress toward better ways of working and superior outcomes.

Lesson #55. You don’t have time to make each mistake that every practitioner before you has already made.

As I mentioned in Chapter 1, I have little formal education in software development, just three programming courses I took in college long ago. Since then, though, I’ve learned a great deal more by reading books and articles, taking training courses, and attending conferences and professional society meetings. I judge the value of a learning experience by how many ideas I write down for things that I want to try when I get back to work. (I hope you’re doing that as you read this book.) The best learning experiences inform me about techniques that I can share with my coworkers, so we all become more capable.

Picking up knowledge from other people is a lot more efficient than climbing every learning curve yourself. That’s the point of this book, sharing insights from my career to save you time as you learn about and try to apply the same practices. All professionals should spend part of their time acquiring knowledge and broadening their skills in this ever-growing field.

One software group I was in held weekly learning sessions. In rotation, each team member would select a magazine article or book chapter and summarize it for the others. We discussed how we could apply the topic to our work. Anyone who attended a conference shared the highlights with the rest of us. Such learning sessions propagated a lot of relevant information throughout the team. It did feel a bit awkward when I joined a new group at Kodak that was going chapter by chapter in this fashion through my first book, Creating a Software Engineering Culture. However, we had some interesting discussions, and they could understand my viewpoint as I worked with them to improve their development processes.

Not every method I took home from a conference worked as well as I hoped. One enthusiastic speaker touted his technique for stimulating ideas during a requirements elicitation workshop. I gave his suggestion a try after I returned to work; it fell flat on its face. I got excited about use cases when I heard conference speakers praise their potential. The first time I tried to apply use cases, I struggled to explain them to my user representatives and make them work. But I pushed through that initial difficulty and was able to employ use cases successfully after that. I had to persevere through the discomfort of learning a new skill to get to the payoff. The translation from an idea to a routine practice always involves a learning curve.

The Learning Curve

The learning curve describes how proficient someone becomes at performing a new task or technique as a function of their experience with it. We all confront countless learning curves in life whenever we try to do anything novel. We shouldn’t expect a method to yield its full potential from the outset. When project teams attempt unfamiliar techniques, their plans must account for the time it will take them to get up to speed. If they don’t succeed in mastering the new practice, the time they invested is lost forever.

You’re undoubtedly interested in the overall work performance—productivity, perhaps—that your repertoire of techniques lets you achieve. Figure 7.3 shows that you begin at some initial productivity level, which you want to enhance through an improved process, practice, method, or tool. Your first step is to undertake some learning experience. Your productivity takes an immediate hit because you don’t get any work done during the time you invest in learning (Glass, 2003).

Figure 7.3 The learning curve reduces your productivity before increasing it.

Productivity decreases further as you take time to create new processes, wrestle to discover how to make a new technique work, acquire and learn to use a new tool, and otherwise get up to speed. As you begin to master some new way of working, you could see gains but also experience some setbacks—the jagged part of the productivity growth curve in Figure 7.3. If all goes well, you eventually reap benefits from your investment and enjoy increased effectiveness, efficiency, and quality. Keep the learning curve’s reality in mind as you incorporate new practices into your personal activities, your team, and your organization.
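To put rough numbers on that curve, here is a minimal sketch of a break-even calculation. It assumes a deliberately simplified shape: productivity drops to a flat reduced level for a fixed number of weeks and then jumps to a flat improved level, ignoring the jagged part of the curve. The function name, parameters, and figures are all hypothetical illustrations, not measurements from any project.

```python
def weeks_to_break_even(baseline, dip_weeks, dip_level, improved, horizon=520):
    """Return the first week in which cumulative output with the new practice
    catches up with what the old practice would have produced, or None if
    that never happens within `horizon` weeks.

    Productivity values are arbitrary units of work per week (illustrative).
    """
    cumulative_old = 0.0
    cumulative_new = 0.0
    for week in range(1, horizon + 1):
        cumulative_old += baseline
        # During the learning period the team works at the reduced level.
        cumulative_new += dip_level if week <= dip_weeks else improved
        if week > dip_weeks and cumulative_new >= cumulative_old:
            return week
    return None


# Hypothetical team: 10 units/week today, 6 units/week during a 4-week
# learning period, 12 units/week once the new practice is mastered.
print(weeks_to_break_even(baseline=10, dip_weeks=4, dip_level=6, improved=12))
```

With these made-up numbers, the team gives up 16 units of work while learning and wins back 2 units each week afterward, so the investment is not repaid until week 12. If the improved level never exceeds the baseline, the function returns None, which is the case where the invested time is simply lost.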

Good Practices

I find it amusing when someone complains about another person, “He always thinks his way is best.” Of course he does! Why would anyone deliberately do something in a way that they knew was not the best option? That would be silly.

The problem doesn’t come from thinking your way is best; it comes if you don’t consider that others might have better ways and aren’t willing to learn from them. I collect good ideas and useful techniques from any source I encounter. It would be foolish to reject something that’s demonstrably better than my old way just because of pride.

Peer reviews provide a good opportunity to observe other ways of working. You might see someone using unfamiliar language features, clever coding tricks, or something else that triggers a lightbulb in your brain. During a code review, I saw that the programmer’s commenting style was obviously superior to mine. I immediately adopted his style and used it from then on. That’s an easy way to learn and improve.

People often talk about industry best practices, which immediately triggers a debate about which practice is best, for what purpose, in what context, and who decided that. An Internet search will reveal scores of articles and books about software best practices (see [Foord, 2017] as one example). Good stuff all, but best practice is a strong statement.

My advice is to accumulate a toolbox filled with good practices. A different technique simply has to be better than what you’re doing now to go into your toolbox. For instance, my book Software Requirements with coauthor Joy Beatty (2013) describes more than fifty good practices relating to requirements development and management. I believe some of them truly are best practices in their niche, but others might disagree. The point is not worth debating—what works for you might not work for someone else.

As you accumulate tools and techniques, hang onto most of those that you’ve used successfully in the past. Replace a current technique with a new one only when the new one yields superior results in all cases. Often, the two can coexist, and you can choose between them as the situation dictates. For instance, a UML activity diagram is essentially a flowchart on steroids, but sometimes a basic flowchart shows everything you need to see. So have both tools available, and use the simplest one that gets the job done.

As I’ve mentioned previously, I’m a proponent of use cases as a requirements elicitation technique. In an otherwise very good book on use cases, the authors recommend that the reader discard certain requirements tools from their repertoire of solutions: “As with requirements lists, we recommend that DFDs [data flow diagrams] be dropped from the requirements analyst’s toolbox” (Kulak and Guiney, 2004). I consider that poor advice, as I’ve encountered many situations in which data flow diagrams are useful in requirements analysis. Why not keep a variety of hammers in the toolbox in case you encounter an assortment of nails during your project work?

Lesson #56. Good judgment and experience sometimes trump a defined process.

I knew a project team that tried diligently to conform to the heavy-duty process framework their organization had implemented. They dedicated a person to write a detailed project plan for their six-month project, as that’s what they thought the process required. He completed the plan shortly before the project was finished.

This is the kind of story that gives SPI a bad reputation. That team completely missed the point of having a process: it’s not to conform to the process, but to get better business results by applying the process than you would otherwise. The process is supposed to work for you, not the reverse. Sensible processes, situationally adjusted based on experience, guide teams to repeated success. People need to select, scale, and adapt processes to yield the maximum benefit for each situation. A process is a structure, not a straitjacket.

Simply having a defined process available is no guarantee that it’s sensible, effective, appropriate, or adds value. However, a process often is in place for a good reason. It’s always okay to question a process, but it’s not always okay to subvert it. Be sure you understand the rationale and intent behind a step that you question before electing to bypass it. In a regulated industry, some process steps are included to achieve mandatory compliance with a quality management system. Skipping a required step could cause problems when you try to get a product certified. Usually, though, the process steps are there simply because some people agreed that they would add value to the team’s work and the customer’s product.

Processes and Rhythms

Organizations put processes and methodologies in place to try to become more effective. Often the processes contribute significantly to success; sometimes they don’t. A process might have made sense when it was written but doesn’t serve the current situation well. Even with good intentions, people sometimes create processes that are too elaborate and prescriptive. They seem like a good idea, but if they’re not practical, people will ignore them. A defined process represents good judgment version 1.0, but processes don’t replace thinking. The good judgment that grows out of experience helps wise practitioners know when following a process is smart and when it’s smarter to bend the process a bit and then improve it based on what they learn.

Whenever people don’t follow a process that they claim to be—or should be—using, I see just three possible courses of action:

1. Start following the process, because it’s the best way we know to perform that particular activity.

2. If the process doesn’t meet your needs, modify it to be more effective and practical—and then follow it.

3. Throw away the process and stop pretending that you follow it.

The word process leaves a bad taste in some people’s mouths, but it doesn’t have to be that way. A process simply describes how individuals and teams get their work done. A process could be random and chaotic, highly structured and disciplined, or anywhere in between. The project situation should dictate how rigorous a process is appropriate. I don’t care how you build a little website or app, provided it works right for the customer, but I care a lot about how people build medical devices and transportation systems.

One of my consulting clients told me, “We didn’t have a process, but we had a rhythm.” That’s a nice way to describe an informal process. He meant that their team didn’t have documented procedures, but everyone knew what activities they were expected to perform and how they meshed together so that they could collaborate smoothly.

The other extreme from having no documented process at all is to apply a systematic process improvement framework, such as the five-level Capability Maturity Model Integration (Chrissis, Konrad, and Shrum, 2003). At maturity level 1, people perform their work in whatever way they think they should at that moment. Higher maturity levels gradually introduce more structured processes and measurements in the spirit of continuous process improvement. Level 5 organizations have a comprehensive set of processes in place that teams consistently perform and improve.

An interesting thing sometimes happens as organizations move to high maturity levels. A friend who worked in a level 5 organization said, “We’re not really following a process. This is just how we work.” They did indeed have a collection of well-defined processes, but my friend and her colleagues had internalized the process elements. They weren’t consciously following each stepwise procedure. Instead, they applied the process automatically and effectively based on years of collected, recorded, and shared experience. That’s the ideal objective.

Being Nondogmatic

I believe in sensible processes, but it’s important not to adhere dogmatically to overly prescriptive processes or methodologies. As mentioned in the previous lesson, I like to accumulate a rich toolbox of techniques for diverse problems rather than following a script. I work within a general process framework that’s populated with selected good practices that I’ve found valuable. Over the years, the software world has created many development and management methodologies that claim to solve all your problems. Rather than following any of those strictly, I like to choose the best bits from many and apply them situationally.

Agile software development methods have exploded since the late 1990s. Wikipedia (2021) identifies no fewer than fourteen significant agile software development frameworks and twenty-one commonly used agile practices. The developers of those methods have packaged various practices together, expecting that a particular grouping of techniques and activities will yield the best results.

I encounter some purists who seem highly concerned about conforming to, say, Scrum. There’s a worry that dropping or replacing certain practices means that a Scrum team isn’t really doing Scrum anymore. That’s true, per The Scrum Guide (Schwaber and Sutherland, 2020):

The Scrum framework, as outlined herein, is immutable. While implementing only parts of Scrum is possible, the result is not Scrum. Scrum exists only in its entirety and functions well as a container for other techniques, methodologies, and practices.

Those who invent a method get to define what constitutes that method—but so what? Is the goal to be Scrum- (or whatever-) compliant, or is it to get the project work done well and expeditiously? I’ve heard people complain that certain practices are “Not very compliant with the agile way of doing things” or “Against agile fundamental principles.” Again, I ask, “So what?” Does that particular practice help the project and organization achieve business success, or does it not? That should be the determining factor in deciding whether to use it. No software development approach is so perfect that teams dare not customize it in a way that they believe adds value. I’m a pragmatist, not a purist.

Agile, like all processes and methods, is not an end in itself. It’s a means to the end of achieving business success. I propose that all team members accumulate tools and practices to perform their own work and to work well with others toward their common objective. It’s not important to me whether a particular practice is compliant with a specific development or management philosophy. However, the practice must be the best way to get the job done in a particular situation when you consider all of its implications. If it’s not, then do something else.

Lesson #57. Adopt a shrink-to-fit philosophy with document templates.

After I learned to appreciate the value of creating a written set of requirements, I would compile a simple list of functionality my customers had requested. That was a good start, but I always had other important pieces of requirements-related knowledge beyond functionality. It wasn’t clear where to put them. Then I discovered the software requirements specification (SRS) template described in the now-obsolete IEEE Standard 830, “IEEE Recommended Practice for Software Requirements Specifications.” I adopted this template, as it contained many sections that helped me organize my diverse requirements information. With experience, I modified the template to be more suitable for the systems that my team developed. Figure 7.4 shows the SRS template I ultimately created with my colleague, Joy Beatty (Wiegers and Beatty, 2013).

Figure 7.4 A rich software requirements specification template contains sections for many types of information.

Document templates offer several benefits. They define consistent ways to organize sets of information from one project to the next and from individual to individual. Consistency makes it easier for people who work with those deliverables to find the information they need. A template can reveal potential gaps in a document author’s project knowledge, reminding them of information that perhaps should be included. I’ve used or developed templates to create many types of project documents, including these:

• Request for proposal

• Vision and scope document

• Use cases

• Software requirements specification

• Project charter

• Project management plan

• Risk management plan

• Configuration management plan

• Test plan

• Lessons learned

• Process improvement action plan

Suppose I’m using the template in Figure 7.4 to structure requirements information about a new system. I don’t complete the template from top to bottom; I populate specific sections as I accumulate the pertinent information. After a while, maybe I notice that section 2.5, Assumptions and Dependencies, is empty. This hole prompts me to wonder whether there’s some missing information I should track down regarding assumptions and dependencies. Maybe there are conversations I haven’t had yet with certain stakeholders. Perhaps no one has yet pointed out any assumptions or dependencies, but there could be some out there that we should record. Some assumptions or dependencies might have been recorded someplace else—should I move them to this section or put pointers to them in there? Or, maybe there really aren’t any known assumptions or dependencies—I should find out. The empty section reminds me that there’s work yet to be done.

I should also consider what to do if a particular template section isn’t relevant to my project. One option is to simply delete the section from my requirements document when I’m done. But that absence might raise a question in a reader’s mind: “I didn’t see anything about assumptions and dependencies here. Are there any? I’d better ask somebody.” Or, I could leave the heading in place but leave the section blank. That might make a reader wonder if the document was completed yet. I prefer to leave the heading in place and put an explanation in that section: “No assumptions or dependencies have been identified for this project.” Explicit communication causes less confusion than implicit.

Developing a suitable template from a blank sheet of paper is slow and haphazard. I like to begin with a rich template and then mold it to each project’s size, nature, and needs. That’s what I mean by shrink-to-fit. Many established technical standards describe document templates. Organizations that issue technical standards relevant to software development include

• IEEE (Institute of Electrical and Electronics Engineers),

• ISO (International Organization for Standardization), and

• IEC (International Electrotechnical Commission).

For instance, international standard ISO/IEC/IEEE 29148 includes suggested outlines—along with descriptive guidance information—for software, stakeholder, and system requirements specifications (ISO/IEC/IEEE, 2018). An online search will reveal many sources of downloadable templates for various software project deliverables to help you get started.

Because such generic templates are intended to cover a wide range of projects, they might not be just right for you. But they will provide many ideas regarding the types of information that you should include and reasonable ways to organize that information. The shrink-to-fit concept implies that you can tailor those templates to your situation:

• Delete sections that your projects don’t need.

• Add sections the template doesn’t already contain that would help your projects.

• Simplify or consolidate template sections where that won’t cause confusion.

• Change the terminology to suit your project or culture.

• Reorganize the template’s contents to meet your audiences’ needs better.

• Split or merge templates for related deliverables when appropriate to avoid overly massive documents, document proliferation, and redundancy.
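If your templates live in a tool or a script rather than a word processor, the tailoring operations above might be sketched along these lines. This is only an illustration under stated assumptions: the section list is a hypothetical, abbreviated outline (not the actual template in Figure 7.4), and the function names are invented for this example.

```python
# Hypothetical, abbreviated section list; NOT the actual Figure 7.4 template.
RICH_TEMPLATE = [
    "Introduction",
    "Overall Description",
    "Assumptions and Dependencies",
    "System Features",
    "Data Requirements",
    "External Interface Requirements",
    "Quality Attributes",
]


def shrink_to_fit(template, drop=(), add=(), rename=None):
    """Return a tailored copy of `template`: delete unneeded sections,
    change terminology, and append sections the template lacks."""
    rename = rename or {}
    tailored = [rename.get(title, title) for title in template if title not in drop]
    tailored.extend(title for title in add if title not in tailored)
    return tailored


def empty_sections(sections, content):
    """List the sections that have no content yet, in template order.
    Each one is a prompt: is information missing, or is there truly none?"""
    return [title for title in sections if not content.get(title, "").strip()]


# Tailoring for a hypothetical small agile project.
tailored = shrink_to_fit(
    RICH_TEMPLATE,
    drop={"Data Requirements"},
    add=["Localization Requirements"],
    rename={"System Features": "User Stories"},
)
draft = {"Introduction": "Purpose and scope of the release."}
print(tailored)
print(empty_sections(tailored, draft))
```

The original rich template is left untouched, so each project class can derive its own variant from the same starting point. The empty-section report doesn't decide anything for you; it only surfaces the holes that should prompt a conversation or an explicit "none identified" statement.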

If your organization works on multiple classes or sizes of projects, create sets of templates that are suitable for each class. That’s better than expecting every project to start with standard templates that aren’t a good fit for what they’re doing. A consulting client asked me to create numerous processes and accompanying deliverable templates for their complex systems engineering projects, which worked well for them. That client later requested a parallel set of process assets appropriate for their newer—and smaller—agile projects. Agile projects still must record necessary project information while minimizing their documentation effort, so I simplified the original processes and templates.

Companies aren’t successful because they write great specifications or plans; they’re successful because they build high-quality information systems or commercial applications. Well-crafted key documents can contribute to that success. Some people are leery of templates, perhaps fearing that they’ll impose an overly restrictive structure on the project. They could be concerned that the team will focus on completing the template instead of building software. Unless you have a contractual requirement to do so, you’re not obligated to populate every section of a template. And you’re certainly not obligated to complete a template before you can proceed with development work. As with other sensible process components, a good template will support your work, not hobble it.

Even if your organization doesn’t use documents to store information, projects still need to record certain knowledge in some persistent form. You might prefer using checklists instead of templates to avoid overlooking some important category of information. Like a template, a checklist helps you assess how complete your information set is, but it doesn’t help you organize the information in a consistent fashion.

Many organizations store requirements and other project information in a tool. The tools will let you define templates for stored data objects analogous to the sections in a traditional document. Users can create documents when needed as reports generated from the contents of the tool’s database. It’s important for everyone who uses the tool to recognize that the tool is the ultimate repository of current information. A generated document represents a snapshot in time of the database contents, which could be obsolete tomorrow.

I value simple tools like templates, checklists, and forms that save me from reinventing how to do my work on each project. I don’t want to create documents for their own sake or to spend time on process overhead that doesn’t add proportionate value to the work. Thoughtfully designed templates remind my colleagues and me how we can contribute to the project most effectively. That seems like a reasonable degree of structured process to me.

Lesson #58. Unless you take the time to learn and improve, don’t expect the next project to go any better than the last one.

The city in which I live suffered a major ice storm recently. My all-electric house with no fireplace or generator got quite chilly while the power was out. My wife and I were well prepared, so we got through it okay. However, once the lights came back on, I contemplated how we could be even better prepared for the next emergency. I bought several items that would keep us safer and more comfortable during an extended power outage, updated my food storage and preparation plans, and tuned up my emergency planning checklist.

The process of reflecting on an event to learn how to weather (in this case, literally) the next one better is called a retrospective. All software project teams should perform retrospectives at the end of a development cycle (a release or an iteration), at the project’s conclusion, and when some surprising or disruptive event occurs.

Looking Back

A retrospective is a learning and improvement opportunity. It’s a chance for the team to look back at what happened, identify what worked well and what did not, and incorporate the resulting wisdom into future work. An organization that doesn’t invest the time for this reflection is basing its desire for better future performance on hope, not on experience-guided improvement.

Retrospectives are also called post-project reviews, after-action reviews, and postmortems (even when the project survived!), but the term retrospective is well established in the software world. The seminal resource in the field is Project Retrospectives, by Norman L. Kerth (2001). A retrospective seeks to answer four questions:

1. What went well for us that we’d like to repeat?

2. What didn’t go so well that we should do differently next time?

3. What happened that surprised us and might be a risk to consider in the future?

4. Is there anything that we don’t yet understand and should investigate further?

Another way to frame this discussion is to ask the retrospective participants, “If I’m starting a new project that’s similar to the one you just completed, what recommendations could you give me?” That sage advice from people who have gone before would be the answers to the four questions above.

A retrospective is more of an interpersonal interaction than a technical activity. As such, it must be conducted with consideration and respect for all participants. A retrospective is not an opportunity for laying blame but rather a mechanism for learning how we can all do better the next time (Winters, Manshreck, and Wright, 2020). It’s important to explore what happened objectively and without repercussions. Everyone who participates in a retrospective should remember Kerth’s Prime Directive:

Regardless of what we discover, we must understand and truly believe that everyone did the best job he or she could, given what was known at the time, his or her skills and abilities, the resources available, and the situation at hand.

The agile development community has embraced the idea of continual learning, growth, and adaptation by incorporating retrospectives into each iteration (Scaled Agile, 2021d). Recall that one of the principles behind the Agile Manifesto states, “At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behavior accordingly” (Agile Alliance, 2021c). Agile’s short iteration cycle time provides frequent opportunities to enhance performance on upcoming iterations. Teams that are relatively new to agile or transitioning to a different agile framework will learn a lot from their early iterations. The book Agile Retrospectives by Esther Derby and Diana Larsen (2006) describes a palette of thirty activities from which you can select to craft a retrospective experience that’s just right for your team.

Retrospective Structure

A retrospective is not a free-form gripe session. It involves a structured and time-boxed sequence of activities: planning, kicking off the event, gathering information, prioritizing issues, analyzing issues, and deciding what to do with the information (Wiegers, 2007; Wiegers, 2019g). Figure 7.5 illustrates the major inputs to and outputs from a retrospective. Team members contribute their recollections of their experiences on the project: what happened when and how it went. It’s a good idea to solicit input from everyone involved with the project, as each participant’s perspective is unique. I like to begin with the positive: let’s remember—with pride—the things we did effectively and how we helped each other succeed.

Figure 7.5 A retrospective collects project experiences and metrics to yield lessons learned, new risks, improvement actions, and a team commitment to make valuable changes.

In a safe and nonjudgmental environment, the retrospective participants also can share their emotional ups and downs. Someone might have been frustrated because the delayed delivery of part of the project impacted his ability to make good on his commitments. Another team member might have been grateful for the extra assistance a colleague provided. Perhaps people felt super-stressed because the already optimistic schedule was reduced to even more unrealistic levels, and they had to work to exhaustion in an attempt to deliver on time. The team’s well-being is a significant contributor to project success. Addressing the emotional factor during the retrospective yields ideas for elevating everyone’s happiness and job satisfaction.

The retrospective participants can bring any data the team collected in the usual software metrics dimensions:

• Size: counts and sizes for requirements, user stories, and other items

• Time: planned and actual calendar duration for tasks

• Effort: planned and actual labor hours for tasks

• Quality: defect counts and types, performance, and other quality attribute measures

All of this input provides fodder for the team to gain deep insight into how the work went and how the participants felt about the project.
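As a rough illustration of how a team might turn the time and effort data above into something to discuss at a retrospective, here is a minimal Python sketch that compares planned and actual values. The `TaskRecord` fields, the `percent_overrun` and `summarize` functions, and the choice of percentage overrun as the comparison are all assumptions made for this example, not a prescribed format.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """Hypothetical record of planned vs. actual data for one task."""
    name: str
    planned_hours: float   # effort estimate, in labor hours
    actual_hours: float    # effort actually spent, in labor hours
    planned_days: float    # estimated calendar duration
    actual_days: float     # actual calendar duration


def percent_overrun(planned: float, actual: float) -> float:
    """Return how far actual exceeded planned, as a percentage of planned."""
    if planned <= 0:
        raise ValueError("planned value must be positive")
    return (actual - planned) / planned * 100.0


def summarize(tasks: list[TaskRecord]) -> dict:
    """Aggregate effort and duration overruns across all tasks."""
    if not tasks:
        raise ValueError("no task records to summarize")
    planned_hours = sum(t.planned_hours for t in tasks)
    actual_hours = sum(t.actual_hours for t in tasks)
    planned_days = sum(t.planned_days for t in tasks)
    actual_days = sum(t.actual_days for t in tasks)
    # The task with the largest relative effort overrun is a candidate
    # topic for the "analyzing issues" part of the retrospective.
    worst = max(tasks, key=lambda t: percent_overrun(t.planned_hours, t.actual_hours))
    return {
        "effort_overrun_pct": percent_overrun(planned_hours, actual_hours),
        "duration_overrun_pct": percent_overrun(planned_days, actual_days),
        "worst_effort_task": worst.name,
    }
```

A summary like this only points to where the discussion might start. The team still has to work out why a task overran and what, if anything, to change.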

The retrospective outputs shown in Figure 7.5 fit into several categories. First are lessons learned that you want to remember for subsequent development cycles. You might make lists of things the team should be sure to do again, things they should not do again, and things they should do differently the next time (Kerth, 2001). If your organization accumulates a lessons-learned repository, add selected items from the retrospective to that collection to help future projects.

Unpleasant surprises could feed into your organization’s master list of candidate risks that every project should study. (See Lesson #32, “If you don’t control your project’s risks, they will control you.”) Project risks that weren’t adequately mitigated and other things that didn’t go quite as planned indicate good starting points for process improvement actions. The most important output from a retrospective is the team’s commitment to making changes that will improve their work life (Pearls of Wisdom, 2014b).

After the Retrospective

A retrospective on its own won’t change how things go the next time—it must feed into ongoing SPI activities. Reaching a better future requires an action plan that itemizes the process changes the team should explore before or during the next development cycle. The people who own retrospective action items need to devote time to exploring ways to solve past problems. This time isn’t available for them to work on project tasks, so the improvement effort must be added to project schedules. Otherwise, it won’t get done. If a project team holds a retrospective but management provides no resources to address the issues identified, the retrospective was useless.

Teams that lack downtime between development cycles won’t have a chance to reflect, learn, and retool their capabilities (DeMarco, 2001). Therefore, leave some time in your project schedules for the education and experimentation that people require to apply new practices, tools, and methods effectively. If you don’t invest this time, you shouldn’t expect the next project to run more smoothly. Conducting retrospectives without ever making any changes wastes time and discourages the participants.

A retrospective is not free—it takes time, effort, and maybe money. However, that investment is more than repaid by the improved performance that teams can achieve on all their future work. Retrospectives align with continuous improvement by triggering changes that target known sources of pain.

Retrospectives are valuable when the culture encourages, listens to, and acts upon candid feedback. The ritual of a retrospective brings a group of people together to view their shared experience from a wider point of view than any single participant can provide. As the group reflects on the work they just concluded, they can design a new way going forward that they expect will lead to a higher plateau of professional work. The ultimate sign of a successful retrospective is that it leads to sustained change. That’s a lasting return on investment from the hours the team spends reflecting on past events.

Lesson #59. The most conspicuous repeatability the software industry has achieved is doing the same ineffective things over and over.

When I worked at Kodak, the company held an annual internal software quality conference. One year, I was invited to serve on the conference’s planning team. I asked to see their procedures book for how to plan and conduct the conference. The response was, “We don’t have one.”

I was floored. Of all the groups that I thought would know to accumulate procedures, checklists, and lessons learned from previous experience, a conference run by quality and process improvement specialists was at the top of the list. Such a resource is especially valuable when you have a revolving cast of characters with limited staff continuity from year to year, as we did. Each year’s planning team had to reconstruct how to plan and run the conference, filling in any gaps from scratch. How inefficient! (See Lesson #7, “The cost of recording knowledge is small compared to the cost of acquiring knowledge.”) We started a procedures book the year that I served on the committee. I can only hope that the people who worked on subsequent conferences referred to that resource and kept it current.

This experience highlighted a phenomenon that’s too common in the software industry: repeating the mistakes of the past, project after project (Brössler, 2000; Glass, 2003). (This is the point in the book where some authors would incorporate philosopher George Santayana’s famous quotation, “Those who cannot remember the past are condemned to repeat it,” but I’m not going to do that here.) We have countless collections of software industry best practices and books of lessons learned on various topics—including this one. There’s a vast body of literature on all aspects of software engineering and project management. Nonetheless, many projects continue to get into trouble because they don’t practice some of the activities that we know contribute to project success.

The Merits of Learning

The Standish Group has published its CHAOS report every few years since 1994. (CHAOS is not a description but an aptly crafted acronym for the Comprehensive Human Appraisal for Originating Software.) Based on data from thousands of projects, CHAOS reports indicate the percentages of recent projects that were fully successful, were challenged in some way, or failed. Success is defined as a combination of being completed approximately on time and budget and delivering customer and user satisfaction (The Standish Group, 2015). The CHAOS report results have varied over the years, but the fraction of fully successful projects still struggles to exceed 40 percent. Perhaps more discouragingly, some of the same factors contribute to challenged and failed projects for years and years.

Observing patterns of results is one pathway that leads to new paradigms for doing work. For instance, analysis paralysis and requirements that become obsolete on long-term projects helped motivate a push toward incremental development. Some CHAOS report data indicates that agile projects have a higher average success rate than waterfall projects (The Standish Group, 2015).

Software development is unlike other technical fields in that it’s possible to do useful work with minimal formal education and background, at least up to a point. No one’s going to ask an amateur physician to remove their appendix, but many amateur programmers know enough to write small apps. However, there’s a vast gulf between being a small-scale programmer and being a proficient software engineer capable of executing large and complex projects by collaborating with others.

Many young professionals enter the industry today through an academic program in computer science, software engineering, information technology, or a related field. Whether formally educated or self-taught, every software professional should continue to absorb the ever-growing body of knowledge and learn how to apply it effectively. Many facets of the software domain change quickly. Keeping up with the current technologies can feel like running on a treadmill. Fortunately, many lessons like those I’ve collected here are durable over time.

Each organization also accumulates an informal collection of local, experience-based knowledge. Rather than passing folklore around the campfire in an oral tradition, I suggest that organizations record that painfully gained knowledge in a lessons-learned collection. Prudent project managers and software developers will refer to this collected wisdom when they’re launching a new endeavor.

The Merits of Thinking

When I was a research scientist early in my career, I knew a fellow scientist named Amanda. When Amanda began a new research project, I would find her sitting back in her office chair, staring at the ceiling. She was thinking. Amanda diligently studied the company’s internal technical literature about similar projects. She learned all she could about the problem domain and approaches that had—and had not—worked in the past before she began doing experiments. Amanda’s experiments were time-consuming and expensive; she didn’t want to go off in the wrong direction. Her study of previous research made her a highly efficient scientist. Observing Amanda reinforced the importance of learning from history, both across the industry and from local experience, before diving headlong into a new project.

This chapter is about software process improvement. Individuals, project teams, and organizations all need to enhance their software engineering and management skills continuously. The initial goal of five-level process maturity models was to establish processes that could lead to repeatable success from project to project and from one team to another. Regardless of the specific methodology or framework an organization chooses to structure its improvement efforts, I think every software development organization would want to be repeatedly successful.

Next Steps: Software Process Improvement

1. Identify which of the lessons described in this chapter are relevant to your experiences with software process improvement.

2. Can you think of any other lessons related to SPI from your own experience that are worth sharing with your colleagues?

3. Identify three of your greatest points of pain and conduct root cause analyses to reveal factors toward which you can direct some initial improvement activities.

4. Identify any practices described in this chapter that might be solutions to the process improvement-related problems you identified in the First Steps at the beginning of the chapter. How could each practice improve your project teams’ efficiency and effectiveness?

5. How could you tell if each practice from Step #4 was yielding the desired results? What would those results be worth to you?

6. Identify any barriers that might make it difficult to apply the practices from Step #4. How could you break down those barriers or enlist allies to help you implement the practices effectively?

7. What training, materials, guidance, or other resources would help your organization pursue its process improvement activities more successfully?