LEARNING OBJECTIVES

To be able to discern desirable properties of a software design

To understand different notions of complexity, at both the component and system level

To be aware of some object-oriented metrics

To be aware of some widely known classical design methods

To understand the general flavor of object-oriented analysis and design methods

To be aware of a global classification scheme for design methods

To understand the role of design patterns and be able to illustrate their properties

To be aware of guidelines for the design documentation

Note

Software design concerns the decomposition of a system into its constituent parts. A good design is the key to the successful implementation and evolution of a system. A number of guiding principles for this decomposition help to achieve quality designs. These guiding principles underlie the main design methods discussed in this chapter. Unlike more classical design fields, there is no visual link between the design representation of a software system and the ultimate product. This complicates the communication of design knowledge and raises the importance of proper design representations.

During software development, we should adhere to a planned approach. If we want to travel from point A to point B, we will (probably) consult a map first. According to some criterion, we will then plan our route. The time-loss caused by the planning activity is bound to outweigh the misery that occurs if we do not plan our trip at all but just take the first turn left, hoping that this will bring us somewhat closer to our destination. In designing a garden, we also follow some plan. We do not start by planting a few bulbs in one corner, an apple tree in another, and a poplar next to the front door.

The above examples sound ridiculous. They are. Yet, many a software development project is undertaken in this way. Somewhat exaggeratedly, we may call it the 'programmer's approach' to software development. Much software is still being developed without a clear design phase. The reasons for this 'code first, design later' attitude are many:

We do not want to, or are not allowed to, 'waste our time' on design activities.

We have to, or want to, quickly show something to our customer.

We are judged by the amount of code written per man-month.

We are, or expect to be, pressed for time.

For many types of system (just think of an airline reservation system), such an approach grossly underestimates the complexity of software and its development. Just as with the furnishing of a house or the undertaking of a long trip, it is paramount to put thought into a plan, resulting in a blueprint that is then followed during actual construction. The outcome of this process (the blueprint) will be termed the design or, if the emphasis is on its notation, the (technical) specification. The process of making this blueprint is also called design. To a large extent, the quality of the design determines the quality of the resulting product. Errors made during the design phase often go undetected until the system is operational. At that time, they can be repaired only by incurring very high costs.

Software design is a 'wicked problem'. The term originated in research into the nature of design issues in social planning problems. Properties of wicked problems in this area are remarkably similar to properties of software design:

A wicked problem has no definite formulation. The design process can hardly be separated from either the preceding requirements engineering phase or the subsequent documentation of the design in a specification. These activities will, in practice, overlap and influence each other. At the more global (architectural) stages of system design, the designer will interact with the user to assess fitness-for-use aspects of the design. This may lead to adaptations in the requirements specification. The more detailed stages of design often cannot be separated from the specification method used. One corollary of this is that the waterfall model does not fit the type of problem it is meant to address.

A wicked problem has no stopping rule. There is no criterion that tells us when the solution has been reached. Though we do have a number of quality measures for software designs, there does not exist a single scale against which to measure the quality of a design. There probably never will be such a scale.

The solution to a wicked problem is not true or false. At best, it is good or bad. The software design process is not analytic. It does not consist of a sequence of decisions each of which brings us somewhat closer to that one, optimal solution. Software design involves making a large number of tradeoffs, such as those between speed and robustness. As a consequence, there is a number of acceptable solutions, rather than one best solution.

Every wicked problem is a symptom of another problem. Resolving one problem may very well result in an entirely different problem elsewhere. For example, the choice of a particular dynamic data structure may solve the problem of an unknown input size and at the same time introduce an efficiency problem. A corollary of this is that small changes in requirements may have large consequences in the design or implementation. In Chapter 1, we described this by saying that software is not continuous.

During design we may opt for a Taylorian, functionality-centered view and consider the design problem as a purely technical issue. Alternatively, we may realize that design involves user issues as well and therefore needs some form of user involvement. The role of the user during design need not be restricted to that of a guinea-pig in shaping the actual user interface. It may also involve much deeper issues.

Rather than approaching system design from the point of view that human weaknesses need to be compensated for, we may take a different stand and consider computerized systems as a way of supporting human strengths. Likewise, systems need not reflect the interests of system owners only. In a democratic world, systems can be designed so that all those involved benefit. This less technocratic attitude leads to extensive user involvement during all stages of system development. Agile development methods advocate this type of approach.

Whereas traditional system development has a production view in which the technical aspects are optimized, the 'Scandinavian school' pays equal attention to the human system and holds the view that technology must be compatible with organizational and social needs. The various possible modes of interaction between the designer or analyst on the one hand and the user on the other hand are also discussed in Section 9.1.

Pure agile approaches do suggest starting by planting just a few bulbs in one corner of the garden. If we happen to move into our new house in late fall and want some color when spring sets in, this sounds like the best thing we can do. If we change our mind at some later point in time, we can always move the bulbs and do some additional garden design. It thus depends on the situation at hand how much upfront design is feasible (see also Chapter 8). In this chapter, we assume enough context and requirements are known to warrant an explicit design step. Of course, there may be successive design steps in successive iterations.

From the technical point of view, the design problem can be formulated as follows: how can we decompose a system into parts such that each part has a lower complexity than the system as a whole, while the parts together solve the user's problem? Since the complexity of the individual components should be reasonable, it is important that the interaction between components is not too complicated.

Design has both a product aspect and a process aspect. The product aspect refers to the result, while the process aspect is about how we get there. At the very global, architectural levels of design, there is little process guidance, and the result is very much determined by the experience of the designer. For that reason, Chapter 11 largely focuses on the characterization of the result of the global design process, the software architecture. In this chapter, we focus on the more detailed stages of design, where more process guidance has been accumulated in a number of software design methods. But for the more detailed stages of software design too, the representational aspect is the more important one. This representation is the main communication vehicle between the designer and the other stakeholders. Unlike more classical design fields, there is no visual link between the design representations of a software system and the ultimate product. The blueprint of a bridge gives us lots of visual clues as to how that bridge will eventually look. This is not the case for software and we have to seek other ways to communicate design knowledge to our stakeholders.

There really is no universal design method. The design process is a creative one, and the quality and expertise of the designers are a critical determinant for its success. However, over the years a number of ideas and guidelines have emerged which may serve us in designing software.

The single most important principle of software design is information hiding. It exemplifies how to apply abstraction in software design. Abstraction means that we concentrate on the essential issues and ignore, abstract from, details that are irrelevant at this stage. Considering the complexity of the problems we are to solve, applying some sort of abstraction is a sheer necessity. It is simply impossible to take in all the details at once.

Section 12.1 discusses desirable design properties that bear on quality issues, most notably maintainability and reusability. Five properties are identified that have a strong impact on the quality of a design: abstraction, modularity, information hiding, complexity, and system structure. Assessment of a design with respect to these properties allows us to get an impression of design quality, albeit not a very quantitative one yet. Efforts to quantify such heuristics have resulted in a number of metrics specifically aimed at object-oriented systems.

A vast number of design methods exist, many of which are strongly tied to a certain notation. These methods give strategies and heuristics to guide the design process. Most methods use a graphical notation to depict the design. Though the details of those methods and notations differ widely, it is possible to provide broad characterizations in a few classes. The essential characteristics of those classes are elaborated upon in Sections 12.2 and 12.3.

Design patterns are collections of a few components (or, in object-oriented circles, classes) which are often used in combination and which together provide a useful abstraction. A design pattern is a recurring solution to a standard problem. The opposite of a pattern is an antipattern: a mistake often made. The prototypical example of a pattern is the Model-View-Controller (MVC) pattern from Smalltalk. A well-known antipattern is the Swiss Army Knife: an overly complex class interface. Design patterns and antipatterns are discussed in Section 12.5.

During the design process, quite a lot of documentation is generated. This documentation serves various users, such as project managers, designers, testers, and programmers. Section 12.6 discusses IEEE Standard 1016. This standard contains useful guidelines for describing software designs. The standard identifies a number of roles and indicates, for each role, the type of design documentation needed.

Finally, Section 12.7 discusses some verification and validation techniques that may fruitfully be applied at the design stage.

Up till now we have used the notion of 'component' in a rather intuitive way. It is not easy to give an accurate definition of that notion. Obviously, a component does not denote some random piece of software. We apply certain criteria when decomposing a system into components.

At the programming-language level, a component usually refers to an identifiable unit with respect to compilation. We will use a similar definition of the term 'component' with respect to design: a component is an identifiable unit in the design. It may consist of a single method, a class, or even a set of classes. It preferably has a clean interface to the outside world and the functionality of the component then is only approached through that interface.

There are, in principle, many ways to decompose a system into components. Obviously, not every decomposition is equally desirable. In this section, we are interested in desirable properties of a decomposition, irrespective of the type of system or the design method used. These properties can in some sense be used as a measure of the quality of the design. Designs that have those properties are considered superior to those that do not have them.

The design properties we are most interested in are those that facilitate maintenance and reuse: simplicity, a clear separation of concepts into different components, and restricted visibility (i.e. locality) of information.[14] Systems that have those properties are easier to maintain since we may concentrate our attention on those parts that are directly affected by a change. These properties also bear on reusability, because the resulting components tend to have a well-defined functionality that fits concepts from the application domain. Such components are likely candidates for inclusion in other systems that address problems from the same domain.

In the following subsections, we discuss five interrelated issues that have a strong impact on the above properties:

For object-oriented systems, a specific set of quality heuristics and associated metrics has been defined. The main object-oriented metrics are discussed in Section 12.1.6.

Abstraction means that we concentrate on the essential properties and ignore, abstract from, details that are not relevant at the level we are currently working. Consider, for example, a typical sorting component. From the outside we cannot (and need not be able to) discern exactly how the sorting process takes place. We need only know that the output is indeed sorted. At a later stage, when the details of the sorting component are decided upon, then we can rack our brains about the most suitable sorting algorithm.

The complexity of most software problems makes applying abstraction a sheer necessity. In the ensuing discussion, we distinguish two types of abstraction: procedural abstraction and data abstraction.

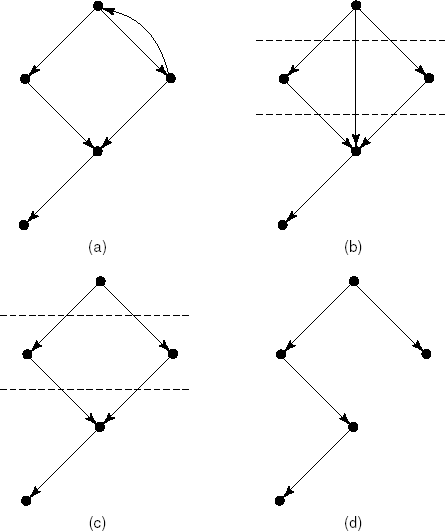

The notion of procedural abstraction is fairly traditional. A programming language offers if constructs, loop constructs, assignment statements, and so on. The transition from a problem to be solved to these primitive language constructs is a large one in many cases. To this end, a problem is first decomposed into subproblems, each of which is handled in turn. These subproblems correspond to major tasks to be accomplished. They can be recognized by their description, in which some verb plays a central role (for example, read the input, sort all records, process the next user request, compute the net salary). If needed, subproblems are further decomposed into even simpler subproblems. Eventually we get at subproblems for which a standard solution is available. This type of (top-down) decomposition is the essence of the main-program-with-subroutines architectural style (see Section 11.4).

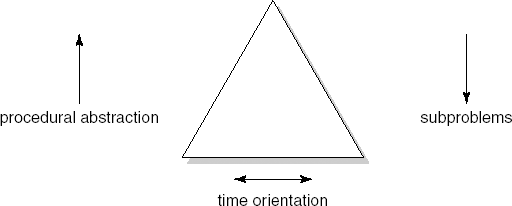

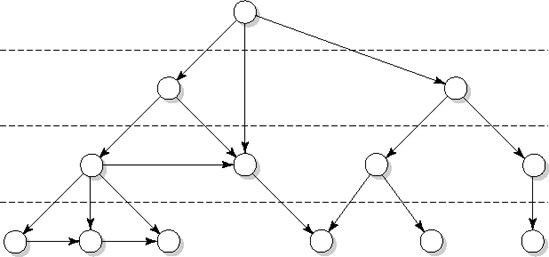

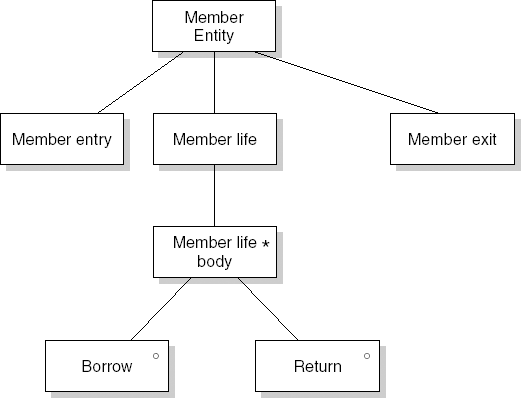

The result of this type of stepwise decomposition is a hierarchical structure. The top node of the structure denotes the problem to be solved. The next level shows its first decomposition into subproblems. The leaf nodes denote primitive problems. This is schematically depicted in Figure 12.1.

The procedure concept offers us a notation for the subproblems that result from this decomposition process. The application of this concept is known as procedural abstraction. With procedural abstraction, the name of a procedure (or method, in object-oriented languages) is used to denote the corresponding sequence of actions. When that name is used, we need not bother ourselves about the exact way in which its effect is realized. The important thing is that, after the call, certain prestated requirements are fulfilled.

This way of going about the process closely matches the way in which humans are inclined to solve problems. Humans too are inclined to the stepwise handling of problems. Procedural abstraction thus offers an important means of tackling software problems.

When designing software, we are inclined to decompose the problem so that the result has a strong time orientation. A problem is decomposed into subproblems that follow each other in time. In its simplest form, this approach results in input – process – output schemes: a program first has to read and store its data, next some process computes the required output from this data, and the result finally is output. Application of this technique may result in programs that are difficult to adapt and hard to comprehend. Applying data abstraction results in a decomposition which shows this affliction to a far lesser degree.

Procedural abstraction is aimed at finding a hierarchy in the program's control structure: which steps have to be executed and in which order. Data abstraction is aimed at finding a hierarchy in the program's data. Programming languages offer primitive data structures for integers, real numbers, truth values, characters and possibly a few more. Using these building blocks, we may construct more complicated data structures, such as stacks and binary trees. Such structures are of general use in application software. They occur at a fairly low level in the hierarchy of data structures. Application-oriented objects, such as 'paragraph' in text-processing software or 'book' in our library system, are found at higher levels of the data structure hierarchy. This is schematically depicted in Figure 12.2.

For the data, too, we wish to abstract from details that are not relevant at a certain level. In fact, we already do so when using the primitive data structures offered by our programming language. In using these, we abstract from details such as the internal representation of numbers and the way in which the addition of two numbers is realized. At the programming language level we may view the integers as a set of objects (0, 1, −1, 2, −2, ...) and a set of operations on these objects (+, −, ×, /, ...). These two sets together determine the data type integer. To be able to use this data type, we need only name the set of objects and specify its operations.

We may proceed along the same lines for the data structures not directly supported by the programming language. A data type binary-tree is characterized by a set of objects (all conceivable binary trees) and a set of operations on those objects. When using binary trees, their representation and the implementation of the corresponding operations need not concern us. We need only ascertain the intended effect of the operations.

Applying data abstraction during design is sometimes called object-oriented design, since the type of object and the associated operations are encapsulated in one component. The buzzword 'object-oriented' however also has a subtly different meaning in the context of design. We will further elaborate upon this notion in Section 12.3.

Languages such as Ada, Java and C++ offer a language construct (called package, class, and struct, respectively) that allows us to maintain a syntactic separation between the implementation and specification of data types. Note that it is also possible to apply data abstraction during design when the ultimate language does not offer the concept. However, it then becomes more cumbersome to move from design to code.

We noticed before that procedural abstraction fits in nicely with the way humans tend to tackle problems. To most people, data abstraction is a bit more complicated.

When searching for a solution to a software problem we will find that the solution needs certain data structures. At some point we will also have to choose a representation for these data structures. Rather than making those decisions at an early stage and imposing the result on all other components, you are better off if you create a separate subproblem and make only the procedural, implementation-independent, interfaces public. Data abstraction thus is a prime example of information hiding.

The development of these abstraction techniques went hand-in-hand with other developments, particularly those in the realm of programming languages. Procedures were originally introduced to avoid the repetition of sequences of instructions. At a later stage, we viewed the name of a procedure as an abstraction of the corresponding instruction sequence. Only then did the notion of procedural abstraction get its present connotation. In a similar vein, developments in the field of formal data type specifications and language notions for components (starting with the class concept of SIMULA-67) strongly contributed to our present notion of data abstraction.

As a final note, we remark that we may identify yet a third type of abstraction, control abstraction. In control abstraction, we abstract from the precise order in which a sequence of events is to be handled. Though control abstraction is often implicit when procedural abstraction is used, it is sometimes convenient to be able to explicitly model this type of nondeterminacy, for instance when specifying concurrent systems. This topic falls outside the scope of this book.

During design, the system is decomposed into a number of components and the relationships between those components are indicated. In another design of the same system, different components may show up and there may be different relationships between the components. We may try to compare those designs by considering both a typology for the individual components and the type of connections between them. This leads us to two structural design criteria: cohesion and coupling.

Cohesion may be viewed as the glue that keeps the component together. It is a measure of the mutual affinity of the elements of a component. In general we will wish to make the cohesion as strong as possible. In their classic text, Yourdon and Con-stantine (1975) identify the following seven levels of cohesion of increasing strength:

Coincidental cohesion: Elements are grouped into components in a haphazard way. There is no significant relation between the elements.

Logical cohesion: Elements realize tasks that are logically related. One example is a component that contains all input routines. These routines do not call one another and they do not pass information to each other. Their function is just very similar.

Temporal cohesion: The elements are independent but they are activated at about the same point in time. A typical example of this type of cohesion is an initialization component.

Procedural cohesion: The elements have to be executed in a given order. For instance, a component may have to first read some data, then search a table, and finally print a result.

Communicational cohesion: The elements of a component operate on the same (external) data. For instance, a component may read some data from a disk, perform certain computations on the data, and print the result.

Sequential cohesion: The component consists of a sequence of elements where the output of one element serves as input to the next element.

Functional cohesion: All elements contribute to a single function. Such a component often transforms a single input into a single output. The well-known mathematical subroutines are a typical example of this. Less trivial examples are components such as 'execute the next edit command' and 'translate the program given'.

In a classic paper on structured design, (Stevens et al., 1974) provide some simple heuristics that may be of help in establishing the degree of cohesion of a component. They suggest writing down a sentence that describes the function (purpose) of the component and examining that sentence. Properties to look for include the following:

If the sentence is compound, has a connective (such as a comma or the word 'and'), or contains more than one verb, then that component is probably performing more than one function. It is likely to have sequential or communicational cohesion.

If the sentence contains words that relate to time (such as 'first', 'next', 'after', and 'then'), then the component probably has sequential or temporal cohesion.

If the sentence contains words such as 'initialize', the component probably has temporal cohesion.

The levels of cohesion identified above reflect the cohesion between the functions that a component provides. Abstract data types cannot easily be accommodated in this scheme. Macro and Buxton (1987) therefore propose adding an extra level, data cohesion, to identify components that encapsulate an abstract data type. Data cohesion is even stronger than functional cohesion.

It goes without saying that it is not always an easy task to obtain the strongest possible cohesion between the elements of a component. Though functional cohesion may be attainable at the top levels and data cohesion at the bottom levels, we often have to settle for less at the intermediate levels of the component hierarchy. The tradeoffs to be made here are what makes design such a difficult, and yet challenging, activity.

The second structural criterion is coupling. Coupling is a measure of the strength of the intercomponent connections. A high degree of coupling indicates a strong dependence between components. A high degree of coupling between components means that we can only fully comprehend this set of components as a whole and may result in ripple effects when a component has to be changed, because such a change is likely to incur changes in the dependent components as well. Loosely coupled components, on the other hand, are relatively independent and are easier to comprehend and adapt. Loose coupling therefore is a desirable property of a design (and its subsequent realization). The following types of coupling can be identified (from tightest to loosest):

Content coupling: One component directly affects the working of another component. Content coupling occurs when a component changes another component's data or when control is passed from one component to the middle of another (as in a jump). This type of coupling can, and should, always be avoided.

Common coupling: Two components have shared data. The name originates from the use of COMMON blocks in FORTRAN. Its equivalent in block-structured languages is the use of global variables.

External coupling: Components communicate through an external medium, such as a file.

Control coupling: One component directs the execution of another component by passing the necessary control information. This is usually accomplished by means of flags that are set by one component and reacted upon by the dependent component.

Stamp coupling: Complete data structures are passed from one component to another. With stamp coupling, the precise format of the data structures is a common property of those components.

Data coupling: Only simple data is passed between components.

The various types of coupling emerged in the 1970s and reflect the data type concepts of programming languages in use at that time. For example, programming languages of that time had simple scalar data types such as real and integer. They allowed arrays of scalar values and records were used to store values of different types. Components were considered data-coupled if they passed scalars or arrays. They were considered stamp-coupled if they passed record data. When two components are control-coupled, the assumption is that the control is passed through a scalar value.

Nowadays, programming languages have much more flexible means of passing information from one component to another, and this requires a more detailed set of coupling levels. For example, components may pass control data through records (as opposed to scalars only). Components may allow some components access to their data and deny it to others. As a result, there are many levels of visibility. The coupling between components need not be commutative. When component A passes a scalar value to B and B returns a value which is used to control the further execution of A, then A is data-coupled to B, while B is control-coupled to A. As a result, people have extended and refined the definitions of cohesion and coupling levels.

Coupling and cohesion are dual characteristics. If the various components exhibit strong internal cohesion, the intercomponent coupling tends to be minimal, and vice versa.

Simple interfaces — weak coupling between components and strong cohesion among a component's elements — are of crucial importance for a variety of reasons:

Communication between programmers becomes simpler. When different people are working on the same system, it helps if decisions can be made locally and do not interfere with the working of other components.

Correctness proofs become easier to derive.

It is less likely that changes will propagate to other components, which reduces maintenance costs.

The reusability of components is increased. The fewer assumptions that are made about an element's environment, the greater the chance of fitting another environment.

The comprehensibility of components is increased. Humans have limited memory capacity for information processing. Simple component interfaces allow for an understanding of a component independent of the context in which it is used.

Empirical studies show that interfaces exhibiting weak coupling and strong cohesion are less error-prone than those that do not have these properties.

The concept of information hiding originates from a seminal paper (Parnas, 1972). The principle of information hiding is that each component has a secret which it hides from other components.

Design involves a sequence of decisions, such as how to represent certain information, or in which order to accomplish tasks. For each such decision we should ask ourselves which other parts of the system need to know about the decision and how it should be hidden from parts that do not need to know.

Information hiding is closely related to the notions of abstraction, cohesion, and coupling. If a component hides some design decision, the user of that component may abstract from (ignore) the outcome of that decision. Since the outcome is hidden, it cannot possibly interfere with the use of that component. If a component hides some secret, that secret does not penetrate the component's boundary, thereby decreasing the coupling between that component and its environment. Information hiding increases cohesion, since the component's secret is what binds the component's constituents together. Note that, in order to maximize its cohesion, a component should hide one secret only.

It depends on the programming language used whether the separation of concerns obtained during the design stage will be identifiable in the ultimate code. To some extent, this is of secondary concern. The design decomposition will be reflected, if only implicitly, in the code and should be explicitly recorded (for traceability purposes) in the technical documentation. It is of great importance for the later evolution of the system. A confirmation of the impact of such techniques as information hiding on the maintainability of software can be found in (Boehm, 1983).

Like all good inventions, readability yardsticks can cause harm in misuse. They are handy statistical tools to measure complexity in prose. They are useful to determine whether writing is gauged to its audience. But they are not formulae for writing. ... Writing remains an art governed by many principles. By no means all factors that create interest and affect clarity can be measured objectively.

Gunning (1968)

In a very general sense, the complexity of a problem refers to the amount of resources required for its solution. We may try to determine complexity in this way by measuring, say, the time needed to solve a problem. This is called an external attribute: we are not looking at the entity itself (the problem), but at how it behaves.

In the present context, complexity refers to attributes of the software that affect the effort needed to construct or change a piece of software. These are internal attributes: they can be measured purely in terms of the software itself. For example, we need not execute the software to determine their values.

Both these notions are very different from the complexity of the computation performed (with respect to time or memory needed). The latter is a well-established field in which many results have been obtained. This is much less true for the type of complexity in which we are interested. Software complexity in this sense is still a rather elusive notion.

Serious efforts have been made to measure software complexity in quantitative terms. The resulting metrics are intended to be used as anchor points for the decomposition of a system, to assess the quality of a design or program, to guide reengineering efforts, etc. We then measure certain attributes of a software system, such as its length, the number of if statements, or the information flow between components, and try to relate the numbers thus obtained to the system's complexity. The type of software attributes considered can be broadly categorized into two classes:

intracomponent attributes are attributes of individual components, and

intercomponent attributes are attributes of a system viewed as a collection of components with dependencies.

In this subsection, we are dealing with intracomponent attributes. Intercompo-nent attributes are discussed in Section 12.1.5. We may distinguish two classes of complexity metrics:

Size-based complexity metrics. The size of a piece of software, such as the number of lines of code, is fairly easy to measure. It also gives a fair indication of the effort needed to develop that piece of software (see also Chapter 7). As a consequence, it could also be used as a complexity metric.

Structure-based complexity metrics. The structure of a piece of software is a good indicator of its design quality, because a program that has a complicated control structure or uses complicated data structures is likely to be difficult to comprehend and maintain, and thus more complex.

The easiest way to measure software size is to count the number of lines of code. We may then impose limits on the number of lines of code per component. In (Weinberg, 1971), for instance, the ideal size of a component is said to be 30 lines of code. In a variant, limits are imposed on the number of elements per component. Some people claim that a component should contain at most seven elements. This number seven can be traced back to research in psychology, which suggests that human memory is hierarchically organized with a short-term memory of about seven slots, while there is a more permanent memory of almost unlimited capacity. If there are more than seven pieces of information, they cannot all be stored in short-term memory and information gets lost.

There are serious objections to the direct use of the number of lines of code as a complexity metric. Some programmers write more verbose programs than others. We should at least normalize the counting to counteract these effects and be able to compare different pieces of software.

A second objection is that this technique makes it hard to compare programs written in different languages. If the same problem is solved in different languages, the results may differ considerably in length. For example, APL is more compact than COBOL.

Finally, some lines are more complex than others. An assignment statement such as:

a = b

looks simpler than a loop:

while (current = null) {current = current.next;}although they each occupy one line.

Halstead (1977) uses a refinement of counting lines of code. This refinement is meant to overcome the problems associated with metrics based on a direct count of lines of code.

Halstead's method, also known as 'software science', uses the number of operators and operands in a piece of software. The set of operators includes the arithmetic and Boolean operators, as well as separators (such as a semicolon between adjacent instructions) and (pairs of) reserved words. The set of operands contains the variables and constants used. Halstead then defines four basic entities:

n1 is the number of unique (i.e. different) operators in the component;

n2 is the number of unique (i.e. different) operands in the component;

N1 is the total number of occurrences of operators;

N2 is the total number of occurrences of operands.

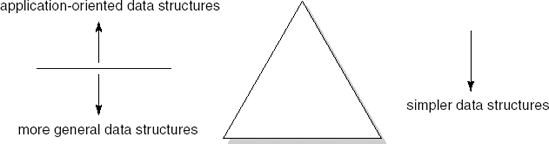

Figure 12.3 contains a simple sorting routine. Table 12.1 lists the operators and operands of this routine together with their frequencies. Note that there is no generally agreed definition of what exactly an operator or operand is, so the numbers given have no absolute meaning. This is part of the criticism of this theory.

Table 12.1. Counting the number of operators and operands in the sort routine

Operator | Number of occurrences | Operand | Number of occurrences |

|---|---|---|---|

| 1 |

| 9 |

| 1 |

| 2 |

| 1 |

| 7 |

| 1 |

| 6 |

| 4 |

| 2 |

| 7 |

| 1 |

{ } | 4 |

| 2 |

| 2 | ||

| 5 | ||

< | 2 | ||

| 2 | ||

| 1 | ||

| 2 | ||

| 1 | ||

| 1 | ||

> | 1 | ||

| 3 | ||

n1 = 17 | N1 = 39 | n2 = 7 | N2 = 29 |

Using the primitive entities defined above, Halstead defines a number of derived entities, such as:

Size of the vocabulary: n = n1 + n2.

Program length: N = N1 + N2.

Program volume: V = N log 2 n.

This is the minimal number of bits needed to store N elements from a set of cardinality n.

Program level: L = V*/V.

Here V* is the most compact representation of the algorithm in question. For the example in Figure 12.3 this is

sort(x);, so n = N = 3, and V* = 3 log 2 3. From the formula, it follows that L is at most 1. Halstead postulates that the program level increases if the number of different operands increases, while it decreases if the number of different operators or the total number of operands increases. As an approximation of L, he therefore suggests:

Programming effort: E = V/L.

The effort needed increases with volume and decreases as the program level increases. E represents the number of mental discriminations (decisions) to be taken while implementing the problem solution.

Estimated programming time in seconds:

The constant 18 is determined empirically. Halstead explains this number by referring to (Stroud, 1967), which discusses the speed with which human memory processes sensory input. This speed is said to be 5–20 units per second. In Halstead's theory, the number 18 is chosen. This number is also referred to as Stroud's number.

The above entities can only be determined after the program has been written. It is, however, possible to estimate a number of these entities. When doing so, the values for n1 and n2 are assumed to be known. This may be the case, for instance, after the detailed design step. Halstead then estimates program length as:

An explanation for this formula can be given as follows. There are n12n1 × n22n2 ways to combine the n given symbols such that operators and operands alternate. However, the program is organized and organization generally gives a logarithmic reduction in the number of possibilities. Doing so yields the above formula for

Table 12.2. Values for 'software science' entities for the routine in Figure 12.3

Entity | Value |

|---|---|

Size vocabulary | 24 |

Program length | 68 |

Estimated program length | 89 |

Program volume | 312 |

Level of abstraction | 0.015 |

Estimated level of abstraction | 0.028 |

Programming effort | 20 800 |

Estimated programming time | 1 155 |

A number of empirical studies have addressed the predictive value of Halstead's formulae. These studies often give positive evidence of the validity of the theory.

The theory has also been heavily criticized. The underpinning of Halstead's formulae is not convincing. Results from cognitive psychology, such as Stroud's number, are badly used, which weakens the theoretical foundation. Halstead concentrates on the coding phase and assumes that programmers are 100% devoted to a programming task for an uninterrupted period of time. Practice is likely to be quite different. Different people use quite different definitions of the notions of operator and operand, which may lead to widely different outcomes for the values of entities. Yet, Halstead's work has been very influential. It was the first major body of work to point out the potential of metrics for software development.

The second class of intracomponent complexity metrics concerns metrics based on the structure of the software. If we try to derive a complexity metric from the structure of a piece of software, we may focus on the control structure, the data structures, or a combination of these.

If we base the complexity metric on the use of data structures, we may do so by considering, for instance, the number of instructions between successive references to one object. If this number is large, information about these variables must be retained for a long period of time when we try to comprehend that program text. Following this line of thought, complexity can be related to the average number of variables for which information must be kept by the reader.

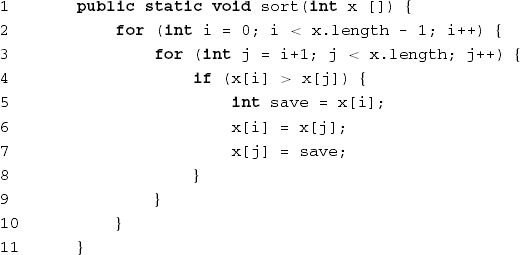

The best-known structure-based complexity metric is McCabe's cyclomatic complexity (McCabe, 1976). McCabe bases his complexity metric on a (directed) graph depicting the control flow of a component. He assumes that the graph of a single component has a unique start and end node, that each node is reachable from the start node, and that the end node can be reached from each node. In that case, the graph is connected. If the component is a class consisting of one or more methods, then the control graph has a number of connected subgraphs, one for each of its methods.

The cyclomatic complexity, CV, equals the number of predicates (decisions) plus 1 in the component that corresponds to this control graph. Its formula reads

where e, n and p denote the number of edges, nodes, and connected subgraphs in the control graph, respectively.

Figure 12.4 shows the control flow graph for the example routine from Figure 12.3. The numbers inside the nodes correspond to the line numbers from Figure 12.3. The cyclomatic complexity of this graph is 13 − 11 + 1 + 1 = 4. The decisions in the component from Figure 12.3 occur in lines 2, 3 and 4. In both for loops, the decision is to either exit the loop or iterate it. In the if statement, the choice is between the then part and the else part.

McCabe suggests imposing an upper limit of ten for the cyclomatic complexity of a component. McCabe's complexity metric is also applied to testing. One criterion used during testing is to get a good coverage of the possible paths through the component. Applying McCabe's cyclomatic complexity leads to a structured testing strategy involving the execution of all linearly independent paths (see also Chapter 13).[15]

Complexity metrics such as those of Halstead, McCabe, and many others all measure attributes which are in some sense related to the size of the task to be accomplished, be it the time in man-months, the number of lines of code, or something else. As such, they may serve various purposes: determining the optimal size of a component, estimating the number of errors in a component, or estimating the cost of a piece of software.

All known complexity metrics suffer from some serious shortcomings, though:

They are not very context-sensitive. For example, any component with five

ifstatements has the same cyclomatic complexity. Yet we may expect that different organizations of thoseifstatements (consecutive versus deeply nested, say) have an effect on the perceived complexity of those components. In terms of measurement theory, this means that cyclomatic complexity does not fulfill the 'representation condition', which says that the empirical relations should be preserved in the numerical relation system. If we empirically observe that component A is more complex than component B, then any complexity metric F should be such that FA > FB.They measure only a few facets. Halstead's method does not take into account the control flow complexity, for instance.

We may formulate these shortcomings as follows: complexity metrics tell us something about the complexity of a component (i.e. a higher value of the metric is likely to represent greater complexity), but a more complex component does not necessarily result in a higher value for a complexity metric. Complexity is made up of many specific attributes. It is unlikely that there will ever be one 'general' complexity metric.

We should thus be very careful in the use of complexity metrics. Since they seem to measure along different dimensions of what is perceived as complexity, the use of multiple metrics is likely to yield better insights. But even then the results must be interpreted with care. Redmond and Ah-Chuen (1990), for instance, evaluated various complexity metrics for a few systems, including the MINIX operating system. Of the 277 components in MINIX, 34 have a cyclomatic complexity greater than ten. The highest value (58) was observed for a component that handles a number of ASCII escape character sequences from the keyboard. This component, and most others with a large cyclomatic complexity, was considered 'justifiably complex'. An attempt to reduce the complexity by splitting those components would increase the difficulty of understanding them while artificially reducing its complexity value. Complexity yardsticks too can cause harm in misuse.

Finally, we may note that various validations of both software science and cyclomatic complexity indicate that they are not substantially better indicators of coding effort, maintainability, or reliability than the length of a component (number of lines of code). The latter is much easier to determine, though.

The high correlation that is often observed between a size-related complexity metric and a control-related complexity metric such as McCabe's cyclomatic complexity should not come as a surprise. Large components tend to have more if statements than small components. What counts, however, is the density with which those if statements occur. This suggests a complexity metric of the form CV/LOC rather than CV.

We may depict the outcome of the design process, a set of components and their mutual dependencies, in a graph. The nodes of this graph correspond to components and the edges denote relations between components. We may think of many types of intercomponent relations, such as:

component A contains component B;

component A follows component B;

component A delivers data to component B;

component A uses component B.

The type of dependencies we are interested in are those that determine the complexity of the relations between components. The amount of knowledge that components have of each other should be kept to a minimum. To be able to assess this, it is important to know, for each component, which other components it uses, since that tells us which knowledge of each other they (potentially) use. In a proper design, the information flow between components is restricted to flow that comes about through method calls. The graph depicting the 'uses' relation is therefore often termed a call graph.

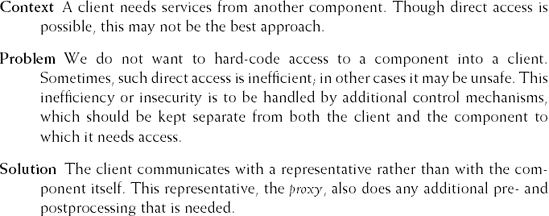

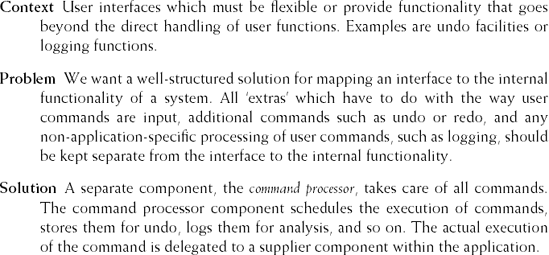

The call graph may have different shapes. In its most general form it is a directed graph (see Figure 12.5a).[16] If the graph is acyclic, i.e. it does not contain a path of the form M1, M2, ..., Mn, M1, the uses relation forms a hierarchy. We may then decompose the graph into a number of distinct layers such that a component at one layer uses only components from lower layers (Figure 12.5b). Going one step further, we get a scheme such as the one in Figure 12.5c, where components from level i use only components from level i + 1. Finally, if each component is used by only one other component, the graph reduces to a tree (Figure 12.5d).

Figure 12.5. Component hierarchies: (a) directed graph, (b) directed acyclic graph, (c) layered graph, (d) tree

There are various aspects of the call graph that can be measured. Directly measurable attributes that relate to the 'shape' of the call graph include:

its size, measured in terms of the number of nodes, the number of edges, or the sum of these;

its depth, the length of the longest path from the root to some leaf node (in an acyclic directed graph);

its width, the maximum number of nodes at some level (in an acyclic directed graph).

We do not know of studies that try to quantitatively relate those measures to other complexity-related aspects such as debugging time, maintainability, etc. They may be used, though, as one of the parameters in a qualitative assessment of a design.

It is often stated that a good design should have a tree-like call graph. It is therefore worthwhile to consider the tree impurity of a call graph, i.e. the extent to which the graph deviates from a pure tree. Suppose we start with a connected (undirected) graph (such as the ones in Figure 12.5b-d, if we ignore the direction of the arrows). If the graph is not a tree, it has at least one cycle, i.e. a path from some node A via one or more other nodes back to A again. We may then remove one of the edges from this cycle and the result will still be a connected graph. We may continue removing edges from cycles until the result is a tree, as we did in the transition from Figure 12.5b to Figure 12.5c to Figure 12.5d. The final result is called the graph's spanning tree. The number of edges removed in this process is an indication of the graph's tree impurity.

In order to obtain a proper measure of tree impurity we proceed as follows. The complete graph Kn is the graph with n nodes and the maximum number of edges. This maximum number of edges is n(n − 1)/2. A tree with n nodes has (n − 1) edges. Given a connected graph G with n nodes and e edges, we define its tree impurity m(G) as the number of extra edges divided by the maximum number of extra edges:

This measure of tree impurity fits our intuitive notion of that concept. The value of m(G) lies between 0 and 1. It is 0 if G is a tree and 1 if it is a complete graph. If we add an edge to G, the value of m(G) increases. Moreover, the 'penalty' of extra edges is proportional to the size of the spanning tree.

It is not always easy, or even meaningful, to strive for a neat hierarchical decomposition. We will often have to settle for a compromise. It may for instance be appropriate to decompose a system into a number of clusters, each of which contains a number of components. The clusters may then be organized hierarchically, while the components within a given cluster show a more complicated interaction pattern. Also, tree-like call graphs do not allow for reuse (if a component is reused within the same system, its node in the call graph has at least two ancestors).

The call graph allows us to assess the structure of a design. In deriving the measures above, each edge in the call graph is treated alike. Yet, the complexity of the information flow that is represented by the edges is likely to vary. As noted in the earlier discussion on coupling, we would like the intercomponent connections to be 'thin'. Therefore, we would like a measure which does not merely count the edges, but which also considers the amount of information that flows through them.

The best-known attempt to measure the total level of information flow between the components of a system is due to Henri and Kafura (1981). Their measures were able to identify change-prone UNIX procedures and evaluate potential design changes. Shepperd (1990) studied the information flow measure extensively and proposed several refinements, thus obtaining a 'purer' metric. Using Shepperd's definitions, the information flow measure is based on the following notions of local and global data flow:

A local flow from component A to component B exists if

A invokes B and passes it a parameter, or

B invokes A and A returns a value.

A global flow from component A to component B exists if A updates some global data structure and B retrieves from that structure.

Using these notions of local and global data flow, Shepperd defines the 'complexity' of a component M as

where

fan-in(M) is the number of (local and global) flows whose sink is M, and

fan-out(M) is the number of (local and global) flows whose source is M.

A weak point of the information flow metric is that all flows have equal weight. Passing a simple integer as a parameter contributes equally to this measure of complexity as invoking a complex global data structure. The abstract-data-type architectural style easily results in components with a high fan-in and fan-out. If the same system is built using global data structures, its information flow metric is likely to have a smaller value. Yet, the information flow to and from the components in the abstract-data-type style generally concern simple scalar values only and are therefore considered simpler.

In a more qualitative sense, the information flow metric may indicate spots in the design that deserve our attention. If some component has a high fan-in, this may indicate that the component has little cohesion. Also, if we consider the information flow per level in a layered architecture, an excessive increase from one level to the next might indicate a missing level of abstraction.

During design, we (pre)tend to follow a top-down decomposition strategy. We may take a completely different stand and try to compose a hierarchical system structure from a flat collection of system elements. Elements that are in some sense 'closest' to one another are grouped together. We then have to define some measure for the distance between elements and a mathematical technique known as cluster analysis can be used to do the actual grouping. Elements in the same group are more like other elements within the same group and less like elements in other groups. If the measure is based on the number of data types that elements have in common, this clustering results in abstract data types or, more generally, components having high cohesion. If the measure is based on the number of data bindings between elements, the result is likely to have a low value for the information-flow metric.

The measure chosen, in a sense, determines how we define 'friendship' between elements. Close friends should be grouped in the same component while distant relatives may reside in different components. The various qualitative and quantitative design criteria that we have discussed above have different, but in essence very similar, definitions of friendship.

Though much work remains to be done, a judicious use of available design metrics is already a valuable tool in the design and quality assurance of software systems.

At the level of individual methods of an object-oriented system, we may assess quality characteristics of components by familiar metrics such as: length, cyclomatic complexity, and so on. At higher levels of abstraction, object-oriented systems consist of a collection of classes that interact by sending messages. Familiar intercomponent metrics which focus on the relationships between components do not account for the specifics of object-oriented systems. In this section, we discuss a few metrics specifically aimed at characteristics of object-oriented systems. These metrics are listed in Table 12.3.

WMC is a measure of the size of a class. The assumption is that larger classes are in general less desirable. They take more time to develop and maintain and they are likely to be less reusable. The formula is: WMC = Σni = 1 ci, where ci is the complexity of method i. For the complexity of an individual method we may choose its length, cyclomatic complexity, and so on. Most often, ci is set at 1. In that case, we simply count the number of methods. Besides being simple, this has the advantage that the metric can be applied during design, once the class interface has been decided upon. Note that each entry in the class interface counts as one method, the principle being that each method which requires additional design effort should be counted. For example, different constructors for the same operation, as is customary in C++, count as different methods.

Classes in an object-oriented design are related through a subtype — supertype hierarchy. If the class hierarchy is deep and narrow, a proper understanding of a class may require knowledge of many of its superclasses. On the other hand, a wide and shallow inheritance structure occurs when classes are more loosely coupled. The latter situation may indicate that commonality between elements is not sufficiently exploited. DIT is the distance of a class to the root of its inheritance tree. Note that the value of DIT is somewhat language-dependent. In Smalltalk, for example, every class is a subclass of Object, and this increases the value of DIT. A widely accepted heuristic is to strive for a forest of classes, i.e. a collection of inheritance trees of medium height.

NOC counts the number of immediate descendants of a class. If a class has a large number of descendants, this may indicate an improper abstraction of the parent class. A large number of descendants also suggests that the class is to be used in a variety of settings, which will make it more error-prone. The idea thus is that higher values of NOC suggest a higher complexity of the class.

CBO is the main coupling metric for object-oriented systems. Two classes are coupled if a method of one class uses a method or state variable of the other class. The CBO is a count of the number of other classes with which it is coupled. As with the traditional coupling metric, high values of CBO suggest tight bindings with other components and this is undesirable.

In the definition of CBO, all couplings are considered equal. However, if we look at the different ways in which classes may be coupled, it is reasonable to say that:

access to state variables is worse than mere parameter passing;

access to elements of a foreign class is worse than access to elements of a superclass;

passing many complex parameters is worse than passing a few simple parameters;

messages that conform to Demeter's Law[17] are better than those which do not.

If we view the methods as bubbles and the couplings as connections between bubbles, CBO simply counts the number of connections for each bubble. In reality, we consider some types of couplings worse than others: some connections are 'thicker' than others and some connections are to bubbles 'further away'. For the representation condition of measurement theory to hold, these empirical relations should be reflected in the numerical relation system.

Martin (2002) defines coupling measures at the package level:

The afferent coupling (Ca) of a package P is the number of other packages that depend upon classes within P (through inheritance or associations). It indicates the dependence of a package on its environment.

The efferent coupling (Ce) of a package P is the number of packages that classes within P depend upon. It indicates the dependence of the environment on a package.

Adding these numbers together results in a total coupling measure of a package P. The ratio I = Ce/(Ce + Ca) indicates the relative dependence of the environment to P with respect to the total number of dependencies between P and its environment. If Ce equals zero, P does not depend at all on other packages and I = 0 as well. If on the other hand Ca equals zero, P only depends on other packages and no other package depends on P. In that case, I = 1. I thus can be seen as an instability measure for P. Larger values of I denote a larger instability of the package.

RFC measures the 'immediate surroundings' of a class. Suppose a class C has a collection of methods M. Each method from M may in turn call other methods, from C or any other class. Let {Ri} be the set of methods called from method Mi. Then the response set of this class is defined as: {M} Ui {Ri}, i.e. the set of messages that may potentially be executed if a message is sent to an object of class C. RFC is defined as the number of elements in the response set. Note that we only count method calls up to one level deep. Larger values of RFC means that the immediate surroundings of a class is larger in size. There is, then, a lot of communication with other methods or classes. This makes comprehension of a class more difficult and increases test time and complexity.

The final object-oriented metric to be discussed is the lack of cohesion of a method. The traditional levels of cohesion express the degree of mutual affinity of the elements of a component. It is a measure of the glue that keeps the component together. If all methods of a class use the same state variables, these state variables serve as the glue which ties the methods together. If some methods use one subset of the state variables and other methods use another subset of the state variables, the class lacks cohesion. This may indicate a flaw in the design and it may be better to split it into two or more subclasses. LCOM is the number of disjoint sets of methods of a class. Any two methods in the same set share at least one local state variable. The preferred value for LCOM is 0.

There are obviously many more metrics that aim to address the specifics of object-oriented systems. Most of these have not been validated extensively, though. Several experiments have shown that the above set does have some merit. Overall, WMC, CBO, RFC and LCOM have been found to be the more useful quality indicators.

These metrics for example were able to predict fault-proneness of classes during design, and were found to have a strong relationship to the maintenance effort. The merits of DIT and NCO remain somewhat unclear.

Note that many of these metrics correlate with class size. One may expect that larger classes have more methods, more descendants, more couplings with other classes, etc. (El Emam et al., 2001) indeed found that class size has a confounding effect on the values of the above metrics. It thus remains questionable whether these metrics tell more than a plain LOC count.

Having discussed the properties of a good system decomposition, we now come to a question which is at least as important: how do you get a good decomposition to start with?

There exist a vast number of design methods, a sample of which are given in Table 12.4. These design methods generally consist of a set of guidelines, heuristics, and procedures on how to go about designing a system. They also offer a notation for expressing the result of the design process. Together they provide a systematic way of organizing and structuring the design process and its products.

For some methods, such as FSM or Petri nets, emphasis is on the notation, while the guidelines on how to tackle design are not very well developed. Methods such as JSD, on the other hand, offer extensive prescriptive guidelines as well. Most notations are graphical and somewhat informal, but OBJ uses a very formal mathematical language. Some methods concentrate on the design stage proper, while others, such as SSADM and JSD, are part of a wider methodology covering other life cycle phases as well. Finally, some methods offer features that make them especially useful for the design of certain types of application, such as SA/RT (for real-time systems) or Petri nets (for concurrent systems).

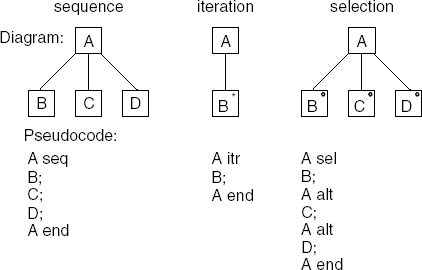

In the following subsections, we discuss three classical design methods:

functional decomposition, which is a rather general approach to system design, not tied to any specific method listed in Table 12.4; many different notations can be used to depict the resulting design, ranging from flowcharts or pseudocode to algebraic specifications;

data flow design, as exemplified by SA/SD;

design based on data structures, as is done in JSP and JSD.

Table 12.4. A sample of design methods

Decision tables | Matrix representation of complex decision logic at the detailed design level. |

E-R | Entity-relationship model. Family of graphical techniques for expressing data relationships; see also Chapter 10. |

Flowcharts | Simple diagram technique to show control flow at the detailed design level. Exists in many flavors; see (Tripp, 1988) for an overview. |

FSM | Finite state machine. A way to describe a system as a set of states and possible transitions between those states; the resulting diagrams are called state transition diagrams; see also Chapter 10. |

JSD | Jackson System Development; see Section 12.2.3. Successor to, and more elaborate than, JSP; has an object-oriented flavor. |

JSP | Jackson Structured Programming. Data-structure-oriented method; see Section 12.2.3. |

NoteCards | Example hypertext system. Hypertext systems make it possible to create and navigate through a complex organization of unstructured pieces of text (Conklin, 1987). |

OBJ | Algebraic specification method; highly mathematical (Goguen, 1986). |

OOD | Object-oriented design; exists in many flavors; see Section 12.3. |

Petri nets | Graphical design representation, well-suited for concurrent systems. A system is described as a set of states and possible transitions between those states. States are associated with tokens and transitions are described by firing rules. In this way, concurrent activities can be synchronized (Peterson, 1981). |

SA/SD | Structured analysis/structured design; data flow design technique; see also Section 12.2.2. |

SA/RT | Extension to structured analysis so that real-time aspects can be described (Hatley and Pirbhai, 1988). |

SSADM | Structured systems analysis and design method. A highly prescriptive method for performing the analysis and design stages; UK standard (Downs et al., 1992). |

A fourth design method, object-oriented design, is discussed in Section 12.3. Whereas the above three methods concentrate on identifying the functions of the system, object-oriented design focuses on the data on which the system is to operate. Object-oriented design is the most popular design approach today, not the least because of the omnipresence of UML as a notational device for the outcome of both requirements engineering and design.

In a functional decomposition, the intended function is decomposed into a number of subfunctions that each solve part of the problem. These subfunctions themselves may be further decomposed into yet more primitive functions, and so on. Functional decomposition is a design philosophy rather than a design method. It denotes an overall approach to problem decomposition which underlies many a design method.

With functional decomposition, we apply divide-and-conquer tactics. These tactics are analogous to, but not the same as, the technique of stepwise refinement as it is applied in 'programming in the small'. Using stepwise refinement, the refinements tend to be context-dependent. As an example, consider the following pseudo-code algorithm to insert an element into a sorted list:

procedureinsert(a, n, x);begininsert x at the end of the list; k:= n + 1;whileelementkis not at its proper placedoswap elementkand elementk- 1; k:= k-1enddo;endinsert;

The refinement of a pseudo-code instruction such as elementk is not at its proper place is done within the context of exactly the above routine, using knowledge of other parts of this routine. In the decomposition of a large system, it is precisely this type of dependency that we try to avoid. The previous section addressed this issue at great length.

During requirements engineering, the base machine has been decided upon. This base machine need not be a 'real' machine. It can be a programming language or some other set of primitives that constitutes the bottom layer of the design. During this phase too, the functions to be provided to the user have been fixed. These are the two ends of a rope. During the design phase we try to get from one end of this rope to the other. If we start from the user function end and take successively more detailed design decisions, the process is called top-down design. The reverse is called bottom-up design.

Top-down design: Starting from the main user functions at the top, we work down decomposing functions into subfunctions. Assuming we do not make any mistakes on the way down, we can be sure to construct the specified system. With top-down design, each step is characterized by the design decisions it embodies. To be able to apply a pure top-down technique, the system has to be fully described. This is hardly ever the case. Working top-down also means that the earliest decisions are the most important ones. Undoing those decisions can be very costly.

Bottom-up design: Using bottom-up design, we start from a set of available base functions. From there we proceed towards the requirements specification through abstraction. This technique is potentially more flexible, especially since the lower layers of the design could be independent of the application and thus have wider applicability. This is especially important if the requirements have not been formulated very precisely yet or if a family of systems has to be developed. A real danger of the bottom-up technique is that we may miss the target.

In their pure form, neither the top-down nor the bottom-up technique is likely to be used all that often. Both techniques are feasible only if the design process is a pure and rational one. And this is an idealization of reality. There are many reasons why the design process cannot be rational. Some of these have to do with the intangibles of design processes per se, some originate from accidents that befall many a software project. Parnas and Clements (1986) list the following reasons, amongst others:

Mostly, users do not know exactly what they want and they are not able to tell all they know.

Even if the requirements are fully known, a lot of additional information is needed. This information is discovered only when the project is under way.

Almost all projects are subject to change. Changes influence earlier decisions.

People make errors.

During design, people use the knowledge they already have, experiences from earlier projects, and so on.

In many projects, we do not start from scratch — we build from existing software.

Design exhibits a 'yo-yo' character: something is devised, tried, rejected again, new ideas crop up, etc. Designers frequently go about in rather opportunistic ways. They frequently switch from high-level, application domain issues to coding and detailed design matters, and use a variety of means to gather insight into the problem to be solved. At most, we may present the result of the design process as if it came about through a rational process.

A general problem with any form of functional decomposition is that it is often not immediately clear along which dimension the system is decomposed. If we decompose along the time axis, the result is often a main program that controls the order in which a number of subordinate components is called. In Yourdon's classification, the resulting cohesion type is temporal. If we decompose with respect to the grouping of data, we obtain the type of data cohesion exhibited in abstract data types. Both these functional decompositions can be viewed as an instance of some architectural style. Rather than worrying about which dimension to focus on during functional decomposition, you had better opt for a particular architectural style and let that style guide the decomposition.

At some intermediate level, the set of interrelated components comprises the software architecture as discussed in Chapter 11. This software architecture is a product which serves various purposes: it can be used to discuss the design with different stakeholders; it can be used to evaluate the quality of the design; it can be the basis for the work-breakdown structure; it can be used to guide the testing process, etc. If a software architecture is required, it necessitates a design approach in which, at quite an early stage, every component and connection is present. A bottom-up or top-down approach does not meet this requirement, since in both these approaches only part of the solution is available at intermediate points in time.

Parnas (1978) offers the following useful guidelines for a sound functional decomposition:

Try to identify subsystems. Start with a minimal subset and define minimal extensions to this subset.

The idea behind this guideline is that it is extremely difficult, if not impossible, to get a complete picture of the system during requirements engineering. People ask too much or they ask the wrong things. Starting from a minimal subsystem, we may add functionality incrementally, using the experience gained with the actual use of the system. The idea is very similar to that of agile approaches, discussed in Chapter 3.

Apply the information-hiding principle.

Try to define extensions to the base machine step by step.

This holds for both the minimal machine and its extensions. Such incremental extensions lead to the concept of a virtual machine. Each layer in the system hierarchy can be viewed as a machine. The primitive operations of this machine are implemented by the lower layers of the hierarchy. This machine view of the component hierarchy nicely maps onto a layered architectural style. It also adds a further dimension to the system structuring guidelines offered in Section 12.1.5.

Apply the uses relation and try to place the dependencies thus obtained in a hierarchical structure.

Obviously, the above guidelines are strongly interrelated. It has been said before that a strictly hierarchical tree structure of system components is often not feasible. A compromise that often is feasible is a layered system structure as depicted in Figure 12.6.

The arrows between the various nodes in the graph indicate the uses relation. Various levels can be distinguished in the structure depicted. Components at a given level only use components from the same, or lower, levels. The layers distinguished in Figure 12.6 are not the same as those induced by the acyclicity of the graph (as discussed in Section 12.1.5) but are rather the result of viewing a distinct set of components as an abstract, virtual machine. Deciding how to group components into layers in this way involves considering the semantics of those components. Lower levels in this hierarchy bring us closer to the 'real' machine on which the system is going to be executed. Higher levels are more application-oriented. The choice of the number of levels in such an architecture is a (problem-dependent) design decision.

This work of Parnas heralds some of the notions that were later recognized as important guiding principles in the field of software architecture. The idea of a minimal subset to which extensions are defined is very similar to the notion of a product-line architecture: a basic architecture from which a family of similar systems can be derived. The layered approach is one of the basic architectural styles discussed in Section 11.4.

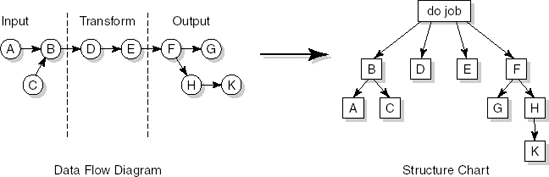

The data flow design method originated in the early 1970s with Yourdon and Constantine. In its simplest form, data flow design is but a functional decomposition with respect to the flow of data. A component is a black box which transforms some input stream into some output stream. In data flow design, heavy use is made of graphical representations known as data flow diagrams (DFDs) and structure charts. Data flow diagrams were introduced as a modeling notation in Section 10.1.3.

Data flow design is usually seen as a two-step process. First, a logical design is derived in the form of a set of data flow diagrams. This step is referred to as structured analysis (SA). Next, the logical design is transformed into a program structure represented as a set of structure charts. The latter step is called structured design (SD). The combination is referred to as SA/SD.

Structured analysis can be viewed as a proper requirements engineering method insofar as it addresses the modeling of some universe of discourse (UoD). It should be noted that, as data flow diagrams are refined, the analyst performs an implicit (top-down) functional decomposition of the system as well. At the same time, the diagram refinements result in corresponding data refinements. The analysis process thus has design aspects as well.

Structured design, being a strategy to map the information flow contained in data flow diagrams into a program structure, is a genuine component of the (detailed) design phase.

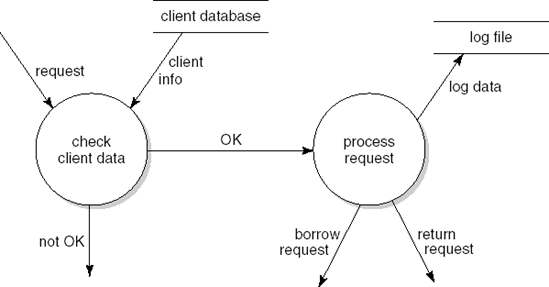

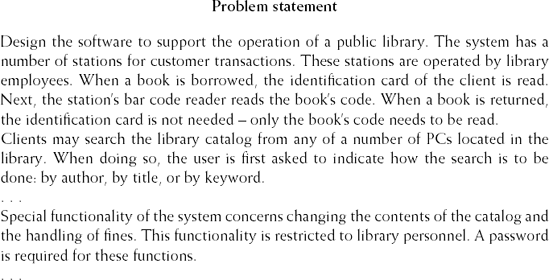

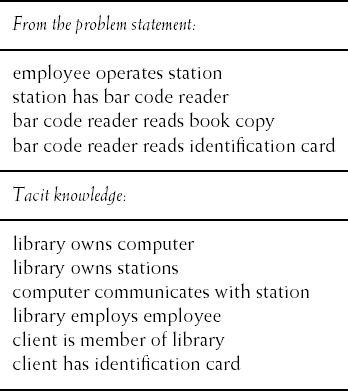

The main result of structured analysis is a series of data flow diagrams. Four types of data entity are distinguished in these diagrams:

External entities are the source or destination of a transaction. These entities are located outside the domain considered in the data flow diagram. External entities are indicated as squares.

Processes transform data. Processes are denoted by circles.

Data flows between processes, external entities, and data stores. A data flow is indicated by an arrow. Data flows are paths along which data structures travel.

Data stores lie between two processes. This is indicated by the name of the data store between two parallel lines. Data stores are places where data structures are stored until needed.

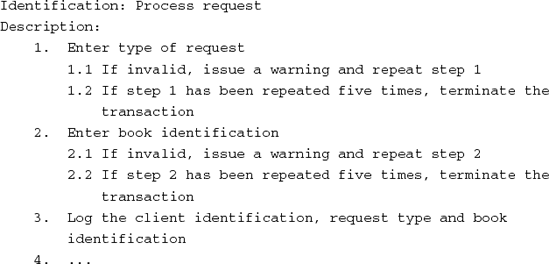

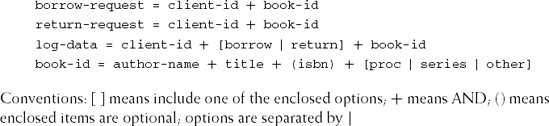

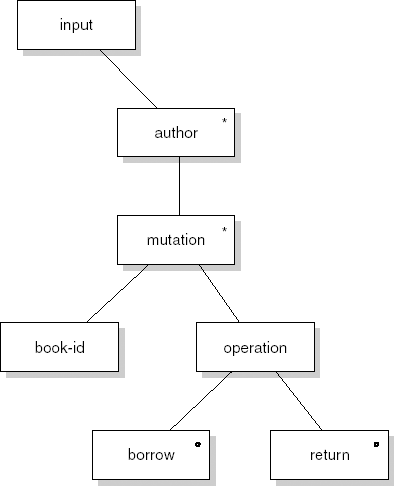

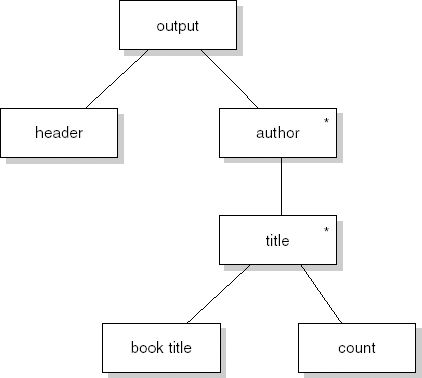

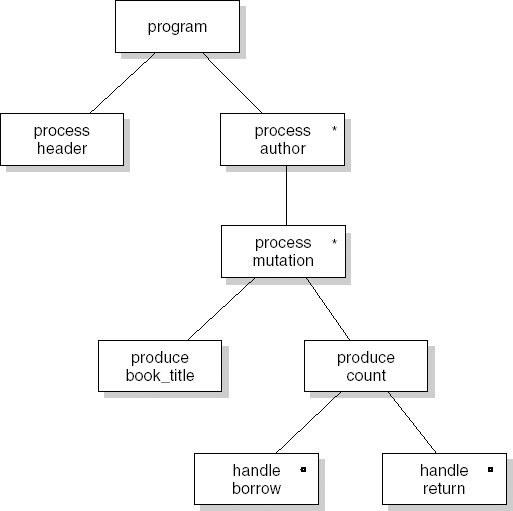

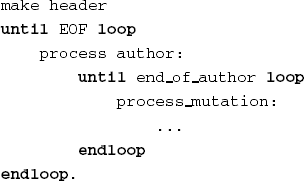

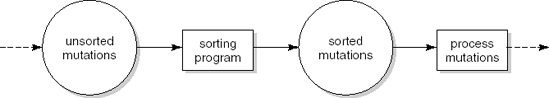

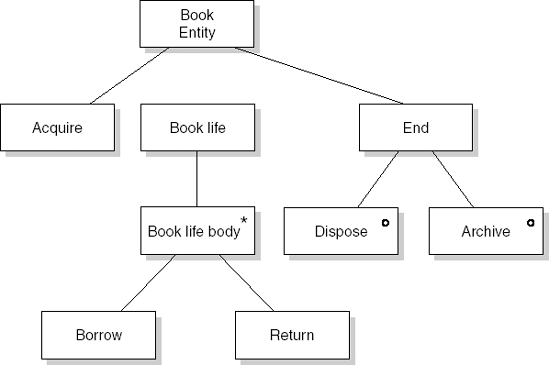

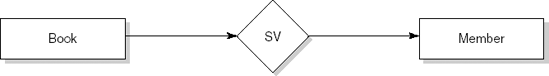

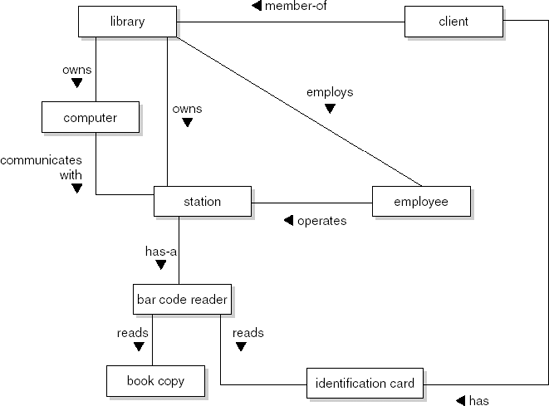

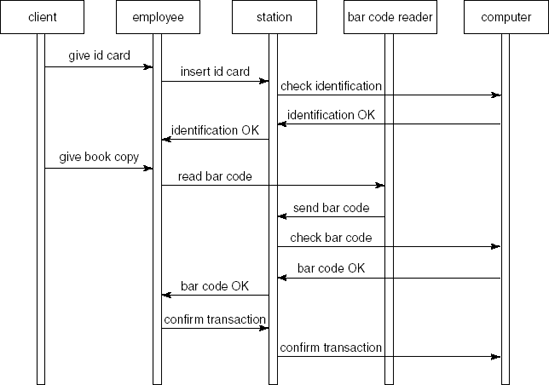

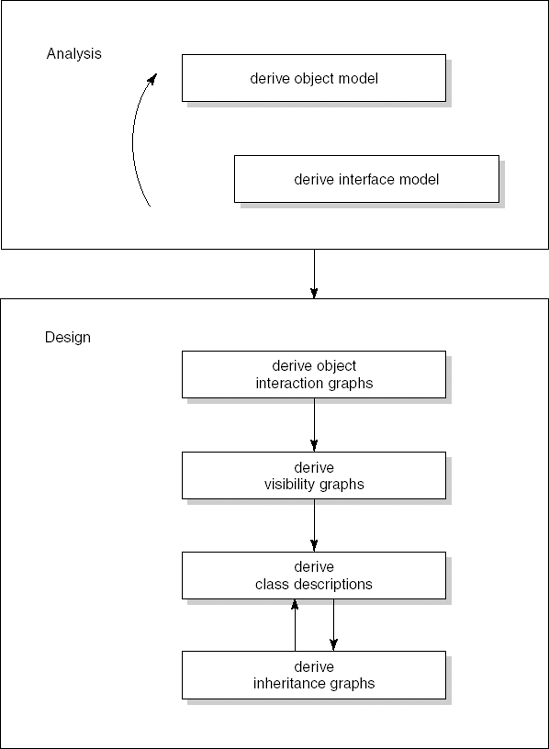

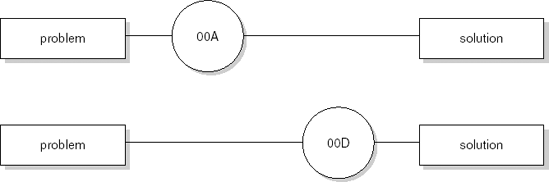

We illustrate the various process steps of SA/SD by analyzing and designing a simple library automation system. The system allows library clients to borrow and return books. It also reports to library management about how the library is used by its clients (for example, the average number of books on loan and authors much in demand).