With Gerrit C. van der Veer, Open University, Heerlen, The Netherlands

LEARNING OBJECTIVES

To be aware of different architectural styles for interactive systems

To appreciate the role of different types of expertise in user interface design

To be aware of the role of various models in user interface design

To understand that a user interface entails considerably more than what is represented on the screen

To recognize the differences between a user-centered approach to the design of interactive systems and other requirements engineering approaches

Note

Software systems are used by humans. Cognitive issues are a major determinant of the effectiveness with which users go about their work. Why is one system more understandable than another? Why is system X more 'user-friendly' than system Y? In the past, the user interface was often only addressed after the system had been fully designed. However, the user interface concerns more than the size and placement of buttons and pull-down menus. This chapter addresses issues about the human factors that are relevant to the development of interactive systems.

Today, user needs are recognized to be important in designing interactive computer systems, but as recently as 1980, they received little emphasis.

(Grudin, 1991)

We can't worry about these user interface issues now. We haven't even gotten this thing to work yet!

(Mulligan et al., 1991)

A system in which the interaction occurs at a level which is understandable to the user will be accepted faster than a system where it is not. A system which is available at irregular intervals or gives incomprehensible error messages is likely to meet resistance. A 1992 survey found that 48% of the code of applications was devoted to the user interface, and about 50% of the development time was devoted to implementing that part of the application (Myers and Rosson, 1992). Often, the user interface is one of the most critical factors as regards the success or failure of a computerized system. Yet, most software engineers know fairly little about this aspect of our trade.

Users judge the quality of a software system by the degree to which it helps them to accomplish their tasks and by the sheer joy they have in using it. This judgment is to a large extent determined by the quality of the user interface. Good user interfaces contribute to a system's quality in the following ways (Bias and Mayhew, 1994):

Increased efficiency: If the system fits the way its users work and if it has a good ergonomic design, users can perform their tasks efficiently. They do not lose time struggling with the functionality and its appearance on the screen.

Improved productivity: A good interface does not distract the user, but rather allows him to concentrate on the task to be done.

Reduced errors: Many so-called 'human errors' can be attributed to poor user interface quality. Avoiding inconsistencies, ambiguities, and so on, reduces user errors.

Reduced training: A poor user interface hampers learning. A well-designed user interface encourages its users to create proper models and reinforces learning, thus reducing training time.

Improved acceptance: Users prefer systems whose interface is well-designed. Such systems make information easy to find and provide the information in a form which is easy to use.

In a technical sense, the user interface often comprises one or more layers in the architecture of a system. Section 16.1 discusses two well-known architectural styles that highlight the place and role of the user interface in interactive systems. A common denominator of these and other schemes is that they separate the functionality of the system from the interaction with the user. In a similar vein, many software engineering methods also separate the design of the functionality from the design of the user interface. The design of the user interface then reduces to a mere design of the screen layout, menu structure, size and color of buttons, format of help and error messages, etc. User interface design then becomes an activity that is only started after the requirements engineering phase has finished. It is often done by software engineers who have little specialized knowledge of user interface design.

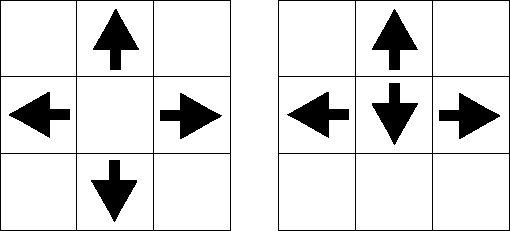

Software engineers are inclined to model the user interface after the structure of the implementation mechanism, rather than the structure of the task domain. For instance, a structure-based editor may force you to input ↑ 10 2 in order to obtain 10², simply because the former is easier for the system to recognize. This resembles the interface to early pocket calculators, where the stack mechanism used internally shows itself in the user interface. Similarly, user documentation often follows implementation patterns and error messages are phrased in terms that reflect the implementation rather than the user tasks.
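The pocket-calculator remark can be made concrete with a small sketch of a stack-based evaluator. It is only an illustration under our own assumptions: the function name `eval_postfix`, the operator table, and the use of `^` for exponentiation are not taken from any particular calculator. Note how the user has to type the operands first and the operator last, simply because that is the order in which the stack needs them.

```python
import operator

# Hypothetical operator table; "^" stands for exponentiation.
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
    "^": operator.pow,
}


def eval_postfix(tokens):
    """Evaluate postfix input the way a stack-based calculator would."""
    stack = []
    for tok in tokens:
        if tok in OPS:
            # The operator consumes the two most recent operands.
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[tok](left, right))
        else:
            stack.append(float(tok))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]


# The user must enter "10 2 ^" rather than anything resembling the
# way the expression is written on paper.
result = eval_postfix("10 2 ^".split())
```

The implementation is pleasantly short, which is exactly why such an interface is tempting for the developer; the cost is carried by the user, who has to think in terms of the stack.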

In this chapter we advocate a rather different approach. This approach may be summarized as 'The user interface is the system'. This broader view of the concept user interface and the disciplines that are relevant while developing user interfaces are discussed in Section 16.2. Within the approach discussed, the design of the user interface replaces what we used to call requirements engineering. The approach is inspired by the observation that the usability of a system is not only determined by its perceptual appearance in the form of menus, buttons, etc. The user of an interactive system has to accomplish certain tasks. Within the task domain, e.g. sending electronic mail or preparing documents, these tasks have a certain structure. The human-computer interaction (HCI) then should have the same structure, as far as this can be accomplished. Discovering an adequate structuring of the task domain is considered part of user interface design. This discovery process and its translation into user interface representations requires specific expertise, expertise that most software engineers do not possess. Section 16.5 discusses this eclectic approach to user interface design. Its main activities – task analysis, interface specification, and evaluation – are discussed in Sections 16.6 to 16.8.

In order to develop a better understanding of what is involved in designing user interfaces, it is necessary to take a closer look at the role of the user in operating a complex device such as a computer. Two types of model bear upon the interplay between a human and the computer: the user's mental model and the conceptual model.

Users create a model of the system they use. Based on education, knowledge of the system or application domain, knowledge of other systems, general world knowledge, and so on, the user constructs a model, a knowledge structure, of that system. This is called the mental model. During interaction with the system, this mental model is used to plan actions and predict and interpret system reactions. The mental model reflects the user's understanding of what the system contains, how it works, and why it works the way it does. The mental model is initially determined through metacommunication, such as training and documentation. It evolves over time as the user acquires a better understanding of the system. The user's mental model need not be, and often is not, accurate in technical terms. It may contain misunderstandings and omissions.

The conceptual model is the technically accurate model of the computer system created by designers and teachers for their purposes. It is a consistent and complete representation of the system as far as user-relevant characteristics are involved. The conceptual model reflects itself in the system's reaction to user actions.

The central question in human-computer interaction is how to attune the user's mental model and the conceptual model as well as possible. The better this is achieved, the easier an interactive system becomes to learn and to use. Where the models conflict, the user gets confused, makes errors, and gets frustrated. A good design starts with a conceptual model derived from an analysis of the intended users and their tasks. The conceptual model should result in a system and training materials which are consistent with the conceptual model. These, in turn, should be designed such that they induce adequate mental models in the users.

Section 16.4 discusses various models that play a role in HCI. As well as the aforementioned mental and conceptual models, attention is given to a model of human information processing. When interacting with a system, be it a car or a library information system, the user processes information. Limitations and properties of human information processing have their effect on the interaction. Knowledge of how humans process information may help us to develop systems that users can better cope with.

There are many factors that impact human-computer interaction. In this chapter, we just scratch the surface. Important topics not discussed include the socio-economic context of human-computer interaction, input and output media and their ergonomics, and workplace ergonomics. Section 16.10 contains some pointers to relevant literature.

A computerized library system includes a component to search the library's database for certain titles. This component includes code to implement its function as well as code to handle the interaction with the user. In the old days, these pieces of code tended to be entangled, resulting in one large, monolithic piece of software.

In 1983, a workshop on user interface management systems took place at Seeheim in West Germany (Pfaff, 1985). At this workshop, a model was proposed which separates the application proper from the user interface. This model has become known as the Seeheim model.

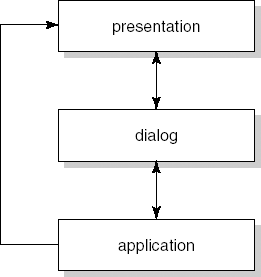

The Seeheim model (see Figure 16.1) describes the user interface as the outer layer of the system. This outer layer is an agent responsible for the actual interaction between the user and the application. It, in turn, consists of two layers:

the presentation, i.e. the perceptible aspects including screen design and keyboard layout;

the dialog, i.e. the syntax of the interaction including metacommunication (help functions, error messages, and state information). If the machine is said to apply a model of its human partner in the dialog, e.g. by choosing the user's native language for command names, this model is also located in the dialog layer.

This conceptualization of the user interface does not include the application semantics, or 'functionality'. In the Seeheim model, the tasks the user can ask the machine to perform are located in another layer, the application interface. Figure 16.1 shows the separation of concerns into three parts. For efficiency reasons, an extra connection is drawn between the application and the display. In this way, large volumes of output data may skip the dialog layer.

The Seeheim model provides some very relevant advantages. For example, we may provide the same outer layer to different applications. We may apply the same look and feel to a text editor, a spreadsheet, and so on, as in Microsoft products. In this way, the user does not have to learn different dialog languages for different applications. Conversely, we may provide a single application to be implemented behind several different outer layers, so as to allow different companies to adopt the same application with their own corporate interface style.
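The layering can be sketched in a few lines of code. The sketch below is a minimal illustration, assuming a toy library search application; all class and method names (`LibraryApplication`, `ApplicationInterface`, `Dialog`, `PlainPresentation`, `BulletPresentation`, `UserInterface`) are hypothetical, and the extra connection between application and display is left out.

```python
class LibraryApplication:
    """Application proper: knows titles, nothing about users or screens."""

    def __init__(self, titles):
        self._titles = list(titles)

    def search(self, term):
        return [t for t in self._titles if term.lower() in t.lower()]


class ApplicationInterface:
    """The tasks the user may ask the machine to perform."""

    def __init__(self, app):
        self._app = app

    def find_titles(self, term):
        return self._app.search(term)


class Dialog:
    """Syntax of the interaction, including help and error messages."""

    def __init__(self, app_interface):
        self._app_interface = app_interface

    def handle(self, command):
        verb, _, arg = command.strip().partition(" ")
        if verb == "help":
            return ["usage: find <term>"]
        if verb == "find" and arg:
            return self._app_interface.find_titles(arg) or ["no titles found"]
        return ["error: unknown command, type 'help'"]


class PlainPresentation:
    """One perceptible form of the output."""

    def render(self, lines):
        return "\n".join(lines)


class BulletPresentation:
    """Another 'house style' for the same dialog and application."""

    def render(self, lines):
        return "\n".join("* " + line for line in lines)


class UserInterface:
    """Outer layer: presentation plus dialog."""

    def __init__(self, presentation, dialog):
        self._presentation = presentation
        self._dialog = dialog

    def submit(self, command):
        return self._presentation.render(self._dialog.handle(command))


app = LibraryApplication(["Software Engineering", "Usability Engineering"])
dialog = Dialog(ApplicationInterface(app))
plain_ui = UserInterface(PlainPresentation(), dialog)
bullet_ui = UserInterface(BulletPresentation(), dialog)
```

Swapping `PlainPresentation` for `BulletPresentation` changes the look of the system without touching the application, and the same `Dialog` class could in principle be placed in front of a different application that offers a comparable application interface.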

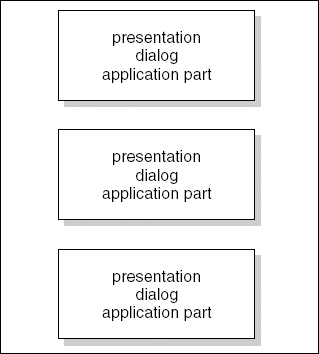

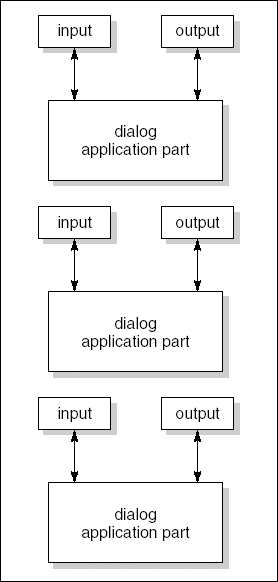

In both these cases, it is assumed that the changes are likely to occur in the interface part of the system, while the application part remains largely unaffected. Alternatively, we may assume that the functionality of the system will change. We then look for an architecture in which parts of the system can be modified independently of each other. A first decomposition of an interactive system along these lines is depicted in Figure 16.2. Each component in this decomposition handles part of the application, together with its presentation and dialog. In a next step, we may refine this architecture such that the input or output device of each component may be replaced. The result of this is shown in Figure 16.3. This result is in fact the Model-View-Controller (MVC) paradigm used in Smalltalk. It is also the archetypal example of a design pattern; see Section 12.5. The dialog and application together constitute the model part of a component. In MVC, the output and input are called view and controller, respectively.
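As a rough illustration of this decomposition, the sketch below shows one component with a model, two interchangeable views, and a controller. It is written in Python rather than Smalltalk, and the names (`CounterModel`, `TextView`, `BarView`, `KeyController`) are hypothetical; it is meant to convey the division of responsibilities, not the details of the Smalltalk implementation.

```python
class CounterModel:
    """Model: the state and functionality of this component."""

    def __init__(self):
        self.value = 0
        self._views = []

    def attach(self, view):
        self._views.append(view)

    def increment(self, step=1):
        self.value += step
        # Every attached view is told that the model has changed.
        for view in self._views:
            view.update(self)


class TextView:
    """View: one way of presenting the model's state."""

    def __init__(self):
        self.shown = ""

    def update(self, model):
        self.shown = f"count = {model.value}"


class BarView:
    """View: an alternative output for the same model."""

    def __init__(self):
        self.shown = ""

    def update(self, model):
        self.shown = "#" * model.value


class KeyController:
    """Controller: translates input events into operations on the model."""

    def __init__(self, model):
        self._model = model

    def key_pressed(self, key):
        if key == "+":
            self._model.increment()


model = CounterModel()
text_view, bar_view = TextView(), BarView()
model.attach(text_view)
model.attach(bar_view)
controller = KeyController(model)
controller.key_pressed("+")
controller.key_pressed("+")
```

Either view, or the controller, can be replaced without changing the model, which is the kind of independent modification this architecture aims for.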

Both the Seeheim model and MVC decompose an interactive system according to quality arguments pertaining to flexibility. The primary concern in the Seeheim model is with changes in the user interface, while the primary concern of MVC is with changes in the functionality. This difference in emphasis is not surprising if we consider the environments in which these models were developed: the Seeheim model by a group of specialists in computer graphics and MVC in an exploratory Smalltalk software development environment. Both models have their advantages and disadvantages. The project at hand and its quality requirements should guide the design team in selecting an appropriate separation of concerns between the functionality proper and the part which handles communication with the user.

In many applications, the user interface part is running on one machine, the client, while the application proper is running on another, the server. This of course also holds for Web applications, where the user interface is in the browser. On the one hand, there has been a tendency towards 'thin' clients, where only the user interface is located at the client side, while all processing takes place at the server side. On the other hand, in circumstances where one is not always connected to the Internet, e.g. with mobile devices, one would still like to continue working and to restore data once the connection is re-established. This gives rise to more data manipulation at the client side and, thus, 'fatter' clients (Jazayeri, 2007). The separation between user interface and application, then, is not the same as that between browser and server application.
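A 'fatter' client of this kind might be sketched as follows. This is a deliberately simplified illustration: the classes `Server` and `FatClient` and their methods are hypothetical, there is no real network involved, and conflicts between clients are ignored.

```python
class Server:
    """Stand-in for the server-side application and its data."""

    def __init__(self):
        self.records = {}

    def apply(self, key, value):
        self.records[key] = value


class FatClient:
    """Client that keeps working while disconnected."""

    def __init__(self, server):
        self._server = server
        self.online = True
        self.local = {}       # client-side copy of the data
        self._pending = []    # edits not yet sent to the server

    def edit(self, key, value):
        # The edit is always applied locally, so work can continue offline.
        self.local[key] = value
        self._pending.append((key, value))
        if self.online:
            self.sync()

    def sync(self):
        # Replay queued edits in the order in which they were made.
        while self._pending:
            self._server.apply(*self._pending.pop(0))

    def reconnect(self):
        self.online = True
        self.sync()


server = Server()
client = FatClient(server)
client.online = False
client.edit("note", "draft written on the train")
offline_snapshot = dict(server.records)   # server has not seen the edit yet
client.reconnect()
```

The queue of pending edits is application logic that lives in the client, which illustrates why the boundary between user interface and application does not coincide with the boundary between browser and server.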

The concept 'user interface' has several meanings. It may denote the layout of the screen, 'windows', or a shell or layer in the architecture of a system or the application. Each of these meanings denotes a designer's point of view. Alternatively, the user interface can be defined from the point of view of the intended user of a system. In most cases, users do not make a distinction between layers in an architecture and they often do not even have a clear view of the difference between hardware and software. For most users an information system as a whole is a tool to perform certain tasks. To them, the user interface is the system.

In this chapter, we use the term user interface to denote all aspects of an information system that are relevant to a user. This includes not only everything that a user can perceive or experience (as far as it has a meaning), but also aspects of internal structure and processes as far as the user should be aware of them. For example, car salesmen sometimes try to impress their customers and mention the horse-power of each and every car in their shop. Most customers probably do not know how to interpret those figures. They are not really interested in them either. A Rolls-Royce dealer knows this. His answer to a question about the horse-power of one of his cars would simply be: 'Enough'. The same holds for many aspects of the internal structure of an information system. On the other hand, the user of a suite of programs including a text editor, a spreadsheet, and a graphics editor should know that a clipboard is a memory structure whose contents remain unchanged until overwritten.

We define the user interface in this broad sense as the user virtual machine (UVM). The UVM includes both hardware and software. It includes the workstation or device (gadget) with which the user is in physical contact as well as everything that is 'behind' it, such as a network and remote data collections. In this chapter, we take the whole UVM, including the application semantics, as the subject of (user interface) design.

In many cases, several groups of users have to be distinguished with respect to their tasks. As an example, consider an ATM. One type of user consists of people, bank clients, who put a card into the machine to perform some financial transaction. Other users are specially trained people who maintain the machine and supply it with a stock of cash. Their role is in ATM maintenance. Lawyers constitute a third category of users of ATMs. They have to argue in favor of (or against) a bank to show that a transaction has been fraudulent, using a log or another type of transaction trace that is maintained by the system. Each of these three user categories represents a different role in relation to the use of the ATM. For each of these roles, the system has a different meaning; each role has to be aware of different processes and internal structures. Consequently, each has a different interface. If we are going to design these interfaces, however, we have to design all of them, and, moreover, we have to design the relation between them. In other words, within the task domain of the ATM, we have to design a set of related UVMs, with respect to the tasks that are part of the various roles.
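The idea of a set of related interfaces on one system can be sketched as follows. The sketch is an illustration only: the class names (`AtmCore`, `ClientInterface`, `MaintenanceInterface`, `AuditInterface`) and the operations they offer are our own assumptions, and a real ATM obviously involves far more than this.

```python
class AtmCore:
    """Shared internals; no single role sees all of this."""

    def __init__(self, cash):
        self.cash = cash
        self.log = []

    def withdraw(self, card, amount):
        if amount > self.cash:
            self.log.append(("refused", card, amount))
            return False
        self.cash -= amount
        self.log.append(("withdraw", card, amount))
        return True

    def refill(self, amount):
        self.cash += amount
        self.log.append(("refill", None, amount))


class ClientInterface:
    """Bank client: performs transactions, need not know about the cash stock."""

    def __init__(self, core, card):
        self._core, self._card = core, card

    def withdraw(self, amount):
        return self._core.withdraw(self._card, amount)


class MaintenanceInterface:
    """Maintenance staff: must be aware of the cash stock."""

    def __init__(self, core):
        self._core = core

    def cash_level(self):
        return self._core.cash

    def refill(self, amount):
        self._core.refill(amount)


class AuditInterface:
    """Lawyer or auditor: only reads the transaction trace."""

    def __init__(self, core):
        self._core = core

    def transactions_for(self, card):
        return [entry for entry in self._core.log if entry[1] == card]


core = AtmCore(cash=100)
client = ClientInterface(core, card="card-1")
maintenance = MaintenanceInterface(core)
audit = AuditInterface(core)

first = client.withdraw(80)     # succeeds
second = client.withdraw(50)    # refused: not enough cash left
maintenance.refill(200)
third = client.withdraw(50)     # succeeds after the refill
```

The three interfaces are related through the shared core: what the maintenance role does determines what the client experiences, and what both do determines what the auditor can later reconstruct. This relation has to be designed along with the interfaces themselves.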

Not only will several groups of users have different interfaces to the same application, but sometimes a single user will have different interfaces to one and the same application as well. This is the case in particular when a user is accessing a system through different devices, such as a mobile phone and a laptop. Not only do these devices have different characteristics, such as the size of the screen and the number of buttons available, but the user is likely to be in a different mood as well: in a hurry and with a lot of distracting noise around him when working via the mobile phone, while in a more quiet environment when working on his laptop. Conceptually, this is not different from the multi-role situation sketched above. Technically, the multi-device user situation poses its own set of challenges (Seffah et al., 2004).

We may look at the user interface from different viewpoints:

how to design all that is relevant to the user (the design aspect)

what the user needs to understand (the human side)

In principle, these aspects have to be combined; otherwise the user will not understand the system's features. The next section is concerned with the human side. Later sections focus on the design aspects of the user interface.

Attention to the user interface is often located in the later phases of the software life cycle. The design approach we elaborate in this chapter, however, requires attention to the human user (or to the different user roles) from the very start of the design process. The various design activities are carried out in parallel and in interaction with each other, even though a large design team may allocate to specialists the tasks of analyzing, specifying, and evaluating user interface aspects. The design of the user interface consists of a complex of activities, all of which are intended to focus on the human side of the system.

The human side cannot be covered by a single discipline or a single technique. There are at least three relevant disciplines: the humanities, artistic design, and ergonomics.

In the humanities view, we pay attention to people based on psychological approaches (how do humans perceive, learn, remember, think, and feel), and to organization and culture (how do people work together and how does the work situation affect people's work). Relevant disciplines are cognitive psychology, anthropology, and ethnography. These disciplines provide a theoretical base and associated techniques for collecting information on people's work as well as techniques for assessing newly designed tools and procedures. Designers of the virtual machine or user interface need some insight into the theories and experience with techniques from these disciplines. For example, in specifying what should be represented at a control panel, one may have to consider that less information makes it easier for the user to identify indications of process irregularity (the psychological phenomenon of attention and distraction). On the other hand, if less of the relevant information is displayed, the user may have to remember more, and psychology teaches us that human working memory has a very limited capacity.

Creative and performing artists in very different fields have developed knowledge on how to convey meaning to their public. Graphical artists know how shapes, colors, and spatial arrangements affect the viewer. Consequently, their expertise teaches interface designers how to draw the attention of users to important elements of the interface. For example, colors should be used sparingly in order not to devalue their possible meaning. Well-chosen use of colors helps to show important relations between elements on the screen and supports users searching for relevant structures in information. Design companies nowadays employ graphical artists to participate in the design of representational aspects of user interfaces.

Complex systems often need a representation of complex processes, where several flows of activity influence each other. Examples of this type of work situation are the team monitoring a complex chemical process and the cockpit crew flying an intercontinental passenger airplane. In such situations, users need to understand complex relations over time. The representation of the relevant processes and their relations over time is far from trivial. Representing in an understandable way what is going on and how the relations change over time is only part of the question. Frequently, such complex processes are safety critical, which means that the human supervisor needs to make the right decision very soon after some abnormal phenomenon occurs, so immediate detection of an event as well as immediate understanding of the total complex of states and process details is needed. Experts in theater direction turn out to have knowledge of just this type of situation. This type of interface may be compared with a theater show, where an optimal direction of the action helps to make the audience aware of the complex of intentions of the author and the cast (Laurel, 1990, 1993). Consequently, theater sciences are another source for designing interfaces to complex processes.

Another type of artistic expertise that turns out to be very relevant for interface design is cinematography. Film design has resulted in systematic knowledge of the representation of dynamics and processes over time (May and Barnard, 1995). For example, there are special mechanisms to represent the suggestion of causality between processes and events. If it is possible to graphically represent the causing process with a directional movement, the resulting event or state should be shown in a location that is in the same direction. For example, in an electronic commerce system, buying an object may be represented by dragging that object to a shopping cart. If the direction of this movement is to the right of the screen, the resulting change in the balance should also be shown to the right.

In the same way there are 'laws' for representing continuity in time. In a movie, the representation of a continuing meeting between two partners can best be achieved by ensuring that the camera viewpoints do not cross the line that connects the location points of the two partners. As soon as this line is crossed, the audience will interpret this as a jump in time. This type of expertise helps the design of animated representations of processes and so on.

In general, artists are able to design attractive solutions, to develop a distinctive style for a line of products or for a company, and to relate the design to the professional status of the user. There are, however, tradeoffs to be made. For example, artistic design sometimes conflicts with ergonomics. When strolling through a consumer electronics shop you will find artistic variants of mobile phones, coffee machines, and audio systems where the designer seems to have paid a tribute to artistic shape and color, while making the device less intuitive and less easy to use, from the point of view of fitting the relevant buttons to the size of the human hand. A similar fate may befall a user interface of an information system.

Ergonomics is concerned with the relation between human characteristics and artifacts. Ergonomics develops methods and techniques to allow adaptation between humans and artifacts (whether physical tools, complex systems, organizations, or procedures). In classical ergonomics, the main concern is anthropometrics (statistics of human measures, including muscle power and attributes of human perception). During the past 25 years, cognitive ergonomics developed as a field that focuses mainly on characteristics of human information processing in interaction with information systems. Cognitive ergonomics is increasingly considered to be the core view for managing user interface design. A cognitive ergonomist is frequently found to be the leader of the design team as far as the virtual machine is concerned.

For beginning users, the human-computer conversation is often very embarrassing. The real beginner is a novice in using a specific computer program. He might even be a novice in computer use in general. Sometimes he is also relatively new to the domain of the primary task (the office work for which he will use the PC or the monitoring of the chemical process for which the computer console is the front end). In such a situation, problems quickly reach a level at which an expert is asked for help and the user tends to blame the program or the system for his failure to use the new facility.

There seems to be a straightforward remedy for this dilemma: start by educating the user in the task domain, next teach him everything about the facility, and only thereafter allow him access to the computer. This, however, is only a theoretical possibility. Users will insist on using the computer from the outset, if they intend ever to use it, and introducing a task domain without giving actual experience with the system that is designed for the task is bad education. So the cure must be found in another direction. The designer of the system must start from a detailed 'model of the user' and a 'model of the task'. If he knows that the user is a novice in both the task domain and the system, he will have to include options for learning both these areas at the same time.

In general, the system designer will try to apply cognitive ergonomic knowledge and adapt the interface to the intended task rather than vice versa. The system should be made transparent (unobtrusive) as far as anything but the intended task is concerned. This should facilitate the user's double task: to delegate tasks to the system and to learn how to interact with the system. However, in many cases this cannot be accomplished completely in one direction and a solution has to be found by adapting the human user to the artifact, i.e. by teaching and training the user or by selecting users that are able to work with the artifacts. Adapting the user to the artifacts requires a strong motive, though. Constraints of available technology, economic aspects, and safety arguments may contribute to a decision in this direction. A mobile phone, for example, has only a few keys and a fairly small screen, so its users have to adapt to those limits. Similarly, instead of designing an 'intuitive' airplane cockpit that would allow an average adult to fly without more than a brief series of lessons, analogous to driving a car, most airlines prefer to thoroughly select and train their pilots.

Cognitive ergonomics developed when information technology started to be applied by people whose expertise was not in the domains of computer science or computer programming. The first ideas in this field were elaborated more or less simultaneously in different parts of the world, and in communities that used different languages, which resulted in several schools with rather specific characteristics. Much of the early work in the US and Canada, for instance, is based on applying cognitive ergonomics to actual design problems. Also, success stories (such as the development of the Xerox Star) were, after the fact, interpreted in terms of ergonomic design concepts (Smith et al., 1982). Carroll (1990) describes this as 'the theory is in the artifact'. Conversely, European work in the field of cognitive ergonomics has concentrated on the development of models: models of computer users, models of human-computer interaction, models of task structures, and so on. By now, these differences are fading away, but a lot of important sources still require some understanding of their cultural background.

The concept of a model has an important place in the literature on human-computer interaction and cognitive ergonomics. Models represent relevant characteristics of a part of reality that we need to understand. At the same time, models are abstract: they represent only what is needed, thus helping us to find our way in complex situations. We need to be aware of differences between types of model, though, and of the inconsistent use of names for the various types of model. First, we discuss the difference between internal and external models in human-computer interaction.

Internal models are models 'for execution'. Internal models use an agent (a human or a machine) who makes a decision based on the behavior of the model. If the agent is human, this model is termed a mental model in psychology. Humans apply these models whenever they have to interact with complex systems. We discuss mental models in Section 16.4.2.

If the agent is a machine, the internal model is a program or a knowledge system. For example, a user interface may retain a model of the user. In that case the literature mostly speaks of a user model: a model of the user that is used by the interface. The model could help the interface to react differently to different users or, alternatively, to adjust to the current user depending on the machine's understanding of that user's current goals or level of understanding. User models of this type may be designed to learn from user behavior and are commonly used in so-called intelligent user interfaces. A third type of internal model in machines is a model of the task domain, which enables the user and the system to collaborate in solving problems about the task. The latter type of internal model leads to systems that can reason, critique user solutions, provide diagnosis, or suggest user actions. User models are not discussed further in this book.
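A very simple user model of the kind described here might look as follows. This is a toy sketch under our own assumptions: the class `UserModel`, the function `prompt_for`, and in particular the thresholds used to distinguish a novice from an expert are invented for the purpose of illustration and have no empirical basis.

```python
class UserModel:
    """What the interface believes about the current user."""

    def __init__(self):
        self.successes = 0
        self.errors = 0

    def record(self, ok):
        # The model 'learns' from observed user behavior.
        if ok:
            self.successes += 1
        else:
            self.errors += 1

    @property
    def level(self):
        # Hypothetical rule: at least five successful commands and
        # at most one error per four successes.
        if self.successes >= 5 and self.errors <= self.successes // 4:
            return "expert"
        return "novice"


def prompt_for(model):
    """The interface reacts differently depending on its user model."""
    if model.level == "expert":
        return "> "
    return "Type a command, or 'help' for a list of commands: "


user = UserModel()
initial_prompt = prompt_for(user)
for _ in range(5):
    user.record(ok=True)
later_prompt = prompt_for(user)
```

Even this small example shows the design question such interfaces raise: the user's view of the system changes as a result of the machine's assumptions about the user, and the user may not be aware of why.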

External models are used for communication and, hence, are first of all represented in some type of formalism (which could also be a graphical representation such as a Petri net or flow diagram). The formalism should be chosen in relation to what is being modeled, as well as to the goal of the communication. In designing user interfaces, there are several domains where external models are needed. Designers need to understand some relevant aspects of the user, especially human information processing. Cognitive psychology provides such types of knowledge, hence in Section 16.4.1 we briefly discuss a recent variant of the model of human information processing, only mentioning those aspects that are relevant when designing for users of computers. Another type of model is used in the various types of design activity. These external models help designers to document their decisions, to backtrack when one design decision overrules another, and to communicate the result of one design phase to people responsible for another phase (e.g. to communicate a view of the task to a colleague responsible for usability evaluation). In Section 16.4.3, we give some examples of external models used in various design activities. These models are the HCI-oriented counterparts of the requirements representation formats discussed in Chapter 9.

Some types of model, such as task knowledge models, refer to aspects that are both internal and external. Task knowledge is originally to be found in the memory of human beings, in documents about the work domain, and in the actual situation of the work environment. These are internal models, applied while doing the work. Designers need to understand this knowledge and apply it as the base of their design, hence they need to perform task analysis and model the task knowledge. This is an external model for use in design. Task models and task modeling are treated in Section 16.6.

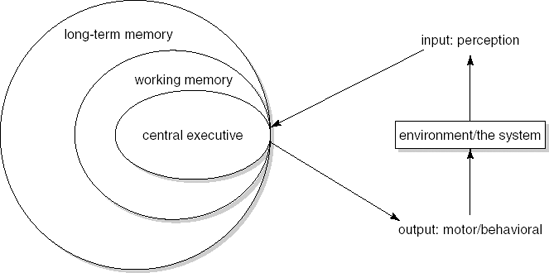

The model of human information processing is an example of an external model. We only briefly mention some notions that need to be understood in analyzing human-computer interaction. We focus on human perception, memory, and the processing of information in relation to the input and output of the human in interaction with an outside system. Figure 16.4 depicts this model. In textbooks on psychology, a figure such as this is often adorned with formulae that allow calculation of the speed of processing, the effect of learning, etc.

In modern cognitive psychology, perception (the input of human information processing) is considered to proceed through a number of phases:

Edge detection: the large amount of unstructured information that bombards our senses is automatically and quickly structured, e.g. into phonemes (hearing) or a '2.5-D sketch' based on movement, color, and location (vision).

Gestalt formation: a small number of understandable structures (such as a triangle, a word, and a tactile shape) known as 'gestalts' are formed, based on similarities detected in the sketch, on spatial relations and on simplicity.

Combination: the gestalts are combined into groups of segments that seem to belong together: an object consisting of triangles, cubes and cylinders; a spoken utterance consisting of a series of words.

Recognition: the group of segments is recognized as, for example, a picture of a horse or a spoken sentence.

The processing in the later phases is done less and less automatically. People are aware of gestalt formation and combination when any problem arises, for example because of irregularity or exceptional situations such as 'impossible' figures. Recognition leads to conscious perception.

The whole series of phases takes a fraction of a second. It takes more time when a problem occurs because of an unexpected or distorted stimulus. It takes less time when the type of stimulus is familiar. So we may train our computer users to perceive important signals quickly and we may design our signals for easy and quick detection and discrimination. Psychologists and ergonomists know when a signal is easy to detect, what color combinations are slow to be detected, and what sounds are easy to discriminate.

The output of human beings is movement. People make gestures, manipulate tools, speak, or use a combination of these. For computer use, manipulation of keys, mouse or touch-screen, and speaking into microphones are common examples of output. According to modern psychology, all those types of output are monitored by a central processing mechanism in the human. This central executive decides on the meaning of the output (say yes, move the mouse to a certain location, press the return key) but leaves the actual execution to motor processes that, in normal cases, are running 'unattended', i.e. the actual execution is not consciously controlled. Attention is needed only in case of problems: for example, if the location to be pointed to on the screen is in an awkward position, if a key is not functioning properly, or if the room is so noisy that the person cannot properly hear his own spoken command. So we should design for human movements and human measures. It pays to ask an ergonomist about the most ergonomic design of buttons and dials.

The central executive unit of human information processing is modeled as an instance that performs productions of the form if condition then action, where the condition in most cases relates to some perceived input or to some knowledge available from memory. The action is a command to the motor system, with attributes derived from working memory. The central executive unit has a very limited capacity. First of all, only a very small number of processes can be performed simultaneously. Secondly, the knowledge that is needed in testing the condition as well as the knowledge that is processed on behalf of the motor output has to be available in working memory. Most of the time, we may consider the limitations to result in the execution of one process at a time and, consequently, in causing competing processes to be scheduled for sequential execution based on perceived priority. For example, when a driver approaches a crossroads, the talk with his passenger will be temporarily interrupted and only resumed when the driving decisions have been made. The amount of available resources has to be taken into account when designing systems. For example, humans cannot cope with several error messages each of which requires an immediate decision, especially if each requires complex error diagnosis to be performed before reaction is feasible.
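The production mechanism described above can be sketched in a few lines of code. This is only an illustration, not a psychological model: the class `Production`, the function `run_central_executive`, and the numeric priorities are our own assumptions, chosen to mirror the driver example.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Production:
    """One 'if condition then action' rule (hypothetical structure)."""
    name: str
    priority: int                          # assumed scale: higher is more urgent
    condition: Callable[[Set[str]], bool]  # tested against working memory
    action: str                            # command passed to the motor system


def run_central_executive(productions: List[Production],
                          working_memory: Set[str]) -> List[str]:
    """Execute matching productions one at a time, most urgent first."""
    matching = [p for p in productions if p.condition(working_memory)]
    ordered = sorted(matching, key=lambda p: p.priority, reverse=True)
    return [p.action for p in ordered]


productions = [
    Production("talk", 1, lambda wm: "passenger speaking" in wm,
               "reply to passenger"),
    Production("drive", 9, lambda wm: "crossroads ahead" in wm,
               "brake and check traffic"),
]

working_memory = {"passenger speaking", "crossroads ahead"}
order = run_central_executive(productions, working_memory)
print(order)  # ['brake and check traffic', 'reply to passenger']
```

Both conditions hold at the same time, yet the actions are carried out sequentially: the driving decision comes first and the conversation is resumed afterwards.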

Working memory is another relevant concept in the model. Modern psychology presumes there is only one memory structure, long-term memory, that contains knowledge that is permanently stored. Any stimulus that reaches the central executive unit leads to the activation of an element in long-term memory. The activated elements together form the current working memory. The capacity of the set of activated elements is very limited. The average capacity of the human information processor is 5–9 elements. If new elements are activated, other elements lose their activation status and, hence, are no longer immediately available to the central executive. In other words, they are not in working memory any more.

Long-term memory is highly structured. One important type of relation concerns semantic relations between concepts, such as part-of, member-of, and specialization-generalization. In fact, each piece of knowledge can be considered a concept defined by its relation to other elements. Such a piece of knowledge is often called a chunk. It is assumed that working memory has a capacity of 5–9 chunks. An expert in some task domain is someone who has available well-chosen chunks in that domain, so that he is able to expand any chunk into relevant relations, but only when needed for making decisions and deriving an answer to a problem. If not needed, an expert will not expand the chunk that has been triggered by the recognition phase of perception or by the production based on a stimulus. For example, the sequence of digits '85884' could occupy five entries in working memory. However, if it is your mother's telephone number, it is encoded as such and occupies only one entry. When the number has to be dialed, this single entry is expanded to a series of digits again. Entries in working memory can thus be viewed as labels denoting some unit of information, such as a digit, your mother's telephone number, or the routine quicksort. In this way an expert can cope with a situation even with the restrictions on the capacity of working memory.
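The telephone-number example can be made concrete with a small sketch. The names `WorkingMemory`, `encode`, and `expand` are hypothetical, the capacity of 7 is simply the midpoint of the 5–9 range, and the rule that the oldest entry loses its activation first is an assumption made for the sake of the example.

```python
from collections import OrderedDict


class WorkingMemory:
    """A bounded set of activated labels (illustrative only)."""

    def __init__(self, capacity=7):
        self.capacity = capacity
        self._active = OrderedDict()

    def activate(self, label):
        # Re-activating an entry refreshes it.
        self._active.pop(label, None)
        self._active[label] = True
        # Assumption: the oldest entries lose their activation first.
        while len(self._active) > self.capacity:
            self._active.popitem(last=False)

    def entries(self):
        return list(self._active)


def encode(digits, known_chunks):
    """Return the working-memory labels needed to hold a digit sequence."""
    if digits in known_chunks:
        return [known_chunks[digits]]          # one label for the whole chunk
    return [f"digit {i + 1} = {d}" for i, d in enumerate(digits)]


def expand(label, known_chunks):
    """Expand a chunk label into its digits, e.g. when dialing."""
    for digits, name in known_chunks.items():
        if name == label:
            return list(digits)
    return [label]


chunks = {"85884": "mother's telephone number"}
novice = encode("85884", {})       # no suitable chunk available
expert = encode("85884", chunks)   # the sequence is a known chunk

print(len(novice), len(expert))    # 5 1
print(expand(expert[0], chunks))   # ['8', '5', '8', '8', '4']

wm = WorkingMemory(capacity=7)
for label in novice + ["task goal", "file name", "error message"]:
    wm.activate(label)
print(len(wm.entries()))           # 7
```

Holding the number digit by digit leaves room for only two further entries; the third pushes the first digit out of working memory. Encoded as one chunk, the same number leaves six entries free.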

The structures in long-term memory are the basis for solving problems in a domain and for expertise. When working with a system, people develop a suitable knowledge structure for performing their tasks. If they are able to understand the system as much as they need (we defined this as the virtual machine in Section 16.2), they may develop a coherent and useful structure in long-term memory. As far as this structure can be considered a model of the system, we consider it to be the mental model of the system.

Mental models are structures in long-term memory. They consist of elements and relations between those elements. They represent relevant knowledge structures analogous to physical, organizational, and procedural structures in the world. These mental models become 'instantiated' when activated by an actual need, e.g. when one needs to make a decision related to an element of this knowledge. The activated mental model is, to a certain extent, run or executed in order to predict how the structure in the world that is represented by the mental model would behave in relation to the current situation and in reaction to the possible actions of the person. In the terminology introduced before, mental models are internal models.

When working with complex systems, where part of the relevant structure and processes of the system cannot be perceived by a human being or cannot be completely monitored, a mental model is needed to behave optimally in relation to the system. Hence, people develop mental models of such situations and systems. If the system is a computer system, there are four functions of using the system that require the activation of a mental model:

When planning the use of the technology, users will apply their knowledge (i.e. their mental model) to find out for what part of their task the system could be used and the conditions for its use. Users will determine what they need to do beforehand, when they would like to perform actions and take decisions during the use of the system, and when they would abort execution or reconsider use.

Suppose I want to use our library information system to search for literature on mental models of computer systems. I may decide to search by author name. First, I must find one or a few candidate authors.

During execution of a task with a system, there is a continuous need for fine-tuning of user actions towards system events. The mental model is applied to monitoring the collaboration and reconsidering decisions taken during the planning stage.

If the result of my search action is not satisfactory, I may decide to look up alternative author names or switch to a keyword search. If the keywords used by the system are keywords listed in the titles of publications, there is quite a chance that relevant literature is not found by a search using the keywords 'mental model'. I may then consider other keywords as well, such as 'human-computer interaction'.

If the system has performed some tasks for the user and produced output, there is the need to evaluate the results, to understand when to stop, and to understand the value of the results in relation to the intended task. The mental model of the system is needed to evaluate the system's actions and output, and to translate these to the goals and needs of the user.

Some of the literature sources found may have titles that indicate a relation between mental models and learning, while others relate mental models to personality factors. I may decide to keep only those titles that relate mental models to HCI, since the others are probably not relevant.

Modern computer systems are frequently not working in isolation and more processes may be going on than those initiated by current use. The user has to cope with unexpected system events, and needs to interpret the system's behavior in relation to the intended task as well as to the state of the system and the network of related systems. For this interpretation, users need an adequate mental model of the system and its relation to the current task.

I may accept a slow response to my query knowing that the answer is quite long and network traffic during office hours is heavy.

Mental models as developed by users of a system are always just models. They abstract from aspects the user considers not relevant and they have to be usable for a human information processor with his restricted resources and capacities. Consequently, we observe some general restrictions in the qualities of human mental models of computer systems. Norman (1983) has shown that mental models of systems of the complexity of a computer application have the following general characteristics:

They are incomplete and users are generally aware of the fact that they do not really know all details of the system, even if relevant. They will know, if they are experts, where details can be found.

They can only partly be 'run', because of the nature of human knowledge representation. I may know how to express a global replacement in my text editor (i.e., I know the start and end situation) without knowing how the intended effect is obtained.

They are unstable. They change over time, both because users switch between systems, which corrupts their knowledge of the system used previously, and because of new experiences, even if the user has been considered a guru on this system for the past ten years.

They have vague boundaries. People tend to mix characteristics of their word processor with aspects of the operating system and, hence, are prone to occasionally make fatal errors based on well-prepared decisions.

They are parsimonious. People like to maintain models that are not too complex and try to stick to enough basic knowledge to be able to apply the model for the majority of their tasks. If something uncommon has to be done they accept having to do some extra operations, even if they know there should be a simpler solution for those exceptions.

They have characteristics of superstitions that help people feel comfortable in situations which they know they do not really understand. An example is the experienced user who changes back to his root directory before switching off his machine or before logging out. He knows perfectly well there is no real need for this, but he prefers to behave in a nice and systematic way and hopes the machine will behave nicely and systematically in return.

Designers of user interfaces should understand the types of mental structures users tend to develop. There are techniques for acquiring information about an individual user's mental models, as well as about the generic mental structures of groups of users. Psychological techniques can be applied and, in the design of new types of system, it is worthwhile to apply some expert help in assessing the knowledge structures that may be needed for the system, as well as those that may be expected to be developed by users. If the knowledge needed differs from the mental models developed in actual use, there is a problem and designers should ask for expert help before the design results in an implementation that does not accord with the users' models.

A central part of user interface design is the stepwise-refined specification of the system as far as it is relevant for the user. This includes the knowledge the user needs in order to operate the system, the definition of the dialog between user and system, and the functionality that the system provides to the user. All that is specified in the design process is obviously also explicitly modeled, in order to make sure implementation does not result in a system that differs from the one intended. In cognitive ergonomics, all that is modeled about the system as far as relevant to its different sets of users is called the conceptual model of the system (Norman, 1983).

Formal design modeling techniques have been developed in order to communicate in design teams, to document design decisions, to be able to backtrack on specifications, and to calculate the effects of design specifications. Some techniques model the user's knowledge (so-called competence models), others focus on the interaction process (so-called process models), and others do both. Reisner's Psychological BNF (Reisner, 1981) is an example of a competence model. In this model, the set of valid user dialogs is defined using a context-free grammar. Process models may model time aspects of interaction, as in the Keystroke model (Card et al., 1983) which gives performance predictions of low-level user actions. Task Action Grammar (TAG) (Payne and Green, 1989) is an example of a combined model. It allows the calculation of indexes for learning (the time needed to acquire knowledge) and for ease of use (mental load, or the time needed for the user's part of executing a command).
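To give an impression of how a process model yields performance predictions, the following sketch adds up operator times in the style of the Keystroke model. The operator times are approximate values commonly quoted from Card et al. (1983) and should be treated as assumptions; the two operator sequences are hypothetical encodings of a menu selection and a keyboard shortcut.

```python
# Approximate operator times in seconds (assumed values).
OPERATOR_SECONDS = {
    "K": 0.28,  # press a key or button (average typist)
    "P": 1.10,  # point with the mouse to a target on the screen
    "H": 0.40,  # move the hand between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next action
}


def predict_time(operators):
    """Sum the operator times for a sequence such as 'HMPK'."""
    return sum(OPERATOR_SECONDS[op] for op in operators)


# Hypothetical encodings of the same command in two dialog styles.
menu_selection = "HMPKMPK"  # reach mouse, open the menu, pick the item
shortcut = "MKK"            # think, then press a two-key shortcut

print(f"{predict_time(menu_selection):.2f}")  # 5.86
print(f"{predict_time(shortcut):.2f}")        # 1.91
```

Such a prediction only covers error-free, low-level execution by a practiced user. It says nothing about how long it takes to learn the shortcut in the first place, which is the kind of question a competence model addresses.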

Moran (1981) was one of the first to structure the conceptual model into components, somewhat akin to the Seeheim model. Even though, at that time, command dialogs were the only type of interactive user interface available to the general public, his Command Language Grammar (CLG) still provides a remarkably complete view of the types of design decision to be made during user interface design. Additionally, Moran was the first to state that a conceptual model can be looked upon from three different viewpoints:

The psychological view considers the specification as the definition of all that a user should understand and know about the new system.

The linguistic view describes the interaction between human and system in all aspects that are relevant for both participants in the dialog.

The design view specifies all that needs to be decided about the system from the point of view of the user interface design.

Moran distinguishes six levels in the conceptual model, structured in three components. Each level details concepts from a higher level, from the specific point of view of the current level. The formalism that Moran proposes (the actual grammar) would nowadays be replaced by more sophisticated notations, but the architectural concepts show the relevance of analyzing design decisions from different viewpoints and at the same time investigating the relationships between these viewpoints:

Conceptual component. This component concerns design decisions at the level of functionality: what will the system do for the users.

a.1 Task level. At this level we describe the task domain in relation to the system: which tasks can be delegated to the machine, which tasks have to be done by the user in relation to this (preparation, decisions in between one machine task and the next, etc.). A representation at this level concerns tasks and task-related objects as seen from the eyes of the user, not detailing anything about the system, such as 'print a letter on office stationery' or 'store a copy'.

a.2 Semantic level. Semantics in the sense of CLG concern the system's functionality in relation to the tasks that can be delegated. At this level, task delegation is specified in relation to the system. The system objects are described with their attributes and relevant states, and the operations on these objects as a result of task delegation are specified. For example, there may be an object 'letter' with an attribute 'print date' and an operation to store a copy in another object called 'printed letters' with attributes 'list of printed letters' and 'date of last storage operation'. In terms of the Seeheim model, this level describes the application interface.

Communication component. This component describes the dialog of the Seeheim model.

b.1 Syntax level. This level describes the dialog style, such as menus, form-filling, commands, or answering questions, by specifying the lexicographical structure of the user and system actions. For example, to store a letter, the user has to indicate the letter to be stored, then the storage location, then the storage command, and, finally, an end-of-command indication.

b.2 Keystroke level. The physical actions of the user and the system are specified at this level, such as clicking the mouse buttons, pointing, typing, dragging, blinking the cursor, and issuing beeping signals.

Material component. At this level, Moran refers to the domain of classical ergonomics, including perceptual aspects of the interface, as well as relevant aspects of the hardware. The presentation aspect of the Seeheim model is located at the spatial layout level.

c.1 Spatial layout level. The screen design, for example, the shape, color, and size of icons and characters on the screen, and the size of buttons, is specified at this level. This level is also intended to cover sound and tactile aspects of the interface (such as tactile mouse feedback) not covered by the hardware.

c.2 Apparatus level. At this level, Moran suggests we specify the shape of buttons and the power needed to press them, as well as other relevant hardware aspects.
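The difference between the semantic level and the syntax level can be illustrated with the letter example used above. The sketch below is not Moran's notation: the class names, the token names, and the dates are our own assumptions. The two classes play the role of a semantic-level description (objects, attributes, and an operation), while `STORE_SYNTAX` and `is_valid_store_dialog` describe the syntax-level ordering of user actions.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


# Semantic level: system objects, their attributes, and operations.
@dataclass
class Letter:
    text: str
    print_date: Optional[date] = None


@dataclass
class PrintedLetters:
    letters: List[Letter] = field(default_factory=list)  # list of printed letters
    last_stored: Optional[date] = None                    # date of last storage operation

    def store_copy(self, letter, today):
        """Store a copy of the letter and record when this happened."""
        self.letters.append(Letter(letter.text, letter.print_date))
        self.last_stored = today


# Syntax level: the order in which the user must supply the parts of
# the store command (letter, location, command, end-of-command).
STORE_SYNTAX = ["letter", "location", "store", "end"]


def is_valid_store_dialog(tokens):
    """Check that the user actions arrive in the prescribed order."""
    return [kind for kind, _ in tokens] == STORE_SYNTAX


archive = PrintedLetters()
letter = Letter("Dear customer", print_date=date(2024, 1, 15))

dialog = [("letter", letter), ("location", archive),
          ("store", None), ("end", None)]
if is_valid_store_dialog(dialog):
    archive.store_copy(letter, today=date(2024, 1, 16))

print(len(archive.letters), archive.last_stored)  # 1 2024-01-16
```

The same semantic-level operation could be offered through a quite different syntax, for instance a menu in which the command is chosen before the letter is indicated. Only `STORE_SYNTAX` would change in that case, which is precisely the separation of concerns the levels are meant to express.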

Moran's CLG provides a fairly complete specification model for the user interface or UVM. The actual grammar representation is no longer relevant, but the layers and their relations are important, and the design models discussed in the next section cover most of them: task models relate to Moran's task level and the UVM specifications include the semantic level, the communication component, and parts of the spatial layout level.

The concept user interface in this chapter denotes the complete UVM, the user's virtual machine. Traditional user interface design mainly concerns the situation of a single user and a monolithic system. In current applications, computers are mostly part of a network, and users are collaborating, or at least communicating, with others through networks. Consequently, the UVM should include all aspects of communication between users as far as this communication is routed through the system. It should also include aspects of distributed computing and networking as far as this is relevant for the user, such as access, structural, and time aspects of remote sources of data and computing. For example, when using a Web browser, it is relevant to understand mechanisms of caching and of refreshing or reloading a page, both in terms of the content that may have changed since the previous loading operation and in terms of the time needed for operations to complete.

These newer types of application bring another dimension of complexity into view. People are collaborating in various ways mediated by information technology. Collaboration via systems requires special aspects of functionality. It requires facilities for the integration of actions originating from different users on shared objects and environments, facilities to manage and coordinate the collaboration, and communication functionality. Such systems are often denoted as groupware. Modern user interface design techniques cater for both the situation of the classical single user system and groupware. We expect this distinction to disappear in the near future.

There are several classes of stakeholder in system development (see also Chapter 9). These include, at least, the clients, i.e. the people or organizations that pay for the design or acquisition of systems, and the users, i.e. the people or groups that apply the systems as part of their daily work, often referred to as the end users. Throughout the process of design, these two classes of stakeholders have to be distinguished, since they may well have different goals for the system, different (and possibly even contradictory) knowledge about the task domain, and different views on what is an optimal or acceptable system. In certain situations these classes may overlap. But even if this is the case, individual people may well turn out to have contradictory views on the system they need. In many situations there will be additional classes of stakeholders to cater for, such as people who are involved in maintaining the system, and people, such as lawyers, who need traces or logs of the system to monitor cases of failure or abuse.

In relation to these different classes of stakeholders, designers are in a situation of potential political stress. Clients and users may have contradictory inputs into the specification of the system. Moreover, the financial and temporal constraints on the amount of effort to be invested in designing the different aspects of the system (such as specifying functionality and user interface, implementation, and testing) tend to counteract the designers' ambitions to sufficiently take care of the users' needs.

Making a distinction between classes of stakeholders does not solve the problem of user diversity. In complex systems design, we are confronted with different end users playing different roles, as well as end-user groups that have knowledge or a view on the task domain that need not be equivalent to the (average or aggregated) knowledge and views of the individuals.

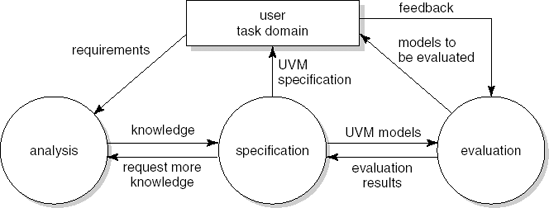

Viewing design as a structure of interrelated activities, we need a process model. The model we use will be familiar to readers of this book: it is a cyclical process with phases devoted to analysis, specification, and evaluation. Figure 16.5 depicts this process model.

Analysis: Since the system to be developed will feature in a task situation, we start with task analysis. We further structure this activity into the development of two models: task model 1, which models the current task situation, and task model 2, which models the task domain of the future situation, where the system to be developed will be used, including changes in the organization of people and work procedures. The relationship between task models 1 and 2 reflects the change in the structure and organization of the task world as caused by the implementation of the system to be developed. As such, the difference is relevant both for the client and the user.

The development of task model 2 from task model 1 uses knowledge of current inadequacies and problems concerning the existing task situation, needs for change as articulated by the clients, and insight into current technological developments. Section 16.6 discusses task analysis.

Specification: The specification of the system to be designed is based on task model 2. It has to be modeled in all details that are relevant to the users, including cooperation technology and user-relevant system structure and network characteristics. Differences between the specification of the new system (the user's virtual machine or UVM) and task model 2 must be considered explicitly and lead to design iteration. Specifying the UVM is elaborated in Section 16.7.

Evaluation: The specification of the new system incurs many design decisions that have to be considered in relation to the system's prospective use. For some design decisions, guidelines and standards might be used as checklists. In other situations, formal evaluation may be applied, using formal modeling tools that provide an indication of the complexity of use or learning effort required. For many design decisions, however, evaluation requires confronting the future user with relevant aspects of the intended system. Some kind of prototyping is a good way to confront the user with the solution proposed. A prototype allows experimentation with selected elements or aspects of the UVM. It enables imitation of (aspects of) the presentation interface, it enables the user to express himself in (fragments of) the interaction language, and it can be used to simulate aspects of the functionality, including organizational and structural characteristics of the intended task structure. We discuss some evaluation techniques in Section 16.8.

Figure 16.5 is similar to Figure 9.1. This is not surprising. The design of an interactive system as discussed in this chapter is very akin to the requirements engineering activity discussed in Chapter 9. The terminology is slightly different and reflects the user-centered stance taken in this chapter. For example, 'elicitation' sounds more passive than 'analysis'. 'Evaluation' entails more than 'validation'; it includes usability testing as well. Finally, we treat the user and the task domain as one entity from which requirements are elicited. In the approach advocated here, the user is observed within the task domain.

The main problem with the design activities discussed in the previous section is that different methods may provide conflicting viewpoints and goals. A psychological focus on individual users and their capacities tends to lead to Taylorism, neglecting the reality of a multitude of goals and methods in any task domain. On the other hand, sociological and ethnographical approaches towards groupware design tend to omit analysis of individual knowledge and needs. Still, both extremes provide unique contributions.

In order to design for people, we have to take into account both sides of the coin: the individual users and clients of the system, and the structure and organization of the group for which the system is intended. We need to know the individuals' knowledge and views on the task, on applying the technology, and the relation between using technology and task-relevant user characteristics (expertise, knowledge, and skills). With respect to the group, we need to know its structure and dynamics, the phenomenon of 'group knowledge' and work practice and culture. These aspects are needed in order to acquire insight into both existing and projected task situations where (new) cooperation technology is introduced. Both types of insight are also needed in relation to design decisions, for functionality and for the user interface. Consequently, in prototyping and field-testing, we need insight into the acceptance and use by individuals and the effect of the new design on group processes and complex task dynamics.

For example, in a traditional bank setting, the client and the bank employee are on different sides of a counter. The bank employee is probably using a computer, but the client cannot see the screen, and does not know what the clerk is doing. In a service-oriented bank setting, the clerk and client may be looking at the screen together. They are together searching for a solution to the client's question. This overturns the existing culture of the bank and an ethnographer may be asked to observe what this new setup brings about.

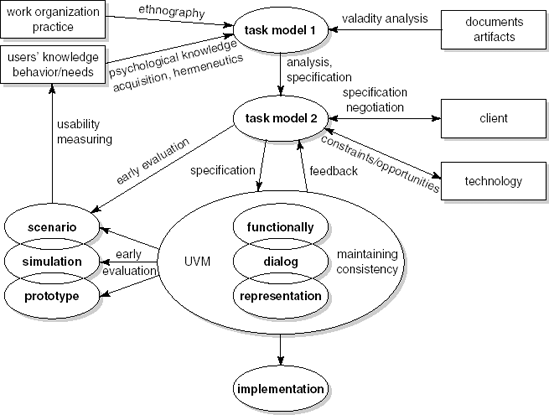

The general framework for our approach to user interface design is depicted in Figure 16.6. It is a refinement of Figure 16.5, emphasizing the specialties involved in carrying out different activities. Task model 1 is based on knowledge of single users (psychological variables, task-related variables, knowledge, and skills) and on complex phenomena in the task situation (roles, official and actual procedures, variation in strategies, and variation in the application of procedures). The integration of this insight in a model often does not provide a single (or a single best) decomposition of tasks and a unique structure of relationships between people, activities, and environments. The model often shows alternative ways to perform a certain task, role-specific and situation-specific methods and procedures, and a variety of alternative assignments of subtasks to people. For example, the joint problem-solving approach to the bank counter, sketched out above, cannot be applied to the drive-in counter of the bank. The drive-in counter requires a different approach and a different user interface.

From this, and because of client requirements, compromises often have to be made in defining task model 2, the new task situation for which the technology has to be designed. This process includes the interpretation of problems in the current task situation, negotiation with the client regarding his conditions, and the resources available for design (including both financial impacts and time constraints). Ultimately, decisions have to be made about complex aspects, such as re-arranging the balance of power and the possibilities for users in various roles to exercise control.

Again, when detailed design decisions are being considered, early evaluation needs to include analytical methods (formal evaluation and cognitive walkthrough techniques) in combination with usability testing where users in different roles are studied both in the sense of traditional individual measures and in the sense of ethnographic interaction analysis.

Analyzing a complex system means analyzing the world in which the system functions, the context of use, which comprises (according to standards such as ISO 9241, 1996):

the users;

the tasks;

the equipment (hardware, software, and materials);

the social environment;

the physical environment.

If we design systems for the context of use, we must take these different aspects of the task world into consideration. In traditional literature on task analysis from the HCI mainstream, the focus is mostly on users, tasks, and software. Design approaches for groupware and computer-supported collaborative work (CSCW), on the other hand, often focus on analyzing the world first of all from the point of view of the (physical and social) environment. Recent approaches to task modeling include some aspects that belong to both categories, but it still looks as if one has to, by and large, opt for one view or the other. Section 16.6.1 presents task analysis approaches from the classical HCI tradition and distinguishes different phases in task analysis. Section 16.6.2 presents an ethnographic point of view, as frequently applied to the design of CSCW systems, where phases in the analysis process are hardly considered.

Jordan (1996), though originally working from an ethnographic approach and focusing on groupware applications, provides a view on analyzing knowledge of the task world that is broad enough to cover most of the context of use as now defined by ISO 9241 (1996). We illustrate Jordan's view in Section 16.6.3. The groupware task analysis (GTA) framework of modeling task knowledge combines approaches from both HCI and CSCW design. GTA is described in Section 16.6.4.

Classical HCI features a variety of notions regarding task analysis. Task analysis can mean different activities:

analyzing a current task situation,

envisioning a task situation for which information technology is to be designed, or

specifying the semantics of the information technology to be designed.

Many HCI task analysis methods combine more than one of these activities and relate them to actual design stages. Others do not bother about the distinction. For example, goals, operators, methods, and selection rules (GOMS, see (Card et al., 1983)) can be applied for any or a combination of the above activities.

In many cases, the design of a new system is triggered by an existing task situation. Either the current way of performing tasks is not considered optimal, or the availability of new technology is expected to allow an improvement over current methods. A systematic analysis of the current situation may help formulate requirements and allow later evaluation of the design. In all cases where a current version of the task situation exists, it pays to model this. Task models of this type purport to describe the situation as it can be found in real life, by asking or observing people who know the situation (see (Johnson, 1989)). Task model 1 is often considered to be generic, indicating the belief that different expert users have at their disposal basically the same task knowledge.

Many design methods in HCI that start with task modeling are structured in a number of phases. After describing a current situation (in task model 1), the method requires a re-design of the task structure in order to include technological solutions for problems and technological answers to requirements. Task model 2 is in general formulated and structured in the same way as task model 1. However, it is not considered a descriptive model of users' knowledge, though in some cases it may be applied as a prescriptive model of the knowledge an expert user of the new technology should possess.

A third type of modeling activity focuses on the technology to be designed. This may be considered part of task model 2. However, some HCI approaches distinguish specific design activities which focus on the technology (e.g. see (Tauber, 1990)). This part of the design activity is focused on a detailed description of the system as far as it is of direct relevance to the end user, i.e. the UVM. We separate the design of the UVM from the design of the new task situation as a whole, mainly because the UVM models the detailed solution in terms of technology, whereas task model 2 focuses on the task structure and work organization. In actual design, iteration is needed between the specification of these two models. This should be an explicit activity, making the implications of each obvious in its consequences for the other. Specifying the UVM is treated in more detail in Section 16.7.

HCI task models represent a restricted point of view. All HCI task modeling is rather narrowly focused, mainly considering individual people's tasks. Most HCI approaches are based on cognitive psychology. Johnson (1989) refers to knowledge structures in long-term memory. Tauber (1990) refers to 'knowledge of competent users'. HCI approaches focus on the knowledge of individuals who are knowledgeable or expert in the task domain, whether this domain already exists (task model 1) or still has to be re-structured by introducing new technology (task model 2 and the UVM).

As a consequence of their origin, HCI task models seldom provide an insight into complex organizational aspects, situational conditions for task performance, or complex relationships between tasks of individuals with different roles. Business processes and business goals (such as the service focus of a modern bank counter, which may be found in a business reengineering project) are seldom part of the knowledge of individual workers and, consequently, are seldom related to the goals and processes found in HCI task modeling.

Task analysis assumes there are tasks to analyze. Many current Web applications, though, are information-centric. The value of such systems is in the information they provide (Wikipedia, Amazon.com, and so on), and to a much lesser extent in the tasks they offer to the user. The key operation is searching. The developers seek to make searching simpler by organizing the information in a logical way, by providing navigation schemes, and so on (Nerurkar, 2001). The challenge for such applications is to induce the users to provide ever more information. This is another example of crowdsourcing, as already mentioned in Chapter 1.

CSCW research stresses the importance of situational aspects, group phenomena, and organizational structure and procedures. Shapiro (1996) even goes so far as to state that HCI has failed in the case of task analysis for cooperative work situations, since generic individual knowledge of the total complex task domain does not exist. The CSCW literature strongly advocates ethnographic methods.

Ethnographers study a task domain (or community of practice) by becoming a participant observer, if possible with the status of an apprentice. The ethnographer observes the world 'through the eyes of the aboriginal' and at the same time is aware of his status as an outside observer whose final goal is to understand and describe for a certain purpose and a certain audience (in the case of CSCW, a design project). Ethnographers start their observation purposely without a conceptual framework regarding characteristics of task knowledge, but, instead, may choose to focus on activities, environments, people, or objects. The choice of focus is itself based on prior ethnographic observations, which illustrates the bootstrapping character of knowledge elicitation in ethno-methodology. Methods of data collection nowadays start with video recordings of relevant phenomena (the relevance of which, again, can only be inferred from prior observation) followed by systematic transaction analysis, where inter-observer agreement serves to improve the reliability of interpretation. Knowledge of individual workers in the task domain may be collected as far as it seems to be relevant, but it is in no sense a priori considered the main source and is never considered indicative of generic task knowledge.

The ethnographic approach is unique in its attention to all relevant phenomena in the task domain that cannot be verbalized explicitly by (all) experts (see (Nardi, 1995)). The approach attends to knowledge and intentions that are specific for some actors only, to conflicting goals, to cultural aspects that are not perceived by the actors in the culture, to temporal changes in beliefs, to situational factors that are triggers or conditions for strategies, and to non-physical objects such as messages, stories, signatures and symbols, of which the actors may not be aware while interacting.

Ethno-methodology covers the methods of collecting information that might serve as a basis for developing task model 1 (and no more than this since ethno-methodology only covers information on the current state of a task domain). However, the methodology for the collection of data and its structuring into a complete task domain description is often rather special and difficult to follow in detail. The general impression is that CSCW design methods skip the explicit construction of task models 1 and 2 and, after collecting sufficient information on the community of practice, immediately embark on specifying the UVM, based on deep knowledge of the current task situation that is not formalized. This may cause two types of problem. Firstly, the relationship between specifications for design and analysis of the current task world might depend more on intuition than on systematic design decisions. Secondly, skipping the development of task model 2 may lead to conservatism with respect to organizational and structural aspects of the work for which a system is to be (re)designed.

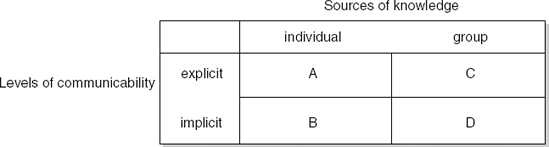

Collecting task knowledge to analyze the current situation of a complex system has to start by identifying the relevant knowledge sources. In this respect, we refer to a framework derived from (Jordan, 1996), see Figure 16.7. The two dimensions of this framework denote where the knowledge resides and how it can be communicated. For example, A stands for the explicit knowledge of an individual, while D stands for the implicit knowledge of a group.

Jordan's framework has been applied in actual design processes for large industrial and government interactive systems. We may expand the two factors distinguished from dichotomies to continuous dimensions to obtain a two-dimensional framework for analyzing the relevant sources of knowledge in the context of use. This framework provides a map of knowledge sources that helps us to identify the different techniques that we might need in order to collect information and structure this information into a model of the task world.

To gather task knowledge in cell A, psychological methods may be used: interviews, questionnaires, think-aloud protocols, and (single-person-oriented) observations. For knowledge in cell B, observations of task behavior must be complemented by hermeneutic methods to interpret mental representations (see (Veer, 1990)). For the knowledge referred to in cell C, the obvious methods concern the study of artifacts such as documents and archives. In fact, all these methods are to be found in classical HCI task analysis approaches and, for that matter, among the requirements elicitation techniques discussed in Chapter 9.

The knowledge indicated in cell D is unique in that it requires ethnographic methods such as interaction analysis. Moreover, this knowledge may be in conflict with what can be learned from the other sources. First of all, explicit individual knowledge often turns out to be abstract with respect to observable behavior and to ignore the situation in which task behavior is exhibited. Secondly, explicit group knowledge such as expressed in official rules and time schedules is often in conflict with actual group behavior, and for good reasons. Official procedures do not always work in practice and the literal application of them is sometimes used as a political weapon in labor conflicts, or as a legal alternative to a strike. In all cases of discrepancy between sources of task knowledge, ethnographic methods will reveal unique and relevant additional information that has to be explicitly represented in task model 1.

The allocation of methods to knowledge sources should not be taken too strictly. The knowledge sources often cannot be located completely in single cells of Jordan's conceptual map. The main conclusion is that we need different methods in a complementary sense, as we need information from different knowledge sources.
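The mapping from knowledge sources to collection techniques described above can be sketched as a small lookup table. This is only an illustration, not part of Jordan's framework itself: the names `Holder`, `Form`, `CELLS`, `METHODS`, and `collection_plan` are hypothetical, and the placement of cells B and C (individual/implicit and group/explicit) is an assumption inferred from the methods the text attaches to them.

```python
from enum import Enum


class Holder(Enum):
    """Where the knowledge resides (hypothetical name)."""
    INDIVIDUAL = "individual"
    GROUP = "group"


class Form(Enum):
    """How the knowledge can be communicated (hypothetical name)."""
    EXPLICIT = "explicit"
    IMPLICIT = "implicit"


# A and D are stated in the text; B and C are inferred (assumption).
CELLS = {
    (Holder.INDIVIDUAL, Form.EXPLICIT): "A",
    (Holder.INDIVIDUAL, Form.IMPLICIT): "B",
    (Holder.GROUP, Form.EXPLICIT): "C",
    (Holder.GROUP, Form.IMPLICIT): "D",
}

# Techniques named in the text for each cell.
METHODS = {
    "A": ["interviews", "questionnaires", "think-aloud protocols",
          "single-person observation"],
    "B": ["observation of task behavior", "hermeneutic interpretation"],
    "C": ["study of documents and archives"],
    "D": ["interaction analysis"],
}


def collection_plan(sources):
    """Return the complementary set of techniques for several sources.

    `sources` is an iterable of (Holder, Form) pairs; the order of first
    appearance is kept and duplicates are dropped.
    """
    plan = []
    for holder, form in sources:
        for method in METHODS[CELLS[(holder, form)]]:
            if method not in plan:
                plan.append(method)
    return plan


# A task world that draws on explicit individual and implicit group knowledge.
plan = collection_plan([
    (Holder.INDIVIDUAL, Form.EXPLICIT),
    (Holder.GROUP, Form.IMPLICIT),
])
print(plan)
```

Because a real knowledge source rarely sits in exactly one cell, the function accepts several cells at once and merges their techniques, in line with the complementary use of methods argued for above.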

Groupware Task Analysis (GTA) is an attempt to integrate the merits from the most important classical HCI approaches with the ethnographic methods applied for CSCW (see (Veer and van Welie, 2003)). GTA contains a collection of concepts and their relations (a conceptual framework) that allows analysis and representation of all relevant notions regarding human work and task situations as dealt with in the different theories.

The framework is intended to structure task models 1 and 2, and, hence, to guide the choice of techniques for information collection in the case of task model 1. For task model 2, design decisions have to be made, based on problems and conflicts that are present in task model 1 and the requirement specification.

Task models for complex situations are composed of different aspects. Each describes the task world from a different viewpoint and each relates to the others. The three viewpoints are:

Agents, often people, either individually or in groups. Agents are considered in relation to the task world. Hence, we make a distinction between agents as actors and the roles they play. Moreover, we need the concept of organization of agents. Actors have to be described with relevant characteristics (e.g. for human actors, the language they speak, their level of typing skill, or their experience with Microsoft Windows). Roles indicate classes of actors to whom certain subsets of tasks are allocated. By definition, roles are generic for the task world. More than one actor may perform the same role and a single actor may have several roles at the same time. Organization refers to the relation between actors and roles with respect to task allocation. Delegating and mandating responsibilities from one role to another is part of the organization.

For example, an office may have agents such as 'a secretary', 'the typing pool', and 'the answering machine'. A possible role is 'answer a telephone call'. Sometimes it is not relevant to know which agent performs a certain role: it is not important who answers a telephone call, as long as it is answered.
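The relation between actors, roles, and organization can be made concrete with a small data structure. The sketch below is an assumption-laden illustration rather than GTA's own notation: the class names `Actor`, `Role`, and `Organization`, their fields, and the second role ('type a letter') are hypothetical; only the office agents and the telephone role come from the example above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    """A class of actors to whom a subset of tasks is allocated."""
    name: str
    tasks: frozenset[str] = frozenset()


@dataclass
class Actor:
    """An agent described by characteristics relevant to the task world."""
    name: str
    characteristics: dict[str, str] = field(default_factory=dict)


@dataclass
class Organization:
    """Relates actors to roles (many-to-many task allocation)."""
    allocation: dict[str, set[str]] = field(default_factory=dict)

    def assign(self, actor: Actor, role: Role) -> None:
        self.allocation.setdefault(role.name, set()).add(actor.name)

    def actors_for(self, role: Role) -> list[str]:
        """All actors that may perform the given role."""
        return sorted(self.allocation.get(role.name, set()))

    def roles_of(self, actor: Actor) -> list[str]:
        """All roles a single actor holds at the same time."""
        return sorted(r for r, actors in self.allocation.items()
                      if actor.name in actors)


# The office example from the text.
secretary = Actor("a secretary", {"typing skill": "high"})
machine = Actor("the answering machine")
answer_call = Role("answer a telephone call", frozenset({"pick up", "take message"}))
type_letter = Role("type a letter")  # hypothetical second role

office = Organization()
office.assign(secretary, answer_call)
office.assign(machine, answer_call)
office.assign(secretary, type_letter)

print(office.actors_for(answer_call))
print(office.roles_of(secretary))
```

Keeping the allocation in `Organization` rather than inside `Actor` or `Role` reflects the point that it is often irrelevant which agent performs a role: a task model can refer to the role alone and resolve the actor later.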

Work, in both its structural and dynamic aspects. We take task as the basic concept. A task has a goal as an attribute. We make a distinction between tasks and actions. Tasks can be identified at various levels of complexity. The unit level of tasks needs special attention. We need to make a distinction between:

the unit task: the lowest task level that people want to consider in referring to their work;

the basic task (after (Tauber, 1990)): the unit level of task delegation that is defined by the tool that is used, for example a single command in a command-driven computer application.