Internet of Things

Mark Kraeling*; Michael C. Brogioli†

* CTO Office, GE Transportation, Melbourne, FL, United States

† Polymathic Consulting, Austin, TX, United States

Abstract

The Internet of Things is a topic that is increasing in importance almost daily. Mass deployment of low-cost sensor technologies, edge of network computing, and cloud computing are creating an environment in which new types of distributed applications can be developed and deployed at ever-accelerating rates. This chapter explores the history of the Internet of Things (IoT), common application use cases, topics around cloud and edge computing, as well as challenges that managers and technologists should anticipate when developing IoT-based applications and frameworks.

Keywords

Cloud; Internet of Things (IoT); RFID; Factory automation; Connected devices; Fog; Edge; Data analytics; Communication methods

1 Introduction

The Internet of Things (IoT) is a vast topic that started to hit its stride in the 2010s. The phrase itself has been thrown around a lot and, when mentioned, attracts a great deal of attention and discussion. Simply put, it covers any device that is connected to the Internet. It started with the concept that devices have useful information that could be offered to a larger cloud-based system. Before the IoT revolution truly set in, this information was often relayed through a “smart” device that was connected and then sent to the cloud.

With the advent of both inexpensive and more accessible communications paths, the focus shifted to the devices themselves, which send information to the cloud without having to use a relay node. The IoT still has the same communications paths but is now more focused on having devices able to make decisions locally as opposed to in the cloud. Often IoT devices use Internet communication paths to get the data they need to make smarter decisions or to send the results of their calculations (as opposed to a giant stream of data) to the cloud or other devices.

This chapter discusses various IoT concepts, its history, and its progression. It then discusses factors associated with the technology and architecture for software when developing or using an IoT product.

1.1 Definition

An IoT device is defined as one that has the intelligence to use a communication path connected to the Internet or a private network. An IoT device must be sufficiently complex: a simple discrete device measuring light levels over a pulse width–modulated output does not qualify. IoT devices are required to have a communications and security stack to communicate.

Not only does an IoT device have the option of communicating with a centralized back office, it also has the capability to communicate with other IoT devices. Such devices can be similar or even the same in the case of a set of devices working together to produce a given result. Such devices can also interface with humans as opposed to just other things.

Any device that has an on-off switch and the capability of running software that enables connectivity can be considered an IoT device.

1.2 Examples

From fitness bands and watches to cellular phones and appliances, any device that has something to say or something to listen to over the network becomes an IoT device. The power, however, is in having IoT devices work together to drive a useful outcome. The following are a few examples of how IoT devices can be very effective.

A person sets an alarm for 6:00 a.m. The alarm is a connected IoT device that either communicates with a smart home server or is the smart home server. When the alarm is set it communicates with the coffee maker (another IoT device) to make sure water is in the reservoir and a filter with coffee is in the tray. If not, the person is informed when the alarm is set, otherwise no notification is required. At 5:50 a.m., 10 min before the wake-up alarm, the coffee pot is turned on automatically, so the coffee is ready. Over a period of time the coffee maker measures the amount of time that passes from when the coffee is done to when the coffee pot is lifted. It then makes the decision that, even if it is turned on through the alarm clock, it should wait a certain amount of time before brewing so that the coffee is fresh. Information such as who set the alarm clock can also be conveyed, just in case one person becomes ready for their coffee faster than another. This example shows there are many ways information can get from one IoT device to another to help make smarter and more useful decisions (Fig. 1).
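The coffee maker's learned delay can be illustrated with a minimal sketch. It assumes the device keeps a list of observed delays between "coffee done" and "pot lifted" and simply shifts the brew start by their average. The function name `brew_start` and its parameters are hypothetical, not part of any product API.

```python
from datetime import datetime, timedelta
from statistics import mean


def brew_start(alarm: datetime, pickup_delays_min: list[float],
               lead_min: float = 10.0) -> datetime:
    """Return the time at which brewing should begin.

    alarm             -- wake-up alarm time set by the user
    pickup_delays_min -- observed minutes between 'coffee done' and 'pot lifted'
    lead_min          -- default head start before the alarm (10 min in the example)
    """
    default_start = alarm - timedelta(minutes=lead_min)
    if not pickup_delays_min:
        # Nothing learned yet: fall back to the fixed head start.
        return default_start
    # Assumption: delay brewing by the average time the coffee usually sits.
    return default_start + timedelta(minutes=mean(pickup_delays_min))
```

With a 6:00 a.m. alarm and observed delays of 10 and 20 min, this sketch moves the brew start from 5:50 a.m. to 6:05 a.m. A real device would likely keep one delay history per person, as the example in the text suggests.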

In a separate example a person has a smart fitness device. Throughout the day the fitness device measures how active the person is and when they are the most active (steps, flights of stairs, calorie expenditure, etc.). Upon returning home the device communicates with other family members’ devices to not only relay details on its performance data but to inquire about their data. The fitness device can then communicate with a cloud-based fitness server or decide locally to provide recommendations for family fitness. Perhaps a 1-mile walk will be recommended and sent to the smart home server so that if someone asks for a joint activity it has one already prepared (Fig. 2).

2 History and Device Progression

This section discusses the history of IoT and the progression of migrating cloud-based decisions to a smart device type of architecture.

2.1 History of Internet of Things and Cloud

One of the first instances of a device that communicated over a network to the outside was an RFID-enabled device. An RFID (radio frequency identification) device is one that, when radiated with a source RF signal, sends information back to its base. The devices themselves started in the 1960s as static devices, relaying back an ID number to identify themselves remotely to a server. In the 1980s this technology was applied to livestock to track movement between fields and food distribution areas, as well as in the transportation industry for identifying not only specific device IDs, but also more dynamic information such as the driver of the vehicle (Fig. 3).

In the 1990s this expanded to the tracking of shipments and even goods coming into and out of stores or warehouses. RFID-based technology is still used today for billing vehicles when using automated toll booths on roadways.

Many consider the RFID device to be the first IoT device, as it communicates information over RF when requested. The usefulness of RFID has expanded from simple identification of assets to tracking and understanding product or assembly line flow. This led to thinking of devices as being smarter, with the goal of getting devices to make more and more decisions.

Progression in the device space was overlaid with progression in the cloud and server space. Starting in the late 1980s there was a drive to push all the data that you could to a centralized server. Once there, decisions could be made based on the data and then pushed back out as “decisions.” These data could then be accessed from the Internet to see near real-time data from devices or reports, depending on the need to be addressed. Changes in the algorithms or how to process the data could be made in one place, along with the ability to scale to more servers or more disk space. The disadvantages of a cloud-centric architecture are the bandwidth cost of sending the data, the handling of situations where the path from device to cloud is broken (in and out of coverage), and latency and response time. Cloud-oriented architecture for IoT reached its peak in 2001.

Starting in 2001 more fog-oriented architectures began to appear. These configurations pushed the centralized server that held all the data to regional servers located closer to the data—typically on the same subnetwork. Data could then stay in country when looking at a global view or stay at a site or location instead of being pushed to a central server. Decisions could then be made at a more local level with decreased latency. Fog-oriented architectures brought about more data paths that had to be managed. However, problems with connectivity between the decentralized servers and devices still existed, especially with wireless or mobile devices.

Focus shifted to the edge from the cloud and fog architectures with the advent of higher processing power in a smaller profile, wireless technology, and wireless protocols that were more supportive of battery-operated devices. This allowed a device to make decisions locally and report the outcome of those decisions to either the fog or cloud. This architecture minimized latency and data bandwidth requirements. However, it did make it more difficult to manage and scale, as adding processing and storage resources at the edge is often difficult (Fig. 4).

Edge IoT architectures are enabled by and composed of embedded systems. Whether it is battery operated or performs a useful function on its own without needing a lot of external connectivity, the device itself is well and truly embedded.

2.2 Industrial Revolutions and Industry of Things

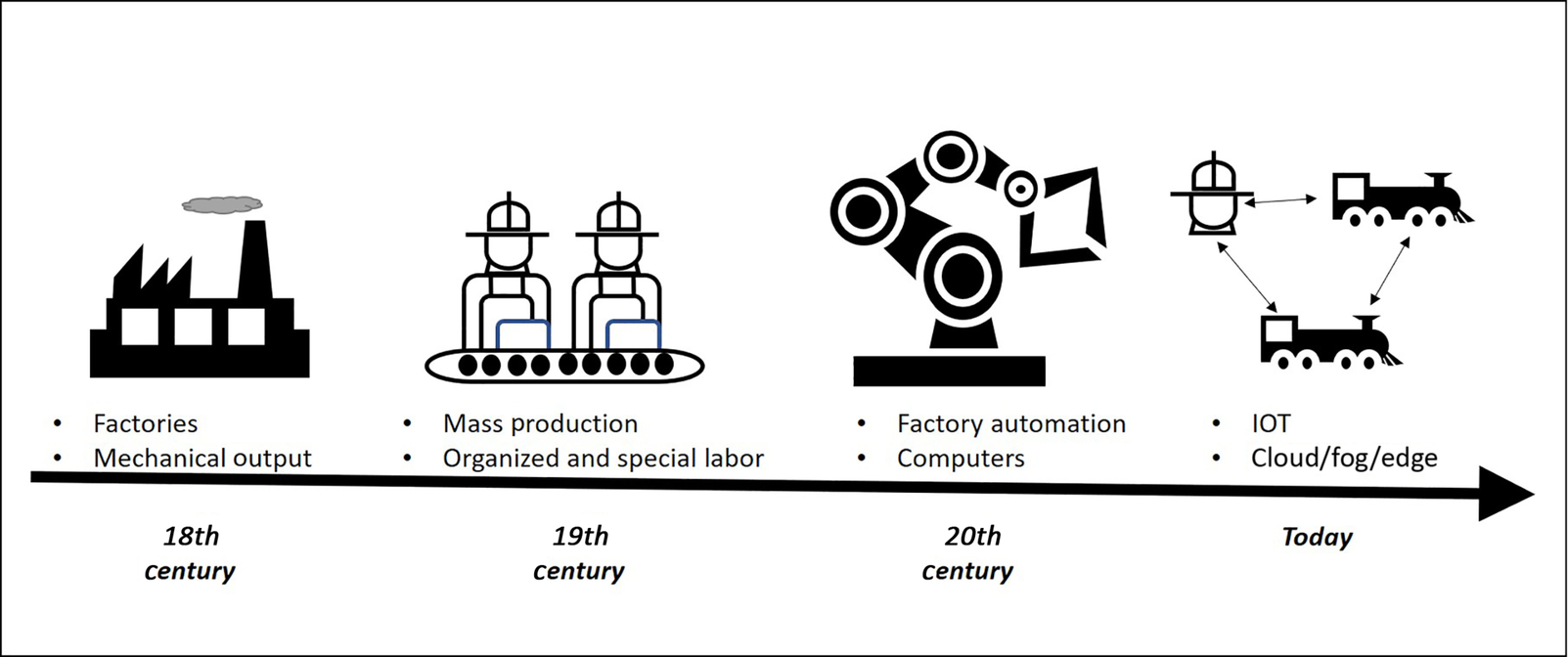

Many economists and technologists agree that we are at the forefront of the fourth industrial revolution and IoT is a large factor in this (Fig. 5).

The first industrial revolution came about when we started harnessing energy and began large-scale deployment of mechanical devices in the late 1700s. The first scaled factories arrived and goods were produced to make lives easier—whether it was a mechanical loom or oil lamp.

The second industrial revolution started in the late 1800s when labor in factories became more specialized and organized. Electricity was also harnessed to create better products and provide more features in the home. Mass production also started in earnest—from locomotive factories to vehicle manufacture.

The third industrial revolution started in 1960. Throughout this period computers became increasingly important with automation in factories doing repetitive and defined work. Computers also became smaller and increasingly faster, reaching the point where cellular phones became more than just phones.

We are currently in the fourth industrial revolution. Most agree it started around 2010 with a renewed focus on cyber-physical systems. A great deal of investment has gone into making devices not only connected, but also smarter and able to do more complex tasks at the source. Machine learning also comes into play here—where devices get smarter and adapt to the tasks being performed to perform them better. All these traits are encompassed in IoT: devices being connected, devices being smarter, and devices performing more tasks locally. The fourth industrial revolution is often termed the “IoT Revolution.”

2.3 Connected Devices

The premise underlying IoT is to have more and more connected devices. Fig. 6 shows the number of connected devices (in billions of devices) over time according to a statistical company.

As the number of devices increases, the importance of IoT expands. There are many applications for IoT in this connected device space and many enabling technologies are required.

3 Applications

Each market segment for IoT has its own unique requirements and needs. There are needs that are common across all market segments with respect to IoT such as data security and data latency. This section provides various IoT requirements for market segments and a few use cases where IoT gives value.

3.1 Factory Automation

One of the advances in the IoT space involves manufacturing and factory automation. Whether dealing with a small-scale facility that performs remelts on small batches of aluminum or a subassembly and vehicle production line, IoT is everywhere.

Typically, automation in the manufacturing environments of the past followed more of a cloud-like scenario when carrying out tasks. Data were collected from the entire operating facility and then tasks were run in the back office to alter manufacturing flow or reports were made on how the operation was running. Devices attached to the machine simply collected data and forwarded them to the back office—but did not communicate with each other.

This data architecture model has shifted. Now devices can talk to each other, removing much of the data latency so decisions can be made quickly, a functionality the former server-based architecture could not support. For example, individual spray nozzles for coolant on an aluminum hot rolling mill can now communicate the volume of spray they are emitting to each other. If one isn’t performing as well as it should, the two adjacent spray nozzles can pick up the slack until proper maintenance is performed. This is just one simple example from an entire network of devices and sensors where IoT with its connected devices makes a big impact in manufacturing.

3.1.1 Use Cases

The following use cases provide examples of how IoT can be used in a factory or a manufacturing setting. They are meant to help illustrate how IoT could make a difference in this type of environment.

3.1.1.1 Overhead Crane in a Factory

This use case is for an overhead crane that runs along a steel track, so it can move back and forth in the X direction (the Y direction for the entire crane is negligible since it is on a track). The crane is used to pick up loads from one location and move them to another. The load can be shifted along the Y axis using motors so that items can be taken from one corner in the factory and placed in the opposite corner.

The IoT sensors included for this crane are:

- position sensors that determine the X and Y position of the entire crane

- position sensors that determine the X, Y, and Z position of the load being carried

- strain gauge that measures force in the cable between the crane and load

- radar and LIDAR sensors for foreign object detection.

One use case involves using the strain gauge and the crane. Tension can be measured from the time the motor is activated until movement of the load being lifted is detected. Based on the weight of the load after it is free of the floor, the IoT sensor can track over time how much the cable has stretched. Maintenance flags can be set when the stretch falls outside a maintenance boundary.
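One way the cable-stretch check might look on the sensor is sketched below. It assumes the stretch is approximated by how much cable the hoist winds in between first tension and lift-off, normalized by the load weight, and that the maintenance boundary is a fixed limit on the recent average. The `Lift` record, the units, and the limit are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Lift:
    hoist_travel_mm: float  # cable wound in between first tension and lift-off
    load_kg: float          # load weight read from the strain gauge once it hangs free


def stretch_mm_per_tonne(lift: Lift) -> float:
    """Normalize the take-up travel by load so lifts of different weight compare."""
    return lift.hoist_travel_mm / (lift.load_kg / 1000.0)


def maintenance_flag(lifts: list[Lift], limit_mm_per_tonne: float,
                     window: int = 3) -> bool:
    """Flag when the average stretch of the last `window` lifts exceeds the limit."""
    recent = lifts[-window:]
    if len(recent) < window:
        return False  # not enough history to judge a trend
    average = sum(stretch_mm_per_tonne(l) for l in recent) / window
    return average > limit_mm_per_tonne
```

Averaging over several lifts is a design choice to avoid flagging on a single noisy reading; the window size would need tuning for a real crane.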

Another use case for the crane could be measurement in the Y direction for the entire crane itself. There shouldn’t be any—however, over time the wheels that keep the crane on the track could become worn. If more and more movement over time is detected the sensor can also flag that a maintenance inspection needs to occur.

A final use case for the crane involves sensors used to understand objects on the factory floor. Using object detection the crane can detect if the load will hit something on the floor. Even if the crane operator is commanding movement in a specific direction, because decision making can be made locally the sensors could prevent that movement from occurring or require a special override by the operator.
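The local interlock described above could be sketched as follows. The sketch assumes the radar and LIDAR processing already yields a distance to the nearest obstacle per travel direction. The direction labels, the clearance value, and the function name are hypothetical.

```python
def movement_allowed(direction: str,
                     obstacle_distance_m: dict[str, float],
                     min_clearance_m: float = 1.0,
                     operator_override: bool = False) -> bool:
    """Decide locally whether a commanded crane movement may proceed.

    direction           -- commanded travel direction, e.g. "+X" or "-Y"
    obstacle_distance_m -- nearest detected obstacle per direction, in meters
    """
    # No detection in that direction is treated as a clear path.
    distance = obstacle_distance_m.get(direction, float("inf"))
    if distance >= min_clearance_m:
        return True
    # Path is blocked: only a special operator override lets the move continue.
    return operator_override
```

Because the check runs on the crane itself, the decision does not depend on a round trip to a back office server.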

3.1.1.2 Aluminum Coils in a Plant

This use case involves the handling of aluminum coils produced in an aluminum rolling facility. For this use case the coils have been rolled in a hot rolling line and are waiting to be sent to the cold rolling line for further thickness reduction. There is a staging area, with an ideal “cooling” temperature range for the coil to be cold-rolled, based on alloy of the aluminum.

The IoT sensors that are included on a module placed on the spool are:

- temperature readings (ranging from outside of coil to inside)

- position in staging area (picks up positioning signals in local area).

The first use case involves monitoring the coil temperature and sending a wireless message every 5 min giving the predicted window of time in which the coil should be cold-rolled. The alloy of the aluminum is sent to the IoT device as it is being hot-rolled onto the coil, which allows the device to determine the correct temperature for cold-rolling. The IoT device bases its prediction on its own measured cooling rate, since proximity to other coils or its position in the staging area may influence how fast it cools. This information is sent to the cold-rolling staging area, so the coil can be pulled at the correct time and sent to the appropriate cold-rolling mill stand.
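As a sketch of how a coil module might predict its rolling window from its own cooling rate, the code below assumes Newton's law of cooling with a known ambient temperature. That model is an illustrative choice, not one stated in the chapter, and all function names and temperatures are hypothetical.

```python
import math


def cooling_constant(earlier_c: float, later_c: float,
                     ambient_c: float, elapsed_min: float) -> float:
    """Estimate the cooling constant k (1/min) from two of the coil's own readings."""
    return math.log((earlier_c - ambient_c) / (later_c - ambient_c)) / elapsed_min


def minutes_until(target_c: float, current_c: float,
                  ambient_c: float, k: float) -> float:
    """Minutes until the coil cools to target_c (target must be above ambient)."""
    if current_c <= target_c:
        return 0.0
    return math.log((current_c - ambient_c) / (target_c - ambient_c)) / k


def rolling_window(current_c: float, ambient_c: float, k: float,
                   target_range_c: tuple[float, float]) -> tuple[float, float]:
    """Return (opens_in_min, closes_in_min) for the alloy's cold-rolling range."""
    low_c, high_c = target_range_c
    return (minutes_until(high_c, current_c, ambient_c, k),
            minutes_until(low_c, current_c, ambient_c, k))
```

Because k is re-estimated from the coil's own readings every reporting period, effects such as neighboring hot coils show up as a smaller k and a later window without being modeled explicitly.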

The second use case involves the positioning of the coil. The IoT device communicates its alloy and current temperature as soon as its coil is hot-rolled so that a decision can be made by the staging area controller on where to place the coil. Coils that will take much longer to cool for cold-rolling can be placed in an area where they are out of the way to minimize the number of coil movements required to “fetch” a coil for the next cold-rolling stage.

Another use case, mentioned previously and not involving aluminum coil management, concerns using an IoT-based architecture on the coolant spray used to cool aluminum as it is rolled. Individual coolant nozzles are used across the width of the aluminum sheet as it is rolled. The amount of spray from each nozzle can alter the thickness of the aluminum sheet as it is being rolled to adjust for variability across the sheet. If an IoT-based nozzle can measure the amount of coolant coming out vs. what is being commanded, it can try to compensate for any shortfall itself. If it cannot, it can immediately communicate with the adjacent nozzle sensors so that their spray volume can be increased to compensate (Fig. 7).

As the coil is being rolled at many feet per second, quick decisions made locally are the only way this can be performed; sending data to a higher level, centralized manufacturing facility computer would not suffice.
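The neighbor compensation step can be sketched as below. The sketch covers only the case where a nozzle cannot make up its own shortfall and assumes the shortfall is split evenly between the adjacent nozzles. The flow units, the tolerance, and the function name are hypothetical.

```python
def compensate(commanded: list[float], measured: list[float],
               tolerance: float = 0.5) -> list[float]:
    """Return new spray commands after neighbors pick up each nozzle's shortfall.

    commanded -- commanded flow per nozzle, ordered across the sheet width
    measured  -- flow each nozzle reports it is actually delivering
    """
    new_commands = list(commanded)
    for i, (cmd, actual) in enumerate(zip(commanded, measured)):
        shortfall = cmd - actual
        if shortfall <= tolerance:
            continue  # nozzle is performing well enough
        # Edge nozzles have only one neighbor, which then takes the full shortfall.
        neighbors = [j for j in (i - 1, i + 1) if 0 <= j < len(commanded)]
        for j in neighbors:
            new_commands[j] += shortfall / len(neighbors)
    return new_commands
```

In a deployed system each nozzle would exchange only its own shortfall with its neighbors rather than evaluating the whole list centrally; the single function here is just a compact way to show the rule.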

3.1.2 Important Factors

In factory automation the important factors for IoT devices typically involve information that can positively contribute to safety, quality, and time efficiency. In the previously mentioned use cases device position is important. In enclosed factory environments constellation-based positioning, like GPS, isn’t typically used, so quality is enhanced as IoT devices bring in their own precision position sensors. In addition to enhancing quality, there are also many IoT device steps that can be put in place for safety. Even if the primary control path is triggered by human interaction, like movement of goods by an overhead crane, safety overlay technology can be put in place to assist the operator. This provides an opportunity to catch something that the operator hasn’t. Finally, being able to shift to an IoT-based architecture enhances efficiency by enabling lower network latency and more local decision making. The example of dynamic adjustment of aluminum rolling nozzle cooling shows that taking delay out of decision making is an excellent way to improve product efficiency and quality.

3.2 Rail Transportation

Rail transportation, of course, involves using railway track to move goods or passengers from one location to another. In North America it is more efficient to move goods by rail vs. trucking over longer distances, especially when efficiency is measured using dollars spent per ton-mile. Intermodal containers that are offloaded from cargo ships in ports can be loaded on either freight trains or trucking tractor trailers. If we are dealing with long distances—say, from San Diego to Chicago—it is more efficient to ship freight by rail. If an intermodal container needs to go from San Diego to a location that is 80 miles inland, then shipping it by truck is likely more efficient.

Data can make the train operate more efficiently over its journey. The IoT use cases for rail transportation involve not being constantly connected to a network as the train will likely go across areas where there is no cellular coverage. This makes it very important for decisions to be made locally on the locomotive as opposed to relying on a cloud architecture for constant information during the train trip.

For the rail transportation use case there is information available outside the locomotive, such as schedules or types of goods, which can be retrieved to make the trip more efficient and safer. For example, trains that start and stop frequently use more energy than trains that maintain a consistent speed during the trip. Trains that carry sand are safer than trains that carry hazardous materials.

Subnetworks are key to a locomotive because, even when cellular communications are not available, the subsystems can still talk to each other. Centralized networking and wireless communications that can be shared by multiple applications can improve the uniformity of data used aboard a locomotive and hence help the data become more manageable.

3.2.1 Use Cases

The following use cases illustrate how IoT can be used on a railroad. They are meant to help illustrate how IoT could make a difference in this type of environment.

3.2.1.1 Rules-Based Decision Making

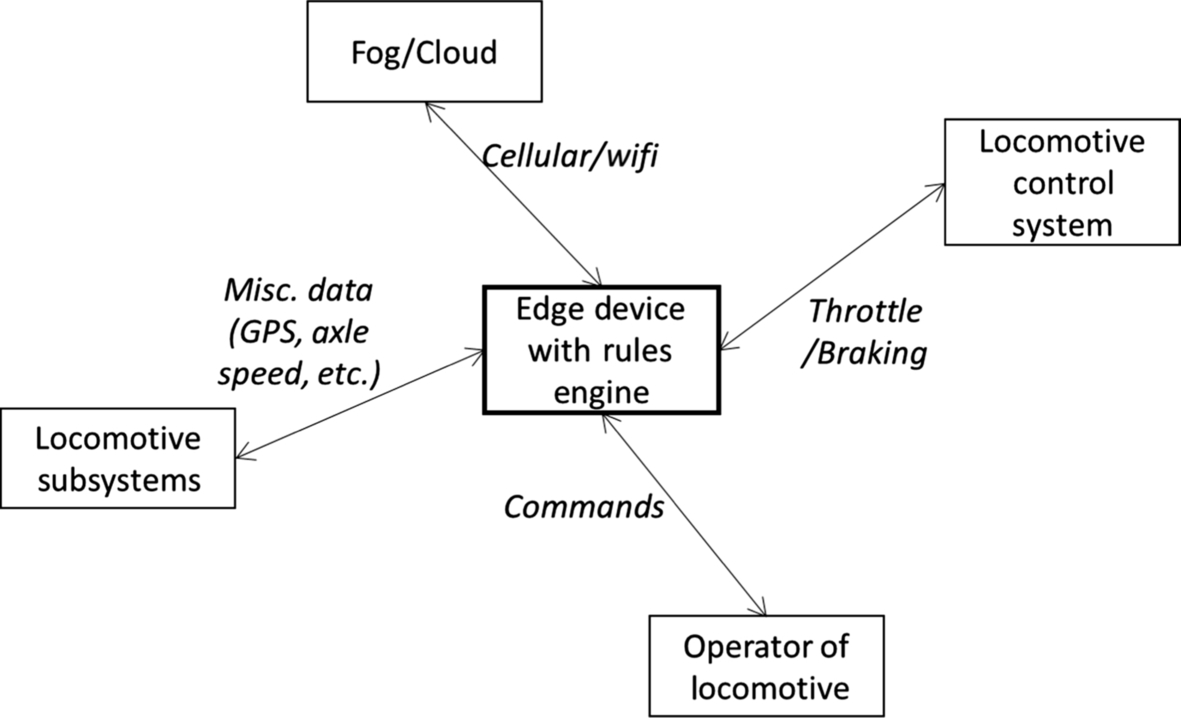

The use case for rules-based decision making involves using IoT devices to make decisions locally in the absence of cellular or other back office connectivity. For this use case there is an IoT edge device that can capture data from any of the locomotive subnets and use this information in a series of rule-based analytics. The analytics engine itself runs on board the locomotive (Fig. 8).

The IoT sensors that will be used in this use case are:

- sensors that measure the in-train forces of the railcars in a train

- throttle and braking positions used by the operator of the train.

The first use case for rules-based decision making involves collecting data on how an operator is driving the train. Measurement can be made for in-train forces because fast stretching or bunching of the train can break railcar knuckles and equipment. The throttle or braking commands could be evaluated locally on a locomotive so that sudden changes made by the operator are flagged and information is passed back to the operator for better operation. The rules governing what constitutes inappropriate throttle or brake command progression could be created in the back office. Then, as trains enter areas with good cellular or Wi-Fi communications, the operator can grab those rules and implement them during the trip.
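A throttle rule of this kind might be evaluated on board as sketched below. The sketch assumes throttle position is sampled as a notch number with a timestamp and that the back office supplies two rule parameters: the largest acceptable notch change and the time window it applies to. Both parameters and the function name are hypothetical.

```python
def flag_sudden_changes(samples: list[tuple[float, int]],
                        max_notch_delta: int,
                        window_s: float) -> list[float]:
    """Return the timestamps at which the throttle moved too far, too fast.

    samples -- (time_s, notch) pairs sorted by time
    """
    flagged = []
    for i, (t, notch) in enumerate(samples):
        # Compare against every earlier sample that is still inside the window.
        for t_prev, notch_prev in samples[:i]:
            if t - t_prev <= window_s and abs(notch - notch_prev) > max_notch_delta:
                flagged.append(t)
                break
    return flagged
```

Keeping the thresholds as plain parameters means an updated rule set can be downloaded and applied without changing the code running on the locomotive.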

Other complex rules can be created on IoT edge devices on the locomotive to process data quickly and only flag areas of concern. Typically, the amount of information needed to operate a train is measured at around 1 TB per day. Instead of transferring all these data over a cellular or other network when the train enters a yard, smart rules-based decisions can be made by IoT devices on the train that only send data deemed important to be analyzed. This could be something like 10 min before and 10 min after a fault or error condition occurred, so that only the relevant data subset needs to be analyzed as opposed to sifting through a lot of data evaluated as “normal.” Rules applied to a locomotive could provide immediate train operator feedback or be stored until offboard communications are restored and the data can be sent to the back office.
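The "10 min before and 10 min after" rule can be sketched as a simple filter over logged records. The record layout (a timestamp followed by a payload) and the function name are assumptions made for illustration.

```python
def relevant_records(records: list[tuple[float, dict]],
                     fault_times_s: list[float],
                     before_s: float = 600.0,
                     after_s: float = 600.0) -> list[tuple[float, dict]]:
    """Keep only records that fall within a window around any fault.

    records       -- (time_s, payload) pairs logged on board
    fault_times_s -- times at which a fault or error condition occurred
    """
    return [
        record for record in records
        if any(fault - before_s <= record[0] <= fault + after_s
               for fault in fault_times_s)
    ]
```

Only the filtered subset would then be queued for transfer once offboard communications are available, instead of the full day's data.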

3.2.1.2 Smart Sensor Recalibration

The use case for smart sensor recalibration involves IoT sensors working with each other to understand the real speed of the train. Speed is measured based on wheel sensors mounted on the axles of the locomotive. The IoT sensors that will be used in this use case are:

- axle speed sensors

- GPS speed sensors.

This use case also involves calibration of the wheel diameter of a locomotive. Over time the wheels on a locomotive shrink in diameter due to normal wear. Speed sensors on the axles measure the number of revolutions of the axle over time. That information combined with the known diameter of the wheel gives us the speed. As axles are replaced on a locomotive the speed (diameter setting) needs to be recalibrated. While operating, each of the axle speed sensors acting as IoT devices can communicate with each other and self-calibrate the information. GPS speed data could also be used by employing a formula evaluating the number of satellites overhead and whether the locomotive is operating at a constant speed. Then the GPS information could be used as an additional input for self-calibration of the speed sensors (Fig. 9).
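The relationship between axle revolutions, wheel diameter, and speed, and the way GPS could feed back into the diameter setting, can be sketched as follows. The quality gate on satellite count and speed steadiness uses hypothetical thresholds, and the function names are illustrative only.

```python
import math
from typing import Optional


def axle_speed_mps(rev_per_s: float, wheel_diameter_m: float) -> float:
    """Speed = revolutions per second x wheel circumference."""
    return rev_per_s * math.pi * wheel_diameter_m


def calibrated_diameter(gps_speed_mps: float, rev_per_s: float,
                        satellites: int, speed_spread_mps: float,
                        min_satellites: int = 6,
                        max_spread_mps: float = 0.2) -> Optional[float]:
    """Back out the wheel diameter from GPS speed when the GPS data is trustworthy.

    speed_spread_mps -- variation in recent GPS speed; small means constant speed
    Returns None when GPS quality is too poor to recalibrate.
    """
    if satellites < min_satellites or speed_spread_mps > max_spread_mps:
        return None
    if rev_per_s <= 0:
        return None  # axle not turning, nothing to calibrate against
    return gps_speed_mps / (math.pi * rev_per_s)
```

A worn wheel reads fast when the nominal diameter is used, so the calibrated diameter comes out smaller than the stored setting.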

As trains do not accelerate or decelerate very quickly, axle speed sensors can also be used to detect wheel slip. A sudden acceleration could indicate loss of wheel adhesion, requiring a local decision that sand needs to be placed under the wheels to provide more traction. Communication between speed sensors can initiate automatic sanding and, at the same time, modify the measured speed to only include axles that are not slipping.
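A minimal sketch of the slip logic follows. It assumes each axle reports its speed at a fixed interval and that any axle accelerating faster than a train plausibly could is treated as slipping. The acceleration limit and function names are hypothetical.

```python
from typing import Optional


def slipping_axles(previous_mps: list[float], current_mps: list[float],
                   dt_s: float, max_accel_mps2: float) -> set[int]:
    """Return the indices of axles whose acceleration is implausibly high."""
    return {
        i for i, (v0, v1) in enumerate(zip(previous_mps, current_mps))
        if (v1 - v0) / dt_s > max_accel_mps2
    }


def train_speed_mps(current_mps: list[float], slipping: set[int]) -> Optional[float]:
    """Average only the axles that are not slipping; None if every axle slips."""
    good = [v for i, v in enumerate(current_mps) if i not in slipping]
    return sum(good) / len(good) if good else None


def sanding_required(slipping: set[int]) -> bool:
    """Any slipping axle triggers the local decision to apply sand."""
    return bool(slipping)
```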

3.2.2 Important Factors

Important factors relating to the rail transportation space using IoT devices as given in the examples include safety, local decision making, and operating efficiency. A locomotive not having consistent connectivity is a driver for all three of these factors. The operator of the train is responsible for its safe movement, but IoT devices can provide the operator better information (such as reliable speed data) and be able to flag when in-train forces as a result of throttling and braking need to be reduced. Local decision making needs to happen at the IoT edge as opposed to in a cloud-based IoT architecture because of the lack of constant connectivity. Finally, the operating efficiency of the entire train and train network is important so that goods, freight, and people can be transported effectively. Using all the IoT information that is available can enable these smarter decisions.

4 Enabling Technologies

This section takes a look at the technology that is important for IoT devices. It provides a glimpse into the factors that have progressed to enable IoT technology to expand from wearable devices to use in industry.

4.1 Processing

With the expansion of the IoT device space, processing devices have become more focused on higher volume, more functionality-oriented parts. Microprocessors contain a CPU (and, depending on scale, a GPU) and are typically used in computing, but are kept separate from memory (RAM) and peripherals. Microcontrollers incorporate not only the CPU, but also the peripherals required for the application focus. This could include serial and communications interfaces, RAM, flash storage, and similar peripherals designed for the task, so incorporating several different chips for a device is not required (Fig. 10).

Using a microcontroller is often much simpler for the IoT device space than using a microprocessor. A full-featured operating system is often not required (depending on the device size), so simple programming is all that is needed to get the device functioning correctly. Peripherals integrated on the same die, such as discrete I/O and communications, are also much simpler to get up and running than separate components integrated together in a microprocessor-oriented architecture.

Developing a security feature set and plan is also easier on a microcontroller than on a microprocessor. All the buses and connections are internal to the silicon, so there is no need for specific protection to be given to them. The focus can turn to the security of the external interfaces of devices, which often come with specific recommendations that cannot be tampered with after shipping. Although there are still security concerns with the data to be sent on and off the device the hardware itself is often more difficult to hack than that of a microprocessor-oriented architecture.

A microcontroller for IoT is also typically much cheaper than designing a microprocessor architecture. Costs of RAM and flash or other peripherals are minimized as they are incorporated on the same die. There is no expense of designing a high-speed bus interface with peripherals because this has already been done and incorporated into the chip.

Processing for an IoT device needs to be scaled to the application. If the device is going to be battery operated, for instance, then running a higher level operating system, such as Linux or Windows, is not going to be appropriate. Having a more embedded-type system is going to be ideal where the device can power down to lower power consumption levels when it is not needed—or shut down parts of the system that are not being used at the time. Microcontroller architectures support this type of system much more easily and often come with drivers for lightweight operating systems that can turn on and off quickly or enable low power states.
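The benefit of powering down when idle can be made concrete with a rough battery-life estimate. The sketch below uses the standard duty-cycle average and ignores effects such as battery self-discharge and wake-up overhead. All current and capacity figures are hypothetical and not taken from any datasheet.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Average draw when the device is active for `duty_cycle` (0..1) of the time."""
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, active_ma: float,
                       sleep_ma: float, duty_cycle: float) -> float:
    """Idealized runtime: battery capacity divided by average current."""
    return capacity_mah / average_current_ma(active_ma, sleep_ma, duty_cycle)
```

For example, with an assumed 1000 mAh battery, 20 mA active draw, and 0.02 mA sleep draw, running continuously gives about 50 h, while being active only 1% of the time gives roughly 4500 h under this idealized model.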

4.2 Wireless Communications

When someone thinks of a typical IoT device it is often wireless. It has the capability of being wearable, such as a fitness device or watch, or accompanies its owner wherever they travel like a smartphone. There are also various levels of service for wireless communications depending on the device being used. All these come into play when addressing wireless communication.

The first design factor to address for wireless communication is whether the IoT device will be stationary or moving. An IoT device in a factory assembly line could likely rely on Wi-Fi oriented technology for a local network as opposed to using a cellular network. Coverage maps could be put in place for the IoT device—especially as it is understood to remain in the same place throughout its life. Depending on the location of the IoT device, clearly a wired solution could be evaluated since it is stationary. There can also be stationary devices in an entirely different environment than a factory, like the top of a power-generating windmill. It is for these reasons that understanding whether the IoT device will be stationary or moving is important to selecting the right wireless technology.

The second design factor is to understand how much data bandwidth the IoT device requires. A cellular phone demands a large bandwidth. A 4G mobile communications standard such as LTE is recommended if the user is downloading and watching movies or using Internet sites that transmit and receive large quantities of data. Releases of newer 3GPP and LTE standards focus on increasing bandwidth and reducing network latency, which are important for the cellular phone market. Advances in 5G will bring bandwidths measured in gigabytes per second. A bandwidth of 5 GB/s, which on the 5G roadmap is equivalent to a user watching 20 videos in 4K resolution at the same time, is not as important for small temperature IoT sensors or even battery-operated wearable health devices. These devices monitor physical values and then, using IoT device intelligence, send periodic status messages or alerts. In these types of cases a large bandwidth is not required, and it is more important to be efficient with battery use than to transfer large amounts of data.
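The "periodic status or alert" behavior of a low-bandwidth sensor might be sketched as below. The sketch assumes the device keeps the radio off unless a reading leaves its allowed range or a status period has elapsed. The limits, the period, and the function name are hypothetical.

```python
from typing import Optional


def message_to_send(value: float, low: float, high: float,
                    now_s: float, last_sent_s: float,
                    status_period_s: float = 3600.0) -> Optional[str]:
    """Decide locally whether anything needs to be transmitted.

    Returns "alert" for an out-of-range reading, "status" when the periodic
    report is due, or None when the radio can stay off.
    """
    if value < low or value > high:
        return "alert"
    if now_s - last_sent_s >= status_period_s:
        return "status"
    return None
```

Most readings result in no transmission at all, which is what keeps both the bandwidth requirement and the battery drain low.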

Another newer technology for IoT devices involves the Cat-M1 and NB-IoT LTE standards. Cat-M1 and NB-IoT were designed to use existing LTE cellular networks but on different types of access methods that focus on devices that do not need to communicate very often. The protocols and modulation are optimized for longer distance communication and lower overhead communications in comparison with the typical 4G type of LTE. LTE Cat-M1 allows a device to transfer from one cellular tower to another, so it supports a nonstationary type of device. The effective bandwidth is measured in kilobytes per second, so if only small pieces of information are transferred it may be ideal for IoT devices. NB-IoT communications do not allow a device to seamlessly transfer between cellular towers, so it is meant for a stationary device. NB-IoT has an even lower bandwidth than Cat-M1; however, the device cost is expected to be much lower than Cat-M1 devices. The following table summarizes this functionality compared with today’s LTE advanced technology available in cellphones and other advanced communications devices.

4.3 Wired Communications

Wired communications are often not considered for IoT devices because the more widely known use case is for a mobile-type application. However, there are plenty of IoT devices that are wired.

Often IoT devices need to communicate with devices that are not specifically “smart.” This could include limit switches in a factory environment or an older RS-232 serial device that needs to be connected. The IoT edge device may have multiple communication paths to deal with when gathering information such as discrete inputs and outputs. In cases where the IoT device is stationary having a wired connection may make more sense.

Ethernet is the typical wired interface for today’s stationary IoT devices. It has a given address and can transfer data to the fog or cloud based on its use. In that way it isn’t much different than a wireless device, but again it will be stationary as far as its network is concerned.

IoT devices that require special timing for their communications can opt for a variety of technologies that are enabled over wired Ethernet. One such technology is time-sensitive networking (TSN), which enables the classification of Ethernet messages based on priority and queuing. Ethernet nodes that are TSN-enabled and connected to a TSN network can receive queue and priority information from the TSN scheduler and then send their packets according to that classification and schedule (Fig. 11).

TSN provides the ability to have a packet sent and received at regular intervals, such as every second, while other Ethernet traffic that is not associated with this time slice will be delayed. This allows regular, consistent messaging for devices regardless of other lower priority Ethernet traffic being processed in the system.
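
The time-slice behavior described above can be sketched as a repeating cycle of transmission windows, each of which is open to certain traffic classes only. This is a deliberately simplified model and not an implementation of any TSN standard; the class names, the `GateWindow` type, and the 1000-microsecond cycle are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateWindow:
    start_us: int             # offset from the start of the cycle (microseconds)
    end_us: int               # exclusive end offset within the cycle
    open_classes: frozenset   # traffic classes allowed to transmit in this window


def open_classes_at(schedule, cycle_us, t_us):
    """Return the traffic classes whose gate is open at absolute time t_us."""
    offset = t_us % cycle_us  # the schedule repeats every cycle_us
    for window in schedule:
        if window.start_us <= offset < window.end_us:
            return window.open_classes
    return frozenset()        # no window covers this offset: nothing may send


def may_transmit(schedule, cycle_us, t_us, traffic_class):
    """True if a frame of traffic_class may start transmission at t_us."""
    return traffic_class in open_classes_at(schedule, cycle_us, t_us)


# Hypothetical schedule: the first 200 us of every 1000 us cycle are reserved
# for scheduled (time-critical) traffic; the rest is left to best-effort traffic.
CYCLE_US = 1000
SCHEDULE = [
    GateWindow(0, 200, frozenset({"scheduled"})),
    GateWindow(200, 1000, frozenset({"best_effort"})),
]
```

With this schedule, best-effort frames that arrive during the reserved window simply wait until the window closes, which is the delay of lower priority traffic that the text describes.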

4.4 Power Storage

Power storage for an IoT device is another important technology consideration. An IoT device that is located near a power source and can be wired into that source clearly doesn’t have to worry about the availability of power. IoT devices in factories, or automobiles, fall into that category.

Another category comprises devices that are not constantly connected to a power source, but instead are connected occasionally for charging. These devices call for an architecture and a storage system that meet a different set of needs of the IoT device and its user. A cellular phone or smart watch is clearly in this category: the user is expected to periodically charge both to keep them operating. It then becomes much more important to understand the specific power requirements of the use case for the IoT device. A cellular phone that only lasts an hour before it needs to be charged again would be returned to the store and classified as unusable!

The last primary category for power storage is an IoT device that will not have the opportunity of being recharged during its normal life. These IoT devices must be extremely power efficient, especially when still utilizing cellular and other communications options. They are certainly microcontroller based, with operating modes that allow them to “sleep” for long periods of time, then “wake up” to perform the required task, and then go back to a deep, low-power state. Every aspect of such an IoT device would be optimized for the power available. Current IoT devices that require this type of power storage typically perform some task every 24 h, provide a status report over wireless communications, and then go back to sleep. It is common to find devices that perform this function over a 5-year time span before needing to be replaced or refurbished.
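
The sleep and wake-up pattern lends itself to a simple back-of-the-envelope battery estimate. The sketch below is a rough model under stated assumptions: the sleep current, active current, active time per day, and battery capacity are hypothetical values, and effects such as battery self-discharge and temperature are ignored.

```python
SECONDS_PER_DAY = 24 * 3600


def average_current_ma(sleep_ma: float, active_ma: float, active_s_per_day: float) -> float:
    """Time-weighted average current draw over one day, in milliamps."""
    if not 0 <= active_s_per_day <= SECONDS_PER_DAY:
        raise ValueError("active_s_per_day must lie within one day")
    sleep_s = SECONDS_PER_DAY - active_s_per_day
    return (sleep_ma * sleep_s + active_ma * active_s_per_day) / SECONDS_PER_DAY


def battery_life_years(capacity_mah: float, avg_ma: float) -> float:
    """Idealized lifetime: capacity divided by average draw, converted to years."""
    hours = capacity_mah / avg_ma
    return hours / (24 * 365)


# Hypothetical device: 5 uA in deep sleep, 100 mA while awake and transmitting,
# awake for 30 s once per day, powered by a 2400 mAh primary cell.
avg_ma = average_current_ma(sleep_ma=0.005, active_ma=100.0, active_s_per_day=30.0)
years = battery_life_years(capacity_mah=2400.0, avg_ma=avg_ma)

print(f"average draw: {avg_ma * 1000:.1f} uA, estimated life: {years:.1f} years")
```

In this example the short active period dominates the energy budget even though the device sleeps for almost the entire day, which is why reducing wake time and transmission cost is usually the first optimization target.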

5 Internet of Things Architecture

The architecture of IoT applications can vary greatly depending on the use case. While a simple architecture may have a device at the sensing site that talks directly to a remote server or cloud node, increasingly more and more logic will be required to be intelligently dispersed across the network. This not only includes cloud computing, but also edge-of-network compute nodes in addition to the devices or “things” themselves. This section provides an overview and highlights the characteristics of various components that can comprise a modern IoT application deployment.

5.1 Cloud-Computing Nodes

As was mentioned earlier in this chapter, cloud computing can be thought of as the practice of using a network of remote servers that are hosted on the Internet. These servers are used to store, manage, and process data for a given application or service. This contrasts with the notion of having a local server or server farm or a locally based personal computer. To date, cloud-computing servers differ in several ways from various other compute nodes within a given IoT deployment. For example, at the time of writing a given general-purpose cloud-based server will generally offer high-performance compute with RAID-based SSD storage. The server could have redundant 10-Gb networking capabilities. In addition, disk I/O may be up to 35,000 4K random read IOPS and 35,000 4K random write IOPS. Such a cloud server may contain as much as 8 GB of RAM, 8 CPUs, and hundreds of gigabytes of SSD storage. It is also important to note that these types of servers would be capable of running robust operating systems, such as one of various Linux distributions or Microsoft Windows.

With such a resource-rich development environment, developers can often use very high-level programming languages to implement application and business logic. Modern frameworks for Web development can be used for the rapid development and prototyping of products, given the very resource-rich development and runtime environments that these devices can afford. In addition, as compute and network bandwidth demand increases, modern cloud infrastructures, such as Amazon, Microsoft, and Rackspace, allow system architects to rapidly deploy and provision servers as needed to meet application needs, while taking into account such things as load balancing, geographical proximity to users, and caching.

5.2 Fog/Edge-Computing Nodes

Fog or edge computing is another layer of computing that may be present in modern IoT applications and systems. Unlike cloud computing, whereby there is a network of remote servers hosted on the Internet, edge computing is a communications topology that includes edge-of-network compute devices that may be used to carry out significant amounts of computation, storage, and communication. These edge devices do not reside in the cloud but rather are located at the edge of the computer network in greater proximity to where collected data are sampled. As such, a fog compute node will often be connected to and have input/output from the physical world such as sensors, actuators, and mobile health care components. It is these edge nodes, as described below, that perform the physical input and output within the system.

The processing power of modern fog or edge compute nodes, such as those in high-performance networking, artificial intelligence computing, and autonomous vehicles, can be quite high compared with traditional personal computers and modern mobile phones. In contrast to traditional or virtual cloud servers, which reside in a data center, edge compute nodes reside at the edge of the network. This allows a given fog or edge node to be in physical proximity to the end users and input/output devices within the system. In addition, because of their large amount of input and output as well as their compute and storage capability, increasing amounts of business logic may reside within the fog or edge compute node, thereby removing the need for all traffic from the sensor site or extreme end of the network to travel all the way to the cloud compute nodes and vice versa.

An example fog or edge compute node would be the NXP QorIQ LS1043 reference design board, which is built around a quad-core, 64-bit ARM-based processor for embedded networking and industrial applications. The hardware that comprises such an edge compute node is the quad-core ARM Cortex-A53 processor, up to 2 GB of RAM, and 10-Gb Ethernet support with various UART ports. This particular edge compute device is also typical of many industrial IoT edge compute nodes in that it comes with embedded Linux and related software tools and development kits.

5.3 Device-Computing Nodes

Devices or “things” in the IoT also differ in marked ways from previously described cloud computing and fog/edge computing. Whereas cloud-computing nodes are robust servers with full operating systems and highly provisioned hardware resources and edge/fog nodes are multicore CPU-based devices with embedded operating systems, large amounts of RAM, and I/O capability, devices or things are typically at the opposite end of the computing resource spectrum.

Conceptually, a device will often comprise a programmable processor, a small amount of local memory, modest amounts of I/O, such as Bluetooth, Zigbee, Z-Wave, or similar, and possibly an embedded operating system or bare metal software development environment. These types of devices also often contain various sensing components, such as temperature, pressure, accelerometers, and the like. These devices are often not capable of connecting directly to the Internet, although in some cases that is possible. Rather, they are designed to perform a certain function to sense the real world, perhaps perform some lightweight computation on that sensed data and then transmit the data to either other devices via a device-to-device communications link, to edge compute nodes for additional processing or business logic steps, or in some cases ultimately up to a cloud-computing node for additional business analytics and computation and application logic layers.

Examples of devices can be rather sparse in technical features when compared with other parts of a given IoT deployment. A given device, for instance, may have a real-time operating system or some variant of embedded Linux; however, this is not always the case. Oftentimes, these devices have no operating system at all and application code must run on the bare metal itself. While some devices include a 32-bit CPU capable of running an embedded OS or Linux at higher clock rates and with larger memory footprints, some devices may only contain a single 8-bit MCU running at perhaps 5–10 MHz. Typically, these types of devices will come with supporting software development kits and libraries to help the developer bring a solution to market. In addition, even in the bare metal MCU case these devices can usually be programmed in a low-level language such as C. Program space is often limited as well; for instance, some devices may be limited to as little as 32 KB of flash memory and perhaps as little as 2 KB of SRAM with possible scratchpad memory. I/O that is included is similar to that mentioned before, such as Zigbee, Z-Wave, or Bluetooth.

6 Communications Used in Internet of Things

IoT implementations can vary widely in terms of system architecture, communications technologies, and models used. As mentioned earlier in this chapter the notion of IoT is not a particularly new concept in computing, as networks to monitor and control remote devices have been in place for decades. Similarly, the use of IP (Internet Protocol) to connect devices other than traditional computers to the Internet has also been around for some time. At a high level, however, the current IoT is a conflux of multiple technologies and emerging market factors that is rapidly making it possible to connect orders-of-magnitude more devices in smaller form factors at lower cost and with increasing ease.

Some of the factors driving current trends for communication in the IoT are discussed in this section. The large-scale adoption of IP-based networking is one such factor. IP is the primary communications protocol in the Internet Protocol Suite and is used for relaying datagrams or packets across network boundaries. As such, IP has become a global standard in computer networking. Accordingly, there are rich and robust platforms of software and tools that can be incorporated into networked devices of varying types, including low-cost, low-power devices in smaller form factors, which has led to its use within the IoT.

Similarly, the rise of cloud computing has been a factor in modern communications and architectural trends for the IoT. Cloud computing can be thought of as the use of remote servers hosted on the Internet to store, manage, and process data vs. a locally hosted and managed server. Cloud-computing devices offer relatively low-cost, highly scalable server solutions to which large networks of distributed devices may be connected to interact. In addition to this, since cloud-computing devices can be rapidly provisioned and configured, they provide an attractive solution for back end analytics.

As mentioned above, back end data analytics is another driving factor in the IoT that dovetails with cloud computing. As cloud-computing power continues to mature and cost of compute becomes more affordable this paves the way for new algorithms and computational complexity, data storage, virtualization, and related services. These services can support vast amounts of data, aggregation, and analysis with ever-increasing economy of scale. With the ability to effectively and efficiently handle such large and dynamic data sets new opportunities for deep insight and extraction of information have become available to IoT systems designers.

Keeping all of this in mind, there is still no widely accepted definition of what comprises the IoT across different architectures and deployments. For instance, some groups refer to smart objects as devices that have limited resources and are highly constrained. These can include devices with low power, low memory, limited compute power, or limited bandwidth. Others refer to IoT devices as devices that do not necessarily connect to the Internet, but rather are capable of communicating with a local gateway or with other machine-to-machine or device-to-device nodes. Still others refer to the IoT as devices and deployments that communicate with cloud services via the traditional Internet. Nevertheless, each case contemplates a deployment of objects, sensors, etc. that possess some level of network connectivity and local compute capability. The following sections break down the different types of communications used for various types of IoT deployments.

6.1 Device-to-Device Communications

While there are varying definitions of device-to-device communications depending on the infrastructure in which the devices are deployed, generally device-to-device communication is defined as representing two or more devices that communicate and connect directly with one another. This is in contrast to devices that might communicate through an intermediary application server or connection point. One subtle difference is device-to-device communication that occurs in certain cellular network technologies.

Device-to-device communication for the IoT can occur over a number of network types, including IP networks and the Internet. Unlike other types of computing, however, these devices often use protocols such as Bluetooth, Zigbee, or Z-Wave to establish direct point-to-point communications. Fig. 12 shows a number of wireless device-to-device compute nodes talking to each other via bidirectional communication links. In this figure the channels may be Bluetooth, Zigbee, or Z-Wave. Note that there is no centralized hub through which the devices communicate; rather, communication is from device to device.

6.1.1 Device-to-Device Communications With Cellular Network

In the case of cellular communications, however, device-to-device communication can differ slightly in that communication may also require assistance from the cellular network. These are often referred to as device-to-device assisted networks. Fig. 13 encompasses the device-to-device communication that was described previously in this chapter (i.e., standalone device-to-device communication) in addition to network-assisted device-to-device communication.

As can be seen in Fig. 13 the difference between the two network structures is the presence of a cellular support infrastructure in (II). It is used to organize communications and resource utilization within a given cell of the cell network. Conversely, in (I) the devices organize the communications by themselves without the support of infrastructure help. The figure shows the difference in the fundamental network architecture of D2D communications across the two solutions.

D2D aggregators can be used to collect data from devices intending to connect to what is referred to as the cellular core network and send them to gateways that connect to the core network. The access network may be wired or may be wireless and the core network itself is what connects the devices with service providers to manage the different D2D services.

Nevertheless, device-to-device networks allow devices to adhere to the communications protocol of choice to exchange messages and information, as mentioned earlier. Exemplary applications are those with typically low data rate requirements that may only be required to transmit small packets of information between devices. These could be garage door opener systems, lighting systems, and home or commercial security systems.

One challenge with many device-to-device systems, however, is that systems oftentimes use device-specific data communications. This requires various vendors to implement the same functionality across product lines and requires capital investment in development and ongoing support for these specific data formats. This contrasts with the use of standardized data formats across vendors and products. As a result underlying communications protocols may not be compatible resulting in a silo effect across vendor offerings. An example of this would be a family of Zigbee devices within a given product offering that would not be compatible with Z-Wave based devices, and vice versa.

6.2 Device-to-Cloud Communications

In contrast to the device-to-device communications model discussed earlier, device-to-cloud communications incorporate cloud compute nodes as part of the communications network. In the device-to-cloud communications model the device itself connects directly to an Internet cloud service or server, such as an application server. This cloud server facilitates the exchange of data and control of communications. As can be imagined, these types of deployment typically make use of more traditional communications technologies such as Wi-Fi connections and Ethernet.

Fig. 14 illustrates a device-to-cloud communications system whereby multiple sensors are in communication with a cloud service provider or compute node. One real-world example of this might be a manufacturing floor whereby each of the sensors is a thermostat at different locations within the manufacturing facility. Each of the thermostats is in communication with a cloud-based application server whereby facility operations can view the temperature data of the manufacturing facility via various interfaces. As can be seen in the figure there are several mechanisms by which the thermostats may communicate with the cloud server such as HTTP, TCP, UDP, CoAP, and so forth.
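
As an illustration of the thermostat example, the sketch below shows how a device might package a temperature reading and send it directly to a cloud endpoint over UDP, one of the transports named above. The JSON field names, the sensor identifier, and the choice of JSON itself are assumptions made for this sketch; a real deployment would follow whatever format its cloud service defines.

```python
import json
import socket


def encode_reading(sensor_id: str, temperature_c: float, timestamp_s: int) -> bytes:
    """Serialize one thermostat reading as a compact UTF-8 JSON datagram."""
    message = {
        "sensor_id": sensor_id,
        "temperature_c": temperature_c,
        "timestamp_s": timestamp_s,
    }
    return json.dumps(message, separators=(",", ":"), sort_keys=True).encode("utf-8")


def decode_reading(datagram: bytes) -> dict:
    """Inverse of encode_reading, as the cloud side might apply it."""
    return json.loads(datagram.decode("utf-8"))


def send_reading_udp(datagram: bytes, host: str, port: int) -> None:
    """Send the datagram to the given host and port; UDP gives no delivery guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(datagram, (host, port))


# Hypothetical reading from one thermostat on the manufacturing floor.
datagram = encode_reading("thermostat-7", 21.5, 1_700_000_000)
```

A call such as `send_reading_udp(datagram, host, port)` would then deliver the reading to an application server of the operator's choosing. UDP keeps the device side small, at the cost of leaving retries and acknowledgments to the application.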

Examples of a device-to-cloud communications model can be found in numerous consumer electronics devices such as modern smart televisions, smart thermostat devices, and certain smart speaker devices. One application of these device-to-cloud communication devices is to transmit data collected at the device to a cloud server, which in turn analyzes the data to determine certain metrics. These might include home temperature, lighting usage, home access patterns, and energy consumption. Since intelligence is performed on a cloud-based application server this also allows the user a number of different ways to observe these data such as via a traditional Web interface, a mobile phone app, mobile browser, or tablet computer.

Like the device-to-device communication model above, however, interoperability can also be an issue when trying to connect device-to-cloud services and devices from different manufacturers. Oftentimes, the device and cloud service are from the same manufacturer such as a smart thermostat manufacturer. If a manufacturer uses proprietary data protocols or web interfaces, then a consumer is often locked into a given manufacturer’s product line. By creating a barrier to exit for the consumer or barrier to integration of other manufacturers’ devices a given product manufacturer can increase the certainty of locking in a customer.

6.3 Device-to-Gateway (Fog) Computing Communications

Up to now we have considered point-to-point communications models whereby either a given device communicates directly with other devices as a standalone configuration or with network assistance, or a given device communicates directly with a cloud server itself. Device to gateway adds another layer to the communications and network architecture. In this model of communications the device itself does not connect directly to a cloud application service, but rather talks to a gateway or fog compute node as an intermediary. In turn this gateway node will then itself communicate directly with a cloud-based application server as a proxy for the devices themselves. This provides several benefits to system developers, as will be seen below.

Since the gateway itself hosts application software that operates locally, it can act as an intermediary between cloud-computing services and device services. The gateway node can perform several different services such as security, data translation, or protocol translation. In addition, it is increasingly common for the gateway itself to contain business logic operations, thereby removing the requirement for the devices themselves to communicate all the way to a remote cloud service provider. Fig. 15 illustrates an example of a device-to-gateway communication path.

As can be seen in the figure, the sensors themselves talk to a local gateway node. They may communicate by any number of means, such as HTTP, TCP, UDP, and so forth (as described in Fig. 15). The local gateway node in turn communicates with the cloud service provider, cloud node, or application server using standard IPv4 or IPv6. The wireless connectivity used to pair a given sensor to the gateway can be Wi-Fi, Bluetooth, or other means. The local gateway node itself may perform a number of different functions such as data formatting and conversion, various application or business logic, and other functionality.
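
The gateway functions just listed (data conversion, local business logic, and forwarding on behalf of the devices) can be sketched as a small class. This is an illustrative model only: the `Gateway` class, its method names, the unit codes, and the alert threshold are all hypothetical, and the upload to the cloud is represented by a returned summary rather than a real network call.

```python
from statistics import mean


class Gateway:
    """Toy gateway: normalizes units, applies a local rule, batches data for the cloud."""

    def __init__(self, alert_threshold_c: float):
        self.alert_threshold_c = alert_threshold_c
        self._readings = {}  # sensor_id -> list of temperatures in Celsius

    def ingest(self, sensor_id: str, value: float, unit: str) -> bool:
        """Store one reading; return True if it trips the local alert rule."""
        # Data translation: devices may report in different units.
        if unit == "C":
            celsius = value
        elif unit == "F":
            celsius = (value - 32) * 5 / 9
        else:
            raise ValueError(f"unsupported unit: {unit!r}")
        self._readings.setdefault(sensor_id, []).append(celsius)
        # Local business logic: decide on an alert without a cloud round trip.
        return celsius > self.alert_threshold_c

    def flush_summary(self) -> dict:
        """Build the compact per-sensor summary to forward upstream, then reset."""
        summary = {
            sensor_id: {
                "count": len(values),
                "mean_c": round(mean(values), 2),
                "max_c": round(max(values), 2),
            }
            for sensor_id, values in self._readings.items()
        }
        self._readings.clear()
        return summary
```

Only the summary travels to the cloud, so the devices never need a direct Internet connection and the volume of upstream traffic stays small.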

The model illustrated in Fig. 15 is currently common to a number of consumer electronics devices. For example, with some products the local gateway device is actually a modern smartphone capable of Bluetooth or other technology. An app would run on the smartphone that may pair with other consumer devices like a smart watch. In many cases the smart watch itself does not have the built-in capability to connect to a local Wi-Fi connection or cellular network, as this would commonly increase the cost of the device’s bill of materials, increase price, or have an adverse effect on power consumption. Instead, the smart watch would pair to the local gateway or smartphone and the smartphone in turn would operate as the intermediary local gateway facilitating the connectivity of the smart watch to the cloud server. Other examples of this communication topology are certain home security systems that connect to Internet services that may use Z-Wave or Zigbee technology for connectivity between devices and local gateways. As will be discussed in greater detail later in this chapter the addition of a local gateway device brings enhanced system flexibility, but at the cost of increased development resources, system complexity, and cost.

6.4 Back End Data-Sharing Model

While this chapter has largely focused on models where sensor data are collected and processed throughout the network for a given IoT application, there are also data models in which data can be shared across IoT applications as well as entities and management systems. One term for this type of data sharing is back end data sharing. Back end data sharing refers to a data model whereby the system architecture supports the export of data for consumption by other systems. That is to say, application data for a given IoT application or infrastructure can be shared outside a given use case or organization. For example, data could be collected at the sensor level, potentially preprocessed or postprocessed, and ultimately made available as data objects at the cloud or application server level. The data can then be consumed by other users or management systems and combined with yet other third-party data sets to perform various analyses. In summary, the system architecture has been set up with the goal of granting third parties access to uploaded cloud-based data.

One use case might be a large hospital system, perhaps spread across multiple geographic locations, comprising HVAC systems, various lighting systems, automated control, and perhaps asset-tracking systems based on RFID or other enabling technology. In the traditional device-to-cloud architecture or even device-to-fog-to-cloud architecture the data collected within the system will sit on a given server or a series of servers that support the underlying architecture. In this case the data are often walled off from consumption by third-party applications, either because of limited connectivity to the server holding the data or because the data aren’t shared in a normalized or meaningful manner (data format, UI, etc.). By designing a data-sharing model such data can be readily accessed and analyzed by the organization at the cloud level using an assortment of modern graphical and analytical tools, and can be parsed and consumed across the enterprise and across the various types of sensing devices and infrastructure deployed within the enterprise. In addition, the data can be packaged or made accessible via various mechanisms and APIs for consumption by third-party organizations or services. This allows the breakdown of what are commonly referred to as silos within IoT. It is important to note, however, that these are data silos rather than development silos, which are addressed later in this chapter.

Fig. 16 illustrates what a data-sharing diagram for the facility-based use case described above might look like. Various HVAC sensor data as well as potential RFID tracking data can be collected and aggregated for a given facility. The data collected across the various sensors and solutions can be hosted at application service provider A. At the same time such data may also be made accessible to application service providers B and C for further consumption and postprocessing. This use case will be discussed further in the next section on data analytics.
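
One way to picture the normalization that back end data sharing depends on is to map records from different subsystems onto a single export schema. The sketch below does this for HVAC and RFID records; every field name, both input record layouts, and the shared schema itself are hypothetical and stand in for whatever formats a given deployment actually uses.

```python
import json


def normalize_hvac(record: dict) -> dict:
    """Map a hypothetical HVAC record onto the shared export schema."""
    return {
        "source": "hvac",
        "entity_id": record["unit"],
        "timestamp_s": record["ts"],
        "measurement": "temperature_c",
        "value": record["temp_c"],
    }


def normalize_rfid(record: dict) -> dict:
    """Map a hypothetical RFID sighting onto the same schema."""
    return {
        "source": "rfid",
        "entity_id": record["tag"],
        "timestamp_s": record["seen_at"],
        "measurement": "location",
        "value": record["reader_zone"],
    }


def export_shared(hvac_records, rfid_records) -> str:
    """Merge both feeds into one time-ordered JSON document for other providers."""
    rows = [normalize_hvac(r) for r in hvac_records]
    rows += [normalize_rfid(r) for r in rfid_records]
    rows.sort(key=lambda row: row["timestamp_s"])
    return json.dumps(rows)


# Example export that application service providers B and C could consume.
exported = export_shared(
    [{"unit": "ahu-1", "ts": 20, "temp_c": 22.0}],
    [{"tag": "pump-9", "seen_at": 10, "reader_zone": "ward-3"}],
)
```

Because every row carries the same keys regardless of its origin, a third party can consume the export without knowing how each sensing subsystem stores its data internally.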

7 Data Analytics

As many readers are probably aware the term “big data” has frequently been used in the literature. Big data can simply be thought of as large data sets of information that can be collected and analyzed for varying purposes. The types of data collected can often be categorized and characterized by the volume of data that is being collected, the variety of data being collected, and the velocity at which the data are collected. That said, there is no shortage of opportunities to analyze and use the vast amounts of data collected in modern, and especially future-facing, IoT applications. Simply envisioning use cases, such as deployments for intelligent transportation, power grid, energy and smart metering, health care and smart cities, immediately brings to mind the scale and speed at which data will be generated via the various types of systems described in this chapter.

In general terms IoT analytics can be thought of as the various steps taken by a system, either in real time or offline, in conjunction with analysts and automation, whereby a variety of IoT data are examined to reveal trends. These steps may range from simple analysis of the raw data at a sensor collection point to the revealing of underlying trends, unseen patterns, correlations, and other new secondary information, with the goal of enabling businesses, data miners, and data scientists to make efficient and well-informed decisions.

As most readers will be aware many techniques for data analysis have been developed for both application-specific domains and more generalized use cases. IoT, however, is quite a bit different. Rather than having normalized and regular data sets to work with IoT data characteristics vary in a number of important ways. Data collected within a given system may vary due to the sensors used and various solutions used within the infrastructure. This can result in highly heterogeneous data, noise in the sampled data, variety, and unforeseen and rapid growth in data consumption and analysis requirements.

7.1 IoT and Analytics/Big Data

The volume and rate at which data are generated by various sensors, devices, health care applications, temperature systems, and myriad other applications and services are ever increasing. This will only continue with the rollout of additional IoT applications and systems. At the same time the data continuously generated are oftentimes unstructured or semi-structured. As such, traditional database systems either cannot meet these demands or are prohibitively inefficient at storing, processing, and analyzing rapidly growing amounts of data.

For data miners and scientists to be able to analyze these types of data at large volumes and with reasonable processing times, analysts require tools and technologies that enable them rather than hinder them. These tools must be able to transform a vast amount of structured, unstructured, and semi-structured data into more easily comprehensible data and metainformation. It is not enough for these tools to simply analyze and format data, however; they must also be able to present their findings visually as tables, graphics, and spatial charts to support proper decision making within the organization. The integration of disparate data systems for comparison and analysis, similar to the back end data-sharing model described previously, must also be made feasible.

7.2 Analytical Systems for Internet of Things

When analyzing the data collected via a given IoT system oftentimes different types of systems must be employed according to the requirements and characteristics of the application. These different types of analysis can be characterized by their footprint, timing requirements, and end goals to name a few examples. These can be characterized roughly as real-time systems, postprocessing systems, in-memory systems, business intelligence, and large scale.

| Type of Analysis | Characteristics |
|---|---|
| Real time | This is often performed on data that are collected from sensors themselves. The data have the potential to change over time, often quite rapidly. To this end rapid analytics are required to process the data in real time. One advantage of these types of solutions is that they can benefit from parallel processing implementations. |
| Memory level | These are analytics solutions that are capable of processing data sets wherein the size of the data is smaller than that of the memory available on the cluster or compute node. At the time of writing compute clusters can often be at the terabyte scale. These types of solutions may or may not be capable of real-time processing and analysis. Similarly, these solutions can also provide the addition of real-time processing capability. |
| Postprocessing | Postprocessing or offline analytics solutions are attractive when a real-time response is not required. Systems such as Hadoop are capable of performing these offline analyses. One advantage of these types of solutions is that they can provide efficient data acquisition and reduce the cost of data format conversions for subsequent analysis. |
| Business intelligence | Business intelligence–style analytics can be adopted when the size of data sets is beyond that of the memory level of the cluster itself. In this case data may be imported into the system itself for processing. These systems currently support terabyte-level data sets and can be used to discover business opportunities from the vast sets of data. Oftentimes these solutions can be used in both offline and online modes of operation. |
| Large scale | These types of analysis solutions are employed when the size of the data to be analyzed exceeds the maximum capacity of business intelligence products and/or traditional database solutions. They often use distributed file systems for data storage and map reduce-type technologies for analysis. Large-scale solutions, like those described above, are most often only available in nonreal-time and offline modes of operation. |

8 Internet of Things Development Challenges

IoT development can often be thought of as occurring in silos. This is true in a number of respects, including not only data collection and storage but also the technology development process itself. Unlike other areas of computing, such as general-purpose or mobile application development, IoT development is much more heterogeneous in nature. Rather than being confined to a mobile app UI or a business logic application, end-to-end IoT application and system development crosses a number of heterogeneous areas of computing. These can include, but are not limited to, security, general-purpose or high-performance computing, Linux-based application development, development on real-time operating systems, and even true embedded systems development that requires a deep understanding of the underlying processor and peripheral architecture.

This creates a siloed development environment in which engineers and practitioners who work on a given part of the system may not have sufficient understanding or the skill sets to work on other parts of the system. For example, a mobile app developer or UI designer likely does not have the skill set to do edge-of-network layer development in the C or C++ programming languages. Similarly, it is widely known that there is an ever-increasing shortage of embedded engineers available for edge-of-network and embedded device development. The development processes for embedded firmware and cloud applications have taken largely divergent paths. Embedded development has stayed close to the metal, focusing on coping with extremely limited computing resources. Cloud development has raced toward frameworks and abstractions that eliminate the individual hardware nodes as a developer concern and try to enable transparent access to computing resources limited only by budget.

This section characterizes some key traits of system development that managers and system designers should be mindful of not only when ramping teams, but also in terms of the skill sets required for ongoing development and maintenance of legacy systems.

8.1 Cloud-Computing Development

Cloud computing and application development on high-performance, high-resource computers is alive and well. The rise over the last decade of industrial-grade cloud service providers such as Microsoft Azure and Amazon Web Services, along with the related solutions such vendors offer, is evidence of this. Cloud-computing developers are used to rich development environments, highly powerful integrated development environments, and high-level languages that may be interpreted or scripting-based. Similarly, myriad development frameworks are available to accelerate development and ease the burden faced by application developers. Rich libraries with very large memory and compute requirements may be used without generally affecting overall system performance. As mentioned earlier, the price of cloud computing is quite low with various commercial vendors, and hence the provisioning of additional hardware, memory, and operating systems is not usually of huge concern when resources do become limited or underperforming.

The developers of these applications often rely on multigigahertz CPU speeds, gigabytes of RAM, and terabytes of disk space. Networking is reliable, fast, and relatively cheap. In addition, more compute nodes can usually be provisioned in a matter of minutes with command line tools or the push of a button on a Web UI. In fact, it is not unrealistic to assume that the application developers are often largely unaware of the underlying hardware architecture that they are developing for. As bare metal machines gave way to virtual machines, which in turn are giving way to containers, software components are built to communicate via lightweight APIs and message buses that eliminate developer considerations of where or how much of a component is deployed. In this space the concept of fixed storage, finite compute cycles, hardware failures, and all the concerns of deploying to physical computers are abstracted away. Developers are allowed to think purely in terms of data flows through the application, communication over invisibly fast network meshes, and storage in limitless reliable data pools.

8.2 Embedded Device Development

Embedded development and design, especially when considering embedded device nodes acting as agents for powerful cloud software, is a markedly different animal from the above characterizations of cloud computing. Embedded developers often develop in far more resource-constrained environments. Chapter 2, titled “Development Process,” gives a good overview of the application development cycle for embedded systems.

Here developers often do not have the robust integrated development tools that are afforded to other areas of computing. The system being developed may be as little as a bare metal MCU with only a few kilobytes of program memory. There may be hardware assists for things like computational acceleration or direct memory transfer, but these are accessed at a very low level, often using complex data structures and memory-mapped registers. In contrast to developers targeting the runtime of a cloud server, embedded application developers must possess an understanding of the minute details of the underlying architecture.
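
To make the idea of memory-mapped register access concrete, the following is a minimal sketch in C. The peripheral, its register layout, the bit assignments, and the names `uart_regs_t`, `uart_enable`, and `uart_try_send` are all hypothetical and chosen only for illustration; a real device defines its own layout in its datasheet. The functions take the register block as a pointer so that the same code can be exercised against ordinary RAM on a host machine.

```c
#include <stdint.h>

/* Hypothetical register layout for an illustrative UART peripheral.
   Real devices define their own offsets and bit meanings. */
typedef struct {
    volatile uint32_t status;   /* offset 0x00: status flags  */
    volatile uint32_t control;  /* offset 0x04: control bits  */
    volatile uint32_t data;     /* offset 0x08: transmit data */
} uart_regs_t;

#define UART_STATUS_TX_READY  (1u << 0)  /* hypothetical bit assignment */
#define UART_CONTROL_ENABLE   (1u << 0)  /* hypothetical bit assignment */

/* On hardware, the pointer would be a fixed address taken from the
   datasheet and cast to uart_regs_t *. Passing it in as a parameter
   keeps this sketch testable off-target. */
void uart_enable(uart_regs_t *uart)
{
    /* Read-modify-write so that other control bits are preserved. */
    uart->control |= UART_CONTROL_ENABLE;
}

/* Writes one byte if the transmitter is ready.
   Returns 0 on success, -1 if the transmitter is busy. */
int uart_try_send(uart_regs_t *uart, uint8_t byte)
{
    if ((uart->status & UART_STATUS_TX_READY) == 0u) {
        return -1;
    }
    uart->data = byte;
    return 0;
}
```

The `volatile` qualifier tells the compiler that each access has a side effect and must not be cached or optimized away, which is what makes a plain structure usable as a view onto hardware registers.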

The system may run a variant of embedded Linux or a real-time operating system, but it is entirely possible that it runs neither. While there are an increasing number of development tool chains available for the embedded developer, assembly language is still used in certain cases. C and C++ may be the more desirable programming languages when available, whether due to system resources, vendor tool chain, memory, or performance considerations. Even in this case, however, memory resources and compute resources may severely constrain how the development works. If the device has no underlying floating-point hardware, for example, computation may need to be done with fixed-point or saturating arithmetic. This often requires developers to understand proprietary intrinsic functions for the device. Memory alignment, real-time compute deadlines, and other aspects further burden the developer.
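
As an illustration of fixed-point and saturating arithmetic, the following is a minimal sketch in portable C using the common Q15 format, in which a 16-bit signed integer represents a value in the range [-1.0, 1.0). The type and function names (`q15_t`, `q15_add_sat`, `q15_mul_sat`) are assumptions made for this example. On a real device, vendor intrinsics or DSP instructions would typically replace these routines.

```c
#include <stdint.h>

/* Q15 format: a 16-bit signed integer interpreted as value / 32768. */
typedef int16_t q15_t;

/* Clamp a 32-bit intermediate result into the 16-bit Q15 range. */
static q15_t q15_saturate(int32_t x)
{
    if (x > INT16_MAX) return INT16_MAX;
    if (x < INT16_MIN) return INT16_MIN;
    return (q15_t)x;
}

/* Saturating add: overflow clamps to the limit instead of wrapping. */
q15_t q15_add_sat(q15_t a, q15_t b)
{
    return q15_saturate((int32_t)a + (int32_t)b);
}

/* Saturating multiply: widen to 32 bits, round, then rescale by 2^15.
   Assumes that right-shifting a negative value is an arithmetic shift,
   which holds on mainstream compilers but is implementation-defined. */
q15_t q15_mul_sat(q15_t a, q15_t b)
{
    int32_t product = (int32_t)a * (int32_t)b;  /* Q30 intermediate */
    product += 1 << 14;                         /* round to nearest */
    return q15_saturate(product >> 15);         /* back to Q15      */
}
```

For example, `q15_mul_sat(16384, 16384)` multiplies 0.5 by 0.5 and yields 8192, which is 0.25 in Q15, while `q15_add_sat(30000, 10000)` clamps to 32767 rather than wrapping around to a negative value.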

8.3 Integration of Development Silos

With the future of embedded computing and cloud software working together in tandem, program managers and developers alike must be mindful of the human resources and capital required for building and deploying these solutions. High-level cloud developers likely do not possess intricate knowledge of embedded hardware and often lack the programming skills required for these systems. Similarly, embedded developers more than likely are not aware of the high-level development frameworks and tools rapidly emerging for cloud development. Care must be taken to align the many moving parts for unified, heterogeneous development of these systems if teams are to deploy these applications successfully in the future.

The integration of silos goes far beyond just the development for a given target node, however. The various communications channels between devices must be accounted for, as must the maintenance of the infrastructure against which a given IoT application is deployed. In addition, security configuration information and the security layers themselves must be continuously maintained throughout the life cycle of the application’s deployment. When the application code, security layers, or configurations for one compute node within an IoT application are updated, they must not only be tested and validated against the other software and systems enabling the application, but also deployed. This iterative deployment cycle, often comprising multiple interconnected software modules and layers, some proprietary and some third-party or open source, must be accounted for across development teams and enterprises.

Exercises

1. Q: What are the three types of architectural configurations that have evolved over time?

   A: Cloud (centralized server), fog (regional servers), and edge (on the device).

2. Q: Why is decision making on the edge better than in the cloud or fog?

   A: Because it is faster and enables more real-time decision making.

3. Q: What KPIs do IoT devices typically contribute positively to in a factory setting?

   A: Quality, efficiency, and safety.