Chapter 4. Code Review with a Tool[1]

![The software security touchpoints (code review)](http://imgdetail.ebookreading.net/software_development/21/0321356705/0321356705__software-security-building__0321356705__graphics__105fig01.gif)

[1] Parts of this chapter appeared in original form in IEEE Security & Privacy magazine coauthored with Brian Chess [Chess and McGraw 2004].

Debugging is at least twice as hard as programming. If your code is as clever as you can possibly make it, then by definition you’re not smart enough to debug it.

--BRIAN KERNIGHAN

All software projects are guaranteed to have one artifact in common—source code. Because of this basic guarantee, it makes sense to center a software assurance activity around code itself. Plus, a large number of security problems are caused by simple bugs that can be spotted in code (e.g., a buffer overflow vulnerability is the common result of misusing various string functions including strcpy() in C). In terms of bugs and flaws, code review is about finding and fixing bugs. Together with architectural risk analysis (see Chapter 5), code review for security tops the list of software security touchpoints. In this chapter, I describe how to automate source code security analysis with static analysis tools.
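To make the strcpy() example concrete, here is a minimal sketch. The function names and the 16-byte buffer are illustrative assumptions, not code from any particular program. The first function shows the vulnerable pattern; the second shows one bounded alternative, using snprintf() so that truncation can be detected rather than silently overflowing.

```c
#include <stdio.h>
#include <string.h>

#define NAME_LEN 16 /* illustrative buffer size */

/* Vulnerable: strcpy() keeps copying until it reaches a NUL byte, so an
 * input of NAME_LEN or more characters writes past the end of `name`. */
void greet_unsafe(const char *input) {
    char name[NAME_LEN];
    strcpy(name, input);
    printf("hello, %s\n", name);
}

/* Bounded alternative: snprintf() writes at most dst_size bytes, including
 * the terminating NUL, and returns the length it would have written, which
 * makes truncation detectable. Returns 0 on success, -1 on truncation or
 * error. */
int copy_name(char *dst, size_t dst_size, const char *input) {
    int n = snprintf(dst, dst_size, "%s", input);
    if (n < 0 || (size_t)n >= dst_size)
        return -1;
    return 0;
}
```

Only copy_name() is meant to be called; greet_unsafe() exists to show the pattern a security rule should flag.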

Using a tool makes sense because code review is boring, difficult, and tedious. Analysts who practice code review often are very familiar with the “get done, go home” phenomenon described in Building Secure Software [Viega and McGraw 2001]. It is all too easy to start a review full of diligence and care, cross-referencing definitions and variable declarations, and end it by giving function definitions (and sometimes even entire pages of code) only a cursory glance.

Instead of focusing on descriptions and discussions of processes for generic code review or code inspection in this chapter, I refer the reader to the classic texts on the subject [Fagan 1976; Gilb and Graham 1993]. This chapter assumes that you know something about manual code review. If you don’t, take a quick look at Tom Gilb’s Web site <http://www.gilb.com/> before you continue.

Programmers make little mistakes all the time—a missing semicolon here, an extra parenthesis there. Most of the time, such gaffes are inconsequential; the compiler notes the error, the programmer fixes the code, and the development process continues. This quick cycle of feedback and response stands in sharp contrast to what happens with most security vulnerabilities, which can lie dormant (sometimes for years) before discovery. The longer a vulnerability lies dormant, the more expensive it can be to fix. Adding insult to injury, the programming community has a long history of repeating the same security-related mistakes.

One of the big problems is that security is not yet a standard part of the programming curriculum. You can’t really blame programmers who introduce security problems into their software if nobody ever told them what to avoid or how to build secure software. Another big problem is that most programming languages were not designed with security in mind. Unintentional (mis)use of various functions built into these languages leads to very common and often exploited vulnerabilities.

Creating simple tools to help look for these problems is an obvious way forward. The promise of static analysis is to identify many common coding problems automatically, before a program is released.

Static analysis tools (also called source code analyzers) examine the text of a program statically, without attempting to execute it. Theoretically, they can examine either a program’s source code or a compiled form of the program to equal benefit, although the problem of decoding the latter can be difficult. We’ll focus on source code analysis in this chapter because that’s where the most mature technology exists (though see the box Binary Analysis?!).

Manual auditing of the kind covered in Tom Gilb’s work is a form of static analysis. Manual auditing is very time consuming, and to do it effectively, human code auditors must first know how security vulnerabilities look before they can rigorously examine the code. Static analysis tools compare favorably to manual audits because they’re faster, which means they can evaluate programs much more frequently, and they encapsulate security knowledge in a way that doesn’t require the tool operator to have the same level of security expertise as a human auditor. Just as a programmer can rely on a compiler to enforce the finer points of language syntax consistently, the operator of a good static analysis tool can successfully apply that tool without being aware of the finer points of security bugs.

Testing for security vulnerabilities is complicated by the fact that they often exist in hard-to-reach states or crop up in unusual circumstances. Static analysis tools can peer into more of a program’s dark corners with less fuss than dynamic analysis, which requires actually running the code. Static analysis also has the potential to be applied before a program reaches a level of completion at which testing can be meaningfully performed. The earlier security risks are identified and managed in the software lifecycle, the better.

No individual touchpoint or tool can solve all of your software security problems. Static analysis tools are no different. For starters, static analysis tools look for a fixed set of patterns, or rules, in the code. Although more advanced tools allow new rules to be added over time, if a rule hasn’t been written yet to find a particular problem, the tool will never find that problem. When it comes to security, what you don’t know is pretty darn likely to hurt you, so beware of any tool that says something like, “Zero defects found, your program is now secure.” The appropriate output is, “Sorry, couldn’t find any more bugs.”

A static analysis tool’s output still requires human evaluation. There’s no way for any tool to know automatically which problems are more or less important to you, so there’s no way to avoid trawling through the output and making a judgment call about which issues should be fixed and which ones carry an acceptable level of risk. Plus, knowledgeable people still need to get a program’s design right to avoid any flaws. Static analysis tools can find bugs in the nitty-gritty details, but they can’t even begin to critique design. Don’t expect any tool to tell you, “I see you’re implementing a funds transfer application. You should tighten up the user password requirements.”

Finally, there’s computer science theory to contend with. Rice’s theorem,[2] which says (in essence) that any nontrivial question you care to ask about a program can be reduced to the halting problem,[3] applies in spades to static analysis tools. In scientific terms, static analysis problems are undecidable in the worst case. The practical ramifications of Rice’s theorem are that all static analysis tools are forced to make approximations and that these approximations lead to less-than-perfect output.

Static analysis tools suffer from false negatives (in which the program contains bugs that the tool doesn’t report) and false positives (in which the tool reports bugs that the program doesn’t really contain). False positives cause immediate grief to any analyst who has to sift through them, but false negatives are much more dangerous because they lead to a false sense of security.

A tool is sound if, for a given set of assumptions, it produces no false negatives. Unfortunately, the downside to always erring on the side of caution is a potentially debilitating number of false positives. The static analysis crowd jokes that too high a percentage of false positives leads to 100% false negatives because that’s what you get when people stop using a tool. A tool is unsound if it tries to reduce false positives at the cost of sometimes letting a false negative slip by. Most commercial tools these days are unsound.

The first code scanner built to look for security problems in code was Cigital’s ITS4 <http://www.cigital.com/its4/>.[4] Since ITS4’s release in early 2000, the idea of detecting security problems by looking over source code with a tool has come of age. Much better approaches exist and are being rapidly commercialized.

ITS4 and its counterparts RATS <http://www.securesoftware.com> and Flawfinder <http://www.dwheeler.com/flawfinder/> are extremely simple—the tools scan through a file (lexically), looking for syntactic matches based on a number of simple “rules” that might indicate possible security vulnerabilities. One such rule might be “use of strcpy() should be avoided,” which can be applied by looking through the software for the pattern “strcpy” and alerting the user when and where it is found. This is obviously a simple-minded approach that is often referred to with the derogatory label “glorified grep.”[5]

The best thing about ITS4 and company was that creating them involved gathering and publishing a preliminary set of software security rules all in one place. When we released the tool (as open source), our hope was that the world would participate in helping to gather and improve the ruleset. Though over 15,000 people downloaded ITS4 in the first year it was out, we never received even one rule to add to its knowledge base. The world did not end, however, and a number of prominent commercial efforts to build up and evolve rulesets were undertaken. Appendix B describes a very basic set of software security rules (those included in ITS4) to serve as part of a minimum set of security rules that every static analysis tool should cover.

Worth mentioning is the fact that ITS4 and friends were never intended to be “push the button, see the bug” kinds of tools. The basic idea was instead to turn an impossible problem (remembering all those rules while doing manual code review) into a really hard one (figuring out whether the things flagged by the tool matter or not). Simple tools like ITS4 help you carry out a source code security review, but they certainly don’t do it for you. The same can be said for modern tools, though they definitely make things much easier than the first-generation tools did.

Probably the simplest and most straightforward approach to static analysis is the UNIX utility grep—the same functionality you find implemented in the earliest tools such as ITS4. Armed with a list of good search strings, grep can reveal a lot about a code base. The downside is that grep is rather lo-fi because it doesn’t understand anything about the files it scans. Comments, string literals, declarations, and function calls are all just part of a stream of characters to be matched against.

You might be amused to note that using grep to search code for words like “bug,” “XXX,” “fix,” “here,” and best of all “assume” often reveals interesting and relevant tidbits. Any good security source code review should start with that.
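A “glorified grep” takes only a few lines of C. In this sketch, the function name naive_scan() and its pattern list (two risky calls plus two of the comment markers just mentioned) are illustrative assumptions. Because it matches raw substrings, it shares grep’s blind spot: it cannot tell a call from a comment or from part of a longer identifier.

```c
#include <stddef.h>
#include <string.h>

/* Illustrative patterns: two risky calls plus two "tidbit" markers. */
static const char *const PATTERNS[] = { "strcpy", "gets", "XXX", "assume" };

/* Count every non-overlapping substring occurrence of every pattern in
 * `text`. Like grep, this treats source code as a flat stream of
 * characters, with no notion of comments, literals, or identifiers. */
size_t naive_scan(const char *text) {
    size_t hits = 0;
    for (size_t i = 0; i < sizeof PATTERNS / sizeof PATTERNS[0]; i++) {
        size_t len = strlen(PATTERNS[i]);
        for (const char *p = strstr(text, PATTERNS[i]); p != NULL;
             p = strstr(p + len, PATTERNS[i]))
            hits++;
    }
    return hits;
}
```

Given a buffer holding a gets() call, a comment that mentions gets, and an identifier named begetsNextChild, naive_scan() reports three hits, although only one of them is a real call.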

Better fidelity requires taking into account the lexical rules that govern the programming language being analyzed. By doing this, a tool can distinguish between a vulnerable function call:

gets(buf);

a comment:

/* never ever call gets */

and an innocent and unrelated identifier:

int begetsNextChild = 0;
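The difference lexical analysis makes can be sketched with a deliberately small C tokenizer. This is not how ITS4, Flawfinder, or RATS are actually implemented; the function lexical_scan() is hypothetical. It skips comments and string or character literals, matches identifiers whole, and counts a match only when the identifier is followed by an opening parenthesis.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Count calls to `name` using a minimal C lexer. Comments and string or
 * character literals are skipped, identifiers are matched whole, and a
 * match counts only if the next non-space character is '('. This is a
 * sketch: preprocessor directives, numeric literals, and line splices
 * get no special treatment. */
size_t lexical_scan(const char *src, const char *name) {
    size_t hits = 0;
    size_t name_len = strlen(name);
    const char *p = src;

    while (*p) {
        if (p[0] == '/' && p[1] == '*') {                 /* block comment */
            const char *end = strstr(p + 2, "*/");
            p = end ? end + 2 : p + strlen(p);
        } else if (p[0] == '/' && p[1] == '/') {          /* line comment */
            while (*p && *p != '\n')
                p++;
        } else if (*p == '"' || *p == '\'') {             /* literal */
            char quote = *p++;
            while (*p && *p != quote) {
                if (*p == '\\' && p[1])
                    p++;                                   /* skip escape */
                p++;
            }
            if (*p)
                p++;                                       /* closing quote */
        } else if (isalpha((unsigned char)*p) || *p == '_') {  /* identifier */
            const char *start = p;
            while (isalnum((unsigned char)*p) || *p == '_')
                p++;
            size_t len = (size_t)(p - start);
            if (len == name_len && strncmp(start, name, len) == 0) {
                const char *q = p;
                while (isspace((unsigned char)*q))
                    q++;
                if (*q == '(')
                    hits++;
            }
        } else {
            p++;
        }
    }
    return hits;
}
```

Run over the three lines above, lexical_scan(src, "gets") reports a single hit where substring matching would report three. A call spelled out inside a string literal, such as "call gets(x) here", is ignored as well.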

As mentioned earlier, basic lexical analysis is the approach taken by early static analysis tools, including ITS4, Flawfinder, and RATS—all of which preprocess and tokenize source files (the same first steps a compiler would take) and then match the resulting token stream against a library of vulnerable constructs. Earlier, Matt Bishop and Mike Dilger built a special-purpose lexical analysis tool specifically to identify time-of-check–time-of-use (TOCTOU) flaws [Bishop and Dilger 1996].
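The classic TOCTOU flaw is a check on a file name followed by a separate use of the same name. The POSIX C sketch below (function names are illustrative) shows the pattern and one common mitigation: open the file once, then ask questions about the open descriptor with fstat(). The mitigation does not reproduce access()’s real-user-ID check for setuid programs; temporarily dropping privileges, the usual remedy in that setting, is omitted here.

```c
#define _POSIX_C_SOURCE 200809L /* for O_NOFOLLOW */
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>

/* Vulnerable (TOCTOU): the check and the use both name the file by path,
 * so an attacker who swaps `path` (for example, for a symlink) between
 * access() and open() gets the program to open a file it never checked. */
int open_checked_racy(const char *path) {
    if (access(path, R_OK) != 0) /* time of check */
        return -1;
    return open(path, O_RDONLY); /* time of use */
}

/* Mitigation: open once, then inspect the descriptor. fstat() describes
 * the object actually opened, not whatever the path names now. O_NOFOLLOW
 * refuses a symbolic link in the last path component. The illustrative
 * policy here is "regular files only". */
int open_regular_file(const char *path) {
    int fd = open(path, O_RDONLY | O_NOFOLLOW);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) != 0 || !S_ISREG(st.st_mode)) {
        close(fd);
        return -1;
    }
    return fd;
}
```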

While lexical analysis tools are certainly a step up from grep, they produce a hefty number of false positives because they make no effort to account for the target code’s semantics. A stream of tokens is better than a stream of characters, but it’s still a long way from understanding how a program will behave when it executes. Although some security defect signatures are so strong that they don’t require semantic interpretation to be identified accurately, most are not so straightforward.

To increase precision, a static analysis tool must leverage more compiler technology. By building an abstract syntax tree (AST) from source code, such a tool can take into account the basic semantics of the program being evaluated.

Armed with an AST, the next decision to make involves the scope of the analysis. Local analysis examines the program one function at a time and doesn’t consider relationships between functions. Module-level analysis considers one class or compilation unit at a time, so it takes into account relationships between functions in the same module and considers properties that apply to classes, but it doesn’t analyze calls between modules. Global analysis involves analyzing the entire program, so it takes into account all relationships between functions.
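A small example shows why scope matters. In the hypothetical code below, a local analysis of store() sees a strcpy() with an unknown source and must either flag it (a false positive for the set_default() call site) or stay quiet (a false negative for set_from_input()). A global analysis that follows both call chains can tell the two call sites apart.

```c
#include <string.h>

#define BUF_LEN 32 /* illustrative size; array parameters decay to pointers */

/* Judged locally, this function is undecidable: whether strcpy()
 * overflows depends entirely on what each caller passes as `src`. */
static void store(char dst[BUF_LEN], const char *src) {
    strcpy(dst, src);
}

/* Safe caller: the source is a short string literal that fits. */
void set_default(char dst[BUF_LEN]) {
    store(dst, "guest");
}

/* Risky caller: `input` could come from anywhere, such as argv[1].
 * Only an analysis that follows this call into store() can see that
 * unbounded data reaches strcpy(). */
void set_from_input(char dst[BUF_LEN], const char *input) {
    store(dst, input);
}
```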

The scope of the analysis also determines the amount of context the tool considers. More context is better when it comes to reducing false positives, but it can require a huge amount of computation.

Coding rules in explicit form have evolved rapidly in their coverage of potential vulnerabilities. Before Bishop and Dilger’s work [1996] on race conditions in file access, explicit coding rulesets (if they existed at all) were only ad hoc checklist documents, authored and managed (and typically not widely shared) by experienced software security practitioners. Bishop and Dilger’s tool was one of the first recognized attempts to capture a ruleset and automate its application through lexical scanning of code.[6] For the next four years, plenty of research was done in the area, but no other tools and accompanying rulesets emerged to push things forward.

This changed in early 2000 with the release of ITS4, a tool whose ruleset also targeted C/C++ code but went beyond the single-dimensional approaches of the past to cover a broad range of potential vulnerabilities in 144 different APIs or functions. This was followed the next year by the release of two more tools, Flawfinder and RATS. Flawfinder, written by David Wheeler, is an “interestingly” implemented C/C++ scanning tool with a somewhat larger set of rules than ITS4. RATS, authored by John Viega, not only offers a broader ruleset covering 310 C/C++ APIs or functions but also includes rulesets for the Perl, PHP, Python, and OpenSSL domains. In parallel with this public development, Cigital (the company that originally created ITS4) began commercially using SourceScope, a follow-on to ITS4 with a new standard of coverage: 653 C/C++ APIs or functions. Figure 4-1 shows how the rulesets from early tools intersect.

Figure 4-1. A Venn diagram showing the overlap for ITS4, RATS, and SourceScope rules. Together, these rules define a reasonable minimum set of C and C++ rules for static analysis tools. (Thanks to Sean Barnum, who created this diagram.)

Today a handful of first-tier options are available in the static code analysis tools space. These tools include but are not limited to:

Coverity: Prevent <http://www.coverity.com/products/products_security.html>

Fortify: Source Code Analysis <http://www.fortifysoftware.com/products/sca/>

Ounce Labs: Prexis/Engine <http://www.ouncelabs.com/prexis_engine.html>

Secure Software: CodeAssure Workbench <http://www.securesoftware.com/products/source.html>

Each of the tools offers a comprehensive and growing ruleset varying in both size and area of focus. As you investigate and evaluate which tool is most appropriate for your needs, the coverage of the accompanying ruleset should be one of your primary factors of comparison.

Together with the Software Engineering Institute, Cigital has created a searchable catalog of rules published on the Department of Homeland Security’s Building Security In portal <http://buildsecurityin.us-cert.gov/portal/>. This catalog contains full coverage of the C/C++ rulesets from ITS4, RATS, and SourceScope and is intended to represent the foundational set of security rules for C/C++ development. Though some currently available tools have rulesets much more comprehensive than this catalog, we consider this the minimum standard for any modern tool scanning C/C++ code for security vulnerabilities.

Since the early days of ITS4, the idea of security rules and security vulnerability categories has progressed. Today, a number of distinct efforts to categorize, describe, and “tool-ify” software security knowledge are under way. My approach is covered in Chapter 12, where I present a simple taxonomy of coding errors that lead to security problems. The first box, Modern Security Rules Schema, describes the schema developed at Cigital for organizing security rule information and gives an example.[7] The second box, A Complete Modern Rule on pages 119 through 122, provides an example of one of the many rules compiled in the extensive Cigital knowledge base.

Researchers have explored many methods for making sense of program semantics. Some are sound, some aren’t; some are built to detect specific classes of bugs, while others are flexible enough to read definitions for what they’re supposed to detect. Some of the more recent tools are worth pondering. You really won’t be able to download most of these research prototypes and merrily start finding bugs in your own code. Rather, the ideas from these tools are driving the current crop of commercial tools (not to mention the next round of research tools).

BOON applies integer range analysis to determine whether a C program can index an array outside its bounds [Wagner et al. 2000]. While capable of finding many errors that lexical analysis tools would miss, the checker is still imprecise: It ignores statement order, it can’t model interprocedural dependencies, and it ignores pointer aliasing.
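The core idea behind integer range analysis can be illustrated with a toy checker: summarize each index by an interval of the values it might take, and call an access safe only if that whole interval fits inside the array. This is a sketch of the general technique, not BOON’s algorithm; the type and function names are assumptions, and the loop helpers assume the loop body never modifies the index.

```c
#include <stdbool.h>

/* A closed interval [lo, hi] of values an integer expression may take. */
typedef struct {
    long lo;
    long hi;
} range;

/* Range of i in `for (i = start; i < end; i++)`, assuming start < end
 * and that the loop body never assigns to i. */
range loop_lt(long start, long end) {
    return (range){ start, end - 1 };
}

/* Same loop written with `<=`, the classic off-by-one: i reaches end. */
range loop_le(long start, long end) {
    return (range){ start, end };
}

/* a[i] on an array of n elements is safe only if every value in i's
 * range lies within [0, n - 1]. */
bool index_in_bounds(range i, long n) {
    return i.lo >= 0 && i.hi <= n - 1;
}
```

For a 10-element array, `for (i = 0; i < 10; i++)` yields [0, 9], which passes, while the off-by-one `for (i = 0; i <= 10; i++)` yields [0, 10], which fails.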

Inspired by Perl’s taint mode, CQual uses type qualifiers to perform a taint analysis, which detects format string vulnerabilities in C programs [Foster, Terauchi, and Aiken 2002]. CQual requires a programmer to annotate a few variables as either tainted or untainted and then uses type inference rules (along with pre-annotated system libraries) to propagate the qualifiers. Once the qualifiers are propagated, the system can detect format string vulnerabilities by type checking.
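The bug class CQual targets is easy to show in C. In the sketch below, the tainted and untainted qualifiers appear only as comments marking where a CQual-style type checker would attach them; they are not the tool’s actual annotation syntax, and the function names are hypothetical. The unsafe version lets externally supplied data occupy the format-string position, while the safe version passes it only as an argument.

```c
#include <stdio.h>

/* Vulnerable: tainted `msg` flows into the (untainted-required) format
 * position, so directives inside it, such as %x or %n, are interpreted. */
int log_unsafe(char *out, size_t out_size, const char * /* tainted */ msg) {
    return snprintf(out, out_size, msg);
}

/* Fixed: the format is an untainted literal; `msg` is only ever data. */
int log_safe(char *out, size_t out_size, const char * /* tainted */ msg) {
    return snprintf(out, out_size, "%s", msg);
}
```

Passing "100% done %n" to log_safe() copies it verbatim. Passing the same string to log_unsafe() would make snprintf() treat the % sequences as conversion directives and read (and, for %n, write through) arguments that were never supplied, which is undefined behavior.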

The xg++ tool uses a template-driven compiler extension to attack the problem of finding kernel vulnerabilities in Linux and OpenBSD [Ashcraft and Engler 2002]. It looks for locations where the kernel uses data from an untrusted source without checking it first, methods by which a user can cause the kernel to allocate memory and not free it, and situations in which a user could cause the kernel to deadlock.

The Eau Claire tool uses a theorem prover to create a general specification-checking framework for C programs [Chess 2002]. It can help find common security problems like buffer overflows, file access race conditions, and format string bugs. Developers can use specifications to ensure that function implementations behave as expected.

MOPS takes a model-checking approach to look for violations of temporal safety properties [Chen and Wagner 2002]. Developers can model their own safety properties, and some have used the tool to check for privilege management errors, incorrect construction of chroot jails, file access race conditions, and ill-conceived temporary file schemes.

Splint extends the lint concept into the security realm [Larochelle and Evans 2001]. By adding annotations, developers can enable Splint to find abstraction violations, unannounced modifications to global variables, and possible use-before-initialization errors. Splint can also reason about minimum and maximum array bounds accesses if it is provided with function pre- and postconditions.
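The temporal safety properties MOPS checks are naturally expressed as finite-state automata over security-relevant operations. The sketch below encodes a simplified version of the chroot jail rule mentioned above: after chroot(), chdir("/") must occur before any open(). The event names and the idea of a pre-extracted event trace are assumptions for illustration. MOPS checks such an automaton against all paths through the program’s control flow; this code only runs it over a single trace.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical security-relevant events, in program order. */
typedef enum { EV_CHROOT, EV_CHDIR_ROOT, EV_OPEN, EV_OTHER } event;

/* Simplified property: after chroot(), chdir("/") must happen before any
 * open(); otherwise the working directory may still lie outside the jail. */
typedef enum { ST_START, ST_JAILED_NO_CHDIR, ST_OK, ST_ERROR } state;

static state step(state s, event e) {
    switch (s) {
    case ST_START:
        return e == EV_CHROOT ? ST_JAILED_NO_CHDIR : ST_START;
    case ST_JAILED_NO_CHDIR:
        if (e == EV_CHDIR_ROOT)
            return ST_OK;
        if (e == EV_OPEN)
            return ST_ERROR;
        return ST_JAILED_NO_CHDIR;
    case ST_OK:
        return e == EV_CHROOT ? ST_JAILED_NO_CHDIR : ST_OK;
    case ST_ERROR:
    default:
        return ST_ERROR; /* the error state is absorbing */
    }
}

/* Run the automaton over one trace; true means the trace violates it. */
bool violates(const event *trace, size_t n) {
    state s = ST_START;
    for (size_t i = 0; i < n; i++)
        s = step(s, trace[i]);
    return s == ST_ERROR;
}
```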

Many static analysis approaches hold promise but have yet to be directly applied to security. Some of the more noteworthy ones include ESP (a large-scale property verification approach) [Das, Lerner, and Seigle 2002], model checkers such as SLAM and BLAST (which use predicate abstraction to examine program safety properties) [Ball and Rajamani 2001; Henzinger et al. 2003], and FindBugs (a lightweight checker with a good reputation for unearthing common errors in Java programs) [Hovemeyer and Pugh 2004].

Academic work on static analysis continues apace, and research results are published with some regularity at conferences such as USENIX Security, IEEE Security and Privacy (Oakland), ISOC Network and Distributed System Security, and Programming Language Design and Implementation (PLDI). Although it often takes years for results to make a commercial impact, solid technology transfer paths have been established, and the pipeline looks good. Expect great progress in static analysis during the next several years.

In 2004 and 2005, a number of startups formed to address the software security space. Many of these vendors have built and are selling basic source code analysis tools. Major vendors in the space include the following:

Coverity <http://www.coverity.com>

Fortify <http://www.fortifysoftware.com>

Ounce Labs <http://www.ouncelabs.com>

Secure Software <http://www.securesoftware.com>

The technological approach taken by many of these vendors is very similar, although some are more academically inclined than others. By basing their tools on compiler technology, these vendors have upped the level of sophistication far beyond the early, almost unusable tools like ITS4.[8]

A critical feature that currently serves as an important differentiator in the static analysis tools market is the kind of knowledge (the ruleset) that a tool enforces. The importance of a good ruleset can’t be overestimated.

One of the main reasons to use a source code analysis tool is that manual review is costly and time consuming. Manual review is such a pain that reviewers regularly suffer from the “get done, go home” phenomenon—starting strong and ending with a sputter. An automated tool can begin to check every line of code whenever a build is complete, allowing development shops to get on with the business of building software.

Integrating a source code analyzer into your development lifecycle can be painless and easy. As long as your code builds, you should be able to run a modern analysis. Working through the results remains a challenge but is nowhere near as much trouble as painstakingly checking every line of code by hand.

Modern approaches to static analysis can now process on the order of millions of lines of code quickly and efficiently. Though a complete review certainly requires an analyst with a clue, the process of looking through the results of a tool and thinking through potential vulnerabilities beats looking through everything. A time savings of several hundred percent is not out of the question.

Several timesaving mechanisms are built into modern tools. The first is the knowledge encapsulated in a tool. Keeping a burgeoning list of all known security problems found in a language like C (several hundred) in your head while attempting to trace control flow, data flow, and an explosion of states by hand is extremely difficult. Having a tool that remembers security problems (and can easily be expanded to cover new problems) is a huge help. The second timesaving mechanism involves automatically tracking control flow, call chains, and data flow. Though commercial tools make tradeoffs when it comes to soundness (as discussed earlier), they certainly make the laborious process of control and data flow analysis much easier. For example, a decent tool can locate a potential strcpy() vulnerability on a given line, present the result in a results browser, and arm the user with an easy and automated way to determine (through control flow, call chains, and data flow structures) whether the possible vulnerability is real. Though tools are getting better at figuring out this kind of thing for themselves, they are not perfect.

The root cause of most security problems can be found in the source code and configuration files of common software applications, especially custom apps that you write yourself. Problems are seeded when vulnerable code is written into the system, and that moment is undeniably the most efficient and effective time to remove them. The way forward is to use automated tools and processes that systematically and comprehensively target the root cause of security issues in source code. Instead of sorting through millions of lines of code looking for vulnerabilities, a developer using an advanced software security tool that returns a small set of potential vulnerabilities can pinpoint actual vulnerabilities in a matter of seconds. These are precisely the same vulnerabilities that would take a malicious hacker or manual code reviewer weeks or even months to find. Of course, most bad guys know this and will use these kinds of tools themselves [Hoglund and McGraw 2004].

To be useful and cost effective, a source code analysis tool must have six key characteristics.

Be designed for security. Software security may well be a subset of software quality, but software security requires the ability to think like a bad guy. Exploiting software is not an exercise in standard-issue QA. A software defect uncovered during functionality testing might be addressed in such a way that the functional issue is resolved, but security defects may still remain and be reachable via surprising execution paths that are not even considered during functionality testing. It almost goes without saying that software security risks tend to have much more costly business impacts than do standard-issue software risks. Security impact is payable in terms of loss of business data, loss of customer trust and brand loyalty, cost of downtime and inability to perform business transactions, and other intangible costs. Simply put, software quality tools may be of some use when it comes to robustness, but software security tools have more critical security knowledge built into them. The knowledge base built into a tool is an essential deciding factor.[9]

Support multiple tiers. Modern software applications are rarely written in a single programming language or targeted to a single platform. Most business-critical applications are highly distributed, with multiple tiers each written in a different programming language and executed on a different platform. Automated security analysis software must support each of these languages and platforms, as well as properly negotiate between and among tiers. A tool that can analyze only one or two languages can’t meet the needs of modern software.

Be extensible. Security problems evolve, grow, and mutate, just like species on a continent. No one technique or set of rules will ever perfectly detect all security vulnerabilities. Good tools need a modular architecture that supports multiple kinds of analysis techniques. That way, as new attack and defense techniques are developed, the tool can be expanded to encompass them. Likewise, users must be able to add their own security rules. Every organization has its own set of corporate security policies, meaning that a fixed “one-size-fits-all” approach to security is doomed to fail.

Be useful for security analysts and developers alike. Security analysis is complicated and hard. Even the best analysis tools cannot automatically fix security problems, just as debuggers can’t magically debug your code. The best automated tools make it possible for analysts to focus their attention directly on the most important issues. Good tools support not only analysts but also the poor developers who need to fix the problems uncovered by a tool. Good tools allow users to find and fix security problems as efficiently as possible. Used properly, source code analysis tools are excellent teaching tools. Simply by using them, developers can learn about software security (almost by osmosis).

Support existing development processes. Seamless integration with build processes and IDEs is an essential characteristic of any software tool. For a source code analysis tool to become accepted as part of an application development team’s toolset, the tool must properly interoperate with existing compilers used on the various platforms and support popular build tools like make and ant. Good tools both integrate into existing build processes and also coexist with and support analysis in familiar development tools.

Make sense to multiple stakeholders. Software is built for a reason, usually a business reason. Security tools need to support the business. A security-oriented development focus is new to a vast majority of organizations. Of course, software security is not a product; rather, it is an ongoing process that necessarily involves the contributions of many people across an organization. But good automated tools can help to scale a software security initiative beyond a select few to an entire development shop. Views for release managers, development managers, and even executives allow comparison using relative metrics and can support release decisions, help control rework costs, and provide much-needed data for software governance.

Source code analysis is not easy, and early approaches (including ITS4) suffered from a number of unfortunate problems. Some of these problems persist in source code analysis tools today. Watch out for these characteristics.

Too many false positives. One common problem with early approaches to static analysis was their excessive false positive rates. Practitioners seem to feel that tools that provide a false positive rate under 40% are okay. ITS4 would sometimes produce rates in the range of 90% and higher, making it a real pain to use. Glorified grep machines have an extremely low signal-to-noise ratio. Modern approaches that include data flow analysis capability dramatically reduce false positives, making source code analysis much more effective.

Spotty integration with IDEs. Emacs may be great, but it is not for everyone. Developers already have an IDE they like, and they shouldn’t have to switch to do a security analysis. Enough said.

Single-minded support for C. Canonical security bugs are pervasive in C. However, modern software is built with multiple languages and supports multiple platforms. If your system is built of more than C, make sure you don’t skip the “non-C” parts when you review code.

The Fortify Source Code Analysis Suite[10]

I think it is important to give you a feel for what a real commercial tool looks like (especially if you read about the use of RATS in Building Secure Software [Viega and McGraw 2001]). This section is about one of the leading software security tools. Others exist. Make sure that you pick the tool that is right for you.

That said, Fortify Software produces a very successful source code analysis suite that many organizations will find useful. It includes the five components outlined in Table 4-1.

Table 4-1. The Five Components of the Fortify Source Code Analysis Suite

| Component | Description |
|---|---|
| Source Code Analysis Engine | The Fortify code analysis and vulnerability detection engine performs basic semantic, data flow, control flow, and configuration analysis. |
| Secure Coding Rulepacks and Rules Builder | Secure Coding Rulepacks provide coverage of 2,000+ base language and third-party functions and over 50,000 vulnerability paths. Rules Builder allows creation of custom rules. |
| Audit Workbench | Fortify’s visual interface enables rapid analysis of software vulnerabilities in order to prioritize the remediation of defects. |
| Developer Desktop | Fortify integrates critical vulnerability detection directly into popular IDEs (including JBuilder, Visual Studio .NET, Rational Application Developer for WebSphere Software, and Eclipse). |
| Software Security Manager | Fortify centralizes analysis and reporting of vulnerability trend data across people and projects. |

The Source Code Analysis (SCA) Engine searches for violations of security-specific coding rules in source code. An intermediate representation in the form of an abstract syntax tree (AST), built using advanced parser technology, enables a set of analyzers in the SCA Engine to pinpoint and prioritize violations. This helps to make security code reviews more efficient, consistent, and complete, especially where large code bases are involved.
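To make the idea of a rule that runs over an AST concrete, here is a small C sketch. The node layout, the `NodeKind` values, and `check_strcpy_rule` are hypothetical simplifications, not the SCA Engine’s actual intermediate representation. Because the rule inspects parsed structure rather than raw text, a comment that mentions `strcpy` never reaches it, and a call whose source is a string literal can be treated differently from one whose source is a variable.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily simplified AST node kinds. */
typedef enum { NODE_CALL, NODE_IDENT, NODE_STRING_LIT, NODE_BLOCK } NodeKind;

typedef struct Node {
    NodeKind kind;
    const char *text;               /* callee name, identifier, or literal */
    const struct Node *const *kids; /* arguments (CALL) or statements (BLOCK) */
    size_t n_kids;
} Node;

/* Hypothetical rule: report strcpy() calls whose source (second
 * argument) is not a string literal. Comments are discarded by the
 * parser, so they cannot trigger it. Returns the number of findings. */
size_t check_strcpy_rule(const Node *n)
{
    size_t found = 0;

    assert(n != NULL);
    if (n->kind == NODE_CALL && strcmp(n->text, "strcpy") == 0 &&
        n->n_kids == 2 && n->kids[1]->kind != NODE_STRING_LIT)
        found++;
    for (size_t i = 0; i < n->n_kids; i++)
        found += check_strcpy_rule(n->kids[i]); /* walk the whole tree */
    return found;
}
```

For a block containing `strcpy(buf, "ok")` and `strcpy(buf, input)`, the rule reports one finding. A real rule would also compare a literal’s length with the destination size; this sketch skips that step.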

The SCA Engine determines the location of security vulnerabilities in source code and computes vulnerability relevance based on the relationship of the vulnerability to the surrounding code. The analyzers built into the tool provide multilanguage analysis across multiple tiers, allowing developers to determine which path or paths through a piece of code are actually vulnerable.
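Deciding which paths are actually vulnerable is largely a data flow question: does untrusted input reach a dangerous operation without being validated first? The sketch below propagates taint forward along a single path expressed in a made-up, straight-line intermediate form. The `Stmt` structure, the `OP_*` operations, and `count_tainted_sinks` are assumptions for illustration only; a production engine works interprocedurally over real control flow graphs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_VARS 16

/* Hypothetical straight-line IR. Unused fields are set to 0. */
typedef enum { OP_SOURCE, OP_COPY, OP_SANITIZE, OP_SINK } Op;

typedef struct {
    Op op;
    int dst; /* variable written by SOURCE, COPY, SANITIZE */
    int src; /* variable read by COPY, SANITIZE, SINK */
} Stmt;

/* Propagate taint forward along one path and return how many sinks
 * receive tainted (unvalidated) data. */
size_t count_tainted_sinks(const Stmt *path, size_t n)
{
    bool tainted[MAX_VARS] = { false };
    size_t hits = 0;

    for (size_t i = 0; i < n; i++) {
        const Stmt *s = &path[i];
        assert(s->dst >= 0 && s->dst < MAX_VARS);
        assert(s->src >= 0 && s->src < MAX_VARS);
        switch (s->op) {
        case OP_SOURCE:   /* e.g. bytes read from the network */
            tainted[s->dst] = true;
            break;
        case OP_COPY:     /* taint follows the data */
            tainted[s->dst] = tainted[s->src];
            break;
        case OP_SANITIZE: /* validated output is trusted */
            tainted[s->dst] = false;
            break;
        case OP_SINK:     /* e.g. a SQL query or strcpy() */
            if (tainted[s->src])
                hits++;
            break;
        }
    }
    return hits;
}
```

Run on two paths through the same function, one that copies input straight into a sink and one that validates it first, the sketch yields one hit and zero hits respectively. A lexical scan cannot make that distinction.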

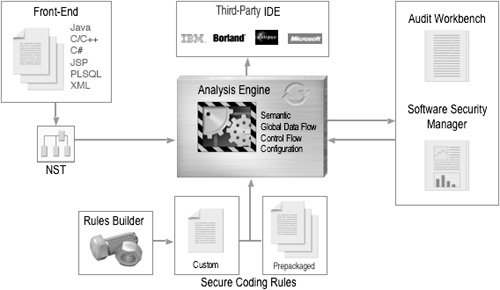

The SCA Engine includes four analyzers: semantic, data flow, control flow, and configuration. These analyzers locate security defects across the entire code base, including problems that span multiple tiers. The tool, which supports Java, C, C++, C#, JSP, XML, and PL/SQL, produces an XML results file that is consumed by the results browser. Figure 4-2 shows a basic architecture.

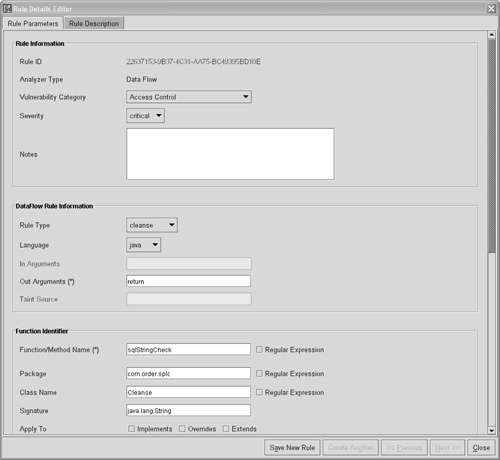
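Of the four analyzers, control flow analysis is perhaps the least intuitive. One common formulation tracks the state of a resource along each path and reports illegal sequences of operations. The following C sketch does this for a single heap pointer. The event names, states, and `check_path` are hypothetical, and the sketch does not describe how the SCA Engine implements its control flow analyzer.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical events on one heap pointer along one control-flow path. */
typedef enum { EV_MALLOC, EV_USE, EV_FREE } HeapEvent;
typedef enum { ST_UNALLOCATED, ST_LIVE, ST_FREED } HeapState;
typedef enum { PATH_OK, PATH_USE_AFTER_FREE, PATH_DOUBLE_FREE, PATH_LEAK } PathVerdict;

/* A tiny typestate machine: walk the events in order and return the
 * first defect found, or a leak if the pointer is still live at the end. */
PathVerdict check_path(const HeapEvent *ev, size_t n)
{
    HeapState st = ST_UNALLOCATED;

    assert(ev != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        switch (ev[i]) {
        case EV_MALLOC:
            st = ST_LIVE;
            break;
        case EV_USE:
            if (st == ST_FREED)
                return PATH_USE_AFTER_FREE;
            break;
        case EV_FREE:
            if (st == ST_FREED)
                return PATH_DOUBLE_FREE;
            st = ST_FREED;
            break;
        }
    }
    return st == ST_LIVE ? PATH_LEAK : PATH_OK;
}
```

For the sequence malloc, free, use the function returns `PATH_USE_AFTER_FREE`; for malloc followed by a use with no free it returns `PATH_LEAK`.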

The SCA Engine uses the Secure Coding Rulepacks as the expandable knowledge base for analysis. The prepackaged Secure Coding Rulepacks that come with the tool encapsulate years of security knowledge about anomalous constructs and vulnerable functions in software. The rules can identify dozens of vulnerability categories, including buffer overflows, log forging, cross-site scripting, memory leaks, and SQL injection. The Fortify toolset is extensible and allows automated creation of new application-specific, third-party library, and corporate-standards–based custom rules using the Rules Builder (Figure 4-3).

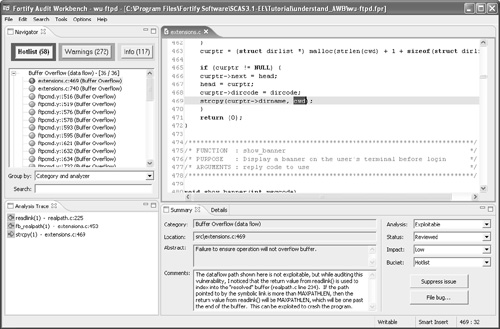
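The value of a rules-driven design is that security knowledge lives in data rather than in the engine. The C sketch below shows the general idea with a hypothetical `Rule` record: a built-in table plays the role of a prepackaged rulepack, and `ruleset_add` stands in for adding a custom rule. The record layout is invented for illustration and is not the Rulepack format; `legacy_db_exec` is a made-up in-house API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical rule record; not the Fortify Rulepack schema. */
typedef struct {
    const char *function; /* API the rule matches */
    const char *category; /* vulnerability category to report */
    int severity;         /* 1 (low) .. 5 (critical) */
} Rule;

#define MAX_RULES 32

typedef struct {
    Rule rules[MAX_RULES];
    size_t count;
} RuleSet;

/* Load the built-in knowledge that "ships with the tool". */
void ruleset_init(RuleSet *rs)
{
    static const Rule builtin[] = {
        { "strcpy", "Buffer Overflow", 4 },
        { "gets", "Buffer Overflow", 5 },
        { "system", "Command Injection", 4 },
    };
    assert(rs != NULL);
    memcpy(rs->rules, builtin, sizeof builtin);
    rs->count = sizeof builtin / sizeof builtin[0];
}

/* Extend the knowledge base with a custom rule; -1 if the table is full. */
int ruleset_add(RuleSet *rs, Rule r)
{
    if (rs->count == MAX_RULES)
        return -1;
    rs->rules[rs->count++] = r;
    return 0;
}

/* Return the rule matching a called function, or NULL if none applies. */
const Rule *ruleset_match(const RuleSet *rs, const char *function)
{
    for (size_t i = 0; i < rs->count; i++)
        if (strcmp(rs->rules[i].function, function) == 0)
            return &rs->rules[i];
    return NULL;
}
```

After `ruleset_init`, looking up `legacy_db_exec` returns `NULL`; once the custom rule is added, the same lookup reports SQL injection without any change to the matching code.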

The Audit Workbench (Figure 4-4) is a visual interface allowing detailed and efficient analysis of potential software vulnerabilities in order to prioritize and fix problems. Human interface concerns are critical in these kinds of tools because the human analyst plays a central role in the process of automated code review. Without a smart human in the loop, the tool is not very useful. The Audit Workbench provides a summary view of security problems with detail related to the defect in focus. The data displayed include information about particular vulnerabilities, the rules that uncovered them, and what to do about them. (This kind of information is extremely useful in context and is available to interested readers at <http://vulncat.fortifysoftware.com>. See Chapter 12 for a taxonomy of vulnerability information.) Potential problems are displayed with surrounding source code and a call tree. Results are categorized into customizable buckets of defects and can be annotated with resolution severity, priority, and status.

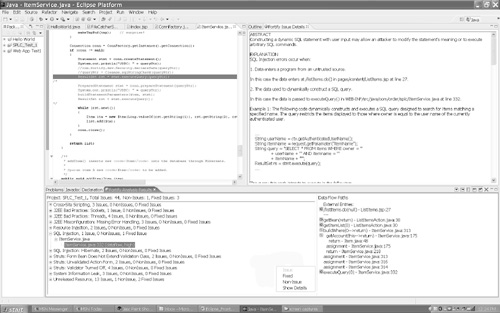
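The annotations an analyst attaches to results can be modeled as a simple record. In the sketch below, the `Finding` fields, the `Status` values, and `open_in_bucket` are assumptions chosen to mirror the category buckets and the severity, priority, and status annotations described above; they are not the Audit Workbench’s data model. Counting what is still open in a bucket is one small example of the summaries such a view presents.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical audit status an analyst assigns during review. */
typedef enum { STATUS_UNREVIEWED, STATUS_NOT_AN_ISSUE, STATUS_EXPLOITABLE } Status;

/* Hypothetical annotated result. */
typedef struct {
    const char *category; /* bucket, e.g. "SQL Injection" */
    const char *file;
    int line;
    int severity;         /* 1 (low) .. 5 (critical) */
    int priority;         /* analyst-assigned */
    Status status;
} Finding;

/* Count findings in one bucket that still need attention, i.e. all
 * findings except those already dismissed as non-issues. */
size_t open_in_bucket(const Finding *f, size_t n, const char *category)
{
    size_t open = 0;

    assert(category != NULL);
    for (size_t i = 0; i < n; i++)
        if (strcmp(f[i].category, category) == 0 &&
            f[i].status != STATUS_NOT_AN_ISSUE)
            open++;
    return open;
}
```

With two buffer overflow findings, one marked exploitable and one dismissed as a non-issue, `open_in_bucket` reports one open buffer overflow.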

The Developer Desktop (Figure 4-5) is a collection of software components for a developer’s desktop. It includes the SCA Engine, Secure Coding Rulepacks, and plug-ins for common IDEs. Because it is integrated into standard development tools, adoption is fairly painless. Integration of the toolset enhances a standard IDE with detailed and accurate security vulnerability knowledge. This is an effective way to train developers about secure coding practices as they do their normal thing. Fortify supports Eclipse, Rational Application Developer for WebSphere Software, and Microsoft Visual Studio .NET (through an add-in).

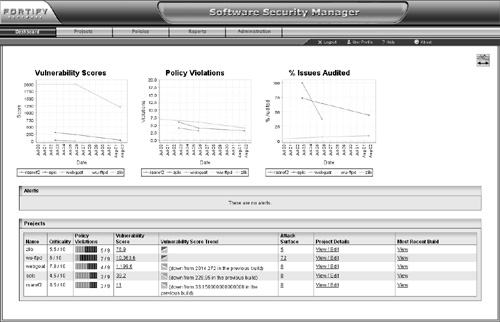

The Software Security Manager is a Web-based security policy and reporting interface that enables development teams to manage and control risk across multiple projects and releases. The Software Security Manager helps to centralize reporting, enable trend analysis, and produce software security reports for management. The Software Security Manager includes a number of predefined metrics that cover the number and type of vulnerabilities, policy violations, and severity. Figure 4-6 shows the Software Security Manager Dashboard.

The most critical feature of any static analysis tool involves the knowledge built into it. We’ve come a long way since the early days of RATS and ITS4, when a simple grep for a possibly dangerous API might suffice. Today, the software security knowledge expected to drive static analysis tools is much more sophisticated.

A complete taxonomy of software security vulnerabilities that can be uncovered using automated tools is discussed in Chapter 12. Software security rules knowledge has progressed much further than other, more subtle knowledge categories such as secure coding patterns and technology-specific guidelines.

The vulnerability descriptions powering the Fortify SCA Engine are far more sophisticated than the early ITS4 database mentioned earlier in this chapter. For the complete taxonomy, see the Fortify Web site at <http://vulncat.fortifysoftware.com>.

A special demonstration version of the Fortify Source Code Analysis product is included with this book. Please note that the demonstration software includes only a subset of the functionality offered by the Source Code Analysis Suite. For example, this demonstration version scans for buffer overflow and SQL injection vulnerabilities but does not scan for cross-site scripting or access control vulnerabilities.

Appendix A is a tutorial guide reprinted with permission from Fortify Software. If you would like to learn more about how the Fortify Source Code Analysis Suite works in a hands-on way, check out the appendix. The key you will need to unlock the demo on the CD is FSDMOBEBESHIPFSDMO. To prevent any confusion, this key is composed of letters exclusively. There are no numbers.

I am not a process person, especially when it comes to software. But there is no denying that complex tools like those described in this chapter can’t simply be thrown at the software security problem and expected to solve problems willy-nilly. By wrapping a tool like the Fortify SCA Engine in a process, your organization can benefit much more from tool use than if you buy the tool and stick it on a shelf.

Figure 4-7 shows a very simple process for applying static analysis. Note that this is only one of many processes that can be wrapped around a source code analysis tool. The process here is very much based on a software assurance perspective and is the kind of process that a software security type or an analyst would use. There are other use cases for developers (e.g., ones more closely aligned with IDE integration).

Figure 4-7. A simple process diagram showing the use of a static analysis tool. This is a simplified version of the process used by Cigital.

Static code analysis can be carried out by any kind of technical resource. A background in software security and lots of knowledge about software security bugs are very helpful because the tool identifies particular areas of the code for the analyst to check more thoroughly. The tool is really an analyst aid more than anything.

The analyst can choose from any number of security tools (as shown in Figure 4-7), including, in some cases, research prototypes. The analyst runs a tool on the code to be analyzed, consults external information about the potential problems it reports, and tracks the issues that are identified.

Note that raw tool results are not always the most useful form of information that this process can provide. As an analyst pores over results, some possible problems will turn out to be non-issues. Others will turn out to be exploitable. Figuring this all out is the bulk of the work when using a source code analysis tool.
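A concrete pair of functions shows why triage takes so much effort. Both C functions below contain the identical `strcpy()` call, so any rule keyed on that API flags both; only by reading the surrounding code does the analyst learn that one is exploitable and the other is a non-issue. The function names are hypothetical.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical helper. Exploitable: nothing stops src from
 * overrunning dst. */
void copy_unchecked(char *dst, const char *src)
{
    strcpy(dst, src);
}

/* Hypothetical helper. A non-issue after review: the length check
 * guarantees the copy fits, including the terminating NUL.
 * Returns -1 (and copies nothing) if src is too long. */
int copy_checked(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && src != NULL);
    if (dst_size == 0 || strlen(src) >= dst_size)
        return -1;
    strcpy(dst, src);
    return 0;
}
```

`copy_checked` returns -1 rather than overflowing when the source does not fit, so after review its finding can be marked as a non-issue, while the finding in `copy_unchecked` stands.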

The simple process shown in Figure 4-7 results in code that has been fully diagnosed and a set of issues that need to be addressed. Fixing the code itself is not part of this process.

A much different approach can be taken by developers who can use a tool to spot potential problems and then fix them as they work. This is probably the most effective use of static analysis technology. Even so, widespread adoption of source code analysis tools by development shops is only now beginning to happen.

Good static analysis tools must be easy to use, even for non-security people. This means that the results from these tools must be understandable to normal developers who might not know much about security. In the end, source code analysis tools educate their users about good programming practice. Good static checkers can help their users spot and eradicate common security bugs. This is especially important for languages such as C or C++, for which a very large corpus of rules already exists.

Static analysis for security should be applied regularly as part of any modern development process.

[1] Parts of this chapter appeared in original form in IEEE Security & Privacy magazine coauthored with Brian Chess [Chess and McGraw 2004].

[2] See <http://en.wikipedia.org/wiki/Rice’s_theorem> if you need to understand more about Rice’s theorem.

[3] See <http://en.wikipedia.org/wiki/Halting_problem> if you’re not a computer science theory junkie.

[4] ITS4 is actually an acronym for “It’s The Software Stupid Security Scanner,” a name we invented much to the dismay of our poor marketing people. That was back in the day when Cigital was called Reliable Software Technologies.

[5] For the non-UNIX geeks in the audience, grep is a command-line UNIX utility for finding lexical patterns.

[6] Bishop and Dilger’s tool was built around a limited set of rules covering potential race conditions in file accesses using C on UNIX systems [Bishop and Dilger 1996].

[7] Also of note is the new book The 19 Deadly Sins of Software Security, which provides treatment of the rules space as well [Howard, LeBlanc, and Viega 2005]. Chapter 12 includes a mapping of my taxonomy against the 19 sins and the OWASP top ten <http://www.owasp.org/documentation/topten.html>.

[8] Beware of security consultants armed with ITS4 who aren’t software people. Consultants with code review tools are rapidly becoming to the software security world what consultants with penetration testing tools are to the network security world. Make sure you carefully vet your vendors.

[9] While more general quality tools will not pinpoint security issues, they can be used by a seasoned reviewer to identify “smells” in the complexity, cohesion, coupling, and effort/volume relationship of code modules—all good starting points for identifying possible security weak spots. In many cases, security errors arising from sloppy coding don’t appear as rare blips among otherwise pristine code. They are usually the consequence of a larger, more pervasive carelessness that can sometimes be seen from high up in terms of quality errors. Don’t rely on quality metrics to identify security issues, but keep an eye out for modules of weak quality. Vices tend to roll together.

[10] Full disclosure: I am the chairman of Fortify Software’s Technical Advisory Board. Part of Fortify’s code analysis technology (in the form of SourceScope) was invented and developed by Cigital.