15

Priority Queues

15.1 Introduction

A priority queue is a multiset of items, where each item has an associated priority, a score that indicates its importance (by convention, smaller scores are more important, indicating a higher priority). A priority queue typically provides an add() method to add an item to the set, and a removeMin() method to remove and return the item of minimal score (highest priority). Priority queues appear everywhere from high-level applications to low-level operating system kernels.

A bounded-range priority queue is one where each item’s score is taken from a discrete set of items, while an unbounded-range priority queue is one where scores are taken from a very large set, say 32-bit integers, or floating-point values. Not surprisingly, bounded-range priority queues are generally more efficient, but many applications require unbounded ranges. Fig. 15.1 shows the priority queue interface.

Figure 15.1 Priority Queue Interface.

15.1.1 Concurrent Priority Queues

In a concurrent setting, where add() and removeMin() method calls can overlap, what does it mean for an item to be in the set?

Here, we consider two alternative consistency conditions, both introduced in Chapter 3. The first is linearizability, which requires that each method call appear to take effect at some instant between its invocation and its response. The second is quiescent consistency, a weaker condition that requires that in any execution, at any point, if no additional method calls are introduced, then when all pending method calls complete, the values they return are consistent with some valid sequential execution of the object. If an application does not require its priority queues to be linearizable, then it is usually more efficient to require them to be quiescently consistent. Careful thought is usually required to decide which approach is correct for a particular application.

15.2 An Array-Based Bounded Priority Queue

A bounded-range priority queue has range m if its priorities are taken from the range 0, …, m − 1. For now, we consider bounded priority queue algorithms that use two component data structures: Counter and Bin. A Counter (see Chapter 12) holds an integer value, and supports getAndIncrement() and getAndDecrement() methods that atomically increment and decrement the counter value and return the counter’s prior value. These methods may optionally be bounded, meaning they do not advance the counter value beyond some specified bound.

A Bin is a pool that holds arbitrary items, and supports a put(x) method for inserting an item x, and a get() method for removing and returning an arbitrary item, returning null if the bin is empty. Bins can be implemented using locks or in a lock-free manner using the stack algorithms of Chapter 11.

Fig. 15.2 shows the SimpleLinear class, which maintains an array of bins. To add an item with score i a thread simply places the item in the i-th bin. The removeMin() method scans the bins in decreasing priority and returns the first item it successfully removes. If no item is found it returns null. If the bins are quiescently consistent, so is SimpleLinear. The add() and removeMin() methods are lock-free if the Bin methods are lock-free.

Figure 15.2 The SimpleLinear class: add() and removeMin() methods.

15.3 A Tree-Based Bounded Priority Queue

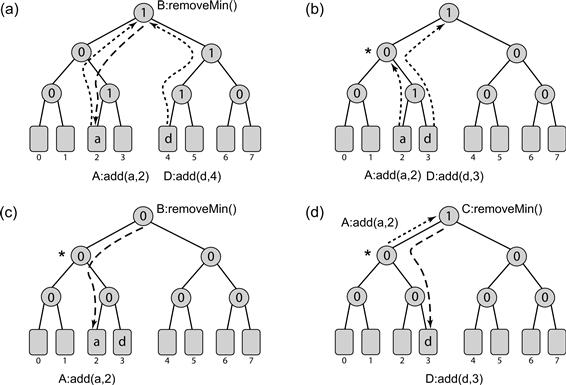

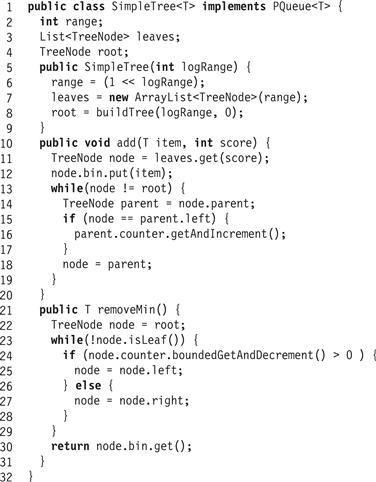

The SimpleTree (Fig. 15.3) is a lock-free quiescently consistent bounded-range priority queue. It is a binary tree (Fig. 15.4) of treeNode objects (Fig. 15.5). As depicted in Fig. 15.3, the tree has m leaves where the i-th leaf node has a bin holding items of score i. There are m − 1 shared bounded counters in the tree’s internal nodes that keep track of the number of items in the leaves of the subtree rooted in each node’s left (lower score/higher priority) child.

Figure 15.3 The SimpleTree priority queue is a tree of bounded counters. Items reside in bins at the leaves. Internal nodes hold the number of items in the subtree rooted at the node’s left child. In Part (a) threads A and D add items by traversing up the tree, incrementing the counters in the nodes when they ascend from the left. Thread B follows the counters down the tree, descending left if the counter had a nonzero value (we do not show the effect of B’s decrements). Parts (b), (c), and (d) show a sequence in which concurrent threads A and B meet at the node marked by a star. In Part (b) thread D adds d, then A adds a and ascends to the starred node, incrementing a counter along the way. In Part (c) B traverses down the tree, decrementing counters to zero and popping a. In Part (d), A continues its ascent, incrementing the counter at the root even though B already removed any trace of a from the starred node down. Nevertheless, all is well, because the nonzero root counter correctly leads C to item d, the item with the highest priority.

Figure 15.4 The SimpleTree bounded-range priority queue.

Figure 15.5 The SimpleTree class: the inner treeNode class.

An add(x, k) call adds x to the bin at the kth leaf, and increments node counters in leaf-to-root order. The removeMin() method traverses the tree in root-to-leaf order. Starting from the root, it finds the leaf with highest priority whose bin is non empty. It examines each node’s counter, going right if the counter is zero and decrementing it and going left otherwise (Line 24).

An add() traversal by a thread A moving up may meet a removeMin() traversal by a thread B moving down. As in the story of Hansel and Gretel, the descending thread B follows the trail of non-zero counters left by the ascending add() to locate and remove A’s item from its bin. Part (a) of Fig. 15.3 shows an execution of the SimpleTree.

One may be concerned about the following “Grimm” scenario. thread A, moving up, meets thread B, moving down, at a tree node marked by a star, as described in Fig. 15.3. Thread B moves down from the starred node to collect A’s item at the leaf, while A continues up the tree, incrementing counters until it reaches the root. What if another thread, C, starts to follow A’s path of nonzero counters from the root down to the starred node where B encountered A? When C reaches the starred node, it may be stranded there in the middle of the tree, and seeing no marks it would follow the right child branches to an empty Bin, even though there might be other items in the queue.

Fortunately, this scenario cannot happen. As depicted in Parts (b) through (d) of Fig. 15.3, the only way the descending thread B could meet the ascending thread A at the starred node is if another add() call by an earlier thread D incremented the same set of counters from the starred node to the root, allowing the descending thread B to reach the starred node in the first place. The ascending thread A, when incrementing counters from the starred node to the root, is simply completing the increment sequence leading to the item inserted by some other thread D. To summarize, if the item returned by some thread in Line 24 is null, then the priority queue is indeed empty.

The SimpleTree algorithm is not linearizable, since threads may overtake each other, but it is quiescently consistent. The add() and removeMin() methods are lock-free if the bins and counters are lock-free (the number of steps needed by add() is bounded by the tree depth and removeMin() can fail to complete only if items are continually being added and removed from the tree.) A typical insertion or deletion takes a number of steps logarithmic in the lowest priority (maximal score) in the range.

15.4 An Unbounded Heap-Based Priority Queue

This section presents a linearizable priority queue that supports priorities from an unbounded range. It uses fine-grained locking for synchronization.

A heap is a tree where each tree node contains an item and a score. If b is a child node of a, then b’s priority is no greater than a’s priority (i.e., items higher in the tree have lower scores and are more important). The removeMin() method removes and returns the root of the tree, and then rebalances the root’s subtrees. Here, we consider binary trees, where there are only two subtrees to rebalance.

15.4.1 A Sequential Heap

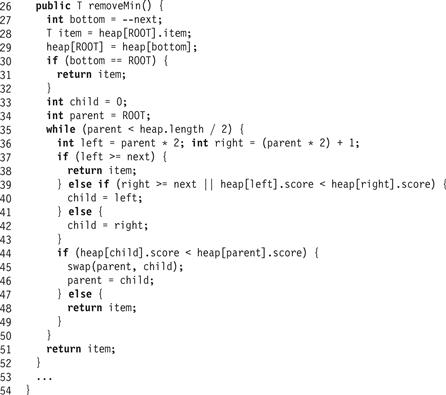

Figs. 15.6 and 15.7 show a sequential heap implementation. An efficient way to represent a binary heap is as an array of nodes, where the tree’s root is array entry 1, and the right and left children of array entry i are entries 2 ⋅ i and (2 ⋅ i) + 1, respectively. The next field is the index of the first unused node.

Figure 15.6 The SequentialHeap class: inner node class and add() method.

Figure 15.7 The SequentialHeap class: the removeMin() method.

Each node has an item and a score field. To add an item, the add() method sets child to the index of the first empty array slot (Line 13). (For brevity, we omit code to resize a full array.) The method then initializes that node to hold the new item and score (Line 14). At this point, the heap property may be violated, because the new node, which is a leaf of the tree, may have higher priority (smaller score) than an ancestor. To restore the heap property, the new node “percolates up” the tree. We repeatedly compare the new node’s priority with its parent’s, swapping them if the parent’s priority is lower (it has a larger score). When we encounter a parent with a higher priority, or we reach the root, the new node is correctly positioned, and the method returns.

To remove and return the highest-priority item, the removeMin() method records the root’s item, which is the highest-priority item in the tree. (For brevity, we omit the code to deal with an empty heap.) It then moves a leaf entry up to replace the root (Lines 27–29). If the tree is empty, the method returns the recorded item (Line 30). Otherwise, the heap property may be violated, because the leaf node recently promoted to the root may have lower priority than some of its descendants. To restore the heap property, the new root “percolates down” the tree. If both children are empty, we are done (Line 37). If the right child is empty, or if the right child has lower priority than the left, then we examine the left child (Line 39). Otherwise, we examine the right child (Line 41). If the child has higher priority than the parent, then we swap the child and parent, and continue moving down the tree (Line 44). When both children have lower priorities, or we reach a leaf, the displaced node is correctly positioned, and the method returns.

15.4.2 A Concurrent Heap

Bird’s-Eye View

The FineGrainedHeap class is mostly just a concurrent version of the SequentialHeap class. As in the sequential heap, add() creates a new leaf node, and percolates it up the tree until the heap property is restored. To allow concurrent calls to proceed in parallel, the FineGrainedHeap class percolates items up the tree as a sequence of discrete atomic steps that can be interleaved with other such steps. In the same way, removeMin() deletes the root node, moves a leaf node to the root, and percolates that node down the tree until the heap property is restored. The FineGrainedHeap class percolates items down the tree as a sequence of discrete atomic steps that can be interleaved with other such steps.

In Detail

Warning: The code presented here does not deal with heap overflow (adding an item when the heap is full) or underflow (removing an item when the heap is empty). Dealing with these cases makes the code longer, without adding much of interest.

The class uses a heapLock field to make short, atomic modifications to two or more fields (Fig. 15.8).

Figure 15.8 The FineGrainedHeap class: fields.

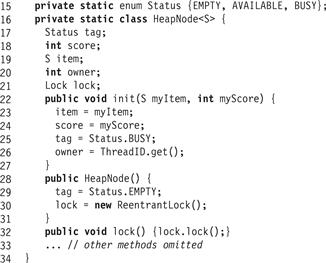

The HeapNode class (Fig. 15.9) provides the following fields. The lock field is a lock (Line 21) held for short-lived modifications, and also while the node is being percolated down the tree. For brevity, the class exports lock() and unlock() methods to lock and unlock the node directly. The tag field has one of the following states: EMPTY means the node is not in use, AVAILABLE means the node holds an item and a score, and BUSY means that the node is being percolated up the tree, and is not yet in its proper position. While the node is BUSY, the owner field holds the ID of the thread responsible for moving it. For brevity, the class provides an amOwner method that returns true if and only if the node’s tag is BUSY and the owner is the current thread.

Figure 15.9 The FineGrainedHeap class: inner HeapNode class.

The asymmetry in synchronization between the removeMin() method, which percolates down the tree holding the lock, and the add() method (Fig. 15.10), which percolates up the tree with the tag field set to BUSY, ensures that a removeMin() call is not delayed if it encounters a node that is in the middle of being shepherded up the tree by an add() call. As a result, an add() call must be prepared to have its node swapped out from underneath it. If the node vanishes, the add() call simply moves up the tree. It is sure to encounter that node somewhere between its present position and the root.

Figure 15.10 The FineGrainedHeap class: the add() method.

The removeMin() method (Fig. 15.11) acquires the global heapLock, decrements the next field, returning the index of a leaf node, locks the first unused slot in the array, and releases heapLock (Lines 76–80). It then stores the root’s item in a local variable to be returned later as the result of the call (Line 81). It marks the node as EMPTY and unowned, swaps it with the leaf node, and unlocks the (now empty) leaf (Lines 82–84).

Figure 15.11 The FineGrainedHeap class: the removeMin() method.

At this point, the method has recorded its eventual result in a local variable, moved the leaf to the root, and marked the leaf’s former position as EMPTY. It retains the lock on the root. If the heap had only one item, then the leaf and the root are the same, so the method checks whether the root has just been marked as EMPTY. If so, it unlocks the root and returns the item (Lines 85–89).

The new root node is now percolated down the tree until it reaches its proper position, following much the same logic as the sequential implementation. The node being percolated down is locked until it reaches its proper position. When we swap two nodes, we lock them both, and swap their fields. At each step, the method locks the node’s right and left children (Line 96). If the left child is empty, we unlock both children and return (Line 98). If the right child is empty, but the left child has higher priority, then we unlock the right child and examine the left (Line 103). Otherwise, we unlock the left child and examine the right (Line 106).

If the child has higher priority, then we swap the parent and child, and unlock the parent (Line 111). Otherwise, we unlock the child and the parent and return.

The concurrent add() method acquires the heapLock, allocates, locks, initializes, and unlocks an empty leaf node (Lines 36–41). This leaf node has tag BUSY, and the owner is the calling thread. It then unlocks the leaf node.

It then proceeds to percolate that node up the tree, using the child variable to keep track of the node. It locks the parent, then the child (all locks are acquired in ascending order). If the parent is AVAILABLE and the child is owned by the caller, then it compares their priorities. If the child has higher priority, then the method swaps their fields, and moves up (Line 50). Otherwise the node is where it belongs, and it is marked AVAILABLE and unowned (Line 53). If the child is not owned by the caller, then the node must have been moved up by a concurrent removeMin() call so the method simply moves up the tree to search for its node (Line 58).

Fig. 15.12 shows an execution of the FineGrainedHeap class. In Part (a) the heap tree structure is depicted, with the priorities written in the nodes and the respective array entries above the nodes. The next field is set to 10, the next array entry into which a new item can be added. As can be seen, thread A starts a removeMin() method call, collecting the value 1 from the root as the one to be returned, moving the leaf node with score 10 to the root, and setting next back to 9. The removeMin() method checks whether 10 needs to be percolated down the heap. In Part (b) thread A percolates 10 down the heap, while thread B adds a new item with score 2 to the heap in the recently emptied array entry 9. The owner of the new node is B, and B starts to percolate 2 up the heap, swapping it with its parent node of score 7. After this swap, it releases the locks on the nodes. At the same time A swaps the node with scores 10 and 3. In Part (c), A, ignoring the busy state of 2, swaps 10 and 2 and then 10 and 7 using hand-over-hand locking. It has thus swapped 2, which was not locked, from under thread B. In Part (d), when B moves to the parent node in array entry 4, it finds that the busy node with score 2 it was percolating up has disappeared. However, it continues up the heap and locates the node with 2 as it ascends, moving it to its correct position in the heap.

Figure 15.12 The FineGrainedHeap class: a heap-based priority queue.

15.5 A Skiplist-Based Unbounded Priority Queue

One drawback of the FineGrainedHeap priority queue algorithm is that the underlying heap structure requires complex, coordinated rebalancing. In this section, we examine an alternative that requires no rebalancing.

Recall from Chapter 14 that a skiplist is a collection of ordered lists. Each list is a sequence of nodes, and each node contains an item. Each node belongs to a subset of the lists, and nodes in each list are sorted by their hash values. Each list has a level, ranging from 0 to a maximum. The bottom-level list contains all the nodes, and each higher-level list is a sublist of the lower-level lists. Each list contains about half the nodes of the next lower-level list. As a result, inserting or removing a node from a skiplist containing k items takes expected time O(log k).

In Chapter 14 we used skiplists to implement sets of items. Here, we adapt skiplists to implement a priority queue of items tagged with priorities. We describe a PrioritySkipList class that provides the basic functionality needed to implement an efficient priority queue. We base the PrioritySkipList (Figs. 15.13 and 15.14) class on the LockFreeSkipList class of Chapter 14, though we could just as easily have based it on the LazySkipList class. Later, we describe a SkipQueue wrapper to cover some of the PrioritySkipList<T> class’s rough edges.

Figure 15.13 The PrioritySkipList<T> class: inner Node<T> class.

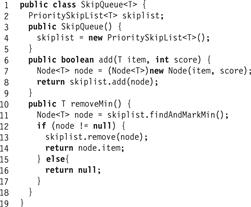

Figure 15.14 The SkipQueue<T> class.

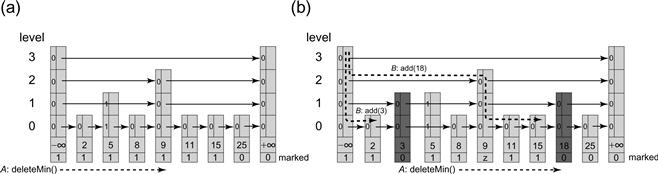

Here is a bird’s-eye view of the algorithm. The PrioritySkipList class sorts items by priority instead of by hash value, ensuring that high-priority items (the ones we want to remove first) appear at the front of the list. Fig. 15.15 shows such a PrioritySkipList structure. Removing the item with highest priority is done lazily (See Chapter 9). A node is logically removed by marking it as removed, and is later physically removed by unlinking it from the list. The removeMin() method works in two steps: first, it scans through the bottom-level list for the first unmarked node. When it finds one, it tries to mark it. If it fails, it continues scanning down the list, but if it succeeds, then removeMin() calls the PrioritySkipList class’s logarithmic-time remove() method to physically remove the marked node.

Figure 15.15 The SkipQueue priority queue: an execution that is quiescently consistent but not linearizable. In Part (a) thread A starts a removeMin() method call. It traverses the lowest-level list in the PrioritySkipList to find and logically remove the first unmarked node. It traverses over all marked nodes, even ones like the node with score 5 which is in the process of being physically removed from the SkipList. In Part (b) while A is visiting the node with score 9, thread B adds a node with score 3, and then adds a node with score 18. Thread A marks and returns the node with score 18. A linearizable execution could not return an item with score 18 before the item with score 3 is returned.

We now turn our attention to the algorithm details. Fig. 15.13 shows an outline of the the PrioritySkipList class, a modified version of the LockFreeSkipList class of Chapter 14. It is convenient to have the add() and remove() calls take skiplist nodes instead of items as arguments and results. These methods are straightforward adaptations of the corresponding LockFreeSkipList methods, and are left as exercises. This class’s nodes differ from LockFreeSkipList nodes in two fields: an integer score field (Line 4), and an AtomicBoolean marked field used for logical deletion from the priority queue (not from the skiplist) (Line 5). The findAndMarkMin() method scans the lowest-level list until it finds a node whose marked field is false, and then atomically tries to set that field to true (Line 19). If it fails, it tries again. When it succeeds, it returns the newly marked node to the caller (Line 20).

Fig. 15.14 shows the SkipQueue<T> class. This class is just a wrapper for a PrioritySkipList<T>. The add(x, p) method adds item x with score p by creating a node to hold both values, and passing that node to the PrioritySkipList class’s add() method. The removeMin() method calls the PrioritySkipList class’s findAndMarkMin() method to mark a node as logically deleted, and then calls remove() to physically remove that node.

The SkipQueue class is quiescently consistent: if an item x was present before the start of a removeMin() call, then the item returned will have a score less than or equal to that of x. This class is not linearizable: a thread might add a higher priority (lower score) item and then a lower priority item, and the traversing thread might find and return the later inserted lower priority item, violating linearizability. This behavior is quiescently consistent, however, because one can reorder add() calls concurrent with any removeMin() to be consistent with a sequential priority queue.

The SkipQueue class is lock-free. A thread traversing the lowest level of the SkipList might always be beaten to the next logically undeleted node by another call, but it can fail repeatedly only if other threads repeatedly succeed.

In general, the quiescently consistent SkipQueue tends to outperform the linearizable heap-based queue. If there are n threads, then the first logically undeleted node is always among the first n nodes in the bottom-level list. Once a node has been logically deleted, then it will be physically deleted in worst-case O(log k) steps, where k is the size of the list. In practice, a node will probably be deleted much more quickly, since that node is likely to be close to the start of the list.

There are, however, several sources of contention in the algorithm that affect its performance and require the use of backoff and tuning. Contention could occur if several threads concurrently try to mark a node, where the losers proceed together to try to mark the next node, and so on. Contention can also arise when physically removing an item from the skiplist. All nodes to be removed are likely to be neighbors at the start of the skiplist, so chances are high that they share predecessors, which could cause repeated compareAndSet() failures when attempting to snip out references to the nodes.

15.6 Chapter Notes

The FineGrainedHeap priority queue is by Galen Hunt, Maged Michael, Srinivasan Parthasarathy, and Michael Scott [74]. The SimpleLinear and SimpleTree priority queues are credited to Nir Shavit and Asaph Zemach [143]. The SkipQueue is by Itai Lotan and Nir Shavit [107] who also present a linearizable version of the algorithm.

Exercise 173. Give an example of a quiescently consistent priority queue execution that is not linearizable.

Exercise 174. Implement a quiescently consistent Counter with a lock-free implementation of the boundedGetAndIncrement() and boundedGetAndDecrement() methods using a counting network or diffracting tree.

Exercise 175. In the SimpleTree algorithm, what would happen if the boundedGetAndDecrement() method were replaced with a regular getAndDecrement()?

Exercise 176. Devise a SimpleTree algorithm with bounded capacity using boundedGetAndIncrement() methods in treeNode counters.

Exercise 177. In the SimpleTree class, what would happen if add(), after placing an item in the appropriate Bin, incremented counters in the same top-down manner as in the removeMin() method? Give a detailed example.

Exercise 178. Prove that the SimpleTree is a quiescently consistent priority queue implementation.

Exercise 179. Modify FineGrainedHeap to allocate new heap nodes dynamically. What are the performance limitations of this approach?

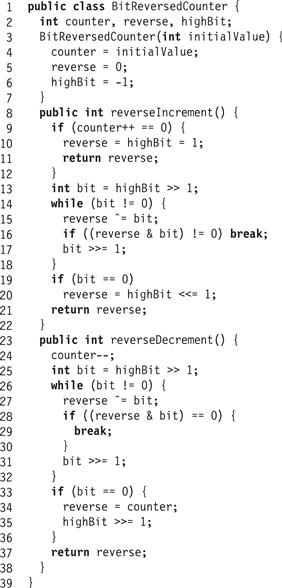

Exercise 180. Fig. 15.16 shows a bit-reversed counter. We could use the bit-reversed counter to manage the next field of the FineGrainedHeap class. Prove the following: for any two consecutive insertions, the two paths from the leaves to the root have no common nodes other than the root. Why is this a useful property for the FineGrainedHeap?

Figure 15.16 A bit-reversed counter.

Exercise 181. Provide the code for the PrioritySkipList class’s add() and remove() methods.

Exercise 182. The PrioritySkipList class used in this chapter is based on the LockFreeSkipList class. Write another PrioritySkipList class based on the LazySkipList class.

Exercise 183. Describe a scenario in the SkipQueue implementation in which contention would arise from multiple concurrent removeMin() method calls.

Exercise 184. The SkipQueue class is quiescently consistent but not linearizable. Here is one way to make this class linearizable by adding a simple time-stamping mechanism. After a node is completely inserted into the SkipQueue, it acquires a timestamp. A thread performing a removeMin() notes the time at which it starts its traversal of the lower level of the SkipQueue, and only considers nodes whose timestamp is earlier than the time at which it started its traversal, effectively ignoring nodes inserted during its traversal. Implement this class and justify why it works.