Chapter 7. Traffic Shaping with Queues and Priorities

In this chapter, we look at how to use traffic shaping to allocate bandwidth resources efficiently and according to a specified policy. If the term traffic shaping seems unfamiliar, rest assured it means what you think it means: that you’ll be altering the way your network allocates resources in order to satisfy the requirements of your users and their applications. With a proper understanding of your network traffic and the applications and users that generate it, you can, in fact, go quite a bit of distance toward “bigger, better, faster, more” just by optimizing your network for the traffic that’s actually supposed to pass there.

A small but powerful arsenal of traffic-shaping tools is at your disposal; all of them work by introducing nondefault behavior into your network setup to bend the realities of your network according to your wishes. Traffic shaping for PF contexts currently comes in two flavors: the once experimental ALTQ (short for alternate queuing) framework, now considered old-style after some 15 years of service, and the newer OpenBSD priorities and queuing system introduced in OpenBSD 5.5.

In the first part of the chapter, we introduce traffic shaping by looking at the features of the new OpenBSD priority and queuing system. If you’re about to set up on OpenBSD 5.5 or newer, you can jump right in, starting with the next section, Always-On Priority and Queues for Traffic Shaping. This is also where the main traffic-shaping concepts are introduced with examples.

On OpenBSD 5.4 and earlier as well as other BSDs where the PF code wasn’t current with OpenBSD 5.5, traffic shaping was the domain of the ALTQ system. On OpenBSD, ALTQ was removed after one transitional release, leaving only the newer traffic-shaping system in place from OpenBSD 5.6 onward. If you’re interested in converting an existing ALTQ setup to the new system, you’ll most likely find Transitioning from ALTQ to Priorities and Queues useful; this section highlights the differences between the older ALTQ system and the new system.

If you’re working with an operating system where the queues system introduced in OpenBSD 5.5 isn’t yet available, you’ll want to study the ALTQ traffic-shaping subsystem, which is described in Directing Traffic with ALTQ. If you’re learning traffic-shaping concepts and want to apply them to an ALTQ setup, please read the first part of this chapter before diving into ALTQ-specific configuration details.

Always-On Priority and Queues for Traffic Shaping

Managing your bandwidth has a lot in common with balancing your checkbook or handling other resources that are either scarce or available in finite quantities. The resource is available in a constant supply with hard upper limits, and you need to allocate the resource with maximum efficiency, according to the priorities set out in your policy or specification.

OpenBSD 5.5 and newer offers several different options for managing your bandwidth resources via classification mechanisms in our PF rule sets. We’ll take a look at what you can do with pure traffic prioritization first and then move on to how to subdivide your bandwidth resources by allocating defined subsets of your traffic to queues.

Note

The always-on priorities were introduced as a teaser of sorts in OpenBSD 5.0. After several years in development and testing, the new queuing system was finally committed in time to be included in OpenBSD 5.5, which was released on May 1, 2014. If you’re starting your traffic shaping from scratch on OpenBSD 5.5 or newer or you’re considering doing so, this section is the right place to start. If you’re upgrading from an earlier OpenBSD version or transitioning from another ALTQ system to a recent OpenBSD, you’ll most likely find the following section, Transitioning from ALTQ to Priorities and Queues, useful.

Shaping by Setting Traffic Priorities

If you’re mainly interested in pushing certain kinds of traffic ahead of others, you may be able to achieve what you want by simply setting priorities: assigning a higher priority to some items so that they receive attention before others.

The prio Priority Scheme

Starting with OpenBSD 5.0, a priority scheme for classifying network traffic on a per-rule basis is available. The range of priorities is from 0 to 7, where 0 is lowest priority. Items assigned priority 7 will skip ahead of everything else, and the default value 3 is automatically assigned for most kinds of traffic. The priority scheme, which you’ll most often hear referred to as prio after the PF syntax keyword, is always enabled, and you can tweak your traffic by setting priorities via your match or pass rules.

For example, to speed up your outgoing SSH traffic to the max, you could put a rule like this in your configuration:

pass proto tcp to port ssh set prio 7

Then your SSH traffic would be served before anything else.

You could then examine the rest of your rule set and decide what traffic is more or less important, what you would like always to reach its destination, and what parts of your traffic you feel matter less.

To push your Web traffic ahead of everything else and bump up the priority for network time and name services, you could amend your configuration with rules like these:

pass proto tcp to port { www https } set prio 7

pass proto { udp tcp } to port { domain ntp } set prio 6Or if you have a rule set that already includes rules that match criteria other than just the port, you could achieve much the same effect by writing your priority traffic shaping as match rules instead:

match proto tcp to port { www https } set prio 7

match proto { udp tcp } to port { domain ntp } set prio 6In some networks, time-sensitive traffic, like Voice over Internet Protocol (VoIP), may need special treatment. For VoIP, a priority setup like this may improve phone conversation quality:

voip_ports="{ 2027 4569 5036 5060 10000:20000 }"

match proto udp to port $voip_ports set prio 7But do check your VoIP application’s documentation for information on what specific ports it uses. In any case, using match rules like these can have a positive effect on your configuration in other ways, too: You can use match rules like the ones in the examples here to separate filtering decisions—such as passing, blocking, or redirecting—from traffic-shaping decisions, and with that separation in place, you’re likely to end up with a more readable and maintainable configuration.

It’s also worth noting that parts of the OpenBSD network stack set default priorities for certain types of traffic that the developers decided was essential to a functional network. If you don’t set any priorities, anything with proto carp and a few other management protocols and packet types will go by priority 6, and all types of traffic that don’t receive a specific classification with a set prio rule will have a default priority of 3.

The Two-Priority Speedup Trick

In the examples just shown, we set different priorities for different types of traffic and managed to get specific types of traffic, such as VoIP and SSH, to move faster than others. But thanks to the design of TCP, which carries the bulk of your traffic, even a simple priority-shaping scheme has more to offer with only minor tweaks to the rule set.

As readers of RFCs and a few practitioners have discovered, the connection-oriented design of TCP means that for each packet sent, the sender will expect to receive an acknowledgment (ACK) packet back within a preset time or matching a defined “window” of sequence numbers. If the sender doesn’t receive the acknowledgment within the expected limit, she assumes the packet was lost in transit and arranges to resend the data.

One other important factor to consider is that by default, packets are handled in the order they arrive. This is known as first in, first out (FIFO), and it means that the essentially dataless ACK packets will be waiting their turn in between the larger data packets. On a busy or congested link, which is exactly where traffic shaping becomes interesting, waiting for ACKs and performing retransmissions can eat measurably into effective bandwidth and slow down all transfers. In fact, concurrent transfers in both directions can slow each other significantly more than the value of their expected data sizes.[39]

Fortunately, a simple and quite popular solution to this problem is at hand: You can use priorities to make sure those smaller packets skip ahead. If you assign two priorities in a match or pass rule, like this:

match out on egress set prio (5, 6)

The first priority will be assigned to the regular traffic, while ACK packets and other packets with a low delay type of service (ToS) will be assigned the second priority and will be served faster than the regular packets.

When a packet arrives, PF detects the ACK packets and puts them on the higher-priority queue. PF also inspects the ToS field on arriving packets. Packets that have the ToS set to low delay to indicate that the sender wants speedier delivery also get the high-priority treatment. When more than one priority is indicated, as in the preceding rule, PF assigns priority accordingly. Packets with other ToS values are processed in the order they arrive, but with ACK packets arriving faster, the sender spends less time waiting for ACKs and resending presumably lost data. The net result is that the available bandwidth is used more efficiently. (The match rule quoted here is the first one I wrote in order to get a feel for the new prio feature—on a test system, of course—soon after it was committed during the OpenBSD 5.0 development cycle. If you put that single match rule on top of an existing rule set, you’ll probably see that the link can take more traffic and more simultaneous connections before noticeable symptoms of congestion turn up.)

See whether you can come up with a way to measure throughout before and after you introduce the two-priorities trick to your traffic shaping, and note the difference before you proceed to the more complex traffic-shaping options.

Introducing Queues for Bandwidth Allocation

We’ve seen that traffic shaping using only priorities can be quite effective, but there will be times when a priorities-only scheme will fall short of your goals. One such scenario occurs when you’re faced with requirements that would be most usefully solved by assigning a higher priority, and perhaps a larger bandwidth share, to some kinds of traffic, such as email and other high-value services, and correspondingly less bandwidth to others. Another such scenario would be when you simply want to apportion your available bandwidth in different-sized chunks to specific services and perhaps set hard upper limits for some types of traffic, while at the same time wanting to ensure that all traffic that you care about gets at least its fair share of available bandwidth. In cases like these, you leave the pure-priority scheme behind, at least as the primary tool, and start doing actual traffic shaping using queues.

Unlike with the priority levels, which are always available and can be used without further preparations, in any rule, queues represent specific parts of your available bandwidth and can be used only after you’ve defined them in terms of available capacity. Queues are a kind of buffer for network packets. Queues are defined with a specific amount of bandwidth, or as a specific portion of available bandwidth, and you can allocate portions of each queue’s bandwidth share to subqueues, or queues within queues, which share the parent queue’s resources. The packets are held in a queue until they’re either dropped or sent according to the queue’s criteria and subject to the queue’s available bandwidth. Queues are attached to specific interfaces, and bandwidth is managed on a per-interface basis, with available bandwidth on a given interface subdivided into the queues you define.

The basic syntax for defining a queue follows this pattern:

queue name on interface bandwidth number [ ,K,M,G] queue name1 parent name bandwidth number[ ,K,M,G] default queue name2 parent name bandwidth number[ ,K,M,G] queue name3 parent name bandwidth number[ ,K,M,G]

The letters following the bandwidth number denote the unit of measurement: K denotes kilobits; M megabits; and G gigabits. When you write only the bandwidth number, it’s interpreted as the number of bits per second. It’s possible to tack on other options to this basic syntax, as we’ll see in later examples.

Note

Subqueue definitions name their parent queue, and one queue needs to be the default queue that receives any traffic not specifically assigned to other queues.

Once queue definitions are in place, you integrate traffic shaping into your rule set by rewriting your pass or match rules to assign traffic to a specific queue.

The HFSC Algorithm

Underlying any queue system you define using the queue system in OpenBSD 5.5 and later is the Hierarchical Fair Service Curve (HFSC) algorithm. HFSC was designed to allocate resources fairly among queues in a hierarchy. One of its interesting features is that it imposes no limits until some part of the traffic reaches a volume that’s close to its preset limits. The algorithm starts shaping just before the traffic reaches a point where it deprives some other queue of its guaranteed minimum share.

Note

All sample configurations we present in this book assign traffic to queues in the outgoing direction because you can realistically control only traffic generated locally and, once limits are reached, any traffic-shaping system will eventually resort to dropping packets in order to make the endpoint back off. As we saw in the earlier examples, all well-behaved TCP stacks will respond to lost ACKs with slower packet rates.

Now that you know at least the basics of the theory behind the OpenBSD queue system, let’s see how queues work.

Splitting Your Bandwidth into Fixed-Size Chunks

You’ll often find that certain traffic should receive a higher priority than other traffic. For example, you’ll often want important traffic, such as mail and other vital services, to have a baseline amount of bandwidth available at all times, while other services, such as peer-to-peer file sharing, shouldn’t be allowed to consume more than a certain amount. To address these kinds of issues, queues offer a wider range of options than the pure-priority scheme.

The first queue example builds on the rule sets from earlier chapters. The scenario is that we have a small local network, and we want to let the users on the local network connect to a predefined set of services outside their own network while also letting users from outside the local network access a Web server and an FTP server somewhere on the local network.

Queue Definition

In the following example, all queues are set up with the root queue, called main, on the external, Internet-facing interface. This approach makes sense mainly because bandwidth is more likely to be limited on the external link than on the local network. In principle, however, allocating queues and running traffic shaping can be done on any network interface.

This setup includes a queue for a total bandwidth of 20Mb with six subqueues.

queue main on $ext_if bandwidth 20M

queue defq parent main bandwidth 3600K default

queue ftp parent main bandwidth 2000K

queue udp parent main bandwidth 6000K

queue web parent main bandwidth 4000K

queue ssh parent main bandwidth 4000K

queue ssh_interactive parent ssh bandwidth 800K

queue ssh_bulk parent ssh bandwidth 3200K

queue icmp parent main bandwidth 400KThe subqueue defq, shown in the preceding example, has a bandwidth allocation of 3600K, or 18 percent of the bandwidth, and is designated as the default queue. This means any traffic that matches a pass rule but that isn’t explicitly assigned to some other queue ends up here.

The other queues follow more or less the same pattern, up to subqueue ssh, which itself has two subqueues (the two indented lines below it). Here, we see a variation on the trick of using two separate priorities to speed up ACK packets, and as we’ll see shortly, the rule that assigns traffic to the two SSH subqueues assigns different priorities. Bulk SSH transfers, typically SCP file transfers, are transmitted with a ToS indicating throughput, while interactive SSH traffic has the ToS flag set to low delay and skips ahead of the bulk transfers. The interactive traffic is likely to be less bandwidth consuming and gets a smaller share of the bandwidth, but it receives preferential treatment because of the higher-priority value assigned to it. This scheme also helps the speed of SCP file transfers because the ACK packets for the SCP transfers will be assigned a higher priority.

Finally, we have the icmp queue, which is reserved for the remaining 400K, or 2 percent, of the bandwidth from the top level. This guarantees a minimum amount of bandwidth for ICMP traffic that we want to pass but that doesn’t match the criteria that would have it assigned to the other queues.

Rule Set

To tie the queues into the rule set, we use the pass rules to indicate which traffic is assigned to the queues and their criteria.

set skip on { lo, $int_if }

pass log quick on $ext_if proto tcp to port ssh

queue (ssh_bulk, ssh_interactive) set prio (5,7)

pass in quick on $ext_if proto tcp to port ftp queue ftp

pass in quick on $ext_if proto tcp to port www queue http

pass out on $ext_if proto udp queue udp

pass out on $ext_if proto icmp queue icmp

pass out on $ext_if proto tcp from $localnet to port $client_out ➊The rules for ssh, ftp, www, udp, and icmp assign traffic to their respective queues, and we note again that the ssh queue’s subqueues are assigned traffic with two different priorities. The last catchall rule ➊ passes all other outgoing traffic from the local network, lumping it into the default defq queue.

You can always let a block of match rules do the queue assignment instead in order to make the configuration even more flexible. With match rules in place, you move the filtering decisions to block, pass, or even redirect to a set of rules elsewhere.

match log quick on $ext_if proto tcp to port ssh

queue (ssh_bulk, ssh_interactive) set prio (5,7)

match in quick on $ext_if proto tcp to port ftp queue ftp

match in quick on $ext_if proto tcp to port www queue http

match out on $ext_if proto udp queue udp

match out on $ext_if proto icmp queue icmpNote that with match rules performing the queue assignment, there’s no need for a final catchall to put the traffic that doesn’t match the other rules into the default queue. Any traffic that doesn’t match these rules and that’s allowed to pass will end up in the default queue.

Upper and Lower Bounds with Bursts

Fixed bandwidth allocations are nice, but network admins with traffic-shaping ambitions tend to look for a little more flexibility once they’ve gotten their feet wet. Wouldn’t it be nice if there were a regime with flexible bandwidth allocation, offering guaranteed lower and upper bounds for bandwidth available to each queue and variable allocations over time—and one that starts shaping only when there’s an actual need to do so?

The good news is that the OpenBSD queues can do just that, courtesy of the underlying HFSC algorithm discussed earlier. HFSC makes it possible to set up queuing regimes with guaranteed minimum allocations and hard upper limits, and you can even have allocations that include burst values to let available capacity vary over time.

Queue Definition

Working from a typical gateway configuration like the ones we’ve altered incrementally over the earlier chapters, we insert this queue definition early in the pf.conf file:

queue rootq on $ext_if bandwidth 20M

queue main parent rootq bandwidth 20479K min 1M max 20479K qlimit 100

queue qdef parent main bandwidth 9600K min 6000K max 18M default

queue qweb parent main bandwidth 9600K min 6000K max 18M

queue qpri parent main bandwidth 700K min 100K max 1200K

queue qdns parent main bandwidth 200K min 12K burst 600K for 3000ms

queue spamd parent rootq bandwidth 1K min 0K max 1K qlimit 300This definition has some characteristics that are markedly different from the previous one in Introducing Queues for Bandwidth Allocation. We start with this rather small hierarchy by splitting the top-level queue, rootq, into two. Next, we subdivide the main queue into several subqueues, all of which have a min value set—the guaranteed minimum bandwidth allocated to the queue. (The max value would set a hard upper limit on the queue’s allocation.) The bandwidth parameter also sets the allocation the queue will have available when it’s backlogged—that is, when it’s started to eat into its qlimit, or queue limit, allocation.

The queue limit parameter works like this: In case of congestion, each queue by default has a pool of 50 slots, the queue limit, to keep packets around when they can’t be transmitted immediately. Here, the top-level queues, main and spamd, both have larger-than-default pools set by their qlimit setting: 100 for main and 300 for spamd. Cranking up these qlimit sizes means we’re a little less likely to drop packets when the traffic approaches the set limits, but it also means that when the traffic shaping kicks in, we’ll see increased latency for connections that end up in these larger pools.

Rule Set

The next step is to tie the newly created queues into the rule set. If you have a filtering regime in place already, the tie-in is simple—just add a few match rules:

match out on $ext_if proto tcp to port { www https }

set queue (qweb, qpri) set prio (5,6)

match out on $ext_if proto { tcp udp } to port domain

set queue (qdns, qpri) set prio (6,7)

match out on $ext_if proto icmp

set queue (qdns, qpri) set prio (6,7)Here, the match rules once again do the ACK packet speedup trick with the high- and low-priority queue assignment, just as we saw earlier in the pure-priority-based system. The only exception is when we assign traffic to our lowest-priority queue (with a slight modification to an existing pass rule), where we really don’t want any speedup.

pass in log on egress proto tcp to port smtp

rdr-to 127.0.0.1 port spamd set queue spamd set prio 0Assigning the spamd traffic to a minimal-sized queue with 0 priority here is intended to slow down the spammers on their way to our spamd. (See Chapter 6 for more on spamd and related matters.)

With the queue assignment and priority setting in place, it should be clear that the queue hierarchy here uses two familiar tricks to make efficient use of available bandwidth. First, it uses a variation of the high- and low-priority mix demonstrated in the earlier pure-priority example. Second, we speed up almost all other traffic, especially the Web traffic, by allocating a small but guaranteed portion of bandwidth for name service lookups. For the qdns queue, we set the burst value with a time limit: After 3000 milliseconds, the allocation goes down to a minimum of 12K to fit within the total 200K quota. Short-lived burst values like this can be useful to speed connections that transfer most of their payload during the early phases.

It may not be immediately obvious from this example, but HFSC requires that traffic be assigned only to leaf queues, or queues without subqueues. That means it’s possible to assign traffic to main’s subqueues—qpri, qdef, qweb, and qdns—as well as rootq’s subqueue—spamd—as we just did with the match and pass rules, but not to rootq or main themselves. With all queue assignments in place, we can use systat queues to show the queues and their traffic:

6 users Load 0.31 0.28 0.34 Tue May 19 21:31:54 2015 QUEUE BW SCH PR PKTS BYTES DROP_P DROP_B QLEN BORR SUSP P/S B/S rootq 20M 0 0 0 0 0 main 20M 0 0 0 0 0 qdef 9M 48887 15M 0 0 0 qweb 9M 18553 8135K 0 0 0 qpri 600K 37549 2407K 0 0 0 qdns 200K 15716 1568K 0 0 0 spamd 1K 10590 661K 126 8772 47

The queues are shown indented to indicate their hierarchy, from root to leaf queues. The main queue and its subqueues—qpri, qdef, qweb, and qdns—are shown with their bandwidth allocations and number of bytes and packets passed. The DROP_P and DROP_B columns, which show the number of packets and bytes dropped, would appear if we had been forced to drop packets at this stage. QLEN is the number of packets waiting for processing, while the final two columns show live updates of packets and bytes per second.

For a more detailed view, use pfctl -vvsq to show the queues and their traffic:

queue rootq on xl0 bandwidth 20M qlimit 50 [ pkts: 0 bytes: 0 dropped pkts: 0 bytes: 0 ] [ qlength: 0/ 50 ] [ measured: 0.0 packets/s, 0 b/s ] queue main parent rootq on xl0 bandwidth 20M, min 1M, max 20M qlimit 100 [ pkts: 0 bytes: 0 dropped pkts: 0 bytes: 0 ] [ qlength: 0/100 ] [ measured: 0.0 packets/s, 0 b/s ] queue qdef parent main on xl0 bandwidth 9M, min 6M, max 18M default qlimit 50 [ pkts: 1051 bytes: 302813 dropped pkts: 0 bytes: 0 ] [ qlength: 0/ 50 ] [ measured: 2.6 packets/s, 5.64Kb/s ] queue qweb parent main on xl0 bandwidth 9M, min 6M, max 18M qlimit 50 [ pkts: 1937 bytes: 1214950 dropped pkts: 0 bytes: 0 ] [ qlength: 0/ 50 ] [ measured: 3.6 packets/s, 13.65Kb/s ] queue qpri parent main on xl0 bandwidth 600K, max 1M qlimit 50 [ pkts: 2169 bytes: 143302 dropped pkts: 0 bytes: 0 ] [ qlength: 0/ 50 ] [ measured: 6.6 packets/s, 3.55Kb/s ] queue qdns parent main on xl0 bandwidth 200K, min 12K burst 600K for 3000ms qlimit 50 [ pkts: 604 bytes: 65091 dropped pkts: 0 bytes: 0 ] [ qlength: 0/ 50 ] [ measured: 1.6 packets/s, 1.31Kb/s ] queue spamd parent rootq on xl0 bandwidth 1K, max 1K qlimit 300 [ pkts: 884 bytes: 57388 dropped pkts: 0 bytes: 0 ] [ qlength: 176/300 ] [ measured: 1.9 packets/s, 1Kb/s ]

This view shows that the queues receive traffic roughly as expected with the site’s typical workload. Notice that only a few moments after the rule set has been reloaded, the spamd queue is already backed up more than halfway to its qlimit setting, which seems to indicate that the queues are reasonably dimensioned to actual traffic.

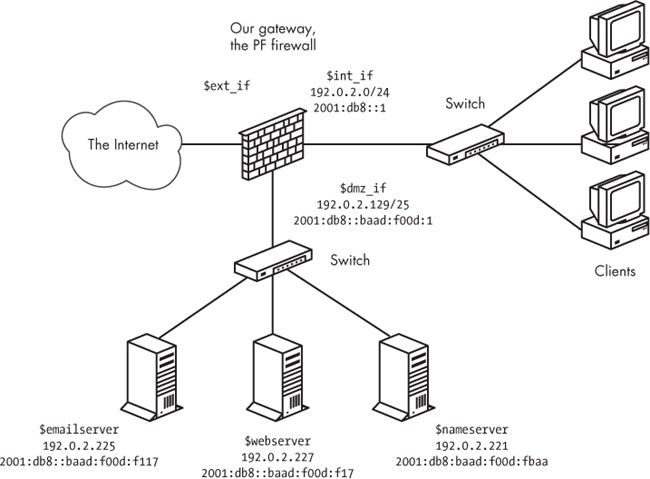

The DMZ Network, Now with Traffic Shaping

In Chapter 5, we set up a network with a single gateway and all externally visible services configured on a separate DMZ (demilitarized zone) network so that all traffic to the servers from both the Internet and the internal network had to pass through the gateway. That network schematic, illustrated in Chapter 5, is shown again in Figure 7-1. Using the rule set from Chapter 5 as the starting point, we’ll add some queuing in order to optimize our network resources. The physical and logical layout of the network will not change.

The most likely bottleneck for this network is the bandwidth for the connection between the gateway’s external interface and the Internet. Although the bandwidth elsewhere in our setup isn’t infinite, of course, the available bandwidth on any interface in the local network is likely to be less limiting than the bandwidth actually available for communication with the outside world. In order to make services available with the best possible performance, we need to set up the queues so that the bandwidth available at the site is made available to the traffic we want to allow. The interface bandwidth on the DMZ interface is likely either 100Mb or 1Gb, while the actual available bandwidth for connections from outside the local network is considerably smaller. This consideration shows up in our queue definitions, where the actual bandwidth available for external traffic is the main limitation in the queue setup.

queue ext on $ext_if bandwidth 2M

queue ext_main parent ext bandwidth 500K default

queue ext_web parent ext bandwidth 500K

queue ext_udp parent ext bandwidth 400K

queue ext_mail parent ext bandwidth 600K

queue dmz on $dmz_if bandwidth 100M

queue ext_dmz parent dmz bandwidth 2M

queue ext_dmz_web parent ext_dmz bandwidth 800K default

queue ext_dmz_udp parent ext_dmz bandwidth 200K

queue ext_dmz_mail parent ext_dmz bandwidth 1M

queue dmz_main parent dmz bandwidth 25M

queue dmz_web parent dmz bandwidth 25M

queue dmz_udp parent dmz bandwidth 20M

queue dmz_mail parent dmz bandwidth 20MNotice that for each interface, there’s a root queue with a bandwidth limitation that determines the allocation for all queues attached to that interface. In order to use the new queuing infrastructure, we need to make some changes to the filtering rules, too.

Note

Because any traffic not explicitly assigned to a specific queue is assigned to the default queue for the interface, be sure to tune your filtering rules as well as your queue definitions to the actual traffic in your network.

The main part of the filtering rules could end up looking like this after adding the queues:

pass in on $ext_if proto { tcp, udp } to $nameservers port domain

set queue ext_udp set prio (6,5)

pass in on $int_if proto { tcp, udp } from $localnet to $nameservers

port domain

pass out on $dmz_if proto { tcp, udp } to $nameservers port domain

set queue ext_dmz_udp set prio (6,5)

pass out on $dmz_if proto { tcp, udp } from $localnet to $nameservers

port domain set queue dmz_udp

pass in on $ext_if proto tcp to $webserver port $webports set queue ext_web

pass in on $int_if proto tcp from $localnet to $webserver port $webports

pass out on $dmz_if proto tcp to $webserver port $webports

set queue ext_dmz_web

pass out on $dmz_if proto tcp from $localnet to $webserver port $webports

set queue dmz_web

pass in log on $ext_if proto tcp to $mailserver port smtp

pass in log on $ext_if proto tcp from $localnet to $mailserver port smtp

pass in log on $int_if proto tcp from $localnet to $mailserver port $email

pass out log on $dmz_if proto tcp to $mailserver port smtp set queue ext_mail

pass in on $dmz_if proto tcp from $mailserver to port smtp set queue dmz_mail

pass out log on $ext_if proto tcp from $mailserver to port smtp

set queue ext_dmz_mailNotice that only traffic that will pass either the DMZ or the external interface is assigned to queues. In this configuration, with no externally accessible services on the internal network, queuing on the internal interface wouldn’t make much sense because that’s likely the part of the network with the least restricted available bandwidth. Also, as in earlier examples, there’s a case to be made for separating the queue assignments from the filtering part of the rule set by making a block of match rules responsible for queue assignment.

Using Queues to Handle Unwanted Traffic

So far, we’ve focused on queuing as a way to make sure specific kinds of traffic are let through as efficiently as possible. Now, we’ll look at two examples that present a slightly different way to identify and handle unwanted traffic using various queuing-related tricks to keep miscreants in line.

Overloading to a Tiny Queue

In Turning Away the Brutes, we used a combination of state-tracking options and overload rules to fill a table of addresses for special treatment. The special treatment we demonstrated in Chapter 6 was to cut all connections, but it’s equally possible to assign overload traffic to a specific queue instead. For example, consider the rule from our first queue example, shown here.

pass log quick on $ext_if proto tcp to port ssh flags S/SA

keep state queue (ssh_bulk, ssh_interactive) set prio (5,7)To create a variation of the overload table trick from Chapter 6, add state-tracking options, like this:

pass log quick on $ext_if proto tcp to port ssh flags S/SA

keep state (max-src-conn 15, max-src-conn-rate 5/3,

overload <bruteforce> flush global) queue (ssh_bulk, ssh_interactive)

set prio (5,7)Then, make one of the queues slightly smaller:

queue smallpipe parent main bandwidth 512

And assign traffic from miscreants to the small-bandwidth queue with this rule:

pass inet proto tcp from <bruteforce> to port $tcp_services queue smallpipe

As a result, the traffic from the bruteforcers would pass, but with a hard upper limit of 512 bits per second. (It’s worth noting that tiny bandwidth allocations may be hard to enforce on high-speed links due to the network stack’s timer resolution. If the allocation is small enough relative to the capacity of the link, packets that exceed the stated per-second maximum allocation may be transferred anyway, before the bandwidth limit kicks in.) It might also be useful to supplement rules like these with table-entry expiry, as described in Tidying Your Tables with pfctl.

Queue Assignments Based on Operating System Fingerprint

Chapter 6 covered several ways to use spamd to cut down on spam. If running spamd isn’t an option in your environment, you can use a queue and rule set based on the knowledge that machines that send spam are likely to run a particular operating system. (Let’s call that operating system Windows.)

PF has a fairly reliable operating system fingerprinting mechanism, which detects the operating system at the other end of a network connection based on characteristics of the initial SYN packets at connection setup. The following may be a simple substitute for spamd if you’ve determined that legitimate mail is highly unlikely to be delivered from systems that run that particular operating system.

pass quick proto tcp from any os "Windows" to $ext_if

port smtp set queue smallpipeHere, email traffic originating from hosts that run a particular operating system get no more than 512 bits per second of your bandwidth.

Transitioning from ALTQ to Priorities and Queues

If you already have configurations that use ALTQ for traffic shaping and you’re planning a switch to OpenBSD 5.5 or newer, this section contains some pointers for how to manage the transition. The main points are these:

The rules after transition are likely simpler. The OpenBSD 5.5 and newer traffic-shaping system has done away with the somewhat arcane ALTQ syntax with its selection of queuing algorithms, and it distinguishes clearly between queues and pure-priority shuffling. In most cases, your configuration becomes significantly more readable and maintainable after a conversion to the new traffic-shaping system.

For simple configurations, set prio is enough. The simplest queue discipline in ALTQ was priq, or priority queues. The most common simple use case was the two-priority speedup trick first illustrated by Daniel Hartmeier in the previously cited article. The basic two-priority configuration looks like this:

ext_if="kue0"

altq on $ext_if priq bandwidth 100Kb queue { q_pri, q_def }

queue q_pri priority 7

queue q_def priority 1 priq(default)

pass out on $ext_if proto tcp from $ext_if queue (q_def, q_pri)

pass in on $ext_if proto tcp to $ext_if queue (q_def, q_pri)In OpenBSD 5.5 and newer, the equivalent effect can be achieved with no queue definitions. Instead, you assign two priorities in a match or pass rule, like this:

match out on egress set prio (5, 6)

Here, the first priority will be assigned to regular traffic, while ACK and other packets with a low-delay ToS will be assigned the second priority and will be served faster than the regular packets. The effect is the same as in the ALTQ example we just quoted, with the exception of defined bandwidth limits and the somewhat dubious effect of traffic shaping on incoming traffic.

Priority queues can for the most part be replaced by set prio constructs. For pure-priority differentiation, applying set prio on a per pass or match rule basis is simpler than defining queues and assigning traffic and affects only the packet priority. ALTQ allowed you to define CBQ or HFSC queues that also had a priority value as part of their definition. Under the new queuing system, assigning priority happens only in match or pass rules, but if your application calls for setting both priority and queue assignment in the same rule, the new syntax allows for that, too:

pass log quick on $ext_if proto tcp to port ssh

queue (ssh_bulk, ssh_interactive) set prio (5,7)The effect is similar to the previous behavior shown in Splitting Your Bandwidth into Fixed-Size Chunks, and this variant may be particularly helpful during transition.

Priorities are now always important. Keep in mind that the default is 3. It’s important to be aware that traffic priorities are always enabled since OpenBSD 5.0, and they need to be taken into consideration even when you’re not actively assigning priorities. In old-style configurations that employed the two-priority trick to speed up ACKs and by extension all traffic, the only thing that was important was that there were two different priorities in play. The low-delay packets would be assigned to the higher-priority queue, and the net effect would be that traffic would likely pass faster, with more efficient bandwidth use than with the default FIFO queue. Now the default priority is 3, and setting the priority for a queue to 0, as a few older examples do, will mean that the traffic assigned that priority will be considered ready to pass only when there’s no higher-priority traffic left to handle.

For actual bandwidth shaping, HFSC works behind the scenes. Once you’ve determined that your specification calls for slicing available bandwidth into chunks, the underlying algorithm is always HFSC. The variety of syntaxes for different types of queues is gone. HFSC was chosen for its flexibility as well as the fact that it starts actively shaping traffic only once the traffic approaches one of the limits set by your queuing configuration. In addition, it’s possible to create CBQ-like configurations by limiting the queue definitions to only bandwidth declarations. Splitting Your Bandwidth into Fixed-Size Chunks (mentioned earlier) demonstrates a static configuration that implements CBQ as a subset of HFSC.

You can transition from ALTQ via the oldqueue mechanism. OpenBSD 5.5 supports legacy ALTQ configurations with only one minor change to configurations: The queue keyword was needed as a reserved word for the new queuing system, so ALTQ queues need to be declared as oldqueue instead. Following that one change (a pure search and replace operation that you can even perform just before starting your operating system upgrade), the configuration will work as expected.

If your setup is sufficiently complicated, go back to specifications and reimplement. The examples in this chapter are somewhat stylized and rather simple. If you have running configurations that have been built up incrementally over several years and have reached a complexity level, orders of magnitude larger than those described here, the new syntax may present an opportunity to define what your setup is for and produce a specification that is fit to reimplement in a cleaner and more maintainable configuration.

Going the oldqueue route and tweaking from there will work to some degree, but it may be easier to make the transition via a clean reimplementation from revised specification in a test environment where you can test whether your accumulated assumptions hold up in a the context of the new traffic-shaping system. Whatever route you choose for your transition, you’re more or less certain to end up with a more readable and maintainable configuration after your switch to OpenBSD 5.5 or newer.

Directing Traffic with ALTQ

ALTQ is the very flexible legacy mechanism for network traffic shaping, which was integrated into PF on OpenBSD[41] in time for the OpenBSD 3.3 release by Henning Brauer, who’s also the main developer of the priorities and queues system introduced in OpenBSD 5.5 (described in the previous sections of this chapter). OpenBSD 3.3 onward moved all ALTQ configuration into pf.conf to ease the integration of traffic shaping and filtering. PF ports to other BSDs were quick to adopt at least some optional ALTQ integration.

Note

OpenBSD 5.5 introduced a new queue system for traffic shaping with a radically different (and more readable) syntax that complements the always-on priority system introduced in OpenBSD 5.0. The new system is intended to replace ALTQ entirely after one transitional release. The rest of this chapter is useful only if you’re interested in learning about how to set up or maintain an ALTQ-based system.

Basic ALTQ Concepts

As the name suggests, ALTQ configurations are totally queue-centric. As in the more recent traffic-shaping system, ALTQ queues are defined in terms of bandwidth and attached to interfaces. Queues can be assigned priority, and in some contexts, they can have subqueues that receive a share of the parent queue’s bandwidth.

The general syntax for ALTQ queues looks like this:

altq on interface type [options ... ] main_queue { sub_q1, sub_q2 ..} queue sub_q1 [ options ... ] queue sub_q2 [ options ... ] { subA, subB, ... } [...] pass [ ... ] queue sub_q1 pass [ ... ] queue sub_q2

Note

On OpenBSD 5.5 and newer, ALTQ queues are denoted oldqueue instead of queue due to an irresolvable syntax conflict with the new queuing subsystem.

Once queue definitions are in place, you integrate traffic shaping into your rule set by rewriting your pass or match rules to assign traffic to a specific queue. Any traffic that you don’t explicitly assign to a specific queue gets lumped in with everything else in the default queue.

Queue Schedulers, aka Queue Disciplines

In the default networking setup, with no queuing, the TCP/IP stack and its filtering subsystem process the packets according to the FIFO discipline.

ALTQ offers three queue-scheduler algorithms, or disciplines, that can alter this behavior slightly. The types are priq, cbq, and hfsc. Of these, cbq and hfsc queues can have several levels of subqueues. The priq queues are essentially flat, with only one queue level. Each of the disciplines has its own syntax specifics, and we’ll address those in the following sections.

priq

Priority-based queues are defined purely in terms of priority within the total declared bandwidth. For priq queues, the allowed priority range is 0 through 15, where a higher value earns preferential treatment. Packets that match the criteria for higher-priority queues are serviced before the ones matching lower-priority queues.

cbq

Class-based queues are defined as constant-sized bandwidth allocations, as a percentage of the total available or in units of kilobits, megabits, or gigabits per second. A cbq queue can be subdivided into queues that are also assigned priorities in the range 0 to 7, and again, a higher priority means preferential treatment.

hfsc

The hfsc discipline uses the HFSC algorithm to ensure a “fair” allocation of bandwidth among the queues in a hierarchy. HFSC comes with the possibility of setting up queuing regimes with guaranteed minimum allocations and hard upper limits. Allocations can even vary over time, and you can even have fine-grained priority with a 0 to 7 range.

Because both the algorithm and the corresponding setup with ALTQ are fairly complicated, with a number of tunable parameters, most ALTQ practitioners tend to stick with the simpler queue types. Yet the ones who claim to understand HFSC swear by it.

Setting Up ALTQ

Enabling ALTQ may require some extra steps, depending on your choice of operating system.

ALTQ on OpenBSD

On OpenBSD 5.5, all supported queue disciplines are compiled into the GENERIC and GENERIC.MP kernels. Check that your OpenBSD version still supports ALTQ. If so, the only configuration you need to do involves editing your pf.conf.

ALTQ on FreeBSD

On FreeBSD, make sure that your kernel has ALTQ and the ALTQ queue discipline options compiled in. The default FreeBSD GENERIC kernel doesn’t have ALTQ options enabled, as you may have noticed from the messages you saw when running the /etc/rc.d/pf script to enable PF. The relevant options are as follows:

options ALTQ options ALTQ_CBQ # Class Bases Queuing (CBQ) options ALTQ_RED # Random Early Detection (RED) options ALTQ_RIO # RED In/Out options ALTQ_HFSC # Hierarchical Packet Scheduler (HFSC) options ALTQ_PRIQ # Priority Queuing (PRIQ) options ALTQ_NOPCC # Required for SMP build

The ALTQ option is needed to enable ALTQ in the kernel, but on SMP systems, you also need the ALTQ_NOPCC option. Depending on which types of queues you’ll be using, you’ll need to enable at least one of these: ALTQ_CBQ, ALTQ_PRIQ, or ALTQ_HFSC. Finally, you can enable the congestion-avoidance techniques random early detection (RED) and RED In/Out with the ALTQ_RED and ALTQ_RIO options, respectively. (See the FreeBSD Handbook for information on how to compile and install a custom kernel with these options.)

ALTQ on NetBSD

ALTQ was integrated into the NetBSD 4.0 PF implementation and is supported in NetBSD 4.0 and later releases. NetBSD’s default GENERIC kernel configuration doesn’t include the ALTQ-related options, but the GENERIC configuration file comes with all relevant options commented out for easy inclusion. The main kernel options are these:

options ALTQ # Manipulate network interfaces' output queues options ALTQ_CBQ # Class-Based queuing options ALTQ_HFSC # Hierarchical Fair Service Curve options ALTQ_PRIQ # Priority queuing options ALTQ_RED # Random Early Detection

The ALTQ option is needed to enable ALTQ in the kernel. Depending on the types of queues you’ll be using, you must enable at least one of these: ALTQ_CBQ, ALTQ_PRIQ, or ALTQ_HFSC.

Using ALTQ requires you to compile PF into the kernel because the PF loadable module doesn’t support ALTQ functionality. (See the NetBSD PF documentation at http://www.netbsd.org/Documentation/network/pf.html for the most up-to-date information.)

Priority-Based Queues

The basic concept behind priority-based queues (priq) is fairly straightforward. Within the total bandwidth allocated to the main queue, only traffic priority matters. You assign queues a priority value in the range 0 through 15, where a higher value means that the queue’s requests for traffic are serviced sooner.

Using ALTQ Priority Queues to Improve Performance

Daniel Hartmeier discovered a simple yet effective way to improve the throughput for his home network by using ALTQ priority queues. Like many people, he had his home network on an asymmetric connection, with total usable bandwidth low enough that he wanted better bandwidth utilization. In addition, when the line was running at or near capacity, oddities started appearing. One symptom in particular seemed to suggest room for improvement: Incoming traffic (downloads, incoming mail, and such) slowed down disproportionately whenever outgoing traffic started—more than could be explained by measuring the raw amount of data transferred. It all came back to a basic feature of TCP.

When a TCP packet is sent, the sender expects acknowledgment (in the form of an ACK packet) from the receiver and will wait a specified time for it to arrive. If the ACK doesn’t arrive within that time, the sender assumes that the packet hasn’t been received and resends it. And because in a default setup, packets are serviced sequentially by the interface as they arrive, ACK packets, with essentially no data payload, end up waiting in line while the larger data packets are transferred.

If ACK packets could slip in between the larger data packets, the result would be more efficient use of available bandwidth. The simplest practical way to implement such a system with ALTQ is to set up two queues with different priorities and integrate them into the rule set. Here are the relevant parts of the rule set.

ext_if="kue0"

altq on $ext_if priq bandwidth 100Kb queue { q_pri, q_def }

queue q_pri priority 7

queue q_def priority 1 priq(default)

pass out on $ext_if proto tcp from $ext_if queue (q_def, q_pri)

pass in on $ext_if proto tcp to $ext_if queue (q_def, q_pri)Here, the priority-based queue is set up on the external interface with two subordinate queues. The first subqueue, q_pri, has a high-priority value of 7; the other subqueue, q_def, has a significantly lower-priority value of 1.

This seemingly simple rule set works by exploiting how ALTQ treats queues with different priorities. Once a connection is set up, ALTQ inspects each packet’s ToS field. ACK packets have the ToS delay bit set to low, which indicates that the sender wanted the speediest delivery possible. When ALTQ sees a low-delay packet and queues of differing priorities are available, it assigns the packet to the higher-priority queue. This means that the ACK packets skip ahead of the lower-priority queue and are delivered more quickly, which in turn means that data packets are serviced more quickly. The net result is better performance than a pure FIFO configuration with the same hardware and available bandwidth. (Daniel Hartmeier’s article about this version of his setup, cited previously, contains a more detailed analysis.)

Using a match Rule for Queue Assignment

In the previous example, the rule set was constructed the traditional way, with the queue assignment as part of the pass rules. However, this isn’t the only way to do queue assignment. When you use match rules (available in OpenBSD 4.6 and later), it’s incredibly easy to retrofit this simple priority-queuing regime onto an existing rule set.

If you worked through the examples in Chapter 3 and Chapter 4, your rule set probably has a match rule that applies nat-to on your outgoing traffic. To introduce priority-based queuing to your rule set, you first add the queue definitions and make some minor adjustments to your outgoing match rule.

Start with the queue definition from the preceding example and adjust the total bandwidth to local conditions, as shown in here.

altq on $ext_if priq bandwidth $ext_bw queue { q_pri, q_def }

queue q_pri priority 7

queue q_def priority 1 priq(default)This gives the queues whatever bandwidth allocation you define with the ext_bw macro.

The simplest and quickest way to integrate the queues into your rule set is to edit your outgoing match rule to read something like this:

match out on $ext_if from $int_if:network nat-to ($ext_if) queue (q_def, q_pri)

Reload your rule set, and the priority-queuing regime is applied to all traffic that’s initiated from your local network.

You can use the systat command to get a live view of how traffic is assigned to your queues.

$ sudo systat queuesThis will give you a live display that looks something like this:

2 users Load 0.39 0.27 0.30 Fri Apr 1 16:33:44 2015 QUEUE BW SCH PR PKTS BYTES DROP_P DROP_B QLEN BORRO SUSPE P/S B/S q_pri priq 7 21705 1392K 0 0 0 12 803 q_def priq 12138 6759K 0 0 0 9 4620

Looking at the numbers in the PKTS (packets) and BYTES columns, you see a clear indication that the queuing is working as intended.

The q_pri queue has processed a rather large number of packets in relation to the amount of data, just as we expected. The ACK packets don’t take up a lot of space. On the other hand, the traffic assigned to the q_def queue has more data in each packet, and the numbers show essentially the reverse packet numbers–to–data size ratio as in to the q_pri queue.

Note

systat is a rather capable program on all BSDs, and the OpenBSD version offers several views that are relevant to PF and that aren’t found in the systat variants on the other systems as of this writing. We’ll be looking at systat again in the next chapter. In the meantime, read the man pages and play with the program. It’s a very useful tool for getting to know your system.

Class-Based Bandwidth Allocation for Small Networks

Maximizing network performance generally feels nice. However, you may find that your network has other needs. For example, it might be important for some traffic—such as mail and other vital services—to have a baseline amount of bandwidth available at all times, while other services—peer-to-peer file sharing comes to mind—shouldn’t be allowed to consume more than a certain amount. To address these kinds of requirements or concerns, ALTQ offers the class-based queue (cbq) discipline with a slightly larger set of options.

To illustrate how to use cbq, we’ll build on the rule sets from previous chapters within a small local network. We want to let the users on the local network connect to a predefined set of services outside their own network and let users from outside the local network access a Web server and an FTP server somewhere on the local network.

Queue Definition

All queues are set up on the external, Internet-facing interface. This approach makes sense mainly because bandwidth is more likely to be limited on the external link than on the local network. In principle, however, allocating queues and running traffic shaping can be done on any network interface. The example setup shown here includes a cbq queue for a total bandwidth of 2Mb with six subqueues.

altq on $ext_if cbq bandwidth 2Mb queue { main, ftp, udp, web, ssh, icmp }

queue main bandwidth 18% cbq(default borrow red)

queue ftp bandwidth 10% cbq(borrow red)

queue udp bandwidth 30% cbq(borrow red)

queue web bandwidth 20% cbq(borrow red)

queue ssh bandwidth 20% cbq(borrow red) { ssh_interactive, ssh_bulk }

queue ssh_interactive priority 7 bandwidth 20%

queue ssh_bulk priority 5 bandwidth 80%

queue icmp bandwidth 2% cbqThe subqueue main has 18 percent of the bandwidth and is designated as the default queue. This means any traffic that matches a pass rule but isn’t explicitly assigned to some other queue ends up here. The borrow and red keywords mean that the queue may “borrow” bandwidth from its parent queue, while the system attempts to avoid congestion by applying the RED algorithm.

The other queues follow more or less the same pattern up to the subqueue ssh, which itself has two subqueues with separate priorities. Here, we see a variation on the ACK priority example. Bulk SSH transfers, typically SCP file transfers, are transmitted with a ToS indicating throughput, while interactive SSH traffic has the ToS flag set to low delay and skips ahead of the bulk transfers. The interactive traffic is likely to be less bandwidth consuming and gets a smaller share of the bandwidth, but it receives preferential treatment because of the higher-priority value assigned to it. This scheme also helps the speed of SCP file transfers because the ACK packets for the SCP transfers will be assigned to the higher-priority subqueue.

Finally, we have the icmp queue, which is reserved for the remaining 2 percent of the bandwidth from the top level. This guarantees a minimum amount of bandwidth for ICMP traffic that we want to pass but that doesn’t match the criteria for being assigned to the other queues.

Rule Set

To make it all happen, we use these pass rules, which indicate which traffic is assigned to the queues and their criteria:

set skip on { lo, $int_if }

pass log quick on $ext_if proto tcp to port ssh queue (ssh_bulk, ssh_

interactive)

pass in quick on $ext_if proto tcp to port ftp queue ftp

pass in quick on $ext_if proto tcp to port www queue http

pass out on $ext_if proto udp queue udp

pass out on $ext_if proto icmp queue icmp

pass out on $ext_if proto tcp from $localnet to port $client_outThe rules for ssh, ftp, www, udp, and icmp assign traffic to their respective queues. The last catchall rule passes all other traffic from the local network, lumping it into the default main queue.

A Basic HFSC Traffic Shaper

The simple schedulers we have looked at so far can make for efficient setups, but network admins with traffic-shaping ambitions tend to look for a little more flexibility than can be found in the pure-priority-based queues or the simple class-based variety. The HFSC queuing algorithm (hfsc in pf.conf terminology) offers flexible bandwidth allocation, guaranteed lower and upper bounds for bandwidth available to each queue, and variable allocations over time, and it only starts shaping when there’s an actual need. However, the added flexibility comes at a price: The setup is a tad more complex than the other ALTQ types, and tuning your setup for an optimal result can be quite an interesting process.

Queue Definition

First, working from the same configuration we altered slightly earlier, we insert this queue definition early in the pf.conf file:

altq on $ext_if bandwidth $ext_bw hfsc queue { main, spamd }

queue main bandwidth 99% priority 7 qlimit 100 hfsc (realtime 20%, linkshare 99%)

{ q_pri, q_def, q_web, q_dns }

queue q_pri bandwidth 3% priority 7 hfsc (realtime 0, linkshare 3% red )

queue q_def bandwidth 47% priority 1 hfsc (default realtime 30% linkshare 47% red)

queue q_web bandwidth 47% priority 1 hfsc (realtime 30% linkshare 47% red)

queue q_dns bandwidth 3% priority 7 qlimit 100 hfsc (realtime (30Kb 3000 12Kb),

linkshare 3%)

queue spamd bandwidth 0% priority 0 qlimit 300 hfsc (realtime 0, upperlimit 1%,

linkshare 1%)The hfsc queue definitions take slightly different parameters than the simpler disciplines. We start off with this rather small hierarchy by splitting the top-level queue into two. At the next level, we subdivide the main queue into several subqueues, each with a defined priority. All the subqueues have a realtime value set—the guaranteed minimum bandwidth allocated to the queue. The optional upperlimit sets a hard upper limit on the queue’s allocation. The linkshare parameter sets the allocation the queue will have available when it’s backlogged—that is, when it’s started to eat into its qlimit allocation.

In case of congestion, each queue by default has a pool of 50 slots, the queue limit (qlimit), to keep packets around when they can’t be transmitted immediately. In this example, the top-level queues main and spamd both have larger-than-default pools set by their qlimit setting: 100 for main and 300 for spamd. Cranking up queue sizes here means we’re a little less likely to drop packets when the traffic approaches the set limits, but it also means that when the traffic shaping kicks in, we’ll see increased latency for connections that end up in these larger than default pools.

The queue hierarchy here uses two familiar tricks to make efficient use of available bandwidth:

It uses a variation of the high- and low-priority mix demonstrated in the earlier pure-priority example.

We speed up almost all other traffic (and most certainly the Web traffic that appears to be the main priority here) by allocating a small but guaranteed portion of bandwidth for name service lookups. For the

q_dnsqueue, we set up therealtimevalue with a time limit—after3000milliseconds, therealtimeallocation goes down to12Kb. This can be useful to speed connections that transfer most of their payload during the early phases.

Rule Set

Next, we tie the newly created queues into the rule set. If you have a filtering regime in place already, which we’ll assume you do, the tie-in becomes amazingly simple, accomplished by adding a few match rules.

match out on $ext_if from $air_if:network nat-to ($ext_if)

queue (q_def, q_pri)

match out on $ext_if from $int_if:network nat-to ($ext_if)

queue (q_def, q_pri)

match out on $ext_if proto tcp to port { www https } queue (q_web, q_pri)

match out on $ext_if proto { tcp udp } to port domain queue (q_dns, q_pri)

match out on $ext_if proto icmp queue (q_dns, q_pri)Here, the match rules once again do the ACK packet speedup trick with the high- and low-priority queue assignment, just as you saw earlier in the pure-priority-based system. The only exception is when we assign traffic to our lowest-priority queue, where we really don’t care to have any speedup at all.

pass in log on egress proto tcp to port smtp rdr-to 127.0.0.1 port spamd queue spamd

This rule is intended to slow down the spammers a little more on their way to our spamd. With a hierarchical queue system in place, systat queues shows the queues and their traffic as a hierarchy, too.

2 users Load 0.22 0.25 0.25 Fri Apr 3 16:43:37 2015 QUEUE BW SCH PRIO PKTS BYTES DROP_P DROP_B QLEN BORROW SUSPEN P/S B/S root_nfe0 20M hfsc 0 0 0 0 0 0 0 0 main 19M hfsc 7 0 0 0 0 0 0 0 q_pri 594K hfsc 7 1360 82284 0 0 0 11 770 q_def 9306K hfsc 158 15816 0 0 0 0.2 11 q_web 9306K hfsc 914 709845 0 0 0 50 61010 q_dns 594K hfsc 7 196 17494 0 0 0 3 277 spamd 0 hfsc 0 431 24159 0 0 0 2 174

The root queue is shown as attached to the physical interface—as nfe0 and root_nfe0, in this case. main and its subqueues—q_pri, q_def, q_web, and q_dns—are shown with their bandwidth allocations and number of bytes and packets passed. The DROP_P and DROP_B columns are where number of packets and bytes dropped, respectively, would appear if we had been forced to drop packets at this stage. The final two columns show live updates of packets per second and bytes per second.

Queuing for Servers in a DMZ

In Chapter 5, we set up a network with a single gateway but with all externally visible services configured on a separate DMZ network. That way, all traffic to the servers from both the Internet and the internal network had to pass through the gateway (see Figure 7-1).

With the rule set from Chapter 5 as our starting point, we’ll add some queuing in order to optimize our network resources. The physical and logical layout of the network will not change. The most likely bottleneck for this network is the bandwidth for the connection between the gateway’s external interface and the Internet at large. The bandwidth elsewhere in our setup isn’t infinite, of course, but the available bandwidth on any interface in the local network is likely to be less of a limiting factor than the bandwidth actually available for communication with the outside world. For services to be available with the best possible performance, we need to set up the queues so the bandwidth available at the site is made available to the traffic we want to allow.

In our example, it’s likely that the interface bandwidth on the DMZ interface is either 100Mb or 1Gb, while the actual available bandwidth for connections from outside the local network is considerably smaller. This consideration shows up in our queue definitions, where you clearly see that the bandwidth available for external traffic is the main limitation in the queue setup.

total_ext = 2Mb

total_dmz = 100Mb

altq on $ext_if cbq bandwidth $total_ext queue { ext_main, ext_web, ext_udp,

ext_mail, ext_ssh }

queue ext_main bandwidth 25% cbq(default borrow red) { ext_hi, ext_lo }

queue ext_hi priority 7 bandwidth 20%

queue ext_lo priority 0 bandwidth 80%

queue ext_web bandwidth 25% cbq(borrow red)

queue ext_udp bandwidth 20% cbq(borrow red)

queue ext_mail bandwidth 30% cbq(borrow red)

altq on $dmz_if cbq bandwidth $total_dmz queue { ext_dmz, dmz_main, dmz_web,

dmz_udp, dmz_mail }

queue ext_dmz bandwidth $total_ext cbq(borrow red) queue { ext_dmz_web,

ext_dmz_udp, ext_dmz_mail }

queue ext_dmz_web bandwidth 40% priority 5

queue ext_dmz_udp bandwidth 10% priority 7

queue ext_dmz_mail bandwidth 50% priority 3

queue dmz_main bandwidth 25Mb cbq(default borrow red) queue { dmz_main_hi,

dmz_main_lo }

queue dmz_main_hi priority 7 bandwidth 20%

queue dmz_main_lo priority 0 bandwidth 80%

queue dmz_web bandwidth 25Mb cbq(borrow red)

queue dmz_udp bandwidth 20Mb cbq(borrow red)

queue dmz_mail bandwidth 20Mb cbq(borrow red)Notice that the total_ext bandwidth limitation determines the allocation for all queues where the bandwidth for external connections is available. In order to use the new queuing infrastructure, we need to make some changes to the filtering rules, too. Keep in mind that any traffic you don’t explicitly assign to a specific queue is assigned to the default queue for the interface. Thus, it’s important to tune your filtering rules as well as your queue definitions to the actual traffic in your network.

With queue assignment, the main part of the filtering rules could end up looking like this:

pass in on $ext_if proto { tcp, udp } to $nameservers port domain

queue ext_udp

pass in on $int_if proto { tcp, udp } from $localnet to $nameservers

port domain

pass out on $dmz_if proto { tcp, udp } to $nameservers port domain

queue ext_dmz_udp

pass out on $dmz_if proto { tcp, udp } from $localnet to $nameservers

port domain queue dmz_udp

pass in on $ext_if proto tcp to $webserver port $webports queue ext_web

pass in on $int_if proto tcp from $localnet to $webserver port $webports

pass out on $dmz_if proto tcp to $webserver port $webports queue ext_dmz_web

pass out on $dmz_if proto tcp from $localnet to $webserver port $webports

queue dmz_web

pass in log on $ext_if proto tcp to $mailserver port smtp

pass in log on $ext_if proto tcp from $localnet to $mailserver port smtp

pass in log on $int_if proto tcp from $localnet to $mailserver port $email

pass out log on $dmz_if proto tcp to $mailserver port smtp queue ext_mail

pass in on $dmz_if from $mailserver to port smtp queue dmz_mail

pass out log on $ext_if proto tcp from $mailserver to port smtp

queue ext_dmz_mailNotice that only traffic that will pass either the DMZ interface or the external interface is assigned to queues. In this configuration, with no externally accessible services on the internal network, queuing on the internal interface wouldn’t make much sense because it’s likely the part of our network with the least restrictions on available bandwidth.

Using ALTQ to Handle Unwanted Traffic

So far, we’ve focused on queuing as a method to make sure specific kinds of traffic are let through as efficiently as possible given the conditions that exist in and around your network. Now, we’ll look at two examples that present a slightly different approach to identify and handle unwanted traffic in order to demonstrate some queuing-related tricks you can use to keep miscreants in line.

Overloading to a Tiny Queue

Think back to Turning Away the Brutes, where we used a combination of state-tracking options and overload rules to fill up a table of addresses for special treatment. The special treatment we demonstrated in Chapter 6 was to cut all connections, but it’s equally possible to assign overload traffic to a specific queue instead.

Consider this rule from our class-based bandwidth example in Class-Based Bandwidth Allocation for Small Networks.

pass log quick on $ext_if proto tcp to port ssh flags S/SA keep state queue (ssh_bulk, ssh_interactive)

We could add state-tracking options, as shown in here.

pass log quick on $ext_if proto tcp to port ssh flags S/SA keep state (max-src-conn 15, max-src-conn-rate 5/3, overload <bruteforce> flush global) queue (ssh_bulk, ssh_interactive)

Then, we could make one of the queues slightly smaller.

queue smallpipe bandwidth 1kb cbq

Next, we could assign traffic from miscreants to the small-bandwidth queue with the following rule.

pass inet proto tcp from <bruteforce> to port $tcp_services queue smallpipe

It might also be useful to supplement rules like these with table-entry expiry, as described in Tidying Your Tables with pfctl.

Queue Assignments Based on Operating System Fingerprint

Chapter 6 covered several ways to use spamd to cut down on spam. If running spamd isn’t an option in your environment, you can use a queue and rule set based on the common knowledge that machines that send spam are likely to run a particular operating system.

PF has a fairly reliable operating system fingerprinting mechanism, which detects the operating system at the other end of a network connection based on characteristics of the initial SYN packets at connection setup. The following may be a simple substitute for spamd if you’ve determined that legitimate mail is highly unlikely to be delivered from systems that run that particular operating system.

pass quick proto tcp from any os "Windows" to $ext_if port smtp queue smallpipe

Here, email traffic originating from hosts that run a particular operating system get no more than 1KB of your bandwidth, with no borrowing.

Conclusion: Traffic Shaping for Fun, and Perhaps Even Profit

This chapter has dealt with traffic-shaping techniques that can make your traffic move faster, or at least make preferred traffic pass more efficiently and according to your specifications. By now you should have at least a basic understanding of traffic-shaping concepts and how they apply to the traffic-shaping tool set you’ll be using on your systems.

I hope that the somewhat stylized (but functional) examples in this chapter have given you a taste of what’s possible with traffic shaping and that the material has inspired you to play with some of your own ideas of how you can use the traffic-shaping tools in your networks. If you pay attention to your network traffic and the underlying needs it expresses (see Chapter 9 and Chapter 10 for more on studying network traffic in detail), you can use the traffic-shaping tools to improve the way your network serves its users. With a bit of luck, your users will appreciate your efforts and you may even enjoy the experience.

[39] Daniel Hartmeier, one of the original PF developers, wrote a nice article about this problem, which is available at http://www.benzedrine.cx/ackpri.html. Daniel’s explanations use the older ALTQ priority queues syntax but include data that clearly illustrates the effect of assigning two different priorities to help ACKs along.

[40] This really dates the book, I know. In a few years, these numbers will seem quaint.

[41] The original research on ALTQ was presented in a paper for the USENIX 1999 conference. You can read Kenjiro Cho’s paper “Managing Traffic with ALTQ” online at http://www.usenix.org/publications/library/proceedings/usenix99/cho.html. The code turned up in OpenBSD soon after through the efforts of Cho and Chris Cappucio.