Chapter 6. Classes. Object-Oriented Style

Object-oriented programming (OOP) has evolved through the years from an endearing child to an annoying pimple-faced adolescent to the well-adjusted individual of today. Nowadays we have a better understanding of not only the power but also the inherent limitations of object technology. This in turn made the programming community aware that a gainful approach to creating solid designs is to combine the strengths of OOP with the strengths of other paradigms. That trend is quite visible—increasingly, today’s languages either adopt more eclectic features or are designed from the get-go to foster OOP in conjunction with other programming styles. D is in the latter category, and, at least in the opinion of some, it has done a quite remarkable job of keeping different programming paradigms in harmony. This chapter explores D’s object-oriented features and how they integrate with the rest of the language. For an in-depth treatment of object orientation, a good starting point is Bertrand Meyer’s classic Object-Oriented Software Construction [40] (for a more formal treatment, see Pierce’s Types and Programming Languages [46, Chapter 18]).

6.1 Classes

The unit of object encapsulation in D is the class. A class defines a cookie cutter for creating objects, defining how they look and feel. A class may specify constants, perclass state, per-object state, and methods. For example:

class Widget {

// A constant

enum fudgeFactor = 0.2;

// A shared immutable value

static immutable defaultName = "A Widget";

// Some state allocated for each Widget object

string name = defaultName;

uint width, height;

// A static method

static double howFudgy() {

return fudgeFactor;

}

// A method

void changeName(string another) {

name = another;

}

// A non-overridable method

final void quadrupleSize() {

width *= 2;

height *= 2;

}

}

Creation of an object of type Widget is achieved with the new expression new Widget (§ 2.3.6.1 on page 51), which you’d usually invoke to store its result in a named object. To access a symbol defined inside Widget, you need to prefix it with the object you want to operate on, followed by a dot. In case the accessed member is static, the class name suffices. For example:

unittest {

// Access a static method of Widget

assert(Widget.howFudgy() == 0.2);

// Create a Widget

auto w = new Widget;

// Play with the Widget

assert(w.name == w.defaultName); // Or Widget.defaultName

w.changeName("My Widget");

assert(w.name == "My Widget");

}

Note a little twist. The code above used w.defaultName instead of Widget.defaultName. Wherever you access a static member, an object name is as good as the class name. This is because the name to the left of the dot guides name lookup first and (if needed) object identification second. w is evaluated whether it ends up being used or not.

6.2 Object Names Are References

Let’s conduct a little experiment:

import std.stdio;

class A {

int x = 42;

}

unittest {

auto a1 = new A;

assert(a1.x == 42);

auto a2 = a1;

a2.x = 100;

assert(a1.x == 100);

}

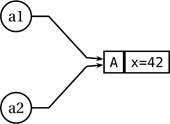

This experiment succeeds (all assertions pass), revealing that a1 and a2 are not distinct objects: changing a2 in fact went back and changed a1 as well. The two are only two distinct names for the same object, and consequently changing a2 affected a1. The statement auto a2 = a1; created no extra object of type A; it only made the existing object known by another name. Figure 6.1 illustrates this fact.

Figure 6.1. The statement auto a2 = a1; only adds one extra name for the same underlying object.

This behavior is consistent with the notion that all class objects are entities, meaning that they have “personality” and are not supposed to be duplicated without good reason. In contrast, value objects (e.g., built-in numbers) feature full copying; to define new value types, struct (Chapter 7) is the way to go.

So in the world of class objects there are objects, and then there are references to them. The imaginary arrows linking references to objects are called bindings—we say, for example, that a1 and a2 are bound to the same object, or have the same binding. The only way you can work on an object is to use a reference to it. As far as the object itself is concerned, once you have created it, it will live forever in the same place. What you can do if you get bored with some object is to bind your reference to another object. For example, consider that you want to swap two references:

unittest {

auto a1 = new A;

auto a2 = new A;

a1.x = 100;

a2.x = 200;

// Let's swap a1 and a2

auto t = a1;

a1 = a2;

a2 = t;

assert(a1.x == 200);

assert(a2.x == 100);

}

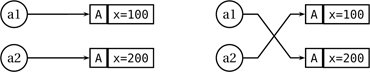

Instead of the last three lines we could have availed ourselves of the universal routine swap found in the module std.algorithm by calling swap(a1, a2), but doing the rewiring explicitly makes what’s going on clearer. Figure 6.2 illustrates the bindings before and after swapping.

Figure 6.2. The bindings before and after swapping two references. The swapping process changes the way references are wired to the objects; the objects themselves stay in the same place.

The objects themselves stay put, that is, their locations in memory never change after creation. Just as remarkably, the object never goes away—once it’s created, you can count on it being there forever. (A garbage collector recycles in the background memory of objects that are not used anymore.) The references to the objects (in this case a1 and a2) can be convinced to “look” elsewhere by rebinding them. When the runtime system figures out that an object has no more references bound to it, it can recycle the object’s memory, an activity known as garbage collection. Such a behavior is fundamentally different from value semantics (e.g., int), in which there is no indirection and no rebinding—each name is stuck directly to the value it manipulates.

A reference that is not bound to any object is a null reference. Upon default initialization with .init, class references are null. A reference can be compared to null and assigned from null. The following assertions succeed:

unittest {

A a;

assert(a is null);

a = new A;

assert(a !is null);

a = null;

assert(a is null);

a = A.init;

assert(a is null);

}

Accessing a member of an unbound (null) reference results in a hardware fault that terminates the application (or, on some systems and under certain conditions, starts the debugger). If you try to access a non-static member of a reference and the compiler can prove statically that the reference would definitely be null, it will refuse to compile the code.

In an attempt to not annoy you too much, the compiler is conservative—if a reference may be null but not always, the compiler lets the code go through and defers any errors to runtime. For example:

A a;

if (‹condition›) {

a = new A;

}

...

if (‹condition›) {

a.x = 43; // Fine

}

The compiler lets such code go through even though it’s possible that ‹condition› changes value between the two evaluations. In the general case it would be very difficult to verify proper object initialization, so the compiler assumes you know what you’re doing except for the simplest cases where it can vouch that you are trying to use a null reference in a faulty manner.

D’s reference semantics approach to handling class objects is similar to that found in many object-oriented languages. Using reference semantics and garbage collection for class objects has both positive and negative consequences, including the following:

+ Polymorphism. The level of indirection brought by the consistent use of references enables support for polymorphism. All references have the same size, but related objects can have different sizes even though they have ostensibly the same type (through the use of inheritance, which we’ll discuss shortly). Because references have the same size regardless of the size of the object they refer to, you can always substitute references to derived objects for references to base objects. Also, arrays of objects work properly even when the actual objects in the array have different sizes. If you’ve used C++, you sure know about the necessity of using pointers with polymorphism, and about the various lethal problems you encounter when you forget to.

+ Safety. Many of us see garbage collection as just a convenience that simplifies coding by relieving the programmer of managing memory. Perhaps surprisingly, however, there is a very strong connection between the infinite lifetime model (which garbage collection makes practical) and memory safety. Where there’s infinite lifetime, there are no dangling references, that is, references to some object that has gone out of existence and has had its memory reused for an unrelated object. Note that it would be just as safe to use value semantics throughout (have auto a2 = a1; duplicate the A object that a1 refers to and have a2 refer to the copy). That setup, however, is hardly interesting because it disallows creation of any referential structure (such as lists, trees, graphs, and more generally shared resources).

– Allocation cost. Generally, class objects must reside in the garbage-collected heap, which generally is slower and eats more memory than memory on the stack. The margin has diminished quite a bit lately but is still nonzero.

– Long-range coupling. The main risk with using references is undue aliasing. Using reference semantics throughout makes it all too easy to end up with references to the same object residing in different—and unexpected—places. In Figure 6.1 on page 177, a1 and a2 may be arbitrarily far from each other as far as the application logic is concerned, and additionally there may be many other references hanging off the same object. Interestingly, if the referred object is immutable, the problem vanishes—as long as nobody modifies the object, there is no coupling. Difficulties arise when one change effected in a certain context affects surprisingly and dramatically the state as seen in a different part of the application. Another way to alleviate this problem is explicit duplication, often by calling a special method clone, whenever passing objects around. The downside of that technique is that it is based on discipline and that it could lead to inefficiency if several parts of an application decide to conservatively clone objects “just to be sure.”

Contrast reference semantics with value semantics à la int. Value semantics has advantages, notably equational reasoning: you can always substitute equals for equals in expressions without altering the result. (In contrast, references that use method calls to modify underlying objects do not allow such reasoning.) Speed is also an important advantage of value semantics, but if you want the dynamic generosity of polymorphism, reference semantics is a must. Some languages tried to accommodate both, which earned them the moniker of “impure,” in contrast to pure object-oriented languages that foster reference semantics uniformly across all types. D is impure and up-front about it. You get to choose at design time whether you use OOP for a particular type, in which case you use class; otherwise, you go with struct and forgo the particular OOP amenities that go hand in hand with reference semantics.

6.3 It’s an Object’s Life

Now that we have a general notion of objects’ whereabouts, let’s look in detail at their life cycle. To create an object, you use a new expression:

import std.math;

class Test {

double a = 0.4;

double b;

}

unittest {

// Use a new expression to create an object

auto t = new Test;

assert(t.a == 0.4 && isnan(t.b));

}

Issuing new Test constructs a default-initialized Test object, which is a Test with each field initialized to its default value. Each type T has a statically known default value, accessible as T.init (see Table 2.1 on page 30 for the .init values of basic types). If you’d prefer to initialize some member variables to something other than their types’ .init value, you can specify a statically known initializer when you define the member, as shown in the example above for a. The unittest above passes because a is explicitly initialized with 0.4, and b is left alone so it is implicitly initialized with double.init, which is “Not a Number” (NaN).

6.3.1 Constructors

Of course, most of the time initializing fields with some statically known values is not enough. To execute code upon the creation of an object, you can use special functions called constructors. A constructor is a function with the name this that declares no return type:

class Test {

double a = 0.4;

int b;

this(int b) {

this.b = b;

}

}

unittest {

auto t = new Test(5);

}

As soon as a class defines at least one constructor, the implicit default constructor is not available anymore. With Test defined as above, trying

does not work anymore. This interdiction was intended to help avoiding a common bug: a designer carefully defines a number of constructors with parameters but forgets all about the default constructor. As is often the case in D, such protection for the forgetful is easy to avoid by simply telling the compiler that yes, you did remember:

class Test {

double a = 0.4;

int b;

this(int b) {

this.b = b;

}

this() {} // Default constructor,

// all fields implicitly initialized

}

Inside a method—except static ones; see § 6.5 on page 196—the reference this is implicitly bound to the object receiving the call. That reference is occasionally useful, as in the example above that illustrates a common naming convention in constructors: if a parameter is meant to initialize a member, give it the same name and disambiguate the member from the parameter by prefixing the former with an explicit reference to this. Without the prefix this, a parameter hides the homonym members.

Although you can modify this.field for any field, you can never rebind this itself, which effectively behaves like an rvalue:

class NoGo {

void fun() {

// Let's just rebind this to a different object

this = new NoGo; // Error! Cannot modify 'this'!

}

}

The usual function overloading rules (§ 5.5 on page 142) apply to constructors: a class may define any number of constructors as long as they have distinct signatures (different in the number of parameters or in the type of at least one parameter).

6.3.2 Forwarding Constructors

Consider a class Widget that defines two constructors:

class Widget {

this(uint height) {

this.width = 1;

this.height = height;

}

this(uint width, uint height) {

this.width = width;

this.height = height;

}

uint width, height;

...

}

The code above is quite repetitive and gets only worse for larger classes, but fortunately one constructor may defer to another:

class Widget {

this(uint height) {

this(1, height); // Defer to the other constructor

}

this(uint width, uint height) {

this.width = width;

this.height = height;

}

uint width, height;

...

}

There are certain limitations when it comes to calling constructors explicitly à la this(1, h). First, you can issue such a call only from within another constructor. Second, if you decide to make such a call, you must convince the compiler that you’re making exactly one such call throughout your constructor, no matter what. For example, this constructor would be invalid:

this(uint h) {

if (h > 1) {

this(1, h);

}

// Error! One path skips constructor

}

In the case above, the compiler figures there are cases in which another constructor is not called and flags that situation as an error. The intent is to have a constructor either carry the construction itself or forward the task to another constructor. The situations in which a constructor may or may not choose to defer to another constructor are rejected.

Invoking the same constructor twice is incorrect as well:

You don’t want a doubly initialized object any more than one you forgot to initialize, so this case is also flagged as a compile-time error. In short, a constructor is allowed to call another constructor either exactly zero times or exactly one time. This claim is verified during compilation by using simple flow analysis.

6.3.3 Construction Sequence

In all languages, object construction is a bit tricky. The construction process starts with a chunk of untyped memory and deposits in it information that makes that chunk look and feel like a class object. A certain amount of magic is always needed.

In D, object construction takes the following steps:

- Allocation. The runtime allocates on the heap a chunk of raw memory large enough to store the non-

staticfields of the object. All class-based objects are dynamically allocated—unlike in C++, there is no way to allocate a class object on the stack. If allocation fails, construction is abandoned by throwing an exception. - Field initialization. Each field is initialized to its default value. As discussed above, the default field value is the one specified in the field declaration with

= valueor, absent that, the.initvalue of the field’s type. - Branding. After default field initialization has taken place, the object is branded as a full-fledged

T, even before the actual constructor gets called. The branding process is implementation-dependent and usually consists of initializing one or more hidden fields with type-dependent information. - Constructor call. Finally, the compiler issues a call to the applicable constructor. If the class defines no constructor, this step is skipped.

Since the object is considered alive and well right after the default field initialization, it is highly recommended that the field initializers always put the object in a meaningful state. Then, the actual constructor does adjustments that put the object in an interesting state (which is, of course, also meaningful).

In case your constructor reassigns some fields, the double assignment should not be an efficiency problem. Most of the time, if the body of the constructor is simple enough, the compiler should be able to figure out that the first assignment is redundant and perform the dark-humored “dead assignment elimination” optimization.

If efficiency is an absolutely essential concern, you may specify = void as a field initializer for certain fields, in which case you must be very careful to initialize that member in the constructor. You might find = void useful with fixed-size arrays. It is difficult for the compiler to optimize double initialization of all array elements, so you can give it a hand. The code below efficiently initializes a fixed-size array with 0.0, 0.1, 0.2, . . ., 1.28:

class Transmogrifier {

double[128] alpha = void;

this() {

foreach (i, ref e; alpha) {

e = i * 0.1;

}

}

...

}

Sometimes, a design may ask for certain fields to be left deliberately uninitialized. For example, Transmogrifier may track the already used portion of alpha in a separate variable usedAlpha, initially zero. The object primitives know that only the portion of alphas from zero up to a size usedAlpha are actually initialized:

class Transmogrifier {

double[128] alpha = void;

size_t usedAlpha;

this() {

// Leave usedAlpha = 0 and alpha uninitialized

}

...

}

Initially usedAlpha is zero, which is all the initialization that Transmogrifier needs. As usedAlpha grows, the code must never read elements in the interval alpha[usedAlpha .. $] before writing them. This is, of course, stuff that you, not the compiler, must ensure—which illustrates the inevitable tension that sometimes exists between efficiency and static verifiability. Although such optimizations are often frivolous, there are cases in which unnecessary compulsive initializations could sensibly affect bottom-line results, so having an opt-in escape hatch is reassuring.

6.3.4 Destruction and Deallocation

D maintains a garbage-collected heap for all class objects. Once an object is successfully allocated, it may be considered to live forever as far as the application is concerned. The garbage collector recycles the memory used by an object only when it can prove there are no more accessible references to that object. This setup makes for clean, safe class-based code.

For certain classes, it is important to have a hook on the termination process so they can free additional resources that they might have acquired. Such classes may define a destructor, introduced as a special function with the name ~this:

import core.stdc.stdlib;

class Buffer {

private void* data;

// Constructor

this() {

data = malloc(1024);

}

// Destructor

~this() {

free(data);

}

...

}

The example above illustrates an extreme situation—a class maintaining its own raw memory buffer. Most of the time a class would use properly encapsulated resources, so there is little need for defining destructors.

6.3.5 Tear-Down Sequence

Like construction, tearing an object down follows a little protocol:

- Right after branding (step 3 in the construction process) the object is considered alive and put under the scrutiny of the garbage collector. Note that this happens even if the user-defined constructor throws an exception later. Given that default initialization and branding cannot fail, it follows that an object that was successfully allocated is considered a full-fledged object as far as the garbage collector is concerned.

- The object is used throughout the program.

- All accessible references to the object are gone; no code could possibly reach the object anymore.

- At some implementation-dependent point, the system acknowledges that the object’s memory may be recycled and invokes its destructor.

- At a later point in time (either immediately after calling the destructor or later on) the system reuses the object’s memory.

One important detail regarding the last two steps is that the garbage collector makes sure that an object’s destructor can never access an already deallocated object. It is possible to access an already destroyed object, just not deallocated; in D, destroyed objects hold their memory for a short while, until their peers get destroyed. If that were not the case, destroying and deallocating objects that refer to each other in a cycle (e.g., circular lists) would be impossible to implement safely.

The life cycle described above may be amended in several ways. First, it is very possible that an application ends before ever reaching step 4. This is often the case for small applications running on systems with enough memory. In that case, D assumes that exiting the application will de facto free all resources associated with it, so it does not invoke any destructor.

Another way to amend the life cycle of an object is to explicitly invoke its destructor. This is accomplished by calling the clear function defined in module object (the standard library module that is imported automatically in any compilation).

unittest {

auto b = new Buffer;

...

clear(b); // Get rid of b's extra state

... // b is still usable here

}

By calling clear(b), the user expresses the intent to explicitly invoke b’s destructor (if any), obliterate that object’s state with Buffer.init, and call Buffer’s default constructor. However, unlike in C++, clear does not dispose of the object’s own memory and there is no delete operator. (D used to have a delete operator, but it was deprecated.) You still can free memory manually if you really, really know what you’re doing by calling the function GC.free() found in the module core.memory. Freeing memory is unsafe, but calling clear is safe because no memory goes away so there’s no risk of dangling pointers. After clear(obj), the object obj remains eminently accessible and usable for any purpose, even though it does not contain any interesting state. For example, the following is correct D code:

unittest {

auto b = new Buffer;

auto b1 = b; // Extra alias for b

clear(b);

assert(b1.data is null); // The extra alias still refers to

// the (valid) chassis of b

}

So after you invoke clear, the object is still alive and well, but its destructor has been called and the object is now carrying its default-constructed state. Interestingly, during the next garbage collection, the destructor of the object is called again. This is because, obviously, the garbage collector has no idea in what state you have left the object.

Why this behavior? The answer is simple—divorcing object tear-down from memory deallocation gives you manual control over expensive resources that the object might control (such as files, sockets, mutexes, and system handles) while at the same time ensuring memory safety. You will never create dangling pointers by using new and clear. (Of course, if you get your hands greasy by using C’s malloc and free or the aforementioned GC.free, you do expose yourself to the dangers of dangling pointers.) Generally, it is wise to separate resource disposal (safe) with memory recycling (unsafe). Memory is fundamentally different from any other resource because it is the physical support of the type system. Deallocate it unwittingly, and you are liable to break any guarantee that the type system could ever make.

6.3.6 Static Constructors and Destructors

Inside a class and essentially anywhere in D, static data must always be initialized with compile-time constants. To allow for an orderly means to execute code during thread startup, the compiler allows defining the special function static this(). The code doing module-level and class-level initialization is collected together, and the runtime support library proceeds with static initialization in an orderly fashion.

Inside a class you can define one or more default constructors prefixed by static:

class A {

static A a1;

static this() {

a1 = new A;

}

...

static this() {

a2 = new A;

}

static A a2;

}

Such functions are called static class constructors. When loading the application and before executing main, the compiler executes each static class constructor in turn, in the order they appeared in the source code. In the example above, a1 will be initialized before a2. The order of execution of static class constructors in distinct classes inside the same module is again dictated by the lexical order. Static class constructors in unrelated modules are executed in an unspecified order. Finally and most interestingly, static class constructors of classes that are in interdependent modules are ordered to eliminate the possibility of a class ever being used before its static this() has run.

initialization orderHere’s how the ordering works. Consider class A defined in module MA and class B defined in module MB. The following situations may occur:

- At most one of

AandBdefines a static class constructor. Then there is no ordering to worry about. - Neither

MAnorMB imports the other. Then the ordering is unspecified—any order works because the two modules don’t depend on each other. MA importsMB. ThenA’s static class constructors run beforeB’s.MB importsMA. ThenB’s static class constructors run beforeA’s.MA importsMBandMB importsMA. Then a “cyclic dependency” error is signaled and execution is abandoned during program loading.

This reasoning does not really depend on classes A and B, just on the modules themselves and their import relationships. Chapter 11 discusses the matter in detail.

If any static class constructor fails by throwing an exception, the program is terminated. If all succeed, classes are also given a chance to clean things up during thread shutdown by defining static class destructors, which predictably look like this:

class A {

static A a1;

static ~this() {

clear(a1);

}

...

static A a2;

static ~this() {

clear(a2);

}

}

Static destructors are run during thread shutdown. Within each module they are run in reverse order of their definition. In the example above, a2’s destructor will get called before a1’s. When multiple modules are involved, the order of modules invoking static class destructors is the exact reverse of the order in which the modules were given a chance to call their static class constructor. It’s reversed turtles, all the way up.

6.4 Methods and Inheritance

We’re now experts in creating and obliterating objects, so let’s take a look at using them. Most of the interaction with an object is carried out by calling its methods. (In some languages that activity is known as “sending messages” to the object.) A method definition looks much like a regular function definition, the only difference being that it occurs inside a class. To focus the description on an example, say you build a Rolodex application that allows you to store and display contact information. The unit of information—one virtual business card—could then be a class Contact. Among other things, it might define a method specifying the background color used when displaying the contact:

class Contact {

string bgColor() {

return "Gray";

}

}

unittest {

auto c = new Contact;

assert(c.bgColor() == "Gray");

}

The interesting part starts when you decide that a class should inherit another. For example, certain contacts are friends, and for those we use a different background color:

class Friend : Contact {

string currentBgColor = "LightGreen";

string currentReminder;

override string bgColor() {

return currentBgColor;

}

string reminder() {

...

return currentReminder;

}

}

By declaring inheritance from Contact through the notation : Contact, an instance of class Friend will contain everything that a Contact has, plus Friend’s additional state (in this case, currentBgColor) and methods (in this case, reminder).

We call Friend a subclass of Contact and Contact a superclass of Friend. By virtue of subclassing, you can substitute a Friend value wherever a Contact value is expected:

unittest {

Friend f = new Friend;

Contact c = f; // Substitute a Friend for a Contact

auto color = c.bgColor(); // Call a Contact method

}

If the substituted Friend would behave precisely like a Contact, there would be little if any impetus to use Friends. One key feature of object technology is that it allows a derived class to override functions in the base class and therefore customize behavior in a modular manner. Predictably, overriding is introduced with the override keyword (in Friend’s definition of bgColor), which indicates that calling c.bgColor() against an object of type Contact that was actually substituted with an object of type Friend will always invoke Friend’s version of the method. Friends will always be Friends, even when the compiler thinks they’re simple Contacts.

6.4.1 A Terminological Smörgåsbord

Object technology has had a long and successful history in both academia and industry. Consequently, it has been the focus of much work and has spawned a fair amount of terminology, which can get confusing at times. Let’s stop for a minute to review the nomenclature.

If a class D directly inherits a class B, D is called a subclass of B, a child class of B, or a class derived from B. Conversely, B is called a superclass, parent class, or base class of D.

A class X is a descendant of class B if and only if either X is a child of B, or X is a descendant of a child of B. This definition is recursive, which, put another way, means that if you walk up X’s parent and then X’s parent’s parent and so on, at some point you’ll meet B.

This book uses the notions of parent/child and ancestor/descendant throughout because these phrases distinguish the notion of direct versus possibly indirect inheritance more precisely than the terms superclass/subclass.

Oddly enough, although classes are types, subtype is not the same thing as subclass (and supertype is not the same thing as superclass). Subtyping is a more general notion that means a type S is a subtype of type T if a value of type S can be safely used in all contexts where a value of type T is expected. Note that this definition does not require or mention inheritance. Indeed, inheritance is but one way of achieving subtyping, but there are other means in general (and in D). The relationship between subtyping and inheritance is that the descendants of a class C plus C itself are all subtypes of C. A subtype of C that is different from C is a proper subtype.

6.4.2 Inheritance Is Subtyping. Static and Dynamic Type

Let’s exemplify how inheritance induces subtyping. As mentioned, an object of the derived class is always substitutable for an object of the base class:

class Contact { ... }

class Friend : Contact { ... }

void fun(Contact c) { ... }

unittest {

auto c = new Contact; // c has type Contact

fun(c);

auto f = new Friend; // f has type Friend

fun(f);

}

Although fun expects a Contact object, passing f is fair game because Friend is a subclass (and therefore a subtype) of Contact.

When subtyping is in effect, it is very often possible that the actual type of an object is partially “forgotten.” Consider:

class Contact { string bgColor() { return ""; } }

class Friend : Contact {

override string bgColor() { return "LightGreen"; }

}

unittest {

Contact c = new Friend; // c has type Contact

// but really refers to a Friend

assert(c.bgColor() == "LightGreen");

// It's a friend indeed!

}

Given that c has type Contact, it could be used only in ways any Contact object could be used, even though it has been bound to an object of type Friend. For example, you can’t call c.reminder because that method is specific to Friend and not present in Contact. However, the assert above shows that friends will always be friends: calling c.bgColor reveals that the Friend-specific method gets called. As discussed in the section on construction (§ 6.3 on page 181), once an object is constructed it will just live forever, so the Friend object created with new never goes away. The interesting twist that occurs is that the reference c bound to it has type Contact, not Friend. In that case we say that c has static type Contact and dynamic type Friend. An unbound (null) reference has no dynamic type.

Teasing out the Friend that’s hiding under the disguise of a Contact—or in general a descendant from an ancestor—is a bit more elaborate. For one thing, the operation may fail: what if this contact didn’t really refer to a Friend? Most of the time, the compiler wouldn’t be able to tell. To do such extraction you’d need to rely on a cast:

unittest {

auto c = new Contact; // c has static and dynamic type Contact

auto f = cast(Friend) c;

assert(f is null); // f has static type Friend and is unbound

c = new Friend; // Static: Contact, dynamic: Friend

f = cast(Friend) c; // Static: Friend, dynamic: Friend

assert(f !is null); // Passes!

}

6.4.3 Overriding Is Only Voluntary

The override keyword in the signature of Friend.bgColor is required, which at first sight is a bit annoying. After all, the compiler could figure out that overriding is in effect all by itself and wire things appropriately. So why was override deemed necessary?

The answer is related to maintainability. Indeed, the compiler has no trouble figuring out automatically which methods you wanted to override. The problem is, it has no means to determine which methods you did not mean to override. Such a situation may occur when you change the base class after having defined the derived class. Imagine, for example, that class Contact initially defines only the bgColor method and you derive Friend from it and override bgColor as shown in the snippet above. You may also define another method in Friend, such as Friend.reminder, which allows you to retrieve reminders about that particular friend. If later on someone else (including you three months later) defines a reminder method for Contact with some other meaning, you now have the odd bug that calls to Contact.reminder get routed through Friend.reminder when passed to a Contact or a Friend, something that Friend was unprepared for.

The converse situation is just as pernicious, if not more so. Say, for example, that after the initial design, Contact decides to remove a method or change its name. The designer would have to manually go through all of Contact’s derived classes and decide what to do with the now orphaned methods. This is a highly error-prone activity and is sometimes impossible to carry out in its entirety when parts of a hierarchy are not writable by the base class designer.

So requiring override allows you to modify base classes without risking unexpected harm to derived classes.

6.4.4 Calling Overridden Methods

Sometimes, an overriding method wants to call the very method it is overriding. Consider, for example, a graphical widget Button that inherits a Clickable class. The Clickable class knows how to dispatch button presses to listeners but is not concerned with visual effects at all. To introduce visual feedback, Button overrides the onClick method defined by Clickable and introduces the visual effects part but also wants to invoke Clickable.onClick to carry out the dispatch part.

class Clickable {

void onClick() { ... }

}

class Button : Clickable {

void drawClicked() { ... }

override void onClick() {

drawClicked(); // Introduce graphical effect

super.onClick(); // Dispatch click to listeners

}

}

To call the overridden method, use the predefined alias super, which instructs the compiler to access a method as it was defined in the parent class. You are free to call any method, not only the method being currently overridden (for example, you can issue super.onDoubleClick() from within Button.onClick). To be entirely honest, the accessed symbol doesn’t even have to be a method name; it could be a field name as well, or really any symbol. For example:

class Base {

double number = 5.5;

}

class Derived : Base {

int number = 10;

double fun() {

return number + super.number;

}

}

Derived.fun accesses its own member and also the member in its base class, which incidentally has a different type.

The general means to access members in ancestors is to use Classname.membername. In fact, super is nothing but an alias for whatever name the current base class has. In the example above, writing Base.number is entirely equivalent to writing super.number. The obvious difference is that super leads to more maintainable code: if you change the base of a class, you don’t need to search and replace names.

With explicit class names, you can jump more than one inheritance level. Explicitly qualifying a method name with super or a class name is also a tad faster because the compiler knows exactly which function to dispatch to. If the symbol involved is anything but an overridable method, the explicit qualification affects only visibility but not speed.

Although destructors (§ 6.3.4 on page 186) are just methods, destructor call handling is a bit different. You cannot issue a call to super’s destructor, but when calling a destructor (either during a garbage collection cycle or in response to a clear(obj) request), D’s runtime support always calls all destructors all the way up in the hierarchy.

6.4.5 Covariant Return Types

Continuing the example with Widget, TextWidget, and VisibleTextWidget, consider that you want to add code that duplicates a Widget. In that case, if the duplicated object is a Widget, the copy will also be a Widget; if it is a TextWidget, the copy will be a TextWidget as well; and so on. A way to achieve proper duplication is by defining a method duplicate in the base class and by requiring every derived class to implement it:

class Widget {

...

this(Widget source) {

... // Copy state

}

Widget duplicate() {

return new Widget(this); // Allocates memory

// and calls this(Widget)

}

}

So far, so good. Let’s look at the corresponding override in the TextWidget class:

class TextWidget : Widget {

...

this(TextWidget source) {

super(source);

... // Copy state

}

override Widget duplicate() {

return new TextWidget(this);

}

}

Everything is correct, but there is a notable loss of static information: TextWidget.duplicate actually returns a Widget object, not a TextWidget object. But the result type of TextWidget.duplicate is TextWidget as long as we look inside that function. However, that information is lost as soon as TextWidget.duplicate returns because the return type of TextWidget.duplicate is Widget—the same as Widget.duplicate’s return type. Therefore, the following code does not work, although in a perfect world it should:

void workWith(TextWidget tw) {

TextWidget clone = tw.duplicate(); // Error!

// Cannot convert a Widget to a TextWidget!

...

}

To maximize the availability of static type information, D defines a feature known as covariant return types. As snazzy as it sounds, covariance of return types is rather simple: if a base type returns some class type C, an overriding function is allowed to return not only C, but any class derived from C. With this feature, you can declare TextWidget.duplicate to return TextWidget. Just as important, sneaking “covariant return types” into a conversation makes you sound pretty cool. (Kidding. Really. Do not attempt.)

6.5 Class-Level Encapsulation with static Members

Sometimes it’s useful to encapsulate not only fields and methods, but regular functions and (gasp) global data inside a class. Such functions and data should have no special property aside from being scope inside the class. To share regular functions and data among all objects of a class, introduce them with the static keyword:

class Widget {

static Color defaultBgColor;

static Color combineBackgrounds(Widget bottom, Widget top) {

...

}

}

Inside a static method, there is no this reference. This is because, again, static methods are regular functions scoped inside a class. It logically follows that you don’t need an object to access defaultBgColor or call combineBackgrounds—you just prefix them with the class’s name:

unittest {

auto w1 = new Widget, w2 = new Widget;

auto c = Widget.defaultBgColor;

// This works too: w1.defaultBgColor;

c = Widget.combineBackgrounds(w1, w2);

// This works too: w2.combineBackgrounds(w1, w2);

}

If you use an object instead of the class name when accessing a static member, that’s fine, too. Note that the object value is still computed even though it’s not really needed:

// Creates a Widget and throws it away

auto c = (new Widget).defaultBgColor;

6.6 Curbing Extensibility with final Methods

There are times when you actively want to disallow subclasses from overriding a certain method. This is a common occurrence because some methods are not meant as customization points. Such methods may call customizable methods, but there may often be cases when you want to keep certain control flows unchanged. (The Template Method design pattern [27] comes to mind.) To prevent inheriting classes from overriding a method, prefix its definition with final.

For example, consider a stock ticker application that wants to make sure it updates the on-screen information whenever a stock price has changed:

class StockTicker {

final void updatePrice(double last) {

doUpdatePrice(last);

refreshDisplay();

}

void doUpdatePrice(double last) { ... }

void refreshDisplay() { ... }

}

The methods doUpdatePrice and refreshDisplay are overridable and therefore offer customization points to subclasses of StockTicker. For example, some stock tickers may introduce triggers and alerts upon certain changes in price or display themselves in specific colors. But updatePrice cannot be overriden, so the caller can be sure that no stock price gets updated without an accompanying update of the display. In fact, just to be sticklers for correctness, let’s define updatePrice as follows:

final void updatePrice(double last) {

scope(exit) refreshDisplay();

doUpdatePrice(last);

}

With scope(exit) in tow, the display is properly refreshed even if doUpdatePrice throws an exception. This approach really ensures that the display reflects the latest and greatest state of the object.

There is a perk associated with final methods that is almost dangerous to know, because it could easily lure you toward the dark side of premature optimization. The truth is, final methods may be more efficient. This is because non-final methods go through one indirection step for each call, a step that ensures the flexibility brought about by override. For some final methods that indirection is still necessary. For example, a final override of a method is normally still subject to indirect calls when invoked via a base class object; in general the compiler still wouldn’t know where the call goes. But if the final method is also a first introduced method (not an override of a base class’ method), then whenever you call it, the compiler is 100% sure where the call will land. So final non-override methods are never subjected to indirect calls; instead, they enjoy the same calling convention, low overhead, and inlining opportunities as regular functions. It would appear that final non-override methods are much faster than others, but this margin is eroded by two factors.

First, the baseline call overhead is assessed against a function that does nothing. To assess the overhead that matters, the actual time spent inside the function must be considered in addition to the invocation overhead. If the function is very short, the relative overhead can be considerable, but if the function does some nontrivial work, the relative overhead decreases quickly until it falls into the noise. Second, a variety of compiler, linker, and runtime optimization techniques work aggressively to minimize or eliminate the dispatch overhead. You’re definitely much better off starting with flexibility and optimizing sparingly, instead of crippling your design from day one by making it unduly rigid for the sake of a potential future performance issue.

If you’ve used Java and C#, final is immediately recognizable because it has the same semantics in those languages. If you compare the state of affairs with C++, you’ll notice an interesting change of defaults: in C++ methods are final by default if you don’t use any annotation, and non-final if you explicitly annotate them with virtual. Again, at least in this case it was deemed that it is better to default on the side of flexibility. You may want to use final primarily in support of a design decision, and only seldom as a means to shave off some extra cycles.

6.6.1 final Classes

Sometimes you want a class to be the final word on a subject. You can achieve that by marking an entire class as final:

class Widget { ... }

final class UltimateWidget : Widget { ... }

class PostUltimateWidget : Widget { ... } // Error!

// Cannot inherit from a final class

A final class cannot be inherited from—it is a leaf in the inheritance hierarchy. This can sometimes be an important design device. Obviously, all of a final class’ methods are implicitly final because no overriding would ever be possible.

An interesting secondary effect of final classes is a strong implementation guarantee. Client code that uses a final class can be sure that said class’ methods have known implementations with guaranteed effects that cannot be tweaked by some subclass.

6.7 Encapsulation

One hallmark of object-oriented design, and of other design techniques as well, is encapsulation. Objects encapsulate their implementation details and expose only well-defined interfaces. That way, objects reserve the freedom to change a host of implementation details without disrupting clients. This leads to more decoupling and consequently fewer dependencies, confirming the adage that every design technique is, at the end of the day, aimed at dependency management.

In turn, encapsulation is a manifestation of information hiding, a general philosophy in designing software. Information hiding prescribes that various modular elements in an application should focus on defining and using abstract interfaces for communicating with one another, while hiding the details of how they implement the interfaces. Often, the details are related to data layout, for which reason “data hiding” is a commonly encountered notion. Focusing on data hiding alone, however, misses part of the point because a component may hide a variety of information, such as design decisions or algorithmic strategies.

Today, encapsulation sounds quite attractive and perhaps even obvious, but much of that is the result of accumulated collective experience. Things weren’t that clear-cut in the olden times. After all, information is ostensibly a good thing and the more you have of it the better off you are, so why would you want to hide it?

Back in the 1960s, Fred Brooks (author of the seminal book The Mythical Man-Month) was an advocate of a transparent, white-box, “everybody knows everything” approach to designing software. Under his management, the team building the OS/360 operating system received documentation of all design details of the project on a regular basis through a sophisticated hard copy annotation method [13, Chapter 7]. The project enjoyed qualified success, but it would be tenuous to argue that transparency was a positive contributor; more plausibly, it was a risk minimized by intensive management. It took a revolutionary paper by David L. Parnas [44] to forever establish the notion of information hiding in the community lore. Brooks himself commented in 1995 that his advocacy of transparency was the only major element of The Mythical Man-Month that hadn’t withstood the test of time. But the information hiding concept was quite controversial back in 1972, as witnessed by this comment by a reviewer of Parnas’ revolutionary paper: “Obviously Parnas does not know what he is talking about because nobody does it this way.” Funnily enough, only a decade later the tide had reversed so radically, the same paper almost got trivialized: “Parnas only wrote down what all good programmers did anyway” [32, page 138].

Getting back to encapsulation as enabled by D, you can prefix the declaration of any type, data, function, or method with one of five access specifiers. Let’s start from the most reclusive specifier and work our way up toward notoriety.

6.7.1 private

The label private can be specified at class level, outside all classes (module-level), or inside a struct (Chapter 7). In all contexts, private has the same power: it restricts symbol access to the current module (file).

This behavior is unlike that in other languages, which limit access to private symbols to the current class only. However, making private module-level is consistent with D’s general approach to protection—the units of protection are identical to the operating system’s unit of protection (file and directory). The advantage of file-level protection is that it facilitates collecting together small, tightly coupled entities that have a given responsibility. If class-level protection is needed, simply put the class in its own file.

6.7.2 package

The label package can be specified at class level, outside all classes (module-level), or inside a struct (Chapter 7). In all contexts, package introduces directory-level protection: all files within the same directory as the current module have access to the symbol. Subdirectories or the parent directory of the current module’s directory have no special privileges.

6.7.3 protected

The protected access specifier makes sense only inside a class, not at module level. When used inside of some class C, the protected access specifier means that access to the declared symbol is reserved to the module in which C is defined and also to C’s descendants, regardless of which modules they’re in. For example:

class C {

// x is accessible only inside this file

private int x;

// This file plus all classes inheriting C directly or

// indirectly may call setX()

protected void setX(int x) { this.x = x; }

// Everybody may call getX()

public int getX() { return x; }

}

Again, the access protected grants is transitive—it goes not only to direct children, but to all descendants that ultimately inherit from the class using protected. This makes protected quite generous in terms of giving access away.

6.7.4 public

Public access means that the declared symbol is accessible freely from within the application. All the application has to do is gain visibility to the symbol, most often by importing the module that defines it.

In D, public is also the default access level throughout. Since the order of declarations is ineffectual, a nice style is to put the visible interface of a module or class toward the beginning, then restrict access by using (for example) private: and continue with definitions. That way, the client only needs to look at the top of a file or class to learn about its accessible entities.

6.7.5 export

It would appear that public is the rock bottom of access levels, the most permissive of all. However, D defines an even more permissive access: export. When using export with a symbol, the symbol is accessible even from outside the program it’s defined in. This is the case with shared libraries that expose interfaces to the outside world. The compiler carries out the system-dependent steps required for a symbol to be exported, often including a special naming convention for the symbol. At this time, D does not define a sophisticated dynamic loading infrastructure, so export is to some extent a stub waiting for more extensive support.

6.7.6 How Much Encapsulation?

One interesting question we should ask ourselves is: How do the five access specifiers compare? For example, assuming we have already agreed that information hiding is a good thing, it is reasonable to infer that private is “better” than protected because it is more restrictive. Continuing along that line of thought, we might think that protected is better than public (heck, public sets the bar pretty low, not to mention export). It is unclear, however, how to rate protected in comparison to package. Most important, such a qualitative analysis does not give an idea of how much of a hit the design takes if, for example, it decides to loosen the restrictiveness of a symbol. Is protected closer to private, closer to public, or smack in the middle of the scale? And what’s the scale after all?

Back in December 1999, when everybody else was worried about Y2K, Scott Meyers was worried about encapsulation, or more exactly, about coding techniques that could maximize it. In his subsequent article [41], Meyers proposes a simple criterion for devising the “amount” of encapsulation of an entity: if we were to change that entity, how much code would be affected? The less code is affected, the more encapsulation has been achieved.

Having a means to measure the degree of encapsulation clarifies things a lot. Without a metric, a common assessment is that private is good, public is bad, and protected is sort of halfway in between. As people are optimistic by nature, protected has been for many of us a feel-good-within-bounds protection level, kind of like drinking responsibly.

Another aspect we can use in assessing the degree of encapsulation is control, that is, the influence you can exercise over the code that may be affected by a change. Do you know (or can you cheaply find) the code affected by a change? Do you have the rights to modify that code? Can others add to that code? The answers to these questions define degrees of control.

For starters, consider private. Modifying a private symbol affects exactly one file. A source file has on the order of a thousand lines; smaller files are common, whereas much larger files (e.g., 10,000 lines) would become difficult to manage. You have control over that file by the sheer fact that you’re changing it, and you could easily restrict others’ access to it by the use of file attributes, version control, or team coding standards. So private offers excellent encapsulation: little code affected and good control over that code.

When you use package-level access, all files within the same directory would be affected by the change. We can estimate that the files grouped in a package have about one order of magnitude more lines (for example, it’s reasonable to think of a package containing on the order of ten modules). Correspondingly, it costs to mess with package symbols: a change affects an order of magnitude more code than a similar change against a private symbol. Fortunately, however, you have good control over the affected code because, again, the operating system and various version control tools allow directory-level control over adding and changing files.

Sadly, protected protects much less than it would appear. First off, protected marks a definite departure from the confines of private and package: any class situated anywhere in a program can gain access to a protected symbol by simply inheriting a descendant of the class defining it. You don’t have fine-grained control over inheritance, except through the all-or-none attribute final. It follows that if you mess with a protected symbol, you affect an unbounded amount of code. To add insult to injury, not only do you have no ability to limit who inherits from your class, but you could also break code that you yourself don’t have the right to fix. (Think, for example, of changing a library symbol that affects applications elsewhere.) The reality is as grim as it is crisp: as soon as you step outside private and package, you’re out in the wild. Using protected offers hardly any protection.

How much code do you need to inspect when changing a protected symbol? That would be all of the descendants of the class defining the symbol. A reasonable ballpark is one order of magnitude above a package size, or a few hundreds of thousands of lines. Tools that index source code and track a class’ descendants can help a lot here, but at the end of the day, a change of a protected symbol potentially affects large amounts of code.

Using public does not change much in terms of control, but it does add one extra order of magnitude to the bulk of the code potentially affected. Now it’s not only a class’ descendants, it’s the entire code of the application. And finally, export adds one interesting twist to the situation—it’s all binary applications using your code as a binary library, so you’re not only looking at code that you can’t modify, it’s code you can’t even look at because it may not be available in source form.

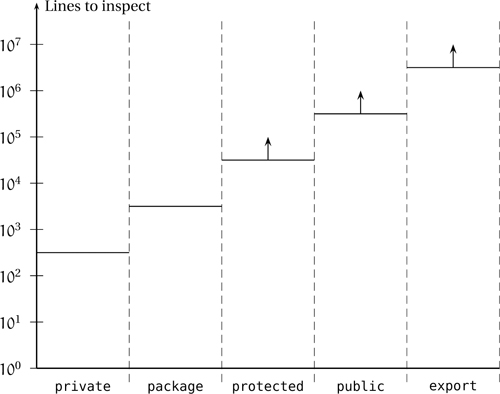

Figure 6.3 on the following page illustrates these ballpark approximations by plotting the potentially affected lines of code for each access specifier. Of course, the amounts are guesstimates and could vary wildly, but the rough proportions should not be affected too much. The vertical axis uses logarithmic scale and the steps suggest a linear trend, so each time you give up one iota of access protection, you must work about ten times harder to keep things in sync. The upward-pointing arrows suggest loss of control over the affected code. One corollary is that protected is not smack in between private and public—it’s much more like public so you should treat it as such (that is, with atavistic fear).

Figure 6.3. Ballpark estimates of lines of codes potentially affected by altering a symbol for each protection level. The vertical axis uses logarithmic scale, so each relaxation of encapsulation makes things worse by an order of magnitude. The upward-pointing arrows symbolize the fact that the amount of code affected by protected, public, and export is not under the control of the coder who maintains the symbol.

6.8 One Root to Rule Them All

Some languages define a root class for all other classes, and D is among them. The root of everything is called Object. If you define a class like this:

to the compiler things are exactly as if you wrote

Other than the automatic rewrite above, Object is not very special—it’s a class like any other. Your implementation defines it in a module called object.di or object.d, which is imported automatically in every module you compile. You should easily find and inspect that module by navigating around the directory in which your D implementation resides.

There are several advantages to having a root for all classes. An obvious boon is that Object can introduce a few universally useful methods. Below is a slightly simplified definition of the Object class:

class Object {

string toString();

size_t toHash();

bool opEquals(Object rhs);

int opCmp(Object rhs);

static Object factory(string classname);

}

Let’s look closer at the semantics of each of these symbols.

6.8.1 string toString()

This returns a textual representation of the object. By default it returns the class name:

// File test.d

class Widget {}

unittest {

assert((new Widget).toString() == "test.Widget");

}

Note that the name of the class comes together with the name of the module the class is defined in. By default, the module name is the same as the file name, a default that can be changed with a module declaration (§ 11.1.8 on page 348).

6.8.2 size_t toHash()

This returns a hash of the object as an unsigned integral value (32 bits on 32-bit machines, 64 bits on 64-bit machines). By default, the hash is computed by using the bitwise representation of the object. The hash value is a concise but inexact digest of the object. One important requirement is consistency: If toHash is called twice against a reference without an intervening change to the state of the object, it should return the same value. Also, the hashes of two equal objects must be equal, and the hash values of two distinct (non-equal) objects are unlikely to be equal. The next section discusses in detail how object equality is defined.

6.8.3 bool opEquals(Object rhs)

This returns true if this considers that rhs is equal to it. This odd formulation is intentional. Experience with Java’s similar function equals has shown that there are some subtle issues related to defining equality in the presence of inheritance, for which reason D approaches the problem in a relatively elaborate manner.

First off, one notion of equality for objects already exists: when you compare two references to class objects a1 and a2 by using the expression a1 is a2 (§ 2.3.4.3 on page 48), you get true if and only if a1 and a2 refer to the same object, just as in Figure 6.1 on page 177. This notion of object equality is sensible, but too restrictive to be useful. Often, two actually distinct objects should be considered equal if they hold the same state. In D, logical equality is assessed by using the == and != operators. Here’s how they work.

Let’s say you write ‹lhs› == ‹rhs› for expressions ‹lhs› and ‹rhs›. Then, if at least one of ‹lhs› and ‹rhs› has a user-defined type, the compiler rewrites the comparison as object.opEquals(‹lhs›, ‹rhs›). Similarly, ‹lhs› != ‹rhs› is rewritten as !object.opEquals(‹lhs›, ‹rhs›). Recall from earlier in this section that object is a core module defined by your D implementation and implicitly imported in any module that you build. So the comparisons are rewritten into calls to a free function provided by your implementation and residing in module object.

The equality relation between objects is expected to obey certain invariants, and object.opEquals(‹lhs›, ‹rhs›) goes a long way toward ensuring correctness. First, null references must compare equal. Then, for any three non-null references x, y, and z, the following assertions must hold true:

// The null reference is singular; no non-null object equals null

assert(x != null);

// Reflexivity

assert(x == x);

// Symmetry

assert((x == y) == (y == x));

// Transitivity

if (x == y && y == z) assert(x == z);

// Relationship with toHash

if (x == y) assert(x.toHash() == y.toHash());

A more subtle requirement of opEquals is consistency: evaluating equality twice against the same references without an intervening mutation to the underlying objects must return the same result.

The typical implementation of object.opEquals eliminates a few simple or degenerate cases and then defers to the member version. Here’s what object.opEquals may look like:

// Inside system module object.d

bool opEquals(Object lhs, Object rhs) {

// If aliased to the same object or both null => equal

if (lhs is rhs) return true;

// If either is null => non-equal

if (lhs is null || rhs is null) return false;

// If same exact type => one call to method opEquals

if (typeid(lhs) == typeid(rhs)) return lhs.opEquals(rhs);

// General case => symmetric calls to method opEquals

return lhs.opEquals(rhs) && rhs.opEquals(lhs);

}

First, if the two references refer to the same object or are both null, the result is trivially true (ensuring reflexivity). Then, once it is established that the objects are distinct, if one of them is null, the comparison result is false (ensuring singularity of null). The third test checks whether the two objects have exactly the same type and, if they do, defers to lhs.opEquals(rhs). And a more interesting part is the double evaluation on the last line. Why isn’t one call enough?

Recall the initial—and slightly cryptic—description of the opEquals method: “returns true if this considers that rhs is equal to it.” The definition cares only about this but does not gauge any opinion rhs may have. To get the complete agreement, a handshake must take place—each of the two objects must respond affirmatively to the question: Do you consider that object your equal? Disagreements about equality may appear to be only an academic problem, but they are quite common in the presence of inheritance, as pointed out by Joshua Bloch in his book Effective Java [9] and subsequently by Tal Cohen in an article [17]. Let’s restate that argument.

Getting back to an example related to graphical user interfaces, consider that you define a graphical widget that could sit on a window:

class Rectangle { ... }

class Window { ... }

class Widget {

private Window parent;

private Rectangle position;

... // Widget-specific functions

}

Then you define a class TextWidget, which is a widget that displays some text.

How do we implement opEquals for these two classes? As far as Widget is concerned, another Widget that has the same state is equal:

// Inside class Widget

override bool opEquals(Object rhs) {

// The other must be a Widget

auto that = cast(Widget) rhs;

if (!that) return false;

// Compare all state

return parent == that.parent

&& position == that.position;

}

The expression cast(Widget) rhs attempts to recover the Widget from rhs. If rhs is null or rhs’s actual, dynamic type is not Widget or a subclass thereof, the cast expression returns null.

The TextWidget class has a more discriminating notion of equality because the right-hand side of the comparison must also be a TextWidget and carry the same text.

// Inside class TextWidget

override bool opEquals(Object rhs) {

// The other must be a TextWidget

auto that = cast(TextWidget) rhs;

if (!that) return false;

// Compare all relevant state

return super.opEquals(that) && text == that.text;

}

Now consider a TextWidget tw superimposed on a Widget w with the same position and parent window. As far as w is concerned, tw is equal to it. But from tw’s viewpoint, there is no equality because w is not a TextWidget. If we accepted that w == tw but tw != w, that would break reflexivity of the equality operator. To restore reflexivity, let’s consider making TextWiget less strict: inside TextWidget.opEquals, if comparison is against a Widget that is not a TextWidget, the comparison just agrees to go with Widget’s notion of equality. The implementation would look like this:

// Alternate TextWidget.opEquals -- BROKEN

override bool opEquals(Object rhs) {

// The other must be at least a Widget

auto that = cast(Widget) rhs;

if (!that) return false;

// Do they compare equal as Widgets? If not, we're done

if (!super.opEquals(that)) return false;

// Is it a TextWidget?

auto that2 = cast(TextWidget) rhs;

// If not, we're done comparing with success

if (!that2) return true;

// Compare as TextWidgets

return text == that.text;

}

Alas, TextWidget’s attempts at being accommodating are ill advised. The problem is that now transitivity of comparison is broken: it is easy to create two TextWidgets tw1 and tw2 that are different (by containing different texts) but at the same time equal with a simple Widget object w. That would create a situation where tw1 == w and tw2 == w, but tw1 != tw2.

So in the general case, comparison must be carried out both ways—each side of the comparison must agree on equality. The good news is that the free function object.opEquals(Object, Object) avoids the handshake whenever the two involved objects have the same exact type, and even any other call in a few other cases.

6.8.4 int opCmp(Object rhs)

This implements a three-way ordering comparison, which is needed for using objects as keys in associative arrays. It returns an unspecified negative number if this is less than rhs, an unspecified positive number if rhs is less than this, and 0 if this is considered unordered with rhs. Similarly to opEquals, opCmp is seldom called explicitly. Most of the time, you invoke it implicitly by using one of a < b, a <= b, a > b, and a >= b.

The rewrite follows a protocol similar to opEquals, by using a global object.opCmp definition that intermediates communication between the two involved objects. For each of the operators <, <=, >, and >=, the D compiler rewrites the expression a ‹op› b as object.opCmp(a, b)‹op› 0. For example, a < b becomes object.opCmp(a, b) < 0.

Implementing opCmp is optional. The default implementation Object.opCmp throws an exception. In case you do implement it, opCmp must be a “strict weak order,” that is it must satisfy the following invariants for any non-null references x, y, and z.

// 1. Reflexivity

assert(x.opCmp(x) == 0);

// 2. Transitivity of sign

if (x.opCmp(y) < 0 && y.opCmp(z) < 0) assert(x.opCmp(z) < 0);

// 3. Transitivity of equality with zero

if ((x.opCmp(y) == 0 && y.opCmp(z) == 0) assert(x.opCmp(z) == 0);

The rules above may seem a bit odd because they express axioms in terms of the less familiar notion of three-way comparison. If we rewrite them in terms of <, we obtain the familiar properties of strict weak ordering in mathematics:

// 1. Irreflexivity of '<'

assert(!(x < x));

// 2. Transitivity of '<'

if (x < y && y < z) assert(x < z);

// 3. Transitivity of '!(x < y) && !(y < x)'

if (!(x < y) && !(y < x) && !(y < z) && !(z < y))

assert(!(x < z) && !(z < x));

The third condition is necessary for making < a strict weak ordering. Without it, < is called a partial order. You might get away with a partial order, but only for restricted uses; most interesting algorithms require a strict weak ordering. If you want to define a partial order, you’re better off giving up all syntactic sugar and defining your own named functions distinct from opCmp.

Note that the conditions above focus only on < and not on the other ordering comparisons because the latter are just syntactic sugar (x > y is the same as y < x, x <= y is the same as !(y > x), and so on).

One property that derives from irreflexivity and transitivity, but is sometimes confused with an axiom, is antisymmetry: x < y implies !(y < x). It is easy to verify by reductio ad absurdum that there can never be x and y such that x < y and y < x are simultaneously true: if that were the case, we could replace z with x in the transitivity of < above, obtaining

The tested condition is true by the hypothesis, so the assert will be checked. But it can never pass because of irreflexivity, thus contradicting the hypothesis.

In addition to the restrictions above, opCmp must be consistent with opEquals:

The relationship with opEquals is relaxed: it is possible to have classes for which x <= y and y <= x are simultaneously true, so common sense would dictate they are equal. However, it is not necessary that x == y. A simple example would be a class that defines equality in terms of case-sensitive string matching, but ordering in terms of case-insensitive string matching.

6.8.5 static Object factory(string className)

This is an interesting method that allows you to create an object given the name of its class. The class involved must accept construction without arguments; otherwise, factory throws an exception. Let’s give factory a test drive.

// File test.d

import std.stdio;

class MyClass {

string s = "Hello, world!";

}

void main() {

// Create an Object

auto obj1 = Object.factory("object.Object");

assert(obj1);

// Now create a MyClass

auto obj2 = cast(MyClass) Object.factory("test.MyClass");

writeln(obj2.s); // Writes "Hello, world!"

// Attempting factory against nonexistent classes returns null

auto obj3 = Object.factory("Nonexistent");

assert(!obj3);

}

Having the ability to create an object from a string is very useful for a variety of idioms, such as the Factory design pattern [27, Chapter 23] and object serialization. On the face of it, there’s nothing wrong with

However, it is possible that later on you will change your mind and decide a derived class TextWidget is better for the task at hand, so you need to change the code above to

The problem is that you need to change the code. Doing surgery on code for new functionality is bad because it is liable to break existing functionality. Ideally you’d only need to add code to add functionality, thus remaining confident that existing code continues to work as usual. That’s when overridable functions are most useful—they allow you to customize code without actually changing it, by tweaking specific and well-defined customization points. Meyer nicely dubbed this notion the Zen-sounding Open/Closed Principle [40]: a class (and more generally a unit of encapsulation) should be open for extension, but closed for modification. The new operator works precisely against all that because it requires you to change the initializer of w if you want to tweak its behavior. A better solution would be to pass the name of the class to be created from the outside, thus decoupling widgetize from the choice of the exact widget to use:

void widgetize(string widgetClass) {

Widget w = cast(Widget) Object.factory(widgetClass);

... use w ...

}

Now widgetize is relieved of the responsibility for choosing which concrete Widget to use. There are some other ways of achieving flexible object construction that explore the design space in different directions. For a thorough discussion of the matter, you may want to peruse the dramatically entitled “Java’s new considered harmful” [4].

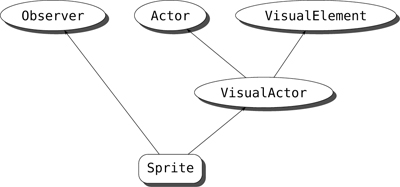

6.9 Interfaces

Most often, class objects contain state and define methods that work with that state. As such, a given object acts at the same time as an interface to the outside world (through its public methods) and as an encapsulated implementation of that interface.

Sometimes, however, it is very useful to separate the notion of interface from that of implementation. Doing so is particularly useful when you want to define communication among various parts of a large program. A function trying to operate on a Widget is solely preoccupied with Widget’s interface—Widget’s implementation is irrelevant by the very definition of encapsulation. This brings to the fore the notion of a completely abstract interface, consisting only of the methods that a class must implement, but devoid of any implementation. That entity is called an interface.

D’s interface definitions look like restricted class definitions. In addition to replacing the keyword class with interface, an interface definition needs to obey certain restrictions. You cannot define any non-static data in an interface, and you cannot specify an implementation for any overridable function. It is legal to define static data and final functions with implementation inside an interface. For example:

interface Transmogrifier {

void transmogrify();

void untransmogrify();

final void thereAndBack() {

transmogrify();

untransmogrify();

}

}

This is everything a function using Transmogrifier needs to compile. For example:

void aDayInLife(Transmogrifier device, string mood) {

if (mood == "play") {

device.transmogrify();

play();

device.untransmogrify();

} else if (mood == "experiment") {

device.thereAndBack();

}

}

Of course, given that at the moment there is no definition for Transmogrifier’s primitives, there is no sensible way to call aDayInLife. So let’s create an implementation of the interface:

class CardboardBox : Transmogrifier {

override void transmogrify() {

// Get in the box

...

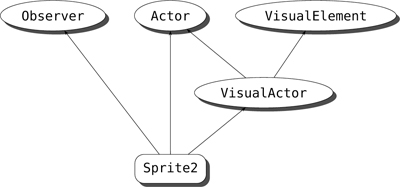

}