Domain 3

Risk, Identification, Monitoring, and Analysis

Organizations face a wide range of challenges today, including ever-expanding risks to organizational assets, intellectual property, and customer data. Understanding and managing these risks is an integral component of organizational success. The security practitioner is expected to participate in the organizational risk management process, assist in identifying risks to information systems, and develop and implement controls to mitigate identified risks. As a result, the security practitioner must have a firm understanding of risk, response, and recovery concepts and best practices.

Topics

The following topics are addressed in this chapter:

- Understand the risk management process

  - Risk management concepts (e.g., impacts, threats, vulnerabilities)

  - Risk assessment

  - Risk treatment (accept, transfer, mitigate, avoid)

  - Risk visibility and reporting (e.g., Risk Register, sharing threat intelligence)

  - Audit findings

- Perform security assessment activities

  - Participation in security and testing results

  - Penetration testing

  - Internal and external assessment (e.g., audit, compliance)

  - Vulnerability scanning

  - Interpretation and reporting of scanning and testing results

- Operate and maintain monitoring systems (e.g., continuous monitoring)

  - Events of interest

  - Logging

  - Source systems

- Analyze and report monitoring results

  - Security analytics, metrics, and trends (e.g., baseline)

  - Visualization

  - Event data analysis (e.g., log, packet dump, machine data)

  - Communicate findings


Objectives

Effective incident response allows organizations to respond to threats that attempt to exploit vulnerabilities to compromise the confidentiality, integrity, and availability of organizational assets.

A Systems Security Certified Practitioner (SSCP) plays an integral role in incident response at any organization. The security practitioner must:

- Understand the organization’s incident response policy and procedures.

- Perform security assessments.

- Operate and maintain monitoring systems.

- Be able to execute the correct role in the incident response process.

Introduction to Risk Management

The security practitioner will be expected to be a key participant in the organizational risk management process. As a result, it is imperative that the security practitioner have a strong understanding of the responsibilities in the risk management process. To obtain this understanding, we will define key risk management concepts and then present an overview of the risk management process. We will then present real-world examples to reinforce key risk management concepts.

Risk Management Concepts

While you are reviewing risk management concepts, it is critical to remember that the ultimate purpose of information security is to reduce risks to organizational assets to levels that are deemed acceptable by senior management. Information security should not be performed using a “secure at any cost” approach. The cost of controls should never exceed the loss that would result if the confidentiality, integrity, or availability of a system were compromised. Risks, threats, vulnerabilities, and potential impacts should be assessed. Only after assessing these factors can cost-effective information security controls be selected and implemented that eliminate or reduce risks to acceptable levels.

We will use the National Institute of Standards and Technology (NIST) Special Publication 800-30 R1, “Guide for Conducting Risk Assessments,”1 to establish definitions of key risk management concepts.

- Risk—A risk is a function of the likelihood of a given threat source’s exercising a potential vulnerability, and the resulting impact of that adverse event on the organization.

- Likelihood—The probability that a potential vulnerability may be exercised within the construct of the associated threat environment.

- Threat Source—Either intent and method targeted at the intentional exploitation of a vulnerability or a situation or method that may accidentally trigger a vulnerability.

- Threat—The potential for a threat source to exercise (accidentally trigger or intentionally exploit) a specific vulnerability.

- Vulnerability—A flaw or weakness in system security procedures, design, implementation, or internal controls that could be exercised (accidentally triggered or intentionally exploited) and result in a security breach or a violation of the system’s security policy.

- Impact—The magnitude of harm that could be caused by a threat’s exercise of a vulnerability.

- Asset—Anything of value that is owned by an organization. Assets include both tangible items such as information systems and physical property and intangible assets such as intellectual property.

These basic risk management concepts merge to form a model for risk as seen in the NIST diagram shown in Figure 3-1.

Figure 3-1: Determining Likelihood of Organizational Risk

Now that we have established definitions for basic risk management concepts, we can proceed with an explanation of the risk management process. It is important to keep these definitions in mind when reviewing the risk management process because these concepts will be continually referenced.

Risk Management Process

Risk management is the process of identifying risks, assessing their potential impacts to the organization, determining the likelihood of their occurrence, communicating findings to management and other affected parties, and developing and implementing risk mitigation strategies to reduce risks to levels that are acceptable to the organization. The first step in the risk management process is conducting a risk assessment.

Risk Assessment

Risk assessments evaluate threats to information systems, system vulnerabilities, and weaknesses, and the likelihood that threats will exploit these vulnerabilities and weaknesses to cause adverse effects. For example, a risk assessment could be conducted to determine the likelihood that an unpatched system connected directly to the Internet would be compromised. The risk assessment would determine that there is an almost 100% likelihood that the system would be compromised by a number of potential threats such as casual hackers and automated programs. Although this is an extreme example, it helps to illustrate the purpose of conducting risk assessments.

The security practitioner will be expected to be a key participant in the risk assessment process. The security practitioner's responsibilities may include identifying system, application, and network vulnerabilities or researching potential threats to information systems. Regardless of the security practitioner's role, it is important that the security practitioner understand the risk assessment process and how it relates to implementing controls, safeguards, and countermeasures to reduce risk exposure to acceptable levels.

When performing a risk assessment, an organization should use a methodology with repeatable steps that produce reliable results. Consistency is vital in the risk assessment process, as failure to follow an established methodology can produce inconsistent results. Inconsistent results prevent organizations from accurately assessing risks to organizational assets and can lead to ineffective risk mitigation, risk transference, and risk acceptance decisions.

Although a number of risk assessment methodologies exist, they generally follow a similar approach. NIST Special Publication 800-30 R1, “Guide for Conducting Risk Assessments,” details a four-step risk assessment process. The risk assessment process described by NIST is composed of the steps shown in Figure 3-2.

Although a complete discussion of each step described within the NIST Risk Assessment Process is outside the scope of this text, we will use the methodology as a guideline to explain the typical risk assessment process. A brief description of a number of the steps is provided to help the security practitioner understand the methodology and functions that the security practitioner will be expected to perform as an SSCP.

Figure 3-2: The NIST Risk Assessment Process

Step 1: Preparing for the Assessment

The first step in the risk assessment process is to prepare for the assessment. The objective of this step is to establish a context for the risk assessment. This context is established and informed by the results from the risk framing step of the risk management process. Risk framing identifies, for example, organizational information regarding policies and requirements for conducting risk assessments, specific assessment methodologies to be employed, procedures for selecting risk factors to be considered, scope of the assessments, rigor of analyses, degree of formality, and requirements that facilitate consistent and repeatable risk determinations across the organization. Preparing for a risk assessment includes the following tasks:

- Identify the purpose of the assessment.

- Identify the scope of the assessment.

- Identify the assumptions and constraints associated with the assessment.

- Identify the sources of information to be used as inputs to the assessment.

- Identify the risk model and analytic approaches (i.e., assessment and analysis approaches) to be employed during the assessment.

Step 2: Conducting the Assessment

The second step in the risk assessment process is to conduct the assessment. The objective of this step is to produce a list of information security risks that can be prioritized by risk level and used to inform risk response decisions. To accomplish this objective, organizations analyze threats and vulnerabilities, impacts and likelihood, and the uncertainty associated with the risk assessment process. This step also includes the gathering of essential information as a part of each task and is conducted in accordance with the assessment context established in the prepare step of the risk assessment process (Step 1). The expectation for risk assessments is to adequately cover the entire threat space in accordance with the specific definitions, guidance, and direction established during the prepare step. However, in practice, adequate coverage within available resources may dictate generalizing threat sources, threat events, and vulnerabilities to ensure full coverage and assessing specific, detailed sources, events, and vulnerabilities only as necessary to accomplish risk assessment objectives. Conducting risk assessments includes the following specific tasks:

- Identify threat sources that are relevant to organizations.

- Identify threat events that could be produced by those sources.

- Identify vulnerabilities within organizations that could be exploited by threat sources through specific threat events and the predisposing conditions that could affect successful exploitation.

- Determine the likelihood that the identified threat sources would initiate specific threat events and the likelihood that the threat events would be successful.

- Determine the adverse impacts to organizational operations and assets, individuals, other organizations, and the Nation resulting from the exploitation of vulnerabilities by threat sources (through specific threat events).

- Determine information security risks as a combination of likelihood of threat exploitation of vulnerabilities and the impact of such exploitation, including any uncertainties associated with the risk determinations.

The specific tasks are presented in a sequential manner for clarity. Depending on the purpose of the risk assessment, the SSCP may find reordering the tasks advantageous. Whatever adjustments the SSCP makes to the tasks, risk assessments should meet the stated purpose, scope, assumptions, and constraints established by the organizations initiating the assessments.

Step 2a: Identifying Threat Sources

As mentioned previously, a threat is the potential for a particular threat source to successfully exercise a specific vulnerability. During the threat identification stage, potential threats to information resources are identified. Threat sources can originate from natural threats, human threats, or environmental threats. Natural threats include earthquakes, floods, tornadoes, hurricanes, tsunamis, and the like. Human threats are events that are either caused through employee error or negligence or are events that are caused intentionally by humans via malicious attacks that attempt to compromise the confidentiality, integrity, and availability of IT systems and data. Environmental threats are those issues that arise because of environmental conditions such as power failure, HVAC failure, or electrical fire.

For the SSCP, the first step of a risk assessment involves understanding the threats that may target an organization. This step considers both adversarial threats and non-adversarial threats such as natural disasters. During this step, the security practitioner may:

- Determine sources for obtaining threat information.

- Determine what threat taxonomy may be used.

- Provide input or determine what threat information will be used in the overall assessment.

Several threat sources may be referenced as part of the threat identification. Honeypots and honeynets provide targeted and specific threat information in near real time, but they require expert operation, interpretation, and analysis and therefore can be cumbersome and expensive for smaller organizations. Incorrectly configured and deployed honeypots and honeynets can also provide a staging ground for further attacks against an organization. Organizations may also consider threat catalogs such as Appendix D of NIST SP 800-30 R1 or the German BSI Threats Catalogue.2

An organization can subscribe to threat source information services. These services scan the Internet and the globe to determine active threats and how they may be targeting a specific industry, business, or economy. The more an organization is willing to pay, the more specific the threat intelligence it may be able to obtain. Some programs, such as the United States' FBI InfraGard, are designed to provide threat information from the government to businesses and non-governmental organizations.

Since threat information is volatile yet crucial for the risk management process, organizations in similar economies or sectors may band together to share threat information among themselves. These coalitions often exist within a “no attribution” environment where confidentiality of the source of the threat information is the norm. While some areas, such as government, have done well in collaborating, other industries have been slow to adopt threat sharing for fear of losing competitive advantage over rivals.

After the threat identification process has been completed, a threat statement should be generated. A threat statement lists potential threat sources that could exploit system vulnerabilities. Successful threat identification, coupled with vulnerability identification, is a prerequisite for selecting and implementing information security controls.

Step 2a: Identifying Potential Threat Events

Threat events are characterized by the threat sources that could initiate the events. The SSCP needs to define these threat events with sufficient detail to accomplish the purpose of the risk assessment. Multiple threat sources can initiate a single threat event. Conversely, a single threat source can potentially initiate any of multiple threat events. Therefore, there can be a many-to-many relationship among threat events and threat sources that can potentially increase the complexity of the risk assessment. For each threat event identified, organizations need to determine the relevance of the event. The values selected by organizations have a direct linkage to organizational risk tolerance. The more risk averse, the greater the range of values considered. Organizations accepting greater risk or having a greater risk tolerance are more likely to require substantive evidence before giving serious consideration to threat events. If a threat event is deemed to be irrelevant, no further consideration is given. For relevant threat events, organizations need to identify all potential threat sources that could initiate the events.
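The many-to-many relationship between threat sources and threat events can be sketched as a pair of lookup tables. This is only an illustration: the source and event names are hypothetical and are not taken from any threat catalog.

```python
from collections import defaultdict

# Hypothetical (threat source, threat event) pairs judged relevant
# during an assessment; the names are illustrative only.
RELEVANT_PAIRS = [
    ("external attacker", "phishing campaign"),
    ("external attacker", "service outage"),
    ("disgruntled employee", "data theft"),
    ("external attacker", "data theft"),
    ("power failure", "service outage"),
]


def index_pairs(pairs):
    """Build both directions of the many-to-many relationship."""
    events_by_source = defaultdict(set)
    sources_by_event = defaultdict(set)
    for source, event in pairs:
        events_by_source[source].add(event)
        sources_by_event[event].add(source)
    return events_by_source, sources_by_event


events_by_source, sources_by_event = index_pairs(RELEVANT_PAIRS)

# One source can initiate several events ...
print(sorted(events_by_source["external attacker"]))
# ... and one event can be initiated by several sources.
print(sorted(sources_by_event["service outage"]))
```

Keeping both indexes makes it easy to answer the two questions the assessment asks: which events a given source could initiate, and which sources must be considered for a given relevant event.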

Step 2b: Identifying Vulnerabilities and Predisposing Conditions

Identifying vulnerabilities is an important step in the risk assessment process. The step allows for the identification of both technical and nontechnical vulnerabilities that, if exploited, could result in a compromise of system or data confidentiality, integrity, and availability. A review of existing documentation and reports is a good starting point for the vulnerability identification process. This review can include the results of previous risk assessments. It can also include a review of audit reports from compliance assessments, a review of security bulletins and advisories provided by vendors, and data made available via personal and social networking. NIST SP 800-30 R1 Appendix F provides a set of tables for use in identifying predisposing conditions and vulnerabilities.

Vulnerability identification and assessment can be performed via a combination of automated and manual techniques. Automated techniques such as system scanning allow a security practitioner to identify technical vulnerabilities present in assessed IT systems. These technical vulnerabilities may result from failure to apply operating system and application patches in a timely manner, architecture design problems, or configuration errors. By using automated tools, systems can be rapidly assessed and vulnerabilities can be quickly identified.

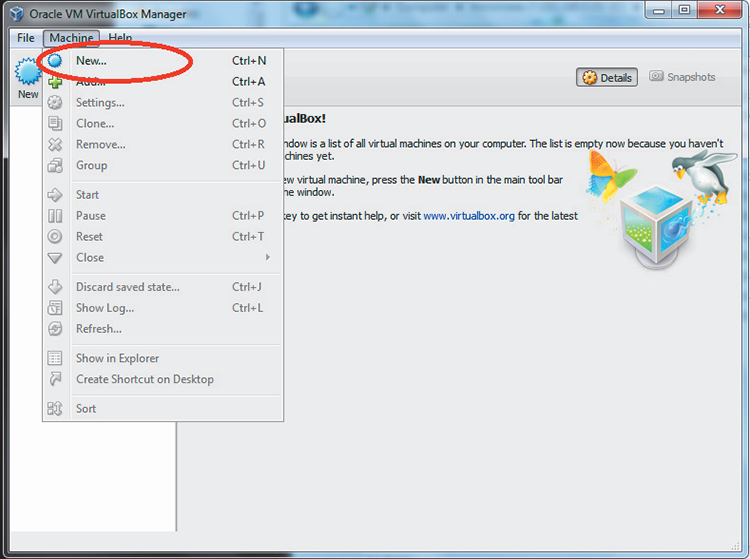

Although a comprehensive list of vulnerability assessment tools is outside the scope of this text, an SSCP should spend a significant amount of time becoming familiar with the various tools that could be used to perform automated assessments. Many commercial tools are available, and many open-source tools are equally effective in performing automated vulnerability assessments. Figure 3-3 is a screenshot of policy configuration using Nessus, a widely used vulnerability assessment tool.

Manual vulnerability assessment techniques may require more time to perform when compared to automated techniques. Typically, automated tools will initially be used to identify vulnerabilities. Manual techniques can then be used to validate automated findings. By performing manual techniques, one can eliminate false positives. False positives are potential vulnerabilities identified by automated tools that are not actual vulnerabilities.
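The workflow of validating automated findings by hand can be sketched as a simple triage step. The `Finding` record and its `manually_confirmed` field are hypothetical; real scanners export results in their own report formats.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    host: str
    issue: str
    # None = not yet reviewed; True/False = result of manual validation.
    manually_confirmed: Optional[bool] = None


def triage(findings):
    """Split scanner output into confirmed, false-positive, and unreviewed."""
    confirmed = [f for f in findings if f.manually_confirmed is True]
    false_positives = [f for f in findings if f.manually_confirmed is False]
    unreviewed = [f for f in findings if f.manually_confirmed is None]
    return confirmed, false_positives, unreviewed


# Illustrative scanner output after a partial manual review.
findings = [
    Finding("10.0.0.5", "missing OS patch", manually_confirmed=True),
    Finding("10.0.0.7", "weak TLS configuration", manually_confirmed=False),
    Finding("10.0.0.9", "default credentials"),
]

confirmed, false_positives, unreviewed = triage(findings)
print(len(confirmed), len(false_positives), len(unreviewed))
```

Only the confirmed findings would feed the later likelihood and impact steps; unreviewed findings remain candidates until someone validates them.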

Figure 3-3: A screenshot of policy configuration using Nessus

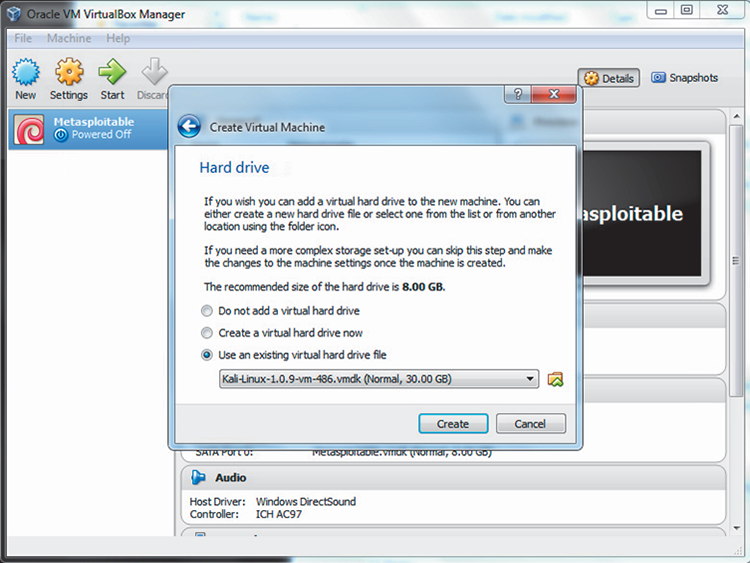

Manual techniques may involve attempts to actually exploit vulnerabilities. When approved personnel attempt to exploit vulnerabilities to gain access to systems and data, it is referred to as penetration testing. Although vulnerability assessments merely attempt to identify vulnerabilities, penetration testing actually attempts to exploit vulnerabilities. Penetration testing is performed to assess security controls and to determine if vulnerabilities can be successfully exploited. Figure 3-4 is a screenshot of the Metasploit console, a widely used penetration-testing tool.

Figure 3-4: A screenshot of the Metasploit console

Step 2c: Determining Likelihood

Likelihood determination attempts to define the likelihood that a given vulnerability could be successfully exploited within the current environment. Likelihood is often the most difficult factor to determine in the risk framework. Factors that must be considered when assessing the likelihood of a successful exploit include:

- The nature of the vulnerability, including factors such as:

  - The operating system, application, database, or device affected by the vulnerability

  - Whether local or remote access is required to exploit the vulnerability

  - The skills and tools required to exploit the vulnerability

- The threat source’s motivation and capability, including factors such as:

  - Threat source motivational factors (e.g., financial gain, political motivation, revenge)

  - Capability (skills, tools, and knowledge required to exploit a given vulnerability)

- The effectiveness of controls deployed to prevent exploit of the given vulnerability

Step 2d: Determining Impact

An impact analysis defines the impact to an organization that would result if a vulnerability were successfully exploited. An impact analysis cannot be performed until system mission, system and data criticality, and system and data sensitivity have been obtained and assessed. The system mission refers to the functionality provided by the system in terms of business or IT processes supported. System and data criticality refer to the system’s importance to supporting the organizational mission. System and data sensitivity refer to requirements for data confidentiality and integrity.

In many cases, system and data criticality and system and data sensitivity can be assessed by determining the adverse impact to the organization that would result from a loss of system and data confidentiality, integrity, or availability. Remember that confidentiality refers to the importance of restricting access to data so that they are not disclosed to unauthorized parties. Integrity refers to the importance that unauthorized modification of data is prevented. Availability refers to the importance that systems and data are available when needed to support business and technical requirements. When a person assesses each of these factors individually and aggregates the individual impacts resulting from a loss of confidentiality, integrity, and availability, the overall adverse impact from a system compromise can be assessed.

Impact can be assessed in either quantitative or qualitative terms. A quantitative impact analysis assigns a dollar value to the impact. The dollar value can be calculated based on an assessment of the likelihood of a threat source exploiting a vulnerability, the loss resulting from a successful exploit, and an approximation of the number of times that a threat source will exploit a vulnerability over a defined period. To understand how a dollar value can be assigned to an adverse impact, one must review some fundamental concepts. An explanation of each of these concepts is provided below.

- Single Loss Expectancy (SLE)—SLE represents the expected monetary loss to an organization from a threat to an asset. SLE is calculated by determining the value of a particular asset (AV) and the approximated exposure factor (EF). EF represents the portion of an asset that would be lost if a risk to the asset was realized. EF is expressed as a percentage value where 0% represents no damage to the asset and 100% represents complete destruction of the asset. SLE is calculated by multiplying the AV by the EF as indicated by the formula:

Single Loss Expectancy = Asset Value × Exposure Factor

- Annualized Loss Expectancy (ALE)—ALE represents the expected annual loss because of a risk to a specific asset. ALE is calculated by determining the SLE and then multiplying it by the Annualized Rate of Occurrence (ARO) as indicated by the formula:

Annualized Loss Expectancy = Single Loss Expectancy × Annualized Rate of Occurrence

- Annualized Rate of Occurrence—ARO represents the expected number of exploitations by a specific threat of a vulnerability to an asset in a given year.
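The SLE and ALE formulas above can be worked through in a few lines of code. The function names and the dollar figures are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def single_loss_expectancy(asset_value, exposure_factor):
    """SLE = AV x EF, where EF is a fraction between 0.0 and 1.0."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure factor must be between 0.0 and 1.0")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle, aro):
    """ALE = SLE x ARO, where ARO is expected occurrences per year."""
    if aro < 0:
        raise ValueError("ARO cannot be negative")
    return sle * aro


# Hypothetical figures: a $200,000 asset, 25% of which is lost per
# incident, with one incident expected every two years (ARO = 0.5).
sle = single_loss_expectancy(200_000, 0.25)
ale = annualized_loss_expectancy(sle, 0.5)
print(f"SLE = ${sle:,.0f}, ALE = ${ale:,.0f}")
```

With these assumed inputs, each incident is expected to cost $50,000, and spreading that over the expected frequency gives an annualized loss of $25,000.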

Organizations can use the results of annualized loss expectancy calculations to determine the quantitative impact to an organization if an exploitation of a specific vulnerability were successful. In addition to the results of quantitative impact analysis, organizations should evaluate the results of qualitative impact analysis.

A qualitative impact analysis assesses impact in relative terms such as high impact, medium impact, and low impact without assigning a dollar value to the impact. A qualitative assessment is often used when it is difficult or impossible to accurately define loss in terms of dollars. For example, a qualitative assessment may be used to assess the impact resulting from a loss of customer confidence, from negative public relations, or from brand devaluation.

Organizations generally find it most helpful to use a blended approach to impact analysis. When they evaluate both the quantitative and qualitative impacts to an organization, a complete picture of impacts can be obtained. The results of these impact assessments provide required data for the risk determination process.

Step 2e: Risk Determination

In the risk determination step, the overall risk to an IT system is assessed. Risk determination uses the outputs from previous steps in the risk assessment process to assess overall risk. Risk determination results from the combination of:

- The likelihood of a threat source attempting to exploit a specific vulnerability

- The magnitude of the impact that would result if an attempted exploit were successful

- The effectiveness of existing and planned security controls in reducing risk

A risk-level matrix can be created that analyzes the combined impact of these factors to assess the overall risk to a given IT system. The exact process for creating a risk-level matrix is outside the scope of this text. For additional information, refer to NIST Special Publication 800-30 R1, “Guide for Conducting Risk Assessments.”

The result of creating a risk-level matrix is that overall risk level may be expressed in relative terms of high, medium, and low risk. Figure 3-5 provides an example of a risk matrix.

Figure 3-5: A risk-level matrix

A description of each risk level and recommended actions:

- High Risk—Significant risk to the organization and to the organizational mission exists. There is a strong need for corrective actions that include reevaluation of existing controls and implementation of additional controls. Corrective actions should be implemented as soon as possible to reduce risk to an acceptable level.

- Medium Risk—A moderate risk to the organization and to the organizational mission exists. There is a need for corrective actions that include reevaluation of existing controls and may include implementation of additional controls. Corrective actions should be implemented within a reasonable time frame to reduce risk to an acceptable level.

- Low Risk—A low risk to the organization exists. An evaluation should be performed to determine if the risk should be reduced or if it will be accepted. If it is determined that the risk should be reduced, corrective actions should be performed to reduce risk to an acceptable level.
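One way to picture how a risk-level matrix produces these ratings is the sketch below. The numeric weights and thresholds are illustrative assumptions, not values prescribed by this text, and the sketch assumes that the effectiveness of existing controls has already been factored into the likelihood rating.

```python
# Illustrative weights; an organization would choose its own scale.
LIKELIHOOD = {"low": 0.1, "medium": 0.5, "high": 1.0}
IMPACT = {"low": 10, "medium": 50, "high": 100}


def risk_level(likelihood, impact):
    """Combine qualitative likelihood and impact ratings into a risk level."""
    score = LIKELIHOOD[likelihood] * IMPACT[impact]
    if score > 50:
        return "high"
    if score > 10:
        return "medium"
    return "low"


# Print the full matrix, one row per likelihood rating,
# with impact columns ordered low, medium, high.
for likelihood in ("high", "medium", "low"):
    row = [risk_level(likelihood, impact) for impact in ("low", "medium", "high")]
    print(f"{likelihood:>6}: {row}")
```

Under these assumed weights, only the combination of high likelihood and high impact is rated high, while any low-likelihood risk is rated low regardless of impact. A more risk-averse organization could lower the thresholds.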

Step 3: Communicating and Sharing Risk Assessment Information

The third step in the risk assessment process is to communicate the assessment results and share risk-related information. The objective of this step is to ensure that decision makers across the organization have the appropriate risk-related information needed to inform and guide risk decisions. Communicating and sharing information consists of the following specific tasks:

- Communicate the risk assessment results.

- Share information developed in the execution of the risk assessment to support other risk management activities.

Step 4: Maintaining the Risk Assessment

The fourth step in the risk assessment process is to maintain the assessment. The objective of this step is to keep current the specific knowledge of the risk organizations incur. The results of risk assessments inform risk management decisions and guide risk responses. To support the ongoing review of risk management decisions (e.g., acquisition decisions, authorization decisions for information systems and common controls, connection decisions), organizations maintain risk assessments to incorporate any changes detected through risk monitoring. Risk monitoring provides organizations with the means to, on an ongoing basis:

- Determine the effectiveness of risk responses.

- Identify risk-impacting changes to organizational information systems and the environments in which those systems operate.

- Verify compliance.

Maintaining risk assessments includes the following specific tasks:

- Monitor risk factors identified in risk assessments on an ongoing basis and understand subsequent changes to those factors.

- Update the components of risk assessments reflecting the monitoring activities carried out by organizations.

Risk Treatment

Risk treatment is the next step in the risk management process. The goal of risk treatment is to reduce risk exposure to levels that are acceptable to the organization. Risk treatment can be performed using a number of different strategies. These strategies include:

- Risk mitigation

- Risk transference

- Risk avoidance

- Risk acceptance

Risk Mitigation

Risk mitigation reduces risks to the organization by implementing technical, managerial, and operational controls. Controls should be selected and implemented to reduce risk to acceptable levels. When controls are selected, they should be selected based on their cost, effectiveness, and ability to reduce risk. Controls restrict or constrain behavior to acceptable actions. To help understand controls, look at some examples of security controls.

A simple control is the requirement for a password to access a critical system. When an organization implements a password control, unauthorized users would theoretically be prevented from accessing a system. The key to selecting controls is to select controls that are appropriate based on the risk to the organization. Although we noted that a password is an example of a control, we did not discuss the length of the password.

If a password is implemented that is one character long and must be changed on an annual basis, a control is implemented; however, the control will have almost no effect on reducing the risk to the organization because the password could be easily cracked. If the password is cracked, unauthorized users could access the system and, as a result, the control has not effectively reduced the risk to the organization.

On the other hand, if a password that is 200 characters long and must be changed on an hourly basis is implemented, it becomes a control that will effectively prevent unauthorized access. The issue in this case is that a password with those requirements will have an unacceptably high cost to the organization. End-users would experience a significant productivity loss because they would be constantly changing their passwords.

The key to control selection is to implement cost-effective controls that reduce or mitigate risks to levels that are acceptable to the organization. By implementing controls based on this concept, organizations will reduce risk but not totally eliminate it.
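The cost-effectiveness test can be sketched by comparing the annualized loss expectancy (ALE) with and without a control against what the control costs per year. The function name and all figures below are hypothetical and serve only to illustrate the comparison.

```python
def control_net_benefit(ale_before, ale_after, annual_control_cost):
    """Annual savings from a control minus what the control costs per year."""
    return (ale_before - ale_after) - annual_control_cost


# Hypothetical figures for two candidate controls on the same risk.
ALE_WITHOUT_CONTROL = 25_000
candidates = {
    "stronger password policy": {"ale_after": 10_000, "annual_cost": 3_000},
    "hourly password rotation": {"ale_after": 8_000, "annual_cost": 40_000},
}

for name, c in candidates.items():
    benefit = control_net_benefit(
        ALE_WITHOUT_CONTROL, c["ale_after"], c["annual_cost"]
    )
    verdict = "cost-effective" if benefit > 0 else "costs more than it saves"
    print(f"{name}: net benefit ${benefit:,} ({verdict})")
```

In this sketch the second control reduces risk slightly more than the first, but its cost, which would include lost end-user productivity, exceeds the loss it prevents, so it would not be selected.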

Controls can be categorized as technical, managerial, or operational. Managerial controls (also called administrative controls) dictate how activities should be performed. Policies, procedures, standards, and guidelines are examples of managerial controls. These controls provide a framework for managing personnel and operations. They also can establish requirements for systems operations. An example of a managerial control is the requirement that information security policies be reviewed on an annual basis and updated as necessary to ensure that they accurately reflect the environment and remain valid.

Technical controls are designed to control end-user and system actions. They can exist within operating systems, applications, databases, and network devices. Examples of technical controls include password constraints, access control lists, firewalls, data encryption, antivirus software, and intrusion prevention systems.

Technical controls help to enforce requirements specified within administrative controls. For example, an organization could have implemented a malicious code policy as an administrative control. The policy could require that all end-users’ systems have antivirus software installed. Installation of the antivirus software would be the technical control that provides support to the administrative control.

In addition to being categorized as technical, administrative, or operational, controls can be simultaneously categorized as either preventative or detective. Preventative controls attempt to prevent adverse behavior and actions from occurring. Examples of preventative controls include firewalls, intrusion prevention systems, and segregation of duties. Detective controls are used to detect actual or attempted violations of system security. Examples of detective controls include intrusion detection systems and audit logging.

Although implementing controls will reduce risk, some amount of risk will remain even after controls have been selected and implemented. The risk that remains after risk reduction and mitigation efforts are complete is referred to as residual risk. Organizations must determine how to treat this residual risk. Residual risk can be treated by risk transference, risk avoidance, or risk acceptance.

Risk Transference

Risk transference transfers risk from an organization to a third party. Some types of risk such as financial risk can be reduced by transferring it to a third party via a number of methods. The most common risk transference method is insurance. An organization can transfer its risk to a third party by purchasing insurance. When an organization purchases insurance, it effectively sells its risk to a third party. The insurer agrees to accept the risk in exchange for premium payments made by the insured. If the risk is realized, the insurer compensates the insured party for any incurred losses.

Organizations must be cautious when relying on risk transference because some risk cannot be transferred. Outsourcing sensitive information processing may seem like outsourcing the risk of personnel data breaches. If a breach occurs, surely those affected would understand that the outsourced processor caused the breach and focus their rage there, right? Unfortunately, this is rarely the case. In most instances, the victims seek damages against the organization that originally collected the information. Organizations must conduct their due diligence when transferring operations and data to third parties, because the organization remains responsible for the protection of the data.

Finally, organizations must be aware of risk that cannot be transferred. While financial risk is easy to transfer to a third party through insurance, reputational damage and loss of customer loyalty are almost impossible to transfer. When an information security risk affects these aspects of an organization, the organization must go on the defensive and engage public relations teams to try to maintain the goodwill of its customers and protect its reputation.

Risk Avoidance

Another way to treat risk is to avoid it. Risk can be avoided by eliminating the entire situation causing the risk. This could involve disabling system functionality or preventing risky activities when risk cannot be adequately reduced. In drastic cases, it can involve shutting down entire systems or parts of a business.

Risk Acceptance

Residual risk can also be accepted by an organization. A risk acceptance strategy indicates that an organization is willing to accept the risk associated with the potential occurrence of a specific event. It is important that when an organization chooses risk acceptance, it clearly understands the risk that is present, the probability that the loss related to the risk will occur, and the cost that would be incurred if the loss were realized. Organizations may determine that risk acceptance is appropriate when the cost of implementing controls exceeds the anticipated losses.
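The comparison between the cost of a control and the anticipated loss can be made concrete with simple arithmetic. The following is a minimal sketch, assuming the organization can estimate a yearly probability of loss and a cost per occurrence. The function names and all figures are hypothetical and purely illustrative.

```python
def expected_annual_loss(probability_per_year: float, loss_per_event: float) -> float:
    """Expected yearly loss: likelihood of the event times its cost."""
    return probability_per_year * loss_per_event


def acceptance_is_reasonable(probability_per_year: float,
                             loss_per_event: float,
                             annual_control_cost: float) -> bool:
    """Return True when the control costs more than the loss it guards against."""
    anticipated = expected_annual_loss(probability_per_year, loss_per_event)
    return annual_control_cost > anticipated


# Hypothetical figures: a 5% yearly chance of a $40,000 loss,
# compared with a control that costs $10,000 per year to operate.
loss = expected_annual_loss(0.05, 40_000)
print(f"Expected annual loss: ${loss:,.0f}")
print("Acceptance is reasonable:", acceptance_is_reasonable(0.05, 40_000, 10_000))
```

A calculation like this only supports the decision. The organization still needs to document that it understands the risk and has chosen to accept it.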

Risk Visibility and Reporting

Once risk has been assessed, whether quantitatively or qualitatively, it should be reported and recorded. Organizations should have a way to aggregate risk in a centralized function that combines information security risk with other risk such as market risk, legal risk, human capital risk, and financial risk. The organizational risk executive function serves as a way to understand total risk to the organization. Risk is aggregated in a system called a risk register or, in some cases, a risk dashboard. The SSCP must ensure only risk information (not vulnerability or threat information) is reported to the risk register. The risk register serves as a way for the organization to know its possible exposure at a given time.

The risk register will generally be shared with stakeholders, allowing them to be kept aware of issues and providing a means of tracking the response to issues. It can be used to flag new risks and to make suggestions on what course of action to take to resolve any issues. The risk register is there to help with the decision-making process and enables managers and stakeholders to handle risk in the most appropriate way. The risk register is a document that contains information about identified risks, analysis of risk severity, and evaluations of the possible solutions to be applied. Presenting this in a spreadsheet is often the easiest way to manage things so that key information can be found and applied quickly and easily.

The SSCP should be familiar with how to create a risk register for the organization. If you are unsure how to create a risk register, the following is a brief guide on how to get started in just a few steps:

- Create the risk register—Use a spreadsheet to document necessary information, as shown in Figure 3-6.

Figure 3-6 Sample risk register

- Record Active Risks—Keep track of active risks by recording them in the risk register along with the date identified, date updated, target date, and closure date. Other useful information to include is the risk identification number, a description of the risk, type and severity of risk, its impact, possible response action, and the current status of risk.

- Assign a Unique Number to Each Risk Element—This will help to identify each unique risk so that you know what the status of the risk is at any given time.

The risk register addresses risk management in four key steps:

- Identifying the risk

- Evaluating the severity of any identified risks

- Applying possible solutions to those risks

- Monitoring and analyzing the effectiveness of any subsequent steps taken
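The fields described above for recording active risks can be sketched as a small data structure that exports to a spreadsheet-friendly format. This is a minimal illustration, not a prescribed layout: the class name, field names, and helper functions are assumptions chosen to mirror the text.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields
from datetime import date
from typing import List, Optional


@dataclass
class RiskEntry:
    risk_id: str                      # unique number assigned to each risk
    description: str
    risk_type: str
    severity: str                     # assumed scale, e.g. "Low", "Medium", "High"
    impact: str
    response_action: str
    status: str
    date_identified: date
    date_updated: date
    target_date: Optional[date] = None
    closure_date: Optional[date] = None


def add_risk(register: List[RiskEntry], entry: RiskEntry) -> None:
    """Append an entry, refusing duplicate identifiers."""
    if any(existing.risk_id == entry.risk_id for existing in register):
        raise ValueError(f"duplicate risk id: {entry.risk_id}")
    register.append(entry)


def open_risks(register: List[RiskEntry]) -> List[RiskEntry]:
    """Risks that have no closure date are still active."""
    return [entry for entry in register if entry.closure_date is None]


def register_to_csv(register: List[RiskEntry]) -> str:
    """Render the register as CSV text that a spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(RiskEntry)])
    writer.writeheader()
    for entry in register:
        writer.writerow(asdict(entry))
    return buffer.getvalue()
```

Keeping the identifier unique is what allows the status of any single risk to be looked up at a given time.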

Security Auditing Overview

A security audit is an evaluation of how well the objectives of a security framework are met and a verification that the security framework is appropriate for the organization. Nothing that comes out of an audit should surprise security practitioners if they have been doing their continuous monitoring. Think of it this way: monitoring is an ongoing evaluation of a security framework done by the folks who manage security day-to-day, while an audit is an evaluation of the security framework performed by someone outside of day-to-day security operations. Security audits serve two purposes for the security practitioner. First, they point out areas where security controls are lacking, policy is not being enforced, or ambiguity exists in the security framework. Second, they highlight the security practices that are being done right. Auditors, in general, should not be perceived as the “bad guys” who are out to prove what a bad job the organization is doing. On the contrary, auditors should be viewed as professionals who are there to assist the organization in driving the security program forward and to assist security practitioners in making management aware of what security steps are being correctly taken and what more needs to be done.

So, who audits and why? There are two categories of auditors. The first is the internal auditor. These people work for the company, and their audits can be perceived as an internal checkup. The second is the external auditor. These folks either are under contract with the company to perform objective audits or are brought in by other external parties. Audits can be performed for several reasons. The following list does not include every reason an audit may be performed, but it covers most of them.

- Annual Audit—Most businesses perform a security audit on an annual basis as dictated by policy.

- Event-Triggered Audit—These audits are often conducted after a particular event occurs, such as an intrusion incident. They are used to both analyze what went wrong and, as with all audits, to confirm due diligence if needed.

- Merger/Acquisition Audit—These audits are performed before a merger/acquisition to give the purchasing company an idea of where the company they are trying to acquire stands on security in relation to their own security framework.

- Regulation Compliance Audit—These audits are used to confirm compliance with the IT security-related portions of legislated regulations such as Sarbanes–Oxley and HIPAA.

- Ordered Audit—Although rare, there are times when a company is ordered by the courts to have a security audit performed.

What are the auditors going to use as a benchmark to test against? Auditors should use the organization's security framework as a basis for them to audit against; however, to ensure that everything is covered, they will first compare the organization's framework against a well-known and accepted standard. What methodology will the auditors use for the audit? There are many different methodologies used by auditors worldwide. Here are a few of them:

- ISO/IEC 27001:2013 (formerly BS 7799-2:2002)—“Specification for Information Security Management.” ISO/IEC 27001:2013 specifies the requirements for establishing, implementing, maintaining, and continually improving an information security management system within the context of the organization. It also includes requirements for the assessment and treatment of information security risks tailored to the needs of the organization. The requirements set out in ISO/IEC 27001:2013 are generic and are intended to be applicable to all organizations, regardless of type, size, or nature.

- ISO/IEC 27002:2013 (previously named ISO/IEC 17799:2005)—“Code of Practice for Information Security Management.” ISO/IEC 27002:2013 gives guidelines for organizational information security standards and information security management practices including the selection, implementation, and management of controls taking into consideration the organization's information security risk environment(s). It is designed to be used by organizations that intend to:

- Select controls within the process of implementing an Information Security Management System based on ISO/IEC 27001.

- Implement commonly accepted information security controls.

- Develop their own information security management guidelines.

- NIST SP 800-37 R1—“Guide for Applying the Risk Management Framework to Federal Information Systems,” which can be retrofitted for private industry. This publication provides guidelines for applying the Risk Management Framework (RMF) to federal information systems. The six-step RMF includes security categorization, security control selection, security control implementation, security control assessment, information system authorization, and security control monitoring. The RMF promotes the concept of near real-time risk management and ongoing information system authorization through the implementation of robust continuous monitoring processes, provides senior leaders the necessary information to make cost-effective, risk-based decisions with regard to the organizational information systems supporting their core missions and business functions, and integrates information security into the enterprise architecture and system development life cycle. Applying the RMF within enterprises links risk management processes at the information system level to risk management processes at the organization level through a risk executive (function) and establishes lines of responsibility and accountability for security controls deployed within organizational information systems and inherited by those systems (i.e., common controls).

- COBIT (Control Objectives for Information and related Technology)—From the Information Systems Audit and Control Association (ISACA). With COBIT 5, ISACA introduced a framework for information security. It includes all aspects of ensuring reasonable and appropriate security for information resources. Its foundation is a set of principles upon which an organization should build and test security policies, standards, guidelines, processes, and controls:

- Meeting stakeholder needs

- Covering the enterprise end-to-end

- Applying a single integrated framework

- Enabling a holistic approach

- Separating governance from management

Auditors may also use a methodology of their own design or one that has been adapted from several guidelines. What difference does it make to you as a security practitioner which methodology they use? From a technical standpoint, it does not matter; however, it is a good idea to be familiar with the methodology they are going to use to understand how management will be receiving the audit results.3

What Does an Auditor Do?

Auditors collect information about your security processes. Auditors are responsible for:

- Providing independent assurance to management that security systems are effective.

- Analyzing the appropriateness of organizational security objectives.

- Analyzing the appropriateness of policies, standards, baselines, procedures, and guidelines that support security objectives.

- Analyzing the effectiveness of the controls that support security policies.

- Stating and explaining the scope of the systems to be audited.

What Is the Audit Going to Cover?

Before auditors begin an audit, they define the audit scope, which outlines what they are going to examine. One way to define the scope is to break the IT systems into manageable areas of security responsibility upon which audits may be based. For example, an audit may be broken into eight domains such as:

- User Domain—The users themselves and their authentication methods

- Workstation Domain—Often considered the end-user systems

- System/Application Domain—Applications that you run on your network, such as email, database, and web applications

- LAN Domain—Equipment required to create the internal LAN

- LAN-to-WAN Domain—The transition area between your firewall and the WAN, often where your DMZ resides

- WAN Domain—Usually defined as things outside of your firewall

- Remote Access Domain—How remote or traveling users access your network

- Cloud and Outsourced Domain—In what areas has the organization outsourced data, processing or transmission to other entities?

Auditors may also limit the scope by physical location, or they may just choose to review a subset of your security framework.

The security practitioner will be asked to participate in the audit by helping the auditors collect information and interpret the findings for the auditors. Having said that, there may be times when IT will not be asked to participate. They might be the reason for the audit, and the auditors need to get an unbiased interpretation of the data. There are several areas the security practitioner will be asked to participate in. Before participating in an audit, ensure controls, policies, and standards are in place to support target areas and be sure they are working up to the level prescribed. An audit is not the place to realize that logging or some other function is not working.

Documentation

As part of an audit, the auditors will want to review system documentation. This can include:

- Disaster/Business Recovery Documentation—While some IT practitioners and auditors do not see this as part of a security audit, others feel that because the recovery process involves data recovery and system configuration, it is an important and often overlooked piece of information security.

- Host Configuration Documentation—Auditors are going to want to see the documentation on how hosts are configured on the organization’s network both to see that everything is covered and to verify that the configuration documentation actually reflects what is being done.

- Baseline Security Configuration Documentation for Each Type of Host—As with the host configuration, this documentation does not just reflect the standard configuration data, but specifically what steps are being done related to security.

- Acceptable Use Documentation—Organizations often spell out acceptable use policies under the user responsibilities policy and the administrator use policy. Some also include it for particular hosts. For example, a company may say that there is to be no transfer of business confidential information over the FTP (file transfer protocol) server within the user policies and reiterate that policy both in an acceptable use policy for the FTP server as well as use it as a login banner for FTP services. As long as the message is the same, there is no harm in repeating it in several places.

- Change Management Documentation—Auditors will need two types of change management documentation. The first is the policy outlining the change management process. The second is the documentation reflecting changes made to a host.

- Data Classification Documentation—How are data classified? Are there some data the organization should spend more effort on securing than others? Having data classification documentation comes in handy for justifying why some hosts have more security restrictions than others do.

- Business Flow Documentation—Although not exactly related to IT security, documentation that shows how business data flow through the network can be a great aid to auditors trying to understand how everything works. For example, how does order entry data flow through the system from when the order is taken until it is shipped out to the customer? What systems do data reside on and how do data move around the network?

Responding to an Audit

Once the security practitioner has finished helping the auditors gather the required data and finished assisting them with interpreting the information, the practitioner’s work is not over. There are still a few more steps that need to be accomplished to complete the audit process.

Exit Interview

After an audit is performed, an exit interview will alert personnel to glaring issues they need to be concerned about immediately. Besides these preliminary alerts, an auditor should avoid giving detailed verbal assessments, which may falsely set the expectation level of the organization with regard to security preparedness in the audited scope.

Presentation of Audit Findings

After the auditors have finished tabulating their results, they will present the findings to management. These findings will contain a comparison of the audit findings versus the company’s security framework and industry standards or “best practices.” These findings will also contain recommendations for mitigation or correction of documented risks or instances of noncompliance.

Management Response

Management will have the opportunity to review the audit findings and respond to the auditors. This is a written response that becomes part of the audit documentation. It outlines plans to remedy findings that are out of compliance, or it explains why management disagrees with the audit findings.

The security practitioner should be involved in the presentation of the audit findings and assist with the management response. Even if input is not sought for the management response, the security practitioner needs to be aware of what issues were presented and what the management’s responses to those issues were.

Once all these steps are completed, the security cycle starts over again. The findings of the audit need to be fixed, mitigated, or introduced into the organization's security framework.

Security Assessment Activities

The security practitioner will be expected to perform security assessment activities including, but not limited to, vulnerability scanning, penetration testing, internal and external assessment, and interpreting the results.

Vulnerability Scanning and Analysis

Vulnerability scanning is simply the process of checking a system for weaknesses. These vulnerabilities can take the form of applications or operating systems that are missing patches, misconfiguration of systems, unnecessary applications, or open ports. While these tests can be conducted from outside the network, as an attacker would, it is advisable to do a vulnerability assessment from a network segment that has unrestricted access to the host being assessed. Why is this? If the security practitioner tests a system only from the outside world, the security practitioner will identify only those vulnerabilities that can be exploited from the outside. However, what happens if an attacker gains access inside the target network? Now vulnerabilities that could easily have been found and fixed are exposed to the attacker. Unlike a penetration tester, whose work is discussed later in this domain, a vulnerability tester has access to network diagrams, configurations, login credentials, and other information needed to make a complete evaluation of the system. The goal of a vulnerability assessment is to study the security level of the systems, identify problems, offer mitigation techniques, and assist in prioritizing improvements.

The benefits of vulnerability testing include the following:

- It identifies system vulnerabilities.

- It allows for the prioritization of mitigation tasks based on system criticality and risk.

- It is considered a useful tool for comparing security posture over time, especially when done consistently each period.

The disadvantages of vulnerability testing include:

- It may not effectively focus efforts if the test is not designed appropriately. Sometimes testers bite off more than they can chew.

- It has the potential to crash the network or host being tested if dangerous tests are chosen. (Innocent and noninvasive tests have been known to cause system crashes.)

Note that vulnerability testing software is often placed into two broad categories:

- General vulnerability

- Application-specific vulnerability

General vulnerability software probes hosts and operating systems for known flaws. It also probes common applications for flaws. Application-specific vulnerability tools are designed specifically to analyze certain types of application software. For example, database scanners are optimized to understand the deep issues and weaknesses of Oracle databases, Microsoft SQL Server, etc., and they can uncover implementation problems therein. Scanners optimized for web servers look deeply into issues surrounding those systems.

Vulnerability scanning software, in general, is often referred to as V/A (vulnerability assessment) software, and sometimes combines a port mapping function to identify which hosts are where and the applications they offer with further analysis that assigns vulnerabilities to applications. Good vulnerability software will offer mitigation techniques or links to manufacturer websites for further research. This stage of security testing is often an automated software process. It is also beneficial to use multiple tools and cross-reference the results of those tools for a more accurate picture. As with any automated process, the security practitioner needs to examine the results closely to ensure that they are accurate for the organization's environment.
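Cross-referencing the output of multiple tools can be sketched as a simple merge of findings. The example below is a minimal illustration, assuming each tool's results have already been normalized into (host, finding) pairs. The tool names, addresses, and finding labels are hypothetical.

```python
from collections import defaultdict


def cross_reference(results_by_tool):
    """Map each (host, finding) pair to the set of tools that reported it."""
    seen = defaultdict(set)
    for tool, findings in results_by_tool.items():
        for host, finding in findings:
            seen[(host, finding)].add(tool)
    return dict(seen)


def split_by_agreement(results_by_tool):
    """Separate findings reported by every tool from those reported by only some."""
    merged = cross_reference(results_by_tool)
    all_tools = set(results_by_tool)
    agreed = sorted(key for key, tools in merged.items() if tools == all_tools)
    needs_review = sorted(key for key, tools in merged.items() if tools != all_tools)
    return agreed, needs_review


# Hypothetical, already-normalized results from two scanners.
results = {
    "scanner_a": [("192.0.2.10", "missing-patch-example"), ("192.0.2.11", "open-telnet")],
    "scanner_b": [("192.0.2.10", "missing-patch-example")],
}
agreed, needs_review = split_by_agreement(results)
print("Reported by all tools:", agreed)
print("Reported by some tools:", needs_review)
```

Agreement between tools raises confidence but does not prove a finding is real, so both groups still need to be examined against the organization's environment.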

Vulnerability testing usually employs software specific to the activity and tends to have the following qualities:

- OS Fingerprinting—This technique is used to identify the operating system in use on a target. A scanner can determine the operating system of a host by analyzing the TCP/IP stack flag settings, which vary from vendor to vendor, or by banner grabbing. Banner grabbing is reading the response banner presented by services on several ports, such as those used for FTP, HTTP, and Telnet. This function is sometimes built into mapping software and sometimes into vulnerability software.

- Stimulus and Response Algorithms—These are techniques to identify application software versions and then reference these versions with known vulnerabilities. Stimulus involves sending one or more packets at the target. Depending on the response, the tester can infer information about the target’s applications. For example, to determine the version of the HTTP server, the vulnerability testing software might send an HTTP GET request to a web server, just like a browser would (the stimulus), and read the reply information it receives back (the response) for information that details the fact that it is Apache version X, IIS version Y, etc.

- Privileged Logon Ability—The ability to automatically log onto a host or group of hosts with user credentials (administrator-level or other level) for a deeper “authorized” look at systems is desirable.

- Cross-Referencing—OS and applications/services (discovered during the port-mapping phase) should be cross-referenced to identify possible vulnerabilities. For example, if OS fingerprinting reveals that the host runs Red Hat Linux 8.0 and that portmapper is one of the listening programs, any pre-8.0 portmapper vulnerabilities can likely be ruled out. Keep in mind that old vulnerabilities have resurfaced in later versions of code even though they were patched at one time. While these instances may occur, the filtering based on OS and application fingerprinting will help the security practitioner better target systems and use the security practitioner's time more effectively.

- Update Capability—Scanners must be kept up to date with the latest vulnerability signatures; otherwise, they will not be able to detect newer problems and vulnerabilities. Commercial tools that do not have quality personnel dedicated to updating the product are of reduced effectiveness. Likewise, open-source scanners should have a qualified following to keep them up to date.

- Reporting Capability—Without the ability to report, a scanner does not serve much purpose. Good scanners can export scan data in a variety of formats, including HTML or PDF for viewing and formats suited to third-party reporting software, and are configurable enough to filter reports into high-, mid-, and low-level detail depending on the intended audience for the report. Reports are used as a basis for determining mitigation activities later. Additionally, many scanners now feed automated risk management dashboards using application programming interfaces (APIs).
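The stimulus-and-response and cross-referencing qualities described in this list can be sketched in a few lines. The example below is a minimal illustration, not how any particular scanner works: it sends an HTTP GET request, reads the Server header from the reply, and looks the banner up in a table. The lookup table and its entry are hypothetical placeholders, and banners can be altered by administrators, so the result is a hint rather than proof. Run it only against hosts you are authorized to test.

```python
import socket


def parse_server_banner(raw_response: bytes):
    """Extract the Server header value from a raw HTTP response, if present."""
    header_block = raw_response.split(b"\r\n\r\n", 1)[0]
    for line in header_block.split(b"\r\n")[1:]:      # skip the status line
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"server":
            return value.strip().decode("latin-1")
    return None


def grab_http_banner(host: str, port: int = 80, timeout: float = 5.0):
    """Send a GET request (the stimulus) and read the reply headers (the response)."""
    request = f"GET / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n".encode("ascii")
    received = b""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(request)
        # Stop once the header block is complete or a size cap is reached.
        while b"\r\n\r\n" not in received and len(received) < 65536:
            data = conn.recv(4096)
            if not data:
                break
            received += data
    return parse_server_banner(received)


# Hypothetical lookup table. A real scanner relies on a maintained vulnerability feed.
KNOWN_ISSUES = {
    "ExampleHTTPd/1.0": ["EXAMPLE-0001 (illustrative entry, not a real advisory)"],
}


def lookup_issues(banner):
    """Cross-reference a banner against the table of known issues."""
    return KNOWN_ISSUES.get(banner, []) if banner else []
```

Separating the parsing from the network call keeps the parsing logic testable without touching a live host.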

Problems that may arise when using vulnerability analysis tools include:

- False Positives—When a scanner uses generalized tests or cannot scan the application deeply, it might not be able to determine whether the application actually has a vulnerability, and it may report only that the application might have one. For example, if it sees that the server is running a remote control application, the test software may report a “High” vulnerability. However, if the security practitioner has taken care to implement the remote control application to a high standard, the organization's actual vulnerability is not as high.

- Crash Exposure—V/A software has some inherent dangers because much of the vulnerability testing software includes denial-of-service test scripts (as well as other scripts), which, if used carelessly, can crash hosts. Ensure that hosts being tested have proper backups and that the security practitioner tests during times that will have the lowest impact on business operations.

- Temporal Information—Scans are temporal in nature, which means that the scan results the security practitioner has today become stale as time moves on and new vulnerabilities are discovered. Therefore, scans must be performed periodically with scanners that are up to date with the latest vulnerability signatures.

Scanner Tools

A variety of scanner tools are available:

- Nessus vulnerability scanner—http://www.tenable.com/products/nessus

- eEye Digital Security’s Retina—http://www.beyondtrust.com/Products/RetinaNetworkSecurityScanner/

- SAINT—http://www.saintcorporation.com/

- For a more in-depth list, see http://sectools.org/web-scanners.html

Weeding Out False Positives

Even if a scanner reports a service as vulnerable, or as missing a patch that leads to a vulnerability, the system is not necessarily vulnerable. Accuracy is a function of the scanner’s quality; that is, how complete and precise the testing mechanisms are (better tests equal better results), how up to date the testing scripts are (fresher scripts are more likely to spot a fuller range of known problems), and how well it performs OS fingerprinting (knowing which OS the host runs helps the scanner pinpoint issues for applications that run on that OS). Double-check the scanner’s work. Verify that a claimed vulnerability is an actual vulnerability. Good scanners will reference documents to help the security practitioner learn more about the issue.

Host Scanning

Organizations serious about security create hardened host configuration procedures and use policy to mandate host deployment and change. There are many ingredients to creating a secure host, but the security practitioner should always remember that what is secure today might not be secure tomorrow, because conditions are ever changing. There are several areas to consider when securing a host or when evaluating its security. These are discussed in the following sections.

Disabling Unneeded Services

Services that are not critical to the role the host serves should be disabled or removed as appropriate for that platform. For the services the host does offer, make sure it is using server programs considered secure, make sure the security practitioner fully understands them, and tighten the configuration files to the highest degree possible. Unneeded services are often installed and left at their defaults, but since they are not needed, administrators ignore or forget about them. This may draw unwanted data traffic to the host from other hosts attempting connections, and it will leave the host vulnerable to weaknesses in the services. If a host does not need a particular host process for its operation, do not install it. If software is installed but not used or intended for use on the machine, it may not be remembered or documented that software is on the machine and therefore will likely not be patched. Port mapping programs use many techniques to discover services available on a host. These results should be compared with the policy that defines this host and its role. One must continually ask the critical questions, for the less a host offers as a service to the world while still maintaining its job, the better for its security (because there is less chance of subverting extraneous applications).
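Comparing port-mapping results with the policy that defines a host's role amounts to a set comparison. The sketch below assumes the policy is expressed as a set of permitted TCP ports. The policy contents and the scan result are hypothetical.

```python
def compare_to_policy(observed_ports, allowed_ports):
    """Compare ports found listening on a host with the ports its policy permits."""
    observed, allowed = set(observed_ports), set(allowed_ports)
    return {
        "unexpected": sorted(observed - allowed),   # candidates to disable or remove
        "missing": sorted(allowed - observed),      # permitted services not found listening
    }


# Hypothetical policy for a web server role and a hypothetical port-mapping result.
WEB_SERVER_POLICY = {22, 443}
scan_result = {21, 22, 80, 443}

print(compare_to_policy(scan_result, WEB_SERVER_POLICY))
```

Any port listed as unexpected is a prompt to ask whether the service behind it is needed for the host to do its job.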

Disabling Insecure Services

Certain programs used on systems are known to be insecure, cannot be made secure, and are easily exploitable; use secure alternatives instead. These applications were developed for private, secure LAN environments, but as connectivity proliferated worldwide, they came to be used over insecure communication channels. Their weaknesses include the following:

- They usually send authentication information unencrypted. For example, FTP and Telnet send usernames and passwords in the clear.

- They usually send data unencrypted. For example, HTTP sends data from client to server and back again entirely in the clear. For many applications this is acceptable; for some it is not. SMTP also sends mail data in the clear unless it is secured by the application (e.g., the use of Pretty Good Privacy [PGP] within Outlook).

- Common services are studied carefully for weaknesses by people motivated to attack the organization’s systems.

Therefore, to protect hosts, one must understand the implications of using these and other services that are commonly attacked. Eliminate them when necessary or replace them with more secure alternatives. For example:

- To ensure privacy of login information as well as the contents of client to server transactions, use SSH (secure shell) to log in to hosts remotely instead of Telnet.

- Use SSH as a secure way to send insecure data communications between hosts by redirecting the insecure data into an SSH wrapper. The details for doing this are different from system to system.

- Use SCP (Secure Copy) instead of FTP (File Transfer Protocol).
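The substitutions above can be captured in a small lookup that flags cleartext services found listening on a host. The sketch below is illustrative only: it covers just the two replacements named in the list, keyed by the well-known TCP ports for FTP (21) and Telnet (23), and the function name is hypothetical.

```python
# Cleartext services named in the text, keyed by well-known TCP port,
# paired with the replacement the text suggests.
INSECURE_SERVICES = {
    21: ("FTP", "SCP"),
    23: ("Telnet", "SSH"),
}

def suggest_replacements(listening_ports):
    """Return (port, service, replacement) for each insecure service found."""
    return [
        (port, *INSECURE_SERVICES[port])
        for port in sorted(listening_ports)
        if port in INSECURE_SERVICES
    ]
```

The input would typically be the set of open ports reported by a port mapper for the host under review.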

Ensuring Least Privilege File System Permissions

Least privilege is the concept that describes the minimum number of permissions required to perform a particular task. This applies to services/daemon processes as well as user permissions. Often systems installed out of the box are at minimum security levels. Make an effort to understand how secure newly installed configurations are, and take steps to lock down settings using vendor recommendations.

Making Sure File System Permissions Are as Tight as Possible

For UNIX-based systems, remove all unnecessary SUID (set user ID) and SGID (set group ID) programs. These bits cause a program to run with the privileges of the file's owner or group rather than those of the user who started it. This ability becomes even more dangerous when the program is owned by root and therefore runs with root permissions as a part of its normal operation. For Windows-based systems, use the Microsoft Management Console (MMC) “Security Configuration and Analysis” and “Security Templates” snap-ins to analyze and secure multiple features of the operating system, including audit and policy settings and the registry.
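On a UNIX-based system, an inventory of SUID and SGID programs is the natural starting point for deciding which ones are unnecessary. The following Python sketch walks a directory tree and reports regular files with either bit set. The function name is an assumption for illustration, and the decision about which files to change remains a manual review step.

```python
import os
import stat

def find_setid_files(root):
    """Yield (path, flags) for regular files under `root` with SUID or SGID set."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # unreadable or vanished file; skip it
            if not stat.S_ISREG(mode):
                continue
            flags = []
            if mode & stat.S_ISUID:
                flags.append("SUID")
            if mode & stat.S_ISGID:
                flags.append("SGID")
            if flags:
                yield path, flags
```

Running `list(find_setid_files("/usr"))` with sufficient privileges gives a list to compare against the programs the host's role actually requires.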

Establishing and Enforcing a Patching Policy

Patches are pieces of software code meant to fix a vulnerability or problem that has been identified in a portion of an operating system or in an application that runs on a host. Keep the following in mind regarding patching:

- Patches should be tested for functionality, stability, and security. You should also ensure that the patch does not change the security configuration of the organization's host. Some patches might reinstall a default account or change configuration settings back to a default mode. You need a way to test whether new patches will break a system or an application running on a system. When you are patching highly critical systems, it is advised to deploy the patch in a test environment that mimics the real environment. If the security practitioner does not have this luxury, only deploy patches at noncritical times, have a back-out plan, and apply patches in steps (meaning one by one) to ensure that each one was successful and the system is still operating.

- Use patch reporting systems that evaluate whether systems have patches installed completely and correctly and which patches are missing. Many vulnerability analysis (V/A) tools have this function built in, but be sure to understand how often the V/A tool vendor updates its patch list compared with a vendor who specializes in patch analysis systems. Some vendors update more promptly than others.

- Optimally, tools should verify that once a patch has been applied to remove a vulnerability, the vulnerability no longer exists. Patch application sometimes includes a manual remediation component, such as a registry change or the removal of a user account, and if the IT person applied the patch but did not perform the manual remediation, the vulnerability may still exist.
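The advice to apply patches one by one, confirm the system still operates after each, and keep a back-out plan can be expressed as a simple control loop. The sketch below is an assumption-laden outline: `apply`, `health_check`, and `back_out` are hypothetical callables that stand in for whatever patch tooling and service checks the organization actually uses.

```python
def apply_patches_stepwise(patches, apply, health_check, back_out):
    """Apply patches one at a time; stop and back out at the first failure.

    `apply`, `health_check`, and `back_out` are caller-supplied callables
    (hypothetical hooks into the patch tooling in use).
    Returns (applied, failed), where `failed` is None on full success.
    """
    applied = []
    for patch in patches:
        apply(patch)
        if not health_check():
            # The system is no longer healthy: undo this patch and stop.
            back_out(patch)
            return applied, patch
        applied.append(patch)
    return applied, None
```

Stopping at the first failure keeps the cause unambiguous, which is the point of applying patches in steps rather than as a batch.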

Examining Applications for Weakness

In a perfect world, applications are built from the ground up with security in mind. Applications should prevent privilege escalation, buffer overflows, and a myriad of other threatening problems. However, this is not always the case, and applications need to be evaluated to confirm that they do not compromise a host. Insecure services and daemons that run on hardened hosts may by nature weaken the host. Applications should come from trusted sources; it is inadvisable to download executables from websites the security practitioner knows nothing about. Executables should be hashed, and the hash verified against the value supplied by the publisher. Signed executables also provide a level of assurance regarding the integrity of the file. Some programs can help evaluate a host’s applications for problems. In particular, these focus on web-based systems and database systems:

- Nikto (http://www.cirt.net) evaluates Web CGI systems for common and uncommon vulnerabilities in implementation.

- WebInspect (http://www.purehacking.com.au) is an automated Web server scanning tool.

- Trustwave (https://www.trustwave.com) evaluates applications, especially various types of databases, for vulnerabilities.
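Before any such evaluation, the practitioner can confirm that a downloaded executable matches the hash supplied by its publisher. The following Python sketch assumes the publisher provides a SHA-256 value in hexadecimal; the algorithm choice and the function names are assumptions for illustration, and a different digest should be substituted if the publisher uses one.

```python
import hashlib

def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 digest of a file without loading it all into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def matches_published_hash(path, published_hex):
    """True if the file's digest equals the value the publisher supplied."""
    return sha256_of_file(path) == published_hex.strip().lower()
```

A mismatch means the file differs from what the publisher released and should not be installed until the discrepancy is explained.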

The SSCP should do the following:

- Ensure that antivirus and antimalware software is installed and is up to date with the latest scan engine and pattern file offered by the vendor.

- Use products that encourage easy management and updates of signatures; otherwise, the systems may fail to be updated, rendering them ineffective to new exploits.

- Use products that centralize reporting of problems to spot problem areas and trends.

- Use system logging. Logging methods are advisable to ensure that system events are noted and securely stored, in the event they are needed later.

- Subscribe to vendor information. Vendors often publish information regularly, not only to keep their name in front of the security practitioner but also to inform the security practitioner of security updates and best practices for configuring their systems. Other organizations such as Security Focus (http://www.securityfocus.com) and CERT (http://www.cert.org) publish news of vulnerabilities. Some tools also specialize in determining when a system's software platform is out of compliance with the latest patches.

Firewall and Router Testing

Firewalls are designed to be points of data restriction (choke points) between security domains. They operate on a set of rules driven by a security policy to determine what types of data are allowed from one side to the other (point A to point B) and back again (point B to point A). Similarly, routers can also serve some of these functions when configured with access control lists (ACLs). Organizations deploy these devices to not only connect network segments together but also to restrict access to only those data flows that are required. This can help protect organizational data assets. Routers with ACLs, if used, are usually placed in front of the firewalls to reduce the noise and volume of traffic hitting the firewall. This allows the firewall to be more thorough in its analysis and handling of traffic. This strategy is also known as layering or defense in depth.

Changes to devices should be governed by change control processes that specify what types of changes can occur and when they can occur. This prevents haphazard and dangerous changes to devices that are designed to protect internal systems from other potentially hostile networks, such as the Internet or an extranet to which the organization’s internal network connects. Change control processes should include security testing to ensure the changes were implemented correctly and as expected.

Configuration of these devices should be reflected in security procedures, and the rules of the access control lists should be derived from organizational policy. The point of testing is to ensure that machine configurations match approved policy.

With this sample baseline in mind, port scanners and vulnerability scanners can be leveraged to test the choke point’s ability to filter as specified. If internal (trusted) systems are reachable from the external (untrusted) side in ways not specified by policy, a mismatch has occurred and should be assessed. Likewise, internal to external testing should conclude that only the allowed outbound traffic could occur. The test should compare a device’s logs with the tests dispatched from the test host.
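The comparison described above, between what the test host dispatched, what policy allows, and what the device logged, can be sketched as follows. The data shapes are assumptions for illustration: `probes` maps each tested port to whether the probe reached the far side, and `logged_ports` is the set of ports that appear in the device's log for the test window.

```python
def audit_choke_point(probes, allowed_ports, logged_ports):
    """Compare probe results with policy and with the device's own log.

    probes: dict mapping port -> True if the probe reached the target.
    Returns a list of (port, finding) tuples; an empty list means no mismatch.
    """
    findings = []
    for port, reached in sorted(probes.items()):
        if reached and port not in allowed_ports:
            findings.append((port, "reachable but not allowed by policy"))
        elif not reached and port in allowed_ports:
            findings.append((port, "allowed by policy but blocked"))
        if port not in logged_ports:
            findings.append((port, "probe missing from device log"))
    return findings
```

Each finding is something to assess rather than an automatic verdict, since a missing log entry may reflect the logging configuration rather than a filtering failure.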

Advanced firewall testing will test a device’s ability to perform the following (this is a partial list and is a function of the firewall’s capabilities):

- Limit TCP port scanning reconnaissance techniques (explained earlier in this domain) including SYN, FIN, XMAS, and NULL via the firewall.

- Limit ICMP and UDP port scanning reconnaissance techniques.

- Limit overlapping packet fragments.

- Limit half-open connections to trusted side devices. Attacks that exploit these are called SYN flood attacks: the attacker begins opening many connections but never completes any of them, eventually exhausting the target host’s memory resources.

Advanced firewall testing can leverage a vulnerability or port scanner’s ability to dispatch denial-of-service and reconnaissance tests. A scanner can be configured to direct, for example, massive amounts of SYN packets at an internal host. If the firewall is operating properly and effectively, it will limit the number of these half-open attempts by intercepting them so that the internal host is not adversely affected. These tests must be used with care because there is always a chance that the firewall will not do what is expected and the internal hosts might be affected.
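The behavior being tested, a firewall capping half-open connections so the internal host is not exhausted, can be modeled with a toy class. This is a simplified sketch under stated assumptions, not how any particular firewall is implemented: the class name and threshold are hypothetical, and real devices also age out stale entries and may answer SYNs on the host's behalf.

```python
class HalfOpenLimiter:
    """Toy model of a firewall that caps half-open TCP connections to a host."""

    def __init__(self, max_half_open):
        self.max_half_open = max_half_open
        self.half_open = set()  # (source address, source port) awaiting final ACK

    def on_syn(self, src, src_port):
        """Return True if the SYN is forwarded, False if it is dropped."""
        if len(self.half_open) >= self.max_half_open:
            return False
        self.half_open.add((src, src_port))
        return True

    def on_ack(self, src, src_port):
        """Handshake completed: the connection is no longer half-open."""
        self.half_open.discard((src, src_port))
```

In a test, the count of forwarded SYNs observed at the internal host should never exceed the configured limit, however many the scanner dispatches.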

Security Monitoring Testing

Intrusion detection systems (IDS) are technical security controls designed to monitor for and alert on the presence of suspicious or disallowed system activity within host processes and across networks. Device logging is used for recording many types of events that occur within hosts and network devices. Logs, whether generated by an IDS or by hosts, are used as audit trails and permanent records of what happened and when. Organizations have a responsibility to ensure that their monitoring systems are functioning correctly and alerting on the broad range of communications commonly in use. Documenting this testing can also be used to show due diligence. Likewise, the security practitioner can use testing to confirm that the IDS detects traffic patterns as claimed by the vendor.

With regard to IDS testing, methods should include the ability to provide a stimulus (i.e., send data that simulate an exploitation of a particular vulnerability) and observe the appropriate response by the IDS. Testing can also uncover an IDS’s inability to detect purposeful evasion techniques that might be used by attackers. Under controlled conditions, stimulus can be crafted and sent from vulnerability scanners. Response can be observed in log files generated by the IDS or any other monitoring system used in conjunction with the IDS. If the appropriate response is not generated, investigation of the causes can be undertaken.

With regard to host logging tests, methods should also include the ability to provide a stimulus (i.e., send data that simulates a “log-able” event) and observe the appropriate response by the monitoring system. Under controlled conditions, stimulus can be crafted in many ways depending on the security practitioner's test. For example, if a host is configured to log an alert every time an administrator or equivalent logs on, the security practitioner can simply log on as the “root” user to the organization's UNIX system. In this example, the response can be observed in the system’s log files. If the appropriate log entry is not generated, investigation of the causes can be undertaken.
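The stimulus and response pattern for a host logging test can be sketched with Python's standard `logging` module. This is a minimal illustration under stated assumptions: the event is written through a Python logger rather than by an actual privileged logon, and the `LOGTEST-` marker format and function names are hypothetical. The idea of tagging the stimulus with a unique marker carries over to tests against real system logs.

```python
import logging
import uuid

def emit_stimulus(logger):
    """Generate a log-able event tagged with a unique marker; return the marker."""
    marker = f"LOGTEST-{uuid.uuid4().hex}"
    logger.warning("privileged logon test event %s", marker)
    return marker

def response_observed(log_path, marker):
    """True if the marker from the stimulus appears in the log file."""
    with open(log_path, "r", encoding="utf-8", errors="replace") as fh:
        return any(marker in line for line in fh)
```

If `response_observed` returns False after the stimulus has been sent, the logging configuration warrants investigation, as described above.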

The overall goal is to make sure the monitoring is configured to the organization’s specifications and that it has all of the features needed.

The following traffic types and conditions are those the security practitioner should consider testing for in an IDS environment, as vulnerability exploits can be contained within any of them. If the monitoring systems the security practitioner uses do not cover all of them, the organization's systems are open to exploitation:

- Data Patterns That Are Contained within Single Packets—This is considered a minimum functionality because the IDS need only search through a single packet for an exploit.

- Data Patterns Contained within Multiple Packets—This is considered a desirable function because there is often more than one packet in a data stream between two hosts. This function, stateful pattern matching, requires the IDS to “remember” packets it saw in the past to reassemble them as well as perform analysis to determine if exploits are contained within the aggregate payload.

- Obfuscated Data—This refers to data that is converted from ASCII to Hexadecimal or Unicode characters and then sent in one or more packets. The IDS must be able to convert the code among all of these formats. If a signature that describes an exploit is written in ASCII but the exploit arrives at the organization’s system in Unicode, the IDS must convert it back to ASCII to recognize it as an exploit.

- Fragmented Data—IP data can be fragmented across many small packets, which are then reassembled by the receiving host. Fragmentation occasionally happens in normal communications. In contrast, overlapping fragments is a situation where portions of IP datagrams overwrite and supersede one another as they are reassembled on the receiving system (a teardrop attack). This can wreak havoc on a computer, which can become confused and overloaded during the reassembly process. IDS must understand how to reassemble fragmented data and overlapping fragmented data so it can analyze the resulting data. These techniques are employed by attackers to subvert systems and to evade detection.
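The four conditions in the list above can be illustrated with a toy signature matcher. The sketch below is a simplification under stated assumptions: `SIGNATURE` is a hypothetical pattern, obfuscation is limited to `%XX` hexadecimal escapes, and overlapping fragments are resolved by letting later fragments overwrite earlier ones, which is only one of several reassembly behaviors found on real hosts.

```python
from urllib.parse import unquote_to_bytes

SIGNATURE = b"/etc/passwd"  # hypothetical signature, for illustration only

def in_single_packet(packet, signature=SIGNATURE):
    """Minimum functionality: search one packet's payload."""
    return signature in packet

def in_stream(packets, signature=SIGNATURE):
    """Stateful matching: search the payloads joined in arrival order."""
    return signature in b"".join(packets)

def normalize(payload):
    """Decode %XX hex escapes so encoded bytes compare equal to ASCII."""
    return unquote_to_bytes(payload)

def reassemble(fragments):
    """Rebuild a datagram from (offset, data) fragments.

    Where fragments overlap, later ones overwrite earlier ones
    (an assumed policy); gaps are left as zero bytes.
    """
    size = max(offset + len(data) for offset, data in fragments)
    buf = bytearray(size)
    for offset, data in fragments:
        buf[offset:offset + len(data)] = data
    return bytes(buf)
```

A test stimulus for each condition is then a payload that only the corresponding function detects, for example a signature split across two packets that `in_single_packet` misses but `in_stream` finds.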