CHAPTER SIX

Networking

Networking is a necessary foundational component of any cloud application and its ability to connect to data stores, other applications, and available cloud resources. Google Cloud's networking is based on the highly scalable Jupiter network fabric and the high-performance, flexible Andromeda virtual network stack, which are the same technologies that power Google's internal infrastructure and services.

Jupiter provides Google with tremendous bandwidth and scale. For example, Jupiter fabrics can deliver more than 1 petabit per second of total bisection bandwidth. To put this in perspective, that is enough capacity for 100,000 servers to exchange information at a rate of 10 Gbps each, or enough to read the entire scanned contents of the Library of Congress in less than 1/10th of a second.
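The server comparison above can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch; the variable names are illustrative and the figures are the ones quoted in the paragraph.

```python
# Figures quoted in the text: 100,000 servers at 10 Gbps each.
SERVERS = 100_000
PER_SERVER_GBPS = 10

total_gbps = SERVERS * PER_SERVER_GBPS
total_pbps = total_gbps / 1_000_000  # 1 Pbps = 1,000,000 Gbps

print(f"{total_gbps:,} Gbps = {total_pbps:g} Pbps")  # prints "1,000,000 Gbps = 1 Pbps"
```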

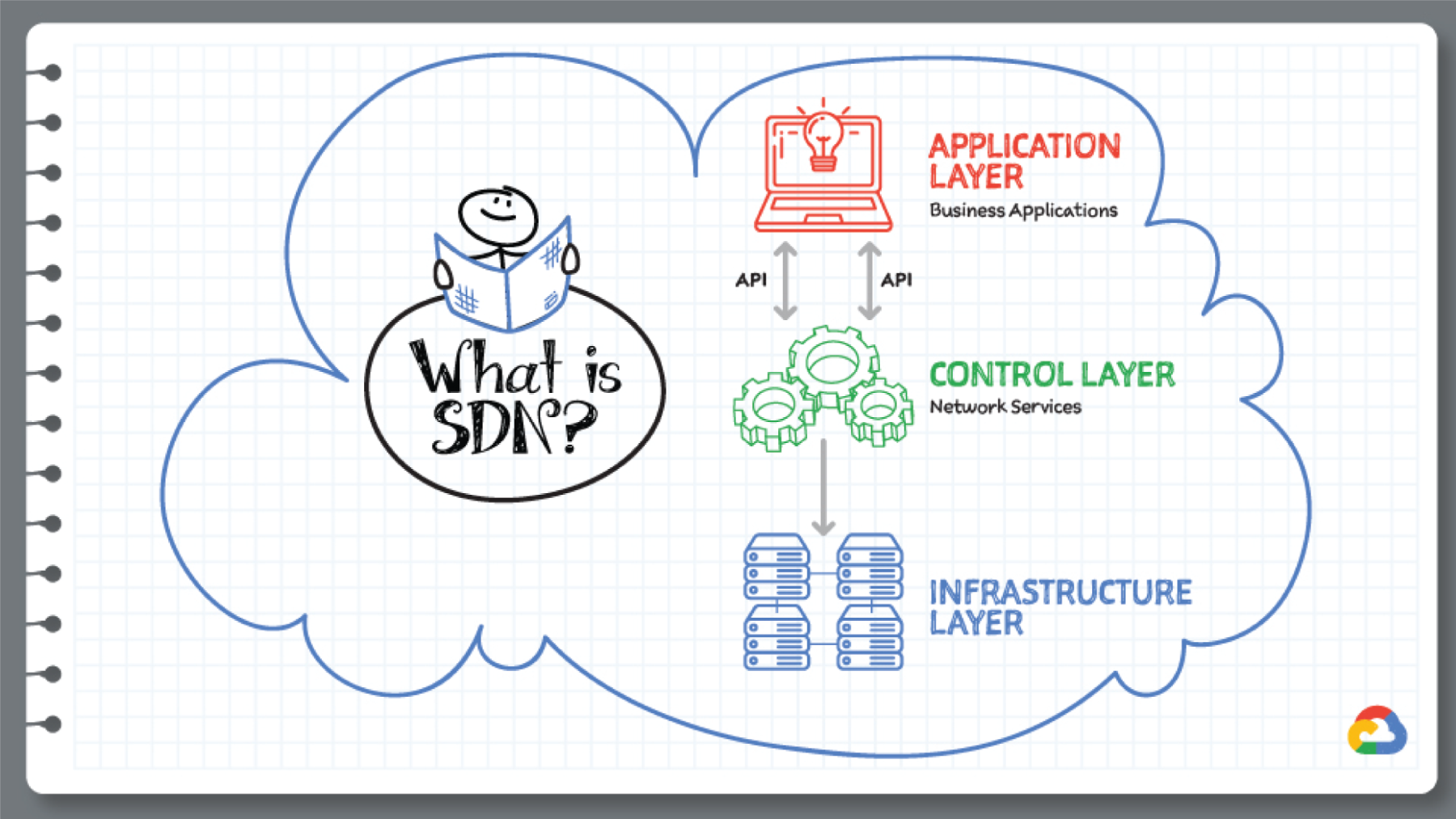

Andromeda is a software-defined networking (SDN) substrate for Google's network virtualization platform, acting as the orchestration point for provisioning, configuring, and managing virtual networks and in-network packet processing. Andromeda lets Google share Jupiter network fabric for Google Cloud services.

This chapter covers the Google Cloud networking infrastructure and the networking services available to you as you connect, scale, secure, modernize, and optimize your applications in Google Cloud.

How Is the Google Cloud Physical Network Organized?

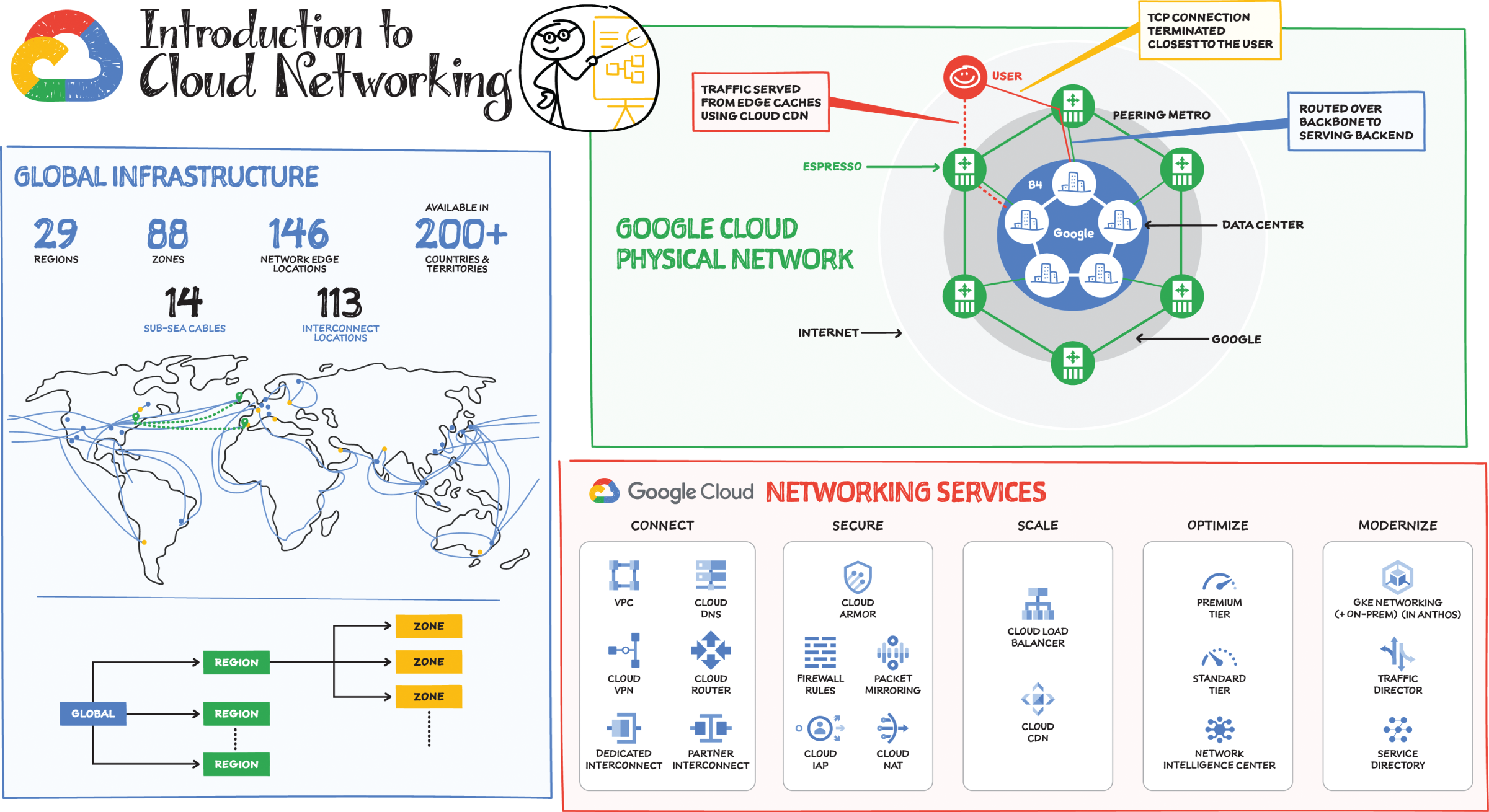

Google Cloud is divided into regions, which are further subdivided into zones:

- A region is a geographic area where the round-trip time (RTT) from one VM to another is typically under 1 ms.

- A zone is a deployment area within a region that has its own fully isolated and independent failure domain.

This means that no two machines in different zones or in different regions share the same fate in the event of a single failure.

As of this writing, Google has 29 regions and 88 zones, serves users in 200+ countries, and operates 146 network edge locations along with a CDN to deliver content. This is the same network that powers Google Search, Maps, Gmail, and YouTube.

Google Network Infrastructure

Google network infrastructure consists of three main types of networks:

- A data center network, which connects all the machines in the network together. This includes hundreds of thousands of miles of fiber-optic cables, including more than a dozen subsea cables.

- A software-based private WAN, which connects all data centers together.

- A software-defined public WAN for user-facing traffic entering the Google network.

A machine is reached from the Internet via the public WAN and connects to other machines on the network via the private WAN. For example, when you send a packet from your virtual machine running in the cloud in one region to a Cloud Storage (GCS) bucket in another, the packet does not leave the Google network backbone. In addition, network load balancers and Layer 7 reverse proxies are deployed at the network edge, where they terminate the TCP/SSL connection at a location closest to the user, eliminating the two network round-trips needed to establish an HTTPS connection.

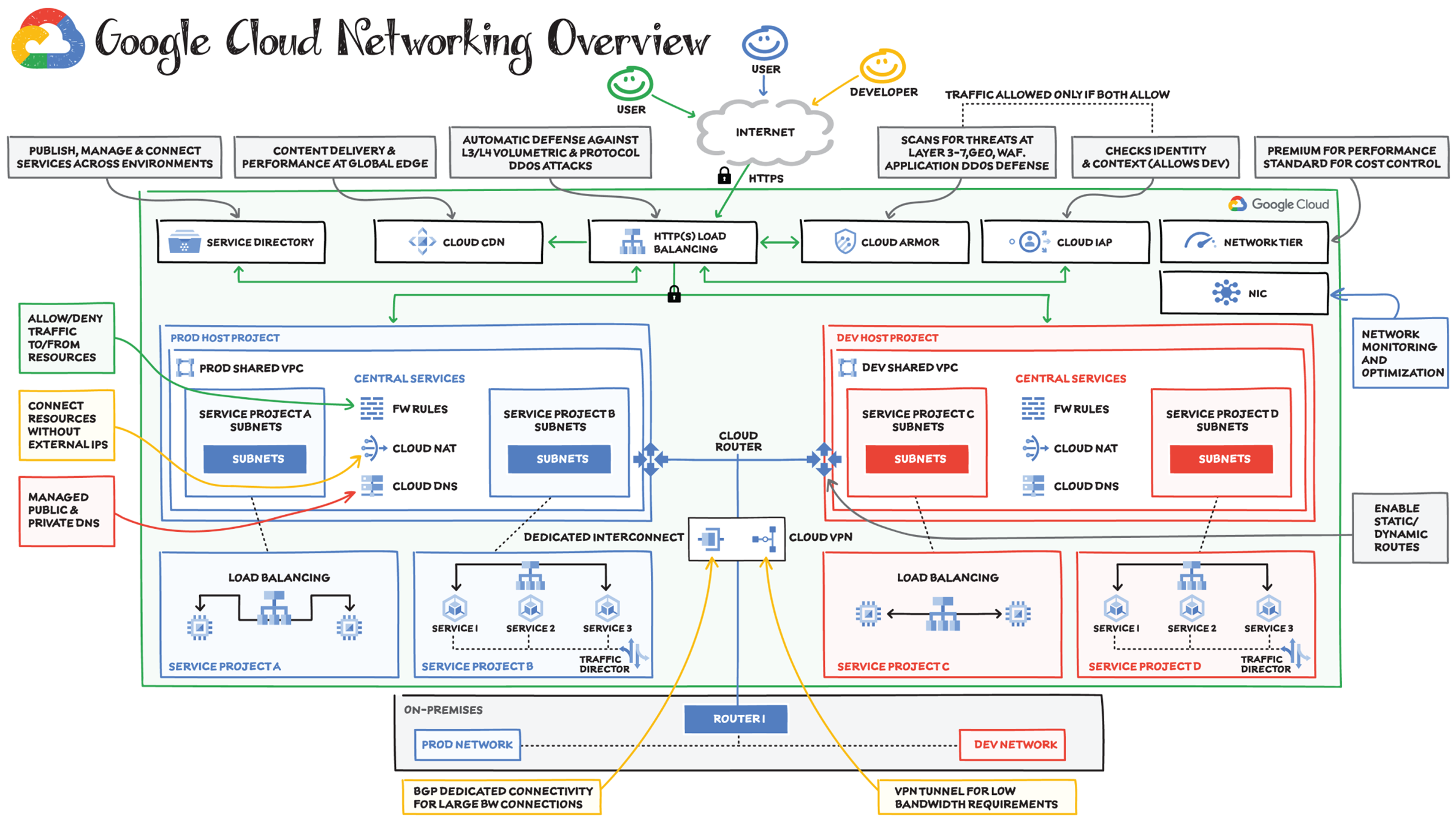

Cloud Networking Services

The Google physical network infrastructure powers the global virtual network that you use to run your applications in the cloud. It offers virtual network capabilities and tools you need to lift-and-shift, expand, and/or modernize your applications:

- Connect — Get started by provisioning the virtual network, connecting to it from other clouds or on-premises, and isolating your resources so that unauthorized projects or resources cannot access the network.

- Secure — Use network security tools for defense against infrastructure distributed denial-of-service (DDoS) attacks, mitigation of data exfiltration risks when connecting with services within Google Cloud, and network address translation to allow controlled internet access for resources without public IP addresses.

- Scale — Quickly scale applications, enable real-time distribution of load across resources in single or multiple regions, and accelerate content delivery to optimize last-mile performance.

- Optimize — Keep an eye on network performance to make sure the infrastructure is meeting your performance needs. This includes visualizing and monitoring network topology, performing diagnostic tests, and visualizing real-time performance metrics.

- Modernize — As you modernize your infrastructure, adopt microservices-based architectures, and employ containerization, make use of tools to help manage the inventory of your heterogeneous services, and route traffic among them.

This chapter covers the major services within the Google Cloud networking toolkit that help with connectivity, scale, security, optimization, and modernization.

With Network Service Tiers, Google Cloud is the first major public cloud to offer a tiered cloud network. Two tiers are available: Premium Tier and Standard Tier.

Premium Tier

Premium Tier delivers traffic from external systems to Google Cloud resources by using Google's highly reliable, low-latency global network. This network consists of an extensive private fiber network with over 100 points of presence (PoPs) around the globe. This network is designed to tolerate multiple failures and disruptions while still delivering traffic.

Premium Tier supports both regional external IP addresses and global external IP addresses for VM instances and load balancers. All global external IP addresses must use Premium Tier. Applications that require high performance and availability, such as those that use HTTP(S), TCP proxy, or SSL proxy load balancers with backends in more than one region, require Premium Tier. Premium Tier is ideal for customers with users in multiple locations worldwide who need the best network performance and reliability.

With Premium Tier, incoming traffic from the Internet enters Google's high-performance network at the PoP closest to the sending system. Within the Google network, traffic is routed from that PoP to the VM in your Virtual Private Cloud (VPC) network or closest Cloud Storage bucket. Outbound traffic is sent through the network, exiting at the PoP closest to its destination. This routing method minimizes congestion and maximizes performance by reducing the number of hops between end users and the PoPs closest to them.

Standard Tier

Standard Tier delivers traffic from external systems to Google Cloud resources by routing it over the Internet. It leverages the double redundancy of Google's network only up to the point where a Google data center connects to a peering PoP. Packets that leave the Google network are delivered using the public Internet and are subject to the reliability of intervening transit providers and ISPs. Standard Tier provides network quality and reliability comparable to that of other cloud providers.

Regional external IP addresses can use either Premium Tier or Standard Tier. Standard Tier is priced lower than Premium Tier because traffic from systems on the Internet is routed over transit (ISP) networks before being sent to VMs in your VPC network or regional Cloud Storage buckets. Standard Tier outbound traffic normally exits Google's network from the same region used by the sending VM or Cloud Storage bucket, regardless of its destination. In rare cases, such as during a network event, traffic might not be able to travel out the closest exit and might be sent out another exit, perhaps in another region.

Standard Tier offers a lower-cost alternative for applications that are not latency or performance sensitive. It is also good for use cases where deploying VM instances or using Cloud Storage in a single region can work.

Choosing a Tier

It is important to choose the tier that best meets your needs. The decision tree can help you decide if Standard Tier or Premium Tier is right for your use case. Because you choose a tier at the resource level — such as the external IP address for a load balancer or VM — you can use Standard Tier for some resources and Premium Tier for others. If you are not sure which tier to use, choose the default Premium Tier and then consider a switch to Standard Tier if you later determine that it's a better fit for your use case.
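The tier guidance in this section can be condensed into a small helper. This is a minimal sketch and not the decision tree from the figure: the `Workload` fields and the `choose_tier` function are hypothetical names, and the rules only restate what the text says (global external IPs and multi-region backends require Premium Tier, Standard Tier suits single-region workloads that are not latency sensitive).

```python
from dataclasses import dataclass


@dataclass
class Workload:
    # Hypothetical fields summarizing the questions raised in the text.
    needs_global_external_ip: bool
    backends_in_multiple_regions: bool
    latency_sensitive: bool


def choose_tier(workload: Workload) -> str:
    """Return "PREMIUM" or "STANDARD" for one resource."""
    # Global external IPs and multi-region backends require Premium Tier.
    if workload.needs_global_external_ip or workload.backends_in_multiple_regions:
        return "PREMIUM"
    # Latency- or performance-sensitive applications stay on the default tier.
    if workload.latency_sensitive:
        return "PREMIUM"
    # Single-region, cost-sensitive workloads can use Standard Tier.
    return "STANDARD"


batch_job = Workload(False, False, False)
global_site = Workload(True, True, True)
print(choose_tier(batch_job), choose_tier(global_site))
```

Because the tier is chosen per resource, a helper like this would be called once per external IP address rather than once per project.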

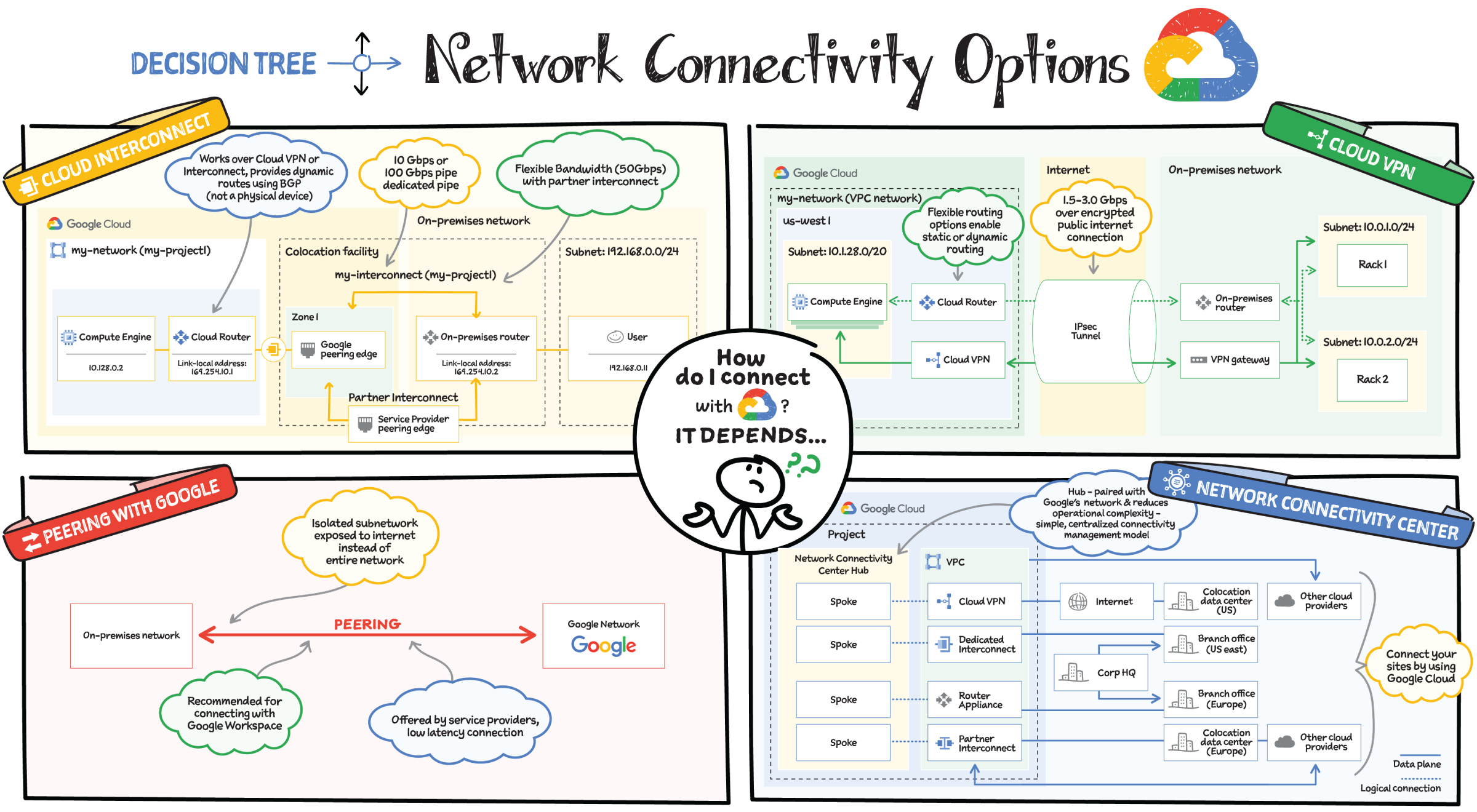

The cloud is an incredible resource, but you can't get the most out of it if you can't interact with it efficiently. And because network connectivity is not a one-size-fits-all situation, you need options for connecting your on-premises network or another cloud provider to Google's network.

When you need to connect to Google's network, you have a few options. Let's explore them.

Cloud Interconnect and Cloud VPN

If you want to encrypt traffic to Google Cloud, need only a lower-throughput solution, or are experimenting with migrating your workloads to Google Cloud, you can choose Cloud VPN. If you need an enterprise-grade connection to Google Cloud that has higher throughput, you can choose Dedicated Interconnect or Partner Interconnect.

Cloud Interconnect

Cloud Interconnect provides two options: you can create a dedicated connection (Dedicated Interconnect) or use a service provider (Partner Interconnect) to connect to Virtual Private Cloud (VPC) networks. If your bandwidth needs are high (10 Gbps to 100 Gbps) and you can reach Google's network in a colocation facility, then Dedicated Interconnect is a cost-effective option. If you don't require as much bandwidth (50 Mbps to 50 Gbps) or can't physically meet Google's network in a colocation facility to reach your VPC networks, you can use Partner Interconnect to connect to service providers that connect directly to Google.
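As a rough illustration of how these bandwidth ranges interact, the sketch below picks an option from the required bandwidth and colocation reach. The function name, its parameters, and the order in which the rules are applied are assumptions made for this example; only the bandwidth figures come from the text, and a real decision would also weigh cost, SLA, and provider availability.

```python
def choose_connectivity(bandwidth_gbps, can_reach_colocation, needs_ipsec_encryption=False):
    """Pick a connectivity option from the ranges quoted in the text (simplified)."""
    # Cloud VPN: encrypted traffic or throughput below Partner Interconnect's 50 Mbps floor.
    if needs_ipsec_encryption or bandwidth_gbps < 0.05:
        return "Cloud VPN"
    # Dedicated Interconnect: 10 to 100 Gbps, and you can meet Google in a colocation facility.
    if can_reach_colocation and 10 <= bandwidth_gbps <= 100:
        return "Dedicated Interconnect"
    # Partner Interconnect: 50 Mbps to 50 Gbps through a service provider.
    if bandwidth_gbps <= 50:
        return "Partner Interconnect"
    raise ValueError("no single option in this sketch covers the requested bandwidth")


print(choose_connectivity(40, can_reach_colocation=True))
print(choose_connectivity(40, can_reach_colocation=False))
```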

Cloud VPN

Cloud VPN lets you securely connect your on-premises network to your VPC network through an IPsec VPN connection in a single region. Traffic traveling between the two networks is encrypted by one VPN gateway and then decrypted by the other VPN gateway. This action protects your data as it travels over the Internet. You can also connect two instances of Cloud VPN to each other. HA VPN provides an SLA of 99.99 percent service availability.

Network Connectivity Center

Network Connectivity Center (in preview) supports connecting different enterprise sites outside of Google Cloud by using Google's network as a wide area network (WAN). On-premises networks can consist of on-premises data centers and branch or remote offices.

Network Connectivity Center is a hub-and-spoke model for network connectivity management in Google Cloud. The hub resource reduces operational complexity through a simple, centralized connectivity management model. Your on-premises networks connect to the hub via one of the following spoke types: HA VPN tunnels, VLAN attachments, or router appliance instances that you or select partners deploy within Google Cloud.

Peering

If you need access to only Google Workspace or supported Google APIs, you have two options:

- Direct Peering to directly connect (peer) with Google Cloud at a Google edge location.

- Carrier Peering to peer with Google by connecting through an ISP (a supported provider), which in turn peers with Google.

Direct Peering exists outside of Google Cloud. Unless you need to access Google Workspace applications, the recommended methods of access to Google Cloud are Dedicated Interconnect, Partner Interconnect, or Cloud VPN.

CDN Interconnect

CDN Interconnect (not shown in the image) enables select third-party Content Delivery Network (CDN) providers to establish direct peering links with Google's edge network at various locations, which enables you to direct your traffic from your VPC networks to a provider's network. Your network traffic egressing from Google Cloud through one of these links benefits from the direct connectivity to supported CDN providers and is billed automatically with reduced pricing. This option is recommended for high-volume egress and frequent content updates in the CDN.

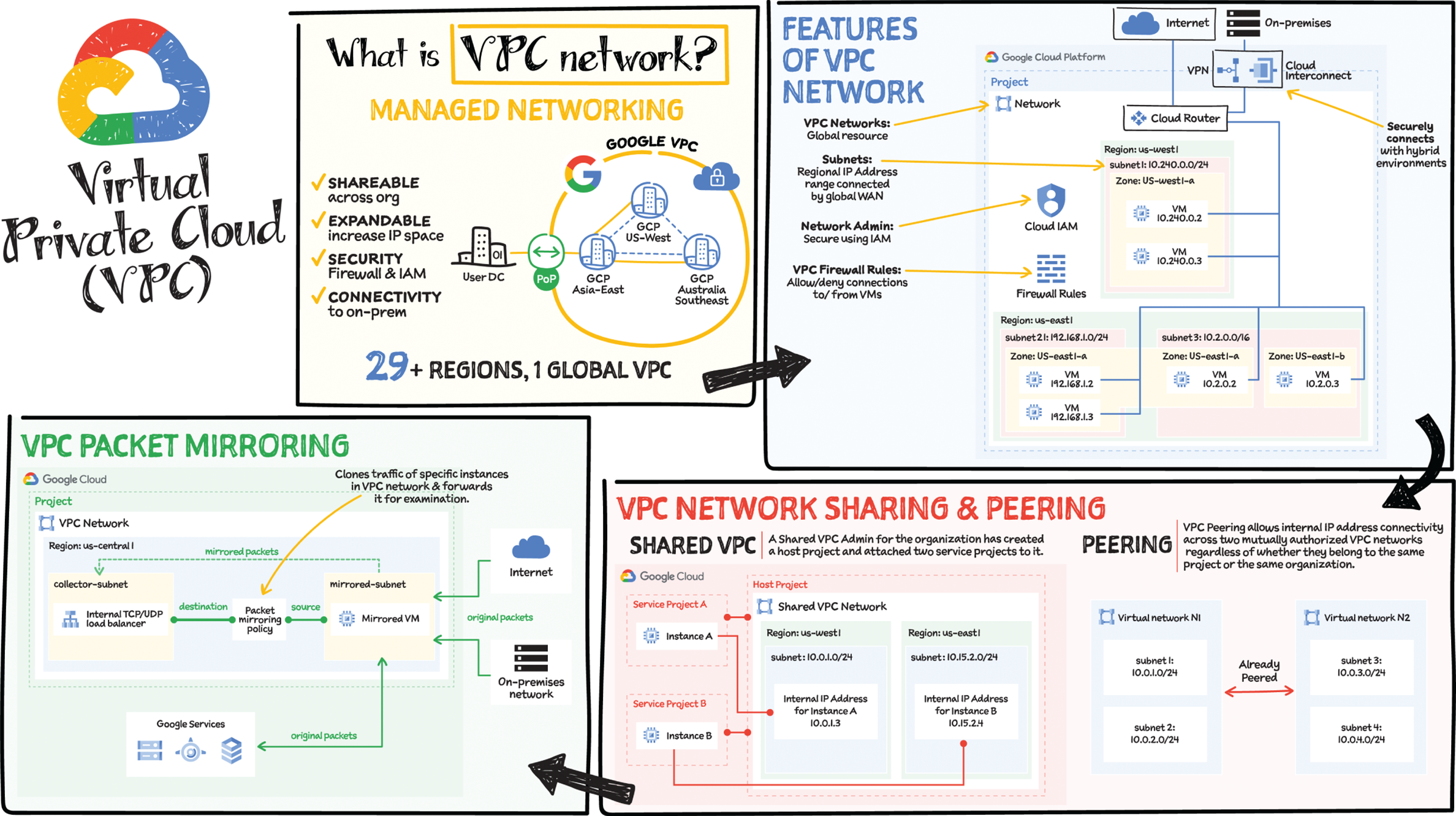

Virtual Private Cloud (VPC) provides networking functionality for your cloud-based resources. You can think of a VPC network the same way you'd think of a physical network, except that it is virtualized within Google Cloud and logically isolated from other networks. A VPC network is a global resource that consists of regional virtual subnetworks (subnets) in data centers, all connected by a global wide area network (Google's SDN).

Features of VPC Networks

A VPC network:

- Provides connectivity for Compute Engine virtual machine (VM) instances, including Google Kubernetes Engine (GKE) clusters, App Engine flexible environment instances, and other Google Cloud products built on Compute Engine VMs.

- Offers built-in Internal TCP/UDP load balancing and proxy systems for internal HTTP(S) Load Balancing.

- Connects to on-premises networks using Cloud VPN tunnels and Cloud Interconnect attachments.

- Distributes traffic from Google Cloud external load balancers to backends.

- Enforces VPC firewall rules, which let you allow or deny connections to or from your VM instances based on a configuration that you specify. Every VPC network functions as a distributed firewall. While firewall rules are defined at the network level, connections are allowed or denied on a per-instance basis. You can think of the VPC firewall rules as existing not only between your instances and other networks, but also between individual instances within the same network.
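To make the allow-or-deny behavior concrete, here is a simplified sketch of evaluating ingress rules for one instance. The `Rule` class and `evaluate_ingress` function are hypothetical. The sketch assumes that the rule with the lowest priority number is checked first and that unmatched ingress traffic is denied; those details are not covered in this chapter, so treat them as assumptions and check the firewall documentation for the exact semantics.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    priority: int       # assumption: lower number is evaluated first
    action: str         # "allow" or "deny"
    source_range: str   # CIDR block the rule applies to
    port: int


def evaluate_ingress(rules, source_ip, port):
    """Return the action of the first matching rule, or "deny" if none match."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if port == rule.port and src in ipaddress.ip_network(rule.source_range):
            return rule.action
    return "deny"  # assumption: ingress is denied unless a rule allows it


rules = [
    Rule(priority=1000, action="allow", source_range="10.0.0.0/8", port=443),
    Rule(priority=900, action="deny", source_range="10.1.0.0/16", port=443),
]
print(evaluate_ingress(rules, "10.1.2.3", 443))
print(evaluate_ingress(rules, "10.2.0.1", 443))
```

The same rule list is applied per instance, which is why the text describes the network as a distributed firewall.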

Shared VPC

Shared VPC enables an organization to connect resources from multiple projects to a common Virtual Private Cloud (VPC) network so that they can communicate with each other securely and efficiently using internal IPs from that network. When you use Shared VPC, you designate a project as a host project and attach one or more other service projects to it. The VPC networks in the host project are called Shared VPC networks. Eligible resources from service projects can use subnets in the Shared VPC network.

VPC Network Peering

Google Cloud VPC Network Peering enables internal IP address connectivity across two VPC networks regardless of whether they belong to the same project or the same organization. Traffic stays within Google's network and doesn't traverse the public Internet.

VPC Network Peering is useful in organizations that have several network administrative domains that need to communicate using internal IP addresses. The benefits of using VPC Network Peering over using external IP addresses or VPNs are lower network latency, added security, and cost savings due to less egress traffic.

VPC Packet Mirroring

Packet Mirroring is useful when you need to monitor and analyze your security status. VPC Packet Mirroring clones the traffic of specific instances in your VPC network and forwards it for examination. It captures all traffic (ingress and egress) and packet data, including payloads and headers. The mirroring happens on the VM instances, not on the network, which means it consumes additional bandwidth on the VMs.

Packet Mirroring copies traffic from mirrored sources and sends it to a collector destination. To configure Packet Mirroring, you create a packet mirroring policy that specifies the source and destination. Mirrored sources are Compute Engine VM instances that you select. A collector destination is an instance group behind an internal load balancer.

How many times have you heard this:

It's not DNS

NO way it is DNS

It was the DNS!

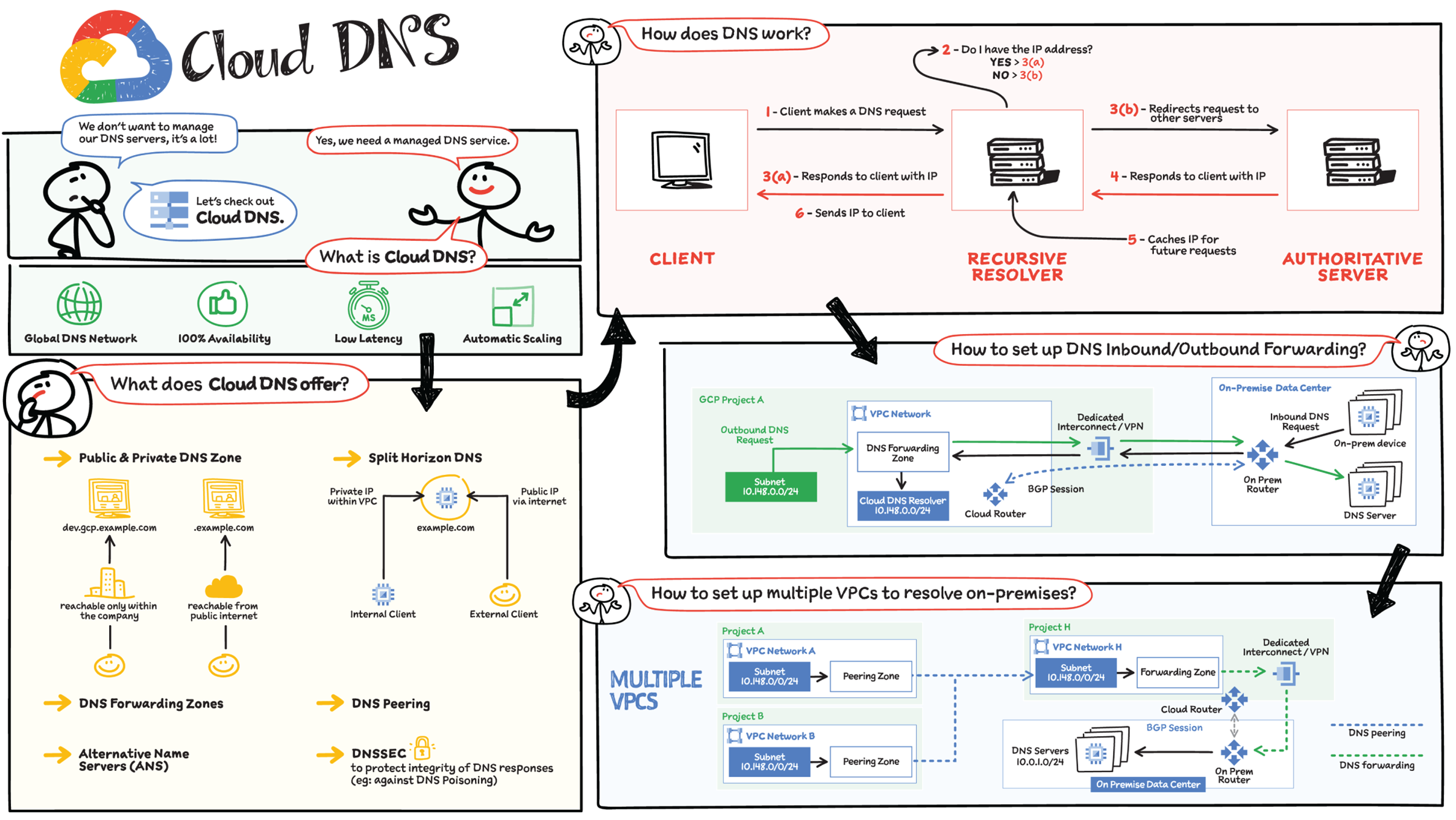

When you are building and managing cloud-native or hybrid cloud applications, you don't want to add more stuff to your plate, especially not DNS. DNS is one of the necessary services for your application to function, but you can rely on a managed service to take care of DNS requirements. Cloud DNS is a managed, low-latency DNS service running on the same infrastructure as Google, which allows you to easily publish and manage millions of DNS zones and records.

How Does DNS Work?

When a client requests a service, the first thing that happens is DNS resolution, which means hostname-to-IP-address translation. Here is how the request flow works:

- Step 1 — A client makes a DNS request.

- Step 2 — The request is received by a recursive resolver, which checks if it already knows the response to the request.

- Step 3 (a) — If yes, the recursive resolver answers the request from its cache.

- Step 3 (b) — If no, the recursive resolver redirects the request to other servers.

- Step 4 — The authoritative server then responds to requests.

- Step 5 — The recursive resolver caches the result for future queries.

- Step 6 — The recursive resolver finally sends the information to the client.
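The steps above can be sketched as a toy recursive resolver with a cache. This is a minimal illustration, not how Cloud DNS is implemented: the `RecursiveResolver` class, the `AUTHORITATIVE` table, the hostname, and the IP address (taken from a documentation range) are all hypothetical, and a dictionary lookup stands in for the query to the authoritative server.

```python
import time

# Hypothetical authoritative data: hostname -> (IP address, TTL in seconds).
AUTHORITATIVE = {"www.example.com": ("192.0.2.10", 300)}


class RecursiveResolver:
    def __init__(self, authoritative, clock=time.monotonic):
        self._authoritative = authoritative
        self._clock = clock
        self._cache = {}            # hostname -> (ip, expiry time)
        self.upstream_queries = 0   # how often the authoritative server was asked

    def resolve(self, hostname):
        now = self._clock()
        cached = self._cache.get(hostname)
        # Steps 2 and 3 (a): answer from cache when a fresh entry exists.
        if cached is not None and cached[1] > now:
            return cached[0]
        # Steps 3 (b) and 4: ask the authoritative server.
        self.upstream_queries += 1
        ip, ttl = self._authoritative[hostname]
        # Step 5: cache the result for future queries.
        self._cache[hostname] = (ip, now + ttl)
        # Step 6: return the answer to the client.
        return ip


resolver = RecursiveResolver(AUTHORITATIVE)
first_answer = resolver.resolve("www.example.com")
second_answer = resolver.resolve("www.example.com")
print(first_answer, second_answer, resolver.upstream_queries)
```

The second lookup is served from the cache, so the authoritative server is queried only once.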

What Does Cloud DNS Offer?

- Global DNS Network: The Managed Authoritative Domain Name System (DNS) service running on the same infrastructure as Google. You don't have to manage your DNS server — Google does it for you.

- 100 Percent Availability and Automatic Scaling: Cloud DNS uses Google's global network of anycast name servers to serve your DNS zones from redundant locations around the world, providing high availability and lower latency for users. It lets customers create, update, and serve millions of DNS records.

- Private DNS Zones: Used for providing a namespace that is only visible inside the VPC or hybrid network environment. Example: A business organization has a domain dev.gcp.example.com, reachable only from within the company intranet.

- Public DNS Zones: Used for providing authoritative DNS resolution to clients on the public Internet. Example: A business has an external website, example.com, accessible directly from the Internet. Not to be confused with Google Public DNS (8.8.8.8), which is just a public recursive resolver.

- Split Horizon DNS: Used to serve different answers (different resource record sets) for the same name depending on who is asking — internal or external network resource.

- DNS Peering: DNS peering makes available a second method of sharing DNS data. All or a portion of the DNS namespace can be configured to be sent from one network to another and, once there, will respect all DNS configuration defined in the peered network.

- Security: Domain Name System Security Extensions (DNSSEC) is a feature of the Domain Name System (DNS) that authenticates responses to domain name lookups. It prevents attackers from manipulating or poisoning the responses to DNS requests.
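Split horizon DNS, listed above, is easy to picture with a small lookup function. The zone contents, the internal address range, and the `answer` function below are hypothetical. The sketch decides by client IP address purely for illustration; in Cloud DNS, a private zone is made visible to specific networks rather than matched per client address.

```python
import ipaddress

# Hypothetical data: the same name with different records per audience.
INTERNAL_NETWORK = ipaddress.ip_network("10.0.0.0/8")
PRIVATE_ZONE = {"app.example.com": "10.1.2.3"}
PUBLIC_ZONE = {"app.example.com": "203.0.113.10"}


def answer(name, client_ip):
    """Serve the private record to internal clients and the public one to everyone else."""
    is_internal = ipaddress.ip_address(client_ip) in INTERNAL_NETWORK
    zone = PRIVATE_ZONE if is_internal else PUBLIC_ZONE
    return zone.get(name)


print(answer("app.example.com", "10.20.30.40"))
print(answer("app.example.com", "198.51.100.7"))
```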

Hybrid Deployments: DNS Forwarding

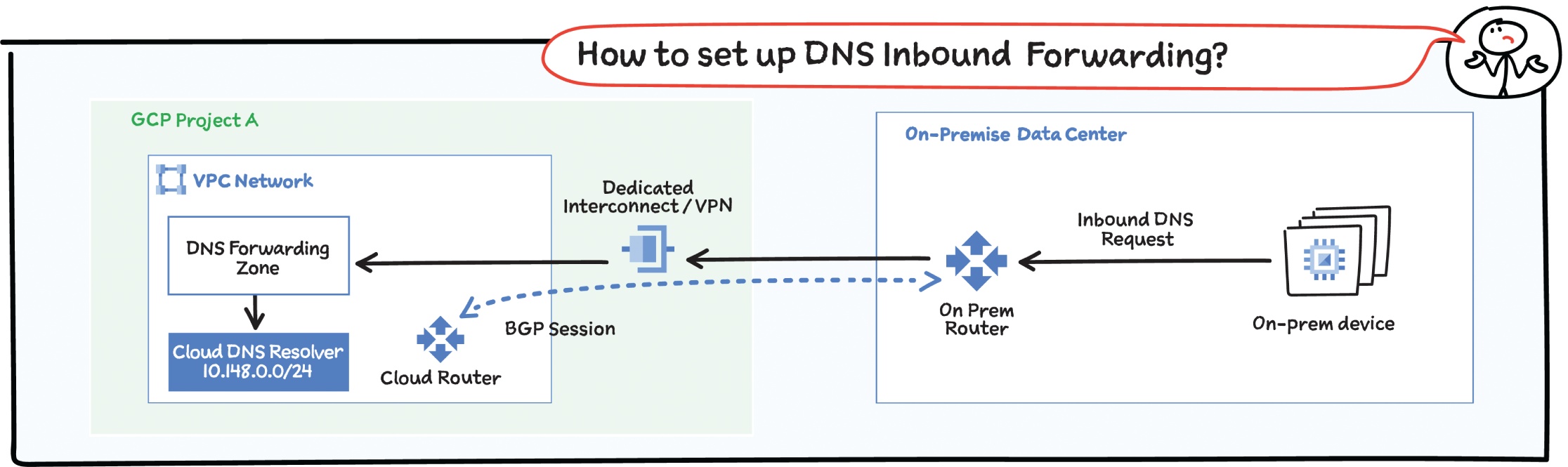

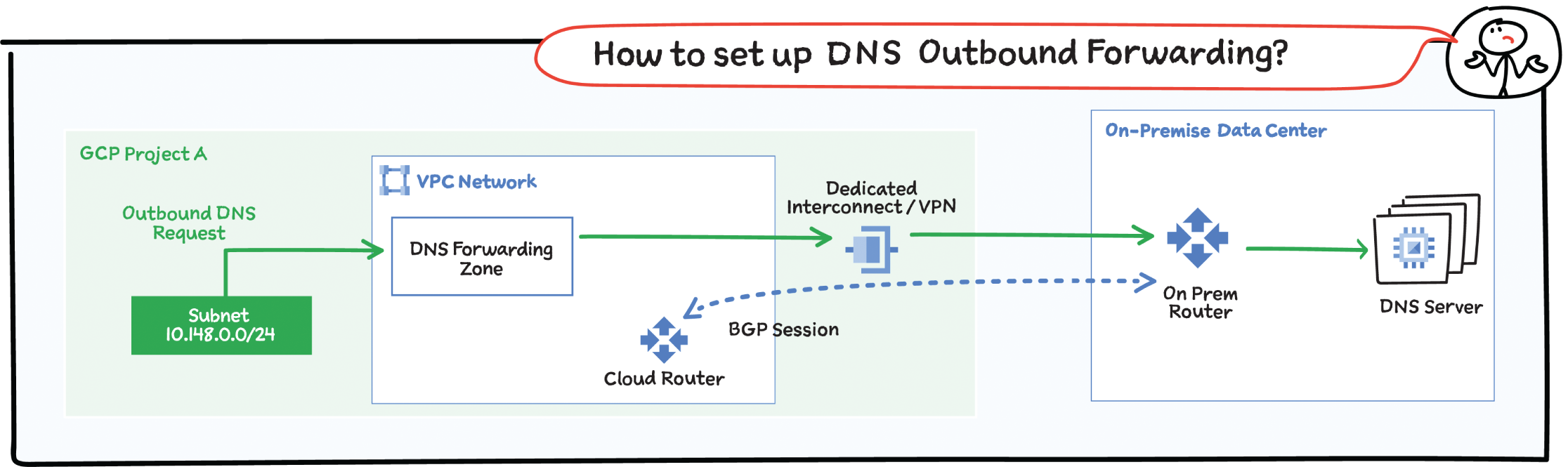

Google Cloud offers inbound and outbound DNS forwarding for private zones. You can configure DNS forwarding by creating a forwarding zone or a Cloud DNS server policy. The two methods are inbound and outbound. You can simultaneously configure inbound and outbound DNS forwarding for a VPC network.

Inbound

Create an inbound server policy to enable an on-premises DNS client or server to send DNS requests to Cloud DNS. The DNS client or server can then resolve records according to a VPC network's name resolution order. On-premises clients use Cloud VPN or Cloud Interconnect to connect to the VPC network.

Outbound

You can configure VMs in a VPC network to do the following:

- Send DNS requests to DNS name servers of your choice. The name servers can be located in the same VPC network, in an on-premises network, or on the Internet.

- Resolve records hosted on name servers configured as forwarding targets of a forwarding zone authorized for use by your VPC network.

- Create an outbound server policy for the VPC network to send all DNS requests to an alternative name server.

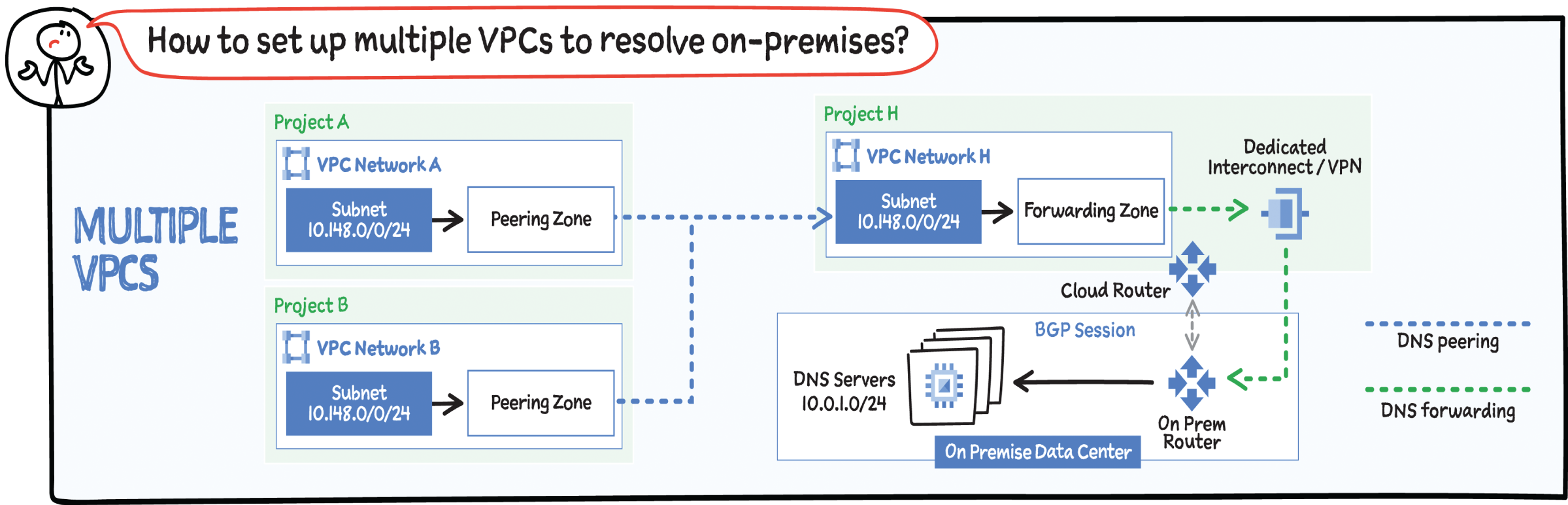

Hybrid Deployments: Hub and Spoke

If you have multiple VPCs that connect to multiple on-premises locations, it's recommended that you utilize a hub-and-spoke model, which helps get around reverse routing challenges due to the usage of the Google DNS proxy range. For redundancy, consider a model where the DNS-forwarding VPC network spans multiple Google Cloud regions, and where each region has a separate path (via interconnect or other means) to the on-premises network. This model allows the VPC to egress queries out of either interconnect path, and allows responses to return via either interconnect path. The outbound request path always leaves Google Cloud via the nearest interconnect location to where the request originated.

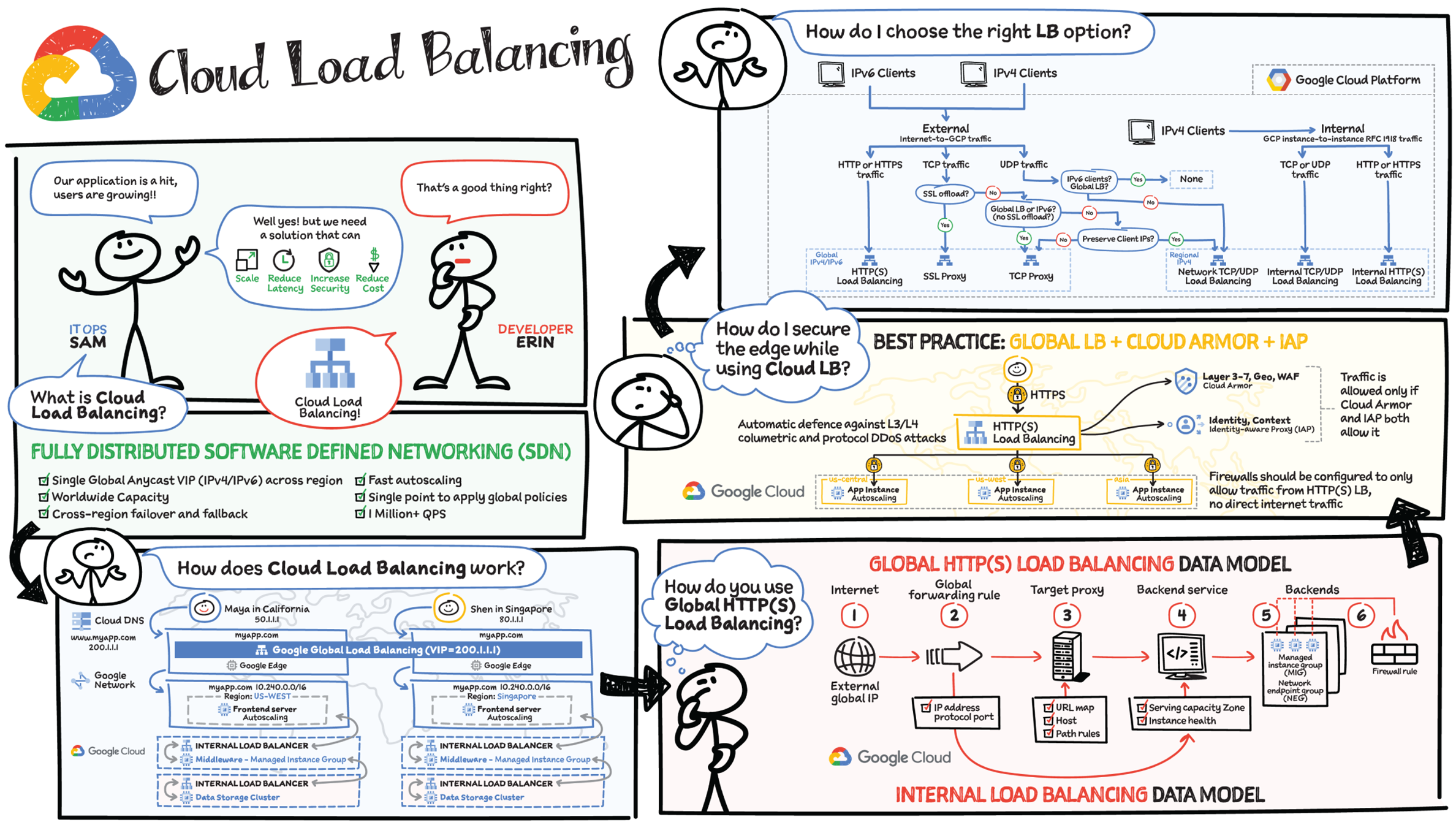

Let's say your new application has been a hit. Usage is growing across the world and you now need to figure out how to scale, optimize, and secure the app while keeping your costs down and your users happy. That's where Cloud Load Balancing comes in.

What Is Cloud Load Balancing?

Cloud Load Balancing is a fully distributed load-balancing solution that balances user traffic (HTTP(S), HTTP/2 with gRPC, TCP/SSL, UDP, and QUIC) to multiple backends to avoid congestion, reduce latency, increase security, and reduce costs. It is built on the same frontend-serving infrastructure that powers Google, supporting 1 million+ queries per second with consistent high performance and low latency.

- Software-defined network (SDN) — Cloud Load Balancing is not an instance- or a device-based solution, which means you won't be locked into physical infrastructure or face HA, scale, and management challenges.

- Single global anycast IP and autoscaling — Cloud Load Balancing frontends all your backend instances in regions around the world. It provides cross-region load balancing, including automatic multiregion failover, which gradually moves traffic in fractions if backends become unhealthy or scales automatically if more resources are needed.

How Does Cloud Load Balancing Work?

External Load Balancing

Consider the following scenario. You have a user, Shen, in California. You deploy your frontend instances in that region and configure a load-balancing virtual IP (VIP). When your user base expands to another region, all you need to do is create instances in additional regions. There is no change in the VIP or the DNS server settings. As your app goes global, the same patterns follow: Maya from India is routed to the instance closer to her in India. If the instances in India are overloaded and are autoscaling to handle the load, Maya will seamlessly be redirected to the other instances in the meantime and routed back to India when instances have scaled sufficiently to handle the load. This is an example of external load balancing at Layer 7.
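The overflow behavior in Maya's example can be sketched as "nearest region with spare capacity wins." The `pick_region` function, the region labels, and the capacity numbers below are hypothetical; the real load balancer also accounts for health checks and shifts traffic gradually, which this sketch ignores.

```python
def pick_region(regions_nearest_first, spare_capacity):
    """Return the closest region that can still take traffic."""
    for region in regions_nearest_first:
        if spare_capacity.get(region, 0) > 0:
            return region
    raise RuntimeError("no region has spare capacity")


# Hypothetical view from Maya's location, nearest region first.
maya_regions = ["india", "singapore", "california"]

normal_day = pick_region(maya_regions, {"india": 40, "singapore": 80, "california": 100})
while_scaling = pick_region(maya_regions, {"india": 0, "singapore": 80, "california": 100})
print(normal_day, while_scaling)
```

In both cases Maya connects to the same VIP; only the backend chosen behind it changes.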

Internal Load Balancing

In any three-tier app, after the frontend you have the middleware and the data sources to interact with, in order to fulfill a user request. That's where you need Layer 4 internal load balancing between the frontend and the other internal tiers. Layer 4 internal load balancing is for TCP/UDP traffic behind RFC 1918 VIP, where the client IP is preserved.

You get automatic health checks and there is no middle proxy; it uses the SDN control and data plane for load balancing.

How to Use Global HTTP(S) Load Balancing

- For global HTTP(S) load balancing, the Global Anycast VIP (IPv4 or IPv6) is associated with a forwarding rule, which directs traffic to a target proxy.

- The target proxy terminates the client session, and for HTTPS you deploy your certificates at this stage, define the backend host, and define the path rules. The URL map provides Layer 7 routing and directs the client request to the appropriate backend service.

- The backend services can be managed instance groups (MIGs) for compute instances, or network endpoint groups (NEGs) for your containerized workloads. This is also where service instance capacity and health are determined.

- Cloud CDN is enabled to cache content for improved performance. You can set up firewall rules to control traffic to and from your backend.

- The internal load balancing setup works the same way; you still have a forwarding rule but it points directly to a backend service. The forwarding rule has the virtual IP address, the protocol, and up to five ports.
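The Layer 7 routing step performed by the URL map can be illustrated with a small path matcher. The path prefixes, backend service names, and the `route` function are hypothetical, and the longest-prefix rule used here is a simplifying assumption rather than a description of the exact matching semantics.

```python
# Hypothetical path rules: URL path prefix -> backend service name.
PATH_RULES = {
    "/video/": "video-backend-service",
    "/static/": "static-backend-service",
    "/static/images/": "image-backend-service",
}
DEFAULT_BACKEND = "web-backend-service"


def route(path):
    """Return the backend service for a request path (longest matching prefix wins)."""
    matches = [prefix for prefix in PATH_RULES if path.startswith(prefix)]
    if not matches:
        return DEFAULT_BACKEND
    return PATH_RULES[max(matches, key=len)]


print(route("/video/intro.mp4"))
print(route("/static/images/logo.png"))
print(route("/checkout"))
```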

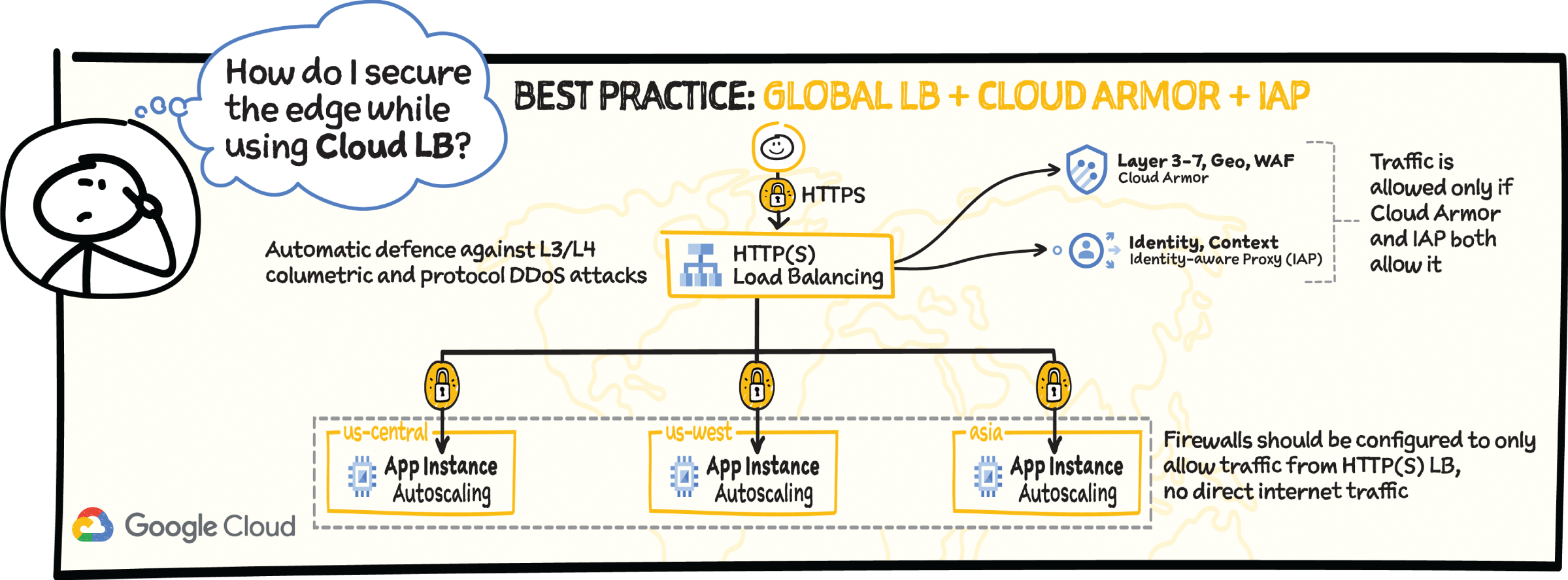

How to Secure Your Application with Cloud Load Balancing

As a best practice, run SSL everywhere. With HTTPS and SSL proxy load balancing, you can use managed certs — Google takes care of the provisioning and managing of the SSL certificate life cycle.

- Cloud Load Balancing supports multiple SSL certificates, enabling you to serve multiple domains using the same load balancer IP address and port.

- It absorbs and dissipates Layer 3 and Layer 4 volumetric attacks across Google's global load-balancing infrastructure.

- Additionally, with Cloud Armor, you can protect against Layer 3–Layer 7 application-level attacks.

- By using Identity-Aware Proxy and firewalls, you can authenticate and authorize access to backend services.

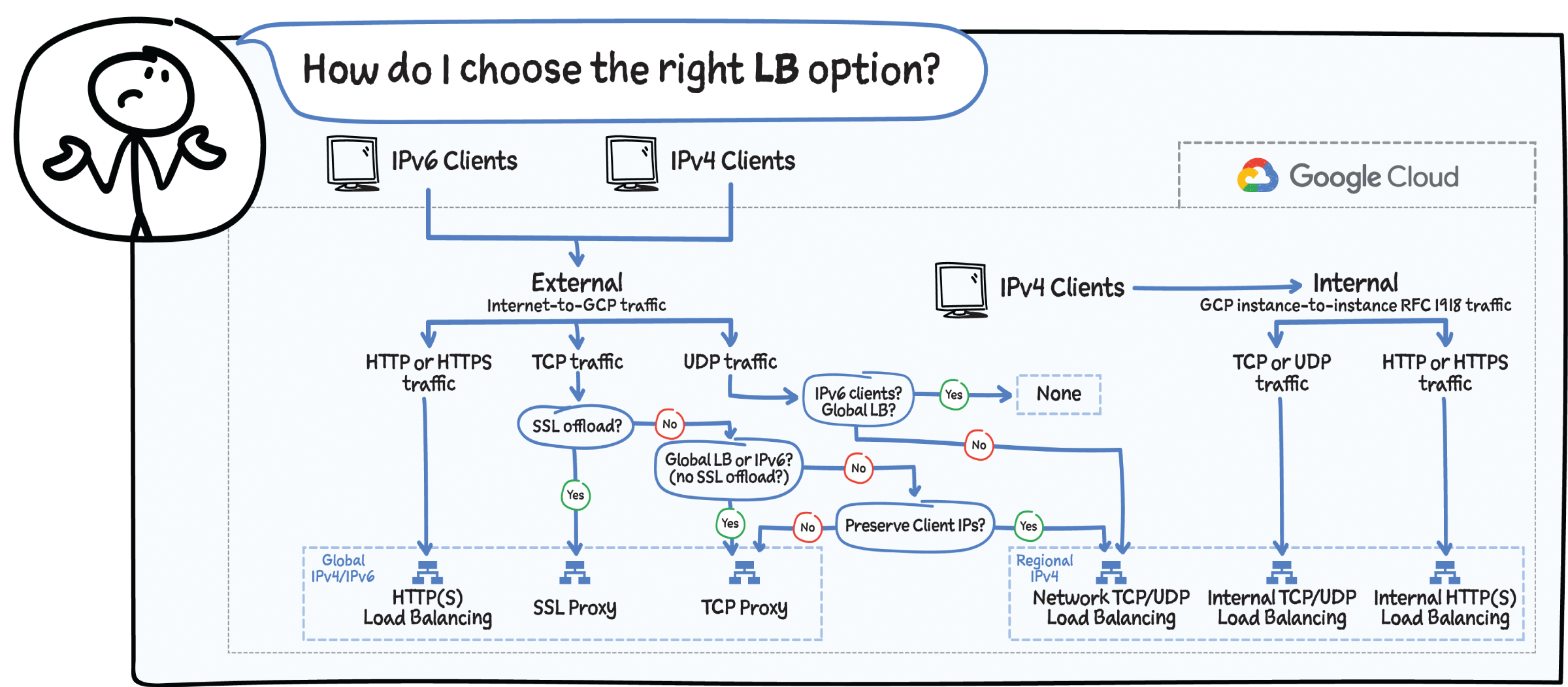

How to Choose the Right Load-Balancing Option

When deciding which load-balancing option is right for your use case, consider factors such as internal vs. external, global vs. regional, and type of traffic (HTTPS, TLS, or UDP).

If you are looking to reduce latency, improve performance, enhance security, and lower costs for your backend systems, then check out Cloud Load Balancing. It is easy to deploy in just a few clicks; simply set up the frontend and backends associated with global VIP, and you are good to go.

No matter what your app or website does, chances are that your users are distributed across various locations and are not necessarily close to your servers. This means the requests travel long distances across the public Internet, leading to inconsistent and sometimes frustrating user experiences. That's where Cloud CDN comes in!

What Is Cloud CDN?

Cloud CDN is a content delivery network that accelerates your web and video content delivery by using Google's global edge network to bring content as close to your users as possible. As a result, latency, cost, and load on your backend servers are reduced, making it easier to scale to millions of users. Global anycast IP provides a single IP for global reach. It enables Google Cloud to route users to the nearest edge cache automatically and avoid DNS propagation delays that can impact availability. It supports HTTP/2 end-to-end and the QUIC protocol from client to cache. QUIC is a multiplexed stream transport over UDP, which reduces latency and makes it ideal for lossy mobile networks.

How Does Cloud CDN Work?

Let's consider an example to understand how Cloud CDN works:

- When a user makes a request to your website or app, the request is routed to the closest Google edge node (we have over 120 of these!) for fast and reliable traffic flow. From there, the request is routed through the global HTTPS load balancer to the backend or origin.

- With Cloud CDN enabled, the content gets directly served from the cache — a group of servers that store and manage cacheable content so that future requests for that content can be served faster.

- The cached content is a copy of cacheable web assets (JavaScript, CSS), images, video, and other content that is stored on your origin servers.

- Cloud CDN automatically caches this content when you use the recommended “cache mode” to cache all static content. If you need more control, you can direct Cloud CDN by setting HTTP headers on your responses. You can also force all content to be cached; just know that this ignores the “private,” “no-store,” or “no-cache” directives in Cache-Control response headers.

- When the request is received by Cloud CDN, it looks for the cached content using a cache key. This is typically the URI, but you can customize the cache key to remove protocol, hosts, or query strings.

- If a cached response is found in the Cloud CDN cache, the response is retrieved from the cache and sent to the user. This is called a cache hit. When a cache hit occurs, Cloud CDN looks up the content by its cache key and responds directly to the user, shortening the round-trip time and reducing the load on the origin server.

- The first time that a piece of content is requested, Cloud CDN can't fulfill the request from the cache because it does not have it in cache. This is called a cache miss. When a cache miss occurs, Cloud CDN might attempt to get the content from a nearby cache. If the nearby cache has the content, it sends it to the first cache by using cache-to-cache fill. Otherwise, it just sends the request to the origin server.

- The maximum lifetime of the object in a cache is defined by the TTLs, or time-to-live values, set by the cache directives for each HTTP response or cache mode. When the TTL expires, the content is evicted from cache.
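The cache key, cache hit, cache miss, and TTL behavior described above can be sketched as a single toy cache. The `EdgeCache` class, the `origin` function, and the URLs are hypothetical; the sketch models one cache node only (no cache-to-cache fill) and drops the protocol from the cache key to show one of the customizations mentioned in the text.

```python
import time
from urllib.parse import urlsplit


class EdgeCache:
    """Toy single-node cache keyed on the request URI."""

    def __init__(self, fetch_from_origin, ttl_seconds, include_protocol=False,
                 clock=time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._include_protocol = include_protocol
        self._clock = clock
        self._store = {}  # cache key -> (body, expiry time)

    def cache_key(self, url):
        parts = urlsplit(url)
        key = parts.netloc + parts.path
        if parts.query:
            key += "?" + parts.query
        if self._include_protocol:
            key = parts.scheme + "://" + key
        return key

    def get(self, url):
        now = self._clock()
        key = self.cache_key(url)
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0], "HIT"       # served from cache
        body = self._fetch(url)          # cache miss: go to the origin
        self._store[key] = (body, now + self._ttl)
        return body, "MISS"


origin_requests = []


def origin(url):
    """Stand-in for the origin server; records every request it receives."""
    origin_requests.append(url)
    return f"content of {urlsplit(url).path}"


fake_now = [0.0]  # controllable clock so TTL expiry can be shown without sleeping
cache = EdgeCache(origin, ttl_seconds=60, clock=lambda: fake_now[0])

first = cache.get("https://www.example.com/logo.png")   # miss, filled from origin
second = cache.get("http://www.example.com/logo.png")   # hit, protocol is not in the key
fake_now[0] = 61.0
third = cache.get("https://www.example.com/logo.png")   # miss again, TTL has expired
print(first[1], second[1], third[1], len(origin_requests))
```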

How to Use Cloud CDN

You can set up Cloud CDN through the gcloud CLI, Cloud Console, or the APIs. Since Cloud CDN uses Cloud Load Balancing to provide routing, health checking, and anycast IP support, it can be enabled easily by selecting a checkbox while setting up your backends or origins.

Cloud CDN makes it easy to serve web and media content using Cloud Storage. You just upload your content to a Cloud Storage bucket, set up your load balancer, and enable caching. To enable hybrid architectures spanning across clouds and on-premises, Cloud CDN and HTTP(S) Load Balancing also support external backends.

Security

- Data is encrypted at rest and in transit from Cloud Load Balancing to the backend for end-to-end encryption.

- You can programmatically sign URLs and cookies to limit video segment access to authorized users only. The signature is validated at the CDN edge and unauthorized requests are blocked right there!

- On a broader level, you can enable SSL for free using Google managed certs.
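The idea behind signed URLs can be illustrated with a generic HMAC scheme: the URL carries an expiry time, a key name, and a signature that the edge recomputes before serving content. The sketch below is an assumption-laden illustration, not Cloud CDN's exact format: the `sign_url` and `verify` functions, the query parameter names, the choice of HMAC-SHA1, and the key handling are all chosen for this example, so consult the product documentation for the real signing procedure.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qs, urlsplit


def _signature(key: bytes, text: str) -> str:
    digest = hmac.new(key, text.encode("utf-8"), hashlib.sha1).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


def sign_url(url: str, key_name: str, key: bytes, expires: int) -> str:
    """Append expiry, key name, and an HMAC signature to the URL."""
    separator = "&" if "?" in url else "?"
    to_sign = f"{url}{separator}Expires={expires}&KeyName={key_name}"
    return f"{to_sign}&Signature={_signature(key, to_sign)}"


def verify(signed_url: str, keys: dict, now: int) -> bool:
    """Edge-side check: known key, not expired, signature matches."""
    to_sign, _, signature = signed_url.rpartition("&Signature=")
    if not to_sign:
        return False
    params = parse_qs(urlsplit(to_sign).query)
    try:
        expires = int(params["Expires"][0])
        key = keys[params["KeyName"][0]]
    except (KeyError, ValueError):
        return False
    if now >= expires:
        return False
    return hmac.compare_digest(_signature(key, to_sign), signature)


keys = {"key-1": b"hypothetical-secret"}
signed = sign_url("https://media.example.com/seg1.ts", "key-1", keys["key-1"], expires=2000)
print(verify(signed, keys, now=1000), verify(signed, keys, now=3000))
```

Because the path is part of the signed text, changing the requested segment invalidates the signature.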

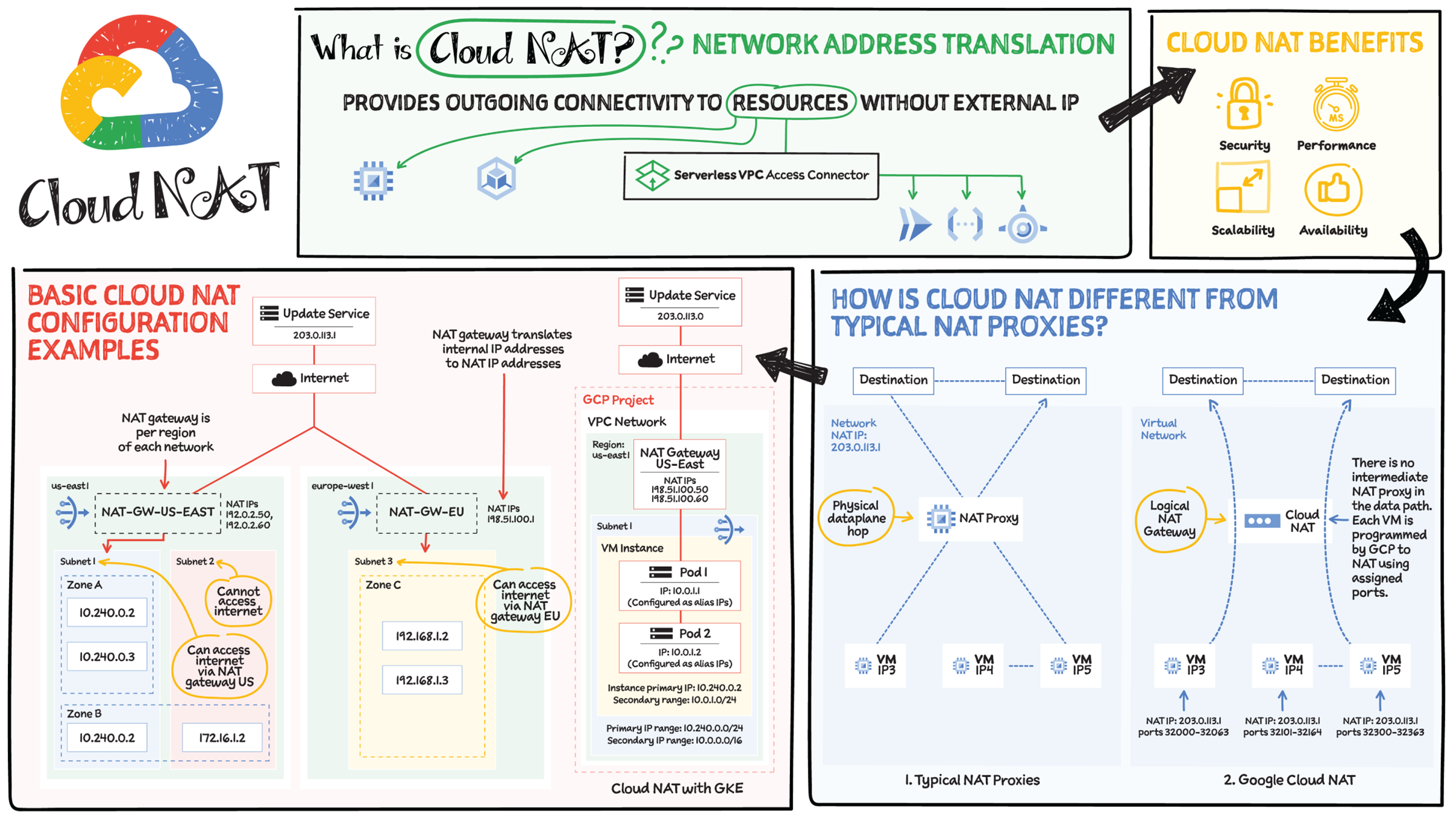

For security, it is a best practice to limit the number of public IP addresses in your network. In Google Cloud, Cloud NAT (network address translation) lets certain resources without external IP addresses create outbound connections to the Internet.

Cloud NAT provides outgoing connectivity for the following resources:

- Compute Engine virtual machine (VM) instances without external IP addresses

- Private Google Kubernetes Engine (GKE) clusters

- Cloud Run instances through Serverless VPC Access

- Cloud Functions instances through Serverless VPC Access

- App Engine standard environment instances through Serverless VPC Access

How Is Cloud NAT Different from Typical NAT Proxies?

Cloud NAT is a distributed, software-defined managed service, not based on proxy VMs or appliances. This proxy-less architecture means higher scalability (no single choke point) and lower latency. Cloud NAT configures the Andromeda software that powers your Virtual Private Cloud (VPC) network so that it provides source network address translation (SNAT) for VMs without external IP addresses. It also provides destination network address translation (DNAT) for established inbound response packets only.
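The SNAT and response-only DNAT behavior can be sketched with a small translation table. This is a conceptual model only: the `NatGateway` class, the port allocation strategy, and the IP addresses are hypothetical, and a single in-memory object stands in for what the text describes as a distributed, proxy-less service.

```python
class NatGateway:
    """Toy translation table: SNAT on the way out, DNAT only for replies."""

    def __init__(self, nat_ip, first_port=1024):
        self.nat_ip = nat_ip
        self._next_port = first_port
        self._outbound = {}  # (vm_ip, vm_port, dst_ip, dst_port) -> nat_port
        self._inbound = {}   # (nat_port, dst_ip, dst_port) -> (vm_ip, vm_port)

    def outbound(self, vm_ip, vm_port, dst_ip, dst_port):
        """Rewrite the source of an outgoing packet to the NAT IP and port."""
        flow = (vm_ip, vm_port, dst_ip, dst_port)
        if flow not in self._outbound:
            nat_port = self._next_port
            self._next_port += 1
            self._outbound[flow] = nat_port
            self._inbound[(nat_port, dst_ip, dst_port)] = (vm_ip, vm_port)
        return self.nat_ip, self._outbound[flow]

    def inbound(self, src_ip, src_port, nat_port):
        """Translate a reply back to the VM, or return None for unsolicited traffic."""
        return self._inbound.get((nat_port, src_ip, src_port))


gateway = NatGateway("203.0.113.1")
public_source = gateway.outbound("10.240.0.5", 40000, "198.51.100.7", 443)
reply_target = gateway.inbound("198.51.100.7", 443, public_source[1])
unsolicited = gateway.inbound("192.0.2.99", 443, public_source[1])
print(public_source, reply_target, unsolicited)
```

Only the server that the VM contacted can reach it through the mapping, which is why the VM gains outbound access without becoming reachable from the Internet.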

Benefits of Using Cloud NAT

- Security: Helps you reduce the need for individual VMs to each have external IP addresses. Subject to egress firewall rules, VMs without external IP addresses can access destinations on the Internet.

- Availability: Since Cloud NAT is a distributed software-defined managed service, it doesn't depend on any VMs in your project or on a single physical gateway device. You configure a NAT gateway on a Cloud Router, which provides the control plane for NAT, holding configuration parameters that you specify.

- Scalability: Cloud NAT can be configured to automatically scale the number of NAT IP addresses that it uses, and it supports VMs that belong to managed instance groups, including those with autoscaling enabled.

- Performance: Cloud NAT does not reduce network bandwidth per VM because it is implemented by Google's Andromeda software-defined networking.

NAT Rules

In Cloud NAT, the NAT rules feature lets you create access rules that define how Cloud NAT is used to connect to the Internet. NAT rules support source NAT based on destination address. When you configure a NAT gateway without NAT rules, the VMs using that NAT gateway use the same set of NAT IP addresses to reach all Internet addresses. If you need more control over packets that pass through Cloud NAT, you can add NAT rules. A NAT rule defines a match condition and a corresponding action. After you specify NAT rules, each packet is matched with each NAT rule. If a packet matches the condition set in a rule, then the action corresponding to that match occurs.
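The match-and-action idea behind NAT rules can be shown with a short lookup. The rule list, the IP addresses (all from documentation ranges), and the `nat_ips_for` function are hypothetical; the sketch assumes each rule matches on a destination range and that the first matching rule applies, which simplifies the actual rule language.

```python
import ipaddress

# Hypothetical configuration: default NAT IPs plus destination-based rules.
DEFAULT_NAT_IPS = ["203.0.113.1", "203.0.113.2"]
NAT_RULES = [
    # (match condition: destination range, action: NAT IPs to use)
    (ipaddress.ip_network("198.51.100.0/24"), ["203.0.113.50"]),
]


def nat_ips_for(destination_ip):
    """Return the NAT IPs a packet to this destination would use."""
    destination = ipaddress.ip_address(destination_ip)
    for match_range, nat_ips in NAT_RULES:
        if destination in match_range:
            return nat_ips
    # No rule matched: fall back to the gateway's default NAT IPs.
    return DEFAULT_NAT_IPS


print(nat_ips_for("198.51.100.20"))
print(nat_ips_for("192.0.2.8"))
```

A setup like this is useful when a partner allowlists a specific source address: traffic to that partner leaves from the dedicated NAT IP, while everything else shares the default pool.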

Basic Cloud NAT Configuration Examples

In the example pictured in the sketchnote, the NAT gateway in the east is configured so that VMs with no external IP addresses in subnet-1 can access the Internet. Each of these VMs can send traffic to the Internet by using either its primary internal IP address or an alias IP range from the primary IP address range of subnet-1, 10.240.0.0/16. A VM whose network interface does not have an external IP address and whose primary internal IP address is located in subnet-2 cannot access the Internet.

Similarly, the NAT gateway in Europe is configured to apply to the primary IP address range of subnet-3 in the west region, allowing a VM whose network interface does not have an external IP address to send traffic to the Internet by using either its primary internal IP address or an alias IP range from the primary IP address range of subnet-3, 192.168.1.0/24.
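The eligibility logic in these two examples can be expressed as a short Python check. This is a sketch under stated assumptions: the gateway labels and the function name are hypothetical, and the subnet-2 address used in the usage lines is invented, because the text does not give that subnet's range.

```python
import ipaddress

# Subnet ranges that each NAT gateway applies to, as given in the example.
# The gateway labels are hypothetical names for illustration only.
NAT_GATEWAY_RANGES = {
    "east-gateway": ipaddress.ip_network("10.240.0.0/16"),     # subnet-1
    "europe-gateway": ipaddress.ip_network("192.168.1.0/24"),  # subnet-3
}


def nat_gateway_for(internal_ip, has_external_ip=False):
    """Return the gateway that would NAT this VM's traffic, or None."""
    if has_external_ip:
        return None  # in this sketch, only VMs without external IPs use NAT
    addr = ipaddress.ip_address(internal_ip)
    for name, network in NAT_GATEWAY_RANGES.items():
        if addr in network:
            return name
    return None  # no gateway covers this subnet, so no Internet access


print(nat_gateway_for("10.240.3.4"))    # VM in subnet-1
print(nat_gateway_for("192.168.1.20"))  # VM in subnet-3
print(nat_gateway_for("172.16.0.9"))    # hypothetical subnet-2 address
```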

To enable NAT for all the containers and the GKE node, you must choose all the IP address ranges of a subnet as the NAT candidates. It is not possible to enable NAT for specific containers in a subnet.

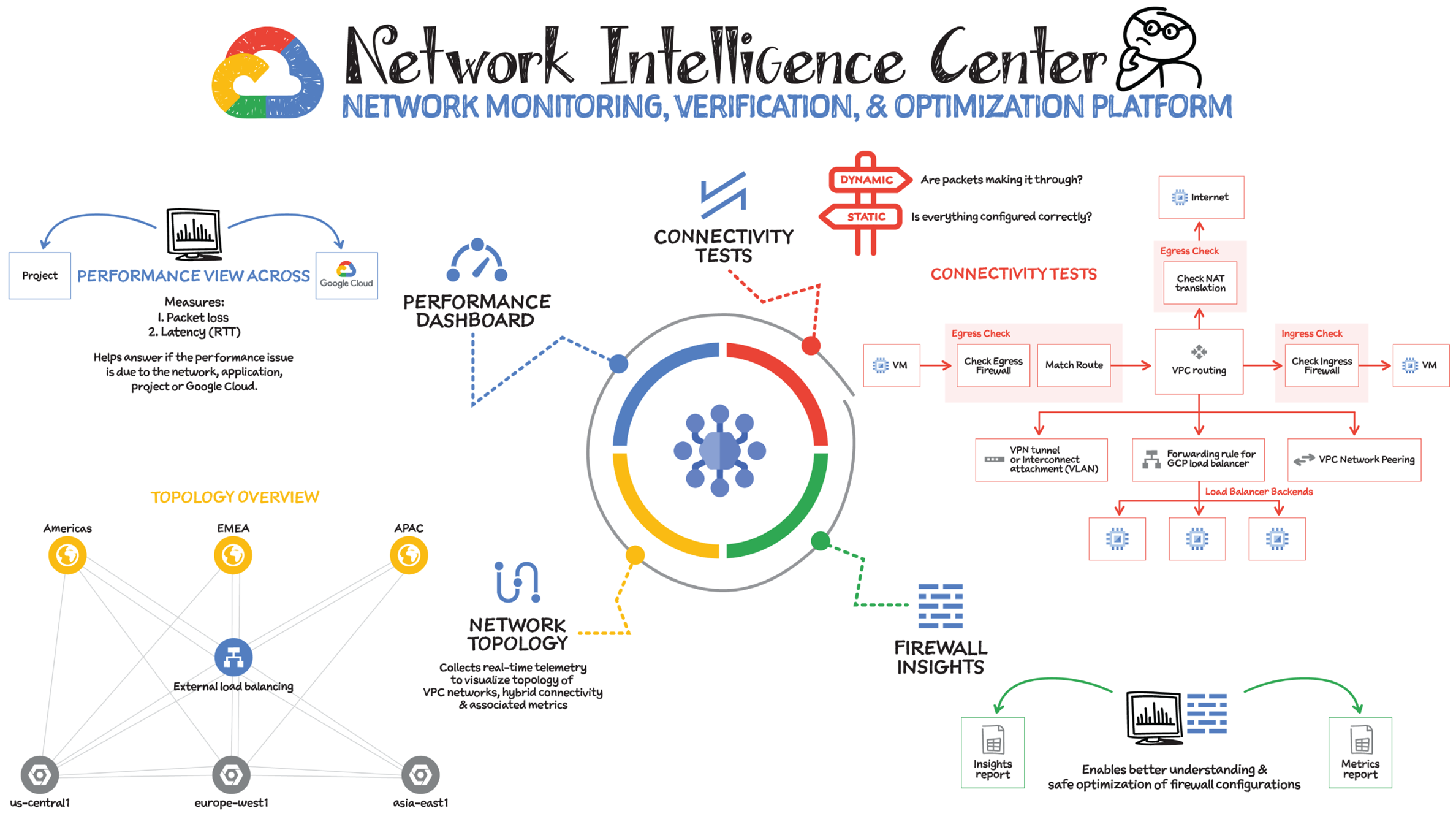

You need visibility into your cloud platform in order to monitor and troubleshoot it. Network Intelligence Center provides that visibility with a single console for Google Cloud network observability, monitoring, and troubleshooting. Currently Network Intelligence Center has four modules:

- Network Topology: Helps you visualize your network topology, including VPC connectivity to on-premises, Internet, and the associated metrics.

- Connectivity Tests: Provides both static and dynamic network connectivity tests for configuration and data plane reachability, to verify that packets are actually getting through.

- Performance Dashboard: Shows packet loss and latency between zones and regions that you are using.

- Firewall Insights: Shows usage for your VPC firewall rules and enables you to optimize their configuration.

Network Topology

Network Topology collects real-time telemetry and configuration data from Google infrastructure and uses it to help you visualize your resources. It captures elements such as configuration information, metrics, and logs to infer relationships between resources in a project or across multiple projects. After collecting each element, Network Topology combines them to generate a graph that represents your deployment. Using this graph, you can quickly view the topology and analyze the performance of your deployment without configuring any agents, sorting through multiple logs, or using third-party tools.

Connectivity Tests

The Connectivity Tests diagnostics tool lets you check connectivity between endpoints in your network. It analyzes your configuration and in some cases performs runtime verification.

To analyze network configurations, Connectivity Tests simulates the expected inbound and outbound forwarding path of a packet to and from your Virtual Private Cloud (VPC) network, Cloud VPN tunnels, or VLAN attachments.

For some connectivity scenarios, Connectivity Tests also performs runtime verification, where it sends packets over the data plane to validate connectivity and provides baseline diagnostics of latency and packet loss.
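As a rough illustration of configuration analysis, the following Python sketch simulates a packet's path through a route lookup and an ingress firewall check and records a trace of each step. It is a simplified assumption of how such an analysis might look, not the Connectivity Tests implementation: `Route`, `analyze_path`, the longest-prefix route selection, and the port-only firewall model are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    dest: str      # destination CIDR range
    next_hop: str  # label for where matching packets are sent


def analyze_path(dst_ip, dst_port, routes, allowed_ingress_ports):
    """Simulate forwarding from configuration only; no packets are sent."""
    trace = []
    addr = ipaddress.ip_address(dst_ip)
    matches = [r for r in routes if addr in ipaddress.ip_network(r.dest)]
    if not matches:
        trace.append("no route: packet dropped")
        return False, trace
    # Pick the most specific matching route (longest prefix).
    best = max(matches, key=lambda r: ipaddress.ip_network(r.dest).prefixlen)
    trace.append(f"route {best.dest} via {best.next_hop}")
    if dst_port not in allowed_ingress_ports:
        trace.append(f"ingress firewall: port {dst_port} denied")
        return False, trace
    trace.append(f"ingress firewall: port {dst_port} allowed")
    return True, trace


routes = [
    Route("10.240.0.0/16", "subnet-1"),
    Route("0.0.0.0/0", "default-internet-gateway"),
]
reachable, steps = analyze_path("10.240.0.5", 443, routes, {443})
print(reachable, steps)
```

A static trace like this can say that a configuration should deliver the packet; runtime verification is what confirms that packets actually arrive.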

Performance Dashboard

Performance Dashboard gives you visibility into the network performance of the entire Google Cloud network, as well as the performance of your project's resources. It collects and shows packet loss and latency metrics. With these performance-monitoring capabilities, you can distinguish between a problem in your application and a problem in the underlying Google Cloud network. You can also debug historical network performance problems.

Firewall Insights

Firewall Insights enables you to better understand and safely optimize your firewall configurations. It provides reports that contain information about firewall usage and the impact of various firewall rules on your VPC network.

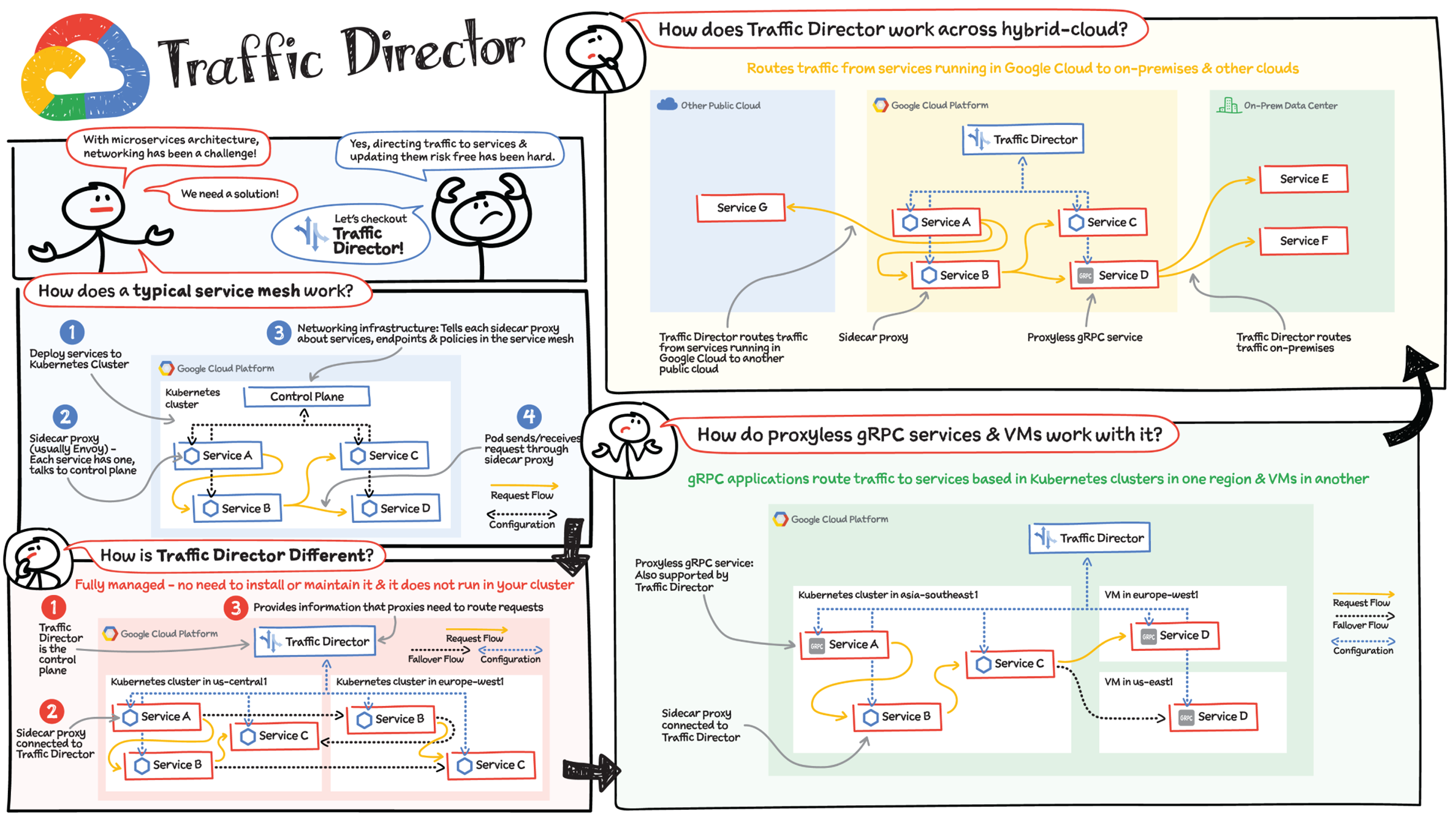

If your application is deployed in a microservices architecture, then you are likely familiar with the networking challenges that come with it. Traffic Director helps you run microservices in a global service mesh. The mesh handles networking for your microservices so that you can focus on your business logic and application code. This separation of application logic from networking logic helps you improve your development velocity, increase service availability, and introduce modern DevOps practices in your organization.

How Does a Typical Service Mesh Work in Kubernetes?

In a typical service mesh, you deploy your services to a Kubernetes cluster.

- Each of the services' Pods has a dedicated proxy (usually Envoy) running as a sidecar container alongside the application container(s).

- Each sidecar proxy talks to the networking infrastructure (a control plane) that is installed in your cluster. The control plane tells the sidecar proxies about services, endpoints, and policies in your service mesh.

- When a Pod sends or receives a request, the request is intercepted by the Pod's sidecar proxy. The sidecar proxy handles the request, for example, by sending it to its intended destination.

The control plane is connected to each proxy and provides information that the proxies need to handle requests. To clarify the flow, if application code in Service A sends a request, the proxy handles the request and forwards it to Service B. This model enables you to move networking logic out of your application code. You can focus on delivering business value while letting the service mesh infrastructure take care of application networking.
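The division of labor between the control plane and the sidecar proxies can be sketched with two small Python classes. This is a toy model, not Envoy or any real mesh API: the class names, the round-robin choice of endpoint, and the addresses are all assumptions for illustration.

```python
class ControlPlane:
    """Toy control plane: knows which endpoints back each service."""

    def __init__(self):
        self._endpoints = {}

    def register(self, service, endpoint):
        self._endpoints.setdefault(service, []).append(endpoint)

    def endpoints_for(self, service):
        return list(self._endpoints.get(service, []))


class SidecarProxy:
    """Toy sidecar: intercepts a request and picks a destination endpoint."""

    def __init__(self, control_plane):
        self._control_plane = control_plane
        self._counters = {}

    def route(self, service):
        endpoints = self._control_plane.endpoints_for(service)
        if not endpoints:
            raise LookupError(f"no endpoints for {service}")
        # Round-robin across endpoints (an assumption for this sketch).
        index = self._counters.get(service, 0)
        self._counters[service] = index + 1
        return endpoints[index % len(endpoints)]


control_plane = ControlPlane()
control_plane.register("service-b", "10.0.1.4:8080")
control_plane.register("service-b", "10.0.1.5:8080")

# Application code in Service A only names the service it wants to call.
proxy_for_service_a = SidecarProxy(control_plane)
print(proxy_for_service_a.route("service-b"))
print(proxy_for_service_a.route("service-b"))
```

Note that the application never handles endpoint addresses itself, which is the sense in which networking logic moves out of application code.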

How Is Traffic Director Different?

Traffic Director works similarly to the typical service mesh model, but it differs in a few crucial ways. Traffic Director provides:

- A fully managed and highly available control plane. You don't install it, it doesn't run in your cluster, and you don't need to maintain it. Google Cloud manages all this for you with production-level service-level objectives (SLOs).

- Global load balancing with capacity and health awareness and failovers.

- Integrated security features to enable a zero-trust security posture.

- Rich control plane and data plane observability features.

- Support for multi-environment service meshes spanning across multicluster Kubernetes, hybrid cloud, VMs, gRPC services, and more.

Traffic Director is the control plane, and the services in the Kubernetes cluster, each with sidecar proxies, connect to it. Traffic Director provides the information that the proxies need to route requests. For example, application code on a Pod that belongs to Service A sends a request. The sidecar proxy running alongside this Pod handles the request and routes it to a Pod that belongs to Service B.

Multicluster Kubernetes: Traffic Director supports application networking across Kubernetes clusters. In this example, it provides a managed and global control plane for Kubernetes clusters in the United States and Europe. Services in one cluster can talk to services in another cluster. You can even have services that consist of Pods in multiple clusters. With Traffic Director's proximity-based global load balancing, requests destined for Service B go to the geographically nearest Pod that can serve the request. You also get seamless failover; if a Pod is down, the request automatically fails over to another Pod that can serve the request, even if this Pod is in a different Kubernetes cluster.
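Proximity-based selection with failover can be illustrated with a short Python sketch. It assumes a simplified model in which each Pod has a known distance from the caller and a health flag; `PodEndpoint`, `pick_endpoint`, and the sample values are hypothetical and do not reflect Traffic Director's actual algorithm, which also accounts for capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodEndpoint:
    address: str
    cluster: str
    distance_km: float  # hypothetical distance from the calling service
    healthy: bool = True


def pick_endpoint(endpoints):
    """Return the nearest healthy endpoint, or None if none can serve."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda e: e.distance_km)


service_b = [
    PodEndpoint("10.0.1.4:8080", "us-cluster", distance_km=50.0),
    PodEndpoint("10.8.2.7:8080", "europe-cluster", distance_km=7000.0),
]
print(pick_endpoint(service_b).cluster)  # nearest healthy Pod wins

# If the nearby Pod goes down, the request fails over to the other cluster.
service_b_degraded = [
    PodEndpoint("10.0.1.4:8080", "us-cluster", distance_km=50.0, healthy=False),
    PodEndpoint("10.8.2.7:8080", "europe-cluster", distance_km=7000.0),
]
print(pick_endpoint(service_b_degraded).cluster)
```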

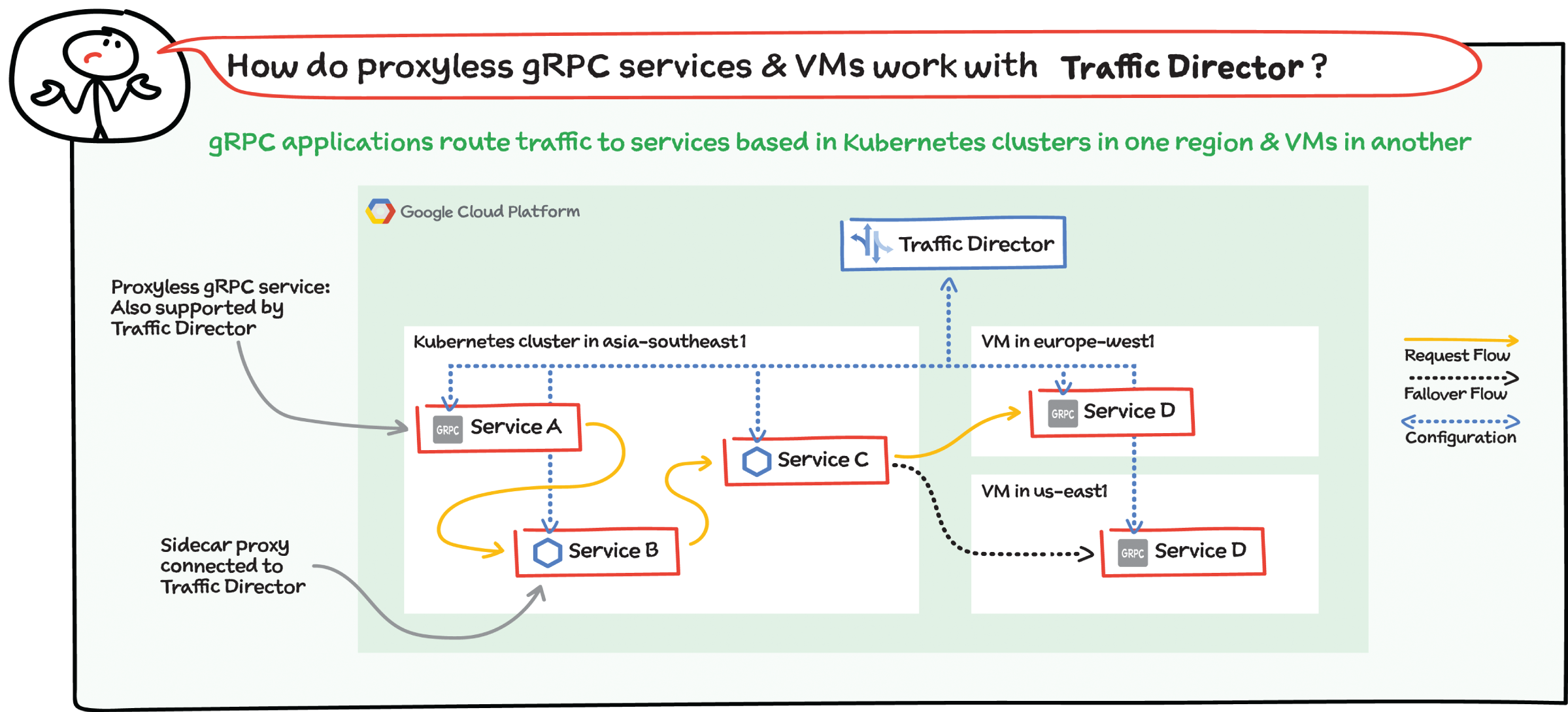

How Does Traffic Director Support Proxy-less gRPC and VMs?

Virtual machines: Traffic Director solves application networking for VM-based workloads alongside Kubernetes-based workloads. You simply add a flag to your Compute Engine VM instance template, and Google handles the infrastructure setup, which includes installing and configuring the proxies that deliver application networking capabilities.

As an example, traffic enters your deployment through External HTTP(S) Load Balancing to a service in the Kubernetes cluster in one region and can then be routed to another service on a VM in a totally different region.

gRPC: With Traffic Director, you can easily bring application networking capabilities such as service discovery, load balancing, and traffic management directly to your gRPC applications. This functionality happens natively in gRPC, so service proxies are not required — that's why they're called proxy-less gRPC applications. For more information, refer to Traffic Director and gRPC — proxyless services for your service mesh.

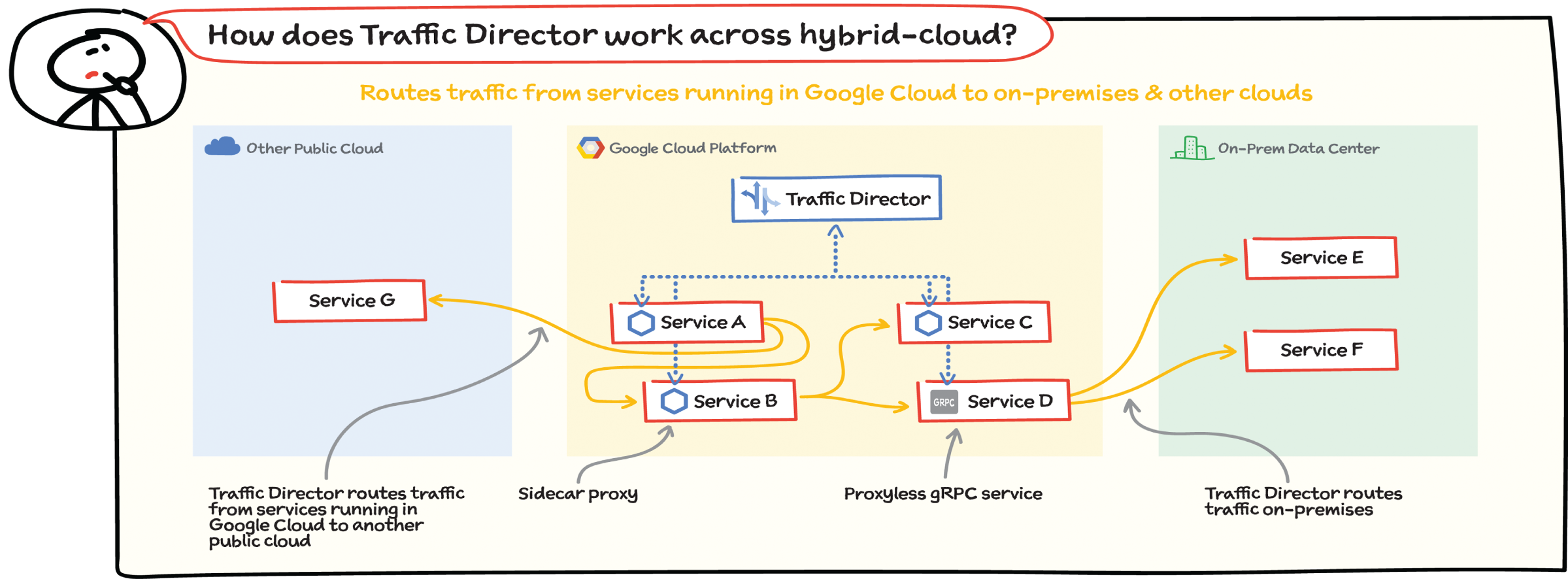

How Does Traffic Director Work Across Hybrid and Multicloud Environments?

Whether you have services in Google Cloud, on-premises, in other clouds, or all of these, your fundamental application networking challenges remain the same. How do you get traffic to these services? How do these services communicate with each other?

Traffic Director can route traffic from services running in Google Cloud to services running in another public cloud and to services running in an on-premises data center. Services can use Envoy as a sidecar proxy or a proxy-less gRPC service. When you use Traffic Director, you can send requests to destinations outside of Google Cloud. This enables you to use Cloud Interconnect or Cloud VPN to privately route traffic from services inside Google Cloud to services or gateways in other environments. You can also route requests to external services reachable over the public Internet.

Ingress and Gateways

For many use cases, you need to handle traffic that originates from clients that aren’t configured by Traffic Director. For example, you might need to ingress public Internet traffic to your microservices. You might also want to configure a load balancer as a reverse proxy that handles traffic from a client before sending it on to a destination. In such cases, Traffic Director works with Cloud Load Balancing to provide a managed ingress experience. You set up an external or internal load balancer, and then configure that load balancer to send traffic to your microservices. Public Internet clients reach your services through External HTTP(S) Load Balancing. Clients, such as microservices that reside on your Virtual Private Cloud (VPC) network, use Internal HTTP(S) Load Balancing to reach your services.

For some use cases, you might want to set up Traffic Director to configure a gateway. This gateway is essentially a reverse proxy, typically Envoy running on one or more VMs, that listens for inbound requests, handles them, and sends them to a destination. The destination can be in any Google Cloud region or Google Kubernetes Engine (GKE) cluster. It can even be a destination outside of Google Cloud that is reachable from Google Cloud by using hybrid connectivity.

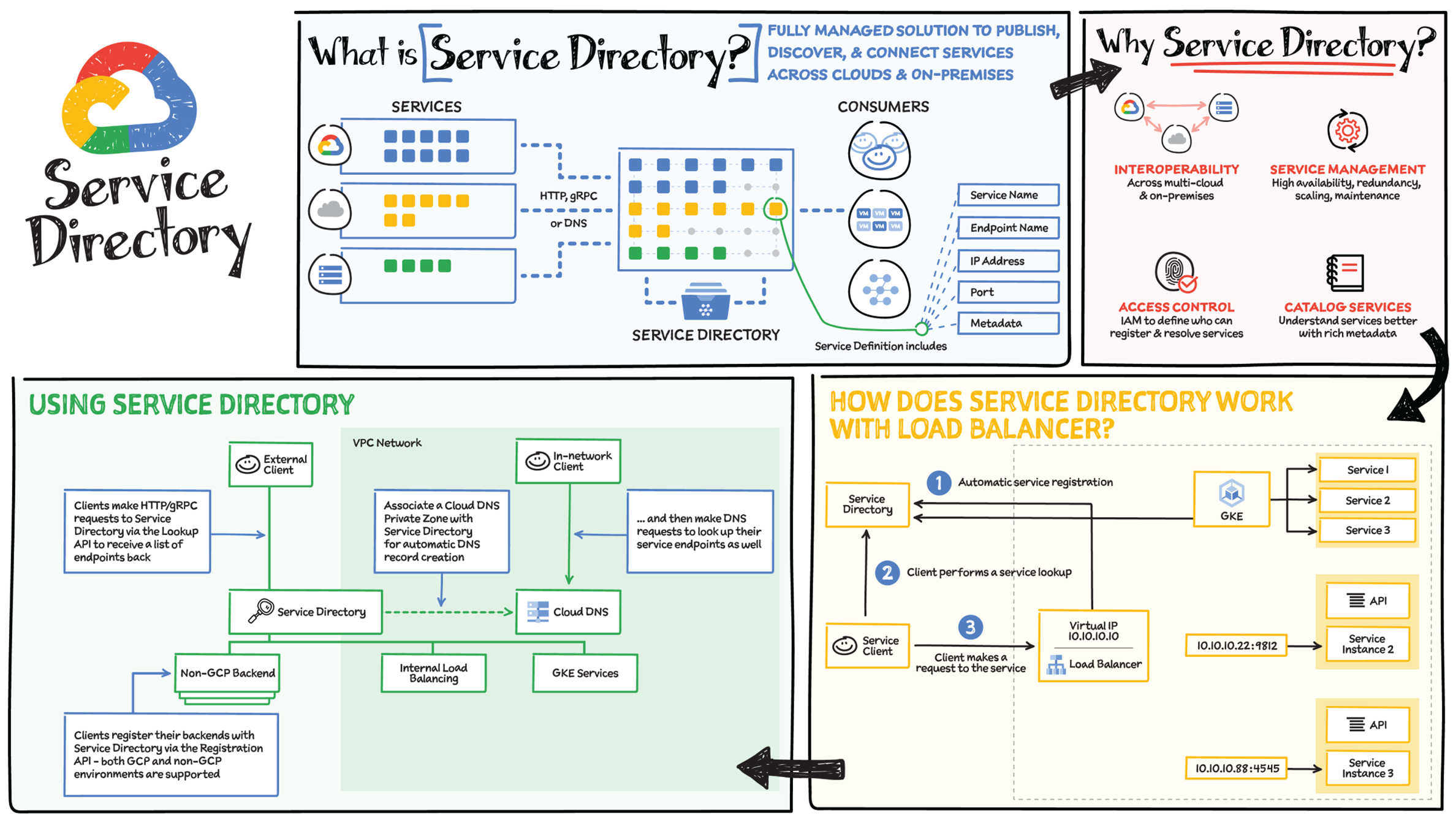

Most enterprises have a large number of heterogeneous services deployed across different clouds and on-premises environments. It is complex to look up, publish, and connect these services, but it is necessary to do so for deployment velocity, security, and scalability. That's where Service Directory comes in!

Service Directory is a fully managed platform for discovering, publishing, and connecting services, regardless of the environment. It provides real-time information about all your services in a single place, enabling you to perform service inventory management at scale, whether you have a few service endpoints or thousands.

Why Service Directory?

Imagine that you are building a simple API and that your code needs to call some other application. When endpoint information remains static, you can hard-code these locations into your code or store them in a small configuration file. However, with microservices and multicloud, this problem becomes much harder to handle as instances, services, and environments can all change.

Service Directory solves this! Each service instance is registered with Service Directory, where it is immediately reflected in Domain Name System (DNS) and can be queried by using HTTP/gRPC regardless of its implementation and environment. You can create a universal service name that works across environments, make services available over DNS, and apply access controls to services based on network, project, and IAM roles of service accounts.

Service Directory solves the following problems:

- Interoperability: Service Directory is a universal naming service that works across Google Cloud, multicloud, and on-premises. You can migrate services between these environments and still use the same service name to register and resolve endpoints.

- Service management: Service Directory is a managed service. Your organization does not have to worry about the high availability, redundancy, scaling, or upkeep of running your own service registry.

- Access control: With Service Directory, you can control who can register and resolve your services using IAM. Assign Service Directory roles to teams, service accounts, and organizations.

- Limitations of pure DNS: DNS resolvers can be unreliable in terms of respecting TTLs and caching, cannot handle larger record sizes, and do not offer an easy way to serve metadata to users. In addition to DNS support, Service Directory offers HTTP and gRPC APIs to query and resolve services.

How Service Directory Works with Load Balancer

Here's how Service Directory works with Load Balancer:

- In Service Directory, Load Balancer is registered as a provider of each service.

- The client performs a service lookup via Service Directory.

- Service Directory returns the Load Balancer address.

- The client makes a call to the service via Load Balancer.
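The lookup flow in the steps above can be sketched as a toy in-memory registry in Python. This does not use the Service Directory API: the `ToyServiceDirectory` class, its method names, the namespace and service names, and the load balancer address are all hypothetical.

```python
class ToyServiceDirectory:
    """Toy registry: maps (namespace, service) to a provider address."""

    def __init__(self):
        self._services = {}

    def register(self, namespace, service, address, port):
        # Step 1: the load balancer is registered as the service's provider.
        self._services[(namespace, service)] = (address, port)

    def resolve(self, namespace, service):
        # Steps 2 and 3: a client looks up the service and gets the address.
        try:
            return self._services[(namespace, service)]
        except KeyError:
            raise LookupError(f"{namespace}/{service} is not registered") from None


directory = ToyServiceDirectory()
directory.register("prod", "payments", address="10.128.0.50", port=443)

# Step 4: the client would now call the service through the load balancer.
address, port = directory.resolve("prod", "payments")
print(f"calling https://{address}:{port}/")
```

Because clients resolve a stable service name rather than a hard-coded address, the load balancer behind the name can change without any client update.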

Using Cloud DNS with Service Directory

Cloud DNS is a fast, scalable, and reliable DNS service running on Google's infrastructure. In addition to public DNS zones, Cloud DNS also provides a managed internal DNS solution for private networks on Google Cloud. Private DNS zones enable you to internally name your virtual machine (VM) instances, load balancers, or other resources. DNS queries for those private DNS zones are restricted to your private networks. Here is how you can use Service Directory zones to make service names available using DNS lookups:

- The endpoints are registered directly with Service Directory using the Service Directory API. This can be done for both Google Cloud and non-Google Cloud services.

- Both external and internal clients can look up those services.

- To enable DNS requests, create a Service Directory zone in Cloud DNS that is associated with a Service Directory namespace.

- Internal clients can resolve this service via DNS, HTTP, or gRPC. External clients (clients not on the private network) must use HTTP or gRPC to resolve service names.

Google's physical network infrastructure powers the global virtual network that you need to run your applications in the cloud. It offers virtual networking and tools needed to lift-and-shift, expand, and/or modernize your applications. Let's check out an example of how services help you connect, scale, secure, optimize, and modernize your applications and infrastructure.

Connect

The first thing you need is to provision the virtual network, connect to it from other clouds or on-premises, and isolate your resources so that other projects and resources cannot inadvertently access the network.

- Hybrid Connectivity: Consider Company X, which has an on-premises environment with a production and a development network. They would like to connect their on-premises environment with Google Cloud so that resources and services can easily communicate between the two environments. They can use either Cloud Interconnect for a dedicated connection or Cloud VPN for a connection over a secure IPsec tunnel. Both work, but the choice depends on how much bandwidth they need; for higher bandwidth and larger data volumes, Dedicated Interconnect is recommended. Cloud Router enables dynamic routes between the on-premises environment and the Google Cloud VPC. If they have multiple networks or locations, they can also use Network Connectivity Center to connect their different enterprise sites outside of Google Cloud by using the Google network as a wide area network (WAN).

- Virtual Private Cloud (VPC): They deploy all their resources in VPC, but one of the requirements is to keep the Prod and Dev environments separate. For this, the team needs to use Shared VPC, which allows them to connect resources from multiple projects to a common Virtual Private Cloud (VPC) network so that they can communicate with each other securely and efficiently using internal IPs from that network.

- Cloud DNS: They use Cloud DNS to manage:

  - Public and private DNS zones

  - Public/private IPs within the VPC and over the Internet

  - DNS peering

  - Forwarding

  - Split horizons

  - DNSSEC for DNS security

Scale

Scaling includes not only quickly scaling applications, but also enabling real-time distribution of load across resources in single or multiple regions, and accelerating content delivery to optimize last-mile performance.

- Cloud Load Balancing: Quickly scale applications on Compute Engine — no pre-warming needed. Distribute load-balanced compute resources in single or multiple regions (and near users) while meeting high-availability requirements. Cloud Load Balancing can put resources behind a single anycast IP, scale up or down with intelligent autoscaling, and integrate with Cloud CDN.

- Cloud CDN: Accelerate content delivery for websites and applications served out of Compute Engine with Google's globally distributed edge caches. Cloud CDN lowers network latency, offloads origin traffic, and reduces serving costs. Once you've set up HTTP(S) load balancing, you can enable Cloud CDN with a single checkbox.

Secure

Networking security tools allow you to defend against infrastructure DDoS attacks, mitigate data exfiltration risks when connecting with services within Google Cloud, and use network address translation to enable controlled Internet access for resources without public IP addresses.

- Firewall Rules: Lets you allow or deny connections to or from your virtual machine (VM) instances based on a configuration that you specify. Every VPC network functions as a distributed firewall. While firewall rules are defined at the network level, connections are allowed or denied on a per-instance basis. You can think of the VPC firewall rules as existing not only between your instances and other networks, but also between individual instances within the same network.

- Cloud Armor: Works alongside an HTTP(S) load balancer to provide built-in defenses against infrastructure DDoS attacks. Features include IP-based and geo-based access control, support for hybrid and multicloud deployments, preconfigured WAF rules, and named IP lists.

- Packet Mirroring: Packet Mirroring is useful when you need to monitor and analyze your security status. VPC Packet Mirroring clones the traffic of specific instances in your Virtual Private Cloud (VPC) network and forwards it for examination. It captures all traffic (ingress and egress) and packet data, including payloads and headers. The mirroring happens on the virtual machine (VM) instances, not on the network, which means it consumes additional bandwidth only on the VMs.

- Cloud NAT: Lets certain resources without external IP addresses create outbound connections to the Internet.

- Cloud IAP: Helps users work from untrusted networks without the use of a VPN. Verifies user identity and uses context to determine whether a user should be granted access, guarding access to your on-premises and cloud-based applications.
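The firewall rule behavior described in the list above, where rules are defined at the network level but evaluated for each connection to an instance, can be sketched in Python. The model below is a simplification with assumptions: it covers ingress only, matches on a single port and source range, evaluates rules in priority order (lowest number first, with deny winning a tie), and denies traffic that matches no rule. The `FirewallRule` class and all sample values are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    priority: int      # lower number means higher priority
    action: str        # "allow" or "deny"
    source_range: str  # source CIDR range the rule matches
    port: int          # destination port the rule matches


def evaluate_ingress(rules, src_ip, port):
    """Return the action for one inbound connection to one instance."""
    addr = ipaddress.ip_address(src_ip)
    # Sort by priority; at equal priority, deny rules are checked first.
    ordered = sorted(rules, key=lambda r: (r.priority, r.action != "deny"))
    for rule in ordered:
        if rule.port == port and addr in ipaddress.ip_network(rule.source_range):
            return rule.action
    return "deny"  # assumption: unmatched ingress traffic is denied


rules = [
    FirewallRule(priority=1000, action="allow", source_range="10.0.0.0/8", port=22),
    FirewallRule(priority=900, action="deny", source_range="10.1.0.0/16", port=22),
]
print(evaluate_ingress(rules, "10.2.0.1", 22))   # allowed by the broad rule
print(evaluate_ingress(rules, "10.1.2.3", 22))   # denied by the narrower rule
print(evaluate_ingress(rules, "192.0.2.1", 22))  # no match, so denied
```

Because the same evaluation applies to every connection, the rules also govern traffic between two instances in the same network, not just traffic arriving from outside.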

Optimize

It's important to keep a watchful eye on network performance to make sure the infrastructure is meeting your performance needs. This includes visualizing and monitoring network topology, performing diagnostic tests, and assessing real-time performance metrics.

- Network Service Tiers: The Premium Tier delivers traffic from external systems to Google Cloud resources by using Google's low-latency, highly reliable global network. Standard Tier is used for routing traffic over the Internet. Choose Premium Tier for performance and Standard Tier as a low-cost alternative.

- Network Intelligence Center: Provides a single console for Google Cloud network observability, monitoring, and troubleshooting.

Modernize

As you modernize your infrastructure, adopt microservices-based architectures, and expand your use of containerization, you will need access to tools that can help you manage the inventory of your heterogeneous services and route traffic among them.

- GKE Networking (+ on-premises in Anthos): When you use GKE, Kubernetes and Google Cloud dynamically configure IP filtering rules, routing tables, and firewall rules on each node, depending on the declarative model of your Kubernetes deployments and your cluster configuration on Google Cloud.

- Traffic Director: Helps you run microservices in a global service mesh (outside of your cluster). This separation of application logic from networking logic helps you improve your development velocity, increase service availability, and introduce modern DevOps practices in your organization.

- Service Directory: A platform for discovering, publishing, and connecting services, regardless of the environment. It provides real-time information about all your services in a single place, enabling you to perform service inventory management at scale, whether you have a few service endpoints or thousands.