Floating-point arithmetic is an approximation of real arithmetic that solves the major problem with integer data types — the inability to represernt fractional values. Although floating-point arithmetic is often slower than integer arithmetic, modern CPUs incorporate well-designed floating-point units, thus reducing the performance difference between integer and floating-point arithmetic.

For this reason, the stigma surrounding floating-point arithmetic has diminished since the days when floating-point results were computed using software (rather than hardware). One unfortunate aspect of floating-point’s increasing popularity is that many programmers do not understand the inherent limitations of the floating-point format. Floating-point arithmetic is but an approximation of real arithmetic. The inaccuracies present in this approximation can lead to serious defects in application software if an engineer is not aware of the problems associated with these approximations. In order to write great software that produces correct results when using floating-point arithmetic, programmers must be aware of the machine’s underlying numeric representation and of how floating-point arithmetic approximates real arithmetic.

Floating-point numbers provide only an approximation of real numbers. This is because there is an infinite number of possible real values, while floating-point representation uses a finite number of bits (and, therefore, can only represent a finite number of different values). When a given floating-point format cannot exactly represent some real value, the floating-point number must instead use the closest value that it can exactly represent. This section describes how the floating-point format works so you can better understand the problems with these approximations.

Consider a couple of problems with integer and fixed-point formats. Integers, of course, cannot represent any fractional values. Another problem with most integer representations is that they can only represent values in the range 0..2n−1 or −2n−1..2n−1−1. Fixed-point formats provide the ability to represent fractional values, but at the expense of the range of integer values you can represent. This problem, which the floating-point format solves, is the issue of dynamic range.

Consider a simple 16-bit unsigned fixed-point format that uses 8 bits for fractional component and 8 bits for the integer component of the number. The integer component can represent values in the range 0..255, and the fractional component can represent the values zero and fractions between 2−8 and 1 (with a resolution of about 2−8). Suppose, now, that for a string of calculations you only need two bits to represent the fractional values 0.0, 0.25, 0.5, and 0.75. Unfortunately, the extra six bits in the fractional part of the number go to waste. Wouldn’t it be nice if we could utilize those bits in the integer portion of the number to extend its range from 0..255 to 0..16,383? Well, that’s the basic concept behind the floating-point representation. In a floating-point value, the radix point (binary point) can freely float between digits in the number as needed. So if in a 16-bit binary number you only need two bits of precision for the fractional component of the number, the binary point can float down between bits 1 and 2 in the number, allowing the format to utilize bits 2 through 15 for the integer portion. In order to support a floating-point format, the numeric representation needs one additional field — a field that specifies the position of the radix point within the number. This extra field is equivalent to the exponent present when using scientific notation.

To represent real numbers, most floating-point formats use some number of bits to represent a mantissa and a smaller number of bits to represent an exponent. The mantissa is a base value, that usually falls within a limited range (for example, between zero and one). The exponent is a multiplier that when applied to the mantissa produces values outside this range. The result of separating the number into these two parts is that floating-point numbers can only represent numbers with a specific number of significant digits. As you will soon see, if the difference between the smallest and largest exponent is greater than the number of significant digits in the mantissa (and it usually is), then the floating-point representation cannot exactly represent all the integers between the smallest and largest values the floating-point format can represent.

To easily see the impact of limited-precision arithmetic, we will adopt a simplified decimal floating-point format for our examples. Our floating-point format will provide a mantissa with three significant digits and a decimal exponent with two digits. The mantissa and exponents are both signed values, as shown in Figure 4-1.

Note that this particular floating-point representation can approximate all the values between 0.00 and 9.99 × 1099. However, this format certainly cannot exactly represent all values in this range (that would take 100 digits of precision!). To represent a value like 9,876,543,210, the floating-point format in Figure 4-1 would have to approximate this value with 9.88 × 109 (or 9.88e + 9 in programming language notation, which this book will generally use from this point forward).

The big advantage of the mantissa/exponent configuration is that a floating-point format can represent values across a wide range. There is a subtle disadvantage to this scheme, however: you cannot exactly represent as many different values with a floating-point format as you can with an integer format. This is because the floating-point format can provide multiple representations (that is, different bit patterns) for the same value. In the simplified decimal floating-point format shown in Figure 4-1, for example, 1.00e + 1 and 0.10e + 2 are different representations of the same value. As there are a finite number of different representations possible (given a finite number of bits or digits), whenever a single value has two possible representations, that’s one less different value the format can represent.

Furthermore, the floating-point format, a form of scientific notation, complicates arithmetic somewhat. When adding and subtracting two numbers in scientific notation, you must adjust the two values so that their exponents are the same. For example, when adding 1.23e1 and 4.56e0, you could convert 4.56e0 to 0.456e1 and then add them. This produces 1.686e1. Unfortunately, the result does not fit into the three significant digits of our current format, so we must either round or truncate the result to three significant digits. Rounding generally produces the most accurate result, so let’s round the result to obtain 1.69e1. As you can see, the lack of precision (the number of digits or bits maintained in a computation) affects the accuracy (the correctness of the computation).

In the previous example, we were able to round the result because we maintained four significant digits during the calculation. If our floating-point calculation were limited to three significant digits during computation, we would have had to truncate the last digit of the smaller number, obtaining 1.68e1, which is even less correct. Therefore, to improve the accuracy of floating-point calculations, it is necessary to use extra digits during the calculation. These extra digits are known as guard digits (or guard bits in the case of a binary format). They greatly enhance accuracy during a long chain of computations.

The accuracy lost during a single computation usually isn’t bad unless you are greatly concerned about the accuracy of your computations. However, if you compute a value that is the result of a sequence of floating-point operations, the error can accumulate and greatly affect the computation itself. For example, suppose we add 1.23e3 and 1.00e0. Adjusting the numbers so their exponents are the same before the addition produces 1.23e3 + 0.001e3. The sum of these two values, even after rounding, is 1.23e3. This might seem perfectly reasonable to you; after all, if we can only maintain three significant digits, adding in a small value shouldn’t affect the result. However, suppose we were to add 1.00e0 to 1.23e3 ten times. The first time we add 1.00e0 to 1.23e3 we get 1.23e3. Likewise, we get this same result the second, third, fourth . . . and tenth time we add 1.00e0 to 1.23e3. On the other hand, had we added 1.00e0 to itself ten times, then added the result (1.00e1) to 1.23e3, we would obtain a different result, 1.24e3. This is an important thing to know about limited-precision arithmetic:

Your results will be better when adding or subtracting numbers if their relative magnitudes (that is, the sizes of the exponents) are similar. If you are performing a chain calculation involving addition and subtraction, you should attempt to group the operations so that you can add or subtract values whose magnitudes are close to one another before adding or subtracting values whose magnitudes are not as close.

Another problem with addition and subtraction is that you can wind up with false precision. Consider the computation 1.23e0 − 1.22e0. This produces 0.01e0. Although this is mathematically equivalent to 1.00e − 2, this latter form suggests that the last two digits are both exactly zero. Unfortunately, we only have a single significant digit after this computation, which is in the hundredths place. Indeed, some FPUs or floating-point software packages might actually insert random digits (or bits) into the LO positions. This brings up a second important rule concerning limited-precision arithmetic:

Whenever subtracting two numbers with the same signs or adding two numbers with different signs, the accuracy of the result may be less than the precision available in the floating-point format.

Multiplication and division do not suffer from these same problems because you do not have to adjust the exponents before the operation; all you need to do is add the exponents and multiply the mantissas (or subtract the exponents and divide the mantissas). By themselves, multiplication and division do not produce particularly poor results. However, they tend to exacerbate any accuracy error that already exists in a value. For example, if you multiply 1.23e0 by 2, when you should be multiplying 1.24e0 by 2, the result is even less accurate than it was. This brings up a third important rule when working with limited-precision arithmetic:

When performing a chain of calculations involving addition, subtraction, multiplication, and division, try to perform the multiplication and division operations first.

Often, by applying normal algebraic transformations, you can arrange a calculation so the multiplication and division operations occur first. For example, suppose you want to compute the following:

x × (y + z)

Normally you would add y and z together and multiply their sum by x. However, you will get a little more accuracy if you first transform the previous equation to get the following:

x × y + x × z

This way you can compute the result by performing the multiplications first.[11]

Multiplication and division have other problems, as well. When multiplying two very large or very small numbers, it is quite possible for overflow or underflow to occur. The same situation occurs when dividing a small number by a large number, or when dividing a large number by a small number. This brings up a fourth rule you should attempt to follow when multiplying or dividing values:

When multiplying and dividing sets of numbers, try to multiply and divide numbers that have the same relative magnitudes.

Comparing floating-point numbers is very dangerous. Given the inaccuracies present in any computation (including converting an input string to a floating-point value), you should never compare two floating-point values to see if they are equal. In a binary floating-point format, different computations that produce the same (mathematical) result may differ in their least significant bits. For example, adding 1.31e0 + 1.69e0 should produce 3.00e0. Likewise, adding 1.50e0 + 1.50e0 should produce 3.00e0. However, were you to compare (1.31e0 + 1.69e0) against (1.50e0 + 1.50e0) you might find that these sums are not equal to one another. The test for equality succeeds if and only if all bits (or digits) in the two operands are the same. Because it is not necessarily true that two seemingly equivalent floating-point computations will produce exactly equal results, a straight comparison for equality may fail when, algebraically, such a comparison should succeed.

The standard way to test for equality between floating-point numbers is to determine how much error (or tolerance) you will allow in a comparison, and then check to see if one value is within this error range of the other. The straightforward way to do this is to use a test like the following:

if( (Value1 >= (Value2 × error)) and (Value1 <= (Value2 + error)) then . . .

A more efficient way to handle this is to use a statement of the form:

if( abs(Value1 − Value2) <= error ) then . . .

You must exercise care when choosing the value for error. This should be a value slightly greater than the largest amount of error that will creep into your computations. The exact value will depend upon the particular floating-point format you use and the magnitudes of the values you are comparing. So the final rule is this:

When comparing two floating-point numbers for equality, always compare the values to see if the difference between two values is less than some small error value.

Checking two floating-point numbers for equality is a very famous problem, and almost every introductory programming text discusses this issue. Perhaps less well known is the fact that comparing for less than or greater than creates the same problems. Suppose that a sequence of floating-point calculations produces a result that is only accurate to within plus or minus error, even though the floating-point representation provides better accuracy than error suggests. If you compare such a result against some other calculation computed with less accumulated error, and those two values are very close to one other, then comparing them for less than or greater than may produce incorrect results.

For example, suppose that some chain of calculations in our simplified decimal representation produces the result 1.25, which is only accurate to plus or minus 0.05 (that is, the real value could be somewhere between 1.20 and 1.30). Also assume that a second chain of calculations produces the result 1.27, which is accurate to the full precision of our floating-point result (that is, the actual value, before rounding, is somewhere between 1.265 and 1.275). Now, if we compare the result of the first calculation (1.25) against the value of the second calculation (1.27), we will find that the first calculation is less than the result of the second. Unfortunately, given the inaccuracy present in the first calculation this might not be true. If the correct result of the first computation happens to be in the range 1.27 to 1.30 (exclusive), then reporting that the first calculation is less than the second is false. About the only reasonable test is to see if the two values are within the error tolerance of one another. If so, treat the values as equal (so one wouldn’t be considered less than or greater than the other). If you determine that the values are not equal to one another within the desired error tolerance, then you can compare them to see if one value is less than or greater than the other. This is known as a miserly approach to comparing for less than or greater than (that is, we try to find as few values that are less than or greater than as possible).

The other possibility is to use an eager approach to the comparison. An eager approach attempts to make the result of the comparison true as often as possible. Given two values that you want to compare, and an error tolerance you’re interested in achieving, here’s how you’d eagerly compare the two values for less than or greater than:

if( A < (B + error)) then Eager_A_lessthan_B; if( A > (B − error)) then Eager_A_greaterthan_B;

Don’t forget that calculations like (B + error) are subject to their own inaccuracies, depending on the relative magnitudes of the values B and error, and the inaccuracy of this calculation may very well affect the final result that you achieve in the comparison.

There are other problems that can occur when using floating-point values. This book can only point out some of the major problems and make you aware that you cannot treat floating-point arithmetic like real arithmetic — the inaccuracies present in limited-precision arithmetic can get you into trouble if you are not careful. A good text on numerical analysis or even scientific computing can help fill in the details that are beyond the scope of this book. If you are going to be working with floating-point arithmetic, in any language, you should take the time to study the effects of limited-precision arithmetic on your computations.

When Intel’s 80x86 designers planned to introduce a floating-point unit (FPU) for its original 8086 microprocessor, they were smart enough to realize that the electrical engineers and solid-state physicists who design chips probably didn’t have the necessary numerical analysis background to design a good floating-point representation. So Intel went out and hired the best numerical analyst it could find to design a floating-point format for its 8087 FPU. That person then hired two other experts in the field, and the three of them (Kahn, Coonan, and Stone) designed Intel’s floating-point format. They did such a good job designing the KCS Floating-Point Standard that the IEEE organization used this format as the basis for the IEEE floating-point format.

To handle a wide range of performance and accuracy requirements, Intel actually introduced three floating-point formats: single precision, double precision, and extended precision. The single- and double-precision formats corresponded to C’s float and double types or FORTRAN’s real and double- precision types. Intel intended to use extended precision for long chains of computations. Extended precision contains 16 extra bits that the calculations can use as guard bits before rounding down to a double-precision value when storing the result.

The single-precision format uses a 24-bit mantissa and an 8-bit exponent. The mantissa usually represents a value between 1.0 and just less than 2.0. The HO bit of the mantissa is always assumed to be one and represents a value just to the left of the binary point. The remaining 23 mantissa bits appear to the right of the binary point and represent the value:

1.mmmmmmm mmmmmmmm mmmmmmmm

The presence of the implied one bit is why the mantissa is always greater than or equal to one. Even if the other mantissa bits are all zero, the implied one bit always gives us the value one. Each position to the right of the binary point represents a value (zero or one) times a successive negative power of two, but even if we had an almost infinite number of one bits after the binary point, they still would not add up to two. So the mantissa can represent values in the range 1.0 to just less than 2.0.

Some examples would probably be useful here. Consider the decimal value 1.7997. Here are the steps we could go though to compute the binary mantissa for this value:

Subtract 20 from 1.7997 to produce 0.7997 and %1.00000000000000000000000.

Subtract 2−1 (1/2) from 0.7997 to produce 0.2997 and %1.10000000000000000000000.

Subtract 2−2 (1/4) from 0.2997 to produce 0.0497 and %1.11000000000000000000000.

Subtract 2−5 (1/32) from 0.0497 to produce 0.0185 and %1.11001000000000000000000.

Subtract 2−6 (1/64) from 0.0185 to produce 0.00284 and %1.11001100000000000000000.

Subtract 2−9 (1/512) from 0.00284 to produce 0.000871 and %1.11001100100000000000000.

Subtract 2−10 (1/1,024) from 0.000871 to (approximately) produce zero and %1.11001100110000000000000.

Although there is an infinite number of values between one and two, we can only represent eight million (223) of them because we use a 23-bit mantissa (the 24th bit is always one). This is the reason for inaccuracy in floating-point arithmetic — we only have 23 bits of precision in computations involving single-precision floating-point values.

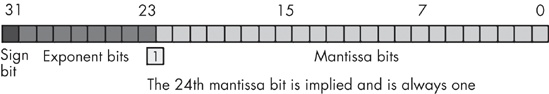

The mantissa uses a one’s complement format rather than two’s complement. This means that the 24-bit value of the mantissa is simply an unsigned binary number, and the sign bit, in bit position 31, determines whether that value is positive or negative. One’s complement numbers have the unusual property that there are two representations for zero (with the sign bit set or clear). Generally, this is important only to the person designing the floating-point software or hardware system. We will assume that the value zero always has the sign bit clear. The single-precision floating-point format takes the form shown in Figure 4-2.

To represent values outside the range 1.0 to just under 2.0, the exponent portion of the floating-point format comes into play. The floating-point format raises two to the power specified by the exponent and then multiplies the mantissa by this value. The exponent is eight bits and uses an excess-127 format (sometimes called bias-127 exponents). In excess-127 format, the exponent 20 is represented by the value 127 ($7f). Therefore, to convert an exponent to excess-127 format, simply add 127 to the exponent value. For example, the single precision representation for 1.0 is $3f800000. The mantissa is 1.0 (including the implied bit) and the exponent is 20, or 127 ($7f) when you add in the excess-127 exponent value.

The use of excess-127 format for the exponent makes it easier to compare floating-point values. As it turns out, if we handle the sign bit (bit 31) separately, we can easily compare two floating-point numbers for less than or greater than by simply comparing them as though they were unsigned integers. To handle the sign bit, we simply note the signs of the two values. If the signs are not equal, then the positive value (the one with bit 31 set to zero) will be greater than the number that has the HO bit set to one.[12] If the sign bits are both zero, then we can use a straight unsigned binary comparison. If the signs are both one, then we do an unsigned comparison but invert the result (so if the sign bits are set, we treat less than as greater than and vice versa). On some CPUs a 32-bit unsigned comparison is much faster than a 32-bit floating-point comparison. In such situations, it’s probably worthwhile to do the comparison using integer arithmetic rather than floating-point arithmetic.

With a 24-bit mantissa, you will get approximately 6 1/2 decimal digits of precision (one half digit of precision means that the first six digits can all be in the range 0..9 but the seventh digit can only be in the range 0..x where x < 9 and is generally close to 5). With an 8-bit excess-127 exponent, the dynamic range of single-precision floating-point numbers is approximately 2±128 or about 10±38.

Although single-precision floating-point numbers are perfectly suitable for many applications, the dynamic range is somewhat limited and is unsuitable for many financial, scientific, and other applications. Furthermore, during long chains of computations, the limited accuracy of the single precision format may introduce serious error. For serious calculations, a floating-point format with more precision is necessary.

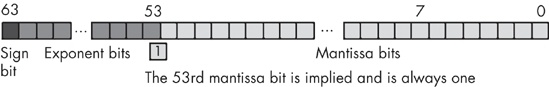

The double-precision format helps overcome the problems of the single-precision floating-point. Using twice the space, the double-precision format has an 11-bit excess-1,023 exponent and a 53-bit mantissa (including an implied HO bit of one) plus a sign bit. This provides a dynamic range of about 10±308 and 14 1/2 digits of precision, which is sufficient for most applications. Double-precision floating-point values take the form shown in Figure 4-3.

In order to help ensure accuracy during long chains of computations involving double-precision floating-point numbers, Intel designed the extended-precision format. The extended-precision format uses 80 bits. Twelve of the additional 16 bits are appended to the mantissa, and 4 of the additional bits are appended to the exponent. Unlike the single- and double-precision values, the extended-precision format’s mantissa does not have an implied HO bit that is always one. Therefore, the extended-precision format provides a 64-bit mantissa, a 15-bit excess-16,383 exponent, and a 1-bit sign. The format for the extended-precision floating-point value appears in Figure 4-4.

On the 80x86 FPUs, all computations are done using the extended-precision form. Whenever you load a single- or double-precision value, the FPU automatically converts it to an extended-precision value. Likewise, when you store a single or double precision value to memory, the FPU automatically rounds the value down to the appropriate size before storing it. By always working with the extended-precision format, Intel guarantees a large number of guard bits are present to ensure the accuracy of your computations. By performing all computations using 80 bits, Intel helps ensure (but not guarantee) that you will get full 32- or 64-bit accuracy in your computations. Because the FPUs do not provide a large number of guard bits in 80-bit computations, some error will inevitably creep into the LO bits of an extended-precision computation. However, if your computation is correct to 64 bits, the 80-bit computation will generally provide at least 64 accurate bits. Most of the time you will get even more. While you cannot assume that you get an accurate 80-bit computation, you can usually do better than 64 bits when using the extended-precision format.

Non-Intel CPUs that support floating-point arithmetic generally provide only the 32-bit and 64-bit formats. As such, calculations on those CPUs may produce less accurate results than the equivalent string of calculations on the 80x86 using 80-bit calculations.

To maintain maximum precision during floating-point computations, most computations use normalized values. A normalized floating-point value is one whose HO mantissa bit contains one. Keeping floating-point numbers normalized is beneficial because it maintains the maximum number of bits of precision in a computation. If several HO bits of the mantissa are all zero, the mantissa has that many fewer bits of precision available for computation. Therefore, a floating-point computation will be more accurate if it involves only normalized values.

Almost any unnormalized value can be normalized by shifting the mantissa bits to the left and decrementing the exponent until a one appears in the HO bit of the mantissa.[13] Remember, the exponent is a binary exponent. Each time you increment the exponent, you multiply the floating-point value by two. Likewise, whenever you decrement the exponent, you divide the floating-point value by two. By the same token, shifting the mantissa to the left one bit position multiplies the floating-point value by two, and shifting the mantissa to the right divides the floating-point value by two. Therefore, shifting the mantissa to the left one position and decrementing the exponent does not change the value of the floating-point number (this is why, as you saw earlier, there are multiple representations for certain numbers in the floating-point format).

Here’s an example of an unnormalized value:

0.100000 × 21

Shift the mantissa to the left one position and decrement the exponent to normalize it:

1.000000 × 20

There are two important cases in which a floating-point number cannot be normalized. Zero is one of these special cases. Obviously it cannot be normalized because the floating-point representation for zero contains no one bits. This, however, is not a problem because we can exactly represent the value zero with only a single bit. The IEEE floating-point formats use all zero bits in the exponent and mantissa fields to denote the value zero. Note that the IEEE floating-point format supports both +0 and −0 (depending on the value of the sign bit). Arithmetic calculations and comparisons treat positive and negative zero as equivalent, and software operating on floating-point values that represent zero can use the sign bit as a flag to indicate different things. For example, you could use the sign bit to indicate that the value is exactly zero (with the sign bit clear) or to indicate that it is actually nonzero but too small to represent with the current format (by setting the sign bit). Intel recommends using the sign bit to indicate that zero was produced via underflow of a negative value (with the sign bit set) or underflow of a positive number (with the sign bit clear). Presumably, their FPUs set the sign bit according to their recommendations when the FPUs produce a zero result. However, for the purposes of calculation, the floating-point formats ignore the sign bit when dealing with the value zero.

The second case in which we cannot normalize a floating-point number is when we have some HO bits in the mantissa that are zero but the biased exponent[14] is also zero (and we cannot decrement it to normalize the mantissa). Rather than disallow certain small values, whose HO mantissa bits and biased exponent are zero (the most negative exponent possible), the IEEE standard allows special denormalized values to represent these smaller values.[15] Although the use of denormalized values allows IEEE floating-point computations to produce better results than if underflow occurred, keep in mind that denormalized values offer fewer bits of precision.

During a calculation, as you have seen, floating-point arithmetic functions may produce a result with greater precision than the floating-point format supports (the guard bits in the calculation maintain this extra precision). When the calculation is complete and the code needs to store the result back into a floating-point variable, something must be done about those extra bits of precision. How the system uses with those extra guard bits to affect the bits it does maintain is known as rounding, and how it is done can affect the accuracy of the computation. Traditionally, floating-point software and hardware use one of four different ways to round values: truncation, rounding up, rounding down, or rounding to nearest.

Truncation is easy, but it generates the least accurate results in a chain of computations. Few modern floating-point systems use truncation except as a means for converting floating-point values to integers (truncation is the standard conversion when coercing a floating-point value to an integer).

Rounding up is another function that is useful on occasion. Rounding up leaves the value alone if the guard bits are all zero, but if the current mantissa does not exactly fit into the destination bits, then rounding up sets the result to the smallest possible larger value in the floating-point format. Like truncation, this is not a normal rounding mode. It is, however, useful for implementing functions like ceil (which rounds a floating-point value to the smallest possible larger integer).

Rounding down is just like rounding up, except it rounds the result to the largest possible smaller value. This may sound like truncation, but there is a subtle difference between truncation and rounding down. Truncation always rounds towards zero. For positive numbers, truncation and rounding down do the same thing. However, for negative numbers, truncation simply uses the existing bits in the mantissa, whereas rounding down will actually add a one bit to the LO position if the result was negative. Like truncation, this is not a normal rounding mode. It is, however, useful for implementing functions like floor (which rounds a floating-point value to the largest possible smaller integer).

Rounding to nearest is probably the most intuitive way to process the guard bits. If the value of the guard bits is less than half the value of the LO bit of the mantissa, then rounding to nearest truncates the result to the largest possible smaller value (ignoring the sign). If the guard bits represent some value that is greater than half of the value of the LO mantissa bit, then rounding to nearest rounds the mantissa to the smallest possible greater value (ignoring the sign). If the guard bits represent a value that is exactly half the value of the LO bit of the mantissa, then the IEEE floating-point standard says that half the time it should round up and half the time it should round down. You do this by rounding the mantissa to the value that has a zero in the LO bit position. That is, if the current mantissa already has a zero in its LO bit, you use the current mantissa value; if the current mantissa value has a one in the LO mantissa position, then you add one to the mantissa to round it up to the smallest possible larger value with a zero in the LO bit. This scheme, mandated by the IEEE floating-point standard, produces the best possible result when loss of precision occurs.

Here are some examples of rounding, using 24-bit mantissas, with 4 guard bits (that is, these examples round 28-bit numbers to 24-bit numbers using the rounding to nearest algorithm):

1.000_0100_1010_0100_1001_0101_0001-> 1.000_0100_1010_0100_1001_0101 1.000_0100_1010_0100_1001_0101_1100-> 1.000_0100_1010_0100_1001_0110 1.000_0100_1010_0100_1001_0101_1000-> 1.000_0100_1010_0100_1001_0110 1.000_0100_1010_0100_1001_0100_0001-> 1.000_0100_1010_0100_1001_0100 1.000_0100_1010_0100_1001_0100_1100-> 1.000_0100_1010_0100_1001_0101 1.000_0100_1010_0100_1001_0100_1000-> 1.000_0100_1010_0100_1001_0100

The IEEE floating-point format provides a special encoding for several special values. In this section we’ll look these special values, their purpose and meaning, and their representation in the floating-point format.

Under normal circumstances, the exponent bits of a floating-point number do not contain all zeros or all ones. An exponent containing all one or zero bits indicates a special value.

If the exponent contains all ones and the mantissa is nonzero (discounting the implied bit), then the HO bit of the mantissa (again discounting the implied bit) determines whether the value represents a quiet not-a-number (QNaN) or a signaling not-a-number (SNaN) (see Table 4-1). These not-a-number (NaN) results tell the system that some serious miscalculation has taken place and that the result of the calculation is completely undefined. QNaNs represent indeterminate results, while SNaNs specify that an invalid operation has taken place. Any calculation involving a NaN produces an NaN result, regardless of the values of any other operand(s). Note that the sign bit is irrelevant for NaNs. The binary representations of NaNs are shown in Table 4-1.

Table 4-1. Binary Representations for NaN

NaN | FP Format | Value |

|---|---|---|

SNaN | 32 bits | %s_11111111_0xxxx...xx (The value of s value is irrelevant — at least one of the x bits must be nonzero.) |

SNaN | 64 bits | %s_1111111111_0xxxxx...x (The value of s is irrelevant — at least one of the x bits must be nonzero.) |

SNaN | 80 bits | %s_1111111111_0xxxxx...x (The value of s is irrelevant — at least one of the x bits must be nonzero.) |

32 bits | %s_11111111_1xxxx...xx (The value of s is irrelevant.) | |

QNaN | 64 bits | %s_1111111111_1xxxxx...x (The value of s is irrelevant.) |

QNaN | 80 bits | %s_1111111111_1xxxxx...x (The value of s is irrelevant.) |

Two other special values are represented when the exponent contains all one bits, and the mantissa contains all zeros. In such a case, the sign bit determines whether the result is the representation for +infinity or −infinity. Whenever a calculation involves infinity as one of the operands, the arithmetic operation will produce one of the (well-defined) values found in Table 4-2.

Table 4-2. Operations Involving Infinity

Operation | Result |

|---|---|

n / ±infinity | 0 |

±infinity × ±infinity | ±infinity |

±nonzero / 0 | ±infinity |

infinity + infinity | infinity |

n + infinity | infinity |

n − infinity | −infinity |

±0 / ±0 | NaN |

infinity − infinity | NaN |

±infinity / ±infinity | NaN |

±infinity × 0 | NaN |

Finally, if the exponent bits are all zero, the sign bit indicates which of the two special values, −0 or +0, the floating-point number represents. Because the floating-point format uses a one’s complement notation, there are two separate representations for zero. Note that with respect to comparisons, arithmetic, and other operations, +0 is equal to −0.

The IEEE floating-point standard defines certain degenerate conditions under which the floating-point processor (or software-implemented floating-point code) should possibly notify the application software. These exceptional conditions include the following:

Of these, inexact result is the least serious, because most floating calculations will produce an inexact result. A denormalized operand also isn’t too serious (though this exception indicates that your calculation may be less accurate as a result of less available precision). The other exceptions indicate a more serious problem, and you shouldn’t ignore them.

How the computer system notifies your application of these exceptions depends on the CPU/FPU, operating system, and programming language, so we can’t really go into how one might handle these exceptions. Generally, though, you can use the exception-handling facilities in your programming language to trap these conditions as they occur in your particular environment. Note that most computer systems require that you explicitly tell them to generate a notification for these exceptional conditions; otherwise, the system will not notify you when one of the exceptional conditions exist.

Although most modern CPUs support a floating-point unit (FPU) that does floating-point arithmetic in hardware, it’s worthwhile to actually develop a set of software floating-point arithmetic routines to get a solid feel for what’s involved in floating-point arithmetic. Generally, when designing a software-based floating-point package, you would use assembly language to write the math functions because speed is a primary design goal for a floating-point package. However, in this chapter we’re only writing this floating-point package to get a clearer picture of what’s involved in floating-point arithmetic, so we’ll opt for code that is easy to write, read, and understand. As it turns out, floating-point addition and subtraction are easy to do in a high-level language like C/C++ or Pascal, so we’ll implement these functions in these languages. Floating-point multiplication and division actually turn out to be easier to do in assembly language than in a high-level language, so this book will write the floating-point multiplication and division routines using HLA.

For the purposes of the floating-point functions we’re about to develop, this section will use the IEEE 32-bit single-precision floating-point format (shown earlier in Figure 4-2), which uses a one’s complement representation for signed values. This means that the sign bit (bit 31) contains a one if the number is negative and a zero if the number is positive. The exponent is an 8-bit excess-127 exponent sitting in bits 23..30, and the mantissa is a 24-bit value with an implied HO bit of one. Because of the implied HO bit, this format does not support denormalized values.

Because the IEEE floating-point format supports signed real values, it turns out that addition and subtraction use essentially the same code. After all, computing X − Y is equivalent to computing X + (−Y). So if we can add a negative number with some other value, then we can also perform subtraction by first negating some number and then adding them. And because the IEEE floating-point format uses the one’s complement representation, negating a value is trivial — we just invert the sign bit.

Because we’re using the standard IEEE 32-bit single-precision floating-point format, we could theoretically get away with using the C/C++ float data type (assuming the underlying C/C++ compiler also uses this format, as most do on modern machines). However, you’ll soon see that when doing floating-point calculations in software, we need to manipulate various fields within the floating-point format as bit strings and integer values. Therefore, it’s more convenient to use a 32-bit unsigned integer type to hold the bit representation for our floating-point values. To avoid confusing our real values with actual integer values in a program, we’ll define the following real data type (which assumes that unsigned longs are 32-bit values in your implementation of C/C++) and declare all our real variables using this type:

typedef long unsigned real;

One advantage of using the same floating-point format that C/C++ uses for float values is that we can assign floating-point literal constants to our real variables, and we can do other floating-point operations such as input and output using existing library routines. However, one potential problem is that C/C++ will attempt to automatically convert between integer and floating-point formats if we use a real variable in a floating-point expression (remember, as far as C/C++ is concerned, real is just a long unsigned integer value). This means that we need to tell the compiler to treat the bit patterns found in our real variables as though they were float objects.

A simple type coercion like (float) realVariable will not work. The C/C++ compiler will emit code to convert the integer it believes realVariable to contain into the equivalent floating-point value. However, the bit pattern in realVariable is a floating-point value, so no conversion is required. We want the C/C++ compiler to treat the bit pattern it finds in realVariable as a float without doing any conversion. The following C/C++ macro is a sneaky way to do this:

#define asreal(x) (*((float *) &x))

Note that this macro requires a single parameter that must be a real variable. The result of this macro is a variable that the compiler believes is a float variable.

Now that we have our float variable, we’ll develop two C/C++ functions to compute floating-point addition and subtraction: fpadd and fpsub. These two functions will each take three parameters: the left and right operands of the operator and a pointer to a destination where these functions will store their result. The prototypes for these functions are the following:

void fpadd( real left, real right, real *dest ); void fpsub( real left, real right, real *dest );

The fpsub function is almost trivial. All it has to do is negate the right operand and call the fpadd function to do the real work. Here’s the code for the fpsub function:

void fpsub( real left, real right, real *dest )

{

right = right ^ 0x80000000; // Invert the sign bit of the right operand.

fpadd( left, right, dest ); // Let fpadd do the real work.

}The fpadd function is where all the real work is done. To make fpadd a little easier to understand and maintain, we’ll decompose the function into several different functions that help with various activities that take place. In an actual software floating-point library routine, you’d probably not do this decomposition because the extra subroutine calls would be a little slower; however, we’re developing fpadd for educational purposes, not for actual use as part of a software floating-point library, and readability is a bit more important than performance in this particular instance. Besides, if you need high-performance floating-point addition, you’ll probably use a hardware FPU rather than a software implementation.

The IEEE floating-point formats are good examples of packed data types. As you’ve seen in previous chapters, packed data types are great for reducing storage requirements for a data type, but they’re not the best format when you need to use the packed fields in actual calculations. Therefore, one of the first things our floating-point functions will do is unpack the sign, exponent, and mantissa fields from the floating-point representation. The following C/C++ functions handle these simple tasks.

The first unpacking function is the extractSign function. This function extracts the sign bit (bit 31) from our packed floating-point representation and returns the value zero (for positive numbers) or one (for negative numbers).

inline int extractSign( real from )

{

return( from >> 31);

}This code could have also extracted the sign bit using this (possibly more efficient) expression:

(from & 0x80000000) != 0

However, shifting bit 31 down to bit 0 is, arguably, easier to understand.

The next utility function we’ll look at unpacks the exponent from bits 23..30 in the packed real format. It does this by shifting the real value to the right by 23 bits, and then it masks out the sign bit. One other thing that this function will do is convert the excess-127 exponent to a two’s complement format (this is easily achieved by subtracting 127 from the excess-127 exponent we extract). Here’s the function that does this:

inline int extractExponent( real from )

{

return ((from >> 23) & 0xff) − 127;

}Extracting the mantissa is easy. All we have to do is mask out the exponent and sign bits and then insert the implied HO bit of one. The only catch is that we must return zero if the entire value is zero. Here’s the function that extracts the mantissa from the real value:

inline int extractMantissa( real from )

{

if( (from & 0x7fffffff) == 0 ) return 0;

return ((from & 0x7FFFFF) | 0x800000 );

}As you learned earlier, whenever adding or subtracting two values using scientific notation (and the IEEE floating-point format uses scientific notation), you must first adjust the two values so that they have the same exponent. For example, consider the addition of the following two decimal (base-10) numbers: 1.2345e3 + 8.7654e1.

To add these two numbers together, we must first adjust one or the other so that their exponents are the same. We can reduce the exponent of the first number by shifting the decimal point to the right. For example, the following values are all equivalent to 1.2345e3:

12.345e2 123.45e1 1234.5 12345e−1

Likewise, we can increase the value of an exponent by shifting the decimal point to the left. The following values are all equal to 8.7654e1:

0.87654e2 0.087654e3 0.0087654e4

For floating-point addition and subtraction involving binary numbers, we can make the binary exponents the same by shifting the mantissa one position to the left and decrementing the exponent, or by shifting the mantissa one position to the right and incrementing the exponent.

A problem with adjusting the exponent of one operand so that it matches the exponent of the other operand is that we only have so many bits to use to represent the mantissa. Shifting the mantissa bits to the right means that we reduce the precision of our number (because the bits wind up going off the LO end of the mantissa). To preserve as much accuracy as possible in our calculations, we shouldn’t truncate the bits we shift out of the mantissa. As noted earlier, we should round the result to the nearest value we can represent with the remaining mantissa bits. These are the IEEE rules for rounding, in the following order:

Truncate the result if the last bit shifted out was a zero.

Bump the mantissa up by one if the last bit shifted out was a one and there was at least one bit set to one in all the other bits that were shifted out.[16]

If the last we shifted out was a one, and all the other bits were zeros, then round the resulting mantissa up by one if the mantissa’s LO bit contains a one.

Shifting the mantissa and rounding it is a relatively complex operation, and it will occur a couple of times in the floating-point addition code. Therefore, it’s another candidate for a utility function. Here’s the C/C++ code that implements this functionality:

// shiftAndRound:

//

// Shifts a mantissa to the right the number of bits specified.

// Rounds the result according to the IEEE rules for rounding,

// which are:

//

// If the bits we shift out are a value that is greater than one-half the

// value of the LO bit we are left with, then we need

// to round the value up by adding one to the LO bit position.

// If the bits we shift out are a value that is less than one-half the value

// of the LO bit we are left with (after denormalization), then we need

// to round the value down (i.e., just leave the value alone).

// If the bits we shift out are exactly one-half the value of the LO bit

// we are left with, then we need to round the value to the next larger

// number that has a zero in the LO bit (round up if there's currently a one,

// or leave the value unchanged if the LO bit contains a zero).

void shiftAndRound( int *valToShift, int bitsToShift )

{

// Masks is used to mask out bits to check for a "sticky" bit.

static unsigned masks[24] =

{

0, 1, 3, 7, 0xf, 0x1f, 0x3f, 0x7f,

0xff, 0x1ff, 0x3ff, 0x7ff, 0xfff, 0x1fff, 0x3fff, 0x7fff,

0xffff, 0x1ffff, 0x3ffff, 0x7ffff, 0xfffff, 0x1fffff, 0x3fffff,

0x7fffff

};

// HOmasks: Masks out the HO bit of the value masked by the masks entry.

static unsigned HOmasks[24] =

{

0,

1, 2, 4, 0x8, 0x10, 0x20, 0x40, 0x80,

0x100, 0x200, 0x400, 0x800, 0x1000, 0x2000, 0x4000, 0x8000,

0x10000, 0x20000, 0x40000, 0x80000, 0x100000, 0x200000, 0x400000

};

// shiftedOut: Holds the value that will be shifted out of a mantissa

// during the denormalization operation (used to round a denormalized

// value).

int shiftedOut;

assert( bitsToShift <= 23 );

// Okay, first grab the bits we're going to shift out (so we can determine

// how to round this value after the shift).

shiftedOut = *valToShift & masks[ bitsToShift ];

// Shift the value to the right the specified number of bits.

// Note: bit 31 is always zero, so it doesn't matter if the C

// compiler does a logical shift right or an arithmetic shift right.

*valToShift = *valToShift >> bitsToShift;

// If necessary, round the value:

if( ( shiftedOut > HOmasks[ bitsToShift ] )

{

// If the bits we shifted out are greater than 1/2 the LO bit, then

// round the value up by one.

*valToShift = *valToShift + 1;

}

else if( shiftedOut == HOmasks[ bitsToShift ] )

{

// If the bits we shifted out are exactly 1/2 of the LO bit's value,

// then round the value to the nearest number whose LO bit is zero.

*valToShift = *valToShift + (*valToShift & 1);

}

// else

// We round the value down to the previous value. The current

// value is already truncated (rounded down), so we don't have to do

// anything.

}The “trick” in this code is that it uses a couple of lookup tables, masks and HOmasks, to extract those bits that the mantissa will use from the shift right operation. The masks table entries contain one bits (set bits) in the positions that will be lost during the shift. The HOmasks table entries contain a single set bit in the position specified by the index into the table; that is, the entry at index zero contains a one in bit position zero, the entry at index one contains a one in bit position one, and so on. This code selects an entry from each of these tables based on the number of mantissa bits it needs to shift to the right.

If the original mantissa value, logically ANDed with the appropriate entry in masks, is greater than the corresponding entry in HOmasks, then the shiftAndRound function rounds the shifted mantissa to the next greater value. If the ANDed mantissa value is equal to the corresponding HOmasks element, this code rounds the shifted mantissa value according to its LO bit (note that the expression (*valToShift & 1) produces one if the mantissa’s LO bit is one, and it produces zero otherwise). Finally, if the ANDed mantissa value is less than the entry from the HOmasks table, then this code doesn’t have to do anything because the mantissa is already rounded down.

Once we’ve adjusted one of the values so that the exponents of both operands are the same, the next step in the addition algorithm is to compare the signs of the values. If the signs of the two operands are both the same, we can simply add their mantissas (using a standard integer add operation). If the signs of the operands are different, we have to subtract, rather than add, the mantissas. Because floating-point values use one’s complement representation, and standard integer arithmetic uses two’s complement, we cannot simply subtract the negative value from the positive value. Instead, we have to subtract the smaller value from the larger value and determine the sign of the result based on the signs and magnitudes of the original operands. Table 4-3 describes how to accomplish this.

Whenever adding or subtracting two 24-bit numbers, it is possible to produce a result that requires 25 bits (this, in fact, is a common result when dealing with normalized values). Immediately after an addition or subtraction, the floating-point code has to check the result for overflow. If this has happened, it needs to shift the mantissa right by one bit, round the result, and then increment the exponent. After completing this step, all that remains is to pack the resulting sign, exponent, and mantissa fields into the packed 32-bit IEEE floating-point format and the addition (or subtraction) is complete. The following packFP function is responsible for packing the sign, exponent, and mantissa fields into the 32-bit floating-point format:

// packFP:

//

// Packs the sign, exponent, and mantissa fields into a

// 32-bit "real" value. Works for normalized values, denormalized

// values, and zero, but does not work for NaNs and infinities.

inline real packFP( int sign, int exponent, int mantissa )

{

return

(real)

(

(sign << 31)

| ((exponent + 127) << 23)

| (mantissa & 0x7fffff)

);

}With the utility routines out of the way, it’s time to take a look at the fpadd function, which adds two floating-point values, producting a 32-bit real result:

// fpadd:

//

// Computes:

// dest = left + right

// where all three operands are "real" values (32-bit floats).

void fpadd( real left, real right, real *dest )

{

// The following variables hold the fields associated with the

// left operand:

int Lexponent;

long unsigned Lmantissa;

int Lsign;

// The following variables hold the fields associated with the

// right operand:

int Rexponent;

long unsigned Rmantissa;

int Rsign;

// The following variables hold the separate fields of the result:

int Dexponent;

long unsigned Dmantissa;

int Dsign;

// Extract the fields so that they're easy to work with:

Lexponent = extractExponent( left );

Lmantissa = extractMantissa( left );

Lsign = extractSign( left );

Rexponent = extractExponent( right );

Rmantissa = extractMantissa( right );

Rsign = extractSign( right );

// Code to handle special operands (infinity and NaNs):

if( Lexponent == 127 )

{

if( Lmantissa == 0 )

{

// If the left operand is infinity, then the result

// depends upon the value of the right operand.

if( Rexponent == 127 )

{

// If the exponent is all one bits (127 after unbiasing)

// then the mantissa determines if we have an infinity value

// (zero mantissa), a QNaN (mantissa = 0x800000) or a SNaN

// (nonzero mantissa not equal to 0x800000).

if( Rmantissa == 0 ) // Do we have infinity?

{

// infinity + infinity = infinity

// −infinity − infinity = −infinity

// −infinity + infinity = NaN

// infinity − infinity = NaN

if( Lsign == Rsign )

{

*dest = right;

}

else

{

*dest = 0x7fC00000; // +QNaN

}

}

else // Rmantissa is nonzero, so it's a NaN

{

*dest = right; // Right is a NaN, propagate it.

}

}

}

else // Lmantissa is nonzero, Lexponent is all ones.

{

// If the left operand is some NaN, then the result will

// also be the same NaN.

*dest = left;

}

// We've already calculated the result, so just return.

return;

}

else if( Rexponent == 127 )

{

// Two case: right is either a NaN (in which case we need to

// propagate the NaN regardless of left's value) or it is

// +/− infinity. Because left is a "normal" number, we'll also

// wind up propagating the infinity because any normal number

// plus infinity is infinity.

*dest = right; // Right is a NaN, propagate it.

return;

}

// Okay, we've got two actual floating-point values. Let's add them

// together. First, we have to "denormalize" one of the operands if

// their exponents aren't the same (when adding or subtracting values,

// the exponents must be the same).

//

// Algorithm: choose the value with the smaller exponent. Shift its

// mantissa to the right the number of bits specified by the difference

// between the two exponents.

Dexponent = Rexponent;

if( Rexponent > Lexponent )

{

shiftAndRound( &Lmantissa, (Rexponent − Lexponent));

}

else if( Rexponent < Lexponent )

{

shiftAndRound( &Rmantissa, (Lexponent − Rexponent));

Dexponent = Lexponent;

}

// Okay, add the mantissas. There is one catch: if the signs are opposite

// then we've actually got to subtract one value from the other (because

// the FP format is one's complement, we'll subtract the larger mantissa

// from the smaller and set the destination sign according to a

// combination of the original sign values and the largest mantissa).

if( Rsign ^ Lsign )

{

// Signs are different, must subtract one value from the other.

if( Lmantissa > Rmantissa )

{

// The left value is greater, so the result inherits the

// sign of the left operand.

Dmantissa = Lmantissa − Rmantissa;

Dsign = Lsign;

}

else

{

// The right value is greater, so the result inherits the

// sign of the right operand.

Dmantissa = Rmantissa − Lmantissa;

Dsign = Rsign;

}

}

else

{

// Signs are the same, so add the values:

Dsign = Lsign;

Dmantissa = Lmantissa + Rmantissa;

}

// Normalize the result here.

//

// Note that during addition/subtraction, overflow of one bit is possible.

// deal with that possibility here (if overflow occurred, shift the

// mantissa to the right one position and adjust for this by incrementing

// the exponent). Note that this code returns infinity if overflow occurs

// when incrementing the exponent (infinity is a value with an exponent

// of $FF);

if( Dmantissa >= 0x1000000 )

{

// Never more than one extra bit when doing addition/subtraction.

// Note that by virtue of the floating-point format we're using,

// the maximum value we can produce via addition or subtraction is

// a mantissa value of 0x1fffffe. Therefore, when we round this

// value it will not produce an overflow into the 25th bit.

shiftAndRound( &Dmantissa, 1 ); // Move result into 24 bits.

++Dexponent; // Shift operation did a div by two,

// this counteracts the effect of

// the shift (incrementing exponent

// multiplies the value by two).

}

else

{

// If the HO bit is clear, normalize the result

// by shifting bits up and simultaneously decrementing

// the exponent. We will treat zero as a special case

// because it's a common enough result.

if( Dmantissa != 0 )

{

// The while loop multiplies the mantissa by two (via a shift

// left) and then divides the whole number by two (by

// decrementing the exponent. This continues until the HO bit of

// Dmantissa is set or the exponent becomes −127 (zero in the

// biased-127 form). If Dexponent drops down to −128, then we've

// got a denormalized number and we can stop.

while( (Dmantissa < 0x800000) && (Dexponent > −127 ))

{

Dmantissa = Dmantissa << 1;

--Dexponent;

}

}

else

{

// If the mantissa went to zero, clear everything else, too.

Dsign = 0;

Dexponent = 0;

}

}

// Reconstruct the result and store it away:

*dest = packFP( Dsign, Dexponent, Dmantissa );

}To conclude this discussion of the software implementation of the fpadd and fsub functions, here’s a C main function that demonstrates the use of these functions:

// A simple main program that does some trivial tests on fpadd and fpsub.

int main( int argc, char **argv )

{

real l, r, d;

asreal(l) = 1.0;

asreal(r) = 2.0;

fpadd( l, r, &d );

printf( "dest = %x

", d );

printf( "dest = %12E

", asreal( d ));

l = d;

asreal(r) = 4.0;

fpsub( l, r, &d );

printf( "dest2 = %x

", d );

printf( "dest2 = %12E

", asreal( d ));

}Most software floating-point libraries are actually written in hand-optimized assembly language, not in a high-level language. As the previous section shows, it’s perfectly possible to write floating-point routines in a high-level language and, particularly in the case of single-precision floating-point addition and subtraction, you could actually write the code efficiently. Given the right library routines, it’s also possible to write the floating-point multiplication and division routines in a high-level language. This section presents an HLA implementation of the single-precision floating-point multiplication and division algorithms, however, because it turns out that their implementation is actually easier in assembly language than in a high-level language like C/C++.

The HLA code in this section implements two functions, fpmul and fpdiv, that have the following prototypes:

procedure fpmul( left:real32; right:real32 ); @returns( "eax" ); procedure fpdiv( left:real32; right:real32 ); @returns( "eax" );

Beyond the fact that this code is written in assembly language rather than C, there are two main differences you should note between the code in this section and the code in the previous section. First, the HLA code uses the built-in real32 data type rather than creating a new data type for our real values. This code can do that because we can easily coerce any 32-bit memory object to real32 or dword in assembly language. Therefore, there is no reason to play games with the data types. The second thing you’ll notice about these prototypes is that they only support two parameters; there is no destination parameter. These functions simply return the real32 result in the EAX register.[17]

Whenever you multiply two values in scientific notation, you compute the result sign, exponent, and mantissa as follows:

The result sign is the exclusive-OR of the operand signs. That is, the result is positive if both operand signs were the same, and the result sign is negative if the operand signs were different.

The result exponent is the sum of the operands’ exponents.

The result mantissa is the integer (fixed-point) product of the two operand mantissas.

Beyond these rules, there are a few additional rules that affect the floating-point multiplication algorithm that are a direct result of the IEEE floating-point format:

If either, or both, of the operands are zero, the result is zero (this is a special case because the representation for zero is special).

If either operand is infinity, the result is infinity.

If either operand is a NaN, the result is that same NaN.

The fpmul procedure begins by checking the operands to see if either of them is zero. If so, the function immediately returns a 0.0 result to the caller. Next, the fpmul code checks for NaN or infinity values in the left and right operands. If it finds one of these values, the fpmul procedure returns that same value to the caller.

If both of the fpmul operands are reasonable floating-point values, then the fpmul code extracts the sign, exponent, and mantissa fields of the packed floating-point value. Actually, “extract” isn’t the correct term for fpmul; isolate is probably a better description of what this code does to the sign and exponent fields. Look at the code that isolates the sign bits of the two operands and computes the result sign:

mov( (type dword left), ebx ); // Result sign is the XOR of the xor( (type dword right), ebx ); // operand signs. and( $8000_0000, ebx ); // Keep only the sign bit.

This code exclusive-ORs the HO bits of the two operands (as well as all the other bits) and then masks out bits 0..30, leaving only the result sign value in bit 31 of the EBX register. This procedure doesn’t bother moving the sign bit down to bit 0 (as you’d normally do when unpacking data) because it would just have to move this bit back to bit 31 when it repacks the floating-point value later.

The fpmul procedure uses the same trick when processing the exponent. It simply isolates bits 23..30 and operates on the exponent in place. When multiplying two values using scientific notation, you must add the values of the exponents together. Note, however, that the floating-point exponents use an excess-127 format; simply adding the exponents together creates a problem because the bias winds up being added twice. Therefore, the exponent-processing code must subtract 127 from the exponent’s sum first. The following code isolates the exponent bits, adjusts for the extra bias, and adds the exponents together:

mov( (type dword left), ecx ); // Exponent goes into bits 23..30

and( $7f80_0000, ecx ); // of ECX; mask these bits.

sub( 126 << 23, ecx ); // Eliminate the bias of 127.

mov( (type dword right), eax );

and( $7f80_0000, eax );

// For multiplication, we need to add the exponents:

add( eax, ecx ); // Exponent value is now in bits

// 23..30 of ECX.First, you’ll notice that this code subtracts 126 rather than 127 (the value you’d normally expect to have to subtract in order to eliminate the extra bias). The reason for this is that later on we will need to double the result of the multiplication of the mantissas. Subtracting 126 rather than 127 does this multiplication by two implicitly for us (saving an instruction later on).

If the sum of the exponents with add( eax, ecx ), above, is too large to fit into eight bits, there will be a carry out of bit 30 into bit 31 of ECX, which will set the 80x86 overflow flag. If overflow occurs on a multiplication, our code will return infinity as the result.

If overflow does not occur, then the fpmul procedure needs to set the implied HO bit of the two mantissa values. The following code handles this chore, as well as stripping out all the exponent and sign bits from the mantissas. This code also left justifies the mantissa bits up against bit position 31 in EAX and EDX.

mov( (type dword left), eax );

mov( (type dword right), edx );

// If we don't have a zero value then set the implied HO bit of the mantissa:

if( eax <> 0 ) then

or( $80_0000, eax ); // Set the implied bit to one.

endif;

shl( 8, eax ); // Moves mantissa to bits 8..31 and removes sign/exp.

// Repeat this for the right operand.

if( edx <> 0 ) then

or( $80_0000, edx );

endif;

shl( 8, edx );Once this code shifts the mantissas to bit 31 in EAX and EDX, it does the multiplication by using the 80x86 mul instruction:

mul( edx );

This instruction computes the 64-bit product of EAX and EDX, leaving the product in EDX:EAX (the HO double word is in EDX, and the LO double word is in EAX). Note that the product of any two n-bit integers produces a number that could require as many as 2*n bits. That’s why the mul instruction computes EDX:EAX = EAX*EDX. Left justifying the mantissas in EAX and EDX before doing the multiplication is what ensures the mantissa of the product winds up in bits 7..30 of EDX (it would have been nice to have them wind up in bit positions 8..31 of EDX, but fixed-point multiplication winds up shifting the value down one bit in this case; that’s why this code only subtracted 126 when adjusting for the excess-127 value). As these numbers were normalized prior to the multiplication, bit 30 of EDX will contain a one after the multiplication unless the result is zero. Note that the 32-bit IEEE real format does not support denormalized values, so we don’t have to worry about this case when using 32-bit floating-point values.

Because the mantissas were actually 24 bits each, the product of the mantissas that the mul instruction produces could have as many as 48 significant bits. However, our result mantissa can only hold 24 bits, so we need to round the value to produce a 24-bit result (using, of course, the IEEE rounding algorithm — see 4.4 Rounding). Here’s the code that rounds the value in EDX to 24 significant bits (in positions 8..31):

test( $80, edx ); // Clears zero flag if bit seven of EDX = 1.

if( @nz ) then

add( $FFFF_FFFF, eax ); // Sets carry if EAX <> 0.

adc( $7f, dl ); // Sets carry if DL:EAX > $80_0000_0000.

if( @c ) then

// If DL:EAX > $80_0000_0000 then round the mantissa

// up by adding one to bit position eight:

add( 1 << 8, edx );

else // DL:EAX = $80_0000_0000

// We need to round to the value that has a zero

// in bit position zero of the mantissa (bit #8 of EDX):

test( 8, edx ); // Clears zero flag if bit #8 contains a one.

if( @nz ) then

add( 1 << 8, edx ); // Adds a one starting at bit position eight.

// If there was an overflow, renormalize:

if( @c ) then

rcr( 1, edx ); // Shift overflow (in carry) back into EDX.

inc( ecx ); // Shift did a divide by two. Fix that.

endif;

endif;

endif;

endif;An interesting thing to note about this rounding code is that it may need to renormalize the number after rounding. If the mantissa contains all one bits and needs to be rounded up, this will produce an overflow out of the HO bit of the mantissa. The rcr and inc instructions at the end of this code sequence put the overflow bit back into the mantissa if overflow occurs.

The only thing left for fpmul to do after this is pack the destination sign, exponent, and mantissa into the 32-bit EAX register. The following code does this:

shr( 8, edx ); // Move mantissa into bits 0..23. and( $7f_ffff, edx ); // Clear the implied bit. lea( eax, [edx+ecx] ); // Merge mantissa and exponent into EAX. or( ebx, eax ); // Merge in the sign.

The only tricky thing in this code is the use of the lea (load effective address) instruction to compute the sum of EDX (the mantissa) and ECX (the exponent) and move the result to EAX all with a single instruction.

Floating-point division is a little bit more involved than multiplication because the IEEE floating-point standard says many things about degenerate conditions that can occur during division. We’re not going to discuss all the code that handles those conditions here. Instead, see the discussion of the conditions for fpmul earlier, and check out the complete code listing for fdiv later in this section.

Assuming we have reasonable numbers to divide, the division algorithm first computes the result sign using the same algorithm (and code) as for multiplying. When dividing two values using scientific notation, we have to subtract their exponents. Unlike the multiplication algorithm, it’s going to be more convenient to truly unpack the exponents for the two division operands and convert them from excess-127 to two’s complement form. Here’s the code that does this:

mov( (type dword left), ecx ); // Exponent comes from bits 23..30. shr( 23, ecx ); and( $ff, ecx ); // Mask out the sign bit (in bit 8). mov( (type dword right), eax ); shr( 23, eax ); and( $ff, eax ); // Eliminate the bias from the exponents: sub( 127, ecx ); sub( 127, eax ); // For division, we need to subtract the exponents: sub( eax, ecx ); // Leaves result exponent in ECX.

The 80x86 div instruction absolutely, positively requires the quotient to fit into 32 bits. If this condition is not true, the CPU may abort the operation with a divide exception. As long as the HO bit of the divisor contains a one and the HO two bits of the dividend contain %01, we will not get a division error. Here’s the code the prepares the operands prior to the division operation:

mov (type dword left), edx );

if( edx <> 0 ) then

or( $80_0000, edx ); // Set the implied bit to one in the left operand.

shl( 8, edx );

endif;

mov( (type dword right), edi );

if( edi <> 0 ) then

or( $80_0000 ); // Set the implied bit to one in the right operand.

shl( 8, edi );

else

// Division by zero error, here.

endif;The next step is to actually do the division. As noted earlier, in order to prevent a division error, we have to shift the dividend one bit to the right (to set the HO two bits to %01). The code that does this shift and then the division is as follows:

xor( eax, eax ); // EAX := 0; shr( 1, edx ); // Shift EDX:EAX to the right one bit to rcr( 1, eax ); // prevent a division error. div( edi ); // Compute EAX = EDX:EAX / EDI.

Once the div instruction executes, the quotient is sitting in the HO 24 bits of EAX, and the remainder is in AL:EDX. We now need to normalize and round the result. Rounding is a little easier because AL:EDX contains the remainder after the division; it will contain a value less than $80:0000_0000 (that is, the 80x86 AL register contains $80 and EDX contains zero) if we need to round down, it will contain a value greater than $80:0000_0000 if we need to round up, and it will contain exactly $80:0000_0000 if we need to round to the nearest value.

Here’s the code that does this:

test( $80, al ); // See if the bit just below the LO bit of the

if( @nz ) then // mantissa contains a zero or one.

// Okay, the bit just below the LO bit of our mantissa contains a one.

// If all other bits below the mantissa and this bit contain zeros,

// we have to round to the nearest mantissa value whose LO bit is zero.

test( $7f, al ); // Clears zero flag if bits 0..6 <> 0.

if( @nz || edx <> 0 ) then // If bits 0..6 in AL are zero and EDX

// is zero.

// We need to round up:

add( $100, eax ); // Mantissa starts in bit #8 );

if( @c ) then // Carry set if mantissa overflows.

// If there was an overflow, renormalize.

rcr( 1, eax );

inc( ecx );

endif;

else

// The bits below the mantissa are exactly 1/2 the value

// of the LO mantissa bit. So we need to round to the value

// that has a LO mantissa bit of zero:

test( $100, eax );

if( @nz ) then

add( $100, eax );

if( @c ) then

// If there was an overflow, renormalize.

rcr( 1, eax ); // Put overflow bit back into EAX.

inc( ecx ); // Adjust exponent accordingly.

endif;

endif;

endif;

endif;The last step in fpdiv is to add the bias back into the exponent (and verify that overflow doesn’t occur) and then pack the quotient’s sign, exponent, and mantissa fields into the 32-bit floating-point format. Here’s the code that does this:

if( (type int32 ecx) > 127 ) then

mov( $ff−127, ecx ); // Set exponent value for infinity

xor( eax, eax ); // because we just had overflow.

elseif( (type int32 ecx) < −128 ) then

mov( −127, ecx ); // Return zero for underflow (note that

xor( eax, eax ); // next we add 127 to ECX).

endif;

add( 127, ecx ); // Add the bias back in.

shl( 23, ecx ); // Move the exponent to bits 23..30.

// Okay, assemble the final real32 value:

shr( 8, eax ); // Move mantissa into bits 0..23.

and( $7f_ffff, eax ); // Clear the implied bit.

or( ecx, eax ); // Merge mantissa & exponent into EAX.

or( ebx, eax ); // Merge in the sign.Whew! This has been a lot of code. However, it’s worthwhile to go through all this just to see how floating-point operations work (so you can gain an appreciation of exactly what an FPU is doing for you).

Donald Knuth’s The Art of Computer Programming, Volume Two: Seminumerical Algorithms, provides an in-depth discussion of floating-point arithmetic and floating-point formats. This book is required reading for someone who wants to fully understand how floating-point arithmetic operates. Also, Intel’s documentation on its Pentium processors explains its floating-point formats, exceptional conditions, and other issues related to the use of its FPU. Likewise, the manufacturer’s literature for any CPU that supports floating-point arithmetic will explain the specifics for the use of that CPU’s floating-point unit.

Those interested in trapping floating-point exceptions from a high-level language will need to check their language vendor’s documentation to determine how this is done. Unfortunately, there are few standards around, so most compiler vendors use a proprietary scheme or a CPU- or OS-dependent scheme to trap these exceptions. For general information about the effects of precision and accuracy in floating-point calculations, a good textbook on numerical analysis would be a reasonable place to start.

Finally, The Art of Assembly Language (No Starch Press) contains lots of additional information related to floating-point arithmetic including the implementation of various transcendental and other functions. The UCR Standard Library for 80x86 Assembly Language Programmers (a software package I’ve developed that is available at http://webster.cs.ucr.edu; check out the “Assembler Tools” link and look under MASM) contains a full software-based floating-point package in 16-bit 8086 assembly language. The HLA Standard Library includes source code for several FPU support routines, including floating-point I/O and conversion. Check out the Webster website for more details (look under “HLA” when following the “Assembler Tools” link).