Chapter 9

Industry Trends

Because the art of 3D animation is so closely tied to the technology used to create it, it is worth looking ahead to the next big technology push in the 3D animation industry. New technologies are released yearly in many forms, including faster computer hardware with quicker data transfers, software with advanced capabilities, and tools that make the workflow more seamless. Some of the new trends being pursued by the 3D animation industry include full-body and detail motion capture, stereoscopic 3D output, point-cloud data, real-time workflow capabilities, and virtual studios. Some of these technologies are in use today but not available to all consumers. For others, consumer use is still over the horizon. Each promises a faster turnaround on projects and should enable artists to focus on the art of the project rather than the technical hurdles of the production pipeline.

- Using motion capture

- Creating stereoscopic 3D

- Integrating point-cloud data

- Providing real-time capabilities

- Working in virtual studios

Using Motion Capture

Motion capture, or mocap, is the technique of recording the movement of a real person so that it can be applied to a digital character. Chapter 6, “Animation and Rigging,” covered some motion-capture techniques. This section details the current technology and where it’s going in the future.

Most people today likely associate motion capture with the film and video game industry. We’ve all seen behind-the-scenes footage showing actors completing motion-capture sessions. But motion capture is used in many other fields—including the military, medicine, and biomechanics—for various reasons.

In all of these industries, two systems—marker and markerless—are used to capture the motion data. You’ll look at each of these systems in depth in this section.

Marker Systems

Marker systems apply a set of markers on and around the joints of a performer, as shown in Figure 9-1. A camera system then performs three-dimensional data tracking by triangulating each marker.

Triangulating

Triangulating is the process of determining the location of a point in three-dimensional space by measuring it from fixed points in space. In camera-based marker systems, this is done by fixing cameras in space and then tracking the markers on the body from those cameras. As long as three cameras can see a marker, the system can track that point.
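To make the idea concrete, here is a minimal sketch of triangulation in Python. It is not how any particular mocap product works; it simply assumes each camera reports its own position and the direction of a ray toward the marker, and it finds the point that lies closest to all of those rays. The function names (`triangulate`, `normalize`, `det3`) are made up for this illustration. Geometrically, two non-parallel rays are enough to locate a point; extra cameras make the estimate more robust.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def triangulate(camera_positions, ray_directions):
    """Least-squares point closest to every camera ray."""
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in zip(camera_positions, ray_directions):
        d = normalize(direction)
        for i in range(3):
            for j in range(3):
                # (I - d d^T) measures distance perpendicular to the ray.
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p
                b[i] += p * origin[j]
    det = det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are parallel or too few; marker cannot be located")
    # Solve the 3x3 system a * point = b with Cramer's rule.
    point = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        point.append(det3(m) / det)
    return point

# Hypothetical setup: three cameras looking at one marker.
marker = [1.0, 2.0, 3.0]
cameras = [[0.0, 0.0, 0.0], [5.0, 0.0, 0.0], [0.0, 5.0, 5.0]]
rays = [[m - c for m, c in zip(marker, cam)] for cam in cameras]
estimate = triangulate(cameras, rays)
```

With perfect rays the estimate lands on the marker. With noisy rays, which is the realistic case, it lands on the best compromise between them.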

These markers can be passive or active, depending on the system. Passive markers are made of a retroreflective material, just like the reflective material on running shoes. Retroreflective materials reflect light back at a light source with very little light scattering, so the cameras usually have light-emitting diodes (LEDs) on them. Active markers are LEDs that self-illuminate for the optical camera system to track.

Figure 9-1: Vicon Motion Capture System

The marker system uses a capture volume, a virtual three-dimensional space that is created by placing multiple cameras around a performance space. A 0,0,0 space (the center XYZ location for a Cartesian coordinate system) is created within the software to capture the markers. A performer walks into the capture volume and stands in a T-pose for an initial marker capture. The software takes the initial capture and codes each marker with a name so it can track the markers from that point on. The performer then delivers a performance, and the software assigns translation and rotation values to a skeleton that will be applied to a character rig later.

Marker systems have the following advantages and disadvantages:

Advantages

- Very high frame-per-second capture rates

- Very accurate capture of position data

- Can capture multiple actors at one time

Disadvantages

- Cameras can lose track of markers, which means extra cleanup later in the animation stage.

- Capture volume can be very limited in physical size, because the capture cameras can see the markers only within a limited distance.

Markerless Systems

Markerless systems typically allow the user to have more control over where the capture can happen, because the capture space can be larger and not confined to a stage. The typical markerless system is suit-based. A performer wears a suit, like a robotic outer shell, that measures the rotations of each body joint. These systems can use cameras, but often no camera is involved at all. Instead, the suit may transmit data directly to a computer. The suits provide motion capture that is accurate enough for the entertainment industry, but they are not commonly used in medical or biomechanical applications.

Camera-based markerless capture systems are similar to marker systems, in that they use cameras calibrated to a specific point to capture the motion of a performer within a volume. The advantage to camera-based markerless systems is that the performers can wear almost any type of clothing they want. There are even a few motion-capture systems based on the Microsoft Kinect system for the Xbox, which uses a depth-sensing camera to read and understand motion that is in front of it. Much like the suit-based system, these camera-based markerless systems are used in entertainment and education more than in medicine or biomechanics.

Markerless systems have the following advantages and disadvantages:

Advantages

- Larger capture space

- Typically faster setup for capture

Disadvantages

- Not as accurate, which is important in medical and biomechanical industries

- Difficult to capture more than one performer at a time with realistic interactions

Creating Stereoscopic 3D

Stereoscopic 3D is the technology used in 3D movies today to create the illusion of depth on a two-dimensional screen. There are many techniques for creating this effect onscreen or on paper: wearing special glasses, crossing your eyes, closing one eye, using special screens to split light into different eyes, and using color anaglyphs to separate a single image.

Some might consider today’s 3D images and movies a passing fad, but with the incorporation of stereoscopic 3D into video games and other applications such as production visualization and architecture renderings, 3D is likely here to stay. So as 3D artists, we need to look at stereoscopic 3D as another tool to help convey our ideas and products to a general audience.

So how does stereoscopy work? Your eyes can be thought of as two cameras that are focusing on the same point, and your brain ties the two images together as one. Your brain interprets the differences in the images as a visual cue to depth, which enables you to see in three dimensions. So to create this effect for television, movies, or even printed images, we need to show two images at once and have the viewer’s brain or eyes separate the images to break out the differences and create the impression of depth.
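The "two cameras" idea can be sketched in a few lines of Python. This is an illustration under simple assumptions, not a production stereo rig: two parallel pinhole cameras sit side by side, separated by an interaxial distance, and share the same focal length (given in pixels). The function names are hypothetical. The difference between where a point lands in the left image and where it lands in the right image is the disparity, and it shrinks as the point moves farther away. That shrinking difference is the depth cue.

```python
def project_x(point_x, point_depth, camera_x, focal_length_px):
    """Horizontal image position of a point seen by a pinhole camera at camera_x."""
    if point_depth <= 0:
        raise ValueError("the point must be in front of the camera")
    return focal_length_px * (point_x - camera_x) / point_depth

def stereo_disparity(point_x, point_depth, interaxial, focal_length_px):
    """Left-image position minus right-image position, in pixels."""
    half = interaxial / 2.0
    left = project_x(point_x, point_depth, -half, focal_length_px)
    right = project_x(point_x, point_depth, +half, focal_length_px)
    # Algebraically this equals focal_length_px * interaxial / point_depth.
    return left - right

# Hypothetical numbers: cameras 0.065 units apart, 1000-pixel focal length.
near = stereo_disparity(0.3, 2.0, 0.065, 1000.0)
far = stereo_disparity(0.3, 10.0, 0.065, 1000.0)
print(near > far)  # nearby points shift more between the two images
```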

Over time, various tools and techniques have been used to create 3D images. The stereoscope, shown in Figure 9-2, was one of the first 3D viewing devices. Looking through the stereoscope, a viewer would see two prints of the same 2D picture, slightly offset from one another. Each eye would see only one of the images, creating the 3D effect. You might remember a device called the View-Master from your childhood; it is a miniature version of the stereoscope.

Stereoscopic 3D’s Data Demands

One issue of stereoscopic 3D in animation is data storage. Because 3D requires two images to create one final image, a 3D software package has to render two images for every frame of a project. In other words, double the storage is required for all of the frames. This is not a big deal because data storage is becoming cheaper every day, but it’s something to think about.
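The storage arithmetic is easy to check for your own project. The sketch below uses made-up numbers (a 10-second shot at 24 fps, 8 MB per rendered image); substitute your own values.

```python
def frame_count(duration_seconds, fps):
    """Number of frames in a shot."""
    return round(duration_seconds * fps)

def storage_bytes(duration_seconds, fps, bytes_per_image, stereo=False):
    """Total bytes for all rendered images; stereo renders one image per eye."""
    images_per_frame = 2 if stereo else 1
    return frame_count(duration_seconds, fps) * images_per_frame * bytes_per_image

# Hypothetical shot: 10 seconds at 24 fps, 8 MB per image.
mono = storage_bytes(10, 24, 8_000_000)
stereo = storage_bytes(10, 24, 8_000_000, stereo=True)
print(mono, stereo)
```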

The three main techniques and devices used today for viewing 3D film, video, and television are color anaglyphs, polarized glasses, and shutter glasses.

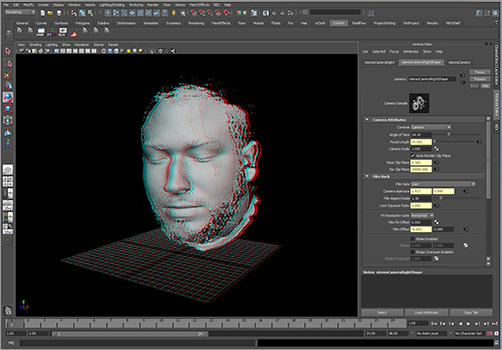

Color anaglyphs are images composed of two layers, one red and the other cyan. When wearing glasses with one red and one cyan lens (shown in Figure 9-3), each eye can see only one image, creating the 3D effect. You have probably worn these glasses before. In addition, 3D software such as Autodesk Maya has an anaglyph mode, enabling users to easily see a 3D effect in the work view (see Figure 9-4). Using anaglyphs is an inexpensive way to create a 3D effect. The biggest drawback to this method is the loss of color and overall visual fidelity caused by the red and cyan lenses filtering the light that reaches our eyes.

Figure 9-4: Autodesk Maya with the anaglyph viewing display on
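If you want to see how an anaglyph is assembled, the following Python sketch combines a left-eye image and a right-eye image into one red-cyan image. It assumes the common convention of the red lens over the left eye, and it represents an image as rows of `(r, g, b)` tuples; both are assumptions made for this example, not a description of Maya's anaglyph mode.

```python
def make_anaglyph(left_image, right_image):
    """Red channel from the left eye, green and blue (cyan) from the right eye."""
    if len(left_image) != len(right_image):
        raise ValueError("images must have the same height")
    result = []
    for left_row, right_row in zip(left_image, right_image):
        if len(left_row) != len(right_row):
            raise ValueError("images must have the same width")
        result.append([(l[0], r[1], r[2]) for l, r in zip(left_row, right_row)])
    return result

# Tiny 1 x 2 test images.
left = [[(255, 10, 20), (100, 100, 100)]]
right = [[(1, 2, 3), (50, 60, 70)]]
print(make_anaglyph(left, right))
```

Notice that the left eye's green and blue values and the right eye's red values are thrown away, which is exactly where the color loss comes from.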

Creating 3D with polarized glasses is a favorite right now for movie theaters. Two projectors project separate images onto the same screen through a polarizing filter. The polarizing filter will separate the light into different types of waves so that the viewer can wear inexpensive glasses with polarized lenses that filter the light, allowing each eye to see only one of the images.

The use of shutter glasses is the most popular method for 3D television. The viewer wears a pair of glasses with liquid crystal that can block light passing through each lens. The glasses are then synced up to the television, so that each lens blocks the image on every other frame. Video is played at twice its normal speed—so, for instance, video otherwise viewed at 30 fps is played at 60 fps, with alternating eye images per frame. The viewer sees only the image per eye they are supposed to see to create the 3D effect. This works because of the Persistence of Vision theory discussed in Chapter 3, “Understanding Digital Imaging and Video.” Viewers are not aware of the flickering of images. The drawbacks to this method are that when you look away from the television, you will see an odd flickering, and you also have to be in a small viewing range of the television that is not too far left or right of the screen.
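The alternating-frame idea can be sketched as follows. The function name and the string labels are hypothetical; the point is only that the left and right sequences are interleaved and the playback rate doubles.

```python
def interleave_for_shutter_glasses(left_frames, right_frames, source_fps):
    """Alternate left-eye and right-eye frames and double the playback rate."""
    if len(left_frames) != len(right_frames):
        raise ValueError("both eyes need the same number of frames")
    sequence = []
    for left, right in zip(left_frames, right_frames):
        sequence.append(("left", left))    # right lens is blocked for this frame
        sequence.append(("right", right))  # left lens is blocked for this frame
    return sequence, source_fps * 2

sequence, playback_fps = interleave_for_shutter_glasses(["L0", "L1"], ["R0", "R1"], 30)
print(playback_fps, sequence)
```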

Since the 1800s, we have used 3D stereoscopic tricks as sideshow acts or as a gimmicky way to sell tickets to horror films. Until now, reliable technology for easily and accurately creating and displaying 3D images was not available. But today we have various ways to create 3D imagery with minimal quality loss. You can now buy 3D-ready cameras, televisions, and Blu-ray players. The next big push will be HD television with no glasses, called autostereoscopy, and no loss of quality, which is going to be a reality very soon.

Integrating Point-Cloud Data

A point cloud is a very large set of vertices in a 3D environment that represents the 3D shape of objects. These points can be colored to add texture to the objects. With enough points, you can create a solid surface with as much detail as you want, as shown in Figure 9-5.
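As a data structure, a point cloud is very simple. Here is a minimal Python sketch, with names invented for this example (`CloudPoint`, `bounding_box`, `voxel_downsample`). It is not the file format of any scanner or viewer. The downsampling function keeps one point per cube of space, which shows the trade-off between point count and detail.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class CloudPoint:
    x: float
    y: float
    z: float
    color: tuple = (255, 255, 255)  # optional per-point RGB color

def bounding_box(points):
    """Smallest axis-aligned box containing every point: (min_corner, max_corner)."""
    if not points:
        raise ValueError("the point cloud is empty")
    xs = [p.x for p in points]
    ys = [p.y for p in points]
    zs = [p.z for p in points]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def voxel_downsample(points, voxel_size):
    """Keep the first point found in each voxel_size cube; fewer points, less detail."""
    kept = {}
    for p in points:
        key = (math.floor(p.x / voxel_size),
               math.floor(p.y / voxel_size),
               math.floor(p.z / voxel_size))
        kept.setdefault(key, p)
    return list(kept.values())

cloud = [
    CloudPoint(0.1, 0.1, 0.1),
    CloudPoint(0.2, 0.2, 0.2),
    CloudPoint(1.5, 0.0, 0.0, color=(255, 0, 0)),
]
print(bounding_box(cloud))
print(len(voxel_downsample(cloud, 1.0)))
```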

This relatively new type of data has not been fully incorporated into 3D animation. Some applications, including Pointools View Pro and Leica Cyclone Viewer Pro, can read and display point-cloud data, but most cannot without the data being converted into polygon or NURBS surfaces. With that conversion to a mesh, much of the detail is lost. However, many other industries are using point-cloud data today, such as manufacturing, medicine, and forensic science.

Figure 9-5: Point-cloud data in Pointools View Pro software

The primary way of creating point clouds is with 3D scanning (which is discussed in Chapter 8, “Hardware and Software Tools of the Trade”). Although a few companies have indicated that they can run a 3D point-cloud game engine in real time and provide almost unlimited detail in the objects, we are still years away from putting this type of model in consumers’ hands. Offering unlimited detail is what makes point-cloud data an exciting new technology. Having no limit on texture or geometry resolution would completely change the workflow and output quality for the game and film industries.

Providing Real-Time Capabilities

A real-time working environment may be the holy grail of the 3D animation industry. A real-time workflow would enable you to see a completely rendered 3D asset while actively editing that asset throughout the entire production pipeline. Each artist would not have to wait on technical hurdles and waste time waiting on interface updates and slow renders.

Parts of that real-time workflow dream are already a reality. We are able to render video games in real time and the player has complete control of the action of the game at almost all times. But the creation (modeling, texturing, rigging, and animation) of those characters and environments had to be completed by an artist and then painstakingly reduced and optimized to be able to work in real time within the game engine.

We still need more computing power before the real-time workflow can happen. But we do have some real-time capabilities within the 3D animation production pipeline. For instance, real-time high-resolution polygon modeling and texturing are available in software such as Pixologic’s ZBrush and Autodesk Mudbox. These two software packages allow modeling and texturing artists to move freely in real time around extremely heavy polygon models to edit the geometry and create color maps directly on that model. This was not even an option only a few years ago. The developers of both packages approached modeling from a different angle and wrote their software to take advantage of today’s faster computers, which is what allows this real-time interaction. The drawback to these programs is that they are specialty applications for modeling and texturing, with little to no support for animation or other 3D workflows.

On the hardware side, dedicated GPUs on graphics cards and multicore CPUs are enabling a real-time workflow. Moore’s law states that the number of transistors per square inch on integrated circuits has doubled every two years since the integrated circuit was invented. In rough terms, this means that computers double their speed and functionality every two years as well. If Moore’s law stays true, by the year 2020, the CPU will have manufactured parts on a molecular scale. This will have a huge effect on the way we use computers and will lead to new types of technologies using computer processing speeds not even imaginable today.

However, even with all of these technology boosts and speed jumps, rendering time in 3D animation has increased over the years. For example, Pixar’s Toy Story took an average of 2 hours per frame to render in 1995. In Cars 2, released in 2011, the average render time per frame was 11 hours. So why are the render times going up instead of down? The answer is in the detail that can be added to today’s 3D animation projects. Every time we master a new tool set, we add two or three rendering techniques that are even more complicated for the computer to render. But this striving for real-time render capabilities has pushed the technology to allow for these new complicated render options to be rendered faster and more efficiently and will only continue to push the technology and techniques in the future.
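You can put rough numbers on this. The Python sketch below combines the Moore's law doubling rule with the two render times quoted above. It rests on big simplifying assumptions that are ours, not Pixar's: that computer speed tracks transistor count exactly, and that both films were rendered on comparable amounts of hardware.

```python
def moores_law_factor(years, doubling_period_years=2.0):
    """Growth factor after the given number of years of steady doubling."""
    return 2.0 ** (years / doubling_period_years)

def implied_work_growth(year_a, hours_a, year_b, hours_b):
    """How much more computation a frame needs if hardware followed Moore's law."""
    hardware_speedup = moores_law_factor(year_b - year_a)
    return hardware_speedup * (hours_b / hours_a)

# Figures from the text: 2 hours per frame in 1995, 11 hours per frame in 2011.
print(moores_law_factor(2011 - 1995))             # 256.0
print(implied_work_growth(1995, 2, 2011, 11))     # 1408.0
```

Under those assumptions, a frame in 2011 asked the computer to do about 1,400 times as much work as a frame in 1995. The exact figure matters less than the conclusion: the added detail has grown faster than the hardware.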

The four main bottlenecks of this complete real-time workflow are rendering with raytracing, complicated shader networks with very high texture maps, animation with complicated rigs and deformers applied to the objects, and animation of complicated simulations for visual effects, all of which are discussed in the following sections.

Real-Time Rendering

Real-time rendering does exist in video games today. The video game engine that you play games in is a render engine showing you anywhere from 15 to more than 100 frames per second of rendered imagery. A lot of cheats and methods exist to allow these game engines to be able to render in real time, such as baking texture maps with some of the lighting and shadow information into the models. This baking of lighting and shadows works as long as the object does not move, because the baked shadow will not move. Reflections are not an easy render option, so cheats of prebaked reflection maps are created. Also there are limits on the resolution size of the texture maps that are applied to objects and characters. There are also geometry limits placed on objects and characters to make sure the process for the hardware to render in real time can be achieved. With each new game system and computer upgrade that is released, the game engines can allow for more-complicated shader networks, texture resolution, geometry resolution, and real-time light and shadow effects because the hardware can process more information.

Baking textures allows the artist to prerender any of the color information into the texture maps to be applied to the object.
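Here is a small Python sketch of the baking idea. It assumes the simplest possible lighting model (Lambert diffuse shading from one directional light) and represents a texture as a flat list of RGB texels with one unit-length surface normal per texel. Real bakers handle shadows, bounced light, and much more; the names here are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def bake_lighting(albedo_texels, normals, light_direction):
    """Multiply each texel's color by its diffuse light intensity, once, ahead of time.

    light_direction points from the surface toward the light and, like the
    normals, is assumed to be unit length.
    """
    baked = []
    for color, normal in zip(albedo_texels, normals):
        intensity = max(0.0, dot(normal, light_direction))
        baked.append(tuple(round(channel * intensity) for channel in color))
    return baked

albedo = [(200, 100, 50), (200, 100, 50)]
normals = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)]  # one texel faces the light, one faces away
print(bake_lighting(albedo, normals, (0.0, 0.0, 1.0)))
```

At run time the game engine only has to display the baked colors, which is why the trick is fast and also why the lighting cannot react when the object moves.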

So why can’t we get real-time rendering in other 3D animation fields? The answer has to do primarily with raytracing—a rendering technique that shoots rays from a camera to sample each pixel. When the rays hit an object in the scene, they are absorbed, reflected, or split with refractions in transparent materials. If reflected or refracted, they will need to create a new ray or rays, depending on what is needed, as demonstrated in Figure 9-6. All of these rays will continue to bounce or split until they are absorbed or the user defines the number of times the ray can move. This process allows for very realistic renders but takes a lot of time to compute. This does not even take into account factors such as translucency, subsurface sampling, global illumination, or raytraced shadows, which all incorporate the raytrace rendering algorithm explained in Chapter 7, “Lighting, Rendering, and Visual Effects.”
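The bounce-until-absorbed behavior is easy to see in code. The following is a deliberately tiny Python sketch, not a production renderer: the scene contains only spheres, color is reduced to a single brightness number, and rays only reflect (refraction, shadows, and the other effects listed above are left out). All names are hypothetical. The `max_depth` argument plays the role of the user-defined bounce limit.

```python
import math

BACKGROUND = 0.0  # brightness returned when a ray leaves the scene

class Sphere:
    def __init__(self, center, radius, emission, reflectivity):
        self.center = center
        self.radius = radius
        self.emission = emission          # brightness the surface gives off
        self.reflectivity = reflectivity  # 0 absorbs the ray, 1 is a perfect mirror

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def intersect(origin, direction, sphere):
    """Distance along a unit-length ray to the nearest hit, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits at or behind the ray's starting point
            return t
    return None

def trace(origin, direction, spheres, depth=0, max_depth=3):
    """Follow one ray, spawning a reflected ray at each reflective hit."""
    nearest = None
    for sphere in spheres:
        t = intersect(origin, direction, sphere)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, sphere)
    if nearest is None:
        return BACKGROUND
    t, sphere = nearest
    brightness = sphere.emission
    if sphere.reflectivity > 0 and depth < max_depth:
        hit = add(origin, scale(direction, t))
        normal = normalize(sub(hit, sphere.center))
        reflected = sub(direction, scale(normal, 2 * dot(direction, normal)))
        brightness += sphere.reflectivity * trace(hit, reflected, spheres,
                                                  depth + 1, max_depth)
    return brightness

scene = [
    Sphere((0.0, 0.0, 5.0), 1.0, emission=0.2, reflectivity=0.5),   # dim mirror ball
    Sphere((0.0, 0.0, -5.0), 1.0, emission=1.0, reflectivity=0.0),  # bright ball behind the camera
]
pixel = trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
```

Every pixel needs at least one call to `trace`, and every reflective hit triggers another. Add refraction and each hit can spawn two rays, so the work grows quickly with the bounce limit.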

So where are we today with real-time rendering? A few examples of real-time raytrace rendering have been publicly shown at conventions and on the Internet. Many hobbyists have been trying to create a usable form of real-time raytracing, but with no major universal breakthroughs yet. So we are still just waiting for the hardware to allow for it or for a new breakthrough in the realistic rendering world to emerge. For now, we still have long render times but are getting better results each year, and better render times with those results.

Figure 9-6: Example of raytracing

Real-Time Animation

Real-time animation is the ability to move a character rig or apply motion-capture data to a 3D character in real time. Animators do not like having to wait for character rigs or object rigs to update while they are animating, so for years rigging teams have been creating different levels of detail to enable real-time animation. For instance, dense and heavy deformers that simulate skin movement and skin collisions tend to slow down a rig. These components are not needed until the end of the workflow, when the character is fine-tuned. So animators work on movement without those components. Thanks to today’s hardware, that level of detail can remain relatively high while enabling animators to work in real time.

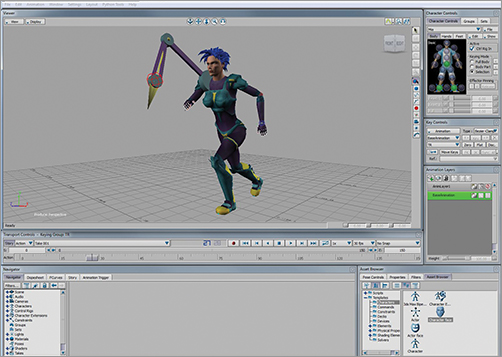

A software solution to real-time animation is Autodesk MotionBuilder, which enables animators to animate and edit in real time with fairly high levels of geometry. Figure 9-7 illustrates the difference between a low level of detail and a high level. MotionBuilder also allows for a simple transfer of motion-capture data to a rig in real time, as shown in Figure 9-8. This means that a director can see what a real actor is doing on the motion-capture stage and then see it applied to a 3D character within MotionBuilder.

Figure 9-7: A low-level geometry rig (left) and a high-level one (right)

Figure 9-8: A character in Autodesk MotionBuilder with motion-capture data applied

The one thing that is still a way off is to have no animator at all, and to have the computer create the motion for the character in some way. We are beginning to see this technique in what is called procedural animation with software such as Euphoria by NaturalMotion. This software places a virtual nervous system and artificial intelligence into a character to enable it to walk, run, jump, and react in a proper way to its environment. This technology is being used in video games and in some background characters for film and television. But again, as in rendering, we are still far from the time when an animator will not animate our main characters.

Other aspects of animation that would really benefit from a real-time workflow are simulation and visual effects. These effects are very taxing on a computer system. Some effects require millions of particles to be created and simulated, and effects such as cloth and water are even more complicated. Today’s companies are able to cache out simulations in hours that would have taken days just a few years ago. (See the “Using Caches to Save Time” sidebar.) So real-time simulations, although not available now, will become a reality one day soon.

Using Caches to Save Time

Caches in 3D animation are files that store data from simulated or baked objects. Creating caches can be time-consuming, but once you’ve cached a simulation, it will not need to be simulated again unless you make a change. Cache files can be very large, depending on the data being stored.
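The logic of a cache can be sketched in Python. This toy version keeps results in memory rather than in files on disk, and the "simulation" is just a falling particle; the class and function names are invented for the example. What it shows is the rule described above: the same settings are never simulated twice, and any change to the settings forces a fresh simulation.

```python
import hashlib
import json

class SimulationCache:
    """Store simulation results keyed by the settings that produced them."""

    def __init__(self, simulate):
        self._simulate = simulate
        self._store = {}
        self.simulation_runs = 0  # how many times the slow path actually ran

    @staticmethod
    def _key(settings, frame):
        payload = json.dumps({"settings": settings, "frame": frame}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, settings, frame):
        key = self._key(settings, frame)
        if key not in self._store:
            self._store[key] = self._simulate(settings, frame)
            self.simulation_runs += 1
        return self._store[key]

def falling_particle(settings, frame):
    """Stand-in for an expensive simulation: height of a dropped particle."""
    t = frame / settings["fps"]
    return settings["start_height"] - 0.5 * settings["gravity"] * t * t

cache = SimulationCache(falling_particle)
settings = {"fps": 24, "start_height": 10.0, "gravity": 9.8}
cache.get(settings, 12)   # simulated
cache.get(settings, 12)   # read back from the cache
cache.get({**settings, "gravity": 1.6}, 12)  # settings changed, so simulated again
print(cache.simulation_runs)
```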

Real-Time Motion Performance

Real-time motion performance is where the 3D animation industry would like to see this technology of motion capture go. It would mean the complete capture of an actor’s performance in just one take—the body motion, face shapes, and all appendages including fingers and toes. As of right now, we can capture all of these parts of a human performer, but we have to capture them separately. Most of today’s systems can capture most of a performance in one take, but captures are still not as complete as the 3D animation industry would like.

The movie Avatar and the video game L.A. Noire have both pushed the limits of motion performance capture in their respective industries. Avatar created many new real-time capture techniques that allowed director James Cameron to view proxy-level CG models moving in real time on a monitor while the human actors were on the capture set. The digital artists on Avatar were also able to capture the faces of the real actors, with a high degree of detail, at the same time as the body performances, which had not been a straightforward workflow before. L.A. Noire, developed for consoles by Team Bondi and published by Rockstar Games, created a whole new system of motion capture called MotionScan that allowed a great amount of detail in the facial animation that to this point remains unmatched. This system uses many HD cameras calibrated to a specific area to create a 3D mesh that deforms in real time.

The Future of Laser Scanning and Motion Capture

A real-time, hybrid version of laser scanning and motion capture enables very fast and accurate 3D scanning to capture shape, movement, and even texture with an attached camera. Drawbacks are that the quality is not great, you can capture only what is right in front of the scanner, and there’s no simple way to attach this laser-scanned geometry to a traditional 3D animation rig and geometry.

This technology holds much promise for the television world, however. I have seen tests in which real-time motion capture is taken from people wearing green-screen suits and interacting with human actors on a set, and seen the motion-capture people be replaced with digital characters in real time. This would allow actors to interact with animated characters in real time on a virtual set for a live television broadcast. This is an intriguing technology, and it will be interesting to see what it will do in the next few years.

Working in Virtual Studios

A virtual studio is typically a television studio equipped with software and hardware that enables real-time interaction of real people with a computer-generated environment and objects. The background of the real set is a green screen that will be chroma-keyed out of the final image and replaced with the computer-generated background.

Chroma-key is the compositing technique of green screen or blue screen removal.
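A very basic chroma-key can be sketched per pixel in Python. The rule used here (a pixel is "screen" if its green channel clearly dominates red and blue) and the threshold values are assumptions chosen for illustration; broadcast keyers are far more sophisticated, especially around hair and soft edges.

```python
def is_key_color(pixel, dominance=1.3, min_green=80):
    """True if the pixel looks like green screen (hypothetical thresholds)."""
    r, g, b = pixel
    return g >= min_green and g > dominance * max(r, b)

def chroma_key(foreground, background):
    """Replace green-screen pixels in the foreground with the background image."""
    return [
        [bg if is_key_color(fg) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]

studio = [[(20, 220, 30), (200, 150, 120)]]    # green screen, then a skin tone
virtual_set = [[(10, 10, 90), (10, 10, 90)]]   # computer-generated background
print(chroma_key(studio, virtual_set))
```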

The real studio cameras’ movements are tracked by the virtual studio system in one of three ways: through markers on the walls and floors that are viewed through the lens of the camera system, through a motion-capture system that tracks the movement of the cameras, or through a tracking pedestal that the cameras sit on and that records all camera movement. The lens zoom can also be tracked, so as the camera zooms in or out on the real person or object, the view of the virtual 3D environment changes accordingly.
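Matching the zoom comes down to giving the virtual camera the same field of view as the real lens. The standard pinhole relationship is shown in the Python sketch below; the sensor width and focal lengths are hypothetical values, and a real system would also account for lens distortion.

```python
import math

def horizontal_fov_degrees(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of an ideal lens, from the pinhole camera model."""
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

# As the tracked lens zooms in (longer focal length), the virtual camera's view narrows.
for focal_length in (18.0, 35.0, 70.0):
    print(focal_length, horizontal_fov_degrees(focal_length, sensor_width_mm=36.0))
```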

The film and VFX industries have been creating this type of effect by green-screening the talent and in postproduction adding a new background that is computer generated or from a different location. In contrast, on a virtual set there is no postproduction; it is all done in real time.

There are advantages and disadvantages to this type of system. One advantage is being able to provide a fast turnaround of the final footage because postproduction work is not needed. This system enables actors to have a better sense of the scene they are in because they can see the virtual set on monitors behind the scenes. Finally, it allows television studios to have many different sets on one green-screen stage and thereby cut down on the physical real estate needed to run numerous shows.

Disadvantages of virtual studios include low-resolution models and low texture resolution for the environment models, so it can be difficult to create highly photorealistic and complicated sets. In addition, computer-generated characters cannot be animated in real time, making it challenging for actors to interact with the virtual set. And interaction with other virtual elements can be difficult or impossible. The physical green-screen set is self-contained and immobile; it cannot be moved to different locations if needed.

The Essentials and Beyond

Looking at the industry trends, it is exciting to consider what will come next. Today’s trends include motion capture, stereoscopic 3D, and point-cloud data, and the main goal is to have them all run in real time one day. Real time is the big task that developers and hardware engineers are striving for. As 3D artists, we have more tools and faster render times each year, enabling us to focus on more-artistic functions of 3D animation instead of the technical side. That means we will continue to be able to push the boundaries of what is seen and created. This industry is truly exciting to work in right now because the 3D art form is still so young that you could one day create a new and defining technique or workflow that will be used around the world.

Review Questions

1. Which of these stereoscopic techniques uses red and cyan glasses?

A. Anaglyph glasses

B. Shutter glasses

C. Polarized glasses

D. Stereoscope

2. True or false: Real-time raytracing rendering is possible today.

3. Which of these new capabilities is the most critical to the future of 3D animation?

A. Motion performance

B. Stereoscopic 3D

C. Real-time workflow

D. Virtual studios

4. Which industry will the virtual studio most benefit?

A. Film

B. Visual effects

C. Television

D. Video games

5. Which of these options are advantages for marker-based motion capture?

A. Multiple-person capture

B. Accurate captures

C. High-fps captures

D. All of the above

6. What factors make real-time rendering impossible today?

A. Lack of sufficient technology

B. Use of complicated render options

C. The large size of texture files

D. All of the above

7. What is stereoscopic 3D?

A. An illusion of three-dimensional space on a two-dimensional plane

B. A reason to wear glasses

C. The use of 3D models in a computer

D. All of the above

8. Which video game was first to use MotionScan?

A. Halo

B. L.A. Noire

C. Super Mario Bros.

D. All of the above

9. A virtual studio is what?

A. A space that can be virtually shown anywhere the production wants it to be

B. A television set that is chroma-keyed out of the live frame and replaced with a virtual 3D set in real time

C. A production set that is virtually created in postproduction

D. The set the actors visualize while on a visual effects set

10. Which of these options is a disadvantage for markerless motion capture?

A. Difficult multiple-person capture

B. Large capture space

C. Fast setup

D. All of the above