CHAPTER 6

Experiment

Little changes limit disruption and allow people to learn.

Big changes are often perceived as threats. Big changes are also big bets. The greater the scale, the more that’s at stake and the greater the chance of business disruption. Even with a good idea, forethought, and planning, there’s always a chance that things won’t work as hoped. This reality can be summed up in a tweet: “No matter how detailed the plan, it cannot make the uncertain certain.”1 Even when a change doesn’t encompass thousands of people, disruption can be severe. So think small. Really small. Working small on influencing factors is often the only way to make progress on big problems.

I visited Rollo shortly after Jazmin, the vice president of engineering, had implemented a change that affected the entire development department and rippled out to most of the organization.

The software developers at Rollo worked in subgroups, each focused on a specific feature area for the company’s primary product. Most subgroups had a tech lead and five to seven developers. Testers were a separate group with their own manager. In theory the testers weren’t organized around features as the developers were. In practice, over time, they had built knowledge silos and expertise that mirrored the development groups.

Jazmin realized that the way work flowed into the development group was less than ideal. One group might be overwhelmed with feature requests and production issues while other groups had time on their hands.

Jazmin had an idea based on her recent study of queues. Her plan was to eliminate the subgroups and establish a single work queue for the developers and another one for the testers. Whenever a developer or tester finished a task, they’d pick up the next item in their respective queue. The workload within each group would smooth out. Based on her analysis of the current workflow, Jazmin was sure this change would increase throughput.

Unfortunately, Jazmin underestimated the depth of feature-specific knowledge and expertise required in each subgroup (and how much was concentrated with individuals in those groups), and she overestimated the overlap in feature understanding across groups. She also failed to anticipate the reactions from both developers and testers: Both groups resented the imposed process. For them it meant more work (learning new features, understanding feature-specific test harnesses) and more waiting (finding time with a colleague who could answer questions about a new-to-them feature). Everything took longer, and their sense of accomplishment dribbled away, leaving them feeling defeated and angry.

Jazmin’s insight about workflow and queues was spot-on. Her analysis was substantially correct; yet her change, which directly affected only about 50 people, caused disruption for most of the company.

A different approach to achieving the change would have served her better: working by experiment. With experiments the pressure is off to get it right the first time. There are many chances to identify the humps that need to be smoothed out—the shifts necessary to achieve an outcome. For example, had Jazmin involved a feature team in an experiment with a single queue, the siloed knowledge issue would have been evident right away. Better yet, she might have involved the team in identifying the factors that influenced uneven workflow (described in chapter 4) and then done an experiment based on an informed hypothesis (and probably with buy-in and eventual engagement).

Experiments in Complex Change

It might be easiest to start by saying what experiments are not, at least the way I use the term. These are not the experiments from high school chemistry class that (at least in my school) involved following a set of instructions to achieve a predetermined result. We weren’t discovering anything about chemistry; we were learning bench skills. Nor are these experiments like scientific experiments, with carefully isolated variables and controls. In solving complex organizational problems, there are too many factors that can’t be isolated and controlled for, and they are all tangled up with each other. Further, if you try one thing that results in learning or some other structural change, it is impossible to reproduce the results because the system itself is now different.

Nor are experiments pilot projects, which test or confirm a candidate solution with a small group or subset of the organization. Nor are they bet-the-farm, organization-wide gambles that try out an unproved solution (or one that worked in some other context) in real time.

As I’m using the term, experiments are tiny interventions intended to learn about how a system responds, to nudge things in a better direction, and to suggest what to experiment with next. These experiments are FINE grained: they provide fast feedback, are (ideally) inexpensive, require no permission, and are easy.2 They are more like the little tweaks I tried when adapting the environment back when I was a programmer.

By doing experiments, people get their fingerprints on the larger change. Experiments effectively handle some of the biggest pitfalls of top-down change. They instill ownership by involving the people affected by the change. That in turn reduces the likelihood that people will feel like victims. Experiments make it more likely that a change will fit the context. And because experiments have fast feedback loops, they lower the risk of overcorrection, which can lead to crazy-making oscillation.3

Keep It Small

Keep an eye on the end goal, but keep experiments small, even tiny. This notion can go against the grain. People are encouraged to go for grand goals and the big win at work and in life. Yet smaller steps provide an opportunity for near-term accomplishment and reassessing whether the big audacious goal is still necessary. Landing zones4 connect big hopes with advances that feel achievable. They link “near-neighbor states” with long-term desires. They help people think small.

One of my clients had experienced several big (and I mean big) customer outages that were traced back to quality problems with their product. Each outage had a VP assigned to manage the customer relationship and remedy. The teams tasked with improving product quality felt overwhelmed. The goal seemed impossible given the magnitude of the problem. The teams identified five states. They started with the bookends: best (which seemed unattainable) and worst (which would upset everyone and trigger damage clauses but was quite possible). Then they filled in the current state, which described factors within the group’s control that contributed to remedy cases.

Finally, they described the near-neighbor condition—the next nudge that seemed possible. They recognized that it wasn’t a permanent state, but it was an improvement that would feel like an accomplishment, as well as a pause point to catch breath, reassess, and plan how to achieve the next better thing. Rather than try to imagine all the intermediate achievements, they thought about the next two. This limit acknowledged that they might see new possibilities once they reached the first near-neighbor. SEE 6.1

6.1 Articulating landing zones identifies near-neighbor states and helps people think about what conditions are necessary to achieve different outcomes.

Once they had landing zones, they could think more effectively about how to get from now to next.

Work on Influencing Factors

These tiny changes may feel like dancing around the real problem instead of facing it directly, but many big problems cannot be addressed directly; progress comes by working on the influencing factors. The key is to find something you can act on now, without a budget, permission, or an act of God (or some large number of vice presidents). Don’t agonize over failure: ill effects will most likely be small and contained. Let the ideas that are not useful simply scroll by.

One of my clients had held a leading position in their market for some time. Now new technologies were changing the competitive landscape, and they needed to get a new version to market or risk losing their edge. They weren’t delivering fast enough, and their B2B customers told them so at every update meeting.

The cycle time to deliver a small feature was more than two months. With 30-plus teams and two independent testing departments, it took weeks to integrate, test, and deliver. They were well aware that shortening the schedule by dictate, by cutting the time for independent testing and integration, would guarantee worse quality. They also knew that they had to work obliquely, and in parallel, addressing the factors that contributed to long cycle times.

Fortunately, they had data, lots of it. The managers analyzed that data and determined shortest, average, median, and longest delivery times as measured from approval through build to delivery to the customer. Based on those categorizations, the managers split into three groups to investigate further. The groups reconvened to share observations and findings, then they made a list of influencing factors.

It was a long list full of vicious cycles. In one example, developers, responding to pressure, had started working on features with undefined needs. This led to errors, which led to rework, which led to missing goals, which led to more pressure. More errors turned up in integration, which meant diverting attention from current work and backtracking to fix bugs. This led to even more pressure. Instead of steadily reducing their work queue, they were increasing it. Managers looked for ways to stop the vicious cycles and gain some breathing space for the teams.

While they kept an eye on the outcome, they couldn’t wait two months to know if their interventions were working. They shaped experiments so that they would get feedback within days or a week or two at the most.

Shaping Experiments

Consider an organization’s system of rewards and recognition, for example, aimed at inspiring a more highly motivated, engaged, and productive workforce. An organization might have a formal system that includes such things as cash bonuses or stock rewards for meeting specific sales or performance targets, as well as an informal system that includes peer recognition and verbal and written thank-you messages from supervisors. Within that tangled web (which just scratches the surface in most organizations), any observed phenomenon might be both cause and effect, depending on what you’re looking at. And causation may shift over time. The initial cause may fade, but other factors arise that perpetuate the pattern. Because of this, I tend not to talk about causes but instead about influences. Many factors may influence a situation, and trying to find the singular factor is either impossible or not worth the effort. Try something very small and see what happens.

An experiment doesn’t have to be perfectly designed to be of value. We’re not talking about human trials. Experiments aim to try various things to see what works—but that doesn’t mean doing any old thing. Every experiment should have a rational hypothesis, informed by what has happened in the system up until now and the difference that is desired.5 Design experiments to manage risk, not eliminate it.

The following questions were tested by dozens of coaches and consultants to aid them in forming experiments that lead to learning (and limit disruption).

1. What factors may contribute to the current problem or situation? (Curiosity and observation will help you here; see chapter 8.) First remove blame and state the issue in neutral language. For example, rather than “lousy sales results,” say “sales.” A neutral term encourages the brain to think of factors that influence positively as well as those that influence in an undesirable direction. Brainstorm a list of factors that likely influence the situation. Refine the list so that it is easier to work with when it comes to observing effects. Identifying how to detect a change in “lousy sales results” can be confusing.

2. Which factors can you control or influence? Everything touches everything in complex systems. Look for the things that you can touch or act on. There is always something, if only the way you respond to a situation.

3. What is your rationale for choosing this experiment? Articulate the reasoning behind the action. Describe your theory in terms of how the experiment might influence the situation and how it is consistent with observations and possibilities.

4. What question are you trying to answer? Identify the useful learning that might come from the experiment. Relate the learning to the issue you are working with.

5. What can you observe about the situation as it is now? Describe your observations and what you can currently notice about influencing factors. Outcome is important, but so is being able to detect small changes in influencing factors—and these arise, and are detectable, in the present.

6. How might you detect that the experiment is moving in the desired direction? Describe what you expect to see, not only in terms of outcome but regarding influencing factors as well.

7. How might you detect that the experiment is moving in an undesirable direction? That is, how will you notice negative side effects? This is just as important as knowing whether something is working. Scanning for disconfirming data gives warning when something isn’t going as hoped or expected.

8. What is the natural time scale? That is, when might you expect to see impacts? If the expected results are in the distant future (many weeks), make the experiment smaller—much smaller. Fast feedback is key. Challenge yourself to go two steps down: from months, to weeks, to days, to hours. If the current form of the experiment will provide feedback in a month, how could you modify it to learn something in a day? If feedback won’t come for a week, what could give feedback in an hour?6

9. If things get worse, how will you recover the situation? Describe what steps would be necessary to dampen undesirable effects.

10. If things improve, how will you amplify the experiment? Designing a companywide rollout for something unproven can be remarkably wasteful (even though people do it all the time). An experiment at best indicates that an idea is helpful in a particular context and may be useful in others. Before spreading an idea, method, process, or practice, consider context. Recognize in what ways the people, work, environment, and culture are similar, as well as how they are different. Differences may require adaptation or render a practice useless.

Detect Effects

In my experience, questions 6 and 7 are often the most difficult to answer. Many of us have been trained to think about measurement with a capital M, and we look for big things to count related to the big problem. That’s not what is needed with experiments. Instead look for indicators that the experiment is having an effect, even a very subtle effect.

At another client, when teams didn’t produce the desired number of deliverables (which was established without significant team involvement), the initial management response had been to pressure the teams to deliver to commitments (even though the teams hadn’t made the commitments). Our approach was to work with the managers but also to see what we could shift with the people making commitments, a group of product owners who reported up through a different department.

The first experiment involved posting flip chart pages with team capacity data in the conference room where the commitment meetings took place. The product owners glanced at it, then proceeded to overcommit.

The next experiment involved providing the product owners with physical tokens that represented team capacity. Each time they added a request for a team, tokens were removed. The pile of tokens was gone before all the requests were accounted for. The response was… interesting: not quite wailing and tearing of hair, but close. It also started a discussion, which led to different thinking, which led to different actions, and, eventually, to finding another influencing factor. This wasn’t an outcome measure, and it wouldn’t have been conducive to traditional measurement programs. But it was easy to notice in the meeting and was quite a useful indicator that our experiment had an effect; it also helped unravel the tangle of influences behind overcommitments.

Accept Failures (They’ll Be Small)

If it is difficult to imagine an experiment failing, your experiment might be masquerading as a mini–rollout plan. Experiments don’t come with a guarantee of success, unless learning is the goal. Learning and innovation both require stepping into an unknown. Mistakes happen. Ideas don’t pan out. That is a normal part of life, projects, and change. Failure, however, isn’t something that’s rewarded in many cultures and organizations.

One of the ways companies (quite reasonably) protect against failure is through approval processes that require financial analysis proving that the benefits outweigh the risks and costs. This makes sense for big bets, such as entering new markets and launching new products, but that level of rigor applied to new ideas and small experiments stifles innovation. Not much new emerges when new ideas are subjected to rigorous standards of proof and financial viability. Many “failures” and much learning, conversation, and collaboration precede almost every innovation. Disruptive creativity seldom springs fully formed from a sole inventor’s head (or comes with a stellar cost/benefit analysis).

Pay close attention to how managers respond to failed experiments—and then design experiments to shift responses toward a learning orientation. Treat a failed experiment with curiosity.

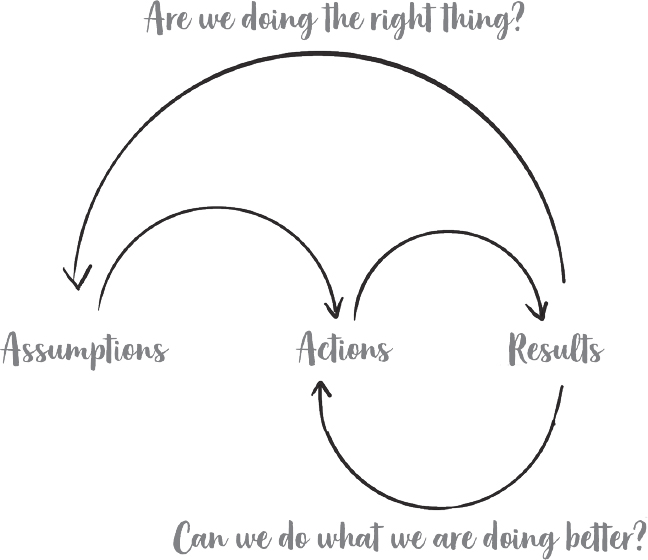

Add Double-Loop Learning

Single-loop learning asks, How can we do what we are doing better? Double-loop learning asks, Are we doing the right thing? and involves scrutinizing beliefs, thinking, and assumptions. SEE 6.2 Transformational improvement and significant learning come from making beliefs, assumptions, and thinking explicit, then testing them and experimenting.

6.2 Single-loop learning asks, Can we do what we are doing better? Examine results and adjust action. Double-loop learning asks, Are we doing the right thing? It examines the assumptions and thinking behind an action and may lead to different actions.

Jumping into the second loop isn’t second nature, but it’s helpful with experiments and any sort of improvement or change. The following questions can help surface and test assumptions.

- What would have to be true for ____________ to work?
- [Practice or action] makes sense when ____________.
- [Practice or action] will work perfectly when ____________.
- [Practice or action] will work well enough when ____________.
- [Practice or action] will be harmful when ____________.
- What do we know to be true? How do we know that?
- What do we assume to be true? Can we confirm that?
- Why do we believe that?
- What is untrue, based on our investigation?
- If an outsider watched us, what would they say we are trying to do?
- What is the gap? How can we make the gap smaller?
- How could we make things worse?

The questions prompt people to examine assumptions, take a broader view, and look at things differently. The last question is a particularly interesting one because, along with challenging assumptions, it can reveal control points and useful areas for another experiment.

KEY TAKEAWAYS

- Complex problems don’t often come with well-known solutions. Even when there are fixes that have worked elsewhere, implementing a new process or method often requires learning as well as changes to auxiliary processes and policies. Explore those with experimentation.

- Big changes cause big disruptions. Small changes limit disruption, fear, and ill effects. Experiments support learning and winnow out unsuitable ideas without huge investment.

- Experiments are a way to address massively entangled causation. Many problems can’t be addressed head-on because they result from many factors interacting with one another across the entire organization. Experiments work on the influencing factors, changing system behavior and results.

- Experiments in complex environments (that is, most situations that involve humans) don’t occur under lab conditions. Causation isn’t direct, or even knowable, and trying to control all the variables is futile. It’s okay, and even desirable, to run multiple experiments. Try, learn, and see what moves the situation in a better direction.

- Every experiment needs a rationale: an articulation of why it is consistent with what has happened and what might happen. Look for disconfirming data as well as confirming data. Consider how to shut things down if they go wrong.

- Experiments are a test of organizational tolerance for learning and innovation. Management’s inability to accept failure at this level bodes ill for both learning potential and the positive evolution of the system.

- Experiments can help people develop tolerance of failure and teach them to contain risk rather than try to eliminate it.

- Involving people in experiments helps them learn and take ownership. “Experiment” conveys We’re going to try something. It doesn’t imply that life is going to change irrevocably. At the end of an experiment, choose whether to continue, amplify, or drop it.