Chapter 9

Security Design in the Cloud

The only truly secure system is one that is powered off, cast in a block of concrete, and sealed in a lead-lined room with armed guards.

—Gene Spafford, professor, Purdue University

Prior to cloud computing, buyers of commercial software products did not demand the level of security from vendors that they do today. Software that was purchased and installed locally within the enterprise provided various security features for the buyer to configure in order to secure the application. The vendors would make it easy to integrate with enterprise security data stores such as Active Directory and provide single sign-on (SSO) capabilities and other features so that buyers could configure the software to meet their security requirements. These commercial software products were run within the buyer’s perimeter behind the corporate firewall. With cloud computing, vendors have a greater responsibility to secure the software on behalf of the cloud consumers. Since consumers are giving up control and often allowing their data to live outside of their firewall, they are now requiring that the vendors comply with various regulations. Building enterprise software in the cloud today requires a heavy focus on security. This is a welcome change for those of us who have been waving the red flag the past several years about the lack of focus on application security in the enterprise. There is a common myth that critical data in the cloud cannot be secure. The reality is that security must be architected into the system regardless of where the data lives. It is not a matter of where the data resides; it is a matter of how much security is built into the cloud service.

This chapter will discuss the impacts of data as it pertains to cloud security and both the real and perceived ramifications. From there, we will discuss how much security is required—an amount that is different for every project. Then we will discuss the responsibilities of both the cloud service provider and the cloud service consumer for each cloud service model. Finally, we will discuss security strategies for these focus areas: policy enforcement, encryption, key management, web security, application programming interface (API) management, patch management, logging, monitoring, and auditing.

The Truth about Data in the Cloud

Many companies are quick to write off building services in the public cloud because of the belief that data outside of their firewalls cannot be secured and cannot meet the requirements of regulations such as PCI DSS (Payment Card Industry Data Security Standard) and HIPAA (Health Insurance Portability and Accountability Act). The fact is, none of these regulations declares where the data can and cannot reside. What the regulations do dictate is that PII (personally identifiable information) such as demographic data, credit card numbers, or health-related information must be encrypted at all times. These requirements can be met regardless of whether the data lives in the public cloud.

The next issue deals with a government’s ability to seize data from cloud service providers. In the United States, the USA Patriot Act of 2001 was made law shortly after the 2001 terrorist attacks on the World Trade Center. This law gave the U.S. government unprecedented access to request data from any U.S.-based company regardless of what country its data center is in. In other words, any U.S.-based company with a global presence is required by law to comply with the U.S. government’s request for data even if the data contains information for non-U.S. citizens on servers outside of U.S. soil. Many other countries have similar laws even though these laws are not as highly publicized as the Patriot Act.

Due to laws like the Patriot Act, many companies make the assumption that they just cannot put their data at risk by allowing a cloud service provider to store the data on their behalf. This assumption is only partially true, and the risk can easily be mitigated. First, the government can request data from any company regardless of whether the data is in the cloud. Keeping the data on-premises does not protect a company from being subject to a government request for data. Second, it is true that the odds of a company being impacted by a government search increase if the data is in a shared environment with many other customers. However, if a company encrypts its data, the government would have to request that the company decrypt the data so that it can be inspected. Encrypting data is the best way to mitigate the risks of government requests for data and invalidates the myth that critical data can’t live in the public cloud.

In June 2013, Edward Snowden, a contractor working for the National Security Agency (NSA) in the United States, leaked documents that revealed that the NSA was extracting large volumes of data from major U.S. companies such as Verizon, Facebook, Google, Microsoft, and others. Many people interpreted this news to mean that the public cloud was no longer safe. What many people did not realize was that much of the data that was retrieved from these big companies’ data centers was not hosted in the public cloud. For example, Verizon runs its own data centers and none of the phone call metadata that the government retrieved from Verizon was from servers in the cloud. The same holds true for Facebook, which owns its own private cloud. The reality is that no data is safe anywhere from a request for data by government agencies when national security is at stake.

How Much Security Is Required

The level of security required for a cloud-based application or service depends on numerous factors such as:

- Target industry

- Customer expectations

- Sensitivity of data being stored

- Risk tolerance

- Maturity of product

- Transmission boundaries

The target industry often determines what regulations are in scope. For example, if the cloud services being built are in the health care, government, or financial industries, the level of security required is usually very high. If the cloud services are in the online games or social web industries, the level of security required is likely more moderate. Business-to-business (B2B) services typically require a higher level of security, because most companies consuming cloud services require that all third-party vendors meet a minimum set of security requirements. Consumer-facing services or business-to-consumer (B2C) services usually offer a use-at-your-own-risk service with terms of service that focus on privacy but make very limited promises about security and regulations. For example, Facebook has a terms-of-service agreement that states that the security of your account is your responsibility. Facebook gives you a list of 10 things you agree to if you accept its terms of service.

Customer expectation is an interesting factor when determining how much security and controls to put in place. Sometimes it is the customers’ perception of the cloud that can drive the security requirements. For example, a company may plan on building its solution 100 percent in a public cloud but encounters an important, large client that refuses to have any of its data in the public cloud. If the client is important enough, the company may decide to adopt a hybrid cloud approach in order not to lose this customer even though there might be no reason other than customer preference driving that decision. This is common for both retail and health care customers. In the two start-ups that I worked at, both were deployed 100 percent in the public cloud until we encountered some very profitable and important customers that forced us to run some of our services in a private data center.

The sensitivity of the data within cloud services has a major impact on the security requirements. Social media data such as tweets, photos from photo-sharing applications like Instagram and Pinterest, and Facebook wall messages are all public information. Users agree that this information is public when they accept the terms of service. These social media services do not have the requirement to encrypt the data at rest in the database. Companies processing medical claims, payments, top-secret government information, and biometric data will be subject to regulatory controls that require encryption of data at rest in the database and a higher level of process and controls in the data center.

Risk tolerance can drive security requirements, as well. Some companies may feel that a security breach would be so disruptive to their businesses, create such bad public relations, and damage customer satisfaction so much that they apply the strongest security controls even though the industry and the customers don’t demand it. A start-up or smaller company may be very risk tolerant and rank getting to market quickly at a low cost as a higher priority than investing heavily in security. A larger company may rank strong security controls higher than speed-to-market because of the amount of publicity it would receive if it had a breach and the impact of that publicity on its shareholders and customers.

The maturity of the product often drives security requirements, as well. Building products is an evolutionary process. Often, companies have a product roadmap that balances business features with technical requirements like security, scalability, and so on. Many products don’t need the highest level of security and scalability on day one but will eventually need to add these features over time as the product matures and gains more traction in the marketplace.

Transmission boundaries refer to what endpoints the data travels to and from. A cloud service that is used internally within a company where both endpoints are contained within the company’s virtual private network (VPN) will require much less security than a cloud service that travels outside of the company’s data center over the Internet. Data that crosses international boundaries can be required to address country-specific security requirements. The U.S.-EU Safe Harbor regulation requires U.S. companies to comply with the EU Data Protection Directive controls in order to transfer personal data outside the European Union. As of the writing of this book, U.S. companies self-certify. After the recent NSA scandal, this law could change in the near future and a formal certification may be required.

Once a company considers these factors and determines how much security is required for its cloud service, the next questions to ask are who is going to do the work (build versus buy), how will the security requirements be met, and by when is it needed. For each security requirement there should be an evaluation to determine if there is a solution available in the marketplace or if the requirement should be met by building the solution internally. There are many open source, commercial, and Software as a Service (SaaS)–based security solutions in the marketplace today. Security is a dynamic field and keeping software current enough to address the most recent security threats and best practices is a daunting task. A best practice is to leverage a combination of open source or commercial products or Security as a Service (SecaaS)–based software to meet requirements such as SSO, federated security, intrusion detection, intrusion prevention, encryption, and more.

Responsibilities for Each Cloud Service Model

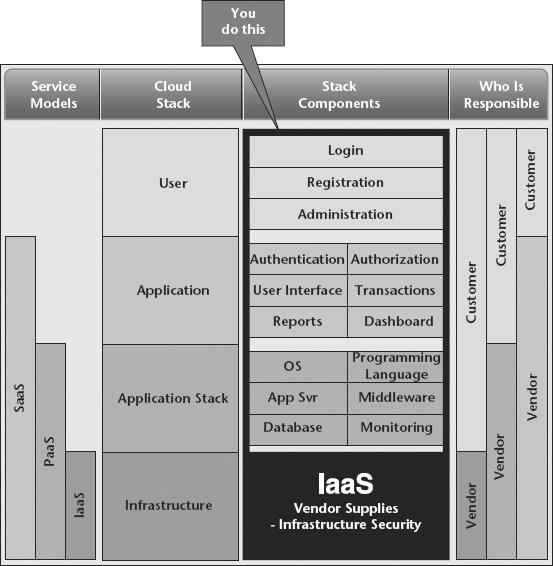

When leveraging the cloud, the cloud service consumer (CSC) and the cloud service provider (CSP) have a shared responsibility for securing the cloud services. As shown in Figure 9.1, the further up the cloud stack consumers go, the more they shift the responsibility to the provider.

Figure 9.1 Infrastructure as a Service

The cloud stack consists of four categories. At the bottom is the infrastructure layer, which is made up of physical things like data centers, server-related hardware and peripherals, network infrastructure, storage devices, and more. Companies that are not leveraging cloud computing or are building their own private clouds have to provide the security for all of this physical infrastructure. For those companies leveraging a public cloud solution, the public cloud service provider manages the physical infrastructure security on behalf of the consumer.

Some companies might cringe at the thought of outsourcing infrastructure security to a vendor, but the fact of the matter is most public Infrastructure as a Service (IaaS) providers invest a substantial amount of time, money, and human capital into providing world-class security at levels far greater than most cloud consumers can feasibly provide. For example, Amazon Web Services (AWS) has been certified or audited against ISO 27001, HIPAA, PCI, FISMA, SSAE 16, FedRAMP, ITAR, FIPS, and other standards and regulations. Many companies would be hard pressed to invest in that amount of security and auditing within their data centers.

As we move up to the application stack layer, where PaaS solutions take root, we see a shift in responsibility to the providers for securing the underlying application software, such as operating systems, application servers, database software, and programming languages like .NET, Ruby, Python, Java, and many others. There are a number of other application stack tools that provide on-demand services like caching, queuing, messaging, e-mail, logging, monitoring, and others. In an IaaS service model, the service consumer would own managing and securing all of these services, but with Platform as a Service (PaaS), this is all handled by the service provider in some cases. Let’s elaborate.

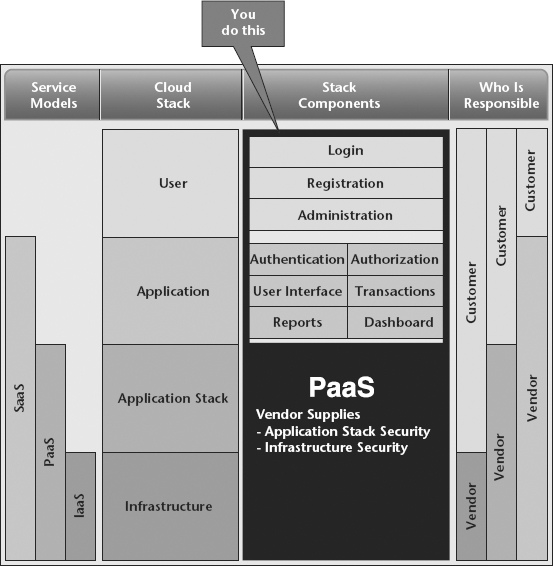

There are actually six distinct deployment models for PaaS, as shown in Figure 9.2.

Figure 9.2 Platform as a Service

The public-hosted deployment model is where the provider provides the IaaS in the provider’s own public cloud. Examples are Google App Engine, Force.com, and Microsoft Azure. In this model, the provider is responsible for all of the security for both the infrastructure and application stack. In some cases, the PaaS provider runs on top of another provider’s infrastructure. For example, Heroku and Engine Yard both run on AWS. In the consumers’ eyes, the PaaS provider is responsible for all of the infrastructure and application stack security, but in reality, the PaaS provider manages the application stack security and leverages the IaaS provider to provide the infrastructure security. In the public-hosted model, only the PaaS provider is responsible for securing the actual PaaS software. The PaaS software is a shared service consumed by all PaaS consumers.

The public-managed deployment model is where the PaaS is deployed on a public cloud of the CSC’s choice and the CSC hires the PaaS provider or some other third party to manage the PaaS software and the application stack on its behalf. (Note: Not all PaaS providers have the ability to run on multiple public clouds.) In the public-managed model, the PaaS software needs to be managed by the customer, meaning it is up to the customer and its managed service provider to determine when to update the PaaS software when patches and fixes come out. Although the consumer shifts the responsibility of security for the PaaS software and the application stack to the managed service provider, the consumer is still involved in the process of updating software. In the public-hosted model, this all happens transparently to the consumer.

The public-unmanaged deployment model is where the PaaS provider deploys on an IaaS provider’s public cloud, and the consumer takes the responsibility of managing and patching both the PaaS software and application stack. This is a common deployment model within enterprises when a hybrid cloud is chosen. Often with a hybrid PaaS solution, the consumer must choose a PaaS that can be deployed in any cloud, public or private. PaaS providers that meet this requirement only deliver PaaS as software and do not handle the infrastructure layer. An example of this model would be deploying an open-source PaaS like Red Hat’s OpenShift on top of an open source IaaS solution like OpenStack, which can be deployed both within the consumer’s data center for some workloads and in a public cloud IaaS provider like Rackspace for other workloads.

The private-hosted model is where a private PaaS is deployed on an externally hosted private IaaS cloud. In this model the consumer shifts the responsibility of the infrastructure layer to the IaaS provider, but still owns managing and securing the application stack and the PaaS software. An example of this model would be deploying an open source PaaS like Cloud Foundry on top of an open source IaaS solution like OpenStack, hosted by a private cloud IaaS provider like Rackspace. (Note: Rackspace provides both public and private IaaS solutions.)

The private-managed model is similar to the public-managed model except that the IaaS cloud is a private cloud, either externally hosted or within the consumer’s own data center. If the IaaS cloud is externally hosted, then the only difference between the private-hosted and the private-managed model is that the consumer hires a service provider to manage and secure the PaaS and application stack and relies on the IaaS provider to manage and secure the infrastructure layer. If the IaaS cloud is internal, then the consumer owns the responsibility for managing and securing the infrastructure layer, while the managed service provider manages and secures the PaaS software and the application stack.

The private-unmanaged model is where the consumer is in charge of securing the entire cloud stack plus the PaaS software. In reality, this is a private IaaS with the addition of managing a PaaS solution in the data center. This is a popular option for enterprises that want to keep data out of the public cloud and want to own the security responsibility. Another reason is the consumer may want to run on specific hardware specifications not available in the public cloud or it may want to shift certain workloads to bare-metal (nonvirtualized) machines to gain performance improvements. An example of this model is deploying a .NET PaaS like Apprenda on top of OpenStack running in an internal private cloud.

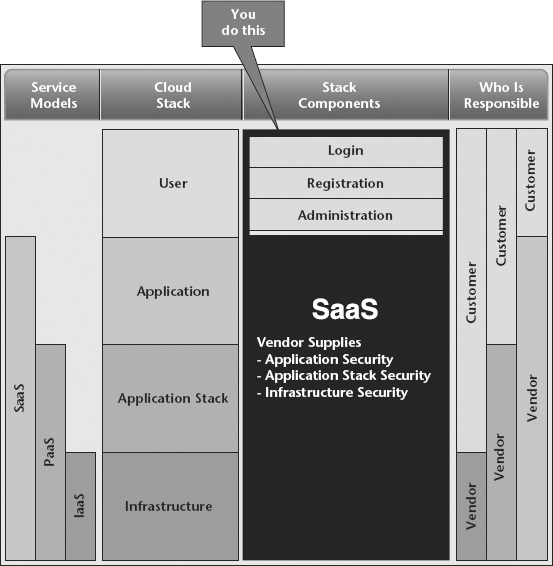

The next layer up is the application layer. This is where application development must focus on things like using secure transmission protocols (HTTPS, SFTP, etc.), encrypting data, authenticating and authorizing users, protecting against web vulnerabilities, and much more. For SaaS solutions, the responsibility for application security shifts to the provider as shown in Figure 9.3.

Figure 9.3 Software as a Service

At the top of the stack is the user layer. At this layer the consumer performs user administration tasks such as adding users to a SaaS application, assigning roles to a user, granting access to allow developers to build on top of cloud services, and so on. In some cases, the end user may be responsible for managing its own users. For example, a consumer may build a SaaS solution on top of a PaaS or IaaS provider and allow its customers to self-manage access within their own organizations.

To sum up the security responsibilities, choosing cloud service and cloud deployment models determines which responsibilities are owned by the provider and which are owned by the consumer. Once a consumer determines what the provider is responsible for, it should then evaluate the provider’s security controls and accreditations to determine whether the provider can meet the desired security requirements.

Security Strategies

Most applications built in the cloud, public or private, are distributed in nature. The reason many applications are built like this is so they can be programmed to scale horizontally as demand goes up. A typical cloud architecture may have a dedicated web server farm, a web service farm, a caching server farm, and a database server farm. In addition, each farm may be redundant across multiple data centers, physical or virtual. As one can imagine, the number of servers in a scalable cloud architecture can grow very large. This is why a company should apply the following three key strategies for managing security in a cloud-based application:

- Centralization

- Standardization

- Automation

Centralization refers to the practice of consolidating a set of security controls, processes, policies, and services and reducing the number of places where security needs to be managed and implemented. For example, a common set of services should be built for allowing users to be authenticated and authorized to use cloud services as opposed to having each application provide different solutions. All of the security controls related to the application stack should be administered from one place, as well.

Here is an analogy to explain centralization. A grocery store has doors in two parts of the building. There are front doors, which are the only doors that customers (users) can enter through, and there are back doors, where shipments (deployments) and maintenance workers (systems administrators) enter. The rest of the building is double solid concrete walls with security cameras everywhere. The back door is heavily secured and only authorized personnel with the appropriate badge can enter. The front door has some basic protections like double pane glass, but any customer willing to spend money is welcome during hours of operation. If the grocery store changes its hours of operation (policy), it simply changes a time setting in the alarm system as to when the doors lock. This same mentality should be applied to cloud-based systems. There should be a limited number of ways for people or systems to get access. Customers should all enter the same way through one place where they can be monitored. IT people who need access should have a single point of entry with the appropriate controls and credentials required to enter parts of the system that no others can. Finally, policies should be centralized and configurable so changes can be made and tracked consistently and quickly.

Standardization is the next important strategy. Security should be thought of as a core service that can be shared across the enterprise, not a solution for a specific application. Each application having its own unique security solutions is the equivalent of adding doors all over the side of the building in the grocery store analogy. Companies should look at implementing industry standards for accessing systems, such as OAuth and OpenID, when connecting to third parties. Leveraging standard application protocols like Lightweight Directory Access Protocol (LDAP) for querying and modifying directory services like Active Directory or ApacheDS is highly recommended, as well. In Chapter 10, “Creating a Centralized Logging Strategy,” we will discuss standards around log and error messages.

Standardization applies to three areas. First, we should subscribe to industry best practices when it comes to implementing security solutions and selecting things like the method of encryption, authorization, API tokenization, and the like. Second, security should be implemented as a stand-alone set of services that are shared across applications. Third, all of the security data outputs (logs, errors, warnings, debugging data, etc.) should follow standard naming conventions and formats (we will discuss this more in Chapter 10).

The third strategy is automation. A great example of the need for automation comes from the book called The Phoenix Project. This book tells a fictional, yet relevant, story of a company whose IT department was always missing dates and never finding time to implement technical requirements such as a large number of security tasks. Over time, they started figuring out what steps were repeatable so that they could automate them. Once they automated the process for creating environments and deploying software, they were able to implement the proper security controls and process within the automation steps. Before they implemented automation, development and deployments took so much time that they never had enough time left in their sprints to focus on nonfunctional requirements such as security.

Another reason automation is so important is that, in order to scale automatically as demand increases or decreases, virtual machines and code deployments must be scripted so that no human intervention is required to keep up with demand. All cloud infrastructure resources should be created from automated scripts to ensure that the latest security patches and controls are automatically in place as resources are created on demand. If provisioning new resources requires manual intervention, the risk of exposing gaps in security increases due to human error.

Areas of Focus

In addition to the three security strategies, a strategy I call PDP, which consists of three distinct actions, must be implemented. Those three actions are:

- Protection

- Detection

- Prevention

Protection is the first area of focus and is one that most people are familiar with. This is where we implement all of the security controls, policies, and processes to protect the system and the company from security breaches. Detection is the process of mining logs, triggering events, and proactively trying to find security vulnerabilities within the systems. The third action is prevention, where if we detect something, we must take the necessary steps to prevent further damage. For example, if we see a pattern where a large number of failed attempts are being generated by a particular IP, we must implement the necessary steps to block that IP to prevent any damage. As an auditor once told me, “It’s nice that your intrusion detection tools detected that these IPs are trying to log into your systems, but what are you going to do about it? Where is the intrusion prevention?”
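The auditor's point, that detection must feed prevention, can be sketched in a few lines. The following is a minimal illustration, not a production intrusion prevention system; the class name `FailedLoginGuard`, the threshold of five failures, and the 60-second window are all assumptions made for the example.

```python
from collections import defaultdict, deque


class FailedLoginGuard:
    """Hypothetical guard: detects bursts of failed logins and blocks the IP."""

    def __init__(self, max_failures=5, window_seconds=60.0):
        # Both limits are illustrative; real values depend on the system.
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self.blocked = set()

    def record_failure(self, ip, timestamp):
        """Detection: count recent failures. Prevention: block at the threshold."""
        recent = self._failures[ip]
        recent.append(timestamp)
        # Discard failures that have aged out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_failures:
            self.blocked.add(ip)
        return ip in self.blocked

    def is_allowed(self, ip):
        """Called before processing a login attempt from this IP."""
        return ip not in self.blocked
```

In a real deployment the block list would more likely be pushed to a firewall or web application firewall rule than held in application memory, but the shape is the same: the detection step produces an action, not just a log entry.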

In order to secure cloud-based systems, there are a number of areas to focus the security controls on. Here are some of the most important areas:

- Policy enforcement

- Encryption

- Key management

- Web security

- API management

- Patch management

- Logging

- Monitoring

- Auditing

Policy Enforcement

Policies are rules that are used to manage security within a system. A best practice is to make these rules configurable and decouple them from the applications that use them. Policies are maintained at every layer of the cloud stack. At the user layer, access policies are often maintained in a central data store like Active Directory, where user information is maintained and accessed through protocols like LDAP. Changes to user data and rules should be managed within the central data store and not within the application, unless the rules are specific to the application.

At the application layer, application-specific rules should also be maintained in a centralized data store abstracted from the actual application. The application should access its own central data store, which can be managed by a database, an XML file, a registry, or some other method, via API so that if the policies change, they can be changed in one place.
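As a rough sketch of this decoupling, the example below keeps a policy value in one store and has the application read it through a small API instead of hardcoding it. The names `PolicyStore`, `LoginService`, and `min_password_length` are hypothetical, and the in-memory JSON store stands in for whatever database, file, or registry actually holds the policies.

```python
import json


class PolicyStore:
    """Hypothetical central policy store; a real one might sit behind a web API."""

    def __init__(self, raw_json):
        self._policies = json.loads(raw_json)
        self.version = 1  # bumped on every change so changes can be tracked

    def get(self, name):
        return self._policies[name]

    def update(self, name, value):
        self._policies[name] = value
        self.version += 1


class LoginService:
    """Application code: no policy values are hardcoded here."""

    def __init__(self, store):
        self._store = store

    def password_ok(self, password):
        # The rule is looked up at call time, so a policy change takes
        # effect without touching or redeploying the application.
        return len(password) >= self._store.get("min_password_length")
```

Because every application asks the store for the rule, changing the minimum length from 8 to 12 is a single update in one place.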

At the application stack layer, operating systems, databases, application servers, and development languages all are configurable already. The key to policy enforcement at this level is automation. In an IaaS environment, this is accomplished by scripting the infrastructure provisioning process. A best practice is to create a template for each unique machine image that contains all of the security policies around access, port management, encryption, and so on. This template, often referred to as the “gold image,” is used to build the cloud servers that run the application stack and the applications. When policies change, the gold image is updated to reflect those changes. Then new servers are provisioned while the old, outdated servers are deprovisioned. This entire process can be scripted to be fully automated to eliminate human error. This method is much easier than trying to upgrade or patch existing servers, especially in an environment that has hundreds or thousands of servers.
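The replace-rather-than-patch cycle described above can be modeled in a small simulation. This is only a sketch of the control flow: `GoldImage` and `Fleet` are hypothetical names, and the `provision` method stands in for a call to a cloud provider's provisioning API, which is not shown.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class GoldImage:
    """Template carrying the security policies baked into every server."""
    version: int
    open_ports: tuple
    disk_encryption: bool


class Fleet:
    """Simulated server farm; servers are only ever built from the gold image."""

    def __init__(self, image, size):
        self.image = image
        self.size = size
        self.servers = {}  # server id -> version of the image it was built from
        self._ids = count()

    def provision(self):
        # Stand-in for a real provisioning call to a cloud provider.
        server_id = f"srv-{next(self._ids)}"
        self.servers[server_id] = self.image.version
        return server_id

    def scale_to_size(self):
        while len(self.servers) < self.size:
            self.provision()

    def roll_out(self, new_image):
        """Replace every server built from an older image, one at a time."""
        self.image = new_image
        for server_id, version in list(self.servers.items()):
            if version < new_image.version:
                self.provision()              # bring up the replacement first
                del self.servers[server_id]   # then retire the outdated server
```

After `roll_out`, no server in the fleet carries the old policy set, and no server was modified in place.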

Recommendation: Identify policies at each layer of the cloud stack. Isolate policies into a centralized data store. Standardize all access to policies (e.g., API, standard protocols, or scripts). Automate policies when the steps are repeatable (e.g., gold image, deployments).

Encryption

Sensitive data processed in the cloud should always be encrypted. Any message sent over the Internet that contains sensitive data should use a secure protocol such as HTTPS (HTTP over TLS, the successor to SSL) or SFTP. But securing data in transit is not enough. Some attributes will need to be encrypted at rest. At rest refers to where the data is stored. Often data is stored in a database, but sometimes it is stored as a file on a file system.

Encryption protects data from being read by the naked eye, but it comes at a cost. For an application to understand the contents of the data, the data must be decrypted before it can be read, which adds time to the process. Because of this, simply encrypting every attribute is often not feasible. What is required is that personally identifiable information (PII) data is encrypted. Here are the types of data that fall under PII:

- Demographics information (full name, Social Security number, address, etc.)

- Health information (biometrics, medications, medical history, etc.)

- Financial information (credit card numbers, bank account numbers, etc.)

It is also wise to encrypt any attributes that might give hints to an unauthorized user about system information that could aid in attacks. Examples of this kind of information are:

- IP addresses

- Server names

- Passwords

- Keys

There are a variety of ways to handle encryption. In a database, sensitive data can be encrypted at the attribute level, the row level, or the table level. At one start-up that I worked at, we were maintaining biometric data for employees of our corporate customers who had opted into our health and wellness application. The biometric data was only needed the first time the employee logged onto the system. What we decided to do was create an employee-biometric table that related back to the employee table that isolated all of the PII data to a single table and encrypted that table only. This gave us the following advantages:

- Simplicity. We could manage encryption at the table level.

- Performance. We kept encryption out of the employee table, which was accessed frequently and for many different reasons.

- Traceability. It is much easier to produce proof of privacy since all attributes in scope are isolated and all API calls to the table are tracked.
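A rough sketch of this isolation pattern follows, using SQLite only because it ships with Python. The table and column names are hypothetical, and the sketch encrypts the payload column of the isolated table instead of relying on a database engine's table-level encryption feature. The `encrypt` and `decrypt` callables are supplied by the caller and are assumed to wrap a vetted cryptographic library; the sketch deliberately implements no cryptography of its own.

```python
import sqlite3


class BiometricStore:
    """Hypothetical data-access layer: PII lives in one table, every call is logged."""

    def __init__(self, conn, encrypt, decrypt):
        self._conn = conn
        self._encrypt = encrypt  # bytes -> bytes, provided by the caller
        self._decrypt = decrypt  # bytes -> bytes, inverse of encrypt
        conn.executescript("""
            CREATE TABLE employee (id INTEGER PRIMARY KEY, department TEXT);
            CREATE TABLE employee_biometric (
                employee_id INTEGER PRIMARY KEY REFERENCES employee(id),
                payload BLOB NOT NULL  -- encrypted; never stored in clear text
            );
            CREATE TABLE biometric_access_log (
                employee_id INTEGER, action TEXT, actor TEXT
            );
        """)

    def _log(self, employee_id, action, actor):
        self._conn.execute(
            "INSERT INTO biometric_access_log VALUES (?, ?, ?)",
            (employee_id, action, actor),
        )

    def save(self, employee_id, biometrics, actor):
        blob = self._encrypt(biometrics.encode("utf-8"))
        self._conn.execute(
            "INSERT OR REPLACE INTO employee_biometric VALUES (?, ?)",
            (employee_id, blob),
        )
        self._log(employee_id, "write", actor)

    def load(self, employee_id, actor):
        row = self._conn.execute(
            "SELECT payload FROM employee_biometric WHERE employee_id = ?",
            (employee_id,),
        ).fetchone()
        self._log(employee_id, "read", actor)
        return self._decrypt(row[0]).decode("utf-8") if row else None
```

Queries against the frequently used `employee` table never pay the decryption cost, and the access log gives a single place to look when proof of privacy is requested.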

For sensitive data that is stored outside of databases, there are many options, as well. The data can be encrypted before transmission and stored in its encrypted state. The file system or folder structure that the data is stored in can be encrypted. When files are accessed they can be password protected and require a key to decrypt. Also, there are many cloud storage providers that provide certified storage services where data can be sent securely to the cloud service where it is encrypted and protected. These types of services are often used to store medical records for HIPAA compliance.

Recommendation: Identify all sensitive data that flows in and out of the system. Encrypt all sensitive data in flight and at rest. Design for simplicity and performance. Isolate sensitive data where it makes sense to minimize the amount of access and to minimize performance issues due to frequent decryption. Evaluate cloud vendors to ensure that they can provide the level of encryption your application requires.

Key Management

Key management is a broad topic that could merit its own chapter, but I will cover it at a high level. For this discussion, I will focus on public and private key pairs. The public and private keys are two unique, mathematically related cryptographic keys. Whatever object is protected by a public key can only be decrypted by the corresponding private key and vice versa. The advantage of using public–private key pairs is that if an unauthorized person or system gets access to the data, they cannot decrypt and use the data without the corresponding keys. This is why I recommend using encryption and keys to protect against the Patriot Act and other government surveillance policies that many countries have.
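To make "mathematically related" concrete, here is a toy worked example using textbook RSA with deliberately tiny primes. It is for illustration only and offers no security; real systems use a vetted library with keys of 2048 bits or more, plus padding schemes that this sketch omits. The helper name `apply_key` is made up for the example.

```python
# Toy RSA key pair with tiny primes. Insecure; for illustration only.
p, q = 61, 53
n = p * q                  # 3233, the modulus shared by both keys
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent; the public key is (n, e)
d = pow(e, -1, phi)        # private exponent; the private key is (n, d)
                           # (modular inverse via pow needs Python 3.8+)


def apply_key(value, exponent, modulus=n):
    """Modular exponentiation: the single operation behind both keys."""
    return pow(value, exponent, modulus)


message = 65

# Protect with the public key; only the private key reverses it.
ciphertext = apply_key(message, e)
recovered = apply_key(ciphertext, d)

# And vice versa: transform with the private key, check with the public key.
signature = apply_key(message, d)
verified = apply_key(signature, e) == message
```

The two exponents undo each other modulo `n`, which is why holding the encrypted data without the matching key is of little use.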

A critical part of maintaining secure systems is the management of these keys. There are some basic rules to follow to ensure that the keys do not get discovered by people or systems without permission. After all, keys are worthless if they are in the hands of the wrong people. Here are some best practices.

Keys should not be stored in clear text. Make sure that the keys are encrypted before they are stored. Also, keys should not be referenced directly in the code. Centralized policy management should be applied to keys, as well. Store all keys outside of the applications and provide a single secure method of requesting the keys from within the applications. When possible, keys should be rotated every 90 days. This is especially important for the keys provided by the cloud service provider. For example, when signing up for an AWS account a key pair is issued. Eventually the entire production system is deployed under this single account. Imagine the damage if the key for the AWS account got into the wrong hands. If keys are never rotated, there is a risk when people leave the company and still know the keys. Key rotation should be an automated process so it can be executed during critical situations. For example, let’s say that one of the systems within the cloud environment was compromised. Once the threat was removed it would be wise to rotate the keys right away to mitigate the risk in case the keys were stolen. Also, if an employee who had access to the keys leaves the company, the keys should be rotated immediately.
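The rotation rules above can be sketched as a small policy check that an automated job might run. This is a minimal illustration, not a key management product: the names KeyRecord, needs_rotation, and keys_to_rotate are hypothetical, and the sketch tracks only key metadata on the assumption that the key material itself lives in a separate, protected store.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# The 90-day interval suggested in the text.
ROTATION_PERIOD = timedelta(days=90)


@dataclass
class KeyRecord:
    """Metadata about a key (hypothetical); the key material is stored elsewhere."""
    key_id: str
    created_at: datetime


def needs_rotation(record, now, compromised=False):
    """Return True if the key has reached the rotation period, or if a
    security event (a breach, an employee departure) forces rotation now."""
    if compromised:
        return True
    return now - record.created_at >= ROTATION_PERIOD


def keys_to_rotate(records, now, compromised_ids=frozenset()):
    """List the ids of every key an automated job should rotate right away."""
    return [
        r.key_id
        for r in records
        if needs_rotation(r, now, compromised=r.key_id in compromised_ids)
    ]
```

A scheduled job could call keys_to_rotate daily, and an incident response runbook could call it with the ids of any keys a departing employee or a compromised server had access to.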

Another best practice is to make sure that the keys are never stored on the same servers that they are protecting. For example, if a public–private key pair is being used to protect access to a database, don’t store the keys on the database server. Keys should be stored in an isolated, protected environment that has limited access, has a backup and recovery plan, and is fully auditable. A loss of a key is equivalent to losing the data because without the keys, nobody can decipher the contents of the data.

Recommendation: Identify all the areas within the system that require public–private key pairs. Implement a key management strategy that includes the best practices of policy management discussed earlier. Make sure keys are not stored in clear text, are rotated regularly, and are centrally managed in a highly secure data store with limited access.

Web Security

One of the most common ways that systems get compromised is through web-based systems. Without the proper level of security, unauthorized people and systems can intercept data in transit, inject SQL statements, hijack user sessions, and perform all kinds of malicious behaviors. Web security is a very dynamic area because as soon as the industry figures out how to address a current threat, the attackers figure out a new way to attack. A best practice for companies building web applications is to leverage the web frameworks for the corresponding application stack. For example, Microsoft developers should leverage the .NET framework, PHP developers should leverage a framework like Zend, Python developers would be wise to leverage the Django framework, and Ruby developers can leverage Rails.

The frameworks are not the answer to web security; there is much more security design that goes into building secure systems (and I touch on many of them in this chapter). However, most of these frameworks do a very good job of protecting against the top 10 web threats. The key is to ensure that you are using the most recent version of the framework and keep up with the patches because security is a moving target.

It is also wise to leverage a web vulnerability scanning service. There are SaaS solutions for performing these types of scans. They run continuously and report on vulnerabilities. They rank the severity of the vulnerabilities and provide detailed information of both the issue and some recommended solutions. In some cases, cloud service consumers may demand web vulnerability scanning in their contracts. In one of my start-ups, it was common for customers to demand scans because of the sensitivity of the types of data we were processing.

Recommendation: Leverage updated web frameworks to protect against the top 10 web vulnerabilities. Proactively and continuously run vulnerability scans to detect security gaps and address them before they are discovered by those with malicious intent. Understand that these frameworks will not guarantee that the systems are secure but will improve the security of the web applications immensely over rolling your own web security.

API Management

Back in Chapter 6 we discussed Representational State Transfer or RESTful web APIs in great detail. One of the advantages of cloud-based architectures is how easily different cloud services can be integrated by leveraging APIs. However, this creates some interesting security challenges because each API within the system has the potential to be accessed over the web. Luckily, a number of standards have emerged so that each company does not have to build its own API security from scratch. In fact, for companies sharing APIs with partners and customers, it is an expectation that your APIs support OAuth and OpenID. If there are scenarios where OAuth or OpenID cannot be used, use basic authentication over SSL. There are also several API management SaaS solutions that are available, such as Apigee, Mashery, and Layer7. These SaaS providers can help secure APIs as well as provide many other features such as monitoring, analytics, and more.

Here are some best practices for building APIs. Try not to use passwords and instead use API keys between the client and the provider. This approach removes the dependency on maintaining user passwords, which change frequently. The number one reason for using keys instead of passwords is that the keys are much more secure. Most passwords are no more than eight characters in length because it is hard for users to remember passwords longer than that. In addition, many users do not create strong passwords. Using keys results in a longer and more complex value, usually 256 characters, and keys are created by systems instead of users. The number of combinations that a password bot would need to try to guess a key value is many times greater than for an eight-character password. If you have to store passwords for some reason, make sure they are hashed with an up-to-date password-hashing utility like bcrypt.
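To make the comparison between keys and passwords concrete, here is a minimal sketch using only the Python standard library. The function names are hypothetical, and the choice to store a SHA-256 fingerprint of the key rather than the key itself is an assumption of this example, not something the chapter prescribes.

```python
import hashlib
import hmac
import math
import secrets


def generate_api_key(num_bytes=32):
    """Create a random, URL-safe API key from num_bytes of OS randomness."""
    return secrets.token_urlsafe(num_bytes)


def fingerprint(api_key):
    """Hash the key so the provider never has to store the raw value."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def verify_api_key(presented_key, stored_fingerprint):
    """Compare in constant time so timing does not leak information."""
    return hmac.compare_digest(fingerprint(presented_key), stored_fingerprint)


def search_space_bits(alphabet_size, length):
    """Bits of entropy in a uniformly random string of the given length."""
    return length * math.log2(alphabet_size)
```

Under these assumptions, an eight-character password drawn uniformly from the 94 printable ASCII characters gives search_space_bits(94, 8), roughly 52 bits, and user-chosen passwords are usually far weaker than that. A system-generated key of several dozen random characters has a search space that is larger by many orders of magnitude.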

A best practice is to avoid sessions and session state to prevent session hijacking. If you are building RESTful services the correct way, this should not be hard to implement. If you are using SOAP instead of REST, then you will be required to maintain sessions and state and expose yourself to session hijacking. Another best practice is to reset authentication on every request so if an unauthorized user somehow gets authenticated, he is no longer able to access the system after that request is terminated, whereas if the authentication is not terminated, the unauthorized user can stay connected and do even more damage. The next recommendation is to base the authentication on the resource content of the request, not the URL. URLs are easier to discover and more fragile than the resource content. A common mistake that auditors pick up on is that developers often leave too much information in the resource content. Make sure that debug information is not on in production and also ensure that information describing version numbers or descriptions of the underlying application stack are excluded from the resource content. For example, an auditor once flagged one of our APIs because it disclosed the version of Apache we were running. Any information that is not needed by the service consumer should be removed from the resource content.
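One way to read the practices above is as a signature check that runs on every request, together with a filter that strips revealing headers from responses. The sketch below assumes a shared secret between client and provider and an HMAC-SHA256 signature over the request method and body. All names, and the list of headers to remove, are hypothetical choices for illustration.

```python
import hashlib
import hmac


def sign_request(secret, method, body):
    """Compute an HMAC-SHA256 signature over the method and the request body."""
    message = method.upper().encode("utf-8") + b"\n" + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authenticate(secret, method, body, presented_signature):
    """Re-check the signature on every call; no session state is kept."""
    expected = sign_request(secret, method, body)
    return hmac.compare_digest(expected, presented_signature)


# Hypothetical list of response headers that disclose the application stack.
REVEALING_HEADERS = {"server", "x-powered-by"}


def scrub_headers(headers):
    """Drop response headers that reveal stack or version details."""
    return {k: v for k, v in headers.items() if k.lower() not in REVEALING_HEADERS}
```

Because authenticate depends only on the secret and the contents of the current request, a signature that was valid for one request does not grant access to the next one with different content. Replay protection, such as a timestamp or nonce inside the signed message, is left out of this sketch.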

Recommendation: Do not roll your own security. Use industry standards like OAuth. Refrain from using passwords, always use SSL, encrypt sensitive attributes within the resource content, and only include information in the resource content that is absolutely necessary. Also evaluate Security as a Service solutions and API management solutions, and leverage them where it makes sense within the architecture.

Patch Management

Patching servers applies to the IaaS cloud service model and the private cloud deployment model. When leveraging IaaS, the cloud service consumer is responsible for the application stack and therefore must manage the security of the operating system, database server, application server, the development language, and all other software and servers that make up the system. The same is true for private PaaS solutions. For regulations that focus on security, like the SSAE16 SOC 2 audit, patching servers must be performed at least every 30 days. Not only do the servers require patching, but the auditors need to see proof that the patching occurred and a log of what patches were applied.

There are many ways to manage patching but whatever method is chosen should rely on automation as much as possible. Any time manual intervention is allowed there is a risk of not only creating issues in production but also missing or forgetting to apply certain patches. A common approach to patching is to use the golden image method described earlier. Each unique server configuration should have an image that has the most current security patches applied to it. This image should be checked into a source code repository and versioned to produce an audit trail of the changes and also allow for rollbacks in case the deployment causes issues. Every 30 days, the latest golden image should be deployed to production and the old server image should be retired. It is not recommended to apply security patches to existing servers. Live by the rule of create new servers and destroy the old. There are two main reasons for this strategy. First, it is much simpler and less risky to leave the existing servers untouched. Second, if major issues occur when the new images are deployed, it is much easier and safer to redeploy the previous image than to back software and patches out of the existing image.
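The replace-rather-than-patch rule can be expressed as a small planning step in a deployment script. This is a sketch under stated assumptions: GoldenImage, Server, and the naming scheme for replacement servers are hypothetical, and the actual provisioning calls to a cloud provider are deliberately left out.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# The 30-day patch window discussed in the text.
MAX_IMAGE_AGE = timedelta(days=30)


@dataclass(frozen=True)
class GoldenImage:
    version: str
    built_on: date


@dataclass(frozen=True)
class Server:
    name: str
    image_version: str


def image_is_stale(image, today):
    """True when the golden image itself is overdue for a rebuild."""
    return today - image.built_on > MAX_IMAGE_AGE


def plan_replacement(servers, latest):
    """Return (servers to launch from the latest image, servers to retire).

    Servers already on the latest image are left alone; outdated servers
    are never patched in place, only replaced.
    """
    outdated = [s for s in servers if s.image_version != latest.version]
    launch = [f"{s.name}-{latest.version}" for s in outdated]
    retire = [s.name for s in outdated]
    return launch, retire
```

Keeping the previous image version in the repository means a rollback is the same operation as a deployment, only with an older GoldenImage passed in as the target.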

For companies that have continuous delivery in place, patching is a much simpler undertaking. With continuous delivery, both the software and the environment are deployed together, which ensures that deployments always deploy the most recent version of the golden image. In most continuous delivery shops, software is deployed anywhere from every two weeks to every day, and in some cases, multiple times a day. In these environments, the servers are being refreshed frequently, much more often than every 30 days. A patching strategy in this scenario entails updating the golden image at least once every 30 days, but there is no need to schedule a security patch deployment because the latest golden image gets deployed regularly.

Recommendation: Create and validate a golden image that contains all of the latest and greatest security patches and check it into the source code repository at least once every 30 days. Automate the deployment process to pull the latest golden image from the source code repository and deploy both the new software and the new environment based on the golden image, while retiring the current production servers containing the previous version of the golden image. Do not try to update servers—simply replace them.

Logging, Monitoring, and Auditing

Logging refers to the collection of all system logs. Logs come from the infrastructure, the application stack, and the application. It is a best practice to write a log entry for every event that happens within a system, especially events that involve users or systems requesting access. Logging is covered in detail in Chapter 10.
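As a small illustration of writing a log entry for every access request, the following sketch emits one structured JSON line per event using Python's standard logging module. The field names and the choice to log denied requests at WARNING level are assumptions made for this example.

```python
import json
import logging
from datetime import datetime, timezone


def build_access_event(actor, resource, action, allowed):
    """Assemble one structured record describing an access request."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "resource": resource,
        "action": action,
        "allowed": allowed,
    }


def log_access(logger, actor, resource, action, allowed):
    """Write the event as a single JSON line; denied requests log at WARNING."""
    event = build_access_event(actor, resource, action, allowed)
    level = logging.INFO if allowed else logging.WARNING
    logger.log(level, json.dumps(event, sort_keys=True))
    return event
```

Writing each event as one machine-readable line makes it easier for monitoring tools to mine the logs later and for auditors to trace who requested what.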

Monitoring refers to the process of watching over a system through a set of tools that provide information about the health and activity occurring on a system. A best practice is to implement a set of monitors that observe activity on the system and look for security risks. Monitoring involves both looking at real-time activity and mining log files. Monitoring is covered in Chapter 12. Auditing is the process of reviewing the security processes and controls to ensure that the system is complying with the required regulatory controls and meeting the security requirements and SLAs of the system. Auditing is covered in Chapter 7.

Summary

The popularity of cloud computing has raised awareness about the importance of building secure applications and services. The level of responsibility a cloud service provider takes on depends on which cloud service model and deployment model is chosen by the cloud service consumer. Consumers must not rely solely on their providers for security. Instead, consumers must take a three-pronged approach to security by applying security best practices to the applications and services, monitoring and detecting security issues, and practicing security prevention by actively addressing issues found by monitoring logs. Providers provide the tools to build highly secure applications and services. Consumers building solutions on top of providers must use these tools to build in the proper level of security and comply with the regulations demanded by their customers.

References

Barker, E., W. Barker, W. Burr, W. Polk, and M. Smid (2007, March). “Computer Security: NIST Special Publication 800-57.” Retrieved from http://csrc.nist.gov/publications/nistpubs/800-57/sp800-57-Part1-revised2_Mar08-2007.pdf.

Chickowski, E. (2013, February 12). “Database Encryption Depends on Effective Key Management.” Retrieved from http://www.darkreading.com/database/database-encryption-depends-on-effective/240148441.

Hazlewood, L. (2012, July). “Designing a Beautiful REST+JSON API.” San Francisco. Retrieved from https://www.youtube.com/watch?v=5WXYw4J4QOU&list=PL22B1B879CCC56461&index=9.

Kim, G., K. Behr, and G. Spafford (2013). The Phoenix Project: A Novel About IT, DevOps and Helping Your Business Win. Portland, OR: IT Revolution Press.