Chapter 6. Building Evolvable Architectures

Until now, we’ve addressed the three primary aspects of evolutionary architecture—fitness functions, incremental change, and appropriate coupling—separately. Now we have enough context to tie them together.

Many of the concepts we discussed aren’t new ideas but rather existing ideas viewed through a new lens. For example, testing has existed for years, but not with the fitness function emphasis on architectural verification. Continuous Delivery defined the idea of deployment pipelines. Evolutionary architecture shows architects the real utility of that capability.

Many organizations pursue Continuous Delivery practices as a way to increase engineering efficiency for software development, a worthy goal in itself. However, we’re taking the next step, using those capabilities to create something more sophisticated—architectures that evolve with the real world.

So how can developers take advantage of these techniques on projects, both existing and new?

Mechanics

Architects can operationalize these techniques for building an evolutionary architecture in three steps:

1. Identify Dimensions Affected by Evolution

First, architects must identify which dimensions of the architecture they want to protect as it evolves. This always includes technical architecture, and usually things like data design, security, scalability, and the other “-ilities” architects have deemed important. This must involve other interested teams within the organization, including business, operations, security, and other affected parties. The Inverse Conway Maneuver (described in “Conway’s Law”) is helpful here because it encourages multirole teams. Basically, this is the common behavior of architects at the onset of projects when identifying the architectural characteristics they want to support.

2. Define Fitness Function(s) for Each Dimension

A single dimension often contains numerous fitness functions. For example, architects commonly wire a collection of code metrics into the deployment pipeline to ensure architectural characteristics of the code base, such as preventing component dependency cycles. Architects document decisions about which dimensions deserve ongoing attention in a lightweight format such as a wiki. Then, for each dimension, they decide what parts may exhibit undesirable behavior when evolving, eventually defining fitness functions. Fitness functions may be automated or manual, and ingenuity will be necessary in some cases.
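As a minimal sketch of the dependency-cycle example, the following Python models component dependencies as a plain dictionary and reports the first cycle it finds. The component names and the `find_cycle` helper are hypothetical, chosen only for illustration; a real project would more likely derive the graph from a code metrics tool and run the check as a pipeline stage.

```python
from typing import Dict, List, Optional


def find_cycle(dependencies: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one dependency cycle as a list of components, or None."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {node: WHITE for node in dependencies}
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        color[node] = GRAY
        path.append(node)
        for target in dependencies.get(node, []):
            state = color.get(target, WHITE)
            if state == GRAY:
                # The target is already on the current path: a loop is closed.
                return path[path.index(target):] + [target]
            if state == WHITE:
                cycle = visit(target)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in list(dependencies):
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


# Hypothetical component graph: each key depends on the listed components.
components = {
    "web": ["services"],
    "services": ["persistence"],
    "persistence": [],
}
assert find_cycle(components) is None  # the fitness function passes
```

A failing result names the components involved, which gives developers a concrete starting point for breaking the cycle.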

3. Use Deployment Pipelines to Automate Fitness Functions

Lastly, architects must encourage incremental change on the project, defining stages in a deployment pipeline to apply fitness functions and managing deployment practices like machine provisioning, testing, and other DevOps concerns. Incremental change is the engine of evolutionary architecture, allowing aggressive verification of fitness functions via deployment pipelines and a high degree of automation to make mundane tasks like deployment invisible. Cycle time is the Continuous Delivery measure of engineering efficiency. Part of the responsibility of developers on projects that support evolutionary architecture is to maintain good cycle time. Cycle time is an important aspect of incremental change because many other metrics derive from it. For example, the velocity of new generations appearing in an architecture is inversely proportional to its cycle time. In other words, if a project’s cycle time lengthens, it slows down how fast the project can deliver new generations, which affects evolvability.
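The relationship between cycle time and the rate of new generations can be illustrated with simple arithmetic. This sketch assumes changes flow through the pipeline one after another and that a 40-hour working week is available; both are simplifying assumptions, and `generations_per_week` is a hypothetical helper rather than an established metric.

```python
def generations_per_week(cycle_time_hours: float,
                         working_hours_per_week: float = 40.0) -> float:
    """Upper bound on sequential generations deliverable in one week."""
    if cycle_time_hours <= 0:
        raise ValueError("cycle time must be positive")
    return working_hours_per_week / cycle_time_hours


# Doubling the cycle time halves the rate of new generations.
fast = generations_per_week(2.0)   # 20.0
slow = generations_per_week(4.0)   # 10.0
```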

While the identification of dimensions and fitness functions occurs at the beginning of a new project, it is also an ongoing activity for both new and existing projects. Software suffers from the unknown unknowns problem: developers cannot anticipate everything. During construction, some part of the architecture often shows troubling signs, and building fitness functions can prevent this dysfunction from growing. While some fitness functions will naturally come to light at the beginning of a project, many won’t reveal themselves until an architectural stress point appears. Architects must vigilantly watch for situations where nonfunctional requirements break and retrofit the architecture with fitness functions to prevent future problems.

Greenfield Projects

Building evolvability into new projects is much easier than retrofitting existing ones. First, developers have the opportunity to utilize incremental change right away, building a deployment pipeline at project inception. Fitness functions are easier to identify and plan before any code exists, making it easier to accommodate complex fitness functions because scaffolding has existed since inception. Second, architects don’t have to untangle any undesirable coupling points that creep into existing projects. The architect can also put metrics and other verifications in place to ensure architectural integrity as the project changes.

Building new projects that handle unexpected change is easier if a developer chooses the correct architectural patterns and engineering practices to facilitate evolutionary architecture. For example, microservices architectures offer extremely low coupling and a high degree of incremental change, making that style an obvious candidate (and another contributing factor to its popularity).

Retrofitting Existing Architectures

Adding evolvability to existing architectures depends on three factors: component coupling, engineering practice maturity, and developer ease in crafting fitness functions.

Appropriate Coupling and Cohesion

Component coupling largely determines the evolvability of the technical architecture. Yet the best possible evolvable technical architecture is doomed if the data schema is rigid and fossilized. Cleanly decoupled systems make evolution easy; nests of exuberant coupling harm it. To build truly evolvable systems, architects must consider all affected dimensions of an architecture.

Beyond the technical aspects of coupling, architects must also consider and defend the functional cohesion of the components of their system. When migrating from one architecture to another, the functional cohesion determines the ultimate granularity of restructured components. That doesn’t mean architects can’t decompose components to a ridiculous level, but rather that components should have an appropriate size based on the problem context. For example, some business problems are more coupled than others, such as in the case of heavily transactional systems. Trying to build an extremely decoupled architecture that is counter to the problem is unproductive.

Tip

Understand the business problem before choosing an architecture.

While this advice seems obvious, we constantly see teams suffer because they chose the shiniest new architectural pattern rather than the most appropriate one. Part of choosing an architecture lies in understanding where the problem and physical architecture come together.

Engineering Practices

Engineering practices matter when defining how evolvable an architecture can be. While Continuous Delivery practices don’t guarantee evolutionary architecture, it is almost impossible without them.

Many teams embark on improved engineering practices for the sake of efficiency. However, once those practices cement, they become building blocks for advanced capabilities such as evolutionary architecture. Thus, the ability to build an evolutionary architecture is an incentive to improve efficiency.

Many companies reside in the transition zone between older practices and new. They may have solved low-hanging fruit like continuous integration but still have largely manual testing. While manual testing slows cycle time, it is important to include manual stages in deployment pipelines. First, doing so treats each stage of an application’s build the same: as a stage in the pipeline. Second, as teams slowly automate more pieces of deployment, manual stages may become automated ones with no disruption. Third, elucidating each stage brings awareness about the mechanical parts of the build, creating a better feedback loop and encouraging improvements.
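One way to picture manual and automated stages living side by side is to model both with the same type. The sketch below is a toy pipeline runner, not the API of any real Continuous Delivery tool; `Stage`, `run_pipeline`, and the stage names are hypothetical. A manual stage simply carries a flag, so automating it later means swapping its `run` callable without restructuring the pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]   # returns True when the stage passes
    manual: bool = False      # True while a human still performs the step


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure; return a log."""
    log: List[str] = []
    for stage in stages:
        kind = "manual" if stage.manual else "automated"
        passed = stage.run()
        log.append(f"{stage.name} ({kind}): {'pass' if passed else 'fail'}")
        if not passed:
            break
    return log


# The lambdas stand in for real work such as a build script or a recorded
# human sign-off.
pipeline = [
    Stage("compile", lambda: True),
    Stage("exploratory testing", lambda: True, manual=True),
    Stage("deploy to staging", lambda: True),
]
log = run_pipeline(pipeline)
```

Because every step appears in the log, the manual ones stay visible, which supports the feedback loop described above.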

The biggest single common impediment to building evolutionary architecture is intractable operations. If developers cannot easily deploy changes, all parts of the feedback cycle are hampered.

Fitness Functions

Fitness functions form the protective substrate of an evolutionary architecture. If architects design a system around particular characteristics, those features may be orthogonal to testability. Fortunately, modern engineering practices have vastly improved around testability, making formerly difficult architectural characteristics automatically verifiable. This is the area of evolutionary architecture that requires the most work, but fitness functions allow equal treatment for formerly disparate concerns.

We encourage architects to start thinking of all kinds of architectural verification mechanisms as fitness functions, including those they have previously handled in an ad hoc manner. For example, many architectures have a service-level agreement around scalability and corresponding tests. They also have rules around security requirements, with accompanying verification mechanisms. Architects often think of these as separate categories, but both intents are the same: verify some feature of the architecture. Thinking of all architectural verification as fitness functions brings more consistency when defining automation and other beneficial synergistic interactions.
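To illustrate treating disparate verifications uniformly, here is a small sketch in which a scalability check and a security check share one shape. The `FitnessFunction` type, the `evaluate` helper, the threshold values, and the measured numbers are all hypothetical placeholders; real checks would read from load tests or scanners.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class FitnessFunction:
    dimension: str              # for example "scalability" or "security"
    name: str
    check: Callable[[], bool]   # True when the architecture passes


def evaluate(functions: List[FitnessFunction]) -> Dict[str, List[str]]:
    """Group the names of failing fitness functions by dimension."""
    failures: Dict[str, List[str]] = {}
    for fn in functions:
        if not fn.check():
            failures.setdefault(fn.dimension, []).append(fn.name)
    return failures


# Placeholder measurements standing in for real tooling output.
measured_p95_ms = 180
open_ports = {443, 8080}

suite = [
    FitnessFunction("scalability", "p95 latency under 200 ms",
                    lambda: measured_p95_ms < 200),
    FitnessFunction("security", "only port 443 exposed",
                    lambda: open_ports <= {443}),
]
failures = evaluate(suite)
```

With one shape for every check, adding a new dimension becomes a matter of appending to the suite rather than building a separate mechanism.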

COTS Implications

In many organizations, developers don’t own all the parts that make up their ecosystem. COTS (commercial off-the-shelf) and package software are prevalent in large companies, creating challenges for architects building evolvable systems.

COTS systems must evolve alongside other applications within an enterprise. Unfortunately, these systems don’t support evolution well.

- Incremental change

Most commercial software falls woefully short of industry standards for automation and testing. Architects and developers must often ring fence integration points and build whatever testing is possible, frequently treating the entire system as a black box. Enforcing agility in terms of deployment pipelines, DevOps, and other modern practices offers challenges to development teams.

- Appropriate coupling

Package software often commits the worst sins in terms of coupling. Generally, the system is opaque, with a defined API developers use to integrate. Inevitably, that API suffers from the problem described in “Antipattern: Last 10% Trap”, allowing almost (but not quite) enough flexibility for developers to get useful work done.

- Fitness functions

Adding fitness functions to package software is perhaps the biggest hurdle to enabling evolvability. Generally, tools of this ilk don’t expose enough internals to allow unit or component testing, making behavioral integration testing the last resort. These tests are less desirable because they are necessarily coarse grained, must run in a complex environment, and must test a large swath of behavior of the system.

Tip

Work diligently to hold integration points to your level of maturity. If that isn’t possible, realize that some parts of the system will be easier for developers to evolve than others.

Another worrisome coupling point introduced by many package software vendors is opaque database ecosystems. In the best-case scenarios, the package software manages the state of the database entirely, exposing selected appropriate values via integration points. In the worst case, the vendor database is the integration point to the rest of the system, vastly complicating changes on either side of the API. In this case, architects and DBAs must wrest control of the database away from the package software for any hope of evolvability.

If trapped with necessary package software, architects should build as robust a set of fitness functions as possible and automate their running at every possible opportunity. Lack of access to internals relegates testing to less desirable techniques.

Migrating Architectures

Many companies end up migrating from one architectural style to another. For example, architects choose simple-to-understand architecture patterns at the beginning of a company’s IT history, often layered architecture monoliths. As the company grows, the architecture comes under stress. One of the most common paths of migration is from monolith to some kind of service-based architecture, driven by the general domain-centric shift in architectural thinking, covered in “Microservices”. Many architects are tempted by the highly evolutionary microservices architecture as a target for migration, but this is often quite difficult, primarily because of existing coupling.

When architects think of migrating architecture, they typically think of the coupling characteristics of classes and components, but ignore many other dimensions affected by evolution, such as data. Transactional coupling is as real as coupling between classes, and just as insidious to eliminate when restructuring architecture. These extra-class coupling points become a huge burden when trying to break the existing modules into too-small pieces.

Many senior developers build the same types of applications year after year, and become bored with the monotony. Most developers would rather write a framework than use a framework to create something useful: Meta-work is more interesting than work. Work is boring, mundane, and repetitive, whereas building new stuff is exciting.

This manifests in two ways. First, many senior developers start writing the infrastructure that other developers use, rather than using existing (often open source) software. We once worked with a client who had once been on the cutting edge of technology. They built their own application server, web framework in Java, and just about every other bit of infrastructure. At one point, we asked if they had built their own operating system, too, and when they said, “No,” we asked, “Why not?!? You built everything else from scratch!”

Upon reflection, the company needed capabilities that weren’t available. However, when open-source tools became available, they already owned their lovingly hand-crafted infrastructure. Rather than cut over to the more standard stack, they opted to keep their own because of minor differences in approach. A decade later, their best developers worked in full-time maintenance mode, fixing their application server, adding features to their web framework, and other mundane chores. Rather than applying innovation to building better applications, they permanently slaved away on plumbing.

Architects aren’t immune to the “meta-work is more interesting than work” syndrome, which manifests in choosing inappropriate but buzz-worthy architectural styles like microservices.

Tip

Don’t build an architecture just because it will be fun meta-work.

Migration Steps

Many architects find themselves faced with the challenge of migrating an outdated monolithic application to a more modern service-based approach. Experienced architects realize that a host of coupling points exist in applications, and one of the first tasks when untangling a code base is understanding how things are joined. When decomposing a monolith, the architect must take coupling and cohesion into account to find the appropriate balance. For example, one of the most stringent constraints of the microservices architectural style is the insistence that the database reside inside the service’s bounded context. When decomposing a monolith, even if it is possible to break the classes into small enough pieces, breaking the transactional contexts into similar pieces may present an insurmountable hurdle.

Tip

When restructuring architecture, consider all the affected dimensions.

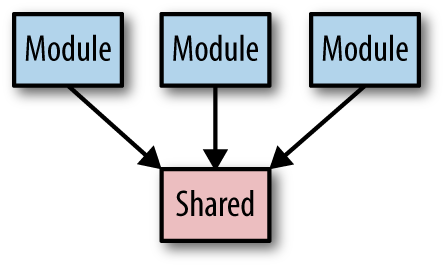

Many architects end up migrating from monolithic applications to service-based architectures. Consider the starting point architecture shown in Figure 6-1.

Figure 6-1. A monolith architecture as the starting point for migration, a “share everything” architecture

Building extremely granular services is easier in new projects but difficult in existing migrations. So, how can we migrate the architecture in Figure 6-1 to the service-based architecture shown in Figure 6-2?

Figure 6-2. The service-based, “share as little as possible” end result of the migration

Performing the kind of migration shown in Figures 6-1 and 6-2 comes with a host of challenges: service granularity, transactional boundaries, database issues, and things like how to handle shared libraries. Architects must understand why they want to perform this migration, and it must be a better reason than “it’s the current trend.” Splitting the architecture into domains, along with better team structure and operational isolation, allows for easier incremental change, one of the building blocks of evolutionary architecture, because the focus of work matches the physical work artifacts.

When decomposing a monolithic architecture, finding the correct service granularity is key. Creating large services alleviates problems like transactional contexts and orchestration, but does little to break the monolith into smaller pieces. Too-fine-grained components lead to too much orchestration, communication overhead, and interdependency between components.

For the first step in migrating architecture, developers identify new service boundaries. Teams may decide to break monoliths into services using a variety of partitioning approaches, as follows:

- Business functionality groups

A business may have clear partitions that mirror IT capabilities directly. Building software that mimics the existing business communication hierarchy falls distinctly into an applicable use of Conway’s Law (see “Conway’s Law”).

- Transactional boundaries

Many businesses have extensive transaction boundaries they must adhere to. When decomposing a monolith, architects often find that transactional coupling is the hardest to break apart, as discussed in “Two-Phase Commit Transactions”.

- Deployment goals

Incremental change allows developers to selectively release code on different schedules. For example, the marketing department might want a much higher cadence of updates than inventory. Partitioning services around operational concerns like speed to release makes sense if that criterion is highly important. Similarly, a portion of the system may have extreme operational characteristics (like scalability). Partitioning services around operational goals allows developers to track (via fitness functions) health and other operational metrics of the service.

Coarser service granularity means many of the coordination problems inherent in microservices go away because more of the business context resides inside a single service. However, the larger the service, the more operational difficulties tend to escalate (another architectural tradeoff).

Evolving Module Interactions

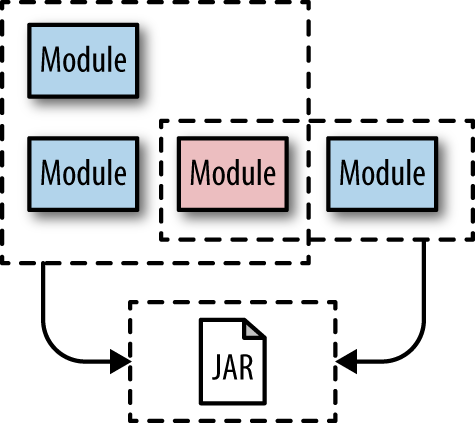

Migrating shared modules (including components) is another common challenge faced by developers. Consider the structure shown in Figure 6-3.

In Figure 6-3, all three modules share the same library. However, the architect needs to split these modules into separate services. How can she maintain this dependency?

Sometimes, the library may be split cleanly, preserving the separate functionality each module needs. Consider the situation shown in Figure 6-4.

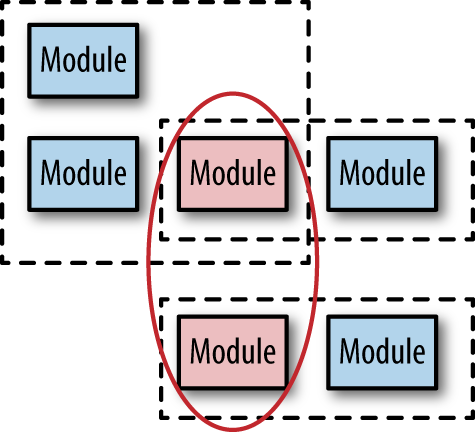

In Figure 6-4, both modules need the conflicting one shown in red (bold border). If developers are lucky, the functionality may be cleanly split down the middle, partitioning the shared library into the relevant parts needed by each dependent, as shown in Figure 6-5.

However, it’s more likely the shared library won’t split that easily. In that case, developers can extract the module into a shared library (such as a JAR, DLL, gem, or some other component mechanism) and use it from both locations, as shown in Figure 6-6.

Sharing is a form of coupling, which is highly discouraged in architectures like microservices. An alternative to sharing a library is replication, as illustrated in Figure 6-7.

In a distributed environment, developers may achieve the same kind of sharing using messaging or service invocation.

When developers have identified the correct service partitioning, the next step is separation of the business layers from the UI. Even in microservices architectures, the UIs often resolve back to a monolith—after all, developers must show a unified UI at some point. Thus, developers commonly separate the UIs early in the migration, creating a mapping proxy layer between UI components and the back-end services they call. Separating the UI also creates an anticorruption layer, insulating UI changes from architecture changes.

The next step is service discovery, allowing services to find and call one another. Eventually, the architecture will consist of services that must coordinate. By building the discovery mechanism early, developers can slowly migrate parts of the system that are ready to change. Developers often implement service discovery as a simple proxy layer: each component calls the proxy, which in turn maps to the specific implementation.
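The proxy-style discovery described above can be sketched in a few lines. This is an in-process illustration only: `ServiceProxy`, the handler functions, and the `inventory` name are hypothetical, and a real system would route over the network rather than call Python functions. The point is that callers depend on a logical name, so the implementation behind it can move from the monolith to a new service without touching them.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class ServiceProxy:
    """Maps a logical service name to whichever implementation is current."""

    def __init__(self) -> None:
        self._routes: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._routes[name] = handler

    def call(self, name: str, request: dict) -> dict:
        try:
            handler = self._routes[name]
        except KeyError:
            raise LookupError(f"no implementation registered for {name!r}") from None
        return handler(request)


def monolith_inventory(request: dict) -> dict:
    # Stand-in for code still living inside the monolith.
    return {"sku": request["sku"], "source": "monolith"}


def inventory_service(request: dict) -> dict:
    # Stand-in for the extracted service.
    return {"sku": request["sku"], "source": "service"}


proxy = ServiceProxy()
proxy.register("inventory", monolith_inventory)
before = proxy.call("inventory", {"sku": "A1"})

# Later in the migration, repoint the name; callers stay unchanged.
proxy.register("inventory", inventory_service)
after = proxy.call("inventory", {"sku": "A1"})
```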

All problems in computer science can be solved by another level of indirection, except of course for the problem of too many indirections.

Dave Wheeler and Kevlin Henney

Of course, the more levels of indirection developers add, the more confusing navigating the services becomes.

When migrating an application from a monolithic application architecture to a more services-based one, the architect must pay close attention to how modules are connected in the existing application. Naive partitioning introduces serious performance problems. The connection points in the application become integration architecture connections, with the attendant latency, availability, and other concerns. Rather than tackle the entire migration at once, a more pragmatic approach is to gradually decompose the monolith into services, looking at factors like transaction boundaries, structural coupling, and other inherent characteristics to create several restructuring iterations. At first, break the monolith into a few large “portions of the application” chunks, fix up the integration points, and rinse and repeat. Gradual migration is preferred in the microservices world.

When migrating from a monolith, build a small number of larger services first.

Sam Newman, Building Microservices

Next, developers choose and detach the chosen service from the monolith, fixing any calling points. Fitness functions play a critical role here—developers should build fitness functions to make sure the newly introduced integration points don’t change, and add consumer-driven contracts.
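A consumer-driven contract can be expressed as a very small fitness function: each consumer states which fields it relies on, and the check fails if the provider’s response stops honoring them. The sketch below is a simplified illustration, not the format of any particular contract-testing tool; `contract_violations`, `CHECKOUT_CONTRACT`, and the field names are hypothetical.

```python
from typing import Any, Dict, List

Contract = Dict[str, type]  # field name -> type the consumer expects


def contract_violations(response: Dict[str, Any], contract: Contract) -> List[str]:
    """List the ways a response breaks a consumer's expectations."""
    problems: List[str] = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}")
    return problems


# What a hypothetical checkout consumer relies on from the order service.
CHECKOUT_CONTRACT: Contract = {"order_id": str, "total_cents": int}

# Extra fields are fine; the contract covers only what the consumer uses.
response = {"order_id": "o-1", "total_cents": 1250, "note": "gift"}
violations = contract_violations(response, CHECKOUT_CONTRACT)
```

Running such checks in the deployment pipeline for every detached service guards the new integration points against accidental change.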

Guidelines for Building Evolutionary Architectures

We’ve used a few biology metaphors throughout the course of the book, and here is another. Our brains evolved not in a nice, pristine environment where each capability was carefully built. Instead, each layer is based on primeval layers beneath. Much of our core autonomic behavior (like breathing, hunger, and so on) resides in parts of our brain not very different from reptilian brains. Instead of wholesale replacement of core mechanisms, evolution builds new layers on top.

Software architecture in large enterprises follows a similar pattern. Rather than rebuild each capability anew, most companies try to adapt whatever is present. As much as we like to talk about architecture in pristine, idealized settings, the real world often exhibits a contrary mess of technical debt, conflicting priorities, and limited budgets. Architecture in large companies is built like the human brain—lower-level systems still handle critical plumbing details but have some old baggage. Companies hate to decommission something that works, leading to escalating integration architecture challenges.

Retrofitting evolvability into an existing architecture is challenging: if developers never built easy change into the architecture, it is unlikely to appear spontaneously. No architect, no matter how talented, can transform a Big Ball of Mud into a modern microservices architecture without immense effort. Fortunately, projects can receive benefits without changing their entire architecture by building some flexibility points into the existing one.

Remove Needless Variability

One of the goals of Continuous Delivery is stability: building on known good parts. A common manifestation of this goal is the modern DevOps perspective on building immutable infrastructure. We discussed the dynamic equilibrium of the software development ecosystem in Chapter 1; nowhere is that more apparent than in how much the foundation shifts around software dependencies. Software systems undergo constant change, as developers update capabilities, issue service packs, and generally tweak their software. Operating systems are a great example, as they endure constant change.

Modern DevOps has solved the dynamic equilibrium problem locally by replacing snowflakes with immutable infrastructure. Snowflake computers are ones that have been manually crafted by an operations person, and all future maintenance is done by hand. Chad Fowler coined the term immutable infrastructure in his blog post, “Trash Your Servers and Burn Your Code: Immutable Infrastructure and Disposable Components”. Immutable infrastructure refers to systems defined entirely programmatically. All changes to the system must occur via the source code, not by modifying the running operating system. Thus, the entire system is immutable from an operational standpoint: once the system is bootstrapped, no other changes occur.

While immutability may sound like the opposite of evolvability, quite the opposite is true. Software systems comprise thousands of moving parts, all interlocking in tight dependencies. Unfortunately, developers still struggle with unanticipated side effects of changes to one of those parts. By locking down the possibility of unanticipated change, we control more of the factors that make systems fragile. Developers strive to replace variables in code with constants to reduce vectors of change. DevOps introduced this concept to operations, making it more declarative.

Immutable infrastructure follows our advice to remove needless variables. Building software systems that evolve means controlling as many unknown factors as possible. It is virtually impossible to build fitness functions that can anticipate how the latest service pack of the operating system might affect the application. Instead, developers build the infrastructure anew each time the deployment pipeline executes, catching breaking changes as aggressively as possible. If developers can remove known foundational, changeable parts such as the operating system as a possibility, they have less ongoing testing burden to carry.

Architects can find all sorts of avenues to convert changeable things to constants. Many teams extend the immutable infrastructure advice to the development environment as well. How many times has some team member exclaimed, “But it works on my machine!”? By ensuring every developer has the exact same image, a host of needless variables disappear. For example, most development teams automate the update of development libraries through repositories, but what about updates to tools like IDEs? By capturing the development environment as immutable infrastructure, developers always work on the same foundation.

Building an immutable development environment also allows useful tools to spread throughout projects. Pair programming is a common practice in many agile engineering-focused development teams, including pair rotation, where each team member changes on a regular basis, from every few hours to every few days. However, it’s frustrating when a tool appears on the computer a developer used yesterday that isn’t present today. By building a single source for developer systems, it becomes easy to add useful tools to all systems at once.

Make Decisions Reversible

Inevitably, systems that aggressively evolve will fail in unanticipated ways. When these failures occur, developers need to craft new fitness functions to prevent future occurrences. But how do you recover from a failure?

Many DevOps practices exist to allow reversible decisions, that is, decisions that may need to be undone. For example, blue/green deployments are common in DevOps: operations maintains two identical (probably virtual) ecosystems, one blue and one green. If the current production system is running on blue, green is the staging area for the next release. When the green release is ready, it becomes the production system and blue temporarily shifts to backup status. If something goes awry with green, operations can go back to blue without too much pain. If green is fine, blue becomes the staging area for the next release.
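The blue/green mechanics can be modeled as a tiny state machine. The sketch below is purely illustrative: `BlueGreenRouter` and the version strings are hypothetical, and a real deployment would switch a load balancer or DNS entry rather than a Python attribute. It shows why the decision is reversible: the previous release stays intact on the standby side, so rolling back is the same operation as promoting.

```python
from typing import Dict, Optional


class BlueGreenRouter:
    """Tracks which of two identical environments serves production."""

    def __init__(self, live_version: str) -> None:
        self.environments: Dict[str, Optional[str]] = {
            "blue": live_version,
            "green": None,
        }
        self.live = "blue"

    @property
    def standby(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def stage(self, version: str) -> None:
        """Install the next release on the environment not serving traffic."""
        self.environments[self.standby] = version

    def switch(self) -> None:
        """Swap live and standby; calling it again undoes the swap."""
        if self.environments[self.standby] is None:
            raise RuntimeError("nothing staged on the standby environment")
        self.live = self.standby

    def live_version(self) -> Optional[str]:
        return self.environments[self.live]


router = BlueGreenRouter("1.0")
router.stage("1.1")   # green receives the next release
router.switch()       # green is now production
promoted = (router.live, router.live_version())
router.switch()       # something went awry: go back to blue
rolled_back = (router.live, router.live_version())
```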

Feature toggles are another common way developers make decisions reversible. By deploying changes underneath feature toggles, developers can release them to a small subset of users (called canary releasing) to vet the change. If a feature behaves unexpectedly, developers can switch the toggle back to the original and correct the fault before trying again. Make sure you remove outdated toggles!

Using feature toggles greatly reduces risk in these scenarios. Service routing—routing to a particular instance of a service based on request context—is another common method to canary release in microservices ecosystems.
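A percentage-based toggle is one simple way to combine feature toggles with canary releasing. In this sketch, a stable hash of the feature and user identifiers places each user in one of 100 buckets, so the same user sees consistent behavior and widening the rollout only adds users. `FeatureToggles`, the feature name, and the user identifiers are hypothetical; production toggle systems typically offer richer targeting.

```python
import hashlib
from typing import Dict


class FeatureToggles:
    """Percentage rollouts keyed by feature name; unknown features are off."""

    def __init__(self) -> None:
        self._rollout: Dict[str, int] = {}

    def set_rollout(self, feature: str, percent: int) -> None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._rollout[feature] = percent

    def is_enabled(self, feature: str, user_id: str) -> bool:
        percent = self._rollout.get(feature, 0)
        digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
        bucket = int(digest, 16) % 100   # stable bucket in the range 0..99
        return bucket < percent


toggles = FeatureToggles()
toggles.set_rollout("new-checkout", 10)   # canary: roughly 10% of users
show_new = toggles.is_enabled("new-checkout", "user-42")

# Reversing the decision is a configuration change, not a redeployment.
toggles.set_rollout("new-checkout", 0)
```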

Tip

Make as many decisions as possible reversible (without over-engineering).

Prefer Evolvable over Predictable

…because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don’t know we don’t know.

former US Secretary of Defense Donald Rumsfeld

Unknown unknowns are the nemesis of software systems. Many projects start with a list of known unknowns: things developers know they must learn about the domain and technology. However, projects also fall victim to unknown unknowns: things no one knew were going to crop up yet have appeared unexpectedly. This is why all Big Design Up Front software efforts suffer—architects cannot design for unknown unknowns.

All architectures become iterative because of unknown unknowns; agile just recognizes this and does it sooner.

Mark Richards

While no architecture can survive the unknown, we know that dynamic equilibrium renders predictability useless in software. Instead, we prefer to build evolvability into software: if projects can easily incorporate changes, architects don’t need a crystal ball. Architecture is not a solely upfront activity—projects constantly change in both explicit and unexpected ways throughout their life. One technique commonly used by developers to insulate themselves from change is to use an anticorruption layer.

Build Anticorruption Layers

Projects often need to couple themselves to libraries that provide incidental plumbing: message queues, search engines, and so on. The abstraction distraction anti-pattern describes the scenario where a project “wires” itself too much to an external library, either commercial or open source. Once it becomes time for developers to upgrade or switch the library, much of the application code utilizing the library has baked-in assumptions based on the previous library abstractions. Domain-driven design includes a safeguard against this phenomenon called an anticorruption layer. Here is an example.

Agile architects prize the last responsible moment principle when making decisions, which is used to counter the common hazard in projects of buying complexity too early. We worked intermittently on a Ruby on Rails project for a client who managed wholesale car sales. After the application went live, an unexpected workflow arose. It turned out that used car dealers tended to upload new cars to the auction site in large batches, both in number of cars and number of pictures per car. We realized that, as much as the general public doesn’t trust used-car dealers, dealers really don’t trust each other; thus, each car must include a photo covering essentially every molecule of the car. Users wanted a way to begin an upload, then either get progress via some UI mechanism like a progress bar or check back later to see if the batch was done. Translated to technical terms, they wanted asynchronous upload.

A message queue is one traditional architectural solution to this problem, and the team discussed whether to add an open source queue to the architecture. A common trap at this juncture for many projects is the attitude of, “We know we’ll need a message queue for lots of stuff eventually, so let’s get the fanciest one we can now and grow into it later.” The problem with this approach is technical debt: stuff that’s part of your project that isn’t supposed to be there and is in the way of stuff that is supposed to be there. Most developers treat crufty old code as the only form of technical debt, but projects can inadvertently buy technical debt as well via premature complexity.

For the project, the architect encouraged developers to find a simpler way. One developer discovered BackgrounDRb, an extraordinarily simple open source library that simulates a single message queue backed by a relational database. The architect knew this simple tool would probably never scale to other future problems, but she didn’t have other objections. Rather than try to predict future usage, she instead made it relatively easy to replace by placing it behind an API. At the last responsible moment, answer questions such as “Do I have to make this decision now?”, “Is there a way to safely defer this decision without slowing any work?”, and “What can I put in place now that will suffice but I can easily change later if needed?”

Around the one-year anniversary, a second request for asynchronicity appeared in the form of timed events around sales. The architect evaluated the situation and decided that a second instance of BackgrounDRb would suffice, put it in place, and moved on. At around the two-year anniversary, a third request appeared for constantly updating values like caches and summaries. The team realized that the current solution couldn’t handle the new workload. However, they now had a good idea about what kind of asynchronous behavior the application needed. At that point, the project switched over to Starling, a simple but more traditional message queue. Because the original solution was isolated behind an interface, it took one pair of developers less than one iteration (one week on that project) to complete the transition—without disrupting other developers’ work on the project.

Because the architect put an anticorruption layer in place with an interface, replacing one piece of functionality became a mechanical exercise. Building an anticorruption layer encourages the architect to think about the semantics of what they need from the library, not the syntax of the particular API. But this is not an excuse to abstract all the things! Some development communities love preemptive layers of abstraction to a distracting degree, but understanding suffers when you must call a Factory to get a proxy to a remote interface to a Thing. Fortunately, most modern languages and IDEs allow developers to be just in time when extracting interfaces. If a project finds itself bound to an out-of-date library in need of change, the IDE can extract an interface on behalf of the developer, making a just-in-time (JIT) anticorruption layer.
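The shape of such a layer can be sketched in a few lines. The following is a minimal illustration in Python, not the code from the project described above: the names BackgroundJobs, TableBackedJobs, QueueBackedJobs, and upload_batch are hypothetical, and both implementations are in-memory stand-ins rather than wrappers around BackgrounDRb or Starling. The point is that application code depends only on the two operations it needs.

```python
from __future__ import annotations

from collections import deque
from typing import Callable, Protocol

Handler = Callable[[dict], None]


class BackgroundJobs(Protocol):
    """What the application needs from any queue: defer work, run it later."""

    def enqueue(self, job: str, payload: dict) -> None: ...

    def run_pending(self) -> int: ...


class TableBackedJobs:
    """Stand-in for a simple queue kept in a database table (hypothetical)."""

    def __init__(self, handlers: dict[str, Handler]) -> None:
        self._handlers = handlers
        self._rows: list[tuple[str, dict]] = []  # pretend this list is a table

    def enqueue(self, job: str, payload: dict) -> None:
        self._rows.append((job, payload))

    def run_pending(self) -> int:
        rows, self._rows = self._rows, []
        for job, payload in rows:
            self._handlers[job](payload)
        return len(rows)


class QueueBackedJobs:
    """Stand-in for a dedicated message queue adopted later (hypothetical)."""

    def __init__(self, handlers: dict[str, Handler]) -> None:
        self._handlers = handlers
        self._queue: deque = deque()

    def enqueue(self, job: str, payload: dict) -> None:
        self._queue.append((job, payload))

    def run_pending(self) -> int:
        count = 0
        while self._queue:
            job, payload = self._queue.popleft()
            self._handlers[job](payload)
            count += 1
        return count


def upload_batch(jobs: BackgroundJobs, car_id: int, photos: list[str]) -> None:
    """Application code: knows only the BackgroundJobs interface."""
    for photo in photos:
        jobs.enqueue("store_photo", {"car_id": car_id, "photo": photo})
```

Because upload_batch accepts anything that satisfies BackgroundJobs, swapping TableBackedJobs for QueueBackedJobs changes one constructor call and nothing in the calling code.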

Tip

Build just-in-time anticorruption layers to insulate against library changes.

Controlling the coupling points in an application, especially to external resources, is one of the key responsibilities of an architect. Try to find the pragmatic time to add dependencies. As an architect, remember dependencies provide benefits but also impose constraints. Make sure the benefits outweigh the cost in updates, dependency management, and so on.

Developers understand the benefits of everything and the tradeoffs of nothing!

Rich Hickey, creator of Clojure

Architects must understand both benefits and tradeoffs and build engineering practices accordingly.

Using anticorruption layers encourages evolvability. While architects can’t predict the future, we can at least lower the cost of change so that it doesn’t impact us so negatively.

Case Study: Service Templates

Microservices architectures are designed to be share-nothing architectures: each component is as decoupled as possible from other components, adhering to the bounded context principle. However, the shunning of coupling between services pertains primarily to domain classes, database schemas, and other coupling points that harm the ability to evolve. Development teams often want to manage some aspects of the technical coupling uniformly, in keeping with our remove needless variables advice. For example, monitoring, logging, and other diagnostics are critical in this architectural style due to the profusion of moving parts. When operations must manage thousands of services, the results can be disastrous if a service team forgets to add monitoring capabilities to its service. Upon deployment, the service will disappear into a black hole, because in these environments, if it can’t be monitored, it is invisible. Yet, in a highly decoupled environment, how can teams enforce consistency?

Service templates are one common solution for ensuring consistency. These are preconfigured sets of common infrastructure libraries like service discovery, monitoring, logging, metrics, authentication/authorization, and so on. In large organizations, a shared infrastructure team manages the service templates. Service implementation teams use the template as scaffolding, writing their behavior within. If the logging tool requires an upgrade, the shared infrastructure team can manage it orthogonally from the service teams—they should never know (or care) that the change occurred. If a breaking change occurs, it fails during the provisioning phase of the deployment pipeline, alerting developers to the problem as soon as possible.
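One way to picture a service template is as a base class owned by the shared infrastructure team. The sketch below is an assumption-laden illustration in Python: ServiceTemplate, handle_request, health, and StarRatingService are hypothetical names, and the standard logging module plus a plain dictionary of counters stand in for real logging and metrics tooling.

```python
import logging
from abc import ABC, abstractmethod


class ServiceTemplate(ABC):
    """Hypothetical template maintained by a shared infrastructure team."""

    def __init__(self, name):
        self.name = name
        # Infrastructure wiring lives here, not in each service.
        self.log = logging.getLogger(name)
        self.metrics = {"requests": 0, "errors": 0}

    def handle_request(self, request):
        """Wrap every request with logging and metrics, then delegate."""
        self.metrics["requests"] += 1
        self.log.info("request received by %s", self.name)
        try:
            return self.handle(request)
        except Exception:
            self.metrics["errors"] += 1
            self.log.exception("request failed in %s", self.name)
            raise

    def health(self):
        """Uniform monitoring endpoint that every service gets for free."""
        return {"service": self.name, "status": "up", **self.metrics}

    @abstractmethod
    def handle(self, request):
        """Service teams write their domain behavior here."""


class StarRatingService(ServiceTemplate):
    """A service team's code: domain behavior only (illustrative values)."""

    def handle(self, request):
        return {"item": request["item"], "stars": 4}
```

If the infrastructure team replaces the logging tool, only ServiceTemplate changes; StarRatingService is untouched as long as the template's contract (handle) stays the same.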

This is a good example of what we mean when we espouse appropriate coupling. Duplicating technical architecture functionality across services creates a slew of well-known problems. By finding exactly the level of coupling we need, we can free evolution without creating new problems.

Tip

Use service templates to couple just the appropriate parts of architecture together—the infrastructure elements that allow teams to benefit from coupling.

Service templates exemplify adaptability. Eliminating the technical architecture as the primary structure of the system makes it easier to target changes to just that dimension of architecture. When developers build a layered architecture, change is easy within each layer but highly coupled across layers. While a layered architecture partitions the technical architecture parts together, it entangles other concerns like domain, security, operations, etc. By building a part of the architecture solely for technical architecture concerns (e.g., service templates), developers can isolate and unify change to that entire dimension. We discuss how to think about architectural elements as deployable units in Chapter 4.

Build Sacrificial Architectures

In his book The Mythical Man-Month, Fred Brooks says to plan to throw one away when building a new software system.

The management question, therefore, is not whether to build a pilot system and throw it away. You will do that. […] Hence plan to throw one away; you will, anyhow.

Fred Brooks

His point was that once a team has built a system, they know all the unknown unknowns and proper architecture decisions that are never clear from the outset; the next version will profit from all those lessons. At an architectural level, developers struggle to anticipate radically changing requirements and characteristics. One way to learn enough to choose a correct architecture is to build a proof of concept. Martin Fowler defines a sacrificial architecture as an architecture designed to be thrown away if the concept proves successful. For example, eBay started as a set of Perl scripts in 1995, migrated to C++ in 1997, and then to Java in 2002. Obviously, eBay has been a resounding success in spite of rearchitecting their system several times. Twitter is another good example of successful utilization of this approach. When Twitter was first released, it was written in Ruby on Rails to achieve fast time-to-market. However, as Twitter became popular, the platform couldn’t support the scale, resulting in frequent crashes and limited availability. Many early users became all too familiar with their failure beacon, shown in Figure 6-8.

Figure 6-8. Twitter’s famous Fail Whale

Thus, Twitter restructured their architecture to replace the backend with something more robust. However, it could be argued that this tactic is the reason the company survived. If the Twitter engineers had built the final, robust platform from the beginning, it would have delayed their entry into the market long enough for Snitter or some alternative short-form messaging service to beat them to market. Despite the growing pains, starting with a sacrificial architecture eventually paid off.

Cloud environments make sacrificial architecture more attractive. If developers have a project they want to test, building the initial version in the cloud greatly reduces the resources required to release the software. If the project is successful, architects can take the time to build a more suitable architecture. If developers are careful about anticorruption layers and other evolutionary architecture practices, they can mitigate some of the pains of the migration.

Many companies build a sacrificial architecture to achieve a minimum viable product to prove a market exists. While this is a good strategy, the team must eventually allocate time and resources to build a more robust architecture, hopefully less visibly than Twitter.

One other aspect of technical debt impacts many initially successful projects, elucidated again by Fred Brooks when he refers to the second system syndrome: the tendency of small, elegant, and successful systems to evolve into giant, feature-laden monstrosities due to inflated expectations. Business people hate to throw away functioning code, so architecture tends toward always adding, never removing or decommissioning.

Technical debt works effectively as a metaphor because it resonates with project experience, and represents faults in design, regardless of the driving forces behind them. Technical debt aggravates inappropriate coupling on projects—poor design frequently manifests as pathological coupling and other antipatterns that make restructuring code difficult. As developers restructure architecture, their first step should be to remove the historical design compromises that manifest as technical debt.

Mitigate External Change

A common feature of every development platform is external dependencies: tools, frameworks, libraries, and other assets provided by and (more importantly) updated via the Internet. Software development sits on a towering stack of abstractions, each built on the abstractions before. For example, operating systems are an external dependency outside the developer’s control. Unless companies want to write their own operating system and all other supporting code, they must rely on external dependencies.

Most projects rely on a dizzying array of third-party components, applied via build tools. Developers like dependencies because they provide benefits, but many developers ignore the fact that they come with a cost as well. When relying on code from a third party, developers must create their own safeguards against unexpected occurrences: breaking changes, unannounced removal, and so on. Managing these external parts of projects is critical to creating evolutionary architecture.

Edsger Dijkstra, a legendary figure in computer science, famously published a letter in 1968 titled “Go To Statement Considered Harmful,” in which he punctured the existing best practice of unstructured coding, leading eventually to the structured programming revolution. Since that time, “considered harmful” has become a trope in software development.

Transitive dependency management is our “considered harmful” moment.

Chris Ford (no relation to Neal)

Chris’ point is that, until we recognize the severity of the problem, we cannot determine a solution. While we’re not offering a solution to the problem, we need to highlight it because it critically affects evolutionary architecture. Stability is one of the foundations of both Continuous Delivery and evolutionary architecture. Developers cannot build repeatable engineering practices atop uncertainty. Allowing third parties to make changes to core dependencies defies this principle.

We recommend that developers take a more proactive approach to dependency management. A good start on dependency management models external dependencies using a pull model. For example, set up an internal version-control repository to act as a third-party component store, and treat changes from the outside world as pull requests to that repository. If a beneficial change occurs, allow it into the ecosystem. However, if a core dependency disappears suddenly, reject that pull request as a destabilizing force.

Using a Continuous Delivery mindset, the third-party component repository utilizes its own deployment pipeline. When an update occurs, the deployment pipeline incorporates the change, then performs a build and smoke test on the affected applications. If successful, the change is allowed into the ecosystem. Thus, third-party dependencies use the same engineering practices and mechanisms of internal development, usefully blurring the lines across this often unimportant distinction between in-house written code and dependencies from third parties—at the end of the day, it’s all code in a project.
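The pull model can be sketched as a small gatekeeper in front of the internal component store. This Python sketch is illustrative only: UpstreamChange, ComponentStore, and the smoke_test callback are hypothetical names, and a real setup would sit on top of a version-control repository and a deployment pipeline rather than a dictionary.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class UpstreamChange:
    """A change proposed by the outside world (hypothetical model)."""

    component: str
    new_version: Optional[str]  # None models the component vanishing upstream


@dataclass
class ComponentStore:
    """Internal third-party component store; only approved versions live here."""

    approved: dict = field(default_factory=dict)

    def consider(self, change: UpstreamChange,
                 smoke_test: Callable[[str, str], bool]) -> bool:
        """Treat an upstream change like a pull request: accept or reject."""
        if change.new_version is None:
            # A disappearing dependency is destabilizing: keep the approved copy.
            return False
        if not smoke_test(change.component, change.new_version):
            # Build or smoke test of affected applications failed: reject.
            return False
        self.approved[change.component] = change.new_version
        return True
```

Here smoke_test stands in for the store's own deployment pipeline, which would build and smoke test the applications affected by the change before allowing it into the ecosystem.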

Updating Libraries Versus Frameworks

Architects make a common distinction between libraries and frameworks, with the colloquial definition of “a developer’s code calls a library, whereas a framework calls the developer’s code.” Generally, developers subclass from frameworks (which in turn call those derived classes), thus the distinction that the framework calls code. Conversely, library code generally comes as a collection of related classes and/or functions developers call as needed. Because the framework calls the developer’s code, it creates a high degree of coupling to the framework. Contrast that with library code, which is generally more utilitarian code (like XML parsers, network libraries, etc.) and has a lower degree of coupling.

We prefer libraries because they introduce less coupling to your application, making them easier to swap out when the technical architecture needs to evolve.

One reason to treat libraries and frameworks differently comes down to engineering practices. Frameworks include capabilities such as UI, object-relational mapper, scaffolding like model-view-controller, and so on. Because the framework forms the scaffolding for the remainder of the application, all the code in the application is subject to impact by changes to the framework. Many of us have felt this pain viscerally—any time a team allows a fundamental framework to become outdated by more than two major versions, the effort (and pain) to finally update it is excruciating.

Because frameworks are a fundamental part of applications, teams must be aggressive about pursuing updates. Libraries generally form less brittle coupling points than frameworks do, allowing teams to be more casual about upgrades. One informal governance model treats framework updates as push updates and library updates as pull updates. When a fundamental framework (one whose afferent/efferent coupling numbers are above a certain threshold) updates, teams should apply the update as soon as the new version is stable and the team can allocate time for the change. Even though it will take time and effort, the time spent early is a fraction of the cost if the team perpetually procrastinates on the update.

Because most libraries provide utilitarian functionality, teams can afford to update them only when new desired functionality appears, using more of an “update when needed” model.
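The push/pull governance model can be written down as a small policy function. The sketch below is a hedged illustration in Python: the Dependency type, the should_update function, and the threshold of 25 are all assumptions made for the example, since the text only says the coupling numbers are "above a certain threshold."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str
    afferent: int  # incoming couplings, e.g., application modules that use it
    efferent: int  # outgoing couplings, e.g., components it relies on


def should_update(dep: Dependency, new_version_stable: bool,
                  wanted_feature: bool, threshold: int = 25) -> bool:
    """Push updates for fundamental dependencies, pull updates for the rest.

    The threshold is an arbitrary illustrative value, not a recommendation.
    """
    fundamental = dep.afferent + dep.efferent > threshold
    if fundamental:
        # Push model: take the update as soon as the new version is stable.
        return new_version_stable
    # Pull model: update only when the team wants something in the new version.
    return new_version_stable and wanted_feature
```

A team could run a check like this in its deployment pipeline as a fitness function that flags fundamental frameworks lagging behind a stable release.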

Tip

Update framework dependencies aggressively; update libraries passively.

Prefer Continuous Delivery to Snapshots

Many dependency management tools use a mechanism called snapshots to model in-flight development. A snapshot build was originally meant to indicate a component almost ready for release but still under development, the implication being that the code might change on a regular basis. Once a component is “blessed” with a version number, the -SNAPSHOT moniker drops away.

Developers use snapshots because of the historical assumption that testing is difficult and time consuming, leading developers to try to segregate things changing from things not changing.

In evolutionary architecture, we expect all things to change all the time, and build engineering practices and fitness functions to accommodate change. For example, when a project has excellent test coverage and a deployment pipeline, developers test every change to every component via the automated deployment pipeline. Developers have no reason to keep a “special” repository for each part of the project.

Tip

Prefer Continuous Delivery over snapshots for (external) dependencies.

Snapshots are an artifact from a development era where comprehensive testing wasn’t common, storage was expensive, and verification was difficult. Today’s updated engineering practices avoid inefficient handling of component dependencies.

Continuous Delivery suggested a more nuanced way to think about dependencies, repeated here. Currently, developers only have static dependencies, linked via version numbers captured as metadata in a build file somewhere. However, this isn’t sufficient for modern projects, which need a mechanism to indicate speculative updating. Thus, as the book suggests, developers should introduce two new designations for external dependencies: fluid and guarded. Fluid dependencies try to automatically update themselves to the next version, using mechanisms like deployment pipelines. For example, say that order fluidly relies on version 1.2 of framework. When framework updates itself to version 1.3, order tries to incorporate that change via its deployment pipeline, which is set up to rebuild the project anytime any part of it changes. If the deployment pipeline runs to completion, the fluid dependency between the components is updated. However, if something prevents successful completion (a failed test, a broken diamond dependency, or some other problem), the dependency is updated to a guarded reliance on framework 1.2, which means the developer should try to determine and fix the problem, restoring the fluid dependency. If the component is truly incompatible, developers create a permanent static reference to the old version, eschewing future automatic updates.
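The three designations behave like a small state machine, which the following Python sketch tries to capture. Everything here is hypothetical scaffolding: Reliance, DependencyLink, on_new_version, and pin are invented names, and pipeline_passes stands in for a run of the consumer's deployment pipeline. One assumption worth flagging: in this sketch, a guarded link retries whenever the pipeline is triggered again, and a passing run restores it to fluid.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Reliance(Enum):
    FLUID = "fluid"      # try to move to each new version automatically
    GUARDED = "guarded"  # last attempt failed; a developer should investigate
    STATIC = "static"    # permanently pinned; no automatic updates


@dataclass
class DependencyLink:
    consumer: str
    provider: str
    version: str
    reliance: Reliance = Reliance.FLUID

    def on_new_version(self, new_version: str,
                       pipeline_passes: Callable[[str, str, str], bool]) -> None:
        """React to a provider release (or a retry after a fix)."""
        if self.reliance is Reliance.STATIC:
            return  # pinned dependencies ignore new versions
        if pipeline_passes(self.consumer, self.provider, new_version):
            self.version = new_version
            self.reliance = Reliance.FLUID
        else:
            # Stay on the known-good version and flag the link for attention.
            self.reliance = Reliance.GUARDED

    def pin(self) -> None:
        """Developers decide the components are truly incompatible."""
        self.reliance = Reliance.STATIC
```

Using the example from the text, a link from order to framework 1.2 would become guarded on 1.2 if the pipeline fails against 1.3, and return to fluid on 1.3 once a fix lets the pipeline run to completion.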

None of the popular build tools support this level of functionality yet—developers must build this intelligence atop existing build tools. However, this model of dependencies works extremely well in evolutionary architectures, where cycle time is a critical foundational value, being proportional to many other key metrics.

Version Services Internally

In any integration architecture, developers inevitably must version service endpoints as the behavior evolves. Developers use two common patterns to version endpoints: version numbering and internal resolution. For version numbering, developers create a new endpoint name, often including the version number, when a breaking change occurs. This allows older integration points to call the legacy version while newer ones call the newer version. The alternative is internal resolution, where callers never change the endpoint; instead, developers build logic into the endpoint to determine the context of the caller, returning the correct version. The advantage of retaining the name forever is less coupling to specific version numbers in calling applications.
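The two patterns can be contrasted with a toy example. This Python sketch uses a made-up stock-level endpoint; the paths, the caller name warehouse-report, and the idea of resolving by caller identity are assumptions chosen for illustration (a real service might inspect headers, credentials, or request shape instead).

```python
def stock_v1(sku: str) -> int:
    """Legacy behavior: a bare count (illustrative fixed value)."""
    return 12


def stock_v2(sku: str) -> dict:
    """New behavior after a breaking change: a richer structure."""
    return {"sku": sku, "on_hand": 12, "reserved": 3}


# Pattern 1: version numbering. Each breaking change gets a new endpoint name,
# and every caller encodes the version it wants.
NUMBERED_ENDPOINTS = {"/stock/v1": stock_v1, "/stock/v2": stock_v2}


def call_numbered(path: str, sku: str):
    return NUMBERED_ENDPOINTS[path](sku)


# Pattern 2: internal resolution. One stable endpoint name; the service works
# out which behavior the caller expects.
LEGACY_CALLERS = {"warehouse-report"}


def call_resolved(caller: str, sku: str):
    handler = stock_v1 if caller in LEGACY_CALLERS else stock_v2
    return handler(sku)
```

With internal resolution, retiring the old version means emptying LEGACY_CALLERS and deleting stock_v1, with no change to any caller's endpoint name.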

In either case, severely limit the number of supported versions. The more versions, the more testing and other engineering burdens. Strive to support only two versions at a time, and only temporarily.

Case Study: Evolving PenultimateWidgets’ Ratings

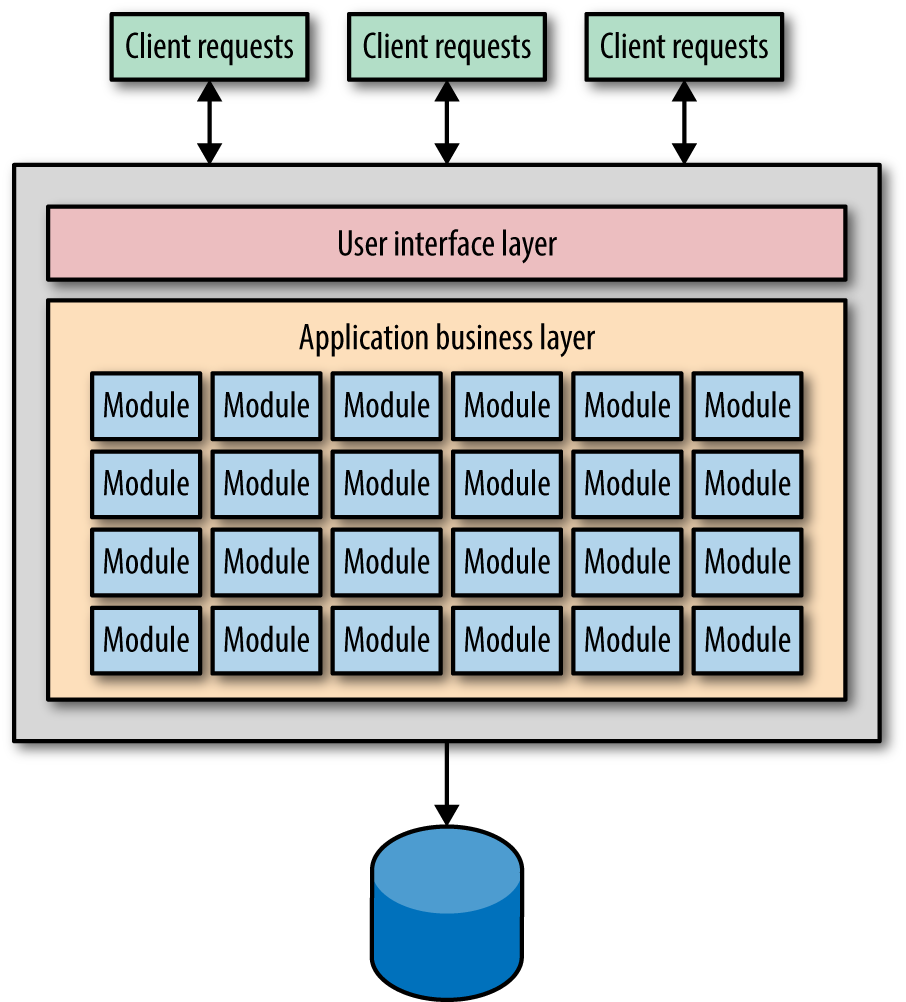

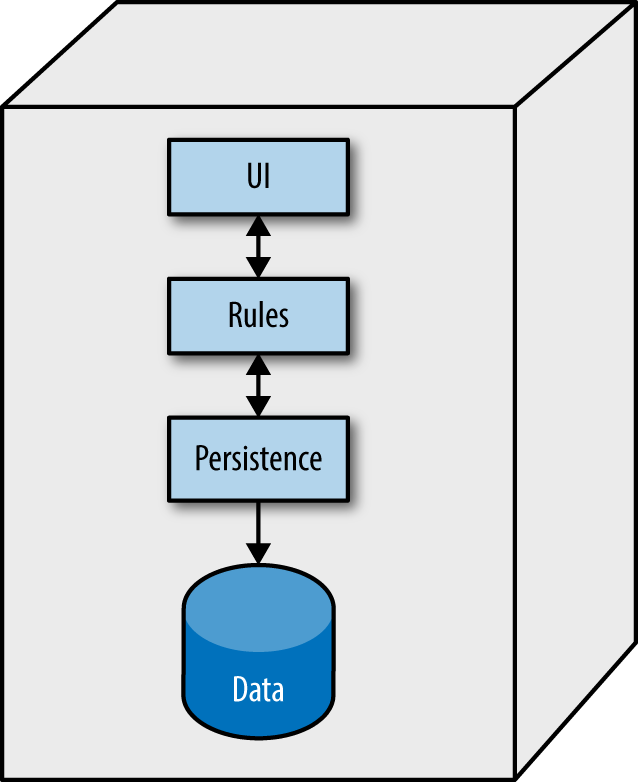

PenultimateWidgets has a microservices architecture so developers can make small changes. Let’s look closer at details of one of those changes, switching star ratings, as outlined in Chapter 3. Currently, PenultimateWidgets has a star rating service, whose parts are shown in Figure 6-9.

Figure 6-9. The internals of PenultimateWidgets’ StarRating service

As shown in Figure 6-9, the star rating service consists of a database and a layered architecture, with persistence, business rules, and a UI. Not all of PenultimateWidgets’ microservices include the UI. Some services are primarily informational, whereas others have UIs tightly coupled to the service’s behavior, as is the case with star ratings. The database is a traditional relational database that includes a column to track ratings for a particular item ID.

When the team decided to update the service to support half-star ratings, they modified the original service as shown in Figure 6-10.

Figure 6-10. The transitional phase, where StarRating supports both types

In Figure 6-10, they added a new column to the database to handle the additional data: whether a rating has an additional half star. The architects also added a proxy component to the service to resolve the return differences at the service boundary. Rather than force calling services to “understand” the version numbers of this service, the star rating service resolves the request type, sending back whichever format is requested. This is an example of using routing as an evolutionary mechanism. The star rating service can exist in this state as long as some services still want star ratings.
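The transitional state can be approximated with an in-memory SQLite database. The sketch below is a guess at the shape of the change, not PenultimateWidgets' actual schema: the table name ratings, the column has_half_star, and the functions open_ratings_db and get_rating are hypothetical, and returning the whole-star portion to legacy callers is an assumption about how the proxy resolves the difference.

```python
import sqlite3


def open_ratings_db() -> sqlite3.Connection:
    """Build the original schema, then apply the additive change."""
    db = sqlite3.connect(":memory:")
    # Original schema: one whole-star rating per item.
    db.execute(
        "CREATE TABLE ratings (item_id INTEGER PRIMARY KEY, stars INTEGER NOT NULL)"
    )
    db.execute("INSERT INTO ratings (item_id, stars) VALUES (1, 4), (2, 3)")
    # Additive change: a new column with a default, so existing rows stay valid.
    db.execute(
        "ALTER TABLE ratings ADD COLUMN has_half_star INTEGER NOT NULL DEFAULT 0"
    )
    db.execute("UPDATE ratings SET has_half_star = 1 WHERE item_id = 1")
    return db


def get_rating(db: sqlite3.Connection, item_id: int, wants_half_stars: bool):
    """Proxy at the service boundary: answer in the format the caller asked for."""
    stars, has_half = db.execute(
        "SELECT stars, has_half_star FROM ratings WHERE item_id = ?", (item_id,)
    ).fetchone()
    if wants_half_stars:
        return stars + 0.5 * has_half  # new format, e.g., 4.5
    return stars  # legacy whole-star format
```

Once no caller passes wants_half_stars=False, the legacy branch in get_rating can be deleted without touching the schema.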

Once the last dependent service has evolved away from whole-star ratings, developers can remove the old code path, as shown in Figure 6-11.

Figure 6-11. The ending state of StarRating, supporting only the new type of rating

As shown in Figure 6-11, developers can remove the old code path, and perhaps remove the proxy layer to handle version differences (or perhaps leave it to support future evolution).

In this case, PenultimateWidgets’ change wasn’t difficult from a data evolution standpoint because the developers were able to make an additive change, meaning they can add to the database schema rather than change it. What about the case where the database must change as well because of a new feature? Refer back to the discussion on evolutionary data design in Chapter 5.