Chapter 2. Understanding Reactive Microservices and Vert.x

Microservices are not really a new thing. They arose from research conducted in the 1970s and have come into the spotlight recently because they are a way to move faster, to deliver value more easily, and to improve agility. They have roots in actor-based systems, service design, dynamic and autonomic systems, domain-driven design, and distributed systems. The fine-grained modular design of microservices inevitably leads developers to create distributed systems. As I’m sure you’ve noticed, distributed systems are hard. They fail, they are slow, and they are bound by the CAP and FLP theorems. In other words, they are very complicated to build and maintain. That’s where reactive comes in.

But what is reactive? Reactive is an overloaded term these days. The Oxford dictionary defines reactive as “showing a response to a stimulus.” So, reactive software reacts and adapts its behavior based on the stimuli it receives. However, the responsiveness and adaptability promoted by this definition are programming challenges because the flow of computation isn’t controlled by the programmer but by the stimuli. In this chapter, we are going to see how Vert.x helps you be reactive by combining:

- Reactive programming: A development model focusing on the observation of data streams, reacting to changes, and propagating them
- Reactive system: An architecture style used to build responsive and robust distributed systems based on asynchronous message-passing

A reactive microservice is the building block of reactive microservice systems. However, due to their asynchronous aspect, the implementation of these microservices is challenging. Reactive programming reduces this complexity. How? Let’s answer this question right now.

Reactive Programming

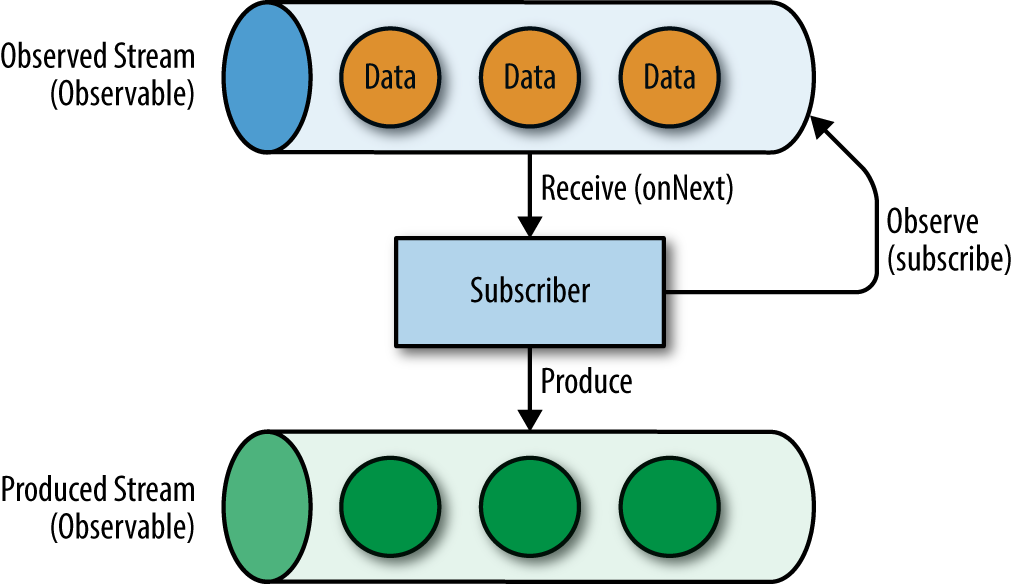

Figure 2-1. Reactive programming is about flow of data and reacting to it

Reactive programming is a development model oriented around data flows and the propagation of data. In reactive programming, the stimuli are the data transiting in the flow, which are called streams. There are many ways to implement a reactive programming model. In this report, we are going to use Reactive Extensions (http://reactivex.io/) where streams are called observables, and consumers subscribe to these observables and react to the values (Figure 2-1).

To make these concepts less abstract, let’s look at an example using RxJava (https://github.com/ReactiveX/RxJava), a library implementing the Reactive Extensions in Java. These examples are located in the directory reactive-programming in the code repository.

    observable.subscribe(
        data -> { // onNext
            System.out.println(data);
        },
        error -> { // onError
            error.printStackTrace();
        },
        () -> { // onComplete
            System.out.println("No more data");
        }
    );

In this snippet, the code is observing (subscribe) an Observable and is notified when values transit in the flow. The subscriber can receive three types of events. onNext is called when there is a new value, while onError is called when an error is emitted in the stream or a stage throws an Exception. The onComplete callback is invoked when the end of the stream is reached, which would not occur for unbounded streams. RxJava includes a set of operators to produce, transform, and coordinate Observables, such as map to transform a value into another value, or flatMap to produce an Observable or chain another asynchronous action:

    // sensor is an unbounded observable publishing values.
    sensor
        // Groups values 10 by 10, and produces an observable
        // with these values.
        .window(10)
        // Compute the average on each group
        .flatMap(MathObservable::averageInteger)
        // Produce a json representation of the average
        .map(average -> "{'average': " + average + "}")
        .subscribe(
            data -> {
                System.out.println(data);
            },
            error -> {
                error.printStackTrace();
            }
        );
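The three subscriber callbacks can also be tried without adding RxJava to a project. The sketch below is not RxJava: it assumes Java 9 or later and uses the JDK's `java.util.concurrent.Flow` interfaces, with `SubmissionPublisher` standing in for an observable. The class name `FlowSubscriberSketch` and the event labels are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

public class FlowSubscriberSketch {

    /** Publishes the values, completes the stream, and returns the events the subscriber saw. */
    public static List<String> run(int... values) {
        List<String> events = new ArrayList<>();
        CountDownLatch terminated = new CountDownLatch(1);

        // Leaving the try block calls close(), which signals onComplete to subscribers.
        try (SubmissionPublisher<Integer> stream = new SubmissionPublisher<>()) {
            stream.subscribe(new Flow.Subscriber<Integer>() {
                @Override
                public void onSubscribe(Flow.Subscription subscription) {
                    // Ask for every item; this sketch ignores backpressure.
                    subscription.request(Long.MAX_VALUE);
                }

                @Override
                public void onNext(Integer value) {
                    events.add("onNext " + value);
                }

                @Override
                public void onError(Throwable failure) {
                    events.add("onError");
                    terminated.countDown();
                }

                @Override
                public void onComplete() {
                    events.add("onComplete");
                    terminated.countDown();
                }
            });
            for (int value : values) {
                stream.submit(value);
            }
        }

        try {
            // Delivery is asynchronous, so wait for the terminal event.
            terminated.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run(1, 2, 3));
    }
}
```

For a bounded stream such as this one, the subscriber sees every value followed by a single completion event. An unbounded stream would never reach `onComplete`.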

RxJava v1.x defines different types of streams as follows:

- Observables are bounded or unbounded streams expected to contain a sequence of values.
- Singles are streams with a single value, generally the deferred result of an operation, similar to futures or promises.
- Completables are streams without value but with an indication of whether an operation completed or failed.

What can we do with RxJava? For instance, we can describe sequences of asynchronous actions and orchestrate them. Let’s imagine you want to download a document, process it, and upload it. The download and upload operations are asynchronous. To develop this sequence, you use something like:

    // Asynchronous task downloading a document
    Future<String> downloadTask = download();
    // Create a single completed when the document is downloaded.
    Single.from(downloadTask)
        // Process the content
        .map(content -> process(content))
        // Upload the document, this asynchronous operation
        // just indicates its successful completion or failure.
        .flatMapCompletable(payload -> upload(payload))
        .subscribe(
            () -> System.out.println("Document downloaded, updated and uploaded"),
            t -> t.printStackTrace()
        );

You can also orchestrate asynchronous tasks. For example, to combine the results of two asynchronous operations, you use the zip operator combining values of different streams:

    // Download two documents
    Single<String> downloadTask1 = downloadFirstDocument();
    Single<String> downloadTask2 = downloadSecondDocument();
    // When both documents are downloaded, combine them
    Single.zip(downloadTask1, downloadTask2,
            (doc1, doc2) -> doc1 + " " + doc2)
        .subscribe(
            (doc) -> System.out.println("Document combined: " + doc),
            t -> t.printStackTrace()
        );
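For readers without RxJava at hand, the same two shapes (a sequence of asynchronous steps, and a combination of two independent results) can be approximated with the JDK's `CompletableFuture`. This is only an analogy and not the RxJava API. The `download` and `upload` methods are hypothetical placeholders that complete immediately on a background thread.

```java
import java.util.concurrent.CompletableFuture;

public class CombineSketch {

    // Hypothetical asynchronous download standing in for real I/O.
    static CompletableFuture<String> download(String name) {
        return CompletableFuture.supplyAsync(() -> "content of " + name);
    }

    // Hypothetical asynchronous upload that only reports completion or failure.
    static CompletableFuture<Void> upload(String payload) {
        return CompletableFuture.runAsync(() -> { /* pretend to send the payload */ });
    }

    /** Download, then process, then upload, expressed as one pipeline. */
    public static CompletableFuture<String> downloadProcessUpload(String name) {
        return download(name)
            // Process the content once it is available
            .thenApply(String::toUpperCase)
            // Chain the asynchronous upload and report what was uploaded
            .thenCompose(payload -> upload(payload)
                .thenApply(done -> "uploaded: " + payload));
    }

    /** Combines two independent downloads once both have completed. */
    public static CompletableFuture<String> combine(String first, String second) {
        return download(first)
            .thenCombine(download(second), (doc1, doc2) -> doc1 + " " + doc2);
    }

    public static void main(String[] args) {
        System.out.println(downloadProcessUpload("report").join());
        System.out.println(combine("a", "b").join());
    }
}
```

Here `thenApply`, `thenCompose`, and `thenCombine` play roughly the roles that `map`, `flatMapCompletable`, and `zip` play in the RxJava snippets.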

The use of these operators gives you superpowers: you can coordinate asynchronous tasks and data flow in a declarative and elegant way. How is this related to reactive microservices? To answer this question, let’s have a look at reactive systems.

Reactive Systems

While reactive programming is a development model, reactive systems is an architectural style used to build distributed systems (http://www.reactivemanifesto.org/). It’s a set of principles used to achieve responsiveness and build systems that respond to requests in a timely fashion even with failures or under load.

To build such a system, reactive systems embrace a message-driven approach. All the components interact using messages sent and received asynchronously. To decouple senders and receivers, components send messages to virtual addresses. They also register to the virtual addresses to receive messages. An address is a destination identifier such as an opaque string or a URL. Several receivers can be registered on the same address; the delivery semantics depend on the underlying technology. Senders do not block and wait for a response. The sender may receive a response later, but in the meantime, it can receive and send other messages. This asynchronous aspect is particularly important and impacts how your application is developed.

Using asynchronous message-passing interactions provides reactive systems with two critical properties:

- Elasticity: The ability to scale horizontally (scale out/in)
- Resilience: The ability to handle failure and recover

Elasticity comes from the decoupling provided by message interactions. Messages sent to an address can be consumed by a set of consumers using a load-balancing strategy. When a reactive system faces a spike in load, it can spawn new instances of consumers and dispose of them afterward.

Resilience is provided by the ability to handle failure without blocking as well as the ability to replicate components. First, message interactions allow components to deal with failure locally. Thanks to the asynchronous aspect, components do not actively wait for responses, so a failure happening in one component would not impact other components. Replication is also key to resilience. When a node processing a message fails, the message can be processed by another node registered on the same address.
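To make address-based delivery more concrete, here is a minimal in-process sketch in plain Java. It is not the Vert.x event bus or any real messaging product. The class `AddressBusSketch`, its `register` and `send` methods, and the round-robin and failover policies are all assumptions chosen for illustration. Consumers register on a virtual address, senders get a future back immediately, messages are spread across the consumers of an address, and a failing consumer is skipped in favor of the next one.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

public class AddressBusSketch {
    // Consumers registered per virtual address
    private final Map<String, List<Function<String, String>>> consumers = new ConcurrentHashMap<>();
    // Round-robin position per address
    private final Map<String, AtomicInteger> cursors = new ConcurrentHashMap<>();

    /** Registers a consumer on an address; several consumers may share one address. */
    public void register(String address, Function<String, String> consumer) {
        consumers.computeIfAbsent(address, a -> new CopyOnWriteArrayList<>()).add(consumer);
    }

    /** Sends without blocking the caller; the reply arrives through the returned future. */
    public CompletableFuture<String> send(String address, String message) {
        List<Function<String, String>> targets = consumers.getOrDefault(address, List.of());
        if (targets.isEmpty()) {
            return CompletableFuture.failedFuture(
                new IllegalStateException("no consumer on " + address));
        }
        // Pick the starting consumer now so that successive sends rotate predictably.
        int start = cursors.computeIfAbsent(address, a -> new AtomicInteger()).getAndIncrement();
        return CompletableFuture.supplyAsync(() -> {
            RuntimeException last = null;
            for (int i = 0; i < targets.size(); i++) {
                Function<String, String> target =
                    targets.get(Math.floorMod(start + i, targets.size()));
                try {
                    return target.apply(message);
                } catch (RuntimeException failure) {
                    last = failure; // this consumer failed, try the next one
                }
            }
            throw new IllegalStateException("all consumers failed on " + address, last);
        });
    }

    public static void main(String[] args) {
        AddressBusSketch bus = new AddressBusSketch();
        bus.register("orders", message -> "A handled " + message);
        bus.register("orders", message -> "B handled " + message);
        System.out.println(bus.send("orders", "order-1").join());
        System.out.println(bus.send("orders", "order-2").join());
    }
}
```

Adding capacity in this sketch means registering one more consumer on the same address; senders do not change.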

Thanks to these two characteristics, the system becomes responsive. It can adapt to higher or lower loads and continue to serve requests in the face of high loads or failures. This set of principles is essential when building microservice systems that are highly distributed, and when dealing with services beyond the control of the caller. It is necessary to run several instances of your services to balance the load and handle failures without breaking the availability. We will see in the next chapters how Vert.x addresses these topics.

Reactive Microservices

When building a microservice (and thus distributed) system, each service can change, evolve, fail, exhibit slowness, or be withdrawn at any time. Such issues must not impact the behavior of the whole system. Your system must embrace changes and be able to handle failures. You may run in a degraded mode, but your system should still be able to handle the requests.

To ensure such behavior, reactive microservice systems are composed of reactive microservices. These microservices have four characteristics:

- Autonomy
- Asynchronicity
- Resilience
- Elasticity

Reactive microservices are autonomous. They can adapt to the availability or unavailability of the services surrounding them. However, autonomy comes paired with isolation. Reactive microservices can handle failure locally, act independently, and cooperate with others as needed. A reactive microservice uses asynchronous message-passing to interact with its peers. It also receives messages and has the ability to produce responses to these messages.

Thanks to the asynchronous message-passing, reactive microservices can face failures and adapt their behavior accordingly. Failures should not be propagated but handled close to the root cause. When a microservice blows up, the consumer microservice must handle the failure and not propagate it. This isolation principle is a key characteristic to prevent failures from bubbling up and breaking the whole system. Resilience is not only about managing failure, it’s also about self-healing. A reactive microservice should implement recovery or compensation strategies when failures occur.
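As a rough illustration of handling a failure close to its source, the following sketch uses the JDK's `CompletableFuture` (Java 9 or later for `orTimeout` and `failedFuture`). The `fetchRecommendations` call, its `healthy` flag, and the fallback text are hypothetical. The point is only that the caller bounds its wait and substitutes a degraded answer instead of propagating the failure.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

public class FallbackSketch {

    // Hypothetical remote call: succeeds when the peer is healthy, fails otherwise.
    static CompletableFuture<String> fetchRecommendations(boolean healthy) {
        if (healthy) {
            return CompletableFuture.supplyAsync(() -> "personalized recommendations");
        }
        return CompletableFuture.failedFuture(new IllegalStateException("service unavailable"));
    }

    /** Handles the peer's failure locally and answers in a degraded mode. */
    public static CompletableFuture<String> handleRequest(boolean healthy) {
        return fetchRecommendations(healthy)
            // Do not wait forever for a slow peer
            .orTimeout(1, TimeUnit.SECONDS)
            // Compensation strategy: fall back to a default instead of failing the caller
            .exceptionally(failure -> "default recommendations");
    }

    public static void main(String[] args) {
        System.out.println(handleRequest(true).join());
        System.out.println(handleRequest(false).join());
    }
}
```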

Finally, a reactive microservice must be elastic, so the system can adapt the number of instances to manage the load. This implies a set of constraints such as avoiding in-memory state, sharing state between instances if required, or being able to route messages to the same instances for stateful services.
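One simple way to route messages for the same entity to the same instance is to derive the instance index from a routing key. The sketch below assumes a hypothetical `instanceFor` helper and a fixed instance count; it is one possible scheme, not something the text prescribes.

```java
public class StickyRoutingSketch {

    /** Maps a routing key (for example a session or customer id) to one of the instances. */
    public static int instanceFor(String routingKey, int instanceCount) {
        if (instanceCount <= 0) {
            throw new IllegalArgumentException("instanceCount must be positive");
        }
        // floorMod keeps the index non-negative even when hashCode() is negative.
        return Math.floorMod(routingKey.hashCode(), instanceCount);
    }

    public static void main(String[] args) {
        System.out.println(instanceFor("customer-42", 3));
        System.out.println(instanceFor("customer-42", 3)); // same key, same instance
    }
}
```

With plain modulo routing, changing the number of instances remaps most keys, which is why elastic systems that need sticky routing often turn to consistent hashing or to shared state instead.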

What About Vert.x?

Vert.x is a toolkit for building reactive and distributed systems using an asynchronous nonblocking development model. Because it’s a toolkit and not a framework, you use Vert.x as any other library. It does not constrain how you build or structure your system; you use it as you want. Vert.x is very flexible; you can use it as a standalone application or embedded in a larger one.

From a developer standpoint, Vert.x is a set of JAR files. Each Vert.x module is a JAR file that you add to your $CLASSPATH. From HTTP servers and clients, to messaging, to lower-level protocols such as TCP or UDP, Vert.x provides a large set of modules to build your application the way you want. You can pick any of these modules in addition to Vert.x Core (the main Vert.x component) to build your system. Figure 2-2 shows an excerpt view of the Vert.x ecosystem.

Figure 2-2. An incomplete overview of the Vert.x ecosystem

Vert.x also provides a great stack to help build microservice systems. Vert.x pushed the microservice approach before it became popular. It has been designed and built to provide an intuitive and powerful way to build microservice systems. And that’s not all. With Vert.x you can build reactive microservices. When you build a microservice with Vert.x, it infuses one of its core characteristics into the microservice: it becomes asynchronous all the way.

Asynchronous Development Model

All applications built with Vert.x are asynchronous. Vert.x applications are event-driven and nonblocking. Your application is notified when something interesting happens. Let’s look at a concrete example. Vert.x provides an easy way to create an HTTP server. This HTTP server is notified every time an HTTP request is received:

    vertx.createHttpServer()
        .requestHandler(request -> {
            // This handler will be called every time an HTTP
            // request is received at the server
            request.response().end("hello Vert.x");
        })
        .listen(8080);

In this example, we set a requestHandler to receive the HTTP requests (event) and send hello Vert.x back (reaction). A Handler is a function called when an event occurs. In our example, the code of the handler is executed with each incoming request. Notice that a Handler does not return a result. However, a Handler can provide a result. How this result is provided depends on the type of interaction. In the last snippet, it just writes the result into the HTTP response. The Handler is chained to a listen request on the socket. Invoking this HTTP endpoint produces a simple HTTP response:

    HTTP/1.1 200 OK
    Content-Length: 12

    hello Vert.x

With very few exceptions, none of the APIs in Vert.x block the calling thread. If a result can be provided immediately, it will be returned; otherwise, a Handler is used to receive events at a later time. The Handler is notified when an event is ready to be processed or when the result of an asynchronous operation has been computed.

In traditional imperative programming, you would write something like:

    int res = compute(1, 2);

In this code, you wait for the result of the method. When switching to an asynchronous nonblocking development model, you pass a Handler invoked when the result is ready:¹

    compute(1, 2, res -> {
        // Called with the result
    });

In the last snippet, compute does not return a result anymore, so you don’t wait until this result is computed and returned. You pass a Handler that is called when the result is ready.
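A self-contained version of this idea might look like the sketch below. It uses only the JDK: `Consumer<Integer>` stands in for a Vert.x `Handler`, and a single daemon worker thread (an arbitrary choice for this sketch) stands in for wherever the work actually happens. The class name `AsyncComputeSketch` is made up.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

public class AsyncComputeSketch {

    // A single daemon worker thread that performs the computation.
    private static final ExecutorService WORKER = Executors.newSingleThreadExecutor(task -> {
        Thread thread = new Thread(task, "compute-worker");
        thread.setDaemon(true);
        return thread;
    });

    /** Returns immediately; the handler is called later with the result. */
    public static void compute(int a, int b, Consumer<Integer> handler) {
        WORKER.execute(() -> handler.accept(a + b));
    }

    public static void main(String[] args) {
        CompletableFuture<Integer> seen = new CompletableFuture<>();
        compute(1, 2, seen::complete);
        System.out.println("compute() has returned; the caller was not blocked");
        // join() is used here only so the demo can print the value before exiting.
        System.out.println("handler received " + seen.join());
    }
}
```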

Thanks to this nonblocking development model, you can handle a highly concurrent workload using a small number of threads. In most cases, Vert.x calls your handlers using a thread called an event loop. This event loop is depicted in Figure 2-3. It consumes a queue of events and dispatches each event to the interested Handlers.

Figure 2-3. The event loop principle

The threading model proposed by the event loop has a huge benefit: it simplifies concurrency. As there is only one thread, you are always called by the same thread and never concurrently. However, it also has a very important rule that you must obey:

Don’t block the event loop.

Vert.x golden rule

Because nothing blocks, an event loop can deliver a huge number of events in a short amount of time. This is called the reactor pattern (https://en.wikipedia.org/wiki/Reactor_pattern).

Let’s imagine, for a moment, that you break the rule. In the previous code snippet, the request handler is always called from the same event loop. So, if the HTTP request processing blocks instead of replying to the user immediately, the other requests would not be handled in a timely fashion and would be queued, waiting for the thread to be released. You would lose the scalability and efficiency benefit of Vert.x. So what can be blocking? The first obvious example is JDBC database accesses. They are blocking by nature. Long computations are also blocking. For example, code that calculates pi to the 200,000th decimal place is definitely blocking. Don’t worry: Vert.x also provides constructs to deal with blocking code.
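The event loop principle, and the reason a blocking handler stalls everything behind it, can be seen in a few lines of plain Java. This is a teaching sketch, not how Vert.x is implemented: the class `EventLoopSketch`, the `on`/`emit` methods, and the thread name are invented here. One thread takes events from a queue and calls the handlers registered for each event type, one at a time.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

public class EventLoopSketch {

    private static final class Event {
        final String type;
        final String payload;
        Event(String type, String payload) { this.type = type; this.payload = payload; }
    }

    // Marker event that tells the loop to exit once earlier events are processed
    private static final Event STOP = new Event("", "");

    private final BlockingQueue<Event> queue = new LinkedBlockingQueue<>();
    private final Map<String, List<Consumer<String>>> handlers = new ConcurrentHashMap<>();
    private final Thread thread;

    public EventLoopSketch(String name) {
        thread = new Thread(this::loop, name);
        thread.setDaemon(true);
        thread.start();
    }

    // The loop: take the next event and dispatch it to the interested handlers.
    private void loop() {
        try {
            for (Event e = queue.take(); e != STOP; e = queue.take()) {
                for (Consumer<String> handler : handlers.getOrDefault(e.type, List.of())) {
                    handler.accept(e.payload); // a handler that blocks here delays every later event
                }
            }
        } catch (InterruptedException interrupted) {
            Thread.currentThread().interrupt();
        }
    }

    public void on(String type, Consumer<String> handler) {
        handlers.computeIfAbsent(type, t -> new CopyOnWriteArrayList<>()).add(handler);
    }

    public void emit(String type, String payload) {
        queue.add(new Event(type, payload));
    }

    public void stopAndWait() {
        queue.add(STOP);
        try {
            thread.join();
        } catch (InterruptedException interrupted) {
            Thread.currentThread().interrupt();
        }
    }

    /** Emits three events and returns what the handler observed, in order. */
    public static List<String> demo() {
        EventLoopSketch loop = new EventLoopSketch("sketch-eventloop-0");
        List<String> seen = new CopyOnWriteArrayList<>();
        loop.on("request", payload -> seen.add(payload + " on " + Thread.currentThread().getName()));
        loop.emit("request", "a");
        loop.emit("request", "b");
        loop.emit("request", "c");
        loop.stopAndWait();
        return seen;
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
    }
}
```

Every handler call happens on the same thread and in arrival order, so handlers need no synchronization among themselves. If the handler slept for a second, events `b` and `c` would each wait behind it, which is the situation the golden rule warns against.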

In a standard reactor implementation, there is a single event loop thread that runs around in a loop delivering all events to all handlers as they arrive. The issue with a single thread is simple: it can only run on a single CPU core at one time. Vert.x works differently here. Instead of a single event loop, each Vert.x instance maintains several event loops, which is called a multireactor pattern, as shown in Figure 2-4.

Figure 2-4. The multireactor principle

The events are dispatched by the different event loops. However, once a Handler is executed by an event loop, it will always be invoked by this event loop, enforcing the concurrency benefits of the reactor pattern. If, as in Figure 2-4, you have several event loops, Vert.x can balance the load across different CPU cores. How does that work with our HTTP example? Vert.x registers the socket listener once and dispatches the requests to the different event loops.
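The pinning of a handler to one event loop can be sketched with a small pool of single-threaded executors. This is an illustration under assumptions, not Vert.x internals: `MultiReactorSketch`, its `bind` method, and the round-robin choice of loop at registration time are all made up for the example.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

public class MultiReactorSketch {
    private final ExecutorService[] loops;
    private final AtomicInteger nextLoop = new AtomicInteger();

    public MultiReactorSketch(int loopCount) {
        loops = new ExecutorService[loopCount];
        for (int i = 0; i < loopCount; i++) {
            String name = "sketch-eventloop-" + i;
            // Each "event loop" is one thread draining its own task queue.
            loops[i] = Executors.newSingleThreadExecutor(task -> new Thread(task, name));
        }
    }

    /** Pins the handler to one loop; the returned consumer always dispatches on that loop. */
    public <T> Consumer<T> bind(Consumer<T> handler) {
        ExecutorService loop = loops[Math.floorMod(nextLoop.getAndIncrement(), loops.length)];
        return event -> loop.execute(() -> handler.accept(event));
    }

    /** Lets queued events finish, then stops the loop threads. */
    public void shutdownAndWait() {
        for (ExecutorService loop : loops) {
            loop.shutdown();
        }
        try {
            for (ExecutorService loop : loops) {
                loop.awaitTermination(5, TimeUnit.SECONDS);
            }
        } catch (InterruptedException interrupted) {
            Thread.currentThread().interrupt();
        }
    }

    /** Binds two handlers to two loops and records which thread ran each call. */
    public static List<String> demo() {
        MultiReactorSketch reactor = new MultiReactorSketch(2);
        List<String> log = new CopyOnWriteArrayList<>();
        Consumer<String> first =
            reactor.bind(event -> log.add("first:" + Thread.currentThread().getName()));
        Consumer<String> second =
            reactor.bind(event -> log.add("second:" + Thread.currentThread().getName()));
        for (int i = 0; i < 3; i++) {
            first.accept("event" + i);
            second.accept("event" + i);
        }
        reactor.shutdownAndWait();
        return log;
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
    }
}
```

Each handler keeps the single-threaded guarantee of the reactor pattern, while the two handlers can run in parallel on different cores. The interleaving between the two handlers is not deterministic, but the thread seen by each one never changes.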

Verticles—the Building Blocks

Vert.x gives you a lot of freedom in how you can shape your application and code. But it also provides bricks to easily start writing Vert.x applications and comes with a simple, scalable, actor-like deployment and concurrency model out of the box. Verticles are chunks of code that get deployed and run by Vert.x. An application, such as a microservice, would typically be comprised of many verticle instances running in the same Vert.x instance at the same time. A verticle typically creates servers or clients, registers a set of Handlers, and encapsulates a part of the business logic of the system.

Regular verticles are executed on the Vert.x event loop and can never block. Vert.x ensures that each verticle is always executed by the same thread and never concurrently, hence avoiding synchronization constructs. In Java, a verticle is a class extending the AbstractVerticle class:

    import io.vertx.core.AbstractVerticle;

    public class MyVerticle extends AbstractVerticle {

        @Override
        public void start() throws Exception {
            // Executed when the verticle is deployed
        }

        @Override
        public void stop() throws Exception {
            // Executed when the verticle is un-deployed
        }
    }

Verticles have access to the vertx member (provided by the AbstractVerticle class) to create servers and clients and to interact with the other verticles. Verticles can also deploy other verticles, configure them, and set the number of instances to create. The instances are associated with the different event loops (implementing the multireactor pattern), and Vert.x balances the load among these instances.

From Callbacks to Observables

As seen in the previous sections, the Vert.x development model uses callbacks. When orchestrating several asynchronous actions, this callback-based development model tends to produce complex code. For example, let’s look at how we would retrieve data from a database. First, we need a connection to the database, then we send a query to the database, process the results, and release the connection. All these operations are asynchronous. Using callbacks, you would write the following code using the Vert.x JDBC client:

    client.getConnection(conn -> {
        if (conn.failed()) {
            /* failure handling */
        } else {
            SQLConnection connection = conn.result();
            connection.query("SELECT * from PRODUCTS", rs -> {
                if (rs.failed()) {
                    /* failure handling */
                } else {
                    List<JsonArray> lines = rs.result().getResults();
                    for (JsonArray l : lines) {
                        System.out.println(new Product(l));
                    }
                    connection.close(done -> {
                        if (done.failed()) {
                            /* failure handling */
                        }
                    });
                }
            });
        }
    });

While still manageable, the example shows that callbacks can quickly lead to unreadable code. You can also use Vert.x Futures to handle asynchronous actions. Unlike Java Futures, Vert.x Futures are nonblocking. Futures provide higher-level composition operators to build sequences of actions or to execute actions in parallel. Typically, as demonstrated in the next snippet, we compose futures to build the sequence of asynchronous actions:

    // Note: `connection` is not declared in this excerpt. It is presumably a
    // mutable holder (such as an AtomicReference<SQLConnection>) defined earlier.
    Future<SQLConnection> future = getConnection();
    future
        .compose(conn -> {
            connection.set(conn);
            // Return a future of ResultSet
            return selectProduct(conn);
        })
        // Return a collection of products by mapping
        // each row to a Product
        .map(result -> toProducts(result.getResults()))
        .setHandler(ar -> {
            if (ar.failed()) {
                /* failure handling */
            } else {
                ar.result().forEach(System.out::println);
            }
            connection.get().close(done -> {
                if (done.failed()) {
                    /* failure handling */
                }
            });
        });

However, while Futures make the code a bit more declarative, we are still retrieving all the rows in one batch and processing them. This result can be huge and take a lot of time to be retrieved. At the same time, you don’t need the whole result to start processing it. You can process the rows one by one as soon as they arrive. Fortunately, Vert.x provides an answer to this development model challenge and offers you a way to implement reactive microservices using a reactive programming development model. Vert.x provides RxJava APIs to:

- Combine and coordinate asynchronous tasks
- React to incoming messages as a stream of input

Let’s rewrite the previous code using the RxJava APIs:

    // We retrieve a connection and cache it,
    // so we can retrieve the value later.
    Single<SQLConnection> connection = client.rxGetConnection();
    connection
        .flatMapObservable(conn ->
            conn
                // Execute the query
                .rxQueryStream("SELECT * from PRODUCTS")
                // Publish the rows one by one in a new Observable
                .flatMapObservable(SQLRowStream::toObservable)
                // Don't forget to close the connection
                .doAfterTerminate(conn::close)
        )
        // Map every row to a Product
        .map(Product::new)
        // Display the result one by one
        .subscribe(System.out::println);

In addition to improving readability, reactive programming allows you to subscribe to a stream of results and process items as soon as they are available. With Vert.x you can choose the development model you prefer. In this report, we will use both callbacks and RxJava.

Let’s Start Coding!

It’s time for you to get your hands dirty. We are going to use Apache Maven and the Vert.x Maven plug-in to develop our first Vert.x application. However, you can use whichever tool you want (Gradle, Apache Maven with another packaging plug-in, or Apache Ant). You will find different examples in the code repository (in the packaging-examples directory). The code shown in this section is located in the hello-vertx directory.

Project Creation

Create a directory called my-first-vertx-app and move into this directory:

mkdir my-first-vertx-app

cd my-first-vertx-app

Then, issue the following command:

    mvn io.fabric8:vertx-maven-plugin:1.0.5:setup \
      -DprojectGroupId=io.vertx.sample \
      -DprojectArtifactId=my-first-vertx-app \
      -Dverticle=io.vertx.sample.MyFirstVerticle

This command generates the Maven project structure, configures the vertx-maven-plugin, and creates a verticle class (io.vertx.sample.MyFirstVerticle), which does nothing.

Write Your First Verticle

It’s now time to write the code for your first verticle. Modify the src/main/java/io/vertx/sample/MyFirstVerticle.java file with the following content:

    package io.vertx.sample;

    import io.vertx.core.AbstractVerticle;

    /**
     * A verticle extends the AbstractVerticle class.
     */
    public class MyFirstVerticle extends AbstractVerticle {

        @Override
        public void start() throws Exception {
            // We create a HTTP server object
            vertx.createHttpServer()
                // The requestHandler is called for each incoming
                // HTTP request, we print the name of the thread
                .requestHandler(req -> {
                    req.response().end("Hello from "
                        + Thread.currentThread().getName());
                })
                .listen(8080); // start the server on port 8080
        }
    }

To run this application, launch:

mvn compile vertx:run

If everything went fine, you should be able to see your application by opening http://localhost:8080 in a browser. The vertx:run goal launches the Vert.x application and also watches code alterations. So, if you edit the source code, the application will be automatically recompiled and restarted.

Let’s now look at the application output:

Hello from vert.x-eventloop-thread-0

The request has been processed by the event loop 0. You can try to emit more requests. The requests will always be processed by the same event loop, enforcing the concurrency model of Vert.x. Hit Ctrl+C to stop the execution.

Using RxJava

At this point, let’s take a look at the RxJava support provided by Vert.x to better understand how it works. In your pom.xml file, add the following dependency:

    <dependency>
      <groupId>io.vertx</groupId>
      <artifactId>vertx-rx-java</artifactId>
    </dependency>

Next, change the <vertx.verticle> property to be io.vertx.sample.MyFirstRXVerticle. This property tells the Vert.x Maven plug-in which verticle is the entry point of the application. Create the new verticle class (io.vertx.sample.MyFirstRXVerticle) with the following content:

    package io.vertx.sample;

    // We use the .rxjava. package containing the RX-ified APIs
    import io.vertx.rxjava.core.AbstractVerticle;
    import io.vertx.rxjava.core.http.HttpServer;

    public class MyFirstRXVerticle extends AbstractVerticle {

        @Override
        public void start() {
            HttpServer server = vertx.createHttpServer();
            // We get the stream of request as Observable
            server.requestStream().toObservable()
                .subscribe(req ->
                    // for each HTTP request, this method is called
                    req.response().end("Hello from "
                        + Thread.currentThread().getName())
                );
            // We start the server using rxListen returning a
            // Single of HTTP server. We need to subscribe to
            // trigger the operation
            server.rxListen(8080).subscribe();
        }
    }

The RxJava variants of the Vert.x APIs are provided in packages with rxjava in their name. RxJava methods are prefixed with rx, such as rxListen. In addition, the APIs are enhanced with methods providing Observable objects on which you can subscribe to receive the conveyed data.

Packaging Your Application as a Fat Jar

The Vert.x Maven plug-in packages the application in a fat jar. Once packaged, you can easily launch the application using java -jar <name>.jar:

mvn clean package

cd target

java -jar my-first-vertx-app-1.0-SNAPSHOT.jar

The application is up again, listening for HTTP traffic on the port specified. Hit Ctrl+C to stop it.

As an unopinionated toolkit, Vert.x does not promote one packaging model over another—you are free to use the packaging model you prefer. For instance, you could use fat jars, a filesystem approach with libraries in a specific directory, or embed the application in a war file and start Vert.x programmatically.

In this report, we will use fat jars, i.e., self-contained JARs embedding the code of the application, its resources, as well as all of its dependencies. This includes Vert.x, the Vert.x components you are using, and their dependencies. This packaging model uses a flat class loader mechanism, which makes it easier to understand application startup, dependency ordering, and logs. More importantly, it helps reduce the number of moving pieces that need to be installed in production. You don’t deploy an application to an existing app server. Once it is packaged in its fat jar, the application is ready to run with a simple java -jar <name.jar>. The Vert.x Maven plug-in builds a fat jar for you, but you can use another Maven plug-in such as the maven-shade-plugin too.

Logging, Monitoring, and Other Production Elements

Fat jars are a great packaging model for microservices and other types of applications because they simplify deployment and launch. But what about the features generally offered by app servers that make your application production ready? Typically, we expect to be able to write and collect logs, monitor the application, push external configuration, add health checks, and so on.

Don’t worry: Vert.x provides all these features. And because Vert.x is neutral, it provides several alternatives, letting you choose or implement your own. For example, for logging, Vert.x does not push a specific logging framework but instead allows you to use any logging framework you want, such as Apache Log4J 1 or 2, SLF4J, or even JUL (the JDK logging API). If you are interested in the messages logged by Vert.x itself, the internal Vert.x logging can be configured to use any of these logging frameworks. Monitoring Vert.x applications is generally done using JMX. The Vert.x Dropwizard Metrics module provides Vert.x metrics to JMX. You can also choose to publish these metrics to a monitoring server such as Prometheus (https://prometheus.io/) or CloudForms (https://www.redhat.com/en/technologies/management/cloudforms).

Summary

In this chapter we learned about reactive microservices and Vert.x. You also created your first Vert.x application. This chapter is by no means a comprehensive guide and just provides a quick introduction to the main concepts. If you want to go further on these topics, check out the following resources:

1 This code uses the lambda expressions introduced in Java 8. More details about this notation can be found at http://bit.ly/2nsyJJv.