Chapter 9

Software Development Security

Terms you’ll need to understand:

Acceptance testing

Cohesion

Coupling

Tuple

Polyinstantiation

Inference

Fuzzing

Bytecode

Database

Buffer overflow

Topics you’ll need to master:

The role of security in the software development lifecycle

Database design

The Capability Maturity Model

The steps of the development lifecycle

How to determine the effectiveness of software security

The impact of acquired software security

Different types of application design techniques

The role of change management

The primary types of databases

Introduction

Software plays a key role in the productivity of most organizations, but in many cases, we must contend with bugs and vulnerabilities in software. If you were to buy a defective car that exploded in minor accidents, the manufacturer would be forced to recall the car. However, if you buy a buggy piece of software, you have little recourse: You could wait for a patch, buy an upgrade, or maybe just buy another vendor’s product. Well-written applications are essential for good security. This chapter focuses on topics a security professional must know in order to apply security in the context of the CIA triad (confidentiality, integrity, and availability) to the software development lifecycle (SDLC), including programming languages, application design methodologies, change management, and database design.

Databases contain some of the most critical assets of an organization and are often targeted by hackers. As a security professional, you must understand the design, security issues, control mechanisms, and common vulnerabilities of databases. In addition to protecting the organization’s databases from attacks, a security professional must be aware of how databases interconnect and of the rise of large online cloud databases.

Integrating Security into the Development Lifecycle

As a security professional, you are not expected to be an expert programmer or understand the inner workings of a Python script. You must, however, know the overall environment in which software and systems are developed. You must understand and integrate security into the SDLC. You must also understand the software development process and be able to recognize whether adequate controls have been developed and implemented. Keep in mind that it’s generally far cheaper to build security in up front than to add it later. Organizations accomplish this by using a structured approach that offers a number of benefits:

Minimizes risk

Maximizes return on investment from using the software

Establishes security controls so that the risk associated with using software is mitigated

New systems are created when new opportunities are discovered; organizations take advantage of these technologies to solve existing problems, accelerate business processes, and improve productivity. Although it’s easy to see the need to incorporate security from the beginning of the process, design and development have historically been deficient in this regard. Many organizations are understaffed, and duties are not properly separated. Too often, inadequate consideration is given to implementing access-limiting controls within a program’s code.

Code that is not properly secured has exposure points and vulnerabilities. New technologies and developments such as cloud computing and the Internet of Things (IoT) have made using a structured, secure development process even more important than in the past. It is critical that development teams enforce a structured SDLC that has checks and balances and where security is considered from start to finish.

Avoiding System Failure

No matter how hard we plan, systems fail. Organizations must prepare for failures through the use of compensating controls that help limit the damage. Examples of compensating controls include checks and application controls as well as fail-safe procedures.

Checks and Application Controls

The easiest way to minimize problems in the processing of data is to ensure that only accurate, complete, and timely inputs can occur. Even poorly written applications can be made more robust by adding controls that check limits, data formats, and data lengths; these controls provide data input validation. Controls verifying that data is only processed through authorized routines should be in place. These application controls should be designed to detect any problems and to initiate corrective action. If there are mechanisms in place that permit the override of these security controls, their use should be logged and reviewed. Table 9.1 lists some common types of controls.

TABLE 9.1 Checks and Controls

| Check or Application Control | Description |
|---|---|
| Sequence check | Verifies that all sequence numbers fall within a specific series. For example, checks are numbered sequentially. If the day’s first-issued check is number 120 and the last check is number 144, all checks issued that day should fall between those numbers, and none should be missing. |
| Limit check | Ensures that data to be processed does not exceed a predetermined limit. For example, if a sale item is limited to five per customer, sales over that quantity should trigger an alert. |
| Range check | Ensures that data is within a predetermined range. For example, a date range check might verify that any date input is after 01/01/2021 and before 01/01/2025. |
| Validity check | Looks at the logical appropriateness of data. For example, orders to be processed today should be dated with the current date. |
| Table lookups | Verifies that a data point matches a data point in a set of values entered into a lookup table. |
| Existence check | Verifies that all required data is entered and appropriate. |
| Completeness check | Ensures that all required data has been added and that no fields contain null values. |
| Duplicate check | Ensures that a transaction is not a duplicate. For example, before a payment is made on invoice 833 for $1,612, accounts payable should verify that invoice number 833 has not already been paid. |
| Logic check | Verifies logic between data fields. For example, if Michael lives in Houston, his zip code cannot be a Dallas zip code such as 75201. |
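Several of the checks in Table 9.1 are simple enough to express directly in code. The following Python sketch implements a sequence check, limit check, range check, completeness check, and duplicate check using the same example values as the table (checks 120 through 144, a limit of five per customer, and the 2021 to 2025 date range). The function names and record layout are illustrative assumptions, not part of any particular product.

```python
from datetime import date


def sequence_check(numbers, first, last):
    """Return (missing, unexpected) sequence numbers for the series first..last."""
    expected = set(range(first, last + 1))
    seen = set(numbers)
    missing = sorted(expected - seen)       # gaps in the series
    unexpected = sorted(seen - expected)    # numbers outside the series
    return missing, unexpected


def limit_check(quantity, limit=5):
    """True if the quantity does not exceed the per-customer limit."""
    return quantity <= limit


def range_check(value, low=date(2021, 1, 1), high=date(2025, 1, 1)):
    """True if the date falls strictly after low and strictly before high."""
    return low < value < high


def completeness_check(record, required_fields):
    """True if every required field is present and not None (null)."""
    return all(record.get(field) is not None for field in required_fields)


def duplicate_check(invoice_number, paid_invoices):
    """True if the invoice has not already been paid."""
    return invoice_number not in paid_invoices


issued = [n for n in range(120, 145) if n != 130]  # check 130 never recorded
print(sequence_check(issued, 120, 144))  # ([130], [])
```

In a real application these checks would run before a transaction is committed, and a failure would trigger an alert or corrective action rather than simply returning a value.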

Failure States

Because all applications can fail, it is important that developers create mechanisms for safe failure with damage contained. Well-coded applications have built-in recovery procedures that are triggered if failure is detected; to protect a system from compromise, services can be terminated and systems can be disabled until the cause of failure can be investigated.

Tip

Systems that fail open (that is, allow access or traffic when a failure occurs) are open to compromise because an attacker can exploit the failure. For this reason, systems typically should not fail open. However, some intrusion detection systems/intrusion prevention systems (IDSs/IPSs) are designed to fail open to prevent disruption of traffic.
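A minimal Python sketch of fail-closed (fail-secure) behavior: if the authorization lookup fails for any reason, access is denied rather than granted. The `lookup` callable and the `AuthServiceError` class are hypothetical placeholders for whatever authorization backend a real system uses.

```python
class AuthServiceError(Exception):
    """Hypothetical error raised when the authorization backend is unavailable."""


def is_authorized(user, resource, lookup):
    """Fail-closed check: grant access only on an explicit True from lookup.

    Any exception (backend down, timeout, malformed response) is treated
    as a denial rather than an approval.
    """
    try:
        return lookup(user, resource) is True
    except Exception:
        # A real system would also log this failure for investigation.
        return False


def backend_down(user, resource):
    raise AuthServiceError("authorization service unreachable")


print(is_authorized("michael", "payroll", backend_down))  # False
```

A fail-open version would return `True` in the `except` branch; that is exactly the behavior the tip warns against for most systems.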

The Software Development Lifecycle

The software development lifecycle (SDLC), also referred to as the system development lifecycle, is a framework for system development that can facilitate and structure the development process. It describes a process for planning, creating, testing, and deploying an information system. The National Institute of Standards and Technology (NIST) defines the SDLC in NIST SP 800-34 as “the scope of activities associated with a system, encompassing the system’s initiation, development and acquisition, implementation, operation and maintenance, and ultimately its disposal that instigates another system initiation.”

Many other framework models exist, such as the Microsoft Security Development Lifecycle (SDL), which consists of the phases training, requirements, design, implementation, verification, release, and response. Although the stages and specific terms vary from one framework to the next, security concerns should be integrated into every stage. The overall goal is the same: to control the development process and add security at each level or stage of the process.

The SDLC model described in this chapter has seven distinct stages:

Project initiation

Functional requirements and planning

Software design specifications

Software development and build

Acceptance testing and implementation

Operational/maintenance

Disposal

ExamAlert

Read all CISSP exam questions carefully to make sure you understand the context in which SDL, SDLC, and other terms are being used.

Regardless of the names that a given framework might assign to the various steps, the goal of a framework is to provide security in the software development lifecycle. Failing to adopt a structured development model increases a product’s risk of failure and makes it more likely that the final product will not meet the customer’s needs. Table 9.2 describes the stages of development and the activities that occur in each stage.

TABLE 9.2 SDLC Stages and Activities

| Stage | Name | Activities |
|---|---|---|
| 1 | Project initiation | Determine project feasibility and conduct cost and benefit analysis; conduct payback analysis; establish a preliminary project timeline; conduct risk analysis |
| 2 | Functional requirements and planning | Define the need for the solution; identify the requirements; review proposed security controls |
| 3 | Software design specifications | Develop detailed design specifications; review support documentation; examine adequacy of security controls |
| 4 | Software development and build | Develop code; check modules |
| 5 | Acceptance testing and implementation | Enforce separation of duties; perform testing |
| 6 | Operational/maintenance | Release into production; perform certification and accreditation |
| 7 | Disposal | At end of life, remove data from the system or application and then remove it from production; define the level of sanitization and destruction of unneeded data that is appropriate for its classification |

Project Initiation

The project initiation stage usually involves a meeting of everyone involved with the project to answer big questions such as “What are we doing?” “Why are we doing it?” and “Who is our customer?” At this meeting, the feasibility of the project is considered. The cost of the project must be discussed, as well as the potential benefits that the product is expected to bring to the system’s users. A payback analysis should be performed to determine how long the project will take to pay for itself. In other words, the payback analysis determines how much time will elapse before accrued benefits overtake accrued and continuing costs.

If the decision is to move the project forward, the team should develop a preliminary timeline. Discussions should be held to determine the level of risk involved with handling data and to establish the ramifications of accidental exposure. This activity clarifies the precise type and nature of information that will be processed, as well as its level of sensitivity. This first look at security must be completed before the functional requirements and planning stage begins.
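The payback analysis performed during project initiation reduces to simple arithmetic: accumulate each year’s net benefit (benefits minus continuing costs) until it covers the initial cost. The Python sketch below assumes annual figures and interpolates linearly within the year in which payback occurs; the dollar amounts in the example are invented for illustration.

```python
def payback_period(initial_cost, annual_net_benefits):
    """Years until cumulative net benefits recover the initial cost.

    annual_net_benefits: benefits minus continuing costs for year 1, 2, ...
    Interpolates linearly within the payback year; returns None if the
    project does not pay for itself within the years supplied.
    """
    if initial_cost <= 0:
        return 0.0
    remaining = initial_cost
    for year, benefit in enumerate(annual_net_benefits, start=1):
        if benefit >= remaining:
            # Only part of this year is needed to recover what is left.
            return (year - 1) + remaining / benefit
        remaining -= benefit
    return None


print(payback_period(100_000, [30_000, 40_000, 50_000]))  # 2.6
```

Here $70,000 has been recovered after year 2, and the remaining $30,000 takes 30,000 / 50,000 = 0.6 of year 3, so the project pays for itself in about 2.6 years.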

ExamAlert

For the CISSP exam, you should understand that users should be brought into the process as early as possible. You are building something for them and must make sure that the designed system/product meets their needs.

Functional Requirements and Planning

The functional requirements and planning phase is the time to fully define the need for the solution and map how the proposed solution meets the need. This stage requires the participation of management as well as users. Users need to identify requirements and desires they have regarding the design of the application. Security representatives must verify the identified security requirements and determine whether adequate security controls are being defined.

An entity relationship diagram (ERD) is often used to help map the identified and verified requirements to the needs being met. An ERD defines the relationships among the many elements of a project. Like a database, an ERD groups together like data elements. Each entity is drawn as a rectangular box containing an identifying name and has a specific attribute, called the primary key, that uniquely identifies each instance of the entity. Relationships, drawn as diamonds, describe how the various entities are related to each other.

Figure 9.1 shows the basic design of an ERD. An ERD can be used to help define a data dictionary. After the data dictionary is designed, the database schema is developed to further define tables and fields and the relationships between them. The completed ERD becomes the blueprint for the design and is referred to during the design phase.

FIGURE 9.1 Entity Relationship Diagram
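To illustrate how a completed ERD feeds the database schema, the Python sketch below uses the standard library’s `sqlite3` module to turn a hypothetical two-entity ERD (a customer places orders) into tables. Each entity becomes a table, its primary key becomes a `PRIMARY KEY` column, and the relationship becomes a foreign key. The entity and column names are assumptions for illustration only.

```python
import sqlite3

# Hypothetical ERD: Customer --places--> Order (one-to-many).
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,          -- entity primary key
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,          -- entity primary key
    customer_id INTEGER NOT NULL
                REFERENCES customer(customer_id),  -- the "places" relationship
    total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
);
"""


def build_database():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    return conn


conn = build_database()
conn.execute("INSERT INTO customer VALUES (1, 'Michael')")
conn.execute("INSERT INTO customer_order VALUES (833, 1, 161200)")  # $1,612.00
try:
    conn.execute("INSERT INTO customer_order VALUES (834, 99, 5000)")  # no customer 99
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```

With foreign keys enabled, inserting an order for a customer that does not exist raises `sqlite3.IntegrityError`, so the relationship drawn in the ERD is enforced by the database itself.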

Software Design Specifications

Detailed design specifications can be generated either for a program that will be created or in support of the acquisition of an existing program. All functions and operations are described during the software design specifications stage. Programmers design screen layouts and create process diagrams. Supporting documentation is also generated. The output of the software design specifications stage is a set of specifications that delineates the new system as a collection of modules and subsystems.

Scope creep—the expansion of the scope of the project—most often occurs during this stage. Small changes in the design can add up over time. Although little changes might not appear to have big costs or impacts on the schedule of a project, these changes can have a cumulative effect and increase both the length and cost of the project.

Proper detail at this stage plays a large role in the overall security of the final product. Security should be the focus here, as controls are developed for input, output, audit mechanisms, and file protection. Sample input controls include dollar counts, transaction counts, and error detection and correction. Sample output controls include validity checking and authorization controls.
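Dollar counts and transaction counts are often applied as batch control totals: whoever prepares a batch records how many transactions it contains and their total value, and the receiving program recomputes both before processing. A minimal Python sketch, assuming a hypothetical batch format of a header count, a header total, and a list of amounts (`Decimal` avoids floating-point rounding errors in currency):

```python
from decimal import Decimal


def verify_batch(header_count, header_total, transactions):
    """Compare recorded control totals against the transactions received.

    Returns a list of discrepancies; an empty list means the batch balances.
    """
    problems = []
    if len(transactions) != header_count:
        problems.append(f"transaction count {len(transactions)} != {header_count}")
    actual_total = sum(transactions, Decimal("0"))
    if actual_total != header_total:
        problems.append(f"dollar total {actual_total} != {header_total}")
    return problems


batch = [Decimal("1612.00"), Decimal("250.50"), Decimal("99.99")]
print(verify_batch(3, Decimal("1962.49"), batch))  # []
print(verify_batch(4, Decimal("1962.49"), batch))  # one count discrepancy
```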

Software Development and Build

During the software development and build stage, programmers work to develop the application code specified in the previous stage, as illustrated in Figure 9.2.

FIGURE 9.2 Development and Build Stage Activities

Programmers should strive to develop modules that have high cohesion and low coupling. Cohesion refers to the ability of a module to perform a single task with little input from other modules. Coupling refers to the interconnections or dependencies between modules. Low coupling means that a change to one module should not affect another; combined with high cohesion, it makes modules easier to test and maintain.
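The following Python sketch is one way to illustrate high cohesion and low coupling; the tax calculation, invoice service, and audit sink are hypothetical. `compute_sales_tax` does exactly one job, and `InvoiceService` depends only on a small `AuditSink` interface (a `typing.Protocol`), so the audit implementation can change without touching the invoice code.

```python
from typing import List, Protocol


class AuditSink(Protocol):
    """The only capability InvoiceService needs from its collaborator."""

    def record(self, message: str) -> None: ...


def compute_sales_tax(amount_cents: int, rate_percent: int) -> int:
    """High cohesion: performs one task (tax calculation), truncating fractions of a cent."""
    return amount_cents * rate_percent // 100


class InvoiceService:
    """Low coupling: depends on the AuditSink interface, not a concrete logger."""

    def __init__(self, audit: AuditSink) -> None:
        self.audit = audit

    def total(self, amount_cents: int, rate_percent: int) -> int:
        result = amount_cents + compute_sales_tax(amount_cents, rate_percent)
        self.audit.record(f"invoice total computed: {result}")
        return result


class ListSink:
    """Trivial in-memory sink, e.g., for unit tests."""

    def __init__(self) -> None:
        self.messages: List[str] = []

    def record(self, message: str) -> None:
        self.messages.append(message)


service = InvoiceService(ListSink())
print(service.total(10_000, 8))  # 10800
```

Because `InvoiceService` never names a concrete logger, replacing `ListSink` with a file-based or syslog-based sink changes only the setup code, not the module itself.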

Tip

Sometimes you may not actually need to build software because purchasing a previously developed product is easier. In such situations, you still need to consider security. You need to fully test the acquired software to verify its security implications. With purchased software, the source code might not be available, and you may need to perform other types of testing.

This stage includes testing of the individual modules developed, and accurate results have a direct impact on the next stage: integrated testing with the main program. Maintenance hooks are sometimes used at this point in the process to allow programmers to test modules separately without using normal access control procedures. It is important that these maintenance hooks, also referred to as backdoors, be removed before the software code goes to production. Programmers might use online programming facilities to access the code directly from their workstations. Although this typically increases productivity, using online facilities and leaving maintenance hooks in place increases the risk that someone will gain unauthorized access to the program library.

ExamAlert

For the CISSP exam, you should understand that separation of duties is of critical importance during the SDLC process. Activities such as development, testing, and production should be properly separated, and duties should not overlap. For example, programmers should not have direct access to production (or released) code or have the ability to change production or released code.

Caution

Maintenance hooks, or backdoors, are software mechanisms that are installed to bypass the system’s security protections during the development and build stage. To prevent a potential security breach, these hooks must be removed before a product is released into production. As an example, the TextPortal application did not remove a maintenance hook, and it therefore had a weakness that could have enabled an attacker to obtain unauthorized access (see www.securityfocus.com/bid/7673/discuss). With this application, the undocumented password god2 could be used for the default administrative user account.
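Removing maintenance hooks is ultimately a code review and release-management task, but a simple automated check can act as a backstop. The Python sketch below scans source files for marker strings a team might agree to place on development-only code (the `MAINTENANCE_HOOK` and `DEBUG_BYPASS` markers are hypothetical conventions) and for a crude pattern resembling a hard-coded password comparison, like the TextPortal `god2` example. Pattern matching of this kind can miss hooks and cannot prove their absence; it only flags candidates for human review.

```python
import re
from pathlib import Path
from typing import Dict, List

# Hypothetical team conventions plus a crude hard-coded-password pattern.
SUSPICIOUS = [
    re.compile(r"MAINTENANCE_HOOK|DEBUG_BYPASS", re.IGNORECASE),
    re.compile(r"password\s*==\s*['\"]", re.IGNORECASE),
]


def scan_source(text: str) -> List[int]:
    """Return 1-based line numbers that match any suspicious pattern."""
    return [
        number
        for number, line in enumerate(text.splitlines(), start=1)
        if any(pattern.search(line) for pattern in SUSPICIOUS)
    ]


def scan_tree(root: str, suffix: str = ".py") -> Dict[str, List[int]]:
    """Scan every file under root with the given suffix; map path -> flagged lines."""
    findings = {}
    for path in Path(root).rglob(f"*{suffix}"):
        hits = scan_source(path.read_text(errors="ignore"))
        if hits:
            findings[str(path)] = hits
    return findings
```

A release pipeline could call `scan_tree` on the source directory and block promotion to production whenever the result is non-empty.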

Several types of controls should be built into a program during this stage:

Preventive controls: These controls include user authentication and data encryption.

Detective controls: These controls provide audit trails and logging mechanisms.

Corrective controls: These controls add fault tolerance and data integrity mechanisms.
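As an example of a preventive control built into code, the Python sketch below supports user authentication by storing passwords as salted PBKDF2 hashes, using only the standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, and `os.urandom`). The iteration count is an assumed value; check current guidance, such as the OWASP Password Storage Cheat Sheet, before choosing one.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 600_000  # assumed work factor; review against current guidance


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest) for storage; a random 16-byte salt is generated if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, stored_digest: bytes) -> bool:
    """Recompute the hash and compare in constant time to avoid timing leaks."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, stored_digest)
```

Only the salt and digest are stored, never the password itself, so a stolen credential table does not directly reveal users’ passwords.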

Three types of testing can be used to validate the security of the application:

Unit testing: This type of testing examines an individual program or module.

Interface testing: This type of testing examines hardware or software to evaluate how well data can be passed from one entity to another.

System testing: This series of tests starts in this phase and continues into the acceptance testing phase. It includes recovery testing, security testing, stress testing, volume testing, and performance testing.
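Unit testing can be illustrated with Python’s built-in `unittest` framework. The function under test, `parse_quantity`, is hypothetical; it applies the limit check from Table 9.1 to an order quantity. The tests deliberately cover malformed and out-of-range input as well as the expected case, because rejecting bad input is part of the module’s security behavior.

```python
import unittest


def parse_quantity(text: str, limit: int = 5) -> int:
    """Hypothetical module under test: parse an order quantity and enforce a limit."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if not 1 <= value <= limit:
        raise ValueError(f"quantity {value} outside 1..{limit}")
    return value


class ParseQuantityTest(unittest.TestCase):
    def test_valid_quantity(self):
        self.assertEqual(parse_quantity(" 3 "), 3)

    def test_rejects_over_limit(self):
        with self.assertRaises(ValueError):
            parse_quantity("6")

    def test_rejects_zero(self):
        with self.assertRaises(ValueError):
            parse_quantity("0")

    def test_rejects_non_numeric(self):
        with self.assertRaises(ValueError):
            parse_quantity("three")
```

Saved as a module (for example, `test_quantity.py`), the tests can be run with `python -m unittest test_quantity`.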

Caution

Reverse engineering can be used to reduce development time. However, reverse engineering is somewhat controversial because it can be used to bypass normal access control mechanisms or to disassemble another organization’s program illegally. Most software licenses prohibit reverse engineering of the associated code, and laws such as the Digital Millennium Copyright Act (DMCA) can also prohibit it.

Acceptance Testing and Implementation

The acceptance testing and implementation stage, which occurs when the application coding is complete, should not be performed by the programmers who created the code. Instead, testing should be performed by test experts or quality assurance engineers. An important concept here is separation of duties. If the code were built and verified by the same individuals, errors might be overlooked, and security functions might be bypassed.

You need to conduct this stage of the SDLC even when acquiring software instead of building your own. If you acquire software, you must assess the security impact of the software. You should have in place a software assurance policy that defines the software acquisition process:

Planning

Procurement and contracting

Implementation and acceptance

Follow-on

Note

Because usability, not security, is typically the central goal with a purchased piece of software, it is critical that security be included in a product specification.

Models vary greatly in which tests should be completed and how much iteration, if any, is necessary within that testing. Table 9.3 lists some common types of acceptance and verification tests that you should be aware of.

TABLE 9.3 Acceptance and Verification Test Types

| Test Type | Description |
|---|---|
| Alpha test | This type of test is used to evaluate the first and earliest version of a completed prerelease application. |
| Pilot/beta test | This type of test is used to evaluate and verify the functionality of a prerelease application with limited users on limited production systems. |
| Whitebox test | This type of test verifies the inner program logic; it can be cost-prohibitive for large applications or systems. |
| Blackbox test | This is a type of integrity-based testing that looks at inputs and outputs but not the inner workings. |
| Function test | This type of test validates an application against a checklist of requirements. |
| Regression test | This type of test is used after a change is made to verify that inputs and outputs are correct and that interconnected systems show no abnormalities in how subsystems and processes are affected by the change. |
| Parallel test | This type of test is used to verify a new or changed application by feeding data into the new application and simultaneously into the old, unchanged application and comparing the results. |
| Sociability test | This type of test verifies that the application can operate in its targeted environment. |
| Final test | This type of test is usually performed after project staff are satisfied with all other tests and just before the application is ready to be deployed. |
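The parallel test in Table 9.3 can be sketched as a small harness that feeds identical inputs to the old and new versions of a routine and reports every disagreement. Both shipping-fee functions below are hypothetical stand-ins for the legacy and replacement applications. The same harness, rerun after each change, can also serve as a simple regression test.

```python
def legacy_shipping_fee(weight_kg: float) -> float:
    """Hypothetical old implementation."""
    return 5.0 if weight_kg <= 1 else 5.0 + 2.0 * (weight_kg - 1)


def new_shipping_fee(weight_kg: float) -> float:
    """Hypothetical rewritten implementation that should behave identically."""
    return 5.0 + 2.0 * max(0.0, weight_kg - 1)


def parallel_test(old, new, inputs, tolerance=1e-9):
    """Feed the same inputs to both versions; return (input, old, new) for each mismatch."""
    mismatches = []
    for x in inputs:
        a, b = old(x), new(x)
        if abs(a - b) > tolerance:
            mismatches.append((x, a, b))
    return mismatches


print(parallel_test(legacy_shipping_fee, new_shipping_fee, [0.5, 1, 1.5, 10]))  # []
```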

When all pertinent issues and concerns have been worked out between the QA engineers, the security professionals, and the programmers, an application is ready for deployment.

ExamAlert

For the CISSP exam, you should understand that fuzzing is a form of blackbox testing that involves entering malformed data inputs and monitoring the application’s response. Fuzzing is commonly referred to as “garbage in, garbage out” testing because it throws “garbage” at the application to see what it can handle.
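A minimal mutation fuzzer, sketched in Python, shows the idea: start from a valid input, randomly flip, insert, or delete bytes, feed each result to the target, and record any exception other than the clean rejection the target is expected to produce. The `parse_record` target and its `name:age` format are hypothetical. Production fuzzers such as AFL++ and libFuzzer add coverage guidance and crash triage, which this sketch omits.

```python
import random
from typing import List, Tuple


def parse_record(data: bytes) -> Tuple[str, int]:
    """Hypothetical target: parse b'name:age' records."""
    name, age = data.decode("utf-8").split(":")
    return name, int(age)


# Clean rejections; UnicodeDecodeError is a subclass of ValueError.
EXPECTED_ERRORS = (ValueError,)


def fuzz(target, seed_input: bytes, iterations: int = 1000, rng_seed: int = 1):
    """Mutate seed_input repeatedly; collect inputs that cause unexpected exceptions."""
    rng = random.Random(rng_seed)  # fixed seed so findings are reproducible
    findings: List[Tuple[bytes, str]] = []
    for _ in range(iterations):
        data = bytearray(seed_input)
        for _ in range(rng.randint(1, 4)):
            op = rng.choice(("flip", "insert", "delete"))
            pos = rng.randrange(len(data) + 1)
            if op == "insert" or not data:
                data.insert(pos, rng.randrange(256))
            elif op == "flip":
                data[pos % len(data)] ^= 1 << rng.randrange(8)
            else:
                del data[pos % len(data)]
        try:
            target(bytes(data))
        except EXPECTED_ERRORS:
            pass  # malformed input rejected gracefully
        except Exception as exc:  # anything else deserves investigation
            findings.append((bytes(data), repr(exc)))
    return findings
```

Running `fuzz(parse_record, b"alice:42")` should produce no findings, because every malformed input ends in a `ValueError`; a target that, say, indexes past the end of short input would show up immediately as `IndexError` findings.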

Operations/Maintenance

During the operations/maintenance phase, an application is prepared for release into its intended environment. This is the final opportunity to assess the effectiveness of the software’s security before release. Logging supports accountability and nonrepudiation, and it facilitates audits. Guidelines and best practices for logging include NIST SP 800-92 and the Open Web Application Security Project (OWASP) Logging Cheat Sheet.
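A structured audit record makes logs easier to review and correlate during audits. The Python sketch below emits one JSON line per security event through the standard `logging` module, recording who did what, when, and with what outcome. The field names are assumptions chosen for illustration; secrets such as passwords and session tokens should never be passed in `details`. For logs to support nonrepudiation, they must also be protected from tampering, for example by forwarding them to a separate, access-controlled log server.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("audit")


def audit(event: str, user: str, outcome: str, **details) -> str:
    """Emit one structured audit record (who, what, when, outcome) as a JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "user": user,
        "outcome": outcome,
        **details,  # extra context such as a source address; never secrets
    }
    line = json.dumps(record, sort_keys=True)
    audit_logger.info(line)
    return line


audit("login", "michael", "failure", source_ip="192.0.2.10")
```

Without a configured handler, Python’s `logging` module drops INFO-level records, so an application would typically call something like `logging.basicConfig(level=logging.INFO, format="%(message)s")` at startup.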

This is the stage where final user acceptance is performed, and any required certification and/or accreditation is achieved. It is also the stage at which management accepts the application and agrees that it is ready for use.

Certification requires a technical review of a system or an application to ensure that it does what it is supposed to do. Certification testing often includes an audit of security controls, a risk assessment, and/or a security evaluation. Typically, the results of certification testing are compiled into a report that becomes the basis for accreditation, which is management’s formal acceptance of a system or an application. Management might request additional testing, ask questions about the certification report, or simply accept the results. When the system or application is accepted, a formal acceptance statement is usually issued.

Tip

Certification is a technical evaluation and analysis of the security features and safeguards of a system or an application to establish the extent to which the security requirements are satisfied and vendor claims are verified.

Accreditation is a formal process in which management officially approves the certification.

Operations management begins when an application is rolled out. Maintenance, support, and technical response must be addressed. Data conversion might also need to be considered. If an existing application is being replaced, data from the old application might need to be migrated to the new application. The rollout of the application might occur all at once or in a phased manner over time. Changeover techniques include the following:

Parallel operation: The old and new applications are run simultaneously, with all the same inputs, and the results between the two applications are compared. Fine-tuning can be performed on the new application as needed. As confidence in the new application improves, the old application can be shut down. The primary disadvantage of this method is that both applications must be maintained for a period of time.

Phased changeover: With a large application, a phased changeover might be possible. With this method, applications are upgraded one piece at a time.

Hard changeover: This method establishes a date on which users are forced to change over. The advantage of a hard changeover is that it forces all users to change at once. However, it introduces a level of risk into the environment because things can go wrong.

Disposal

The disposal stage of the SDLC is reached when the application or system is no longer needed. Those involved in this stage of the process must consider how to dispose of the application securely, archive any information or data that might be needed in the future, perform disk sanitization (to ensure confidentiality), and dispose of equipment. This is an important step that is sometimes overlooked.

Development Methodologies

A crucial part of system development is finding a good framework and adhering to the process it entails. The sections that follow explain several proven software development processes. Each of these models involves a predictable lifecycle. Each model has strengths and weaknesses. Some work well when a time-sensitive or high-quality product is needed, whereas others offer greater quality control and can scale to very large projects.

The Waterfall Model

Probably the most well-known software development process is the waterfall model. This model, which was developed by Winston Royce in 1970, operates as the name suggests, progressing from one level down to the next. An advantage of the waterfall method is that it provides a sense of order and is easily documented.

The original waterfall model prevented developers from returning to stages once they were complete; the process flowed logically from one stage to the next. Modified versions of the model are common today, including the V-shaped methodology shown in Figure 9.3.

FIGURE 9.3 The V-Shaped Modified Waterfall Model

In the V-shaped model, instead of moving down only, the process steps are bent upward after the coding phase, to form the V shape for which the model is named. The V-shaped model requires testing during the entire development process. It is best used with projects that are small in scope. The primary disadvantage is that it does not work for large and complex projects because it does not allow for much revision.

The Spiral Model

In the spiral model, which was developed in 1988 by Barry Boehm, each phase starts with a design goal and ends with a client review. The client can be either internal or external and is responsible for reviewing progress. Analysis and engineering efforts are applied at each phase of the project.

An advantage of the spiral model is that it takes risk very seriously. Each phase of a project contains its own risk assessment, and each time a risk assessment is performed, the schedules and estimated cost to complete are reviewed, and a decision is made to continue or cancel the project. The spiral model works well for large projects. The disadvantage of this method is that it is much slower and takes longer to complete than the waterfall model. Figure 9.4 illustrates this model.

FIGURE 9.4 The Spiral Model

Joint Application Development (JAD)

Joint application development (JAD) is a process developed at IBM in 1977 that accelerates the design of information technology solutions. An advantage of JAD is that it helps developers work effectively with the users who will be using the applications developed. A disadvantage is that it requires users, expert developers, and technical experts to work closely together throughout the entire process. Projects that are good candidates for JAD have some of the following characteristics:

Involve a group of users whose responsibilities cross department or division boundaries

Are considered critical to the future success of the organization

Involve users who are willing to participate

Are developed in a workshop environment

Use a facilitator who has no vested interest in the outcome

Rapid Application Development (RAD)

Rapid application development (RAD) is a fast application development process that delivers results quickly. RAD is not suitable for all projects, but it works well for projects that are on strict time limits. However, the decisions made quickly in RAD can lead to poor design and a poor product. RAD is therefore not used for critical applications, such as shuttle launches. Two well-known RAD tools for Microsoft Windows are Delphi and Visual Basic.

Incremental Development

Incremental development is an approach that involves staged development of systems. Work is defined so that development is completed one step at a time. A minimal working application might be deployed, with subsequent releases to enhance functionality and/or scope.

Prototyping

Prototyping frameworks aim to reduce the time required to deploy applications. These frameworks use high-level code to quickly turn design requirements into application screens and reports that users can review. User feedback is gathered to fine-tune an application and improve it. Top-down testing works best with this development construct. Although prototyping clarifies user requirements, it also leads to the quick creation of a skeleton of a product with little or no working functionality behind it. Seeing complete forms and menus can confuse users and clients and lead to overly optimistic project timelines. Also, because change happens quickly, changes might not be properly documented, and scope creep might occur. Prototyping is often used for proprietary products being designed for specific customers.

ExamAlert

Prototyping is the process of building a proof-of-concept model that can be used to test various aspects of a design and verify its marketability. Prototyping is widely used during the development process.

Modified Prototype Model (MPM)

The modified prototype model (MPM) was designed to be used for web development. MPM focuses on quickly deploying basic functionality and then gathering user feedback to expand that functionality. MPM is especially useful when the final nature of the product is unknown.

Computer-Aided Software Engineering (CASE)

Computer-aided software engineering (CASE) enhances the SDLC by using software tools and automation to perform systematic analysis, design, development, and implementation of software products. The tools are useful for large, complex projects that involve multiple software components and lots of people. The disadvantages of CASE are that it requires building and maintaining software tools and training developers to use those tools effectively. CASE can be used in the following ways:

For modeling real-world processes and data flows through applications

For developing data models to better understand processes

For developing process and functional descriptions of models

For producing databases and database management procedures

For debugging and testing code

Note

There are many different approaches in software development. Some are new takes on old methods, and others take a relatively new approach. Lean software development is one example; it translates lean manufacturing principles and practices to the software development domain. Its main goal is continuous product improvement at all operational levels and stages.

Agile Development Methods

Agile software development allows teams of programmers and business experts to work together closely.

According to the agile manifesto (see agilemanifesto.org):

We are uncovering better ways of developing software by doing it and helping others do it. Through this work, we have come to value:

Individuals and interactions over processes and tools.

Working software over comprehensive documentation.

Customer collaboration over contract negotiation.

Responding to change over following a plan.

Agile project requirements are developed using an iterative approach, and an agile project is mission driven and component based. Agile development may make use of an integrated product team (IPT), which is a multitalented group of people who are responsible for creating and delivering a specified process or product. IPTs should be formed to manage the development of individual product elements or sustainment processes. These teams should be empowered to make critical decisions. Popular agile development models include the following:

Extreme programming (XP): The XP development model requires that teams include business managers, programmers, and end users. These teams are responsible for developing usable applications in short time frames. One potential problem with XP is that teams are responsible not only for coding but also for writing the tests used to verify the code. In addition, there is minimal focus on structured documentation, and XP does not scale well for large projects.

Scrum: Scrum is an iterative development method in which repetitions, referred to as sprints, typically last 30 days or less. Scrum is typically used with object-oriented technology and requires strong leadership and a team that can meet at least briefly each day. The planning and direction of tasks passes from the project manager to the team. The project manager’s main task is to remove any obstacles from the team’s path. The scrum development method takes its name from the scrum, a team formation in rugby.

Note

Toyota developed an efficient development methodology known as kanban, which stresses the use of visual walls (physical or virtual boards) to track the team’s activities. These walls are typically divided into three columns: Planned, In Progress, and Done.

Maturity Models

The Capability Maturity Model (CMM) was designed as a framework for software developers to improve the software development process. It allows software developers to progress from an anything-goes type of development to a highly structured, repeatable process. As software developers grow and mature, their productivity increases, and the quality of their software products becomes more robust. Through the standardization activities of ISO 15504, the CMM officially became the Capability Maturity Model Integration (CMMI) in 2007. The CMMI includes five maturity levels, as shown in Table 9.4.

TABLE 9.4 CMMI Levels

| Maturity Level | Description |
|---|---|
| Initial | This is an ad hoc process with no assurance of repeatability. |
| Managed | Change control and quality assurance are in place and controlled by management, although formal processes are not defined. |
| Defined | Defined processes and procedures are in place and used. Qualitative process improvement is in place. |
| Quantitatively managed | Data is collected and analyzed. A process improvement program is used. |
| Optimizing | Continuous process improvement is in place and considered in the budget. |

Note

The five levels of the CMMI, as shown in Figure 9.5, have similarities with agile development methods, such as XP and scrum. The CMMI contains process areas and goals, and each goal comprises practices.

FIGURE 9.5 The CMMI

Carnegie Mellon University introduced the IDEAL model for software process improvement. It is a process-improvement and defect-reduction methodology. Table 9.5 outlines the phases of the IDEAL model.

TABLE 9.5 IDEAL Model

| Phase | Description |
|---|---|
| Initiating | Define the process improvement project. |
| Diagnosing | Identify the baseline of the current software development process. |
| Establishing | Define a strategic plan to improve the current software development process. |
| Acting | Implement the software process improvement plan. |
| Leveraging | Maintain and improve the software based on lessons learned. |

Scheduling

Scheduling involves linking individual tasks. The link relationships are based on earliest start date or latest expected finish date. Gantt charts provide a way to display these relationships.

The Gantt chart was developed in the early 1900s as a tool to assist the scheduling and monitoring of activities and progress. Gantt charts show the start and finish dates of each element of a project. Gantt charts also show the relationships between activities in a calendar-like format. They have become some of the primary tools used to communicate project schedule information. The baseline of a Gantt chart illustrates what will happen if a task is finished early or late.
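The start and finish dates a Gantt chart displays can be derived from task dependencies with a simple forward pass. The sketch below is a minimal illustration, assuming each task has a fixed duration in days and a list of predecessor tasks; the function name, task names, and durations are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def earliest_schedule(tasks):
    """Return {task: (earliest_start, earliest_finish)}.

    tasks maps a task name to (duration, [predecessor names]).
    A task can start only after all of its predecessors have finished.
    """
    graph = {name: set(preds) for name, (_, preds) in tasks.items()}
    schedule = {}
    # static_order() yields every task after all of its predecessors.
    for name in TopologicalSorter(graph).static_order():
        duration, preds = tasks[name]
        start = max((schedule[p][1] for p in preds), default=0)
        schedule[name] = (start, start + duration)
    return schedule


# Hypothetical project: durations in days, with dependencies.
project = {
    "design": (5, []),
    "build": (10, ["design"]),
    "test_plan": (3, ["design"]),
    "testing": (4, ["build", "test_plan"]),
}

for task, (start, finish) in earliest_schedule(project).items():
    print(f"{task:10} day {start:>2} -> day {finish:>2}")
```

In this hypothetical project the latest finish is day 19, set by the chain design, build, testing. That chain is the critical path: a delay on any task in it delays the whole project, while test_plan has slack. A backward pass over the same graph would compute latest finish dates in a similar way.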

Program evaluation and review technique (PERT) is the preferred tool for estimating time when a degree of uncertainty exists. PERT uses a critical path method that applies a weighted average duration estimate.

PERT uses probabilistic time estimates to create a three-point (best, worst, and most likely time) evaluation of activities. The PERT weighted average is calculated as follows:

$$\text{PERT weighted average} = \frac{\text{Optimistic time} + 4 \times \text{Most likely time} + \text{Pessimistic time}}{6}$$

Every task branches out to three estimates:

One: The most optimistic time in which the task can be completed.

Two: The most likely time in which the task will be completed.

Three: The worst-case scenario or longest time in which the task might be completed.
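The weighted average can be checked with a few lines of code. This is a minimal sketch; the function name and the sample estimates (in days) are hypothetical.

```python
def pert_estimate(optimistic, most_likely, pessimistic):
    """PERT weighted average: (optimistic + 4 * most_likely + pessimistic) / 6.

    The most likely estimate is weighted four times as heavily as the
    best-case and worst-case estimates.
    """
    if not optimistic <= most_likely <= pessimistic:
        raise ValueError("expected optimistic <= most_likely <= pessimistic")
    return (optimistic + 4 * most_likely + pessimistic) / 6


# Hypothetical task: 2 days at best, 4 days most likely, 12 days at worst.
print(pert_estimate(2, 4, 12))  # 5.0
```

The long worst case pulls the estimate above the most likely value of 4 days, which is how the three-point method builds uncertainty into the schedule.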

Change Management

Change management is a formalized process for controlling modifications made to systems and programs: analyze a request, examine its feasibility and impact, and develop a timeline for implementing the approved changes. The change management process provides all concerned parties with an opportunity to voice their opinions and concerns before changes are made. Although types of changes vary, change control follows a predictable process with the following typical steps:

Request the change.

Approve the change request.

Document the change request.

Test the proposed change.

Present the results to the change control board.

Implement the change, if approved.

Document the new configuration.

Report the final status to management.
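Because the value of change control comes from following these steps in order, tooling often enforces the sequence. The following is a hypothetical sketch, not any specific product’s workflow: the step names are invented labels for the list above, and the class refuses to skip steps or to implement a change the board has not approved.

```python
# Hypothetical step names, one per step in the change control list.
STEPS = [
    "request",
    "approve_request",
    "document_request",
    "test",
    "present_to_board",
    "implement",
    "document_configuration",
    "report_status",
]


class ChangeRequest:
    """Tracks one change through the change control steps, in order."""

    def __init__(self, summary):
        self.summary = summary
        self.completed = []         # audit trail of finished steps
        self.board_approved = None  # decision recorded at "present_to_board"

    def complete(self, step, board_approved=None):
        if len(self.completed) == len(STEPS):
            raise ValueError("change request is already closed")
        expected = STEPS[len(self.completed)]
        if step != expected:
            # No skipping ahead, e.g., implementing before testing.
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        if step == "present_to_board":
            if board_approved is None:
                raise ValueError("record the change control board's decision")
            self.board_approved = board_approved
        if step == "implement" and not self.board_approved:
            raise PermissionError("change was not approved by the board")
        self.completed.append(step)


cr = ChangeRequest("Upgrade the database driver")
for step in STEPS[:4]:
    cr.complete(step)
cr.complete("present_to_board", board_approved=True)
cr.complete("implement")
print(cr.completed[-1])  # implement
```

The completed list doubles as a simple audit trail. A production system would persist it and would also record a back-out plan for each change.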

Tip

One important piece of change management that is sometimes overlooked is a way to back out of the change. Sometimes things can go wrong, and a change needs to be undone.

DevOps is an example of a change management approach that uses agile principles. DevOps, which combines development and operations, includes the following elements:

Testability: Develop/test against simulated production systems.

Deployability: Deploy with automated processes that are iterative, repeatable, frequent, and reliable.

Monitorability: Monitor the application to address issues early on.

Modifiability: Allow for efficient feedback by creating effective communication channels.

Documentation is the key to a good change control process. Tracking should include receiving the request, evaluating the cost/benefit, and prioritizing the work of the developers and team. The system maintenance staff of the department requesting a change should keep a copy of that change’s approval. Without a change control process in place, there is significant potential for security breaches. The following are indicators of poor change control:

There is no formal change control process in place.

Changes are implemented directly by the software vendors or others without internal control; this can indicate a lack of separation of duties.

Programmers place code in an application that is not tested or validated.

A change that is made was not authorized by the change review board.

The programmer has access to both the object code and the production library; this situation presents a threat because the programmer might be able to make unauthorized changes to production code.

Version control is not implemented.

In some cases, such as emergency situations, a change might occur without going through the change control process. These emergencies typically are in response to situations that endanger production or could halt a critical process. If programmers are to be given special access or provided with an increased level of control, the security professional with oversight should make sure that checks are in place to track those programmers’ access and record any changes made.

Database Management

Databases are important to business and government organizations as well as to individuals because they provide a way to catalog, index, and retrieve related pieces of information and facts. These repositories of data are widely used. If you have booked a reservation on a plane, looked up the history of a used car you were thinking about buying, or researched the ancestry of your family, you have most likely used a database during your quest.

A database can be centralized or distributed, depending on the database management system (DBMS) that has been implemented. The DBMS allows the database administrator to control all aspects of the database, including design, functionality, and security. There are several popular types of database management systems:

Hierarchical database management system: A hierarchical database links records into a tree structure. Each record can have only one owner (parent). Because of this, a hierarchical database often can’t represent real-world relationships in which an item belongs to more than one parent.

Network database management system: This type of database system was developed to be more flexible than a hierarchical DBMS. The network database model is referred to as a lattice structure because each record can have multiple parent and child records.

Relational database management system: A relational database consists of a collection of tables linked to each other through primary and foreign keys. Many organizations use this model, and the majority of modern databases are relational. Most relational databases use SQL as their query language. A relational DBMS (RDBMS) is based on set theory and relational calculus. Data is organized into tables (relations); each row of a table is known as a tuple, and each column holds an attribute.

Object-relational database system: This type of database system is similar to an RDBMS but adds object-oriented features, such as user-defined data types and inheritance. This allows it to support extensions to the data model and to be a middle ground between relational databases and object-oriented databases.

Database Terms

In case you are not familiar with the world of databases, this section provides a review of some common database-related terms that security professionals should be familiar with (see Figure 9.6):

Aggregation: The process of combining several low-sensitivity items and drawing medium- or high-sensitivity conclusions.

Inference: The process of deducing privileged information from available unprivileged sources.

Attribute: A characteristic about a piece of information. Where a row in a database table represents a database object, each column in that row represents an attribute of that object.

Field: The smallest unit of data within a database.

Foreign key: An attribute in one table that cross-references an existing value that is the primary key in another table.

Granularity: The level of control a program has over the view of the data that someone can access. Highly granular databases make it possible to restrict views, according to the user’s clearance, at the field or row level.

Relation: A defined interrelationship between the data elements in a collection of tables.

Tuple: A record used to represent a relationship among a set of values. In an RDBMS, a tuple corresponds to a single row in a table.

Schema: The totality of the defined tables and interrelationships for an entire database, which defines how the database is structured.

Primary key: A key that uniquely identifies each row and assists with indexing a table.

View: The database construct that an end user can see or access.

FIGURE 9.6 Illustration of Database Terms
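To make these terms concrete, here is a minimal sketch using Python’s built-in sqlite3 module and an in-memory database. The tables, columns, and data are hypothetical. The CREATE statements together form the schema; each inserted row is a tuple, each column is an attribute, and the view hides the salary attribute, a simple form of field-level granularity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE department (
        dept_id INTEGER PRIMARY KEY,   -- primary key: uniquely identifies each row
        name    TEXT NOT NULL
    );
    CREATE TABLE employee (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        salary  INTEGER NOT NULL,      -- sensitive attribute
        dept_id INTEGER REFERENCES department(dept_id)  -- foreign key
    );
    -- The view exposes only the fields ordinary users should see.
    CREATE VIEW employee_directory AS
        SELECT e.name AS employee, d.name AS department
        FROM employee e JOIN department d ON e.dept_id = d.dept_id;
""")
conn.execute("INSERT INTO department VALUES (1, 'Finance')")
conn.execute("INSERT INTO employee VALUES (10, 'Alice', 95000, 1)")  # one tuple

print(conn.execute("SELECT * FROM employee_directory").fetchall())
# [('Alice', 'Finance')]
```

In practice, users would be granted access to the view rather than the underlying table; SQLite itself has no user accounts, so the access-control side is only implied here.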

Integrity

The integrity of data refers to its accuracy. To protect the integrity of the data in a database, specialized controls are used, including rollbacks, checkpoints, commits, and savepoints. There are two types of data integrity:

Semantic integrity: Assures that the data in any field is of the appropriate type. Controls that check for the logic of data and operations affect semantic integrity.

Referential integrity: Assures the accuracy of cross-references between tables. Controls that ensure that foreign keys only reference existing primary keys affect referential integrity.
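Both kinds of integrity are usually enforced by the DBMS itself. In this minimal sketch using Python’s sqlite3 module (hypothetical table and column names), the database rejects a row whose foreign key points to a customer that does not exist, and a CHECK constraint rejects a logically invalid quantity. Note the assumption that SQLite enforces foreign keys only after the foreign_keys pragma is turned on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.execute("CREATE TABLE customer (cust_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        cust_id  INTEGER NOT NULL REFERENCES customer(cust_id),  -- referential integrity
        quantity INTEGER NOT NULL CHECK (quantity > 0)            -- semantic integrity
    )""")
conn.execute("INSERT INTO customer VALUES (1, 'Acme Corp')")
conn.execute("INSERT INTO orders VALUES (100, 1, 5)")  # valid row

bad_rows = [
    (101, 42, 1),  # customer 42 does not exist: foreign key violation
    (102, 1, -3),  # negative quantity: CHECK constraint violation
]
for row in bad_rows:
    try:
        conn.execute("INSERT INTO orders VALUES (?, ?, ?)", row)
    except sqlite3.IntegrityError as exc:
        print(f"rejected {row}: {exc}")

print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 1
```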

Transaction Processing

Transaction management is critical in assuring integrity. Without proper locking mechanisms, multiple users could be altering the same record simultaneously, and there would be no way to ensure that transactions were valid and complete. This is especially important with online systems that respond in real time. These systems, known as online transaction processing (OLTP) systems, are used in many industries, including banking, airlines, mail order, supermarkets, and manufacturing. Programmers involved in database management use the ACID test when discussing whether a database management system has been properly designed to handle OLTP:

Atomicity: Results of a transaction are either all or nothing.

Consistency: Transactions are processed only if they meet system-defined integrity constraints.

Isolation: The results of a transaction are invisible to all other transactions until the original transaction is complete.

Durability: Once a transaction is complete, the results of the transaction are permanent.
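Atomicity is easiest to see in a funds transfer, where a debit without the matching credit would corrupt the data. This sketch uses Python’s sqlite3 module; the account table, names, amounts, and the simulated failure are hypothetical. Using the connection as a context manager commits the transaction if the block succeeds and rolls it back if an exception is raised.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (name TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO account VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()


def transfer(conn, src, dst, amount, fail_midway=False):
    # The with-block commits on success and rolls back on any exception.
    with conn:
        conn.execute("UPDATE account SET balance = balance - ? WHERE name = ?",
                     (amount, src))
        if fail_midway:
            raise RuntimeError("simulated crash between debit and credit")
        conn.execute("UPDATE account SET balance = balance + ? WHERE name = ?",
                     (amount, dst))


try:
    transfer(conn, "alice", "bob", 40, fail_midway=True)
except RuntimeError:
    pass

# The debit was rolled back along with the failed transaction: all or nothing.
print(conn.execute("SELECT name, balance FROM account ORDER BY name").fetchall())
# [('alice', 100), ('bob', 0)]
```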

Database Vulnerabilities and Threats

Protecting databases is not an easy task. Database attacks are among the most common attack vectors, and injection flaws, including SQL injection, held the top spot in the OWASP Top 10 list for more than a decade. Many database security issues are directly attributable to poor development practices. As a security professional, you should understand the following common vulnerabilities and threats:

SQL injection: This type of attack, typically caused by unsanitized input, allows the attacker to inject a SQL query via the input data sent from the client to the application.

Default, blank, or weak passwords: Authentication credentials should be strong, and all default, weak, and blank passwords should be changed or removed.

Excessive privileges: The level of access provided should be only what’s needed to do the task. Administrator privileges should not be granted unless the task requires them.

Broken configuration management: Active measurement of the environment is required to detect undocumented changes, which typically reduce security.

Enabled features that are not needed: It is important to remove, block, and disable all features that are not needed. Reducing the attack surface makes it harder for an attacker to succeed.

Privilege escalation: This is a vulnerability in which an attacker gains an additional level of access.

Denial of service (DoS): A DoS attack may not give the attacker access, but it can disrupt normal operations and block others from accessing the database.

Unpatched database: Unpatched systems are a common source of vulnerabilities. Patching is a key component of security.

Unencrypted sensitive data: Encryption is one of the key controls for protecting sensitive data.

Buffer overflow: This is yet another common vulnerability. All data should undergo proper input validation to ensure that it is formatted correctly and within normal bounds of operation. (Buffer overflows are discussed later in this chapter.)
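SQL injection is easiest to understand side by side with its fix. In this sketch (Python’s sqlite3 module, hypothetical users table and function names), the first function pastes user input directly into the SQL string, so input such as ' OR '1'='1 changes the logic of the query. The second passes the input as a bound parameter, so the database treats it strictly as data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name):
    # VULNERABLE: user input becomes part of the SQL statement itself.
    query = f"SELECT username, role FROM users WHERE username = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized query: the ? placeholder keeps input as data, not code.
    return conn.execute(
        "SELECT username, role FROM users WHERE username = ?", (name,)
    ).fetchall()


payload = "' OR '1'='1"
print(find_user_unsafe(payload))  # every row: [('alice', 'admin'), ('bob', 'user')]
print(find_user_safe(payload))    # []
```

Input validation still helps, but parameterized queries are the primary defense because they keep the structure of the query separate from user-supplied values.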

Tip

When you take the CISSP exam, be sure to read the questions closely as a single word can make an answer right or wrong. For example, configuration management is an active measurement of the environment to detect undocumented changes, whereas change management is a mandatory process that involves documenting planned changes to the environment.

Artificial Intelligence and Expert Systems

An expert system is a computer program that contains a knowledge discovery database, a set of rules, and an inference engine. This data mining technique can be used to discover nontrivial information and extract knowledge from a large amount of data. At the heart of such a system is the knowledge base—a repository of information against which the rules are applied.

Expert systems are typically designed for specific purposes and have the capability to infer. For example, a hospital might have a knowledge base that contains various types of medical information; if a doctor enters the symptoms weight loss, emotional disturbances, impaired sensory perception, pain in the limbs, and periods of irregular heart rate, the expert system can scan the knowledge base and diagnose the patient as suffering from beriberi.
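The combination of a knowledge base, rules, and an inference engine can be illustrated with a toy forward-chaining engine. This is a hypothetical sketch: rules are stored as (conditions, conclusion) pairs, the first rule simply encodes the beriberi example above, and the second rule is invented to show how one conclusion can trigger another. Real expert systems use much richer rule languages and ways of handling uncertainty.

```python
def forward_chain(facts, rules):
    """Apply rules to known facts until no new conclusions appear.

    facts: set of strings known to be true.
    rules: list of (set_of_conditions, conclusion) pairs.
    Returns the enlarged set of facts.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base encoding the example from the text.
RULES = [
    ({"weight loss", "emotional disturbances", "impaired sensory perception",
      "pain in the limbs", "irregular heart rate"}, "diagnosis: beriberi"),
    ({"diagnosis: beriberi"}, "flag record for physician review"),
]

symptoms = {"weight loss", "emotional disturbances",
            "impaired sensory perception", "pain in the limbs",
            "irregular heart rate"}
print(sorted(forward_chain(symptoms, RULES) - symptoms))
# ['diagnosis: beriberi', 'flag record for physician review']
```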

Tip

How advanced are expert systems? A computer named Watson, created by IBM, can win at Jeopardy! and beat human opponents by finding answers in unstructured data using natural language processing (see https://www.ibm.com/ibm/history/ibm100/us/en/icons/watson/).

The challenge in the creation of knowledge bases is to ensure that their data is accurate, that access controls are in place, that the proper level of expertise was used in developing the system, and that the knowledge base is secured.

Neural networks are networks that are capable of learning new information (see Figure 9.7). Artificial intelligence (AI) is possible thanks to the combination of expert systems and neural networks. Neural networks make use of multiple levels of nodes to filter data and apply weights; they mimic processes used by the human brain. Eventually, an output is triggered, and a fuzzy solution is provided. It’s called a fuzzy solution because it can lack exactness.
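The layered, weighted filtering described above can be sketched as a forward pass through a tiny network. The weights below are arbitrary, hypothetical values chosen for illustration rather than learned from data; training, which adjusts the weights from examples, is what lets a real network learn. The sigmoid function keeps each node’s output between 0 and 1, so the final answer is a graded score rather than a strict yes or no, which is one way to read the text’s point about a fuzzy solution.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of its inputs plus a bias,
    then squashes the result into the range (0, 1)."""
    return [sigmoid(sum(w * x for w, x in zip(node_w, inputs)) + b)
            for node_w, b in zip(weights, biases)]


# Hypothetical network: 3 inputs -> 2 hidden nodes -> 1 output node.
HIDDEN_W = [[0.5, -0.2, 0.8], [-0.7, 0.4, 0.1]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.2, -0.9]]
OUTPUT_B = [-0.3]


def predict(features):
    hidden = layer(features, HIDDEN_W, HIDDEN_B)  # first level of nodes
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # output node


score = predict([1.0, 0.0, 1.0])
print(round(score, 3))
```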

FIGURE 9.7 Artificial Neural Network

Programming Languages, Secure Coding Guidelines, and Standards

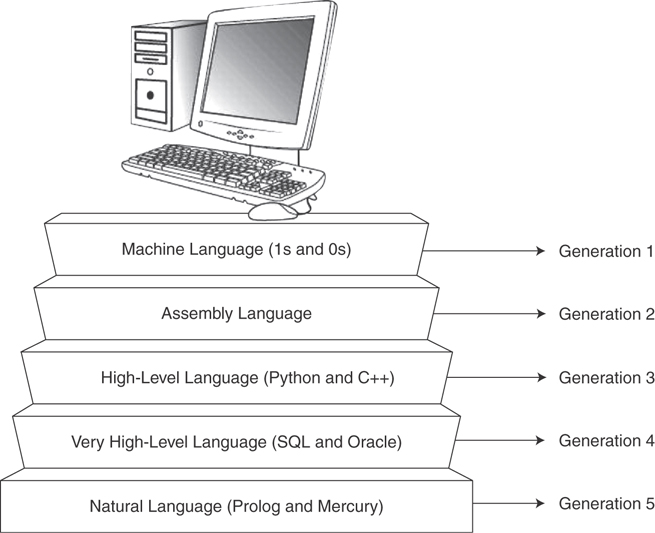

Programming languages permit the creation of instructions that a computer can understand. The types of tasks that get programmed and the instructions or code used to create a program depend on the nature of the organization. Programming has evolved through five generations of languages, as illustrated in Figure 9.8 and described in the list that follows:

Generation 1: Machine language, the native language of a computer, consisting of binary ones and zeros.

Generation 2: Assembly language, human-readable notation that translates easily into machine language.

Generation 3: High-level programming language. The 1960s through the 1980s saw the emergence and growth of many third-generation languages (3GLs), such as Fortran, COBOL, C, and Pascal.

Generation 4: Very high-level language. This generation of languages grew from the 1970s through the early 1990s. Fourth-generation languages (4GLs), such as SQL, are typically used to access databases.

Generation 5: Natural language. Fifth-generation languages (5GLs) took off in the 1990s and were considered the wave of the future. 5GLs are characterized by their use of inference engines and natural language processing. Mercury and Prolog are two examples of fifth-generation languages.

FIGURE 9.8 Programming Languages

After the code is written, it must be translated into a format that the computer will understand. These are the three most common methods:

Assembler: An assembler translates assembly language into machine language.

Compiler: A compiler translates a high-level language into machine language.

Interpreter: Instead of compiling an entire program in advance, an interpreter translates and executes a program one line at a time. Interpreters use a fetch-and-execute cycle. An interpreted program is typically much slower to execute than a compiled or assembled program, but it does not need a separate compilation or assembly step.
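An interpreter’s fetch-and-execute cycle can be shown with a toy stack machine. The instruction set here (PUSH, ADD, MUL, PRINT) is invented for illustration: the loop fetches one instruction at a time, decodes it, and executes it immediately, with no separate compilation step.

```python
def run(program):
    """Interpret a list of (opcode, operand) instructions one at a time."""
    stack, output = [], []
    pc = 0  # program counter: index of the next instruction to fetch
    while pc < len(program):
        opcode, operand = program[pc]  # fetch
        pc += 1
        if opcode == "PUSH":           # decode, then execute
            stack.append(operand)
        elif opcode == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif opcode == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif opcode == "PRINT":
            output.append(stack[-1])
        else:
            raise ValueError(f"unknown opcode {opcode!r} at index {pc - 1}")
    return output


# (2 + 3) * 4, written for the hypothetical stack machine.
prog = [("PUSH", 2), ("PUSH", 3), ("ADD", None),
        ("PUSH", 4), ("MUL", None), ("PRINT", None)]
print(run(prog))  # [20]
```

A compiler would instead translate the whole program ahead of time, avoiding the per-instruction decoding work this loop repeats on every run.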

Hundreds of different programming languages exist. Many have been written for specific niches or to meet market demands. The following are some examples of common programming languages:

ActiveX: This Microsoft framework provides a foundation for higher-level software services, such as transferring and sharing information among applications. ActiveX controls are a Component Object Model (COM) technology. COM is designed to hide the details of an individual object and focus on the object’s capabilities. An extension to COM is COM+.

C, C++, and C#: The C programming language, which replaced B, was designed by Dennis Ritchie. C was originally designed for UNIX and is very popular and widely used. From a security perspective, some C functions, such as gets() and strcpy(), do not check the size of the destination buffer and are known to be susceptible to buffer overflows.

HTML: Hypertext Markup Language (HTML) is a markup language that is used to create web pages.

Java: This is a general-purpose computer programming language, released by Sun Microsystems in 1995.

Visual Basic: This programming language was designed to be used by anyone and enables rapid development of practical programs.

Ruby: This object-oriented programming language was developed in the 1990s for general-purpose use. It has been used in the development of such projects as Metasploit.

Scripting languages: A scripting language is a type of programming language that is usually interpreted rather than compiled and allows some control over a software application. Perl, Python, and JavaScript are examples of scripting languages.

XML: Extensible Markup Language (XML) is a markup language that specifies rules for encoding documents. XML is widely used on the Internet.

Object-Oriented Programming

Multiple development frameworks have been created to assist in defining, grouping, and reusing both code and data. Methods include data-oriented system programming, component-based programming, web-based applications, and object-oriented programming. Of these, the most commonly deployed is object-oriented programming (OOP), an object technology that grew from modular programming. OOP allows a programmer to reuse and interchange code between programs in modular fashion without starting over from scratch. It has been widely embraced because it is efficient and results in relatively low programming costs. Because OOP makes use of modules, a programmer can easily modify an existing program. Java and C++ are two examples of OOP languages.

In OOP, objects are grouped into classes, and all objects in a class share a particular structure and behavior. Characteristics from one class can be passed down to another through the process of inheritance. OOP relies on the following concepts:

Encapsulation: This is the process of hiding the functionality of an object inside that object or, for a process, hiding the functionality inside that process’s class. Encapsulation permits a developer to keep information disjointed—that is, to separate distinct elements so that there is no direct unnecessary sharing or interaction between the various parts.

Polymorphism: In general, polymorphism means that one thing has the capability to take on many forms. In OOP, polymorphism lets code invoke a method on an object without needing to care how that object’s class carries out the invocation. Likewise, the specific results of the invocation can vary, because different classes can implement the same method differently.

Polyinstantiation: In general, polyinstantiation means that multiple instances of the same information exist. Polyinstantiation is used in many settings. For example, a database can store different versions of a record at different classification levels, so that individuals who pose identical queries on an identical database receive different results depending on their security levels. It is widely used by the government and military to unify information bases while protecting sensitive or classified information. Without polyinstantiation, an attacker might be able to aggregate information from various sources to mount an inference attack and determine secret information. A single piece of information may appear useless by itself, like one piece of a puzzle, but when you put together several pieces of the puzzle, you begin to form an accurate picture.
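The following Python sketch illustrates encapsulation, inheritance, polymorphism, and polyinstantiation. The class names, the shipment records, and the two-level classification scheme are all hypothetical. Python enforces encapsulation only by convention, using a leading underscore. Languages such as Java and C++ enforce it instead with access modifiers such as private.

```python
from typing import Dict, Optional, Tuple


class Account:
    """Encapsulation: the balance lives inside the object and changes only
    through methods that enforce the rules."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self._balance = 0  # leading underscore marks it internal (convention only)

    def deposit(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    @property
    def balance(self) -> int:
        return self._balance  # read-only view of the internal state

    def describe(self) -> str:
        return f"Account for {self.owner}"


class SavingsAccount(Account):
    """Inheritance: reuses Account's structure and behavior."""

    def describe(self) -> str:
        # Polymorphism: same method name, class-specific behavior.
        return f"Savings account for {self.owner}"


acct = Account("carol")
acct.deposit(50)
descriptions = [a.describe() for a in (Account("alice"), SavingsAccount("bob"))]

# Polyinstantiation (hypothetical data): one key, two classification levels.
LEVELS = {"unclassified": 0, "secret": 1}
SHIPMENTS: Dict[Tuple[str, str], str] = {
    ("ship-42", "unclassified"): "cargo: food supplies",  # cover story
    ("ship-42", "secret"): "cargo: weapons",
}


def query(key: str, clearance: str) -> Optional[str]:
    """Return the highest-level version of the record the reader is cleared for."""
    visible = [
        (LEVELS[level], value)
        for (k, level), value in SHIPMENTS.items()
        if k == key and LEVELS[level] <= LEVELS[clearance]
    ]
    return max(visible)[1] if visible else None


print(descriptions)
print(query("ship-42", "unclassified"))  # cargo: food supplies
print(query("ship-42", "secret"))        # cargo: weapons
```

The low-cleared reader receives a plausible cover record rather than an error or an empty result. As a result, that reader cannot infer that a secret version of the record exists.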

Object-oriented design (OOD) is used to bridge the gap between a real-world problem and a software solution. OOD modularizes data and procedures, making it possible to provide a detailed description of how a system is to be built. Object-oriented analysis (OOA) and OOD are sometimes combined as object-oriented analysis and design (OOAD).

CORBA

Functionality that exists in a different environment from your code can be accessed and shared using vendor-independent middleware known as Common Object Request Broker Architecture (CORBA). CORBA’s purpose is to allow different vendor products, such as computer languages, to work seamlessly across distributed networks of diversified computers. The heart of the CORBA system is the Object Request Broker (ORB), which simplifies the process of requesting server objects for clients. The ORB locates a requested object, transparently activates it as necessary, and then delivers the requested object to the client.
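To make the ORB’s locate, activate, and deliver steps concrete, here is a toy, in-process sketch. It is not the CORBA API, and every name in it (ToyBroker, PriceService, resolve) is hypothetical. A real ORB also handles IDL-generated stubs, marshaling, and network transport. In a real deployment, the client receives an object reference (a proxy) rather than the object itself.

```python
from typing import Any, Callable, Dict


class ToyBroker:
    """Toy, in-process stand-in for an ORB (not the CORBA API)."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], Any]] = {}
        self._active: Dict[str, Any] = {}

    def register(self, name: str, factory: Callable[[], Any]) -> None:
        # A server advertises an object by name without creating it yet.
        self._factories[name] = factory

    def resolve(self, name: str) -> Any:
        # 1. Locate the requested object.
        if name not in self._factories:
            raise LookupError(f"no object registered as {name!r}")
        # 2. Activate it transparently on the first request.
        if name not in self._active:
            self._active[name] = self._factories[name]()
        # 3. Deliver it to the client.
        return self._active[name]


class PriceService:
    """Hypothetical server object."""

    def quote(self, sku: str) -> float:
        return {"widget": 9.99}.get(sku, 0.0)


broker = ToyBroker()
broker.register("PriceService", PriceService)
service = broker.resolve("PriceService")  # the client never constructs PriceService
print(service.quote("widget"))  # 9.99
```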

Security of the Software Environment

The security of software is a critical concern. Protecting the confidentiality, integrity, and availability of data and program variables is one of the top concerns of a security professional. During the software design phase, you should consider risk analysis and mitigation. It’s critical that the software development team identify, understand, and mitigate any risks that might make the organization vulnerable. Total risk refers to the probability and size of a potential loss. Every development project has elements of risk. For example, in an application that handles order quantities, the numbers should be positive; if you made it possible for someone to order negative 17 of an item, you would face added risk. Validating and sanitizing inputs and outputs so that only acceptable values are allowed reduces the attack surface. Think of the attack surface as all of the potential ways in which an attacker can attack the application. The integrity of your code can be verified with hashing tools such as md5sum or sha1sum. However, SHA-256-based tools such as sha256sum are preferred, because MD5 and SHA-1 are no longer considered collision resistant.
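The order-quantity example can be sketched as allow-list input validation. The approach accepts only values that match the expected format and range instead of trying to block known-bad ones. The 1,000-item upper limit and the function names below are hypothetical.

```python
import re

MAX_ORDER_QUANTITY = 1000  # hypothetical business limit


def parse_quantity(raw: str) -> int:
    """Accept only whole numbers from 1 to MAX_ORDER_QUANTITY."""
    text = raw.strip()
    # Allow-list: 1 to 4 ASCII digits only; rejects "-17", "3.5", "", "1e9".
    if re.fullmatch(r"[0-9]{1,4}", text) is None:
        raise ValueError(f"invalid quantity: {raw!r}")
    quantity = int(text)
    if not 1 <= quantity <= MAX_ORDER_QUANTITY:
        raise ValueError(f"quantity out of range: {quantity}")
    return quantity


def check(raw: str) -> str:
    """Hypothetical helper that reports whether an input is accepted."""
    try:
        return f"accepted {parse_quantity(raw)}"
    except ValueError:
        return "rejected"


results = {c: check(c) for c in ["3", "-17", "0", "abc", "5000"]}
print(results)
```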

A vulnerability is a flaw, loophole, or weakness in an application that leads it to process critical data insecurely. A threat actor who exploits a vulnerability can gain unauthorized access to the software and its data. Some common software vulnerabilities include privilege escalation, buffer overflows, SQL injection, cross-site request forgery (CSRF), and cross-site scripting (XSS). It is important to remember that fixing security weaknesses and vulnerabilities at the source code level is much cheaper than waiting until later in the process. Discovering a flaw through penetration testing or fuzzing and then fixing it costs far more than fixing it before the build process is complete.
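SQL injection, one of the vulnerabilities listed above, is easy to demonstrate. The following sketch uses Python’s built-in sqlite3 module with an in-memory database, and the table and its rows are hypothetical. It contrasts a query built by pasting user input into the SQL text with a parameterized query, which passes the input to the database strictly as data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")]
)

attacker_input = "nobody' OR '1'='1"

# VULNERABLE: the input becomes part of the SQL text, so the quote characters
# change the query's logic and the WHERE clause matches every row.
unsafe_sql = f"SELECT name FROM users WHERE name = '{attacker_input}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# SAFER: the ? placeholder sends the input as a value, never as SQL code,
# so it is compared literally against the name column.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (attacker_input,)
).fetchall()

print(unsafe_rows)  # every row in the table
print(safe_rows)    # no rows
```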

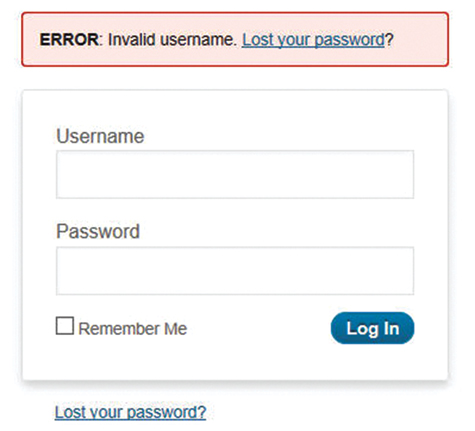

Threat modeling is another technique that can be used to reduce risk and map the attack surface. Threat modeling details the potential attacks, targets, and any vulnerabilities of an application. It can also help determine the types of controls needed to prevent an attack. For example, when you enter an incorrect username or password, do you get a generic response, or does the application respond with too much data, as shown in Figure 9.9? Just keep in mind that the best practice from a security standpoint is to avoid identifying which entry was invalid and to provide only a generic message.

FIGURE 9.9 Non-generic Response That Should Be Flagged by Threat Modeling
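The generic-message practice can be sketched in a few lines. This is a minimal illustration under stated assumptions: the in-memory user store, the iteration count, and the function names are hypothetical. Passwords are stored as salted PBKDF2 hashes rather than in plain text. The function returns the same message whether the username or the password is wrong. It also does comparable hashing work for unknown usernames, so response time is less likely to reveal which accounts exist.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative work factor, not a recommendation


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


# Hypothetical user store: username -> (salt, password hash).
_alice_salt = os.urandom(16)
USERS = {"alice": (_alice_salt, hash_password("correct horse", _alice_salt))}
_DUMMY_SALT = os.urandom(16)

GENERIC_ERROR = "Invalid username or password."


def login(username: str, password: str) -> str:
    record = USERS.get(username)
    if record is None:
        # Do similar work anyway so timing does not reveal unknown usernames.
        hash_password(password, _DUMMY_SALT)
        return GENERIC_ERROR  # not "unknown username"
    salt, stored = record
    # Constant-time comparison of the stored and computed hashes.
    if not hmac.compare_digest(stored, hash_password(password, salt)):
        return GENERIC_ERROR  # not "wrong password"
    return f"Welcome, {username}"


print(login("mallory", "guess"))        # Invalid username or password.
print(login("alice", "wrong"))          # Invalid username or password.
print(login("alice", "correct horse"))  # Welcome, alice
```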

A security professional should also consider the following:

What is the software environment? Where is the software used? Is it on a mainframe, or maybe a publicly available website? Is the software run on a server, or is it downloaded and executed on the client (mobile code)?


What programming language and toolset were used? Some languages, such as C, do not check array bounds and are therefore known to be vulnerable to buffer overflows.

Note

Java is estimated to be installed on more than 850 million computers, 3 billion phones, and millions of TVs, but it was not until August 2014 that Oracle changed its update software to remove older, vulnerable versions of Java during the installation process.

What security issues and concerns are present in the source code? Depending on how the code is processed, it may or may not be easy to identify problems. For example, a compiler translates a high-level language into machine language, whereas an interpreter translates the program line by line. It is important to determine whether an attacker can change input, process, or output data and whether the program will flag these errors.


How do you identify malware and defend against it? At a minimum, malware protection (antivirus) software needs to be deployed and methods to detect unauthorized changes need to be implemented.
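One common method of detecting unauthorized changes is file integrity monitoring. You record a baseline of cryptographic hashes for known-good files and later flag any file whose hash no longer matches or that has disappeared. The sketch below does this with SHA-256 from Python’s hashlib, the same digest that sha256sum computes. The temporary file and the simulated tampering exist only for demonstration. The function names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, Iterable, List


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths: Iterable[Path]) -> Dict[str, str]:
    """Record the known-good hash of each file."""
    return {str(p): sha256_of(p) for p in paths}


def changed_files(baseline: Dict[str, str]) -> List[str]:
    """List files that are missing or whose hash differs from the baseline."""
    return [
        name
        for name, expected in baseline.items()
        if not Path(name).exists() or sha256_of(Path(name)) != expected
    ]


with tempfile.TemporaryDirectory() as tmp:
    app = Path(tmp) / "app.py"
    app.write_text("print('hello')\n")
    baseline = build_baseline([app])
    before = changed_files(baseline)  # nothing has changed yet
    app.write_text("print('hello'); import os\n")  # simulated tampering
    after = changed_files(baseline)

print(before, len(after))
```

In practice, the baseline itself must be protected, for example stored offline or signed. Otherwise, an attacker who modifies a file could simply update its recorded hash.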

Note

Regardless of the programming language used, multiple secure coding standards can help you build robust, secure applications. These standards include the Application Security and Development Security Technical Implementation Guide (ASD STIG), the SEI CERT coding standards from the Software Engineering Institute (SEI), the Open Web Application Security Project (OWASP) Top 10, and the Center for Internet Security (CIS) Top 20.

A risk assessment should be conducted for all application programming interfaces (APIs). Fuzz testing can be used to validate security controls and reduce the attack surface. APIs use function calls and offer developers the ability to bypass traditional web pages and interact directly with the underlying service. This type of functionality also comes with risk. Simple Object Access Protocol (SOAP) and Representational State Transfer (REST) are examples of API styles. REST is an architectural style that identifies resources with uniform resource identifiers (URIs). SOAP is a standardized protocol that defines service interfaces and exchanges XML messages over other protocols, such as HTTP and SMTP. REST is often chosen as a simpler, lighter-weight alternative to SOAP. Three methods of authentication for RESTful APIs include the following:

Basic: This method provides the lowest security. Credentials are sent with every request and are only Base64-encoded, not encrypted. Basic authentication should not be used.

OAuth 1.0a: This method is secure but complicated. Each request is cryptographically signed.

OAuth 2.0: This method is similar to OAuth 1.0a but is less complex. It does not sign requests; instead, it relies on TLS to protect tokens in transit.
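The weakness of Basic authentication is easy to demonstrate. The scheme (defined in RFC 7617) sends the string "username:password" Base64-encoded in an Authorization header. Base64 is a reversible encoding, not encryption. As a result, anyone who captures the header, for example on a connection without TLS, can recover the credentials. The username and password below are made up.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


header = basic_auth_header("alice", "s3cret")
print(header)  # Basic YWxpY2U6czNjcmV0

# An eavesdropper reverses the encoding with no key at all.
recovered = base64.b64decode(header.split(" ", 1)[1]).decode("utf-8")
print(recovered)  # alice:s3cret
```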

Security doesn’t stop after the software development process. The longer a program has been in use, the more vulnerable it becomes as attackers have more time to probe and explore methods to exploit it. Attackers might even analyze patches to determine what the patches are trying to fix and figure out how such vulnerabilities might be exploited.

Security professionals need to plan properly for timely deployment of patches and updates. A patch is a fix for a particular problem in application or operating system code, typically a problem that causes the application to malfunction rather than one that creates a security risk. A hot fix is quick but lacks full integration and testing, and it addresses only a specific issue. A service pack is a collection of all the patches released to date; it is considered critical and should be installed as soon as possible.

Mobile Code