Chapter 4

Security Architecture and Engineering

Terms you’ll need to understand:

Buffer overflows

Security models

Rings of protection

Public key infrastructure

Digital signatures

Common Criteria

Reference monitor

Trusted computing base

Open and closed systems

Emanations

Encryption

Topics you’ll need to master:

How to select controls based on system security requirements

Use of confidentiality models such as Bell-LaPadula

How to identify integrity models such as Biba and Clark-Wilson

Common flaws and security issues associated with security architecture designs

Cryptography and how it is used to protect sensitive information

The need for and placement of physical security control

Introduction

The CISSP exam Security Architecture and Engineering domain deals with hardware, software, security controls, and documentation. When hardware is designed, it needs to be built to specific standards that should provide mechanisms to protect the confidentiality, integrity, and availability of the data. The operating systems (OS) that will run on the hardware must also be designed in such a way as to ensure security.

Building secure hardware and operating systems is just a start. Both vendors and customers need to have a way to verify that hardware and software perform as stated, to rate these systems, and to have some level of assurance that such systems will function in a known manner. Evaluation criteria allow the parties involved to have a level of assurance.

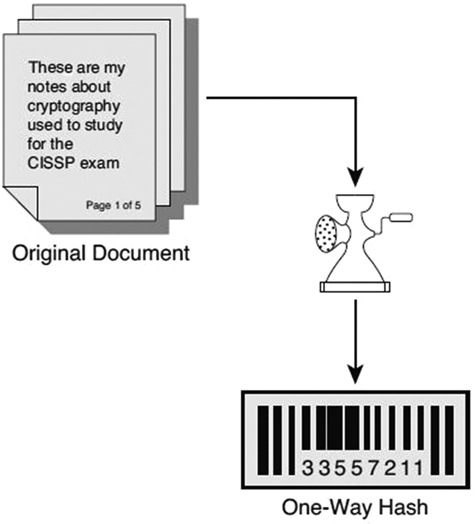

This chapter introduces cryptography and shows how it can be used at multiple layers to enhance security. To pass the CISSP exam, you need to understand system hardware and software models and how physical and logical controls can be used to secure systems. You also need to know symmetric and asymmetric cryptography, hashing, and digital signatures, all of which are potential test topics.

Secure Design Guidelines and Governance Principles

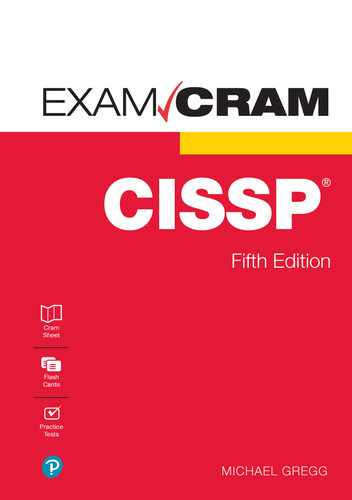

Building in security from the beginning of an architecture build is much cheaper than attempting to add it later. Part of this proactive approach should include an assessment to determine whether sensitive assets require any additional levels of security pertaining to confidentiality and integrity. Figure 4.1 illustrates the defense-in-depth design process.

There are two types of security controls:

Physical security controls: These controls can be used to restrict work areas, provide media security controls, restrict server room access, and maintain proper data storage and access.

Logical security controls: These controls can be deployed through the application of cryptographic controls.

FIGURE 4.1 Defense-in-Depth Design Process for Security Architecture

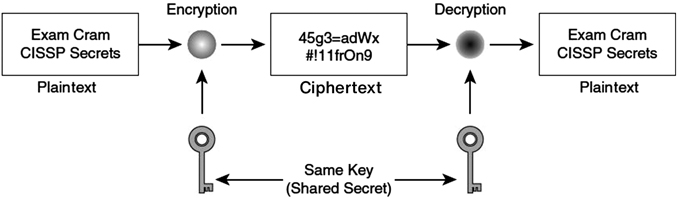

Various types of cryptographic controls can be used. Choosing the appropriate type requires determining specific characteristics, such as the type of algorithm used, the key length, and the application.

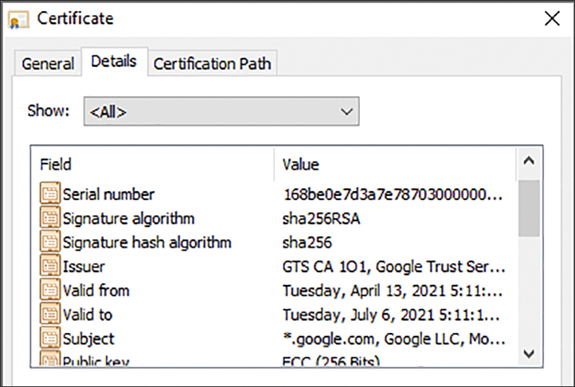

A public key infrastructure (PKI) is an industry-standard framework that establishes third-party trust between two different parties. Key management is a critical component of a PKI and includes cryptographic key generation, distribution, storage, validation, and destruction.

It is important to remember that all systems can be attacked, and it is critical to choose a cryptographic system that is strong enough. Cryptographic keys can be compromised. Compromises can be due to weak algorithms or weak keys. Many methods of cryptanalytic attacks exist to compromise keys.
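To make "strong enough" more concrete, the following minimal sketch estimates how long an exhaustive key search would take for different key lengths. The attacker speed of one trillion guesses per second is a purely hypothetical assumption, and the function names are illustrative only. Weak algorithms can be broken far faster than brute force suggests, so this shows only an upper bound on strength.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def keyspace(bits: int) -> int:
    """Number of possible keys for a key of the given length in bits."""
    return 2 ** bits


def average_years_to_search(bits: int, guesses_per_second: float) -> float:
    """Expected years for an exhaustive search.

    On average the key is found after trying half of the keyspace.
    """
    return keyspace(bits) / 2 / guesses_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = 1e12  # hypothetical attacker: one trillion guesses per second
    for bits in (56, 128, 256):
        years = average_years_to_search(bits, rate)
        print(f"{bits}-bit key: about {years:.3g} years on average")
```

Each additional key bit doubles the work for the attacker, which is why a 56-bit key falls quickly at this assumed rate while a 128-bit key does not.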

Note

Data at rest can be protected with a Trusted Platform Module (TPM) chip, which is a cryptographic hardware processor that can be used to provide a greater level of security than is provided through software encryption. A TPM chip installed on the motherboard of a client computer can also be used for system state authentication. A TPM chip can also be used to store encryption keys.

TPM chips are addressed in ISO 11889-1:2009 and can be used with other forms of data and system protections to provide a layered approach referred to as defense in depth.

A framework is used to categorize an information system or business and used to guide which controls or standards are applicable. These frameworks are typically tied to governance, which should focus on the availability of services, integrity of information, and protection of data confidentiality.

One early framework is Saltzer and Schroeder’s principles for effective security titled “The Protection of Information in Computer Systems.” This 1975 paper may seem somewhat dated today, but it is still relevant and often covered in college and university courses. In this paper, Saltzer and Schroeder define a framework for secure systems design that is based on eight architectural principles:

Complete mediation

Economy of mechanism

Fail-safe defaults

Least privilege

Least common mechanism

Open design

Psychological acceptability

Separation of privilege
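Several of these principles translate directly into code. The following minimal sketch, which is an illustration rather than anything taken from the Saltzer and Schroeder paper, shows fail-safe defaults (deny unless explicitly granted), complete mediation (every request is checked), and least privilege (each subject holds only the rights it needs). The subjects, the object name, and the `ACL` table are hypothetical.

```python
from typing import Dict, Set, Tuple

# Hypothetical access control list: (subject, object) -> allowed actions.
# Least privilege: alice only needs to read, so she is granted nothing more.
ACL: Dict[Tuple[str, str], Set[str]] = {
    ("alice", "payroll.db"): {"read"},
    ("bob", "payroll.db"): {"read", "write"},
}


def is_allowed(subject: str, obj: str, action: str) -> bool:
    """Fail-safe default: anything not explicitly granted is denied."""
    return action in ACL.get((subject, obj), set())


def access(subject: str, obj: str, action: str) -> str:
    """Complete mediation: every request passes through the same check."""
    if not is_allowed(subject, obj, action):
        raise PermissionError(f"{subject} may not {action} {obj}")
    return f"{subject} performed {action} on {obj}"
```

Because the lookup falls back to an empty set, an unknown subject or object is denied without any special-case code, which also keeps the mechanism small (economy of mechanism).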

Another approach is the ISO/IEC 19249, Security Techniques—Catalogue of Architectural and Design Principles for Secure Products, Systems and Applications, which breaks out design principles into two groupings, each with five items:

Architectural principles:

Domain separation

Layering

Encapsulation

Redundancy

Virtualization

Design principles:

Least privilege

Attack surface minimization

Centralized parameter validation

Centralized general security services

Preparing for error and exception handling

Another governance framework is the IT Infrastructure Library (ITIL). ITIL specifies a set of processes, procedures, and tasks that can be integrated with an organization’s strategy to deliver value and maintain a minimum level of competency. ITIL can be used to create a baseline from which the organization can plan, implement, and measure its governance progress. ITIL presents a service lifecycle that includes the following components:

Continual service improvement

Service strategy

Service design

Service transition

Service operation

Enterprise Architecture

Security and governance can be enhanced by implementing an enterprise architecture (EA) plan. EA is the practice in information technology of organizing and documenting a company’s IT assets to enhance planning, management, and expansion. The primary purpose of using EA is to ensure that business strategy and IT investments are aligned. The benefit of EA is that it provides a means of traceability that extends from the highest level of business strategy down to the fundamental technology.

One early EA model is the Zachman Framework, which was designed to allow companies to structure policy documents for information systems so they focus on who, what, where, when, why, and how (see Figure 4.2).

FIGURE 4.2 Zachman Model

Federal law requires each government agency to set up its EA and a structure for its governance. This process is guided by the Federal Enterprise Architecture (FEA) framework, which is designed to use five models:

Performance reference model: A framework used to measure performance of major IT investments

Business reference model: A framework used to provide an organized, hierarchical model for day-to-day business operations

Service component reference model: A framework used to classify service components with respect to how they support business or performance objectives

Technical reference model: A framework used to categorize the standards, specifications, and technologies that support and enable the delivery of service components and capabilities

Data reference model: A framework used to provide a standard means by which data can be described, categorized, and shared

An independently designed, but later integrated, subset of the Zachman Framework is the Sherwood Applied Business Security Architecture (SABSA). Like the Zachman Framework, the SABSA model and methodology were developed for risk-driven enterprise information security architectures. It asks what, why, how, who, where, and when. For more information on the SABSA model, see www.sabsa-institute.org.

The ISO 27000 series is a family of governance standards that can trace their origins back to BS 7799. Organizations can become ISO 27001 certified by having an accredited certification body verify their compliance. Some of the core ISO standards include the following:

ISO 27001: This document describes requirements for establishing, implementing, operating, monitoring, reviewing, and maintaining an information security management system (ISMS). It follows the Plan-Do-Check-Act model.

ISO 27002: This document, which began as the BS 7799 standard and was republished as the ISO 17799 standard, describes ways to develop a security program within an organization.

ISO 27003: This document focuses on implementation.

ISO 27004: This document describes the ways to measure the effectiveness of an information security program.

ISO 27005: This document describes information security risk management.

True security is a layered process and requires more than governance. The items discussed in the following sections can be used to build a more secure organization.

Regulatory Compliance and Process Control

One area of concern for a security professional is protection of sensitive information, including financial data. One attempt to provide this protection is the Payment Card Industry Data Security Standard (PCI-DSS). This multinational standard, which was first released in 2004, was created to enforce strict standards of control for the protection of credit card, debit card, ATM card, and gift card numbers by mandating policies, security devices, controls, and network monitoring. PCI also sets standards for the protection of personally identifiable information that is associated with the cardholder on an account. Participating vendors include American Express, MasterCard, Visa, and Discover.

Whereas PCI is used to protect financial data, Control Objectives for Information and Related Technology (COBIT) was developed to meet the requirements of business and IT processes. It is a standard used by auditors worldwide and was developed by the Information Systems Audit and Control Association (ISACA). COBIT is divided into four control areas:

Planning and Organization

Acquisition and Implementation

Delivery and Support

Monitoring

Fundamental Concepts of Security Models

Modern computer systems can be broken down into four groupings, or layers:

Hardware

Kernel and device drivers

Operating system

Applications

Hardware interacts with software, such as the operating system kernel, and operating systems and applications do the things we need done. At the core of every computer system are the central processing unit (CPU) and the hardware that makes it run. The CPU is just one of the items that you can find on the motherboard, which serves as the base for most crucial system components.

The following sections examine the various parts of a computer system, starting at the heart of the system.

Central Processing Unit

The CPU is the heart of a computer system and serves as the brain of the computer. The CPU consists of the following:

Arithmetic logic unit (ALU): The ALU performs arithmetic and logical operations. It is the brain of the CPU.

Control unit: The control unit manages the instructions it receives from memory. It decodes and executes the requested instructions and determines what instructions have priority for processing.

Memory: Memory is used to hold instructions and data to be processed. CPU memory is not typical memory; it is much faster than non-CPU memory.

A CPU executes each instruction as a series of basic operations: fetch, decode, execute, and write. Pipelining overlaps these steps so that the CPU can begin fetching the next instruction while earlier instructions are still being decoded or executed. A CPU can operate in one of four states:

Supervisor state: The program can access the entire system.

Problem state: Only non-privileged instructions can be executed.

Ready state: The program is ready to resume processing.

Wait state: The program is waiting for an event to complete.
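The fetch, decode, execute, and write steps, together with the difference between the supervisor and problem states, can be sketched with a toy interpreter. This is a teaching model only, not how any real CPU is implemented; the three opcodes and the `HALT_IO` privileged instruction are invented for the example.

```python
from typing import List, Tuple

# Hypothetical privileged opcode for this toy machine.
PRIVILEGED = {"HALT_IO"}


def run(program: List[Tuple[str, int]], supervisor: bool = False) -> int:
    """Run a toy program and return the final accumulator value."""
    acc = 0  # accumulator register
    pc = 0   # program counter
    while pc < len(program):
        opcode, operand = program[pc]  # fetch the next instruction
        pc += 1
        # Decode, then refuse privileged instructions in the problem state.
        if opcode in PRIVILEGED and not supervisor:
            raise PermissionError(f"{opcode} requires supervisor state")
        if opcode == "LOAD":           # execute and write the result
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "HALT_IO":      # privileged: stops the machine
            break
        else:
            raise ValueError(f"unknown opcode {opcode}")
    return acc
```

Running the same program with `supervisor=False` and `supervisor=True` shows that only the supervisor state may execute the privileged instruction.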

Because CPUs have very specific designs, the operating system as well as applications must be developed to work with the CPU. CPUs also have different types of registers to hold data and instructions. The base register contains the beginning address assigned to a process, and the limit register marks the end of the memory segment. Together, these components are responsible for the recall and execution of programs.
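A short sketch can show how a base and a limit bound the memory a process may touch. The `Segment` class and its addresses are hypothetical, and the limit is modeled here as the last valid address of the segment, which is one of several conventions in use.

```python
class Segment:
    """Memory segment bounded by a base address and a limit address."""

    def __init__(self, base: int, limit: int) -> None:
        self.base = base    # first physical address assigned to the process
        self.limit = limit  # last physical address of the segment (inclusive)

    def translate(self, offset: int) -> int:
        """Turn a process-relative offset into a physical address."""
        physical = self.base + offset
        if offset < 0 or physical > self.limit:
            raise MemoryError(f"offset {offset:#x} is outside the segment")
        return physical
```

For example, `Segment(0x1000, 0x1FFF).translate(0x10)` yields `0x1010`, while an offset of `0x1000` is rejected because it falls past the limit.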

CPUs have made great strides, as illustrated in Table 4.1. As the size of transistors has decreased, the number of transistors that can be placed on a CPU has increased. Thanks to increases in the total number of transistors and in clock speed, the power of CPUs has increased exponentially. Today, a 3.06 GHz Intel Core i7 can execute billions of instructions per second, which is thousands of MIPS (millions of instructions per second).

TABLE 4.1 CPU Advancements

| CPU | Year | Number of Transistors | Clock Speed |
|---|---|---|---|
| 8080 | 1974 | 6,000 | 2 MHz |
| 80386 | 1986 | 275,000 | 12.5 MHz |
| Pentium | 1993 | 3,100,000 | 60 MHz |
| Intel Core 2 | 2006 | 291,000,000 | 2.66 GHz |
| Intel Core i7 | 2009 | 731,000,000 | 4.00 GHz |
| Intel Core M | 2014 | 1,300,000,000 | 2.6 GHz |

Note

Processor speed is measured in MIPS (millions of instructions per second). This standard is used to indicate how fast a CPU can work.
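As a rough illustration of how a MIPS figure relates to clock speed, the sketch below multiplies clock rate by the average number of instructions completed per clock cycle and by the number of cores. The instructions-per-cycle and core-count values in the example are assumptions chosen for the arithmetic, not measured figures for any particular processor.

```python
def mips(clock_hz: float, instructions_per_cycle: float, cores: int = 1) -> float:
    """Estimate millions of instructions per second (MIPS).

    instructions_per_cycle is an average that depends heavily on the workload.
    """
    return clock_hz * instructions_per_cycle * cores / 1_000_000


if __name__ == "__main__":
    # Hypothetical 3.06 GHz quad-core chip averaging 2 instructions per cycle.
    print(f"{mips(3.06e9, 2, cores=4):,.0f} MIPS")
```

The formula makes clear why clock speed alone is a poor comparison between CPUs: a chip that completes more instructions per cycle can deliver more MIPS at a lower clock rate.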

Two basic designs of CPUs are manufactured for modern computer systems:

Reduced instruction set computer (RISC): Uses simple instructions that require a reduced number of clock cycles

Complex instruction set computer (CISC): Performs multiple operations for a single instruction

The CPU requires two inputs to accomplish its duties: instructions and data. The data is passed to the CPU for manipulation, where it is typically worked on in either the problem state or the supervisor state. In the problem state, the CPU works on the data with non-privileged instructions. In the supervisor state, the CPU executes privileged instructions.

ExamAlert

A superscalar processor is a processor that can execute multiple instructions at the same time; a scalar processor can execute only one instruction at a time. You need to know this distinction for the CISSP exam.

A CPU can be classified into one of several categories, depending on its functionality. When the computer’s CPU, motherboard, and operating system all support the functionality, the computer system is also categorized according to the following:

Multiprogramming: Can interleave two or more programs for execution at any one time

Multitasking: Can perform two or more tasks or subtasks at a time

Multiprocessor: Supports two or more CPUs

A multiprocessor system can work in symmetric or asymmetric mode. In symmetric mode, all processors are equal: any processor can handle any task, all peripherals are equally accessible, and no specialized path is required to reach resources. In asymmetric mode, one CPU schedules and coordinates tasks among the other processors and resources.

The data that CPUs work with is usually part of an application or a program. These programs are tracked using a process ID (PID). Anyone who has ever looked at Task Manager in Windows or executed a ps command on a Linux machine has probably seen a PID number. You can manipulate the priority of these tasks as well as start and stop them. Fortunately, most programs do much more than the first C code you wrote, which probably just said “Hello World.” Each independent sequence of instructions that a program can schedule and run is known as a thread.

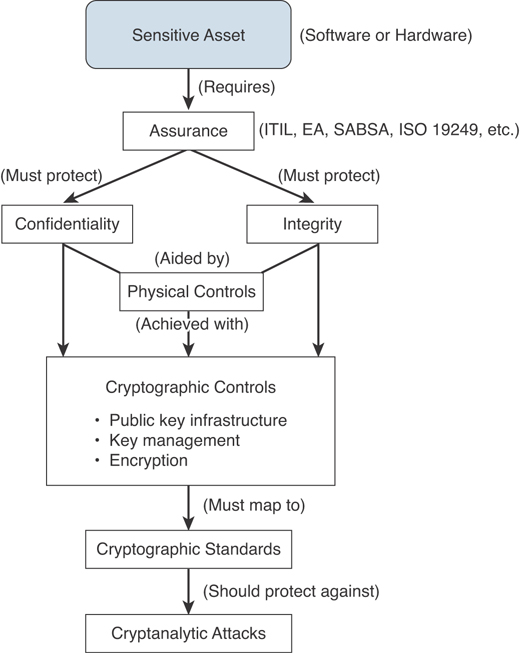

A program that has the capability to carry out more than one thread at a time is referred to as multithreaded (see Figure 4.3).

FIGURE 4.3 Processes and Threads
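The relationship between a process and its threads can be observed with a few lines of code. In this minimal sketch, every thread reports the same PID because all of them belong to one process, while each has its own name. The worker function and thread names are made up for the example.

```python
import os
import threading
from typing import List, Tuple

results: List[Tuple[int, str, int]] = []
lock = threading.Lock()  # protects the shared results list


def worker(n: int) -> None:
    """Record this thread's PID, its name, and a small computed value."""
    with lock:
        results.append((os.getpid(), threading.current_thread().name, n * n))


def run_threads(count: int = 3) -> List[Tuple[int, str, int]]:
    """Start count threads in one process and collect what they report."""
    results.clear()
    threads = [
        threading.Thread(target=worker, args=(i,), name=f"worker-{i}")
        for i in range(count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results, key=lambda r: r[2])


if __name__ == "__main__":
    for pid, name, value in run_threads():
        print(f"PID {pid} thread {name} computed {value}")
```

The lock is needed because the threads share the memory of the process, which is exactly what distinguishes threads from isolated processes.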

Process activity uses process isolation to separate processes. Four process isolation techniques are used to ensure that each application receives adequate processor time to operate properly:

Encapsulation of processes or objects: Other processes do not interact with the application.

Virtual mapping: The application is written in such a way that it believes it is the only application running.

Time multiplexing: This allows the application or process to share the computer’s resources.

Naming distinctions: Processes are assigned their own unique names.
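Time multiplexing is the easiest of these techniques to model. The sketch below simulates a simple round-robin scheduler, which is one common way to share a processor but is only assumed here for illustration. The process names and burst times are hypothetical.

```python
from collections import deque
from typing import Dict, List


def round_robin(burst_times: Dict[str, int], quantum: int) -> List[str]:
    """Return the order in which processes receive time slices.

    burst_times maps a process name to the CPU time it still needs;
    quantum is the length of one time slice in the same units.
    """
    queue = deque(burst_times.items())
    schedule: List[str] = []
    while queue:
        name, remaining = queue.popleft()
        schedule.append(name)          # this process runs for one slice
        remaining -= quantum
        if remaining > 0:              # unfinished work goes to the back
            queue.append((name, remaining))
    return schedule
```

With burst times of 3, 1, and 2 for processes A, B, and C and a quantum of 1, the slices are handed out in the order A, B, C, A, C, A, so no single process monopolizes the processor.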

ExamAlert

To get a good look at naming distinctions, run ps -aux from the terminal of a Linux system and note the unique PID values.

An interrupt is another key piece of a computer system. It is an electrical signal that a device places on a connection to the CPU to get the CPU’s attention. Interrupts are one of several ways a system handles input/output (I/O). The following are common I/O methods:

Programmed I/O: Used to transfer data between a CPU and a peripheral device

Interrupt-driven I/O: A more efficient input/output method that requires complex hardware

I/O using DMA: I/O based on direct memory access that can bypass the processor and write the information directly to main memory

Memory-mapped I/O: A method that requires the CPU to reserve space for I/O functions and to make use of the address for both memory and I/O devices

Port-mapped I/O: A method that uses a special class of instruction that can read and write a single byte to an I/O device

ExamAlert

Interrupts can be maskable and non-maskable. Maskable interrupts can be ignored by the application or the system, whereas non-maskable interrupts cannot be ignored by the system. An example of a non-maskable interrupt in Windows is the interrupt that occurs when you press Ctrl+Alt+Delete.
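The difference between maskable and non-maskable interrupts can be sketched as a simple model. The `InterruptController` class and the interrupt names below are hypothetical, and the model simplifies real hardware, which typically holds a masked interrupt pending instead of discarding it.

```python
from typing import List


class InterruptController:
    """Toy model of interrupt masking."""

    def __init__(self) -> None:
        self.masked = False            # True while maskable interrupts are disabled
        self.handled: List[str] = []   # interrupts the CPU actually serviced

    def raise_interrupt(self, name: str, maskable: bool = True) -> bool:
        """Deliver an interrupt; return True if the CPU handled it."""
        if maskable and self.masked:
            return False               # ignored while interrupts are masked
        self.handled.append(name)
        return True
```

Setting `masked = True` and then raising a maskable keyboard interrupt returns `False`, while a non-maskable interrupt raised in the same state is still handled.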

There is a natural hierarchy to memory, and there must therefore be a way to manage memory and ensure that it does not become corrupted. That is the job of the memory management system. Memory management systems on multitasking operating systems are responsible for the following tasks:

Relocation: The system maintains the ability to copy memory contents from memory to secondary storage as needed.

Protection: The system provides control to memory segments and restricts which processes can write to memory.

Sharing: The system allows sharing of information based on a user’s security level for access control. For instance, Mike may be able to read an object, whereas Shawn may be able to read and write to the object.

Logical organization: The system provides for the sharing of and support for dynamic link libraries.

Physical organization: The system provides for the physical organization of memory.

Storage Media

A computer is not just a CPU; memory is also an important component. The CPU uses memory to store instructions and data. Therefore, memory is an important type of storage media. The CPU is the only component that can directly access memory. Systems are designed this way because the CPU has a high level of system trust.

A CPU can use different types of addressing schemes to communicate with memory, including absolute addressing and relative addressing. In addition, memory can be addressed either physically or logically. Physical addressing refers to the hard-coded address assigned to memory. Applications and programmers writing code use logical addresses. Relative addressing involves using a known address with an offset applied.

Not only can memory be addressed in different ways, but there are also different types of memory. Memory can be either nonvolatile or volatile. The sections that follow provide examples of both of these types.

Tip

Two important security concepts associated with storage are protected memory and memory addressing. For the CISSP exam, you should understand that protected memory prevents other programs or processes from gaining access or modifying the contents of address space that has previously been assigned to another active program. Memory can be addressed either physically or logically. Memory addressing describes the method used by the CPU to access the contents of memory. This is especially important for understanding the root causes of buffer overflow attacks.
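The link between memory addressing and buffer overflows can be illustrated with a simulated block of memory. In this sketch, an 8-byte buffer sits directly before a one-byte flag, and a copy routine that never checks the buffer size overwrites the flag. The memory layout, addresses, and function names are all hypothetical; Python itself prevents real overflows, so a `bytearray` stands in for raw memory.

```python
BUF_START = 0   # the buffer occupies addresses 0 through 7
BUF_SIZE = 8
FLAG_ADDR = 8   # adjacent variable stored right after the buffer


def unchecked_copy(mem: bytearray, start: int, data: bytes) -> None:
    """Copy byte by byte with no bounds check (the flawed pattern)."""
    for i, value in enumerate(data):
        mem[start + i] = value


def checked_copy(mem: bytearray, start: int, size: int, data: bytes) -> None:
    """Refuse any input that does not fit in the destination buffer."""
    if len(data) > size:
        raise ValueError("input is larger than the buffer")
    mem[start:start + len(data)] = data


if __name__ == "__main__":
    memory = bytearray(16)
    unchecked_copy(memory, BUF_START, b"A" * 9)  # one byte too many
    print(f"flag after overflow: {memory[FLAG_ADDR]:#x}")
```

The ninth byte lands on the flag because the buffer and the flag are neighbors in the same address space, which is the root cause the Tip describes.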

RAM

Random-access memory (RAM) is volatile memory. If power is lost, the data in RAM is destroyed. RAM comes in two types: static random-access memory (SRAM), which uses circuit latches to represent binary data, and dynamic random-access memory (DRAM), which must be refreshed every few milliseconds.

SRAM doesn’t require a refresh signal, as DRAM does. SRAM chips are more complex and faster, and thus they are more expensive. DRAM access times are around 60 nanoseconds (ns) or more; SRAM has access times as fast as 10 ns. SRAM is often used for cache memory.

DRAM chips can be manufactured inexpensively. Dynamic refers to the memory chips’ need for a constant update signal (also called a refresh signal) to retain the information that is written there. Currently, there are five popular implementations of DRAM:

Synchronous DRAM (SDRAM): SDRAM shares a common clock signal with the transmitter of the data. The computer’s system bus clock provides the common signal that all SDRAM components use for each step to be performed.

Double data rate (DDR): DDR supports a double transfer rate compared to ordinary SDRAM.

DDR2: DDR2 splits each clock pulse in two, doubling the number of operations it can perform.

DDR3: DDR3 is a DRAM interface specification that can transfer data at twice the rate of DDR2 (eight times the speed of its internal memory arrays), enabling higher bandwidth or peak data rates.

DDR4: DDR4 offers higher speed than DDR2 or DDR3 and is one of the latest variants of DRAM. It is not compatible with any earlier type of RAM.
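The effect of a double data rate can be worked out with simple arithmetic: peak bandwidth is the bus clock multiplied by the number of transfers per clock and by the width of the bus. The sketch below assumes a 64-bit memory bus, which is typical of PC memory modules but is an assumption here, and it ignores real-world overhead.

```python
def peak_bandwidth_mb_s(
    bus_clock_mhz: float, transfers_per_clock: int, bus_width_bits: int = 64
) -> float:
    """Theoretical peak memory bandwidth in megabytes per second."""
    return bus_clock_mhz * transfers_per_clock * bus_width_bits / 8


if __name__ == "__main__":
    print(peak_bandwidth_mb_s(100, 1))  # single data rate at 100 MHz
    print(peak_bandwidth_mb_s(100, 2))  # double data rate at 100 MHz
```

At the same 100 MHz bus clock, moving from one transfer per clock to two doubles the theoretical peak from 800 MBps to 1,600 MBps.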

ExamAlert

Memory leaks occur when programs or processes use RAM but cannot release it. Programs that suffer from memory leaks will eventually use up all available memory and can cause a system to halt or crash.

ROM

Read-only memory (ROM) is nonvolatile memory that retains information even if power is removed. ROM is typically used to load and store firmware. Firmware is embedded software, such as BIOS or UEFI.

Tip

Most modern computer systems use Unified Extensible Firmware Interface (UEFI) instead of BIOS. UEFI offers several advantages over BIOS, including support for remote diagnostics and repair of systems even if no OS is installed.

Some common types of ROM include the following:

Erasable programmable read-only memory (EPROM)

Electrically erasable programmable read-only memory (EEPROM)

Flash memory

Programmable logic devices (PLDs)

Secondary Storage

Memory plays an important role in the world of storage, but other long-term types of storage are also needed. One of these is sequential storage. Tape drives are a type of sequential storage that must be read sequentially from beginning to end.

Another well-known type of secondary storage is direct-access storage. Direct-access storage devices do not have to be read sequentially; the system can identify the location of the information and go directly to it to read the data. A hard drive is an example of a direct-access storage device: A hard drive has a series of platters, read/write heads, motors, and drive electronics contained within a case designed to prevent contamination. Hard drives are used to hold data and software. Software is an operating system or application that you’ve installed on a computer system.
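The practical difference between sequential and direct access can be sketched with an in-memory byte stream standing in for the storage device. The fixed 4-byte record size and the function names are assumptions made for the example.

```python
import io
from typing import BinaryIO, Tuple

RECORD_SIZE = 4  # hypothetical fixed record length in bytes


def read_sequential(stream: BinaryIO, index: int) -> Tuple[bytes, int]:
    """Tape-style access: read every record from the start up to index."""
    stream.seek(0)
    record = b""
    reads = 0
    for _ in range(index + 1):
        record = stream.read(RECORD_SIZE)
        reads += 1
    return record, reads


def read_direct(stream: BinaryIO, index: int) -> Tuple[bytes, int]:
    """Disk-style access: jump straight to the record's location."""
    stream.seek(index * RECORD_SIZE)
    return stream.read(RECORD_SIZE), 1


if __name__ == "__main__":
    data = io.BytesIO(b"AAAABBBBCCCCDDDD")
    print(read_sequential(data, 2))
    print(read_direct(data, 2))
```

Both functions return the same record, but the sequential version needs three reads to reach the third record, whereas the direct version needs one regardless of position.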

Compact discs (CDs) are a type of optical media. They use a laser/opto-electronic sensor combination to read or write data. A CD can be read-only, write-once, or rewritable. CDs can hold up to around 800 MB on a single disc. A CD is manufactured by applying a thin layer of aluminum to what is primarily hard clear plastic. During manufacture or when a CD-R is burned, small bumps or pits are placed in the surface of the disc. These bumps or pits are converted into binary ones or zeros. Unlike a floppy disk, which has tracks and sectors, a CD comprises one long spiral track that begins at the inside of the disc and continues toward the outer edge.

Digital video discs (DVDs) are very similar to CDs in that both are optical media; DVDs just hold more data. The current version of optical storage is the Blu-ray disc. These optical discs can hold 50 GB or more of data.

More and more systems today are moving to solid-state drives (SSDs) and flash memory storage. Sizes up to 2 TB are now common.

I/O Bus Standards

The data that a CPU is working with must have a way to move from the storage media to the CPU. This is accomplished by means of a bus. A bus is lines of conductors that transmit data between the CPU, storage media, and other hardware devices. You need to understand two bus-related terms for the CISSP exam:

Northbridge: The northbridge, which is considered the memory controller hub (MCH), connects the CPU, RAM, and video memory.

Southbridge: The southbridge, which is considered the I/O controller hub (ICH), connects input/output devices such as the hard drive, DVD drive, keyboard, and mouse.

From the point of view of the CPU, the various adapters plugged in to a computer are external devices. These connectors and the bus architecture used to move data to the devices have changed over time. The following are some bus architectures with which you need to be familiar:

Industry Standard Architecture (ISA): The ISA bus started as an 8-bit bus designed for IBM PCs. It is now obsolete.

Peripheral Component Interconnect (PCI): The PCI bus was developed by Intel and served as a replacement for ISA and other bus standards. PCI Express is now the standard.

Peripheral Component Interconnect Express (PCIe): The PCIe bus was developed as an upgrade to PCI. It offers several advantages, such as greater bus throughput, smaller physical footprint, better performance, and better error detection and reporting.

Serial ATA (SATA): The SATA standard is the current standard for connecting hard drives and solid-state drives to computers. It uses a serial design and smaller cables and offers greater speeds and better airflow inside the computer case.

Small Computer Systems Interface (SCSI): The SCSI bus allows a variety of devices to be daisy-chained off a single controller. Many servers use the SCSI bus for their preferred hard drive solution.

Universal Serial Bus (USB) has gained wide market share. USB overcame the limitations of traditional serial interfaces. USB 2.0 devices can communicate at speeds up to 480 Mbps (60 MBps), whereas USB 3.0 devices have a maximum bandwidth of 5 Gbps (625 MBps). Devices can be chained together through hubs so that up to 127 devices can be connected to a single USB host controller, eliminating the need for expansion slots on the motherboard. A later USB standard is 3.2. The biggest improvement in the USB 3.2 standard is a boost in data transfer bandwidth to as much as 20 Gbps.
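The megabyte-per-second figures for USB follow from dividing the bit rate by eight. The following is a minimal sketch of that arithmetic, assuming decimal units (1 Gbps = 1,000 Mbps) and ignoring encoding overhead, which lowers real-world throughput. The function and variable names are illustrative only.

```python
def mbps_to_megabytes_per_second(megabits_per_second: float) -> float:
    """Convert a raw signaling rate in Mbps to MBps (8 bits per byte)."""
    return megabits_per_second / 8


# Raw signaling rates in megabits per second (decimal units assumed).
usb_rates_mbps = {"USB 2.0": 480, "USB 3.0": 5_000}

usb_rates_mbytes = {
    name: mbps_to_megabytes_per_second(rate)
    for name, rate in usb_rates_mbps.items()
}

for name, rate in usb_rates_mbytes.items():
    print(f"{name}: {rate:g} MBps")
```

By straight division, 480 Mbps works out to 60 MBps and 5 Gbps works out to 625 MBps.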

USB is used for flash memory, cameras, printers, external hard drives, and phones. USB has two fundamental advantages: It has broad product support and devices are typically recognized immediately when connected.

Many Apple computers make use of the Thunderbolt interface, and a few legacy FireWire (IEEE 1394) interfaces are still found on digital audio and video equipment.

Virtual Memory and Virtual Machines

Modern computer systems have developed specific ways to store and access information. One of these is virtual memory, which is the combination of the computer’s primary memory (RAM) and secondary storage (the hard drive or SSD). When these two technologies are combined, the OS can make the CPU believe that it has much more memory than it actually has. Examples of virtual memory include the following:

Page file

Swap space

Swap partition

These virtual memory types are user defined in terms of size, location, and other factors. When RAM is nearly depleted, the OS begins moving data onto the computer’s hard drive in a process called paging. Paging takes parts of a program out of memory and uses the page file to save those parts of the program. If the system requires more RAM than paging provides, it writes an entire process out to the swap space. This process uses a paging file/swap file so that the data can be moved back and forth between the hard drive and RAM as needed. A specific drive can even be configured to hold such data and is therefore called a swap partition. Individuals who have used a computer’s hibernation function or who have ever opened more programs on their computers than they’ve had enough memory to support are familiar with the operation of virtual memory.
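The paging behavior described above can be sketched as a toy model. This is only an illustration, not how any particular OS implements virtual memory: the class name `TinyVirtualMemory`, the two-frame RAM size, and the least-recently-used eviction policy are all assumptions made for the example.

```python
from collections import OrderedDict


class TinyVirtualMemory:
    """Toy model: a few RAM frames backed by a page file (hypothetical names)."""

    def __init__(self, ram_frames: int) -> None:
        self.ram_frames = ram_frames
        self.ram = OrderedDict()  # page id -> data, least recently used first
        self.page_file = {}       # pages moved out to secondary storage
        self.page_faults = 0

    def _make_room(self, incoming: str) -> None:
        """Page out the least recently used pages until a frame is free."""
        if incoming in self.ram:
            return
        while len(self.ram) >= self.ram_frames:
            victim, data = self.ram.popitem(last=False)
            self.page_file[victim] = data

    def write(self, page: str, data: str) -> None:
        """Place data in RAM, paging other data out if RAM is full."""
        self.page_file.pop(page, None)  # any stale copy on disk is replaced
        self._make_room(page)
        self.ram[page] = data
        self.ram.move_to_end(page)

    def read(self, page: str) -> str:
        """Return a page's data, paging it back in from disk if necessary."""
        if page not in self.ram:
            self.page_faults += 1  # page fault: the data is not in RAM
            data = self.page_file.pop(page)
            self._make_room(page)
            self.ram[page] = data
        self.ram.move_to_end(page)
        return self.ram[page]


vm = TinyVirtualMemory(ram_frames=2)
for page, data in [("A", "alpha"), ("B", "beta"), ("C", "gamma")]:
    vm.write(page, data)
paged_out_after_writes = sorted(vm.page_file)  # page "A" no longer fits in RAM
value = vm.read("A")                           # brings "A" back, pages "B" out
```

The program sees three pages even though only two fit in RAM at once, which is the illusion of extra memory that virtual memory provides.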

Closely related to virtual memory are virtual machines, which can be created with products such as VMware Workstation and Oracle VM VirtualBox. VMware is one of the leaders in the machine virtualization market. A virtual machine enables the user to run a second OS within a virtual host. For example, a virtual machine can let you run another Windows OS, Linux x86, or any other OS that runs on an x86 processor and supports standard BIOS/UEFI booting.

Virtual systems make use of a hypervisor to manage the virtualized hardware resources to run a guest operating system. A Type 1 hypervisor runs directly on the hardware, with VM resources provided by the hypervisor, whereas a Type 2 hypervisor runs on a host operating system above the hardware. Virtual machines can be used for development, system administration, and production, and they can reduce the number of physical devices needed. Hypervisors are also being used to design virtual switches, routers, and firewalls.

Tip

Virtualization has been very important in the workplace, but cloud-based systems have more recently begun to take the place of VMs. Cloud-based systems enable employees to work from many different locations. The applications and data can reside in the cloud, and a user can access this content from any location that has connectivity. The potential disadvantage of cloud computing is that security can be an issue. It is important to consider who owns the cloud. Is it a private cloud (owned by the company) or a public cloud (owned by someone else)? In addition, what is the physical location of the cloud, who has access to the cloud, and is it shared (co-tenancy)? It is critical to consider each of these factors before placing any corporate assets in the cloud.

Computer Configurations

The following are some of the most commonly used computer and device configurations:

Print server: Print servers are usually located close to printers and allow many users to access the same printer and share its resources.

File server: File servers allow users to have a centralized site to store files. A file server provides an easy way to perform backups because it can be done on one server rather than on all the client computers. It also allows for group collaboration and multiuser access.

Application server: An application server allows users to run applications that are not installed on an end user’s system. It is a very popular concept in thin client environments, which depend on a central server for processing power. Licensing is an important consideration with application servers.

Web server: Web servers provide web services to internal and external users via web pages. A sample web address or URL (uniform resource locator) is www.thesolutionfirm.com.

Database server: Database servers store and access data, including information such as product inventories, price lists, customer lists, and employee data. Because databases hold sensitive information, they require well-designed security controls. A database server typically sits in front of a database and brokers requests, acting as middleware between the untrusted users and the database holding the data.

Laptops and tablets: These are mobile devices that are easily lost or stolen. Mobile devices have become very powerful and must be properly secured.

Smartphones: Today’s smartphones are handheld computers that have large amounts of processing capability. They can take photos and offer onboard storage, Internet connectivity, and the ability to run applications. These devices are of particular concern as more companies start to support bring your own device (BYOD) policies. Such devices can easily fall outside of company policies and controls.

Industrial control systems (ICS): ICSs are typically used for industrial process control, such as with manufacturing systems on factory floors. ICSs can be used to operate and/or automate industrial processes. There are several categories of ICSs, including supervisory control and data acquisition (SCADA) systems, distributed control systems (DCSs), and field devices.

Embedded devices / Internet of Things (IoT): Embedded devices / IoT include ATMs, point-of-sale terminals, and smartwatches. More and more devices include embedded technology, such as smart refrigerators and Bluetooth-enabled toilets. The security of embedded devices is a growing concern, as these devices may not be patched or updated on a regular basis.

Note

We can expect more and more devices to have embedded technology as the Internet of Things (IoT) grows. Several companies even sell toilets with Bluetooth and SD card technology built in; like other devices, they are not immune to hacking (see www.extremetech.com/extreme/163119-smart-toilets-bidet-hacked-via-bluetoothgives-new-meaning-to-backdoor-vulnerability).

Security Architecture

Although a robust functional architecture is a good start, real security requires that you have a security architecture in place to control processes and applications. Concepts related to security architecture include the following:

Protection rings

Trusted computing base (TCB)

Open and closed systems

Security modes of operation

Operating states

Recovery procedures

Process isolation

Protection Rings

An operating system knows who and what to trust by relying on protection rings. Protection rings work much like your network of family members, friends, coworkers, and acquaintances. The people who are closest to you, such as your spouse and children, have the highest level of trust. Those who are distant acquaintances or are unknown to you probably have a lower level of trust. For example, when you see a guy on Canal Street in New York City hawking new Rolex watches for $100, you should have little trust in him and his relationship with the Rolex company!

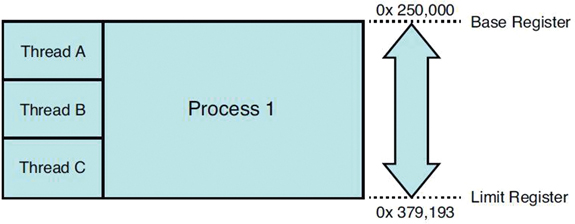

Protection rings are conceptual rather than physical entities. Figure 4.4 illustrates the protection rings schema. The first implementation of such a system was in MIT’s Multics time-shared operating system.

FIGURE 4.4 Protection Rings

The protection rings model provides the operating system with various levels at which to execute code or to restrict that code’s access. The idea is to use engineering design to build in layers of control using secure design principles. The rings provide much greater granularity than a system that just operates in user and privileged modes. As code moves toward the outer bounds of the model, the layer number increases, and the level of trust decreases. This model includes the following layers:

Layer 0: This is the most trusted level. The operating system kernel resides at this level. Any process running at layer 0 is said to be operating in privileged mode.

Layer 1: This layer contains non-privileged portions of the operating system.

Layer 2: This is where I/O drivers, low-level operations, and utilities reside.

Layer 3: This layer is where applications and processes operate. It is the level at which individuals usually interact with the operating system. Applications operating here are said to be working in user mode, which is often referred to as problem mode because this is where the less-trusted applications run; it is, therefore, where most problems occur.

Not all systems use all rings in the protection rings model. Most systems that are used today operate in two modes: user mode and supervisor (privileged) mode.
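The ring ordering can be sketched in a few lines of code. This is a conceptual illustration only, assuming the simple rule that code may directly reach resources in its own ring or in a less-trusted (higher-numbered) ring; real processors add mechanisms such as call gates for controlled transitions inward. The names `Ring`, `may_access_directly`, and `is_privileged` are hypothetical.

```python
from enum import IntEnum


class Ring(IntEnum):
    """The four layers of the protection rings model (0 is most trusted)."""
    KERNEL = 0        # operating system kernel, privileged mode
    OS_SERVICES = 1   # non-privileged portions of the operating system
    DRIVERS = 2       # I/O drivers, low-level operations, utilities
    APPLICATIONS = 3  # applications and processes, user mode


def may_access_directly(caller: Ring, resource: Ring) -> bool:
    """Allow access only to the caller's own ring or a less-trusted ring."""
    return caller <= resource


def is_privileged(ring: Ring) -> bool:
    """Only code running at layer 0 operates in privileged mode."""
    return ring == Ring.KERNEL


kernel_reaches_app = may_access_directly(Ring.KERNEL, Ring.APPLICATIONS)
app_reaches_kernel = may_access_directly(Ring.APPLICATIONS, Ring.KERNEL)
```

Under this rule, layer 0 can reach every other layer, while an application at layer 3 cannot touch kernel resources directly and must ask a more trusted layer to act on its behalf.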

Items that need high security, such as the operating system security kernel, are located in the center ring. This ring is unique because it has access rights to all domains in the system. Protection rings are part of the trusted computing base concept, which is described next.

Trusted Computing Base

The trusted computing base (TCB) is the sum of all the protection mechanisms within a computer and is responsible for enforcing the security policy. The TCB includes hardware, software, controls, and processes and is responsible for confidentiality and integrity. The TCB is the only portion of a system that operates at a high level of trust. It monitors four basic functions:

Input/output (I/O) operations: I/O operations are a security concern because operations from the outermost rings might need to interface with rings of greater protection. These cross-domain communications must be monitored.

Execution domain switching: Applications running in one domain or level of protection often invoke applications or services in other domains. If these requests are to obtain more sensitive data or service, their activity must be controlled.

Memory protection: To truly provide security, the TCB must monitor memory references to verify confidentiality and integrity in storage.

Process activation: Registers, process status information, and file access lists are vulnerable to loss of confidentiality in a multiprogramming environment. This type of potentially sensitive information must be protected.

ExamAlert

For the CISSP exam, you should understand not only that the TCB is tasked with enforcing security policy but also that the TCB is the sum of all protection mechanisms within a computer system that have also been evaluated for security assurance. It consists of hardware, firmware, and software.

Components that have not been evaluated are said to fall outside the security perimeter.

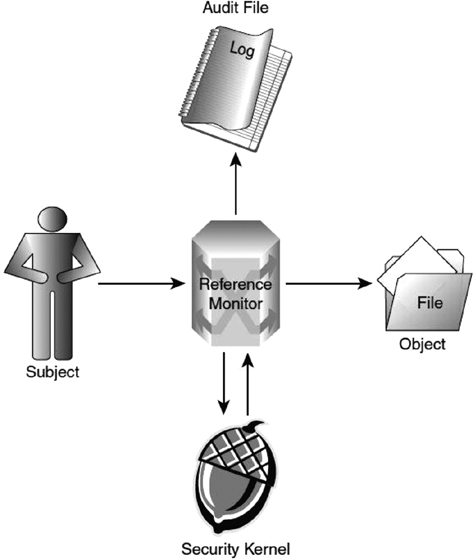

The TCB monitors the functions in the preceding list to ensure that the system operates correctly and adheres to security policy. The TCB follows the reference monitor concept. The reference monitor is an abstract machine that is used to implement security. The reference monitor’s job is to validate access to objects by authorized subjects. The reference monitor operates at the boundary between the trusted and untrusted realms. The reference monitor has three properties:

It cannot be bypassed and controls all access, as it must be invoked for every access attempt.

It cannot be altered and is protected from modification or change.

It must be small enough to be verified and tested correctly.

ExamAlert

For the CISSP exam, you should understand that the reference monitor enforces the security requirement for the security kernel.

The reference monitor is much like the bouncer at a club, standing between each subject and object and verifying that each subject meets the minimum requirements for access to an object (see Figure 4.5).

FIGURE 4.5 Reference Monitor

Note

Subjects are active entities such as people, processes, or devices.

Objects are passive entities that are designed to contain or receive information. Objects can be processes, software, or hardware.

The reference monitor can be designed to use tokens, capability lists, or labels:

Tokens: Communicate security attributes before requesting access

Capability lists: Offer faster lookup than security tokens but are not as flexible

Security labels: Used by high-security systems because these labels offer permanence
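The idea of a reference monitor that mediates every access can be sketched with security labels. This is a simplified illustration under stated assumptions, not a real security kernel: the label ordering in `LEVELS`, the class names, and the single rule that a subject’s clearance must be at least as high as the object’s label are all chosen for the example.

```python
from dataclasses import dataclass

# Illustrative label ordering; a real system defines its own levels.
LEVELS = {"unclassified": 0, "secret": 1, "top secret": 2}


@dataclass(frozen=True)
class Subject:
    """An active entity, such as a person or a process."""
    name: str
    clearance: str


@dataclass(frozen=True)
class ProtectedObject:
    """A passive entity that contains or receives information."""
    name: str
    label: str


class ReferenceMonitor:
    """Checks every access request and records the decision."""

    def __init__(self) -> None:
        self.audit_log = []

    def request_access(self, subject: Subject, obj: ProtectedObject) -> bool:
        # The subject must meet the minimum requirement set by the label.
        allowed = LEVELS[subject.clearance] >= LEVELS[obj.label]
        self.audit_log.append((subject.name, obj.name, allowed))
        return allowed


monitor = ReferenceMonitor()
analyst = Subject("analyst", "secret")
report = ProtectedObject("field report", "secret")
plans = ProtectedObject("war plans", "top secret")

can_read_report = monitor.request_access(analyst, report)
can_read_plans = monitor.request_access(analyst, plans)
```

Because every request passes through `request_access`, the check cannot be skipped by the caller in this sketch, and the function is small enough to test exhaustively, which mirrors two of the three reference monitor properties.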

At the heart of the operating system is the security kernel. The security kernel handles all user/application requests for access to system resources. A small security kernel is easy to verify, test, and validate as secure. However, in real life, the security kernel might be bloated with some unnecessary code because processes located inside can function faster and have privileged access. Vendors have taken different approaches to developing operating systems. For example, DOS used a monolithic kernel. Several of these designs are shown in Figure 4.6 and are described here:

Monolithic architecture: All of the OS processes work in kernel mode.

Layered OS design: This design separates system functionality into different layers.

Microkernel: A smaller kernel supports only critical processes.

Hybrid microkernel: The kernel structure is similar to a microkernel but implemented in terms of a monolithic design.

Although the reference monitor is conceptual, the security kernel can be found at the heart of every system. The security kernel is responsible for running the required controls used to enforce functionality and resist known attacks. As mentioned previously, the reference monitor operates at the security perimeter: the boundary between the trusted and untrusted realms. Components outside the security perimeter are not trusted. All trusted access control mechanisms are inside the security perimeter.

FIGURE 4.6 Operating System Architecture

Source: http://upload.wikimedia.org/wikipedia/commons/d/d0/OS-structure2.svg

Open and Closed Systems

Open systems accept input from other vendors and are based on standards and practices that allow connection to different devices and interfaces. The goal is to promote full interoperability whereby the system can be fully utilized.

Closed systems are proprietary. They use devices that are not based on open standards and that are generally locked. They lack standard interfaces to allow connection to other devices and interfaces.

For example, in the U.S. cell phone industry, AT&T and T-Mobile cell phones are based on the worldwide Global System for Mobile Communications (GSM) standard and can be used overseas easily on other networks with a simple change of the subscriber identity module (SIM). These are open-system phones. Phones that use Code Division Multiple Access (CDMA), such as Sprint and Verizon phones, do not have the same level of support, and CDMA has been almost completely phased out. The phase-out began in 2010, when carriers worldwide agreed to switch to LTE, a 4G network technology, with 2023 listed as the final drop date.

Note

The concept of open and closed can apply to more than just hardware. With open software, others can view and/or alter the source code, but with closed software, they cannot. For example, a Samsung Galaxy phone runs the open-source Android operating system, whereas an Apple iPhone runs the closed-source iOS.

Security Modes of Operation

Several security modes of operation are based on Department of Defense (DoD) 5220.22-M classification levels. According to the DoD, information being processed on a system and the clearance level of authorized users can be classified into one of four modes (see Table 4.2):

Dedicated: A need to know is required to access all information stored or processed. Every user requires formal access with clearance and approval and must have executed a signed nondisclosure agreement (NDA) for all the information stored and/or processed. This mode must also support enforced system access procedures. All hard-copy output and media removed will be handled at the level for which the system is accredited until reviewed by a knowledgeable individual. As the system is dedicated to processing of one particular type or classification of information, all authorized users can access all data.

System high: All users have a security clearance; however, a need to know is required only for some of the information contained within the system. Every user requires access approval and needs to have signed NDAs for all the information stored and/or processed. Access to an object by users not already possessing access permission must only be assigned by authorized users of the object. This mode must be capable of providing an audit trail that records time, date, user ID, terminal ID (if applicable), and filename. All users can access some data based on their need to know.

Compartmented: Valid need to know is required for some of the information on the system. All users must have formal access approval for all information they will access on the system and require proper clearance for the highest level of data classification on the system. All users must have signed NDAs for all information they will access on the system. All users can access some data based on their need to know and formal access approval.

Multilevel: Every user has a valid need to know for some of the information that is on the system, and more than one classification level can be processed at the same time. Users must have formal access approval and must have signed NDAs for all information they will access on the system. Mandatory access controls provide a means of restricting access to files based on their sensitivity label. All users can access some data based on their need to know, clearance, and formal access approval.

TABLE 4.2 Security Modes of Operation

| Mode | Dedicated | System High | Compartmented | Multilevel |
|---|---|---|---|---|
| Signed NDA | All | All | All | All |
| Clearance | All | All | All | Some |
| Approval | All | All | Some | Some |
| Need to know | All | Some | Some | Some |
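As a study aid, the contents of Table 4.2 can be encoded as a small lookup structure. This is only a sketch: the dictionary layout and the helper name `modes_matching` are assumptions for illustration, and the values simply restate the table.

```python
# Each mode maps a requirement to how much of the information it covers.
SECURITY_MODES = {
    "Dedicated": {
        "Signed NDA": "All", "Clearance": "All",
        "Approval": "All", "Need to know": "All",
    },
    "System High": {
        "Signed NDA": "All", "Clearance": "All",
        "Approval": "All", "Need to know": "Some",
    },
    "Compartmented": {
        "Signed NDA": "All", "Clearance": "All",
        "Approval": "Some", "Need to know": "Some",
    },
    "Multilevel": {
        "Signed NDA": "All", "Clearance": "Some",
        "Approval": "Some", "Need to know": "Some",
    },
}


def modes_matching(requirements: dict) -> list:
    """Return the modes whose table rows match every given requirement."""
    return [
        mode
        for mode, row in SECURITY_MODES.items()
        if all(row[key] == value for key, value in requirements.items())
    ]


full_need_to_know = modes_matching({"Need to know": "All"})
partial_clearance = modes_matching({"Clearance": "Some"})
full_approval = modes_matching({"Approval": "All"})
```

Querying the structure highlights the distinguishing features: only dedicated mode requires a need to know for all information, and only multilevel mode allows users to hold clearance for just some of it.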

Note

The terms sensitivity label and security label denote high-security, mandatory access control (MAC)-based systems.

Operating States

When systems are used to process and store sensitive information, there must be some agreed-on methods for how this will work. Generally, these concepts were developed to meet the requirements of handling sensitive government information with categories such as sensitive, secret, and top secret. The burden of handling this task can be placed on either administration or the system itself.

Generally, two designs are used:

Single-state systems: This type of system is designed and implemented to handle one category of information. The burden of management falls on the administrator, who must develop the policy and procedures to manage the system. The administrator must also determine who has access and what type of access the users have. These systems are dedicated to one mode of operation, so they are sometimes referred to as dedicated systems.

Multistate systems: These systems depend not on the administrator but on the system itself. More than one person can log in to a multistate system and access various types of data, depending on the level of clearance. As you would probably expect, these systems can be expensive. The XTS-400 that runs the Secure Trusted Operating Program (STOP) OS from BAE Systems is an example of a multistate system. A multistate system can operate as a compartmentalized system. This means that Mike can log in to the system with a secret clearance and access secret-level data, whereas Dwayne can log in with top-secret-level clearance and access a different level of data. These systems are compartmentalized and can segment data on a need-to-know basis.

Tip

Security-Enhanced Linux and TrustedBSD are freely available implementations of operating systems with limited multistate capabilities. Security evaluation is a problem for these free multilevel security (MLS) implementations because of the expense and time it would take to fully qualify these systems.

Recovery Procedures

Unfortunately, things don’t always operate normally; they sometimes go wrong, and system failure can occur. A system failure could potentially compromise a system by undermining integrity, opening security holes, or corrupting data. Efficient designs have built-in recovery procedures to recover from potential problems. There are two basic types of recovery procedures:

Fail safe: If a failure is detected, the system is protected from compromise by termination of services.

Fail soft: A detected failure terminates the noncritical process. Systems in fail soft mode are still able to provide partial operational capability.
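The difference between the two recovery procedures can be sketched as follows. This is a minimal illustration, assuming each service carries a simple `critical` flag; the `Service` class, the function names, and the sample service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    critical: bool
    running: bool = True


def fail_safe(services: list) -> None:
    """On a detected failure, terminate all services to prevent compromise."""
    for service in services:
        service.running = False


def fail_soft(services: list) -> None:
    """On a detected failure, terminate only the noncritical services."""
    for service in services:
        if not service.critical:
            service.running = False


def still_running(services: list) -> list:
    return [service.name for service in services if service.running]


def sample_services() -> list:
    return [
        Service("authentication", critical=True),
        Service("reporting", critical=False),
    ]


safe_system = sample_services()
fail_safe(safe_system)
after_fail_safe = still_running(safe_system)

soft_system = sample_services()
fail_soft(soft_system)
after_fail_soft = still_running(soft_system)
```

After `fail_safe`, nothing is left running; after `fail_soft`, the critical service continues, which corresponds to partial operational capability.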

It is important to be able to recover when an issue arises. The best way to ensure recovery is to take a proactive approach and back up all critical files on a regular schedule. The goal of recovery is to recover to a known state. Common issues that require recovery include the following:

System reboot: An unexpected/unscheduled event can cause a system reboot.

System restart: This automatically occurs when a system goes down and forces an immediate reboot.

System cold start: This results from a major failure or component replacement.

System compromise: This can be caused by an attack or a breach of security.

Process Isolation

Process isolation is required to maintain a high level of system trust. For a system to be certified as a multilevel security system, it must support process isolation. Without process isolation, there would be no way to prevent one process from spilling over into another process’s memory space, corrupting data, or possibly making the whole system unstable. Process isolation is performed by the operating system; its job is to enforce memory boundaries. Separation of processes is an important topic; without it, a system could be designed with a single point of failure (SPOF) so that one flaw in the design or configuration could cause the entire system to stop operating.

For a system to be secure, the operating system must prevent unauthorized users from accessing areas of the system to which they should not have access, it should be robust, and it should have no single point of failure. Sometimes all this is accomplished through the use of a virtual machine. A virtual machine allows users to believe that they have the use of the entire system, but in reality, processes are completely isolated. To take this concept a step further, some systems that require truly robust security also implement hardware isolation so that the processes are segmented not only logically but also physically.

Note

Java uses a form of virtual machine because it uses a sandbox to contain code and allows it to function only in a controlled manner.

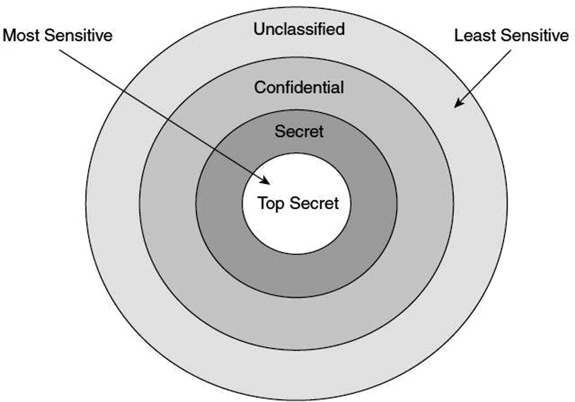

Common Formal Security Models

Security models are used to determine how security will be implemented, what subjects can access the system, and what objects they will have access to. Simply stated, a security model formalizes security policy. Security models of control are typically implemented by enforcing integrity, confidentiality, or other controls. Keep in mind that each of these models lays out broad guidelines and is not specific in nature. It is up to the developer to decide how these models will be used and integrated into specific designs (see Figure 4.7).

The sections that follow discuss the different security models of control in greater detail. The first three models discussed are considered lower-level models.

FIGURE 4.7 Security Model Fundamental Concepts Used in the Design of an OS

State Machine Model

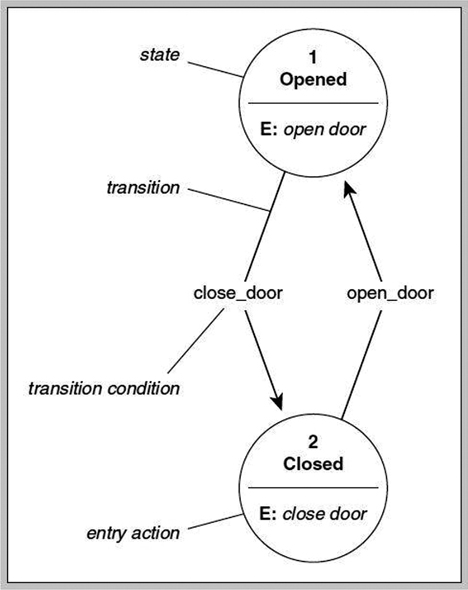

The state machine model is based on a finite state machine (see Figure 4.8). State machines are used to model complex systems and deal with acceptors, recognizers, state variables, and transaction functions. A state machine defines the behavior of a finite number of states, the transitions between those states, and actions that can occur.

The most common representation of a state machine is through a state machine table. For example, as Table 4.3 illustrates, if the state machine is at the current state B and condition 2, the next state would be C and condition 3 as we progress through the options.

FIGURE 4.8 Finite State Model

TABLE 4.3 State Machine Table

| State Transaction | State A | State B | State C |
|---|---|---|---|
| Condition 1 | … | … | … |
| Condition 2 | … | Current state | … |
| Condition 3 | … | … | … |

A state machine model monitors the status of the system to prevent it from slipping into an insecure state. Systems that support the state machine model must have all their possible states examined to verify that all processes are controlled in accordance with the system security policy. The state machine concept serves as the basis of many security models. The model is valued for knowing in what state the system will reside. For example, if the system boots up in a secure state, and every transaction that occurs is secure, it must always be in a secure state and will not fail open. (To fail open means that all traffic or actions are allowed rather than denied.)
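A minimal Python sketch can make the idea concrete. It assumes a hypothetical transition table in which only the entry for state B under condition 2 comes from the example above; the other entries, and the names `SECURE_STATES`, `TRANSITIONS`, `next_state`, and `all_transitions_secure`, are invented for illustration.

```python
# States that the (hypothetical) security policy considers secure.
SECURE_STATES = {"A", "B", "C"}

# (current state, condition) -> next state.
# Only ("B", 2) -> "C" mirrors the text; the rest are made up.
TRANSITIONS = {
    ("A", 1): "B",
    ("B", 2): "C",
    ("C", 3): "A",
}


def next_state(state, condition):
    """Return the next state, denying any undefined or insecure transition."""
    target = TRANSITIONS.get((state, condition))
    if target is None or target not in SECURE_STATES:
        # Deny by default rather than fail open.
        raise PermissionError(
            f"transition from {state} on condition {condition} denied"
        )
    return target


def all_transitions_secure():
    """Examine every defined transition, as the model requires."""
    return all(
        src in SECURE_STATES and dst in SECURE_STATES
        for (src, _condition), dst in TRANSITIONS.items()
    )


print(next_state("B", 2))
print(all_transitions_secure())
```

Because every defined transition starts and ends in a secure state and everything else is denied, a system that starts in a secure state stays in one.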

Information Flow Model

The information flow model is an extension of the state machine concept and serves as the basis of design for both the Biba and Bell-LaPadula models, which are discussed later in this chapter. The information flow model consists of objects, state transitions, and lattice (flow policy) states. The goal with this model is to prevent unauthorized, insecure information flow in any direction. This model and others can make use of guards, which allow the exchange of data between various systems.
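One possible flow policy is a confidentiality lattice, sketched below in Python. The `Label` type, the numeric levels, and the compartment names are assumptions made for this example; the model itself does not prescribe them.

```python
from typing import FrozenSet, NamedTuple


class Label(NamedTuple):
    level: int                    # higher number = more sensitive (assumed)
    compartments: FrozenSet[str]  # hypothetical need-to-know categories


def dominates(a, b):
    """True if label a is at least as high as b and covers b's compartments."""
    return a.level >= b.level and a.compartments >= b.compartments


def flow_allowed(source, destination):
    """Under this policy, information may flow only to a dominating label."""
    return dominates(destination, source)


secret = Label(2, frozenset())
secret_crypto = Label(2, frozenset({"crypto"}))
top_secret = Label(3, frozenset())

print(flow_allowed(secret, secret_crypto))      # upward flow in the lattice
print(flow_allowed(secret_crypto, secret))      # would leak compartment data
print(flow_allowed(secret_crypto, top_secret))  # labels are incomparable
```

The last case shows why a lattice is used rather than a simple ordering: two labels can be incomparable, and no flow is permitted between them in either direction.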

Noninterference Model

The noninterference model, defined by Goguen and Meseguer, was designed to make sure that objects and subjects of different levels don’t interfere with objects and subjects of other levels. The model uses inputs and outputs of either low or high sensitivity. Each data access attempt is independent of all others, and data cannot cross security boundaries.

Confidentiality

Although the models described so far serve as a basis for many security models developed later, one major concern with those earlier models is confidentiality. Government entities such as the DoD are concerned about the confidentiality of information. The DoD divides information into categories to ease the burden of managing who has access to various levels of information. The DoD information classifications are sensitive but unclassified (SBU), confidential, secret, and top secret. The Bell-LaPadula model was one of the first models to address the confidentiality needs of the DoD.

Bell-LaPadula Model

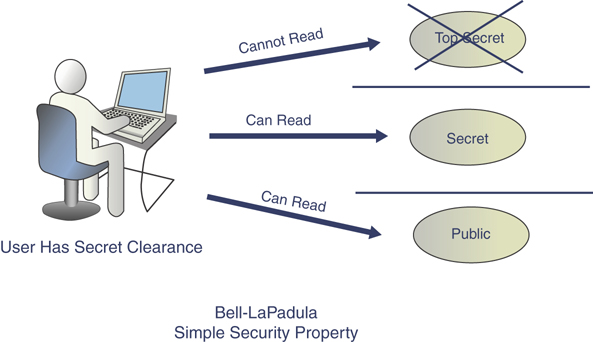

The Bell-LaPadula state machine model enforces confidentiality. This model uses mandatory access control to enforce the DoD multilevel security policy. For subjects to access information, they must have a clear need to know, and their clearance must meet or exceed the information’s classification level.

The Bell-LaPadula model is defined by the following properties:

Simple security (ss) property: This property states that a subject at one level of confidentiality is not allowed to read information at a higher level of confidentiality. This is sometimes referred to as “no read up.” Figure 4.9 provides an example.

FIGURE 4.9 Bell-LaPadula Simple Security Model

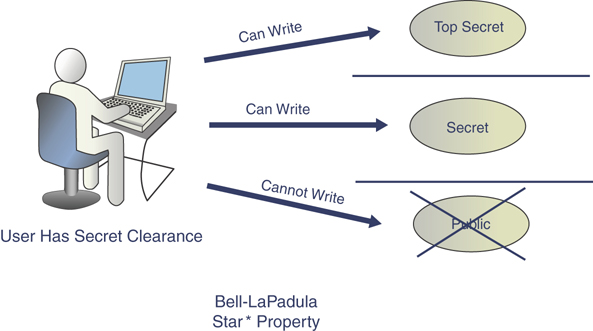

Star (*) security property: This property states that a subject at one level of confidentiality is not allowed to write information to a lower level of confidentiality. This is also known as “no write down.” Figure 4.10 provides an example.

FIGURE 4.10 Bell-LaPadula Star Property

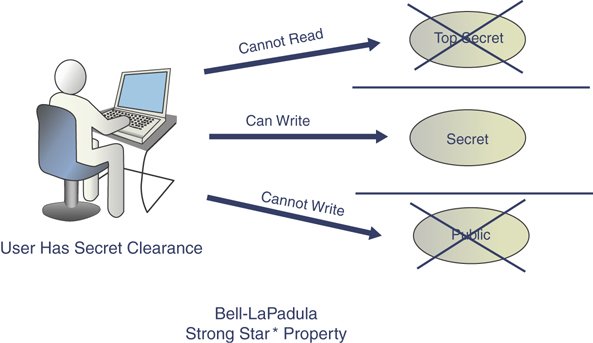

Strong star property: This property states that a subject cannot read or write to an object of higher or lower sensitivity. Figure 4.11 provides an example.

FIGURE 4.11 Bell-LaPadula Strong Star Property
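The three Bell-LaPadula properties can be expressed as simple comparisons. The following Python sketch uses the DoD classifications named earlier as an ordered `Level` enum; the function names are hypothetical, and the need-to-know check is deliberately left out to keep the example short.

```python
from enum import IntEnum


class Level(IntEnum):
    SBU = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def blp_can_read(subject, obj):
    """Simple security property: no read up."""
    return subject >= obj


def blp_can_write(subject, obj):
    """Star (*) property: no write down."""
    return subject <= obj


def blp_strong_star(subject, obj):
    """Strong star property: read and write only at the subject's own level."""
    return subject == obj


print(blp_can_read(Level.SECRET, Level.TOP_SECRET))    # read up is denied
print(blp_can_write(Level.SECRET, Level.CONFIDENTIAL)) # write down is denied
print(blp_strong_star(Level.SECRET, Level.SECRET))     # same level is allowed
```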

ExamAlert

Review the Bell-LaPadula simple security and star security properties closely; they are easy to confuse with Biba’s two defining properties.

Tip

A fourth but rarely implemented property of the Bell-LaPadula model called the discretionary security property allows users to grant access to other users at the same clearance level by means of an access matrix.

Although the Bell-LaPadula model goes a long way in defining the operation of secure systems, the model is not perfect. It does not address security issues such as covert channels. It was designed in an era when mainframes were the dominant platform. It was designed for multilevel security and takes only confidentiality into account.

Tip

It is important to know that the Bell-LaPadula model deals with confidentiality. This means that reading information at a higher level than is allowed endangers confidentiality.

Integrity

Integrity is one of the basic elements of the security triad, along with confidentiality and availability. Integrity plays an important role in security because it can be used to verify that unauthorized users are not modifying data, that authorized users don’t make unauthorized changes, and that data remains internally and externally consistent (for example, that databases balance). Whereas governmental entities are typically very concerned with confidentiality, other organizations might be more focused on the integrity of information. In general, integrity has four goals:

Prevent data modification by unauthorized parties

Prevent unauthorized data modification by authorized parties

Reflect the real world

Maintain internal and external consistency

Note

Some sources list only three goals of integrity by combining the third and fourth goals into one: maintain internal and external consistency and ensure that the data reflects the real world.

Two security models that address integrity are the Biba and Clark-Wilson models, which are covered in the following sections. The Biba model addresses only the first integrity goal, and the Clark-Wilson model addresses all four goals.

Biba Model

The Biba model was the first model developed to address integrity concerns. Originally published in 1977, this lattice-based model has the following defining properties:

Simple integrity property: This property states that a subject at one level of integrity is not permitted to read an object of lower integrity.

Star (*) integrity property: This property states that a subject at one level of integrity is not permitted to write to an object of higher integrity.

Invocation property: This property prohibits a subject at one level of integrity from invoking a subject at a higher level of integrity.
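The Biba properties mirror the Bell-LaPadula comparisons with the directions reversed. The Python sketch below assumes three hypothetical integrity levels (`LOW`, `MEDIUM`, `HIGH`); the model itself does not name specific levels, and the function names are invented for illustration.

```python
from enum import IntEnum


class Integrity(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


def biba_can_read(subject, obj):
    """Simple integrity property: no read down."""
    return subject <= obj


def biba_can_write(subject, obj):
    """Star (*) integrity property: no write up."""
    return subject >= obj


def biba_can_invoke(subject, other):
    """Invocation property: no invoking a subject of higher integrity."""
    return subject >= other


print(biba_can_read(Integrity.HIGH, Integrity.LOW))     # read down is denied
print(biba_can_write(Integrity.LOW, Integrity.HIGH))    # write up is denied
print(biba_can_invoke(Integrity.MEDIUM, Integrity.LOW)) # invoking lower is allowed
```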

Tip

The star property in both the Biba and Bell-LaPadula models deals with writes. One easy way to remember these rules is to think, “It’s written in the stars!”

The Biba model addresses only the first goal of integrity: preventing data modification by unauthorized parties. Other types of concerns such as confidentiality are not examined. This model also assumes that internal threats are handled by good coding practices, and it therefore focuses on external threats.

Tip

To remember the purpose of the Biba model, you can think that the i in Biba stands for integrity.

Tip

Remember that the Biba model deals with integrity and, as such, writing to an object of a higher level might endanger the integrity of the system.

Clark-Wilson Model

The Clark-Wilson model, which was created in 1987, differs from previous models because it was developed to be used for commercial activities. This model addresses all four goals of integrity. The Clark-Wilson model dictates that the separation of duties must be enforced, subjects must access data through an application, and auditing is required. Some terms associated with this model include the following:

User

Transformation procedure

Unconstrained data item

Constrained data item

Integrity verification procedure

The Clark-Wilson model features an access control triple, where subjects must access programs before accessing objects (subject–program–object). The access control triple is composed of the user, a transformation procedure, and the constrained data item. The model was designed to protect integrity and prevent fraud: authorized users cannot change data in an inappropriate way. The Clark-Wilson model checks three attributes: tampered, logged, and consistent (TLC).
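The access control triple can be sketched in Python as follows. Everything here is hypothetical: the `ClarkWilsonSketch` class, the `deposit` transformation procedure, the account data, and the non-negative-balance check standing in for an integrity verification procedure are assumptions chosen to keep the example small.

```python
class ClarkWilsonSketch:
    def __init__(self, cdis):
        self.cdis = dict(cdis)  # constrained data items, by name
        self.tps = {}           # transformation procedures, by name
        self.triples = set()    # allowed (user, TP, CDI) triples
        self.audit_log = []     # every attempt is logged

    def certify(self, user, tp_name, tp, cdi_name):
        """Register a transformation procedure and allow one triple."""
        self.tps[tp_name] = tp
        self.triples.add((user, tp_name, cdi_name))

    def run(self, user, tp_name, cdi_name, *args):
        """Change a CDI only through a TP the user is authorized to run."""
        allowed = (user, tp_name, cdi_name) in self.triples
        self.audit_log.append((user, tp_name, cdi_name, allowed))
        if not allowed:
            raise PermissionError(f"{user} may not run {tp_name} on {cdi_name}")
        self.cdis[cdi_name] = self.tps[tp_name](self.cdis[cdi_name], *args)
        return self.cdis[cdi_name]

    def verify(self):
        """Stand-in integrity verification: no balance may be negative."""
        return all(value >= 0 for value in self.cdis.values())


def deposit(balance, amount):
    """A simple transformation procedure that rejects invalid input."""
    if amount <= 0:
        raise ValueError("deposit must be positive")
    return balance + amount


system = ClarkWilsonSketch({"account": 100})
system.certify("teller", "deposit", deposit, "account")
```

In this sketch the user never touches the data item directly; every change goes through a registered procedure, every attempt is recorded, and a separate check confirms that the data is still consistent.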