Chapter 8. Optimizing Query Response

Fast Query Response Explained

When processing large amounts of data in a distributed environment, a naive query plan might take orders of magnitude more time than the optimal plan. In some cases, the query execution will not complete, even after several hours, as shown in our experimental study.1 Pivotal’s Query Optimizer (PQO) is designed to find the optimal way to execute user queries in distributed environments such as Pivotal’s Greenplum Database and HAWQ. The open source version of PQO is called GPORCA. To generate the fastest plan, GPORCA considers thousands of alternative query execution plans and makes a cost-based decision.

As with most commercial and scientific database systems, user queries are submitted to the database engine via SQL. SQL is a declarative language that is used to define, manage and query the data that is stored in relational/stream data management systems.

Declarative languages describe the desired result, not the logic required to produce it. The responsibility for generating an optimal execution plan lies solely with the query optimizer employed in the database management system. To understand how query processing works in Greenplum, there is an excellent description in the documentation.

GPORCA is a top-down query optimizer based on the Cascades optimization framework,2 which is not tightly coupled with the host system. This unique feature enables GPORCA to run as a standalone service outside the database system. Therefore, GPORCA supports products with different computing architectures (e.g., MPP and Hadoop) using a single optimizer. It also takes advantage of the extensive legacy of relational optimization in different query processing paradigms like Hadoop. For a given user query, there can be a significantly large number of ways to produce the desired result set, some much more efficient than others. While searching for an optimal query execution plan, the optimizer must examine as many plans as possible and follow heuristics and best practices for choosing and ignoring alternative plans. Statistics capturing the characteristics of the stored data, such as skew, number of distinct values, histograms, and percentage of null values, as well as the cost model for the various operations are crucial ingredients that the query optimizer relies on when navigating the space of all viable execution plans. For GPORCA to be most effective, it is crucial for DBAs to maintain up-to-date statistics for the queried data.

Until recently, Greenplum used what is referred to as the legacy query optimizer (LQO). This is a derivative of the original PostgreSQL planner that was adapted to the Greenplum code base initially. The PostgreSQL planner was originally built for single-node PostgreSQL optimized for OLTP queries. In contrast, an MPP engine is built for long running Online Analytical Processing (OLAP) queries. For this reason, the PostgreSQL planner was not built with an MPP database in mind. Although features like join ordering were carefully thought out, the architecture and design choices make maintenance and adding new features increasingly difficult.

At the end of 2010, Greenplum began an internal effort to produce a modern query optimizer, which made its first appearance in Greenplum version 4.3.5. as GPORCA.

What makes GPORCA particularly useful is its ability to generate more efficient code for some of the complex situations that commonly arise in analytic data warehouses, including the following:

Smarter partition elimination

Subquery unnesting

Common table expressions (CTE)

Multilevel partitioning

Improved join ordering

Join aggregate reordering

Sort order optimization

Skew awareness

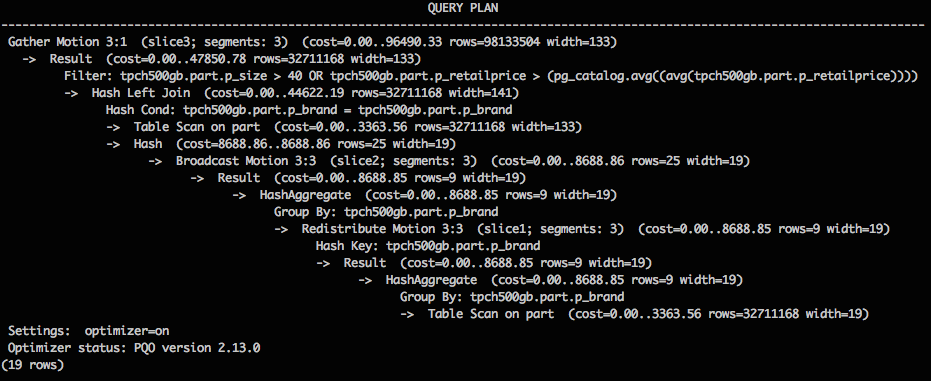

Previously, the legacy query optimizer was set as the default, but as of Greenplum 5.0, GPORCA is the default query optimizer (see Figure 8-1). You can change the default at the database level or the session level by setting the GUC parameter optimizer = on. When enabling GPORCA, we request that users or DBAs ensure that statistics have been collected on the root partition of a partitioned table. This is because, unlike the legacy planner, GPORCA uses the statistics at the root partitions rather than using statistics of individual leaf partitions.

Figure 8-1. Query flow when GPORCA is enabled

Let’s look at an example. Following is the schema for the table part from the TPC-H benchmark:

CREATE TABLE part (

p_partkey integer NOT NULL,

p_name character varying(55) NOT NULL,

p_mfgr character(25) NOT NULL,

p_brand character(10) NOT NULL,

p_type character varying(25) NOT NULL,

p_size integer NOT NULL,

p_container character(10) NOT NULL,

p_retailprice numeric(15,2) NOT NULL,

p_comment character varying(23) NOT NULL

) distributed by (p_partkey);

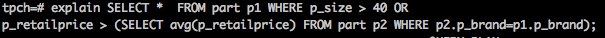

Consider the correlated query shown in Figure 8-2, which fetches all parts with size greater than 40 or retail price greater than the average price of all parts that have the same brand.

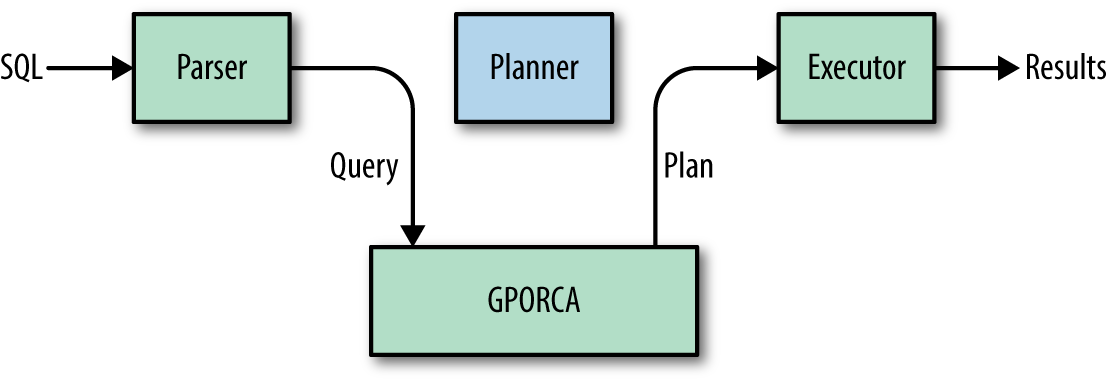

Figure 8-3 presents the explain plan produced by GPORCA, the optimizer status denotes the version of GPORCA used to generate the plan.

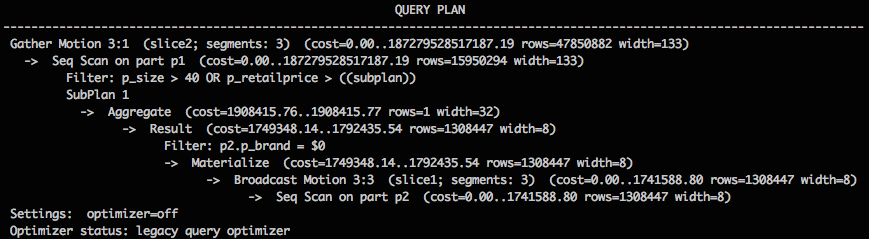

In comparison, Figure 8-4 shows an LQO plan that employs a correlated execution strategy.

Figure 8-4. Legacy query optimizer plan for a correlated subquery on the part table

Note

The cost models used by the two optimizers are different. For instance, the top node for the GPORCA plan has the cost of 98133504, whereas that of the legacy query optimizer is 187279528517187. These numbers make sense within a particular optimizer, but they are not comparable between the two different optimizers.

GPORCA excels on partitioned tables. By comparison, the LQO can only eliminate partitions statically. For example, if a table is partitioned by date, a WHERE clause that limits the date range would eliminate any partitions in which the limited date range could not occur. However, it cannot handle dynamic conditions in which the WHERE clause has a subquery that determines the range. Furthermore, many large fact tables in a data warehouse might have a significantly large number of partitions. The legacy planner could encounter Out Of Memory (OOM) errors in cases for which GPORCA would not.

Modern data analytics and business intelligence (BI) often produce SQL with correlated subqueries, where the inner subquery requires knowledge of the outer query. Consider the preceding example that fetches parts with size > 40 or retail price greater than the average price of all parts that have the same brand. In the plan shown in Figure 8-4 generated by the LQO, for the tuple in the outer part table p1, the plan executes a subplan that computes the average part price of all parts having the same brand as the tuple from table part p1. This computed intermediate value is used to determine whether that tuple in p1 will be in the query result or not. Because the legacy query optimizer plan repeatedly executes the subplan for each tuple in the part table p1, the plan is considered a correlated execution. Such a correlated plan is suboptimal because it does extraneous work that could be avoided. In the worst case, if all the parts belong to the same brand, we will be computing the average price one too many times.

In contrast, GPORCA generates a de-correlated plan in which it first computes average price for each brand. This is done only once. The intermediate results then are joined with the parts table to generate a list of parts that meets the user’s criteria.

Un-nesting correlated queries is very important in analytic data warehouses due to the way that BI tools are built. They are also common in handwritten SQL code. This is evident by the fact that 20 and 40 percent of the workloads in the TPC-DS and TPC-H benchmarks, respectively, have correlated subqueries.

With these and other optimizations, SQL optimized by GPORCA can achieve increases in speed of a factor of 10 or more. There are other queries, albeit a small number, for which GPORCA has not yet produced an improvement in performance. As more capabilities are added to GPORCA over time, it will be the rare case for which the LQO provides better performance.

Learning More

To read more about query optimization, go to the following sites:

1 “New Benchmark Results: Pivotal Query Optimizer Speeds Up Big Data Queries Up To 1000x”

2 G. Graefe. 1995. “The Cascades Framework for Query Optimization.” IEEE Data Eng Bull, 18(3).