Evaluate Early, Evaluate Often, Evaluate Continuously

If the first mistake is skipping architecture evaluations, the second mistake is waiting too long to start. The sooner you start testing your designs, the sooner you’ll be able to improve them. Better still, make evaluation a regular part of your development routine.

There are dozens of opportunities every day to confirm (or amend) design decisions. Every day we walk through the architecture and tell stories about how it promotes quality attributes. We submit code for peer review. We pair program as a regular part of our everyday workflow.

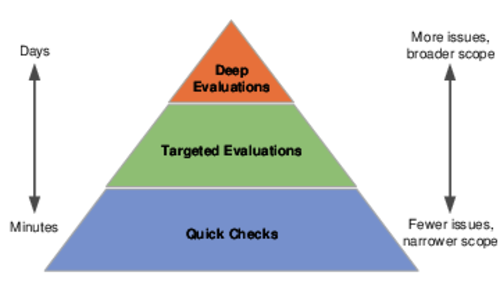

Balance Cost and Value with the Evaluation Pyramid

The test pyramid is a concept introduced by Mike Cohn in Succeeding with Agile: Software Development Using Scrum [Coh09]. The premise is straightforward. Different kinds of tests find different kinds of defects, but some tests are easier to create and maintain than others. The test pyramid proposes that the majority of tests should be unit tests, which are fast to run and cheap to maintain. Since unit tests can’t catch everything, we should also create a small number of slow, brittle full-stack integration tests.

The premise behind the evaluation pyramid is similar to that of the test pyramid. The vast majority of architecture evaluations should be fast, cheap quick checks. Quick checks won’t find every kind of design issue, so we’ll also want to perform a few thorough but costly deep evaluations. To provide for some consistency between quick checks and deep evaluations, we can perform targeted evaluations.

On a typical system, we might perform only one or two deep evaluations, dozens of targeted evaluations, and hundreds of quick checks. Deep evaluations will always be a major milestone in the system’s life. Targeted evaluations might happen as part of a regular cadence, perhaps as often as every 2 to 4 weeks. Quick checks occur daily—sometimes multiple times each day—and become a seamless part of the development workflow.
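As a rough sanity check on those numbers, the cadence can be turned into simple arithmetic. The sketch below assumes a hypothetical 52-week project with five working days per week; the function name and its parameters are illustrative only and are not part of any evaluation method.

```python
def estimate_evaluation_counts(project_weeks, workdays_per_week=5):
    """Rough evaluation counts per pyramid tier for a project of the given length.

    The project length and working days per week are assumptions chosen
    for illustration; only the cadences come from the text.
    """
    # Targeted evaluations: one every 2 to 4 weeks.
    targeted_low = project_weeks // 4
    targeted_high = project_weeks // 2
    # Quick checks: at least one per working day.
    quick_checks_min = project_weeks * workdays_per_week
    return {
        "targeted": (targeted_low, targeted_high),
        "quick_checks_min": quick_checks_min,
    }


counts = estimate_evaluation_counts(project_weeks=52)
print(counts)
```

Under these assumptions, a year-long project sees between 13 and 26 targeted evaluations and at least 260 quick checks, which lines up with "dozens" and "hundreds."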

Here are some examples of each type of evaluation from the pyramid:

| Evaluation Type | How many? | Description | Examples |
|---|---|---|---|
| Deep Evaluation | 1–3 | Considers the whole system and interplay of several ASRs | Architecture Trade-off Analysis Method |
| Targeted Evaluation | dozens | Considers a single decision, component, or ASR | Risk storming, Question–Comment–Concern, Architecture Briefing |
| Quick Check | countless | Considers discrete design decisions as they are made, often used to reinforce understanding or evaluate details | Code review, storytelling, whiteboard jam, sanity check |

Evaluating the architecture continuously does not by itself mean we are doing a good job of it. We also need to look for a variety of issues during our evaluations to ensure we have good coverage.

Look for Different Kinds of Issues

“Eat the rainbow” is something I tell my son to make sure he eats a variety of fresh fruits and vegetables. Fresh foods are different colors because they have an abundance of different vitamins. Eating different colors ensures he gets all the vitamins and minerals a healthy body needs. Variety is as important for healthy architectures as it is for healthy bodies.

Most of the methods discussed in this chapter and in Chapter 17, Activities to Evaluate Design Options, can be adapted to draw out different kinds of issues. An issue, in the general sense, is any topic that requires additional investigation or thought. To ensure we have a healthy architecture, look for issues from across the architectural issues rainbow, as shown in the figure.

Every issue from the rainbow can tell us something different about our architecture.

- Risks (Red)

  A risk is something bad that might happen, but it hasn’t happened yet. As you learned in Let Risk Be Your Guide, risks have two components: a condition and a consequence. The condition is something currently true about the architecture. The consequence is something bad that might happen as a direct result of the condition.

  Risks can be mitigated or accepted.

- Unknowns (Orange)

  Sometimes we simply don’t have enough information to say whether or not the architecture satisfies ASRs. Identify unknowns by looking for open questions about how things work and how specific ASRs will be addressed. Architecture evaluations can turn unknown unknowns into known unknowns. You can deal with the latter. The former can kill your architecture.

  Unknowns require further investigation.

- Problems (Yellow)

  Problems, unlike risks, are bad things that have already come to pass. Problems arise when you make a design decision and it just doesn’t work out the way you hoped. Problems can also arise because the world changed around you so that a decision that was a good idea at the time no longer makes sense. If the architecture already exists in code, we think of problems as technical debt.

  Problems can be fixed or accepted.

- Gaps in Understanding (Green)

  When you zig and your team zags, there is a gap in understanding. Gaps in understanding arise when what stakeholders think they know about the architecture doesn’t match the current design. In rapidly evolving architectures, gaps can arise quickly and without warning.

  Gaps in understanding can be addressed through education.

- Architectural Erosion (Blue)

  The implemented system almost never turns out the way we imagined it. This gap between the designed architecture and the as-built architecture is called architectural erosion, also known as architectural drift or architectural rot. Without vigilance, the architecture drifts from the planned design a little every day until the implemented system bears little resemblance to the plan.

  Architectural erosion can be addressed by paying attention to the little details, in code or documentation, on a regular basis.

- Contextual Drift (Violet)

  Sometimes the world changes without us noticing. Software takes time to build. Over the months, facts that were true can become untrue. New facts come to light. Circumstances change. Contextual drift happens any time the business drivers or context driving our decisions changes after we’ve made a design decision.

  Contextual drift can be addressed by occasionally revisiting business goals, architecturally significant requirements, and other things we think we know about our stakeholders and their needs.
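Of the issues in the rainbow, architectural erosion is one that can sometimes be caught by a small automated quick check. The following is a minimal sketch, assuming the planned design can be written down as a map of allowed dependencies between components. The component names, the `PLANNED` and `AS_BUILT` maps, and the `find_erosion` helper are all hypothetical; in practice the as-built map might be collected by hand or by a script that scans imports.

```python
# Planned design: which components each component is allowed to depend on.
# All component names are hypothetical.
PLANNED = {
    "web": {"services"},
    "services": {"repositories"},
    "repositories": set(),
}

# As-built dependencies observed in the implemented system (also hypothetical).
AS_BUILT = {
    "web": {"services", "repositories"},  # web now bypasses the service layer
    "services": {"repositories"},
    "repositories": set(),
}


def find_erosion(planned, as_built):
    """Return (component, dependency) pairs found in the code but not in the plan."""
    violations = []
    for component, dependencies in sorted(as_built.items()):
        allowed = planned.get(component, set())
        for dependency in sorted(dependencies - allowed):
            violations.append((component, dependency))
    return violations


print(find_erosion(PLANNED, AS_BUILT))  # [('web', 'repositories')]
```

A check like this only notices dependencies the plan did not anticipate. Whether each one is erosion to repair or a sign that the plan itself should change is still a judgment for the team.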

A common mistake new software architects make is to look for the same kinds of issues all the time. A simple way to take your architecture evaluations to the next level is to ask questions that haven’t been asked before. Look for a variety of issues and you won’t be surprised by what you don’t know.
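One lightweight way to notice that you keep finding the same kinds of issues is to tag each finding with its color and see which colors never show up. The sketch below is one possible shape for such a log, not a prescribed format. The `IssueKind`, `Issue`, and `missing_kinds` names are assumptions made for illustration, and the sample findings are invented.

```python
from dataclasses import dataclass
from enum import Enum


class IssueKind(Enum):
    RISK = "red"
    UNKNOWN = "orange"
    PROBLEM = "yellow"
    GAP_IN_UNDERSTANDING = "green"
    ARCHITECTURAL_EROSION = "blue"
    CONTEXTUAL_DRIFT = "violet"


# Typical responses, paraphrased from the descriptions of each issue kind.
RESPONSES = {
    IssueKind.RISK: "mitigate or accept",
    IssueKind.UNKNOWN: "investigate further",
    IssueKind.PROBLEM: "fix or accept",
    IssueKind.GAP_IN_UNDERSTANDING: "educate",
    IssueKind.ARCHITECTURAL_EROSION: "review details in code and documentation",
    IssueKind.CONTEXTUAL_DRIFT: "revisit business goals and ASRs",
}


@dataclass(frozen=True)
class Issue:
    kind: IssueKind
    summary: str


def missing_kinds(issues):
    """Return the issue kinds, in rainbow order, that no recorded issue covers."""
    seen = {issue.kind for issue in issues}
    return [kind for kind in IssueKind if kind not in seen]


# Invented findings from a few evaluations.
log = [
    Issue(IssueKind.RISK, "Single database instance; an outage would stop all orders"),
    Issue(IssueKind.RISK, "No load tests yet; peak traffic might exceed capacity"),
    Issue(IssueKind.PROBLEM, "Cache invalidation rule produces stale prices"),
]

for kind in missing_kinds(log):
    print(f"No {kind.name} issues recorded yet; typical response: {RESPONSES[kind]}")
```

An empty color does not prove those issues are absent. It is a prompt to ask questions in the next evaluation that target that part of the rainbow.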

Start with Low Ceremony Evaluation Methods

The amount of ceremony in a method refers to how much formality is required to apply the method. High ceremony methods are filled with rituals and can be costly to use, but they are easy to repeat and produce consistent results. Low ceremony methods, on the other hand, are informal and have few rituals. As such, they are faster and cheaper to apply but narrower in scope and more likely to produce inconsistent results.

If your team is new to architecture evaluations, starting with a high ceremony method such as the ATAM can be off-putting and might scare teammates away from evaluations for life. Instead of diving into high ceremony methods first, ease your team into evaluations by starting with low ceremony methods. You might even choose not to tell your team they are doing an architecture evaluation.

Here’s an example of how this could play out. After a whiteboard jam but before the group disbands, kick off an evaluation. Grab the whiteboard marker and say, “This looks like a good start. Are there any issues you see with our ability to promote <insert quality attribute here>?” Write down what they say on the whiteboard next to the diagrams. Help the team summarize findings and decide on next steps. Boom. Impromptu architecture evaluation completed in 10 minutes or less.

Low ceremony evaluation methods reinforce architectural thinking among the team and build a culture that challenges design decisions. As your team becomes comfortable with low ceremony approaches, strategically introduce targeted evaluations and, eventually, a deep evaluation.