A short history of audio technology

Early years: from phonograph to stereo recording

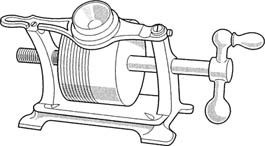

The evolution of recording and reproduction of audio signals started in 1877, with the invention of the phonograph by T. A. Edison. Since then, research and efforts to improve techniques have been determined by the ultimate aim of recording and reproducing an audio signal faithfully, i.e., without introducing distortion or noise of any form.

With the introduction of the gramophone, a disc phonograph, in 1893 by E. Berliner, the original form of our present record was born. This model could produce a much better sound, and its discs could also be duplicated easily.

Electric recording started around 1925, but sound reproduction was still mainly acoustic: in playback, the sound was generated by a membrane and a horn, mechanically coupled to the needle in the groove. When recording, the sound was picked up by a horn and membrane, transformed into a vibration and coupled directly to a needle which cut the groove into the disc.

Figure I shows Edison’s original phonograph, patented in 1877, which consisted of a piece of tin foil wrapped around a rotating cylinder.

The vibrations of his voice, spoken into a recording horn (as shown), caused the stylus to cut grooves into the tin foil. The first sound recording made was Edison reciting ‘Mary had a little lamb’ (Edison National Historic Site).

Figure I Edison’s phonograph.

Figure II shows the Berliner gramophone, manufactured by US Gramophone Company, Washington, DC. It was hand-powered and required an operator to crank the handle up to a speed of 70 revolutions per minute (rpm) to get a satisfactory playback (Smithsonian Institution).

Figure II Berliner gramophone

Further developments, such as the electric crystal pick-up and, in the 1930s, broadcast AM radio stations, made the SP (standard playing 78 rpm record) popular. Popularity increased with the development, in 1948 by CBS, of the 33⅓ rpm long-playing record (LP), with about 25 minutes of playing time on each side. Shortly after this, the EP (extended play) 45 rpm record was introduced by RCA with an improvement in record sound quality. At the same time, the lightweight pick-up cartridge, with only a few grams of stylus pressure, was developed by companies like General Electric and Pickering.

The true start of progress towards the ultimate aim of faithful recording and reproduction of audio signals was the introduction of stereo records in 1956. This began a race between manufacturers to produce a stereo reproduction tape recorder, originally for industrial master use. However, the race led to a simplification of techniques which, in turn, led to the development of equipment for domestic use.

Broadcast radio began its move from AM to FM, with consequent improvement of sound quality, and in the early 1960s stereo FM broadcasting became a reality. In the same period, the compact cassette recorder, which would eventually conquer the world, was developed by Philips.

Developments in analog reproduction techniques

The three basic media available in the early 1960s – tape, record and FM broadcast – were all analog media. Developments since then include the following.

Developments in turntables

There has been remarkable progress since the stereo record appeared. Cartridges that operate with a stylus pressure of as little as 1 gram were developed, as were tone-arms that could trace the sound groove perfectly at this 1-gram pressure. The hysteresis synchronous motor and DC servo motor were developed for quieter, more regular rotation and elimination of rumble. High-quality heavyweight turntables, various turntable platters and insulators were developed to prevent unwanted vibrations from reaching the stylus. With the introduction of electronic technology, full automation was achieved. The direct drive system with the electronically controlled servo motor, the BSL motor (brushless and slotless linear motor) and the quartz-locked DC servo motor were finally adopted, together with the linear tracking arm and electronically controlled tone-arms (biotracer).

Enormous progress has thus been achieved since the beginning of the gramophone. In the acoustic recording period, disc capacity was 2 minutes on each side at 78 rpm, and the frequency range was 200 Hz–3 kHz, with a dynamic range of 18 dB. At its latest stage of development, the LP record has a frequency range of 30 Hz–15 kHz, with a dynamic range of 65 dB in stereo.

Figure III PS-X75 analog record player.

Developments in tape recorders

In the 1960s and 1970s, the open reel tape recorder was the instrument used both for record production and for broadcast, so efforts were constantly made to improve the performance and quality of the signal. Particular attention was paid to the recording and reproduction heads, the recording tape and the tape path drive mechanism, ultimately achieving a wow and flutter of only 0.02% wrms at 38 cm s⁻¹, and of 0.04% wrms at 19 cm s⁻¹. Also, the introduction of compression/expansion systems such as Dolby and dbx improved the available signal-to-noise ratios.

Professional open reel tape recorders were, however, too bulky and too expensive for general consumer use. After its invention in 1963, the compact cassette recorder made it possible for millions of people to enjoy recording and playing back music with reasonable tone quality and easy operation. The impact of the compact cassette was enormous, and tape recorders for recording and playing back these cassettes became quite indispensable for music lovers, and for those who use cassette recorders for a myriad of purposes such as taking notes for study, recording speeches, dictation, ‘talking letters’ and hundreds of other applications.

Inevitably, the same improvements used in open reel tape recorders eventually found their way into compact cassette recorders.

Figure IV TC-766-2 analog domestic reel-to-reel tape recorder

Limitations of analog audio recording

Despite the spectacular evolution of techniques and the improvements in equipment, by the end of the 1970s the industry had almost reached the level above which few further improvements could be made without dramatically increasing the price of the equipment. This was because quality, dynamic range and distortion (in its broadest sense) are all determined by the characteristics of the medium used (record, tape, broadcast) and by the processing equipment. Analog reproduction techniques had just about reached the limits of their characteristics.

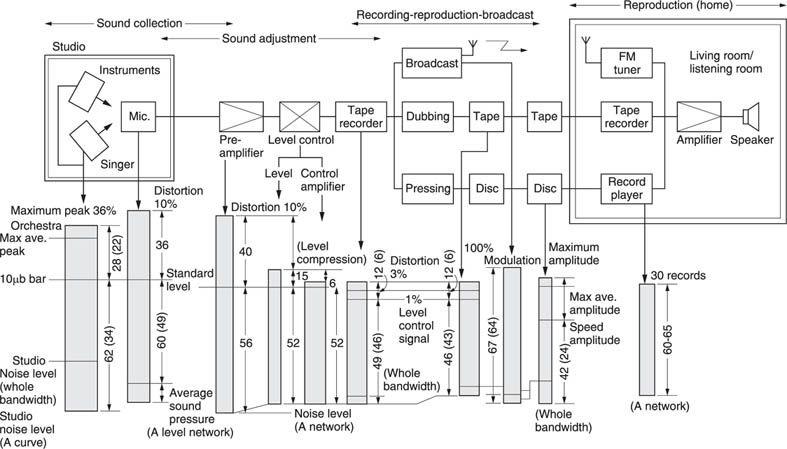

Figure V Typical analog audio systems, showing dynamic range.

Figure V represents a standard analog audio chain, from recording to reproduction, showing dynamic ranges in the three media: tape, record, broadcast.

The lower limit of dynamic range is determined by system noise and especially the lower frequency component of the noise. Distortion by system non-linearity generally sets the upper limit of dynamic range.

The strength and extent of a pick-up signal from a microphone are determined by the combination of the microphone sensitivity and the quality of the microphone preamplifier, but it is possible to maintain a dynamic range in excess of 90 dB by setting the levels carefully. However, the major problems in microphone sound pick-up are the various disturbances inherent in the recording studio, which narrow the dynamic range: there is a general minimum noise level in the studio, created by, say, artists or technical staff moving around, or by air currents and breath, as well as all types of electrically induced distortion.

Up to the pre-mixing and level amplifiers no big problems are encountered. However, depending on the equipment used for level adjustment, the low and high limits of dynamic range are affected by the use of equalization. The type and extent of equalization depend on the medium. In any case, control amplification and level compression are necessary, and this affects the sound quality and the audio chain. Furthermore, if you consider the fact that for each of the three media (tape, disc, broadcast) master tape and mother tape are used, you can easily understand that the narrow dynamic range available from conventional tape recorders becomes a ‘bottleneck’ which affects the whole process.

To summarize, in spite of all the spectacular improvements in analog technology, it is clear that the original dynamic range is still seriously affected in the analog reproduction chain.

Similar limits to other factors affecting the system – frequency response, signal-to-noise ratio, distortion, etc. – exist simply due to the analog processes involved. These reasons prompted manufacturers to turn to digital techniques for audio reproduction.

First development of PCM recording systems

The first public demonstration of pulse code modulated (PCM) digital audio was in May 1967, by NHK (Japan Broadcasting Corporation) and the record medium used was a 1-inch, two-head, helical scan VTR. The impression gained by most people who heard it was that the fidelity of the sound produced by the digital equipment could not be matched by any conventional tape recorder. This was mainly because the limits introduced by the conventional tape recorder simply no longer occurred.

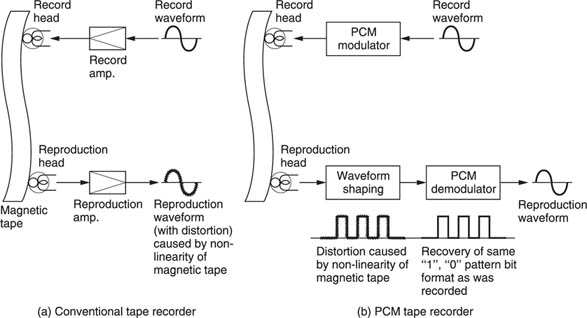

As shown in Figure VIa, conventional analog tape recorders cause such a deterioration of the original signal for two main reasons. Firstly, the magnetic material on the tape contains distortion components before anything is actually recorded. Secondly, the medium itself is non-linear, i.e., it is not capable of recording and reproducing a signal with total accuracy. Distortion is, therefore, built into the very heart of every analog tape recorder. In PCM recording (Figure VIb), however, the original bit value pattern corresponding to the audio signal, and thus the audio signal itself, can be fully recovered, even if the recorded signal is distorted by tape non-linearities and other causes.
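The recovery argument can be illustrated with a minimal sketch. It assumes a hypothetical channel model: the `distort` function, with its tanh saturation curve and bounded random noise, is an illustration only and not a measured tape characteristic. Under that assumption, a simple threshold decision still recovers every bit, because the distortion never pushes a recorded level across the threshold.

```python
import math
import random

def distort(level, rng):
    """Hypothetical tape channel: soft saturation plus bounded noise."""
    saturated = math.tanh(1.5 * level)           # non-linear transfer curve
    return saturated + rng.uniform(-0.3, 0.3)    # additive noise, at most 0.3

def record_and_recover(bits, seed=0):
    """Record bits as two signal levels, distort them, then decide by threshold."""
    rng = random.Random(seed)
    # Map each bit to a level: 0 -> -1.0, 1 -> +1.0, then pass it through the channel.
    played_back = [distort(1.0 if b else -1.0, rng) for b in bits]
    # The decoder only asks on which side of zero each level falls.
    return [1 if level > 0.0 else 0 for level in played_back]

bits = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]   # one 16-bit word
recovered = record_and_recover(bits)
print(recovered == bits)
```

An analog recording passed through the same channel would carry the saturation and the noise straight into the reproduced waveform; the digital recording only fails if the distortion is large enough to flip a decision.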

After this demonstration at least, there were no grounds for doubting the high sound quality achievable by PCM techniques. The engineers and music lovers who were present at this first public PCM playback demonstration, however, had no idea when this equipment would be commercially available, and many of these people had only the vaguest concept of the effect which PCM recording systems would have on the audio industry. In fact, it would be no exaggeration to say that, owing to the difficulty of editing, the weight, size, price and difficulty in operation, not to mention the necessity of using the highest quality ancillary equipment (another source of high costs), it was at that time very difficult to imagine that any meaningful progress could be made.

Figure VI Conventional analog (a) and PCM digital (b) tape recording.

Nevertheless, the highest-quality record production at that time was by the direct cutting method, in which the lacquer master is cut without using master and mother tapes in the production process: the live source signal is fed directly to the disc cutting head after being mixed. Limitations due to analog tape recorders were thus side-stepped. Although direct cutting sounds quite simple in principle, it is actually extremely difficult in practice. First of all, all the required musical and technical personnel (the performers and the mixing and cutting engineers) have to be assembled in the same place at the same time. Then the whole piece to be recorded must be performed right through from beginning to end with no mistakes, because the live source is fed directly to the cutting head.

If PCM equipment could be perfected, high-quality records could be produced while solving the problems of time and value posed by direct cutting. PCM recording meant that the process after the making of the master tape could be completed at leisure.

In 1969, Nippon Columbia developed a prototype PCM recorder, loosely based on the PCM equipment originally created by NHK: a four-head VTR with 2-inch tape was used as recording medium, with a sampling rate of 47.25 kHz using a 13-bit linear analog-to-digital converter. This machine was the starting point for the PCM recording systems which are at present marketed by Sony, after much development and adaptation.

Development of commercial PCM processors

In a PCM recorder, there are three main parts: an encoder which converts the audio source signal into a digital PCM signal; a decoder to convert the PCM signal back into an audio signal; and, of course, a recording medium, using some kind of magnetic tape for recording and reproduction of the PCM encoded signal.

The time period occupied by 1 bit in the stream of bits composing a PCM encoded signal is determined by the sampling frequency and the number of quantization bits. If, say, a sampling frequency of 50 kHz is chosen (sampling period 20 μs) and a 16-bit quantization system is used, then the time period occupied by 1 bit when making a two-channel recording will be about 0.6 μs. In order to ensure the success of the recording, detection bits for the error-correction system will also have to be included. As a result, it is necessary to employ a record/reproduction system which has a bandwidth of between about 1 and 2 MHz.
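The arithmetic behind these figures can be sketched as follows. The function name is illustrative, and the final step assumes idealized binary baseband signalling, for which the minimum (Nyquist) bandwidth is half the bit rate; error-correction bits and practical channel coding push the real requirement higher, towards the 1 to 2 MHz quoted above.

```python
def pcm_bit_rate(sampling_hz, bits_per_sample, channels):
    """Raw audio bit rate in bits per second, before any error-correction bits."""
    return sampling_hz * bits_per_sample * channels

bit_rate = pcm_bit_rate(50_000, 16, 2)     # 1,600,000 bit/s
bit_period_us = 1e6 / bit_rate             # time occupied by one bit, in microseconds
min_bandwidth_hz = bit_rate / 2            # assumption: ideal binary baseband channel

print(f"bit rate      : {bit_rate / 1e6:.1f} Mbit/s")
print(f"bit period    : {bit_period_us:.3f} us")
print(f"min bandwidth : {min_bandwidth_hz / 1e6:.1f} MHz (audio data only)")
```

The sampling period of 20 μs is shared by 2 × 16 = 32 bits, which gives the bit period of roughly 0.6 μs stated in the text.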

Bearing in mind this bandwidth requirement, the most suitable practical recorder is a video tape recorder (VTR). The VTR was specifically designed for recording TV pictures, in the form of video signals. To successfully record a video signal, a bandwidth of several megahertz is necessary, and it is a happy coincidence that this makes the VTR eminently suitable for recording a PCM encoded audio signal.

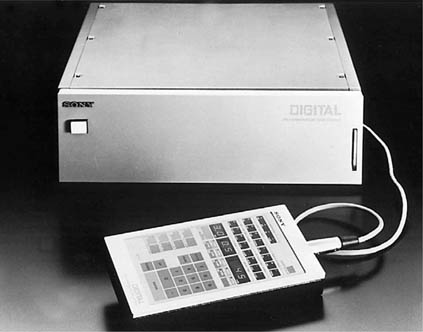

The suitability of the VTR as an existing recording medium meant that the first PCM tape recorders were developed as two-unit systems comprising a VTR and a digital audio processor. The latter was connected directly to an analog hi-fi system for actual reproduction. Such a device, the PCM-1, was first marketed by Sony in 1977.

In the following year, the PCM-1600 digital audio processor for professional applications was marketed. In April 1978, the use of 44.056 kHz as a sampling frequency (the one used in the above-mentioned models) was accepted by the Audio Engineering Society (AES).

Figure VII PCM-1 digital audio processor.

At the 1978 Consumer Electronics Show (CES) held in the USA, an unusual display was mounted. The most famous names among the American speaker manufacturers demonstrated their latest products using a PCM-1 digital audio processor and a consumer VTR as the sound source. Compared with the situation only a few years earlier, when the sound quality available from tape recorders was regarded as being of a relatively low standard, the testing of speakers using a PCM tape recorder marked a total reversal of thought. The audio industry had made a major step towards true fidelity to the original sound source, through the total redevelopment of the recording medium which used to cause most degradation of the original signal.

At the same time, a committee for the standardization of matters relating to PCM audio processors using consumer VTRs was established, in Japan, by 12 major electronics companies. In May 1978, they reached agreement on the Electronic Industries Association of Japan (EIAJ) standard. This standard basically specified a 14-bit linear data format for consumer digital audio applications.

The first commercial processor for domestic use according to this EIAJ standard, which gained great popularity, was the now famous PCM-F1 launched in 1982. This unit could be switched from 14-bit into 16-bit linear coding/decoding format so, in spite of being basically a product designed for the demanding hi-fi enthusiast, its qualities were so outstanding that it was immediately used on a large scale in the professional audio recording business as well, thus quickening the acceptance of digital audio in the recording studios.
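To give a feel for what the word lengths mentioned in this section imply, the sketch below applies the standard textbook approximation for an ideal linear quantizer driven by a full-scale sine wave: about 6.02 dB per bit plus 1.76 dB. This is a theoretical ceiling, not a measured specification of any of the processors named here, and the function name is illustrative.

```python
import math

def ideal_dynamic_range_db(bits):
    """Theoretical signal-to-quantization-noise ratio of an ideal linear quantizer."""
    # 20*log10(2**bits) is about 6.02 dB per bit; 10*log10(1.5) is about 1.76 dB.
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

for bits in (13, 14, 16):   # word lengths mentioned in the text
    print(f"{bits}-bit linear PCM: about {ideal_dynamic_range_db(bits):.0f} dB")

dr14 = ideal_dynamic_range_db(14)
dr16 = ideal_dynamic_range_db(16)
```

Each additional bit adds roughly 6 dB, so switching from 14-bit to 16-bit coding raises the theoretical limit by about 12 dB. Even the 13-bit figure is well above the 65 dB quoted earlier for the LP record.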

Figure VIII PCM-F1 digital audio processor.

Figure IX PCM-1610 digital audio processor

In the professional field the successor to the PCM-1600, the PCM-1610, used a more elaborate recording format than EIAJ and consequently necessitated professional VTRs based on the U-Matic standard. It quickly became a de facto standard for two-channel digital audio production and compact disc mastering.

Stationary-head digital tape recorders

The most important piece of equipment in the recording studio is the multi-channel tape recorder: different performers are recorded on different channels – often at different times – so that the studio engineer can create the required ‘mix’ of sound before editing and dubbing. The smallest number of channels used is generally four, the largest 32.

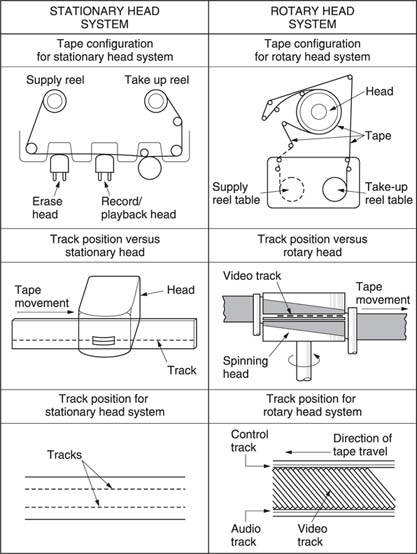

A digital tape recorder would be ideal for studio use because dubbing (re-recording of the same piece) can be carried out more or less indefinitely. On an analog tape recorder (Figure XI), however, distortion increases with each dub. Also, a digital tape recorder is immune to cross-talk between channels, which can cause problems on an analog tape recorder.
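The effect of repeated dubbing can be sketched with a deliberately simplified model. It assumes that every analog generation adds the same amount of uncorrelated noise, so the noise power grows in proportion to the number of generations, and that a digital copy reproduces the bit pattern exactly as long as errors are corrected. The 65 dB single-pass figure is a hypothetical starting value, not a specification of any particular recorder.

```python
import math

def analog_snr_after_dubs(single_pass_snr_db, generations):
    """SNR after n generations when each pass adds equal, uncorrelated noise."""
    return single_pass_snr_db - 10 * math.log10(generations)

def digital_snr_after_dubs(snr_db, generations):
    """Idealized digital copy: the bit pattern, and hence the SNR, is unchanged."""
    return snr_db

for n in (1, 2, 5, 10):
    analog = analog_snr_after_dubs(65.0, n)      # hypothetical 65 dB per pass
    digital = digital_snr_after_dubs(65.0, n)
    print(f"generation {n:2d}: analog {analog:5.1f} dB, digital {digital:5.1f} dB")
```

In this model ten analog generations cost 10 dB of signal-to-noise ratio, while the digital figure does not move. Real analog dubbing also accumulates distortion, which this sketch does not attempt to model.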

It would, however, be very difficult to satisfy studio standard requirements using a digital audio processor combined with a VTR. For a studio, a fixed head digital tape recorder would be the answer. Nevertheless, the construction of a stationary-head digital tape recorder poses a number of special problems. The most important of these concerns the type of magnetic tape and the heads used.

The head-to-tape speed of a helical scan VTR (Figure XI) used with a digital audio processor is very high, around 10 m s⁻¹.

Figure X PCM-3324 digital audio stationary-head (DASH) recorder

However, on a stationary-head recorder, the maximum speed possible is around 50 cm s⁻¹, meaning that information has to be packed much more closely on the tape when using a stationary-head recorder; in other words, it has to be capable of much higher recording densities. As a result of this, a great deal of research was carried out in the 1970s into new types of modulation recording systems and special heads capable of handling high-density recording.
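The density problem can be put into rough numbers. The sketch below assumes, purely for illustration, that the whole two-channel bit stream of the earlier example (50 kHz, 16 bits, no error-correction overhead) is written on a single track; a practical stationary-head machine spreads the data over several tracks, so the absolute figures are not those of any real format.

```python
def linear_density_bits_per_mm(bit_rate_bps, head_to_tape_speed_m_s):
    """Bits laid down along each millimetre of track at a given head-to-tape speed."""
    speed_mm_s = head_to_tape_speed_m_s * 1000.0
    return bit_rate_bps / speed_mm_s

BIT_RATE_BPS = 50_000 * 16 * 2      # two-channel example from the text, audio data only

rotary = linear_density_bits_per_mm(BIT_RATE_BPS, 10.0)     # helical scan, about 10 m/s
stationary = linear_density_bits_per_mm(BIT_RATE_BPS, 0.5)  # stationary head, about 50 cm/s
ratio = stationary / rotary

print(f"rotary head    : {rotary:.0f} bits/mm")
print(f"stationary head: {stationary:.0f} bits/mm")
print(f"density ratio  : {ratio:.0f}x")
```

Whatever the bit rate, the ratio of the two speeds means the stationary-head recorder must achieve about 20 times the linear density per track, or divide the data over many parallel tracks.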

Another problem arises when using a digital tape recorder to edit audio signals – it is virtually impossible to edit without introducing ‘artificial’ errors in the final result. Extremely powerful error-correcting codes, capable of eliminating these errors, were invented.

Figure XI Analog audio and video tape recording.

The digital multi-channel recorder was finally developed after all the problems outlined above had been resolved. A standard format for stationary-head recorders, called DASH (digital audio stationary head), was agreed upon by major manufacturers like Studer, Sony and Matsushita. An example of such a machine is the 24-channel Sony PCM-3324.

Development of the compact disc

In the 1970s, the age of the video disc began, with three different systems being pursued: the optical system, where the video signal is laid down as a series of fine grooves on a sort of record, and is read off by a laser beam; the capacitance system, which uses changes in electrostatic capacitance to plot the video signal; and the electrical system, which uses a transducer. Engineers then began to think that because the bandwidth needed to record a video signal on a video disc was more than the one needed to record a digitized sound signal, similar systems could be used for PCM/VTR recorded material. Thus, the digital audio disc (DAD) was developed, using the same technologies as the optical video discs: in September 1977, Mitsubishi, Sony and Hitachi demonstrated their DAD systems at the Audio Fair. Because everyone knew that the new disc systems would eventually become widely used by the consumer, it was absolutely vital to reach some kind of agreement on standardization.

Furthermore, Philips from the Netherlands, who had been involved in the development of video disc technology since the early 1970s, had by 1978 also developed a DAD, with a diameter of only 11.5 cm, whereas most Japanese manufacturers were thinking of a 30-cm DAD, in analogy with the analog LP. Such a large record, however, would hold up to 15 hours of music, so it would be rather impractical and expensive.

During a visit of Philips executives to Tokyo, Sony was confronted with the Philips idea, and they soon joined forces to develop what was to become the now famous compact disc, which was finally adopted as a worldwide standard. The eventual disc size was decided upon as 12 cm, in order to give it a capacity of 74 minutes: the approximate duration of Beethoven’s Ninth Symphony.

The compact disc was finally launched on the consumer market in October 1982, and in a few years, it gained great popularity with the general public, becoming an important part of the audio business.

Peripheral equipment for digital audio production

It is possible to make a recording with a sound quality extremely close to the original source when using a PCM tape recorder, as digital tape recorders do not ‘colour’ the recording, a failing inherent in analog tape recorders. More important, a digital tape recorder offers scope for much greater freedom and flexibility during the editing process.

There follows a brief explanation of some peripheral equipment used in a studio or a broadcasting station as part of a digital system for the production of software.

Figure XII Digital editing console.

Figure XIII Digital reverberator.

Outline of a digital audio production system

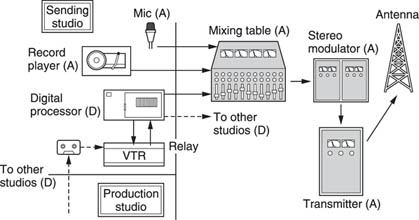

Several units from the conventional analog audio record production system can already be replaced by digital equipment in order to improve the quality of the end product, as shown in Figure XIV.

Audio signals from the microphone, after mixing, are recorded on a multi-channel digital audio recorder. The output from the digital recorder is then mixed into a stereo signal through the analog mixer, with or without the use of a digital reverberator.

The analog output signal from the mixer is then converted into a PCM signal by a digital audio processor and recorded on a VTR.

Editing of the recording is performed on a digital audio editor by means of digital audio processors and VTRs. The final result is stored on a VTR tape or cassette. The cutting machine used is a digital version.

Figure XIV Digital audio editing and record production.

When the mixer is replaced by a digital version and – in the distant future – a digital microphone is used, the whole production system will be digitized.

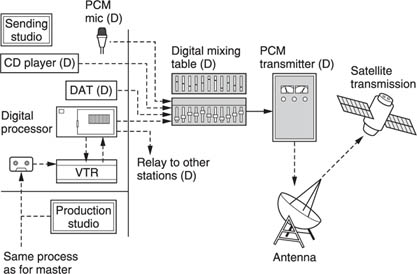

Digital audio broadcasting

Since 1978, FM broadcasting stations have expressed a great deal of interest in digital tape recorders, realizing the benefits they could bring almost as soon as they had been developed. Figure XV shows an FM broadcast set-up using digital tape recorders to maintain high-quality broadcasts.

In 1987, the European Eureka-147 project was the starting point for the development of Digital Audio Broadcasting (DAB). This fully digital broadcasting system has since been completely developed; it is implemented in a number of countries around the world and is gradually becoming a successor to the ‘old’ FM broadcasting system.

Basic specifications for DAB are a transmission bandwidth of 1.54 MHz; the audio is encoded according to ISO/MPEG layer II with possible sampling frequencies of 48 and 24 kHz. The channel coding system is Coded Orthogonal Frequency Division Multiplex (COFDM), and the frequencies used are such that the system can operate on both a terrestrial and a satellite basis.

Figure XV Mixed analog and digital audio broadcasting system.

A number of frequency blocks have been agreed: 12 blocks within the 87–108 MHz range, 38 blocks within VHF band III (174–240 MHz) and 23 blocks within the L-band (1.452–1.492 GHz). These are the terrestrial frequencies. For satellite use, a number of frequencies in the 1.5, 2.3 and 2.6 GHz bands are possible, but this is still in the experimental stage.

Due to the use of the latest digital encoding, error protection and transmission techniques, this broadcasting system can drastically change our radio listening habits. First of all, due to the encoding techniques (MPEG and COFDM) used, the quality of sound can be up to CD standards, and reception can be virtually free of interruptions or interference. The contents and use are also different; DAB provides a number of so-called ‘ensembles’, each ensemble (comparable to an FM station frequency) containing a maximum of 63 channels. In other words, tune in to one ensemble and there is a choice of up to 63 channels. The quality of each channel is adaptable by the broadcasters to suit the contents and needs. A music programme, for example, can have the highest quality; a live transmission of a football match, on the other hand, can be of lower quality, as full quality (needing full bandwidth) is not needed.

Figure XVI Digital audio broadcasting system.

Another important aspect of the DAB system is the high data capacity. Apart from the contents (music), a lot of other information can be broadcast at the same time: control information, weather info, traffic info, multimedia data services, etc. are all perfectly possible and can still be developed further.

The experience gained from DAB will surely be useful for the next step: Digital Video Broadcasting (DVB).

Digital recording media: R-DAT and S-DAT

Further investigation to develop small, dedicated, digital audio recorders which would not necessitate a video recorder has led to the parallel development of both rotary-head and stationary-head approaches, resulting in the so-called R-DAT (rotary-head digital audio tape recorder) and S-DAT (stationary-head digital audio tape recorder) formats.

Like its professional counterpart, the S-DAT system relies on multiple-track thin-film heads to achieve a very high packing density, whereas the R-DAT system is actually a miniaturized rotary-head recording system, similar to video recording systems, optimized for audio applications.

The R-DAT system, launched first on the market, uses a small cassette of only 73 mm × 54 mm × 10.5 mm – about one-half of the well-known analog compact cassette. Tape width is 3.81 mm – about the same as the compact cassette. Other basic characteristics of R-DAT are:

A description of the R-DAT format and system is given in Chapter 15.

The basic specifications of the S-DAT system have been initially determined: in this case, the cassette will be reversible (like the analog compact cassette), using the same 3.81 mm tape.

Two or four audio channels will be recorded on 20 audio tracks. The width of the tracks will be only 65 μm (they are all recorded on a total width of only 1.8 mm!) and, logically, various technological difficulties hold up its practical realization.

New digital audio recording media

R-DAT and S-DAT have never established themselves in the mass consumer market. Expensive equipment and lack of pre-recorded tapes have led consumers to stay with the analog compact cassette, although broadcasters and professional users favoured the R-DAT format because of its high sound quality. This situation has led to the development of several new consumer digital audio formats, designed to be direct replacements for the analog compact cassette. The requirements for the new formats are high. The recording media must be small and portable with a sound quality approaching the sound quality of a CD. The formats must receive strong support from the software industry for pre-recorded material. Above all, the equipment must be cheap.

DCC

As an answer to customers’ requests to have a cheap and easy to use recording system, Philips developed the Digital Compact Cassette (DCC) system. This system was launched on the market in the beginning of the 1990s.

As the name suggests, DCC has a lot in common with the analog compact cassette, which had been introduced almost 30 years earlier by the same company. The DCC basically has the same dimensions as the analog compact cassette, which enables downward compatibility: DCC machines were indeed able to play back analog compact cassettes.

The DCC system uses a stationary thin-film head with 18 parallel tracks, comparable with the now obsolete S-DAT format. Tape width and tape speed are equal to those of the analog compact cassette.

The proposed advantage (backward compatibility with analog compact cassettes) turned out to be one of the main reasons why DCC never gained a strong foothold and was stopped in the middle of the 1990s. By that time, people were used to the advantages of disc-based media: easy track jumping, quick track search, etc. and as DCC was still tape based, it was not able to exploit these possibilities.

MiniDisc

With the advantages and success of CD in mind, Sony developed an optical disc-based system for digital recording: MiniDisc or MD. This was launched around the same time as DCC, these two formats being direct competitors.

One of the main advantages appreciated by CD users is the quick access. Contrary to tape-based systems, a number of editing possibilities can be exploited by MiniDisc, increasing the user friendliness: merging and dividing tracks, changing track numbers, inserting disc and track names, etc.

Also, the read-out and recording with a non-contact optical system makes the MD an extremely durable and long-lasting sound medium. The 4-Mbit semiconductor memory enables the listener to enjoy uninterrupted sound, even when the set vibrates heavily. This ‘shockproof’ memory underlines the portable nature of MiniDisc.

The MiniDisc system is explained in detail in Chapter 17 of this book.

CD-recordable

The original disc-based specifications as formulated jointly by Philips and Sony also described recordable CD formats (the so-called ‘orange book’ specification).

Originally, this technology was mostly used for data storage applications, but from the middle of the 1990s it also emerged for audio applications. Two formats have been developed: CD-recordable (CD-R) and CD-rewritable (CD-RW).

CD-R draws its technology from the original CD-WO (write-once) specification. This is a one-time recording format in the sense that, once a track is recorded, there is no way to erase or overwrite it. It is, however, possible to record one track after the other independently. The recording is similar to the first writing of a pre-recorded Audio CD. The same format is used.

The actual recording is done on a supplementary ‘dye’ layer. When this layer is heated beyond a critical temperature (around 250°C) a bump appears. In this way, the CD-like pit/bump structure is recreated. This process is irreversible; once a bump has been created by laser heat, it cannot be changed further.

The use of a dye layer is a significant difference from the original CD format. Another difference with audio CD is the use of a pregroove (also called ‘wobble’) for tracking purposes. As this technique is also used in MiniDisc, it is explained in Chapter 17.

Since the recorded format and the dimensions of the CD-R are the same as those of a conventional CD, CD-Rs can be played back on most players.

CD-rewritable

The main difference between CD-R and CD-RW is the unique recording layer, which is composed of a silver–indium–antimony–tellurium alloy, allowing recording and erasing over 1000 times. Recording is done by heating the recording layer with the record laser to over 600°C, at which point the crystalline structure of the layer changes to a less reflective state. In this way, a ‘pit’ pattern is created. Erasing is done by heating the surface to 200°C, returning it to a more reflective state. This is the second main difference between CD-R and CD-RW; in the case of the latter, the process is reversible.
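The contrast between the two recording mechanisms can be captured in a toy state model. The class and method names below are hypothetical, and the model reduces the physics to the temperature thresholds quoted in the text (about 250°C for the CD-R dye, over 600°C to record and 200°C to erase on CD-RW); it is a sketch of the reversibility argument, not of a real drive's write strategy.

```python
from dataclasses import dataclass

DYE_CRITICAL_TEMP_C = 250   # CD-R: above this the dye layer is permanently changed
RECORD_TEMP_C = 600         # CD-RW: above this the layer becomes less reflective
ERASE_TEMP_C = 200          # CD-RW: this returns the layer to the reflective state

@dataclass
class CdRSpot:
    """One spot on a CD-R dye layer (hypothetical model)."""
    written: bool = False

    def heat(self, temp_c):
        if temp_c > DYE_CRITICAL_TEMP_C:
            self.written = True          # irreversible: never reset afterwards

@dataclass
class CdRwSpot:
    """One spot on a CD-RW phase-change layer (hypothetical model)."""
    reflective: bool = True

    def heat(self, temp_c):
        if temp_c > RECORD_TEMP_C:
            self.reflective = False      # record: less reflective 'pit'
        elif temp_c >= ERASE_TEMP_C:
            self.reflective = True       # erase: back to the reflective state
        # below the erase temperature the spot is left unchanged

rw = CdRwSpot()
rw.heat(650)
state_after_record = rw.reflective
rw.heat(200)
state_after_erase = rw.reflective

r = CdRSpot()
r.heat(300)
r.heat(200)                              # a later, cooler pass cannot undo the mark
print(state_after_record, state_after_erase, r.written)
```

The CD-RW spot can be cycled between its two states, whereas the CD-R spot keeps its mark whatever happens afterwards.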

One major drawback of this system is that the reflectivity does not conform to the original CD (red book) standard. The CD standard states that at least 70% of the laser light which hits the reflective area of a disc must be reflected; the CD-RW only reflects about 20%. The CD-RW format can therefore not guarantee playback in any CD player, especially not the older CD players. Manufacturers of CD players cannot be held responsible if their players cannot handle the CD-RW format, but seen through users’ eyes, this appeared as a contradictory situation. Obviously, this has caused some confusion on the market, but gradually most new CD players have a design which is adapted to this lower reflectivity.

High-quality sound reproduction: SACD and DVD-Audio

Even if the CD audio system provides a level of quality exceeding most people’s expectations, the search for better sound never stops. Audiophiles sometimes complained that CD sound was still no match for the ‘warmth’ of an analog recording; technically speaking, this is related to the use of A/D and D/A converters, and also to a rather sharp cut-off above 20 kHz. A human ear is not capable of hearing sounds above this frequency, but it is a known fact that these higher frequencies do influence the overall sound experience, as they will cause some harmonics within the audible area. Fairness, however, urges us to mention that part of what is considered the ‘warmth’ of an analog recording is in reality noise which is not related to the original sound; this noise is a result of the technology used and its limitations, as well as imperfections. The improved recording and filtering techniques eliminate most of this noise, so some people will always consider digital sound as being ‘too clean’.

Two new formats for optical laser discs were developed in the second part of the 1990s. These two new systems are able to reproduce frequencies up to 100 kHz with a dynamic range of 120 dB in the audible range. Due to these higher frequency and dynamic ranges, the claims against CD audio can be addressed, but other advantages are also seen: the positioning of recorded instruments and artists in the sound field is far better; a listener might close his eyes and be able to imagine the artists at their correct position in front of him, not only from left to right but also from front to back.

As the disc media used can also contain much more data, both SACD and DVD-Audio can be used for multi-channel reproduction; as an example it is perfectly possible that if a rock band consists of five musicians, each musician is recorded on a separate track, which creates possibilities for the user to make his own channel mix.

Super Audio CD (SACD) was developed as a joint venture between Sony and Philips; this should not be a surprise since these two companies jointly developed all previous CD-based audio systems, and since SACD is a logical successor (also technically) of these highly successful systems.

SACD is a completely new 1-bit format, operating with a sampling frequency of 2.8224 MHz. Besides its high-quality reproduction capabilities, the hybrid disc is also compatible with the conventional CD player. Of course, the conventional multi-bit PCM CD can be played back on the SACD player.

The second format is based on the existing DVD optical read-out and CD technology. This new format, built on part 1 and part 2 of the DVD-ROM specifications, is called DVD-Audio (DVD-A). To achieve a frequency band of nearly 100 kHz, a sampling frequency of 192 kHz with a resolution of 24 bits is adopted. Both SACD and DVD-A are explained within this book.
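As a rough illustration of the figures quoted for these formats, the short Python sketch below computes the raw bit rate per channel and the theoretical bandwidth limit (half the sampling frequency) for CD, SACD and DVD-Audio. The sampling frequencies and word lengths are those given in the text; the function and variable names are only illustrative. Note that for the 1-bit SACD stream the half-sampling-frequency limit is purely theoretical, since the usable audio band of such a stream is far narrower in practice.

```python
# Raw bit rate per channel and theoretical bandwidth limit for each format.
# Values are (sampling frequency in Hz, bits per sample) as quoted in the text.
FORMATS = {
    "CD": (44_100, 16),
    "SACD": (2_822_400, 1),
    "DVD-Audio": (192_000, 24),
}


def raw_bit_rate(sample_rate_hz, bits_per_sample):
    """Uncompressed bit rate of one audio channel, in bits per second."""
    return sample_rate_hz * bits_per_sample


def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency that can be represented: half the sampling frequency."""
    return sample_rate_hz / 2


for name, (fs, bits) in FORMATS.items():
    rate_kbit = raw_bit_rate(fs, bits) / 1000
    limit_khz = nyquist_limit_hz(fs) / 1000
    print(f"{name}: {rate_kbit:.1f} kbit/s per channel, limit {limit_khz:.2f} kHz")

# The SACD sampling frequency is an exact multiple of the CD sampling frequency.
sacd_ratio = FORMATS["SACD"][0] / FORMATS["CD"][0]
print(f"SACD / CD sampling frequency ratio: {sacd_ratio:.0f}")
```

For DVD-Audio the limit works out at 96 kHz, which matches the ‘nearly 100 kHz’ mentioned above, and the SACD sampling frequency turns out to be exactly 64 times that of CD.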

Digital audio compression

Already from the start of digital storage, the need to compress data became clear. In the early days of computers, memory was very expensive and storage capacity was limited. Also, from the production side, it was impossible to create the memory capacity as we know it now, at least not at an affordable price and on a usable scale. In particular, after the idea to convert the analog audio signals into a digital format originated, the impressive capacity required for storage became a problem.

The explosive growth of electronic communication and the Internet was also a major boost for distribution of data, but at the same time it was a major reason why data compression became a crucial matter.

To record digital music signals on a limited storage medium, or to transfer them within an acceptable delay, compression becomes necessary. Consider the following example.

Suppose we want to record 1 minute of stereo CD quality music on our hard disk. The sampling frequency for CDs is 44.1 kHz, with 16 bits per sample. One minute of music represents an amount of data of 44 100 (samples/second) × 60 (seconds/minute) × 16 (bits/sample) × 2 (stereo) = 84 672 000 bits, that is, about 84.7 Mbits or about 10.6 Mbytes of memory on your hard disk.

Transferring this amount of data via the Internet through an average 28.8 kb s–1 modem will take about 84 672 000 bits/28 800 (bits s–1) = 2940 seconds, or 49 minutes. When using conventional telephone lines, this is even an optimistic approach, as the modem speeds tend to vary (mostly downward) along with network loads, and the equation does not even take into account that data transfer through modems also uses error protection protocols, which also decrease the speed.
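The arithmetic of this example can be checked with a few lines of Python. This is only a sketch: the function names are illustrative, and the transfer time is the ideal case that ignores protocol overhead and varying line speed.

```python
# Storage size and ideal modem transfer time for uncompressed CD-quality audio.

def pcm_bits(seconds, sample_rate_hz=44_100, bits_per_sample=16, channels=2):
    """Number of bits needed to store uncompressed PCM audio."""
    return seconds * sample_rate_hz * bits_per_sample * channels


def transfer_seconds(bits, link_bits_per_second):
    """Ideal transfer time, ignoring error protection and network load."""
    return bits / link_bits_per_second


one_minute_bits = pcm_bits(60)                 # 1 minute of stereo music
megabits = one_minute_bits / 1_000_000
megabytes = one_minute_bits / 8 / 1_000_000    # 8 bits per byte
minutes_on_modem = transfer_seconds(one_minute_bits, 28_800) / 60

print(f"{megabits:.3f} Mbit, {megabytes:.1f} Mbyte, {minutes_on_modem:.0f} min")
# prints: 84.672 Mbit, 10.6 Mbyte, 49 min
```

Changing the arguments of `pcm_bits` shows how quickly the data volume grows with longer recordings, higher sampling frequencies or more channels.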

To achieve a reasonable delay when transferring the data or storing it in a memory, the need for compression becomes clear.

Over the years, different techniques have been developed. Most audio compression techniques are derived directly or indirectly from MPEG standards. MPEG stands for Moving Picture Experts Group; it is a worldwide organization where all interested parties like manufacturers, designers, producers, etc. can propose, discuss and agree on technologies and standards for motion pictures, but also the related audio and video technologies.

For consumer use, it started with the PASC system for DCC and ATRAC for MD. Both are MPEG1 layer 1-based compression techniques, and they allow a four- to fivefold reduction in the amount of data. Thanks to the evolution of more accurate and faster working microcomputers, higher quality and higher compression have been achieved.

This same MPEG1, but now layer 3, is the basis for the widely used and sometimes contested MP3 format. More on this subject is given elsewhere within this book (see Chapter 20).

Summary of development of digital audio equipment at Sony

October 1974

First stationary digital audio recorder, the X-12DTC, 12-bit

September 1976

FM format 15-inch digital audio disc read by laser. Playing time: 30 minutes at 1800 rpm, 12-bit, two-channel

October 1976

First digital audio processor, 12-bit, two-channel, designed to be used in conjunction with a VTR

June 1977

Professional digital audio processor PAU-1602, 16-bit, two-channel, purchased by NHK (Japan Broadcasting Corporation)

September 1977

World’s first consumer digital audio processor PCM-1, 13-bit, two-channel

15-inch digital audio disc read by laser. Playing time: 1 hour at 900 rpm

March 1978

Professional digital audio processor PCM-1600

Stationary-head digital audio recorder X-22, 12-bit, two-channel, using ¼-inch tape

World’s first digital audio network

October 1978

Long-play digital audio disc read by laser. Playing time: 2½ hours at 450 rpm

Professional, multi-channel, stationary-head audio recorder PCM-3224, 16-bit, 24-channel, using 1-inch tape

Professional digital audio mixer DMX-800, eight-channel input, two-channel output, 16-bit

Professional digital reverberator DRX-1000, 16-bit

May 1979

Professional digital audio processor PCM-100 and consumer digital audio processor PCM-10 designed to Electronics Industry Association of Japan (EIAJ) standard

October 1979

Professional, stationary-head, multi-channel digital audio recorder PCM-3324, 16-bit, 24-channel, using ½-inch tape

Professional stationary-head digital audio recorder PCM-3204, 16-bit, four-channel, using ¼-inch tape

May 1980

Willi Studer of Switzerland agrees to conform to Sony’s digital audio format on stationary-head recorder

June 1980

Compact disc digital audio system mutually developed by Sony and Philips

October 1980

Compact disc digital audio demonstration with Philips, at Japan Audio Fair in Tokyo

February 1981

Digital audio mastering system including digital audio processor PCM-1610, digital audio editor DAE-1100 and digital reverberator DRE-2000

Spring 1982

PCM adapter PCM-F1, which makes digital recordings and playbacks on a home VTR

October 1982

Compact disc player CDP-101 is launched onto the Japanese market and as of March 1983 it is available in Europe

Figure XVII DTC-55ES R-DAT player.

1983

Several new models of CD players with sophisticated features:

CDP-701, CDP-501, CDP-11S

PCM-701 encoder

November 1984

Portable CD player: the D-50

Car CD players: CD-X5 and CD-XR7

1985

Video 8 multi-track PCM recorder (EV-S700)

March 1987

First R-DAT player DTC-1000ES launched onto the Japanese market

July 1990

Second generation R-DAT player DTC-55ES available on the European market

March 1991

DAT Walkman: TCD-D3

Car DAT player: DTX-10

November 1992

Worldwide introduction of the MiniDisc (MD) format, first generation MD Walkman: MZ-1 (recording and playback) and MZ-2P (playback only)

1992–1999

Constant improvement of the ATRAC DSP for MiniDisc. Several versions were issued: ATRAC versions 1, 2, 3, 4, 4.5 and finally ATRAC-R. Evolution and expansion of the DAB network

May 1996

The International Steering Committee (ISC) made a list of recommendations for the next generation of high-quality sound formats

February 1999

First home DAB receiver

ATRAC DSP Type-R in the fifth generation of MiniDisc players:

MDS-JA20ES

October 1999

Introduction of the first generation of SACD players: SCD-1 and SCD-777ES

April 2000

Second generation SACD player: SCD-XB940 in the QS class

September 2000

Introduction of MDLP (Long Play function for MiniDisc) based on ATRAC3

October 2000

Combined DVD/SACD player: DVP-S9000ES

February 2001

First multi-channel Hybrid SACD disc on the market

February 2001

Sony introduces its first multi-channel player: SCD-XB770