Chapter 11: Identifying VSM Tool Types and Capabilities

In Predicts 2021: Value Streams Will Define the Future of DevOps Report (published October 5, 2020), Gartner states that "By 2023, 70% of organizations will use value stream management to improve flow in the DevOps pipeline, leading to faster delivery of customer value." (https://www.gartner.com/) Clearly, Gartner believes that VSM, as an IT improvement strategy, is rapidly becoming mainstream.

As the preceding Gartner quote suggests, VSM tools are rapidly gaining traction in the IT industry. But it would be a mistake to start implementing the tools before understanding the larger objectives of VSM, as well as how to implement a VSM activity across a value stream. The data and metrics that VSM tools provide have little practical use unless we first understand the goals and principles of Lean value stream improvements. That is why you spent Chapters 6 through 10 learning how to apply a generic VSM methodology.

Now that you understand the goals, metrics, and activities associated with value stream management, we must look at the tools that support our ongoing VSM activities. This chapter will provide instructions on how to use the primary types of VSM tools in the market today. You will also learn about the different capabilities available in modern VSM tools.

In this chapter, we're going to cover the following topics:

- Leveraging VSM tools and platforms

- Enabling VSM tool capabilities

- Highlighting important VSMP/VSM tool capabilities

- Key issues addressed by VSM tools

- VSM tool implementation issues

- Enabling business transformations

- VSM tool benefits

Leveraging VSM tools and platforms

This section will introduce the types of tools and capabilities available in modern VSM tools. You will also find that the VSM tool industry promotes its ability to help implement Lean improvements to IT value streams. But the information that's available via these VSM tools can also support Lean improvement activities across all organizational value streams via their digital touchpoints.

VSM tools help implement and improve Lean practices across DevOps pipelines by providing real-time access to critical IT value stream data, dashboard-based visualizations, and metrics. This information helps DevOps team members and other stakeholders monitor and improve information flow and work across the IT value streams. Moreover, VSM tools provide information and analytics that help keep the IT value streams' focus on adding value to their customers.

In this chapter, you are going to learn the capabilities provided by VSM tools. But before that, we will start with an introductory discussion about the types of DevOps tools and processes that support the IT value stream's customer delivery capabilities.

In the same report mentioned in this chapter's introduction, Gartner outlines three types of supporting DevOps tools that transform the IT value stream's ability to deliver customer value rapidly and reliably. These tools include the following:

- DevOps value stream management platforms (VSMPs): These provide out-of-the-box connectors to integrate disparate DevOps toolchains and facilitate the orchestration of IT activities across the planning, releasing, building, and monitoring activities. VSMPs help to improve velocity, quality, and customer value by providing visibility and analytics across the IT value stream. When you look up value stream management (VSM) tools in a modern context, these are the types of tools you will typically find.

- Value stream delivery platforms (VSDPs): These provide integrated toolchains as out-of-the-box solutions, usually available as cloud-based CI/CD or DevOps platforms. VSDPs also include tools to support the visibility, traceability, auditability, and observability of activities across the software delivery value stream. These capabilities extend well beyond the traditional DevOps platform capabilities. In this sense, VSDPs combine the capabilities of DevOps platforms with VSM tool capabilities, and you will find both DevOps platform vendors and VSM tool vendors merging into this shared space.

- Continuous compliance automation (CCA) tools: These help automate and expedite compliance and security-related tasks, replacing manual checklists, policies, and worksheets. Moreover, these tools provide visibility to potential issues early in the SDLC, when corrections are easier to make and less costly. CCA tools should not operate in a vacuum, apart from the organization's CI/CD, DevOps, and VSM platforms and tools. It's better to have them integrated as part of the automated test capabilities with integrated VSM tools, as this helps support real-time monitoring and visibility of compliance and security test results.

Gartner also identifies two critical processes, chaos engineering and IT resiliency roles, that can help optimize IT value stream flows. Later in this book, we'll discuss the importance of incident management and service restoration. These are after-the-fact problem resolution tactics. Chaos engineering, in contrast, identifies preventative measures the IT department can take to deliver high reliability in complex environments.

Similar to the preceding discussion, disaster recovery (DR) is also an after-the-fact problem resolution effort to bring a failed system back online. IT resiliency roles is an approach to linking DR teams and product teams as a preventative measure to deliver better resilience across the DevOps value stream.

Enabling VSM tool capabilities

A good strategy for any product owner is to assess customer requirements in terms of the capabilities customers need. In other words, what capabilities do our customers expect our products to deliver to solve some issue or need they may have? Providing capabilities that address customer issues or needs is how we provide value. In this context, product features and functions deliver value through the capabilities they offer.

By definition, the word capability connotes an ability or the authority to do something. In software development, requirements analysis defines areas where our customers can't do or achieve something desirable. The objective is to create software products that deliver those capabilities.

But it's a mistake to think the word capabilities only refers to delivering some set of features or functionality. Instead, customers purchase things, such as software, to fulfill basic human needs, such as improving the following:

- Performance or productivity

- Health and wellbeing

- Economics or finances

- Personal image

VSM tools are no different. VSM tools need to add value to users to support their organization's IT value stream improvement initiatives. Yes, productivity and performance improvements are at the heart of making Lean production improvements. But those improvements also improve the health and wellbeing of the organization and potentially its customers. And a successful VSM initiative can improve the organization's economics.

But don't belittle the last area of concern: image. Large purchases and investments in VSM and DevOps tools and platforms can have an enormous emotional impact on executive and stakeholder decision making and buy-in. The same concerns are valid for employees when executives make wholesale changes to organizational structures and work activities. In the end, the most powerful motivator to make the investments and structural changes might just come down to improving the organization's image as an industry leader.

But I've digressed. So, let's move on and understand the capabilities that are delivered by modern VSM tools and why those capabilities matter.

Having read through Chapters 6 through 10 of this book on applying a generic VSM methodology, you may have already guessed that a VSM tool should support VSM processes, including mapping, metrics, and analytics. If you added integration, automation, and orchestration to your guess, then you are even closer to understanding the expanded value of modern VSM tools.

In the Identifying VSM tool capabilities section we will introduce many of the leading VSM vendors. One of the vendors we will introduce, ConnectAll, promotes a view that a tool-agnostic VSM solution must implement the following six critical features:

- IT and business alignment

- Actionable and relevant data

- Data-driven, outcome-focused analytics

- Dynamic value stream visualization

- Workflow orchestration

- IT governance

This list is good to start with, so let's take a few minutes to understand what capabilities these features provide. In other words, before you read on, it will help if you replace the word features with the word capabilities as you read through each item.

IT and business alignment

In our modern digital economy, the IT function supports nearly every aspect of the business. For example, organizations may offer IT solutions as standalone products or integrated with physical products to provide enhanced features and functionality. But IT solutions also improve the productivity of other development- and operations-oriented value streams.

For these reasons, VSM must serve a greater purpose than simply improving CI/CD and DevOps pipeline flows. These improvements must also support the strategies, goals, and mission of the business as a whole. What this means is that your value streams are not limited to software development. Instead, software value streams extend into the business, supporting all organizational value streams.

In this context, a VSM solution must help the organization align its IT functions with the business by providing the following capabilities:

- Implementing real-time metrics to support Lean value stream improvements

- Implementing and visualizing key performance indicators (KPIs) across development- and operations-oriented value streams to improve speed and quality of delivery

- Providing end-to-end visibility of key metrics and outcomes across all portfolio investments

Having access to real-time data is a game-changer in VSM. However, it's of little value if it's not actionable and relevant to improving your ability to deliver value.

Actionable and relevant data

From a Lean perspective, VSM is a continuous improvement activity that spans our value delivery streams. VSM tools should provide metrics for measuring the performance of your value stream activities in terms of desired outcomes.

CI/CD and DevOps pipelines involve integrating, automating, and orchestrating work item flows across complex toolchains. Equally important is the real-time flow of data across the tools that support work being orchestrated across your system's development and life cycle support processes.

As with the manual VSM processes defined in Chapter 6, Launching the VSM initiative (VSM Steps 1–3) through Chapter 10, Improving the Lean-Agile Value Delivery Cycle (VSM Steps 7 and 8), VSM tools help the organization identify waste in the form of bottlenecks and waiting caused by inefficient and unmatched flows. But software development also has much more variability in terms of product requirements than typical mass-production lines. For that reason, we also need information that helps us identify delays caused by orphaned software (abandonware), inaccurately defined configurations, ill-defined requirements, and unnecessary testing that is not value-added.

Moreover, as part of future state modeling, it's essential to have the ability to replace current state metrics with desired variables for what if testing. In effect, we can use VSM tools to simulate future state operations using the current state maps, metrics, and flows as a baseline for experimentation.
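As a rough illustration of what if testing, the following Python sketch treats a current state map as a list of activities with process and wait times, swaps in a desired value for one wait time, and compares the results. The activity names, the hour values, and the `what_if` helper are all invented for illustration; commercial VSM tools expose this kind of capability through their own interfaces.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Activity:
    name: str
    process_hours: float  # time spent actively working the item
    wait_hours: float     # time the item waits in the queue before this activity

def lead_time(stream):
    """Total elapsed hours across all activities, including waiting."""
    return sum(a.process_hours + a.wait_hours for a in stream)

def flow_efficiency(stream):
    """Share of the lead time spent on active work rather than waiting."""
    return sum(a.process_hours for a in stream) / lead_time(stream)

def what_if(stream, name, **changes):
    """Return a copy of the stream with one activity's metrics replaced."""
    return [replace(a, **changes) if a.name == name else a for a in stream]

# Hypothetical current state baseline (illustrative numbers only).
current = [
    Activity("code", process_hours=8, wait_hours=4),
    Activity("review", process_hours=2, wait_hours=16),
    Activity("test", process_hours=4, wait_hours=8),
    Activity("deploy", process_hours=1, wait_hours=7),
]

# What if the review queue shrank from 16 hours to 4 hours?
future = what_if(current, "review", wait_hours=4)

print("current:", lead_time(current), round(flow_efficiency(current), 2))
print("future: ", lead_time(future), round(flow_efficiency(future), 2))
```

Because `what_if` returns a modified copy, the baseline stays untouched, which mirrors the idea of experimenting on future state concepts without disturbing the current state data.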

Data-driven, outcome-focused analytics

A VSM team's assessment of value stream performance is only as good as the metrics that drive it. In Chapter 8, Identifying Lean Metrics (VSM Step 5), you learned which metrics help support Lean-oriented production improvements. You also learned that an excellent way to get these metrics is through Gemba Walks and meeting with value stream operators.

But what if you had access to real-time data that directly maps to the value stream metrics your VSM team defines as being critical to monitoring, as well as improving value delivery and production performance? That is the promise of modern VSM tools.

Of course, we need to do our homework first to define our current state flows, waste, and metrics. That's a human activity that VSM tools cannot eliminate.

Recall from our discussions on value stream mapping that we always start our assessments downstream with deliveries to our customers; then, we work our way upstream to assess how we deliver value. In other words, we start our analysis with customer requirements describing the desired value delivery outcomes and then work our way backward through the value stream activities – this helps us see for ourselves how or whether they contribute value from our customers' perspectives.

Therefore, before we can leverage data delivered by VSM tools, we need to visualize the value stream work and information flows, and areas of waste. As a quick review of Chapter 7, Mapping the Current State (VSM Step 4), let's summarize the current state mapping process, as that effort forms the baseline for our future state improvement analysis:

- Identify the customer (internal or external requirements; do the same for any partner relationships).

- Draw the entry and exit points for the value stream.

- Draw all processes between the entry and exit points, starting with the most downstream activities and working your way upstream to the initial order/backlog entry points.

- Identify and define all activity attributes (information, materials, and constraints associated with each activity).

- Draw queues between the activities and include wait time metrics.

- Draw all communications links as information flows occurring within the value stream.

- Draw the production control strategies in place at each value stream activity – primarily documenting push versus pull-oriented work and information flows.

- Complete the map with any other data that helps refine our understanding of the work and exceptions to the standard processes.

It should be apparent that the value stream mapping exercise requires human action to complete the map, at least until the eighth and final step. But it's not the map that's the most important – it's the exercise of going out to see how things work that drives the real insights for improvements. The integration and analytical capabilities of modern VSM tools give us access to real-time data on demand, which we need to ensure our findings and analysis are accurate.

The integration and automation capabilities of CI/CD and DevOps toolchains hide a lot of the activity details and metrics. VSM tools help make those activities and metrics visible and immediately available whenever needed. In addition, monitors and triggers can immediately point out anomalies (metrics outside the desired performance ranges) that need to be addressed.
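To make the idea of monitors and triggers concrete, here is a minimal Python sketch that compares the latest metric readings against desired ranges and reports anything out of bounds. The metric names and limits are assumptions made up for this example; a real VSM tool would let you configure such thresholds through its own dashboards or rules.

```python
def find_anomalies(metrics, limits):
    """Return the metrics whose values fall outside their (low, high) limits."""
    anomalies = {}
    for name, value in metrics.items():
        # Metrics without a configured limit are treated as always in range.
        low, high = limits.get(name, (float("-inf"), float("inf")))
        if not low <= value <= high:
            anomalies[name] = value
    return anomalies

# Hypothetical desired performance ranges for three pipeline metrics.
limits = {
    "build_minutes": (0, 15),
    "test_pass_rate": (0.95, 1.0),
    "queue_hours": (0, 24),
}

# Hypothetical latest readings pulled from the toolchain.
latest = {"build_minutes": 22, "test_pass_rate": 0.97, "queue_hours": 30}

alerts = find_anomalies(latest, limits)
print(alerts)
```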

That's not to say the Gemba walks of traditional VSMs are not a helpful concept. In a modern, agile-based software shop, team members frequently meet to assess opportunities for improvement. More importantly, all software requirements and production data are made readily and visibly available to managers, executives, and other stakeholders at any time. Modern VSM tools make the visibility of product production and delivery data more accurate, accessible, and readily available.

As organizations make their way through a digital transformation, value streams become data-driven, and VSM tools hold the key to unlocking that data. For example, once connected, the VSM tools provide accurate data on key performance metrics, such as process times, cycle times, lead times, flow times, wait times, value-added times, mean time to repair (MTTR), escaped defect ratios, WIP, blocker data, queues, throughput, and production impacts.
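The following Python sketch shows, under simplified assumptions, how a few of these metrics could be derived once work item timestamps are available. The record layout is hypothetical, and the definitions used here (lead time from request to delivery, cycle time from start of work to delivery) are one common convention; your VSM team may define them differently.

```python
from datetime import datetime, timedelta

# Hypothetical work item records: when each was requested, started, and finished.
items = [
    {"id": "A", "requested": datetime(2021, 3, 1), "started": datetime(2021, 3, 3), "done": datetime(2021, 3, 8)},
    {"id": "B", "requested": datetime(2021, 3, 2), "started": datetime(2021, 3, 4), "done": datetime(2021, 3, 6)},
    {"id": "C", "requested": datetime(2021, 3, 5), "started": datetime(2021, 3, 9), "done": None},
]

def mean(deltas):
    """Average a non-empty list of timedelta values."""
    return sum(deltas, timedelta()) / len(deltas)

finished = [i for i in items if i["done"] is not None]

lead_time = mean([i["done"] - i["requested"] for i in finished])     # request to delivery
cycle_time = mean([i["done"] - i["started"] for i in finished])      # start of work to delivery
wait_time = mean([i["started"] - i["requested"] for i in finished])  # time queued before work starts
wip = sum(1 for i in items if i["started"] and i["done"] is None)    # started but not finished
throughput = len(finished)                                           # items delivered in the sample

print(lead_time, cycle_time, wait_time, wip, throughput)
```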

VSM tools can provide the data and analytical tools to help teams assess product issues and risks, including missed release dates, substandard NPS scores, untapped capacity, and inadequate test coverage. These analytics help decision makers evaluate alternatives for cost reduction, competitive positioning, and product fit in the marketplace.

In short, VSM tools capture data, while analytical tools help the organization assess value stream performance and make better decisions to achieve their desired outcomes. Those outcomes must map back to the business's goals and objectives and the desired outcomes of the organization's customers. After all, revenues and profitability depend on our ability to deliver value to our customers.

VSM tools provide access to real-time data on demand, plus analytical tools, and they also help make the data and analytics visible.

Dynamic value stream visualization

Having access to real-time data with powerful analytical tools is a critical capability provided by modern VSM tools. But, consistent with Agile practices, we also need to make sure the data is highly visible, accessible, and consumable by those who need it. VSM tools provide dashboards with distributed access via a secure network or internet-based connections to support visibility needs.

The VSM tools and platforms provide visualizations across all connected product value streams, with metrics and visual aids showing cross-stream integrations, relationships, and orchestrations. In large product development environments with multiple teams, the VSM tools provide end-to-end visibility of every work item and how they flow across value streams. The VSM tools provide information that the independent feature teams or larger requirement areas need to discover and help address dependency, integration, and synchronization issues.

While VSM tools provide access dynamically to real-time data, they also provide access to historical data across your value streams. The importance of this is that historical data allows us to diagnose issues to determine how, when, and why they evolved. And, of course, their what if capabilities allow us to explore alternative solutions before making investments in time, resources, and money.

Many VSM tools started as toolchain integration and automation platforms. In other words, CI/CD and DevOps pipelines evolved to integrate toolchains and automate activities across the traditional software development life cycle (SDLC) processes. But it wasn't long before the software industry understood that toolchain integration and automation are only one part of Lean-oriented IT value stream improvement activity. We also need orchestration.

Workflow orchestration

CI/CD and DevOps pipelines operate most efficiently by integrating toolchains, automating SDLC and potentially ITSM processes, and orchestrating work and information flows. Larger software products with work distribution across multiple product teams create a more complex environment. The teams must deal with integration, coordination, and synchronization issues to ensure the component work items function correctly within the more extensive integrated system.

Many VSM platforms support the collaboration of multiple development teams who must orchestrate and coordinate their work. The extension of VSM capabilities came about due to their historical underpinnings as integration platforms and their support for CI/CD requirements. Examples of orchestration capabilities include automatic triggers for builds, automated tests, and deployments.

Automating triggers assumes the development teams have employed their configurations as code. There are two types of generally accepted code-based configurations: Configuration as Code (CaC) and Infrastructure as Code (IaC).

CaC involves developing configuration files, managed within a source code repository, to specify how your software applications need to be configured to work on different platforms or computing environments. CaC supports the versioning of application configurations, traceability of each software configuration version deployed across various environments, and the capability to deploy new software configurations without redeploying the application.
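As a small sketch of the CaC idea, the Python example below reads a versioned configuration document and applies environment-specific overrides on top of shared defaults. The setting names and the JSON layout are assumptions for illustration only, and the document is inlined as a string so the example runs on its own; in practice, it would live as a file in your source code repository.

```python
import json

# Stand-in for a version-controlled configuration file (hypothetical settings).
CONFIG_JSON = """
{
  "version": "1.4.0",
  "defaults": {"log_level": "INFO", "db_pool_size": 5, "feature_x": false},
  "environments": {
    "test": {"log_level": "DEBUG"},
    "production": {"db_pool_size": 20, "feature_x": true}
  }
}
"""

def load_config(text, environment):
    """Merge the shared defaults with the overrides for one environment."""
    doc = json.loads(text)
    settings = dict(doc["defaults"])
    settings.update(doc["environments"].get(environment, {}))
    # Record which configuration version was applied, for traceability.
    settings["config_version"] = doc["version"]
    return settings

production = load_config(CONFIG_JSON, "production")
test = load_config(CONFIG_JSON, "test")
print(production)
print(test)
```

Changing a setting then becomes a reviewed commit to the configuration file rather than a rebuild of the application itself.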

IaC supports automatically provisioning resources in computer data centers via machine-readable definition files, thus eliminating manual processes and interventions to reconfigure computing equipment. In a traditional environment, data center operators must manually configure servers, networks, security systems, and backup systems before each software deployment. This approach is tedious, expensive, and labor-intensive, and it spans multiple skills. The configurations must be exact and consistent to ensure proper monitoring and visibility of their performance. As a result, many things can go wrong, adding delays and deployment costs. IaC helps avoid all those issues.
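The core mechanism behind IaC can be sketched as comparing a declared, desired state against what actually exists and deriving the actions needed to close the gap. The Python example below is a simplified illustration of that idea, not the interface of any particular IaC product; the resource names and the `plan` function are hypothetical.

```python
def plan(desired, actual):
    """Compare desired and actual resources and list the actions needed."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions

# Desired state, as it might be declared in a machine-readable definition file.
desired = {
    "web-server": {"cpus": 2, "memory_gb": 4},
    "db-server": {"cpus": 4, "memory_gb": 16},
}

# Actual state, as it might be reported by the data center or cloud provider.
actual = {
    "web-server": {"cpus": 1, "memory_gb": 4},
    "old-batch-host": {"cpus": 2, "memory_gb": 8},
}

actions = plan(desired, actual)
print(actions)
```

Because the definition is data rather than a manual checklist, running the same plan twice against an unchanged environment yields no further actions, which is what makes the configurations exact and repeatable.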

As an orchestration platform, VSM tools can centralize builds, CI/CD tests, and releases/deployments. The cross-functional nature of DevOps and its supporting VSM platforms improves collaboration between Development, Quality, and Operations teams. Finally, VSM dashboards provide data and analytical tools to provide insights into how configuration and process changes can impact future releases.

Another critical issue in IT is implementing effective controls over IT governance policies and compliance requirements, which we'll discuss in the following subsection.

IT governance

CIOs and other IT executives have fiduciary responsibilities to manage and protect the organization's IT assets and investments. There are also financial, regulatory, and legal requirements that must be followed to help us avoid legal and financial risks. As a direct result, IT organizations require some control over the teams that develop and support the organization's computing systems, networks, security systems, and software applications.

To support compliance requirements, IT organizations often adopt frameworks, standards, and regulations such as ITIL, CMMI, COBIT, HIPAA, INFOSEC, and ISO 27001, and there are many others available. Most of these are rich in policies and procedures but lacking in enforcement capabilities and details.

While it's easy enough to write documentation to specify standards, policies, and procedural requirements, it's much more challenging to provide oversight to ensure everyone is following the guidance. Fortunately, IT governance is another area where VSM vendors have stepped in to provide automation support for governing the organization's IT policies, compliance requirements, and standards.

Modern VSM tools can orchestrate the processes associated with governing IT capabilities by supporting the aforementioned IT frameworks. In this capacity, the VSM tools offer centralized governance over the flow of work across an entire product value stream. The data and metrics offered by VSM platforms help provide visibility to the IT value stream pipeline, as well as product quality objectives and compliance requirements. The information that's available also provides evidence-based data to support tool investment decisions, staffing requirements, and productivity goals.
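One way to picture automated governance is as a set of policy rules evaluated against every change before it moves further down the pipeline. The Python sketch below assumes three made-up policies and a hypothetical change record; actual VSM and compliance tools define and enforce such rules in their own ways.

```python
# Hypothetical governance policies, each expressed as a rule over a change record.
POLICIES = {
    "peer_review": lambda change: change["approvals"] >= 1,
    "tests_passed": lambda change: change["tests_passed"],
    "no_critical_vulnerabilities": lambda change: change["critical_vulns"] == 0,
}

def evaluate(change):
    """Return the names of the policies that a change violates."""
    return [name for name, rule in POLICIES.items() if not rule(change)]

# Hypothetical change record assembled from toolchain data.
change = {"id": "CHG-1", "approvals": 0, "tests_passed": True, "critical_vulns": 2}

violations = evaluate(change)
if violations:
    print(f"{change['id']} blocked:", ", ".join(violations))
else:
    print(f"{change['id']} may proceed")
```

The list of violations doubles as audit evidence, since it records which policy stopped which change.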

This subsection completes our discussion of general VSM tool capabilities. In the next section, we will learn about the common types of VSM tools available and how they support IT value stream production and delivery improvement capabilities.

Highlighting important VSMP/VSM tool capabilities

This section will introduce the fundamental capabilities provided by modern VSMP/VSM tools. This is a class of tools that focuses on supporting VSM activities in a DevOps environment. More precisely, VSMP/VSM tools gather information across the DevOps-oriented value stream and offer visualizations as a VSM dashboard for supporting digital transformations to Lean-oriented practices.

VSMP/VSM tools typically include the following types of VSM support capabilities in a DevOps environment:

- DevOps value stream mapping: End-to-end mapping of the current and future state IT value stream work and information flow.

- DevOps workflow metrics: This helps measure the rate of value delivery, including the four critical DevOps measures of deployment frequency, mean lead time for changes, mean time to restore, and change failure rates.

- DevOps analytics: This includes tools for evaluating the current state of the DevOps toolchain and its processing capabilities against future state recommendations. Analytics can include recording and analyzing lead time and cycle time measurements spanning the following DevOps value stream activities:

i. Issue resolution

ii. From planning new features to the first code commits

iii. Feature branch creation to merge requests

iv. Executing automated tests

v. Performing code reviews

vi. Configuring, provisioning, and orchestrating dev, test, and staging environments

vii. Deploying to production

viii. Enabling application and feature rollbacks and service restorations

- DevOps orchestration: This helps eliminate waste in DevOps value streams by coordinating and synchronizing work items and data flows across the DevOps toolchain.

- DevOps workflow optimization: This supports leveling work flowing across integrated and automated DevOps toolchains and pipelines.

- DevOps information flow visualization: This offers data and information outputs in the form of reports and dashboard visualizations with near real-time relevancy.

- DevOps flow metrics: This helps teams analyze the performance of the DevOps pipeline in terms of cycle times, lead times, and wait times.

Analytic tools help the IT organization assess the speed of developing a "Done" feature or function from ideation through deployment, the throughput of new features and functions over time (also known as velocity), the time spent on improving business value across categories of work (that is, features, defects, risks, and technical debt), the amount of work in progress (WIP), and time spent on value-added work versus waiting.

- DevOps data cleaning: This provides tools for discovering and correcting or removing corrupted, incorrect, or incomplete data records, tables, or databases that support the DevOps toolchain.

Many VSM tools offer a value stream data model and data store that normalizes data across activities, as well as data inputs from the disparate yet integrated CI/CD and DevOps toolchains. Normalization is the process of organizing data in a database to eliminate redundancy and inconsistent relationships and dependencies. A single VSM data source with normalization enables end-to-end data analysis across value streams and each product's life cycle.

- What if analysis: Available from analytical tools, this helps the DevOps or VSM team evaluate the impact of changing the DevOps value stream processes and metrics, without directly affecting the value stream pipeline processes and activities or their work and information flows. In effect, the current state metrics and activity flows captured by the VSM tools form a baseline to conduct what if analysis on future state improvement concepts.

- DevOps tool integration: This includes application programming interfaces (APIs), adapters, and the connectors necessary to integrate DevOps toolchains with the VSM tools to integrate CI/CD and DevOps toolchains, automate activities, and orchestrate work and information flows.
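To illustrate the four DevOps measures named in the workflow metrics item above, the following Python sketch derives them from a handful of deployment records. The record fields, the sample dates, and the 14-day observation window are assumptions for this example; the formulas are simple averages and ratios, and real tools may calculate them with more nuance.

```python
from datetime import datetime, timedelta

# Hypothetical deployment records collected over a 14-day window.
deployments = [
    {"committed": datetime(2021, 3, 1, 9), "deployed": datetime(2021, 3, 2, 9),
     "failed": False, "restored": None},
    {"committed": datetime(2021, 3, 3, 9), "deployed": datetime(2021, 3, 5, 9),
     "failed": True, "restored": datetime(2021, 3, 5, 12)},
    {"committed": datetime(2021, 3, 8, 9), "deployed": datetime(2021, 3, 9, 9),
     "failed": False, "restored": None},
    {"committed": datetime(2021, 3, 10, 9), "deployed": datetime(2021, 3, 13, 9),
     "failed": True, "restored": datetime(2021, 3, 13, 10)},
]
period_days = 14

def mean(deltas):
    """Average a non-empty list of timedelta values."""
    return sum(deltas, timedelta()) / len(deltas)

failures = [d for d in deployments if d["failed"]]

deployment_frequency = len(deployments) / period_days                      # deployments per day
mean_lead_time = mean([d["deployed"] - d["committed"] for d in deployments])
mean_time_to_restore = mean([d["restored"] - d["deployed"] for d in failures])
change_failure_rate = len(failures) / len(deployments)

print(deployment_frequency, mean_lead_time, mean_time_to_restore, change_failure_rate)
```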

Let's now take a quick look at the vendor offerings currently identified in each of the three tool categories introduced at the start of this chapter: VSMPs, VSDPs, and CCA tools.

Classifying VSMP/VSM tool vendors

DevOps VSMPs provide data and tools to monitor and assess strategic metrics such as release velocity and DevOps operational efficiencies. While Gartner refers to these tools as VSMPs, Forrester refers to them as value stream management (VSM) tools.

VSMP and VSM tools serve as an integration, automation, and orchestration platform for third-party DevOps tools, of which there are many to choose from. For example, Digital.ai provides a comprehensive list of DevOps tools within its 2020 Periodic Table of DevOps Tools, which contains a subset of 400 products across 17 categories.

Current tool offerings that fit into the VSMP/VSM category include ConnectAll, Digital.ai, Plutora, CloudBees Value Stream Management, and Tasktop Viz.

Classifying VSDP tool vendors

The critical differentiator between VSDPs and the previously identified VSMP and VSM tools is that VSDPs provision DevOps tools directly within their platforms. In some cases, the VSDP vendors may provide many DevOps tools as out-of-the-box solutions to eliminate pipeline integration tasks. In other cases, the integrated DevOps tools may come from third-party vendors, but the integration and automation work has already been done.

DevOps value stream delivery platforms (VSDPs) help orchestrate DevOps toolchains to integrate and automate SDLC processes associated with building, delivering, and deploying software. Since the VSDP platforms control the tools, they can create a central data store with normalized data that provides visibility and analytical capabilities across all pipeline activities from end to end.
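As a simplified picture of what a central data store with a common record shape involves, the Python sketch below maps records from two differently structured tools onto one schema so that they can be analyzed end to end. The tools, field names, and date formats are all hypothetical and chosen only to show the mapping step.

```python
from datetime import datetime

def from_issue_tracker(record):
    """Map a hypothetical issue tracker record onto the common schema."""
    return {
        "work_item": record["key"],
        "activity": "planning",
        "status": record["state"].lower(),
        "at": datetime.fromisoformat(record["updated"]),
    }

def from_ci_server(record):
    """Map a hypothetical CI server record onto the common schema."""
    return {
        "work_item": record["ticket"],
        "activity": "build",
        "status": "done" if record["result"] == "SUCCESS" else "failed",
        "at": datetime.strptime(record["finished"], "%d/%m/%Y %H:%M"),
    }

# Raw records as each tool might report them, with different names and formats.
tracker_rows = [{"key": "APP-7", "state": "Done", "updated": "2021-03-01T09:00:00"}]
ci_rows = [{"ticket": "APP-7", "result": "SUCCESS", "finished": "02/03/2021 14:30"}]

# One store, one schema, ordered by time.
store = sorted(
    [from_issue_tracker(r) for r in tracker_rows] + [from_ci_server(r) for r in ci_rows],
    key=lambda row: row["at"],
)

# End-to-end view of a single work item across both tools.
timeline = [(row["activity"], row["status"]) for row in store if row["work_item"] == "APP-7"]
print(timeline)
```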

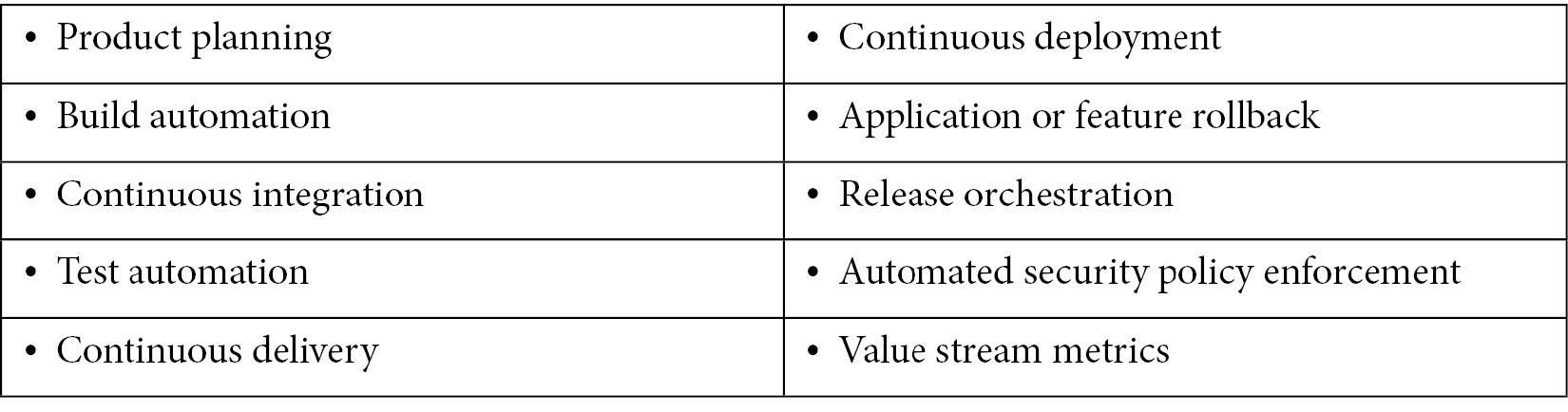

We will look at the capabilities of modern VSDP tools in more detail in the next chapter. But for now, as a quick introduction, we will state that VSDPs support a myriad of SDLC and ITSM processes, including the following:

Current VSM offerings that fit into the VSDP category include ServiceNow, GitLab, HCL Accelerate, IBM UrbanCode Velocity, Jira Align, and ZenHub.

VSMP, VSM, and VSDP tools have similar purposes and functionality. The critical difference is the degree to which the vendor provides a complete DevOps/VSM solution or provides a platform to integrate DevOps tools of your choosing. But for now, we will take a quick look at CCA tools, which have a different but equally important role in improving business performance.

Classifying CCA tool vendors

CCA tools are also known as governance, risk, and compliance (GRC) platforms. These tools implement a capability to manage an organization's overall governance policies, enterprise risk management assessments, and compliance with laws and regulations. The objective of GRC is to align IT with the organization's business objectives while managing risks and meeting compliance requirements.

CCA tools/GRC platforms help improve decision making regarding IT alignment with business strategies and product line objectives. They help prioritize IT investments by ensuring sufficient attention is paid to corporate governance, risk management, and compliance issues. CCA tools/GRC platforms also help the IT department take an enterprise view to support corporate policies, risk management, and regulatory compliance.

Finally, a critical element of CCA tools/GRC platform capabilities is to make IT systems and data secure. They do not replace the security software in use to protect applications and web-based services. Instead, they help ensure continued enforcement of policies, regulatory compliance, and the risk management assessments that are necessary to keep IT systems and data secure.

Rather than reinvent good practices, GRC platforms and tools often implement an underlying foundation of processes and policies from an industry-specified framework. Therefore, the CCA tools/GRC platform may implement a specific compliance framework such as COBIT, a risk management framework such as COSO, or an ITSM framework such as ITIL.

TrustRadius, a trusted review site for business technology, cites the following as examples of current GRC platforms (https://www.trustradius.com/):

So far, we have evaluated the types and capabilities of VSM tools and platforms. You learned about three basic categories of tools or platforms that support transforming IT value streams into Lean DevOps pipeline environments. Then, we took a deeper dive into the capabilities offered by modern VSM tools that support digital transformations via DevOps-oriented IT value streams. We will hold off on assessing the capabilities of VSDPs and CCA tools until the next chapter, where we will look at driving business value through a DevOps pipeline. Now, let's look at some of the critical issues addressed by implementing VSM tools and platforms.

Key issues addressed by VSM tools

In our digital economy, software products enable value delivery in three forms. First, software is often sold as a standalone product. Second, software supports other organizational value streams, such as those discussed in the Identifying value streams section of Chapter 4, Defining Value Stream Management. Third, VSM tools can help improve software delivery velocity across CI/CD and DevOps pipeline flows, from concept ideation through to delivery and support.

Throughout this book, you have learned that the enablers of software delivery include collaboration, integration, automation, and orchestration. But you've also learned that the velocity of software deliveries must support the flows and delivery of other organizational value streams. Therefore, the velocity of software development must be synched with the demands driven by the other organizational value streams that rely upon it.

True, software delivery value streams are complex in their own right. But they are exceedingly more complex when considering the critical impact software deliveries have on other organizational value streams. In short, our emergent digital economy drives the need to deliver value through software, as well as IT networks and infrastructures, in support of value deliveries across all organizational value streams.

Few worthwhile things in life are free, or easy to obtain, or even without issues. So, let's set our expectations correctly by identifying the issues any VSM team or initiative is likely to face.

VSM tool implementation issues

As with any set of software delivery tools, there are implementation issues the organization must address before committing to procuring a VSM platform. The issues highlighted in this section apply to installing both DevOps and VSM capabilities at an enterprise scale. We will address the subject of DevOps platform and tool implementation issues in much greater detail later in Chapter 15, Defining the Appropriate DevOps Platform Strategy. So, for now, we'll limit the conversation to VSM tool implementation issues, which include the following:

- Breaking down organizational silos

- Developing knowledge, skills, and resources

- Getting executive buy-in

- Knowing how to get started

- Lacking process maturity

- Overcoming budget constraints

- Maintaining governance and compliance

Let's get into the details of this list to understand what the issues are and the approaches we can take to address them head-on.

Breaking down organizational silos

Turf protection is not a new problem, but it is one of the most critical issues any organization faces. Successful organizations evolve to build an economy of scale that makes them efficient and profitable. The problem is that the increased organizational size often leads to bloat, over-processing, inefficient layers of management, and functional-oriented departments that move decision making increasingly further away from the product line. The result is less efficiency and much less flexibility and agility.

Modern scaled Scrum and Lean-Agile practices offer methods to break down organizational silos. An example of a method that helps break down silos is DevOps, where development teams and operations teams collaborate to ensure information flows both ways across the IT organization.

Developers need to work with Operations teams to ensure the products they develop are adequately tested and supported once they go into production. Similarly, the Operations teams need to provide feedback to the Development teams on issues they face in supporting, operating, securing, and maintaining business applications.

Lean processes implement continuous flows but are based on a pull-oriented paradigm. In effect, communications across the pipeline activities are both supported and constrained by Lean's pull-oriented product control systems.

Still, organizations may retain some of their functional orientations to build domain skills and capacity across value streams. In such cases, everyone in the organization must understand that decision making and authorities must be pushed down to the value streams as much as possible. And, for those issues that exceed the ability of local authorities to resolve, the executives must establish open communications channels and have access to informative data and metrics, via charts and dashboards, in near real time.

As you learned in Chapter 7, Mapping the Current State (VSM Step 4), executives, managers, and VSM team members must practice Gemba. In other words, they must go to the actual place where work is performed to see what's going on and learn about the impediments and issues directly from the value stream operators. Through collaboration with the operators, the executives, managers, and value stream team members can work through the issues to gain the information they require to make effective decisions and plans.

Developing knowledge, skills, and resources

Building the proper skills to conduct software development in a CI/CD or DevOps pipeline environment is not a straightforward issue for IT shops. But, in smaller shops, the teams tend to evolve those skills over time. However, building the knowledge, skills, and resources to implement DevOps pipelines at an enterprise scale is a considerable challenge that cannot be taken lightly.

In the following subsection, we'll deal with the issues of platform and toolchain investment costs. But there is also the issue that many of the software development teams are likely working on legacy applications and enterprise-class commercial off-the-shelf (COTS) software applications. The longer they have worked on those applications, the further their skills may have lagged behind modern methods and tools.

While these are not insurmountable issues, executives who wish to transition to a DevOps-centric approach to software delivery must make investments in their people. The development costs of our people are as much a part of the business transformation expense and ROI as the investments the organization makes in terms of platforms, infrastructures, and tools.

Getting executive buy-in

Few things in life are free. I was once in a situation where organizational executives wanted to reduce costs in one vendor's application life cycle management platform and used a DevOps transformation initiative to justify the move. Unfortunately, the decision makers did not fully appreciate the investment costs in both people and tools required to make the transition.

While executives can mandate a transition to DevOps, there's probably a much more significant investment in people and tooling than they have imagined. Adding in VSM to improve pipeline flows is an intelligent move. But that work also adds to the investment costs in developing skills, platforms, and tooling.

This issue was discussed in Chapter 6, Launching the VSM Initiative (VSM Steps 1-3). It's hard to get executive attention and buy-in to set aside people to perform work that does not directly contribute to building and delivering value to customers. Nevertheless, making Lean improvements is critical to ensuring the organization, as a whole, is delivering value optimally. This requires investments in people, processes, and tools to improve value stream pipeline flows.

The same issue applies to investing in the development of IT value stream pipelines via DevOps and VSM platforms and tools. Someone within the organization with the proper authority will have to build the business case to make these investments in people, processes, and tools. Ideally, the person leading the effort is a CTO, CIO, or CEO, or at least a line of business executive. If not, someone in the organization will need to take the bull by the horns and build the business case.

In the next section, you will learn how applications can be evaluated on a return on investment (ROI) basis to justify the investments in installing DevOps and VSM capabilities.

Overcoming budget constraints

Let's face it: organizational budgets are constantly stressed. There are always more things to invest in than the organization can afford. That's a given. DevOps- and VSM-related investments are portfolio-level decisions. In other words, they impact the mission and strategies of the business, and they need to be evaluated as such.

The Scaled Agile Framework® (SAFe®), as an example, addresses portfolio-level investments in two forms. One is product-related, while the other is something they refer to as an Architectural Runway. The product investments are easy to understand, as they directly relate to the value the organization delivers.

The term Architectural Runway probably seems a bit odd, and no – we're not talking about designing and building airports. Instead, Architectural Runway refers to investments made to create the future state technical foundation for developing business initiatives and implementing new features and capabilities. DevOps and VSM are the primary investments organizations make in their Architectural Runway to improve their value delivery capabilities in a digital economy.

Knowing how to get started

This topic is the reason you bought this book, I hope. In Chapter 6, Launching the VSM Initiative (VSM Steps 1-3), through Chapter 10, Improving the Lean-Agile Value Delivery Cycle (VSM Steps 7 and 8), you learned how to apply a generic eight-step VSM methodology. You now know how to conduct a VSM initiative across any type of value stream. Over time, you can modify the approach taught in this book and make it your own.

One point I want to make is that modern VSM tools help orchestrate your flows and provide the metrics and visibility you need to make Lean-oriented improvements across your IT value streams. But the tools should not replace the human aspects of collaborating to understand and improve your pipeline flows. Metaphorically speaking, don't be afraid to work as a group and get your hands dirty in the data and analytical aspects of VSM.

Lacking process maturity

This subsection's heading is not meant to imply the implementation of a formal process maturity program. However, as the saying goes, practice makes perfect. OK, agreed, that's neither an Agile nor a Lean-oriented concept. In our Lean-Agile orientation, we constantly seek improvements to our processes and activities. But Lean doesn't work unless we also have mature standard practices.

The issue of having standards was addressed in Chapter 4, Defining Value Stream Management, on the need for standardized work, and specifically in the section on the 5S system. Recall that the fourth item in the 5S system is Seiketsu (standardization) – incorporate 5S into standard operating procedures. Create guidelines to keep them organized, orderly, and clean, and make the standards visual and obvious.

The reason for having standardized processes is that we can't have optimal flows unless we have done the work to reduce waste and then ensured that waste is not allowed to creep back in. Yes, we can and should continue to seek improvements for our value stream flows, but we should always build from our base of proven, standardized processes.

Maintaining governance and compliance

The last item in our list of VSM tool implementation issues deals with governance. Recall from the first section of this chapter, Leveraging VSM tools and platforms, that Gartner includes CCA tools as an essential DevOps tools category that helps transform the IT value stream's ability to deliver customer value rapidly and reliably.

In the IT governance subsection, you also learned that CIOs and other IT executives have fiduciary responsibilities to manage and protect the organization's IT assets and investments. In that subsection, we primarily discussed IT governance as a critical element to ensure fiscal responsibility and minimize legal liabilities. However, there are other governance issues related to health (such as HIPAA for health-related privacy and security), safety (such as compliance with OSHA), and user accessibility (governed by Section 508 compliance). There are many more compliance requirements, many of which are regulated by government agencies.

This is the end of this section on VSM tool implementation issues. In this section, you learned that budgeting and executive buy-in are critical issues. Without executive buy-in, budgets will not be made available to implement DevOps and VSM platforms. Executives need to see value in supporting the business's mission and strategies to justify their investments. With that objective in mind, we will now explore how VSM and DevOps support business transformations that help the organization compete in our digital economy effectively.

Enabling business transformations

Throughout this book, you have been told that VSM is an approach to making Lean improvements. Moreover, you have learned that Lean improvements often require more significant investments than those that can be approved and authorized at the small Agile team level. But the outcomes and benefits of Lean transformations are much greater too.

VSM is the transformative process of delivering business value across the organization. And it is in that context that organizations need to evaluate their investments in VSM initiatives and tools. This section will explore some of the applications that help executives justify the strategic investments in VSM tools and processes.

Providing metrics to show business value

If you did not skip Chapter 4, Defining Value Stream Management, you should already understand the importance of capturing relevant metrics to improve value delivery. Modern VSM tools make it much easier to capture snapshots of data in real time.

Moreover, in modern CI/CD pipelines, activity flows are measured in microseconds. A human can't capture meaningful metrics in those time frames with a clock or stopwatch. But the VSM tools can. That frees up humans to perform analysis. The metrics tell us where there are inefficient flows, waiting, and bottlenecks. They can even tell us where in the pipeline those areas of waste occur. But it's human beings who need to go in to do the work to figure out how to resolve any identified issues.

Understanding costs across organizational value streams

The ability to identify costs goes hand in hand with metrics. In Lean, all forms of waste hinder our ability to deliver value, and therefore all forms of waste create non-value-adding costs.

Recall from Chapter 4, Defining Value Stream Management, how James Martin chronicled 17 common organizational value streams, reshown in the following table. The more value streams we have interconnected, the more rapidly non-value-added costs can accrue:

Figure 11.1 – James Martin's list of 17 conventional business value streams

We've discussed how software development value streams support other operational value streams throughout this book, such as those listed in the preceding table. For example, software development teams may implement software products, be it COTS or custom, to improve order fulfillment or customer service delivery. Delays in software delivery to those two operations-oriented value streams may cost the organization customer orders or future business.

The same principles apply to marketing and market information capture. We discussed these issues in Chapter 6, Launching the VSM Initiative (VSM Steps 1-3), in the Integrating value streams section. Here, we implemented the Adaptive Productizing Process. In a modern Agile context, we have product owners making development decisions based on market intelligence created by the marketing and product management value streams. However, the speed, accuracy, and actionability of market-driven intelligence are severely handicapped by manual processes.

Moreover, the software may drive many of the process improvements associated with building physical products in modern manufacturing facilities. For example, manufacturing equipment often has control systems that monitor processes and the flow of materials, information, and work.

Additionally, countries with higher labor rates have found it necessary to implement modern factory automation and robotics capabilities to improve quality and productivity with lower labor costs. But factory automation and robotic systems must be programmed.

With these examples, it should be apparent that IT value streams have linkages to the organization's other value streams. And, just as we are using VSM and DevOps platforms to integrate, automate, and orchestrate IT value streams, we must apply the same capabilities across all linked value streams to ensure optimized flows across the entire value delivery chain.

Understanding value stream lead and cycle times

Improving our delivery time to market is an essential requirement of virtually any business transformation activity. And the key to improving value delivery is making improvements to value stream lead and cycle times. We covered this subject at length in the previous chapters, so there's no reason to explain why again here.

The critical point to be made here is that VSM platforms provide the means to have an accurate view of value stream lead and cycle times across the DevOps pipeline, from end to end. Additionally, as part of the VSM tools solution, having "what if" analytical capabilities allows the organization to try out alternative improvement scenarios.

It's important to remember that making local optimizations at the activity level may or may not have any real impact on improving overall productivity and throughput. In other words, cycle time improvements may not create lead time improvements. That's because we need to address those activities that most hinder flow, and we need to know which activities have the lengthiest cycle times that impede our pipeline flows.

Another thing to consider is that customers won't see or value cycle time improvements at the activity level. What they care about is time to delivery, and that's measured as lead time.
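The difference between local cycle time gains and end-to-end lead time can be illustrated with a small simulation. The following Python sketch is not taken from any VSM tool. It assumes a hypothetical three-activity pipeline in which every work item is already queued at time zero, each activity handles one item at a time, and cycle times are fixed. The function name lead_times and the cycle time figures are illustrative assumptions only.

```python
from statistics import mean


def lead_times(cycle_times, n_items):
    """Return the completion time of each item in a serial pipeline.

    Assumptions (hypothetical, for illustration): all items are queued at
    time 0, each activity works on one item at a time, and cycle times
    are fixed, so each item's lead time equals its completion time.
    """
    activity_free_at = [0.0] * len(cycle_times)
    completions = []
    for _ in range(n_items):
        t = 0.0  # the item has been waiting since time 0
        for i, cycle_time in enumerate(cycle_times):
            # Work starts when both the item and the activity are available.
            start = max(t, activity_free_at[i])
            t = start + cycle_time
            activity_free_at[i] = t
        completions.append(t)
    return completions


# Cycle times in hours for three activities; the second is the bottleneck.
baseline = mean(lead_times([2, 5, 1], 10))
faster_first_activity = mean(lead_times([1, 5, 1], 10))
faster_bottleneck = mean(lead_times([2, 4, 1], 10))
```

Under these assumptions, saving one hour at the first activity lowers the average lead time from 30.5 to 29.5 hours, while saving the same hour at the bottleneck lowers it to 25 hours. The local improvement is real, but most of it is absorbed by waiting in front of the slowest activity.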

Managing risks across value streams

VSM and DevOps tools and platforms reduce risks across IT value streams by significantly lowering the possibility of unexpected issues cropping up. Modern VSM tools integrate tools to enable the continuous flow of information and work. DevOps platforms' CI/CD capabilities help automate SDLC activities within the pipeline, thus minimizing the potential for human errors and delays. These VSM tools provide orchestration capabilities that effect continuous and optimized flows.

Moreover, when things change in our pipeline, be it changes in customer demand or suboptimal flows, the metrics and analytic capabilities of the VSM tools provide the information you need to assess alternatives and make decisions before the risks become issues.

Analyzing patterns and trends

In traditional Lean product processes, product development teams use Six Sigma techniques to monitor performance parameters, which indicate trends toward the upper and lower boundary points of our production processes and quality goals.

The metrics we identified in Chapter 8, Identifying Lean Metrics (VSM Step 5), establish the goals of our value stream activities. The current and future state mapping activities help us establish the upper and lower boundaries we need to operate within to optimize our pipeline's materials, information, and workflow. And, as you might surmise, modern VSM tools provide monitoring and visualization capabilities to see how our value stream activities are trending against those goals and boundaries.
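As a rough illustration of monitoring a metric against upper and lower boundaries, the following Python sketch derives limits from a baseline period and flags later observations that fall outside them. It uses a simplified three-sigma rule rather than a full Six Sigma control chart, and the function names and sample figures are hypothetical rather than drawn from any particular VSM tool.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, upper) limits as mean +/- sigmas * sample std. dev."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread


def out_of_bounds(samples, lower, upper):
    """Return (index, value) pairs for samples outside the limits."""
    return [(i, x) for i, x in enumerate(samples) if x < lower or x > upper]


# Hypothetical daily lead times (hours) from a stable baseline period.
baseline_lead_times = [10, 12, 11, 9, 10, 11, 10, 12, 9, 11]
lower, upper = control_limits(baseline_lead_times)

# New observations to check against the baseline limits.
flagged = out_of_bounds([10, 11, 15, 9, 6], lower, upper)
```

With these sample figures, the limits fall at roughly 7.3 and 13.7 hours, so the observations of 15 and 6 hours are flagged for investigation. A real tool would typically apply richer rules, such as detecting runs of points drifting toward a boundary.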

VSM tools also provide visibility into the product backlogs. This helps us monitor how work is queued at the frontend of the pipeline and how it progresses through the pipeline. We can see how well refining and scoping the requirements match reality once the work is introduced to the development pipeline. We can also use the data monitoring and visualization capabilities of the VSM tools to see whether something is breaking in our DevOps pipeline, including the production environments. For example, it's helpful to know when and if we have applications, data stores, networks, servers, or security components that are failing. In traditional IT shops, these functions are siloed. Before the advent of modern VSM tools, bringing all the data together into one monitoring and visualization platform was challenging.

Accelerating improvements with AI

The use of artificial intelligence (AI) in VSM tools is another capability that is emerging in modern VSM platforms. Definitionally, AI-based tools and platforms mimic a human's ability to think, referred to as cognitive functions, which can include human-like activities such as learning, perceiving, problem solving, and reasoning. However, cognitive AI is only a subset of the larger field of AI. So, let's look at the distinctions between the two before getting into the details of AI:

- Cognitive computing: This is a narrow subset of AI that seeks to create systems that understand and simulate human reasoning and behavior.

- AI: These are systems that apply pattern recognition capabilities across large datasets to augment human thinking for solving complex problems.

Machine learning takes AI another step further by implementing capabilities to learn and improve from experience without an explicit programming approach. This branch of AI creates applications that are programmed to find patterns and trends in massive volumes of data and then use that data to discover, through trial and error, optimal approaches to achieving goals they are programmed to accomplish.

In other words, AI systems with machine learning capabilities evaluate data in terms of their goals, and then apply different strategies to accomplish those goals without the programmers telling them how to do it.

In effect, machine learning systems apply the same types of iterative and incremental development processes that Agile programmers use to accomplish their goals – except the computers can process much more information and do so much more quickly than humans. Nevertheless, the patterns and strategies quickly identified by the AI systems do not remove the need for humans to analyze the findings, make decisions, and perform the necessary work to resolve the negative trends.

AI researchers branch the discipline of AI into four types and seven patterns. The four types of AI form increasingly higher-level tiers of capabilities and include the following:

- Reactive machines: These are the oldest and simplest form of AI. In this case, an AI application is programmed for a single purpose and reacts to inputs according to its instructions, without saving any findings. In other words, these AI systems only "react" to current inputs, potentially applying millions of calculations before selecting an optimal result for each situation that's encountered.

An example of this type of AI system is applications that compete against humans in playing games, such as chess.

- Limited memory: This is one step beyond reactive machines, where AI systems incorporate limited memory to maintain knowledge gained from previously learned information, stored data, or observed events. The goal of such systems is to build experiential knowledge that is immediately useful but fleeting in terms of time and purpose.

For example, autonomous vehicles monitor their surroundings to identify threats and obstacles, make predictions of trajectories, respond accordingly, and then move on to reassess their surroundings and continue to react accordingly. Such systems need to retain sufficient information about their immediate environment, but then forget and rapidly move on to evaluating incoming data and responding appropriately to the next set of environmental conditions.

- Theory of mind: These types of AI systems attempt to mimic human decision making and communications with the same contextual thoughts and emotions that affect human behavior. This type of AI system must learn rapidly and react to facial expressions, body language, and emotional responses from human beings they are interacting with.

Applications of Theory of Mind AI systems include establishing human-like, responsive, and empathetic communications with human beings. As these capabilities mature, Theory of Mind-based AI systems may improve human-like communications in call center and customer service environments.

- Self-aware: These AI systems attempt to achieve human levels of consciousness. Here, we can think of robots and computing systems that understand their existence, think for themselves, have desires, and have emotive feelings and experiences.

Self-aware AI systems do not currently exist. Potential applications could assist humans with various life or work tasks, where empathy and self-awareness can help ensure safe conditions during interactions with humans. In the old cartoon The Jetsons, you can imagine the cleaning robot, Rosey, as a potential future application.

Of course, a well-known negative example of this type of capability comes from the novel and movie 2001: A Space Odyssey, by Arthur C. Clarke, and its fictional AI computer, HAL. HAL is the onboard computer of the Discovery One spacecraft who becomes self-aware. When HAL makes an unintentional error, he becomes fearful that he will be shut down and thus terminated. So, he begins to kill the ship's astronauts, fearing they will learn of his mistake.

OK, with that negative concern expressed – not to belittle it – let's move on and discuss the current realities, limitations, and capabilities of AI-based technologies. According to Cognilytica, a leading market research firm on the state of AI adoption, the seven patterns of AI are as follows:

- Autonomous systems: These are the AI systems that focus on specific tasks to achieve a directed goal or interact with their surroundings with minimal to no human involvement. There are two types of autonomous systems. The first is a physical and hardware-oriented product such as autonomous vehicles. The second type of autonomous system includes software systems or virtualized autonomous systems such as software bots.

- Conversation and human interaction: These directly interact with humans via a natural conversation, with interactions spanning voice, text, images, and other written formats. The goal is to enable computer-to-human communications in plain-spoken languages or even human-to-human communications, where language differences or disabilities interfere. Such systems can also generate information in text, images, video, audio, and other media formats meant for human consumption.

Another goal is to implement Theory of Mind capabilities to improve understanding between participants. Such applications provide situations where the accurate two-way conveyance of information is critical to supporting a business or social interaction.

- Goal-driven systems: These apply machine learning and other cognitive approaches to learn through trial and error and find an optimal solution. Some people believe that it may theoretically be possible to learn anything through trial and error processes.

Goal-driven AI systems are most valuable when we know what our desired outcome is, but we're not sure how to get there, and there are many alternative approaches to work our way through. Recall the issues we discussed regarding the exponentially expanding inter-relationships between nodes in large, complex systems in Chapter 3, Analyzing Complex System Interactions. Goal-driven systems apply a brute-force approach to discover optimal outcomes by evaluating all potential scenarios.

- Hyper-personalization: This is an application of machine learning that's used to develop unique profiles of individuals, with continued learning and adaption over time to support various purposes. For example, the AI system may, based on individual preferences, display relevant content, recommend relevant products, and provide personalized recommendations and guidance. Hyper-personalization systems evaluate each person as a unique individual.

The applications of hyper-personalization include provisioning personalized health care, financial services, directed insights, product information, general advice, and feedback.

- Patterns and anomalies: These can be detected by applying machine learning and other cognitive approaches. The objective is to identify patterns in the data and discover higher-order connections between information. More precisely, the goal is to gain insights on whether any given piece of data within a more extensive dataset fits an existing pattern. If it does, the data fits within and strengthens the pattern; if not, it's an outlier. In other words, the primary objective of this pattern is to find which one of the things is like the other and which is not.

This AI strategy is applied within VSM tools and platforms to discover patterns and trends.

- Predictive analytics and decisions: This strategy employs machine learning and other cognitive approaches to understand how learned patterns can help predict future outcomes by leveraging insight gained by analyzing system behaviors, interactions, and data.

Predictive analytics is another AI application within modern VSM tools and platforms, as the predicted outcomes can help guide humans in decision making. The most important aspect of this pattern is that humans are still making decisions, but the tools are helping humans make better and more informed ones.

- Recognition: Recognition-based AI systems use machine learning and other cognitive approaches to identify and determine objects or other desired things to be identified within some form of unstructured content.

Structured data is highly organized and formatted to be stored, managed, and searched for within relational databases. In contrast, unstructured data has no predefined format or organization, making it much more challenging to collect, process, and analyze.

Examples of unstructured content include information available in images, video, audio, or text file formats. The objective of AI-based recognition systems is to identify, recognize, segment, or otherwise separate some part of the content so that it can be labeled and tagged for future use. By tagging and labeling unstructured data, the files and metadata can be managed within a relational database alongside other structured data.

The value of using recognition systems is that they are much quicker and potentially more accurate than humans performing the exact same identification and cataloging tasks. Such applications include image and object recognition, facial recognition, sound and audio recognition, item detection, handwriting and text recognition, gesture detection, and identifying patterns within a piece of content.

By now, you should have the idea that AI systems are not quite the black boxes they may seem to be, nor are all the AI patterns applicable to use in VSM tools and platforms.

AI and machine learning systems do the heavy lifting in VSM tools by searching volumes of data to find relationships and patterns that would take humans hundreds of person-hours to find. AI systems aren't performing magic; instead, they follow a set of rules and tasks humans have directed.
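To make the idea of predictive analytics less abstract, the following Python sketch fits a straight-line trend to a metric's history and estimates how many periods remain before the trend reaches a threshold. This is an ordinary least-squares fit, which is far simpler than the machine learning methods a commercial VSM platform might use, and the function names and sample data are hypothetical.

```python
import math


def fit_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def periods_until(values, threshold):
    """Periods after the last observation until the fitted trend reaches
    the threshold, or None if the trend is flat or falling."""
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0, math.ceil(crossing - (len(values) - 1)))


# Hypothetical weekly queue lengths at one pipeline activity.
weekly_queue = [10, 12, 14, 16, 18]
weeks_left = periods_until(weekly_queue, threshold=24)
```

For this sample history, the queue grows by two items per week, so the trend reaches 24 items three weeks after the last observation. As the text notes, the tool supplies the prediction, but humans still decide what to do about it.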

Computing systems can faithfully follow their coded rules and algorithms, precisely apply mathematical and logical expressions innumerable times, all the while searching through volumes of data – with no errors. Comparatively, humans don't do those kinds of tasks very well. Moreover, assuming anyone wanted such a task in a DevOps environment, the work pace is measured in microseconds, and no human would be able to keep up.

Finally, just like humans, some AI systems learn from their experiences and reapply what they've learned to gain new insights and evaluate potential solutions to complex problems.

Humans have always created tools to make their lives easier and more comfortable. This situation is no different when those tools are AI-based VSM tools. They make our jobs easier and our work more productive. But, as with any tool, humans must control AI tools for productive and humane purposes.

Governing the software delivery process

We addressed the subject of IT governance in the previous subsection, titled Maintaining governance and compliance. The context there was maintaining governance policies and standards that IT must support. Here, the issue is using VSM and DevOps tools to improve the governance of the overall software delivery process.

In DevOps pipeline flows, the systems development life cycle follows the same patterns as the traditional and agile models but at a greatly accelerated rate. In other words, we still follow a process that includes identifying a business or user problem, gathering requirements, and then architecting, designing, building, testing, and deploying a solution to deliver customer-centric value. But the speed is so great in DevOps pipelines that we need to ensure proper governance of the process throughout its execution.

There are definitely business, industry, and regulatory compliance aspects to implementing governance as part of the software delivery process, and those compliance requirements also operate at an accelerated rate.

The critical capability here is that VSM tools operate at the same speeds as integrated and automated DevOps pipelines. Modern VSM tools implement and enforce governance policies without human intervention, and thereby minimize risks that humans will miss critical process and compliance requirements at the accelerated pace of software development and delivery.
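To make the idea of automated policy enforcement concrete, here is a minimal sketch of a governance gate. It is not taken from any particular VSM product; the Release fields, the policy names, and the rules themselves are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Release:
    """Hypothetical summary of a release candidate moving through a pipeline."""
    tests_passed: bool
    code_reviewed: bool
    open_critical_vulnerabilities: int


# Each policy is a (name, check) pair. The names and rules are illustrative
# assumptions, not the policies of any real tool or regulation.
POLICIES = [
    ("all tests pass", lambda r: r.tests_passed),
    ("peer review completed", lambda r: r.code_reviewed),
    ("no critical vulnerabilities", lambda r: r.open_critical_vulnerabilities == 0),
]


def evaluate_gate(release):
    """Return the names of the policies that the release violates."""
    return [name for name, check in POLICIES if not check(release)]


def may_deploy(release):
    """A release may proceed only when no policy is violated."""
    return not evaluate_gate(release)


candidate = Release(tests_passed=True, code_reviewed=False,
                    open_critical_vulnerabilities=2)
violations = evaluate_gate(candidate)
```

Because the gate runs as code inside the pipeline, every release candidate is checked against the same rules at pipeline speed, and the list of violations can double as an audit record.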

Managing value streams as continuous flows

The critical issue with the traditional Waterfall software development model was that it did not enforce a Lean-oriented production process. The entire system development life cycle was segmented and extended beyond what was reasonable. The traditional Waterfall model was entirely out of step with the Lean production processes that organizations implemented across their other value streams.

Agile practices helped a bit by implementing iterative and incremental development patterns. But the early agile methodologies, such as Scrum, still enforced batch processing in the form of Sprints. Kanban Boards helped improve flows within the Sprint but did not improve overall system development pipeline flows.

CI/CD and DevOps pipelines implemented integrated toolchains and automation strategies to improve work and information flow from end to end, including extending into operations-oriented support activities. But that still leaves the matter of orchestration to ensure proper and efficient pipeline flows, as well as to manage governance and compliance requirements throughout the software delivery process.

Modern VSM tools implement orchestration capabilities from end to end across the software development and operations-oriented value streams. With DevOps VSM tools, the organization can achieve continuous and optimized software delivery and support flows.

Visualizing value streams

In a modern DevOps pipeline, most activities occur in an automated and orchestrated fashion, without human intervention. Without visualization capabilities, we would have to take things as a matter of faith that the pipeline is operating as intended. Unfortunately, this means that if there are issues, we generally won't find out until after the fact, when we have a more significant problem to deal with due to missed deliveries or missed requirements.

Modern VSM tools provide visualization capabilities that display key metrics and pipeline flows. These tools also provide graphical visualizations that show where work items are queuing and waiting. In short, modern VSM tools help identify areas of waste that need improvement and provide visualization capabilities to see where that waste lies.
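As a rough illustration of the kind of metric behind such visualizations, the following sketch computes flow efficiency (active time divided by total lead time) and draws a crude text chart of waiting time per stage. The stage names and hours are invented sample data, and a real VSM tool would render far richer graphics.

```python
# Each stage is (name, active_hours, wait_hours). The figures are made-up
# sample data used only to demonstrate the calculations.
STAGES = [
    ("build", 1, 0),
    ("test", 2, 6),
    ("deploy", 1, 2),
]


def flow_efficiency(stages):
    """Share of total lead time spent on value-adding (active) work."""
    active = sum(a for _, a, _ in stages)
    total = sum(a + w for _, a, w in stages)
    return active / total if total else 0.0


def largest_queue(stages):
    """Name of the stage where work items wait the longest."""
    return max(stages, key=lambda s: s[2])[0]


def wait_chart(stages):
    """One text bar per stage, with one '#' per hour of waiting."""
    return [f"{name:<8}{'#' * wait}" for name, _, wait in stages]


for line in wait_chart(STAGES):
    print(line)
```

Even in this toy form, the chart makes the longest queue stand out at a glance, which is the point of visualizing waste rather than reading it from a table.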

Improving cross-functional and cross-team collaborations

This area is the original purpose of DevOps: to enable collaborations between development- and operations-oriented teams. But communication and collaboration must occur across the entire value delivery chain and all the value streams – not just development and operations teams.

In our modern digital world, it's not unusual to have collaborating teams spread out across large worksite locations, geographic regions, and even international boundaries. The tools that are used for communicating, collaborating, and orchestrating work include project management software, video conferencing, office chat or instant messaging systems, file sharing, online and real-time collaborative document revision tools, document management and synchronization tools, online whiteboards, and version control tools.

The value of these capabilities is that it's nearly impossible to maintain continuous flows and orchestration of work without constant and appropriate communication and collaboration. The more geographically dispersed the organization and its customers and supply chain partners are, the more these tools become necessary.

Improving value stream efficiencies and handoffs

This entire book is about improving production flows across value streams of all types, but IT-oriented value streams in particular. The IT industry has transitioned through a paradigm shift that moved production from the traditional project-based flows to Agile's batch-oriented workflows, and then to modern CI/CD and DevOps pipeline flows. Those organizations that effectively implement DevOps capabilities have a distinct competitive advantage over those that do not.

While some of the concepts behind DevOps can be made possible without integrated toolchains and automated processes, those environments will not be competitive with other organizations that have fully invested in the DevOps and VSM tools. Quite simply, DevOps and VSM platforms offer accelerated software deliveries with higher quality than the traditional and Agile-based practices.

That's not to imply that DevOps or even VSM is something different to Agile. By analogy, turtles and rabbits are both types of animals, but rabbits are much faster than turtles. The same is true in IT. DevOps pipelines are much faster at delivering software value than traditional or Agile-based workflows. They are also more efficient and tend to deliver higher quality products.

Improved quality

The previous subsection mentioned that IT shops that have implemented DevOps and VSM platforms tend to deliver higher quality products. Let's take a moment to examine why.

In the traditional Waterfall model, testing is the last activity that's performed before deployment. The problem with this approach is that the code base is entirely built by that time and likely hiding a million and one bugs. That's perhaps a slight exaggeration, but there will be many bugs – guaranteed. Worse, they will be hard to isolate and fix at that late stage.

Agile practices are much better in that the development teams are building smaller increments of code iteratively and testing each new increment of functionality along the way. So, even if the software is delayed across several sprints to go out as a formal release, the software code is generally better tested than in the traditional model.

But CI/CD and DevOps pipelines take things to a whole other level by automating testing and provisioning the test environments on demand. For example, I worked in a shop where each day's code was run through a series of tests, often tens of thousands of tests, every night. As a result, it was extremely rare to see a bug make it into production.

This type of capability can be installed in an Agile team. But the next step is to deliver tiny increments of functionality – often referred to as microservices – many times a day or even many times an hour, and to dynamically spin up production-like test environments on demand to test each new micro-release of the software. That is what continuous delivery is all about.

But some organizations take the CD concept even further and allow each new code release that passes all functional, non-functional, and performance tests to go directly into production. The development team may release new features and functions to select groups of users, by roles, within the production environments. This helps minimize risks by having a small group of users validate that the feature or function works as intended before making a full-scale deployment to all users.
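Releasing a feature to select groups of users by role can be sketched with a simple feature-flag lookup. This is a minimal illustration under assumed names; the feature key and the roles are hypothetical, and production systems typically rely on a dedicated feature-management service.

```python
# Hypothetical mapping of feature names to the roles allowed to see them.
ENABLED_ROLES = {
    "new_checkout": {"beta_tester", "internal_staff"},
}


def is_enabled(feature, user_roles):
    """True when the user holds at least one role the feature is released to."""
    allowed = ENABLED_ROLES.get(feature, set())
    return bool(allowed & set(user_roles))


def release_to_everyone(feature, all_roles):
    """Widen the rollout once the pilot group has validated the feature."""
    ENABLED_ROLES[feature] = set(all_roles)
```

The pilot group sees the feature in production first, and widening the rollout is a data change rather than a new deployment.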

Performing what if analysis

We have touched on this subject several times in this book. Organizational investments in DevOps skills and toolchains are not inexpensive, nor are they quick to implement across larger organizations. Such organizations need to make changes incrementally to afford these investments and minimize potential disruptions as the development teams come up to speed.

As you now know from reading the VSM methodology chapters (Chapter 6, Launching the VSM initiative (VSM Steps 1-3) through Chapter 10, Improving the Lean-Agile Value Delivery Cycle (VSM Steps 7 and 8)), VSM achieves desired future state operations incrementally over time. The basic idea is to evaluate all activities across your value stream flows and prioritize them based on eliminating the most waste for the least cost. This strategy will incrementally improve value stream flows quickly and cost-effectively. Most importantly, the Lean-Agile process improvement activities never stop. We can always do better.

But then the question is, how do we know which alternative approaches are best to take us from the current state to a better future state of operations? That is where the what if capabilities of VSM tools and platforms come into play. In effect, we can simulate making changes to products, equipment, processes, and activities before making the investments to see the effect on the overall productivity of the value stream.
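A very small what if model shows the principle. The sketch below assumes each stage has a work time and a wait time in hours, applies a proposed change to a copy of the current state, and ranks the alternatives by hours of lead time saved per unit of cost. All stage names, hours, and costs are invented for illustration; commercial VSM tools use much more sophisticated simulations.

```python
# Current-state map: hours of work and waiting per stage (sample data).
CURRENT_STATE = {
    "build": {"work": 2, "wait": 4},
    "test": {"work": 8, "wait": 16},
    "deploy": {"work": 1, "wait": 8},
}

# Candidate improvements with hypothetical costs in arbitrary units.
CHANGES = [
    {"name": "automate tests", "stage": "test",
     "new": {"work": 2, "wait": 2}, "cost": 30},
    {"name": "self-service deploy", "stage": "deploy",
     "new": {"wait": 1}, "cost": 5},
]


def lead_time(stages):
    """Total lead time: work plus waiting across all stages."""
    return sum(s["work"] + s["wait"] for s in stages.values())


def what_if(stages, change):
    """Projected lead time after a change, leaving the baseline untouched."""
    projected = {name: dict(values) for name, values in stages.items()}
    projected[change["stage"]].update(change["new"])
    return lead_time(projected)


def rank_changes(stages, changes):
    """Sort changes by hours saved per unit of cost, best first."""
    baseline = lead_time(stages)
    scored = []
    for change in changes:
        saved = baseline - what_if(stages, change)
        scored.append((change["name"], saved, saved / change["cost"]))
    return sorted(scored, key=lambda item: item[2], reverse=True)


ranking = rank_changes(CURRENT_STATE, CHANGES)
```

With this sample data, automating tests saves more hours overall, but the cheaper deployment change ranks first because it removes more waste per unit of cost, which is exactly the trade-off a what if analysis is meant to expose before any money is spent.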

Reusing templates for better standard work items

DevOps VSMPs, VSDPs, and CCA tools may include features that help simplify tedious though relatively common work. For example, VSMP tools may include adapter forms and templates for entering the parameters needed to connect databases and applications. Both VSMPs and VSDPs may include value stream mapping tools and templates to expedite the development of current state and future state maps. On the other hand, CCA tools may include templates that their systems populate as audit trails to demonstrate compliance with regulatory requirements.

Unifying data and artifacts

Large enterprises with multiple product lines find it difficult to assess value stream productivity when the functional departments and value streams all use different data sources and artifacts to monitor, govern, and document their activities. The situation worsens when the organizations rely on manual labor to capture and reformat the information for management, customer, and stakeholder consumption.

Lean and VSM practices give us common semantics to describe how to improve value delivery. That's half the problem solved. The other half is to eliminate the manual labor of collecting, transforming, and delivering information. Though the information may be valuable for decision making, it's probably late, and the work is non-value-added from our customers' perspectives. Modern VSM platforms address these issues by automating data capture in real time, as well as providing tools that allow the data consumers to visualize and analyze the value stream data at any time and with multiple tools and formats.

Integrating, automating, and orchestrating value stream activities

Lean product improvements encompass the integration, automation, and orchestration of value-adding activities, no matter the value stream. The objectives are to deliver customer-centric value more rapidly and with higher quality by eliminating waste. Modern DevOps and VSM platforms eliminate waste and improve productivity across software development, delivery, operations, and support processes.

However, software potentially touches all organizational value streams. Therefore, we must use DevOps and VSM methods and tools to integrate, automate, and orchestrate all value stream activities via software solutions.

Providing a common data architecture

Many IT organizations allow their development teams to select different tools for their integrated CI/CD and DevOps toolchains. Providing this flexibility is the predicate upon which DevOps VSMPs operate. While the VSMP strategy provides a great deal of flexibility and use of best-in-class tools, the downside is that each tool potentially has a different datastore and data model, making it more difficult to retrieve, normalize, and use data across the toolchain.

VSDP vendors solve this problem by minimizing the tool options and integrating them in a single data store with normalized data across all value stream activities. With VSMP tools, each data source must be mapped to a central repository. In either case, the value of having a single data store that contains normalized data is that queries operate end to end on all the data items that have been managed for the value streams, as well as their activities. In short, single-source data stores with data normalization make it easier to generate reports, conduct analysis, and create graphical visualizations of pipeline flows.
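The mapping step can be pictured as a set of small adapters, one per tool, that translate each tool's records into one common schema. The sketch below is only an illustration: the two source formats, their field names, and the common schema (item_id, stage, timestamp) are all assumptions rather than the data model of any real product.

```python
from datetime import datetime, timezone


def from_tracker(record):
    """Map a hypothetical issue-tracker record to the common schema."""
    return {
        "item_id": record["key"],
        "stage": "plan",
        "timestamp": datetime.fromisoformat(record["created"]),
    }


def from_ci(record):
    """Map a hypothetical CI-server record to the common schema."""
    return {
        "item_id": record["work_item"],
        "stage": "build",
        "timestamp": datetime.fromtimestamp(record["finished_epoch"],
                                            tz=timezone.utc),
    }


def normalize(tracker_records, ci_records):
    """Merge both sources into one list ordered by item and time."""
    events = [from_tracker(r) for r in tracker_records]
    events += [from_ci(r) for r in ci_records]
    return sorted(events, key=lambda e: (e["item_id"], e["timestamp"]))


def elapsed_hours(events, item_id):
    """End-to-end query: hours between an item's first and last event."""
    times = [e["timestamp"] for e in events if e["item_id"] == item_id]
    return (max(times) - min(times)).total_seconds() / 3600


# Sample records in two different source formats (invented data).
tracker = [{"key": "A-1", "created": "2024-01-01T09:00:00+00:00"}]
ci = [{"work_item": "A-1", "finished_epoch": 1704106800}]
events = normalize(tracker, ci)
```

Once both sources share the same fields and time representation, a single query such as elapsed_hours can span the toolchain without knowing which tool produced each record.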

Developing and populating key performance indicators

Definitionally, key performance indicators (KPIs) are the organization's defined key indicators of progress toward an intended result. Organizations may define KPIs to support strategic and tactical objectives. KPIs are metrics-based, as the results must be measurable to determine progress against our goals and objectives.