CHAPTER 7

Low Tech: Social Engineering and Physical Security

In this chapter you will learn about

• Defining social engineering

• Describing the different types of social-engineering attacks

• Describing insider attacks, reverse social engineering, dumpster diving, social networking, and URL obfuscation

• Describing phishing attacks and countermeasures

• Listing social-engineering countermeasures

• Describing physical security measures

When kids call home unexpectedly it usually means trouble; so when the phone rang that midsummer’s day in Huntsville, Alabama, I just knew something was wrong. My daughter, 17 years old at the time, was nearly hysterical. Calling from the side of a fairly busy road, she frantically blurted out that the car had “blown up.” Most people hearing this would instantly picture flames and a mushroom cloud, but I knew what she meant: It was simply kaput. So I calmed her down and drove out to check things out.

I tried to apply logic to the situation. She had been driving and the car just stopped, so my first thought was that the problem was electrical; I checked the battery and wiring. When those checked out I decided it may be a vacuum leak or other engine problem, so I looked over the engine. I checked for leaks, and even verified the pedal mechanisms were working. After about half an hour, I deduced this was beyond fixing on the side of the road, that it just had to be a computer problem, and called a tow.

The tow truck cost me $120, and took our van to a local shop about 10 minutes down the road from the house. The van actually made it to the shop before I got home, and as I walked through the front door the phone was already ringing. Donnie Anderson, a great repair man and a whiz of an auto mechanic, was laughing when I finally got to the phone. When I asked what was so funny, he said, “So, Matt, when was the last time you put gas in this thing?”

Sometimes we try to overcomplicate things, especially in this technology-charged career field we’re in. We look for answers that make us feel more intelligent, to make us appear smarter to our peers. We seem to want the complicated way—to have to learn some vicious code listing that takes six servers churning away in our basement to break past our target’s defenses. We look for the tough way to break in, when it’s sometimes just as easy as asking someone for a key. Want to be a successful ethical hacker? Learn to take pride in, and master, the simple things. It’s the gas in your tank that keeps your pen-test machine running.

This chapter is all about the nontechnical things you may not even think about as a “hacker.” Checking the simple stuff first, targeting the human element and the physical attributes of a system, is not only a good idea, it’s critical to your overall success.

Social Engineering

Every major study on technical vulnerabilities and hacking will say the same two things. First, the users themselves are the weakest security link. Whether on purpose or by mistake, users, and their actions, represent a giant security hole that simply can’t ever be completely plugged. Second, an inside attacker poses the most serious threat to overall security. Although most people agree with both statements, they rarely take them in tandem to consider the most powerful—and scariest—flaw in security: What if the inside attacker isn’t even aware she is one? Welcome to the nightmare that is social engineering.

Show of hands, class: How many of you have held the door open for someone racing up behind you, with his arms filled with bags? How many of you have slowed down to let someone out in traffic, allowed the guy with one item in line to cut in front of you, or carried something upstairs for the elderly lady in your building? I, of course, can’t see the hands raised, but I’d bet most of you have performed these, or similar, acts on more than one occasion. This is because most of you see yourselves as good, solid, trustworthy people, and given the opportunity, most of us will come through to help our fellow man (or woman) in times of need.

For the most part, people naturally trust one another—especially when authority of some sort is injected into the mix—and they will generally perform good deeds for one another. It’s part of what some might say is human nature, however that may be defined. It’s what separates us from the animal kingdom, and the knowledge that most people are good at heart is one of the things that makes life a joy for a lot of folks. Unfortunately it also represents a glaring weakness in security that attackers gleefully, and very successfully, take advantage of.

| EXAM TIP Most of this section and chapter deal with insider attacks, whether the user is a willing participant or not. It’s important to remember, though, that the disgruntled employee (whether still inside the organization or recently fired but still holding the knowledge to cause serious harm) represents the single biggest threat to a network. |

Social engineering is the art of manipulating a person, or a group of people, into providing information or a service they otherwise would never have given. Social engineers prey on people’s natural desire to help one another, their tendency to listen to authority, and their trust of offices and entities. For example, I’d bet 90 percent or more of users will say, if asked directly, that they should never share their password with anyone. However, I’d bet out of that same group, 30 percent or more of them will gladly hand over their password—or provide an easy means of getting it—if they’re asked nicely by someone posing as a help desk employee or network administrator. Put that request in a very official looking e-mail, and the success rate will go up even higher.

| NOTE From the truly ridiculous files regarding social engineering, Infosecurity Europe did a study where they found 45 percent of women and 10 percent of men were willing to give up their password during the study…for a chocolate bar. (www.geek.com/articles/news/women-are-more-likely-to-give-up-passwords-for-chocolate-20080417/) |

Social engineering is a nontechnical method of attacking systems, which means it’s not limited to people with technical know-how. Whereas technically minded people might attack firewalls, servers, and desktops, social engineers attack the help desk, the receptionist, and the problem user down the hall everyone is tired of working with. It’s simple, easy, effective, and darn near impossible to contain.

Human-Based Attacks

All social-engineering attacks fall into one of two categories: human-based or computer-based. Human-based social engineering uses interaction in conversation or other circumstances between people to gather useful information. This can be as blatant as simply asking someone for their password, or as elegantly wicked as getting the target to call you with the information—after a carefully crafted setup, of course. The art of human interaction for information-gathering has many faces, and there are simply more attack vectors than we could possibly cover in this or any other book. However, here are just a few:

• Dumpster diving: Exactly what it sounds like, dumpster diving requires you to get down and dirty, digging through the trash for useful information. Rifling through the dumpsters, paper-recycling bins, and office trashcans can provide a wealth of information. Things such as written-down passwords (to make them “easier to remember”) and network design documents are obvious, but don’t discount employee phone lists and other information. Knowing the employee names, titles, and upcoming events makes it much easier for a social engineer to craft an attack later on. Although technically a physical security issue, dumpster diving is covered as a social engineering topic per EC-Council.

• Impersonation: In this attack, a social engineer pretends to be an employee, a valid user, or even an executive (or other VIP). Whether by faking an identification card or simply by convincing employees of his “position” in the company, an attacker can gain physical access to restricted areas, thus providing further opportunities for attacks. Pretending to be a person of authority, the attacker might also use intimidation on lower-level employees, convincing them to assist in gaining access to a system. Of course, as an attacker, if you’re going to impersonate someone, why not impersonate a tech support person? Calling a user as a technical support person and warning him of an attack on his account almost always results in good information.

• Technical support: A form of impersonation, this attack is aimed at the technical support staff themselves. Tech support professionals are trained to be helpful to customers; it’s their goal to solve problems and get users back online as quickly as possible. Knowing this, an attacker can call up posing as a user and request a password reset. The help desk person, believing they’re helping a stranded customer, unwittingly resets a password to something the attacker knows, thus granting him access the easy way.

• Shoulder surfing: If you’ve got physical access, it’s amazing how much information you can gather just by keeping your eyes open. An attacker taking part in shoulder surfing simply looks over the shoulder of a user and watches them log in, access sensitive data, or provide valuable steps in authentication. Believe it or not, shoulder surfing can also be done “long distance,” using telescopes and binoculars (referred to as “surveillance” in the real world). And don’t discount eavesdropping as a side benefit too: While standing around waiting for an opportunity, an attacker may be able to discern valuable information by simply overhearing conversations.

• Tailgating and piggybacking: Although many of us use the terms interchangeably, there is a semantic difference between them (trust me on this). Tailgating occurs when an attacker has a fake badge and simply follows an authorized person through the opened security door; smoker’s docks are great for this. Piggybacking is a little different in that the attacker doesn’t have a badge but asks for someone to let her in anyway. She may say she’s left her badge on her desk or at home. In either case, an authorized user holds the door open for her even though she has no badge visible.

| NOTE In hacker lingo, potential targets for social engineering are known as “Rebecca” or “Jessica.” When communicating with other attackers, the terms can provide information on whom to target—for example, “Rebecca, the receptionist, was very pleasant and easy to work with.” |

Lastly, a really devious social-engineering impersonation attack, known as reverse social engineering, involves getting the target to call you with the information. The attacker will pose as some form of authority or technical support and set up a scenario whereby the user feels he must dial in for support. And, like seemingly everything involved in this certification exam, specific steps are taken in the attack: advertisement, sabotage, and support. First, the attacker advertises or markets his position as “technical support” of some kind. In the second step, the attacker performs some sort of sabotage, whether a sophisticated DoS attack or simply pulling cables. In any case, the damage is such that the user feels they need to call technical support, which leads to the third step: The attacker “helps” by asking for login credentials, thereby gaining access to the system.

| NOTE This actually points out a general truth in the pen-testing world: Inside-to-outside communication is always more trusted than outside-to-inside communication. Having someone internal call you, instead of the other way around, is akin to starting a drive on the opponent’s one-yard line; you’ve got a much greater chance of success this way. |

For example, suppose a social engineer has sent an e-mail to a group of users warning them of “network issues tomorrow,” and has provided a phone number for the “help desk” if they are affected. The next day, the attacker performs a simple DoS on the machine, and the user dials up, complaining of a problem. The attacker then simply says, “Certainly I can help you—just give me your ID and password and we’ll get you on your way….”

Regardless of the “human-based” attack you choose, remember presentation is everything. The “halo effect” is a well-known and studied phenomenon of human nature, whereby a single trait influences the perception of other traits. If, for example, a person is attractive, studies show people will assume they are more intelligent and will also be more apt to provide them with assistance. Humor, great personality, and a “smile while you talk” voice can take you far in social engineering. Remember, people want to help and assist you (most of us are hard-wired that way), especially if you’re pleasant.

Computer-Based Attacks

Computer-based attacks are those attacks carried out with the use of a computer or other data-processing device. These attacks can include specially crafted pop-up windows, tricking the user into clicking through to a fake website, and SMS texts, which provide false technical support messages and dial-in information to a user. These can get very sophisticated once you inject the world of social networking into the picture. A quick jaunt around Facebook, Twitter, and LinkedIn can provide all the information an attacker needs to profile, and eventually attack, a target. Lastly, spoofing entire websites, wireless access points, and a host of other entry points is often a goldmine for hackers.

Social networking has provided one of the best means for people to communicate with one another, and to build relationships to help further personal and professional goals. Unfortunately, this also provides hackers with plenty of information on which to build an attack profile. For example, consider a basic Facebook profile: Date of birth, address, education information, employment background, and relationships with other people are all laid out for the picking (see Figure 7-1). LinkedIn provides that and more—showing exactly what specialties and skills the person holds, as well as peers they know and work with. A potential attacker might use this information to call up as a “friend of a friend” or to drop a name in order to get the person’s guard lowered and then to mine for information.

The simplest, and by far the most common, method of computer-based social engineering is known as phishing. A phishing attack involves crafting an e-mail that appears legitimate but in fact contains links to fake websites or to malicious downloads. The e-mail can appear to come from a bank, credit card company, utility company, or any number of legitimate business interests a person might work with. The links contained within the e-mail lead the user to a fake web form in which information entered is saved for the hacker’s use.

Phishing e-mails can be very deceiving, and even a seasoned user can fall prey to them. Although some phishing e-mails can be prevented with good perimeter e-mail filters, it’s impossible to prevent them all. The best way to defend against phishing is to educate users on methods to spot a bad e-mail. Figure 7-2 shows an actual e-mail I received some time ago, with some highlights pointed out for you. Although a pretty good effort, it still screamed “Don’t call them!” to me.

Figure 7-1 A Facebook profile

Figure 7-2 Phishing example

The following list contains items that may indicate a phishing e-mail—items that can be checked to verify legitimacy:

• Beware unknown, unexpected, or suspicious originators. As a general rule, if you don’t know the person or entity sending the e-mail, it should probably raise your antenna. Even if the e-mail is from someone you know, but the content seems out of place or unsolicited, it’s still something to be cautious about. In the case of Figure 7-2, not only was this an unsolicited e-mail from a known business, but the address in the “From” line was [email protected]—a far cry from the real Capital One, and a big indicator this was destined for the trash bin.

• Beware whom the e-mail is addressed to. We’re all cautioned to watch where an e-mail’s from, but an indicator of phishing can also be the “To” line itself, along with the opening e-mail greeting. Companies just don’t send messages out to all users asking for information. They’ll generally address you, personally, in the greeting instead of providing a blanket description: “Dear Mr. Walker” vs. “Dear Member.” This isn’t necessarily an “Aha!” moment, but if you receive an e-mail from a legitimate business that doesn’t address you by name, you may want to show caution. Besides, it’s just rude.

• Verify phone numbers. Just because an official-looking 800 number is provided does not mean it is legitimate. There are hundreds of sites on the Internet to validate the 800 number provided. Be safe, check it out, and know the friendly person on the other end actually works for the company you’re doing business with.

• Beware bad spelling or grammar. Granted, a lot of us can’t spell very well, and I’m sure e-mails you receive from your friends and family have had some “creative” grammar in them. However, e-mails from MasterCard, Visa, and American Express aren’t going to have misspelled words in them, and they will almost never use verbs in the wrong tense. Note in Figure 7-2 that the word activity is misspelled.

• Always check links. Many phishing e-mails point to bogus sites. Simply changing a letter or two in the link, adding or removing a letter, changing the letter o to a zero, or changing a letter l to a one completely changes the DNS lookup for the click. For example, www.capitalone.com will take you to Capital One’s website for your online banking and credit cards. However, www.capita1one.com will take you to a fake website that looks a lot like it, but won’t do anything other than give your user ID and password to the bad guys. Additionally, even if the text reads www.capitalone.com, hovering the mouse pointer over it will show where the link really intends to send you.
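The link check described in the last bullet can be partly automated. The following is a minimal Python sketch, not a production filter: it assumes the e-mail body is HTML, and the names `LOOKALIKES`, `hostname`, `is_lookalike`, `LinkCollector`, and `suspicious_links` are illustrative inventions rather than part of any mail product. It flags two things from the bullet: a link whose host differs from a trusted host only by digit-for-letter swaps, and a link whose visible text shows one address while the underlying target points somewhere else.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Digit-for-letter swaps mentioned in the text (illustrative, not exhaustive).
LOOKALIKES = str.maketrans({"0": "o", "1": "l"})


def hostname(url: str) -> str:
    """Return the lowercase host of a URL, tolerating a missing scheme."""
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def is_lookalike(host: str, trusted_host: str) -> bool:
    """True if host differs from trusted_host only by the swaps above."""
    return (host != trusted_host
            and host.translate(LOOKALIKES) == trusted_host.translate(LOOKALIKES))


class LinkCollector(HTMLParser):
    """Collect (visible text, href) pairs for every <a> tag in an HTML body."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def suspicious_links(html: str, trusted_host: str):
    """Return (text, href, reason) for each link that deserves a second look."""
    collector = LinkCollector()
    collector.feed(html)
    findings = []
    for text, href in collector.links:
        target = hostname(href)
        if is_lookalike(target, trusted_host):
            findings.append((text, href, "lookalike domain"))
        elif "." in text and " " not in text and hostname(text) != target:
            # The visible text looks like an address but the link goes elsewhere.
            findings.append((text, href, "displayed address differs from target"))
    return findings


if __name__ == "__main__":
    body = '<a href="http://www.capita1one.com/login">www.capitalone.com</a>'
    for finding in suspicious_links(body, "www.capitalone.com"):
        print(finding)
```

A check like this only covers the two tricks named above; it is the programmatic equivalent of hovering over the link, and it will not catch every obfuscation an attacker might use.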

| NOTE Using a sign-in seal is an e-mail protection method in use at a variety of business locations. The practice is to use a secret message or image that can be referenced on any official communication with the site. If you receive an e-mail purportedly from the business that does not include the image or message, you should suspect it’s probably a phishing attempt. This sign-in seal is kept locally on your computer, so the theory is no one can copy or spoof it. |

Although phishing is probably the most prevalent computer-based attack you’ll see, there are plenty of others. Many attackers make use of code to create pop-up windows users will unknowingly click, as shown in Figure 7-3. These pop-ups take the user to malicious websites where all sorts of badness is downloaded to their machines, or users are prompted for credentials at a realistic-looking web front. By far the most common modern implementation of this is the fake antivirus (AV) program, which often takes advantage of outdated Java installations on systems. Usually hidden in ad streams on legitimate sites, JavaScript is downloaded that, in effect, takes over the entire system, preventing the user from starting any new executables.

Figure 7-3 Fake AV pop-up

Another very successful computer-based social-engineering attack involves the use of chat or messenger channels. Attackers not only use chat channels to find out personal information to employ in future attacks, but they make use of the channels to spread malicious code and install software. In fact, IRC is one of the primary ways zombies (computers that have been compromised by malicious code and are part of a “bot-net”) are manipulated by their malicious code masters.

And, finally, we couldn’t have a discussion on social-engineering attacks without at least a cursory mention of how to prevent them. Setting up multiple layers of defense, including change-management procedures and strong authentication measures, is a good start, and promoting policies and procedures is also a good idea. Other physical and technical controls can also be set up, but the only real defense against social engineering is user education. Training users—especially those in technical-support positions—how to recognize and prevent social engineering is the best countermeasure available.

The Science of Close Enough

So you’re a business owner, and you’ve read enough so far to know it’s important to create, maintain, and enforce a good overall security policy in an effort to keep everyone educated and to protect your systems. The problem is, it’s simply impossible to do. Well, nearly impossible anyway.

Most businesses just don’t have a “culture of security,” and most don’t necessarily care to develop one. This isn’t because they don’t believe in security or don’t see the value in it, but rather because it goes against everything our mothers tried to teach us as kids. The “security culture” is insensitive, and goes against the principle of “our employees are our most valuable asset.” Not to mention it generally requires people to be suspicious, prying, and intolerant; as we’ve talked about already in this chapter, that’s just not how Mom raised us. We want to be helpful, good-natured, trusting people, and the owners and managers of the company want to believe their people are just that. A policy telling us to question everything and everyone, and challenge strangers in the hallway for their badges (requiring a—gasp!—physical confrontation and speaking to another human being), seems like it’s in bad form and, let’s be honest, will wind up hurting people’s sensibilities and feelings.

In the real world, it seems the only companies that do adopt a true security culture are government contractors, banks, hospitals, and casinos, better known as frequent targets of sophisticated adversaries. This isn’t because government employees, bank tellers, or casino associates are heartless, emotionless automatons immune to your petty sensibilities. It’s usually because these entities simply have to be this way. Whether by government regulation and requirement, or by the simple fact of survival, these groups know what it takes to make it in the security world, and they don’t really have a choice.

But what about your company or business? Is there really a Catch-22 where security policy and education are concerned? Well, consider just a few examples. You can start with IT training for your employees, but as we’ve all seen so often before, it’s not always the most useful thing on the planet. Your productive employees complain it’s a waste of their valuable work time and, although it does provide knowledge to your employees, some would say reading the phone book provides knowledge in a similar fashion (in other words, not very well). Additionally, IT training rarely presents real-world examples where simply being nice results in millions of dollars in losses—or the potential for it. How about an incentive program, then, where people are rewarded for calling out badgeless wanderers or other security violators? Usually this results in vigilante “Barney Fifes” running around the business, making things worse rather than better. So what about enforcing a punitive policy, wherein someone with a password under their keyboard gets fired? Now you’ve got huge morale problems to deal with.

The hopeless situation security personnel and managers find themselves in doesn’t have an easy solution. It requires patience, effort, and time. A good friend of mine once said that IT security engineering is “the science of getting close enough.” That may sound weird, because “close enough” isn’t good enough when an open vulnerability winds up losing business or personal assets, but when you pause the knee-jerk reaction for a moment and think about it, he’s right. Balance your efforts, risk-analyze what you’re protecting and what efforts you might take to do so, and only apply measures where needed. Security for a 10-person company that builds $1 widgets will, and should, look different from security for a bank with $1 billion in holdings.

Physical Security

Physical security is perhaps one of the most overlooked areas in an overall security program. For the most part, all the NIDS, HIDS, firewalls, honeypots, and security policies you put into place are pointless if you give an attacker physical access to the machine(s). And you can kiss your job goodbye if that access reaches into the network closet, where the routers and switches sit.

From a penetration test perspective, it’s no joyride either. Generally speaking, physical security penetration is much more of a “high-risk” activity for the penetration tester than many of the virtual methods we’re discussing. Think about it: If you’re sitting in a basement somewhere firing binary bullets at a target, it’s much harder for them to actually figure out where you are, much less to lay hands on you. Pass through a held-open door and wander around the campus without a badge, and someone, eventually, will catch you. When strong IT security measures are in place, though, determined attackers will move to the physical attacks to accomplish the goal. This section covers the elements of physical security you’ll need to be familiar with for the exam.

Physical Security 101

Physical security includes the plans, procedures, and steps taken to protect your assets from deliberate or accidental events that could cause damage or loss. Normally people in our particular subset of IT tend to think of locks and gates in physical security, but it also encompasses a whole lot more. You can’t simply install good locks on your doors and ensure the wiring closet is sealed off to claim victory in physical security; you’re also called to think about those events and circumstances that may not be so obvious. These physical circumstances you need to protect against can be natural, such as earthquakes and floods, or manmade, ranging from vandalism and theft to outright terrorism. The entire physical security system needs to take it all into account and provide measures to reduce or eliminate the risks involved.

Furthermore, physical security measures come down to three major components: physical, technical, and operational. Physical measures include all the things you can touch, taste, smell, or get shocked by. For example, lighting, locks, fences, and guards with Tasers are all physical measures. Technical measures are a little more complicated. These are measures taken with technology in mind, to protect explicitly at the physical level. For example, authentication and permissions may not come across as physical measures, but if you think about them within the context of smart cards and biometrics, it’s easy to see how they become technical measures for physical security. Operational measures are the policies and procedures you set up to enforce a security-minded operation. For example, background checks on employees, risk assessments on devices, and policies regarding key management and storage would all be considered operational measures.

| EXAM TIP Know the three major categories of physical security measures and be able to identify examples of each. |

To get you thinking about a physical security system and the measures you’ll need to take to implement it, it’s probably helpful to start from the inside out and draw up ideas along the way. For example, inside the server room or the wiring closet, there are any number of physical measures we’ll need to control. Power concerns, the temperature of the room, and the air quality itself (dust can be a killer, believe me) are examples of just a few things to consider. Along that line of thinking, maybe the ducts carrying air in and out need special attention. For that matter, someone knocking out your AC system could effect an easy denial of service on your entire network, couldn’t it? What if they attack and trip the water sensors for the cooling systems under the raised floor in your computer lab? Always something to think about….

How about some technical measures to consider? What about the authentication of the server and network devices themselves—if you allow remote access to them, what kind of authentication measures are in place? Are passwords used appropriately? Is there virtual separation—that is, a DMZ they reside in—to protect against unauthorized access? Granted, these aren’t physical measures by their own means (authentication might cut the mustard, but location on a subnet sure doesn’t), but they’re included here simply to continue the thought process of examining the physical room.

Continuing our example here, let’s move around the room together and look at other physical security concerns. What about the entryway itself: Is the door locked? If so, what is needed to gain access to the room—perhaps a key? In demonstrating a new physical security measure to consider—an operational one, this time—who controls the keys, where are they located, and how are they managed? We’re already covering enough information to employ at least two government bureaucrats and we’re not even outside the room yet. You can see here, though, how the three categories work together within an overall system.

| NOTE You’ll often hear that security is “everyone’s responsibility.” Although this is undoubtedly true, some people hold the responsibility a little more tightly than others. The physical security officer (if one is employed), information security employees, and the CIO are all accountable for the system’s security. |

Another term you’ll need to be aware of is access controls. Access controls are physical measures designed to prevent access to controlled areas. They include biometric controls, identification/entry cards, door locks, and man traps. Each of these is interesting in its own right.

Biometrics includes the measures taken for authentication that come from the “something you are” concept. Biometrics can include fingerprint readers, face scanners, retina scanners, and voice recognition (see Figure 7-4). The great thing behind using biometrics to control access—whether physically or virtually—is that it’s very difficult to fake a biometric signature (such as a fingerprint). The bad side, though, is a related concept: Because the nature of biometrics is so specific, it’s very easy for the system to read false negatives and reject a legitimate user’s access request.

Can I Offer You a Hand?

Brad Horton and I were chatting about biometric security measures, and this little story was so good I couldn’t help but add it to the chapter.

It seems that several years ago Brad had permission to access one of the largest raised floors in the commercial world. Obviously, physical security measures were of utmost importance to protect this virtual cornucopia of computerized wonderment and power from unauthorized access. When queried about their access control, the security team pointed to a hand scanner as a biometric access control device. During the discussion, one of the team members asked, “Well, couldn’t they just chop your hand off and get through?” After some chuckling and snickering, the security lead answered, “Of course, the device manufacturer thought about that, and it checks for an adequate temperature of the hand.” Brad replied, pointing to a Dominos heat wrap pizza box, “So, if I make sure to keep the severed limb at a nice 98.6 degrees, or close to it, I’m good to go?”

Sure, this is funny—in a strange, George Romero way—but it illustrates a couple of valid points. First, reliance on a security measure simply because it is in place is a path to disaster. And, second, even the most outlandish of plans and actions, no matter how silly or unbelievable to you, may still prove to be your security undoing. Don’t discount an idea just because you don’t think it’s possible. It may not be probable, but it’s still possible.

Figure 7-4 Biometrics

In fact, most biometric systems are measured by two main factors. The first, false rejection rate (FRR), is the percentage of time a biometric reader will deny access to a legitimate user. The second, false acceptance rate (FAR), is the percentage of time that an unauthorized user is granted access by the system. These are usually graphed on a chart, and the intersecting mark, known as crossover error rate (CER), becomes a ranking method to determine how well the system functions overall. For example, if one fingerprint scanner had a CER of 4 and a second one had a CER of 2, the second scanner would be a better, more accurate solution.
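To make the FRR, FAR, and CER relationship concrete, here is a minimal Python sketch. It assumes the reader produces a numeric match score and grants access when the score meets a threshold; the score lists and the function names are hypothetical, invented purely for illustration.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (FRR, FAR) as percentages at a given match threshold.

    A score at or above the threshold is treated as a match.
    """
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    frr = 100.0 * false_rejects / len(genuine_scores)
    far = 100.0 * false_accepts / len(impostor_scores)
    return frr, far


def crossover_error_rate(genuine_scores, impostor_scores, thresholds):
    """Scan thresholds and return (threshold, FRR, FAR) where FRR and FAR are closest."""
    best = None
    for t in thresholds:
        frr, far = error_rates(genuine_scores, impostor_scores, t)
        gap = abs(frr - far)
        if best is None or gap < best[0]:
            best = (gap, t, frr, far)
    _, t, frr, far = best
    return t, frr, far


# Hypothetical match scores (0-100), for illustration only.
genuine = [91, 88, 95, 72, 85, 64, 90, 79, 83, 97]    # legitimate users
impostor = [12, 35, 48, 22, 66, 30, 41, 18, 74, 27]   # unauthorized users

threshold, frr, far = crossover_error_rate(genuine, impostor, range(0, 101))
print(f"threshold={threshold} FRR={frr:.1f}% FAR={far:.1f}%")
```

Raising the threshold pushes FAR down and FRR up, and lowering it does the opposite. The point where the two meet is the CER, which is why a lower CER indicates a more accurate device.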

From the “something you have” authentication factor, identification and entry cards can be anything from a simple photo ID to smart cards and magnetic swipe cards. Also, tokens can be used to provide access remotely. Smart cards have a chip inside that can hold tons of information, including identification certificates from a PKI system, to identify the user. Additionally, they may also have RFID features to “broadcast” portions of the information for “near swipe” readers. Tokens generally ensure at least a two-factor authentication method, as you need the token itself and a PIN you memorize to go along with it.
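The token-plus-PIN idea can be sketched in a few lines of Python. The text does not say how a token generates its codes, so this example assumes one widely published scheme, HOTP from RFC 4226. The PIN, the `two_factor_ok` helper, and the enrollment values are hypothetical, and a real system would never store a PIN in plain text.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """One-time code per RFC 4226 (HMAC-SHA1 with dynamic truncation)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def two_factor_ok(pin, token_code, expected_pin, secret, counter):
    """Both factors must pass: the memorized PIN and the token's current code."""
    pin_ok = hmac.compare_digest(pin, expected_pin)
    code_ok = hmac.compare_digest(token_code, hotp(secret, counter))
    return pin_ok and code_ok


# Hypothetical enrollment data; the secret is the published RFC 4226 test key.
SECRET = b"12345678901234567890"
PIN = "4821"  # stored in the clear only to keep the sketch short

code = hotp(SECRET, 0)
print(two_factor_ok("4821", code, PIN, SECRET, 0))   # right PIN, right code
print(two_factor_ok("0000", code, PIN, SECRET, 0))   # wrong PIN, right code
```

Stealing the token alone or guessing the PIN alone is not enough, which is the whole point of requiring two factors.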

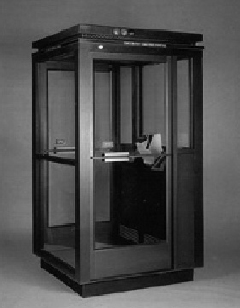

The man trap, designed as a pure physical access control, provides additional control and screening at the door or access hallway to the controlled area. In the man trap, two doors are used to create a small space to hold a person until appropriate authentication has occurred. The user enters through the first door, which must shut and lock before the second door can be cleared. Once inside the enclosed room, which normally has clear walls, the user must authenticate through some means (biometric, token with PIN, password, and so on) to open the second door; Figure 7-5 shows one example from Hirsch Electronics. If authentication fails, the person is trapped in the holding area until security can arrive and resolve the situation. Usually man traps are monitored with video surveillance or guards.

Figure 7-5 Man trap
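The door interlock described for the man trap is essentially a small state machine. The sketch below is a simplified Python model under that assumption; the `ManTrap` class and its method names are hypothetical and do not represent any vendor's controller logic.

```python
class ManTrap:
    """Two interlocked doors around a small holding area."""

    def __init__(self):
        self.outer_locked = True
        self.inner_locked = True
        self.occupied = False

    def enter(self):
        """A person steps in through the first door, which shuts and locks behind them."""
        if self.occupied:
            raise RuntimeError("holding area already occupied")
        self.outer_locked = False   # first door opens
        self.occupied = True
        self.outer_locked = True    # first door shuts and locks again

    def authenticate(self, credential_valid: bool) -> str:
        """Release the second door only when the first is locked and the check passes."""
        if not self.occupied:
            raise RuntimeError("nobody in the holding area")
        if self.outer_locked and credential_valid:
            self.inner_locked = False   # second door is cleared
            self.occupied = False       # person walks through
            self.inner_locked = True    # second door relocks
            return "admitted"
        return "held for security"      # both doors stay locked


trap = ManTrap()
trap.enter()
print(trap.authenticate(credential_valid=False))
print(trap.authenticate(credential_valid=True))
```

The key property is that the two doors are never unlocked at the same time, and a failed check leaves the person inside with both doors locked.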

So far we’ve covered some of the more common physical security measures—some of which you probably either already knew or had thought about. But there are still a few things left to consider in the overall program. For example, the problem of laptop and USB drive theft and loss is a growing physical security concern. Controlling physical access to devices designed to be mobile is out of the question, and educating your laptop users doesn’t stop them from being victims of a crime.

The best way to deal with the data loss is to implement some form of encryption (a.k.a. data-at-rest controls). As mentioned earlier in this book, this will encrypt the drive (or drives), rendering a stolen or lost laptop a paperweight. If you’re going to allow the use of USB drives, investing in encrypted versions is well worth the cost. IronKey and Kingston drives are two examples. For the laptop, you can use any of a number of encryption software systems designed to secure the device. Some, such as McAfee’s Endpoint Protection, encrypt the entire drive at the sector level and force a pre-boot login known only by the owner of the machine. Systems like this may even take advantage of smart cards or tokens at the pre-boot login to force two-factor authentication.

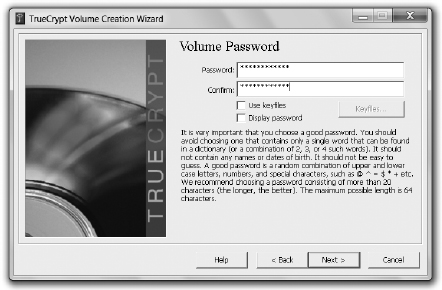

Other tools can encrypt the entire drive or portions of the drive. TrueCrypt is a free, open-source example of this that can be used to encrypt single volumes (treated like a folder) on a drive, a non-system partition (such as a USB drive), or the entire drive itself (thus forcing a pre-boot login). Suppose, for example, you wanted to protect sensitive data on a laptop, like the one I’m using right now, in the event of a theft or loss. You could create an encrypted TrueCrypt volume in which to store the files by following the steps shown in Exercise 7-1.

Exercise 7-1: Using TrueCrypt to Secure Data at Rest

In this exercise, we’ll demonstrate how simple using TrueCrypt to create a secured, encrypted storage volume truly is.

1. After installing TrueCrypt, open the application by double-clicking the shortcut.

2. At the TrueCrypt home screen, shown in Figure 7-6, click Create Volume. The Volume Creation Wizard screen will appear, providing three options (the descriptions under each title describe what they are). Leave the Encrypted File Container option checked and click Next.

3. The Volume Type window allows you to choose between a standard encrypted volume and a “hidden” one. The hidden volume option is there to protect you should you be forced to reveal a password. The idea is that a second encrypted volume, with its own separate password, is hidden inside a standard volume. If you are forced to reveal the password for the standard (outer) volume, no one will be able to tell whether or not any of the remaining space is a hidden volume. In this case, we’ll create a standard volume, so just click Next.

Figure 7-6 TrueCrypt home screen

4. In the Volume Location window, click Select File. Browse to C: and create the file tcfile (no extension needed). Click Next.

| NOTE In this step you are creating the “file” that will serve as your encrypted volume. To any outsider it will appear as a single file. You will need to mount it as a volume using TrueCrypt later to manipulate files. |

5. Accept the defaults in the Encryption Options screen. Click Next. Then choose a volume size (for this example 10MB should do). Click Next.

6. Choose a password at the Volume Password screen, shown in Figure 7-7. This will be used to access the encrypted volume later.

| NOTE Underneath the password box is a check box labeled “Use keyfiles.” If this option is checked, TrueCrypt prompts you to choose any file on your system as a key, along with your password, to access the volume. This can be any file (so long as you don’t change it) from music to text to graphics. In this exercise we won’t use one, but just know that this option can provide extra security, should you want it. |

Figure 7-7 TrueCrypt Volume Password screen

7. At the Volume Format screen, read the information about the random pool and click Format. After formatting, the screen will display Volume Created. Click Next and then Cancel at the Creation Wizard screen.

8. To mount your drive, at the TrueCrypt main window, click Select File and navigate to tcfile (created earlier). Select any drive letter appearing at the top of the screen (in Figure 7-8, I selected “F:”) and then click Mount.

9. Type in your password and click OK. You’ll see the drive mounted at the top of the window.

10. To test and use your newly encrypted drive, open an Explorer window—you’ll see “F:” mounted as a drive just like your other partitions, as shown in Figure 7-9. You can drag and drop files into and out of it all you’d like. Once it is dismounted, it will remain encrypted with the encryption formats you chose earlier. (To dismount the drive, select the drive letter in the TrueCrypt window and click Dismount.)

Figure 7-8 Mounting a volume in TrueCrypt

Figure 7-9 Mounted TrueCrypt volume

As a technical measure within physical security, TrueCrypt is hard to beat. It’s easy to use, very effective, and provides a lot of options for a variety of uses. It’s also free, which should make the boss happy. The only cautionary note to offer here is that a keyfile adds real security only when it is managed properly. If your USB drive plugs in and requires only a password to decrypt, you’re protected only by that password. Adding a keyfile helps only if you remove the keyfile and store it separately from the encrypted volume. Most users, because of convenience or ignorance, just keep the keyfile (if they bother generating one at all) on the same computer. Worse, they sometimes conveniently label it something such as “keyfile.” If the goal is to add something to a password-protected system, you must do it effectively, and this requires keyfile management at the user level.
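A short Python sketch can illustrate why a keyfile matters only when it is kept away from the volume. This is not TrueCrypt's actual keyfile processing; it is a generic illustration that assumes the key is derived with PBKDF2 from the password combined with a hash of the keyfile. The `derive_key` function, the salt, and the sample bytes are all hypothetical.

```python
import hashlib


def derive_key(password: str, keyfile_bytes: bytes, salt: bytes) -> bytes:
    """Derive a 32-byte key from a password plus the contents of a keyfile."""
    keyfile_digest = hashlib.sha256(keyfile_bytes).digest()
    secret = password.encode("utf-8") + keyfile_digest
    return hashlib.pbkdf2_hmac("sha256", secret, salt, 100_000, dklen=32)


# Hypothetical values, for illustration only.
salt = b"example-salt-16b"
keyfile = b"bytes of any unchanging file: a song, a photo, a text file"

right_key = derive_key("correct horse", keyfile, salt)
no_keyfile = derive_key("correct horse", b"", salt)
altered = derive_key("correct horse", keyfile + b"!", salt)

print(right_key == no_keyfile)   # password alone does not reproduce the key
print(right_key == altered)      # a modified keyfile does not either
```

An attacker who steals the laptop and learns the password still cannot rebuild the key without the keyfile. If the keyfile sits on the same drive, helpfully named “keyfile,” that extra factor is worth nothing.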

| NOTE A lot of software is made freely available, but its creators still seek funds, through voluntary donations, to make their offering better. Just as many, many others do, TrueCrypt has a donations link on their front page. Whether you donate to this, or any other freeware development tool you use, is your own choice. |

A few final thoughts on setting up a physical security program are warranted here. The first is a concept I believe anyone who has opened a book on security in the past 20 years is already familiar with—layered defense. The “defense in depth” or layered security thought process involves not relying on any single method of defense, but rather stacking several layers between the asset and the attacker. In the physical security realm, these are fairly easy to see: If your data and servers are inside a building, stack layers to prevent the bad guys from getting in. Guards at an exterior gate checking badges and a swipe card entry for the front door are two protections in place before the bad guys are even in the building. Providing access control at each door with a swipe card, or biometric measures, adds an additional layer. Once an attacker is inside the room, technical controls can be used to prevent local logon. In short, layer your physical security defenses just as you would your virtual ones—you may get some angry users along the way, huffing and puffing about all they have to do just to get to work, but it’ll pay off in the long run.

I’m an Excellent Driver…and Not a Bad Pen Tester Either

Have you ever seen the movie Rain Man? In it, a guy finds he has an autistic brother who happens to be a genius with numbers. He, of course, takes his brother to Las Vegas and makes a killing on the casino floor. In an iconic pop-culture favorite scene from the movie, early on in their relationship, the autistic brother says, “I’m an excellent driver.” He would’ve made an excellent pen tester too.

Suppose you’re surrounded by true math nerds on your pen test team. Also, suppose you have a goal of gaining access to a server room. Someone in the group (think Mr. Spock on Star Trek) is going to start estimating success rates for each layer of security you’ll have to go through. Let’s say there’s an estimated 10 percent chance of getting past guards, a 30 percent chance of getting past a secretary, a 25 percent chance of getting into the server room physically, and a 35 percent chance of breaking into a server after gaining physical access. So what, you may ask, is the overall probability of someone doing all of these and successfully gaining server access? Multiply the four together (0.10 × 0.30 × 0.25 × 0.35 = 0.002625) and you get about 0.26 percent, or roughly a 1-in-381 chance.

I know you’re probably thinking, “So what? Why should I care about crazy math problems and probabilities multiplying? What’s all this got to do with pen testing?” Have you ever heard the term defense in depth? How about layered security? These concepts work because of probability. Sure, you might talk your way past a secretary—heck, you might even find a way to charm your way past a guard or two. But getting past all the layers of physical security to get to the target? You’ve got a better chance of beating Rain Man at blackjack.
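The multiplication behind that sidebar is easy to check for yourself. The Python sketch below uses the four estimates from the example and assumes each layer is an independent hurdle, which is a simplification. The `layers` dictionary and its labels are just illustrative names.

```python
from math import prod

# Estimated chance of beating each layer, taken from the example above.
layers = {
    "guards": 0.10,
    "secretary": 0.30,
    "server room door": 0.25,
    "server logon": 0.35,
}

# Assuming the layers are independent, the attacker must beat every one,
# so the individual probabilities multiply.
overall = prod(layers.values())

print(f"overall chance of success: {overall:.2%}")
print(f"roughly 1 in {round(1 / overall)}")

# Remove any single layer and the attacker's odds improve dramatically.
for name in layers:
    remaining = prod(p for n, p in layers.items() if n != name)
    print(f"without {name}: {remaining:.2%}")
```

Every layer you add multiplies the attacker's odds by another fraction less than one, and that is the arithmetic behind defense in depth.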

Another thought to consider, as mentioned earlier, is that physical security should also be concerned with those things you can’t really do much to prevent. No matter what protections and defenses are in place, an F5 tornado doesn’t need an access card to get past the gate. Hurricanes, floods, fires, and earthquakes are all natural events that could bring your system to its knees. Protection against these types of events usually comes down to good planning and operational controls. You can certainly build a strong building and install fire-suppression systems; however, they’re not going to prevent anything. In the event something catastrophic does happen, you’ll be better off with solid disaster-recovery and contingency plans.

From a hacker’s perspective, the steps taken to defend against natural disasters aren’t necessarily anything that will prevent or enhance a penetration test, but they are helpful to know. For example, a fire-suppression system turning on or off isn’t necessarily going to assist in your attack. However, knowing the systems are backed up daily and offline storage is at a poorly secured warehouse across town could become very useful.

Finally, there’s one more thought we should cover (more for your real-world career than for your exam) that applies whether we’re discussing physical security or trying to educate a client manager on prevention of social engineering. There are few truisms in life, but one is absolute: Hackers do not care that your company has a policy. Many a pen tester has stood there listening to the client say, “That scenario simply won’t (or shouldn’t, or couldn’t) happen because we have a policy against it.” Two minutes later, after a server with a six-character password left on a utility account has been hacked, it is evident the policy requiring 10-character passwords didn’t scare off the attacker at all, and the client is left to wonder what happened to the policy. Policy is great, and policy should be in place. Just don’t count on it to actually prevent anything on its own. After all, the attacker doesn’t work for you and couldn’t care less what you think.

Physical Security Hacks

Believe it or not, hacking is not restricted just to computers, networking, and the virtual world—there are physical security hacks you can learn, too. For example, most elevators have an express mode that lets you override the selections of all the previous passengers, allowing you to go straight to the floor you’re going to. By pressing the Door Close button and the button for your destination floor at the same time, you’ll rocket right to your floor while all the other passengers wonder what happened.

Others are more practical for the ethical hacker. Ever hear of the bump key, for instance? A specially crafted bump key will work for all locks of the same type by providing a split second of time to turn the cylinder. See, when the proper key is inserted into the lock, all of the key pins and driver pins align along the “shear line,” allowing the cylinder to turn. When a lock is “bumped,” a slight impact on the key transfers force through the key pins to the driver pins, which jump above the shear line while the key pins stay in place. This separation only lasts a split second, but if you keep a slight force applied, the cylinder will turn during the short separation time of the key and driver pins, and the lock can be opened.

Other examples are easy to find. Some Master brand locks can be picked using a simple bobby pin and an electronic flosser, believe it or not. Combination locks can be easily picked by looking for “sticking points” (apply a little pressure and turn the dial slowly—you’ll find them) and mapping them out on charts you can find on the Internet. I could go on and on here, but you get the point. Don’t overlook physical security—no matter which side you’re employed by.

Chapter Review

Social engineering is the art of manipulating a person, or a group of people, into providing information or a service they otherwise would never have given. Social engineers prey on people’s natural desire to help one another, to listen to authority, and to trust offices and entities. Social engineering is a nontechnical method of attacking systems.

All social-engineering attacks fall into one of two categories: human-based or computer-based. Human-based social engineering uses interaction in conversation or other circumstances between people to gather useful information.

Dumpster diving is an attack where the hacker digs through the trash for useful information. Rifling through dumpsters, paper-recycling bins, and office trashcans can provide a wealth of information, such as passwords (written down to make them “easier to remember”), network design documents, employee phone lists, and other information.

Impersonation is an attack where a social engineer pretends to be an employee, a valid user, or even an executive (or other VIP). Whether by faking an identification card or simply convincing the employees of his “position” in the company, an attacker can gain physical access to restricted areas, providing further opportunities for attacks. Pretending to be a person of authority, the attacker might also use intimidation on lower-level employees, convincing them to assist in gaining access to a system.

A technical support attack is a form of impersonation aimed at the technical support staff themselves. An attacker can call up posing as a user and request a password reset. The help desk person, believing they’re helping a stranded customer, unwittingly resets a password to something the attacker knows, thus granting him access the easy way.

Shoulder surfing is a basic attack whereby the hacker simply looks over the shoulder of an authorized user. If you’ve got physical access, you can watch users log in, access sensitive data, or provide valuable steps in authentication.

Tailgating and piggybacking are two closely related attacks on physical security. Tailgating occurs when an attacker has a fake badge and simply follows an authorized person through the opened security door—smoker’s docks are great for this. Piggybacking is a little different in that the attacker doesn’t have a badge but asks for someone to let him in anyway. Attackers may say they’ve left their badge on the desk, or forgot it at home. In either case, an authorized user holds the door open for them despite the fact they have no badge visible.

In reverse social engineering, the attacker will pose as someone in a position of some form of authority or technical support and then set up a scenario whereby the user feels they must dial in for support. Specific steps are taken in this attack—advertisement, sabotage, and support. First, the attacker will advertise or market their position as “technical support” of some kind. Second, the attacker will perform some sort of sabotage—whether a sophisticated DoS attack or simply pulling cables. In any case, the damage is such that the user feels they need to call technical support—which leads to the third step, where the attacker attempts to “help” by asking for login credentials and thus gains access to the system.

Computer-based attacks are those attacks carried out with the use of a computer or other data-processing device. The most common method of computer-based social engineering is known as phishing. A phishing attack involves crafting an e-mail that appears legitimate, but in fact contains links to fake websites or downloads malicious content. The links contained within the e-mail lead the user to a fake web form in which the information entered is saved for the hacker’s use.
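One simple check for a phishing link is whether the address shown in the message matches the address the link actually points to. The Python sketch below assumes the visible link text is itself a URL or host name. The `link_mismatch` function and the example addresses (which use reserved example domains) are hypothetical.

```python
from urllib.parse import urlparse


def link_mismatch(display_text: str, href: str) -> bool:
    """Return True when the visible address names a different host than the real target."""
    # Add "//" so urlparse treats a bare "www.example.com/path" as a host, not a path.
    shown_url = display_text if "://" in display_text else "//" + display_text
    shown = urlparse(shown_url).hostname
    actual = urlparse(href).hostname
    if shown is None or actual is None:
        return True  # cannot verify, so treat as suspicious
    return shown != actual


# The text on screen looks like the real site, but the link goes elsewhere.
print(link_mismatch("www.example.com",
                    "http://www.example.com.login.example.net/reset"))

# Same host in both places.
print(link_mismatch("https://www.example.com/account",
                    "https://www.example.com/login"))
```

A matching host name does not prove a message is legitimate, so treat this as one warning sign among several and not as a complete filter.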

Another very successful computer-based social-engineering attack is through the use of chat or messenger channels. Attackers not only use chat channels to find out personal information to employ in future attacks, but also make use of the channels to spread malicious code and install software. In fact, IRC (Internet Relay Chat) is the primary way zombies (computers that have been compromised by malicious code and are part of a “botnet”) are manipulated by their malicious code masters.

Setting up multiple layers of defense, including change management procedures and strong authentication measures, is a good start in mitigating social engineering. Promoting policies and procedures is a good idea as well. Other physical and technical controls can be set up, but the only real defense against social engineering is user education. Training users, especially those in technical support positions, to recognize and prevent social engineering is the best countermeasure available.

Physical security measures come down to three major components: physical, technical, and operational. Physical measures include all the things you can touch, taste, smell, or get shocked by. For example, lighting, locks, fences, and guards with Tasers are all physical measures. Technical measures are implemented as authentication or permissions. For example, firewalls, IDS, and passwords are all technical measures designed to assist with physical security. Operational measures are the policies and procedures you set up to enforce a security-minded operation.

Biometrics includes the measures taken for authentication that come from the “something you are” category. Biometric systems are measured by two main factors. The first, false rejection rate (FRR), is the percentage of time a biometric reader will deny access to a legitimate user. The percentage of time that an unauthorized user is granted access by the system, known as false acceptance rate (FAR), is the second major factor. These are usually graphed on a chart, and the intersecting mark, known as crossover error rate (CER), becomes a ranking method to determine how well the system functions overall.

The man trap, designed as a pure physical access control, provides additional control and screening at the door or access hallway to the controlled area. In the man trap, two doors are used to create a small space to hold a person until appropriate authentication has occurred. The user enters through the first door, which must shut and lock before the second door can be cleared. Once inside the enclosed room, which normally has clear walls, the user must authenticate through some means (biometric, token with PIN, password, and so on) to open the second door.

Questions

1. Jim, part of a pen test team, has attained a fake ID badge and waits next to an entry door to a secured facility. An authorized user swipes a key card and opens the door. Jim follows the user inside. Which social engineering attack is in play here?

A. Piggybacking

B. Tailgating

C. Phishing

D. None of the above

2. John socially engineers physical access to a building and wants to attain access credentials to the network using nontechnical means. Which of the following social-engineering attacks would prove beneficial?

A. Tailgating

B. Piggybacking

C. Shoulder surfing

D. Sniffing

3. Bob decides to employ social engineering during part of his pen test. He sends an unsolicited e-mail to several users on the network advising them of potential network problems, and provides a phone number to call. Later on that day, Bob performs a DoS on a network segment and then receives phone calls from users asking for assistance. Which social-engineering practice is in play here?

A. Phishing

B. Impersonation

C. Technical support

D. Reverse social engineering

4. Phishing, pop-ups, and IRC channel use are all examples of which type of social-engineering attacks?

A. Human-based

B. Computer-based

C. Technical

D. Physical

5. As part of a pen test team, Jenny decides to search for information as quietly as possible. After watching the building and activities for a couple of days, Jenny notices the paper-recycling bins sit on an outside dock. After hours, she rifles through some papers and discovers old password files and employee call lists. Which social-engineering attack did Jenny employ?

A. Paper pilfering

B. Dumpster diving

C. Physical security

D. Dock dipping

6. Which threat presents the highest risk to a target network or resource?

A. Script kiddies

B. Phishing

C. A disgruntled employee

D. A white hat attacker

7. Jerry is performing a pen test with his team and decides to attack the wireless networks. After observing the building for a few days, Jerry parks in the parking lot and walks up to the loading dock. After a few minutes, he plants a wireless access point configured to steal information just inside the dock. Which security feature is Jerry exploiting?

A. War driving

B. Physical security

C. Dock security

D. War dialing

8. Phishing e-mail attacks have caused severe harm to Bob’s company. He decides to provide training to all users in phishing prevention. Which of the following are true statements regarding identification of phishing attempts? (Choose all that apply.)

A. Ensure e-mail is from a trusted, legitimate e-mail address source.

B. Verify spelling and grammar is correct.

C. Verify all links before clicking them.

D. Ensure the last line includes a known salutation and copyright entry (if required).

9. Lighting, locks, fences, and guards are all examples of ____ measures within physical security.

A. Physical

B. Technical

C. Operational

D. Exterior

10. Background checks on employees, risk assessments on devices, and policies regarding key management and storage are examples of ____ measures within physical security.

A. Physical

B. Technical

C. Operational

D. None of the above

Answers

1. B. In tailgating, the attacker holds a fake entry badge of some sort and follows an authorized user inside.

2. C. Because he is already inside (thus rendering tailgating and piggybacking pointless), John could employ shoulder surfing to gain the access credentials of a user.

3. D. Reverse social engineering occurs when the attacker uses marketing, sabotage, and support to gain access credentials and other information.

4. B. Computer-based social-engineering attacks include any measures using computers and technology.

5. B. Dumpster diving—that is, rifling through waste containers, paper recycling, and other trash bins—is an effective way of building information for other attacks.

6. C. Everyone recognizes insider threats as the worst type of threat, and a disgruntled employee on the inside is the single biggest threat for security professionals to plan for and deal with.

7. B. Although Jerry is using a wireless access point, physical security breakdowns allowed him to perform his attack.

8. A, B, and C. Phishing e-mails can be spotted by who they are from, who they are addressed to, spelling and grammar errors, and unknown or malicious embedded links.

9. A. Physical security controls fall into three categories: physical, technical, and operational. Physical measures include lighting, fences, and guards.

10. C. Operational measures are the policies and procedures you set up to enforce a security-minded operation.