9. Measure Effectiveness

What’s the most dangerous assumption you can make about communication? That just because you’ve sent a message, employees have received it, understood it, bought into it, and acted on it.

The truth is there’s only one way to know your communication has been effective: by measuring its effectiveness. Measurement also helps you know how to make improvements.

That’s why it’s surprising that many HR and communication professionals neglect measurement. They think measurement is too time-consuming, too expensive, and too mathematical. (After all, we didn’t choose our career because of our love of statistics!)

As two non-math majors, we’re here to reassure you that measurement doesn’t have to be difficult. In this chapter, we show you a simple model for communication measurement. We demonstrate how to apply this model to different situations. And we provide examples of survey questions and other tools, as well as advice on what not to do.

Defining Effectiveness

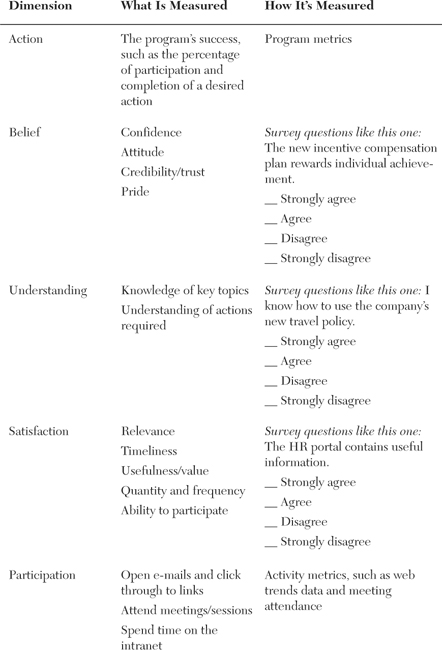

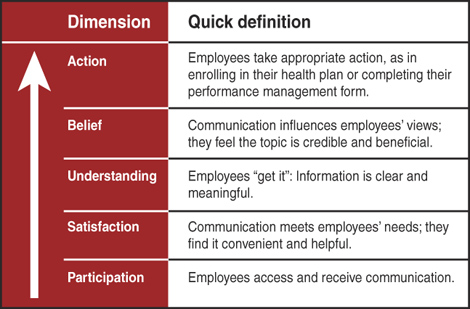

When we started our careers, communication was considered to be more art than science. The commonly held belief was that because communication was subjective, you couldn’t measure it. But we know now that effectiveness is not just a matter of preference. You can measure whether communication creates knowledge, changes minds, and influences behavior. We developed the model shown in Figure 9-1; it defines five dimensions that together equal communication effectiveness.

Figure 9-1 Five dimensions of communication effectiveness

How do you measure each of these? Here’s an overview:

When people think about measurement, they usually envision a survey. However, you’ll notice that not every effectiveness dimension is evaluated by asking employees to complete a questionnaire. In fact, when it comes to participation and action, surveys can be misleading: People tend to give more positive responses than their actions indicate. For example, they’ll report that they visit your website several times a month, because that’s what they remember, but your web metrics may show otherwise. Similarly, employees may say they intend to enroll in long-term disability coverage, but only actual enrollment results show whether they followed through.

Survey Essentials

When you want to measure employees’ beliefs, understanding, or satisfaction through a survey, here are the seven essential steps to take:

1. Create focus.

2. Choose the best method.

3. Ask good questions.

4. Get buy-in and participation.

5. Conduct the survey.

6. Analyze and report on the results.

7. Communicate and take action.

Create Focus

If you’ve ever taken a survey that meanders from topic to topic, seemingly without a sense of direction, you’ve experienced the problem that occurs when measurement lacks focus. The survey’s designers had good intentions, but somewhere along the way they lost control of the questionnaire. The result is a survey that’s too expansive, making it confusing for respondents (and making it more likely they’ll give up before they’re done). Plus, because survey organizers get so much data on so many different subjects, it is difficult for them to act on all those findings.

It’s much better to focus on a few key topics and create a short survey that gives you just the results you need. To do so, start by considering your survey’s purpose: the reason for the research. Purpose answers the big question: Why are we doing this survey? Using the effectiveness dimensions as your guide, here are examples of what you might focus on:

• Satisfaction with your intranet site

• Understanding of healthcare plan choices

• Confidence that the performance management system helps employees manage their careers

Choose the Best Method

The most commonly used research method these days is an online survey, using either web-based software or an online service such as Zoomerang or SurveyMonkey. There’s good reason for this: Online surveys are cheaper, faster, and more timely. And in most cases, electronic surveys produce a higher response rate than old-fashioned paper surveys. However, before you decide to go with an online survey, consider these questions:

• Who is your target audience? If some of your employees don’t have easy electronic access, distributing a survey exclusively via e-mail or the Internet/intranet may cause you to overlook an important segment of your employee base. Consider using a print survey or an electronic/print combination.

• How long is your survey? An electronic survey needs to take less time to complete than a paper survey, because it’s difficult to read a computer screen for a long time. If you are planning on asking a lot of questions, an online survey isn’t for you. You’re much better off with a paper survey, where employees can scan the page much more quickly. In our experience, the longer the survey, the fewer responses you’ll get—so, when you want a high response rate, stick to a one-page (or one-screen) survey.

• What is your timetable? Electronic surveys take less time to send out, complete, and tabulate. If you’re working with a tight time frame, an online survey is best.

Ask Good Questions

The heart of every survey is its questions. Writing effective survey questions is a precise science: Market researchers extensively study how people respond to words and phrases. Even if you’re not a research expert, you can apply the same thinking by making sure your questions are as clear and specific as possible.

Most survey questions ask about one of four attributes:

1. What did you do? (Experiential) Questions that ask about experiences employees have had. Opinion or belief does not play a role in answering these questions. Example: “I attended the town hall meeting last month.”

2. What do you think or believe? (Attitudinal) Used when trying to gauge feelings, opinions, and beliefs. Example: “The benefits newsletter helps me learn about my healthcare plan choices.”

3. What do you know? (Knowledge testing) Used when trying to gauge awareness or knowledge of an issue. Can be self-assessment or actual knowledge-testing questions. Example: “I understand how to choose the healthcare plan that’s right for me.”

4. Who are you? (Demographic) Allows you to cross-reference a respondent’s location, tenure, job category, or other characteristics against his or her responses, so you can compare results across groups. Example: “In which region do you work? (Northeast, Midwest, Southeast, etc.)”

Whichever type of question you’re writing, remember the following:

• Be simple. Put away your thesaurus, and forget all the fancy words you know.

• Know precisely what you’re asking. Ill-defined or vague questions are an easy trap. You may understand what you mean by a question such as “Do you believe you have enough information to support our strategies?”, but will your respondent? What exactly is “support?”

• Include only one concept. Keep your questions narrowly focused. Two questions combined into one create confusion. For example, if you ask employees about their level of agreement with the statement “E-mails from HR and Employee Communication are timely,” you’re really asking two questions. While you may be interested in e-mails generally, employees may have different experiences with the two distinct sources.

• Avoid jargon or obscure language. Don’t use complex terms the participant might be unfamiliar with.

• Stay neutral. Make sure you avoid leading questions that can influence a participant to a certain response. Leading questions are a common problem that violates the integrity of your data. How could an employee disagree with “I am actively involved in setting my goals and objectives” or “I never have enough time to take advantage of career development courses”?

• Use a consistent 4-point scale. There’s a lot of debate in the research world about which answer scale is best. A 5-point scale, from “strongly agree” to “strongly disagree” with “neutral” in the middle? A scale that varies from question to question? For more complex topics, a 7- or even 10-point scale? Here’s what we’ve found works best: Create a simple 4-point scale (Strongly Agree, Agree, Disagree, Strongly Disagree), and design all your questions as statements (“The open enrollment package answers all my questions about healthcare plans”). Eliminate the middle “Neutral” response; for most communication questions you ask, employees have a distinct opinion and don’t need the Neutral option. Use the same scale throughout your survey for consistency.

• Limit open-ended questions. Too many open-ended or write-in questions will contribute to a condition called “Survey Shutdown,” in which a respondent leaves the survey before he or she is finished. Every survey should be limited to one open-ended question. If you feel the need to include many open-ended questions, a focus group is the better option.

(Bad) Example: Survey Fatigue

One of the professional organizations that Alison belongs to conducted a survey about the organization’s print publication and its electronic newsletter. Since Alison believes that feedback is a good thing, she decided to participate.

But the survey was so long and so open-ended that it was exhausting to complete. It contained 29 questions, a lot for an online survey. Even worse, 16 of those questions were open-ended. And they weren’t the usual “Do you have any suggestions?” questions; they required deep thought. Here are just a few examples:

• “List up to five issues or trends in branding and marketing that you want us to address in our magazine.”

• “What do you like best about our magazine?”

• “What other industry-related e-newsletters do you subscribe to?”

• “What kinds of products or services would you like to see advertised in our magazine or e-newsletter?”

Whew! Only the most dedicated reader (or member) would take the time to answer every question. Most people would either do what Alison did—answer just a few questions and skip the others—or get to a certain point and jump ship. In research parlance, that’s called “noncompletion.”

What caused this problem? There are at least two reasons. First, the survey creators wanted to explore issues in an open-ended way. But a survey is the wrong tool for that job, because it is a quantitative, closed-ended, data-producing instrument. The right method is qualitative: focus groups or interviews.

Second, the creators didn’t consider the experience of survey respondents. If the creators had tested the survey, they would have realized that completing it would take at least 15 and probably up to 30 minutes—way too long for an online survey.

Before you are tempted to include open-ended questions in your next survey, consider the fatigue factor.

Get Buy-in and Participation

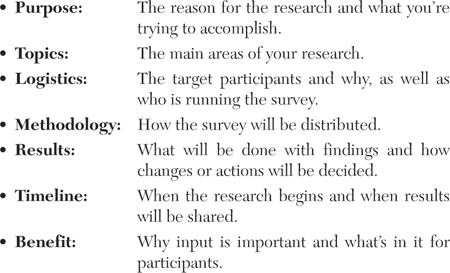

Preparing the organization to participate in your survey requires as much effort as developing the right set of questions. If you conduct research without adequate communication in advance, your target audience may fail to see its value, which may negatively impact participation.

Depending on the breadth of your survey, you should reach out to three primary audiences:

• Senior leaders. It’s important to engage this group, because you may be knocking on their doors when you have your results and recommendations in hand. Be sure they understand how you intend to help the business with the results. And if you have any expectations about their role, such as encouraging participation, be explicit. You should also explain how results will be shared (and expectations about their use).

• Managers. Requesting information from employees usually means that managers will get questions. Be sure this group understands the timeline, what you want to accomplish, how employees will be invited, and what you want them to do. Then they’ll be ready for questions.

• Employees. Use a variety of channels to remind employees of upcoming research (e-mail, print, bulletin board posting, staff meetings, voice mail, newsletter article). Hearing about research many times and in different ways will make employees more aware of it.

For all these stakeholders, here are the points you should cover as you set expectations about your upcoming survey:

Conduct the Survey

Asking the right questions won’t be as valuable if you don’t ask the right people to participate. That’s why it’s important to think carefully about your “sample” or selection of participants.

Start by defining your overall target population. Are you looking to study the entire company? A specific site or division? Certain types of employees, such as hourly workers? This first selection is your “target sample” or universe of participants.

Your next step is determining your sample. The most commonly used methods of sampling are a census (your entire organization) or a random sample (a representative group in your organization).

Use a census survey when you want to include everyone in your target sample or your entire organization. A census survey is simple (just ask everyone to participate), and it signals that every opinion matters. However, if your organization is large or complex, a census may be logistically difficult and expensive. And when an organization asks everyone to participate in every survey, employees may begin to feel over-researched.

That’s why you should consider a random sample. This is a statistically valid way to conduct research that is routinely used by professional polling companies. For example, when campaigns want to learn what U.S. voters will do, they use random sampling to choose 1,000 people who will represent the entire electorate. In your company, use a random sample as a cost-effective way to take the pulse of your organization. By systematically choosing names from your employee database (a common method is to select every 11th name), you can obtain a group whose viewpoints should be representative of the entire employee population.
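Selecting every 11th name is a form of systematic sampling. Here is a minimal Python sketch, assuming your employee list can be exported as a simple list of names. The employee names are hypothetical, and starting at a random position within the first 11 names (rather than always at the top of the list) is a common refinement we’ve added, not part of the method described above.

```python
import random


def systematic_sample(employees, interval, seed=None):
    """Select every `interval`-th employee, starting at a random offset.

    A random start in [0, interval) keeps the draw from always favoring
    whoever happens to be listed first.
    """
    if interval < 1:
        raise ValueError("interval must be at least 1")
    rng = random.Random(seed)          # seeded so the draw can be reproduced
    start = rng.randrange(interval)    # random offset within the first interval
    return employees[start::interval]  # then every interval-th name after it


# Hypothetical export of 2,200 employee names from an HR database.
employees = [f"Employee {i:04d}" for i in range(1, 2201)]
sample = systematic_sample(employees, interval=11, seed=42)
print(len(sample))  # 200
```

One caution: if the list follows a repeating pattern (say, each manager listed right before ten direct reports), an every-11th rule could keep picking the same kind of person. Sorting the export alphabetically or shuffling it first avoids that.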

Delivering the Survey

After you’ve set your sample, you’re ready to survey. Here are tips for managing the process:

• Provide detailed instructions, along with contact information. This will help people if they have trouble with the survey form.

• For print surveys, provide an envelope for easy return.

• Be up front about how long it will take to answer the survey, especially if it takes people away from their jobs.

• Communicate the deadline for the survey to be returned. Don’t allow too much time, since people tend to answer surveys shortly after receiving them.

• For online surveys, don’t include too many questions on one page. If you’re using multiple web page screens, let people know how many questions they have left to answer.

Analyze and Report on the Results

The results are in! Now you must tabulate the numbers and figure out what they mean and what to do about them. We recommend taking your time to understand fully what the feedback is telling you:

• Start by reviewing the purpose and focus of your survey. Remind yourself what you set out to learn, and keep that top of mind while you analyze the results.

• Now look at the raw data for each question. If your software translates these numbers into percentages, that saves you a step; if not, use your calculator (or a friend in finance) to figure out percentages for each response.

• Begin to draw conclusions. We’ve found that the best way to do so is to collaborate with one or more colleagues. Give each team member a copy of the raw data report to review, and then get together in a spacious conference room. Using large Post-it Notes, ask each member to record findings that seem significant, one per Post-it. Group related findings to spot trends, and discuss what the results are telling you. Discuss which results met your expectations and which did not.

• Look for separate results from individual questions that work together to tell a story. For example, you may have asked a question about how helpful your intranet site is for finding information about healthcare plan choices. Another question may have asked how well employees understand a new plan choice. By comparing the results of both questions, you begin to tell a story about how well communication is working to create understanding about healthcare.

• Organize open-ended comments by topic. Use those comments to add texture to your quantitative findings.

• Create five to seven key findings, the main conclusions of your survey results. Decide what you will do in response; these actions become your recommendations.
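If your survey software doesn’t calculate percentages for you, the arithmetic is easy to script. Below is a minimal Python sketch that computes the share of respondents choosing each point on the 4-point scale and then compares two questions, as in the intranet and healthcare example above. The question wording, the eight sets of responses, and the function names are all hypothetical, for illustration only.

```python
from collections import Counter

# The 4-point scale recommended earlier in this chapter.
SCALE = ["Strongly Agree", "Agree", "Disagree", "Strongly Disagree"]
AGREE = {"Strongly Agree", "Agree"}


def response_percentages(responses):
    """Percentage of respondents choosing each scale point.

    Skipped answers (None) are left out of the base, so each percentage
    is out of the people who actually answered the question.
    """
    answered = [r for r in responses if r is not None]
    counts = Counter(answered)
    total = len(answered)
    return {
        point: round(100 * counts[point] / total, 1) if total else 0.0
        for point in SCALE
    }


def crosstab_agreement(q1, q2):
    """Compare agreement with q2 between people who agreed and disagreed with q1.

    Only respondents who answered both questions are counted.
    """
    groups = {"agreed": [], "disagreed": []}
    for a, b in zip(q1, q2):
        if a is None or b is None:
            continue
        key = "agreed" if a in AGREE else "disagreed"
        groups[key].append(b in AGREE)
    return {
        key: round(100 * sum(vals) / len(vals), 1) if vals else None
        for key, vals in groups.items()
    }


# Hypothetical responses from eight employees to two hypothetical questions:
# Q1 "The intranet helps me find information about healthcare plan choices."
# Q2 "I understand the new healthcare plan choice."
intranet_helpful = ["Agree", "Strongly Agree", "Disagree", "Agree",
                    None, "Strongly Disagree", "Agree", "Disagree"]
understand_plans = ["Agree", "Agree", "Disagree", "Strongly Agree",
                    "Agree", "Disagree", "Disagree", "Agree"]

print(response_percentages(intranet_helpful))
# {'Strongly Agree': 14.3, 'Agree': 42.9, 'Disagree': 28.6, 'Strongly Disagree': 14.3}
print(crosstab_agreement(intranet_helpful, understand_plans))
# {'agreed': 75.0, 'disagreed': 33.3}
```

Rounded percentages may not add up to exactly 100. A gap like the one in the second result (75 percent versus 33 percent) is the kind of pattern worth discussing as a team. Keep in mind that it shows the two answers move together, not that the intranet caused the understanding. With only eight respondents, it is an illustration rather than a finding.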

Communicate and Take Action

Here’s a step that’s often overlooked: communicating results to key stakeholders, including employees who took the time to participate. Start by sharing the results with leaders so that you can get buy-in for making changes. For example, suppose your results point to the need for more tools to help managers communicate. You need to create a clear picture of how the data supports this need—from preferences to the current effectiveness of manager communication. You also need to demonstrate potential impact on the business, such as better engagement.

Employees need confirmation that you take their feedback seriously. Not only does this signal that the organization is listening, but it also encourages future participation. With most surveys, it can take time to agree on the next steps, especially if a large investment or major change is involved. Plan to share two or three key findings, as well as where you are in the process, within three to four weeks of the survey. Also pledge to keep the lines of communication open as you move forward.

Checklist for Measuring Communication Effectiveness

Consider five dimensions—participation, satisfaction, understanding, belief, and action—when measuring communication effectiveness.

Focus your survey on just a few key areas you can act upon.

Make survey questions clear and concrete.

Communicate the purpose of your research to encourage buy-in.

Consider a sample instead of asking all employees to participate.

Analyze your findings by taking the time to draw conclusions and look for patterns.

Share the results of your survey as well as action steps you intend to take.