1. Universal Principles for Game Innovation

A/Symmetric Play and Synchronicity

Symmetric gameplay occurs when players experience the exact same thing at the same time when they are playing a game together. Chess, an analog game, is one such game (with the exception of Chess by mail, where one player knows the move well in advance of the other). Pong is the quintessential symmetric gameplay example in the video game world. Both players experience each move in tandem, perfectly synchronized; each sees exactly what the other sees on their screen.

Many multiplayer console games show players the same thing at the same time. In Mario Kart, players see the same “overhead map” of all the players’ progress, while each “window” shows one player a close-up of their own vehicle so that they can steer it accurately. In this case, the play is both symmetrical, because of the overhead map, and asymmetrical, because the players are not watching identical actions simultaneously.

Asymmetric gameplay occurs when two or more players see different things simultaneously, even though they are playing together. The prototypical example of this is when one player is the Dungeon (or Game) Master (DM) in a Dungeons and Dragons game. Whereas the DM sees what is going on in its entirety, certain knowledge is kept from the other players. In a video game, players may have different skills that enable them to see things (like traps) that other players cannot. These are cases of intentional asymmetry.

Finally, players may see different things due to lag. This unintentional asymmetry may cause a player to think they have made a shot that they have not; in fact, they may get shot by an opponent they cannot see because the server hasn’t yet caught up to the real-time player.

This brings us to synchronicity. Synchronous gameplay is gameplay in which the players make their moves at the same time. This is common in online games where multiple players are in a play space simultaneously. Players usually see some approximation of the same thing at the same time during synchronous play. Multiplayer console games also feature synchronous play. Again, Mario Kart is perfectly synchronous.

The latest craze in asynchronous play is Words with Friends. In asynchronous play, one player makes a move and then waits for the computer/Internet to mediate the move and for the other player to play their move. This can take moments, if both players are connected, or days, depending on when the second player logs in to make a move.

Aces High; Jokers Wild

Aces high; Jokers wild represents an organizational schema in games in which sets of game objects can be reorganized in terms of their presented value or rank. In the case of playing cards, Aces have been (since the French Revolution) classically understood to be the highest-ranking card, even higher than the King, Queen, or Jack (which possess a socially symbolic high rank); they are also considered higher than the number cards, even though they are often used to represent the lowest card (the number one). Similarly, in a Spanish deck, the King beats the Knight, who in turn beats the Page.

However, at the outset of any game using these cards, players establish which of these pieces (cards) is considered the “high” card, and thus they can reorder the deck and change the distribution of probabilities for particular outcomes without changing the foundational rules of the game or even requiring players to draw a new hand. Calling Aces high modifies the distribution of cards available at any one moment without requiring a reset of the game pieces.

Any game that gives players a set of elements to work with, especially one in which the known values of game objects can be reordered, can contain a quick call to Aces high or Kings high. Some games use this principle by changing the high card halfway through the round, or by requiring players to call a new high token based on individual or group goals. This introduces an element of variation or surprise into games where the experience might otherwise suffer from too much repetition.

Adding to the complexity of reordering a specifically organized schema on the fly is the introduction of the Joker as a wild card or token. Wild cards represent any other card or token in play, rather than having their own value. The wild card is essentially an empty variable that the player can set as needed. Since a game object (card, token, etc.) that can change its value is often used to the advantage of its player and the player’s personal playing goals, the Joker, or any other card labeled as wild, can even become the highest possible ranking token; it can even become higher than an Ace, even if Aces are high, adding more complexity to the straightforward game mechanic of one token outranking another in an ordered set.

Some games label multiple objects (or cards) as wild, allowing for a handicap on the original distribution in any given set of game objects. These wild objects alter and increase the possibility of coming across rare game moments since they can occur more frequently than the original distribution of game objects allowed for probabilistically.

For example, in Poker, calling Deuces wild turns four cards of low and almost wasteful value (the Twos) into the potential for a higher-ranking hand; in this scenario, imagine a Two standing in for the missing card of a Royal Flush, or even turning a Two and a Seven (statistically the worst opening hand) into at least a pair of Sevens. Calling diamonds, or red cards, or face cards wild creates different statistics on the plays available in any game, while all other rules can remain unchanged.
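The reordering and wild-card ideas above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: suits are ignored, hands are plain lists of rank strings, and the helper names (`rank_order`, `card_value`, `best_pair`) are invented for this example rather than taken from any real card library.

```python
RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]


def rank_order(aces_high=True):
    """Return ranks from lowest to highest under the chosen convention."""
    if aces_high:
        return RANKS
    # Aces low: the Ace moves to the bottom; nothing else changes.
    return ["A"] + RANKS[:-1]


def card_value(rank, aces_high=True):
    """Position of a rank in the current ordering (higher beats lower)."""
    return rank_order(aces_high).index(rank)


def best_pair(hand, wild=frozenset(), aces_high=True):
    """Return the highest rank that can form a pair in `hand`, or None.

    Cards whose rank is in `wild` act as empty variables: each one may
    stand in for any rank the player chooses.
    """
    wild_count = sum(1 for rank in hand if rank in wild)
    naturals = [rank for rank in hand if rank not in wild]
    best = None
    for rank in rank_order(aces_high):
        if naturals.count(rank) + wild_count >= 2:
            best = rank  # later ranks in the ordering are higher
    return best


if __name__ == "__main__":
    print(best_pair(["2", "7"]))              # no pair without wild cards
    print(best_pair(["2", "7"], wild={"2"}))  # the Two becomes a second Seven
```

Note that the hand itself never changes between the two calls; only the declared convention does, which is the point of the principle.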

Bartle’s Player Types

Richard Bartle was a pioneer of multiplayer gaming and the co-creator of the first virtual world. Called MUD (for multi-user dungeon), the game allowed multiple players to simultaneously explore a virtual world and interact not only with the game, but with each other. By creating MUD, Bartle and his compatriots split the atom in terms of teaching designers new things about player behavior. In a 1996 paper called “Hearts, Clubs, Diamonds, Spades: Players Who Suit MUDs,” he attempted to classify the wide variety of player behaviors he saw into four types. Whereas many studies since have helped refine the typology of player types, Bartle’s classifications remain popular for their simplicity and reach.

Achievers’ (or Diamonds’) main focus is “winning” the game. This could be the intrinsic goals of the game or self-defined goals such as “I want to hit Level 80,” “I want to be on top of the leader board,” “I want one million gold coins,” or “I want to beat this game in three hours with just the combat knife.”

Explorers (or Spades) like to figure out the system and experience all it has to offer. Game designers often glom onto the Spade role. Collectors are another type of explorer. Pokemon is a game that scratches the itch of explorers to explore the world not only topologically (by running around a map), but also mechanically (by making the battle mechanics nuanced and transparent enough to be interesting to learn). Players who fill out spreadsheets of all the possible items in a game are certainly a type of explorer.

Socializers (or Hearts) play multiplayer games because of the interaction with other players. Beyond just the social nature of playing together, they like to leverage things like guilds and teams to further codify their social existence.

Killers (or Clubs) want to impose their will on their fellow players. This can take on two forms: Some killers kill because doing so shows their prowess in the game. However, some players, called griefers, impose their will strictly because they want to disrupt other players.

Bartle classified these player types on two axes: Acting On versus Interacting With and World versus Players. Achievers prefer to act on the world. Explorers want to interact with the world. Socializers want to interact with players. Killers want to act on players.

By changing the number of opportunities for play in each of these four areas, game designers can affect the types of players who become interested in their game. In analyzing a game, try to determine which of the four player types are being best served. Is there something for all four to enjoy? Is something missing? Is there something for Socializers to do? Killers? By sorting players into logical groups, designers can better provide something for everyone.
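The two axes and the "is something missing?" question can be sketched as a small lookup. This is a hypothetical illustration: the enum names, the `unserved_types` helper, and the way each sample feature is tagged are assumptions made for the example, not part of Bartle's paper.

```python
from enum import Enum


class Mode(Enum):
    ACTING_ON = "acting on"
    INTERACTING_WITH = "interacting with"


class Target(Enum):
    WORLD = "world"
    PLAYERS = "players"


# The four quadrants formed by the two axes.
PLAYER_TYPES = {
    (Mode.ACTING_ON, Target.WORLD): "Achiever (Diamond)",
    (Mode.INTERACTING_WITH, Target.WORLD): "Explorer (Spade)",
    (Mode.INTERACTING_WITH, Target.PLAYERS): "Socializer (Heart)",
    (Mode.ACTING_ON, Target.PLAYERS): "Killer (Club)",
}


def classify(mode, target):
    """Return the player type that sits in the given quadrant."""
    return PLAYER_TYPES[(mode, target)]


def unserved_types(features):
    """Return the player types that no listed feature serves.

    `features` is an iterable of (feature_name, mode, target) tuples;
    how a feature is tagged is the designer's own judgment call.
    """
    served = {classify(mode, target) for _, mode, target in features}
    return [ptype for ptype in PLAYER_TYPES.values() if ptype not in served]


if __name__ == "__main__":
    features = [
        ("level cap of 80", Mode.ACTING_ON, Target.WORLD),
        ("guild chat", Mode.INTERACTING_WITH, Target.PLAYERS),
    ]
    print(unserved_types(features))
```

Run against a real feature list, a check like this only prompts the conversation; deciding which quadrant a feature belongs to is still a design judgment.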

Cooperative vs. Oppositional

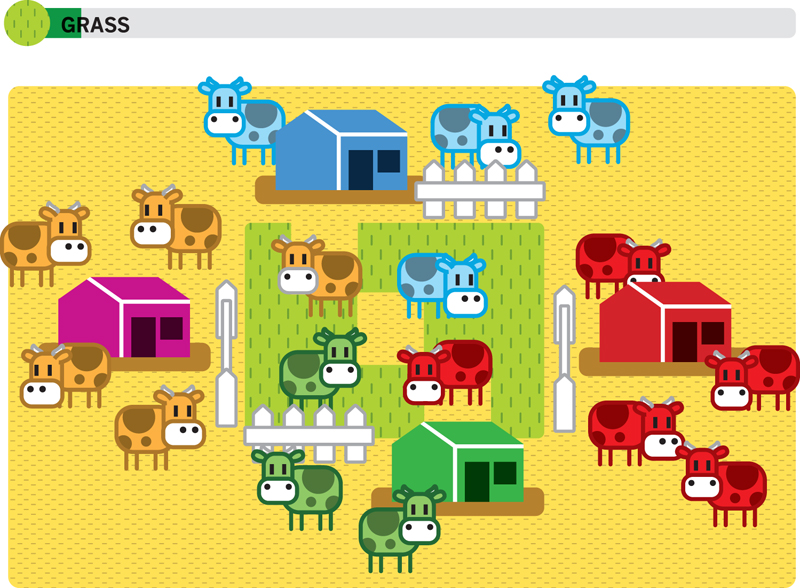

When playing a game with more than a single player, there are two possible configurations for players: cooperative or oppositional. More often than not, play is oppositional or competitive in nature.

In a cooperative game (often referred to as co-op play), two or more players share goals and work together to attain those goals. Table-top roleplaying games (RPGs) are an excellent example of cooperative play. Players band together in parties for adventure. In this case, the obstacle to be overcome is the world itself, whether that world comes from the game’s design or from the game master’s imagination.

In video games, cooperative play usually involves engaging two or more players against an artificial intelligence (AI) opponent. Players can trade items, heal one another, use complementary play strategies (such as a battle tank joining forces with a ranged-weapon user), or do things as dynamic as physically boosting one another to get on ledges that neither player could reach on their own.

Animal Crossing is an example of co-op play where players can help each other out by connecting their game cartridges to the system in order to unlock content for another player. A number of games have unlockable endings that can only be accessed by players playing cooperatively to solve the final challenge. Console games often offer co-op play to players on the same console and, more recently, have begun to allow two players on the same console to play cooperatively with players over a network connection. Co-op play even exists in single player games where a player can play alongside their previously saved selves.

The place where co-op and competitive meet is the team sport. Within a team, play is cooperative with each player playing a position in order to further the game. Teams are then competitive, playing against each other to score. First-person shooters offer this same team-based competitive play (see Volunteer’s Dilemma and Tragedy of the Commons).

An oppositional game is just that: Players (or teams of players) oppose one another to win. The win condition is predicated upon having a single winner unless a match can end in a draw. Oppositional competition is at the heart of most multiplayer games, and many single player games are even played “against” a previous “best” play or high score.

A golfer may play against other players on a given course, but is always playing against his or her own previous scores on the course. In this way, the player may lose to competitors but win as far as a personal best is concerned. This is also true of bowling, racing, or a first-person shooter (FPS) multiplayer game such as Team Fortress 2.

Keep in mind that individual game mechanics and features may encourage or discourage cooperation or opposition, sometimes in unexpected ways. For instance, many Facebook games display lists of a player’s “friends” to encourage socially inclusive interaction, but the list is ranked leaderboard style, which encourages competition, not cooperation. Achieving a certain position on a leaderboard is just as competitive as any other head-to-head play (see Social Ties).

Fairness

Fairness, according to the dictionary, implies something that is free from bias, something that is just. And fairness in game design is no different; the game must be fair to the player. In other words, the game must always be unbiased and not cheat the player. For instance, if the player has been told that they will receive X for action Y, it is unfair to give the player Z for action Y. In this instance, the contract with the player has been broken. When making any contract with the player, it must be honored to be fair.

Fairness becomes particularly important in games of chance. A slot machine that is weighted to give particular results is not fair. Randomness and the guarantee thereof is part of the contract with the player. To renege on that contract is to be unfair to the player.

In a game such as Tetris, part of the contract with the player is that the next piece will be a randomly chosen one of seven piece configurations. Players often perceive the randomness to not be random at all; instead they think the game is giving them unwanted pieces on purpose. If this were, in fact, the case, the game would be acting unfairly or not in good faith. Tetris pieces are, in fact, totally random, but players perceive patterns of behavior and will attribute them to unfairness.
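A short simulation shows why truly random draws can still feel rigged. The sketch below assumes, as the paragraph above does, that each piece is drawn independently and uniformly from the seven shapes; not every Tetris implementation works this way, and the function names are invented for the example.

```python
import random

PIECES = "IJLOSTZ"  # the seven piece shapes


def uniform_draws(n, rng):
    """Draw n pieces independently and uniformly at random."""
    return [rng.choice(PIECES) for _ in range(n)]


def longest_drought(sequence, piece):
    """Length of the longest run of draws that never contains `piece`."""
    longest = current = 0
    for drawn in sequence:
        if drawn == piece:
            current = 0
        else:
            current += 1
            longest = max(longest, current)
    return longest


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    sequence = uniform_draws(1000, rng)
    for piece in PIECES:
        print(piece, longest_drought(sequence, piece))
```

Over a long session, a fair generator will typically produce droughts far longer than seven draws for at least one shape, and those streaks are exactly what a player remembers as the game "withholding" a piece.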

In a similar vein, a game that ramps slowly and then takes a sudden jump in difficulty is perceived to be unfair, and it is. Difficulty should scale up evenly so the player does not feel cheated or treated in an unjust fashion.

To go beyond this basic understanding of fairness, one might look to Rabin’s model of fairness. This model is based on three central rules. The first is that people are willing to sacrifice their own material well-being to help those who are being kind. In other words, if players are being kind, a single player will act altruistically and give up some material gain for those kind players. The second rule is the basic inverse of rule one: a player is willing to sacrifice her own material well-being to punish players who are being unkind. If a player is intentionally being unkind to others, another player will give up some degree of material gain to punish the unkind player. Finally, the third rule is that, as the material sacrifice gets smaller, both rules one and two are more likely to be followed. Put differently, the less the player is giving up materially, the more likely the player is to engage in altruistic or punishing behavior.

Obviously, Rabin’s rules of fairness pertain to multiplayer games where other players are involved. For instance, as it ordinarily costs nothing to send gifts in Facebook games, players are very likely to send gifts to other players who have been kind to them. On the other hand, if there is a severe material penalty in an MMO for killing another player, most players will refrain from killing even the most unkind other player.

Players who feel a game is treating them unfairly are likely to quit the game. Players who feel other players are being unfair are likely to punish those players. Keep this in mind when creating systems where the fairness of play may come into question.

Feedback Loops

When playing a game of Monopoly, often it just seems like one player gets stronger and stronger while the others flounder. The player in the lead keeps getting hotels and knocking other players out of the game while another player is just trying to complete their first set. There isn’t really a way to come back. It’s not fun. And the other players just want to upend the board and play something else.

Colloquially, this problem is called the rich-get-richer problem or a vicious cycle. In the previous example, when the winning player buys hotels, it means that they get more money when people land on their properties, which allows them to have more money to buy more hotels. Because they are rich, they keep getting richer.

This is a feature more generally known in game design as a feedback loop. There are two kinds of feedback loops:

In a positive feedback loop, achieving a goal is rewarded, which makes it easier to continue achieving goals. Here are some examples:

• In a roleplaying game (RPG), killing skeletons gives the player level-ups, which allow them to kill more skeletons.

• In Chess, capturing a player’s pieces makes them more vulnerable, leading to more captures.

• In Dodgeball, a team that has had more players eliminated has multiple threats to deal with, whereas the team with more players has fewer threats.

The other kind of feedback loop is a negative feedback loop. In this, achieving a goal makes it harder to continue achieving goals. Here are some examples:

• In Mario Kart, the blue shell targets only the leader of the race. Being in first means that player is likely to be hit with the shell, which may cause them to fall out of first.

• In American football, getting closer to the end zone means that there is less field remaining in which the 11 defenders are spaced, which means there are fewer empty zones in which to pass.

• In eight-ball pool, a player who sinks their own balls is left with fewer options and a greater chance of your balls blocking their shots.
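The difference between the two loops can be seen in a toy model. The sketch below is an assumption-laden illustration, not a model of any particular game: two players each earn one point per turn, and a single hypothetical `feedback` parameter adds to (or subtracts from) the leader's income in proportion to the current lead.

```python
def simulate(turns, feedback):
    """Return player A's final lead over player B.

    feedback > 0: positive loop, the leader earns more each turn.
    feedback < 0: negative loop, the leader earns less each turn.
    feedback = 0: no loop, the lead never changes.
    Gains are not clamped, so a trailing player can lose points.
    """
    a, b = 11.0, 10.0  # A starts one point ahead
    for _ in range(turns):
        lead = a - b
        a += 1.0 + feedback * lead
        b += 1.0 - feedback * lead
    return a - b


if __name__ == "__main__":
    for feedback in (0.1, 0.0, -0.1):
        print(feedback, round(simulate(10, feedback), 3))
```

In this model the lead is multiplied by (1 + 2 × feedback) every turn, so a positive value makes a one-point head start snowball while a negative value steadily erases it.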

Designers want to provide rewards that matter to their players, but players are generally only interested in rewards that help them win. This is why positive feedback loops are so prevalent. The problem is that they can lead a game out of balance, where only the first player to succeed will be able to succeed in the future. Although players want to be more powerful, what they really want is an interesting and challenging game. Sometimes giving them more power goes counter to that goal.

Negative feedback loops are sometimes seen as unfair as well. If a game is designed so it rewards losing, it is rewarding behavior that is contrary to its goals! Designers must be careful in implementing negative feedback loops so that performance in the game isn’t seen as irrelevant. For instance, many racing games that have a rubber banding mechanic are chided for rewarding sloppy driving.

Fixing a negative feedback loop problem is generally easy: Reward the player for achieving the game’s goals. Fixing a positive feedback loop problem can be a bit trickier. Designers may want to scrap positive feedback entirely, but they must be careful to make sure the player still feels an intrinsic reward for completing the game’s goals. Designers may wish to pair the positive feedback given with a kind of negative feedback. Or they may wish to find a reward that doesn’t contribute to the player’s efficacy, like cosmetic rewards such as new skins or animations.

Gardner’s Multiple Intelligences

In 1983, Howard Gardner, a developmental psychology professor at Harvard, came up with a theory of multiple intelligences. This theory states that all of us, as individuals, have strengths and weaknesses in the way we learn. As an example, some take to learning math in school very easily whereas others struggle. This does not necessarily mean they cannot learn it, but that the traditional ways in which math is taught in schools may not be the correct approach for these students.

Through his research, Gardner found that there are eight different intelligences or ways in which people learn; he broke them down this way:

• Logical-Mathematical: The process of learning through critical thinking and using logic. This is what may be vaguely referred to as left-brained learning.

• Spatial: The process of learning by using the mind’s eye to visualize items in space. This is how professional Chess players are able to visualize the moves not only they can make but that their opponent can make in turn.

• Linguistic-Verbal: The process of learning from words, whether they be aural or written. Those strong in this intelligence can learn from listening to speeches or reading books.

• Bodily-Kinesthetic: The process of learning from moving one’s body and the physical world around them. These learners do better if they can stand up, move around, or have physical contact with items they are learning from.

• Musical: The process of learning through the use of all things musical: tone, pitch, rhythm, and sound. A learner of this type can learn from childhood rhymes or anything posed to them in a lyrical fashion.

• Interpersonal: The process of learning from interactions with other people. This person might be a caring sort or a social butterfly.

• Intrapersonal: The process of learning from oneself. These are the quiet people who are always looking within themselves for answers.

• Naturalistic: The process of learning from relating to one’s natural surroundings.

If designers keep each of these intelligences in mind when designing a game, they can open it to a far wider range of players. These intelligences have been used in games before. Eve Online and Dungeons and Dragons appeal to those with logical and mathematical minds with all of the stats that players have to keep up with. A Rubik’s Cube and games like Lumines appeal to those people with a knack for spatial learning. Dungeons and Dragons and most text-based roleplaying games (RPGs) appeal to those who learn linguistically. Games like Red Light, Green Light and any of the new motion-controlled games excite those keen on kinesthetic learning. Musical Chairs and Legend of Zelda: Ocarina of Time utilize music to bring in those who learn this way. Online play over the Internet and group play in Dungeons and Dragons appeal to those who learn interpersonally. Solitary games such as Solitaire and some RPGs help those who are self-reflective learn more about themselves intrapersonally. Scavenger hunts and hidden-object games allow those who learn from their surroundings in a naturalistic way to excel.

Many games utilize two or three of these intelligences, but how many of them touch on a little bit of each?

Howard’s Law of Occult Game Design

Howard’s Law of Occult Game Design (or just The Law of Occult Game Design or Howard’s Law) can be expressed as a formula: “Secret Significance ∝ Seeming Innocence × Completeness.” Translated into everyday speech, this equation means that the power of secret significance is directly proportional to the apparent innocence and completeness of the surface game. In this case, innocence refers to a surface that appears simple, cheerful, even carefree. Completeness means that players can experience the game naively as a conventional platformer, shooter, or other standard game genre without being aware of any thematic depth. Sudden knowledge of the game’s depths transforms players’ experiences. The Law of Occult Game Design is often connected to a sense of the esoteric, of occult significance in both the connotation of dark magic and the original definition of occult: hidden.

The Law of Occult Game Design is why many indie game designers with emotive and thematic design goals tend to work in retro genres with simple mechanics and art styles. Indeed, many successful independent games could be boiled down to “the game seems like a simple platformer (or shooter/adventure game/puzzle game) but then. . . .” Retro genres and styles bring with them nostalgic expectations of simplicity associated with the early history of games. A metaphysical meditation on unseen cosmic forces is more unexpected, and therefore more powerful, in a sidescrolling shooter with eight-bit graphics and a chiptune soundtrack.

Often, a particular mechanic has an unusual twist that operates in both the narrative and gameplay universes of the game, thereby exemplifying excellent narrative design. For example, Braid is a sidescrolling platformer in the style of Super Mario Bros., but Braid’s time-reversal mechanic encodes a reflection on the nature of love and loss. Eversion appears to be a platformer into which Lovecraftian cosmic horror gradually intrudes, revealed partially through the player’s ability to evert (shift between dimensions). Terry Cavanagh’s Don’t Look Back appears to be a simple platformer, but the player is not allowed to move backward as he emerges from the underworld in the second half of the game. This gameplay rule mirrors a narrative rule attached to the hero, Orpheus, when he tried to rescue his lover, Eurydice, from the underworld: He could never look back, or her spirit would be pulled back into the land of the dead.

All of the games mentioned here create a similar transformative experience for the player, in which a seemingly ordinary game is revealed to have concealed thematic depth the entire time (see Theme), much like a Magic Eye picture from which a secret design suddenly emerges. Howard’s Law suggests that the intensity of this transformation will be greater to the extent that the game initially seemed to function as a one-dimensional, self-contained experience.

Occult design is also connected to the idea of the Easter egg (a secret hidden within the game, such as a designer’s initials), particularly when the Easter egg is integrated with the enterprise of world-building. The first widely recognized Easter egg appeared in the Atari 2600 game Adventure, in which players could access a secret room with the name of the game’s creator, Warren Robinett. Some Easter eggs promise a vast expansion of the original game, as if offering players the opportunity to peer beyond the veil into another world. For example, in the original Legend of Zelda for the Nintendo Entertainment System (NES), players who completed the game once, or who entered the name “ZELDA” for a new save file, revealed a second quest with altered dungeons.

Howard’s Law has design consequences in space, time, mechanics, and cumulative experience. In level design, densely packed, interconnected labyrinths with many hidden passageways and alcoves are the most effective ways to hide secrets. Demon’s Souls exemplifies this level design principle, which is writ large across the overworld in the game’s spiritual successor, Dark Souls. In terms of time, games that contain events that recur at regular, but unexpected, moments are especially powerful, as when black demonic dogs roam the streets after midnight in the cult horror game Deadly Premonition. Occult design can influence obscure mechanics, such as world tendency in Demon’s Souls, in which an undisclosed global variable allows an aggregate of player actions on the server to unlock hidden events, areas, and characters. Such secrets function most effectively when they combine cumulatively to reveal larger truths about the world of the game, as when the demonic dogs of Deadly Premonition echo an overarching dog shape in the map layout, as well as the pet dog of the game’s disguised cosmic antagonist.

Information

The amount and nature of information that a player has at their disposal at any point in a game can dramatically change the decisions that player makes. For instance, without knowing the rules or the general state of the game, the player cannot reason through the decisions they need to make. Instead, all they can do is make wild, uninformed guesses. So the type and level of information that is available at different points in a game can dramatically change the nature of how it is played.

Information about a game can take several forms and can be categorized for easier consideration.

Structure of the Game

First and foremost of these information types is the game structure, including both its setting and rules. Card games, for instance, print the entirety of the rule set for the game in booklets or on the side of the box. Board games like Checkers have strict rules about valid moves.

The playing environment itself should be regarded simply as information. Consider how the board layout and piece set-up for Chess can be communicated clearly as pure information using algebraic chess notation.

Even the random elements in games are clear information when considered as parameters, rather than as specific values. For instance, in Monopoly players can’t know exactly how far they will get to move on their next turn, but they do know movement is determined by rolling two dice.
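The two-dice rule can be worked out exactly, which shows how much a player really does know before rolling. The following is a minimal sketch; the function name `two_dice_distribution` is chosen for this example.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def two_dice_distribution(sides=6):
    """Exact probability of each total when rolling two fair dice."""
    faces = range(1, sides + 1)
    counts = Counter(a + b for a, b in product(faces, repeat=2))
    total_outcomes = sides * sides
    return {total: Fraction(count, total_outcomes)
            for total, count in sorted(counts.items())}


if __name__ == "__main__":
    for total, probability in two_dice_distribution().items():
        print(total, probability)
```

A player cannot know the next roll, but they can know that a seven is six times as likely as a two or a twelve, and that knowledge is part of the structure of the game.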

State of the Game

The second category of information is the state of the game at any point in time. Broadly, it can be summed up as “what is going on right now?” This information can include positioning of units, scores, resources, and so on. And the state of the game can be broader information than the specific placement of units on terrain. For example, some board games alternate through “movement” phases and “scoring” phases. Information regarding which of these two states the game is currently in determines which player actions are valid.
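As a rough illustration of state as information, the sketch below models a hypothetical board game that alternates between a movement phase and a scoring phase. The class, field, and action names are invented for the example; the point is only that the current phase is itself a piece of state that decides which actions are valid.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Phase(Enum):
    MOVEMENT = auto()
    SCORING = auto()


# Which player actions are legal in each phase (hypothetical action names).
VALID_ACTIONS = {
    Phase.MOVEMENT: {"move_unit", "end_phase"},
    Phase.SCORING: {"claim_points", "end_phase"},
}


@dataclass
class GameState:
    """Everything that answers the question 'what is going on right now?'"""
    phase: Phase = Phase.MOVEMENT
    scores: dict = field(default_factory=dict)          # player -> points
    unit_positions: dict = field(default_factory=dict)  # unit -> board square

    def is_valid(self, action):
        """An action is valid only if the current phase allows it."""
        return action in VALID_ACTIONS[self.phase]

    def end_phase(self):
        """Alternate between the movement and scoring phases."""
        if self.phase is Phase.MOVEMENT:
            self.phase = Phase.SCORING
        else:
            self.phase = Phase.MOVEMENT


if __name__ == "__main__":
    state = GameState()
    print(state.is_valid("claim_points"))  # scoring is not allowed yet
    state.end_phase()
    print(state.is_valid("claim_points"))  # now it is
```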

Game theory goes on to further describe how this information is used in games. Of course, the exact implementation of these principles varies from game to game, but general classifications help to better describe how game designers can manipulate this information.

Perfect Information

The most basic and least restrictive style of information dissemination in games is that of perfect information. Perfect information describes an environment where all players know every single thing about the game—the environment, the rules, the current location, and the status of all items as well as the current phase in the game. Simple board games often fall into this category. Certainly Chess, Checkers, Go, and Monopoly are prime examples of games with perfect information. Nothing is kept secret or hidden in these games.

Imperfect Information

In contrast, if some part of a game is hidden from one or more players, it is considered a game of imperfect information. Examples of this kind of game include the classic board game Clue, or the party game Werewolf. In both these cases, the fun revolves around finding out information that is kept secret from one or more players. In Clue, the secret information is the who/where/with what weapon of a murder. Werewolf is a village-wide hunt for secret werewolves in hiding. Both of these games and many more use the manipulation and pursuit of information as their Core Gameplay Loop.
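One way to picture imperfect information is as a filter between the full game state and what each player is shown. The sketch below is a simplified, hypothetical take on a Clue-like game; the class, the `view_for` helper, and the sample cards are assumptions for illustration and do not reproduce the actual rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClueState:
    solution: tuple  # (suspect, room, weapon), hidden from every player
    hands: dict      # player name -> set of cards that player holds


def view_for(state, player):
    """Return only the information `player` is allowed to see."""
    return {
        "own_hand": set(state.hands[player]),
        # Opponents' cards stay secret; only the number held is public.
        "opponent_hand_sizes": {
            name: len(cards)
            for name, cards in state.hands.items()
            if name != player
        },
    }


if __name__ == "__main__":
    state = ClueState(
        solution=("Plum", "Library", "Rope"),
        hands={"Ann": {"Scarlet", "Knife"}, "Ben": {"Study"}},
    )
    print(view_for(state, "Ann"))
```

In a game of perfect information, the same function would simply return the whole state to everyone.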

Games of imperfect information are further broken down and categorized, but that is covered in this book under the principle of Transparency, since all games of imperfect information revolve around the implications of secrets. Another principle that tackles this topic is Howard’s Law of Occult Game Design.

Koster’s Theory of Fun

A Theory of Fun for Game Design is a book by Raph Koster, published in 2004. It is a foundational work that all designers should acquaint themselves with. Koster tackles head-on how to make a game addictive, engaging, and entertaining. He also shows how games fail when they are unengaging and, well, simply not fun.

The premise of the book is that all games are actually low-risk learning tools, making every single game a sort of edutainment. Just as animals engage in play to learn things like dominance behaviors, how to hunt, and so on, humans also engage in games to learn. Playful learning releases endorphins, thus reinforcing the learning and bringing pleasure to the player. This endorphin cycle is what brings us back to a game experience. Once the game is no longer teaching us anything, we generally grow bored and stop playing.

To illustrate, let’s take a simple game: Tic-Tac-Toe. This game is very basic with a simplistic core mechanic. For youngsters, this is a fun game as they learn to master where to place their X’s and O’s. For an adult who has played the game most of their life, it’s not an interesting game; it has already been mastered and therefore no longer triggers an endorphin response as there is no more learning to be had. An adult (or even a child) who has had sufficient practice will know within the first few moves how to win, or at least how to force a draw. Without the learning involved, the game is no longer fun to play. Now, an adult may enjoy introducing his or her child to the game and thus derive fun from the teaching. However, this is usually a case of naches, that is, pride in a mentee or child, as the adult shares the experience of learning and joy through the child’s eyes. In any case, this type of fun is derived from Metagames.
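The claim that Tic-Tac-Toe can be fully mastered is easy to check by brute force. The sketch below is a plain minimax search written for this example (the board encoding and function names are assumptions): it explores every line of play and reports the outcome when both sides play perfectly.

```python
from functools import lru_cache

# All eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, player):
    """Outcome for X under perfect play: +1 X wins, 0 draw, -1 O wins.

    `board` is a 9-character string of 'X', 'O', and ' ';
    `player` is the side whose turn it is.
    """
    won = winner(board)
    if won == "X":
        return 1
    if won == "O":
        return -1
    if " " not in board:
        return 0
    other = "O" if player == "X" else "X"
    results = [value(board[:i] + player + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == " "]
    # X picks the best outcome for X; O picks the worst outcome for X.
    return max(results) if player == "X" else min(results)


if __name__ == "__main__":
    print(value(" " * 9, "X"))  # value of the empty board with X to move
```

The search returns 0 for the empty board: with perfect play the game is always a draw, which is why nothing is left for an experienced player to learn.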

Koster goes further with his theory, including how to use concepts such as “chunking” to help with game design. Chunking is the process by which a complex task is assimilated into something we do subconsciously (see Working Memory). For instance, when learning to drive, the new driver is faced with many tasks: watching the speedometer, the rear-view mirror, the side-view mirrors, the other cars, the traffic controls, and so on. However, by the time someone has learned to drive, they have chunked this information into one unit, so they can process it smoothly and almost without thinking. Obviously, experienced drivers must be engaged and conscious while completing the task of driving, but they don’t spend their time focusing on the process of how to check their rear-view mirror safely.

Koster goes on to incorporate biology, psychology, anthropology, and game theory in this work. His idea is that what we experience as “fun” is whatever engages and challenges us through a number of dynamics, most especially learning. He asserts that the mastery of challenges—learning how to achieve the goals of a game—is what makes it feel like fun. He also points out, however, that games are specifically designed to rewire brain patterns, and that this is a serious responsibility. He takes this seriously and cautions new game designers to keep their power and culpability in mind when designing games.

Ten years later, further research has borne out Koster’s assertions. In the second edition, he updates the book with these findings and includes related work, such as Lazzaro’s Four Keys to Fun and developments in psychology and teaching that reinforce his assertions.

Lazzaro’s Four Keys to Fun

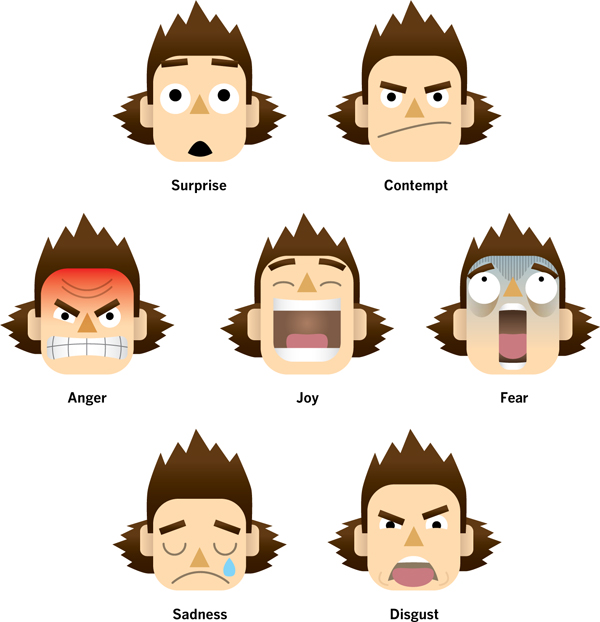

Lazzaro’s Four Keys to Fun is a design tool that designers can use to develop new ideas for mechanics and that researchers can use to examine the effectiveness of those mechanics. Engagement comes from the way a player’s favorite actions create emotions. Game mechanics create emotions in players that, in turn, drive engagement. There are four reasons why people play games.

First, they’re curious or they get hooked and are pulled in by a novel experience. This is called Easy Fun, like dribbling a basketball or popping some bubble wrap; it’s engaging all on its own and doesn’t require players to gain points or keep score.

Next, games offer a goal broken into achievable steps. Obstacles challenge players to develop strategy and new skills to create Hard Fun. Frustration hopefully increases the player’s concentration and when the player succeeds, this type of fun provides them with the feeling of the epic win.

The feeling of winning is more powerful when friends are also playing. With People Fun, the opportunities for competition, cooperation, communication, and leadership combine to increase engagement. There are more emotions involved in People Fun than in the other three types combined.

Finally, there is Serious Fun, where players play to change themselves and their world. People play a shooting game to blow off frustration at their boss, for instance. They play brain teasers to get smarter and a dancing game to lose weight. The excitement and relaxation they get from rhythm, repetition, collection, and completion create value and drive them to participate so that gaming is an expression of their values rather than a waste of their time.

The Four Keys to Fun focus on the activities gamers perform most in their play. Best-selling games have at least three of the Four Keys. Although they have favorites, gamers enjoy all four of these aspects of play. They typically rotate between these types of play in a single play session. Because each play style offers different things to do and unique emotions, players find that switching between them refreshes and lengthens play.

Easy Fun is the hook that games use to attract curious players and to draw them in. Gamers respond to Easy Fun with curiosity, wonder, and surprise as they experience novel controls, opportunities for exploration, and fantasy. Exploration, role play, creativity, and story all engage without too much challenge for the new gamer. Easy Fun provides various opportunities to experience other emotions when players have become too frustrated by the main challenge. Fun failure states reward the creative adventurer and make the game world feel more complete.

At a certain point, novelty loses its ability to hold the player’s attention and they search for something specific to accomplish. The most obvious fun in games is that they are challenging. Hard Fun balances game difficulty with player skill by offering a clear goal, obstacles, and opportunities for players to use strategy to create frustration and then fiero from the epic win. One of the biggest emotions that comes from gameplay, fiero, requires the player to feel frustrated first. In fact, the player has to feel so frustrated that they are about to throw the controller out the window; if they win at that moment, the feeling of fiero is quite strong and their arms punch the sky. Players can’t push a button and win the Grand Prix and feel all that good about it. Instead they need to develop skills to accomplish a goal. Fiero requires the opportunity for failure. Hard Fun achieves this by balancing difficulty and skill. If the game does not get more difficult, players leave because they are bored. If the game gets difficult too quickly, players leave because they are frustrated.

The thrill of victory is more fun in the context of friends. Social interaction in and around the game creates amusement and social bonding. People Fun mechanics such as competition, cooperation, caretaking, and communication create social emotions such as amusement, schadenfreude (pleasure at someone else’s misfortune), and amici (friendliness). More emotions are involved when people play the same game in the same room, and more emotions come from experiencing People Fun than from the other three types of fun combined. Walt Disney believed that shared experiences are compelling experiences; compelling experiences are more meaningful, which brings us to the last key.

Serious Fun is where the fun of games changes the player or their world. After the fiero from winning fades, the Serious Fun remains to create value and meaning for the player. Collection, repetition, and impact mechanics create excitement, relaxation, and the desire to acquire items of value or status.

For the best results, designers use the Four Keys as a design framework to map out the opportunity space for increasing engagement for their game. A great story and eye-catching art are powerful experience creators in games, but the core value proposition comes from the emotions gamers experience while they are making choices.

For more ideas on how to use the Four Keys, download the free white paper and poster from here: http://4k2f.com

Magic Circle

One of the features of games (indeed one of the essential parts of the very definition of games) is that they exist in a kind of make-believe. No one playing baseball, for instance, should see it as a battle for life and death. No matter the amount of contract bonuses on the line, it is still a game. The inherent assumption is that games exist in a reality separate from the real world.

Early 20th century historian Johan Huizinga pointed out in his Homo Ludens that games have their own separate areas for play:

The arena, the card-table, the magic circle, the temple, the stage, the screen, the tennis court, the court of justice, etc., are all in form and function play-grounds, i.e., forbidden spots, isolated, hedged round, hallowed, within which special rules obtain. All are temporary worlds within the ordinary world, dedicated to the performance of an act apart.

Katie Salen and Eric Zimmerman talk about one of the clauses from this quote—the magic circle—in their Rules of Play and further expound on Huizinga’s idea: that reality is indeed different when play begins. Tiny, red, extruded plastic shapes become “hotels,” trees become “base,” and a goal line becomes a spot that must be protected without regard to one’s own health or safety.

Although to many, it may seem a distinction without a difference, think instead about the freedom this type of play grants. An entire lucrative genre of digital games is centered around the play experience of being the first to murder the opposing player or to accumulate the most murders. This is absolutely horrific unless one is able to say that play itself is a separate reality. Now use this as a lens for creativity: What interactions can people not do in the real world (because of taboos, because of the laws of physics, because of lack of resources) that become irrelevant limitations in game reality?

Also, some games cross the boundary of the magic circle with regularity. A gambling addict is playing a game, but the effects of that game are certainly not limited to the reality of the magic circle. A player who fumes about his loss, which then puts him in a bad mood for the rest of the day, has crossed the membrane between the magic circle and reality. Indeed, griefers (see Griefing) are a type of ne’er-do-well who embrace the idea that the magic circle can be crossed, and use their power as a type of Metagame.

When “it’s only a game” is no longer only a game, the magic circle has been left behind.

Making Moves

Games may be Simultaneous or Sequential, referring to the order in which the players make their decisions or “take turns.” Distinguishing between Simultaneous and Sequential games is important because each requires different strategies and design considerations.

In Simultaneous games, players must consider what decisions other players will choose while making their own, but without knowing for certain what they will do. Each player also knows that the other players face the same dilemma. This crucial information—of what the other players are doing—will influence the outcome of any turn, but players don’t have access to it while making decisions (see Information and Transparency).

In Sequential games, each player has more information available to them. They can consider the decisions of players who just made their move and make reliable predictions about what players that follow in turn may do.

Simultaneous games may be literally simultaneous, like Rock, Paper, Scissors, or players may make decisions at different times, but without actually knowing the decisions of any other players (making them effectively simultaneous). These kinds of games are most easily presented using a Normal Form table to show their Payoffs. Simultaneous games may be solved by a Nash Equilibrium (in which all players have a single best choice and will not do better by changing their strategy based on common knowledge of the possible outcomes of all choices). Prisoner’s Dilemma is another example of a Simultaneous game (see its Normal Form table on its description page).

Sequential games require that players make their decisions in turn, as in Chess, and also that they know at least some of what decisions were made by other players previously (they may have Imperfect Information—see Information). In multiplayer games, it is also essential to understand whether there is a first-move advantage (or disadvantage) in the game, and at what point in the sequence the player is making their own decision; that is, who has already made their decision.

These kinds of games are most often presented using Extensive Form (or Decision Trees) to show their Payoffs. Extensive Form represents all of the options for each player for every decision at each opportunity based on every combination of available information and the payoff for each result. These can be highly mathematically complex for even the simplest game.

Sequential games are generally solved through Backward Induction. This is the process of determining the desired outcome, then working back through the Decision Tree to find those choices that will lead to that eventual outcome. Ideally, the player will also determine their best course of action for any branch in the tree based on the outcome of other players’ previous decisions. This allows them to make the optimal decision at any point in the game. This kind of reverse planning is generally based on an assumption of Rational Self-Interest (each player moving toward a goal that provides the best result for that player), and is considered to have been brought into game theory by John von Neumann and Oskar Morgenstern in the mid-20th century.
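Backward Induction can be sketched in a few lines of Python. This is a minimal illustration, not a general solver: the `Decision` class, the two-player "entry" game, and its payoff numbers are all hypothetical, and the sketch assumes every player simply picks the branch with the highest payoff for themselves.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    player: int      # index of the player who chooses at this node
    options: dict    # move label -> Decision node or payoff tuple (leaf)

def backward_induction(node):
    """Return (payoffs, moves) reached when every player is self-interested."""
    if isinstance(node, tuple):          # leaf: payoffs for (player 0, player 1)
        return node, []
    best_payoffs, best_moves = None, None
    for move, subtree in node.options.items():
        payoffs, moves = backward_induction(subtree)   # solve the end first
        if best_payoffs is None or payoffs[node.player] > best_payoffs[node.player]:
            best_payoffs, best_moves = payoffs, [move] + moves
    return best_payoffs, best_moves

# Hypothetical game: player 0 decides whether to enter a market,
# then player 1 decides whether to fight or share.
game = Decision(0, {
    "enter": Decision(1, {"fight": (-1, -1), "share": (2, 2)}),
    "stay out": (0, 5),
})

payoffs, moves = backward_induction(game)
print(moves, payoffs)
```

The recursion resolves player 1's choice before player 0's, which is the "working back through the Decision Tree" described above.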

MDA: Mechanics, Dynamics, and Aesthetics

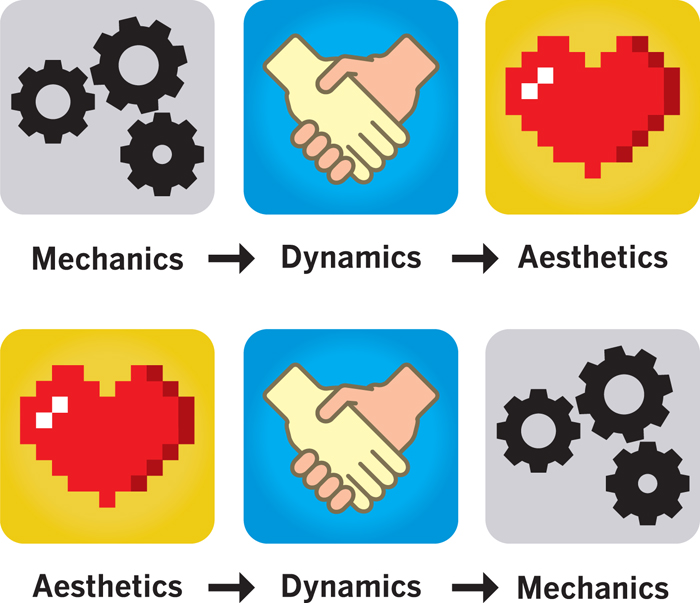

Mechanics, Dynamics, and Aesthetics (MDA) is a systemized approach to analyzing and understanding games. It comes from the work of designers Marc LeBlanc, Robin Hunicke, and Robert Zubek. They assert that all games can be broken down into the following components:

• Mechanics are the rules of the system. This element refers to how the system handles player input and what the player sees and does. In a board game, the mechanics consist of the rules and presentation. In a digital game, the mechanics are the rules vis-à-vis the source code of the game. The source code tells the machine how to interpret what the player does and how the game should react.

• Dynamics are how the actors in the system behave when the game is being played. This can be the hardest of the three to fully understand. Take the “game” that is played out when you are competing in an eBay auction. No rule says you need to bid as close to the close of the auction as possible. Yet the vast majority of bids happen in the closing seconds of an auction in bidders’ attempts to not signal to the other potential bidders (players) what their interests are (often using sniping software). This dynamic occurs because of the mechanics; the players can see the current high bid and have to stop bidding at a specified time. If this were not the case, people would bid differently. Dynamics are an explanation of the runtime effects of the mechanics.

• Aesthetics are the emotional output of the game due to the dynamics. In the aforementioned eBay example, because of the “sniping” dynamic, there is a lot of tension in the closing of an auction for the current leader, the potential bidders, and the seller. There is no catalog of viable aesthetics in games, but challenge, fear, tension, fantasy, social interaction, and exploration are common game aesthetics.

There are two ways to look at MDA. In the first, you, the game designer, start the design process by defining the desired aesthetic output of a game. You then decide what dynamics the players would engage in that could produce that aesthetic, and finally, you choose mechanics to set up those dynamics. In the second way, the game player experiences MDA from the other direction and interacts with the mechanics. Those mechanics result in certain dynamics, which produce a certain aesthetic response.

This is just one way to approach the task of creating a specific emotional reaction through games. It has its limitations. For instance, what a player experiences as an emotional response isn’t just a function of the dynamics that the game creates, but also a function of the player and his or her background. Imagine a child, a teen, and a grandparent playing Resident Evil. The child may find it extremely scary, the teen may find it exhilarating, and the grandparent may find it repulsive. Additionally, culture and time period affect this reaction. Few people would get disgusted at the sight of Death Race 2000 nowadays, but in its day, it created quite a stir.

Nonetheless, MDA can be an incredibly useful tool when you are taking a step back and analyzing the mechanics planned for a new game. Here are some questions it can help you answer: What behaviors will these mechanics create? Do these behaviors match the desired behaviors of your game? If a rule is changed, what change will that make to the dynamic? What is your game about? What mechanics align with what the game is about and what mechanics work against what your game is about?

Memory vs. Skill

In game design there are various types of game categories, such as first-person shooters (FPSs), roleplaying games (RPGs), and the like. Although those niche markets are great, much broader category classifications for games are memory games and skill games.

Memory games contain elements that call for trial and error, recognition from memory, reflexes (as in platformers), and knowledge of the game itself. Games of skill contain elements that call for physical or mental strength and conditioning in order to progress through them. Many games contain aspects of both in specific scenarios.

Let’s take a look at a few examples of memory games. A player in an FPS game becomes more successful in the game once they have played it several times; at this point, they remember where the elements are, where the item-ups are, and how the level is laid out. In a sidescrolling platformer, the player needs to remember where items are and how they move in order to use their reflexes to get through the levels. A racing game has elements of both, where the driver needs to remember the race track and use reflexes to maneuver around it.

Here are a few examples of skill games. The game of Pool, or Billiards, even in a video game format, takes math to calculate the angles of the shot and takes knowledge of how a 3D sphere will interact with other 3D spheres on a flat plane with six possible outcomes. In an RPG, the player might get information from a non-playable character (NPC) about how they can only beat the boss of the area with a specific combination of weapons and magic. This leads the player down a mental path to map out their next move and to evaluate how to get the items their character needs to pass the level.

Whereas a smaller casual game played on a smartphone can focus on remembering where to place a finger or where to aim a flying object, a larger game might have elements of both memory and skill. Good examples of this are the “coin crunchers” from the late, great arcade games. Players needed excellent memories to beat any part of these arcade games since they never changed, and they needed a lot of game-specific skills like using a limited number of bullets wisely, using the correct button combination to throw a super uppercut, or learning the timing on a jump.

When it comes to the two types of games, memory games tend to bore the player after a while because they play the same game, in the same way, in the same areas, and with the same tools or weapons. One method of solving this issue is to add in randomization so that the game mechanics, story, and outcomes stay the same, but the enemies spawn in different locations, the platforms move at different speeds or in different directions, or the items that drop, and where in the level they drop, change.

Games of skill tend to frustrate the player if they have not developed the skill set to surpass a certain part of the game. When this happens, a designer can help the player out using different methods. One such method appears in New Super Mario Bros. Wii, where Luigi helps show Mario the way to the end of the level if he dies quite a few times. In the same game, Mario can also fly back to the first world to watch videos of tips, tricks, and hidden items that keep the player playing and help the player progress through the game. A designer can also have the game offer tips, and create glowing items to show the player the way or which items they need to pick up. Designers can hide extra items around the level that can help the player in some way, and enemies can help the player as well; for example, jumping on an enemy to hurt him might propel the player high enough to reach a platform that they couldn’t reach before.
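The randomization idea for memory games can be sketched as follows. This is only an illustration under stated assumptions: `place_enemies`, the `SPAWN_POINTS` coordinates, and the seed values are hypothetical names and numbers, not taken from any particular game.

```python
import random

def place_enemies(spawn_points, enemy_count, seed=None):
    """Pick distinct spawn points for this playthrough.

    The level layout (the list of candidate points) never changes;
    only which points are occupied varies from run to run.
    """
    rng = random.Random(seed)            # a fixed seed makes a run reproducible
    return rng.sample(spawn_points, enemy_count)

# Hypothetical candidate positions (x, y) in a side-scrolling level.
SPAWN_POINTS = [(2, 0), (5, 3), (9, 1), (12, 4), (15, 0), (18, 2)]

layout_a = place_enemies(SPAWN_POINTS, 3, seed=1)
layout_b = place_enemies(SPAWN_POINTS, 3, seed=1)   # same seed, same layout
layout_c = place_enemies(SPAWN_POINTS, 3)           # unseeded, varies per run
```

Keeping a seed option is a design choice: it lets testers replay an exact layout while ordinary players still get a fresh arrangement on each attempt.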

Memorization techniques in games are also being used to advance skills in the real world. In the simulation industry, soldiers, doctors, students, boat captains, and many more are learning such things as how to field strip a weapon or how to perform a certain type of surgery.

Minimax and Maximin

Not to be confused with Min/Maxing, Minimax refers to the concept developed by John von Neumann that says it is rational for each player to choose a mixed strategy that maximizes their own payoff, and that the resulting combination of strategies and payoffs is Pareto optimal for that zero-sum game (see Payoffs, Outcomes: Pareto Optimality, and Zero-Sum Game). Minimax can also be used to reduce the opportunity cost (also known as regret) in economic game theory.

According to von Neumann, this theorem is the underpinning of all modern game theory. Maximin, the other side of the same coin, but applicable to non-zero sum games, is for players who focus on preventing the most catastrophic consequences and want to protect themselves from the worst outcomes of bad decisions. In general, the two ideas of Minimax and Maximin are nearly identical forms of rational self-interest, in which each player believes that they are making the right decisions to ensure their own success.

• Minimax. The Minimaxer is an opportunist or optimist, making decisions targeting the minimum possible payoff for the other player. Taking the option with the best success result isn’t always a likely choice, since it may not sufficiently reduce the gain for their opponent. Their choice will always be the Nash Equilibrium.

• Maximin. The Maximiner is a worrier or pessimist, making conservative decisions to avoid negative payoffs for themselves. Taking the option with the least-horrible failure result is a likely choice. The Maximiner is the kind of person who puts their money in a savings account instead of risking it in the stock market or even hides it in their mattress to prevent its loss in case of a bank collapse. Their concern is maximizing their minimum gain.

Mathematically, a Minimax algorithm is a recursive algorithm for making the next decision in a game with a specific number of players, normally two. A value is defined for each possible state of the game for each player and is computed using a position evaluation function that indicates how good it would be for that player to reach that position. According to the function, a rational Minimaxer would make the decision that maximizes the minimum value of the position based on their opponent’s potential subsequent decisions and values set by the player (i.e., based on predefined goals).
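A minimal sketch of the recursive algorithm, assuming a tiny two-player game invented for illustration: players alternately take one or two stones from a pile, and whoever takes the last stone wins. The position value is +1 if the maximizing player wins and -1 if they lose; the function names are hypothetical.

```python
def minimax(stones, maximizing):
    """Value of the position for the maximizing player (+1 win, -1 loss)."""
    if stones == 0:
        # The pile is empty, so the player who just moved took the last stone.
        return -1 if maximizing else 1
    values = [minimax(stones - take, not maximizing)
              for take in (1, 2) if take <= stones]
    # The maximizer picks the best value; the opponent picks the worst for them.
    return max(values) if maximizing else min(values)

def best_move(stones):
    """Move for the maximizer that maximizes the value the opponent can force."""
    legal = [take for take in (1, 2) if take <= stones]
    return max(legal, key=lambda take: minimax(stones - take, False))

print(minimax(3, True))   # a pile of 3 is lost for the player to move
print(best_move(4))       # taking 1 leaves the opponent with that lost pile
```

Real games are too large to search to the end, which is where the position evaluation function mentioned above comes in: it would replace the exact win/loss value at a chosen search depth.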

The Minimax theorem is also used for decisions where no other player is involved, but for which the results depend on unpredictable events. This may be approached as a decision made in the face of nature, chance, or environment, such as determining whether to invest in a risky stock, which could provide great profit if the company is successful, but which could fail utterly if not. In this case, the expected outcomes are similar to a two-player zero-sum game.

Nash Equilibrium

The Nash Equilibrium is named for its discoverer, John Nash, who based his research on previous work concerning Zero-Sum strategies (see Zero-Sum Games) by John von Neumann and Oskar Morgenstern. According to Nash, there is a set of strategies for each player in any mixed strategy game with finite choices that provides no incentive to unilaterally change their strategy because such a change would not result in a higher Payoff than if that player had continued with their present strategy.

When all players have a single best choice and will not do better by changing their strategy, this is a Nash Equilibrium. A Nash Equilibrium does not have to be the Pareto-optimal outcome (see Outcomes: Pareto Optimality) for that game. Examples of games with a Nash Equilibrium include Tic-Tac-Toe (for which the Nash Equilibrium results in a draw) and Prisoner’s Dilemma (in which the Nash Equilibrium results when both players betray each other).

The Nash Equilibrium can be used to predict the outcome of interactions between players based on their optimal strategies. It cannot predict the results of a decision without taking into account the actions of the other players, so it is not useful unless all of the players have common knowledge of the game’s possible decisions and the results. In this case, each player must know all of the possible outcomes and payoffs for all players so that they know which decision is best for them and which decision is most beneficial to other players (see Information and Transparency).

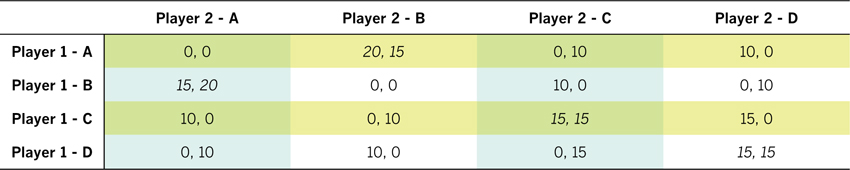

A Nash Equilibrium can be found mathematically through a payoff matrix. Such matrices are convenient only for games with few players and few strategies, however. If the first payoff number in the cell is the highest for that column and if the second number is also the largest for that row, then the cell contains a Nash Equilibrium. For example, in a two-player game with four possible strategies, the payoff matrix may appear as follows (with the Nash Equilibria italicized and results scored for Player 1, Player 2):

As long as the ordinal relationship between the two values in a cell doesn’t change, and the two values remain the highest in the column and the highest in the row, the Nash Equilibria will remain stable. In the example shown, values of 11, 12 for BA, 12, 11 for AB, and 16, 16 for CC and DD would retain the equilibrium of the matrix. On the other hand, changing CC or DD to 10, 10 would remove them as Nash Equilibria since there would be a higher value in either column or row for one of these payoffs.
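The cell test described above (first number highest in its column, second number highest in its row) can be sketched in Python. The matrix here is not the four-strategy example from the text; it is a Prisoner’s Dilemma whose payoff assignment (-1 each for mutual silence, -4 each for mutual betrayal, 0 and -10 when only one betrays) is an assumption made for illustration, as are the function and variable names.

```python
from itertools import product

def pure_nash_equilibria(payoffs, rows, cols):
    """Cells where neither player can gain by changing strategy alone.

    payoffs maps (row strategy, column strategy) -> (Player 1, Player 2).
    Ties count as equilibria in this sketch.
    """
    equilibria = []
    for r, c in product(rows, cols):
        p1, p2 = payoffs[(r, c)]
        best_p1_in_column = max(payoffs[(other, c)][0] for other in rows)
        best_p2_in_row = max(payoffs[(r, other)][1] for other in cols)
        if p1 == best_p1_in_column and p2 == best_p2_in_row:
            equilibria.append((r, c))
    return equilibria

moves = ["silent", "betray"]
dilemma = {
    ("silent", "silent"): (-1, -1),
    ("silent", "betray"): (-10, 0),
    ("betray", "silent"): (0, -10),
    ("betray", "betray"): (-4, -4),
}

# Different numbers, same ordering: the equilibrium should not move.
rank = {-10: 1, -4: 2, -1: 5, 0: 9}
rescaled = {cell: (rank[a], rank[b]) for cell, (a, b) in dilemma.items()}

print(pure_nash_equilibria(dilemma, moves, moves))
print(pure_nash_equilibria(rescaled, moves, moves))
```

Both calls report mutual betrayal as the only equilibrium, which illustrates the point about ordinal relationships: the equilibrium depends on which payoff is larger, not on the specific values.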

Of course, errors, complexity, distrust, risk, and irrational behavior may influence player strategies, causing them to choose a strategy with a lower payoff, but one which they may feel they have good reason to select. Player communication (outside agreements and credible or non-credible threats) can also influence the selection, particularly in sequential play of the same game, leading to Metagaming strategies (see Metagames) such as Tit-for-Tat, often found in series play of the Prisoner’s Dilemma.

The Nash Equilibrium has been used to analyze political and military conflict (including the Arms Race of the Cold War), economic trends such as monetary crises, and the flow of ordinary traffic in congested areas. The application of the Nash Equilibrium to the environment has even supported another game-theory concept, the Tragedy of the Commons. Iteration of games with Nash Equilibria has shown that repeated interaction between players can provide a basis for long-term strategies that may supersede any statistically predicted outcome, including a greater likelihood of cooperation in many cases, especially when players are allowed to communicate freely.

Outcomes: Pareto Optimality

Many game theory examples are presented as Zero Sum propositions, in which one player’s gain is another player’s loss. Certainly, in games, this is often the case, especially in games where players claim resources. In the board game Risk, for example, one person’s improved position through capturing a territory, by its nature, detracts from someone else’s position.

However, there are also situations where a person may improve their own position without negatively affecting any other players. Italian economist Vilfredo Pareto studied these relationships in areas such as wealth and income distribution. The concepts that he developed from his observations have been named after him.

Rather than view improvements solely related to the individual, he analyzed them in the scope of the whole system. For instance, a Pareto shift occurs if one is given an allocation of goods, money, land, and so on, and a change from one allocation to another (e.g., through a sale) occurs. Naturally, a zero sum transfer from one party to another is not an improvement of the system as a whole. However, a shift that improves one party’s situation without directly making another’s worse is called a Pareto improvement.

One example of a Pareto improvement is when a roleplaying game (RPG) character upgrades their abilities and spells. In typical game worlds, these actions do not depend on another character having something reduced. On the other hand, a situation in which a character steals another character’s equipment is, obviously, not a Pareto improvement since the shift impacts the second character negatively.

Often, Pareto improvements can be made by both sides—even multiple times. Once a system reaches a point where no more Pareto improvements can be made, it achieves Pareto efficiency or Pareto optimality. Any further shifts in the system would be zero sum—that is, the shift would be detrimental to at least one of the parties.

It is an important distinction that Pareto optimality isn’t necessarily a fair and equitable distribution. It also doesn’t imply any judgment on whether or not the distribution is the best possible arrangement. It only states that the choices have expanded to the point where neither one can be improved without impacting the other.

Additionally, it is interesting to note that a Dominant Strategy doesn’t always align with a Pareto optimality. For example, in the Prisoner’s Dilemma, the dominant strategy (betray) differs from the Pareto optimal solution (both players cooperate). This occurs because each player “staying silent” amounts to a pair of simultaneous Pareto improvements—each prisoner’s position gets better without making the other’s worse. At this point, however, neither one can improve their own position (by betraying the other player) without making the other person’s position worse.

In cooperative games or systems, Pareto optimality can be a desirable goal. In competitive games, however, when Pareto optimality is achieved, it often results in stalemate or inevitable conflict. In a strategy game such as Civilization, for example, players often expand their territory until their borders meet those of other players. In a general sense, these expansions are Pareto improvements as long as they don’t take territory from anyone else. However, once players have claimed all the territory, a Pareto optimality has been reached. The only way for one player to increase their holdings is to take territory from another.

Also, Pareto improvements (and, therefore, optimality) are often used as a resource-balancing game mechanic. For example, a game that involves decisions regarding what types of units to produce (through limited money, time, space, and so on) often deals with Pareto improvements. When given a finite number of resources, for example, a player might be given the decision to create offensive or defensive units. The increased ability to construct either one is a Pareto improvement as long as it doesn’t slow the production of the other. Once the maximum creation capacity is reached, however, a decision to increase one necessarily decreases the other. Again, there is no implication of what the “right mix” is—only the recognition that all resources are being used efficiently.

Pareto improvements possible: Either player can improve their position without having any impact on their opponent.

Pareto optimality: Neither player can improve their position without negatively impacting their opponent.
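The two states above can be checked mechanically. The sketch below assumes a hypothetical two-player map of six territory tiles, loosely modeled on the Civilization example; the function names and the tile count are illustrative only.

```python
from itertools import product

def is_pareto_improvement(new, old):
    """True if at least one player gains and no player loses."""
    return (all(n >= o for n, o in zip(new, old))
            and any(n > o for n, o in zip(new, old)))

def is_pareto_optimal(allocation, feasible):
    """True if no feasible allocation is a Pareto improvement on this one."""
    return not any(is_pareto_improvement(other, allocation) for other in feasible)

TILES = 6  # hypothetical map size
# Every way two players can hold tiles without exceeding the map.
feasible = [(a, b) for a, b in product(range(TILES + 1), repeat=2)
            if a + b <= TILES]

print(is_pareto_optimal((2, 3), feasible))  # one tile is still unclaimed
print(is_pareto_optimal((3, 3), feasible))  # map is full
print(is_pareto_optimal((6, 0), feasible))  # map is full, though hardly fair
```

The last case reflects the earlier point that Pareto optimality says nothing about fairness: one player holding the whole map is still an allocation that cannot be improved for anyone without a loss to someone else.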

Payoffs

A Payoff is the outcome (see Outcomes: Pareto Optimality) or result of a decision in a game, whether positive or negative, no matter how it is measured. That could be in score, profit, or some other form of perceived value that serves as incentive and motivates a player (see VandenBerghe’s Five Domains of Play).

It is important to note that not every player (see Bartle’s Player Types) is motivated by the same Payoff. Some players will fight to get to a higher score or level while others are more interested in how many flowers they can plant (see Metagames).

Rational self-interest is assumed among players of games in general, which means that everyone is expected to move toward the Payoff that is best for them, and everyone will try to maximize their Payoff (see Minimax and Maximin). Decisions are rationalized in terms of their effect on the player, based on the player’s own internal system of values. This sometimes results in a player doing what they think is best for the group, in hopes that it also improves their own situation (see Volunteer’s Dilemma), but usually rational self-interest means that decisions are made solely in the interest of the player, regardless of the impact on other players.

Game theory points out that Payoffs can be classified as either Cardinal or Ordinal.

• Cardinal Payoffs are those that represent some identified value of money or points or whatever is being used as a unit of measure. Cardinal Payoffs are fixed, specific numbers, which can be set at varying levels to provide a variety of relationships between outcomes. The key to Cardinal Payoffs is a specific number such as 1 or 0, yes or no. A reward is given or it is not.

• Ordinal Payoffs describe outcomes in which the value of the Payoff is of less interest than the relative order in which they occur. Ordinal Payoffs are relative, comparative values, ranked from best to worst, as in a race, where order of merit is more important than time or distance. The key to Ordinal Payoffs is order, such as 1, 2, 3, 8, and 12. The winner is the one at the top of the rank, not associated with a particular number.
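The difference between the two kinds of Payoff can be sketched in a few lines of Python. The racers and times below are invented for illustration; the point is only that an Ordinal ranking survives any change of scale that preserves order, whereas the Cardinal values themselves do not.

```python
from typing import Dict


def ordinal_ranks(cardinal: Dict[str, float],
                  higher_is_better: bool = True) -> Dict[str, int]:
    """Convert Cardinal values to ranks (1 = best); ties share a rank."""
    def better(a: float, b: float) -> bool:
        return a > b if higher_is_better else a < b

    # A player's rank is one more than the number of strictly better results.
    return {
        name: 1 + sum(better(other, value) for other in cardinal.values())
        for name, value in cardinal.items()
    }


# Hypothetical race times in seconds (lower is better).
race_times = {"Ann": 61.2, "Bo": 58.9, "Cy": 75.0}
ranks = ordinal_ranks(race_times, higher_is_better=False)
print(ranks)  # {'Ann': 2, 'Bo': 1, 'Cy': 3}

# Rescaling changes every Cardinal value but leaves the Ordinal result alone.
rescaled = {name: t * 1000 + 5 for name, t in race_times.items()}
print(ordinal_ranks(rescaled, higher_is_better=False) == ranks)  # True
```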

Payoffs are generally presented in a Normal Form table when play is simultaneous (see Making Moves); that is, when one player must move without knowing what the other player will do. The results of each player’s choice can then be compared. The table that accompanies the Rock, Paper, Scissors principle shows an example of a Zero-Sum Game with Cardinal Payoffs. This is made obvious by the possible outcomes, which consist solely of win/lose scenarios rather than a scale from success to failure with multiple points on it.

However, in a full match of Rock, Paper, Scissors—where the same participants play several games—the winner is decided not by who wins the final game of the match (this would be Cardinal), but by which player wins the most games. The players can be ranked on a continuum, in order, so the payoff in this case is Ordinal.

One thing to note about balancing payoffs in a game: The application of rational self-interest to the decision-making process (without credible commitments by other players) will often leave every player worse off than cooperation would have. As an example, consider the Prisoner’s Dilemma, where two players who cooperate with each other (not using rational self-interest) will each receive the second-best outcome. However, when both choose the option that could result in their freedom, betraying each other, both are instead sentenced for a longer period. Note that even though this game shows Cardinal Payoffs, they are essentially Ordinal: it is only important that they remain in the order shown (-10, -4, -1, 0), and any values are acceptable as long as they keep that sequence (see the table that accompanies the Prisoner’s Dilemma).
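A short Python sketch can make both claims concrete. It assumes the four values are assigned to the cells in the conventional way (mutual cooperation -1 each, mutual betrayal -4 each, a lone betrayer 0 and the betrayed partner -10); the accompanying table is not reproduced here, so treat that assignment, and all names in the code, as assumptions.

```python
from itertools import product

C, B = "cooperate", "betray"
MOVES = (C, B)

# (row player's payoff, column player's payoff). Assumed cell assignment.
PAYOFFS = {
    (C, C): (-1, -1),
    (C, B): (-10, 0),
    (B, C): (0, -10),
    (B, B): (-4, -4),
}


def best_replies(payoffs, opponent_move):
    """Moves that maximize a player's own payoff against a fixed opponent move."""
    scores = {mine: payoffs[(mine, opponent_move)][0] for mine in MOVES}
    top = max(scores.values())
    return {move for move, score in scores.items() if score == top}


def self_interested_outcomes(payoffs):
    """Cells where each move is a best reply to the other (symmetric game assumed)."""
    return [
        (a, b) for a, b in product(MOVES, repeat=2)
        if a in best_replies(payoffs, b) and b in best_replies(payoffs, a)
    ]


def relabel(payoffs, mapping):
    """Swap each payoff value for another one, cell by cell."""
    return {cell: (mapping[r], mapping[c]) for cell, (r, c) in payoffs.items()}


# Any order-preserving relabeling of -10 < -4 < -1 < 0 gives the same result.
RESCALED = relabel(PAYOFFS, {-10: -100, -4: -3, -1: -2, 0: 50})

print(self_interested_outcomes(PAYOFFS))   # [('betray', 'betray')]
print(self_interested_outcomes(RESCALED))  # [('betray', 'betray')]
print(PAYOFFS[(C, C)], "beats", PAYOFFS[(B, B)], "for both players")
```

Self-interested play lands on mutual betrayal under both sets of numbers, even though mutual cooperation would have been better for each player.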

Prisoner’s Dilemma

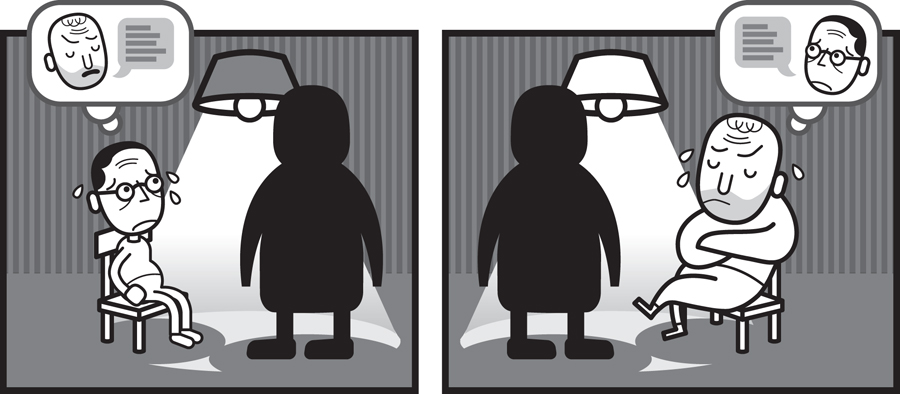

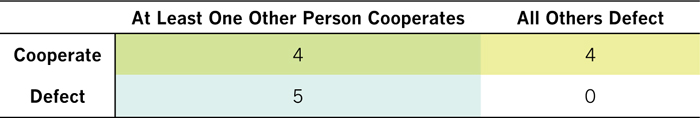

The Prisoner’s Dilemma is a simple game that demonstrates why two players may make individual choices that are not in their own best interest instead of cooperating for a better result. It models mutual trust in a Synchronous, Non-Zero-Sum (and usually Symmetrical) game of Ordinal Payoffs in which rational self-interest is generally assumed (see A/Symmetric Play and Synchronicity and Zero-Sum Game). Cooperative behavior is nevertheless frequently observed, even though communication between players is forbidden, and it is heavily rewarded: mutual cooperation is a Pareto-Optimal Outcome (see Outcomes: Pareto Optimality). The game may be played classically, as a single decision, or iteratively, resulting in patterns of behavior based on previous outcomes. In the table at the right, a prisoner who cooperates is staying silent in support of the other prisoner. A prisoner who betrays their partner is implicating the other prisoner to the authorities.

When two players play the Prisoner’s Dilemma multiple times in succession, each forms an opinion of the other based on previously exhibited behavior and begins to build strategies around it. When the number of games (N) is known in advance, the most rational decision is to betray the other prisoner every time. The reasoning runs backward from the end: on the last play there is no future cooperation to protect, so betrayal is the best choice; a player who assumes the opponent is equally rational expects that betrayal and therefore has no reason to cooperate on the next-to-last round either, and so on back to the beginning. In practice, most people do not reason this way and cooperate at least part of the time. In a series of an unknown number of plays of Prisoner’s Dilemma, constant betrayal is no longer the game’s Dominant Strategy, but only a Nash Equilibrium.

In fact, several strategies develop among actual human players that are not as rational, but are more successful. The most basic of these strategies is known as Tit-for-Tat, in which the player cooperates on the first round and, on every later round, does whatever their opponent did on the previous round. Robert Axelrod, one of the early researchers of the iterated game, identified four conditions that a player’s strategy must meet to be successful:

• Nice. Do not betray before your opponent betrays (cooperate as often as possible).

• Non-Envious. Never try to gain more than the other player (optimal balanced score).

• Retaliating. Punish your opponent when they betray you (do not always cooperate).

• Forgiving. Return to cooperation after retaliation (as long as your opponent does not betray you).
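Tit-for-Tat and the four conditions can be illustrated with a small iterated match in Python. This is a sketch only: the payoff values reuse the sequence -10, -4, -1, 0 under an assumed cell assignment, and the helper names (`play_match`, `scripted`) are hypothetical.

```python
C, B = "C", "B"  # cooperate, betray

# (payoff to first player, payoff to second player); assumed cell assignment.
PAYOFF = {(C, C): (-1, -1), (C, B): (-10, 0), (B, C): (0, -10), (B, B): (-4, -4)}


def tit_for_tat(my_history, their_history):
    """Cooperate first, then copy the opponent's previous move."""
    return C if not their_history else their_history[-1]


def always_betray(my_history, their_history):
    return B


def scripted(moves):
    """Opponent that plays a fixed list of moves (for illustration only)."""
    def strategy(my_history, their_history):
        return moves[len(my_history)]
    return strategy


def play_match(strategy_a, strategy_b, rounds):
    """Play repeated rounds; return the total scores and both move histories."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Both moves are chosen before either is revealed (synchronous play).
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a, score_b = score_a + pay_a, score_b + pay_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return (score_a, score_b), (hist_a, hist_b)


print(play_match(tit_for_tat, tit_for_tat, 10)[0])    # (-10, -10)
print(play_match(tit_for_tat, always_betray, 10)[0])  # (-46, -36)

# Nice, retaliating, forgiving: one betrayal is punished once, then forgotten.
_, (tft_moves, _) = play_match(tit_for_tat, scripted([C, B, C, C]), 4)
print(tft_moves)  # ['C', 'C', 'B', 'C']
```

In this sketch Tit-for-Tat never outscores its opponent within a single match (the Non-Envious condition); its strength comes from the high totals it earns alongside other cooperative strategies.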

Unorthodox methods, based on techniques such as random betrayal (the player chooses cooperation or betrayal at random, which results in a slight gain against nicer players), Pavlovian reward (the player cooperates whenever the opponent does the same thing as the player did on the previous turn), and team competition (Min/Maxing by having designated teammates lose so that others can win, communicated through a Morse Code–like cooperate/betray scheme), can sometimes gain an advantage over more traditional strategies. The game can also be played with a single player (balancing among outcomes, each with its own advantages and repercussions), with multiple players on each side each controlling a portion of the outcome, or even with multiple teams managing cooperation and refusal (or resource allocation) between the different teams. This can sometimes result in a Tragedy of the Commons. Additional variants allow for Asynchronous play (see A/Symmetric Play and Synchronicity), Cardinal Payoffs, or Voluntary Transparency and distinctly change the nature of the game.

More recently, William Press and Freeman Dyson introduced a new approach called the Zero-Determinant Strategy. It holds that one player can control the game by causing their opponent to believe that they are going to make a specific choice, gaining some advantage through the use of disinformation, but only if they can determine the strategy that their opponent is using. Although this kind of second-order analysis is common in games that allow communication (for example, bluffing in Poker), it had not previously been applied to the Prisoner’s Dilemma, primarily because communication between players is forbidden. Even though it has been shown to offer only a temporary advantage, particularly against other players who also know the Zero-Determinant Strategy, this heavily probability-based method has at least reopened the investigation into the Prisoner’s Dilemma and may lead to further understanding of Metagame strategies for this particular game theory model.

The Prisoner’s Dilemma was originally developed by Melvin Dresher and Merrill Flood in 1950 (although it was named by Albert Tucker). It is frequently used in the study of economics (for business development and advertising campaigns), in military decision making (when escalation or disarmament could each result in war), in psychology (as a determinant for addiction models), and in evolutionary biology (in investigating whether genetic or social desires can overcome individual wants and needs). It provides a useful paradigm for comparing rational expectations with irrational behaviors to determine potential motivators outside the scope of mathematical probability.

The Prisoner’s Dilemma comes down to this: Do you betray your partner (in crime) or do you cooperate with him (to foil the cops)? It goes to the heart of how players decide to cooperate or compete with each other.

As long as B > A > D > C and they are proportional, the game need not be symmetrical.

Puzzle Development

Puzzles are a very interesting subset of game development. Designer Scott Kim relates this definition of a puzzle: “something fun with a right answer.” Squishiness of the definition aside, this does provide at least one useful element of a puzzle: It can be solved. A few additional requirements make for an effective puzzle.

A good puzzle must be neither too easy nor too hard for its audience. A perfect puzzle is just difficult enough to challenge the player, but not so difficult that they give up in frustration. A good technique for nudging puzzlers into this Flow state is to use breadcrumbs. These are hints, either internal or external to the puzzle, that allow players to progress. For instance, in Sudoku or crossword puzzles, as the puzzle is partially solved, the remaining unsolved blocks get easier and easier. A player may not have known 48 Across when they started the crossword puzzle, but by filling in nearby Down clues, they can unlock letters that partially solve 48 Across. Breadcrumbs reveal hints gradually, easing the puzzle closer and closer to the player’s natural difficulty tolerance.

A good puzzle must involve a clever, intellectual effort to get from unsolved to solved. It must not require simple brute force. Consider this example: “I’m thinking of a number from 1 to 10. What is it?” “1?” “No.” “2?” “No.” “3?” “No.” This is not a good puzzle. A maze with just a couple of obviously false paths is not a good puzzle. Because of this, quizzes and some riddles make for poor puzzles in general, because the solver either knows the answer or does not and cannot break the puzzle down into steps.

A puzzle can be generated randomly but must be deterministic once the player encounters it. For instance, a Sudoku board can be generated pseudo-randomly, but once the puzzle starts, every player who makes the same moves will experience it in the same way. If two players get the same Minesweeper board and uncover the same squares in the same order, they will have identical experiences. By contrast, two people who play tennis and make the exact same movements will have very different game experiences. Chess, unless played against an artificial intelligence (AI) specifically designed for this purpose, is not deterministic: if a player makes the same five opening moves against five different opponents, those opponents may respond in five (or more) different ways. Chess can be made a puzzle if a set of moves and deterministic rules are provided. Chess problems in puzzle magazines, for example, ask readers to reach mate in X moves, with rules for how the opponent will move.
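One common way to get "random when generated, deterministic when played" is to drive generation from a seed. The Python sketch below assumes a simplified Minesweeper in which a board is just a set of mine coordinates; the function names and board size are illustrative.

```python
import random


def make_minefield(width, height, mines, seed):
    """Place mines pseudo-randomly; the same seed always yields the same board."""
    rng = random.Random(seed)  # private generator, unaffected by other code
    cells = [(x, y) for y in range(height) for x in range(width)]
    return frozenset(rng.sample(cells, mines))


def adjacent_mines(minefield, x, y):
    """Count mines in the eight squares surrounding (x, y)."""
    return sum(
        (x + dx, y + dy) in minefield
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )


def reveal(minefield, x, y):
    """What a player sees on uncovering (x, y): 'mine' or a neighbor count."""
    return "mine" if (x, y) in minefield else adjacent_mines(minefield, x, y)


# Two players receive the same seed and uncover the same squares in order.
board_one = make_minefield(9, 9, 10, seed=2024)
board_two = make_minefield(9, 9, 10, seed=2024)
moves = [(0, 0), (4, 4), (8, 8)]

print(board_one == board_two)  # True
print([reveal(board_one, x, y) for x, y in moves]
      == [reveal(board_two, x, y) for x, y in moves])  # True
```

All randomness is spent before play begins; nothing in `reveal` depends on chance, so identical moves always produce identical results.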

Finally, a good puzzle must let the player know what the goal is and what they need to manipulate to reach that goal. Some puzzles are confounding simply because the player is never told the rules. Many old adventure games had this problem—a room would have some elements that were obviously a puzzle, but the goals of the puzzle and what pieces had to be manipulated and how were never described to the user. Designers of these “puzzles” used rule obfuscation to increase their difficulty to “challenging,” but they never actually developed a puzzle that was fair. “Players have to solve how to solve the puzzle” is an intellectual cop-out used to avoid making interesting puzzles.

To sum up, when designing a puzzle, ensure that it

• Keeps the player in a Flow state according to difficulty

• Requires clever intellectual effort to get from unsolved to solved

• Is deterministic

• Is clear and fair as to the goals and mechanics

Rock, Paper, Scissors

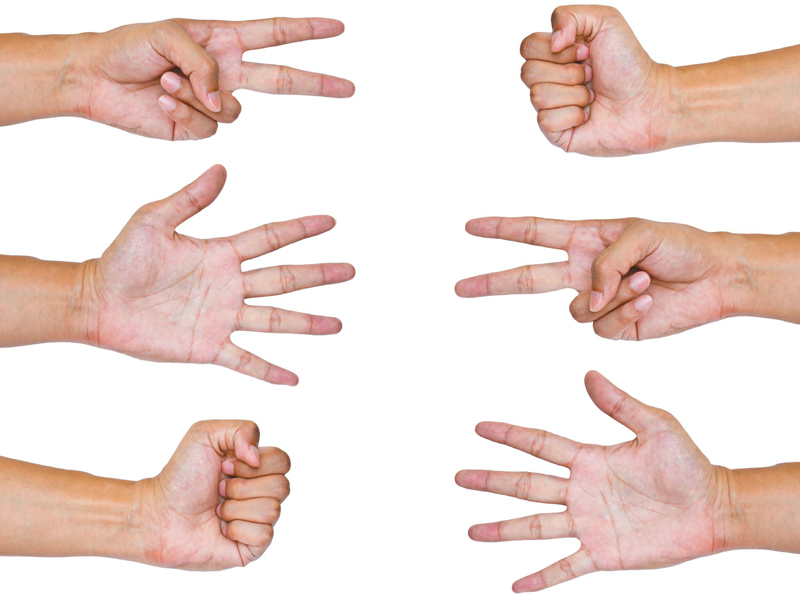

Rock, Paper, Scissors, also known as roshambo, is a synchronous, semi-random, zero-sum game played only with hand gestures (see Zero-Sum Game). On the surface, it is a very simple game, but its attributes are referenced and reused continually in game design, and its simplicity hides some complex ideas.

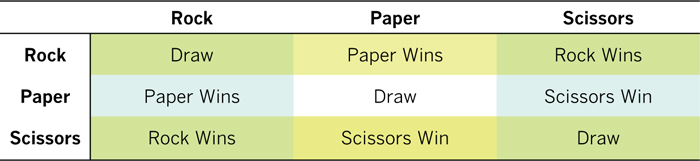

The game uses three symbolic gestures which are balanced against the other two, as shown here:

As you can see from the table, each gesture—Rock (fist), Paper (open hand), and Scissors (two fingers, spread apart)—beats one other gesture, and yet it can be beaten by one other gesture as well. This game is perfectly balanced, with the gestures forming a circular pattern of supremacy: Rock > Scissors > Paper > Rock and so on.
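The circular pattern can be written down as a small payoff function. This Python sketch assumes Cardinal Payoffs of +1 for a win, -1 for a loss, and 0 for a draw; those values and the function names are illustrative choices.

```python
GESTURES = ("rock", "paper", "scissors")

# Each gesture beats exactly one other: Rock > Scissors > Paper > Rock.
BEATS = {"rock": "scissors", "scissors": "paper", "paper": "rock"}


def payoff(mine, theirs):
    """Return (my payoff, their payoff): +1 win, -1 loss, 0 draw (assumed values)."""
    if mine == theirs:
        return (0, 0)
    return (1, -1) if BEATS[mine] == theirs else (-1, 1)


def expected_payoff(mine):
    """Average payoff against an opponent who picks uniformly at random."""
    return sum(payoff(mine, theirs)[0] for theirs in GESTURES) / len(GESTURES)


# Normal Form table: rows are my gesture, columns the opponent's.
for mine in GESTURES:
    print(mine.ljust(9), [payoff(mine, theirs) for theirs in GESTURES])

# Zero-sum: the two payoffs in every cell cancel out.
print(all(sum(payoff(a, b)) == 0 for a in GESTURES for b in GESTURES))  # True

# Balanced: no gesture does better than any other against random play.
print([expected_payoff(g) for g in GESTURES])  # [0.0, 0.0, 0.0]
```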

Games are normally played in matches, such as “best two out of three,” to prevent cheating and to allow for the formation of strategies. Draws are not counted against the total. National and international championships are held for this game, sometimes for very high stakes.

Skilled players can win more frequently than the default one-third of the time through an understanding of the game’s patterns and how other players are likely to react. Strategies may be used, such as ploys to confuse the opponent, including yelling the names of gestures other than the one the player is using, or goading the other player into making an invalid move (neither Rock, nor Paper, nor Scissors) to force them to forfeit (see Metagames). Some players plan out all three of their selections for a match in advance, which can prevent confusion or hesitation, but can also result in predictability when competitions allow players to watch each other perform. Computer programs have also been developed that can “play” Rock, Paper, Scissors against other computer opponents and can select “gestures” using algorithms based on player patterns and trends, Markov Chains, strategic prediction, and random numbers.
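As a rough illustration of the pattern-based approach, here is a minimal first-order Markov Chain predictor in Python. It is a sketch under simple assumptions (it looks only at the opponent's single previous gesture and falls back to a fixed default when it has no data); the class and its methods are hypothetical and not taken from any particular program.

```python
from collections import Counter, defaultdict

# The gesture that beats each gesture.
COUNTER_TO = {"rock": "paper", "paper": "scissors", "scissors": "rock"}


class MarkovPredictor:
    """Guess the opponent's next gesture from what followed their last one."""

    def __init__(self, default="rock"):
        self.transitions = defaultdict(Counter)  # previous gesture -> next counts
        self.last = None                         # opponent's most recent gesture
        self.default = default                   # used when there is no data yet

    def observe(self, opponent_move):
        """Record the opponent's move after a round has been played."""
        if self.last is not None:
            self.transitions[self.last][opponent_move] += 1
        self.last = opponent_move

    def next_move(self):
        """Play the counter to the opponent's most likely next gesture."""
        seen = self.transitions.get(self.last)
        if not seen:
            return self.default
        predicted = seen.most_common(1)[0][0]
        return COUNTER_TO[predicted]


# A predictable opponent who always cycles rock -> paper -> scissors.
bot = MarkovPredictor()
for gesture in ["rock", "paper", "scissors", "rock", "paper", "scissors", "rock"]:
    bot.observe(gesture)

# After "rock" this opponent has always played "paper", so the bot counters it.
print(bot.next_move())  # scissors
```

Against an opponent who really does choose at random, a predictor like this gains nothing; it only pays off when the opponent falls into habits.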

The concept of a circular chain of supremacy has been used in other games to prevent the evolution of a dominant strategy and allow each type of unit to hold an equal value in gameplay (see Dominant Strategy). For example, in a modern war game, tanks may beat infantry, while infantry beat artillery, and artillery beat tanks. Usually, these advantages are set up as weaker attacks and specific defenses against the other units, but the direct causality can be modified and obfuscated by attributes (see Transparency) and triggers like weather, terrain, tactics, and other influences (see Objects, Attributes, States).