5.4 Invariant Subspaces and the Cayley-Hamilton Theorem

In Section 5.1, we observed that if v is an eigenvector of a linear operator T, then T maps the span of {v} into itself.

Definition.

Let T be a linear operator on a vector space V. A subspace W of V is called a T-invariant subspace of V if T(W)⊆W, that is, if T(v)∈W for all v∈W.
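The definition can be tested mechanically when V = Fⁿ and T is left-multiplication by a square matrix A. The Python sketch below is a minimal illustration under that assumption. The helper names (`mat_vec`, `rank`, `is_invariant`) and the sample matrix are hypothetical. Because T is linear, T(W) ⊆ W holds as soon as T maps each vector of a spanning set for W back into W. The sketch therefore checks only the spanning vectors, using exact rational arithmetic to decide membership by comparing ranks.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Return the matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def rank(vectors):
    """Rank of a list of vectors, by Gaussian elimination over the rationals."""
    rows = [[Fraction(c) for c in v] for v in vectors]
    r = 0
    for col in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_invariant(A, spanning):
    """True if W = span(spanning) satisfies A(W) ⊆ W.

    By linearity it is enough that A w lies in W for every spanning vector w,
    i.e. that appending A w to the list never increases the rank.
    """
    r = rank(spanning)
    return all(rank(spanning + [mat_vec(A, w)]) == r for w in spanning)

# Hypothetical operator on R^3: rotate the xy-plane by 90 degrees, double z.
A = [[0, -1, 0], [1, 0, 0], [0, 0, 2]]
print(is_invariant(A, [[1, 0, 0], [0, 1, 0]]))  # True  (xy-plane)
print(is_invariant(A, [[1, 0, 0]]))             # False (x-axis)
print(is_invariant(A, [[0, 0, 1]]))             # True  (z-axis)
```

For the sample matrix, which rotates the xy-plane through 90° and doubles the z-coordinate, the xy-plane and the z-axis are invariant, while the x-axis is not.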

Example 1

Suppose that T is a linear operator on a vector space V. Then the following subspaces of V are T-invariant:

(1) {0}

(2) V

(3) R(T)

(4) N(T)

(5) Eλ, for any eigenvalue λ of T.

The proofs that these subspaces are T-invariant are left as exercises (see Exercise 3).

Example 2

Let T be the linear operator on R3

Then the xy-plane={(x, y, 0): x, y∈R}

Let T be a linear operator on a vector space V, and let x be a nonzero vector in V. The subspace

$$W = \operatorname{span}(\{x, T(x), T^2(x), \ldots\})$$

is called the T-cyclic subspace of V generated by x. It is a simple matter to show that W is T-invariant. In fact, W is the “smallest” T-invariant subspace of V containing x. That is, any T-invariant subspace of V containing x must also contain W (see Exercise 11). Cyclic subspaces have various uses. We apply them in this section to establish the Cayley-Hamilton theorem. In Exercise 31, we outline a method for using cyclic subspaces to compute the characteristic polynomial of a linear operator without resorting to determinants. Cyclic subspaces also play an important role in Chapter 7, where we study matrix representations of nondiagonalizable linear operators.
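The definition suggests a direct computation when V = Fⁿ and T is given by a square matrix A. Apply A repeatedly to x, and stop at the first vector that depends on the ones already collected. The Python sketch below does this with exact rational arithmetic. The function names and the sample matrix are hypothetical. Stopping at the first dependent power is justified by the argument in the proof of Theorem 5.21(a), which shows that every later power then also lies in the span of the vectors already found.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Return the matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def rank(vectors):
    """Rank of a list of vectors, by Gaussian elimination over the rationals."""
    rows = [[Fraction(c) for c in v] for v in vectors]
    r = 0
    for col in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def cyclic_basis(A, x):
    """Basis [x, Ax, ..., A^(k-1) x] for the A-cyclic subspace generated by x != 0."""
    basis = [[Fraction(c) for c in x]]
    while True:
        nxt = mat_vec(A, basis[-1])
        # Once A^j x depends on the earlier vectors, so does every later power.
        if rank(basis + [nxt]) == len(basis):
            return basis
        basis.append(nxt)

A = [[0, -1, 0], [1, 0, 0], [0, 0, 2]]   # hypothetical operator on R^3
print(len(cyclic_basis(A, [1, 0, 0])))   # 2: the cyclic subspace is the xy-plane
print(len(cyclic_basis(A, [1, 0, 1])))   # 3: the cyclic subspace is all of R^3
```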

Example 3

Let T be the linear operator on R3

We determine the T-cyclic subspace generated by e1=(1, 0, 0)

and

it follows that

Example 4

Let T be the linear operator on P(R) defined by T(f(x))=f′(x)
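For the differentiation operator, the cyclic subspaces can be found by hand. The Python sketch below only illustrates the general pattern, using hypothetical generators. Polynomials are stored as coefficient lists [c0, c1, …, cn], a representation chosen for this sketch. The sketch collects f, f′, f″, … until the zero polynomial appears. Each nonzero derivative has degree one less than the polynomial before it, so the collected polynomials are linearly independent. They therefore form a basis for the T-cyclic subspace generated by f. For a polynomial of degree n, this subspace is Pn(R), even though P(R) itself is infinite-dimensional.

```python
def derivative(coeffs):
    """Differentiate [c0, c1, ..., cn], where c_i is the coefficient of x^i."""
    return [i * c for i, c in enumerate(coeffs)][1:]

def derivative_orbit(coeffs):
    """Return f, f', f'', ... up to (not including) the zero polynomial.

    Each nonzero derivative has strictly smaller degree than the previous
    polynomial, so these form a basis for the cyclic subspace generated by f.
    """
    orbit = []
    current = list(coeffs)
    while any(c != 0 for c in current):
        orbit.append(current)
        current = derivative(current)
    return orbit

print(derivative_orbit([0, 0, 0, 1]))  # [[0, 0, 0, 1], [0, 0, 3], [0, 6], [6]]
```

The output lists x³, 3x², 6x, and 6, a basis for P3(R).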

The existence of a T-invariant subspace provides the opportunity to define a new linear operator whose domain is this subspace. If T is a linear operator on V and W is a T-invariant subspace of V, then the restriction $T_W$ of T to W is a mapping from W to W, and it follows that $T_W$ is a linear operator on W (see Exercise 7).

Theorem 5.20.

Let T be a linear operator on a finite-dimensional vector space V, and let W be a T-invariant subspace of V. Then the characteristic polynomial of $T_W$ divides the characteristic polynomial of T.

Proof.

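Choose an ordered basis $\gamma = \{v_1, v_2, \ldots, v_k\}$ for W, and extend it to an ordered basis $\beta = \{v_1, v_2, \ldots, v_k, v_{k+1}, \ldots, v_n\}$ for V. Let $A = [T]_\beta$ and $B_1 = [T_W]_\gamma$. Then, by Exercise 12, A can be written in the form

$$A = \begin{pmatrix} B_1 & B_2 \\ O & B_3 \end{pmatrix}.$$

Let f(t) be the characteristic polynomial of T and g(t) the characteristic polynomial of $T_W$. Then

$$f(t) = \det(A - tI_n) = \det\begin{pmatrix} B_1 - tI_k & B_2 \\ O & B_3 - tI_{n-k} \end{pmatrix} = g(t)\cdot\det(B_3 - tI_{n-k})$$

by Exercise 21 of Section 4.3. Thus g(t) divides f(t).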
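The divisibility in Theorem 5.20 can be checked for a concrete matrix. The Python sketch below is an illustration under our own assumptions. It computes det(A − tI) exactly with the Faddeev-LeVerrier recurrence, a standard determinant-free algorithm swapped in here for convenience. That recurrence produces det(tI − A), so the sketch multiplies by (−1)ⁿ to match this book's convention. The sketch then divides by the characteristic polynomial of the upper-left block B1. The 3×3 matrix is hypothetical. Its zero entries below B1 make span({e1, e2}) invariant, as in the block form used in the proof. Polynomials are coefficient lists, lowest degree first.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def char_poly(A):
    """Coefficients [c0, ..., cn] of det(A - tI), lowest degree first.

    Faddeev-LeVerrier yields det(tI - A); multiplying by (-1)^n converts it.
    """
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * n + [Fraction(1)]          # c[n] = 1 (monic)
    M = [[Fraction(0)] * n for _ in range(n)]      # M_0 = 0
    for k in range(1, n + 1):
        AM = matmul(A, M)
        # M_k = A M_{k-1} + c_{n-k+1} I
        M = [[AM[i][j] + (c[n - k + 1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        AM = matmul(A, M)
        c[n - k] = -sum(AM[i][i] for i in range(n)) / k   # -tr(A M_k) / k
    return [(-1) ** n * x for x in c]

def poly_divmod(f, g):
    """Long division f = q g + r for coefficient lists (lowest degree first)."""
    f = [Fraction(x) for x in f]
    g = [Fraction(x) for x in g]
    while g[-1] == 0:
        g.pop()
    q = [Fraction(0)] * max(len(f) - len(g) + 1, 1)
    r = f[:]
    while len(r) >= len(g):
        shift = len(r) - len(g)
        factor = r[-1] / g[-1]
        q[shift] = factor
        for i, x in enumerate(g):
            r[shift + i] -= factor * x
        r.pop()                                     # leading coefficient is now 0
    return q, r

A = [[1, 2, 5], [3, 4, 6], [0, 0, 7]]   # hypothetical; B1 = [[1, 2], [3, 4]]
f = char_poly(A)                        # -t^3 + 12t^2 - 33t - 14
g = char_poly([[1, 2], [3, 4]])         # t^2 - 5t - 2
q, r = poly_divmod(f, g)
print([int(x) for x in q], all(x == 0 for x in r))  # [7, -1] True
```

The quotient 7 − t is the characteristic polynomial of the lower-right block B3 = (7), as the displayed factorization predicts.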

Example 5

Let T be the linear operator on R4

and let W={(t, s, 0, 0): t, s∈R}

Let γ={e1, e2}

in the notation of Theorem 5.20. Let f(t) be the characteristic polynomial of T and g(t) be the characteristic polynomial of TW

In view of Theorem 5.20, we may use the characteristic polynomial of TW

Theorem 5.21.

Let T be a linear operator on a finite-dimensional vector space V, and let W denote the T-cyclic subspace of V generated by a nonzero vector v∈V. Let k = dim(W). Then

(a) $\{v, T(v), T^2(v), \ldots, T^{k-1}(v)\}$ is a basis for W.

(b) If $a_0v + a_1T(v) + \cdots + a_{k-1}T^{k-1}(v) + T^k(v) = 0$, then the characteristic polynomial of $T_W$ is $f(t) = (-1)^k(a_0 + a_1t + \cdots + a_{k-1}t^{k-1} + t^k)$.

Proof.

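(a) Since $v \neq 0$, the set $\{v\}$ is linearly independent. Let j be the largest positive integer for which

$$\beta = \{v, T(v), \ldots, T^{j-1}(v)\}$$

is linearly independent. Such a j must exist because V is finite-dimensional. Let $Z = \operatorname{span}(\beta)$. Then β is a basis for Z, and since $\beta \cup \{T^j(v)\}$ is linearly dependent, $T^j(v) \in Z$. We use this to show that Z is T-invariant. Let $w \in Z$. Since w is a linear combination of the vectors of β, there exist scalars $b_0, b_1, \ldots, b_{j-1}$ such that

$$w = b_0v + b_1T(v) + \cdots + b_{j-1}T^{j-1}(v),$$

and hence

$$T(w) = b_0T(v) + b_1T^2(v) + \cdots + b_{j-1}T^j(v).$$

Thus T(w) is a linear combination of vectors in Z, and hence belongs to Z. So Z is T-invariant. Furthermore, $v \in Z$. By Exercise 11, W is the smallest T-invariant subspace of V that contains v, so $W \subseteq Z$. Clearly $Z \subseteq W$, and so $Z = W$. It follows that β is a basis for W, and therefore $j = \dim(W) = k$. This proves (a).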

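(b) Now view β (from (a)) as an ordered basis for W. Let $a_0, a_1, \ldots, a_{k-1}$ be the scalars such that

$$a_0v + a_1T(v) + \cdots + a_{k-1}T^{k-1}(v) + T^k(v) = 0.$$

Observe that

$$[T_W]_\beta = \begin{pmatrix} 0 & 0 & \cdots & 0 & -a_0 \\ 1 & 0 & \cdots & 0 & -a_1 \\ \vdots & \vdots & & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & -a_{k-1} \end{pmatrix},$$

which has the characteristic polynomial

$$f(t) = (-1)^k(a_0 + a_1t + \cdots + a_{k-1}t^{k-1} + t^k)$$

by Exercise 19. Thus f(t) is the characteristic polynomial of $T_W$, proving (b).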
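Theorem 5.21 gives a determinant-free way to find the characteristic polynomial of $T_W$. The procedure has three steps:

1. Collect v, T(v), T²(v), … until the first dependent power $T^k(v)$ appears.
2. Solve for the coefficients in $T^k(v) = c_0v + \cdots + c_{k-1}T^{k-1}(v)$.
3. Set $a_j = -c_j$ and apply part (b).

The Python sketch below follows these steps for a matrix operator on Fⁿ, using exact rational arithmetic. The function names and the sample matrix are hypothetical, and v must be nonzero.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Return the matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def coordinates(vectors, w):
    """Scalars c with w = c[0]*vectors[0] + ... + c[k-1]*vectors[k-1], or None.

    Assumes `vectors` is linearly independent (true for the cyclic vectors
    collected below); returns None when w is not in their span.
    """
    k = len(vectors)
    # Augmented matrix [v_0 ... v_{k-1} | w], one row per coordinate.
    rows = [[Fraction(v[i]) for v in vectors] + [Fraction(w[i])] for i in range(len(w))]
    for col in range(k):
        p = next(i for i in range(col, len(rows)) if rows[i][col] != 0)
        rows[col], rows[p] = rows[p], rows[col]
        pivot = rows[col][col]
        rows[col] = [x / pivot for x in rows[col]]
        for i in range(len(rows)):
            if i != col and rows[i][col] != 0:
                factor = rows[i][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[col])]
    # Rows below the pivots are zero on the left; a nonzero right side means no solution.
    if any(rows[i][k] != 0 for i in range(k, len(rows))):
        return None
    return [rows[j][k] for j in range(k)]

def cyclic_char_poly(A, v):
    """Characteristic polynomial of T_W, W the cyclic subspace generated by v != 0.

    Returns the coefficients, lowest degree first, of
    (-1)^k (a_0 + a_1 t + ... + a_{k-1} t^(k-1) + t^k), as in Theorem 5.21(b).
    """
    basis = [[Fraction(x) for x in v]]
    while True:
        nxt = mat_vec(A, basis[-1])
        c = coordinates(basis, nxt)
        if c is not None:        # T^k(v) = c_0 v + ... + c_{k-1} T^(k-1)(v)
            break
        basis.append(nxt)
    k = len(basis)
    sign = (-1) ** k
    # a_j = -c_j gives a_0 v + ... + a_{k-1} T^(k-1)(v) + T^k(v) = 0.
    return [sign * -cj for cj in c] + [sign]

A = [[0, -1, 0], [1, 0, 0], [0, 0, 2]]                   # hypothetical operator on R^3
print([int(x) for x in cyclic_char_poly(A, [1, 0, 0])])  # [1, 0, 1], i.e. t^2 + 1
print([int(x) for x in cyclic_char_poly(A, [1, 0, 1])])  # [2, -1, 2, -1]
```

In the second call, v generates all of R³, so the result, 2 − t + 2t² − t³, is the characteristic polynomial of the matrix itself.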

Example 6

Let T be the linear operator of Example 3, and let W=span({e1, e2})

(a) By means of Theorem 5.21. From Example 3, we have that {e1, e2}

Therefore, by Theorem 5.21(b),

(b) By means of determinants. Let β={e1, e2}

and therefore,

The Cayley-Hamilton Theorem

As an illustration of the importance of Theorem 5.21, we prove a well-known result that is used in Chapter 7. The reader should refer to Appendix E for the definition of f(T), where T is a linear operator and f(x) is a polynomial.

Theorem 5.22. (Cayley-Hamilton)

Let T be a linear operator on a finite-dimensional vector space V, and let f(t) be the characteristic polynomial of T. Then $f(T) = T_0$, the zero transformation.

Proof.

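We show that $f(T)(v) = 0$ for all $v \in V$. This is obvious if $v = 0$ because f(T) is linear; so suppose that $v \neq 0$. Let W be the T-cyclic subspace generated by v, and suppose that $\dim(W) = k$. By Theorem 5.21(a), there exist scalars $a_0, a_1, \ldots, a_{k-1}$ such that

$$a_0v + a_1T(v) + \cdots + a_{k-1}T^{k-1}(v) + T^k(v) = 0.$$

Hence Theorem 5.21(b) implies that

$$g(t) = (-1)^k(a_0 + a_1t + \cdots + a_{k-1}t^{k-1} + t^k)$$

is the characteristic polynomial of $T_W$. Combining these two equations yields

$$g(T)(v) = (-1)^k(a_0I + a_1T + \cdots + a_{k-1}T^{k-1} + T^k)(v) = 0.$$

By Theorem 5.20, g(t) divides f(t); hence there exists a polynomial q(t) such that $f(t) = q(t)g(t)$. So

$$f(T)(v) = q(T)g(T)(v) = q(T)(g(T)(v)) = q(T)(0) = 0.$$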

Example 7

Let T be the linear operator on R2

where A=[T]β

It is easily verified that $T_0 = f(T) = T^2 - 2T + 5I$.
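For a 2×2 matrix with rows (a, b) and (c, d), the characteristic polynomial is det(A − tI) = t² − (a + d)t + (ad − bc). The Cayley-Hamilton theorem in this case amounts to the identity A² − tr(A)A + det(A)I = O. The Python sketch below checks this identity for a hypothetical matrix with trace 2 and determinant 5. Its characteristic polynomial is therefore t² − 2t + 5, the same polynomial as in Example 7. The function name is our own.

```python
def cayley_hamilton_2x2(A):
    """Return f(A) = A^2 - tr(A) A + det(A) I for a 2x2 matrix A.

    Since det(A - tI) = t^2 - tr(A) t + det(A), the Cayley-Hamilton theorem
    says the result is the zero matrix.
    """
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    A2 = [[a * a + b * c, a * b + b * d],
          [c * a + d * c, c * b + d * d]]
    I = [[1, 0], [0, 1]]
    return [[A2[i][j] - tr * A[i][j] + det * I[i][j] for j in range(2)]
            for i in range(2)]

A = [[1, 2], [-2, 1]]            # hypothetical: trace 2, determinant 5
print(cayley_hamilton_2x2(A))    # [[0, 0], [0, 0]]
```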

Example 7 suggests the following result.

Corollary (Cayley-Hamilton Theorem for Matrices).

Let A be an n×n matrix, and let f(t) be the characteristic polynomial of A. Then $f(A) = O$, the n×n zero matrix.

Proof.

See Exercise 15.
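The corollary can be spot-checked for any particular square matrix. The Python sketch below is a hypothetical illustration with two parts:

1. It computes the coefficients of f(t) = det(A − tI) with the Faddeev-LeVerrier recurrence. This determinant-free algorithm is swapped in for convenience and is not a method from this section.
2. It evaluates f(A) by Horner's rule in exact rational arithmetic.

A check of this kind illustrates the corollary for one matrix; it is not a proof.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def char_poly(A):
    """Coefficients [c0, ..., cn] of det(A - tI), lowest degree first.

    Faddeev-LeVerrier yields det(tI - A); multiplying by (-1)^n converts it.
    """
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * n + [Fraction(1)]
    M = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        AM = matmul(A, M)
        M = [[AM[i][j] + (c[n - k + 1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        AM = matmul(A, M)
        c[n - k] = -sum(AM[i][i] for i in range(n)) / k
    return [(-1) ** n * x for x in c]

def poly_of_matrix(coeffs, A):
    """Evaluate c0 I + c1 A + ... + cn A^n by Horner's rule."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in reversed(coeffs):
        result = matmul(result, A)       # result <- result * A + c I
        for i in range(n):
            result[i][i] += c
    return result

A = [[1, 2, 5], [3, 4, 6], [0, 0, 7]]   # hypothetical matrix
print(poly_of_matrix(char_poly(A), A) == [[0] * 3 for _ in range(3)])  # True
```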

Invariant Subspaces and Direct Sums

It is useful to decompose a finite-dimensional vector space V into a direct sum of as many T-invariant subspaces as possible because the behavior of T on V can be inferred from its behavior on the direct summands. For example, T is diagonalizable if and only if V can be decomposed into a direct sum of one-dimensional T-invariant subspaces (see Exercise 35). In Chapter 7, we consider alternate ways of decomposing V into direct sums of T-invariant subspaces if T is not diagonalizable. We proceed to gather a few facts about direct sums of T-invariant subspaces that are used in Section 7.4. The first of these facts is about characteristic polynomials.

Theorem 5.23.

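Let T be a linear operator on a finite-dimensional vector space V, and suppose that $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$, where $W_i$ is a T-invariant subspace of V for each i (1 ≤ i ≤ k). Suppose that $f_i(t)$ is the characteristic polynomial of $T_{W_i}$ (1 ≤ i ≤ k). Then $f_1(t)\cdot f_2(t)\cdots f_k(t)$ is the characteristic polynomial of T.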

Proof.

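The proof is by mathematical induction on k. In what follows, f(t) denotes the characteristic polynomial of T. Suppose first that k = 2. Let $\beta_1$ be an ordered basis for $W_1$, $\beta_2$ an ordered basis for $W_2$, and $\beta = \beta_1 \cup \beta_2$. Since $V = W_1 \oplus W_2$, β is an ordered basis for V. Let $A = [T]_\beta$, $B_1 = [T_{W_1}]_{\beta_1}$, and $B_2 = [T_{W_2}]_{\beta_2}$. By Exercise 33,

$$A = \begin{pmatrix} B_1 & O \\ O' & B_2 \end{pmatrix},$$

where O and O′ are zero matrices of the appropriate sizes. Then

$$f(t) = \det(A - tI) = \det(B_1 - tI)\cdot\det(B_2 - tI) = f_1(t)\cdot f_2(t)$$

as in the proof of Theorem 5.20, proving the result for k = 2.

Now assume that the theorem is valid for k − 1 summands, where k − 1 ≥ 2, and suppose that V is a direct sum of k subspaces, say, $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$. Let $W = W_1 + W_2 + \cdots + W_{k-1}$. It is easily verified that W is T-invariant and that $V = W \oplus W_k$. So by the case k = 2, $f(t) = g(t)\cdot f_k(t)$, where g(t) is the characteristic polynomial of $T_W$. Clearly $W = W_1 \oplus W_2 \oplus \cdots \oplus W_{k-1}$, and therefore $g(t) = f_1(t)\cdot f_2(t)\cdots f_{k-1}(t)$ by the induction hypothesis. We conclude that $f(t) = g(t)\cdot f_k(t) = f_1(t)\cdot f_2(t)\cdots f_k(t)$.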

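As an illustration of this result, suppose that T is a diagonalizable linear operator on a finite-dimensional vector space V with distinct eigenvalues λ1, λ2, …, λk. Then V is the direct sum $E_{\lambda_1} \oplus E_{\lambda_2} \oplus \cdots \oplus E_{\lambda_k}$ of the eigenspaces of T, each of which is T-invariant. For each i, T acts on $E_{\lambda_i}$ as multiplication by $\lambda_i$, so the restriction of T to $E_{\lambda_i}$ has characteristic polynomial $(\lambda_i - t)^{m_i}$, where $m_i = \dim(E_{\lambda_i})$. By Theorem 5.23, the characteristic polynomial of T is

$$f(t) = (\lambda_1 - t)^{m_1}(\lambda_2 - t)^{m_2}\cdots(\lambda_k - t)^{m_k}.$$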

It follows that the multiplicity of each eigenvalue is equal to the dimension of the corresponding eigenspace, as expected.
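In matrix form, Theorem 5.23 concerns a block-diagonal matrix. If β is assembled from bases of the summands, $[T]_\beta$ has the matrices of the restrictions along its diagonal (compare Theorem 5.24). Its characteristic polynomial is then the product of the characteristic polynomials of the blocks. The Python sketch below checks this for one hypothetical pair of blocks. It reuses the Faddeev-LeVerrier recurrence (our choice of algorithm, not the book's) for det(A − tI). Polynomials are coefficient lists, lowest degree first.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def char_poly(A):
    """Coefficients [c0, ..., cn] of det(A - tI) via Faddeev-LeVerrier."""
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * n + [Fraction(1)]
    M = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        AM = matmul(A, M)
        M = [[AM[i][j] + (c[n - k + 1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        AM = matmul(A, M)
        c[n - k] = -sum(AM[i][i] for i in range(n)) / k
    return [(-1) ** n * x for x in c]   # convert det(tI - A) to det(A - tI)

def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

B1 = [[1, 2], [3, 4]]      # hypothetical block: f1(t) = t^2 - 5t - 2
B2 = [[0, -1], [1, 0]]     # hypothetical block: f2(t) = t^2 + 1
A = [[1, 2, 0, 0],
     [3, 4, 0, 0],
     [0, 0, 0, -1],
     [0, 0, 1, 0]]         # block-diagonal matrix with blocks B1 and B2
print(char_poly(A) == poly_mul(char_poly(B1), char_poly(B2)))  # True
```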

Example 8

Let T be the linear operator on R4

and let W1={(s, t, 0, 0): s, t∈R}

and

Let f(t), f1(t)

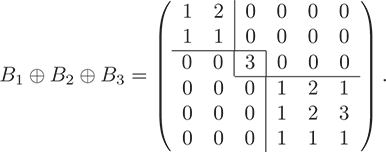

The matrix A in Example 8 can be obtained by joining the matrices B1

Definition.

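Let $B_1 \in M_{m\times m}(F)$, and let $B_2 \in M_{n\times n}(F)$. We define the direct sum of B1 and B2, denoted $B_1 \oplus B_2$, as the $(m+n)\times(m+n)$ matrix A such that

$$A_{ij} = \begin{cases} (B_1)_{ij} & \text{for } 1 \le i, j \le m,\\ (B_2)_{(i-m),(j-m)} & \text{for } m+1 \le i, j \le n+m,\\ 0 & \text{otherwise.} \end{cases}$$

If B1, B2, …, Bk are square matrices with entries from F, then we define the direct sum of B1, B2, …, Bk recursively by

$$B_1 \oplus B_2 \oplus \cdots \oplus B_k = (B_1 \oplus B_2 \oplus \cdots \oplus B_{k-1}) \oplus B_k.$$

If $A = B_1 \oplus B_2 \oplus \cdots \oplus B_k$, then we often write

$$A = \begin{pmatrix} B_1 & O & \cdots & O \\ O & B_2 & \cdots & O \\ \vdots & \vdots & & \vdots \\ O & O & \cdots & B_k \end{pmatrix}.$$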
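The direct sum of square matrices can be described as a block-diagonal construction. B1 goes in the upper-left corner, B2 in the lower-right corner, and zeros fill the rest. For k blocks, the construction extends as $B_1 \oplus \cdots \oplus B_k = (B_1 \oplus \cdots \oplus B_{k-1}) \oplus B_k$. Under that description, the construction is short to code. The Python sketch below (function names hypothetical) builds the two-block case and folds it over a list of blocks with `functools.reduce`, mirroring the recursion.

```python
from functools import reduce

def direct_sum_2(B1, B2):
    """Block matrix with B1 top-left, B2 bottom-right, and zeros elsewhere."""
    m, n = len(B1), len(B2)
    top = [list(row) + [0] * n for row in B1]
    bottom = [[0] * m + list(row) for row in B2]
    return top + bottom

def direct_sum(*blocks):
    """B1 ⊕ B2 ⊕ ... ⊕ Bk, built recursively as (B1 ⊕ ... ⊕ B_{k-1}) ⊕ Bk."""
    return reduce(direct_sum_2, blocks)

print(direct_sum([[1, 2], [3, 4]], [[5]]))  # [[1, 2, 0], [3, 4, 0], [0, 0, 5]]
```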

Example 9

Let

Then

The final result of this section relates direct sums of matrices to direct sums of invariant subspaces. It is an extension of Exercise 33 to the case k ≥ 2.

Theorem 5.24.

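Let T be a linear operator on a finite-dimensional vector space V, and let W1, W2, …, Wk be T-invariant subspaces of V such that $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$. For each i, let $\beta_i$ be an ordered basis for $W_i$, and let $\beta = \beta_1 \cup \beta_2 \cup \cdots \cup \beta_k$. Let $A = [T]_\beta$ and $B_i = [T_{W_i}]_{\beta_i}$ for i = 1, 2, …, k. Then $A = B_1 \oplus B_2 \oplus \cdots \oplus B_k$.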

Proof.

See Exercise 34.

Exercises

1. Label the following statements as true or false.

(a) There exists a linear operator T with no T-invariant subspace.

(b) If T is a linear operator on a finite-dimensional vector space V and W is a T-invariant subspace of V, then the characteristic polynomial of $T_W$ divides the characteristic polynomial of T.

(c) Let T be a linear operator on a finite-dimensional vector space V, and let v and w be in V. If W is the T-cyclic subspace generated by v, W′ is the T-cyclic subspace generated by w, and W = W′, then v = w.

(d) If T is a linear operator on a finite-dimensional vector space V, then for any v∈V the T-cyclic subspace generated by v is the same as the T-cyclic subspace generated by T(v).

(e) Let T be a linear operator on an n-dimensional vector space. Then there exists a polynomial g(t) of degree n such that $g(T) = T_0$.

(f) Any polynomial of degree n with leading coefficient $(-1)^n$ is the characteristic polynomial of some linear operator.

(g) If T is a linear operator on a finite-dimensional vector space V, and if V is the direct sum of k T-invariant subspaces, then there is an ordered basis β for V such that $[T]_\beta$ is a direct sum of k matrices.

2. For each of the following linear operators T on the vector space V, determine whether the given subspace W is a T-invariant subspace of V.

(a) $V = P_3(R)$, $T(f(x)) = f'(x)$, and $W = P_2(R)$

(b) $V = P(R)$, $T(f(x)) = xf(x)$, and $W = P_2(R)$

(c) $V = R^3$, $T(a, b, c) = (a + b + c, a + b + c, a + b + c)$, and $W = \{(t, t, t): t \in R\}$

(d) $V = C([0, 1])$, $T(f(t)) = \left[\int_0^1 f(x)\,dx\right]t$, and $W = \{f \in V: f(t) = at + b \text{ for some } a \text{ and } b\}$

(f) $V = M_{2\times 2}(R)$, $T(A) = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}A$, and $W = \{A \in V: A^t = A\}$

3. Let T be a linear operator on a finite-dimensional vector space V. Prove that the following subspaces are T-invariant.

(a) {0} and V

(b) N(T) and R(T)

(c) Eλ, for any eigenvalue λ of T

4. Let T be a linear operator on a vector space V, and let W be a T-invariant subspace of V. Prove that W is g(T)-invariant for any polynomial g(t).

5. Let T be a linear operator on a vector space V. Prove that the intersection of any collection of T-invariant subspaces of V is a T-invariant subspace of V.

6. For each linear operator T on the vector space V, find an ordered basis for the T-cyclic subspace generated by the vector z.

(a) $V = R^4$, $T(a, b, c, d) = (a + b, b - c, a + c, a + d)$, and $z = e_1$.

(b) $V = P_3(R)$, $T(f(x)) = f''(x)$, and $z = x^3$.

(c) $V = M_{2\times 2}(R)$, $T(A) = A^t$, and $z = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$.

(d) $V = M_{2\times 2}(R)$, $T(A) = \begin{pmatrix} 1 & 1 \\ 2 & 2 \end{pmatrix}A$, and $z = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$.

7. Prove that the restriction of a linear operator T to a T-invariant subspace is a linear operator on that subspace.

8. Let T be a linear operator on a vector space with a T-invariant subspace W. Prove that if v is an eigenvector of $T_W$ with corresponding eigenvalue λ, then v is also an eigenvector of T with corresponding eigenvalue λ.

9. For each linear operator T and cyclic subspace W in Exercise 6, compute the characteristic polynomial of $T_W$ in two ways, as in Example 6.

10. For each linear operator in Exercise 6, find the characteristic polynomial f(t) of T, and verify that the characteristic polynomial of $T_W$ (computed in Exercise 9) divides f(t).

11. Let T be a linear operator on a vector space V, let v be a nonzero vector in V, and let W be the T-cyclic subspace of V generated by v. Prove that

(a) W is T-invariant.

(b) Any T-invariant subspace of V containing v also contains W.

12. Prove that $A = \begin{pmatrix} B_1 & B_2 \\ O & B_3 \end{pmatrix}$ in the proof of Theorem 5.20.

13. Let T be a linear operator on a vector space V, let v be a nonzero vector in V, and let W be the T-cyclic subspace of V generated by v. For any $w \in V$, prove that $w \in W$ if and only if there exists a polynomial g(t) such that $w = g(T)(v)$.

14. Prove that the polynomial g(t) of Exercise 13 can always be chosen so that its degree is less than dim(W).

15. Use the Cayley-Hamilton theorem (Theorem 5.22) to prove its corollary for matrices. Warning: If $f(t) = \det(A - tI)$ is the characteristic polynomial of A, it is tempting to “prove” that $f(A) = O$ by saying “$f(A) = \det(A - AI) = \det(O) = 0$.” Why is this argument incorrect? Visit goo.gl/ZMVn9i for a solution.

16. Let T be a linear operator on a finite-dimensional vector space V.

(a) Prove that if the characteristic polynomial of T splits, then so does the characteristic polynomial of the restriction of T to any T-invariant subspace of V.

(b) Deduce that if the characteristic polynomial of T splits, then any nontrivial T-invariant subspace of V contains an eigenvector of T.

17. Let A be an n×n matrix. Prove that

$$\dim(\operatorname{span}(\{I_n, A, A^2, \ldots\})) \le n.$$

18. Let A be an n×n matrix with characteristic polynomial

$$f(t) = (-1)^nt^n + a_{n-1}t^{n-1} + \cdots + a_1t + a_0.$$

(a) Prove that A is invertible if and only if $a_0 \neq 0$.

(b) Prove that if A is invertible, then

$$A^{-1} = (-1/a_0)\left[(-1)^nA^{n-1} + a_{n-1}A^{n-2} + \cdots + a_1I_n\right].$$

(c) Use (b) to compute $A^{-1}$ for

$$A = \begin{pmatrix} 1 & 2 & 1 \\ 0 & 2 & 3 \\ 0 & 0 & -1 \end{pmatrix}.$$

19. Let A denote the k×k matrix

$$\begin{pmatrix} 0 & 0 & \cdots & 0 & -a_0 \\ 1 & 0 & \cdots & 0 & -a_1 \\ 0 & 1 & \cdots & 0 & -a_2 \\ \vdots & \vdots & & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & -a_{k-1} \end{pmatrix},$$

where $a_0, a_1, \ldots, a_{k-1}$ are arbitrary scalars. Prove that the characteristic polynomial of A is

$$(-1)^k(a_0 + a_1t + \cdots + a_{k-1}t^{k-1} + t^k).$$

Hint: Use mathematical induction on k, computing the determinant by cofactor expansion along the first row.

20. Let T be a linear operator on a vector space V, and suppose that V is a T-cyclic subspace of itself. Prove that if U is a linear operator on V, then $UT = TU$ if and only if $U = g(T)$ for some polynomial g(t). Hint: Suppose that V is generated by v. Choose g(t) according to Exercise 13 so that $g(T)(v) = U(v)$.

21. Let T be a linear operator on a two-dimensional vector space V. Prove that either V is a T-cyclic subspace of itself or $T = cI$ for some scalar c.

22. Let T be a linear operator on a two-dimensional vector space V and suppose that $T \neq cI$ for any scalar c. Show that if U is any linear operator on V such that $UT = TU$, then $U = g(T)$ for some polynomial g(t).

23. Let T be a linear operator on a finite-dimensional vector space V, and let W be a T-invariant subspace of V. Suppose that $v_1, v_2, \ldots, v_k$ are eigenvectors of T corresponding to distinct eigenvalues. Prove that if $v_1 + v_2 + \cdots + v_k$ is in W, then $v_i \in W$ for all i. Hint: Use mathematical induction on k.

24. Prove that the restriction of a diagonalizable linear operator T to any nontrivial T-invariant subspace is also diagonalizable. Hint: Use the result of Exercise 23.

25. (a) Prove the converse to Exercise 19(a) of Section 5.2: If T and U are diagonalizable linear operators on a finite-dimensional vector space V such that $UT = TU$, then T and U are simultaneously diagonalizable. (See the definitions in the exercises of Section 5.2.) Hint: For any eigenvalue λ of T, show that $E_\lambda$ is U-invariant, and apply Exercise 24 to obtain a basis for $E_\lambda$ of eigenvectors of U.

(b) State and prove a matrix version of (a).

26. Let T be a linear operator on an n-dimensional vector space V such that T has n distinct eigenvalues. Prove that V is a T-cyclic subspace of itself. Hint: Use Exercise 23 to find a vector v such that $\{v, T(v), \ldots, T^{n-1}(v)\}$ is linearly independent.

Exercises 27 through 31 require familiarity with quotient spaces as defined in Exercise 31 of Section 1.3. Before attempting these exercises, the reader should first review the other exercises treating quotient spaces: Exercise 35 of Section 1.6, Exercise 42 of Section 2.1, and Exercise 24 of Section 2.4.

For the purposes of Exercises 27 through 31, T is a fixed linear operator on a finite-dimensional vector space V, and W is a nonzero T-invariant subspace of V. We require the following definition.

Definition.

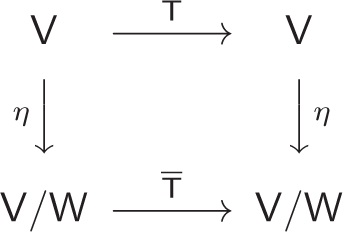

Let T be a linear operator on a vector space V, and let W be a T-invariant subspace of V. Define $\bar{T}: V/W \to V/W$ by $\bar{T}(v + W) = T(v) + W$ for any $v + W \in V/W$.

27. (a) Prove that $\bar{T}$ is well defined. That is, show that $\bar{T}(v + W) = \bar{T}(v' + W)$ whenever $v + W = v' + W$.

(b) Prove that $\bar{T}$ is a linear operator on V/W.

(c) Let $\eta: V \to V/W$ be the linear transformation defined in Exercise 42 of Section 2.1 by $\eta(v) = v + W$. Show that the diagram of Figure 5.6 commutes; that is, prove that $\eta T = \bar{T}\eta$. (This exercise does not require the assumption that V is finite-dimensional.)

Figure 5.6

28. Let f(t), g(t), and h(t) be the characteristic polynomials of T, $T_W$, and $\bar{T}$, respectively. Prove that $f(t) = g(t)h(t)$. Hint: Extend an ordered basis $\gamma = \{v_1, v_2, \ldots, v_k\}$ for W to an ordered basis $\beta = \{v_1, v_2, \ldots, v_k, v_{k+1}, \ldots, v_n\}$ for V. Then show that the collection of cosets $\alpha = \{v_{k+1} + W, v_{k+2} + W, \ldots, v_n + W\}$ is an ordered basis for V/W, and prove that

$$[T]_\beta = \begin{pmatrix} B_1 & B_2 \\ O & B_3 \end{pmatrix},$$

where $B_1 = [T_W]_\gamma$ and $B_3 = [\bar{T}]_\alpha$.

29. Use the hint in Exercise 28 to prove that if T is diagonalizable, then so is $\bar{T}$.

30. Prove that if both $T_W$ and $\bar{T}$ are diagonalizable and have no common eigenvalues, then T is diagonalizable.

The results of Theorem 5.21 and Exercise 28 are useful in devising methods for computing characteristic polynomials without the use of determinants. This is illustrated in the next exercise.

31. Let $A = \begin{pmatrix} 1 & 1 & -3 \\ 2 & 3 & 4 \\ 1 & 2 & 1 \end{pmatrix}$, let $T = L_A$, and let W be the cyclic subspace of $R^3$ generated by $e_1$.

(a) Use Theorem 5.21 to compute the characteristic polynomial of $T_W$.

(b) Show that $\{e_2 + W\}$ is a basis for $R^3/W$, and use this fact to compute the characteristic polynomial of $\bar{T}$.

(c) Use the results of (a) and (b) to find the characteristic polynomial of A.

Exercises 32 through 39 are concerned with direct sums.

32. Let T be a linear operator on a vector space V, and let W1, W2, …, Wk be T-invariant subspaces of V. Prove that W1+W2+⋯+Wk is also a T-invariant subspace of V.

33. Give a direct proof of Theorem 5.24 for the case k=2. (This result is used in the proof of Theorem 5.23.)

34. Prove Theorem 5.24. Hint: Begin with Exercise 33 and extend it using mathematical induction on k, the number of subspaces.

35. Let T be a linear operator on a finite-dimensional vector space V. Prove that T is diagonalizable if and only if V is the direct sum of one-dimensional T-invariant subspaces.

36. Let T be a linear operator on a finite-dimensional vector space V, and let W1, W2, …, Wk be T-invariant subspaces of V such that V=W1⊕W2⊕⋯⊕Wk. Prove that

$$\det(T) = \det(T_{W_1})\cdot\det(T_{W_2})\cdot\cdots\cdot\det(T_{W_k}).$$

37. Let T be a linear operator on a finite-dimensional vector space V, and let W1, W2, …, Wk be T-invariant subspaces of V such that V=W1⊕W2⊕⋯⊕Wk. Prove that T is diagonalizable if and only if $T_{W_i}$ is diagonalizable for all i.

38. Let C be a collection of diagonalizable linear operators on a finite-dimensional vector space V. Prove that there is an ordered basis β such that [T]β is a diagonal matrix for all T∈C if and only if the operators of C commute under composition. (This is an extension of Exercise 25.) Hints for the case that the operators commute: The result is trivial if each operator has only one eigenvalue. Otherwise, establish the general result by mathematical induction on dim(V), using the fact that V is the direct sum of the eigenspaces of some operator in C that has more than one eigenvalue.

39. Let B1, B2, …, Bk be square matrices with entries in the same field, and let A=B1⊕B2⊕⋯⊕Bk. Prove that the characteristic polynomial of A is the product of the characteristic polynomials of the Bi's.

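40. Let

$$A = \begin{pmatrix} 1 & 2 & \cdots & n \\ n+1 & n+2 & \cdots & 2n \\ \vdots & \vdots & & \vdots \\ n^2-n+1 & n^2-n+2 & \cdots & n^2 \end{pmatrix}.$$

Find the characteristic polynomial of A. Hint: First prove that A has rank 2 and that span({(1, 1, …, 1), (1, 2, …, n)}) is $L_A$-invariant.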

41. Let $A \in M_{n\times n}(R)$ be the matrix defined by $A_{ij} = 1$ for all i and j. Find the characteristic polynomial of A.