1. Viral Data

For years, many information technology solutions have been pigeonholed as siloed applications, resulting in myriad negative experiences for businesses that rely on custom-built software applications. Organizations often cite poor alignment with the business1 as a primary reason their custom business software applications fall out of sync with business needs.2, 3, 4

1. In general, the term business refers to the collective human resources outside of an information technology department or to the functions these resources perform. As such, an organization such as a government agency or a corporation is deemed to be a dichotomy: the business (side) and information technology.

The Central Intelligence Agency (CIA) illustrates the disparity between the business and the way the business consumes products or services from information technology. From time to time, the CIA uses focus groups to help evaluate the tools and services employees need to perform their jobs. On occasion, the CIA has found that its spies, analysts, and other employees have ranked information technology last among the tools and services they use. Although CIA employees believe information technology should be important, they have not consistently derived the value they consider vital to their job performance.5 This finding exemplifies the previously mentioned misalignment between the business and information technology.

Viral data does not have to be deadly in the literal sense, but sometimes it is. For example, in 1999, during the Kosovo war, a NATO air strike on a target selected by the CIA mistakenly hit the Chinese embassy in Belgrade, Serbia; 3 people were killed, and 20 others were injured. This tragedy resulted from mistaken assumptions and out-of-date maps. The “CIA analysts who knew the address of a Yugoslavian weapons depot had assumed that house numbers in Belgrade were as orderly as those in Washington, D.C. and picked the wrong building.”6

A less deadly scenario played out with a North American automaker. During a 1-month period, more than 250 vehicles were lost on a cargo train somewhere in Europe or Asia. Despite all the data available to help manage the transportation logistics and the slew of bills of lading that had been issued, the company lost track of those vehicles. Frantic efforts ensued, yet more than one calendar month, several man-months, and hundreds of thousands of dollars were spent before the company ultimately recovered the vehicles (from a railway depot).7

7. Private conversation.

Because of misadventures such as these, businesses typically seek better alignment with information technology. Information technologists attempt to meet this need and often argue that better alignment will, in all likelihood, lead to better results (such as finding the right building, tracking vehicles from point A to point B, and so on). On the surface, alignment seems a natural and sensible objective.

In the first quarter of the twentieth century, corporations such as General Motors and DuPont were instrumental in establishing (and popularizing) the multidivisional form of business structure. Pioneering corporations created divisions based on product or geography rather than function, and this practice has continued.8

While following the multidivisional model, organizations typically adopt an overall organizational structure. Common structures include hierarchical, matrix, and flat:

• Hierarchical organization structures remain the structure of choice for governments and for the majority of corporations.9

• Organizations with matrix structures often find it difficult to make the management structure effective, and some employees may struggle with conflicting loyalties in such an organization.10

• Flat organization structures are typically best suited for smaller companies because low morale and lack of motivation are viewed as common inhibitors to the success of flat structures in larger companies where employees have little opportunity for advancement. The resulting effect is known as the flat-organization dilemma.11

The model of choice for depicting who is in charge of what and who does what within a corporation is known as an organigram.12 Hierarchical models are characterized by a pyramid archetype, with the degree of authority decreasing from the root (the top) down through each of the branches. Below the root node, flat models are generally the polar opposite of the repeated parent-child links common in a hierarchical structure. Matrix models commonly depict dual reporting lines.

12. An organigram is an organization chart.

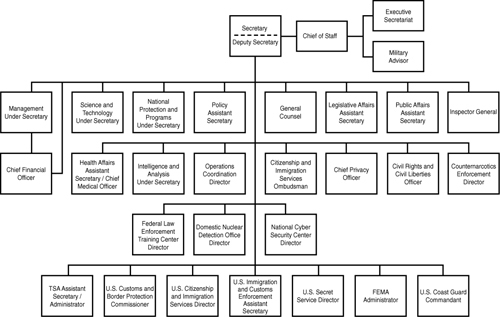

Figure 1-1 shows the upper echelons of the U.S. Department of Homeland Security hierarchical model. When you compare this model with other familiar hierarchical models, one observation should be readily apparent: below the root node, governments and many corporations impose silo-oriented structures upon themselves.

Figure 1-1 U.S. Department of Homeland Security organization chart circa 2008.

Many information technology departments steadfastly state that they would like to align themselves to the business, but that is not their true intention. Information technology departments tend to think of the business as a singularity (a cohesive whole) rather than as a clustered set of silos, each with its own priorities, responsibilities, and motivations.

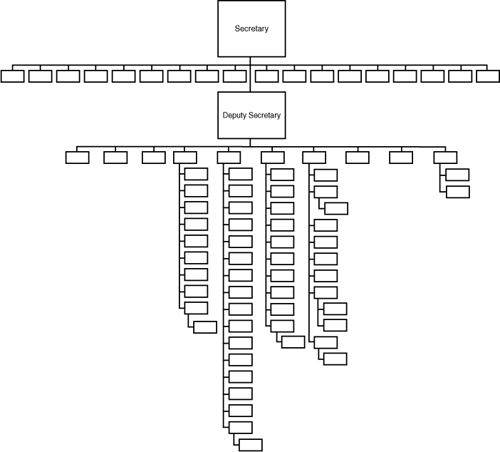

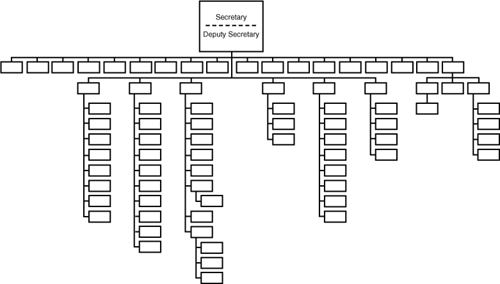

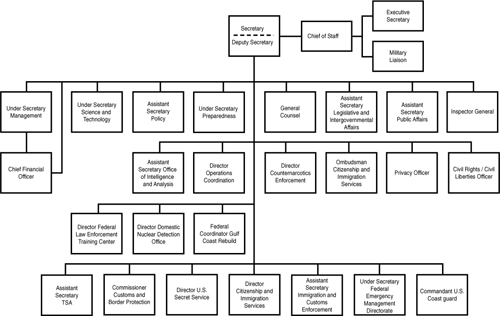

Unfortunately, some proponents of service-oriented enterprise application solutions support this singularity view.13 The error in this thinking is that an organization structure, in both government and business, is temporal. What the structure looks like today may not be what it looked like in the recent past or will look like in the not too distant future (see Figures 1-2, 1-3, and 1-4).

Figure 1-2 U.S. Department of Homeland Security organization chart circa 2002.

Figure 1-3 U.S. Department of Homeland Security organization chart circa 2004.

Figure 1-4 U.S. Department of Homeland Security organization chart circa 2006.

The organization charts in Figures 1-1 through 1-4 highlight the stochastic nature of the organization and the varying structures that may result. Over time, functional areas of an organization can come and go, and the scopes of both responsibility and authority for any single box may also expand or contract.

Any movement toward an information technology solution that overtly aligns itself with the business unnecessarily constrains the business and, over time, may ultimately promote further misalignment. By recasting the desire to align as an ability to continually react, information technology may start to get closer to the type of relationship it needs with the business. Reacting to the temporal needs of a business requires adaptive, accurate, and timely solutions, and the projected (pro forma) costs of reacting must also be kept in check. Overall, the ability to react is much better than the ability to establish an alignment. Organizing to be reactive is a far loftier and more prudent goal for information technology.

The paradigm associated with service-oriented architecture is extremely important because the architecture can readily be deployed to react. The innate ability to compose services of different levels of granularity, and to externalize the orchestration of how those services interact, is also a vital ingredient in forming trusted information: the antithesis of viral data.
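The following minimal Python sketch makes the idea of composing services and externalizing their orchestration more concrete. It keeps each service small and defines the orchestration as plain data, an ordered list of service names, that lives outside the services themselves. The registry, the decorator, the service names, and the region lookup are all hypothetical and are used only for illustration. A production service-oriented architecture would more likely rely on an orchestration engine than on a dictionary.

```python
from typing import Callable, Dict, List

# A service here is simply a function from message to message.
Service = Callable[[dict], dict]

# Hypothetical in-memory service registry.
REGISTRY: Dict[str, Service] = {}


def register(name: str) -> Callable[[Service], Service]:
    """Decorator that publishes a service under a name."""
    def decorator(fn: Service) -> Service:
        REGISTRY[name] = fn
        return fn
    return decorator


@register("normalize_name")
def normalize_name(msg: dict) -> dict:
    # Fine-grained service: tidy the customer name.
    return {**msg, "name": msg["name"].strip().title()}


@register("add_region")
def add_region(msg: dict) -> dict:
    # Fine-grained service: derive a sales region (hypothetical lookup table).
    regions = {"US": "Americas", "DE": "EMEA"}
    return {**msg, "region": regions.get(msg["country"], "Unknown")}


def run(steps: List[str], msg: dict) -> dict:
    """Coarse-grained composition: run the named services in order.

    Each name is looked up in the registry only when its step executes.
    """
    for step in steps:
        msg = REGISTRY[step](msg)
    return msg


# The orchestration is data kept outside the services (it could live in a config file).
ONBOARDING: List[str] = ["normalize_name", "add_region"]
```

To react to a new business need, you register another service and add its name to the list; none of the existing services has to change. Each step is resolved by name only at execution time, so the binding between orchestration and implementation is deferred, which is a simple form of late binding.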

Businesses do more than just reorganize themselves; organizations can also change their business. Changes in business can be grand or seemingly minuscule. For example, Empire Today, a company founded in 1959, initially sold and installed carpet. Although the company’s product offerings have not radically changed over the years, Empire has expanded beyond carpeting into other home furnishings such as window treatments and bath liners.

In 1976, Apple Computer started out developing and selling computers. Today, Apple® may be better known as a digital entertainment and communications company producing and selling iTunes®, iPods®, and iPhones®. In 1914, Safeway Stores started with a solitary grocery store. Since then, Safeway has expanded its capabilities and now also manufactures food. Safeway even provides private-label options for other grocery chains.

On occasion, General Motors, the automobile manufacturer, has ventured into alternative businesses such as satellite radio, with the 1999 investment in XM Radio; aviation equipment, with the 1985 purchase of Hughes Aircraft; and system integration, with the acquisition in 1984 of Electronic Data Systems Corporation.

In 1984, American Express, the credit card company, acquired the brokerage company Lehman Brothers. American Express subsequently disposed of that asset 14 years before Lehman Brothers, a corporation founded before the outbreak of the American Civil War,14 declared bankruptcy. As a final example, in 1982, the steel manufacturer then known as United States Steel ventured into the gasoline industry through the acquisition of the petroleum company Marathon Oil.

Legislative acts can also force or enable corporate change. In the United States, the 1933 Glass-Steagall Act forbade commercial banks from owning securities firms.15 Seventy-five years later, the era of the large independent securities firm all but came to an end when the remaining large players, Morgan Stanley and Goldman Sachs, requested permission to become bank holding companies.16

Competitive companies tend not to remain stagnant, especially those that formally declare through a mission or vision statement a desire to improve shareholder value. By restructuring the organization, acquiring another organization, spinning off a line of business, or growing organically, organizations continually change. Companies often change in ways that extend beyond refining their market niche. As an overarching strategy, an alignment of information technology with the business is a sure way to foster viral data.

Alignment may result in viral data because alignment is a cognitive desire to satisfy a specific point in time. External influences—such as competition, government regulation, the environment, the arrival of new technology, suppliers and vendors, and partners, to name a few different types of stimuli—can result in the need to define a new, potentially immediate, point in time.

Internal influences, such as new senior management, a shift in corporate values, the execution of a management prerogative, or the availability or unavailability of resources or skill sets, may create new types of demand. An information technology solution built around alignment with the business will always lead to the current solution being misused, potentially damaging the quality of the corporate digital knowledge base.

Some transformations occur slowly and progressively, while some companies seemingly transform overnight. Some transformations can be sweeping (e.g., to comply with insider-trading rules). Adjustments might even blindside some employees in ways they perceive as draconian. Sweeping changes equivalent to eminent domain can be part and parcel of management prerogative. For example, an internal information technology department can be outsourced, a division sold, sales regions rearranged, and unsatisfactory deals made just to meet a self-imposed quota or sales target.

Hugo Grotius (1583–1645) coined the term eminent domain in De Jure Belli ac Pacis (first published in 1625), describing it as the taking away by those in authority.17 In general, eminent domain is the procurement of an individual’s property by a state for public purposes. An individual’s right to own a home and the land beneath it is viewed as part of the liberty extended to all Americans by the Constitution of the United States. Varying degrees of land ownership are also a liberty afforded to individuals in many other nations around the world.

Throughout the United States, as part of a due diligence process in formally acquiring ownership of land, the purchaser traditionally conducts a title search. A title search involves examining all relevant records to confirm that the seller is the legal owner of the property and that no liens or other claims are outstanding on that particular piece of property.

A purchaser becomes the new rightful owner after a title has been awarded to the purchaser and then legally recorded. In this instance, the title represents a deed constituting evidence of ownership. Unfortunately, a title search is not, by itself, a guarantee that no one else can lay a legal claim to all or part of the land. Therefore, purchasers often choose a company that specializes in performing title searches and that can also offer a monetary guarantee (insurance): should the search, at any time in the future, prove insufficient, the purchaser may have legal recourse for compensation.

Assuming that a landowner legally and rightfully owns the parcel of property and has all the appropriate documentation to prove ownership, the property may nevertheless be appropriated from the owner. Some legal systems allow a governing body to seize property, for reasons of the governing body’s choosing, regardless of title.18 During the seizure process, some form of compensation to the owner may be required. In the United States, the ability to seize is known as eminent domain (except in New York and Louisiana, where the synonym appropriation is used). This type of seizure is known in the United Kingdom, New Zealand, and Ireland as compulsory purchase; compulsory acquisition in Australia and Italy; and expropriation in Brazil, South Africa, and Canada.19

When a company experiences substantial internal changes, the formal information technology systems often lag behind the needs of the business. Businesspeople cannot always wait for information technology to catch up. In such cases, employees may seek their own alternative solutions—exploring and subsequently exploiting (recasting) existing applications in ways other than originally intended and without regard for integration or interoperability side effects.

Word processors, spreadsheets, and other office automation software enable businesspeople to take solution building into their own hands. The resulting rogue applications (including mash-ups20) can become undocumented playpens for viral data to spawn and be shared (often back into the mainstream information technology systems). When attempting to determine the provenance of viral data, rogue applications can become a metaphorical black hole.

20. A mash-up is an application that combines data fetched from multiple Web services.

The ability of both the business and information technology to adapt is an imperative, and how well an organization as a whole adapts can directly affect profitability. While discussing a transformation plan, General Eric Shinseki (b. 1942) once barked, “If you dislike change, you’re going to dislike irrelevance even more.” General Shinseki was being forthright in signaling to those within his service that adaptation is a personal choice, even within the shroud of a larger body (the organization).

Viral data is not just a symptom of buggy code or a value keyed in incorrectly; it is also a symptom of a changing organization that improvises in its use of software because the software cannot natively adapt to the needs of the business in an expedient and diligent manner. Single views of data and interoperability, both features often seen in a service-oriented architecture, can accelerate the spread of poor-quality or misused data across the enterprise when the solution cannot adapt in a reasonable timeframe.

Viral data is not exclusive to a service-oriented solution because non-enterprise solutions or siloed software applications can also have a latent contributing effect on viral data. Traditionally, integration needs required for an application to become fully functional within the enterprise are relegated to secondary or tertiary considerations during construction, testing, and deployment.

Positioning, up front, how each solution, application, or component must complement another solution, application, or component, either in automation or as part of a manual workflow, can make a vital difference in how viral data spreads. Obviously, the concept of viral data involves, in large measure, an assertion of quality. Quality can be a complex and arcane topic. Many definitions of quality are abstruse, from the noncommittal I’ll know it when I see it, to Lao-Tze’s (fl. 6th century B.C.E.) esoteric, “The quality which can be named is not its true attribute.”21

The quality of something can be said to be an assessment that rates it against “a certain value.”22 Value implies that quality must be measurable. Some organizations adopt specific protocols or efforts to drive measurable improvement but suffer from “the illusion that they can ‘assure quality’ by imposing a specification such as ISO 9000, or an award program such as Baldrige, which is supposed to contain all the information and actions necessary to produce quality. This is the ultimate naïveté.”23

Quality cannot be taken for granted or assumed. Instead, quality is a “subjective term for which each person has his or her own definition. In technical usage, quality can have two meanings: (1) the characteristics of a product or service that bear on its ability to satisfy stated or implied needs, and (2) a product or service free of deficiencies.”24 The notions to satisfy and to be free of deficiencies confirm the potential subjectivity of quality.

If “quality is achieving or reaching for the highest standard as against being satisfied with the sloppy or fraudulent,”25 the verbs achieving and reaching can be continually reapplied to reassert the quality of whatever needs to be measured, even if that thing is evolving or transforming.

“Conformance by its nature relates to static standards and specification, whereas quality is a moving target.”26 Because organizations are continually morphing themselves, quality must be regarded as fickle or capricious; and how quality is perceived must be regarded as a complex variable, if only because the inherent nature of the organization is itself complex.

Because the workings of an enterprise typically involve a complex web of life cycles, or value chains, and as more and more enterprises attempt to obtain an enterprise or common perspective of their data via some type of service-oriented architecture, measuring quality must become an enterprise imperative as a way to ensure data is perceived as a trustworthy resource within and across each life cycle or value chain.

When the adverse effects of data use in a service-oriented environment are viewed in light of an enterprise pandemic, the treatment may, in turn, further influence how to approach service-oriented designs—especially in terms of creating a solution that can be reactive.

Published definitions of quality that are specifically in the context of data (or information) can be seen to contain some common and repeated themes:

• Fitness for the purpose of use.27

• Data has quality if it satisfies the requirements of its intended use.28

• The degree to which information and data can be a trusted source for any and/or all required uses. It is having the right set of correct information, at the right time, in the right place, for the right people to use to make decisions, to run the business, to serve customers, and to achieve company goals.29

• Data are of high quality if those who use them say so. Usually, high-quality data must be both free of defects and possess features that customers desire.30

• You should create standards that define acceptable data quality thresholds.31

• Timely, relevant, complete, valid, accurate, and consistent.32

• Ensure the consistency and usage of the values stored within the database.33

• The business ultimately defines the information quality requirements.34

• Correctness or accuracy of data; the value that accurate data has in supporting the work of the enterprise.35

• Focused on accuracy.36

• Fitness for use—the level of data quality determined by data consumers in terms of meeting or beating expectations.37

• Conformance to standards.38

• Data quality is more than simply data accuracy. Other significant dimensions such as completeness, consistency, and currency are necessary in order to fully characterize the quality of data.39

• Data quality affects the value of the data asset, and defects can be expensive or impossible to correct.40

• High-quality data is a valuable asset; it can increase customer satisfaction, improve revenues and profits, and be a strategic competitive advantage.41

To wit: Dezspite teh miny deafanitions four qualitea, its codswallop to fink their be nun grammer, typografical, or udder kindza errrors and misteaks in dis book. Dezspite teh miny deafanitions four qualitea, its codswallop to fink their be nun grammer, typografical, or udder kindza errrors and misteaks in dis ’ere book.

The preceding paragraph would, obviously, fail under most traditional measures of data quality. However, although the sentences are almost exactly alike (the second sentence has an extra word just before the end of the sentence) and full of spelling and grammar errors, readers can still decipher and fully comprehend the primary message without any loss of meaning. As such, a principle of quality based solely on fitness for use will more than likely prove insufficient for achieving a higher level of data quality.
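Several of the published definitions treat quality as something measured along explicit dimensions, such as completeness and validity, and judged against agreed thresholds, rather than through fitness for use alone. The following minimal Python sketch illustrates that idea. The `Customer` record, the e-mail pattern, and the threshold values are hypothetical assumptions. The two metrics shown are only a small subset of the dimensions listed.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Customer:
    """Hypothetical customer record used only for illustration."""
    customer_id: str
    name: Optional[str]
    email: Optional[str]


# Deliberately simple e-mail shape check (an assumption, not a standard).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def _populated(value: Optional[str]) -> bool:
    return value is not None and value.strip() != ""


def completeness(records: List[Customer], field: str) -> float:
    """Share of records in which `field` holds a non-blank value."""
    if not records:
        return 1.0
    return sum(_populated(getattr(r, field)) for r in records) / len(records)


def validity(records: List[Customer], field: str, is_valid: Callable[[str], bool]) -> float:
    """Share of populated values in `field` that satisfy `is_valid`."""
    values = [getattr(r, field) for r in records if _populated(getattr(r, field))]
    if not values:
        return 1.0
    return sum(is_valid(v) for v in values) / len(values)


# Hypothetical thresholds; in practice the business would set these.
THRESHOLDS: Dict[str, float] = {"name_completeness": 0.99, "email_validity": 0.95}


def assess(records: List[Customer]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Return all scores and the subset that falls below its threshold."""
    scores = {
        "name_completeness": completeness(records, "name"),
        "email_validity": validity(records, "email", lambda v: EMAIL_PATTERN.match(v) is not None),
    }
    failures = {k: v for k, v in scores.items() if v < THRESHOLDS[k]}
    return scores, failures
```

Checks like these measure conformance to a standard at a single point in time. Because quality is a moving target, the thresholds themselves may need periodic review.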

While a succinct and holistic single-sentence definition of data quality may be difficult to craft, an axiom that appears to be generally forgotten when establishing a definition is that in business, data is about things that transpire during the course of conducting business. Business data is data about the business, and any data (transient or persisted) about the business is metadata.

Generally, business data describes an object, concept, or event that transpires in the course of conducting commerce. Typically, business data reflects something in the physical world, such as a customer, a purchase order, a product, a service, or a location. First and foremost, the definition as to the quality of data must reflect the real-world object, concept, or event to which the data is supposed to be directly associated.

Traditionally, the name of a customer, contact information, and other pertinent details are stored in a file or a database and are customarily referred to as data. However, that data is not the customer. The customer is the real thing (the flesh and blood, if a living individual); the data represents a description of the real thing. Most of the time, business-oriented data is the first line of metadata.

Assuming the business adequately captures everything of interest about the real-world object, concept, or event, organizations are then free to worry about the best way to instantiate the real world as metadata—the business data. In turn, objects such as a photograph, an audio recording, unstructured textual content, and column/field-based portrayals may all suffice as means to represent what is or has transpired in the real world. However, how the metadata (business data) is consumed generally influences which form of metadata (business data) is appropriate.

A business may want to track how a customer traverses a store on each visit. However, if the means to monitor the customer (the object) and the course the customer navigates (a continual series of events) are absent, whatever data is captured will obviously be insufficient for that use. Therefore, even the total absence of business data can affect the level of data quality, because the appropriate metadata is not being captured.

However, if a store sets up a series of video cameras to monitor and track each customer, the customer’s movement can now be adequately tracked, even if multiple monitoring devices are required. From video, to tagging, to alternate representations, data is ultimately being prepared for consumption. What matters to an organization is that, somewhere between the real-world event, the video recording, the tagging of the video, and any subsequent representation, some information will likely be lost.

Any loss of information becomes a constraint. An enterprise cannot know anything more than what the organization has elected to collect and to persist. Information loss may contribute to the effects of viral data as service-oriented applications and knowledge workers attempt to interpolate and extrapolate on what may have been lost.

More often than not, perceived needs for information are based on a myopic view of the business landscape. As information technology departments push service-oriented solutions into the business, the business will feel a growing compulsion to take a broader view of what can and needs to be captured about the real-world objects, concepts, and events that transpire during the course of commerce.

Recognizing that business data is metadata should help foster the understanding that there is more to be gathered (in terms of details). In many organizations, businesspeople are responsible for listing and detailing their business needs. In turn, these needs are translated into the products of information technology. However, businesspeople who attempt to relay their needs may fail to do so fully for the following reasons:

• Information technology response times: Poor response times mean that requirements may be trimmed to meet project deadlines. Such cutting back is a burden and a constraint on the business and results in an inability to satisfy a business need.

• Corporate direction: Whereas some organizations establish three- to ten-year strategic plans, many other organizations do not do so because they are dealing with point-in-time influences such as market forces, size of market capitalization for publicly traded corporations, legislation, customer preferences, environmental considerations, and other factors.

• Lack of vision: Businesspeople are not normally trained in visionary thinking, and so forecasting or anticipating needs may seem a futile aspiration. The lack of vision might also result from restrictions on shared knowledge (perhaps in response to insider-trading laws).

• Business metrics that de-motivate: Maintaining a sense of orderliness causes organizations to limit the responsibilities people are generally tasked with in their jobs. In this way, the organization promotes a self-imposed siloed structure. As such, people are subsequently measured within their domain. Business metrics (a form of control) tend to encourage employees to limit or restrict their actions because their extracurricular contributions cannot be formally recognized—a de-motivating factor.

• Organizational structures fundamentally set up as a collective of independent silos: The silo structure of an organization, coupled with corporate value chains, instills a notion of selective touch points across the business rather than a ubiquitous all-points to all-points functional enterprise. Limitations in specifications can directly affect the quality of data captured in conducting business, and so the business must communicate what is truly required and cannot be cut out.

“You improvise. You adapt. You overcome.” A mantra of the U.S. Marines and other military forces around the world, this phrase was echoed by actor Clint Eastwood (b. 1930) in the 1986 war movie Heartbreak Ridge to rally the reconnaissance unit he was leading. In the movie, Eastwood’s Marines are shown invading Grenada on October 25, 1983.

Although Operation Urgent Fury (the codename for the invasion of Grenada) was actually carried out, the achievements of the U.S. Marines in the movie were in real life accomplished by the U.S. Army (specifically, the Rangers and the 82nd Airborne).42 Ascertaining truth is obviously a critical aspiration in business: in business practices, in providing specifications, and in provisioning data.

As previously stated, certain terminology and concepts of the modern business world have been borrowed from the military. To improvise, adapt, and overcome is another example of such borrowing. Information technology solutions that cannot natively react to new situations leave knowledge workers to improvise, adapt, and overcome. Service-oriented architectures are intended to encourage solution builders to create offerings that can readily transcend point-in-time solutions.

Flexible solutions are often more adaptive on the software (logic) side and much less adaptive on the data (database) side. Programmers and their counterparts from data management and database administration do not typically work in tandem when it comes to creating a uniform adaptive solution appropriate for a reactive environment.

Technical characteristics intended to benefit the business when using service-oriented solutions include interoperability, reusability, extensibility, layering of abstraction, and loose coupling. Each characteristic can contribute to a final solution to varying degrees. The characteristics of service-oriented architecture are not a Hobson’s choice (take it or leave it).43 For example, a reusable service may be highly reusable, moderately reusable, or partially reusable.

43. Mr. Tobias Hobson and the proverb “when what ought to be your Election was forced upon you, to say, Hobson’s choice” were first chronicled by Hezekiah Thrift. See Thrift (1712).

• Interoperability: Interoperability refers to “the ability of systems, units, or forces to (a) provide services to and accept services from other systems, units, or forces, and (b) use the services so exchanged to enable them to operate effectively together.”44

Integration and interoperability are polysemic exchange mechanisms. One potential way to compare integration with interoperability is that integration may require some additional logic or service to successfully perform the exchange. The additional service would be used to provision a gateway mechanism to provide additional formatting, transformation, or other enrichment functions.
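The gateway role described above can be illustrated with a short Python sketch. In it, a hypothetical legacy order message is reformatted, transformed, and enriched before a target service consumes it. The field names, the date format, and the `source` tag are assumptions made for illustration and do not describe any particular product.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical legacy message: terse field names, MM/DD/YYYY dates, amounts in cents.
legacy_order = {"ORD_NO": "A-1001", "CUST": "C-42", "ORD_DT": "03/15/2024", "AMT_CENTS": "125050"}


def gateway_transform(legacy: dict) -> dict:
    """Integration gateway: map a legacy message onto the format a target service expects."""
    return {
        # Formatting: rename fields to the target vocabulary.
        "orderId": legacy["ORD_NO"],
        "customerId": legacy["CUST"],
        # Transformation: MM/DD/YYYY to an ISO 8601 date.
        "orderDate": datetime.strptime(legacy["ORD_DT"], "%m/%d/%Y").date().isoformat(),
        # Transformation: integer cents to a two-decimal amount (Decimal avoids float rounding).
        "amount": str((Decimal(legacy["AMT_CENTS"]) / 100).quantize(Decimal("0.01"))),
        # Enrichment: record the message's origin so its provenance can be traced later.
        "source": "legacy-order-system",
    }
```

With interoperability, by contrast, the target service would accept the original message directly and no such gateway would be needed.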

Interoperability tends to permit services to naturally talk or readily exchange consumable data between them. When the input and output messages for a service are formally defined along with the business rules or underlying logic that can be applied, the service can be said to be associated with a contract. The use of a contract can help promote and sustain a manageable interoperable paradigm—however, techniques must still be used to avoid creating a point-in-time solution that cannot readily address ongoing business demands.
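A contract in this sense can be sketched in Python with typed request and response messages and an explicit business rule. The credit-check service, its fields, and the `MAX_LIMIT` rule are hypothetical. Real contracts are often expressed in an interface description language such as WSDL or OpenAPI rather than in application code.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CreditCheckRequest:
    """Input message defined by the (hypothetical) contract."""
    customer_id: str
    requested_limit: Decimal


@dataclass(frozen=True)
class CreditCheckResponse:
    """Output message defined by the (hypothetical) contract."""
    customer_id: str
    approved: bool
    reason: str


class ContractViolation(ValueError):
    """Raised when a message does not satisfy the service contract."""


# Hypothetical business rule published as part of the contract.
MAX_LIMIT = Decimal("50000")


def credit_check(request: CreditCheckRequest) -> CreditCheckResponse:
    # Enforce the input side of the contract before any processing.
    if not request.customer_id:
        raise ContractViolation("customer_id is required")
    if request.requested_limit <= 0:
        raise ContractViolation("requested_limit must be positive")
    # Apply the published business rule.
    if request.requested_limit > MAX_LIMIT:
        return CreditCheckResponse(request.customer_id, False, "exceeds maximum limit")
    return CreditCheckResponse(request.customer_id, True, "within limit")
```

Because both messages and the rule are explicit, any consumer can know in advance what it must send and what it will receive. That is the property that makes the contract useful for interoperability.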

• Reusability: While reuse is favorable, not all services are equal candidates for reuse. Reuse is the ability of a service to be consumed in more than one context or business situation. Services that are geared toward some type of infrastructure function, or that have been constructed to be less dependent on a specific type of payload (message or data), are potentially better suited to reuse.

“SOA also allows the preservation of IT investments because it enables us to integrate functionality from existing systems in an enterprise instead of replacing them. Thus support for reusability is central to future IT development.”45
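The following minimal Python sketch illustrates a payload-independent, infrastructure-style service. It records a fingerprint of any JSON-serializable message without inspecting the message's structure, so any business service could call it. The `audit` function and its in-memory log are hypothetical stand-ins for a real audit facility.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

# Hypothetical in-memory audit store.
AUDIT_LOG: List[Dict[str, Any]] = []


def audit(context: str, payload: Any) -> str:
    """Record a fingerprint of any JSON-serializable payload.

    The function never inspects the payload's fields, so order, customer,
    or shipment services could all reuse it unchanged.
    """
    # sort_keys makes the fingerprint independent of key order.
    body = json.dumps(payload, sort_keys=True, default=str)
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    AUDIT_LOG.append({
        "context": context,
        "sha256": digest,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    })
    return digest
```

A payload-specific service, such as one that validates order lines, would be harder to reuse because its logic is bound to one message shape.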

• Extensibility: “SOA offers extensibility to give applications and users access to information not only within the enterprise but also across enterprises and industries.”46 Services can be extended because they can be composed or orchestrated and adapted to meet any changing need that a business may have. Extensibility can also provide additional capabilities by offering late-binding options.

• Layering of abstraction: Hiding details and providing flexibility are traits associated with abstracting the underlying logic within a service. The service may offer more than one operation, and all the specific details can be obscured (the notion of a black box, where intricate functions are isolated or hidden to promote ease of use).

Although SOA characteristics can be highly desirable, the burden lies in making them practical and sustainable. “The most important problem is that SOA leads to many different layers of abstraction. When each service abstracts business functionality of a lower layer, it also has to abstract the user identity context from the underlying application.”47

• Loose coupling: Loose coupling is the polar opposite of tight coupling. The aim of a loosely coupled solution is to have services that are independent of one another. Because changes to the business occur continually, the ability to swap the old for the new without disrupting the entire solution is a significant benefit. “Loose coupling is seen as helping to reduce the overall complexity and dependencies. Using loose coupling makes the application landscape more agile, enables quicker change, and reduces risk.”48

Loose coupling can occur on multiple levels: hardware, programming language, service name, message format, and orchestration. The formal contracts mentioned under interoperability define the operations of a service and can themselves be viewed as a form of tight coupling. In this regard, the boundary that frames operations and services is what allows loose coupling to be achieved along the other avenues.
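The contract idea from the interoperability discussion, together with the black-box layering of abstraction, can be sketched in Java as an interface whose input and output messages are formally defined types and whose business rule is stated alongside the operation. The names `EmployeeLookup`, `LookupRequest`, `LookupResponse`, `InMemoryEmployeeLookup`, and the six-digit ID rule are hypothetical, chosen only for illustration; Java 16 or later is assumed for records.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical input message: the contract fixes its shape.
record LookupRequest(String employeeId) {}

// Hypothetical output message returned by every implementation.
record LookupResponse(String employeeId, String displayName) {}

// The contract: operation, messages, and a business rule consumers can rely on.
interface EmployeeLookup {
    // Business rule (assumed for illustration): IDs are exactly six digits.
    static boolean isValidId(String id) {
        return id != null && id.matches("\\d{6}");
    }

    Optional<LookupResponse> lookup(LookupRequest request);
}

// One provider of the contract; how it stores data is hidden from consumers.
class InMemoryEmployeeLookup implements EmployeeLookup {
    private final Map<String, String> names;

    InMemoryEmployeeLookup(Map<String, String> names) {
        this.names = Map.copyOf(names);
    }

    @Override
    public Optional<LookupResponse> lookup(LookupRequest request) {
        // Enforce the contract's rule before doing any work.
        if (!EmployeeLookup.isValidId(request.employeeId())) {
            throw new IllegalArgumentException("ID violates contract: " + request.employeeId());
        }
        return Optional.ofNullable(names.get(request.employeeId()))
                .map(name -> new LookupResponse(request.employeeId(), name));
    }
}
```

Consumers depend only on the interface and its message types, which is the deliberate point of tight coupling. A different implementation (for example, one backed by a database) could replace `InMemoryEmployeeLookup` without changing callers, provided it honors the same rule.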
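Extensibility through composition and late binding can be sketched as a small registry that resolves services by name at run time, so a new capability can be assembled from existing ones and registered without recompiling its callers. `ServiceRegistry`, the `Function<String, String>` service shape, and the service names in the usage note are assumptions for illustration; a real deployment might rely on `java.util.ServiceLoader`, a dependency-injection container, or a service bus instead.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical registry: callers look services up by name when they run,
// not when they are compiled (late binding).
class ServiceRegistry {
    private final Map<String, Function<String, String>> services = new HashMap<>();

    void register(String name, Function<String, String> service) {
        services.put(name, service);
    }

    Function<String, String> resolve(String name) {
        Function<String, String> service = services.get(name);
        if (service == null) {
            throw new IllegalStateException("No service registered as " + name);
        }
        return service;
    }

    // Composition: chain existing services, in order, into a new one.
    // Each named service is looked up when the pipeline is built.
    Function<String, String> compose(String... names) {
        Function<String, String> pipeline = Function.identity();
        for (String name : names) {
            pipeline = pipeline.andThen(resolve(name));
        }
        return pipeline;
    }
}
```

For example, after registering `String::trim` as `"trim"` and `String::toUpperCase` as `"upper"`, registering `registry.compose("trim", "upper")` as `"normalize"` yields a service that turns `"  acme corp "` into `"ACME CORP"`, and existing callers that resolve `"normalize"` by name need no changes.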

Even if a solution is designed to be reactive to the needs of a business, the flexibility it affords should not come at the expense of being able to control each incarnation tightly and absolutely. For example, instead of admitting that its complex systems were flawed, the former brokerage company Kidder Peabody fired one of its traders, Joseph Jett (b. 1958).

Allegedly, Jett booked more than $330 million in false profits on bond trades. “Half of the profits booked by Jett were not legitimate profits, but appeared on Kidder’s computer screens due to a glitch in a complicated proprietary program that processed the stripping and reconstituting of these trades.”49

As a generalized statement, within capitalist markets, employees who have an opportunity to earn more than a base salary make it their first order of business to learn how to master their commission plan. Therefore, employees like Kidder Peabody’s Jett are apt to find ways to manipulate their earning potential. Commissioned employees often provide a ripe scenario for the generation of viral data.

In trying to improve the quality of its products, one automobile manufacturer incented its plant managers to produce better-quality vehicles. The quality at each measured plant rose, and defects and rework went substantially down. The side effect was that dealerships started submitting more claims through the manufacturer’s warranty program (because they were receiving defective vehicles). The plant managers were not incented in a way that had a positive effect on overall quality.

In some companies, commissioned salespeople might receive compensation on only a subset of the corporation’s overall portfolio of products and services, which in some cases may lead to failures to upsell or cross-sell. As a result, what a potential customer ultimately wants or requires is unlikely to be captured or recorded (ergo, considered).

In the normal course of business events, scaling up to reach a critical mass affords benefits: operational savings, general efficiencies, and sustainable revenue. Mark Walsh (b. 1960) found a niche at Lehman Brothers in the early 1990s by buying up discounted debt (mortgages); Walsh had mastered his commission plan. A dozen years later, Walsh had scaled up his been-there-done-that approach to buying discounted mortgages to the point of holding more than $32 billion of loans and assets.50 Arguably, Walsh’s actions fueled the downfall of the company.

Any organization that implements a decisioning environment51 as part of a metrics program, in order to gain insight into commissions, profitability, productivity, purchasing, return on investments, general accounting, and so on, hopes to capture, monitor, and act upon the correct types of measurements. And by necessity, all organizations measure.

51. A decisioning environment is one that supports real-time or static analytic capabilities. A data warehouse and a data mart are example data stores in a decisioning environment.

A data virtualization layer implemented through services is one method that can be used to deliver measurements from a decisioning environment in a timely fashion to those who are tasked with understanding, interpreting, and acting upon the data.
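A data virtualization layer of this kind can be sketched as a service that answers requests for a measurement by business name while the physical store that supplies it stays hidden and replaceable. `MeasurementSource`, `MetricsView`, and the priority-order lookup are hypothetical; commercial data virtualization products add query federation, caching, and security that this sketch omits.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical physical source, such as a data mart or a data warehouse.
interface MeasurementSource {
    Optional<Double> read(String metric);
}

// The virtualization layer: consumers ask for a metric by business name and
// never learn which store answered.
class MetricsView {
    private final List<MeasurementSource> sources;

    MetricsView(List<MeasurementSource> sources) {
        this.sources = List.copyOf(sources);
    }

    // Tries each source in priority order and returns the first value found.
    Optional<Double> metric(String name) {
        for (MeasurementSource source : sources) {
            Optional<Double> value = source.read(name);
            if (value.isPresent()) {
                return value;
            }
        }
        return Optional.empty();
    }
}
```

A consumer calling `metric("dailySales")` neither knows nor cares whether a data mart or a data warehouse answered, so a store can be added, retired, or reordered without changing the consumer.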

After a metric has been captured, a number of negative things can happen, including the following:

• Measurements are discarded and are not reported or presented to anyone.

• Measurements are reported, but for one reason or another, the details are ignored and are not reviewed.

• Measurements are reviewed, but the results do not provide any actionable insight because the wrong types of metrics were captured.

• Measurements are not presented in a coherent manner.

Potentially, these are all signs of viral data, which in turn produces toxic symptoms of a dysfunctional organization.

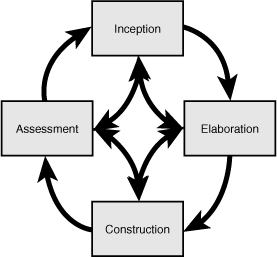

When measurements are actionable, the resulting feedback can be negative or positive. Feedback is often stated in terms of a feedback loop and modeled in a circular fashion, emphasizing the loop. Although circular, the loop is linear (see Figure 1-5). In reality, feedback comes from many places and tends to be nonlinear and more chaotic to handle (see Figure 1-6).

Figure 1-5 Linear feedback loop.

Figure 1-6 Nonlinear feedback loop: A picture of the process of making a nonlinear feedback model is itself a nonlinear feedback process.

Positive feedback is data returned to a process that reinforces and encourages behaviors akin to the original results (as in the case of Lehman’s Walsh, a pattern that also characterized the global financial crisis of 2008). Positive feedback can encourage people to sustain and strive for more of the same.

Witnessing an increase in sales might encourage management to keep pushing for an even greater number of sales in the next reporting period. In the end, an uncontrolled positive feedback loop contributes to the eventual demise of a process and may be detrimental to any other associated mechanism. Applying negative feedback to maintain a process can help control wild looping behaviors.

Despite its name, negative feedback is preferable. Negative feedback leads to adaptive and goal-seeking behavior. The goal may be self-determined, fixed, or evolving, and the adaptive behavior seeks to sustain a given level. Negative feedback influences the process to counteract a previous result, steering it back toward the goal.
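The contrast between the two kinds of feedback can be made concrete with a toy simulation. Positive feedback feeds a fraction of the current value back in, so the value compounds without limit; negative feedback feeds back a fraction of the gap between the value and a goal, so the value settles on the goal. `FeedbackLoops`, the gain of 0.5, the start of 100, and the goal of 200 are arbitrary assumptions chosen only to show the shape of each loop.

```java
// Toy models of the two feedback loops; gains and values are illustrative only.
class FeedbackLoops {

    // Positive feedback: each result reinforces the next (x grows by gain * x).
    static double positive(double start, double gain, int steps) {
        double x = start;
        for (int i = 0; i < steps; i++) {
            x = x + gain * x;
        }
        return x;
    }

    // Negative feedback: each step corrects a fraction of the gap to the goal.
    static double negative(double start, double goal, double gain, int steps) {
        double x = start;
        for (int i = 0; i < steps; i++) {
            x = x + gain * (goal - x);
        }
        return x;
    }

    public static void main(String[] args) {
        // Starting at 100 with gain 0.5: positive feedback multiplies the value
        // by 1.5 each step, while negative feedback halves the gap to 200.
        for (int steps = 0; steps <= 8; steps += 2) {
            System.out.printf("step %d: positive=%.1f negative=%.1f%n",
                    steps, positive(100, 0.5, steps), negative(100, 200, 0.5, steps));
        }
    }
}
```

After two steps the positive loop has reached 225 and the negative loop 175; continuing, the first keeps accelerating while the second closes in on 200, which is the goal-seeking behavior described above.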

Metrics, measurements, and feedback govern behavior and influence the features of an application. A heuristic philosophy in the spacecraft industry is this: If you cannot analyze it, do not build it. However, commercial organizations often stop short of such safeguards, and the result is behavior that over time may prove to be unstable or aperiodic, especially in a volatile marketplace. Perhaps a business heuristic could simply be expressed as follows: The level of energy spent gathering a measurement should not exceed the business value gained from acquiring the measurement.

Organizations must learn to recognize that adverse behaviors associated with formulaic reward schemes may result in misinformation, lack of information, misuse of information, and overall poor decision making. However, such an understanding is not sufficient. Organizations must also combat these behaviors and look for other incentives that may lead to tenable behaviors and performance. This line of thinking provides motivation to instill governance.

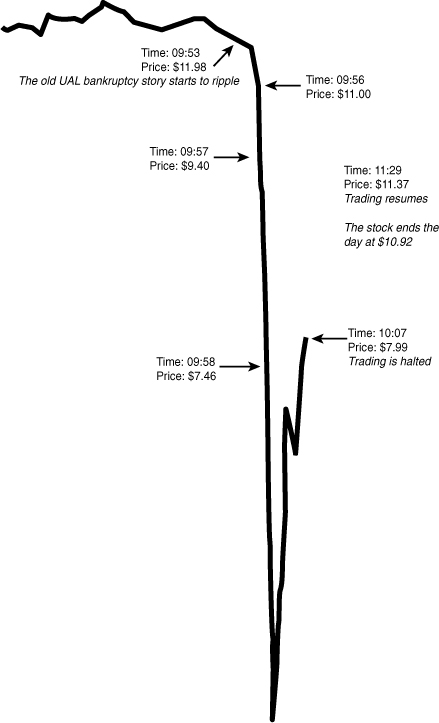

“Don’t be evil” is Google’s informal corporate motto.52 However, Google’s positive feedback robot Web crawler service, Googlebot®, is not blameless and has, in fact, caused harm. The South Florida Sun-Sentinel, a newspaper based in Fort Lauderdale, made available a link to a story that the company had previously published.53 The link appeared in one of the dynamic areas of the website’s Business section. The dynamic area was titled “Popular Stories: Business,” and the story’s hyperlink was placed within the Most Viewed tab.

The newly linked article was missing some vital metadata, such as the fact that the article was an old story (six years old). The leviathan Googlebot encountered the link and followed the trail. Googlebot did not care that information was missing; after all, text is text, and digging is good! Thanks to the Googlebot, the media outlet Bloomberg picked up the story and shortly afterward announced that United Airlines, for a second time in six years, had filed for bankruptcy protection.

The stock price of United Airlines plummeted, and trading was temporarily suspended (see Figure 1-7). The sheer panic experienced by shareholders directly resulted from how unstructured data is sometimes mishandled through a service-oriented architecture. Misled by an absence of metadata, Google ended up seeding what can be mildly construed as a misleading headline.

Figure 1-7 United Airlines stock price performance on September 8, 2008.

The headline revealed that sometimes shareholders panic before performing further research. The act-first nature exhibited by some of the shareholders is not without counterpart in business and certainly within how some people choose to implement a service-oriented architecture.

Was governance needed in this situation? If so, with whom does the responsibility for governance lie? With the Sun-Sentinel, for not providing adequate metadata in all of its stories? With Google, for not being sensitive to the type of site that was being mined, or for not even realizing that it had already cached this story? Or with Bloomberg, for reporting data regardless of the facts?

To help control people or situations, organizations often seek out and adopt a proven practice, specifically a best practice. However, applying Socratic logic to the concept of a best practice, an organization must understand that an adopted best practice may be just an average practice and may not result in the perceived benefits.

To be a best practice, the practice must be proven to have been successful on more than one occasion or in more than one instance. If a best practice cannot be demonstrated to be repeatable, the practice cannot be regarded as a de facto best practice. If a practice is to successively succeed in various situations or industries, the practice must logically be seen as an average practice. Potentially, a best practice should be regarded only as a reasonable starting point. After all, “chasing after apparent best practices is largely futile... At best it may make the firm as good as the competition, and this is not enough to win. The more likely outcome is that it results in a monstrosity, a patchwork of ill-fitting organizational features that do not add up to a coherent design. This is a recipe for failure.”54

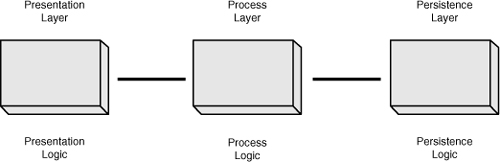

In addressing viral data in service-oriented architectures, elements of guidance are potentially more valuable than a specific set of practices. For example, since the 1990s, many organizations have sought to deploy information technology solutions using a loosely coupled architecture: the multi-tier architecture, of which the most widespread is the three-tier architecture. A fully optimized solution would necessarily be tightly coupled, but tightly coupled solutions typically prove too cumbersome and costly to maintain in light of a changing business organization and new technology. Loosely coupled solutions are designed to offer improved flexibility and enable autonomous change within an organization.

The emblematic three-tier architecture, from a hardware point of view, is shown in Figure 1-8. Each tier is intended to be physically independent, not just conceptually independent (hence the notion of loose coupling). The solution is separated into three distinct tiers (presentation, process, and persistence) and rests on the premise that each tier can be changed without affecting the other tiers.

Figure 1-8 Three-tier architecture, a hardware view.

Loose coupling should enable adjustments to be made to a system on an as-needed basis while maintaining the overall performance and without incurring the type of expenses associated with restructuring a whole system. Figure 1-9 shows a three-tier architecture from the aspect of software.

Figure 1-9 Three-tier architecture, a software view.

Oblivious to what transpires in the other tiers, data practitioners can single-handedly cause the ideals of a loosely coupled architecture to fall apart. As previously stated, the software side of a solution (the process tier) is often seen to be much more capable of being reactive and adaptive than the database side of a solution (the persistence tier).

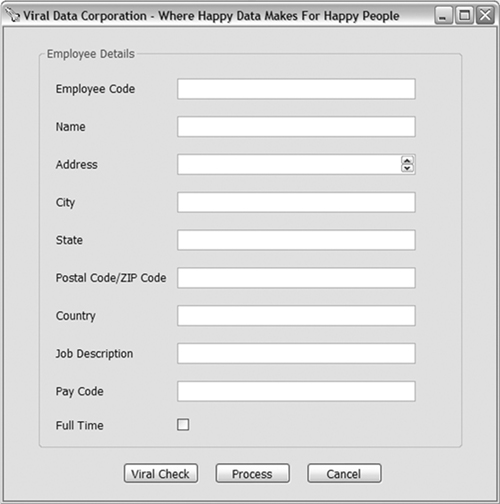

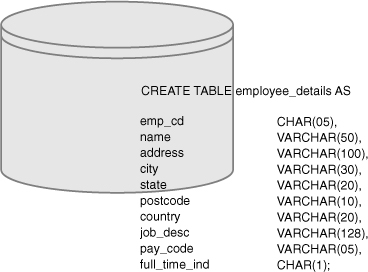

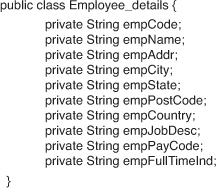

For example, Figure 1-10 shows a sample graphical user interface from the presentation tier. Figure 1-11 shows a potential database design to support the process and presentation tiers.

Figure 1-10 Sample presentation tier, an entry form.

Figure 1-11 Sample persistence tier, a database table design.

What can be observed from this simple example? First, each field in the graphical user interface (GUI) has a counterpart in the database table. Second, the length of each field in the GUI can be deduced to match the length of its corresponding column in the database table.

Although the architecture remains loosely coupled, portions of the design unnecessarily couple certain elements tightly, and that tight coupling nullifies the benefits of the loosely coupled architecture. If the employee name needs to be extended by five characters, the table must be changed. If a new field is added to the form, again the table must be changed.

The chances are high that a change to either the presentation or the persistence tier will require modifying the touch points between the process tier and the persistence tier, as well as those between the process tier and the presentation tier. Potentially, the core parts of a service-oriented design within the process tier may be able to withstand such changes, thus preventing tight coupling throughout the design.

Figure 1-12 shows a Java program that can withstand a length change to a field. In this case, the field lengths are not subject to early binding.

Figure 1-12 Sample process tier, a structure expressed in Java.
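The code of Figure 1-12 is not reproduced in this text. As a stand-in, the following minimal sketch (not the figure's code) shows one way to keep field lengths out of early binding: the limits are supplied at run time, for example from hypothetical table metadata, rather than declared as compile-time constants. `EmployeeRecord` and the field name `employeeName` are assumptions for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Field lengths are supplied at run time (late binding) instead of being
// compiled in, so widening a column does not require changing this class.
class EmployeeRecord {
    private final Map<String, Integer> maxLengths; // e.g., read from a catalog
    private final Map<String, String> values = new HashMap<>();

    EmployeeRecord(Map<String, Integer> maxLengths) {
        this.maxLengths = Map.copyOf(maxLengths);
    }

    void set(String field, String value) {
        Integer max = maxLengths.get(field);
        if (max == null) {
            throw new IllegalArgumentException("Unknown field: " + field);
        }
        if (value.length() > max) {
            throw new IllegalArgumentException(field + " exceeds " + max + " characters");
        }
        values.put(field, value);
    }

    String get(String field) {
        return values.get(field);
    }
}
```

Under this arrangement, extending the employee name by five characters means changing the supplied metadata (say, from 30 to 35) rather than editing and recompiling the class, although the persistence tier itself still has to change.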

Tight coupling produces solutions that are more difficult to change. Solutions that are more difficult to change are more susceptible to being compromised. Compromised solutions are a breeding ground for viral data. Certainly, the presentation tier must appear, at least visually, to be aligned with the current needs of an organization. The same is certainly not true for the persistence tier.

Changing a data structure is one of the simplest activities information technology can perform. However, consider the number of services and other programs that act upon the data structures, along with the physical amount of data that an organization persists (terabytes and petabytes). These make any type of change to a data structure one of the most complex information technology activities to orchestrate.

A point that cannot be overemphasized is that an organization may get only one opportunity to get a data structure for persistent data in the right form. Failure to formulate the structure correctly results in workarounds, band-aids, and patches, which can lead to viral data, distrust, and ultimately, the decommissioning of an application.

Even worse than compromising the architecture by tightly coupling the design is tightly coupling the design to the business—the most painful (to the business as a whole) and expensive (to the business) tier to couple or align with the business is the persistence tier. A persistence tier aligned with the business cannot be reactive (by information technology) or adaptive (to the business).

In this light, the technical characteristics discussed earlier (interoperability, reusability, extensibility, layering of abstraction, and loose coupling) that are intended to benefit businesses using service-oriented solutions need to be reexamined. Deploying the wrong type of data structure design can undermine each of these characteristics as follows:

• Interoperability: In terms of physics, deployed databases, for the most part, cannot support a single instance of data to satisfy every business need. The aspect of physics that is of interest here is time: latency. Current technology does not permit a query, regardless of data volume and regardless of query type, to return a result set in zero seconds. In all likelihood, the need to have a robust data architecture, along with an ability to control data redundancy, will always exist.

Keeping redundant copies of data adequately synchronized has historically proven awkward and, in many cases, severely lacking. Until an organization can discipline itself so that any service can obtain data from anywhere within the organization that is consistent with the service’s context, the ability to achieve interoperability will, at certain levels, be compromised.

• Reusability: Producing and sustaining a corporate-wide canonical model, even at the logical level, has proven difficult. The difficulty demonstrates an inability to produce structures and substructures (columns or groupings of columns) that are uniform and consistent across all data structures.

For example, finding inconsistent lengths for a common concept such as the name of a city is typical, as is finding inconsistent forms of a common concept such as the name of a person. A person’s name may in some cases be portrayed as a single composite field, whereas in other cases, the name is portrayed in its atomic components: first name, middle name, and last name. Failing to apply a single modeling style consistently to a concept can limit reusability and even the ability to detect a reuse opportunity.

• Extensibility: The tight coupling of business concepts directly to data structures is a design pattern that has not readily changed since the adoption of the ANSI-SPARC architecture55 (which is also a model intended to promote extensibility). Marrying data structures to the concepts they portray has severely limited how well structures can be morphed (extended) to accommodate changing business needs.

• Layering of abstraction: In many ways, layering has always been part of the data landscape (from COBOL REDEFINES clauses, to the use of stored procedures, to the use of a federated query engine, and so on). However, many of these layering mechanisms are used to filter rows and columns and not as a way to recast an abstraction into a tangible business concept.

The general exception to this conundrum has been where the business develops its own natural abstraction vocabulary. For example, medical insurance companies may use the term physician to apply to all the following: a medical doctor, a surgeon, a chiropractor, a nurse, a hospital, an ambulance, and an outsourced ambulance service. In this case, the term physician is not restricted to a person who practices medicine, but applies to any person or organization that may be directly or indirectly associated with provisioning medical care covered by an insurance policy.

Another characteristic that can impede a service-oriented solution over the long term is when a concept is incorrectly modeled. For example, the concept of an employee is often modeled as a thing,56 whereas an employee is really a relationship between two things: those things being an organization and a person. In the relationship, the organization plays the role of an employer, while the person plays the role of an employee.

56. This is often why data modeling is referred to as thing modeling, whereas the basic metamodel for a data model is commonly depicted as thing-relationship-thing. See Hay (2006).

• Loose coupling: As previously stated, when elements of a database structure are, sensu stricto, surfaced in other tiers, the aspirations of loose coupling are undermined.
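Two of the modeling points above, a single consistent form for a person's name and an employee modeled as a relationship rather than a thing, can be sketched together with Java records. The record names, identifiers, and the start-date attribute are hypothetical illustrations, not a prescribed canonical model; Java 16 or later is assumed for records.

```java
import java.time.LocalDate;
import java.util.StringJoiner;

// A person's name modeled once, in atomic components, for reuse everywhere.
record PersonName(String first, String middle, String last) {
    PersonName {
        if (first == null || first.isBlank() || last == null || last.isBlank()) {
            throw new IllegalArgumentException("first and last names are required");
        }
        middle = (middle == null) ? "" : middle.trim();
    }

    // The composite form is derived from the parts rather than stored separately.
    String fullName() {
        StringJoiner joiner = new StringJoiner(" ");
        joiner.add(first);
        if (!middle.isEmpty()) {
            joiner.add(middle);
        }
        return joiner.add(last).toString();
    }
}

// The two "things": a person and an organization.
record Person(String personId, PersonName name) {}

record Organization(String organizationId, String legalName) {}

// "Employee" is a relationship, not a thing: the organization plays the
// employer role and the person plays the employee role.
record Employment(Organization employer, Person employee, LocalDate startDate) {}
```

Because `Person` carries no employer, the same person can participate in several `Employment` relationships (with different organizations, or with the same one at different times) without duplicating person data.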

As the characteristics of a service-oriented architecture become realized, a fine-grained system-of-record service that infects a single-copy database, or an already infected database that is serviced in real time throughout the operations of a business, can cause anything from the mildest of annoyances to a severe and chronic impediment: an enterprise pandemic.

The art of software has moved beyond assembling a team of practitioners with intelligence and resourcefulness to assembling a team of practitioners with good judgment and wisdom. A potential starting point is governance.