Chapter 15

Network security

After completing this chapter, you will be able to:

Understand Azure network basics, including VNets, CIDR blocks, and routing.

Understand UDRs and NSGs.

Understand basic network topology, environment restrictions, and ingress/egress control objectives.

Develop solutions protected by network virtual appliances.

Implement PaaS services without public IP endpoints.

Network threats stem mainly from large attack surfaces or from denial-of-service (DoS) attacks. These threats are why network defenses still matter in the age of zero trust, in which identity is the new primary perimeter. In a holistic zero-trust approach, network security provides an important secondary defensive perimeter.

Azure networking primer

In a cloud environment and in some on-premises environments, networking is defined through code that is often referred to as Infrastructure as Code (IaC). For Azure, that code is written as an ARM template, Bicep file, or Terraform module.

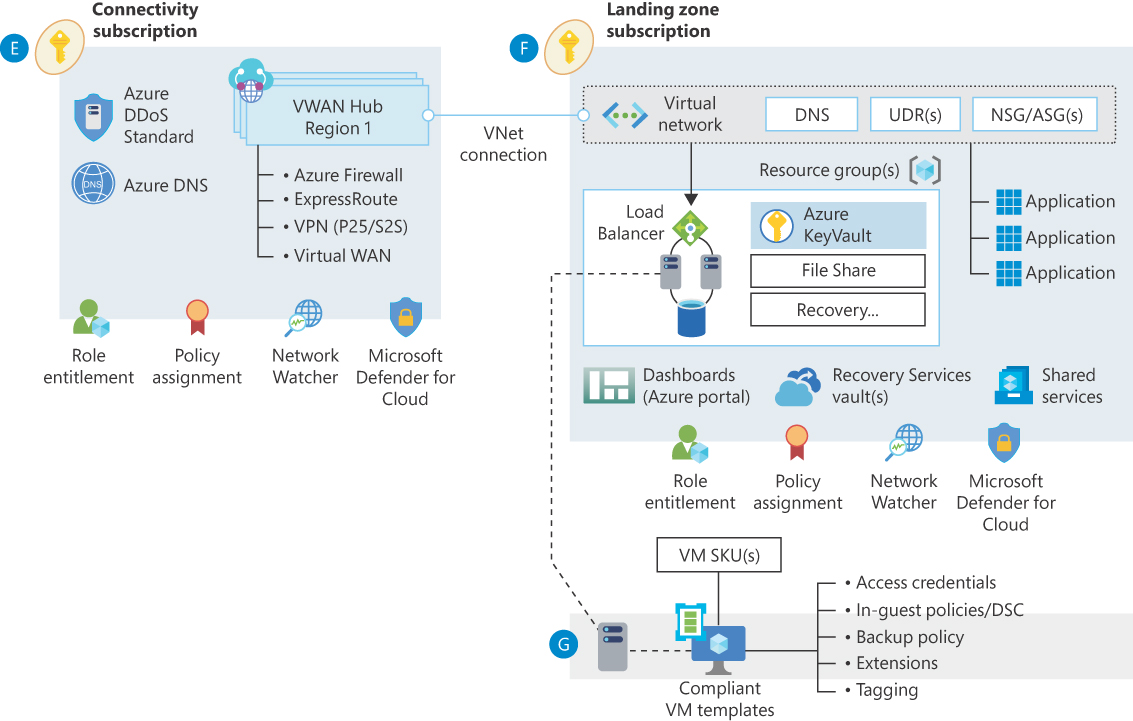

Azure uses virtual networks (VNets) and subnets within each VNet to segment your solution’s network. VNets are independent of each other, and communication is possible only to public IP addresses or to peered VNets. VNet peering is most often done through a hub-and-spoke model, as shown in Figure 15-1.

FIGURE 15-1 Virtual WAN network topology.

In Bicep, the simplest VNet and subnet deployment is as follows:

```bicep
resource my_vnet_001 'Microsoft.Network/virtualNetworks@2020-06-01' = {
  name: 'my-vnet-001'
  location: 'eastus2'
  properties: {
    addressSpace: {
      addressPrefixes: [
        '10.0.0.0/24' // 256 addresses: 10.0.0.0 to 10.0.0.255
      ]
    }
    subnets: [
      {
        name: 'subnet-main'
        properties: {
          addressPrefix: '10.0.0.0/26' // 10.0.0.0 to 10.0.0.63
        }
      }
      {
        name: 'subnet-private-endpoints'
        properties: {
          addressPrefix: '10.0.0.64/26' // 10.0.0.64 to 10.0.0.127
        }
      }
    ]
  }
}
```

This Bicep file defines the following:

A VNet named my-vnet-001 (referenced in the Bicep file by the symbolic name my_vnet_001)

An address space of 256 IP addresses, starting at 10.0.0.0, expressed in CIDR notation as 10.0.0.0/24

A subnet named subnet-main with 64 IP addresses (59 usable), starting at 10.0.0.0

A subnet named subnet-private-endpoints with 64 IP addresses (59 usable), starting at 10.0.0.64

This leaves 128 IP addresses (10.0.0.128 through 10.0.0.255) available for future subnets.
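To double-check the arithmetic in the list above, the following Python sketch uses the standard-library ipaddress module. It verifies that both subnets fit inside the VNet, that they do not overlap, and that 128 addresses remain unallocated. It is only a sanity check and is not part of the deployment. The usable count subtracts the five addresses Azure reserves in every subnet.

```python
import ipaddress

# Address plan from the Bicep sample.
vnet = ipaddress.ip_network("10.0.0.0/24")
subnets = {
    "subnet-main": ipaddress.ip_network("10.0.0.0/26"),
    "subnet-private-endpoints": ipaddress.ip_network("10.0.0.64/26"),
}

AZURE_RESERVED = 5  # addresses Azure reserves in every subnet


def usable(net: ipaddress.IPv4Network) -> int:
    """Addresses left for your resources after Azure's reservations."""
    return net.num_addresses - AZURE_RESERVED


# Every subnet must sit inside the VNet's address space.
for name, net in subnets.items():
    assert net.subnet_of(vnet), f"{name} is outside {vnet}"

# Subnets must not overlap each other.
nets = list(subnets.values())
for i, a in enumerate(nets):
    for b in nets[i + 1:]:
        assert not a.overlaps(b), f"{a} overlaps {b}"

allocated = sum(net.num_addresses for net in nets)
remaining = vnet.num_addresses - allocated

for name, net in subnets.items():
    print(f"{name}: {net.num_addresses} addresses, {usable(net)} usable")
print(f"unallocated: {remaining} addresses")
```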

Let’s learn more about VNet networking.

IPv4 and IPv6 in Azure

Although the end of IPv4 has been predicted for the last 15 years, it is still the dominant addressing scheme, despite “running out” of address space. Technologies such as network address translation (NAT) have extended IPv4’s life. VNets and subnets in Azure predominantly use IPv4; however, Azure does support IPv6.

IPv4 concepts

IPv4 uses 4 bytes of addressing, written as four decimal numbers between 0 and 255 and separated by dots. The IPv4 address space ($2^{32}$ addresses) is subdivided into subnets. This subdivision is achieved with subnet masks.

A subnet mask specifies which bits are fixed by the subnet and which bits are available for resource addressing—for example, 11111111.11111111.11111111.11000000, or written as decimals, 255.255.255.192.

The starting address, called the network prefix, must fit within the subnet mask: the mask must not truncate any 1-bits of the prefix. In other words, the starting address must be aligned to the block size, which is a power of 2. For example, 10.0.0.64/26 is valid, but 10.0.0.32/26 is not, because a /26 block spans 64 addresses. The bits that are 0 in the subnet mask form the host part or, in the case of a VNet, can be used to create subnets.
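Python's standard ipaddress module enforces this alignment rule by default, which makes it handy for checking address plans before they reach a template. The following is a minimal sketch. The helper is_aligned is hypothetical and is not an Azure API.

```python
import ipaddress


def is_aligned(cidr: str) -> bool:
    """Return True if `cidr` is a valid prefix with no host bits set."""
    try:
        # strict=True (the default) raises ValueError when host bits are set.
        ipaddress.ip_network(cidr, strict=True)
        return True
    except ValueError:
        return False


print(is_aligned("10.0.0.64/26"))  # True: 64 is a multiple of the 64-address block
print(is_aligned("10.0.0.32/26"))  # False: 32 is not a multiple of 64
# With strict=False, the mask silently truncates the misaligned start address.
print(ipaddress.ip_network("10.0.0.32/26", strict=False))  # 10.0.0.0/26
```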

IPv4 addresses in Azure and CIDR

When specifying address prefixes in ARM, we use Classless Inter-Domain Routing (CIDR) notation, for example, 10.0.5.0/24. CIDR notation specifies the starting address, called the network prefix (in this example, 10.0.5.0), and the subnet mask size (here, /24), which indicates how many leading ones are in the subnet mask. The number of addresses available for your use is calculated as follows:

$$\text{addresses} = 2^{32 - \text{maskSize}} - 5$$

The 5 in the formula accounts for the addresses that Azure reserves in every subnet: the first four addresses and the last address.

The smallest supported subnet is a /29, which gives three available addresses ($2^{3} - 5 = 3$). A /30 block would yield $2^{2} - 5 = -1$ available addresses, so it cannot be used. See Table 15-1 for examples.

TABLE 15-1 CIDR definition examples

| CIDR | Subnet Mask | Network Prefix | Host Part | IP Address | Available Addresses |
|---|---|---|---|---|---|
| 10.0.1.8/29 | 255.255.255.248 | 10.0.1.8 | 0.0.0.6 | 10.0.1.14 | 3 |
| 10.2.4.0/24 | 255.255.255.0 | 10.2.4.0 | 0.0.0.129 | 10.2.4.129 | 251 |
| 10.3.0.0/16 | 255.255.0.0 | 10.3.0.0 | 0.0.17.15 | 10.3.17.15 | 65,531 |
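The columns of Table 15-1 can be computed with Python's standard ipaddress module. In this sketch, the host part is the IP address masked with the inverse (host) mask. The available count applies the formula above, including the five addresses Azure reserves. The function name describe is illustrative only.

```python
import ipaddress

AZURE_RESERVED = 5  # first four addresses plus the last one in each subnet


def describe(cidr: str, ip: str) -> dict:
    net = ipaddress.ip_network(cidr)
    addr = ipaddress.ip_address(ip)
    if addr not in net:
        raise ValueError(f"{ip} is not in {cidr}")
    # The host part is what remains after masking off the network prefix.
    host = ipaddress.ip_address(int(addr) & int(net.hostmask))
    return {
        "mask": str(net.netmask),
        "prefix": str(net.network_address),
        "host_part": str(host),
        # addresses = 2^(32 - maskSize) - 5
        "available": 2 ** (32 - net.prefixlen) - AZURE_RESERVED,
    }


for cidr, ip in [
    ("10.0.1.8/29", "10.0.1.14"),
    ("10.2.4.0/24", "10.2.4.129"),
    ("10.3.0.0/16", "10.3.17.15"),
]:
    print(cidr, describe(cidr, ip))

# A /30 would leave a negative number of addresses, so Azure cannot use it.
print(2 ** (32 - 30) - AZURE_RESERVED)  # -1
```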

Routing and user-defined routes

Azure uses standard IPv4 (and IPv6) routing. User-defined routes (UDRs) are used to inject routing through intermediaries. This capability is essential to route outbound traffic to the internet or to an on-premises environment through network virtual appliances (NVAs). It is common to use a UDR that routes all outgoing traffic from your solution to an NVA to perform packet inspection for the purposes of detecting data leakage. This is often called data egress filtering.

UDRs are defined in route tables, which are attached to subnets. Unlike NSGs, route tables cannot be attached to individual NICs. Each route table has a unique name and can be reused across multiple subnets and VNets. Governance enforces the use of the correct per-region route table.
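Azure picks the effective route for a packet by longest prefix match: the most specific route that contains the destination wins. The following Python sketch shows why a 0.0.0.0/0 UDR that points to an NVA captures internet-bound traffic without disturbing VNet-local traffic. The route table, the 10.1.0.0/16 spoke range, and the NVA address 10.100.0.4 are hypothetical. The sketch also ignores how Azure breaks ties between user-defined, BGP, and system routes that share the same prefix.

```python
import ipaddress
from typing import NamedTuple


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    next_hop: str


# Hypothetical effective routes for a spoke subnet: a UDR sends everything
# (0.0.0.0/0) to an NVA, while traffic within the spoke VNet stays local.
routes = [
    Route(ipaddress.ip_network("10.1.0.0/16"), "VnetLocal"),
    Route(ipaddress.ip_network("0.0.0.0/0"), "VirtualAppliance 10.100.0.4"),
]


def next_hop(destination: str) -> str:
    """Pick the route with the longest prefix that contains the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [r for r in routes if addr in r.prefix]
    if not matches:
        return "None (dropped)"
    return max(matches, key=lambda r: r.prefix.prefixlen).next_hop


print(next_hop("10.1.2.3"))     # VnetLocal
print(next_hop("52.239.0.10"))  # VirtualAppliance 10.100.0.4
```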

Network security groups

Network security groups (NSGs) are associated with subnets (best practice) or with individual network interface cards (NICs), although the latter is not recommended. UDRs, in contrast, can be associated only with subnets. Both UDRs and NSGs can be reused for multiple subnets.

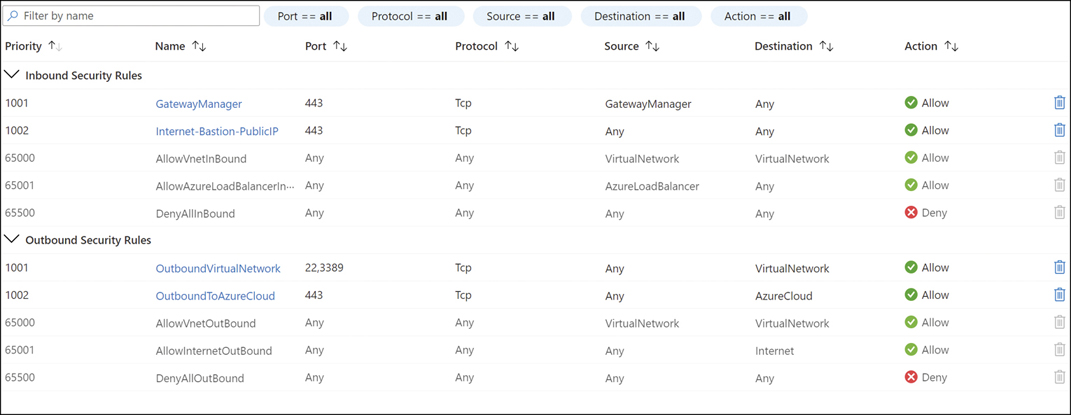

NSGs specify IP address–based access control through rules for inbound and/or outbound connectivity. Rules are evaluated in priority order, lowest number first, and once a rule matches, no additional rules are processed. The last rule in each direction is a default rule that denies all traffic on any port and any protocol, which enforces an allow-listing approach to granting communication. Azure also places default rules just ahead of this final rule, including rules that allow traffic within the VNet and, for outbound traffic, to the internet.


Note

Not using an NSG will allow all traffic.

NSG rules are named and specify an evaluation priority, a protocol, source and destination port ranges, a source, a destination, and an action (allow or deny). These values can be set to Any. Sources and destinations can be IP addresses or ranges, service tags, or application security groups (ASGs). Figure 15-1 shows an example.

FIGURE 15-1 NSG definition.

It is recommended that you always keep one high-priority NSG rule slot unused (for example, priority 100, the highest priority available for custom rules). Then, if an attack occurs, you can immediately block incoming traffic with a Deny/All IPs/All Ports/All Protocols rule.
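The evaluation order described above (priority order, first match wins, final deny-all) can be sketched in a few lines of Python. This is a simplified model for illustration, not Azure's implementation. The rule names, the 10.100.0.0/24 hub range, and the Rule class are hypothetical, and the sketch omits Azure's other default rules. The last step fills the reserved priority-100 slot during an attack.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    priority: int  # lower number = higher priority (evaluated first)
    source: str    # source CIDR, or "*" for any
    port: str      # destination port, or "*" for any
    protocol: str  # "Tcp", "Udp", or "*"
    action: str    # "Allow" or "Deny"

    def matches(self, src: str, port: int, protocol: str) -> bool:
        src_ok = self.source == "*" or (
            ipaddress.ip_address(src) in ipaddress.ip_network(self.source)
        )
        port_ok = self.port == "*" or int(self.port) == port
        proto_ok = self.protocol == "*" or self.protocol == protocol
        return src_ok and port_ok and proto_ok


def evaluate(rules: list, src: str, port: int, protocol: str) -> str:
    """Process rules in priority order; the first match decides."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(src, port, protocol):
            return f"{rule.action} ({rule.name})"
    return "Deny (no matching rule)"


# Hypothetical inbound rules. The 65500 rule mirrors Azure's final default
# deny rule; Azure's other default rules are omitted for brevity.
inbound = [
    Rule("AllowHttpsFromHub", 200, "10.100.0.0/24", "443", "Tcp", "Allow"),
    Rule("DenyAllInBound", 65500, "*", "*", "*", "Deny"),
]

print(evaluate(inbound, "10.100.0.5", 443, "Tcp"))  # Allow (AllowHttpsFromHub)
print(evaluate(inbound, "10.100.0.5", 22, "Tcp"))   # Deny (DenyAllInBound)

# Under attack: fill the reserved priority-100 slot with a deny-all rule.
inbound.append(Rule("EmergencyDenyAll", 100, "*", "*", "*", "Deny"))
print(evaluate(inbound, "10.100.0.5", 443, "Tcp"))  # Deny (EmergencyDenyAll)
```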

Application security groups

Application security groups (ASGs) simplify the assignment of multiple network interface cards (NICs) to a network security group (NSG). We discourage defining network security at the NIC level because it tends to be hard to maintain at scale. It is better to define NSGs for subnets. However, in certain lift-and-shift scenarios, assigning NSGs to NICs can replicate existing micro-segmentation from the on-premises solution without creating lots of subnets. For this use case, add every NIC in a micro-segment to an ASG and reference that ASG in the NSG rules to achieve significantly simpler NSG definitions.

Landing zones, hubs, and spokes

Well-governed Azure tenants implement enterprise-scale landing zones and use a hub-and-spoke networking model—either a traditional model or a newer virtual WAN model. When designing the landing zones and spokes, enterprise architects typically place security and other shared services in the hub and/or in specialized spokes.

Hub and spoke and segmentation

A spoke is often created for each business solution area, with subnets providing further segmentation when desired. It is important to avoid getting too fine-grained, because “nano-segmentation,” as this practice is often called, is both fragile and difficult to manage. The hub can use any number of technologies to limit which VNets are allowed to communicate with each other.

Environment segregation, VNets, and allowed communications

A core tenet is to only allow landing zones of the same environment to communicate between their VNets. This leads to the rules shown in Table 15-2.

TABLE 15-2 Matrix defining allowed communications between resources in different environments

| From -> To | SANDBOX | DEV | DEVINT | QA | PROD | ONPREM |
|---|---|---|---|---|---|---|
| SANDBOX | Yes | Forbidden | Forbidden | Forbidden | Forbidden | Forbidden |
| DEV | Forbidden | Yes | Yes | Forbidden | Forbidden | Exception |
| DEVINT | Forbidden | Forbidden | Yes | Forbidden | Forbidden | Exception |
| QA | Forbidden | Forbidden | Forbidden | Yes | Forbidden | Exception |
| PROD | Forbidden | Forbidden | Forbidden | Forbidden | Yes | Approval |
| ONPREM | Forbidden | Approval | Approval | Approval | Approval | n/a |
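Because Table 15-2 is essentially data, it can be encoded directly. Automation, such as a pipeline that reviews peering requests, can then check proposed connections against it. The following Python sketch shows one hypothetical way to do that. The names are illustrative and are not part of any Azure API.

```python
ENVIRONMENTS = ("SANDBOX", "DEV", "DEVINT", "QA", "PROD", "ONPREM")

# Cross-environment entries from Table 15-2. Same-environment traffic is
# allowed ("Yes"), and every pair not listed here is "Forbidden".
CROSS_ENV = {
    ("DEV", "DEVINT"): "Yes",
    ("DEV", "ONPREM"): "Exception",
    ("DEVINT", "ONPREM"): "Exception",
    ("QA", "ONPREM"): "Exception",
    ("PROD", "ONPREM"): "Approval",
    ("ONPREM", "DEV"): "Approval",
    ("ONPREM", "DEVINT"): "Approval",
    ("ONPREM", "QA"): "Approval",
    ("ONPREM", "PROD"): "Approval",
}


def connection_policy(source: str, destination: str) -> str:
    """Look up whether `source` may open connections to `destination`."""
    for env in (source, destination):
        if env not in ENVIRONMENTS:
            raise ValueError(f"unknown environment: {env}")
    if source == destination:
        return "n/a" if source == "ONPREM" else "Yes"
    return CROSS_ENV.get((source, destination), "Forbidden")


# Example: a hypothetical peering request raised by a deployment pipeline.
request = ("DEV", "QA")
print(request, "->", connection_policy(*request))  # ('DEV', 'QA') -> Forbidden
```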

To summarize:

SANDBOX SANDBOX environments are used for experiments. These environments should be completely isolated, with no connection to other environments, including the on-premises environment. Although PaaS services may use public endpoints, VMs should not be exposed directly to the internet through a public IP address. Use VPNs or the Azure Bastion Service to reach such VMs.

DEV DEV environments are used in continuous integration (CI) to test code before it is merged into the main branch. These environments can communicate with other DEV environments. A DEV environment might also need to communicate with a DEVINT environment for development-time testing, because DEVINT environments contain reasonably stable versions of code from other teams.

DEVINT DEVINT environments are used to verify changes after a pull request (PR) is merged into the main branch. They are used to detect dependency problems between components developed by different teams.

NONPROD environments The remaining NONPROD environments (including DEVINT) can communicate only with solutions in the same environment type—for example, DEVINT to DEVINT, QA to QA, PERF to PERF, and so on.

PROD environments These must communicate only with other PROD environments. On a related note:

Traditional CI/CD deployment strategies commonly use one VNet per region for each landing zone.

In blue/green CI/CD deployment strategies, green environments must not communicate with blue environments, or vice versa. Although this is not a security issue, such communication patterns violate the concept of blue/green.

Ingress and egress controls

Every organization will want to control connections between VNets in the same region or across regions. This is also known as east/west traffic. East/west traffic belonging to the same landing zone can be allowed without extra restrictions.

Inbound and outbound internet connections are always routed through the network hub(s) via firewalls and other security devices such as Azure Application Gateways, Azure API Management Gateways, Azure Front Door, and/or Azure Firewall. Inbound restrictions are enforced by preventing public IP addresses in the spokes, while outbound restrictions are enforced through the application of UDRs on every subnet not containing security appliances.

On-premises traffic (to/from) is routed through firewalls, NAT devices, and VPNs over the public internet or through an ExpressRoute (dedicated) connection.

VMs and PaaS components in the spokes must not have public IP addresses unless required due to technical limitations or special circumstances. Any such requirement necessitates a thorough threat model and an approved exception. An Azure Function App handling an Event Grid trigger is an example of a technical limitation requiring such an exception.

NVAs and gateways

As discussed, NVAs are implemented in the hub network to guard trust boundary transitions, whether ingress or egress. Depending on the direction, this is also known as north/south or east/west traffic, as shown in Table 15-3.

TABLE 15-3 Traffic directions

| Source | Destination | Direction |
|---|---|---|
| Internet | Azure services | North/south |
| Azure services in one solution | Azure services in another solution | East/west |
| Azure services | On-premises services | North/south |


Note

This book discusses Azure native services. However, many third-party NVAs are available for use within Azure. Their usage patterns parallel those of the native services.

Azure Firewall

Firewalls analyze transiting traffic at OSI layers 3 through 7 (see https://en.wikipedia.org/wiki/OSI_model), providing audit, traffic filtering, and threat intelligence capabilities. For example, a firewall can identify the following:

Source and destination IP addresses, either directly (through an IP range) or indirectly (through IP tags and reverse FQDN lookups)

Destination URL patterns

The destination URL’s web category, such as liability (bad sites), high-bandwidth, business use, and so on

The protocol used, and optionally, the enforcement of security protocols such as TLS

Port numbers

Intrusion patterns

And much more

Azure Firewall Premium SKU

The Azure Firewall Premium SKU goes beyond the basic firewall capabilities implemented with the Standard SKU by adding the following features:

TLS Inspection This capability supports the others rather than standing on its own: it enables the firewall to decrypt requests so that they can be inspected. The other features can then use more information than just the servers and ports involved.

Intrusion Detection and Prevention This enables you to inspect packets in detail to identify, report, and even block potential malicious activity.

URL Filtering This enables you to define extensive rules to block requests. For example, you might want to allow requests to https://www.contoso.com but block requests to https://www.contoso.com/mymaliciousapp.

Web Categories These enable you to block specific targets based on their category, like gambling, social media, and so on.

The Azure Firewall Standard SKU also includes advanced capabilities such as threat intelligence.
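URL filtering, as described above, comes down to matching the destination host and path against rules. The following Python sketch is a generic illustration and does not model how Azure Firewall evaluates rules internally. The rule list is hypothetical, the most specific (longest) matching path prefix wins, and anything unmatched is denied.

```python
from urllib.parse import urlsplit

# Hypothetical rules: (host, path prefix, action).
RULES = [
    ("www.contoso.com", "/", "Allow"),
    ("www.contoso.com", "/mymaliciousapp", "Deny"),
]


def path_matches(path: str, prefix: str) -> bool:
    """Match whole path segments so '/app' does not match '/application'."""
    if prefix == "/":
        return True
    return path == prefix or path.startswith(prefix + "/")


def decide(url: str, default: str = "Deny") -> str:
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    path = parts.path or "/"
    best_prefix, best_action = None, default
    for rule_host, prefix, action in RULES:
        if host == rule_host and path_matches(path, prefix):
            # Prefer the most specific (longest) matching prefix.
            if best_prefix is None or len(prefix) > len(best_prefix):
                best_prefix, best_action = prefix, action
    return best_action


print(decide("https://www.contoso.com/products"))            # Allow
print(decide("https://www.contoso.com/mymaliciousapp/run"))  # Deny
print(decide("https://www.fabrikam.com/"))                   # Deny (no rule)
```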

Azure web application firewalls

Web application firewalls (WAFs) inspect HTTP/HTTPS traffic to audit or filter common malicious payloads. The Azure WAF can be applied on Azure CDN, Azure Front Door, or an Azure Application Gateway.

OWASP ModSecurity CRS is the source for many of the attack patterns covered by WAFs. You can find further details here: https://owasp.org/www-project-modsecurity-core-rule-set/. Many of these categories should be defended through secure coding practices; the WAF simply adds one more defensive layer and centralizes logging of attempted attacks.

It is important to understand that WAFs are based on heuristics determined over the years through observation of attacks. This provides a lot of value, because it allows them to recognize those attacks and to prevent them from succeeding again. Unfortunately, however, there is no guarantee that the next attacker will use one of those techniques. For example, penetration testers have sometimes learned that a WAF was present only after completing their exercise. In those cases, the WAF was so ineffective that the testers didn’t even notice it! Moreover, WAFs are well known to knowledgeable attackers. If your adversary knows their business, you can assume that it may take them anywhere from a few minutes to a couple of hours to circumvent your WAF.

Another shortcoming of WAFs is that they know nothing about your solution. Therefore, they cannot apply controls that are specific to it. For example, if you have a text field that accepts a phone number, the WAF may not be able to detect attacks in which the phone number has been tampered with. But your application can detect them, and it should.
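The kind of application-specific check a WAF cannot perform is usually an allow-list validation of each field. The following Python sketch assumes, hypothetically, that the phone field accepts only E.164 numbers: a "+" followed by a non-zero digit and at most 15 digits in total. Your own field rules will differ.

```python
import re

# Hypothetical field rule: accept only E.164 numbers, that is, a "+"
# followed by a non-zero digit and at most 15 digits in total.
E164_PATTERN = re.compile(r"\+[1-9]\d{1,14}")


def validate_phone(value: str) -> str:
    """Return the normalized number, or raise ValueError if it is malformed."""
    candidate = value.strip()
    # fullmatch() rejects anything outside the allowed shape, including
    # quotes, script tags, or SQL fragments appended to a valid number.
    if not E164_PATTERN.fullmatch(candidate):
        raise ValueError("phone number must be in E.164 format, e.g. +14255550100")
    return candidate


print(validate_phone(" +14255550100 "))  # +14255550100
for attempt in ["+14255550100' OR '1'='1", "+1<script>", "0014255550100"]:
    try:
        validate_phone(attempt)
    except ValueError:
        print("rejected:", attempt)
```

Validating against an allow-list of what is permitted, rather than a deny-list of known attack strings, is what lets the application catch tampering that a generic WAF rule set would miss.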

To summarize, WAFs play a very important role when used correctly, because they allow you to identify common attacks and act on them, but do not expect them to work magic.

API Management Gateway

As its name clearly indicates, the primary purpose of an API Management (APIM) Gateway is API management. However, it also provides security-related capabilities in addition to its core capabilities. For example, an APIM gateway:

Accepts API calls and routes them to your back ends

Verifies API keys, JWT tokens, certificates, and other credentials

Enforces usage quotas and rate limits

Transforms your API on the fly without code modifications

Caches back-end responses

Logs call metadata for analytics purposes

Because the APIM gateway is not primarily a security NVA and therefore lacks WAF capability, it must be combined with a WAF-capable front end, such as Azure Front Door or Application Gateway.

Azure Application Proxy

Azure Application Proxy is intended for publishing on-premises and IaaS-based solutions on the internet. Application Proxy exposes the (private) app to a public endpoint and protects it by requiring valid authentication with Azure AD.

PaaS and private networking

PaaS was intended to rely exclusively on the identity perimeter. However, this was roundly rejected by horrified security teams. (This author experienced only one exception to this as a cybersecurity consultant.) Although some aspects of this widespread rejection can be traced to outdated network-perimeter thinking, there are many reasons to keep PaaS services private:

Layered defenses are better than single-layer defenses (see the section “Defense in depth” in Chapter 2, “Secure design”).

The attack surface can be reduced for internal services.

All internet ingress can be routed through a single place for logging, inspection, and intrusion detection.

All internet and on-premises egress can be routed through a single place for a data-loss prevention (DLP) system.

The solution is to inject PaaS endpoints and, if applicable, the implied outbound NIC into a VNet. In addition, any such technology must disable public endpoints. Azure uses myriad approaches to solve this problem.


Important

PaaS public endpoint traffic originating in Azure is routed within Azure’s internal network. Therefore, this traffic does not go over the open internet and is better protected.

Private shared PaaS

PaaS services may have any combination of the following, which need to be made private:

Endpoints for the data plane

Endpoints for the control plane for complex services, such as Databricks, Synapse, and AKS

Egress calls requiring routing via a UDR to an egress control network virtual appliance

The consumption of data sources directly through private networking

Service endpoints and private endpoints

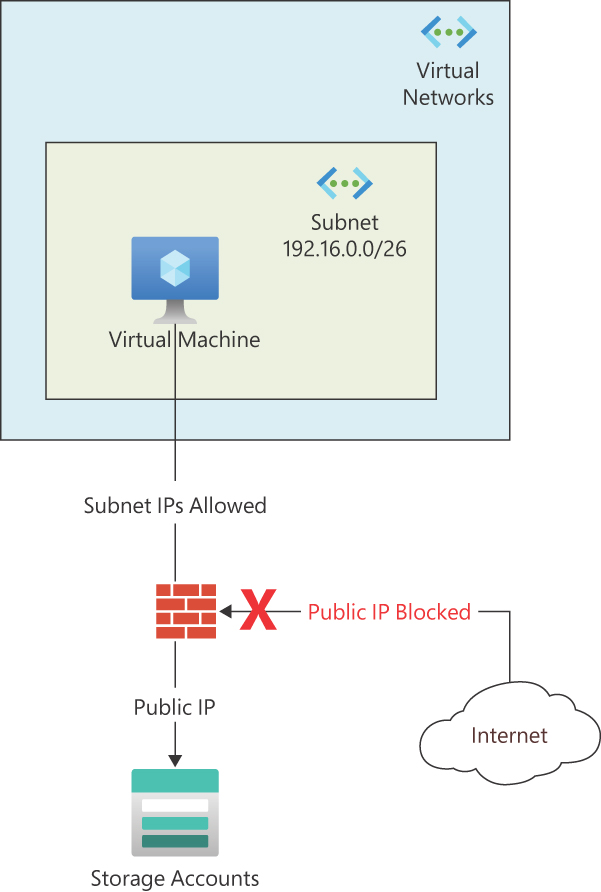

Service endpoints were an early technology to solve the problem of protecting shared PaaS in a private VNet. Service endpoints can be thought of as automanaged firewalls. The public DNS entry and IP address still exist, but connection requests for them are rejected by the firewall, except from the subnet containing the service endpoint. (See Figure 15-2.) Many services support service endpoints.

FIGURE 15-2 Visualization of a service endpoint.

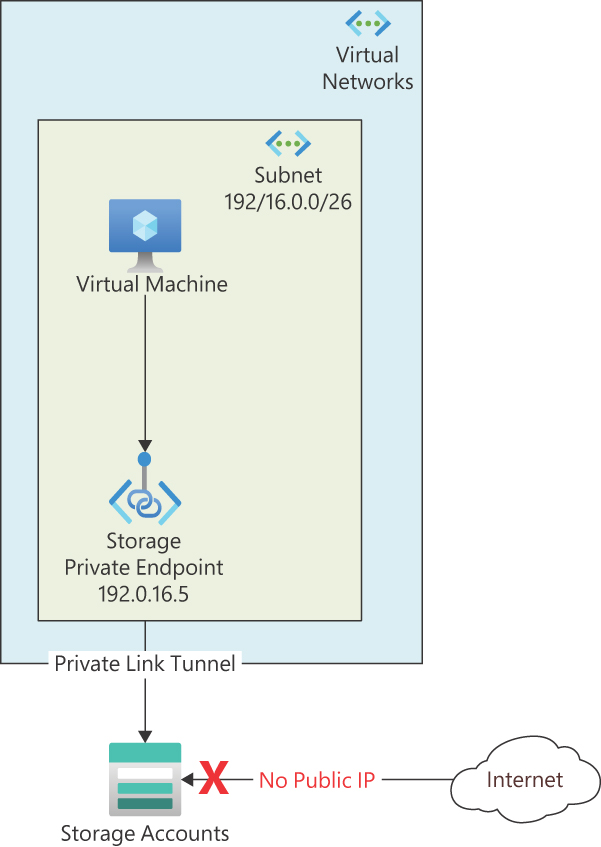

Private endpoints have replaced service endpoints. They project a virtual NIC into the VNet of your choosing. Private endpoints hide the public IP address of the service instance. Each PaaS service can have multiple private endpoints in different VNets and subnets. (See Figure 15-3.)

FIGURE 15-3 Visualization of a private endpoint.

Recommendation

In new environments, use private endpoints instead of service endpoints. Additionally, migrate existing PaaS solutions with service endpoints to private endpoints.

Private endpoints and the private DNS problem

The Domain Name System (DNS) translates a host name, called a fully qualified domain name (FQDN), into an IPv4 or IPv6 address. In a traditional Active Directory environment, this is often handled by the server hosting the domain controller (DC) role. For public endpoints in Azure, the resolution is forwarded by the DC to Azure’s DNS service.


Note

When one of your services fails to communicate with another service, the likely culprit is a DNS lookup problem.

DNS resolution for private endpoints within the same VNet is handled by Azure DNS. In any nontrivial system, the FQDN for private endpoints also needs to be resolved by DNS in peered VNets. Fortunately, Microsoft’s documentation has a solution for this problem, located here: https://azsec.tech/rf1.
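When a private endpoint seems unreachable, the first check is usually whether the service's FQDN resolves to the private endpoint's IP address from where the caller runs. The following Python sketch performs that check with the standard library. The storage account name and the 10.0.0.68 address are hypothetical. The fake resolver exists only so that the example runs without network access.

```python
import ipaddress
import socket
from typing import Callable, List

Resolver = Callable[..., list]


def resolved_addresses(fqdn: str, resolver: Resolver = socket.getaddrinfo) -> List[str]:
    """Return the distinct IP addresses the resolver returns for `fqdn`."""
    infos = resolver(fqdn, 443, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


def resolves_privately(fqdn: str, resolver: Resolver = socket.getaddrinfo) -> bool:
    """True if every address is in a private range (per Python's ipaddress)."""
    addresses = resolved_addresses(fqdn, resolver)
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


def fake_private_zone(host, port, proto=0):
    """Stand-in resolver mimicking a private DNS zone answer (hypothetical IP)."""
    return [(socket.AF_INET, socket.SOCK_STREAM, proto, "", ("10.0.0.68", port))]


# Hypothetical storage account name; the fake resolver avoids a real lookup.
print(resolves_privately("mystorageacct.blob.core.windows.net", fake_private_zone))  # True
```

On a VM inside the VNet, you would call resolves_privately with your own FQDN and the default resolver. A result of False typically means that the lookup returned the service's public IP address, so the private DNS configuration is the first place to look.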

Shared App Service plans

Integration of shared App Service plans for API, Web, and Function Apps in your VNet requires the following:

Private endpoint for ingress

VNet integration for egress

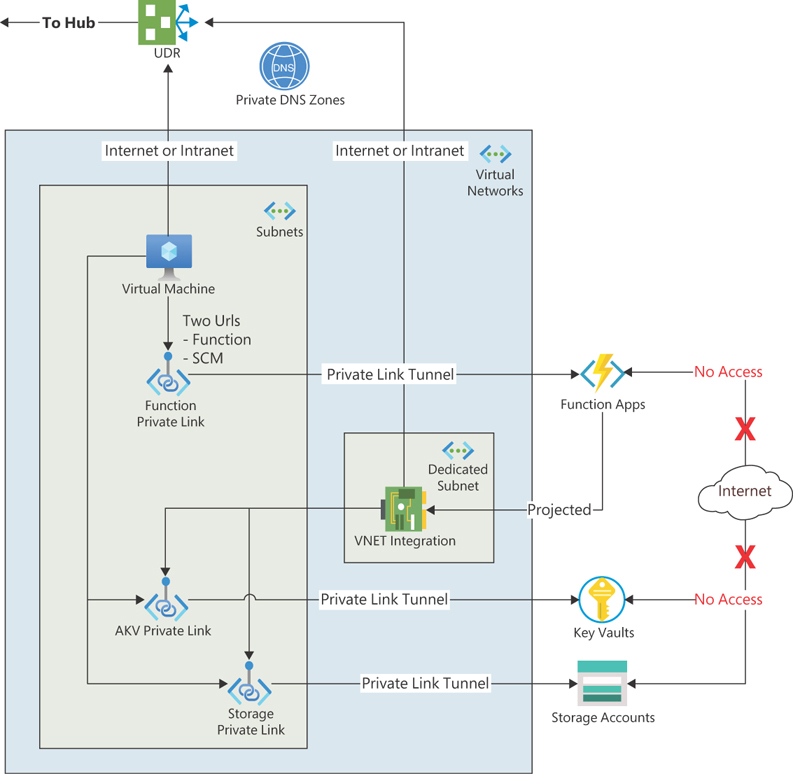

In addition, to route all outbound traffic through the VNet and make it subject to UDRs, you must enable the WEBSITE_VNET_ROUTE_ALL setting. This may seem confusing, so let’s tease out the concept. The sample Function App in Figure 15-4 uses a Key Vault for secret storage and needs a storage account for itself. You can also add databases and other services for the Function App to consume.

FIGURE 15-4 VM accessing Function App privately and Function App protected within the same VNet.

Dedicated PaaS instances

Dedicated PaaS instances provide another path to private PaaS services. Examples of such dedicated instances include the following:

Azure App Service Environment (ASE) hosting an isolated SKU App Service plan This provides some of the same easy deployment and management as a shared App Service plan while running on a dedicated scale set.

Integration Service Environment (ISE) This provides the same level of isolation for Logic Apps. ISEs are being retired, so do not use them in new solutions.

Databricks clusters with No Public IP (NPIP) These are isolated, but the control plane is public and must instead be protected with strong Azure Active Directory authentication.

Managed VNets

Services that provide managed VNets and private link hubs, such as Synapse, create VNets in your subscription. This exposes any ingress through private link hubs (Synapse provides three public endpoints) and managed private endpoints to connect to other services.

Agent-based network participation

SaaS-like services such as Azure DevOps and Purview provide IaaS-based agents. You host these agents yourself, in a VM or VM Scale Set (VMSS), either on-premises or in Azure. They do not accept inbound connections and receive all their instructions through outgoing requests, which reduces the risk. Unfortunately, you still need to manage the VMs, which increases the risk. Therefore, if available, use PaaS-based approaches, such as Managed VNets.

Azure DevOps (ADO) self-hosted agents (SHAs)

ADO SHAs are often hosted in a shared subscription within a network hub (in a separate subnet). Using the SHA scale set capability, you can scale your agent pools. Furthermore, you can configure such instances to be destroyed after completing a job. This destruction prevents an SHA from accidentally or maliciously leaving information behind or having an altered state that affects the next job.

Azure Kubernetes Service networking

Azure Kubernetes Service (AKS) networking is a complex topic beyond the scope of this book. However, this section provides a brief overview of the two networking options supported by AKS:

Kubenet Native to Kubernetes, Kubenet is created as part of the cluster deployment. It manages IP addresses and provides access to AKS elements through load balancers and network address translation (NAT), so it requires a smaller IP address space. Kubenet is available only on Linux clusters. You can find more information about Kubenet here: https://azsec.tech/6ga.

Azure Container Networking Interface (CNI) The CNI provides more control over the network layout. However, it requires more IP addresses, because every pod gets its own IP address from the subnet and full network connectivity. It supports both Linux and Windows clusters. You can find more information about it here: https://azsec.tech/hk6.
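The difference in IP consumption between the two options drives subnet sizing. The following Python sketch rests on stated assumptions. Under Azure CNI, every node and every pod takes one subnet address; under Kubenet, only nodes do. One extra surge node exists during upgrades. Each subnet loses five addresses to Azure. The cluster size of 50 nodes with 30 pods each is hypothetical. Check the current AKS IP-planning guidance before relying on these numbers.

```python
AZURE_RESERVED = 5  # addresses Azure reserves in every subnet


def cni_addresses_needed(nodes: int, max_pods_per_node: int, surge_nodes: int = 1) -> int:
    """Azure CNI (assumed): every node and every pod takes a subnet address.

    Assumes `surge_nodes` extra nodes (default 1) exist during upgrades.
    """
    total_nodes = nodes + surge_nodes
    return total_nodes + total_nodes * max_pods_per_node


def kubenet_addresses_needed(nodes: int, surge_nodes: int = 1) -> int:
    """Kubenet: only nodes use subnet addresses; pods use a separate pod CIDR."""
    return nodes + surge_nodes


def smallest_prefix(addresses_needed: int) -> int:
    """Prefix length of the smallest subnet that fits, including reservations."""
    block = addresses_needed + AZURE_RESERVED
    # (block - 1).bit_length() equals ceil(log2(block)) for block >= 1.
    return 32 - (block - 1).bit_length()


# Hypothetical cluster: 50 nodes with up to 30 pods each.
cni = cni_addresses_needed(nodes=50, max_pods_per_node=30)
kubenet = kubenet_addresses_needed(nodes=50)
print(f"Azure CNI: {cni} addresses -> /{smallest_prefix(cni)}")          # 1581 -> /21
print(f"Kubenet:   {kubenet} addresses -> /{smallest_prefix(kubenet)}")  # 51 -> /26
```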

Ingress controls

AKS can deploy an internal load balancer (ILB) or an external load balancer (ELB) for your cluster. An ILB does not expose a public IP address, allowing you to control ingress through a firewall. If you decide to use an ELB with a public IP address, you can use an Azure Application Gateway with WAF as an ingress controller to protect your cluster. Alternatively, you can use Nginx as the ingress controller. It is recommended to keep the ingress controller in a separate subnet within the VNet. For more information, see https://azsec.tech/9qe.

Egress controls with UDR

As with the shared App Service plans discussed previously, egress traffic from an AKS cluster can, by default, reach any IP address. You can prevent this by routing egress traffic through a firewall using UDRs. For more information, see https://azsec.tech/a6y.

Private endpoints for Kubernetes API server

The cluster itself is always in a VNet. However, the Kubernetes API server is a PaaS component that, by default, has a public IP address. As with other shared PaaS resources, using a private endpoint (a configuration AKS calls a private cluster) removes the public IP address.

Cluster network policies

AKS can use a network policy engine for a cluster. Network policies require CNI networking and must be specified when the cluster is created. (See https://azsec.tech/iml for more information.)

Clusters can use one of two network policy solutions:

AKS with Azure network policies created via Azure CLI To apply this solution, use the following code:

```shell
# $rg, $name, $subnet, $sp, and $pwd are placeholders for your own values.
# --dns-service-ip must be an address inside --service-cidr.
az aks create \
  --resource-group $rg \
  --name $name \
  --node-count 5 \
  --generate-ssh-keys \
  --service-cidr 10.5.0.0/24 \
  --dns-service-ip 10.5.0.10 \
  --docker-bridge-address 172.17.0.1/16 \
  --vnet-subnet-id $subnet \
  --service-principal $sp \
  --client-secret $pwd \
  --network-plugin azure \
  --network-policy azure
```

AKS with Calico network policies created via Azure CLI To apply this solution, use the following code:

```shell
# $winusername is also a placeholder; Calico here runs alongside Windows nodes.
az aks create \
  --resource-group $rg \
  --name $name \
  --node-count 5 \
  --generate-ssh-keys \
  --service-cidr 10.5.0.0/24 \
  --dns-service-ip 10.5.0.10 \
  --docker-bridge-address 172.17.0.1/16 \
  --vnet-subnet-id $subnet \
  --service-principal $sp \
  --client-secret $pwd \
  --windows-admin-username $winusername \
  --vm-set-type VirtualMachineScaleSets \
  --kubernetes-version 1.20.2 \
  --network-plugin azure \
  --network-policy calico
```

You can now define network policies. Network policies can be based on pod attributes, such as labels. They are similar to NSGs and can control traffic within a cluster as well as external communication. Here’s an example of a network-policy definition in YAML:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-policy
  namespace: development
spec:
  # Selects the pods this policy applies to.
  podSelector:
    matchLabels:
      app: webapp
      role: backend
  # An empty ingress list allows no inbound traffic to the selected pods.
  ingress: []
```

These policies can be deployed with the kubectl command:

```shell
kubectl apply -f backend-policy.yaml
```

The dangling DNS problem

One key benefit of cloud-based solutions is agility: resources can be spun up and torn down quickly. As a result, applications that might have taken months to deploy on-premises can be deployed in minutes in Azure. This ability to spin up and tear down resources has a dark side, however. The most notable issue is called the dangling DNS problem, which enables attackers to hijack your subdomains. This is often referred to as a subdomain takeover, and yes, it’s as bad as the name suggests.

Here’s what happens: when a DNS canonical name (CNAME) record (an alias) points to an Azure resource that is no longer provisioned, the associated domain is left “dangling.” This subdomain then becomes subject to takeover. This issue is not new; people have known about it for years. But the issue is exacerbated in the cloud, where resources are created and deleted frequently.

An example

Here’s an example of the effects of a dangling DNS. Suppose that in Azure, you register a “nice” DNS name, app.contoso.com, to point to app-contoso-prod-002.azurewebsites.net. Then, at some point, you remove app-contoso-prod-002.azurewebsites.net. This leaves app.contoso.com dangling—that is, pointing to something that does not exist. Now suppose a bad actor, using tools that are well understood, discovers your dangling DNS (because DNS names and hierarchies are public information) and then creates an Azure resource that has the old not-so-easy-to-remember DNS name, app-contoso-prod-002.azurewebsites.net. Now, all traffic meant for app.contoso.com goes to app-contoso-prod-002.azurewebsites.net, which is controlled by the bad actor. The potential impact of this vulnerability ranges from cookie harvesting to phishing and beyond.

FIGURE 15-5 Looking at DNS CNAME records using PowerShell.

Fixing dangling DNS

At the time of this writing, a new capability named DNS reservations has been added to Azure to help mitigate dangling DNS issues. You can read more about DNS reservations here: https://azsec.tech/tfw.

Another way to mitigate dangling DNS is to use Microsoft Defender for DNS, which will detect and inform you of this issue.

There are yet more ways to mitigate dangling DNS, but they are more complex and require DNS knowledge. These include using DNS alias records and removing CNAME records when they are no longer used.
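Removing unused CNAME records starts with finding them. The following Python sketch compares CNAME records against the host names of resources that still exist and flags aliases whose Azure target is gone. The inputs are hypothetical; in practice, they would come from exporting your DNS zone and your resource inventory. The suffix list is only a partial sample of Azure-hosted domains. The example data mirrors the app.contoso.com scenario described earlier.

```python
from typing import Dict, List, Set

# Partial, illustrative list of Azure-hosted domain suffixes.
AZURE_SUFFIXES = (
    ".azurewebsites.net",
    ".cloudapp.net",
    ".blob.core.windows.net",
    ".azureedge.net",
    ".trafficmanager.net",
)


def find_dangling(cnames: Dict[str, str], provisioned: Set[str]) -> List[str]:
    """Return aliases whose Azure target is no longer provisioned."""
    dangling = []
    for alias, target in sorted(cnames.items()):
        target = target.rstrip(".").lower()  # normalize the trailing root dot
        if target.endswith(AZURE_SUFFIXES) and target not in provisioned:
            dangling.append(f"{alias} -> {target}")
    return dangling


# Hypothetical zone export and resource inventory.
cnames = {
    "app.contoso.com": "app-contoso-prod-002.azurewebsites.net.",
    "www.contoso.com": "app-contoso-prod-003.azurewebsites.net.",
}
provisioned = {"app-contoso-prod-003.azurewebsites.net"}

for entry in find_dangling(cnames, provisioned):
    print("DANGLING:", entry)
# DANGLING: app.contoso.com -> app-contoso-prod-002.azurewebsites.net
```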

Summary

This chapter introduced several important networking concepts. It then fleshed out how to reduce the attack surface of PaaS services by hiding their public endpoints in favor of private networking. The available approaches for private networking vary by PaaS type. They include service endpoints, private endpoints, VNet integration, dedicated PaaS instances, Managed VNets, and agent-based network participation.

This chapter also covered Kubernetes networking. Although that discussion does not cover all the intricacies of Kubernetes networking, it should give you enough understanding to get started with AKS networking.

Finally, this chapter discussed the dangling DNS problem and how to mitigate it, including with Azure capabilities such as DNS reservations.