8: DECOMPOSING THE CUBE FOR SECURITY ENFORCEMENT

INTRODUCTION

The McCumber Cube methodology is a structured process that examines security in the context of information states. This construct is central to the approach. Because information is the asset, security requirements defined simply as responses to threat-vulnerability pairs are not sufficient for assessing and implementing information security. Vulnerabilities are technical, security-relevant issues or exposures (see Chapter 4) that may or may not indicate problems with the technology system or component. Because vulnerabilities are by definition technical in nature, they change as the technology changes. Some will be identified as programming errors or unnecessary features and repaired with a patch, update, or subsystem modification. The McCumber Cube approach allows the analyst to define and evaluate safeguards at a level of abstraction just above the technology.

To be clear, the McCumber Cube methodology does not obviate the need for tracking and managing technical vulnerabilities. In fact, mitigating risk in a specific operational environment will always require the analyst or practitioner to consider the entire library of technical vulnerabilities as a critical aspect of implementing and managing the security program. In recognition of this, I have included a compendium of information on the most widely used library of vulnerabilities, MITRE Corporation’s Common Vulnerabilities and Exposures (CVE) list. However, mapping security requirements based solely on these technical vulnerabilities and exposures is not an effective way to assess and implement security. Thus we have the McCumber Cube methodology for analyzing security functionality based on the information assets themselves.

Another important tool in the process is a comprehensive library of safeguards, such as patches for operating systems, security appliances, authentication products, auditing techniques, backup and recovery options, and all the others. No such library currently exists. However, you can develop your own based on your current systems environment and planned implementation. Including these safeguards as options when assessing each block of the McCumber Cube, as described below, is a valuable exercise. Depending on the depth of the security assessment, it is usually effective to list the safeguards (existing or planned) in each category.
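As one way to start such a library, the sketch below records each safeguard with its category and the states and attributes it supports, then lists the candidates for a single block. It is a minimal illustration only: the `Safeguard` fields, the sample entries, and the `candidates` helper are hypothetical and not part of any standard catalog.

```python
from dataclasses import dataclass

# Hypothetical record type; the field names are illustrative, not a standard schema.
@dataclass(frozen=True)
class Safeguard:
    name: str
    category: str          # "technology", "procedures", or "human factors"
    states: frozenset      # information states the safeguard applies to
    attributes: frozenset  # security attributes it helps enforce

LIBRARY = [
    Safeguard("Protected cable conduit", "technology",
              frozenset({"transmission"}), frozenset({"confidentiality"})),
    Safeguard("Nightly backup procedure", "procedures",
              frozenset({"storage"}), frozenset({"availability"})),
    Safeguard("Audit log review", "human factors",
              frozenset({"storage", "processing"}),
              frozenset({"confidentiality", "integrity"})),
]

def candidates(state, attribute, category, library=LIBRARY):
    """Return the names of library safeguards that fit one block of the cube."""
    return [s.name for s in library
            if s.category == category
            and state in s.states
            and attribute in s.attributes]

print(candidates("storage", "availability", "procedures"))  # ['Nightly backup procedure']
```

An empty result for a block is itself useful: it marks a place where your library has no candidate safeguard yet.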

One of the major advantages of the methodology is the user’s ability to evaluate the entire spectrum of security enforcement mechanisms. It also recognizes the interactive nature of all security safeguards and controls. To be able to effectively assess and implement security in complex IT systems, it is critical to consider all possible safeguards including technology, procedural, and human factors. The penetration-and-patch security approach and vulnerability-centric models of security assessment focus almost solely on technical controls and do not provide the appropriate level of consideration for nontechnical controls. Only the McCumber Cube effectively integrates all three categories of safeguards.

This methodology also works at any level of abstraction to provide comprehensive analysis and implementation of security in information systems. However, most security practitioners, policymakers, and analysts are concerned with the proper implementation and enforcement of security at the organizational level. With that in mind, this chapter uses that level as the exemplar to outline each step of the process. It defines a generic information system environment and walks the user through each step required to perform a comprehensive and effective security assessment using the structured methodology.

No text could possibly outline every conceivable safeguard, vulnerability, or instantiation of an information state. This chapter simply walks you through a series of examples to show how the structured methodology is employed. To assess a full-scale environment, you need to follow the steps outlined here for each of the information states you identified in Chapter 7.

This is not to say that you cannot leverage analyses performed for other information states and even completely copy the safeguard values you created for one state to an instantiation of an identical state. It does mean, however, that you have to account for the attributes of confidentiality, integrity, and availability for each state you identified by assessing safeguards in all three categories. This ensures that you have completely captured all elements of the information systems security program for the environment within the boundaries you have identified.
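The bookkeeping described above can be checked mechanically. This sketch, assuming each identified state instance is recorded as a dictionary keyed by (attribute, safeguard category), reports which of the nine blocks for that state have not yet been assessed and copies an assessment to an identical state instance. The function names and sample entries are hypothetical.

```python
from itertools import product

ATTRIBUTES = ("confidentiality", "integrity", "availability")
CATEGORIES = ("technology", "procedures", "human factors")

def missing_blocks(assessment):
    """List the (attribute, category) blocks not yet assessed for one state instance.

    An empty list of safeguards is a deliberate finding; a missing key is a gap.
    """
    return [block for block in product(ATTRIBUTES, CATEGORIES)
            if block not in assessment]

def copy_assessment(source):
    """Reuse an assessment for an identical state instance (each block's list is copied)."""
    return {block: list(safeguards) for block, safeguards in source.items()}

# Hypothetical partial assessment of the workstation-to-hub transmission state.
workstation_link = {
    ("confidentiality", "technology"): ["Cabling in protected conduit"],
    ("confidentiality", "procedures"): ["Only authorized staff may modify cabling"],
    ("confidentiality", "human factors"): [],
}
print(len(missing_blocks(workstation_link)))  # 6 blocks still to assess
second_link = copy_assessment(workstation_link)
```

Recording an empty list, rather than omitting the block, distinguishes a block that was assessed and found to need no safeguard from one that was never assessed.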

A WORD ABOUT SECURITY POLICY

The McCumber Cube methodology can dramatically aid the security policy development process. Almost every security researcher, practitioner, or implementer has claimed that security policy must drive the security architecture. On its face, this statement is true. However, the problem arises when one is confronted with the requirement to develop such policies. Many people begin by copying the efforts of someone else or perhaps they employ a checklist developed by a security expert. This process usually results in poorly implemented policies.

The definition of what comprises a security policy is not fixed. Sometimes policy requirements are rather vague and are captured with statements such as: All sensitive company information shall be kept confidential and will only be accessed by authorized employees. It is obvious that the actual tools and techniques that will have to be implemented to enforce this well-meaning directive remain inadequately defined.

Other security policies may be captured by a statement such as: All personnel system users must create and employ a password consisting of 12 alphanumeric characters with at least 2 numbers. This rather specific policy may be a good one, but it raises the question: Why? It also leaves unstated the degree to which the password protects the sensitive personnel information. If such a password causes users to write it on a sticky note and paste it under the keyboard as a memory aid, it may prove less effective than a shorter, more easily remembered password.
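The specific policy above is precise enough to be checked by software, which is partly why it can look sufficient. The sketch below assumes that "12 alphanumeric characters" means exactly 12 ASCII letters or digits; the function name is hypothetical. Note what it cannot check: whether the user has written the password on a sticky note.

```python
def meets_policy(password: str) -> bool:
    """Check the example password policy.

    Assumption: "12 alphanumeric characters" is read as exactly 12 ASCII
    letters or digits, of which at least 2 must be digits.
    """
    if len(password) != 12:
        return False
    if not (password.isascii() and password.isalnum()):
        return False
    return sum(ch.isdigit() for ch in password) >= 2
```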

By using the McCumber Cube methodology to help develop your security policies, you will have the tools necessary to determine how much protection is necessary given the value of the information resources. You will also be able to make a more definitive assessment of where policies are required and where enforcement technologies or human factor safeguards can either replace or support the policy requirement. The methodology also provides a specific lexicon of security terminology so security administrators, executives, users, and IT personnel can all discuss security-relevant issues using the appropriate risk reduction terms. This feature alone makes using the methodology far superior to hit-and-miss policy development activities.

For example, someone charged with assessing and implementing security in a corporate information system could make the case for investment in additional security technologies by specifically citing the potential loss exposure of information destruction, delay, distortion, and disclosure. Using the methodology allows the security administrator to pinpoint the risks mitigated and the potential loss from not implementing the appropriate controls. Using a structured methodology allows security technology developers and implementers alike to provide quantifiable justification for investments in products, procedures, and people.
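To illustrate what quantifiable justification might look like, the following sketch uses a simple expected-value model (probability times impact, summed over the four loss types named above). The model choice is an assumption for illustration, and every probability, impact, and cost figure is a hypothetical placeholder rather than data.

```python
# Assumption: a simple expected-value model; all figures are hypothetical placeholders.
LOSS_TYPES = ("destruction", "delay", "distortion", "disclosure")

def expected_loss(annual_probability, impact):
    """Sum of annual probability x impact over the four loss types."""
    return sum(annual_probability[t] * impact[t] for t in LOSS_TYPES)

impact = {"destruction": 200_000, "delay": 50_000,
          "distortion": 120_000, "disclosure": 300_000}
before = {"destruction": 0.02, "delay": 0.10, "distortion": 0.05, "disclosure": 0.04}
after = {"destruction": 0.01, "delay": 0.05, "distortion": 0.02, "disclosure": 0.01}

reduction = expected_loss(before, impact) - expected_loss(after, impact)
safeguard_cost = 15_000  # hypothetical annual cost of the proposed safeguards
print(f"expected loss reduction: {reduction:,.0f}; justified: {reduction > safeguard_cost}")
```

The value of such a model lies less in the precision of its inputs than in forcing each loss type to be estimated explicitly.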

DEFINITIONS

Applying the appropriate lexicon in this process is critical. The How to Use This Text section presents a short dictionary of common terms. You will note that words and terms have been carefully defined and used quite specifically throughout the text. In this chapter, such careful usage is equally important.

When the concept of information states is presented, it is used to describe the three primary information states of transmission, storage, and processing. It has been argued by academics and researchers that more states exist. Some of these experts have posited additional information states, such as display. These distinctions and additions to the three primary states are unnecessary if not actually inaccurate.

It has also been argued that the processing state can ultimately be decomposed into minute permutations of transmission and storage. The merits of this assertion are not worth debating here. Although it may be true at a low level of abstraction (within the microprocessor), attempting to apply security requirements in this fashion is untenable. The processing state is vitally important to the assessment and implementation of security policy because, as we have discussed, it has completely changed the science of information security. The automated processing of data and other digital information has ushered in completely new requirements for technologies, procedures, and training that did not exist before the introduction of computer systems to the management of information resources.

The three primary states can easily accommodate all the security-relevant requirements for information systems security assessment and implementation. There is no need to add to or subtract from the original model for either precision or clarity. So in this process we must always account for each of the three states of information and refer to them as such.

The elements of confidentiality, integrity, and availability are known as security attributes. When we speak of information security, these are the elements to which we refer. As with information states, academics and researchers have argued that there could or should be additions and addenda. Some have made the case for including other security attributes such as nonrepudiation and authentication. Nonrepudiation, however, is actually a class of safeguard mechanisms that ultimately help ensure data integrity. Classifying nonrepudiation as a unique security attribute is not only inaccurate, it is also unnecessary.

Making the case for treating other safeguards, such as authentication, as security attributes is equally inaccurate and unnecessary. These are safeguards and do not belong as category headings for security attributes. In doing so, some academics and researchers have tried to correlate technology-based models with the McCumber Cube information-based model. Although this may be conceptually possible, it does not appear to provide any advantage to the security analyst, because the technology-based model will have to be adapted and modified continually as technology evolves.

The three elements of technology, procedures, and human factors (people) make up the three primary categories of safeguards. Sometimes the terms countermeasures or security measures are used. Although we could debate the academic merits of each, I choose to use the more general term safeguard because it best encompasses the concept. Countermeasures may imply somewhat more active technical defenses, and the term can be confusing depending on the application.

In this chapter, we will use the terms states, attributes, and safeguards quite specifically. We point this out to aid your comprehension as you apply the model. As we noted in a previous chapter, the term security means different things to different people; it is fraught with misunderstanding and misapplication. For analysts and practitioners engaged in assessing and implementing information systems security, accurate use and application of these terms is crucial.

THE McCUMBER CUBE METHODOLOGY

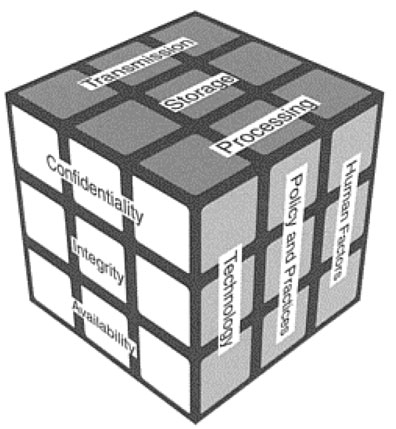

The first step of the McCumber Cube methodology is to accurately map information flow as described in Chapter 7. Each information state is then parsed out of the larger IT environment and its security-relevant characteristics are examined. We begin by reviewing the composition of the basic McCumber Cube (Figure 8.1).

The McCumber Cube methodology is ultimately based on decomposing the cube into its individual blocks and using these blocks as the foundation for determining the appropriate safeguards for each information state. Across the horizontal axis of the model are the information states of transmission, storage, and processing. Once these states are identified as outlined in the previous chapter, the analyst or practitioner is ready to analyze the security-relevant aspects of the system that affect the information resources.

Now we must look at the other elements of the cube (Figure 8.2). The vertical axis comprises the information-centric security attributes of confidentiality, integrity, and availability. Simply looking at the cube on its face as a two-dimensional model, it is possible to create a matrix for defining the security requirements for each of the three information states.

THE TRANSMISSION STATE

The next step is to separate out a column based on the identified information state. For example, let us use one of the transmission states we identified in Chapter 7: the state that exists between an office computer (workstation) on the LAN and its connection to that network. Information is in its transmission state as it flows to and from this computer over an internal connection to a hub or switch. From there, information resources flow to and from various internal systems and even to an external router for access to the Internet. In this case, however, we define the transmission state as existing only between the workstation and the hub. Information transmitted from the hub to other locations must be defined separately.

In this example, the transmission medium is Ethernet cabling that carries the information between the workstation and the hub. We must consider the entire transmission column (Figure 8.3) and assess all the security attributes we wish to enforce. Returning to the rows of security attributes, we see that confidentiality, integrity, and availability are all desirable security-relevant attributes we wish to maintain. Now it is time to assess what mechanisms we employ to maintain or enforce these requirements.

The Z-axis, or depth, of the cube defines the three primary categories of safeguards that can be employed to ensure the appropriate amount of security. Beginning with the first block, we can examine the security-relevant safeguards that provide the appropriate degree of confidentiality assurance for the information in this transmission state. The value of the information (low, medium, or high) will help us determine to what degree these security requirements must be enforced. This valuation also becomes extremely useful for performing security cost-benefit trade-offs.
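The decomposition can be expressed as a simple enumeration. This sketch, assuming the three-by-three-by-three structure described above, yields every block as a (state, attribute, safeguard category) triple in the order this chapter walks through them: one state column at a time, each attribute row, then each safeguard category. The names are illustrative.

```python
from itertools import product

STATES = ("transmission", "storage", "processing")            # columns
ATTRIBUTES = ("confidentiality", "integrity", "availability")  # rows
SAFEGUARDS = ("technology", "procedures", "human factors")     # depth

def blocks(state=None):
    """Yield (state, attribute, safeguard) blocks, one state column at a time."""
    states = STATES if state is None else (state,)
    yield from product(states, ATTRIBUTES, SAFEGUARDS)

all_blocks = list(blocks())
print(len(all_blocks))  # 27
```

Restricting the enumeration to one state, for example `blocks("transmission")`, yields the nine blocks examined in the sections that follow.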

Transmission: Confidentiality

The first category to consider in the transmission-confidentiality block is technology. The technology safeguards we can employ for this transmission state begin with the medium itself. Because the information flows through a wire, a threat to its confidentiality would first require the ability to access the data stream. The threat would then need to extract actual information (not simply data or electronic bits) and provide it to an unauthorized party. If your information valuation is relatively low or medium, you may review the library of vulnerabilities and determine that no special safeguards are needed to ensure confidentiality in this transmission state. If your information valuation is higher, you may wish to implement a physical technology safeguard, such as protected conduit, as a barrier to preclude wiretapping between the computer and the hub.

The next category of safeguard is procedural, which includes policy. If you have a policy that only authorized, trusted personnel can access and modify the cabling between the computer and the hub, you are using a policy safeguard. The structured decomposition of the McCumber Cube prompts you to identify such requirements and gives you a basis for making these decisions.

The human factors category is the final one to consider. In this case, it may not make sense to employ any human-based safeguards. Yet, for high-value information environments, a case may be made for periodic inspection of the cabling system itself to look for unauthorized taps or other possible violations of confidentiality.
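The analysis of this block can be summarized as a lookup from information valuation to candidate safeguards in each category. The mapping below is an illustrative reading of the three preceding paragraphs, not a prescribed rule; in particular, applying the cabling-access policy at every valuation is an assumption, and the function name is hypothetical.

```python
def transmission_confidentiality(valuation):
    """Candidate safeguards for the transmission/confidentiality block (illustrative)."""
    if valuation not in ("low", "medium", "high"):
        raise ValueError(f"unknown valuation: {valuation!r}")
    high = valuation == "high"
    return {
        "technology": ["protected conduit"] if high else [],
        # Assumption: the access policy applies regardless of valuation.
        "procedures": ["only authorized, trusted personnel may access or modify cabling"],
        "human factors": ["periodic inspection of cabling for unauthorized taps"] if high else [],
    }
```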

Transmission: Integrity

After completely analyzing the three categories of safeguards used to maintain the confidentiality of the information resources in this transmission state, we move to the next block: integrity. You will find that many (certainly not all) of the safeguards considered to enforce confidentiality in transmission also help maintain information integrity. This is not always the case, especially as we move on to the processing state. However, it is important to realize that security management and enforcement mechanisms overlap and can help maintain one, two, or all three of the security attributes of confidentiality, integrity, and availability.

We again apply our analysis to the transmission state in question, but this time we look at controls and safeguards employed to maintain the accuracy of the information resources. In the transmission medium, most integrity controls are handled by the transmission protocol itself. Depending on the protocol used, a variety of integrity-checking mechanisms are employed to ensure that what is sent is what is received. These security safeguards include checksums, hash and secure hash algorithms, and Hamming codes.
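The mechanisms listed above differ in what they protect against. The sketch below uses Python's standard `zlib.crc32`, which implements the same CRC-32 polynomial that Ethernet uses for its frame check sequence, and `hmac` with SHA-256. It shows that an unkeyed check detects accidental corruption but can simply be recomputed by anyone who deliberately alters the data, whereas a keyed message authentication code cannot be forged without the secret key. The sample message and key are hypothetical.

```python
import hashlib
import hmac
import zlib

def crc_check(message: bytes, received_crc: int) -> bool:
    """Unkeyed check: anyone can compute a matching CRC for any message."""
    return zlib.crc32(message) == received_crc

original = b"PAY 100 TO ACCT 42"  # hypothetical message
crc = zlib.crc32(original)

# Accidental corruption: flipping one bit changes the CRC, so the error is caught.
corrupted = bytes([original[0] ^ 0x01]) + original[1:]
print(crc_check(corrupted, crc))  # False

# Deliberate tampering: the attacker alters the message and recomputes the CRC.
tampered = b"PAY 900 TO ACCT 42"
forged_crc = zlib.crc32(tampered)
print(crc_check(tampered, forged_crc))  # True: the forgery passes

# Keyed MAC: without the secret key, the attacker cannot produce a valid tag.
KEY = b"hypothetical-shared-secret"  # real keys come from key management
tag = hmac.new(KEY, original, hashlib.sha256).digest()

def verify(message: bytes, received_tag: bytes) -> bool:
    expected = hmac.new(KEY, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, received_tag)

print(verify(original, tag), verify(tampered, tag))  # True False
```

The same reasoning applies to an unkeyed secure hash: it detects changes only if the attacker cannot also replace the transmitted hash value.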

At the security enforcement level of abstraction, it is also worth reviewing the list of vulnerabilities to determine if there are existing vulnerabilities in the protocols that can impact the integrity of the information during transmission in this specific technical environment. If one or more exists, then updates, patches, and modifications to mitigate or eliminate the risk should be examined. If none are available, then consider the full spectrum of add-on integrity safeguards depending on the value of the information. The value of the information and specific threat environment will help determine the amount of resources required to mitigate the risks.

Transmission: Availability

The next security attribute to consider is availability. This aspect of security is often critical for information in its transmission state, yet it is frequently overlooked. On-call support technicians provided by the host organization may adequately maintain the availability of the transmission function between this particular workstation and the hub. In any case, it is wise to determine how much downtime can be tolerated (based again on the value of the information and its application) and to tailor safeguards to meet these empirical requirements.
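Tolerable downtime and an availability percentage are two views of the same requirement. This sketch converts between them over a 365-day year; the function names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8760; leap years ignored

def required_availability(tolerable_downtime_hours_per_year):
    """Availability (percent) implied by a yearly downtime budget."""
    if not 0 <= tolerable_downtime_hours_per_year <= HOURS_PER_YEAR:
        raise ValueError("downtime must be between 0 and 8760 hours")
    return 100 * (1 - tolerable_downtime_hours_per_year / HOURS_PER_YEAR)

def downtime_budget(availability_percent):
    """Yearly downtime (hours) allowed by an availability target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)

print(round(downtime_budget(99.9), 2))  # 8.76
```

For example, a 99.9 percent availability target allows roughly 8.76 hours of downtime per year.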

From a technology perspective, redundant transmission paths (cables, hubs, and routers) can be used to achieve these availability requirements. Moving on to policies, we can employ service level agreements that dictate response time and uptime requirements. The human factors dimension of safeguards may be a help desk capability that responds to the loss or degradation of availability.
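Redundant paths improve availability because the link fails only when every path fails at once. The sketch below applies the standard series and parallel formulas under the assumption that failures are independent; the cable and hub figures are hypothetical. Components shared by both paths, such as a single hub or power feed, violate that assumption and make the real benefit smaller.

```python
from math import prod

def series_availability(components):
    """All components in one path (for example, cable and hub) must be up."""
    return prod(components)

def parallel_availability(paths):
    """At least one of several redundant paths must be up (independent failures assumed)."""
    return 1 - prod(1 - a for a in paths)

# Hypothetical figures: each path is a cable (0.999) feeding a hub (0.995).
single_path = series_availability([0.999, 0.995])
redundant = parallel_availability([single_path, single_path])
print(f"one path: {single_path:.4%}, two paths: {redundant:.4%}")
```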

Now we have walked through the entire analytic process of the methodology for this specific transmission state. We will move on to the information state of storage and again apply the same process we just employed for the transmission state. For our example, we will use the organizational server in our architecture to outline the process for assessing and implementing security in the storage state.

THE STORAGE STATE

The storage state (Figure 8.4) is illustrated using one of the database servers in our notional architecture. We define the storage state as the function where information resides at rest within the server environment. Obviously, the applications supporting the database environment can process information, and information is transmitted into and out of the database. However, for purposes of the methodology, we concentrate solely on the function of information at rest.

Storage: Confidentiality

As we did with the transmission state example above, we begin by analyzing the block at the intersection of the storage state and the confidentiality attribute. The question to ask here is what is or should be done to ensure the confidentiality of the information in its storage state. We begin with the first category of safeguard: technology.

Information in its storage state is, by definition, at rest. To compromise the information’s confidentiality, unauthorized access is required. It is important to point out here that the concept of unauthorized access assumes that authorized users have appropriately granular levels of access. This concept supports principles outlined in models such as the Bell-LaPadula access control model.
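The Bell-LaPadula model expresses this idea as two rules over ordered sensitivity levels: a subject may not read an object above its level (the simple security property) and may not write to an object below its level (the star property). The sketch below shows only those two checks; the level names are hypothetical, and the model's category sets and other features are omitted.

```python
# Hypothetical ordered sensitivity levels; category sets are omitted in this sketch.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "secret": 3}

def can_read(subject_level, object_level):
    """Simple security property: no read up."""
    return LEVELS[subject_level] >= LEVELS[object_level]

def can_write(subject_level, object_level):
    """Star property: no write down."""
    return LEVELS[subject_level] <= LEVELS[object_level]
```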

Access control technology does not (and cannot) guarantee that authorized users will not abuse their privileges and exploit information resources. Granular access control mechanisms employed in many database applications and operating systems can provide appropriate technology-enforced safeguards. These safeguards can then be layered with technology solutions such as intrusion detection tools and auditing systems to monitor user activities and look for unauthorized or security-relevant activities and incidents.

The challenge in a database environment such as the one depicted in this example is the level of granularity of the technology safeguards. This is a good place to consider applying the McCumber Cube methodology to the database subsystem itself to develop security requirements specific to this technology. However, if the vendor has not performed this analysis, it is up to the implementer or system operator to make security enforcement decisions by evaluating the database environment as an information storage state and treating it as a composite unit for analysis and enforcement purposes. Chapter 9 provides the framework for mapping information states and applying the methodology for a more granular assessment, if one is appropriate.

After assessing the technology controls for confidentiality of the information in storage in the database server, the next step is to consider procedural controls. In this instance, there may be policy or procedural controls that dictate access rules regarding personnel who are allowed access (through a technology-based safeguard such as the database access control subsystem) for maintenance, upkeep, and management purposes. These controls will support and enhance the technical controls that actually enforce these policy requirements.

Procedural controls for protecting information resources in the storage state often will be employed in support of technology safeguards. When we next consider human factors safeguards, we will notice that these options also will often be an integral aspect of the technical control structure. For instance, a security or systems administrator may need to manually review output from an audit system or access control log to search for anomalies and make a determination if the procedural requirements are being enforced by the technology controls. For this example, all three categories of safeguards are employed to help provide confidentiality for the information resources in their storage state within the database server.
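Tools can narrow what the administrator must examine, but the determination itself remains a human factors safeguard. The sketch below assumes a hypothetical audit record format of (timestamp, user, action, granted) and flags denied requests and activity outside an assumed 08:00 to 18:00 business-hours window for manual review; the records are illustrative.

```python
from datetime import datetime

# Hypothetical audit records: (timestamp, user, action, granted)
AUDIT_LOG = [
    (datetime(2024, 3, 4, 10, 15), "alice", "read customer_table", True),
    (datetime(2024, 3, 4, 23, 40), "bob", "export customer_table", True),
    (datetime(2024, 3, 5, 9, 5), "carol", "read payroll_table", False),
]

def needs_review(entry, business_hours=range(8, 18)):
    """Flag denied requests and any activity outside business hours."""
    timestamp, _user, _action, granted = entry
    return (not granted) or timestamp.hour not in business_hours

flagged = [entry for entry in AUDIT_LOG if needs_review(entry)]
for timestamp, user, action, granted in flagged:
    print(timestamp.isoformat(), user, action, "granted" if granted else "DENIED")
```

The filter only decides what a person looks at; whether the late-night export was legitimate is still a human judgment.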

Storage: Integrity

The next step is to assess integrity controls for information in its storage state within the database server. We begin by moving to the block at the intersection of storage and integrity. Maintaining integrity of information in a database environment is usually the purview of controls within the database application or the applications that manage this information resource. The confidentiality controls we assessed above can also be considered for protecting information integrity in this example.

It is not necessary to create definitive, more granular boundaries between the transmission, storage, and processing states within the database environment unless you are performing a more detailed assessment of the database application or the application that supports and manages it. This will be covered in Chapter 9. At this level of abstraction (security enforcement), it is sufficient to apply the storage state label while understanding that more granular processing and transmission states exist within the database application environment.

Even though the technology safeguards that ensure integrity may be embedded within the application, they need to be enumerated and evaluated as an integral component of the security assessment process. These controls can then be considered and highlighted as key elements of the security program because they support the critical integrity attribute. In most current security processes, these key security-relevant technology safeguards are either assumed or ignored. In the McCumber Cube methodology, these requirements are called out by the methodical decomposition of the cube and must be assessed.
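Integrity controls embedded in the storage system are easy to overlook because they live in a schema rather than in a security product. The sketch below uses Python's built-in `sqlite3` module with a hypothetical `ledger` table: the NOT NULL and CHECK constraints reject invalid rows, and reading the stored table definition back is one way to enumerate those controls for the assessment.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE ledger (
        entry_id INTEGER PRIMARY KEY,
        account  TEXT    NOT NULL,
        amount   INTEGER NOT NULL CHECK (amount <> 0)
    )
""")
conn.execute("INSERT INTO ledger (account, amount) VALUES (?, ?)", ("4000-sales", 12500))

# A row with no account violates NOT NULL and is rejected by the database itself.
try:
    conn.execute("INSERT INTO ledger (account, amount) VALUES (?, ?)", (None, 500))
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)

# Enumerate the embedded controls by reading the stored table definition.
schema = conn.execute(
    "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = 'ledger'"
).fetchone()[0]
print(schema)
```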

Procedural safeguards for protecting the integrity of information in its storage state are the next area for consideration. As with confidentiality safeguards, many procedural integrity controls will be employed in support of technology safeguards embedded in the database applications and storage systems themselves. Specific procedural controls for integrity may also govern how the information (or data, in this case) is acquired and evaluated before it is included in the database. Overall, procedural controls for managing the integrity of information at rest rely most heavily on preventing unauthorized modification of the resources.

These procedural (as well as technical) controls may be implemented during the processing state or even the transmission state. Some researchers have argued that information at rest is, by definition, immune from modification or alteration, authorized or not. Again, this is an issue of abstraction. If a granular assessment of the database environment’s security functionality has been performed, that evaluation can form an integral part of the security enforcement analysis. If not, these issues must be considered as part of the security enforcement analysis described in this chapter.

As with procedural safeguards, human factors safeguards for information integrity are often employed as an adjunct to both technology and procedural controls. However, the structured methodology makes you define and list them to ensure they adequately support your protection requirements. These safeguards may include human review of information prior to input, human oversight of the database environment, or flags to alert operators and users to suspicious data elements.

Storage: Availability

The availability of the information resources in the storage state is an area that is usually overlooked by vulnerability-centric security models. No one could seriously deny the importance of ensuring the availability of information in its storage state. This is an area of major concern to the author of this text. Most of us have been confronted with the security problem of nonavailability of information that has been stored. If you have stored important information on a diskette, CD, or external hard drive that is lost or damaged, you have experienced this problem. Perhaps you have also considered or even taken steps to mitigate the risk. You can make an additional copy of the media, store a copy of the information on another computer system, or retain the information in another medium such as a printed copy of the information. In each case, you have looked to mitigate the risk of loss of information in its storage state.

The next step of the methodology is to consider technology safeguards for maintaining the availability of information in the storage state in our example. Many database systems have built-in backup and recovery capabilities that are representative of this safeguard category. When the value of the information warrants it (high or even medium), a “hot” backup or real-time journaling system may be required to meet the requirements of the availability attribute. This dynamic capability can ensure that the data remains available to users when the primary system is affected. When the value of the information dictates, you can also consider additional technical solutions that combine these techniques.
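A real-time journal records every change before applying it, so the current state can be rebuilt if the primary copy is lost. The toy key-value store below is a minimal sketch of that idea under stated assumptions: the journal is an in-memory list standing in for durable storage on separate media, and the `JournaledStore` class is hypothetical.

```python
import json

class JournaledStore:
    """Toy key-value store that journals every write before applying it.

    Illustrative only: a real system writes the journal to separate, durable
    storage and flushes it before acknowledging the write.
    """

    def __init__(self):
        self.data = {}
        self.journal = []  # stands in for a log on separate media

    def put(self, key, value):
        self.journal.append(json.dumps({"key": key, "value": value}))
        self.data[key] = value

    @classmethod
    def recover(cls, journal):
        """Rebuild a store by replaying the journal in order."""
        store = cls()
        for line in journal:
            record = json.loads(line)
            store.put(record["key"], record["value"])
        return store

primary = JournaledStore()
primary.put("acct-1", 100)
primary.put("acct-1", 250)
primary.put("acct-2", 75)

standby = JournaledStore.recover(primary.journal)  # as if the primary were lost
print(standby.data)  # {'acct-1': 250, 'acct-2': 75}
```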

Procedural controls are the next category of safeguard for analysis. Procedures are a vital protective aspect of providing availability of information in storage. Most organizations have these controls, yet few consider them as security mechanisms. Most data centers and systems administrators have procedurally based requirements to back up data on a recurring basis. This may be on a nightly, weekly, or monthly basis. Critical systems may also have specialized procedures to actually restore the data within a specified time period.

It is readily obvious that these technology and procedural safeguards come with a cost. Availability is normally easier to quantify because empirical measurements exist for determining how much availability you can afford. These metrics are most frequently expressed as uptime requirements (as a percentage) and restoration time (in elapsed days, hours, and minutes). Safeguard costs logically increase as availability requirements become more stringent. For some information systems, being able to restore the previous week’s (or day’s) data is adequate, given the dramatically increased costs associated with more immediate restoration.
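The two metrics in the paragraph above can be compared directly with a requirement. This sketch assumes periodic backups, so the worst-case data loss is one full backup interval (ignoring the time the backup itself takes); these quantities are often called the recovery point and recovery time. The function names and figures are hypothetical.

```python
from datetime import timedelta

def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """A failure just before the next scheduled backup loses everything written
    since the previous one: up to one full interval (backup duration ignored)."""
    return backup_interval

def meets_requirements(backup_interval, restore_time, max_data_loss, max_restore_time):
    """True if both the data-loss and restoration-time requirements are satisfied."""
    return (worst_case_data_loss(backup_interval) <= max_data_loss
            and restore_time <= max_restore_time)

# Hypothetical requirement: lose at most one day of data, restore within 8 hours.
requirement = dict(max_data_loss=timedelta(days=1), max_restore_time=timedelta(hours=8))
print(meets_requirements(timedelta(days=1), timedelta(hours=6), **requirement))  # True
print(meets_requirements(timedelta(days=7), timedelta(hours=2), **requirement))  # False
```

A weekly backup, for instance, fails a requirement that no more than a day of data be lost, no matter how quickly it can be restored.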

The final step in the storage state is to assess the human factors category of safeguards. In this case, the human factors come into play predominantly to enact the requirements of the procedural safeguards. This may seem a trivial distinction, but it highlights one of the key strengths of this methodology. The McCumber Cube approach ensures all safeguard elements are properly considered and accounted for in the implementation. The procedural controls that need to be enacted in the event of a loss of availability incident are moot if the people assigned to implement them and restore the lost data are not available. The human factors safeguards also should consider training and testing to ensure people assigned these security-relevant duties have adequate knowledge and the skills necessary to implement the policies.

THE PROCESSING STATE

The processing state (Figure 8.5) is unique to modern computing environments. In How to Use This Text, I challenge the reader to apply the methodology to precomputer information systems such as the telegraph and Napoleonic military command and control. Those who take the challenge realize that processing is purely a human-based endeavor if computing systems are not involved. However, with modern computing technology the manipulation and reinterpretation of information can take place outside the human sphere.

Technically, the computer is only executing a preestablished set of instructions coded into it by a human. Nevertheless, this information state is critical to understanding and applying security in IT systems. If the processing state did not exist, extensive use of cryptography would satisfy most of the security implementation requirements for confidentiality and many for integrity. However, the automated manipulation of data, and the resulting changes in the information produced, require the identification and assessment of processing as a unique state of information. The processing state represents a critical function fraught with vulnerabilities and security exposures. Information during this state is vulnerable to a wide variety of threats.

To walk through this next state, we will use an application server as the example. More specifically, we will target a financial application on this computer system that manages the accounts for a small business. The application takes input from various users and other applications, creates analysis reports for decision makers, and outputs information for submission to auditing groups and regulatory agencies.

The security implications are fairly obvious. If the reports are inaccurate, corporate decision makers will base their decisions on faulty data. Erroneous reporting to regulatory agencies could result in fines, penalties, or even imprisonment for company executives. Ensuring appropriate protection for information in the processing state is therefore essential. The methodology instructs us to call out the next column, which represents the processing function.

Processing: Confidentiality

The first step is to call out the block that represents the intersection of the processing function and the confidentiality attribute. As we stated in previous chapters, encrypted information cannot be processed; to be processed, information must be in plaintext. Nevertheless, numerous safeguards must be considered to ensure the confidentiality of information in the processing state. The first category to consider is technology safeguards, and, as with the storage state, we begin with access control technologies.

To violate the confidentiality of information during the identified processing function, an attacker must somehow gain access to the information while it is in this state. Because information here must be processed in plaintext, weaknesses in cryptographic protections are not at issue. Confidentiality is most logically violated by gaining unauthorized access to the information during this vulnerable state. Even if the application has a solid access control mechanism, other programs, as well as memory and storage areas that retain data after processing completes, can expose the information to unauthorized users.

System administrators and others with privileged access to operating systems can abuse their access rights to view data that has been sent to the application for processing. They can also intercept reports before they are transmitted to authorized users. The processing function therefore requires a careful review of all possible exploitations of confidentiality, including those that arise as the transmission and storage states are invoked during the processing state.

The next step is to analyze procedural options for ensuring the confidentiality of information in its processing state. One option is a requirement that only certain people be allowed physical access to systems that process information. If the information receives appropriate protection in the transmission state as it enters a secured perimeter, this physical security control can prevent unauthorized users from obtaining keyboard access to the application server and its environment.

Another policy safeguard could prohibit any changes to the application that are not authorized by the vendor or application development organization. There is often an assumption that confidentiality is assured in some measure by the application vendor’s due diligence in creating a product with a minimal number of vulnerabilities. By maintaining the application in accordance with the vendor’s recommendations, at least a small measure of that assurance can be factored into the analysis. Each of these procedural safeguards represents an option for protecting information in the processing state.

After looking at procedural ways to enforce confidentiality, we assess human factors. These safeguards can include background checks for application developers, system administrators, operators, and auditors. Such checks partially mitigate the risk from people with a criminal background or potential problems (financial, social, or psychological) who may seek access to sensitive company information.

Personnel background checks, for example, have become an integral aspect of many security programs. In fact, they are often used as a basic preemployment screening mechanism in the hiring process of many companies and are a critical security component for many government operations. Even basic aspects of a prehire screening, such as the veracity of educational claims, can provide insight into a person’s character.

These human factors safeguards work hand in hand with the procedural and technical controls to ensure that information in the processing state receives the appropriate amount of protection. Again, the structured methodology ensures that the complete spectrum of security safeguards is considered, providing a comprehensive look at all safeguards and how they interact.

Processing: Integrity

We next move to the block that represents the intersection of the processing state and the integrity attribute. As we did with the confidentiality attribute above, we begin by analyzing what is or should be done to ensure the integrity of the information in its processing state, starting with the first category of safeguard: technology.

This is another block that represents significant vulnerabilities. The processing state is critical to maintaining the integrity (accuracy and robustness) of the information it manipulates.

In the application we are using as an example, much of the trust placed in the processing state is inherited from the people who coded the instructions that perform the processing function. In many cases, the fact that an application is purchased from a major software vendor and is well respected in the industry counts for much. Even so, the bugs and coding problems that crop up in most applications are a constant threat to the integrity of the information they manipulate. Such programming errors are an inherent part of operating automated processing systems, but technology safeguards exist to help contain them.

Your processing state may require automated tools or software to preclude alteration of the applications’ programming code. Malicious code sentinels and anti-virus products help prevent changes to the application that can cause the processing function to act inappropriately. In the case of an e-mail application, it is instructive to consider malicious code programmed to make an e-mail client send everyone in the user’s address book a message containing another copy of the worm or virus. To protect the integrity of the information assets during the e-mail processing function, security products such as virus scanners and content filters are invaluable technology safeguards.
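A complementary technology safeguard detects, rather than prevents, alteration of application code: record a cryptographic baseline of the application's files and compare against it later, the approach taken by file-integrity tools such as Tripwire. The Python sketch below is a minimal illustration, assuming the application's files live under a single directory; the function names `build_baseline` and `changed_files` are hypothetical and not part of any product.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(app_dir: Path) -> dict[str, str]:
    """Map every file under app_dir (by relative path) to its digest."""
    return {
        str(p.relative_to(app_dir)): sha256_of(p)
        for p in sorted(app_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(baseline: dict[str, str], app_dir: Path) -> dict[str, list[str]]:
    """Compare the current contents of app_dir against a stored baseline."""
    current = build_baseline(app_dir)
    return {
        "modified": sorted(f for f in baseline.keys() & current.keys()
                           if baseline[f] != current[f]),
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
    }
```

A baseline only helps if it is stored where the people who administer the application cannot silently rewrite it (for example, on read-only media). Because the comparison finds alteration after the fact, it complements, rather than replaces, products that block changes outright.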

Automated checks that search for anomalies or bad data can also be incorporated into the processing function. As with the transmission state, checksums, hashes, and similar techniques can be employed to ferret out potential integrity problems during the processing state. These types of controls may not be required for our example (given the probable value of the information resources), but the methodology still calls for consciously examining each block of the cube against each of the three safeguard categories. If you choose not to use a safeguard, you are still far ahead of those who never examined the processing state for possible vulnerabilities and the safeguards that could mitigate them.
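For the financial application in our example, a classic processing-integrity control is the control total: the sum of the input amounts is computed before processing and reconciled against the processed results afterward. The Python sketch below combines that check with a SHA-256 digest of the input batch (to detect alteration between submission and processing) and a simple anomaly screen. The record layout, the `post_to_ledger` stand-in for the application's real processing, and the anomaly rules and limit are all hypothetical assumptions chosen for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    account_id: str
    amount_cents: int  # integer cents avoid floating-point rounding errors


def batch_digest(batch: list[Transaction]) -> str:
    """SHA-256 over a canonical, order-preserving encoding of the batch."""
    h = hashlib.sha256()
    for t in batch:
        h.update(f"{t.account_id}|{t.amount_cents}\n".encode("utf-8"))
    return h.hexdigest()


def find_anomalies(batch: list[Transaction], limit_cents: int) -> list[int]:
    """Positions of records that look like bad data (hypothetical rules)."""
    return [i for i, t in enumerate(batch)
            if not t.account_id or t.amount_cents == 0
            or abs(t.amount_cents) > limit_cents]


def post_to_ledger(batch: list[Transaction]) -> dict[str, int]:
    """Hypothetical stand-in for the real processing: net amount per account."""
    ledger: dict[str, int] = {}
    for t in batch:
        ledger[t.account_id] = ledger.get(t.account_id, 0) + t.amount_cents
    return ledger


def process_with_controls(batch: list[Transaction], sender_digest: str,
                          limit_cents: int = 10_000_000) -> dict[str, int]:
    # 1. Detect alteration between submission and processing.
    if batch_digest(batch) != sender_digest:
        raise ValueError("input batch does not match the submitted digest")
    # 2. Screen for anomalous records before they reach the ledger.
    bad = find_anomalies(batch, limit_cents)
    if bad:
        raise ValueError(f"anomalous records at positions {bad}")
    # 3. Control total: what went in must equal what came out.
    control_total = sum(t.amount_cents for t in batch)
    ledger = post_to_ledger(batch)
    if sum(ledger.values()) != control_total:
        raise ValueError("control total does not reconcile after processing")
    return ledger
```

A plain digest only detects tampering if the expected value reaches the application by a path the attacker cannot also alter; where that cannot be assured, a keyed construction such as an HMAC is the more robust choice.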

Procedural safeguards are the next category to assess, and for integrity of processing there are many possibilities. One policy could be to use only applications from a known vendor with a track record of delivering sound software backed by extensive quality assurance testing. Another policy may permit updates to the code only from this same trusted vendor.

Other procedural safeguards to consider here include requirements to review data or information before and after processing. Integrity is also supported by physical access controls on the processing environment and by assurance that only authorized, monitored modifications are made to software. Physically controlling access to the system dramatically reduces the list of possible threats to the integrity of the information in the processing function.

Human factors safeguards are the final assessment area for this section. As with confidentiality, they can include background checks for application developers, system administrators, operators, and auditors. Although integrity and confidentiality are closely related in the processing function, it is still important to assess separately the human factors safeguards that affect each attribute. Confidentiality and integrity are two distinct security attributes; although a sound security enforcement program has many mutually supporting controls, failing to examine the impact on each attribute separately leaves the analysis open to overlooked vulnerabilities and safeguards.

Processing: Availability

The final step for this information state is to examine the vulnerabilities and safeguards for availability of the processing function. Again, we begin with technology vulnerabilities and safeguard options. As with transmission, many processing availability safeguards center on redundant technology and backup capabilities. For our example, it may be adequate to maintain a simple backup copy of the financial application's software to reload after any incident that prevents the application from performing its processing function. A backup host system may also be a technology safeguard.

Availability safeguards in this area tend to work in concert with other availability safeguards such as the ones that support transmission and storage. Many applications and other processing capabilities can cross-protect by working with safeguards that support and protect other information states through the use of redundant facilities, hardware, software, and support.

Procedural safeguards that can help maintain the processing capabilities of our application include recovery procedures and alternate processing sites that provide backup in case of system failures, attacks, environmental degradation, and other incidents. Many of these fallback options also carry empirical requirements against which the efficacy of the safeguards can be assessed. As with the storage function, quicker and more comprehensive restoration comes at greater cost. Restoration requirements may be based on the time value of the information or on the need for timely access to make critical decisions in support of the organizational mission. The metric may be days, hours, or even minutes. If our financial application is used to pay employees, timely payroll processing is critical both to the effective operation of the organization and to employee morale.
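The trade-off just described, faster restoration at higher cost, can be made explicit once a restoration requirement is fixed. The Python sketch below assumes a hypothetical list of recovery options, each with an estimated restoration time and an annual cost (the figures are placeholders, not benchmarks), and selects the least expensive option that meets the required window; `RecoveryOption` and `cheapest_adequate` are illustrative names only.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Optional


@dataclass(frozen=True)
class RecoveryOption:
    name: str
    restore_time: timedelta  # estimated time to restore the processing function
    annual_cost: int         # placeholder cost in whole currency units


def cheapest_adequate(options: list[RecoveryOption],
                      required: timedelta) -> Optional[RecoveryOption]:
    """Least expensive option whose restore time fits the requirement, if any."""
    adequate = [o for o in options if o.restore_time <= required]
    return min(adequate, key=lambda o: o.annual_cost, default=None)


# Hypothetical options for the example financial application.
OPTIONS = [
    RecoveryOption("reload software from backup copy", timedelta(days=2), 1_000),
    RecoveryOption("standby backup host", timedelta(hours=4), 15_000),
    RecoveryOption("alternate processing site", timedelta(minutes=30), 120_000),
]
```

For a payroll run that must be restored within a day, `cheapest_adequate(OPTIONS, timedelta(days=1))` returns the standby backup host; tightening the requirement to ten minutes returns `None`, signaling that none of the listed options is adequate and the trade-off must be revisited.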

Human factors are now the final vulnerability and safeguards assessment area for the processing state. The technology and procedural controls that need to be enacted in the event of a loss of the processing capability are completely ineffective if the people assigned to implement them and restore the processing capability are not included. The human factors safeguards should also consider training and testing to ensure people assigned these security-relevant duties have adequate knowledge and the skills necessary to operate the technology solutions and implement the appropriate security policies under the appropriate circumstances.

RECAP OF THE METHODOLOGY

The general overview of the methodology is simple to state. The steps are:

1. Information flow mapping:

- Define the boundary.

- Make an inventory of all information resources and technology.

- Decompose and identify all information states.

2. Cube decomposition based on information states:

- Call out column from the cube: transmission, storage, or processing.

- Decompose blocks by the attributes of confidentiality, integrity, and availability.

- Identify existing and potential vulnerabilities:

- Use vulnerability library.

- Develop or use safeguard library.

- Factor information values: use valuation metrics.

- Assign appropriate safeguards in each category.

3. Develop comprehensive architecture of safeguards (technology, procedures, human factors):

- Describe comprehensive security architecture components.

- Cost out architecture components (including procedural and human factor safeguards).

4. Perform comprehensive risk assessment for the specific environment (if necessary):

- Include threat and assets measurements.

- Add other implementation specific data.

- Perform cost trade-off and valuation analyses.
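The decomposition in step 2 is mechanical enough to generate a blank assessment worksheet. The Python sketch below enumerates the blocks of one called-out column (one information state crossed with confidentiality, integrity, and availability) and, for each block, the three safeguard categories. The `WorksheetRow` structure and its fields are hypothetical; the analyst still fills in vulnerabilities and safeguards from a vulnerability library and a safeguard library.

```python
from dataclasses import dataclass, field
from itertools import product

# The three axes of the cube, in the order this chapter walks them.
STATES = ("transmission", "storage", "processing")
ATTRIBUTES = ("confidentiality", "integrity", "availability")
CATEGORIES = ("technology", "procedures", "human factors")


@dataclass
class WorksheetRow:
    state: str
    attribute: str
    category: str
    vulnerabilities: list[str] = field(default_factory=list)
    safeguards: list[str] = field(default_factory=list)  # existing or planned
    examined: bool = False  # True once the analyst has consciously assessed it


def call_out_column(state: str) -> list[WorksheetRow]:
    """Decompose one information state into attribute x safeguard-category rows."""
    if state not in STATES:
        raise ValueError(f"unknown information state: {state!r}")
    return [WorksheetRow(state, a, c) for a, c in product(ATTRIBUTES, CATEGORIES)]


def unexamined(rows: list[WorksheetRow]) -> list[tuple[str, str]]:
    """(attribute, category) pairs the analyst has not yet assessed."""
    return [(r.attribute, r.category) for r in rows if not r.examined]
```

Calling `call_out_column("processing")` yields nine rows, from confidentiality/technology through availability/human factors, matching the order of this chapter's walkthrough. A row the analyst deliberately leaves without safeguards is still marked examined, reflecting the point that consciously declining a safeguard differs from never considering the block.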

Although I have included only a short chapter on the risk assessment process, it is a critical aspect of ultimately making appropriate cost-benefit trade-offs for a security program, and it functions as the capstone for implementing specific elements of the security program. The McCumber Cube methodology allows the analyst or security practitioner to minutely examine the entire security environment apart from the actual implementation. Ultimately, the entire risk environment of the IT system must be included; that is where the elements of threat and asset valuation are employed to establish the trade-offs necessary for any security program. You can begin to approach perfect security (complete freedom from risk) only with unlimited resources. The risk assessment process can draw on the safeguards developed with the McCumber Cube methodology and integrate that architectural environment to provide a solid underpinning for any information systems security program.