Epilogue

“All good things must come to an end.”

English Proverb

Acceptance test-driven development (ATDD) has been presented through stories and examples. Here is the final word.

Who, What, When, Where, Why, and How

The Preface stated the context of this book and the questions to be answered. Here are the questions again, and if you skipped the entire book, here are the answers.

• Who—The triad—customer, developer, and tester communicating and collaborating

• What—Acceptance criteria for projects and features, acceptance tests for stories

• When—Prior to implementation—either in the iteration before or up to one second before, depending on your environment

• Where—Created in a joint meeting, run as part of the build process

• Why—To effectively build high-quality software and to cut down the amount of rework

• How—In face-to-face discussions, using Given/When/Then and examples

So What Else Is There?

Feel free to write to me at [email protected] if you have comments or questions. Let me know what topics you would like covered in more depth. On the web, it’s easier to increase the amount of information, so check out atdd.biz. If your triad needs an in-person run-through of creating acceptance tests, Net Objectives offers courses and coaching (www.netobjectives.com).

Legal Notice

Based on Sam’s growing business, some readers may decide they want to open a CD rental store. For information regarding the legality of renting CDs in the United States, please see 17 USC 109(b)(1)(A). In Japan, it is legal to have a licensed CD rental store that pays royalties to JASRAC (Japanese Society for Rights of Authors, Composers and Publishers) [Isolum01].

Experiences of Others

I asked people to describe how ATDD was introduced to their teams and the benefits it produced. Here are their stories.

Rework Down from 60% to 20%

One team I worked with was asked to estimate how much of their time they spend reworking features. Their estimate was about 60%. Having seen this situation a few times, I have found a method that seems to work with some pretty amazing results. Using FitNesse and Selenium, development items are written as executable specifications. The business analyst, tester, and developer define the feature in a FitNesse wiki and run automated tests on the feature from the same place. Using the Slim Scenario tables, features are written as English-readable sentences and then translated into commands under the covers. This makes the tests readable by the less technical members of the team so they can feel confident that they know what is being tested.

The business analyst types up what the feature should do. The developer and tester ask questions to define the scope, and the answers are added to the wiki. The tester translates the English sentences into Selenium scripts, and the developer starts to write unit tests. Once the Selenium scripts are complete, the developer runs them to check on the development process. The customer can run the tests at any time to see how much has been completed. After [the team members] started working through features this way, they estimated that their time spent on rework decreased to about 20%.

Dawn Cannan, agile tester

www.passionatetester.com/

Workflows Working First Time

I have experience with acceptance test-driven development on automated workflows. My team works on automated workflows for a European mobile virtual network operator. When I started to coach and manage this team, the challenge was to keep (or regain) control of the software. With unit testing and then test-driven development, we took control of the code base and started to refactor and redesign the workflows’ actions.

After that, our most difficult challenge appeared. Individually, each action worked fine, but each time the development team or the QA team tested workflows from beginning to end, we found a lot of defects.

To solve that problem, we wrote some acceptance tests with JUnit. It was a difficult job because workflows generate many outputs (messages, database changes, web service calls, etc.) and need many inputs. The first results were not convincing: We added a lot of mocks and fakes and so missed a lot of little defects. The tests were difficult to understand and hard to maintain.

Then we decided to use Concordion and reduce the use of mocks and fakes. We sold the idea to the QA team, the CIO, and the business people. On the following project, we built a proof of concept on some new workflows to convince people that the time required to build the tests was valuable. It was a technical success: All the workflows covered by acceptance testing worked the first time, and the defects that were found were just special cases not covered by the tests.

We generalized the use of acceptance test-driven development to other parts of the information system. For us, that was a success because we reduced the number of defects delivered to QA, and we could start to work on the subject of continuous delivery. Our only regret is that, for the moment, the tests are prepared and used only by QA and the development team, not the business people. Perhaps the root cause of this issue is that we use the acceptance tests to check a lot of details that are not directly useful to the business people, or that the most useful client for our automated workflow is our QA team.

Gabriel Le Van

Paris, France

Little Room for Miscommunication

I helped develop the Visual Hospital Touchscreen Solution for energizedwork. (See http://lean-health.blogspot.com/2010/02/visual-hospital-system.html for details.) The approach we took was:

- Quality assurance (QA) and product owner prepare the stories.

- QA pair with the developers at the start of a story, breaking the acceptance criteria up into slices and agreeing the probable order in which the slices will be delivered. We keep our stories small—2 days or less. There may be around eight acceptance criteria per story and usually one or two acceptance criteria per slice.

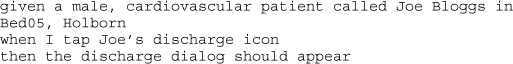

- QA and developers jointly write the automated acceptance tests for the first slice. We use Selenium IDE with a custom extension enabling Given-When-Then style tests, e.g.

We feel using Selenium IDE creates less of a barrier to entry for the QA and makes it easier to write the tests. Using the IDE, you can easily step forward and backward through a test or jump halfway through it. The drawback of the IDE is that it’s harder to set up test data, and comparatively slow. (We’ve written more extensions that go a long way to mitigating both these problems.)

- When the developers have the tests passing, we ask the QA to review, discuss any changes we had made to the tests, and assuming all is well, check in, then repeat from step 3 until all the slices are complete.

The benefits:

I think the biggest win is that because the automated acceptance tests are written jointly by the QA and developers, using near-plain English (as permitted by Given-When-Then), there is very little room for miscommunication. This means less rework and a happier QA/developer relationship. Other benefits include that the source code closely matches the business domain language and you can return to a test after 6 months and understand exactly what it was supposed to be doing.

Stephen Cresswell

Acuminous Ltd.

Saving Time

Acceptance test-driven development dovetails nicely with what I’ve called mock client testing. When creating automated tests for any body of code, write a test that pretends to be the client. Consume the code in the way you think the client will use the code. If you’re writing an API, write a test that uses the API the way you expect it to be used, [and] then write the code behind the API call. The goal is to create a suite of tests that exercise the system the way you expect it to be used. This never completely exercises the code, but it’s a great way to ensure all the basic functionality is in place.
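As a minimal sketch of the mock client idea, the tests below call an API only through its public methods, the way a client would. The `RentalApi` class, its method names, and the CD and customer identifiers are hypothetical, chosen for illustration; they are not taken from the contributor's product.

```python
import unittest


class RentalApi:
    """Hypothetical API under test; in practice written after the tests below."""

    def __init__(self):
        self._rented = {}  # maps cd_id -> customer_id

    def check_out(self, cd_id, customer_id):
        if cd_id in self._rented:
            raise ValueError(f"CD {cd_id} is already rented")
        self._rented[cd_id] = customer_id

    def check_in(self, cd_id):
        if cd_id not in self._rented:
            raise ValueError(f"CD {cd_id} is not rented")
        del self._rented[cd_id]

    def is_rented(self, cd_id):
        return cd_id in self._rented


class MockClientTest(unittest.TestCase):
    """Each test plays the role of a client and uses the public API only."""

    def setUp(self):
        self.api = RentalApi()

    def test_client_rents_and_returns_a_cd(self):
        self.api.check_out("CD3", customer_id="007")
        self.assertTrue(self.api.is_rented("CD3"))
        self.api.check_in("CD3")
        self.assertFalse(self.api.is_rented("CD3"))

    def test_client_cannot_rent_a_cd_twice(self):
        self.api.check_out("CD3", customer_id="007")
        with self.assertRaises(ValueError):
            self.api.check_out("CD3", customer_id="008")


def run_mock_client_tests():
    """Run the suite and return True when every client-style test passes."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(MockClientTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Because the tests never reach into `_rented`, the implementation behind the API can change freely as long as the client-visible behavior stays the same.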

A few years ago I was managing a small group of very talented developers at a startup. We had an existing product that was fairly complicated, and we were adding a network API. As the effort began, the team bogged down almost immediately. The work that one person finished would be broken by a teammate or by the same developer! The work was not going well, and it appeared that we had a great deal of frustrating work ahead of us.

I looked for a solution to this problem. This was a while before the current test automation movement, but that’s essentially what we hit upon. I asked the team to create a series of tests, one for each API, and then implement the feature.

Every person on the team disagreed with me. They didn’t like this new direction. One of the developers said, “We don’t have the cycles to write tests, then write code as well! If you insist, I’ll do it, but I want you to know how I feel. This is going to slow us down even more.”

I insisted, and [the] team moved forward. It’s worth noting that the team was already using a continuous integration system, but only for compiles, not testing.

Resistance was high during the first week, but I kept asking about the tests and checked to make sure they were being checked in. The second week, it was grudgingly conceded that the tests weren’t a waste of time, but it was still just breaking even when you considered how much time it took to write them. But week three was the turning point.

By week three, the team had finally written enough tests that they could create them rapidly and generated enough tests that they started to see the benefit. They’d touch a piece of code, a test for a completely different API would break, and they’d fix it. The long hours in the debugger evaporated. When a problem occurred, the test found it within minutes instead of days later.

In my mind, the effort had truly succeeded when my skeptical co-worker came to me and said, “Jared, I was completely wrong. I had no idea how much time this would save us. It keeps us on track, catches problems when they occur, and now that we’ve had some practice, it doesn’t take much time to add to the test suite.”

Whether you call it acceptance test-driven development or mock client testing, create the tests that drive your product. Keep your team focused on building what you need, and catch the problems as quickly as they occur.

Jared Richardson

http://agileartisans.com/main

Getting Business Rules Right

Scenarios for testing

Years ago, I delivered software for primary care to the National Health Service (NHS, the UK’s public health provider). Every year we received new requirements that we had to meet to get our software accredited as providing the functionality someone in primary care needed. We received these in two packages. One was a conventional list of requirements; the other was a test pack. The test pack had a set of scenarios (some end to end, some covering a specific feature) that needed to pass before you could get accreditation. Early on, we learned that the test pack was the best source of information on what we needed to build to be “done.” Requirements are vague and open to interpretation. Scenarios are concrete, specific, and rarely open to interpretation. Ever get that moment where you built something and then had an argument with the person who wrote the requirement because [he] just told you that your implementation is nothing like [his] requirements? Ever had [him] point to some sentence that you overlooked that changes the whole perception of how you deliver the story? Scenarios avoid that problem because they call attention to the correct behavior of the software.

So the ideal is to capture the scenarios that the software should support. Those give you the conditions for accepting the story as done. These are the acceptance tests. Now the composition of your team will dictate to some extent who is responsible for the parts of this process. In most cases, I have found it unlikely that the users of your software will be able to express the scenarios, though sometimes you might get acceptance criteria from them. In our case we have domain experts who act as proxies for real customers, so we ask them to define acceptance criteria, usually just a list of things that a successful implementation will represent.

Interestingly, we occasionally fall down on getting scenarios written and end up developing off the acceptance criteria. The result is all too predictable. What gets developed is not quite right, or not quite what was intended, and we end up with rework. We certainly need to get better at this discipline. A root cause analysis tends to suggest that what fails for us here is the communication about what we should build. The storytest-first approach forces the correct behavior of understanding the story before we build it.

Acceptance tests and unit tests

There is definitely some pressure here, with folks occasionally feeling that we are “testing twice”: once in the acceptance test and once in the unit test. Most developers would prefer just to write the unit test and not have to implement the acceptance test. I believe that acceptance testing comes into its own for ensuring we have developed the right work and during large-scale refactoring.

However, I think this feeling—that the acceptance tests are not adding value—occurs most often when you slide into post-implementation FitNesse testing because your scenarios were not ready. A lot of the value-add comes from defining and understanding the scenario up front. Much of the value in acceptance testing comes from agreeing what “done” means. That is often less clear than people think, and defining a test usually reveals a host of disagreements and tacit assumptions.

It is hard to write unit tests as part of the definition of the story. You don’t necessarily know how you will build the story at planning or definition. The classes that implement the functionality probably don’t exist, and you cannot exercise them from a test fixture at that point. Tools like Cucumber and FitNesse decouple authoring a test from implementing the test fixture for that test. This enables you to author the scenario in your test automation tool before you begin implementing the fixture that exercises the system under test (SUT). This is the key to enabling a test-first approach, where you need to communicate the scenario for confirmation prior to commencing work, for example at planning. Tools like FitNesse and Cucumber provide an easy mechanism to communicate your scenarios. We find that FitNesse allows customers and developers to communicate about the scenario before implementation. You can also see the results of executing it.
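The decoupling described above can be sketched in a few lines. This is an illustrative toy runner, not the API of Cucumber or FitNesse: the `step` decorator, `run_scenario`, the scenario wording, and the dictionary standing in for the system under test are all assumptions made for the example. The point it shows is that the scenario text can be authored and run before any fixture exists, and reports its lines as undefined until the fixtures are written.

```python
import re

# The scenario is authored first, as plain text, before any fixture code exists.
SCENARIO = """\
Given CD3 is not rented
When customer 007 rents CD3
Then CD3 is rented
"""

STEPS = []  # (compiled pattern, function) pairs, filled in later by fixture authors


def step(pattern):
    """Register a fixture function for scenario lines matching `pattern`."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def run_scenario(text, context):
    """Run each line; report 'undefined' for lines that have no fixture yet."""
    results = []
    for line in text.strip().splitlines():
        body = line.split(" ", 1)[1]  # drop the Given/When/Then keyword
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                try:
                    func(context, *match.groups())
                    results.append((line, "passed"))
                except AssertionError:
                    results.append((line, "failed"))
                break
        else:
            results.append((line, "undefined"))
    return results


# Before any fixture is written, the authored scenario can already be run.
before = run_scenario(SCENARIO, {"rented": set()})


# Later, fixtures are added; a set stands in for the real system under test.
@step(r"(\w+) is not rented")
def cd_is_not_rented(context, cd_id):
    context["rented"].discard(cd_id)


@step(r"customer (\w+) rents (\w+)")
def customer_rents(context, customer_id, cd_id):
    context["rented"].add(cd_id)


@step(r"(\w+) is rented")
def cd_is_rented(context, cd_id):
    assert cd_id in context["rented"]


after = run_scenario(SCENARIO, {"rented": set()})
```

Here `before` marks every line as undefined and `after` marks every line as passed, while the scenario text itself is unchanged between the two runs.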

Because the conversation is more important than the tool, a tool like FitNesse or Cucumber may not be appropriate for the acceptance test. The most likely case is that you do not have any business rules to put under test. Don’t feel constrained to use a particular tool. The most important thing is to define the acceptance test and then decide what tool is appropriate to automate the test. We even have some cases where the value of automation is too low. Where we are configuring the system, for example, it may be easier to test the rules that work with the configured system than test the act of configuring the system itself.

No user interface

One obvious point here is that no user interface has been defined as yet. The corresponding problem is that testing through the UI depends on the UI being completed before tests can be defined. However, it can be worth thinking about mocking up the UI at the same time as you write the scenarios, using a tool like Balsamiq.

We had a struggle at the beginning of the project over responsibility for defining how this should look before realizing that it needed to be cooperative. In the end, it all comes back to the agile emphasis on communication. The customer asks for features, but the developers need to negotiate how the features will be implemented.

Some folks still like to work from the UI down, whereas I want them to work from the acceptance tests on down, then hook up to the UI. It’s probably all tilting at windmills at some point, but for me while both are essential to getting it right, I see more cost in the majority of cases to the rework for not getting the business rule right than to changing the UI.

Ian Cooper

Codebetter.com

Game Changing

I am an in-house software development consultant at Progressive Insurance and a proponent of ATDD. Here’s my story....

In 2006, I started an assignment with a group that published service APIs to other parts of our company for the purpose of retrieving data from external vendors. At the time, they were testing most of their services manually, using the GUIs of the calling applications. Their testing was dependent upon the availability of both their own and their clients’ test environments, and disruptions were common. Additionally, their service request and response schemata consisted of thousands of fields, but only dozens of those fields were exposed directly in the client GUIs. The rest were calculated, defaulted, or simply ignored. Not surprisingly, the test team felt squeezed by schedule pressure and quality problems.

Their management decided that they were simply out-gunned by the technical challenge before them, so they recruited some programmers with testing skills (and vice versa) to join the team. That’s where I and a couple of other test engineers came onto the scene. One of the first things we did was raise awareness about the low level of coverage for these relatively complex interfaces. (This was somewhat disconcerting for veteran team members, and it is a tribute to the maturity of all involved that these conversations were rarely contentious.) We also began surveying other test teams within our company to see if there were any tools already in-house that we could use to circumvent the client GUIs and go directly at our service interfaces. We discovered a group that was using Fit for a similar purpose, and it was love at first sight.

We copied their implementation (Fit and OpenWiki with some customizations) to our environment, and within days we were creating and executing tests for some of our larger projects. Within a few weeks we had these tools well integrated into our infrastructure and processes. Tests were now being defined during or shortly after requirements definition, frequently serving to clarify requirements, but we didn’t know then to call it ATDD. Soon, developers were asking for our tests to run before check-in and were helping with fixture design and development.

The number of tests for our systems typically increased five-fold as we introduced our implementation of automated ATDD, and we moved from executing a handful of test passes per project to a handful of passes per day. Defects discovered in our QA environment dropped dramatically because we were running the tests in predecessor environments; the tests became informal entry criteria. Test projects were costing about the same and taking about the same amount of time, but quality was increasing significantly. In fact, the team’s quality ranking within the company, based on production availability of our systems, improved from worst to first in about a two-year period.

In addition to the quality improvements, we gained a great deal of confidence in our ability to refactor our systems and move them through environments because coverage had increased substantially and test execution had become relatively effortless. Furthermore, the clarity, usability, and credibility of the tests led to more collaborative test failure investigations. It was not uncommon to see developers, testers, and business analysts huddled around a screen or camped in a conference room discussing the significance of patterns of red cells on a test result table, discovering and resolving issues in minutes where formerly it had taken hours or days of asynchronous communication. While there are many other ways that we have continued to improve our testing, nothing has been as “game changing” as our move to automated ATDD with Fit.

Greg McNelly

Progressive Insurance

Tighter Cross-Functional Team Integration – Crisp Visible Story Completion Criteria – Automation Yields Reduced Testing Time

I am an engineering manager for a major publishing company. The adoption of Story Acceptance Testing with the FitNesse framework has resulted in tighter cross-functional integration between development and QA team members. Acceptance tests are written on a per-story basis at the start of the sprint. The tests and the results are rendered visually in an easy-to-understand table format; they are even comprehensible to nontechnical business stakeholders. Because the application under development is business logic intensive and has a minimal UI, the demo at the sprint review meeting consists primarily of running and viewing the test results.

On previous projects, the functional testing efforts were entirely manual and very time consuming. To ensure a stable release, it was necessary to implement a feature freeze 2+ months in advance of the release date. With the manual approach to testing, the sprint would frequently end with untested stories. Adoption of the FitNesse framework provides nearly instantaneous feedback regarding the completion status of each story.

Based on the rapid ROI of the FitNesse adoption for this project, all subsequent new projects will adopt a test automation strategy. Currently, QA is writing the tests. Going forward, I anticipate that a broader audience (business analysts and Product Owners) will contribute to test development.

Gary Marcos

http://www.linkedin.com/in/agile1

What Is Your Story?

Share with others how ATDD improved your organization. Send your story to [email protected] or visit http://atdd.biz.

Summary

• The Who, What, When, Where, Why, and How have been explained.

• The experiences and benefits have been documented.

• What are you waiting for? Why not try it?