Chapter 3. Testing Strategy

“How do I test thee? Let me count the ways.”

Elizabeth Barrett Browning (altered)

This chapter explains the different types of testing that occur during development to give the context in which acceptance tests are developed. The tests that the customer provides are only one part of the testing process.

Types of Tests

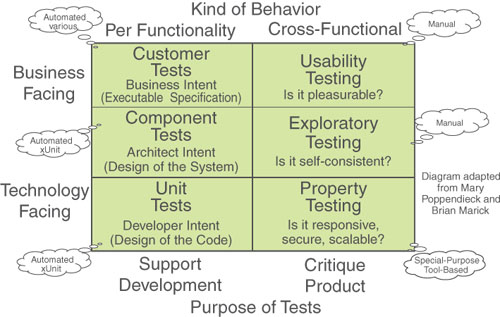

Acceptance tests are one part of the testing strategy for a program. The easiest way to describe the full set of tests for an application is to use the testing matrix from Gerard Meszaros [Meszaros01]. The matrix in Figure 3.1 shows how acceptance tests fit into the overall picture.

Figure 3.1. The Testing Matrix

(Source: Meszaros, xUnit Test Patterns: Refactoring Test Code, Fig 6.1 “Purpose of Tests” p. 51, © 2007 Pearson Education, Inc. Reproduced by permission of Pearson Education, Inc.)

Customer tests encompass the business-facing functional tests that ensure the product is acceptable to the customer. These functional tests are the acceptance tests described in this book. The result of almost every acceptance test can be expressed in yes-or-no terms. Examples are, “When a customer places an order of $100, does the system give a 5% discount?” and, “Is the Edit button disabled if the account is inactive?”
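The two example questions can be turned into executable checks that answer yes (pass) or no (fail). The following is a minimal sketch, not the implementation used in this book: the names `discount_for` and `edit_button_enabled`, and the assumption that the 5% discount applies to any order of $100 or more, are hypothetical and exist only to make the questions runnable.

```python
from decimal import Decimal

# Assumed business rule (hypothetical): orders of $100 or more earn 5%.
DISCOUNT_THRESHOLD = Decimal("100.00")
DISCOUNT_RATE = Decimal("0.05")


def discount_for(order_total: Decimal) -> Decimal:
    """Return the discount amount for an order total."""
    if order_total >= DISCOUNT_THRESHOLD:
        return order_total * DISCOUNT_RATE
    return Decimal("0.00")


def edit_button_enabled(account_active: bool) -> bool:
    """Hypothetical UI rule: the Edit button follows the account state."""
    return account_active


def test_order_of_100_gets_5_percent_discount():
    # "When a customer places an order of $100, does the system
    # give a 5% discount?"  Pass means yes.
    assert discount_for(Decimal("100.00")) == Decimal("5.00")


def test_edit_button_disabled_for_inactive_account():
    # "Is the Edit button disabled if the account is inactive?"
    assert edit_button_enabled(account_active=False) is False


if __name__ == "__main__":
    test_order_of_100_gets_5_percent_discount()
    test_edit_button_disabled_for_inactive_account()
    print("all acceptance checks answered yes")
```

Each test has exactly one possible outcome per run, which is what makes the yes-or-no form useful: there is no room for interpretation about whether the requirement is met.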

As shown in the lower right of the matrix, there are other requirements for a software system that are checked by the property tests. These include nonfunctional requirements (often called the “ilities” or quality attributes) such as scalability, reliability, security, and performance. Some of the tests for these requirements can be expressed as questions with yes-or-no answers. For example, “If there are 100 users on the system and they are placing orders at the same time, does the system respond to each one of them in less than 5 seconds?” However, for other quality attributes, the question can be asked, but the answer is unknowable, such as, “Is the system secure from all threats?” For the user to accept the system, the system needs to pass these nonfunctional tests. So the property tests are sometimes referred to as nonfunctional acceptance tests.
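The 100-user question can be approximated in code. The sketch below is only an illustration under stated assumptions: `place_order` is a hypothetical stand-in that sleeps briefly instead of calling a real system, and threads on one machine are a rough substitute for real concurrent users. A real performance test would drive the deployed application.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def place_order(user_id: int) -> str:
    """Hypothetical stand-in for the real order-placing call."""
    time.sleep(0.01)  # simulated processing time
    return f"order-confirmed-{user_id}"


def timed_order(user_id: int) -> float:
    """Place one order and return the elapsed time in seconds."""
    start = time.perf_counter()
    place_order(user_id)
    return time.perf_counter() - start


def all_responses_within(limit_seconds: float, users: int = 100) -> bool:
    """Run `users` orders concurrently; True if every one beat the limit."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        durations = list(pool.map(timed_order, range(users)))
    return max(durations) < limit_seconds


if __name__ == "__main__":
    print("every response under 5 seconds:", all_responses_within(5.0))
```

The check is on the slowest response rather than the average, because the question asks whether the system responds to each user in less than 5 seconds.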

Usability tests are in a separate category. You might create some factual tests, such as, “Given a certain level of user, can he pay for an order in less than 30 seconds?” or, “Given 100 users ranking the system usability on a scale from 1 to 10, is the average greater than 8?” But often, usability is more subjective: “Does this screen feel right to me?” or, “Does this workflow match the way I do things?” Usability testing is strictly manual. No robot program can measure the usability of a system. Often the customer is of the mind, “I’m not sure what I’d like, but I’ll know it when I see it.” It’s difficult to write a test for that [Constantine01], [Nielsen01], [Aston01].

Exploratory tests are tests whose flow is not described in advance [Pettichord01]. An exploratory tester does parallel test design, execution, result interpretation, and learning. Exploratory testing may disclose defects undiscovered by other forms of testing [Whittaker01], [Bach01].

The term has also been applied to a situation in which all team members—Tom, Cathy, and Debbie—take on the persona of a user and go through the system based on the needs and abilities of that user. Because a system has to be working to be explored, these tests cannot be created up front. But they can be performed whenever the program is in a working condition.

Unit tests are created by Debbie and other developers in conjunction with writing code. They aid in creating a design that is testable, a measure of high technical quality. Unit tests also serve as documentation for the way the internal code works.

Component tests verify that units and combinations of units work together to perform the desired operations. As we will see later, many of the unit and component tests are derived from the acceptance tests [Wiki03].

All types of testing are important to ensure delivery of a quality product. This book discusses mainly acceptance tests: the functional tests that involve collaboration between the business customer, the developer, and the tester.

Where Tests Run

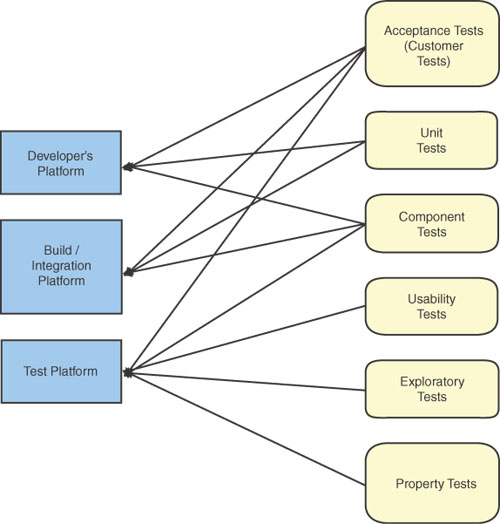

Acceptance tests, as defined in this book, can be run on multiple platforms at multiple times. An example of some of the platforms is shown in Figure 3.2. Debbie runs unit tests on her machine. She can also run many acceptance tests, particularly if they don’t require external resources. For example, any business rule test can usually run on her machine. In some instances, she may create test doubles for external resources to avoid having tests depend on them. The topic of test doubles will be covered in Chapter 11, “System Boundary.”

Figure 3.2. Where Tests Run
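A test double for an external resource, as mentioned above, might look like the following. This is a sketch under assumptions rather than the approach of Chapter 11: the credit-check service, the `CreditService` interface, and the `accept_order` rule are all hypothetical, invented to show how a business rule can be tested on a developer machine without the real resource.

```python
from typing import Protocol


class CreditService(Protocol):
    """Interface to an external resource (hypothetical)."""

    def is_approved(self, customer_id: str, amount: float) -> bool: ...


class StubCreditService:
    """Test double: gives a canned answer instead of a remote call."""

    def __init__(self, approved: bool) -> None:
        self.approved = approved
        self.calls: list[tuple[str, float]] = []

    def is_approved(self, customer_id: str, amount: float) -> bool:
        self.calls.append((customer_id, amount))  # record for inspection
        return self.approved


def accept_order(customer_id: str, amount: float, credit: CreditService) -> str:
    """Business rule under test; depends only on the interface."""
    if amount <= 0:
        return "rejected: invalid amount"
    if not credit.is_approved(customer_id, amount):
        return "rejected: credit declined"
    return "accepted"


if __name__ == "__main__":
    print(accept_order("c1", 50.0, StubCreditService(approved=True)))
    print(accept_order("c1", 50.0, StubCreditService(approved=False)))
```

Because `accept_order` receives the service as a parameter, the same test can later run on the test platform with the real resource substituted for the stub.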

On a larger project, Debbie and the other developers would merge their code to a build or integration platform. The unit tests of all the developers would be run on this platform to make sure that the changes one developer makes do not break the changes that other developers make. The acceptance tests would be run on this platform if the external resources or their test doubles are available. In Sam’s project, Debbie’s machine acts as both the developer platform and the integration platform because there are no other developers on the project.

Once all tests pass on the build/integration platform, the application is deployed to the test platform. On this platform, the full external resources, such as a working database, are available. All types of tests can be run here. But often the unit tests are not run, particularly if the application is deployed as a whole and not rebuilt for the test platform.

Cathy, the customer, and other users try out the user interface to see how well it works. Tom can do some exploratory testing. If this were a system that required it, the security testers and the performance testers could have their first go at the application.

Once the customer is satisfied with the outcome of all tests, the application is deployed to the production platform. There is still a possibility that bugs may show up. Users may do entirely unexpected things, or there may be some configuration that causes problems for the application. One measure of quality that Debbie and Tom use is the number of bugs found in production. These are called escaped bugs because they escaped discovery by all other testing.

Test Facets

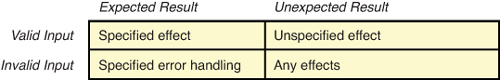

Figure 3.3 shows examples of positive and negative testing. Positive tests ensure that the program works as expected. Negative tests check that the program does not create unexpected results. Acceptance tests that the customer thinks about are mostly in the “Specified Effect” box. The ones that Tom and Debbie come up with are in the other three boxes. Tom, as the tester, has a particular focus on finding unexpected results.

Figure 3.3. Positive and Negative Testing
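The difference between a positive and a negative test can be seen in a small example. The sketch below assumes a hypothetical `rename_customer` operation on an in-memory customer table; neither the function nor the data comes from the system in this book. The positive test checks that the requested change happens. The negative tests check that nothing else happens.

```python
def rename_customer(
    customers: dict[str, str], customer_id: str, new_name: str
) -> dict[str, str]:
    """Return a copy of the table with one customer renamed (hypothetical)."""
    if customer_id not in customers:
        raise KeyError(customer_id)
    updated = dict(customers)
    updated[customer_id] = new_name
    return updated


def test_positive_name_is_changed():
    customers = {"c1": "Ann", "c2": "Bob"}
    result = rename_customer(customers, "c1", "Anne")
    assert result["c1"] == "Anne"


def test_negative_other_records_untouched():
    customers = {"c1": "Ann", "c2": "Bob"}
    result = rename_customer(customers, "c1", "Anne")
    assert result["c2"] == "Bob"  # other customer unchanged
    assert set(result) == {"c1", "c2"}  # no records added or lost
    assert customers == {"c1": "Ann", "c2": "Bob"}  # input not mutated


def test_negative_unknown_customer_creates_nothing():
    customers = {"c1": "Ann"}
    try:
        rename_customer(customers, "c9", "Zed")
    except KeyError:
        pass
    else:
        raise AssertionError("expected KeyError for unknown customer")
    assert customers == {"c1": "Ann"}


if __name__ == "__main__":
    test_positive_name_is_changed()
    test_negative_other_records_untouched()
    test_negative_unknown_customer_creates_nothing()
    print("positive and negative tests passed")
```

The customer is likely to think of the first test. The other two are the kind a tester or developer tends to add.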

Control and Observation Points

Tests are often run from the external view of the system. Bret Pettichord talks about control points and observation points [Pettichord01]. A control point is the part of the system where the tester inputs values or commands to the system. The observation point is where the system response is checked to see that it is performing properly. Often the control point is the user interface and the output is observed on the user interface, a printed report, or a connection to an external platform. As seen in the next chapter, it is often easier to run many tests if you have control and observation points within the system.
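The choice of control and observation points can be illustrated with a two-layer sketch. Everything here is assumed for illustration: `shipping_cost` stands for a business rule inside the system, `render_shipping_page` stands for a user-interface layer, and the rates are invented. The same rule is tested once through the user interface and once from within the system.

```python
def shipping_cost(weight_kg: float) -> float:
    """Business rule inside the system (hypothetical rates)."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return 5.00 + 2.00 * weight_kg


def render_shipping_page(weight_field: str) -> str:
    """User-interface layer: parses typed text and formats the reply."""
    try:
        cost = shipping_cost(float(weight_field))
    except ValueError:
        return "Error: enter a positive weight"
    return f"Shipping: ${cost:.2f}"


def test_through_user_interface():
    # Control point: text typed into the form field.
    # Observation point: text shown on the page.
    assert render_shipping_page("3") == "Shipping: $11.00"


def test_within_the_system():
    # Control point: the argument passed to the business rule.
    # Observation point: the value the rule returns.
    assert shipping_cost(3) == 11.00


if __name__ == "__main__":
    test_through_user_interface()
    test_within_the_system()
    print("both control/observation pairs checked")
```

The internal test does not depend on parsing or display formatting, so many input values can be checked without changing the test whenever the page layout changes.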

New Test Is a New Requirement

Requirements and tests are linked. You can’t have one without the other. They are like Abbott and Costello, Calvin and Hobbes, nuts and bolts, or another favorite duo. The tests clarify and amplify the requirements [Melnik01]. A test that fails shows that the system does not properly implement a requirement. A test that passes is a specification of how the system works. Any test created after the code is written is a new requirement or a new detail on an existing requirement.

If Cathy comes across a new detail after Debbie has implemented the code, the triad needs to create a new test for that detail. For example, suppose there is an input field that doesn’t have limits on what can go in it. Then Cathy realizes that there needs to be a limit. The triad would create tests to ensure that the limit is checked on input. The requirement that there is a limit and the tests that ensure it is checked are linked. Because the tests did not exist before Debbie finished the code, it is not a developer bug that the limit was not checked. It is just a new requirement that needs to be implemented. Some people might call it an analysis bug or a missed requirement. Or you can simply say, “You can’t think of everything” and call it a new requirement.
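The input-limit example might lead to tests like the ones below. This is a sketch under assumptions: the field is taken to be a customer name, the limit of 40 characters is invented, and `validate_name` is a hypothetical function. The constant and the tests together record the new requirement.

```python
# New requirement (assumed value): a name may be at most 40 characters.
MAX_NAME_LENGTH = 40


def validate_name(value: str) -> bool:
    """Accept a non-empty name no longer than the limit (hypothetical)."""
    return 0 < len(value) <= MAX_NAME_LENGTH


def test_name_at_limit_is_accepted():
    assert validate_name("x" * MAX_NAME_LENGTH) is True


def test_name_over_limit_is_rejected():
    assert validate_name("x" * (MAX_NAME_LENGTH + 1)) is False


def test_empty_name_is_rejected():
    assert validate_name("") is False


if __name__ == "__main__":
    test_name_at_limit_is_accepted()
    test_name_over_limit_is_rejected()
    test_empty_name_is_rejected()
    print("limit requirement is covered by tests")
```

Before the limit existed, the second test would have failed against the original code. That failure would not indicate a developer bug; it would mark the point at which the requirement changed.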

Summary

• Testing areas include

  • Acceptance tests that are business-facing functionality tests

  • Tests that check component and module functionality

  • Unit tests that developers use to drive the design

  • Usability, exploratory, and quality attribute (reliability, scalability, performance) tests

• Functionality tests should be run frequently on developer, build/integration, and test platforms.

• Tests and requirements are linked together.