IBM System Storage DS8000

This chapter addresses specific considerations for using the IBM System Storage DS8000 as a storage system for IBM ProtecTIER servers.

This chapter describes the following topics:

•DS8000 series overview and suggested RAID levels

•General considerations for planning tools, metadata, user data, firmware levels, replication

|

Note: The DS8000 series models DS8100, DS8300, DS8700, and DS8800 (types 2107 and 242x, and models 931, 932, 9B2, 941, and 951) have been withdrawn from marketing. They have been replaced by the IBM System Storage DS8870 (type 242x model 961).

These predecessor products are all still supported as back-end storage for ProtecTIER, and are covered in this chapter.

For a list of supported disk storage systems, see the IBM System Storage Interoperation Center (SSIC) and ProtecTIER ISV Support Matrix. More details are in Appendix B, “ProtecTIER compatibility” on page 457.

|

11.1 DS8000 series overview

The DS8000 family is a high-performance, high-capacity, and resilient series of disk storage systems. It offers high availability (HA), multiplatform support, and simplified management tools to provide a cost-effective path to an on-demand configuration.

Table 11-1 describes the DS8000 products (DS8300, DS8700, DS8800, DS8870).

Table 11-1 DS8000 hardware comparison

| | DS8300 | DS8700 | DS8800 | DS8870 |
| --- | --- | --- | --- | --- |
| Processor | P5+ 2.2 GHz, 4-core | P6 4.7 GHz, 2 or 4-core | P6+ 5.0 GHz, 2 or 4-core | P7 4.228 GHz, 2, 4, 8, 16-core |
| Processor memory | 32 - 256 GB | 32 - 384 GB | 16 - 384 GB | 16 - 1024 GB |
| Drive count | 16 - 1024 | 16 - 1024 | 16 - 1536 | 16 - 1536 |
| Enterprise drive options | FC - 73, 146, 300, 450 GB | FC - 300, 450, 600 GB | SAS2 - 146, 300, 450, 600, 900 GB | SAS2 - 146, 300, 600, 900 GB |
| Solid-state drive (SSD) options | 73, 146 GB | 600 GB | 300, 400 GB | 200, 400, 800 GB; 1.6 TB |
| Nearline drive options | 1 TB | 2 TB | 3 TB | 4 TB |
| Drive enclosure | Megapack | Megapack | High-density, high-efficiency Gigapack | High-density, high-efficiency Gigapack |
| Max physical capacity | 1024 TB | 2048 TB | 2304 TB | 2304 TB |
| Power supply | Bulk | Bulk | Bulk | DC-UPS |
| Rack space for SSD Ultra Drawer | No | No | No | Yes |
| Redundant Array of Independent Disks (RAID) options | RAID 5, 6, 10 | RAID 5, 6, 10 | RAID 5, 6, 10 | RAID 5, 6, 10 |
| Internal fabric | Remote Input/Output (RIO)-G | Peripheral Component Interconnect Express (PCIe) | PCIe | PCIe |
| Maximum logical unit number (LUN)/count key data (CKD) volumes | 64,000 total | 64,000 total | 64,000 total | 65,280 total |
| Maximum LUN size | 2 TB | 16 TB | 16 TB | 16 TB |
| Host adapters | IBM ESCON x2 ports; 4 Gb FC x4 ports | 4 Gb FC x4 ports; 8 Gb FC x4 ports | 8 Gb FC x4 or x8 ports per adapter | 8 Gb FC x4 or x8 ports per adapter; 16 Gb FC x4 ports per adapter |
| Host adapter slots | 32 | 32 | 16 | 16 |
| Maximum host adapter ports | 128 | 128 | 128 | 128 |
| Drive interface | 2 Gbps FC-AL | 2 Gbps FC-AL | 6 Gbps SAS2 | 6 Gbps SAS2 |
| Device adapter slots | 16 | 16 | 16 | 16 |
| Cabinet design | Top exhaust | Top exhaust | Front-to-back | Front-to-back |

Table 11-2 compares key features of the DS8000 products.

Table 11-2 DS8000 key feature comparison

| | DS8300 | DS8700 | DS8800 | DS8870 |
| --- | --- | --- | --- | --- |
| Self-encrypting drives | Optional | Optional | Optional | Standard |
| Point-in-time copies | IBM FlashCopy®, FlashCopy SE | Same plus Spectrum Control Protect Snapshot, Remote Pair FlashCopy | Same | Same |
| Smart Drive Rebuild | No | Yes | Yes | Yes |
| Remote Mirroring | Advanced mirroring | Same plus Global Mirror Multi Session, Open IBM HyperSwap® for AIX | Same | Same |
| Automated drive tiering | No | Easy Tier Gen 1, 2, 3 | Easy Tier Gen 1, 2, 3, 4 | Same |
| Thin provisioning | Yes | Yes | Yes | Yes |
| Storage pool striping | Yes | Yes | Yes | Yes |
| I/O Priority Manager | No | Yes | Yes | Yes |
| Graphical user interface (GUI) | DS Storage Manager | Same plus enhancements | New XIV-like GUI | Same |
| Dynamic provisioning | Add/Delete | Same plus depopulate rank | Same | Same |

For more information about the DS8000 family products, see the following web page:

11.1.1 Disk drives

This section describes the available drives for the DS8000 products.

Solid-state drives (SSD)

SSDs are the best choice for I/O-intensive workloads. They provide up to 100 times the throughput and 10 times lower response time than 15,000 revolutions per minute (RPM) spinning disks. They also use less power than traditional spinning disks.

SAS and Fibre Channel disk drives

Serial-attached Small Computer System Interface (SAS) enterprise drives rotate at 15,000 or 10,000 RPM. If an application requires high performance data throughput and continuous, intensive I/O operations, enterprise drives are the best price-performance option.

Serial Advanced Technology Attachment (SATA) and NL-SAS

The 4 TB nearline SAS (NL-SAS) drives are both the largest and slowest of the drives available for the DS8000 family. Nearline drives are a cost-efficient storage option for lower intensity storage workloads, and have been available since the DS8800. Because of the lower usage and the potential for drive protection throttling, these drives are not the optimal choice for high performance or I/O-intensive applications.

11.1.2 RAID levels

The DS8000 series offers RAID 5, RAID 6, and RAID 10 levels. There are some limitations:

•RAID 10 for SSD is not standard and is only supported through a request for product quotation (RPQ).

•SSDs cannot be configured in RAID 6.

•Nearline disks cannot be configured in RAID 5 and RAID 10.

RAID 5

Normally, RAID 5 is used because it provides good performance for random and sequential workloads and it does not need much more storage for redundancy (one parity drive). The DS8000 series can detect sequential workload. When a complete stripe is in cache for destaging, the DS8000 series switches to a RAID 3-like algorithm. Because a complete stripe must be destaged, the old data and parity do not need to be read.

Instead, the new parity is calculated across the stripe, and the data and parity are destaged to disk. This action provides good sequential performance. A random write causes a cache hit, but the I/O is not complete until a copy of the write data is put in non-volatile storage (NVS). When data is destaged to disk, a write in RAID 5 causes four disk operations, the so-called write penalty:

•Old data and the old parity information must be read.

•New parity is calculated in the device adapter.

•Data and parity are written to disk.

Most of this activity is hidden from the server or host because the I/O is complete when data enters the cache and NVS.
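
The RAID 5 write penalty can be illustrated with a minimal Python sketch. It assumes plain XOR parity over byte strings, which is the textbook RAID 5 scheme; the function names are hypothetical and this is not the DS8000 device adapter implementation. The sketch shows that a full-stripe write needs no reads, whereas a random write of one strip needs the old data and old parity to derive the new parity.

```python
from __future__ import annotations
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strips."""
    return bytes(x ^ y for x, y in zip(a, b))

def full_stripe_parity(strips: list[bytes]) -> bytes:
    """Full-stripe write: parity is computed from the new data alone."""
    return reduce(xor_bytes, strips)

def small_write(old_data: bytes, old_parity: bytes, new_data: bytes) -> tuple[bytes, int]:
    """Random write of one strip: return the new parity and the disk operations used."""
    # Operations 1 and 2: old_data and old_parity were read from disk.
    new_parity = xor_bytes(xor_bytes(old_parity, old_data), new_data)
    # Operations 3 and 4: new_data and new_parity are written to disk.
    return new_parity, 4

# Hypothetical three-strip stripe, two bytes per strip.
stripe = [b"\x01\x02", b"\x10\x20", b"\x0f\xf0"]
parity = full_stripe_parity(stripe)

new_strip = b"\xaa\x55"
new_parity, disk_ops = small_write(stripe[1], parity, new_strip)
stripe[1] = new_strip
```

After the update, `new_parity` matches the parity that a full-stripe calculation over the modified stripe would produce, which is why the two reads are sufficient.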

RAID 6

RAID 6 is an option that increases data fault tolerance. It tolerates one more drive failure than RAID 5 by using a second independent distributed parity scheme (dual parity). RAID 6 provides read performance that is similar to RAID 5, but has a higher write penalty than RAID 5 because it must write a second parity stripe.

RAID 6 should be considered in situations where you would consider RAID 5, but there is a demand for increased reliability. RAID 6 is designed for protection during longer rebuild times on larger capacity drives to cope with the risk of having a second drive failure in a rank while the failed drive is being rebuilt. It has the following characteristics:

•Sequential read of about 99% x RAID 5 rate.

•Sequential write of about 65% x RAID 5 rate.

•Random 4 K 70% R/30% W IOPS of about 55% x RAID 5 rate.

•The performance is degraded with two failing disks.

|

Note: If enough disks are available and capacity is not an issue at an installation, then using a RAID 6 array for best possible protection of the data is always a better approach.

|

RAID 10

A workload that is dominated by random writes benefits from RAID 10. In this case, data is striped across several disks and concurrently mirrored to another set of disks. A write causes only two disk operations compared to the four operations of RAID 5. However, you need nearly twice as many disk drives for the same capacity compared to RAID 5.

Therefore, for twice the number of drives (and probably cost), you can perform four times more random writes, so considering the use of RAID 10 for high-performance random-write workloads is worthwhile.
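
The "four times" figure follows from simple arithmetic, sketched here in Python. The per-drive IOPS value is an assumed number for illustration only, not a DS8000 specification, and the model ignores cache effects and spares.

```python
def random_write_iops(drives: int, iops_per_drive: float, ops_per_write: int) -> float:
    """Host random writes per second that the back-end drives can absorb."""
    return drives * iops_per_drive / ops_per_write

IOPS_PER_DRIVE = 180.0  # assumption for illustration only

# RAID 5: four disk operations per random write.
raid5 = random_write_iops(drives=8, iops_per_drive=IOPS_PER_DRIVE, ops_per_write=4)

# RAID 10: twice the drives for the same capacity, two disk operations per write.
raid10 = random_write_iops(drives=16, iops_per_drive=IOPS_PER_DRIVE, ops_per_write=2)

ratio = raid10 / raid5
print(f"RAID 5: {raid5:.0f} writes/s, RAID 10: {raid10:.0f} writes/s, ratio {ratio:.0f}x")
```

Doubling the drive count and halving the operations per write each contribute a factor of two, so the ratio does not depend on the assumed per-drive figure.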

11.2 General considerations

This section describes general considerations for the ProtecTIER Capacity Planning tool and some guidelines for the usage and setup of metadata and user data in your DS8000. For an example of the ProtecTIER Capacity Planning tool, see 7.1, “Overview” on page 100.

11.2.1 Planning tools

An IBM System Client Technical Specialist (CTS) expert uses the ProtecTIER Capacity Planning tool to size the ProtecTIER repository metadata and user data. Capacity planning always differs because it depends heavily on your type of data and the expected deduplication ratio. The planning tool output includes detailed information about all volume sizes and capacities for your specific ProtecTIER installation. If you do not have this information, contact your IBM Sales Representative to get it.

|

Tip: When calculating the total amount of physical storage required to build the repository for ProtecTIER, always account for three factors:

•Factoring ratio (estimate by using the IBM ProtecTIER Performance Calculator)

•Throughput

•Size of the repository user data

The factoring ratio and the size of the repository directly impact the size of the metadata volumes: the larger these two values are, the larger the required metadata volumes are. The throughput directly impacts the number of metadata volumes: the higher the desired throughput, the more metadata volumes are required.

Consider the three factors for the initial installation, always accounting for the future growth of the business.

|

11.2.2 Metadata

Consider the following items about metadata:

•Use the ProtecTIER Capacity Planning tool and the Create repository planning wizard output to determine the metadata requirements for your environment.

•You must use RAID 10 for metadata. Use high-performance and high-reliability enterprise class disks for metadata RAID 10 arrays.

•When possible, do not use SATA disks, because RAID 10 is not supported by SATA disks and because ProtecTIER metadata has a heavily random read I/O characteristic. If you require a large physical repository and have only SATA drives available in the storage system, you must use the rotateexts feature for all LUNs to ensure the equal distribution of ProtecTIER workload across all available resources. For more information about the usage of the rotateexts feature, see 11.3, “Rotate extents: Striping and when to use it” on page 173.

11.2.3 User data

Consider the following items about user data:

•ProtecTIER is a random-read application. 80 - 90% of I/O in a typical ProtecTIER environment is random read. Implement suitable performance optimizations and tuning as suggested for this I/O profile.

•For SATA drives or large capacity disk drives, use RAID 6 with 6 + 2 disk members for increased availability and faster recovery from disk failure.

•Do not intermix arrays with different disk types in the metadata (MD) and the user data (UD) because smaller disk types hold back the performance of larger disk types and degrade the overall system throughput.

•For smaller capacity FC or SAS drives, use RAID 5 with at least five disk members per group.

•Create an even number of LUNs in each pool.

|

Important: With ProtecTIER and DS8000, create LUNs that are all the same size to avoid performance degradation.

Starting with ProtecTIER Version 3.2, the management of LUNs greater than 8 TB is improved. When ProtecTIER uses LUNs greater than 8 TB, it splits them into smaller logical volumes. Therefore, you can work with LUNs greater than 8 TB, but doing so brings no performance benefit.

Always use RAID 6 for SATA or NL-SAS drives for the user data LUNs. With NL-SAS drives, only RAID 6 is supported.

|

•Do not use thin provisioning with your ProtecTIER. Thin provisioning technology enables you to assign storage to a LUN on demand. The storage system can present a 10 TB LUN to a host, but allocate only 2 TB of physical storage at the beginning. As data is written to that LUN and the initial 2 TB are exceeded, the storage system assigns more physical storage to that LUN until the LUN size is reached.

When the ProtecTIER repository is built, ProtecTIER reserves all of the space in the user data file systems by writing zeros (padding) across the entire file system. This padding process defeats the purpose of thin provisioning.

11.2.4 Firmware levels

Ensure that you are using supported firmware levels. When possible, use the current supported level. For compatibility information, see the IBM SSIC and ProtecTIER ISV Support Matrix. More details are in Appendix B, “ProtecTIER compatibility” on page 457.

11.2.5 Replication

Do not use disk-based replication, because disk-based replication features are not supported by the ProtecTIER product. Rather than using the replication feature of the DS8000, use the ProtecTIER native replication. For more information about replication, see Part 4, “Replication and disaster recovery” on page 359.

11.3 Rotate extents: Striping and when to use it

This section describes the rotateexts feature, and when to use it, or not use it, in your ProtecTIER environment. The rotate extents (rotateexts) feature is also referred to as Storage Pool Striping (SPS). In addition to the rotate volumes extent allocation method, which remains the default, the rotate extents algorithm is an extra option of the mkfbvol command.

The rotate extents algorithm evenly distributes the extents of a single volume across all the ranks in a multirank extent pool. This algorithm provides the maximum granularity that is available on the DS8000 (that is, on the extent level that is equal to 1 GB for fixed-block architecture (FB) volumes), spreading each single volume across multiple ranks, and evenly balancing the workload in an extent pool.
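
The effect of rotate extents can be sketched as a round-robin placement in Python. This is a simplified model under stated assumptions: the rank IDs are hypothetical, all ranks are taken to have enough free extents, and the actual DS8000 allocation logic handles cases that are not modeled here.

```python
from __future__ import annotations
from collections import Counter

def rotate_extents(volume_extents: int, ranks: list[str]) -> Counter:
    """Spread a volume across all ranks, one 1 GB extent at a time."""
    placement: Counter = Counter()
    for extent in range(volume_extents):
        placement[ranks[extent % len(ranks)]] += 1
    return placement

def single_rank(volume_extents: int, rank: str) -> Counter:
    """Contrast case: every extent of the volume lands on one rank."""
    return Counter({rank: volume_extents})

ranks = ["R0", "R1", "R2", "R3"]        # hypothetical rank IDs in one extent pool
striped = rotate_extents(1000, ranks)   # 1000 extents, roughly a 1000 GB FB volume
unstriped = single_rank(1000, "R0")

print("striped:  ", dict(striped))
print("unstriped:", dict(unstriped))
```

In the striped case each rank holds an equal share of the volume, so its I/O is served by the drives of all four ranks instead of one.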

Depending on the type and size of disks that you use in your DS8000 server, and your planned array size to create your ProtecTIER repository, you can consider using rotateexts. Because the ProtecTIER product already does a good job at equally distributing the load to the back-end disks, there are some potential scenarios where you should not use rotateexts (as described in 11.3.1, “When not to use rotate extents” on page 174).

|

Important: For ProtecTIER performance, the most critical item is the number of spinning disks in the back end. The spindle count has a direct effect on the ProtecTIER performance. Sharing disk arrays between ProtecTIER and some other workload is not recommended. This situation directly impacts your ability to reach your wanted performance. Because you do not share disks between ProtecTIER and other workloads, assigning the full array capacity to the ProtecTIER server is suggested.

|

With these considerations, you can easily decide when to use rotateexts and when not to use it. In the DS8000, the following array types should be used with ProtecTIER, taking the hot spare (S) requirements into account:

•4+4 RAID 10 or 3+3+2S RAID 10

•7+1 RAID 5 or 6+1+S RAID 5

•6+2 RAID 6 or 5+2+S RAID 6

|

Tip: The DS8000 server creates four spares per device adapter pair. If a spare is required when you create your RAID 10 arrays, the result is 3+3+2S RAID 10 arrays. Redesign your layout so that all metadata arrays are 4+4 RAID 10 arrays only. Do not create 3+3+2S RAID 10 arrays for DS8000 repositories.

|

If you use 3 TB SATA disks to create your arrays, you can have these array dimensions:

•Creating a 6+2 RAID 6 with a 3 TB disk results in a potential LUN size of 18 TB.

•Creating a 5+1+S RAID 5 with a 3 TB disk results in a potential LUN size of 15 TB.

In this case, use rotateexts to equally distribute the ProtecTIER load to the DS8000 across all available resources. The rotate extents feature helps you create smaller LUNs when ProtecTIER code older than Version 3.2 is installed.
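
The potential LUN sizes quoted above are the number of data drives multiplied by the drive capacity. A minimal Python sketch follows. It assumes that the first term of the array notation is the data drive count, and it ignores formatting overhead and the difference between decimal and binary units; the function names are hypothetical.

```python
def data_drives(array_layout: str) -> int:
    """Return the data drive count, the first term of a layout such as '6+2' or '5+1+S'."""
    return int(array_layout.split("+")[0])

def potential_lun_size_tb(array_layout: str, drive_tb: int) -> int:
    """Raw capacity of the data drives in the array, in TB."""
    return data_drives(array_layout) * drive_tb

raid6_tb = potential_lun_size_tb("6+2", 3)     # six data drives, two parity drives
raid5_tb = potential_lun_size_tb("5+1+S", 3)   # five data drives, one parity, one spare

print(f"6+2 RAID 6:   {raid6_tb} TB")
print(f"5+1+S RAID 5: {raid5_tb} TB")
```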

|

Important: ProtecTIER metadata that is on the RAID 10 arrays has a heavily random write I/O characteristic. ProtecTIER user data that is on RAID 5 or RAID 6 arrays has a heavily random read I/O characteristic. You should use high-performance and high-reliability enterprise-class disk for your metadata RAID 10 arrays.

|

11.3.1 When not to use rotate extents

Rotate extents (rotateexts) is a useful DS8000 feature that can be used to achieve great flexibility and performance with minimal effort. However, ProtecTIER has special requirements, and using the rotateexts feature does not always make sense with it.

The ProtecTIER product does a great job of equally distributing its load to its back-end disks and directly benefits all available resources, even without rotateexts. The typical ProtecTIER write pattern does not create hot spots on the back-end disk, so rotateexts does not contribute to better I/O performance.

If the repository needs to be grown, the addition of more disks to already existing extent pools, or the addition of another extent pool with all new disks, creates storage that has different performance capabilities than the already existing ones. Adding dedicated arrays with their specific performance characteristics enables the ProtecTIER server to equally distribute all data across all LUNs. So, all back-end LUNs have the same performance characteristics and therefore behave as expected.

Consider the following example. You want to use 300 GB 15,000 RPM FC drives for metadata and user data in your DS8000. To reach the wanted performance, you need four 4+4 RAID 10 arrays for metadata. Because you use FC drives, go with RAID 5 arrays and configure all user data file systems with 6+1+S RAID 5 or 7+1 RAID 5. With this approach, you do not create RAID 10 arrays with ranks that have a hot spare requirement.

As shown in Figure 11-1, the following example needs some work in order to be aligned with preferred practices.

Figure 11-1 DS8000 layout example with bad RAID 10 arrays

Figure 11-2 shows the dscli output of the lsextpool command and the names that are assigned to extent pools.

Figure 11-2 lsextpool output

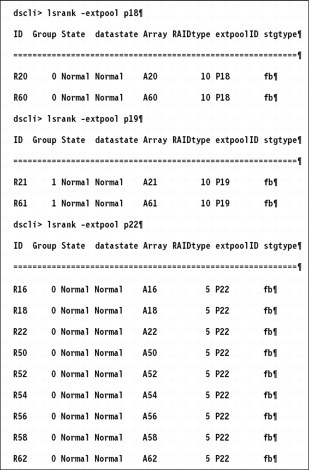

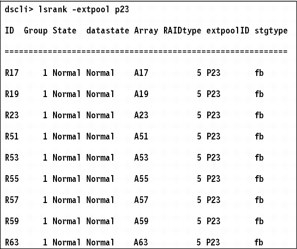

Look more closely at the extpools p18, p19, and p22 in Figure 11-3, and extent pool p23 in Figure 11-4 on page 176.

Figure 11-3 Extent pool attributes for p18, p19, and p22

Figure 11-4 Extent pool attributes for p23

Align the respective ranks to dedicated DS8000 cluster nodes by grouping odd and even numbers of resources together in extent pools.

To ensure that you do not use rotateexts and that specific repository LUNs stay on dedicated 4+4 arrays, use the chrank command to reserve ranks and make them unavailable during fixed block volume creation by completing the following steps:

1. Reserve the rank 61 in extent pool p19 to make it unavailable during volume creation (Example 11-1).

Example 11-1 Reserve rank r61 with extent pool p19

dscli> chrank -reserve r61

CMUC00008I chrank: Rank R61 successfully modified.

2. Verify that rank R61 is now in the Reserved state by running the lsrank command (Example 11-2).

Example 11-2 lsrank command

dscli> lsrank -l

ID Group State datastate Array RAIDtype extpoolID extpoolnam stgtype exts usedexts

=======================================================================================

R21 1 Normal Normal A21 10 P19 TS_RAID10_1 fb 1054 0

R61 1 Reserved Normal A61 10 P19 TS_RAID10_1 fb 1054 0

3. After verification, create your first of two metadata LUNs in this extent pool (Example 11-3).

Example 11-3 Create the first of two metadata LUNs

dscli> mkfbvol -extpool P19 -cap 1054 -name ProtMETA_#d -volgrp V2 1900

CMUC00025I mkfbvol: FB volume 1900 successfully created.

4. After volume creation, verify that the allocated 1054 extents for the newly created fixed block volume 1900 are all placed into rank R21 (Example 11-4).

Example 11-4 Verify allocated extents for new volume in rank r21

dscli> lsrank -l

ID Group State datastate Array RAIDtype extpoolID extpoolnam stgtype exts usedexts

=======================================================================================

R21 1 Normal Normal A21 10 P19 TS_RAID10_1 fb 1054 1054

R61 1 Reserved Normal A61 10 P19 TS_RAID10_1 fb 1054 0

5. Now, you can release the second rank in your extent pool to enable volume creation on it (Example 11-5).

Example 11-5 Release rank r61

dscli> chrank -release r61

CMUC00008I chrank: Rank R61 successfully modified.

6. Create the fixed block volume that is used as the metadata LUN (Example 11-6).

Example 11-6 Create metadata LUN

dscli> mkfbvol -extpool P19 -cap 1054 -name ProtMETA_#d -volgrp V2 1901

CMUC00025I mkfbvol: FB volume 1901 successfully created.

7. After volume creation, verify that the newly allocated extents are all placed in the second rank R61 (Example 11-7).

Example 11-7 Verify that new extents are placed in the second rank r61

dscli> lsrank -l

ID Group State datastate Array RAIDtype extpoolID extpoolnam stgtype exts usedexts

=====================================================================================

R21 1 Normal Normal A21 10 P19 TS_RAID10_1 fb 1054 1054

R61 1 Normal Normal A61 10 P19 TS_RAID10_1 fb 1054 1054
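
The effect of the reserve and release steps can be modeled with a short Python sketch. This is a toy model, not dscli and not the DS8000 allocation logic: the `Rank` class and `make_volume` function are hypothetical, and the model assumes that a volume is placed entirely on the first unreserved rank with enough free extents.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Rank:
    rank_id: str
    exts: int
    usedexts: int = 0
    reserved: bool = False

    @property
    def free(self) -> int:
        return self.exts - self.usedexts

def make_volume(pool: list[Rank], cap: int) -> str:
    """Place a volume on the first unreserved rank that can hold it; return the rank ID."""
    for rank in pool:
        if not rank.reserved and rank.free >= cap:
            rank.usedexts += cap
            return rank.rank_id
    raise RuntimeError("no unreserved rank can hold the volume")

pool = [Rank("R21", 1054), Rank("R61", 1054)]   # extent pool P19 in the examples

pool[1].reserved = True              # models: chrank -reserve r61
first = make_volume(pool, 1054)      # models volume 1900
pool[1].reserved = False             # models: chrank -release r61
second = make_volume(pool, 1054)     # models volume 1901

print(first, second, [(r.rank_id, r.usedexts) for r in pool])
```

In this model, each metadata LUN ends up on its own rank, which mirrors the usedexts values reported by lsrank in Example 11-4 and Example 11-7.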
