Chapter 2

The e-Infrastructure Ecosystem: Providing Local Support to Global Science

2.1 THE WORLDWIDE E-INFRASTRUCTURE LANDSCAPE

Modern science increasingly depends on information and communication technologies (ICTs) to analyze huge amounts of data (in the terabyte and petabyte range), to run large-scale simulations requiring thousands of CPUs (in the teraflop and petaflop range), and to share results between research groups. This collaborative way of doing science has led to the creation of virtual organizations that combine researchers and resources (instruments, computing, and data) across traditional administrative and organizational domains (Foster et al., 2001). Advances in networking and distributed computing techniques have enabled the establishment of such virtual organizations, and more and more scientific disciplines are using this concept, which is also referred to as grid computing (Foster and Kesselman, 2003; Lamanna and Laure, 2008). The past years have shown the benefit of basing grid computing on a well-managed infrastructure that federates the network, storage, and computing resources of a large number of institutions and makes them available to different scientific communities via well-defined protocols and interfaces exposed by a software layer (grid middleware). This kind of federated infrastructure is referred to as an e-infrastructure. Europe is playing a leading role in building multinational, multidisciplinary e-infrastructures. Initially, these efforts were driven by academic proof-of-concept and test-bed projects, such as the European Data Grid Project (Gagliardi et al., 2006), but they have since developed into large-scale, production e-infrastructures supporting numerous scientific communities. Leading these efforts is a small number of large-scale flagship projects, mostly cofunded by the European Commission, which take the collected results of predecessor projects forward into new areas.

Among these flagship projects, the Enabling Grids for E-sciencE (EGEE) project unites thematic, national, and regional grid initiatives in order to provide an e-infrastructure available to all scientific research in Europe and beyond in support of the European Research Area (Laure and Jones, 2009). However, EGEE is only one of many e-infrastructures that have been established all over the world. These include the U.S.-based Open Science Grid (OSG)1 and TeraGrid2 projects, the Japanese National Research Grid Initiative (NAREGI)3 project, the Distributed European Infrastructure for Supercomputing Applications (DEISA)4 project, a number of projects that extend the EGEE infrastructure to new regions (see Table 2.1), as well as (multi)national efforts like the U.K. National Grid Service (NGS),5 the Nordic DataGrid Facility (NDGF)6 in northern Europe, and the German D-Grid.7 Together, these projects cover large parts of the world and a wide variety of hardware systems.

TABLE 2.1 Regional e-Infrastructure Projects Connected to EGEE

| Project | Web Site | Countries Involved |
|---|---|---|
| BalticGrid | http://www.balticgrid.eu | Estonia, Latvia, Lithuania, Belarus, Poland, Switzerland, and Sweden |
| EELA | http://www.eu-eela.org | Argentina, Brazil, Chile, Cuba, Italy, Mexico, Peru, Portugal, Spain, and Venezuela |
| EUChinaGrid | http://www.euchinagrid.eu | China, Greece, Italy, Poland, and Taiwan |
| EUIndiaGrid | http://www.euindiagrid.eu | India, Italy, and United Kingdom |
| EUMedGrid | http://www.eumedgrid.eu | Algeria, Cyprus, Egypt, Greece, Israel, Italy, Jordan, Malta, Morocco, Palestine, Spain, Syria, Tunisia, Turkey, and United Kingdom |
| SEE-GRID | http://www.see-grid.eu | Albania, Bosnia and Herzegovina, Bulgaria, Croatia, FYR of Macedonia, Greece, Hungary, Moldova, Montenegro, Romania, Serbia, and Turkey |

In all these efforts, providing support and bringing the technology as close as possible to the scientist are of utmost importance. Experience has shown that users dealing with new technologies need local support rather than a relatively anonymous European-scale infrastructure. EGEE, for instance, implements such local support through regional operations centers (ROCs), while DEISA assigns each user a home site that provides user support and a base storage infrastructure. In a federated model, local support can be provided by national or regional e-infrastructures, as successfully demonstrated by the national and regional projects mentioned earlier. These projects federate with international projects like EGEE to form a rich infrastructure ecosystem under the motto “think globally, act locally.” In the remainder of this chapter, we exemplify this strategy with the BalticGrid and EGEE projects, discuss the impact of clouds on the ecosystem, and provide an outlook on future developments.

2.2 BALTICGRID: A REGIONAL E-INFRASTRUCTURE, LEVERAGING THE GLOBAL “MOTHERSHIP” EGEE

To establish a production-quality e-infrastructure in a greenfield region like the Baltic region, a dedicated project, BalticGrid,8 was set up. Following the principle “think globally, act locally,” the aim of the project was to build a regional e-infrastructure that seamlessly integrates with the international e-infrastructure of EGEE. In the first phase of the BalticGrid project, starting in 2005, the necessary network and middleware infrastructure was rolled out and connected to EGEE. The main objective of BalticGrid’s second phase, BalticGrid-II (2008–2010), was to further increase the impact, adoption, and reach of e-science infrastructures among scientists in the Baltic States and Belarus, and to further improve support for the services and users of grid infrastructures. Like its predecessor, BalticGrid-II maintained strong links with EGEE and its technologies, with gLite (Laure et al., 2006) as the underlying middleware of the project’s infrastructure.

2.2.1 The BalticGrid Infrastructure

The BalticGrid infrastructure has been in production since 2006 and has been used significantly by the regional scientific community. It consists of 26 clusters in five countries, 18 of which are part of the EGEE production infrastructure, with more than 3500 CPU cores and 230 terabytes of storage space.

One of the first challenges of the BalticGrid project was to establish a reliable network in Estonia, Latvia, and Lithuania and to ensure optimal network performance for the large file transfers and interactive traffic associated with grids. This was achieved in the project’s first year by exploiting the European GÉANT network infrastructure; Belarus was added in the second phase.

2.2.2 BalticGrid Applications: Providing Local Support to Global Science

The resulting BalticGrid infrastructure helps scientists from the region adopt modern computation and data storage systems, enabling them to gain the knowledge and experience needed to work in the European Research Area.

The main application areas within BalticGrid are high-energy physics, materials science and quantum chemistry, a framework for engineering modeling tasks, bioinformatics and biomedical imaging, experimental astrophysics, thermonuclear fusion (within the framework of the ITER project), linguistics, and operational modeling of the Baltic Sea ecosystem.

To support these areas, a number of applications have been ported to BalticGrid, often building on earlier EGEE work:

- ATOM: a set of computer programs for theoretical studies of atoms

- Complex Comparison of Protein Structures: an application for exploring potential evolutionary relationships between CATH protein domains and their characteristics

- Crystal06: a quantum chemistry package to model periodic systems

- Computational Fluid Dynamics (FEMTOOL): modeling of viscous incompressible free surface flows

- DALTON 2.0: a powerful molecular electronic structure program with extensive functions for the calculation of molecular properties at different levels of theory

- Density of Montreal: a molecular electronic structure program

- ElectroCap Stellar Rates of Electron Capture: a set of computer codes that produce nuclear physics input for core-collapse supernova simulations

- Foundation Engineering (Grill): global optimization of grillage-type foundations using genetic algorithms

- MATLAB: distributed computing server

- MOSES SMT Toolkit (with SRILM): a factored phrase-based beam search decoder for machine translation

- NWChem: a computational chemistry package

- Particle Technology (DEMMAT): particle flows, nanopowders, and material structure modeling on a microscopic level using the discrete element method

- Polarization and Angular Distributions in Electron-Impact Excitation of Atoms (PADEEA)

- Vilnius Parallel Shell Model Code: an implementation of the nuclear spherical shell model approach

2.2.3 The Pilot Applications

To test, validate, and update the support model, a smaller set of pilot applications was chosen. These applications received special attention during the initial phase of BalticGrid-II and helped shape BalticGrid’s robust and cost-efficient overall support model.

2.2.3.1 Particle Technology (DEMMAT)

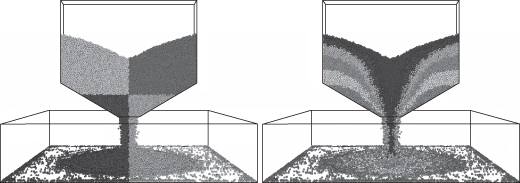

Developing an appropriate theoretical framework and numerical research tools for predicting constitutive behavior with respect to microstructure is among the major problems of computational science. In general, macroscopic material behavior is determined by the structure of grains of various sizes and shapes, or even by individual molecules or atoms, whose motion and interaction have to be taken into account to achieve a high degree of accuracy. The discrete element method is an attractive candidate for modeling granular flows, nanopowders, and other materials on a microscopic level. It denotes both a family of numerical methods and the conceptual framework from which appropriate models, algorithms, and computational technologies are derived (Fig. 2.1).

Figure 2.1 Particle flow during hopper discharge. Particles are colored according to resultant force.

The main disadvantages of the discrete element method, compared with the well-known continuum methods, relate to the computational capacity needed to handle a large number of particles and to the short time intervals that can be simulated. The small time step imposed by explicit time integration schemes means that a very large number of time increments must be performed. Grid and distributed computing technologies are a standard way to address such industrial-scale computing problems. Interdisciplinary cooperation and the development of new technologies are major factors driving progress in discrete element models and their countless applications (e.g., nanopowders, compacting, mixing, hopper discharge, and crack propagation in building constructions).
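To make the time-step argument concrete, here is a minimal sketch of explicit discrete element integration. It is not DEMMAT: it is a hypothetical one-dimensional model in which particles interact only through undamped linear-spring contacts and are advanced with the symplectic Euler scheme. The step rule `safety * sqrt(m_min / k)` with `safety = 0.1` is an assumed rule of thumb, chosen to stay well below the stability limit of explicit schemes for a mass-spring contact; all names, masses, and stiffnesses are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float  # position along a line (m)
    v: float  # velocity (m/s)
    r: float  # radius (m)
    m: float  # mass (kg)


def contact_forces(ps: list[Particle], k: float) -> list[float]:
    """Undamped linear-spring repulsion for every overlapping pair (1D)."""
    f = [0.0] * len(ps)
    for i in range(len(ps)):
        for j in range(i + 1, len(ps)):
            overlap = (ps[i].r + ps[j].r) - abs(ps[j].x - ps[i].x)
            if overlap > 0.0:
                direction = 1.0 if ps[j].x > ps[i].x else -1.0
                f[i] -= k * overlap * direction  # push i away from j
                f[j] += k * overlap * direction  # equal and opposite force on j
    return f


def stable_time_step(ps: list[Particle], k: float, safety: float = 0.1) -> float:
    """Assumed rule of thumb: a small fraction of sqrt(m_min / k)."""
    m_min = min(p.m for p in ps)
    return safety * math.sqrt(m_min / k)


def simulate(ps: list[Particle], k: float, t_end: float) -> int:
    """Advance all particles with symplectic Euler; return the step count."""
    dt = stable_time_step(ps, k)
    steps = math.ceil(t_end / dt)
    for _ in range(steps):
        f = contact_forces(ps, k)
        for p, fi in zip(ps, f):
            p.v += fi / p.m * dt  # velocity from the current contact force
            p.x += p.v * dt       # position from the updated velocity
    return steps


# Hypothetical head-on collision of two identical particles.
pair = [Particle(x=-1.0, v=1.0, r=0.5, m=1.0), Particle(x=1.0, v=-1.0, r=0.5, m=1.0)]
steps = simulate(pair, k=1.0e4, t_end=1.5)
print(steps, round(pair[0].v, 3), round(pair[1].v, 3))
```

Because the step scales with the square root of mass over stiffness, stiff contacts between small, light grains force tiny steps, so the step count grows quickly with simulated time (here roughly 1500 steps for a single 1.5 s collision of two particles). The all-pairs contact search is kept naive for clarity; production codes typically use neighbor lists or cell grids.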

2.2.3.2 Materials Science, Quantum Chemistry

NWChem9 is a computational chemistry package that has been developed by the Molecular Sciences Software group of the Environmental Molecular Sciences Laboratory at the Pacific Northwest National Laboratory, USA.

NWChem provides many methods to compute the properties of molecular and periodic systems using standard quantum mechanical descriptions of the electronic wave function or density. In addition, NWChem has the capability to perform classical molecular dynamics and free energy simulations. These approaches may be combined to perform mixed quantum mechanics and molecular mechanics simulations.

2.2.3.3 CORPLT: Corpus of Academic Lithuanian

The corpus (a large, structured set of texts) was designed as a specialized corpus for the study of academic Lithuanian, and it will be the first synchronic corpus of written academic Lithuanian in Lithuania. It will be a major resource of authentic language data for linguistic research on academic discourse, for interdisciplinary studies, for lexicographic practice, and for terminology studies in theory and practice. Compilation of the corpus will follow the most important criteria (methods, balance, representativeness, sampling, the TEI P5 Guidelines, and so on). The grid application will be used to test algorithms for the automatic encoding, annotation, and search-analysis steps. Encoding covers the recognition of text parts (sections, titles, etc.) and the correction of text flow. Linguistic annotation consists of part-of-speech tagging, tagging of sentence parts, and so on. The search-analysis step deals with searches of varying complexity and aims to distribute the resulting load on the corpus services efficiently.

2.2.3.4 Complex Comparison of Protein Structures

The Complex Comparison of Protein Structures application supports the exploration of potential evolutionary relationships between CATH protein domains and their characteristics using an approach called 3D graphs. This tool facilitates the detection of structural similarities as well as possible fold mutations between proteins.

The method employed by the Complex Comparison of Protein Structures tool consists of two stages:

- Stage 1: all-against-all comparison of CATH domains by the ESSM software

- Stage 2: construction of fold space graphs on the basis of the output of the first stage

This method is used to study evolutionary aspects of protein structure and function. It is based on the assumption that protein structures, like sequences, have evolved by a stepwise process in which each step involves a small change in protein fold. The application (“gridified” within BalticGrid-II) accesses the Protein Data Bank to compare proteins individually and in parallel.
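The two-stage method can be illustrated with a small sketch, under loud assumptions. The real tool scores CATH domains with ESSM; here a hypothetical `toy_compare` function (Python's `difflib.SequenceMatcher` ratio over made-up secondary-structure strings) stands in for the structural comparison. The domain names, the 0.8 similarity threshold, and the round-robin split of pairs into independent batches (mimicking separate grid jobs) are illustrative choices, not the application's actual parameters.

```python
from difflib import SequenceMatcher
from itertools import combinations


def toy_compare(a: str, b: str) -> float:
    """Hypothetical stand-in for a structural similarity score in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def split_into_jobs(pairs, n_jobs):
    """Deal pairs round-robin into n_jobs independent batches."""
    return [pairs[i::n_jobs] for i in range(n_jobs)]


def run_job(batch, signatures):
    """Stage 1 for one batch: score every domain pair in the batch."""
    return {(a, b): toy_compare(signatures[a], signatures[b]) for a, b in batch}


def fold_space_graph(domains, scores, threshold):
    """Stage 2: link domains whose similarity reaches the threshold."""
    graph = {d: set() for d in domains}
    for (a, b), s in scores.items():
        if s >= threshold:
            graph[a].add(b)
            graph[b].add(a)
    return graph


def clusters(graph):
    """Connected components of the fold space graph (depth-first search)."""
    seen, groups = set(), []
    for start in graph:
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                group.add(node)
                stack.extend(graph[node] - seen)
        groups.append(group)
    return groups


# Hypothetical domains, each reduced to a toy secondary-structure string.
signatures = {"d1": "HHHEEE", "d2": "HHHEEE", "d3": "EEEEEE", "d4": "HHHHHH"}
pairs = list(combinations(sorted(signatures), 2))
scores = {}
for batch in split_into_jobs(pairs, n_jobs=3):  # each batch could run as one grid job
    scores.update(run_job(batch, signatures))
graph = fold_space_graph(signatures, scores, threshold=0.8)
print(clusters(graph))
```

The all-against-all stage is embarrassingly parallel, which is why it maps naturally onto independent grid jobs; treating connected components as groups of related folds is only one simple reading of a fold space graph.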

2.2.4 BalticGrid’s Support Model

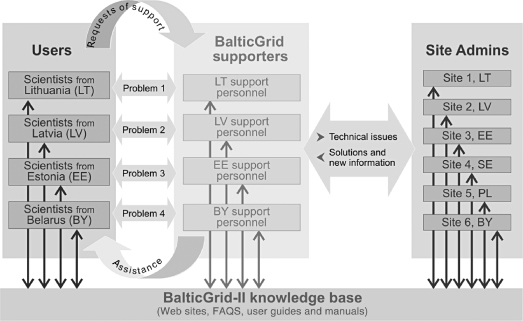

Working with these pilot applications, the project developed a robust, scalable, and cost-efficient support model. Although usually seen as a homogeneous region, the Baltic states exhibit significant differences in language, culture, and the ways in which local scientists interact with their resource providers. Because of these differences, local support structures have been established in the individual countries to provide users with the best possible local support. These local support teams interact with resource providers irrespective of their origin and build on a joint knowledge base of Web sites, FAQs, user guides, and manuals that the support teams constantly enrich. This knowledge base is also available to the users, reducing the need to contact support. The overall support model is depicted in Figure 2.2.

Figure 2.2 The BalticGrid support model.

2.3 THE EGEE INFRASTRUCTURE

BalticGrid should be seen as an extension of EGEE, given that a large number of BalticGrid sites are EGEE certified and are used by EGEE users. As a result, global users use resources from BalticGrid, and BalticGrid users participate in and use global resources across the full EGEE infrastructure. To complete the picture, an overview of EGEE is given next; more detailed descriptions can be found in Laure et al. (2006) and in Laure and Jones (2009).

The EGEE project is a multiphase program that started in 2004 and is expected to end in 2010. EGEE has built a pan-European e-infrastructure that is increasingly used by a variety of scientific disciplines. EGEE has also expanded to the Americas and the Asia Pacific, working toward a worldwide e-infrastructure. As of October 2009, EGEE federates more than 260 resource centers from 55 countries, providing over 150,000 CPU cores, 28 petabytes of disk storage, and 41 petabytes of long-term tape storage. The infrastructure is routinely used by over 5000 users forming some 200 virtual organizations and running over 330,000 jobs per day. EGEE users come from disciplines as diverse as archaeology, astronomy, astrophysics, computational chemistry, earth science, finance, fusion, geophysics, high-energy physics, life sciences, materials sciences, and many more.

The EGEE infrastructure consists of a set of services and test beds, and the management processes and organizations that support them.

2.3.1 The EGEE Production Service

The computing and storage resources EGEE integrates are provided by a large and growing number of resource centers, mostly in Europe but also in the Americas and the Asia Pacific.

EGEE decided that central coordination of these resource centers would not be viable, mainly because of scaling issues. Hence, EGEE developed a distributed operations model in which ROCs take responsibility for managing the resource centers in their region. Regions are defined geographically and include up to eight countries. This setup allows operational procedures to be adjusted to local peculiarities such as legal constraints, languages, and best practices. Currently, EGEE has 11 ROCs, each managing between 12 and 38 sites. CERN acts as a special catch-all ROC, taking over responsibility for sites in regions not covered by an existing ROC. Once the number of sites in such a region becomes significant (typically more than 10), the creation of a new ROC is envisaged. EGEE’s ROC model has proven very successful, allowing the infrastructure to grow fourfold (in terms of sites) without any impact on the service.
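The site-to-ROC rule described above can be expressed as a small sketch. It assumes that each site is tagged with a geographic region, that the catch-all ROC is labeled "CERN", and it reads "typically more than 10" as a strict threshold on the number of sites a region has under the catch-all ROC. The site and region names are hypothetical, and the code only flags candidate regions; it does not model how a new ROC is actually set up.

```python
from collections import Counter

CATCHALL_ROC = "CERN"
NEW_ROC_THRESHOLD = 10  # assumed reading of "typically more than 10" sites


def assign_sites(site_regions: dict[str, str], rocs: dict[str, str]) -> dict[str, str]:
    """Map each site to the ROC of its region, or to the catch-all ROC."""
    return {site: rocs.get(region, CATCHALL_ROC) for site, region in site_regions.items()}


def regions_ready_for_roc(site_regions: dict[str, str], rocs: dict[str, str],
                          threshold: int = NEW_ROC_THRESHOLD) -> list[str]:
    """Regions without their own ROC whose site count exceeds the threshold."""
    uncovered = Counter(r for r in site_regions.values() if r not in rocs)
    return sorted(r for r, n in uncovered.items() if n > threshold)


# Hypothetical example: RegionB already has a ROC, RegionA does not yet.
site_regions = {f"siteA{i}": "RegionA" for i in range(11)}
site_regions["siteB0"] = "RegionB"
rocs = {"RegionB": "ROC-B"}

assignment = assign_sites(site_regions, rocs)
print(assignment["siteA0"], assignment["siteB0"])
print(regions_ready_for_roc(site_regions, rocs))
```

Keeping the catch-all as a default in a lookup means no site is ever left without an operations contact while a region is still too small to justify its own ROC.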

ROCs are coordinated by the overall Operations Coordination located at CERN. Coordination mainly happens through weekly operations meetings where issues from the different ROCs are discussed and resolved. A grid operator on duty constantly monitors the health of the EGEE infrastructure using a variety of monitoring tools and initiates action when services or sites are not in a good state. EGEE requires resource centers to provide personnel who manage the grid services offered and take corrective measures when problems occur. Service-level agreements are being set up between EGEE and the resource centers to fully define the required level of commitment, which may differ between centers. The Operations Coordination is also responsible for releasing the middleware distribution for deployment; individual sites are supported by their ROC in installing and configuring the middleware.

Security is a cornerstone of EGEE’s operation, and the Operational Security Coordination Group coordinates the security-related operations at all EGEE sites. In particular, the EGEE Security Officer interacts with the sites’ security contacts and ensures that security-related problems are properly handled, that security policies are adhered to, and that general awareness of security-related issues is raised. EGEE’s security policies are defined by the Joint Security Policy Group, a group jointly operated by EGEE, the U.S. OSG project, and the Worldwide LHC Computing Grid project (LCG).10 Further security-related policies are set by the EUGridPMA and the International Grid Trust Federation (IGTF),11 the bodies that approve certificate authorities and thus establish trust between the different actors on the EGEE infrastructure. A dedicated Grid Security Vulnerability Group proactively analyzes the EGEE infrastructure and its services to detect potential security problems early and to initiate their remedy. The EGEE security infrastructure is based on X.509 proxy certificates, which allow security policies to be implemented both at the site level and on grid-wide services.

The EGEE infrastructure federates resources and makes them easily accessible but does not own the resources itself. Instead, the accessible resources belong to independent resource centers that procure them and allow access to them according to their particular funding schemes and policies. Federating the resources through EGEE allows the resource centers to offer seamless, homogeneous access mechanisms to their users and to support a variety of application domains through the EGEE virtual organizations. Hence, EGEE cannot decide on its own how to assign resources to virtual organizations and applications. EGEE merely provides a marketplace where resource providers and potential users negotiate the terms of usage themselves. EGEE facilitates this negotiation through the Resource Access Group, which brings together application representatives and the ROCs. Overall, about 20% of the EGEE resources are provided by nonpartner organizations, and while the majority of the EGEE resource centers are academic institutions, several industrial resource centers participate in the EGEE infrastructure to gain operational experience and to offer their resources to selected research groups.

All EGEE resource centers, and particularly the ROCs, actively support their users. As it is sometimes difficult for users to understand which part of the EGEE infrastructure is responsible for a particular support action, EGEE operates the Global Grid User Support (GGUS). This support system is used throughout the project as a central entry point for managing problem reports and tickets, for operations, and for user, virtual organization, and application support. The system is interfaced to a variety of other ticketing systems in use in the regions/ROCs, so that tickets reported locally can be passed to GGUS or other areas, and operational problem tickets can be pushed down into local support infrastructures. Overall, GGUS handles on average over 1000 tickets per month, half of them due to the ticket exchange with network operators explained next.
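The ticket flow described above, a central entry point interfaced to regional ticketing systems, can be sketched as a tiny hub-and-spoke model. The classes `RegionalDesk` and `CentralDesk`, the region labels, and the routing rule (push each ticket down to the desk of the region responsible for the problem) are hypothetical simplifications for illustration; they do not reflect the actual GGUS interfaces or data model.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    ident: int
    summary: str
    region: str  # region whose support unit is responsible for the problem
    trail: list[str] = field(default_factory=list)  # systems the ticket passed through


class RegionalDesk:
    """Hypothetical stand-in for a region's own ticketing system."""

    def __init__(self, region: str) -> None:
        self.region = region
        self.queue: list[Ticket] = []

    def forward(self, ticket: Ticket, central: "CentralDesk") -> None:
        """Pass a locally reported ticket up to the central entry point."""
        ticket.trail.append(self.region)
        central.submit(ticket)

    def receive(self, ticket: Ticket) -> None:
        """Accept a ticket pushed down from the central system."""
        ticket.trail.append(self.region)
        self.queue.append(ticket)


class CentralDesk:
    """Hypothetical central entry point interfaced to regional systems."""

    def __init__(self) -> None:
        self.all_tickets: dict[int, Ticket] = {}
        self.regional: dict[str, RegionalDesk] = {}

    def connect(self, desk: RegionalDesk) -> None:
        self.regional[desk.region] = desk

    def submit(self, ticket: Ticket) -> None:
        """Record the ticket centrally, then push it to the responsible region."""
        ticket.trail.append("central")
        self.all_tickets[ticket.ident] = ticket
        desk = self.regional.get(ticket.region)
        if desk is not None:  # regions without an interface are handled centrally
            desk.receive(ticket)


central = CentralDesk()
ne, ce = RegionalDesk("NE"), RegionalDesk("CE")
central.connect(ne)
central.connect(ce)

# A user reports, via the CE desk, a problem at a site in region NE.
t = Ticket(ident=1, summary="storage element unreachable", region="NE")
ce.forward(t, central)
print(t.trail)
```

The central record gives users a single place to follow a ticket even though the work is done by the regional unit that owns the problem.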

All usage of the EGEE infrastructure is accounted for: every site collects usage records based on the Open Grid Forum (OGF)12 usage record recommendations. These records are stored at the site and also pushed into a global database from which usage statistics can be retrieved per virtual organization, region, country, and site. Usage records are anonymized when pushed into the global database, from which they can be retrieved via a Web interface.13 The accounting data are also increasingly used to monitor the service-level agreements that virtual organizations have set up with sites.
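The accounting flow (records kept at the site, anonymized copies pushed to a global database, statistics aggregated per virtual organization, region, country, or site) can be sketched as follows. The `UsageRecord` fields are a small illustrative subset chosen for this example, not the OGF Usage Record schema, and anonymization is assumed to mean simply dropping the user identity; the records, user names, and region label are made up.

```python
from collections import defaultdict
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class UsageRecord:
    user_dn: str      # submitting user's identity; kept at the site only
    vo: str           # virtual organization
    site: str
    country: str
    region: str
    cpu_hours: float


def anonymize(record: UsageRecord) -> dict:
    """Copy of the record without the user identity, for the global database."""
    row = asdict(record)
    del row["user_dn"]
    return row


def totals_by(rows: list[dict], key: str) -> dict[str, float]:
    """Sum CPU hours per value of `key` ("vo", "site", "country", or "region")."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["cpu_hours"]
    return dict(totals)


# Hypothetical records held at two sites.
site_store = [
    UsageRecord("/CN=alice", "biomed", "site-1", "EE", "NE", 2.0),
    UsageRecord("/CN=bob", "atlas", "site-1", "EE", "NE", 3.5),
    UsageRecord("/CN=carol", "biomed", "site-2", "LT", "NE", 1.5),
]
global_db = [anonymize(r) for r in site_store]  # what gets pushed centrally
print(totals_by(global_db, "vo"))
print(totals_by(global_db, "country"))
```

Stripping identities before the push keeps per-user detail at the site while still allowing every aggregate view the text mentions.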

EGEE also provides an extensive training program to enable users to efficiently exploit the infrastructure. A description of this training program is out of the scope of this chapter and the interested reader is referred to the EGEE training Web site14 for further information.

An e-infrastructure is highly dependent on the underlying network provisioning. EGEE relies on the European Research Network operated by DANTE and on the National Research and Education Networks. The EGEE Network Operations Center links EGEE to the network operations and ensures that both EGEE and the network operators are aware of requirements, problems, and new developments. In particular, the network operators push their trouble tickets into GGUS, thus integrating them into the standard EGEE support structures.

2.3.2 EGEE and BalticGrid: e-Infrastructures in Symbiosis

To fully leverage EGEE’s success, BalticGrid has reused much of the work done on operations, support organization, education, dissemination, and so forth, as well as all the work put into the EGEE middleware gLite. The goal of making as many BalticGrid sites as possible EGEE certified, that is, seamless parts of the overall EGEE infrastructure, is to enable users from the Baltic and Belarus region to participate as much as possible in global research projects.

From the EGEE perspective, BalticGrid expands the reach and increases the total capacity of the infrastructure. In addition, BalticGrid has been a testing ground and meeting point for coexisting middlewares: gLite together with ARC15 and UNICORE.16

The larger project helped the smaller one achieve a very quick and economical launch by providing it with ready-made structures, policies, and middleware. The smaller project, being more flexible due to its size, has acted as a test bed for aspects such as the interoperability of middlewares and the deployment and use of cloud computing for small and medium enterprises (SMEs) and start-up companies.

The cooperation of the two projects is intended to improve the environment for their users.

2.4 INDUSTRY AND E-INFRASTRUCTURES: THE BALTIC EXAMPLE

2.4.1 Industry and Grids

Industry and academia share many of the challenges in using distributed environments in cost-efficient and secure ways. To learn more from each other, there have been a number of initiatives to engage industry further in academic grid projects.

Examples come mainly from the life sciences and materials science, but also from economics, where financial institutions are using parts of the open source grid middleware developed by academic projects. In addition, there have been some spin-offs from academia resulting in enterprise software offerings, for example, the UNIVA17 start-up company, whose founders come from the academic and open source community.

The overall uptake of grid technology by small- and medium-sized companies has not been as high as first anticipated. One possible reason is the complexity of the underlying problem: sharing resources seamlessly requires a number of complex techniques to ensure this functionality in a secure and controlled fashion. In many ways, the grid offering is very useful from a business perspective, for example, by establishing new markets for resources, but to this day much work is still needed from users to join such environments and to port their applications to grid systems. This is, to a large extent, due to the academic focus of most grid projects, which put more weight on functionality and performance than on user experience. The biggest obstacles encountered were the use of X.509 certificates for authentication and the different interfaces and semantics of grid services originating from different research groups. Standardization efforts (Riedel et al., 2009) aim at overcoming the latter problem. In addition, resource sharing as a concept is still considered a big barrier in many industries, even within corporations, and security, especially mutual trust, is usually the first obstacle mentioned. Another issue is the overall quality of open source middleware, which many companies still consider too low.

2.4.2 Industry and Clouds, Clouds and e-Infrastructures

In the commercial sector, dynamic resource and service provisioning as well as “pay-per-use” concepts are being pushed to the next level with the introduction of “cloud computing,” successfully pioneered by Amazon with its Elastic Compute Cloud and Simple Storage Service offerings. Many other major IT businesses offer cloud services today, including Google, IBM, and Microsoft. Using virtualization techniques, these infrastructures allow dynamic service provisioning and give the user the illusion of having access to virtually unlimited resources on demand. This computing model is particularly interesting for start-up ventures with limited IT resources as well as for dynamically provisioning additional resources to cope with peak demands, rather than overprovisioning one’s own infrastructure. As of today, the usability of these commercial offerings for research remains to be shown, although a few promising experiments have already been performed. In principle, clouds could be considered yet another resource provider in e-infrastructures, with a need to bridge the different interfaces and operational procedures to provide the researcher with a seamless infrastructure. More work is needed on interface standardization and on how commercial offerings can be made part of the operations of academic research infrastructures.

2.4.3 Clouds: A New Way to Attract SMEs and Start-Ups

Compared with grids, cloud services are less ambitious and therefore less complex, resulting in a lower barrier for the user as well as higher security, availability, and quality. These simplifications, combined with a high degree of flexibility, have attracted great interest from industry, and while open grid efforts are led by academia, cloud computing is, to a high degree, business driven.

For smaller companies, flexibility is key: they need to be able to launch and shut down services quickly and to move capital investments (buying hardware) to operating expenses (hiring infrastructure, pay-per-use). Larger companies are more hesitant and try clouds in a stepwise manner, combining private clouds with public clouds when needed, in so-called hybrid clouds.

Among the smaller companies, the first to adopt clouds are the newly founded ones, the start-ups. Many of these companies have no alternative but to minimize costs by every available means, and cloud computing fits this need very well. Start-ups do not have much time to build their own infrastructure and usually do not know what they will need in the near future; cloud computing offers them a quick launch as well as a quick shutdown when needed. Investors also benefit from this shift in cost models, lowering their initial risks and getting a quick proof of concept, while still having scalable solutions in case of early success.

2.4.3.1 BalticGrid Innovation Lab (BGi)

Within the BalticGrid project, a focused effort was made to attract SMEs and start-ups to the regional e-infrastructure, resulting in the creation of the BGi.18 BGi aims to educate early-stage start-ups in the use of BalticGrid resources, mainly through a cloud interface, the BalticCloud.19 BalticCloud is a cloud infrastructure based on open source solutions (Eucalyptus, OpenNebula) (Sotomayor et al., 2008; Nurmi et al., 2009). At BGi, companies learn how to leverage cloud computing, both to change their cost model and possibly to lower their own internal IT costs (Assuncao et al., 2009). Companies also learn how to prepare short-term pilots, prototypes, and novel services for their customers. BGi is also a business networking effort, with BalticGrid as the common link.

2.4.3.2 Clouds and SMEs: Lessons Learned

BGi, together with BalticCloud, shows a way to attract SMEs and start-ups in the region, and the experience so far is very positive. Early examples come from the movie production industry (rendering on BalticCloud) and from larger IT infrastructure companies preparing cloud services for start-ups. The project has also produced a number of cloud consultancy firms, and there is growing interest in cloud support for innovation services, such as incubators, in the region.

2.5 THE FUTURE OF EUROPEAN E-INFRASTRUCTURES: THE EUROPEAN GRID INITIATIVE (EGI) AND THE PARTNERSHIP FOR ADVANCED COMPUTING IN EUROPE (PRACE) INFRASTRUCTURES

The establishment of a European e-infrastructure ecosystem is currently progressing along two distinct paths: EGI and PRACE. EGI intends to federate national and regional e-infrastructures, managed locally by National Grid Initiatives (NGIs) into a pan-European, general-purpose e-infrastructure as pioneered by the EGEE project that unites thematic, national, and regional grid initiatives. EGI is a direct result of the European e-Infrastructure Reflection Group (e-IRG) recommendation to develop a sustainable base for European e-infrastructures. Most importantly, funding schemes are being changed from short-term project funding (like 2 years’ funding periods in the case of EGEE) to sustained funding on a national basis. This provides researchers with the long-term perspective needed for multiyear research engagements. All European countries support the EGI vision, with final launch during 2010. Unlike EGEE, which has strong central control, EGI will consist of largely autonomous NGIs with a lightweight coordination entity on the European level. This setup asks for an increased usage of standardized services and operational procedures to enable a smooth integration of different NGIs exposing a common layer to the user while preserving their own autonomy. In this context, the work of the OGF is of particular importance. The goal of OGF is to harmonize these different interfaces and protocols and to develop standards that will allow interoperable, seamlessly accessible services. One notable effort in this organization where all of the above-mentioned projects work together is the Grid Interoperation Now (GIN) (Riedel et al., 2009) group. Through this framework, the mentioned infrastructures work to make their systems interoperate with one another through best practices and common rules. GIN does not intend to define standards but to provide the groundwork for future standardization performed in other groups of OGF. 
This has already led to seamless interoperation between OSG and EGEE, allowing jobs to migrate freely between the two infrastructures and providing seamless data access with a single sign-on. Similar efforts are currently ongoing with NAREGI, DEISA, and NDGF. As part of the OGF GIN effort, a common service discovery index covering nine major grid infrastructures worldwide has been created, allowing users to discover the services available across these infrastructures from a single portal. A similar effort to harmonize the policies for gaining access to these infrastructures is under way.

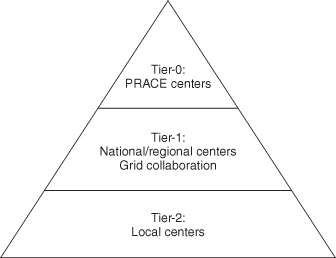

At the same time, the e-IRG has recognized the need to provide European researchers with access to petaflop-range supercomputers in addition to the high-throughput resources that predominate in EGI. PRACE aims to define the legal and organizational structures for a pan-European high-performance computing (HPC) service in the petaflop range. These petaflop-range systems are intended to complement the existing European HPC e-infrastructure pioneered by the DEISA project. DEISA federates major European HPC centers into a common e-infrastructure that provides seamless access to supercomputing resources and, thanks to a global shared file system, to data stored at the various centers. The result is a three-tier structure in which the European petaflop systems at Tier-0 are supported by leading national systems at Tier-1, while regional and midrange systems complete this HPC pyramid at Tier-2, as depicted in Figure 2.3.

Figure 2.3 HPC ecosystem.

Although the goals of PRACE/DEISA are similar to those of EGI/EGEE, the different usage and organizational requirements demand a different approach, and hence the establishment of two independent yet related infrastructures. For researchers, however, seamless access to all of these infrastructures is important; hence, their services and operational models will need to converge, in a way similar to that discussed earlier for the EGI/NGI case.

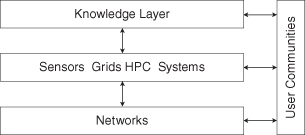

2.5.1 Layers of the Ecosystem

This convergence, together with the addition of other resources such as sensors, will eventually form the computing and data layer of the e-infrastructure ecosystem. Building on the physical wide-area connectivity provided by the network infrastructure (operated in Europe by GÉANT and the National Research and Education Networks), this computing and data layer facilitates the construction of domain-specific knowledge layers that provide user communities with higher-level abstractions, allowing them to focus on their science rather than on computing technicalities. The resulting three-layered ecosystem is depicted in Figure 2.4.

Figure 2.4 e-Infrastructure ecosystem.

2.6 SUMMARY

In summary, a variety of e-infrastructures are available today to support e-research. Convergence of these infrastructures in terms of interfaces and policies is needed to provide researchers with seamless access to the resources their research requires, independently of how resource provisioning is actually managed. Eventually, a multilayer ecosystem will greatly reduce the need for scientists to manage their own computing and data infrastructure, with a knowledge layer providing high-level abstractions tailored to the needs of different disciplines. Initial elements of such an e-infrastructure ecosystem already exist, and Europe is actively working toward sustainability so that the ecosystem continues to provide a reliable basis for e-research.

ACKNOWLEDGMENTS

The authors would like to thank all the members of EGEE and BalticGrid for their enthusiasm and excellent work, which made the EGEE and BalticGrid vision a reality. The authors would also like to thank the developers of the open source cloud platforms Eucalyptus and OpenNebula, whose work made BalticCloud and BGi a reality.

Notes

1 http://www.opensciencegrid.org.

13 http://www3.egee.cesga.es/gridsite/accounting/CESGA/egee_view.php.

14 http://www.egee.nesc.ac.uk.

15 http://www.nordugrid.org/middleware.

18 http://www.balticgrid.eu/bgi.

19 http://cloud.balticgrid.eu.

REFERENCES

M. Assuncao, A. Costanzo, and R. Buyya. Evaluating the cost-benefit of using cloud computing to extend the capacity of clusters. In Proc. of the 18th ACM Int’l Symposium on High Performance Distributed Computing, pp. 141–150, 2009.

I. Foster and C. Kesselman, editors. The Grid: Blueprint for a New Computing Infrastructure. San Francisco, CA: Morgan Kaufmann, 2003.

I. Foster, C. Kesselman, and S. Tuecke. The anatomy of the grid. The Int’l Journal of High Performance Computing Applications, 15(3):200–222, 2001.

F. Gagliardi, B. Jones, and E. Laure. The EU DataGrid Project: Building and operating a large-scale grid infrastructure. In B. Di Martino, J. Dongarra, and A. Hoisie, editors, Engineering the Grid: Status and Perspective. Los Angeles, CA: American Scientific Publishers, 2006.

M. Lamanna and E. Laure, editors. The EGEE user forum experiences in using grid infrastructures. Journal of Grid Computing, 6(1), 2008.

E. Laure, S. Fisher, A. Frohner, et al. Programming the grid with gLite. Computational Methods in Science and Technology, 12(1):33–45, 2006.

E. Laure and B. Jones. Enabling Grids for E-sciencE—The EGEE project. In L. Wang, W. Jie, and J. Chen, editors, Grid Computing: Infrastructure, Service, and Application, Boca Raton, FL: CRC Press, 2009.

D. Nurmi, R. Wolski, C. Grzegorczyk, et al. The Eucalyptus open-source cloud-computing system. In Proc. of the 9th IEEE/ACM International Symposium on Cluster Computing and the Grid, pp. 124–131, 2009.

M. Riedel, E. Laure, T. Soddemann, et al. Interoperation of worldwide production e-science infrastructures. Concurrency and Computation: Practice and Experience, 21(8):961–990, 2009.

B. Sotomayor, R. Montero, I. M. Llorente, et al. Capacity leasing in cloud systems using the OpenNebula engine. In Proc. of Cloud Computing and Its Applications 2008, 2008.