Chapter 6

Building and Running Collaborative Distributed Multiscale Applications

6.1 INTRODUCTION

Multiscale simulations are a dynamically developing area of complex system modeling. Examples include blood flow simulations (e.g., in treatment of in-stent restenosis) as presented by Caiazzo et al. (2009), solid tumor models (Hirsch et al., 2009), or stellar system simulations (Portegies Zwart et al., 2008). Modern technical solutions provided by computer science offer many useful features for such simulations, including support for composability and reuse (component approach) as well as resource sharing (grid concept). In this chapter, we explain how to efficiently exploit some of them. Providing distributed, easy-to-use e-infrastructures that can be collaboratively and transparently shared by multidisciplinary scientists is one of the main aspects of large-scale computing. As shown in this chapter, some of these solutions can also be successfully applied to the field of multiscale simulations.

Grid technologies can be exploited for the development and execution of multiscale simulations on various levels. The first approach, based on the concept presented by Foster et al. (2001), is to use the grid as a metacomputer with access to distributed computing and storage resources. Groen et al. (2010) present a case where a galaxy collision simulation switches at run time between heterogeneous resources on two grid sites: a graphical processing unit (GPU)-enabled computer (Pharr and Fernando, 2005) in The Netherlands and a Gravity Pipe (GRAPE)-enabled machine (Makino et al., 2003) in the United States.

The second approach to large-scale distributed computing, based on the concept of Web and grid services (Foster et al., 2002), allows the user to construct an application from software components (services) installed in the e-infrastructure. In this approach, higher-level software services are shared in addition to pure computational and storage resources. A good example is the GridSpace virtual laboratory presented in Malawski et al. (2010).

The third approach, also connected with the idea of cloud computing (Rajkumar and Rajiv, 2010), is to enable the user to dynamically deploy software/applications into high-level containers residing on the e-infrastructure. One example is the H2O framework (Kurzyniec et al., 2003), where pluglets written by a user are deployed into so-called kernels installed on the grid. Another system that can be mentioned here is the Highly Available Dynamic Deployment Infrastructure for Globus Toolkit (HAND) for dynamic deployment of grid services (Qi et al., 2007).

In this chapter, we focus on applying the second approach to support multiscale simulations, that is, allowing the user to build an application from software components or services installed in the e-infrastructure. We propose a component environment for such simulations that allows multiscale application developers to wrap their models as recombinant software components and share them using the available e-infrastructure. This would help scientists working on multiscale problems to exchange models and connect them in various applications.

This chapter is organized as follows. Section 6.2 defines the requirements of complex multiscale simulations. Section 6.3 presents some of the available grid and component technologies and some related work. Section 6.4 describes an environment supporting the high-level architecture (HLA) component model. Section 6.5 describes an experiment involving a sample multiscale simulation of a dense stellar system. Summary and future work are presented in Section 6.6.

6.2 REQUIREMENTS OF MULTISCALE SIMULATIONS

When designing an environment supporting the creation and execution of multiscale simulations, one can identify two different types of issues. One group of issues, described in Section 6.2.1, relates to the problem of actually connecting two or more simulation modules together. The set of actual requirements depends on the type of simulation. In this chapter, we focus on advanced time synchronization requirements and describe three different types of time interactions between multiscale simulation models. Issues related to composability and reusability of existing simulation modules are described in Section 6.2.2, where we also justify the need for wrapping simulation models into recombinant software components that can later be selected and assembled in various combinations.

6.2.1 Interactions between Single-Scale Models

Interactions between single-scale modules require adherence to conservation laws, support for joining modules with different internal time management, efficient data exchange between pairs of modules with different scales, and so on. The set of actual requirements depends on the type of simulation: not every set of models requires advanced time management, so the simulation developer needs to strike a balance between the functionality and the simplicity of the environment. For example, the Multiscale Multiphysics Scientific Environment (MUSE) (Portegies Zwart et al., 2008) requires advanced time synchronization between modules, whereas for the simulation model of in-stent restenosis (Caiazzo et al., 2009), asynchronous unidirectional pipes that block only on the receiver side are sufficient for communication.

In our work, we focus on models that require advanced synchronization support. Temporal interactions occurring between multiscale components were analyzed in our previous study (Rycerz et al., 2008c) and are briefly described in the following three subsections. We distinguish three scenarios: modules running concurrently and exchanging time-stamped data using (1) a conservative approach or (2) an optimistic approach, and (3) one module triggering another and waiting for its data.

Our analysis is based on modules derived from MUSE, a sequential simulation environment designed for dense stellar systems. However, the types of supported interactions are generic and can apply to other module connections that require advanced time management facilities.

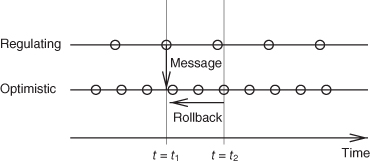

6.2.1.1 Concurrent Execution: Conservative Approach

One of the most common types of interactions between simulation models occurs when one simulation regulates the timing of another, as shown in Figure 6.1. The conservative constrained simulation can move forward only as far as the regulating simulation allows. In Figure 6.1, the constrained simulation is not allowed to go beyond time t = t1 until it is certain that it will not receive any further messages with time stamp t = t1 (i.e., until the regulating simulation has moved forward).

Figure 6.1 Concurrent execution—conservative approach. The conservative constrained simulation can move forward as far as it is allowed by the regulating simulation.

This type of interaction is useful when data sent by the regulating simulation affect the entire constrained simulation and any rollback would be too costly. In multiscale simulations, this approach can be applied when a macroscale model produces some useful data for a mesoscale model. It enables the macroscale model simulation to move forward as far as needed and to control the mesoscale model simulation. As the mesoscale model advances more slowly (in terms of simulation time units), it seldom has to wait for the macroscale model, so controlling it via the macroscale model should be acceptable.
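To make the conservative rule concrete, the following Python sketch models it in a single process. It is a hypothetical illustration, not the HLA RTI interface: the class names, the `lookahead` value, and the rule that the regulating simulation never sends a message stamped earlier than its current time plus `lookahead` are all assumptions made for this example. The constrained simulation is granted a time advance only up to that bound, so it never receives a message from its own past.

```python
import heapq
import itertools
from dataclasses import dataclass, field

_seq = itertools.count()  # tie-breaker so equal time stamps never compare payloads


@dataclass
class Constrained:
    """Hypothetical time-constrained simulation (e.g., a mesoscale model)."""
    time: float = 0.0
    inbox: list = field(default_factory=list)  # heap of (time_stamp, seq, payload)

    def request_advance(self, target, regulator):
        """Advance towards `target`, but never past what the regulator guarantees."""
        granted = max(self.time, min(target, regulator.lower_bound()))
        delivered = []
        # Assuming the regulator's time never decreases, every message still to
        # come is stamped >= granted, so messages up to `granted` are safe to
        # deliver now, in time-stamp order.
        while self.inbox and self.inbox[0][0] <= granted:
            stamp, _, payload = heapq.heappop(self.inbox)
            delivered.append((stamp, payload))
        self.time = granted
        return granted, delivered


@dataclass
class Regulating:
    """Hypothetical time-regulating simulation (e.g., a macroscale model)."""
    time: float = 0.0
    lookahead: float = 1.0  # assumed promise: no message stamped before time + lookahead

    def lower_bound(self):
        return self.time + self.lookahead

    def send(self, dest, stamp, payload):
        if stamp < self.lower_bound():
            raise ValueError("time stamp violates the lookahead promise")
        heapq.heappush(dest.inbox, (stamp, next(_seq), payload))


reg = Regulating(time=0.0, lookahead=1.0)
con = Constrained()
reg.send(con, 1.5, "mass update")
print(con.request_advance(5.0, reg))  # (1.0, [])
reg.time = 2.0                         # the regulating simulation moves forward
print(con.request_advance(5.0, reg))  # (3.0, [(1.5, 'mass update')])
```

The first request is capped at t = 1.0 even though 5.0 was requested, and the message stamped 1.5 is delivered only after the regulating simulation has moved forward. A larger lookahead lets the constrained side run further ahead, at the price of the regulating side committing to its outputs earlier.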

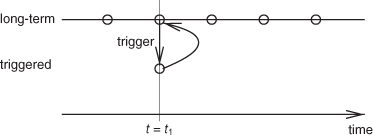

6.2.1.2 Concurrent Execution: Optimistic Approach

When the waiting imposed by a conservative approach significantly slows down simulation execution, one may apply optimistic model interaction instead. In this case, both simulations can advance in time as far as possible, but once one of them receives a message with a time stamp lower than its current simulation time, it needs to roll back its state to the time stamp of that message. This is shown in Figure 6.2, where the optimistic simulation has to roll back from t = t2 to t = t1 because the regulating simulation has just sent a message with time stamp t = t1.

Figure 6.2 Concurrent execution—optimistic approach. Both simulations can advance in time as far as needed, but a rollback becomes necessary if an outdated message is received.

This type of interaction is useful for models where rollback is relatively inexpensive, for example, when an outdated message affects only some of the results already produced. In multiscale simulations, this situation occurs, for instance, when the microscale model asynchronously produces data useful for the macroscale model and such data affect only part of the macroscale model.
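The rollback mechanism can be sketched in the same spirit. The hypothetical `OptimisticSim` below saves a snapshot of its state before every step, advances without waiting for other models, and, when a message arrives with a time stamp earlier than its current time (a straggler), restores the latest snapshot taken at or before that stamp and re-executes from there. The toy state (a single `energy` value) and the fixed step `dt` are assumptions; real optimistic schemes such as Time Warp must also cancel messages already sent to other models, which this sketch omits.

```python
import copy


class OptimisticSim:
    """Hypothetical optimistic simulation with state saving and rollback."""

    def __init__(self, dt=1.0):
        self.time = 0.0
        self.dt = dt
        self.state = {"energy": 0.0}
        self.inputs = []     # every (stamp, delta) ever received, kept for re-execution
        self.snapshots = []  # (time, state) saved before each step
        self.rollbacks = 0

    def step(self):
        """Advance by one step without waiting for any other model."""
        self.snapshots.append((self.time, copy.deepcopy(self.state)))
        window_end = self.time + self.dt
        for stamp, delta in self.inputs:
            if self.time <= stamp < window_end:  # inputs belonging to this step
                self.state["energy"] += delta
        self.state["energy"] += 1.0  # stand-in for the model's own dynamics
        self.time = window_end

    def receive(self, stamp, delta):
        self.inputs.append((stamp, delta))
        if stamp < self.time:  # straggler: the message arrived "in the past"
            self.rollback(stamp)

    def rollback(self, stamp):
        # Discard snapshots newer than the straggler, then restore the latest
        # remaining one (assumes stamp >= 0, so the snapshot at t = 0 exists).
        while self.snapshots and self.snapshots[-1][0] > stamp:
            self.snapshots.pop()
        self.time, self.state = self.snapshots.pop()
        self.rollbacks += 1


sim = OptimisticSim(dt=1.0)
for _ in range(3):
    sim.step()
sim.receive(1.5, 10.0)
print(sim.time, sim.rollbacks)  # 1.0 1
while sim.time < 3.0:
    sim.step()
print(sim.state["energy"])      # 13.0
```

After three steps, the straggler stamped 1.5 forces a rollback to t = 1.0; re-running the two steps yields the same state that a run receiving the message in time would have produced, which is the property rollback is meant to preserve.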

6.2.1.3 Execution Triggered by Another Execution

Sometimes, it is not necessary to run two simulation models concurrently, either in an optimistic or conservative way. Consequently, a much simpler solution can be applied. This happens when model A “knows” when to launch the execution of model B (usually with a relatively short execution time) and waits for its results, as presented in Figure 6.3, where the long-term simulation triggers a short model execution.

Figure 6.3 Execution triggered by another simulation. One simulation launches the execution of another and waits for its results.

In multiscale simulations, this situation occurs, for example, when a mesoscale model needs to launch a microscale simulation to obtain data that will have a significant effect on the whole mesoscale simulation.
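Because the triggering model simply waits, this interaction needs no time management at all. The sketch below, with a hypothetical `micro_model` and a fixed triggering interval chosen only for illustration, launches the short model through a `concurrent.futures` executor and blocks on its result before continuing.

```python
from concurrent.futures import ThreadPoolExecutor


def micro_model(temperature):
    """Hypothetical short microscale model returning a coefficient."""
    return 0.5 * temperature


def meso_simulation(steps, trigger_every, executor):
    """Hypothetical long-running mesoscale loop that triggers the micro model."""
    coefficient = 0.0
    value = 0.0
    history = []
    for step in range(steps):
        if step % trigger_every == 0:
            # Model A "knows" it needs fresh microscale data: launch model B ...
            future = executor.submit(micro_model, 1.0 + step)
            # ... and wait for its result before continuing.
            coefficient = future.result()
        value += coefficient
        history.append(value)
    return history


with ThreadPoolExecutor(max_workers=1) as pool:
    print(meso_simulation(steps=4, trigger_every=2, executor=pool))  # [0.5, 1.0, 2.5, 4.0]
```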

6.2.2 Interoperability, Composability, and Reuse of Simulation Models

Another important set of requirements involves the reuse of existing models and their composability. Specifically, it might prove useful to find existing models and to link them together. Therefore, there is a need for wrapping simulations into recombinant components that can be selected and assembled in various ways to satisfy specific requirements. The modeling and simulation community has a need for simulation interoperability and composability.

Ongoing efforts in this research area have resulted in the Base Object Model (BOM) standard for the semantic description of simulation components and their relationships. However, none of the present solutions is widely applied by simulation developers, and they generally do not address issues specific to multiscale simulations. One reason is that composability and reuse require simplicity and ease of use, which must nevertheless be combined with the advanced functionality that multiscale simulations need.

The above-mentioned requirements can be fulfilled by applying new technological solutions that help multiscale simulation researchers collaborate, exchange simulation models, and share know-how on running such complex simulations effectively. The next section provides an overview of these solutions.

6.3 AVAILABLE TECHNOLOGIES

6.3.1 Tools for Multiscale Simulation Development

6.3.1.1 Model Coupling Toolkit (MCT)

MCT (Larson et al., 2005) is a toolkit that simplifies the construction of parallel coupled models. It has been successfully used in a parallel climate model. MCT applies the Message Passing Interface (MPI) style of communication between simulation modules. It is designed for domain decomposition of the simulated problem and provides support for advanced data transformations between different modules. Data are exchanged between modules at a defined coupling frequency.

6.3.1.2 HLA

HLA is a standard for large-scale distributed interactive simulations. HLA offers the ability to plug and unplug modules containing various simulation models, with different internal types of time management, to/from a complex simulation system. The elements of the simulation system are connected together in a tuple space, used for subscribing to and publishing time-stamped events and data objects.

6.3.1.3 MUSE

MUSE (Portegies Zwart et al., 2008) is a software environment for astrophysical applications in which different simulation models of star systems are incorporated into a single framework. The scripting approach (Python) is used to couple software modules containing models. Currently, the set of available models includes stellar evolution, hydrodynamics, stellar dynamics, and radiative transfer domains. Python scripts are used to execute modules in an appropriate order. Execution is sequential; that is, in a single time loop, the module containing model A is executed followed by the module with model B. Finally, the data are updated in both modules.
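The sequential coupling scheme can be pictured as a single loop. The following sketch is not MUSE code: both module classes and their `evolve` methods are hypothetical stand-ins, and the data update is reduced to copying masses from the evolution module to the dynamics module, whereas the text describes updates in both modules.

```python
class StellarEvolution:
    """Hypothetical stand-in for a stellar evolution module (macroscale)."""

    def __init__(self, masses):
        self.masses = list(masses)

    def evolve(self, t_end):
        # Toy mass loss: each call removes 1% of every star's mass.
        self.masses = [0.99 * m for m in self.masses]


class StellarDynamics:
    """Hypothetical stand-in for a stellar dynamics module (mesoscale)."""

    def __init__(self, masses):
        self.masses = list(masses)
        self.time = 0.0

    def evolve(self, t_end):
        self.time = t_end  # a real module would integrate the orbits up to t_end


def run_sequential(evolution, dynamics, t_end, dt):
    """One time loop: run model A, then model B, then update the shared data."""
    t, steps = 0.0, 0
    while t < t_end:
        t = min(t + dt, t_end)
        evolution.evolve(t)                       # model A first ...
        dynamics.evolve(t)                        # ... then model B, never concurrently
        dynamics.masses = list(evolution.masses)  # finally, exchange data
        steps += 1
    return steps


evo = StellarEvolution([1.0, 2.0])
dyn = StellarDynamics([1.0, 2.0])
print(run_sequential(evo, dyn, t_end=1.0, dt=0.25))  # 4
```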

6.3.1.4 The Multiscale Coupling Library and Environment (MUSCLE)

MUSCLE (Hegewald et al., 2008) provides a software framework for building simulations according to the complex automata theory (Hoekstra et al., 2007). It has been applied to the simulation of coronary artery in-stent restenosis (Caiazzo et al., 2009), but it is designed for complex automata in general. The framework introduces the concept of kernels, which communicate by unidirectional pipelines dedicated to passing specific types of data from/to a kernel. Communication is asynchronous and blocks only on the receiver side.
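A conduit of this kind maps naturally onto an unbounded FIFO queue. In the sketch below (hypothetical names, not the MUSCLE API), `send()` returns immediately while `receive()` blocks until data are available, so only the receiving kernel ever waits.

```python
import queue
import threading


class Conduit:
    """Hypothetical unidirectional pipe between two kernels."""

    def __init__(self):
        self._q = queue.Queue()  # maxsize 0: unbounded, so put() never blocks

    def send(self, data):
        self._q.put(data)  # asynchronous from the sender's point of view

    def receive(self):
        return self._q.get()  # blocks until data arrive


def producer_kernel(out, steps):
    for i in range(steps):
        out.send(("concentration", i, 0.1 * i))  # fire and forget


def consumer_kernel(inp, steps, results):
    for _ in range(steps):
        results.append(inp.receive())  # waits here if nothing has arrived yet


conduit = Conduit()
results = []
consumer = threading.Thread(target=consumer_kernel, args=(conduit, 3, results))
consumer.start()  # starts first and immediately blocks on receive()
producer_kernel(conduit, 3)
consumer.join()
print([r[1] for r in results])  # [0, 1, 2]
```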

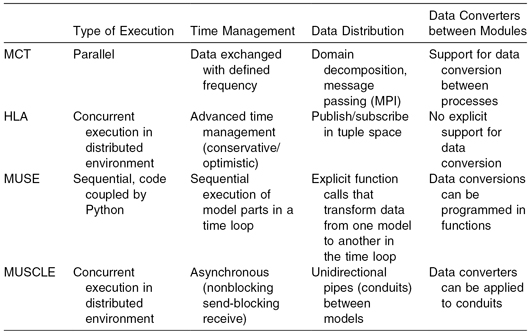

Table 6.1 compares the environments described above. The comparison shows that their functionality is complementary and that the user's choice depends on the concrete simulation requirements. MCT is appropriate for parallel simulations with domain decomposition, while HLA is suitable for joining simulations with different time management policies but is also quite complicated and involves a steep learning curve. MUSE is relatively easy to use, but it is designed mainly for astrophysical simulations. MUSCLE was designed for complex automata problems and can be used for simulations that require simple asynchronous communication.

TABLE 6.1 Comparison of Environments Supporting Multiscale Model Coupling

In our work, we have decided to apply HLA because of its advanced support for distributed simulations, especially its time management facilities, which cover all three types of time interactions described in Section 6.2.1; it also allows us to combine different types of time interactions in one simulation system.

6.3.2 Support for Composability

Composition approaches vary with regard to their degree of coupling. The most loosely coupled approach includes workflow models, such as Business Process Execution Language (BPEL), ASKALON (Fahringer et al., 2005), or Kepler (Altintas et al., 2004). They focus mainly on loosely coupled services without support for direct links. Script-based solutions for loosely coupled compositions include Geodise (Pound et al., 2003) and the GridSpace virtual laboratory.

In a tightly coupled approach to composition, the application is presented as a graph of connected components. The graph defines the workflow of the application, showing how components directly call each other. Examples of such architectures include the Service Component Architecture (SCA), where business functionality is provided as a series of services that are assembled to create solutions serving a particular business need. Another example is the Common Component Architecture (CCA) described by Armstrong et al. (2006) (with implementations such as MOCCA, described by Malawski et al., 2006), used in high-performance computing, where scientific components are directly connected by their Uses and Provides ports. A Provides port is a set of public interfaces that the component implements and that can be referenced and “used” by other components. A Uses port can be viewed as a connection point on the surface of the component where the framework can attach (connect) references to Provides ports implemented by other components.

In addition, there are high-level composition models based on a skeleton concept, such as those recently developed for grid computing (e.g., the grid component model [GCM] presented by Françoise et al., 2009). To the best of our knowledge, none of the existing component approaches provides advanced features for distributed multiscale simulations (in particular, they do not explicitly support connections of modules with different time management mechanisms); thus, there is a need for a component model that supports such advanced, simulation-specific types of connections.
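The Uses/Provides wiring of CCA described above can be illustrated with a toy Python model. This is an analogy only, not the API of the CCA specification: the port type, the two components, and the `Framework.connect` helper are hypothetical. The point is that the user component calls the provider directly through a reference that the framework has attached to its Uses port.

```python
from abc import ABC, abstractmethod


class IntegratorPort(ABC):
    """Hypothetical port type: the interface offered through a Provides port."""

    @abstractmethod
    def integrate(self, lo, hi):
        raise NotImplementedError


class MidpointIntegrator(IntegratorPort):
    """Component whose Provides port implements IntegratorPort."""

    def __init__(self, f, n=1000):
        self.f, self.n = f, n

    def integrate(self, lo, hi):
        h = (hi - lo) / self.n
        return h * sum(self.f(lo + (i + 0.5) * h) for i in range(self.n))


class Driver:
    """Component that declares a Uses port named 'integrator'."""

    def __init__(self):
        self.uses = {"integrator": None}  # connection point, empty until wired

    def run(self):
        port = self.uses["integrator"]
        if port is None:
            raise RuntimeError("Uses port 'integrator' is not connected")
        return port.integrate(0.0, 1.0)  # direct call through the connected port


class Framework:
    """Minimal stand-in for a framework that wires Uses ports to Provides ports."""

    def connect(self, user, uses_name, provider):
        user.uses[uses_name] = provider


driver = Driver()
Framework().connect(driver, "integrator", MidpointIntegrator(lambda x: x * x))
print(driver.run())  # close to 1/3
```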

6.3.3 Support for Simulation Sharing

The grid concept (Foster et al., 2002) is oriented toward joining geographically distributed communities of scientists working on shared problems. This feature also enables users of distributed simulations to more easily exchange software components containing their models. The grid aims at facilitating access to distributed resources across administrative domains. For example, the concept of a virtual organization (VO) introduced by Foster et al. (2001) allows a set of individuals and/or interrelated institutions to share resources, services, and applications regardless of their administrative domains and geographic locations.

One of the most important implementations of the grid concept is the Globus Toolkit. To achieve interoperability between distributed systems, the Globus community came up with the idea of extending the Web services standard with state and dynamic creation facilities, which resulted in the Web Services Resource Framework (WSRF) standard. WSRF uses a classical Web service as an interface to a stateful WS-Resource. Apart from the work of the Globus community, there are also other approaches to grid computing. Grids can be built on top of the H2O resource sharing platform (Kurzyniec et al., 2003), which introduces the concept of kernels, that is, containers for user-provided software modules. Typically, kernels are installed on an e-infrastructure, while the modules (called pluglets) can be dynamically deployed by authorized third parties (not necessarily kernel owners). The framework is portable (Java based), secure, scalable, and lightweight. Finally, grid architectures can be constructed from pure Web services or from the component technologies described in the previous sections.

Examples of working grids include European e-infrastructures such as Enabling Grids for E-sciencE (EGEE) or Distributed European Infrastructure for Supercomputing Applications (DEISA).

As our goal is to support component exchange between scientists working in the field of multiscale simulations regardless of their actual geographic location, we have decided to use grid solutions and have chosen the H2O framework as a lightweight platform designed to support composition frameworks.
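The division of roles in H2O, with resource owners hosting kernels and authorized third parties deploying pluglets into them, can be pictured as follows. The `Kernel` class, its `deploy` method, and the authorization-by-name check are hypothetical simplifications written for this illustration; they are not the H2O API, which is Java based.

```python
class Kernel:
    """Hypothetical container in the spirit of an H2O kernel (not the H2O API)."""

    def __init__(self, owner, authorized=()):
        self.owner = owner
        self.authorized = {owner, *authorized}  # who may deploy pluglets here
        self.pluglets = {}

    def deploy(self, user, name, factory):
        """Instantiate a module (a 'pluglet') inside the kernel on behalf of `user`."""
        if user not in self.authorized:
            raise PermissionError(f"{user!r} is not authorized to deploy into this kernel")
        self.pluglets[name] = factory()
        return self.pluglets[name]

    def lookup(self, name):
        return self.pluglets[name]


class EvolutionPluglet:
    """Hypothetical user-provided module wrapping a simulation model."""

    def describe(self):
        return "stellar evolution model"


kernel = Kernel(owner="site-admin", authorized=["alice"])
kernel.deploy("alice", "evolution", EvolutionPluglet)  # a third party, not the owner
print(kernel.lookup("evolution").describe())           # stellar evolution model
```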

6.4 AN ENVIRONMENT SUPPORTING THE HLA COMPONENT MODEL

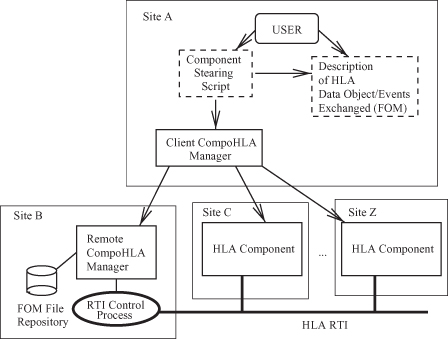

6.4.1 Architecture of the CompoHLA Environment

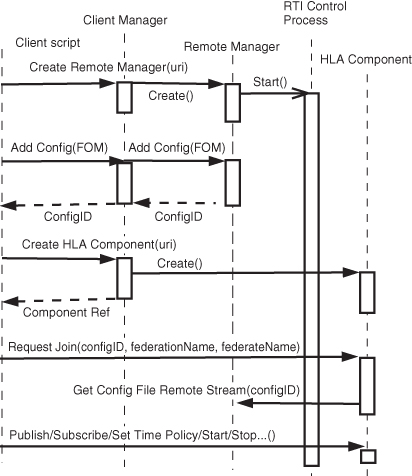

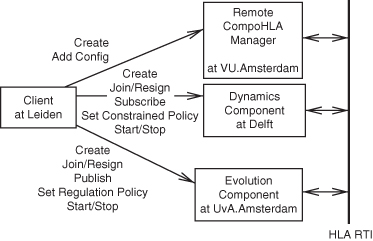

The architecture of the CompoHLA environment is shown in Figure 6.4. The Client CompoHLA Manager provides a user API, accessible from a scripting language, for calling the other modules of the framework. The Remote CompoHLA Manager manages simulation configuration files (currently, an HLA federation object model [FOM] that describes the events and objects exchanged between simulation modules). It also starts and stops the legacy run-time infrastructure (RTI) control process necessary to run an HLA application.

Figure 6.4 Architecture of the CompoHLA environment. The user interacts with HLA components and the RTI control process via the Client CompoHLA Manager API accessible via a scripting language.

HLA components contain actual simulation models wrapped as software modules and are plugged, by means of join/resign mechanisms, into the HLA RTI, which they use to communicate. By contrast, the CCA model introduces direct connections between components by joining Provides and Uses ports, as described in Section 6.3.2. The components can use all available mechanisms provided by HLA (time and data management), which is especially important when building multiscale simulations with different timescales. Thanks to HLA features, it is possible to aggregate models with different internal timescales into one coherent simulation system. Different types of time management (event driven vs. time driven and conservative vs. optimistic) can be integrated so that all time interactions described in Section 6.2.1 are supported. Simulation modules can exchange events and data objects using publish/subscribe mechanisms. For advanced synchronization, they can use time-stamped messages (transitory events and persistent objects).

The difference between this approach and the original HLA is that the behavior of components and their interactions is defined and set by an external module upon user request. The proposed approach separates component developers from users who wish to construct a particular distributed simulation system from existing components. Preliminary component design and implementation are described in our previous work (Rycerz et al., 2008a, 2008b).

6.4.2 Interactions within the CompoHLA Environment

A generic time sequence diagram showing an interaction scenario between components in the CompoHLA environment during the setup process is presented in Figure 6.5. A client script calls the Client CompoHLA Manager to create the Remote CompoHLA Manager, which, in turn, starts the legacy RTI control process. Subsequently, once the user prepares a FOM file describing the data objects and events to be exchanged between the HLA components taking part in the simulation, the client script asks the Client CompoHLA Manager to add the appropriate FOM as a config file. The Remote CompoHLA Manager, which manages all config files, assigns it an ID and returns that ID. When this is done, HLA components can be created and asked to join. Each join request includes the ID of the config file, which is then fetched by the HLA component from the Remote CompoHLA Manager. Given this file, the actual join operation with the legacy RTI control process can be performed later by an internal component scheduler, as described in Section 6.4.3. Finally, other operations can be invoked on the component (publish, subscribe, set time policy, start, stop, etc.).

Figure 6.5 Time sequence diagram showing an interaction scenario within the CompoHLA environment when setting up a simulation from HLA components.
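The setup sequence of Figure 6.5 can be condensed into a short Python sketch. All classes and method names here (`ClientManager`, `RemoteManager.add_config`, `HLAComponent.join`, and so on) are hypothetical stand-ins for the CompoHLA modules, the RTI process is represented by a flag, and the FOM is an illustrative string rather than a real FOM file. The sketch shows only the data flow: the manager assigns an ID to the config file, and each component fetches the file by that ID and records its join request for later execution.

```python
import itertools


class RemoteManager:
    """Hypothetical stand-in for the Remote CompoHLA Manager."""

    def __init__(self):
        self.rti_running = True  # stands in for starting the legacy RTI control process
        self._configs = {}
        self._next_id = itertools.count(1)

    def add_config(self, fom_text):
        """Store a config (FOM) file and return the ID assigned to it."""
        config_id = next(self._next_id)
        self._configs[config_id] = fom_text
        return config_id

    def get_config(self, config_id):
        return self._configs[config_id]


class ClientManager:
    """Hypothetical stand-in for the Client CompoHLA Manager used by the script."""

    def create_remote_manager(self):
        self.remote = RemoteManager()
        return self.remote

    def add_config(self, fom_text):
        return self.remote.add_config(fom_text)


class HLAComponent:
    """Hypothetical HLA component; requests are queued for its internal scheduler."""

    def __init__(self, name, remote):
        self.name, self.remote = name, remote
        self.fom = None
        self.pending = []

    def join(self, config_id):
        self.fom = self.remote.get_config(config_id)  # fetch the FOM by its ID
        self.pending.append(("join", config_id))       # the actual RTI join happens later

    def request(self, operation, *args):
        self.pending.append((operation, *args))


client = ClientManager()
remote = client.create_remote_manager()
fom_id = client.add_config("Star: mass, radius, position, velocity")  # illustrative only
evolution = HLAComponent("evolution", remote)
dynamics = HLAComponent("dynamics", remote)
for component in (evolution, dynamics):
    component.join(fom_id)
evolution.request("publish", "Star")
dynamics.request("subscribe", "Star")
evolution.request("set_time_policy", "regulating")
dynamics.request("set_time_policy", "constrained")
```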

6.4.3 HLA Components

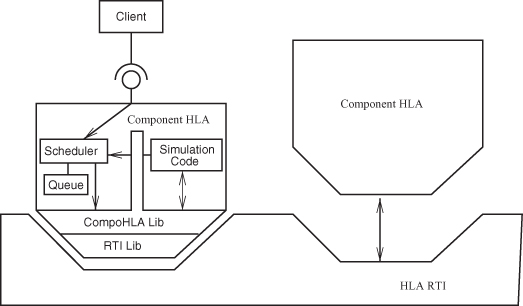

The detailed architecture of an HLA component is shown in Figure 6.6. The simulation code (custom for each component and provided by the component developer) interfaces with the other elements of the component by means of the CompoHLA library.

Figure 6.6 Architecture of the HLA component plugged into the HLA RTI communication bus. The component exposes an interface for external requests. Thus, it is possible to change the state of the simulation in the HLA RTI layer by using an independent client.

Components are plugged into the HLA RTI to communicate with each other by means of HLA mechanisms. They need to process internal requests coming from the simulation code layer and the HLA RTI layer (from other components). Moreover, they expose interfaces through which the client can request changes to their state in the HLA RTI layer while the actual simulation is already running.

To efficiently handle concurrent processing of internal and external requests, we have applied ideas from the active object pattern presented by Lavender and Schmidt (1995), which separates method invocation from method execution. We have introduced a scheduler that processes external requests and is called from the simulation code through a single routine (Rycerz and Bubak, in press). External requests are processed asynchronously: the scheduler places them in a queue, from which they are later retrieved and actually executed (when the scheduler is called from the simulation code).

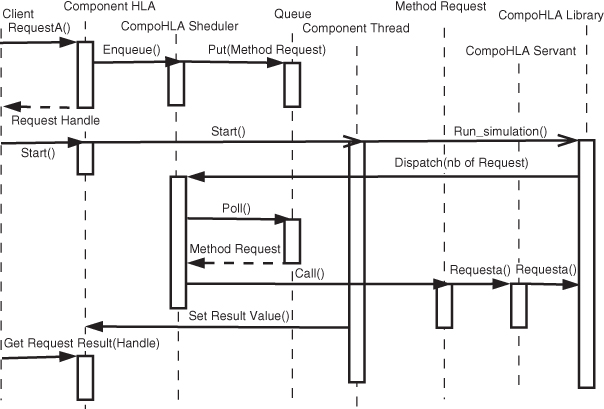

The scenario of interaction between HLA component modules is shown in Figure 6.7 in the form of a time sequence diagram.

Figure 6.7 Time sequence diagram showing the interaction scenario between HLA component modules compliant with the active object pattern (Lavender and Schmidt, 1995). External requests are placed in a queue. Once the simulation starts, the scheduler is asked to dispatch the requests.

First, the client invokes a request on the HLA component, which is processed by the ComponentHLA class. The ComponentHLA class invokes the enqueue method of CompoHLAScheduler, which places the appropriate type of request (MethodRequest) in the queue. The handle of this request is returned to the client. If the client invokes the start method on the HLA component, ComponentHLA starts a new thread, ComponentThread, which runs the actual simulation (using the CompoHLA library API). During the execution of the simulation, the user code (via the CompoHLA library API) periodically calls the dispatch() method of CompoHLAScheduler. The scheduler withdraws MethodRequests from the queue and executes their call method, which then executes the appropriate request methods of CompoHLAServant (according to the actual type of MethodRequest). The servant calls the CompoHLA library, which actually executes the request. Results are stored in ComponentHLA by calling its setResultValue method, which enables the client to retrieve them.
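The interplay described above can be reproduced in a compact Python sketch of the active object pattern. The class names loosely mirror the roles in Figure 6.7 (ComponentHLA, CompoHLAScheduler, MethodRequest, CompoHLAServant) but are our own simplified stand-ins; the servant only logs the request instead of calling an RTI, and `simulation_loop` stands in for the ComponentThread that periodically calls `dispatch()`.

```python
import queue
import threading


class ResultHandle:
    """Handle returned to the client; filled in later via set_result_value()."""

    def __init__(self):
        self._done = threading.Event()
        self._value = None

    def set_result_value(self, value):
        self._value = value
        self._done.set()

    def get(self, timeout=None):
        if not self._done.wait(timeout):
            raise TimeoutError("request has not been dispatched yet")
        return self._value


class Servant:
    """Role of CompoHLAServant: performs requests (here it only logs them)."""

    def __init__(self):
        self.log = []

    def publish(self, object_class):
        self.log.append(("publish", object_class))
        return f"published {object_class}"


class MethodRequest:
    """A queued invocation: which servant method to run, with which arguments."""

    def __init__(self, servant, method, args, handle):
        self.servant, self.method, self.args, self.handle = servant, method, args, handle

    def call(self):
        result = getattr(self.servant, self.method)(*self.args)
        self.handle.set_result_value(result)


class Scheduler:
    """Role of CompoHLAScheduler: enqueue() from the client, dispatch() from the simulation."""

    def __init__(self):
        self._queue = queue.Queue()

    def enqueue(self, request):
        self._queue.put(request)

    def dispatch(self):
        # Execute every request queued so far, in FIFO order, on the calling thread.
        while True:
            try:
                request = self._queue.get_nowait()
            except queue.Empty:
                return
            request.call()


class ComponentProxy:
    """Role of ComponentHLA: turns client calls into queued MethodRequests."""

    def __init__(self):
        self.servant = Servant()
        self.scheduler = Scheduler()

    def publish(self, object_class):
        handle = ResultHandle()
        self.scheduler.enqueue(MethodRequest(self.servant, "publish", (object_class,), handle))
        return handle  # returned immediately; nothing has executed yet


def simulation_loop(component, steps):
    """Stand-in for the simulation thread: after each step it lets the scheduler run."""
    for _ in range(steps):
        # One time step of the wrapped model would be computed here.
        component.scheduler.dispatch()


component = ComponentProxy()
handle = component.publish("Star")  # the client call returns at once
sim = threading.Thread(target=simulation_loop, args=(component, 3))
sim.start()
sim.join()
print(handle.get(timeout=1.0))      # published Star
```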

6.4.4 CompoHLA Component Users

There are two different groups of users of the CompoHLA system: developers who create components and scientists who actually use them (component users).

The component developer can wrap a custom legacy simulation module using the CompoHLA library as described by Rycerz et al. (2008a, 2008b) so that it can be easily plugged into the distributed multiscale simulation. The use of the CompoHLA library does not free the developer from understanding HLA time management and data exchange mechanisms; however, it considerably simplifies their use and enables the HLA component to be steered from the user code.

The component user can use a scripting language to dynamically set up a distributed multiscale simulation comprising selected HLA components. The user can also decide which components will participate in the simulation and can dynamically plug them into and unplug them from running simulations. Additionally, the user can decide how components will interact with one another (e.g., by setting up appropriate HLA subscription/publication and time management mechanisms) and can change the nature of their interactions during run time.

6.5 CASE STUDY WITH THE MUSE APPLICATION

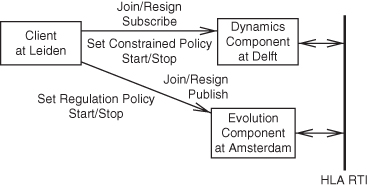

The implementation of the CompoHLA environment is based on the H2O technology. The functionality of the CompoHLA system is presented here using the example of a multiscale dense stellar system simulation. We have used simulation modules with different timescales taken from MUSE, as described in Section 6.3.1. Our experiment compares the execution of legacy sequential MUSE with its distributed version using HLA components on the grid. We make use of the Dutch grid infrastructure DAS3. Components are built from two MUSE modules: star evolution simulation (macroscale) and star dynamics simulation (mesoscale), which run concurrently to demonstrate the type of time interaction described in Section 6.2.1.1, namely, concurrent execution with the conservative approach. This experiment is a good example of using the HLA time management features when one simulation controls the timing of another. The dynamics module should obtain updates from the evolution module before it actually passes the appropriate point in time. The HLA time management mechanism (High Level Architecture specification, IEEE 1516), in which the regulating federate (evolution) controls the time flow in the constrained federate (dynamics), is therefore very useful. We have created a sample simulation consisting of a hundred stars. Data representing the mass, radius, 3-D position, and velocity of the stars were exchanged between the evolution and dynamics modules. Both HLA components were asked to join the simulation and set their time policies (time regulating for evolution, time constrained for dynamics); the evolution component was asked to publish data and the dynamics component to subscribe to them. Subsequently, the components were asked to unset their time policies, resign from the simulation, and stop. Figure 6.8 shows a sample user script (JRuby file) that performs these steps.

Figure 6.8 A fragment of a sample user JRuby script. The script shows the setup and steering of a multiscale simulation consisting of two HLA components that contain dynamics (mesoscale) and evolution (macroscale) models.
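To give a feel for the volume of data involved, the sketch below packs the per-star attributes listed above into a fixed binary record. The record layout (eight little-endian doubles: mass, radius, three position and three velocity components) and the `StarUpdate` name are assumptions for illustration; the chapter does not state how attributes were encoded, and an HLA RTI applies its own encoding.

```python
import struct
from dataclasses import dataclass

# Assumed wire format: 8 little-endian IEEE-754 doubles per star.
STAR_FORMAT = "<8d"


@dataclass
class StarUpdate:
    """Hypothetical record for the per-star data exchanged between modules."""
    mass: float
    radius: float
    position: tuple  # (x, y, z)
    velocity: tuple  # (vx, vy, vz)

    def pack(self):
        return struct.pack(STAR_FORMAT, self.mass, self.radius, *self.position, *self.velocity)

    @classmethod
    def unpack(cls, data):
        m, r, x, y, z, vx, vy, vz = struct.unpack(STAR_FORMAT, data)
        return cls(m, r, (x, y, z), (vx, vy, vz))


stars = [StarUpdate(1.0, 1.0, (float(i), 0.0, 0.0), (0.0, 1.0, 0.0)) for i in range(100)]
payload = b"".join(star.pack() for star in stars)
print(struct.calcsize(STAR_FORMAT), len(payload))  # 64 6400
```

Under this assumption, a full update for the hundred stars is 6,400 bytes of raw payload, before any RTI framing.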

In our implementation, we have used H2O v2.1 and HLA CERTI implementation v3.3.2. The client script was written in JRuby 1.5.0.RC3 (Ruby 1.8.7). Experiments were performed using DAS3. MUSE sequential execution was run on a grid node in Delft. The actual setup of the HLA component experiment is shown in Figure 6.9.

Figure 6.9 Setup of the HLA component experiment. Elements of the CompoHLA environment are installed on different sites on the DAS3 e-infrastructure and are called by the client script.

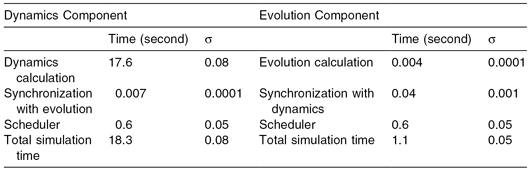

The component client was run on a grid node in Leiden; the dynamics component was run on a grid node in Delft; the evolution component was run at UvA, Amsterdam; and the HLA control process was run at Vrije Universiteit Amsterdam. All grid nodes shared a similar architecture (dual AMD Opteron compute nodes, 2.4 GHz, 4-GB RAM). DAS3 employs a novel internal wide-area interconnect based on light paths between its grid sites. A comparison between the distributed version using HLA components and the sequential MUSE Python scheduler is shown in Tables 6.2 and 6.3. The overhead of using the scheduler and HLA communication mechanisms (time management and data transfer) was very low in comparison to the actual calculations. Note that the computation time of the dynamics component was far more significant than the evolution time; therefore, dynamics was the dominant factor in the total simulation time in both cases (concurrent and sequential execution). The performance results indicate that the overhead of the HLA-based distribution (especially its repeating part involving synchronization between multiscale elements) was low and that HLA can be beneficial for multiscale simulations. Moreover, the scheduler-based design, which manages external requests, did not produce much overhead.

TABLE 6.2 Stellar Dynamics and Evolution: Multiscale Simulation Time Using Original MUSE Sequential Execution Steered by Python Scheduler

| Sequential MUSE | Time (s) | σ (s) |
|---|---|---|
| Dynamics calculation | 18.1 | 0.6 |
| Evolution calculation | 0.004 | 0.0001 |
| Data update evolution–dynamics | 0.01 | 0.001 |
| Total simulation time | 18.1 | 0.6 |

TABLE 6.3 Stellar Dynamics and Evolution: Multiscale Simulation Time Using Distributed HLA Components

Table 6.4 shows the execution time (in milliseconds) of requests issued to the HLA components from the client perspective, measured in the JRuby script from Figure 6.8. The most time-consuming part was the creation of the remote manager and the components, as it required loading code. Adding a config file to the manager and preparing join requests were also time-consuming, as these operations required transferring config files.

TABLE 6.4 Timing of Requests to the Modules of the CompoHLA Environment Issued from the JRuby Script

| Request | Time (ms) | σ (ms) |
|---|---|---|
| Create remote manager | 2020 | 36 |
| Create dynamics component | 2120 | 23 |
| Create evolution component | 2150 | 27 |
| Add config to the manager | 150 | 39 |
| Join evolution | 140 | 9 |
| Join dynamics | 171 | 3 |
| Publish evolution | 4 | 0.1 |
| Subscribe dynamics | 5 | 0.4 |
| Set time policy evolution | 4 | 0.1 |
| Set time policy dynamics | 5 | 0.4 |
| Start evolution | 4 | 0.4 |
| Start dynamics | 7 | 0.6 |
| Unset time policy evolution | 5 | 0.4 |
| Unset time policy dynamics | 9 | 0.4 |
| Resign evolution | 5 | 0.2 |
| Resign dynamics | 8 | 0.9 |
| Stop evolution | 3 | 0.4 |
| Stop dynamics | 8 | 0.4 |

Other requests to the components were on the order of several milliseconds. The requests were called in an asynchronous fashion so that during the call, they were only scheduled in the queue. Actual work was performed by the scheduler, with timing presented in Table 6.3. The results of the experiment showed that the execution time of requests was relatively low and that the component layer did not introduce significant overhead.
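The client-side figures in Table 6.4 can be aggregated with a few lines of Python. The grouping below is ours, and the sums simply add the mean values, assuming the requests are issued one after another; the standard deviations are not combined.

```python
# Mean client-side request times from Table 6.4, in milliseconds.
TIMINGS_MS = {
    "Create remote manager": 2020,
    "Create dynamics component": 2120,
    "Create evolution component": 2150,
    "Add config to the manager": 150,
    "Join evolution": 140,
    "Join dynamics": 171,
    "Publish evolution": 4,
    "Subscribe dynamics": 5,
    "Set time policy evolution": 4,
    "Set time policy dynamics": 5,
    "Start evolution": 4,
    "Start dynamics": 7,
    "Unset time policy evolution": 5,
    "Unset time policy dynamics": 9,
    "Resign evolution": 5,
    "Resign dynamics": 8,
    "Stop evolution": 3,
    "Stop dynamics": 8,
}

total = sum(TIMINGS_MS.values())
creation = sum(v for k, v in TIMINGS_MS.items() if k.startswith("Create"))
config_and_join = sum(v for k, v in TIMINGS_MS.items() if k.startswith(("Add config", "Join")))
steering = total - creation - config_and_join  # publish through stop

print(total, creation, config_and_join, steering)  # 6818 6290 461 67
print(f"creation share: {creation / total:.0%}")    # creation share: 92%
```

Under these assumptions, creating the remote manager and the two components accounts for about 92% of the roughly 6.8 s spent in client requests, whereas all steering requests together (publish through stop) take about 67 ms.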

6.6 SUMMARY AND FUTURE WORK

In this chapter, we presented an environment supporting the HLA component model that enables the user to dynamically compose and decompose distributed simulations from multiscale elements residing on e-infrastructures. The simulation models, encapsulated in software components, can use advanced HLA mechanisms such as time and data management, which is especially helpful when joining models that operate on different timescales. The elements of the environment are programmatically accessible via scripting interfaces. We demonstrated the prototype functionality on a sample multiscale simulation of a dense stellar system in the MUSE environment. The results of the experiment show that the component layer introduces little overhead.
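Why time management helps when joining timescales can be shown with a toy model of conservative time advance. The sketch below assumes a simplified rule: a component may advance to time t only if t does not exceed, for every other component, its current time plus its lookahead (its promise not to send anything earlier). A real HLA RTI (IEEE 1516) applies more detailed grant rules; the `Federate` class, `safe_bound`, `run`, and the step and lookahead values (0.25 for dynamics, 1.0 for evolution) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Federate:
    """A toy component that is both time-regulating and time-constrained."""
    name: str
    step: float       # size of one model time step
    lookahead: float  # promise: no future message earlier than time + lookahead
    time: float = 0.0


def safe_bound(federates, requester):
    """Latest time `requester` may reach without risking a message from its
    past: the minimum of (time + lookahead) over all other federates."""
    return min(f.time + f.lookahead for f in federates if f is not requester)


def run(federates, end_time):
    """Grant every safe time-advance request until all federates reach
    end_time; return the granted advances as (name, new_time) pairs."""
    grants = []
    while any(f.time < end_time for f in federates):
        progressed = False
        for f in federates:
            target = f.time + f.step
            if f.time < end_time and target <= safe_bound(federates, f):
                f.time = target
                grants.append((f.name, f.time))
                progressed = True
        if not progressed:
            raise RuntimeError("no federate can advance (deadlock)")
    return grants


if __name__ == "__main__":
    dynamics = Federate("dynamics", step=0.25, lookahead=0.25)
    evolution = Federate("evolution", step=1.0, lookahead=1.0)
    for name, t in run([dynamics, evolution], end_time=2.0):
        print(f"{name:9s} -> {t}")
```

Here the coarse evolution model is held back until the fine-grained dynamics model has progressed far enough (to 0.75 before evolution reaches 1.0), so neither model can receive data from its own past, without either model having to know the other's time step.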

The HLA components described in this chapter are designed to facilitate the composability of simulation models by means of HLA mechanisms that are accessible and steerable from the user layer. However, to fully exploit this composability, we are currently integrating them with the GridSpace Virtual Laboratory.13 GridSpace provides an experiment workbench for constructing experiment plans from code snippets; it supports multiple interpreters and enables access to computing infrastructures. The basic integration step is straightforward: the client script setting up the HLA components (shown in Figure 6.8) can be run directly as a GridSpace experiment, which connects to the H2O component containers installed on the e-infrastructure. Integration with GridSpace also makes it possible to issue commands to HLA components interactively, because GridSpace supports step-by-step programming in which the steps are not known in advance but depend on previous results. This feature is especially useful for long-running simulation models that produce partial output.

We plan to use GridSpace to access other European e-infrastructures (e.g., EGEE) in addition to DAS2, and to use the common GridSpace services (gems) for sharing and reusing components. In the future, partial results of long-running components will be accessible directly from the GridSpace workbench through its interface to a Web application that displays the output and error streams of components.

Future work will also include a description language for connecting HLA components. Currently, we use the HLA Federation Object Model (FOM) to define the structures of the data objects and events that need to be passed between HLA components. This approach should be extended to provide more information about the scales of the modules. Moreover, module descriptions should be flexible enough to support the simpler data types (e.g., arrays) frequently used in legacy implementations of simulation models.
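As a rough illustration of what such a description could carry, the sketch below attaches an array type, an array shape, and a time step to each port of a module and checks two of them when a connection is made. Everything here is hypothetical: the `Port` and `ModuleDescription` schema, the `check_connection` rules (matching type and shape, time steps that are integer multiples of each other), and the example values (1000 stars, steps of 1.0 and 0.25) are assumptions for illustration, not a design proposed in this chapter.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Port:
    """One data item a module exchanges (hypothetical schema)."""
    dtype: str        # element type, e.g. "float64"
    shape: tuple      # array shape; () denotes a scalar
    time_step: float  # model time between two consecutive exchanges


@dataclass
class ModuleDescription:
    name: str
    inputs: dict = field(default_factory=dict)   # port name -> Port
    outputs: dict = field(default_factory=dict)  # port name -> Port


def check_connection(src, out_name, dst, in_name):
    """Return the problems found when wiring src.out_name to dst.in_name."""
    out, inp = src.outputs[out_name], dst.inputs[in_name]
    problems = []
    if (out.dtype, out.shape) != (inp.dtype, inp.shape):
        problems.append("data type or array shape mismatch")
    fine, coarse = sorted((out.time_step, inp.time_step))
    ratio = coarse / fine
    if abs(ratio - round(ratio)) > 1e-9:
        problems.append("time steps are not integer multiples of each other")
    return problems


if __name__ == "__main__":
    evolution = ModuleDescription(
        "evolution", outputs={"masses": Port("float64", (1000,), 1.0)})
    dynamics = ModuleDescription(
        "dynamics", inputs={"masses": Port("float64", (1000,), 0.25)})
    print(check_connection(evolution, "masses", dynamics, "masses"))  # []
```

Keeping scale information next to plain array types would let a tool reject an incompatible coupling before any component is deployed, rather than at run time.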

ACKNOWLEDGMENTS

The authors wish to thank Alfons Hoekstra and Jan Hegewald for discussions on MUSCLE, Simon Portegies Zwart for valuable discussions on MUSE and our colleagues for input concerning GridSpace. The authors are also very grateful to Piotr Nowakowski for his suggestions. The research presented in this chapter was partially supported by the European Union in the EFS PO KL Pr. IV Activity 4.1 Subactivity 4.1.1 project UDA-POKL.04.01.01-00-367/08-00 “Improvement of the Didactic Potential of the AGH University of Science and Technology—Human Assets” and also by the MAPPER project—grant agreement no. 261507, 7FP UE.

Notes

1 Web services: http://www.w3.org/TR/ws-arch/.

2 The Simulation Interoperability Standards Organization (SISO) home page: http://www.sisostds.org/.

3 BOM standards home page: http://www.boms.info/standards.htm.

4 High Level Architecture specification—IEEE 1516.

5 OASIS WSBPEL Technical Committee. Web Services Business Process Execution Language Version 2.0, April 2007. http://docs.oasis-open.org/wsbpel/2.0/OS/wsbpel-v2.0-OS.html.

6 Virolab home page: http://virolab.cyfronet.pl.

7 G. Barber. Service Component Architecture Home, 2007. http://osoa.org/display/Main/Service+Component+Architecture+Home.

9 Web services: http://www.w3.org/TR/ws-arch/.

10 WSRF home page: http://www.globus.org/wsrf/.

11 The Distributed ASCI Supercomputer 3 Web site: http://www.cs.vu.nl/das3.

12 StarPlane home page: http://www.starplane.org/.

REFERENCES

I. Altintas, E. Jaeger, K. Lin, et al. A Web service composition and deployment framework for scientific workflows. In ICWS’04: Proc. of the IEEE Int’l Conference on Web Services, p. 814, Washington, DC: IEEE Computer Society, 2004.

R. Armstrong, G. Kumfert, L. Curfman McInnes, et al. The CCA component model for high-performance scientific computing. Concurrency and Computation: Practice and Experience, 18(2):215–229, 2006.

A. Caiazzo, D. Evans, J.-L. Falcone, et al. Towards a complex automata multiscale model of in-stent restenosis. In G. Allen et al., editors, ICCS’09: Proc. of the 9th Int’l Conference on Computational Science, pp. 705–714, Berlin: Springer-Verlag, 2009.

T. Fahringer, A. Jugravu, S. Pllana, et al. ASKALON: A tool set for cluster and grid computing: Research articles. Concurrency and Computation: Practice and Experience, 17(2–4):143–169, 2005.

I. Foster, C. Kesselman, and S. Tuecke. The anatomy of the grid: Enabling scalable virtual organizations. International Journal of High Performance Computing Applications, 15(3):200–222, 2001.

I. Foster, C. Kesselman, J. M. Nick, et al. The physiology of the grid: An Open Grid Services Architecture for distributed systems integration. In Open Grid Service Infrastructure WG, Global Grid Forum, June 2002.

F. Baude, D. Caromel, C. Dalmasso, et al. GCM: A grid extension to Fractal for autonomous distributed components. Annales des Télécommunications, 64(1–2):5–24, 2009.

D. Groen, S. Harfst, and S. Portegies Zwart. The living application: A self-organizing system for complex grid tasks. International Journal of High Performance Computing Applications, 24(2):185–193, 2010.

J. Hegewald, M. Krafczyk, J. Tölke, et al. An agent-based coupling platform for complex automata. In M. Bubak et al., editors, ICCS’08: Proc. of the 8th Int’l Conference on Computational Science, Part II, pp. 227–233, Berlin: Springer-Verlag, 2008.

S. Hirsch, D. Szczerba, B. Lloyd, et al. A mechano-chemical model of a solid tumor for therapy outcome predictions. In G. Allen et al., editors, ICCS’09: Proc. of the 9th Int’l Conference on Computational Science, pp. 715–724, Berlin: Springer-Verlag, 2009.

A. G. Hoekstra, E. Lorenz, J.-L. Falcone, et al. Toward a complex automata formalism for multi-scale modeling. International Journal for Multiscale Computational Engineering, 5(6):491–502, 2007.

D. Kurzyniec et al. Towards self-organizing distributed computing frameworks: The H2O approach. Parallel Processing Letters, 13(2):273–290, 2003.

J. Larson, R. Jacob, and E. Ong. The Model Coupling Toolkit: A new Fortran90 toolkit for building multiphysics parallel coupled models. International Journal of High Performance Computing Applications, 19(3):277–292, 2005.

G. Lavender and D. C. Schmidt. Active object: An object behavioral pattern for concurrent programming. In Proc. Pattern Languages of Programs, 1995.

J. Makino, E. Kokubo, and T. Fukushige. Performance evaluation and tuning of GRAPE-6—towards 40 “real” Tflops. In SC’03: Proc. of the 2003 ACM/IEEE Conference on Supercomputing, p. 2, Washington, DC: IEEE Computer Society, 2003.

M. Malawski, T. Bartyński, and M. Bubak. Invocation of operations from script-based grid applications. Future Generation Computer Systems, 26(1):138–146, 2010.

M. Malawski, M. Bubak, M. Placek, et al. Experiments with distributed component computing across grid boundaries. In Proc. of the HPC-GECO/CompFrame Workshop in Conjunction with HPDC 2006, Paris, France, 2006.

M. Pharr and R. Fernando. GPU Gems 2: Programming Techniques for High-Performance Graphics and General-Purpose Computation (GPU Gems). Boston: Addison-Wesley Professional, 2005.

S. Portegies Zwart, S. McMillan, B. Ó Nualláin, et al. A multiphysics and multiscale software environment for modeling astrophysical systems. In M. Bubak et al., editors, ICCS’08: Proc. of the 8th Int’l Conference on Computational Science, Part II, pp. 207–216, Berlin: Springer-Verlag, 2008.

G. E. Pound, M. H. Eres, J. L. Wason, et al. A grid-enabled problem solving environment (PSE) for design optimisation within Matlab. In IPDPS’03: Proc. of the 17th Int’l Symposium on Parallel and Distributed Processing, p. 50.1, Washington, DC: IEEE Computer Society, 2003.

L. Qi, H. Jin, I. Foster, et al. HAND: Highly Available Dynamic Deployment Infrastructure For Globus Toolkit 4. In P. D’Ambra and M. R. Guarracino, editors, PDP’07: Proc. of the 15th Euromicro Int’l Conference on Parallel, Distributed and Network-Based Processing, pp. 155–162, Washington, DC: IEEE Computer Society, 2007.

R. Buyya and R. Ranjan. Special section: Federated resource management in grid and cloud computing systems. Future Generation Computer Systems, 26:1189–1191, 2010.

K. Rycerz, M. Bubak, and P. M. A. Sloot. HLA component-based environment for distributed multiscale simulations. In T. Priol and M. Vanneschi, editors, From Grids to Service and Pervasive Computing, pp. 229–239, Berlin: Springer-Verlag, 2008a.

K. Rycerz, M. Bubak, and P. M. A. Sloot. Dynamic interactions in HLA component model for multiscale simulations. In M. Bubak et al., editors, ICCS’08: Proc. of the 8th Int’l Conference on Computational Science, Part II, pp. 217–226, Berlin: Springer-Verlag, 2008b.

K. Rycerz, M. Bubak, and P. M. A. Sloot. Using HLA and grid for distributed multiscale simulations. In R. Wyrzykowski et al., editors, PPAM’07: Proc. of the 7th Int’l Conference on Parallel Processing and Applied Mathematics, pp. 780–787, Berlin: Springer-Verlag, 2008c.