1.4 Styles of Programming

We use the term “styles of programming” rather than perhaps the more common/conventional, but antiquated, term “paradigm of programming.” See Section 1.4.6 for an explanation.

1.4.1 Imperative Programming

The primary method of describing/effecting computation in an imperative style of programming is through the execution of a sequence of commands or imperatives that use assignment to modify the values of variables, which are themselves abstractions of memory cells. In C and Fortran, for example, the primary mode of programming is imperative in nature. The imperative style of programming is a natural consequence of basing a computer on the von Neumann architecture, which is defined by its uniform representation of both instructions and data in main memory and its use of a fetch–decode–execute cycle. (While the Turing machine is an abstract model that captures the notion of mechanical computation, the von Neumann architecture is a practical design model for actual computers. The concept of a Turing machine was developed in 1935–1937 by Alan Turing and published in 1937. The von Neumann architecture was articulated by John von Neumann in 1945.)

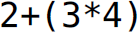

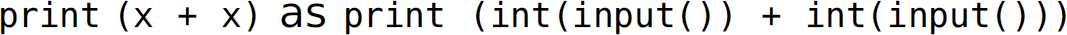
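To make the fetch–decode–execute cycle concrete, the following is a toy machine sketched in Python, the language this section uses for its examples. The instruction encoding (opcode × 100 + address) and the opcodes LOAD, ADD, STORE, and HALT are hypothetical, chosen only so that instructions and data can share one memory of plain integers, mirroring the uniform representation described above.

```python
# Hypothetical opcodes for a toy machine; an instruction word is opcode * 100 + address.
LOAD, ADD, STORE, HALT = 1, 2, 3, 0


def run(memory: list[int]) -> list[int]:
    """Fetch-decode-execute loop over a single memory holding code and data."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator register
    while True:
        word = memory[pc]                     # fetch
        pc += 1
        opcode, address = divmod(word, 100)   # decode
        if opcode == LOAD:                    # execute
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == STORE:
            memory[address] = acc             # assignment: modifies a memory cell
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode}")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [104, 205, 306, 0, 2, 3, 0]
print(run(program)[6])  # prints 5
```

Because instructions are just integers in the same memory as data, nothing in this sketch distinguishes the two except how the program counter happens to reach them.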

The main mechanism used to effect computation in the imperative style is the assignment operator. A discussion of the difference between statements and expressions in programs helps illustrate alternative ways to perform such computation. Expressions are evaluated for their value, which is returned to the next encompassing expression. For instance, the subexpression ![]() in the expression

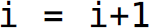

in the expression  returns the integer 12, which becomes the second operand to the addition operator. In contrast, the statement

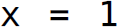

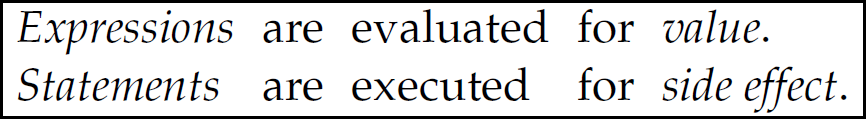

returns the integer 12, which becomes the second operand to the addition operator. In contrast, the statement  has no return value.1 After that statement is executed, evaluation proceeds with the following statement (i.e., sequential execution). Expressions are evaluated for values while statements are executed for side effect (Table 1.2). A side effect is a modification of a parameter to a function or operator, or an entity in the external environment (e.g., a change to a global variable or performing I/O, which changes the nature of the input stream/file). The primary way to perform computation in an imperative style of programming is through side effect. The assignment statement inherently involves a side effect. For instance, the execution of statement

has no return value.1 After that statement is executed, evaluation proceeds with the following statement (i.e., sequential execution). Expressions are evaluated for values while statements are executed for side effect (Table 1.2). A side effect is a modification of a parameter to a function or operator, or an entity in the external environment (e.g., a change to a global variable or performing I/O, which changes the nature of the input stream/file). The primary way to perform computation in an imperative style of programming is through side effect. The assignment statement inherently involves a side effect. For instance, the execution of statement  changes the first parameter (i.e., x) to the = assignment operator to 1. I/O also inherently involves a side effect. For instance, consider the following Python program:

changes the first parameter (i.e., x) to the = assignment operator to 1. I/O also inherently involves a side effect. For instance, consider the following Python program:

Table 1.2 Expressions Vis-à-Vis Statements

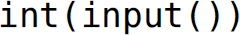

If the input stream contains the integer 1 followed by the integer 2, readers accustomed to imperative programming might predict the output of this program to be 2 because the input function executes only once, reads the value 1,2 and stores it in the variable x. However, one might interpret the line  , since x stands for

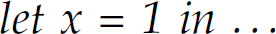

, since x stands for  . With this interpretation, one might predict the output of the program to be 3, where the first and second invocations to input() read 1 and 2, respectively. While mathematics involves binding (e.g.,

. With this interpretation, one might predict the output of the program to be 3, where the first and second invocations to input() read 1 and 2, respectively. While mathematics involves binding (e.g.,  ), mathematics does not involve assignment.3

), mathematics does not involve assignment.3
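The program under discussion is not reproduced in this text, so the following sketch assumes it binds x to int(input()) and then prints x + x. The helper make_reader is hypothetical: it stands in for input() so that the input stream 1, 2 can be supplied without a console. The two functions contrast the imperative reading with the substitution reading.

```python
from typing import Callable, Iterator


def make_reader(values: list[str]) -> Callable[[], str]:
    """Hypothetical stand-in for input(): each call consumes the next value,
    which is a side effect on the (simulated) input stream."""
    stream: Iterator[str] = iter(values)
    return lambda: next(stream)


def imperative_reading(read: Callable[[], str]) -> int:
    # Assignment: input is read once and the result is stored in x.
    x = int(read())
    return x + x


def substitution_reading(read: Callable[[], str]) -> int:
    # Mathematical reading: each occurrence of x stands for the input
    # expression itself, so the stream is read twice.
    return int(read()) + int(read())


print(imperative_reading(make_reader(["1", "2"])))    # prints 2
print(substitution_reading(make_reader(["1", "2"])))  # prints 3
```

The two readings agree only when reading from the stream has no side effect, which is exactly what I/O cannot guarantee.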

The latter interpretation of the program might seem unnatural to most readers. For readers who are largely familiar with the imperative style of programming, describing computation through side effect is so fundamental to their view of programming, and so unconsciously integrated into their programming activities, that this interpretation seems entirely foreign. However, that interpretation might seem entirely natural to a mathematician or someone who has no experience with programming.

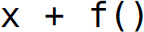

Side effects also make a program difficult to understand. For instance, consider the following Python program:

Function f has a side effect: After f is called, the global variable x has value 2, which is different from the value it had prior to the call to f. As a result, the output of this program depends on the order in which the operands to the addition operator are evaluated. However, the result of a commutative operation, like addition, is not dependent on the order in which its operands are evaluated (i.e., a + b = b + a). If the operands are evaluated from left to right (i.e., Python semantics), the output of this program is 3. If the operands are evaluated from right to left, the output is 4.
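Because the program itself is not reproduced here, the following is a reconstruction under stated assumptions: x starts at 1, and f increments the global x and returns its new value, which agrees with the outputs of 3 and 4 described above. Python always evaluates the left operand first, so the right-to-left order is simulated explicitly with temporaries.

```python
x = 1  # assumed initial value of the global variable


def f() -> int:
    """Side effect: modify the global x, then return its new value."""
    global x
    x = x + 1
    return x


def left_to_right() -> int:
    """Python semantics: x is evaluated (1) before f() is called (2)."""
    global x
    x = 1
    return x + f()


def right_to_left() -> int:
    """Simulated right-to-left evaluation: call f() first, then read x."""
    global x
    x = 1
    right = f()  # x becomes 2; right is 2
    left = x     # reads the modified x, i.e., 2
    return left + right


print(left_to_right())  # prints 3
print(right_to_left())  # prints 4
```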

The concept of side effect is closely related to, yet distinct from, the concept of referential transparency. Expressions and languages are said to be referentially transparent (i.e., independent of evaluation order) if the same arguments/operands to a function/operator yield the same output irrespective of the context/environment in which the expression applying the function/operator is evaluated. The Python function f given previously has a side effect, and the expression in that program that adds x to the result of calling f is not referentially transparent. The absence of side effects is not sufficient to guarantee referential transparency (Conceptual Exercise 1.8).
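A small contrast may help. The hypothetical functions square and next_id below differ in exactly the property just defined: a call to square with a given argument yields the same result wherever it appears, whereas next_id, which has a side effect, returns a different value on each call with the same (empty) arguments.

```python
counter = 0  # hypothetical global state used by next_id


def square(n: int) -> int:
    """No side effect: the result depends only on the argument."""
    return n * n


def next_id() -> int:
    """Side effect: modifies the global counter on every call."""
    global counter
    counter += 1
    return counter


# Same argument, same result, in any context.
print(square(4), square(4))  # prints 16 16

# Same (empty) arguments, different results: each call both depends on
# and changes the environment in which it is evaluated.
first = next_id()
second = next_id()
print(first, second)  # prints 1 2
```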

Since the von Neumann architecture gave rise to an imperative mode of programming, most early programming languages (e.g., Fortran and COBOL), save for Lisp, supported primarily that style of programming. Moreover, programming languages evolved based on the von Neumann model. However, the von Neumann architecture has certain inherent limitations. Since a processor can execute program instructions much faster than program instructions and program data can be moved from main memory to the processor, the I/O between the processor and memory (referred to as the von Neumann bottleneck) limits the speed of program execution. A second limitation stems from a premise central to the von Neumann architecture: computation must be described as a sequence of instructions, each operating on a single piece of data. The von Neumann architecture is not a natural model for non-imperative styles of describing computation. For instance, recursion, nondeterministic computation, and parallel computation do not align with the von Neumann model.4,5

Imperative programming is programming by side effect; functional programming is programming without side effect. Functional programming involves describing and performing computation by calling functions that return values. Programmers from an imperative background may find it challenging to conceive of writing a program without variables and assignment statements. Not only is such a mode of programming possible, but it leads to a compelling higher-order style of program construction, where functions accept other functions as arguments and can return a function as a return value. As a result, a program is conceived as a collection of highly general, abstract, and reusable functions that build other functions, which collectively solve the problem at hand.
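As a minimal sketch of this higher-order style in Python, compose below accepts two functions as arguments and returns a new function as its value. The names compose, inc, and double are illustrative and do not come from the text.

```python
from typing import Callable, TypeVar

A = TypeVar("A")
B = TypeVar("B")
C = TypeVar("C")


def compose(g: Callable[[B], C], f: Callable[[A], B]) -> Callable[[A], C]:
    """Return the function that applies f and then g to its argument."""
    return lambda x: g(f(x))


def inc(n: int) -> int:
    return n + 1


def double(n: int) -> int:
    return 2 * n


# Functions are passed to compose, and compose returns a function.
print(compose(inc, double)(5))  # prints 11
print(compose(double, inc)(5))  # prints 12
```

No variable is updated anywhere; each result flows from one function's return value into the next.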

1.4.2 Functional Programming

While the essential element in imperative programming is the assignment statement, the essential ingredient in functional programming is the function. Functions in languages supporting a functional style of programming are first-class entities. In programming languages, a first-class entity is a program object that has privileges that other comparable program entities do not have.6 The designation of a language entity as first-class generally means that the entity can be expressed in the source code of the program and has a value at run-time that can be manipulated programmatically (i.e., within the source code of the program). Traditionally, this has meant that a first-class entity can be stored (e.g., in a variable or data structure), passed as an argument, and returned as a value. For instance, in many modern programming languages, functions are first-class entities because they can be created and manipulated at run-time through the source code. Conversely, labels in C passed to goto do not have run-time values and, therefore, are not first-class entities. Similarly, a class in Java does not have a manipulatable value at run-time and is not a first-class entity. In contrast, a class in Smalltalk does have a value that can be manipulated at run-time, so it is a first-class entity.
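Python functions satisfy the traditional criteria for first-class status listed above. The sketch below, with hypothetical names make_adder and apply_twice, creates a function at run-time, stores it in a variable and in a dictionary, passes it as an argument, and returns it as a value.

```python
from typing import Callable


def make_adder(k: int) -> Callable[[int], int]:
    """Create a new function at run-time and return it as a value."""
    return lambda n: n + k


def apply_twice(fn: Callable[[int], int], n: int) -> int:
    """Accept a function as an argument and call it twice."""
    return fn(fn(n))


add3 = make_adder(3)                         # stored in a variable
table = {"add3": add3, "neg": lambda n: -n}  # stored in a data structure
print(apply_twice(add3, 10))                 # passed as an argument; prints 16
print(table["neg"](7))                       # prints -7
```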

In a functional style of programming, the programmer describes computation primarily by calling a series of functions that cascade a set of return values to each other. Functional programming typically does not involve variables and assignment, so side effects are absent from programs developed using a functional style. Since side effect is fundamental to sequential execution, statement blocks, and iteration, a functional style of programming utilizes recursion as a primary means of repetition. The functional style of programming was pioneered in the Lisp programming language, designed by John McCarthy in 1958 at MIT (McCarthy 1960). Scheme and Common Lisp are dialects of Lisp. Scheme, in particular, is an ideal vehicle for exploring language semantics and implementing language concepts. For instance, we use Scheme in this text to implement recursion from first principles, as well as a variety of other language concepts. In contrast to the von Neumann architecture, the Lisp machine is a predecessor to modern single-user workstations. ML, Haskell, and F# also primarily support a functional style of programming.
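The shift from iteration to recursion can be seen in a small example. The sketch below uses Python for consistency with the section's other examples rather than Scheme; the function names are hypothetical. The first version repeats by looping and assigning; the second has no assignment and builds its result from the return value of a recursive call.

```python
def sum_iterative(nums: list[int]) -> int:
    """Imperative repetition: a loop that repeatedly assigns to total."""
    total = 0
    for n in nums:
        total = total + n
    return total


def sum_recursive(nums: list[int]) -> int:
    """Functional repetition: no assignment; the result is built from
    the return value of a recursive call on the rest of the list."""
    return 0 if not nums else nums[0] + sum_recursive(nums[1:])


print(sum_iterative([1, 2, 3, 4]))  # prints 10
print(sum_recursive([1, 2, 3, 4]))  # prints 10
```

Python does not eliminate tail calls and limits recursion depth, so the recursive version is illustrative rather than practical for long lists; languages designed for a functional style, such as Scheme, handle this differently.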

Functional programming is based on lambda-calculus (hereafter referred to as λ-calculus), a mathematical theory of functions developed in 1928–1929 by Alonzo Church and published in 1932.7 Like the Turing machine, λ-calculus is an abstract mathematical model capturing the notion of mechanical computation (or an algorithm). Every function that is computable (referred to as decidable) by Turing machines is also computable in (untyped) λ-calculus. One goal of functional programming is to bring the activity of programming closer to mathematics, especially to formally guarantee certain safety properties and constraints. While the criterion of sequential execution of assignment and conditional statements is sufficient to determine whether a language is Turing-complete, languages without support for sequential execution and variable assignment can also be Turing-complete. Support for (1) arithmetic operations on integer values, (2) a selection operator (i.e., a conditional), and (3) the ability to define new recursive functions from existing functions/operators are alternative and sufficient criteria to describe the computation that a Turing machine can perform. Thus, a programming language with those facilities is also Turing-complete.
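The following sketch restricts itself to the three facilities just listed: integer arithmetic, a selection operator (here Python's conditional expression), and new recursive functions defined from existing functions/operators. It uses no assignment statements and no statement sequences. The function names are illustrative, and each assumes a nonnegative second argument.

```python
def mul(a: int, b: int) -> int:
    """Multiplication for b >= 0, defined recursively from addition."""
    return 0 if b == 0 else a + mul(a, b - 1)


def power(a: int, b: int) -> int:
    """Exponentiation for b >= 0, defined recursively from mul."""
    return 1 if b == 0 else mul(a, power(a, b - 1))


def factorial(n: int) -> int:
    """Factorial for n >= 0, defined recursively from mul."""
    return 1 if n == 0 else mul(n, factorial(n - 1))


print(mul(6, 7))     # prints 42
print(power(2, 10))  # prints 1024
print(factorial(5))  # prints 120
```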

The concept of purity in programming languages also arises with respect to programming style. A language without support for side effect, including no side effect for I/O, can be considered to support a pure form of functional programming. Scheme is not pure in its support for functional programming because it has an assignment operator and I/O operators. By comparison, Haskell is nearly pure. Haskell has no support for variables or assignment, but it supports I/O in a carefully controlled way through the use of monads, which are functions that have side effects but cannot be called by functions without side effects.

Again, programming without variables or assignment may seem inconceivable to some programmers, or at least seem to be an ascetical discipline. However, modification of the value of a variable through assignment accounts for a large volume of bugs in programs. Thus, without facilities for assignment one might write less buggy code. “Ericsson’s AXD301 project, a couple million lines of Erlang code,8 has achieved 99.9999999% reliability. How? ‘No shared state and a sophisticated error-recovery model,’ Joe [Armstrong, who was a designer of Erlang] says” (Swaine 2009, p. 16). Moreover, parallelization and synchronization of single-threaded programs is easier in the absence of variables whose values change over time since there is no shared state to protect from corruption. Chapter 5 introduces the details of the functional style of programming. The imperative and functional modes of programming are not entirely mutually exclusive, as we see in Section 1.4.6.

1.4.3 Object-Oriented Programming

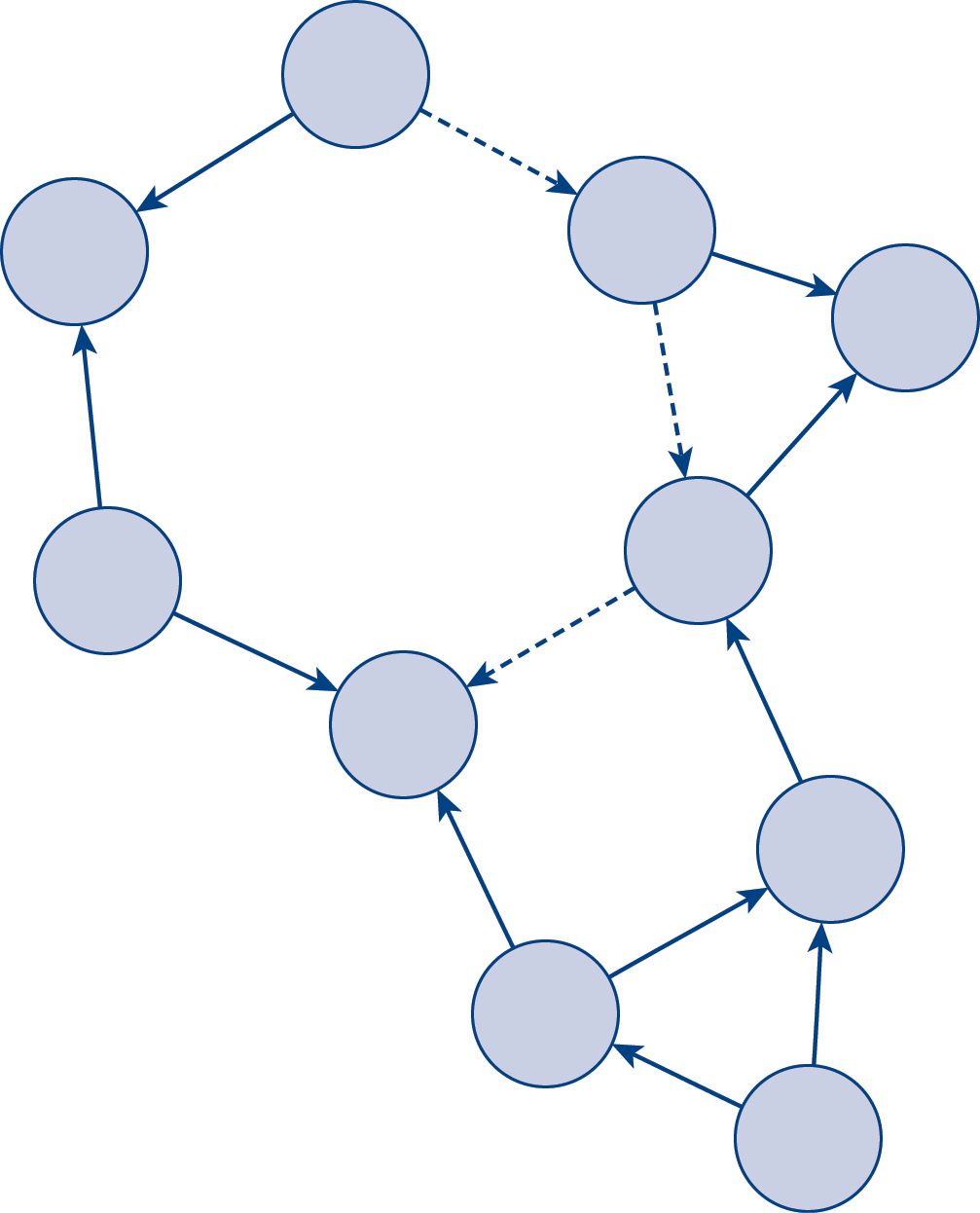

In object-oriented programming, a programmer develops a solution to a problem as a collection of objects communicating by passing messages to each other (Figure 1.1):

Figure 1.1 Conceptual depiction of a set of objects communicating by passing messages to each other to collaboratively solve a problem.

I thought of objects being like biological cells and/or individual computers on a network, only able to communicate with messages (so messaging came at the very beginning—it took a while to see how to do messaging in a programming language efficiently enough to be useful). (Kay 2003)

Objects are program entities that encapsulate data and functionality. An object-oriented style of programming typically unifies the concepts of data and procedural abstraction through the constructs of classes and objects. The object-oriented style of programming was pioneered in the Smalltalk programming language, designed by Alan Kay and colleagues in the early 1970s at Xerox PARC. While there are imperative aspects involved in object-oriented programming (e.g., assignment), the concept of a closure from functional programming (i.e., a first-class function with associated bindings) is an early precursor to an object (i.e., a program entity encapsulating behavior and state). Alan Kay (2003) has expressed that Lisp influenced his thoughts in the development of object orientation and Smalltalk. Languages supporting an object-oriented style of programming include Java, C++, and C#. A language supporting a pure style of object-oriented programming is one where all program entities are objects—including primitives, classes, and methods—and where all computation is described by passing messages between these objects. Smalltalk and languages based on the Common Lisp Object System (CLOS), including Dylan, support a pure form of object-oriented programming.
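The claim that a closure is a precursor to an object can be illustrated in Python. In the sketch below (names hypothetical), make_counter returns a function that carries its own binding for count, while the class Counter packages the same state and behavior as an object; the two are used in the same way.

```python
from typing import Callable


def make_counter() -> Callable[[], int]:
    """Closure: the returned function carries its own binding for count."""
    count = 0

    def increment() -> int:
        nonlocal count  # update the binding captured by the closure
        count += 1
        return count

    return increment


class Counter:
    """Object: state (count) encapsulated together with behavior."""

    def __init__(self) -> None:
        self.count = 0

    def increment(self) -> int:
        self.count += 1
        return self.count


c1 = make_counter()
c2 = Counter()
c1()
c2.increment()
print(c1(), c2.increment())  # prints 2 2
```

Each call to make_counter yields a fresh, private count, much as each instantiation of Counter yields a fresh object.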

Lisp (and the Lisp machine) and Smalltalk were the experimental platforms that gave birth to many of the commonly used and contemporary language features, including implicit pointer dereferencing, automatic garbage collection, run-time typing, and associated tools (e.g., interactive programming environments and pointing devices such as the mouse). Both languages significantly influenced the subsequent evolution of programming languages and, indeed, personal computing. Lisp, in particular, played an influential role in the development of other important programming languages, including Smalltalk (Kay 2003).

1.4.4 Logic/Declarative Programming

The defining characteristic of a logic or declarative style of programming is the description of what is to be computed, not how to compute it. Thus, declarative programming is largely an activity of specification, and languages supporting declarative programming are sometimes called very-high-level languages or fifth-generation languages. Languages supporting a logic/declarative style of programming have support for reasoning about facts and rules; consequently, this style of programming is sometimes referred to as rule-based. The basis of the logic/declarative style of programming is first-order predicate calculus.

Prolog is a language supporting a logic/declarative style of programming. In contrast to the von Neumann architecture, the Warren Abstract Machine is a target platform for Prolog compilers. CLIPS is also a language supporting logic/declarative programming. Likewise, programming in SQL is predominantly done in a declarative manner. A SQL query describes what data is desired, not how to find that data (i.e., developing a plan to answer the query). Usually, language support for declarative programming implies an inefficient language implementation, since declarative specification occurs at a very high level. In turn, interpreters for languages that support declarative programming typically involve multiple layers of abstraction.
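The what-versus-how distinction in SQL can be seen by answering the same question twice, using Python's standard sqlite3 module with an in-memory database. The table name lang and its columns are hypothetical, and the sample rows are illustrative data drawn from the languages discussed in this section. The SQL query states only which rows are wanted; the Python loop spells out how to find them.

```python
import sqlite3

# Illustrative sample data (hypothetical table contents).
rows = [
    ("Haskell", "functional"),
    ("Prolog", "logic/declarative"),
    ("Smalltalk", "object-oriented"),
    ("Mercury", "logic/declarative"),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE lang (name TEXT, style TEXT)")
conn.executemany("INSERT INTO lang VALUES (?, ?)", rows)

# Declarative: state WHAT is wanted; the database engine decides HOW to find it.
query = "SELECT name FROM lang WHERE style = ? ORDER BY name"
declarative = [name for (name,) in conn.execute(query, ("logic/declarative",))]
conn.close()

# Imperative: spell out HOW, step by step, with a loop and assignments.
imperative = []
for name, style in rows:
    if style == "logic/declarative":
        imperative.append(name)
imperative.sort()

print(declarative)  # prints ['Mercury', 'Prolog']
print(imperative)   # prints ['Mercury', 'Prolog']
```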

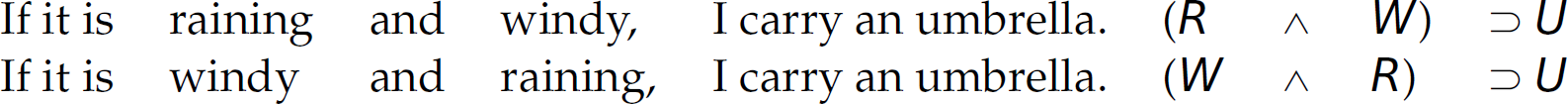

An objective of logic/declarative programming is to support the specification of both what you want and the knowledge base (i.e., the facts and rules) from which what you want is to be inferred without regard to how the system will deduce the result. In other words, the programmer should not be required or permitted to codify the facts and rules in the program in a form that imparts control over or manipulates the built-in deduction algorithm for producing the desired result. No control information or procedural directives should be woven into the knowledge base so as to direct the interpreter’s deduction process. Specification (or declaration) should be order-independent. Consider the following two logical propositions:

Since the logical conjunction operator is commutative, these two propositions are semantically equivalent and, thus, it should not matter which of the two forms we use in a program. However, since computers are deterministic systems, the interpreter for a language supporting declarative programming typically evaluates the terms on the left-hand side of these propositions (i.e., R and W) in a left-to-right or right-to-left order. Thus, the desired result of the program can, due to side effect and other factors, depend on that evaluation order, akin to the evaluation order of the operands of the addition in the Python program described earlier. Languages supporting logic/declarative programming as the primary mode of performing computation often equip the programmer with facilities to impart control over the search strategy used by the system (e.g., the cut operator in Prolog). These control facilities violate a defining principle of a declarative style: the programmer need only be concerned with the logic and can leave the control (i.e., the inference methods used to produce program output) up to the system. Unlike Prolog, the Mercury programming language is nearly pure in its support for declarative programming because it does not support control facilities intended to circumvent or direct the search strategy built into the system (Somogyi, Henderson, and Conway 1996). Moreover, the form of the specification of the facts and rules in a logic/declarative program should have no bearing on the output of the program. Unfortunately, it often does. Mercury comes closest to supporting a purely logic/declarative style of programming. Table 1.3 summarizes purity in programming styles. Chapter 14 discusses the logic/declarative style of programming.
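The effect of evaluation order on a logically commutative conjunction can be approximated in Python, whose and operator evaluates its operands left to right. This is an analogy only, not a model of a Prolog interpreter. The functions r and w are hypothetical stand-ins echoing R and W: w has a side effect on shared state that r depends on, so swapping the operands changes the result.

```python
state = {"written": False}  # hypothetical shared state


def r() -> bool:
    """Read: succeeds only if w() has already run."""
    return state["written"]


def w() -> bool:
    """Write: a side effect on shared state, then succeed."""
    state["written"] = True
    return True


def reset() -> None:
    state["written"] = False


reset()
print(r() and w())  # prints False: r() runs first and fails
reset()
print(w() and r())  # prints True: w() runs first and enables r()
```

Logically, both conjunctions should have the same truth value; the difference arises only because evaluation has a fixed order and w has a side effect.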

Table 1.3 Purity in Programming Languages

| Style of Programming | Purity Indicates | (Near-)Pure Language(s) |
|---|---|---|
| Functional programming | No provision for side effect | Haskell |
| Logic/declarative programming | No provision for control | Mercury |
| Object-oriented programming | No provision for performing computation without message passing; all program entities are objects | Smalltalk, Ruby, and CLOS-based languages |

1.4.5 Bottom-up Programming

A compelling style of programming is to use a programming language not to develop a solution to a problem, but rather to build a language specifically tailored to solving a family of problems for which the problem at hand is an instance. The programmer subsequently uses this language to write a program to solve the problem of interest. This process is called bottom-up programming and the resulting language is typically either an embedded or a domain-specific language. Bottom-up programming is not on the same conceptual level as the other styles of programming discussed in this chapter—it is on more of a meta-level. Similarly, Lisp is not just a programming language or a language supporting multiple styles of programming. From its origin, Lisp was designed as a language to be extended (Graham 1993, p. vi), or “a programmable programming language” (Foderaro 1991, p. 27), on which the programmer can build layers of languages supporting multiple styles of programming. For instance, the abstractions in Lisp can be used to extend the language with support for object-oriented programming (Graham 1993, p. ix). This style of programming or metaprogramming, called bottom-up programming, involves using a programming language not as a tool to write a target program, but to define a new targeted (or domain-specific) language and then develop the target program in that language (Graham 1993, p. vi). In other words, bottom-up programming involves “changing the language to suit the problem” (Graham 1993, p. 3). “Not only can you program in Lisp (that makes it a programming language) but you can program the language itself” (Foderaro 1991, p. 27). It has been said that “[i]f you give someone Fortran, he has Fortran. If you give someone Lisp, he has any language he pleases” (Friedman and Felleisen 1996b, p. 207).

Other programming languages are also intended to be used for bottom-up programming (e.g., Arc9). While we do return to the idea of bottom-up programming in Section 5.12 in Chapter 5, and in Chapter 15, the details of bottom-up programming are beyond the scope of this text. For now it suffices to say that bottom-up design can be thought of as building a library of functions followed by writing a concise program that calls those functions. “However, Lisp gives you much broader powers in this department, and augmenting the language plays a proportionately larger role in Lisp style—so much so that [as mentioned previously] Lisp is not just a different language, but a whole different way of programming” (Graham 1993, p. 4).
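The simpler characterization above, building a library of functions and then writing a concise program that calls them, can be sketched in Python. Lisp's ability to extend the language itself has no direct Python counterpart, so this illustrates only the library-building half of the idea. All names and the sample rows are hypothetical; the pure flags follow the (near-)purity discussed in this section.

```python
from typing import Any, Callable

Row = dict[str, Any]
Pred = Callable[[Row], bool]


# Layer 1: a small, general vocabulary for a family of query problems.
def equals(field: str, value: Any) -> Pred:
    """Build a predicate that tests one field of a row."""
    return lambda row: row[field] == value


def both(p: Pred, q: Pred) -> Pred:
    """Combine two predicates into one."""
    return lambda row: p(row) and q(row)


def select(rows: list[Row], pred: Pred) -> list[str]:
    """Return the names of the rows satisfying pred."""
    return [row["name"] for row in rows if pred(row)]


# Layer 2: the program for the problem at hand, written in that vocabulary.
langs = [
    {"name": "Haskell", "style": "functional", "pure": True},
    {"name": "Scheme", "style": "functional", "pure": False},
    {"name": "Mercury", "style": "logic/declarative", "pure": True},
    {"name": "Prolog", "style": "logic/declarative", "pure": False},
]
print(select(langs, both(equals("style", "functional"), equals("pure", True))))
# prints ['Haskell']
```

Once the first layer exists, the program that solves the particular problem shrinks to a single line.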

Other languages support a host of additional styles of programming, including concatenative programming (e.g., Factor, Joy) and dataflow programming (e.g., LabVIEW). Table 1.4 summarizes the origins of the styles of programming introduced here. Table 1.5 presents the terms introduced in this section that are fundamental/universal to the study of programming languages.

Table 1.4 Practical/Conceptual/Theoretical Basis for Common Styles of Programming

| Style of Programming | Practical/Conceptual/Theoretical Foundation | Defining/Pioneering Language |
|---|---|---|
| Imperative programming | von Neumann architecture | Fortran |
| Functional programming | λ-calculus; Lisp machine | Lisp |
| Logic/declarative programming | First-order predicate calculus; Warren Abstract Machine | Prolog |
| Object-oriented programming | Lisp, biological cells, individual computers on a network | Smalltalk |

Table 1.5 Key Terms Discussed in Section 1.4

| Key Term |
|---|
| syntax: form of language |
| semantics: meaning of language |
| first-class entity |
| side effect |
| referential transparency |

1.4.6 Synthesis: Beyond Paradigms

Most languages have support for imperative (e.g., assignment, statement blocks), object-oriented (e.g., objects, classes), and functional (e.g., λ/anonymous [and first-class] functions) programming. Some languages even have, to a lesser extent, support for declarative programming (e.g., pattern-directed invocation).

What we refer to here as styles of programming was once (and in many cases still is) referred to as paradigms of languages.10 Imperative, functional, logic/declarative, and object-oriented have been, traditionally, the four classical paradigms of languages. However, historically, other paradigms have emerged for niche application domains,11 including languages for business applications (e.g., COBOL), hardware description languages (e.g., Verilog, VHDL), and scripting languages (e.g., awk, Rexx, Tcl, Perl). Traditional scripting languages are typically interpreted languages supporting an imperative style of programming with an easy-to-use command-and-control–oriented syntax and are ideal for processing strings and generating reports. The advent of the web ignited the evolution of languages used for traditional scripting-type tasks into languages supporting multiple styles of programming (e.g., JavaScript, Python, Ruby, PHP, and Tcl/Tk). As the web and its use continued to evolve, the programming tasks common to web programming drove these languages to continue to grow and incorporate additional features and constructs supporting more expressive and advanced forms of functional, object-oriented, and concurrent programming. (Use of these languages with associated development patterns [e.g., Model-View-Controller] eventually evolved into web frameworks [e.g., Express, Django, Rails, Laravel].)

The styles of programming just discussed are not mutually exclusive, and language support for multiple styles is not limited to those languages used solely for web applications. Indeed, one can write a program with a functional motif while sparingly using imperative constructs (e.g., assignment) for purposes of pragmatics. Scheme and ML primarily support a functional style of programming, but have some imperative features (e.g., assignment statements and statement blocks). Alternatively, one can write a primarily imperative program using some functional constructs (e.g., λ/anonymous functions). Dylan, which was influenced by Scheme and Common Lisp, is a language that adds support for object-oriented programming to its functional programming roots. Similarly, the pattern-directed invocation built into languages such as ML and Haskell is declarative in nature and resembles the rule-based programming, at least syntactically, in Prolog. Curry is a programming language derived from Haskell and, therefore, supports functional programming; however, it also includes support for logic programming. In contrast, POP-11 primarily facilitates a declarative style of programming, but supports first-class functions. Scala is a language with support for functional programming that runs on the Java virtual machine.
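A single short Python program can move among styles in the way just described. In the sketch below (class, names, and amounts are hypothetical), a class encapsulates state and behavior, a loop updates that state by assignment, and anonymous first-class functions passed to filter and functools.reduce compute results without further mutation.

```python
from functools import reduce


class Account:
    """Object-oriented: state and behavior encapsulated in a class."""

    def __init__(self, owner: str, balance: int) -> None:
        self.owner = owner
        self.balance = balance

    def deposit(self, amount: int) -> None:
        # Imperative: update state by assignment (a side effect).
        self.balance = self.balance + amount


accounts = [Account("ada", 10), Account("alan", 25), Account("alonzo", 5)]

# Imperative: a loop executed only for its side effects.
for acct in accounts:
    acct.deposit(5)

# Functional: anonymous, first-class functions passed to filter and reduce.
rich = list(filter(lambda a: a.balance >= 15, accounts))
total = reduce(lambda acc, a: acc + a.balance, accounts, 0)

print([a.owner for a in rich], total)  # prints ['ada', 'alan'] 55
```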

Moreover, some languages support database connectivity to make (declaratively written) queries to a database system. For instance, C# supports “Language-INtegrated Queries” (LINQ), where a programmer can embed SQL-inspired declarative code into programs that otherwise use a combination of imperative, functional, object-oriented, and concurrent programming constructs. Despite this phenomenon in language evolution, both the concept and use of the term paradigm as well as the classical boundaries were still rigorously retained. These languages are referred to as either web programming languages (i.e., a new paradigm was invented) or multi-paradigm languages—an explicit indication of the support for multiple paradigms needed to maintain the classical paradigms.

Almost no languages support only one style of programming. Even Fortran and BASIC, which were conceived as imperative programming languages, now incorporate object-oriented features. Moreover, Smalltalk, which supports a pure form of object-oriented programming, has support for closures from functional programming, though, of course, they are accessed and manipulated through object orientation and message passing. Similarly, Mercury, which is considered a nearly pure logic/declarative language, also supports functional programming. For example, while based on Prolog, Mercury marries Prolog with the Haskell type system (Somogyi, Henderson, and Conway 1996). In addition, almost all languages support some form of concurrent programming, an indication of the influence of multicore processors on language evolution (Section 1.5). Many languages also now support some form of λ/anonymous functions. Languages supporting more than one style of programming are now the norm; languages supporting only one style of programming are now the exception.12

Perhaps this is partial acknowledgment from the industry that concepts from functional (e.g., first-class functions) and object-oriented programming (e.g., reflection) are finding their way from research languages into mainstream languages (see Figure 1.4 and Section 1.5 later in this chapter). It also calls the necessity of the concept of language paradigm into question. If all languages are multi-paradigm languages, then the concept of language paradigm is antiquated. Thus, the boundaries of the classical (and contemporary) paradigms are by now thoroughly blurred, rendering both the boundaries and the paradigms themselves irrelevant: “Programming language ‘paradigms’ are a moribund and tedious legacy of a bygone age. Modern language designers pay them no respect, so why do our courses slavishly adhere to them?” (Krishnamurthi 2008). The terms originally identifying language paradigms (e.g., imperative, object-oriented, functional, and declarative) are more styles of programming13,14 than descriptors for languages or patterns for languages to follow. Thus, instead of talking about a “functional language” or an “object-oriented language,” we discuss “functional programming” and “object-oriented programming.”

A style of programming captures the concepts and constructs through which a language provides support for effecting and describing computation (e.g., by assignment and side effect vis-à-vis by functions and return values) and is not a property of a language. The essence of the differences between styles of programming is captured by how computation is fundamentally effected and described in each style.15
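The contrast between computation by assignment and side effect and computation by functions and return values can be seen in a minimal Python sketch. The two functions are hypothetical examples: the first changes its argument and returns nothing useful, while the second leaves its argument alone and communicates its result only through its return value.

```python
# Computation by side effect: the function modifies its argument in place
# and returns None; the caller observes the result through the mutated list.
def add_item_in_place(items, x):
    items.append(x)   # side effect: the caller's list is changed

# Computation by return value: the function builds and returns a new list;
# its argument is left untouched.
def with_item(items, x):
    return items + [x]


inventory = ["a", "b"]
print(add_item_in_place(inventory, "c"))  # None
print(inventory)                          # ['a', 'b', 'c']

frozen = ["a", "b"]
print(with_item(frozen, "c"))             # ['a', 'b', 'c']
print(frozen)                             # ['a', 'b']
```

The first function is only meaningful as a statement executed for its effect; the second is naturally used as an expression evaluated for its value.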

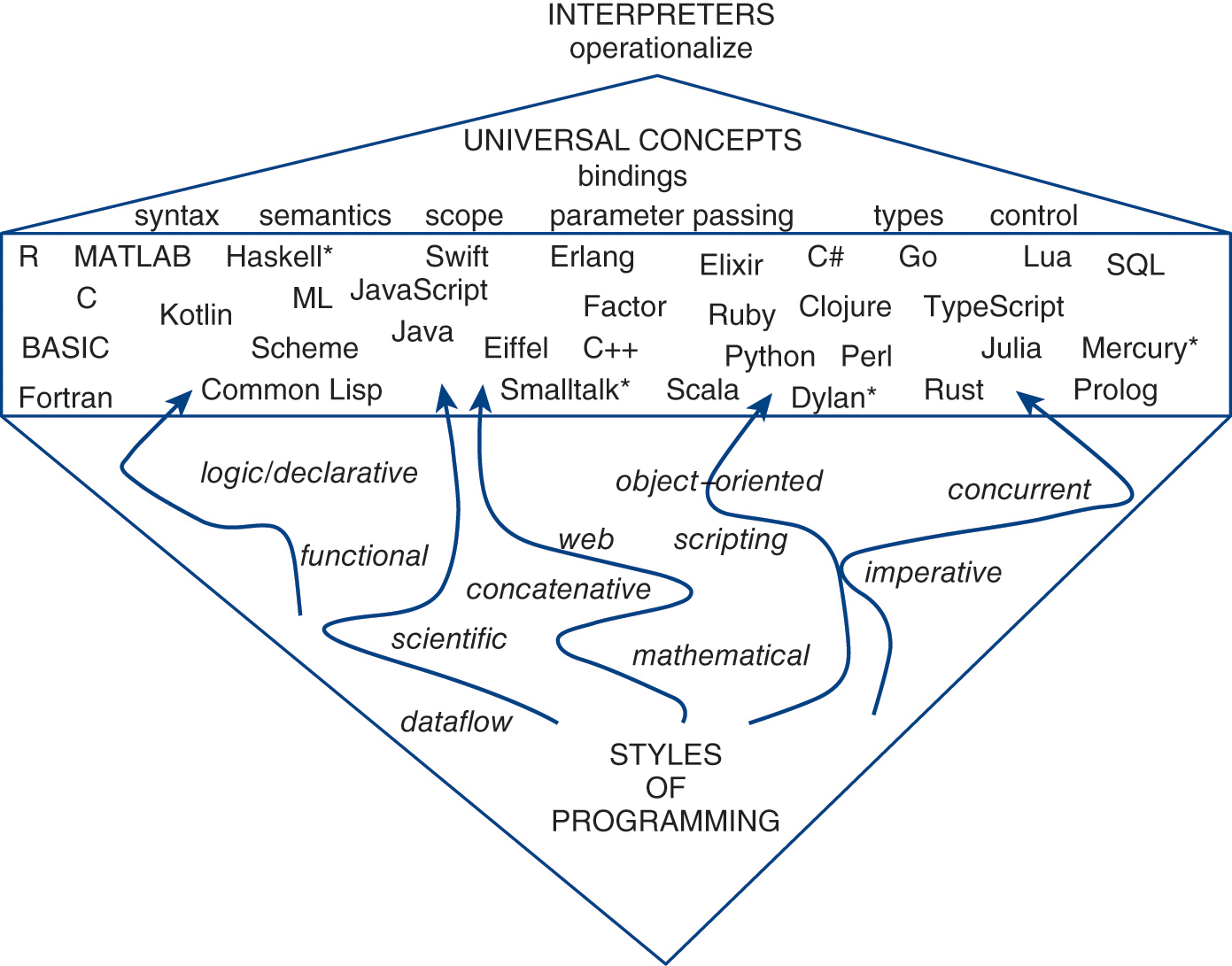

1.4.7 Language Evaluation Criteria

Because a single language now supports multiple styles of programming, languages can no longer be compared, as they could be 30 years ago, on the basis of the styles (i.e., “paradigms”) themselves. For instance, since Python and Go support multiple overlapping styles of programming, a comparison of them is not as simple as stating, “Python is an object-oriented language and Go is an imperative language.” Despite their support for a variety of programming styles, all computer languages involve a core set of universal concepts (Figure 1.2), so the concepts of languages provide the basis for comparative analysis. Programming languages differ in terms of the implementation options each employs for these concepts. For instance, Python is a dynamically typed language and Go is a statically typed language. The construction of an interpreter for a computer language operationalizes (or instantiates) the design options or semantics for the pertinent concepts. (Operational semantics supplies the meaning of a computer program through its implementation.) One objective of this text is to provide the framework in which to study, compare, and select from the available programming languages.
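To make the typing distinction concrete, here is a small Python sketch; the function `label` is a hypothetical example. Because Python checks types only when an operation actually executes, a type error on a path that never runs goes unnoticed. In Go, a statically typed language, a comparable expression that adds a string and an int would be rejected by the compiler before the program ever ran.

```python
# In Python (dynamically typed), the type error below is detected only if
# the faulty branch actually executes.
def label(count, verbose):
    if verbose:
        return "count: " + count      # TypeError if count is an int
    return "count: " + str(count)

print(label(3, False))  # count: 3  (the faulty branch never runs)

try:
    label(3, True)
except TypeError as err:
    print("caught at run time:", err)

# A name can also be rebound to values of different types.
x = 42
x = "forty-two"
print(type(x).__name__)  # str
```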

Figure 1.2 Within the context of their support for a variety of programming styles, all languages involve a core set of universal concepts that are operationalized through an interpreter and provide a basis for (comparative) evaluation. Asterisks indicate (near-)purity with respect to programming style.

There are other criteria, sometimes called nonfunctional requirements, by which to evaluate languages. Traditionally, these criteria include readability, writability, reliability (i.e., safety), and cost. For instance, all of the parentheses in Lisp affect the readability and writability of Lisp programs.16 One might also argue that the verbose nature of COBOL makes it a readable language (e.g., ADD 1 TO X GIVING Y), but not a writable language. How are readability and writability related? In the case of COBOL, they are inversely related: what makes the code easy to read makes it tedious to write. Some criteria are subject to interpretation. For instance, cost (i.e., efficiency) can refer to the cost of execution or the cost of development. Other language evaluation criteria include portability, usability, security, maintainability, modifiability, and manageability.

Languages can also be compared on the basis of their implementations. Historically, languages that primarily supported imperative programming involved mostly static bindings and, therefore, tended to be compiled. In contrast, languages that support a functional or logic/declarative style of programming involve mostly dynamic bindings and tend to be interpreted. (Chapter 4 discusses strategies for language implementation.)

1.4.8 Thought Process for Problem Solving

While most languages now support multiple styles of programming, use of the styles themselves involves a shift in one’s problem-solving thought process. Thinking in one style (e.g., iteration—imperative) and programming in another style (e.g., functional, where recursive thought is fundamental) is analogous to translating into your native language every sentence you either hear from or speak to your conversational partner when participating in a synchronous dialog in a foreign language—an unsustainable strategy. Just as a one-to-one mapping between phrases in two natural languages—even those in the same family of languages (e.g., the Romance languages)—does not exist, it is generally not possible to translate the solution to a problem conceived with thought endemic to one style (e.g., imperative thought) into another (e.g., functional constructs), and vice versa.
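The two thought processes can be contrasted on a deliberately small problem: computing the length of a list. This Python sketch is only illustrative, and the function names are hypothetical. The iterative version is phrased as a sequence of updates to a counter; the recursive version is phrased as a definition of what the length *is*.

```python
# Iterative thought: "start a counter at zero and bump it for each element."
def length_iterative(items):
    count = 0
    for _ in items:
        count += 1
    return count

# Recursive thought: "the length of an empty list is 0; otherwise it is
# 1 plus the length of the rest of the list."
def length_recursive(items):
    if not items:
        return 0
    return 1 + length_recursive(items[1:])


print(length_iterative([7, 8, 9]))  # 3
print(length_recursive([7, 8, 9]))  # 3
```

Python lacks tail-call elimination and imposes a recursion limit (1000 by default in CPython), and `items[1:]` copies the list on every call, so the recursive version here is for exposition rather than production use.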

An advantageous outcome of learning to solve problems using an unfamiliar style of programming (e.g., functional, declarative) is that it involves a fundamental shift in one’s thought process toward problem decomposition and solving. Learning to think and program in alternative styles typically entails unlearning bad habits acquired unconsciously through the use of other languages to accommodate the lack of support for that style in those languages. Consider how a programmer might implement an inherently recursive algorithm such as mergesort using a language without support for recursion:

Programming languages teach you not to want what they cannot provide. You have to think in a language to write programs in it, and it’s hard to want something you can’t describe. When I first started writing programs—in Basic—I didn’t miss recursion, because I didn’t know there was such a thing. I thought in Basic. I could only conceive of iterative algorithms, so why should I miss recursion? (Graham 1996, p. 2)
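For contrast, here is a sketch in Python of mergesort written both ways. The recursive version mirrors the algorithm's definition (split, sort each half, merge); the bottom-up version is one way, among several, that a programmer confined to iteration might restructure it. The names `merge`, `mergesort`, and `mergesort_iterative` are illustrative.

```python
def merge(left, right):
    """Merge two sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged

# Recursive mergesort: the code follows the definition of the algorithm.
def mergesort(items):
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    return merge(mergesort(items[:mid]), mergesort(items[mid:]))

# Bottom-up mergesort, written without recursion: start with runs of
# length 1 and merge adjacent runs pairwise, so run lengths roughly
# double on each pass, until a single sorted run remains.
def mergesort_iterative(items):
    runs = [[x] for x in items]
    while len(runs) > 1:
        next_runs = []
        for k in range(0, len(runs), 2):
            if k + 1 < len(runs):
                next_runs.append(merge(runs[k], runs[k + 1]))
            else:
                next_runs.append(runs[k])  # odd run out carries over
        runs = next_runs
    return runs[0] if runs else []


print(mergesort([5, 2, 9, 1, 5, 6]))            # [1, 2, 5, 5, 6, 9]
print(mergesort_iterative([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```

The iterative version is correct, but the bookkeeping of runs and passes is the programmer's job; in the recursive version that structure is carried by the recursion itself.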

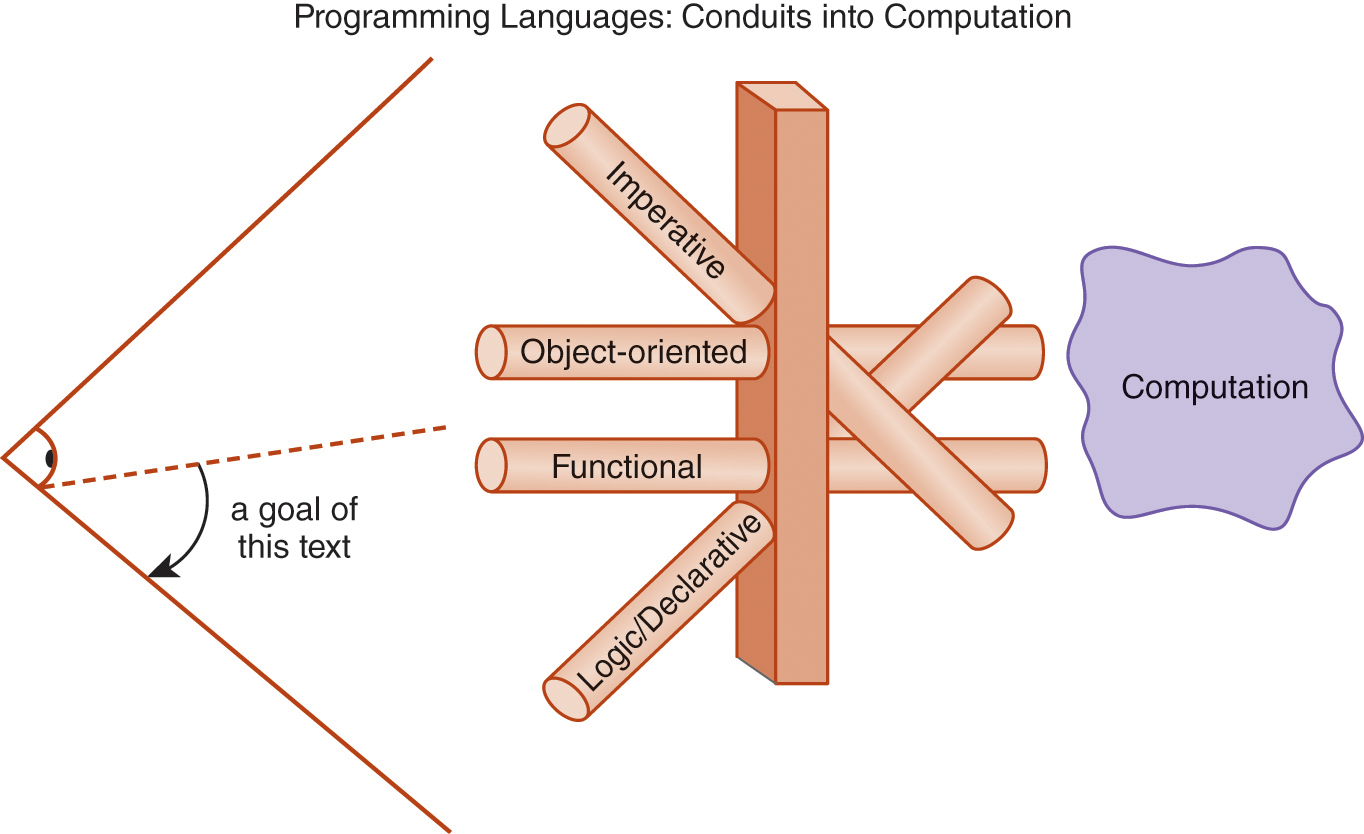

Paul Graham (2004b, p. 242) describes the effect languages have on thought as the Blub Paradox.17 Programming languages and their use are, perhaps so far, the only conduit into the science of computing that students experience. Because language influences thought and the capacity for thought, an improved understanding of programming languages and of the different styles of programming they support results in a more holistic view of computation.18 Indeed, a covert goal of this text, or side effect of this course of study, is to broaden the reader’s understanding of computation by developing additional avenues through which to both experience and describe/effect computation in a computer program (Figure 1.3). An understanding of Latin, even an elementary one, not only helps one learn new languages but also improves one’s use and command of one’s native language. Similarly, an understanding of both Lisp and the linguistic ideas central to it (and, more generally, the concepts of languages) will help you more easily learn new programming languages and make you a better programmer in your language of choice. “[L]earning Lisp will teach you more than just a new language—it will teach you new and more powerful ways of thinking about programs” (Graham 1996, p. 2).

Figure 1.3 Programming languages and the styles of programming therein are conduits into computation.


1. In C, assignment is an expression rather than a statement, so such an assignment evaluates to the value of i after the assignment takes place.

2. The Python int function used here converts the string read with the input function to an integer.

3. The common programming idiom x=x+1 can be confusing to nonprogrammers because it appears to assert that two quantities are equal when they clearly are not.

4. Ironically, John Backus, the recipient of the 1977 ACM A. M. Turing Award for contributions to the primarily imperative programming language Fortran, titled his Turing Award paper “Can Programming Be Liberated from the von Neumann Style? A Functional Style and Its Algebra of Programs.” This paper introduced the functional programming language FP through which Backus (1978) cast his argument. While FP was never fully embraced by the industrial programming community, it ignited both debate and interest in functional programming and subsequently influenced multiple languages supporting a functional style of programming (Interview with Simon Peyton-Jones 2017).

5. Computers have been designed for these inherently non-imperative styles as well (e.g., Lisp machine and Warren Abstract Machine).

6. Sometimes entities in programming languages are referred to as second-class or even third-class entities. However, these distinctions are generally not helpful.

7. Alonzo Church was Alan Turing’s PhD advisor at Princeton University from 1936 to 1938.

8. Erlang is a language supporting concurrent and functional programming that was developed by the telecommunications company Ericsson.

9. http://arclanguage.org

10. A paradigm is a worldview—a model. A model is a simplified view of some entity in the real world (e.g., a model airplane) that is simpler to interact with. A programming language paradigm refers to a style of performing computation from which programming in a language adhering to the tenets of that style proceeds. A language paradigm can be thought of as a family of natural languages, such as the Romance languages or the Germanic languages.

11. In the past, even the classical functional and logic/declarative paradigms, and specifically the languages Lisp and Prolog, respectively, were considered paradigms primarily for artificial intelligence applications, even though the Emacs text editor for UNIX and AutoCAD are two non-AI applications that are more than 30 years old and were developed in Lisp. Now there are Lisp and Prolog applications in a variety of other domains (e.g., Orbitz). We refer the reader to Graham (1993, p. 1) for the details of the origin of the (accidental) association between Lisp and AI. Nevertheless, certain languages are still ideally suited to solve problems in a particular niche application domain. For instance, C is a language for systems programming and continues to be the language of choice for building operating systems.

12. The miniKanren family of languages primarily supports logic programming.

13. John Backus (1978) used the phrase “functional style” in the title of his 1977 Turing Award paper.

14. When we use the phrase “styles of programming,” we are not referring to the program formatting guidelines that are often referred to as “program style” (e.g., consistent use of three spaces for indentation or placing the function return type on a separate line) (Kernighan and Plauger 1978), but rather to the style of effecting and describing computation.

15. For instance, the object-relational impedance mismatch between relational database systems (e.g., PostgreSQL or MySQL) and languages supporting object-oriented programming—which refers to the challenge in mapping relational schemas and database tables (which are set-, bag-, or list-oriented) in a relational database system to class definitions and objects—is more a reflection of differing levels of granularity in the various data modeling support structures than one fundamental to describing computation.

16. Some have stated that Lisp stands for Lisp Is Superfluous Parentheses.

17. Notice use of the phrase “thinking in” instead of “programming in.”

18. The study of formal languages leads to the concept of a Turing machine; thus, language is integral to the theory of computation.