12.7 Distributed Query Processing

To process a query expressed in a high-level language such as SQL, it is necessary to transform the query into a low-level representation such as relational algebra before execution. There are various methods of transforming relational algebra expressions into equivalent but more efficient ones, and several methods for estimating the cost of processing queries for different relational algebra operations, which are discussed in Chapter 10. The dominant factor in the cost estimates is the number of disk accesses required. A distributed environment requires that we also consider the cost of transmitting data over the network. If the network is relatively slow, this factor can become the dominant cost factor. If the data is replicated, we must consider the issue of choosing the best of several possible sites for performing all or part of a query. Partitioning of data complicates queries that require the use of more than one of the partitions. Therefore, in a DDBS, we must consider network-wide query optimization procedures.

As described earlier, database queries can be categorized as local, remote, or compound (also called global). A local request is one that can be satisfied at the node where it is entered. A remote request can be satisfied at a single, remote node. A compound request is one that requires access to more than one node. In satisfying a compound request, the DDBMS is responsible for breaking down the query into several subqueries, each of which is directed to a specific node for processing. The DDBMS must then collect and coordinate the responses to obtain an overall result. Ideally, the user should not be aware of the distribution of data and should not need to specify where the data items are located.

12.7.1 Steps in Distributed Query Processing

Some or all of the following steps must be performed by the DDBMS in answering a query:

Accept the user’s request. The user interface is the responsibility of the DDBMS, rather than the local DBMS, at each node. Because the local DBMS is not aware of the distribution, it is unable to handle queries about data items not stored locally.

Check the validity of the request. This requires checking that the correct query language syntax is used and checking the existence of the referenced data items. This is external-level validity checking, which requires that the DDBMS have access to the user’s subschema.

Check authorization. Access control information must be available to the DDBMS, so that it can check whether the user is authorized to perform the operations requested on the data items specified. This checking should be done both at the user’s node and at every node where the request is processed. This is logical-level checking, and it requires that the schema be available to the DDBMS.

Map external to logical level. The DDBMS maps the user’s data names and views to the corresponding logical-level objects.

Determine a request-processing strategy. The DDBMS must consult the global data dictionary to determine the location of the data items requested. If the request is local, it forwards it to the local DBMS for processing. If the request is remote, the DBMS sends it to the remote node. If the request is compound, it must break it into subqueries and direct each to a node. There may be several ways of breaking up the query, so the DDBMS normally uses some form of optimization to determine which decomposition to use. Optimization in a centralized system involves several techniques. For example, if there are multiple conditions, testing can be done first on the condition that eliminates the largest number of records or the one that requires the fewest I/O operations. In accessing records, the shortest access paths are chosen. In a distributed system, the optimization also includes the use of the communications system. An objective of optimization might be to minimize response time, which would dictate decomposing the request in such a manner that parallel processing could be performed at several nodes, especially if the communications system is fast and the processing load is light. Sometimes several nodes perform the same process, and results are obtained from the one that finishes first. If the system is heavily used, other objectives might be more appropriate. These include minimizing total processing time, which consists of the sum of the (possibly simultaneous) processing times of the nodes and the time used for executing communications software and consolidating the results. In selecting an algorithm, true optimization requires that the DDBMS find all the ways of processing the query and then “cost out” each one by using mathematical formulas that include processing costs, communications costs, and storage costs. Because of the difficulty of finding all possible processing methods, a hill climbing method is often used. This consists of finding an initial solution and costing it out, then evaluating a slightly different alternative, choosing the better of the two. The better one then becomes the basis for a comparison with another alternative, and the process continues until no other method can be found or a certain number of iterations fail to produce substantial improvement.

If the system is heterogeneous, translate each query into the DML of the data node. In remote or compound requests, the node where the data is located may use a different DBMS from the user’s node. Therefore, the DDBMS must provide translation due to hardware and software differences. In the worst case, different data models and data structures as well as different codes and word lengths might be involved.

Encrypt the request. Security is improved if every request and every response is encrypted before being passed to the communications system. The receiving node must then decrypt the message before processing.

Depending on the system, the local DC component may determine the routing. The requesting node must provide logical identifiers for each node that is to receive a subrequest. The function of translating a logical identifier to a physical identifier and choosing a path requires a network description, which is available to the DC component. Routing may be direct or indirect, requiring that the message pass through intermediate nodes. If indirect, the routing may be determined by the originating node in advance, or it may be dynamically determined by the intermediate nodes.

The DC component transmits the message through the communications system. Because communications between databases are process-to-process communications, the message must pass down through all the data communication layers at the source node, be transmitted to the destination, and pass back up through all the communication layers at the destination node.

Decrypt the request. If the message was encrypted at the source, it must now be decrypted at the destination node before processing can begin.

Perform update synchronization, if needed. If the request is an update, the DDBMS at the receiving node must go through synchronization procedures, as described in Section 12.6.

The local DBMS does its processing. The local DBMS at the data node performs logical and physical binding, determines local processing strategy, and retrieves the data requested of it.

If the system is heterogeneous, translate the data. The requesting node, data node, or some intermediate node can perform any necessary translation.

Send the results to the destination node. Normally, query results are returned to the requesting node, but other nodes might also be specified as destinations. The results might be encrypted before they are returned.

Consolidate the results, edit, and format the response for the user. The requesting node usually coordinates the entire query decomposition process, and it is normally the site where final consolidation of partial results is performed. In addition, the DDBMS at that node consults the user’s external model to present data in the form the user expects. However, other nodes may perform some of these functions.

Return the results to the user. Because the user interface is provided by the DDBMS, it is responsible for displaying the results.

12.7.2 The Semijoin Operation

For many distributed databases, a semijoin operation can be very cost-effective. If S and T are tables, then the left semijoin S ⋉ T is found by taking the natural join of S and T and then projecting the result onto the attributes of S. The result will be just those tuples of S that participate in the join. We define the right semijoin in a similar fashion. As an example of the use of the semijoin in a distributed environment, assume that a Department table with the following schema is stored at site A:

![]()

Also assume that a Faculty table with the following schema is stored at site B:

![]()

We assume that chairpersonId in Department is a foreign key, referencing facId in Faculty, and that deptCode is a foreign key in Faculty, referencing deptCode in Department.

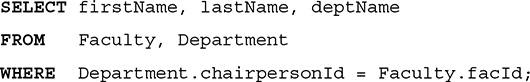

Consider the query “Find the name of each department and the name of the chairperson of that department,” entered at site A. In SQL, we write

To perform this join operation, we need to be able to compare Id values at site A and site B. The natural join would require either sending a copy of the Department table to site B of the Faculty table or sending the entire Faculty table to site A of the Department table. Instead, we could do a relational algebra project operation on chairpersonId in the Department table and ship only those values to the site of Faculty table, site B. There, we could do a natural join with Faculty, which selects only the records of faculty members who are department chairs. We then project that result on deptCode, firstName, and lastName, and ship that result back to the site of the Department table, site A. There, we do a join of the result over deptCode to find the deptName for those records and produce the final result. Shipping costs are minimized because only those records that have matches for the join operation are sent to the other site.

Note that the initial projection identified only the facId of chairpersons, substantially reducing the amount of data to be shipped. The semijoin was done at site B, where we did a join and then projected the results onto the attributes of Faculty (firstName, lastName, and deptCode) that were in the join.

The semijoin is often used this way in distributed systems, especially in queries where few tuples will participate in the join. The optimizer considers a semijoin in place of a join when the tables to be joined reside at different sites.