
Determining What Makes a Good API

Among people writing libraries in Java, there is a common misconception that APIs are comprised of nothing more than classes and methods, and the Javadoc used to annotate them. Indeed, the Javadoc serves as an important index of API visibility, but “API” is a far broader term than this view would indicate and includes many other types of interfaces.

Once again, let’s remind ourselves why we’re interested in the development of APIs: we want to cluelessly assemble our applications from large building blocks, such as shared libraries, frameworks, premade application skeletons, as well as combinations of these. We believe that if people designing these blocks do their job well—that is, write their APIs properly—then assembly will be a simple operation and we won’t need to waste our time debugging, reading source code, patching it, and generally trying to understand what others did. In short, we’ll be able to operate in “clueless mode.”

WORKING WITH THE SOURCE CODE

This is the first chapter that contains various code samples, so it makes sense to mention how to work with them. All the code snippets in this book are taken from real projects. You can download them and play with them. Just visit http://source.apidesign.org and follow its instructions.

An API seen from this point of view is much richer than the Javadoc-bounded idea of “API” that might originally have appeared in your mind. To make the understanding of the term more concrete, this chapter enumerates a few sample API types.

The most obvious and least controversial example of an API is a set of classes, their methods, and their fields. Probably everyone producing a library in Java will package it into a JAR file containing classes. These classes and their members then become the library’s API.

Of course, not all signatures are part of an API. If you use nonpublic classes, or private or package private fields and methods, common sense suggests that these elements aren’t part of the contract that other programmers should use. Often it’s possible to access such elements by using reflection or other low-level techniques, but these are commonly and correctly considered to be hacks: if reflection is needed for communication between a system’s components, it indicates that something is wrong or that the available API is insufficient. You can expect that such hacks will break across versions, as no API contract specifies that they should work at all.

However, it’s not true that reflection cannot be used to form valid and useful APIs. Even in the basic Java platform, you can find examples where reflection is successfully used to simplify coding and sometimes even to define new coding patterns. For example, in the JavaBeans specification, the user of the bean gets a list of methods and fields that are available at design time and can be modified through the use of reflection. In other places, it’s common to require a class to have a public no-argument constructor, because some code will use Class.newInstance to create an instance of a class whose name it doesn’t know statically. Therefore, using reflection can be helpful, but you must do so with care; in particular, you must document clearly for those coding against such APIs that tricks of this kind are in use.
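To make the constructor contract concrete, here is a minimal sketch of a loader that only knows a class name at run time. The ReflectiveFactory class and its create method are hypothetical names invented for this illustration. The sketch uses getConstructor().newInstance() rather than Class.newInstance, because the latter has been deprecated since Java 9.

```java
// Hypothetical loader: the class name arrives as a string, for example
// from a configuration file, so it cannot be referenced statically.
final class ReflectiveFactory {
    private ReflectiveFactory() {}

    /**
     * Creates an instance through the public no-argument constructor.
     * That constructor is therefore part of the contract between the
     * loader and every class it is asked to load.
     */
    static Object create(String className) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        // getConstructor() only finds public constructors
        return type.getConstructor().newInstance();
    }

    public static void main(String[] args) throws Exception {
        Object list = create("java.util.ArrayList");
        System.out.println(list.getClass().getName());
    }
}
```

If a later version of a loaded class drops or hides its no-argument constructor, this call fails with a NoSuchMethodException at run time, even though no method signature visible in the Javadoc has changed in a way a compiler would flag.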

A bit less obvious, but important nonetheless, is the set of files the application reads or writes, as well as their format.

As an example, let’s consider how communication between a telnet application and the Kerberos authentication subsystem can be achieved. These two components are unlikely to have been developed by the same people, as the skill set for writing encryption is somewhat different from that required for handling socket communication. An API can enable these components to work together.

The telnet application reads its configuration files from a shared system location, such as /etc/telnet.rc, as well as via user personal preferences from $HOME/.telnetrc. Kerberos relies on this, and at install time modifies the content of the shared file with instructions for telnet to call the authentication subsystem. The telnet application then uses this information to load appropriate libraries and authenticate the user’s login password.

SANDWICH-BASED FILE CONFIGURATION

Unlike Unix applications, some Java applications can exist without touching the disk at all. On the other hand, the NetBeans Platform, and especially its configuration, is built around files. However, it doesn’t deal directly with files on disk. Instead, it’s based on the concept of virtual files.

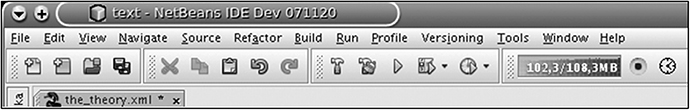

The configuration area of any NetBeans Platform–based application is similar to the content of Unix’s /etc directory. Every utility (in Unix) or a module (in the case of the NetBeans Platform) has one or more dedicated locations that it reads to acquire configuration parameters. For example, the system that builds the NetBeans Platform’s menu and toolbar as shown in Figure 3-1 reads its data from the /Menu or /Toolbars directories. In fact, locations of this kind form an important API, because other modules need to know them to register their own configuration files there. Their names cannot change arbitrarily, as that would mean that currently existing registrations would no longer be recognized. Their content needs to be stable as well, for the same reason.

Figure 3-1. The NetBeans menu and toolbar are built from the content of file directories.

This is comparable to Unix’s /etc configuration area. However, there is one significant difference. Most of the files in the NetBeans configuration directory are just virtual; that is, they are taken from many XML filesystems. Just like a giant sandwich, the configuration is composed from various layers. Each component (a NetBeans module) is capable of providing an XML filesystem layer with many file registrations, such as this one:

```xml
<filesystem>
    <folder name="Menu">
        <file name="Open.instance"/>
    </folder>
</filesystem>
```

The system then merges all these layers together into one filesystem. This works similarly to unionfs in modern Linux distributions. This big, merged filesystem is then used to provide the actual configuration data for a running NetBeans system. All this feels like a big sandwich composed of individual component layers.
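The merging idea can be sketched in a few lines of Java. This is not the NetBeans implementation: the LayerMerge class is hypothetical, each layer is modeled as a plain map from virtual path to the name of the contributing module, and the rule that later layers win on a collision is an assumption made only for this example.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of layered configuration: every module contributes
// a map of virtual file paths, and the system sees only the merged view.
final class LayerMerge {
    private LayerMerge() {}

    /** Merges all layers; on a path collision the later layer wins (assumption). */
    static Map<String, String> merge(List<Map<String, String>> layers) {
        Map<String, String> merged = new TreeMap<>();
        for (Map<String, String> layer : layers) {
            merged.putAll(layer);
        }
        return merged;
    }

    public static void main(String[] args) {
        Map<String, String> editor = Map.of("Menu/Open.instance", "editor");
        Map<String, String> build = Map.of(
                "Menu/Build.instance", "build",
                "Toolbars/Build.instance", "build");
        // Prints the merged view, sorted by path
        merge(List.of(editor, build)).forEach(
                (path, module) -> System.out.println(path + " <- " + module));
    }
}
```

Removing a module from the list removes its registrations from the merged view at the same time, which is the consistency property described above.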

I am fairly proud of this invention of mine, which I created in 2000, because it eliminates many small configuration files on disk, replacing them with virtual files read directly from each module. Moreover, it provides a significant advantage: the provided files are always consistent with the list of installed modules, which might not be true if they are copied to disk when a component is installed and removed when it’s uninstalled.

The importance of this “file-based API” is obvious. If the location of the shared file that telnet reads changes, for example, to /etc/telnet5.rc, any modifications made by Kerberos in the original location will be useless, as nobody will read the modifications. Also, if the format of the file changes, Kerberos might perform syntactically incorrect changes during installation. In turn, this might cause the changes to fail and can prevent the whole file from being read successfully, preventing the telnet client from being run.

Environment Variables and Command-Line Options

Environment variables and command-line interfaces are not often important in the context of libraries. However, as demonstrated by the presence of the section “ENVIRONMENT” in the Unix man pages, these “interfaces” are extremely important in certain environments. In the Unix world, it’s common to write an application in a shell by means of chained invocations of small tools.

To execute these tools, a lot of information is taken from environment variables. For example, the search path for executed programs is stored in the variable PATH. By changing it you can change the meaning of the executed programs. For example, one of the basic tricks to hack a computer is to redefine the meaning of the su utility. Simply sit at someone’s unlocked computer, write a shell script to mail you the password, name it su, and change the PATH so it first finds your version rather than executing the standard one located at /bin/su. Providing different values for the environment variables a program reads can significantly influence the program’s behavior. The point here is that files and environment variables are no less an API than class names and method signatures. This applies to software libraries in a modular application, as well as to Unix command-line utilities. Output and ad hoc strings that are accessible from foreign code are part of a component’s API.

ENVIRONMENT VARIABLES IN JAVA APPLICATIONS

For a long time (from Java 1.1 to Java 5), the Java APIs lacked a way to read environment variables. This was probably due to the feeling that these variables aren’t available on all operating systems and that code relying on them wouldn’t be portable. However, it’s sometimes hard to write real applications without the use of these variables. For example, the NetBeans IDE CVS and Subversion support plug-ins needed to invoke command-line tools to perform version-control operations. These tools provide an environment API to control their behavior on Windows and Unix systems. It was necessary to invoke these with the appropriate environment variables from inside the NetBeans IDE. Often, it was also necessary to propagate certain global user variables to them. For this reason, we needed to read the current environment variables from inside the Java application, and we did this with a special launcher script. However, now we can remove these old tricks, because Java 5 provides a standard API to read environment variables.

Java has a partial replacement for environment variables: system properties. Any method in Java can use System.getProperty, with a string name, to read a value associated with the property. This is similar to Unix environment variables: system properties can be passed to the Java Virtual Machine when it starts. It’s also possible to change property values while the Java Virtual Machine is running.

Often, we use these property-based APIs in NetBeans for changing the default behavior of certain parts of the system, which we want to make possible but we don’t want to advertise too much. This enables finetuning, such as slightly different behavior during drag and drop, the disabling of certain hacks involving access to the clipboard, and the changing of behavior to be more suitable for running tests. This kind of API isn’t needed while using the NetBeans IDE or writing modules for the NetBeans Platform; it’s mainly aimed at people and companies building various customized applications based on the NetBeans Platform. Although not as prominent as classes and methods in the Javadoc API, we do document this API properly and we do our best to ensure this API won’t break in future releases. It’s a real API to us, and it serves everyone well for certain purposes.
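As a small illustration of both mechanisms, the following sketch reads an environment variable with System.getenv and a tuning flag with System.getProperty. The Settings class and the property name demo.dragdrop.fast are made up for this example; they are not real NetBeans properties.

```java
// Hypothetical helper showing the two standard lookups side by side.
final class Settings {
    private Settings() {}

    /** Reads a boolean tuning flag from a system property, with a default. */
    static boolean isEnabled(String property, boolean defaultValue) {
        String value = System.getProperty(property);
        return value == null ? defaultValue : Boolean.parseBoolean(value);
    }

    public static void main(String[] args) {
        // System.getenv(String) returns null when the variable is not set
        String path = System.getenv("PATH");
        System.out.println("PATH is " + (path == null ? "not set" : "set"));

        // Usually passed at startup as -Ddemo.dragdrop.fast=true;
        // set programmatically here to keep the sketch self-contained.
        System.setProperty("demo.dragdrop.fast", "true");
        System.out.println(isEnabled("demo.dragdrop.fast", false));
    }
}
```

Once clients start passing such a flag on their command lines, its name and accepted values are as much a part of the contract as a method signature.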

Of course, Unix utilities don’t react only to environment variables. Another important API used is the list of arguments passed at the time of invocation. For example, in the previous case the su utility knows who to log in, because it’s invoked with that argument. Obviously, changing the way arguments are interpreted, the order in which they can be provided, or abandoning support for some that used to be supported might completely break an application that invokes a new version of a utility with old-style arguments. Therefore, input parameters, and any documented variables that can affect the operation of the system, are also APIs.

The use of pipes makes Unix unique compared to its predecessors and many of its successors. Tool utilities aren’t controlled just by environment variables and parameters provided on the command line. You can also send any text input to a utility and get a result in return. Chaining such utilities into an application is a common practice on any Unix system and constitutes one of the most powerful and well-understood coding patterns for any Unix system user. As a result, anything a program reads or prints can be seen as—and often is—an API. So, it has to undergo careful review, as I’ll describe in the next section.

The text you print and the text you read can become an important API. I’ve already mentioned the case of configuration files and input and output streams sent to and from a program, but that isn’t all. Java assigns to every object the ability to convert itself into a string, using the toString method. The default implementation that prints just class names and hexadecimal object hash codes isn’t terribly useful. For debugging purposes, Java objects often override toString methods to return more meaningful information. This can become a problem, as sometimes the value acquires too much meaning and foreign components come to rely on it. Any unintentional API such as this can compromise the promise of distributed development: the main motivation for having an API in the first place.

MISUSE OF TOSTRING

A misuse of the toString method happened to NetBeans with FileObject.toString(). FileObject is a NetBeans wrapper around java.io.File that allows you to plug virtual filesystems such as FTP, HTTP, XML filesystems, and so on, into the NetBeans Platform. The toString method originally returned a concatenation of two strings that by chance exactly matched the local file name. As a result, you could do new File(fileObject.toString()) and access the right local file. However, this was just an accidental effect. It didn’t work for files controlled by CVS or other version control systems, or for members of JAR files. However, developers testing their code on their own local disk, where such code would appear to work, would end up using this trick in production modules. Of course, it broke as soon as any other virtual filesystem was used.

We decided to fix the problem. We tried to return a URL from toString() and told people to use the getPath() method, which they should have been using anyway. However, a few weeks later, we found our colleagues had started to rely on toString returning URLs! Thankfully, we discovered this pretty quickly, before the release, and could change the toString method to return a value that had no meaning. Now toString returns a string with a prefix, then a URL, and then the hexadecimal System.identityHashCode(). This seems to have achieved our goal and clearly communicated that this method wasn’t useful. As a result, our colleagues are using getPath(), which is the actual API those developers should have been using to get a relative path from the root of the virtual filesystem in the first place. However, this is not a happy ending. Although the Javadoc of getPath() warns that this is just a relative path, it was accidentally also the actual path on the disk in the case of file objects representing local files. Quite a few people started to rely on new File(fileObject.getPath()) really existing, and as a result we broke their code when we wanted to change the relative root. Déjà vu?
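The pattern described in this sidebar can be sketched as follows. VirtualFile is a hypothetical stand-in, not the real FileObject class, and the exact output format is an assumption; the point is only that toString carries an identity hash, so nobody can mistake it for a path.

```java
// Hypothetical stand-in for a virtual file object.
final class VirtualFile {
    private final String path; // relative to the virtual filesystem root

    VirtualFile(String path) {
        this.path = path;
    }

    /** The supported API: path relative to the root of the virtual filesystem. */
    String getPath() {
        return path;
    }

    /** Debugging aid only; the identity hash makes it useless as a file name. */
    @Override
    public String toString() {
        return "VirtualFile[" + path + "]@"
                + Integer.toHexString(System.identityHashCode(this));
    }

    public static void main(String[] args) {
        VirtualFile file = new VirtualFile("Menu/Open.instance");
        System.out.println(file.getPath());
        System.out.println(file); // value differs from run to run
    }
}
```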

In a similar case, we discovered that providing discoverable string names to graphical user interface (GUI) components in an API caused abuse whereby innocent changes to that GUI component unexpectedly broke clients. It’s clear that any available information from a component in a modular system can and will be abused. So, it’s important to be clear in specifying what is and what is not part of the API contract.

Beware of situations where there is no alternative to parsing text messages! If the information isn’t available in other ways, people will parse any textual output generated by your code. For example, this happened to the JDK’s Throwable.printStackTrace(PrintStream). Until JDK 1.4, there was no other way to understand the origin of an exception than to parse this output. Indeed, we did it in the NetBeans IDE, as did many others in their own applications. As a result, the originally informal format of the stack trace became an API and could not be changed without breaking client code. If you are serious about your API, you want to avoid such situations.

In Java 1.4 this misuse of the API was understood and fixed. The Throwable class, the superclass of Exception, acquired the new getStackTrace() method, which returns the same information in a more structured format. This was supposed to completely remove the need for parsing the textual output, and likely it did, for new applications. However, nothing changed for those that were written prior to Java 1.4. Those applications continue to rely on parsing the textual format and don’t acknowledge the new methods at all. As a result, it’s still necessary to keep the textual format untouched! This is a result of APIs being like stars: once someone discovers them, they need to stay and behave well forever.
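For comparison, this is roughly what the structured alternative looks like. The StackOrigin class and its methods are hypothetical helpers written for this example; getStackTrace, getClassName, and getMethodName are the standard JDK calls.

```java
// Hypothetical helper: finds where a throwable was created without
// parsing the text printed by printStackTrace.
final class StackOrigin {
    private StackOrigin() {}

    /** Returns "Class.method" of the topmost frame, or "unknown" if none. */
    static String origin(Throwable t) {
        StackTraceElement[] frames = t.getStackTrace();
        if (frames.length == 0) {
            return "unknown"; // the JVM is allowed to omit frames
        }
        StackTraceElement top = frames[0];
        return top.getClassName() + "." + top.getMethodName();
    }

    static void fail() {
        throw new IllegalStateException("boom");
    }

    public static void main(String[] args) {
        try {
            fail();
        } catch (IllegalStateException e) {
            System.out.println(origin(e));
        }
    }
}
```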

Protocols are related to textual and file-based APIs. They are the formats of messages sent over a network, and as such they are of especially great importance. Whenever you open a socket, either for reading or for writing, you immediately enter an API transaction. It’s not just an ordinary transaction, but one that is potentially even more dangerous than the common usage of APIs through local interfaces.

The first issue is that, except where externally provided, there is no access control. In Java you can use public, private, protected, or package private to guard access, but an open socket offers no such choice. Everyone able to access your computer and a given port can now open the connection, start to talk to you, and become dependent upon you. What’s good about this kind of API is that unless you distribute your application to other parties that run the server independently, you can count and analyze access frequency and communication, as it all happens on “your” server. This can help you analyze possible misuses of your socket. You know what others do when using it, you can analyze their requests, and you can make sure your responses are sane. You can adjust your responses over time, but you still have to ensure that you can deal with any connection, as there is no way to restrict those who can connect to your port. Your port, which is now your API, is open for everyone.

The other issue amplified by the use of network protocols is the widespread proliferation of various clients and protocol versions that are used to talk to your application. I once read an interesting article about the development of Subversion, the version control system, regarding the challenges developers faced during their program’s evolution and the protocols it uses.1 In fact, Subversion’s developers need to fight on various fronts, because they have a wide range of APIs. As a command-line tool, Subversion is dominated by arguments and environment variables. As a version control system, Subversion needs to store and understand metadata about locally checked-out files. Last but not least, developers also need the client to connect to their server with some protocol and make updates and checkouts over the wire. This would be simple if there were just one version of the Subversion system. However, as with any other software, the system evolves. There is a need to fix existing bugs, and there is a constant need to add new features. As a result, the local checkout might be done with one version of the tool from a few weeks ago, while the operations might be performed later with a newer version. Those versions need to understand each other’s data or at least identify whether they can operate on it. Identification is the most important part: once Subversion is sure that a different version produced the data, it can refuse to operate on the data and ask the user to update the tool. This is an acceptable way to solve a mismatch of versions. However, this only works locally.

The mismatch might happen in local operations, but it’s certain to happen in server communication. The version of the Subversion system on the server is almost guaranteed to be different from that used on local computers that access the server. Why? Because there are usually innumerable client computers, each running its own distribution with a different revision of the Subversion client. The server might try the same trick and ask the clients to upgrade to some specified version, but this won’t work. First of all, it might not be easy to get the new version of a Subversion client for each system. However, even if it were, the problem wouldn’t be solved. There are many Subversion projects, each running their own server, each guaranteed to use a slightly different version of the system. If each of the servers required a specific version of the client, you would need to have svn1, svn1.0.1, svn1.1, svn1.2.3, and so on installed on the client system and always remember which one to use to connect to each Subversion server. Such a proliferation of various incompatible versions of the same tool would create a horrible user experience and would likely lead people to another version control system that would do a better job at keeping backward compatibility of its protocols.

Protocol-like APIs need to evolve in a backward-compatible way because they multiply the problem of the independent life cycle of each participant. Once a protocol is defined, its usages start to live on their own. There can be various client programs that speak the protocol. Those programs have multiple versions, and these versions are upgraded completely arbitrarily on various computers all around the world. As a result, it’s almost certain that two programs speaking to each other use different versions and that they need a slightly different version of the protocol. Still, despite that, they do need to communicate.

LOCALLY RESTRICTED SERVER

Although applications based on the NetBeans Platform, including the NetBeans IDE, are mostly desktop-based, I had the opportunity to “enjoy” a protocol-based API and its evolution problems. NetBeans applications are singletons. That is, usually there is only one instance of the application per user. If the user invokes the same application for the second time, the new process shouldn’t do anything itself; it should just tell the previously running instance to move its window to the front and then exit. As a result, no second instance should be started. This is achieved using a lock file combined with a socket server. On startup, each NetBeans-based application allocates a random local port, writes its number into a lock file, and listens on it. Whenever another instance is started, it checks for the existence of the lock file. If the lock file is found, it reads the number of the port and attempts to connect to the port to determine if the previous instance of the application is still alive. If so, then these two processes exchange command-line arguments, perform some computations, and send each other input and output streams, after which the second instance exits. This is useful if you want to pass additional arguments to the already running application, such as --open filename.

The communication protocol is quite simple and proprietary. When I coded it, I had no need for it to be extensible or bulletproof. I used it just for communication between two instances of the same application. I thought that I had full control over it and that I could safely add new features to it, if I correctly changed the sender as well as receiver side of the communication channel.

After a few NetBeans releases, I found that I had been naive. I received bug reports that, after a lot of investigation, showed that for some reason people were trying to connect to an already running NetBeans IDE 5.5 via NetBeans IDE 6.0. Because these versions use slightly different versions of the protocol, this doesn’t work.

Since then I’ve learned that the initial sentence of each protocol should be dedicated to a proper handshake—even if the protocol is used just for local, private communication.

Breaking the protocol is like switching from Subversion to another version control system, which is painful to say the least. That is why it’s important that the protocol be capable of some kind of handshake. The handshake should be the first activity it performs when communication is established. It should introduce the version of each of the peers in the communication, allowing them to choose a common language to understand. The other requirement is to have a common language for all the possible mixtures of versions of the clients. The language might not allow complicated tasks to be expressed, just as “basic English” is enough for common situations, such as Subversion checkout, status, and diff.
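A handshake of this kind can be quite small. The sketch below is an illustration only: the Handshake class, the "HELLO min max" greeting format, and the integer version numbers are all assumptions of this example and do not describe the Subversion or NetBeans protocols. Each peer announces the range of protocol versions it supports, and both sides pick the highest version they have in common.

```java
import java.util.OptionalInt;

// Hypothetical version handshake: the first message of every
// connection announces the supported protocol version range.
final class Handshake {
    private Handshake() {}

    /** Builds the greeting a peer sends first, for example "HELLO 1 3". */
    static String greeting(int minVersion, int maxVersion) {
        return "HELLO " + minVersion + " " + maxVersion;
    }

    /**
     * Picks the highest version supported by both sides, or returns an
     * empty result when the greeting is malformed or nothing overlaps.
     */
    static OptionalInt negotiate(String peerGreeting, int localMin, int localMax) {
        String[] parts = peerGreeting.trim().split("\\s+");
        if (parts.length != 3 || !"HELLO".equals(parts[0])) {
            return OptionalInt.empty();
        }
        int peerMin;
        int peerMax;
        try {
            peerMin = Integer.parseInt(parts[1]);
            peerMax = Integer.parseInt(parts[2]);
        } catch (NumberFormatException e) {
            return OptionalInt.empty();
        }
        int low = Math.max(localMin, peerMin);
        int high = Math.min(localMax, peerMax);
        return low <= high ? OptionalInt.of(high) : OptionalInt.empty();
    }

    public static void main(String[] args) {
        // Local side speaks versions 1 to 3, the peer speaks 2 to 5
        System.out.println(negotiate(greeting(2, 5), 1, 3)); // OptionalInt[3]
    }
}
```

An empty result is the signal to refuse the connection cleanly and tell the user which side needs an upgrade, instead of failing somewhere in the middle of the conversation.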

The protocol is likely to evolve as new features need new expressions in the protocol language. However, there should never be a need for the end users of a Subversion tool, or users of Subversion libraries, to deal with the protocol changes themselves. It shouldn’t matter whether you need svn1, svn1.0.1, svn1.1, svn1.2.3, or some other version. The decision of which protocol to use should be hidden inside a single Subversion binary or library. Internally, it can even contain independent copies of svn1, svn1.0.1, svn1.1, and svn1.2.3 code bases to talk different dialects of the same protocol, but for common usages this shouldn’t be exposed to the clients for the sake of API usage simplicity.

APIs exist to shield us from a component’s internals. They exist to allow us to be clueless yet still able to build our applications using the black-box building blocks. Does that mean that an API’s definition stops at the surface? It would be nice if this were true. However, it usually isn’t: regardless of how good the abstractions provided by the APIs are, the underlying implementation often leaks out and thereby also becomes part of the API.

I’ve met a lot of people who thought that an API ends at the signature of a class or method. Whatever happens behind it is “implementation,” and these details don’t belong to the API at all. I am guilty of thinking in this way as well. When the NetBeans QA department asked whether we were backward compatible, I claimed that because we didn’t change the public signatures of our APIs, we were backward compatible. However, I was wrong. The main goal of producing components is to simplify building modular systems. Compatibility means that you can replace a piece of the system with its newer version and the system will continue to work as it used to. The observation that “things will work” is the most important constraint of compatibility. This is influenced by the internal implementations of methods deep below the signature level.

If a method claims to return a non-null value in one version, then changing it to return null is in fact an incompatible change, because this change can be observed from the outside and negatively influences the component’s users. If a method of an interface is said to be called in the Java AWT Event Dispatch thread, then changing the component to call this method from any thread can break an existing implementation of the interface. It might no longer be possible to create visual components, interact with the user, show dialogs, and so on. Many other behavioral aspects of an API are relevant here, such as program flow, call order, and the locks that are unwittingly being relied upon.
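The first of these cases is easy to demonstrate. In the following sketch, all names (BehaviorChange, Catalog, CatalogV1, CatalogV2) are invented for illustration. Both versions have exactly the same signature, yet a client written against the first breaks when it runs against the second.

```java
// Hypothetical library with two versions that share one signature.
final class BehaviorChange {
    private BehaviorChange() {}

    interface Catalog {
        String titleOf(int id);
    }

    /** Version 1: documented never to return null. */
    static final class CatalogV1 implements Catalog {
        public String titleOf(int id) {
            return id == 1 ? "Intro" : "";
        }
    }

    /** Version 2: same signature, but unknown ids now yield null. */
    static final class CatalogV2 implements Catalog {
        public String titleOf(int id) {
            return id == 1 ? "Intro" : null;
        }
    }

    /** Client code written against version 1; it relies on non-null results. */
    static int titleLength(Catalog catalog, int id) {
        return catalog.titleOf(id).length();
    }

    public static void main(String[] args) {
        System.out.println(titleLength(new CatalogV1(), 2));
        try {
            titleLength(new CatalogV2(), 2);
        } catch (NullPointerException e) {
            System.out.println("client broken by a behavioral change");
        }
    }
}
```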

These examples show that although the behavior of an API is much harder to grasp, it’s a very important—if not the most important—part of the API contract. Only if the behavior of a component remains unchanged can its users cluelessly replace versions of the component in their final application. Only then can they trust that the functionality will not be compromised by upgrading to a newer version. That is why this book will treat behavior of components as an API.

I18N Support and L10N Messages

Not all APIs are designed for everyone. If I am only a Linux C developer, I don’t need to care about the internal APIs inside the Linux kernel. They’re too low level, far beyond my cluelessness level. It would be completely distracting for a C developer to be overwhelmed by all these details. I want these to be completely beyond my own programming horizon. On the other hand, some people do care about these APIs, and for them they form proper and interesting APIs.

It’s normal for different APIs to be targeted at different groups. One of the most extreme cases is internationalization support, used by localization groups to translate individual modules into different languages. Internationalization in Java is usually handled using ResourceBundle. Instead of hard-coding textual messages in the code itself, you define a key and then ask the ResourceBundle for the actual text for the given key. The mappings between keys and messages are placed in Bundle.properties, Bundle_en.properties, Bundle_ja.properties, and so on, and then read from the bundle associated with the current user’s language during program execution.
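The key-based lookup can be sketched without any files on disk. In real code the bundles would normally be loaded with ResourceBundle.getBundle from the .properties files named above; to keep this example self-contained, it parses the same format from strings with PropertyResourceBundle instead. The BundleLookup class and the key CTL_Open are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.PropertyResourceBundle;
import java.util.ResourceBundle;

// Hypothetical demo: the code asks for a key, the bundle supplies the text.
final class BundleLookup {
    private BundleLookup() {}

    /** Parses text in .properties format into a resource bundle. */
    static ResourceBundle parse(String propertiesText) {
        try {
            return new PropertyResourceBundle(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Stand-ins for Bundle.properties and Bundle_ja.properties
        ResourceBundle english = parse("CTL_Open=Open\n");
        ResourceBundle japanese = parse("CTL_Open=\u958b\u304f\n");
        // The calling code is identical for both languages
        System.out.println(english.getString("CTL_Open"));
        System.out.println(japanese.getString("CTL_Open"));
    }
}
```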

Developers usually provide just a Bundle.properties with the default language, which is usually English. Someone other than the author of the code then develops and distributes the localizations to other languages. These translations are often independent efforts, with different schedules, done by groups of people located all around the world, potentially far away from the original code developer.

From the viewpoint of a regular API user, such as the developer coding against a library, the Bundle keys are low-level implementation details. Using them is just like using reflection to access private classes, methods, or fields that are not exposed publicly in the API itself. Relying on them is against good habits and common sense. It makes no sense to document these in Javadoc, or to bother regular API users with them. This kind of API is completely beyond the horizon of these groups of users.

SOLARIS WITH A JAPANESE LOCALE

NetBeans uses a slight extension of the ResourceBundle class and most of the time this works well. However, sometimes we need to go beyond this contract and define some extensions. This is usually asking for trouble.

Recently, I defined a semi-online contract, where part of the NetBeans user interface (UI) is read from a web site. From a common address I read an HTML page, parsed it, partially displayed it, and partially used it to create UI buttons, labels, and so on. I managed to make this work easily on my Linux installation. However, when the translation group started their work, I immediately received several error reports.

As the localizers often use Solaris and translate mostly to Japanese, we receive most bug reports from this combination. It’s not much fun to try to reproduce them: booting up Solaris; starting a Japanese login session; and then trying to find out how to invoke a terminal, NetBeans, or a browser is a topic for a separate story. However, the reason we had so many problems with this combination is that, in contrast to most of our Linux installations, most Japanese systems don’t use the UTF-8 encoding, but EUC-JP. Conversions from and to various encodings often reveal inconsistencies in the application.

It’s best to test your application under the most stressful conditions. In the case of NetBeans and localization-related APIs, we know that the most complicated combination, revealing the most errors, is Solaris in a Japanese locale.

For the localization groups, the bundle keys form an important API, and perhaps the most significant API. To simplify distributed development between programmers and localizers, it’s better to follow the rules of good API evolution, such as limiting the removal or renaming of existing keys, because these are in fact incompatible changes.
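One way to enforce such a rule is to compare the key sets of two releases automatically. The following sketch is only one possible check; the BundleKeyCheck class and the sample keys are hypothetical. It reports every key that existing translations still define but the new default bundle no longer contains.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical compatibility check for bundle keys between two releases.
final class BundleKeyCheck {
    private BundleKeyCheck() {}

    /** Keys present in the old bundle but missing from the new one. */
    static Set<String> removedKeys(Set<String> oldKeys, Set<String> newKeys) {
        Set<String> removed = new TreeSet<>(oldKeys);
        removed.removeAll(newKeys);
        return removed;
    }

    public static void main(String[] args) {
        Set<String> release1 = Set.of("CTL_Open", "CTL_Save");
        Set<String> release2 = Set.of("CTL_Open", "CTL_SaveAs");
        // A rename shows up as a removal: translations of CTL_Save are orphaned
        System.out.println(removedKeys(release1, release2)); // [CTL_Save]
    }
}
```

Adding a key is harmless because the default text is used until a translation arrives, which is why only removals and renames are reported.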

As is probably clear by now, the definition of an API stretches far beyond a simple set of classes and methods, or functions and signatures. The API, in the sense useful for the clueless assembly of big system components, ranges from simple text messages to complex and hard-to-grasp behaviors of the components themselves. Just imagine what a mess you can cause by arbitrarily changing database or XML schemas or by redefining the meaning of WSDL, REST, or IDL services. These all fall into the API category and deserve to be designed with evolution in mind.

How to Check the Quality of an API

As previously mentioned, people tend to associate correctness with elegance. In a similar way, when it comes to discussing the properties of a good API, many will say that a good API should be beautiful. Indeed, being beautiful is an advantage. A good impression is always created in the first encounter and if something is nice and we like it, it has a much greater chance of being accepted. That is why it’s acceptable to strive to design beautiful APIs, but beauty cannot be the only measure of a good API.

The definition and measurement of beauty is subjective. No two people can agree on beauty; everyone has their own preferences. Applied to API design, this means that each API is original and that each requires different skills from its users. This might prevent widespread usage of APIs. Beauty belongs to the world of art, while software engineering is, well, engineering. The primary goal of engineering is to produce working systems. The fact that deep in our minds we have the old Greek heritage, the feeling that if something is correct, it also has to be beautiful, should not distract us from the main goal: designing APIs that are easy to use, widely adopted, and productive. An engineering approach needs an objective way to measure the quality of its products. We need to formulate such an objective for each API and then measure the extent to which it is satisfied.

In the following sections, we’ll study the various aspects visible to an API’s user, and analyze their importance and ways to conform to them. Given the fact that the expected reader of this book is an engineer, and engineers are taught to measure everything, all the measuring we are about to do will be some compensation for the sad necessity of rejecting beauty as unquantifiable and therefore as a poor criterion of quality.

Those who have to use an API must be able to understand it. This is a vague statement, but also the most important. I have already mentioned that an API is about communication between the programmer who writes the API and the programmer who writes an implementation against that API. As in spoken language, if these two cannot communicate, then something is wrong with the way we are using our communication channel—that is, the API.

The activity most similar to writing an API is writing a book: one writer and a lot of readers. The readers know something of the writer, but the writer knows little or nothing about the readers. Guessing their skills and knowledge correctly is part of the delicate art of making an API that is easy to understand.

Everyone’s view of the world is defined by the horizon. Items that are closer to us are seen clearly; those that lie nearer the horizon become less and less distinct. What is beyond the horizon is not known at all, vanishing into nothingness. Concepts provided by a good API have to lie inside the user’s horizon, otherwise they won’t be understood. The API designer needs to understand what is common knowledge across the target audience and use this knowledge when designing the API. From time to time it’s acceptable to go past the horizon, and expand it by introducing a new concept. However, this has to be built in incrementally, step by step, as going past the horizon is always associated with the danger of getting lost in unexplored territory.

When coding in Java, it’s safe to expect that people know concepts used in most of the java.* libraries. Iterators, enumerations, I/O streams, JavaBeans, listeners, and visual components are objects that nearly any Java programmer has encountered. Use of familiar terminology, classes, and terms reduces the learning curve, which in turn places fewer cognitive demands on an API’s user.

When coding constructs are unfamiliar, there is a simple solution based on the observation that most users of an API first search for existing applications that do something similar to their task, copy the application, and modify it to suit their needs. That is why it doesn’t matter how exceptional an API might be. Having many examples of how to use it increases the likelihood that developers can find something close to their needs. This also greatly increases the probability that they will come to understand the API. The more novel the concepts an API uses, the greater the benefit of extensive examples.

Another important aspect of an API that can be easily checked is whether it’s consistent. If the users of an API have to invest time to learn a concept, it’s important that the concept be applied consistently across the entire API. For example, if there is a way to register, say, factories for objects, then all types of factories should use that one registration mechanism. This reduces the number of caveats and special cases a user of the API needs to track.
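
To make the factory example concrete, here is a minimal sketch of a single registration mechanism shared by all factory types. The class FactoryRegistry and its methods are hypothetical and serve only to illustrate the idea.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical registry: every kind of factory is registered the same way. */
final class FactoryRegistry {
    private final Map<Class<?>, Supplier<?>> factories = new HashMap<>();

    /** Registers the factory used to create instances of the given type. */
    <T> void register(Class<T> type, Supplier<? extends T> factory) {
        factories.put(type, factory);
    }

    /** Creates an instance through whichever factory was registered for the type. */
    <T> T create(Class<T> type) {
        Supplier<?> factory = factories.get(type);
        if (factory == null) {
            throw new IllegalArgumentException("No factory registered for " + type.getName());
        }
        return type.cast(factory.get());
    }
}
```

A user who has learned register and create for one type, for instance registry.register(StringBuilder.class, StringBuilder::new), needs no further knowledge to use them for any other type.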

Similarly, it’s preferable to allow the same threading model to be used across the whole API or class, rather than documenting that certain methods must be called only from a certain thread.

From the point of view of the lifetime of an API, it’s important to follow the same style of development or at least clearly communicate the evolutionary style of an API. It’s inconvenient to find that certain parts of an API have evolved in a different way than others.

As an example, let’s mention the case of the org.w3c.dom interfaces. They became part of the JDK in version 1.4, and although there was a warning about them being “public domain,” nobody said that those interfaces could not be implemented by clients in the same way as any other interface in the standard Java APIs. Implementing java.lang.Runnable, for example, is supposed to be safe: nobody will ever add a new method to java.lang.Runnable, because so many clients implement that interface. However, new methods were added to the org.w3c.dom interfaces in newer versions of the JDK. This is problematic because those who had implemented the interfaces could no longer rely on their implementations compiling. Although most applications are fine, compatibility of certain pieces is gone, and developers learn of this only when they can no longer compile code that was perfectly legitimate in earlier versions. This type of evolution, unless clearly communicated at the outset, is the worst kind of betrayal of trust.
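
The problem can be sketched with a small, invented interface. Note the assumptions: Shape and UnitSquare are hypothetical names, and the default method used here is a feature of Java 8 and later, which was not available when the DOM interfaces changed. It is shown only as one way a library can add a method without breaking existing implementors.

```java
/** Hypothetical library interface, shown as it looks in its second release. */
interface Shape {
    /** Present since the first release. */
    double area();

    /**
     * Added in the second release. Because it has a default body, classes
     * written against the first release still compile and still link.
     * Without the body, every existing implementor would fail to compile.
     */
    default String describe() {
        return "shape with area " + area();
    }
}

/** Client code written against the first release; it knows nothing about describe(). */
final class UnitSquare implements Shape {
    @Override
    public double area() {
        return 1.0;
    }

    public static void main(String[] args) {
        System.out.println(new UnitSquare().describe()); // prints shape with area 1.0
    }
}
```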

Even the most beautiful API is useless if those who are supposed to use it cannot find it or easily understand how to use it.

Few situations are worse than finding a project that claims to provide an API to help solve your problem, which then sends you to the Javadoc of 5 packages and 30 classes, without entry points or guidance. An example of this is the Javadoc for java.awt.Image. It’s a class that represents graphical images that can be painted on screen or saved in standard image formats. It’s an abstract class, but of course what most users want is to load an image from disk. It contains this cryptic instruction: “The image must be obtained in a platform-specific manner.” A clever reader of the Javadoc might notice the subclass BufferedImage and look there. Empty images of this class can be constructed, but throughout the documentation of these image classes, there is not a hint as to how you might either obtain an instance of one loaded with an image from disk, or draw an image on the screen, or save one. This is a perfect example of meeting the requirements of reference documentation while providing no help to the user trying to find an entry point to do something useful with the features being documented.
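
For comparison, the entry point that the reference documentation fails to advertise is small. The sketch below uses javax.imageio.ImageIO, which is part of the standard Java library; the wrapper class ImageFiles and its method names are invented for this example.

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import javax.imageio.ImageIO;

/** Hypothetical convenience wrapper around the standard ImageIO entry points. */
final class ImageFiles {
    private ImageFiles() {}

    /** Loads an image from disk. */
    static BufferedImage load(File file) throws IOException {
        BufferedImage image = ImageIO.read(file);
        if (image == null) {
            // ImageIO.read returns null when no registered reader understands the file.
            throw new IOException("Unsupported image format: " + file);
        }
        return image;
    }

    /** Saves an image to disk in PNG format. */
    static void savePng(BufferedImage image, File target) throws IOException {
        // ImageIO.write returns false when no suitable writer is found.
        if (!ImageIO.write(image, "png", target)) {
            throw new IOException("No PNG writer available");
        }
    }
}
```

A handful of lines like these, linked from the Javadoc of java.awt.Image, would give most users the starting point they are looking for.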

In most cases, the set of classes is not what interests most API users. They are interested in getting their job done. For these purposes it’s more important to see examples of API usage, enabling the selection of the idiom that is closest to what you want to do. This partially explains the success of open source projects, as usually you can just copy existing sources to get started. In fact, the source can serve as documentation and provide initial guidance. Consider the history of HTML itself: in the early days of the Web, most budding web designers copied examples of code they saw in their browsers. The World Wide Web might be the ultimate open source project itself.

Regardless of what type of API you provide, it’s important to create a single place that can serve as a starting point and can send people in the directions that solve their problems. As people don’t think in terms of classes, it’s important to organize this entry point in an optimal manner, based on the actual or at least expected goals and tasks.

Sometimes an API supports multiple target audiences. For example, Java’s JNDI allows people to discover an object by its name and also to plug in their own name resolvers. These two capabilities are intended for two completely different groups of API users.

JNDI is just an example, but in fact this happens to many APIs: different audiences will consume them. The first and most basic mistake is putting items of interest to different parties into one API. This hurts discoverability, as people interested in just one aspect of the API will be distracted by the parts of the API that are designed for a completely different audience.

The common approach is to split an API into two or more parts: one targeted at callers, the other, ideally in a separate package or namespace, for providers plugging into the API to provide its services or extensions. A good approach taken by JNDI was the creation of separate interfaces for those distinct audiences, splitting them into completely different packages. Callers into the API just use javax.naming and javax.naming.event, while the implementors are more interested in javax.naming.spi, and so forth.
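
The split can be sketched in a few lines. This is not the JNDI API: NameResolver, NameResolvers, and Names are hypothetical types, and in a real library the provider-facing ones would sit in their own package, which a single listing cannot show.

```java
import java.util.List;
import java.util.Optional;
import java.util.concurrent.CopyOnWriteArrayList;

/** Provider-facing contract; would live in a separate "spi" package. */
interface NameResolver {
    Optional<Object> resolve(String name);
}

/** Provider-facing registration point; also belongs to the "spi" package. */
final class NameResolvers {
    static final List<NameResolver> INSTALLED = new CopyOnWriteArrayList<>();

    private NameResolvers() {}

    static void install(NameResolver resolver) {
        INSTALLED.add(resolver);
    }
}

/** Caller-facing entry point; callers never need to look at the types above. */
final class Names {
    private Names() {}

    /** Asks each installed resolver in turn and returns the first match. */
    static Object lookup(String name) {
        for (NameResolver resolver : NameResolvers.INSTALLED) {
            Optional<Object> found = resolver.resolve(name);
            if (found.isPresent()) {
                return found.get();
            }
        }
        throw new IllegalArgumentException("Unknown name: " + name);
    }
}
```

A caller reads only the documentation of Names, while someone writing a resolver reads only NameResolver and NameResolvers.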

This sort of separation is even more important than documenting the API in that it provides automatic scoping: the different audiences for the API can easily focus their study and concern on the area that’s of interest to them, without having to ask the question, “Is this something I need to care about?” for each class they see in the documentation index.

Doing this sort of split incorrectly—or worse, optimizing for the wrong audience, which is typically smaller—can seriously diminish the API’s usefulness. For example, the JavaMail API contains an enormous number of concepts and classes. It requires quite a bit of work on the developer’s part simply to send mail or to get a list of messages from a mail server, with a great deal of boilerplate code required. On the other hand, the entire API is optimized to make it extremely easy to write providers that implement new protocols. The problem with this is that there are vastly more applications that will want to send and receive mail than there are wire protocols for mail-like messages. Although perhaps not provable, it’s certainly probable that this misoptimization is single-handedly responsible for the relative rarity of Java-based mail applications.

It’s important for anyone designing an API to treat partners fairly—in other words, to treat the API’s users well. Users of an API beget more uses of that API, and vice versa. The more people who write applications in Java, the more other Java applications are likely to be written in the future. The more developers use the JUnit library, for example, the more important the library and the development style it represents become. To become a user of a library, you need to understand it and to be convinced that it saves work. But above all, for the users of an API to be truly comfortable with it, they must believe that their work won’t break or vanish when a new version of the library is released. Coding to a library is an investment of time, study, effort, and money. The first and foremost responsibility of the API designer is to preserve the investment of those who use it.

The users of the API are its jewels. Their work has a right to be respected and admired. The better the user experience, the better they will speak of the API, the better they will feel using it, and the better it will be promoted and evangelized. This will all lead to a happier API-using community. That is why it’s important to preserve participant investment and always to try to evolve API contracts in a compatible—or at least predictable—way. Each new version of a library should ensure that existing client modules continue to execute in a reasonable way, or failing that, that it’s easy to update existing sources to compile and use the contracts of the new release. The expectation of a need for updates must be set well in advance of any possible incompatibility.

There are two modes of using a library. In less flexible environments or in more distributed ones, an end user might have an application binary installed that uses the old version of the library. To satisfy everyone, when the library is updated to a new version, the application needs to continue to work without problems. If this is achieved, we can claim that the investment made by the author of the application is well preserved.

Less strict setups have the flexibility to get source code, fix it, and recompile it. This is common in the open source world and manifested in Linux distributions, for example. In these setups, it might not be necessary to have absolute compatibility of binaries; it just has to be easy to recompile a new version. If that state is achieved and expectations are set correctly, the goal of preserving investments has also been mostly reached.

On the other side of these kinds of preservations is the well-intended desire to make the API of a library “nicer”: renaming methods to be more self-explanatory, restructuring API patterns to be more understandable, and so on. These activities are welcome before the first release. However, after the first release, they cannot outweigh the problems related to the burden of breaking existing API client code. Only those who don’t value their users have this attitude.
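
When a better name is found after the first release, one compatible option is to add the new method and keep the old one as a deprecated alias. The sketch below assumes a hypothetical Catalog class; the method names are invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical library class that gained a clearer method name after its first release. */
final class Catalog {
    private final List<String> titles = new ArrayList<>();

    public void add(String title) {
        titles.add(title);
    }

    /** The self-explanatory name, introduced in a later release. */
    public int titleCount() {
        return titles.size();
    }

    /**
     * The original name from the first release. It stays, so existing
     * binaries keep linking and existing sources keep compiling.
     *
     * @deprecated use {@link #titleCount()} instead
     */
    @Deprecated
    public int getNum() {
        return titleCount();
    }
}
```

Old clients see at most a deprecation warning, while new clients get the nicer name, so the cleanup costs existing users nothing.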

________________

1. Garrett Rooney, “Preserving Backward Compatibility” (2005), http://www.onlamp.com/pub/a/onlamp/2005/02/17/backwardscompatibility.html.