In this chapter, we will discuss the various types of database tables and cover when you might want to use each type (i.e., when one type of table is more appropriate than another). We will concentrate on the physical storage characteristics of the tables: how the data is organized and stored.

Once upon a time, there was only one type of table, really: a normal table. It was managed in the same way a heap of stuff is managed (the definition of which appears in the next section). Over time, Oracle added more sophisticated types of tables. Now, in addition to the heap organized table, there are clustered tables (three types of those), index organized tables, nested tables, temporary tables, external tables, and object tables. Each type of table has different characteristics that make it suitable for use in different application areas.

We will define each type of table before getting into the details. There are nine major types of tables in Oracle, as follows:

Heap organized tables: These are normal, standard database tables. Data is managed in a heap-like fashion. As data is added, the first free space found in the segment that can fit the data will be used. As data is removed from the table, it allows space to become available for reuse by subsequent

INSERTs andUPDATEs. This is the origin of the name "heap" as it refers to this type of table. A heap is a bunch of space, and it is used in a somewhat random fashion.Index organized tables: These tables are stored in an index structure. This imposes physical order on the rows themselves. Whereas in a heap the data is stuffed wherever it might fit, in index-organized tables (IOTs) the data is stored in sorted order, according to the primary key.

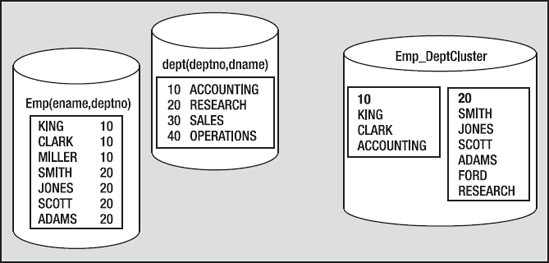

Index clustered tables: Clusters are groups of one or more tables, physically stored on the same database blocks, with all rows that share a common cluster key value being stored physically near each other. Two goals are achieved in this structure. First, many tables may be stored physically joined together. Normally, you would expect data from only one table to be found on a database block, but with clustered tables, data from many tables may be stored on the same block. Second, all data that contains the same cluster key value, such as

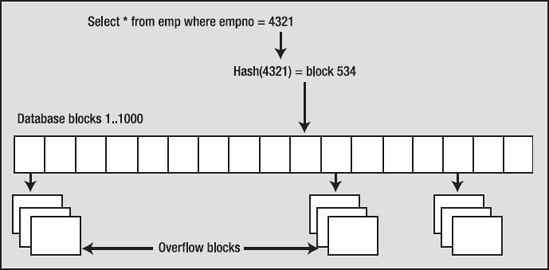

DEPTNO = 10, will be physically stored together. The data is clustered around the cluster key value. A cluster key is built using a B*Tree index.Hash clustered tables: These tables are similar to index clustered tables, but instead of using a B*Tree index to locate the data by cluster key, the hash cluster hashes the key to the cluster to arrive at the database block the data should be on. In a hash cluster, the data is the index (metaphorically speaking). These tables are appropriate for data that is read frequently via an equality comparison on the key.

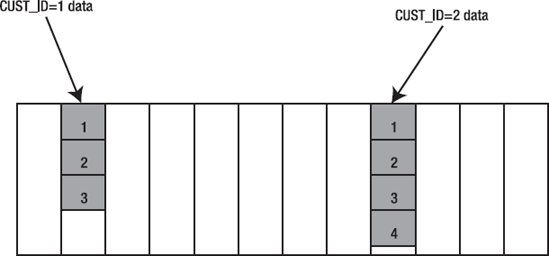

Sorted hash clustered tables: This table type is new in Oracle 10g and combines some aspects of a hash-clustered table with those of an IOT. The concept is as follows: you have some key value that rows will be hashed by (say,

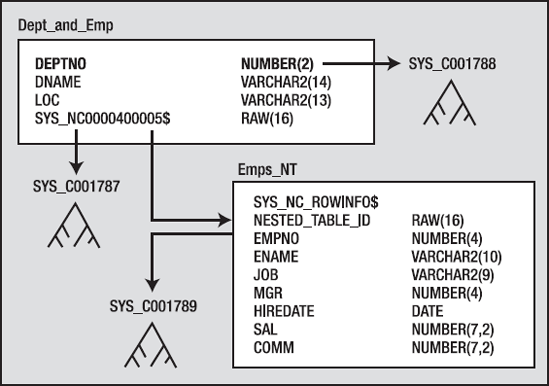

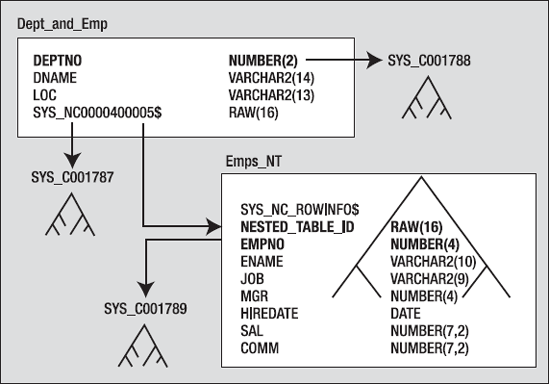

CUSTOMER_ID), and then a series of records related to that key that arrive in sorted order (timestamp-based records) and are processed in that sorted order. For example, a customer places orders in your order entry system, and these orders are retrieved and processed in a first in, first out (FIFO) manner. In such a system, a sorted hash cluster may be the right data structure for you.Nested tables: These are part of the object-relational extensions to Oracle. They are simply system-generated and maintained child tables in a parent/child relationship. They work much in the same way as

EMPandDEPTin theSCOTTschema with theEMPtable being the nested table.EMPis considered to be a child of theDEPTtable, since theEMPtable has a foreign key,DEPTNO, that points toDEPT. The main difference is that they are not stand-alone tables likeEMP.Temporary tables: These tables store scratch data for the life of a transaction or the life of a session. These tables allocate temporary extents, as needed, from the current user's temporary tablespace. Each session will see only the extents that session allocates; it will never see any of the data created in any other session.

Object tables: These tables are created based on an object type. They have special attributes not associated with non-object tables, such as a system-generated

REF(object identifier) for each row. Object tables are really special cases of heap, index organized, and temporary tables, and they may include nested tables as part of their structure as well.External tables: The data in these tables are not stored in the database itself; rather, they reside outside of the database in ordinary operating system files. External tables in Oracle9i and above give you the ability to query a file residing outside the database as if it were a normal table inside the database. They are most useful as a means of getting data into the database (they are a very powerful data-loading tool). Furthermore, in Oracle 10g, which introduces an external table unload capability, they provide an easy way to move data between Oracle databases without using database links. We will look at external tables in some detail in Chapter 15 "Data Loading and Unloading."

Here is some general information about tables, regardless of their type:

A table can have up to 1,000 columns, although I recommend against a design that does contain the maximum number of columns, unless there is some pressing need. Tables are most efficient with far fewer than 1,000 columns. Oracle will internally store a row with more than 254 columns in separate row pieces that point to each other and must be reassembled to produce the entire row image.

A table can have a virtually unlimited number of rows, although you will hit other limits that prevent this from happening. For example, typically a tablespace can have at most 1,022 files (although there are

BIGFILEtablespaces in Oracle 10g that will get you beyond these file size limits, too). Say you have a typical tablespace and are using files that are 32GB in size—that is to say, 32,704GB (1,022 files time 32GB) in total size. This would be 2,143,289,344 blocks, each of which is 16KB in size. You might be able to fit 160 rows of between 80 to 100 bytes per block. This would give you 342,926,295,040 rows. If you partition the table, though, you can easily multiply this number many times. For example, consider a table with 1,024 hash partitions—that would be 1024 * 342,926,295,040 rows. There are limits, but you'll hit other practical limitations before even coming close to having Three Hundred Fifty-One trillion One Hundred Fifty-Six billion Five Hundred Twenty-Six million One Hundred Twenty thousand Nine Hundred Sixty rows in a table.A table can have as many indexes as there are permutations of columns (and permutations of functions on those columns and permutations of any unique expression you can dream of). With the advent of function-based indexes, the true number of indexes you could create theoretically becomes infinite! Once again, however, practical restrictions, such as overall performance (every index you add will add overhead to an

INSERTinto that table) will limit the actual number of indexes you will create and maintain.There is no limit to the number of tables you may have, even within a single database. Yet again, practical limits will keep this number within reasonable bounds. You will not have millions of tables (as this many is impractical to create and manage), but you may have thousands of tables.

In the next section, we'll look at some of the parameters and terminology relevant to tables. After that, we'll jump into a discussion of the basic heap-organized table and then move on to examine the other types.

In this section, we will cover the various storage parameters and terminology associated with tables. Not all parameters are used for every table type. For example, the PCTUSED parameter is not meaningful in the context of an IOT (the reason for this will become obvious in Chapter 11 "Indexes"). We'll cover the relevant parameters as part of the discussion of each individual table type. The goal is to introduce the terms and define them. As appropriate, more information on using specific parameters is covered in subsequent sections.

A segment in Oracle is an object that consumes storage on disk. While there are many segment types, the most popular are as follows:

Cluster: This segment type is capable of storing tables. There are two types of clusters: B*Tree and hash. Clusters are commonly used to store related data from multiple tables prejoined on the same database block and to store related information from a single table together. The name "cluster" refers to this segment's ability to cluster related information physically together.

Table: A table segment holds data for a database table and is perhaps the most common segment type used in conjunction with an index segment.

Table partition or subpartition: This segment type is used in partitioning and is very similar to a table segment. A table partition or subpartition segment holds just a slice of the data from a table. A partitioned table is made up of one or more table partition segments, and a composite partitioned table is made up of one or more table subpartition segments.

Index: This segment type holds an index structure.

Index partition: Similar to a table partition, this segment type contains some slice of an index. A partitioned index consists of one or more index partition segments.

Lob partition, lob subpartition, lobindex, and lobsegment: The lobindex and lobsegment segments hold the structure of a large object, or LOB. When a table containing a LOB is partitioned, the lobsegment will be partitioned as well—the lob partition segment is used for that. It is interesting to note that there is not a lobindex partition segment type—for whatever reason, Oracle marks the partitioned lob index as an index partition (one wonders why a lobindex is given a special name!).

Nested table: This is the segment type assigned to nested tables, a special kind of child table in a master/detail relationship that we'll discuss later.

Rollback and Type2 undo: This is where undo data is stored. Rollback segments are those manually created by the DBA. Type2 undo segments are automatically created and managed by Oracle.

So, for example, a table may be a segment. An index may be a segment. I stress the words "may be" because we can partition an index into separate segments. So, the index object itself would just be a definition, not a physical segment—and the index would be made up of many index partitions, and each index partition would be a segment. A table may be a segment or not. For the same reason, we might have many table segments due to partitioning, or we might create a table in a segment called a cluster. Here the table will reside, perhaps with other tables in the same cluster segment.

The most common case, however, is that a table will be a segment and an index will be a segment. This is the easiest way to think of it for now. When you create a table, you are normally creating a new table segment and, as discussed in Chapter 3 "Files," that segment consists of extents, and extents consist of blocks. This is the normal storage hierarchy. But it is important to note that only the common case has this one-to-one relationship. For example, consider this simple CREATE TABLE statement:

Create table t ( x int primary key, y clob, z blob );

This statement creates six segments, assuming Oracle Database 11g Release 1 and before; in Oracle Database 11g Release 2 and above, segment creation is deferred until the first row is inserted by default (we'll use syntax to have the segments created immediately below). If you issue this CREATE TABLE statement in a schema that owns nothing, you'll observe the following:

ops$tkyte%ORA11GR2> select segment_name, segment_type

2 from user_segments;

no rows selected

ops$tkyte%ORA11GR2>

ops$tkyte%ORA11GR2> Create table t

2 ( x int primary key,

3 y clob,

4 z blob )

5 SEGMENT CREATION IMMEDIATE

6 /Table created. ops$tkyte%ORA11GR2> ops$tkyte%ORA11GR2> select segment_name, segment_type 2 from user_segments; SEGMENT_NAME SEGMENT_TYPE ------------------------------ ------------------ T TABLE SYS_IL0000093076C00002$$ LOBINDEX SYS_IL0000093076C00003$$ LOBINDEX SYS_C0019048 INDEX SYS_LOB0000093076C00002$$ LOBSEGMENT SYS_LOB0000093076C00003$$ LOBSEGMENT 6 rows selected.

The table itself created a segment in this example: the first row in the output. The primary key constraint created an index segment in this case in order to enforce uniqueness.

Note

A unique or primary key constraint may or may not create a new index. If there is an existing index on the constrained columns, and these columns are on the leading edge of the index, the constraint can and will use them.

Additionally, each of the LOB columns created two segments: one segment to store the actual chunks of data pointed to by the character large object (CLOB) or binary large object (BLOB) pointer, and one segment to organize them. LOBs provide support for very large chunks of information, up to many gigabytes in size. They are stored in chunks in the lobsegment, and the lobindex is used to keep track of where the LOB chunks are and the order in which they should be accessed.

Note that on line 5 of the CREATE TABLE statement I used syntax specific to Oracle 11g Release 2 and above—the SEGMENT CREATION IMMEDIATE clause. If you attempt to use that syntax in earlier releases you will receive:

ops$tkyte%ORA11GR1> Create table t 2 ( x int primary key, 3 y clob, 4 z blob ) 5 SEGMENT CREATION IMMEDIATE 6 / SEGMENT CREATION IMMEDIATE * ERROR at line 5: ORA-00922: missing or invalid option

Starting with Oracle9i, there are two methods for managing space in segments:

Manual Segment Space Management: You set various parameters such as

FREELISTS, FREELIST GROUPS, PCTUSED, and others to control how space is allocated, used, and reused in a segment over time. I will refer to this space management method in this chapter as MSSM, but bear in mind that that is a made-up abbreviation that you will not find widely in the Oracle documentation.Automatic Segment Space Management (ASSM): You control one parameter relating to how space is used:

PCTFREE. The others are accepted when the segment is created, but they are ignored.

MSSM is the legacy implementation in Oracle. It has been around for many years, over many versions. ASSM was first introduced in Oracle9i Release 1 and its design intention was to eliminate the need to fine tune the myriad parameters used to control space allocation and provide high concurrency. For example, by having the FREELISTS parameter set to the default of 1, you might find that your insert/update intensive segments may be suffering from contention on free space allocation. When Oracle goes to insert a row into a table, update an index key entry, or update a row causing the row to migrate (more on that in a moment), it may need to get a block from the list of free blocks associated with the segment. If there is only one list, only one transaction at a time may review and modify this list—they would have to wait for each other. Multiple FREELISTS and FREELIST GROUPS serve the purpose of increasing concurrency in such a case, as the transactions may each be looking at different lists and not contending with each other.

When I discuss the storage settings shortly, I will mention which are for manual and which are for automatic segment space management, but in the area of storage/segment characteristics, the only storage settings that apply to ASSM segments are as follows:

BUFFER_POOLPCTFREEINITRANSMAXTRANS(only in 9i; in 10g and above this is ignored for all segments)

The remaining storage and physical attribute parameters do not apply to ASSM segments.

Segment space management is an attribute inherited from the tablespace in which a segment is contained (and segments never span tablespaces). For a segment to use ASSM, it would have to reside in a tablespace that supported that method of space management.

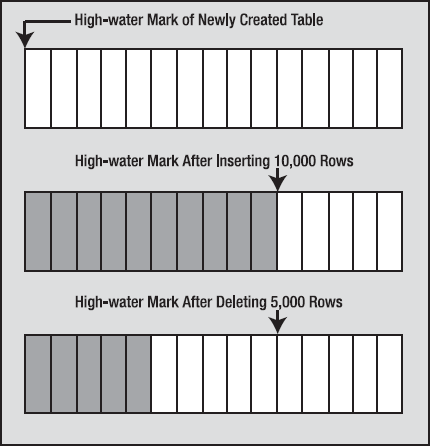

This is a term used with table segments stored in the database. If you envision a table, for example, as a flat structure or as a series of blocks laid one after the other in a line from left to right, the high-water mark (HWM) would be the rightmost block that ever contained data, as illustrated in Figure 10-1.

Figure 10-1 shows that the HWM starts at the first block of a newly created table. As data is placed into the table over time and more blocks get used, the HWM rises. If we delete some (or even all) of the rows in the table, we might have many blocks that no longer contain data, but they are still under the HWM, and they will remain under the HWM until the object is rebuilt, truncated, or shrunk (shrinking of a segment is a new Oracle 10g feature that is supported only if the segment is in an ASSM tablespace).

The HWM is relevant since Oracle will scan all blocks under the HWM, even when they contain no data, during a full scan. This will impact the performance of a full scan—especially if most of the blocks under the HWM are empty. To see this, just create a table with 1,000,000 rows (or create any table with a large number of rows), and then execute a SELECT COUNT(*) from this table. Now, DELETE every row in it and you will find that the SELECT COUNT(*) takes just as long (or longer, if you need to clean out the block! Refer to the "Block Cleanout" section of Chapter 9 "Redo and Undo" to count 0 rows as it did to count 1,000,000. This is because Oracle is busy reading all of the blocks below the HWM to see if they contain data. You should compare this to what happens if you used TRUNCATE on the table instead of deleting each individual row. TRUNCATE will reset the HWM of a table back to zero and will truncate the associated indexes on the table as well. If you plan on deleting every row in a table, TRUNCATE—if it can be used—would be the method of choice for this reason.

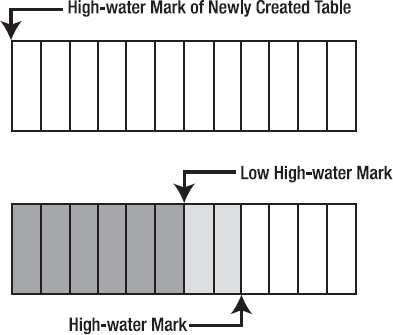

In an MSSM tablespace, segments have a definite HWM. In an ASSM tablespace, however, there is an HWM and a low HWM. In MSSM, when the HWM is advanced (e.g., as rows are inserted), all of the blocks are formatted and valid, and Oracle can read them safely. With ASSM, however, when the HWM is advanced Oracle doesn't format all of the blocks immediately—they are only formatted and made safe to read upon their first actual use. The first actual use will be when the database decides to insert a record into a given block. Under ASSM, the data is inserted in any of the blocks between the low high water mark and the high water mark, so many of the blocks in this area might not be formatted. So, when full scanning a segment, we have to know if the blocks to be read are safe or unformatted (meaning they contain nothing of interest and we do not process them). To make it so that not every block in the table needs go through this safe/not safe check, Oracle maintains a low HWM and a HWM. Oracle will full scan the table up to the HWM—and for all of the blocks below the low HWM, it will just read and process them. For blocks between the low HWM and the HWM (see Figure 10-2), it must be more careful and refer to the ASSM bitmap information used to manage these blocks in order to see which of them it should read and which it should just ignore.

When you use an MSSM tablespace, the FREELIST is where Oracle keeps track of blocks under the HWM for objects that have free space on them.

Note

FREELISTS and FREELIST GROUPS do not pertain to ASSM tablespaces at all; only MSSM tablespaces use this technique.

Each object will have at least one FREELIST associated with it, and as blocks are used, they will be placed on or taken off of the FREELIST as needed. It is important to note that only blocks under the HWM of an object will be found on the FREELIST. The blocks that remain above the HWM will be used only when the FREELISTs are empty, at which point Oracle advances the HWM and adds these blocks to the FREELIST. In this fashion, Oracle postpones increasing the HWM for an object until it has to.

An object may have more than one FREELIST. If you anticipate heavy INSERT or UPDATE activity on an object by many concurrent users, then configuring more than one FREELIST can have a major positive impact on performance (at the cost of possible additional storage). Having sufficient FREELISTs for your needs is crucial.

FREELISTs can be a huge positive performance influence (or inhibitor) in an environment with many concurrent inserts and updates. An extremely simple test can show the benefits of setting FREELISTS correctly. Consider this relatively simple table:

ops$tkyte%ORA11GR2> create table t ( x int, y char(50) ) tablespace mssm; Table created.

Note

mssm in the above example is the name of a tablespace, not a keyword. You may replace it with the name of any tablespace you have that uses manual segment space management.

Using five concurrent sessions, we start inserting into this table like wild. If we measure the system-wide wait events for block-related waits both before and after inserting, we will find large waits, especially on data blocks (trying to insert data). This is frequently caused by insufficient FREELISTs on tables (and on indexes, but we'll cover that in detail in the next chapter "Indexes"). I used Statspack for this—I took a statspack.snap, executed a script that started the five concurrent SQL*Plus sessions, and waited for them to exit, before taking another statspack.snap. The script these sessions ran was simply:

begin

for i in 1 .. 100000

loop

insert into t values ( i, 'x' );

end loop;

commit;

end;

/

exit;Now, this is a very simple block of code, and I'm the only user in the database here. I should get the best possible performance. I have plenty of buffer cache configured, my redo logs are sized appropriately, indexes won't be slowing things down, I'm running on a machine with two hyperthreaded Xeon CPUs—this should run fast. What I discovered afterward, however, is the following:

Snapshot Snap Id Snap Time Sessions Curs/Sess Comment

~~~~~~~~ ---------- ------------------ -------- --------- ------------------

Begin Snap: 364 17-Mar-10 11:58:24 26 1.7

End Snap: 365 17-Mar-10 11:59:01 24 1.8

Elapsed: 0.62 (mins) Av Act Sess: 4.9

DB time: 3.04 (mins) DB CPU: 1.18 (mins)

Top 5 Timed Events Avg %Total

~~~~~~~~~~~~~~~~~~ wait Call

Event Waits Time (s) (ms) Time

----------------------------------------- ------------ ----------- ------ ------

buffer busy waits 199,708 107 1 53.5

CPU time 70 35.0

db file async I/O submit 44 11 252 5.5

log file parallel write 1,130 9 8 4.5

control file parallel write 56 1 16 .4

-------------------------------------------------------------I collectively waited 107 seconds, or about 21 seconds per session on average, on buffer busy waits. These waits are caused entirely by the fact that there are not enough FREELISTs configured on my table for the type of concurrent activity that is taking place. I can eliminate most of that wait time easily, just by creating the table with multiple FREELISTs

ops$tkyte@ORA11GR2> create table t ( x int, y char(50) ) 2 storage ( freelists 5 ) tablespace MSSM; Table created.

or by altering the object

ops$tkyteORA11GR2> alter table t storage ( FREELISTS 5 ); Table altered.

You will find that the buffer busy waits goes way down, and the amount of CPU needed (since you are doing less work here; competing for a latched data structure can really burn CPU) also goes down along with the elapsed time:

Snapshot Snap Id Snap Time Sessions Curs/Sess Comment ~~~~~~~~ ---------- ------------------ -------- --------- ------------------ Begin Snap: 367 17-Mar-10 12:26:50 24 1.3 End Snap: 368 17-Mar-10 12:27:09 22 1.5 Elapsed: 0.32 (mins) Av Act Sess: 4.3 DB time: 1.37 (mins) DB CPU: 0.98 (mins) Top 5 Timed Events Avg %Total ~~~~~~~~~~~~~~~~~~ wait Call Event Waits Time (s) (ms) Time ----------------------------------------- ------------ ----------- ------ ------ CPU time 59 59.9 db file async I/O submit 28 15 536 15.3 log file parallel write 752 10 13 9.8 buffer busy waits 17,929 5 0 5.4 cursor: pin S 252 3 11 2.9

What you want to do for a table is try to determine the maximum number of concurrent (truly concurrent) inserts or updates that will require more space. What I mean by truly concurrent is how often you expect two people at exactly the same instant to request a free block for that table. This is not a measure of overlapping transactions; it is a measure of how many sessions are doing inserts at the same time, regardless of transaction boundaries. You want to have about as many FREELISTs as concurrent inserts into the table to increase concurrency.

You should just set FREELISTs really high and then not worry about it, right? Wrong—of course, that would be too easy. When you use multiple FREELISTs, there is a master FREELIST and there are process FREELISTs. If a segment has a single FREELIST, then the master and process FREELISTs are one and the same thing. If you have two FREELISTs, you'll really have one master FREELIST and two process FREELISTs. A given session will be assigned to a single process FREELIST based on a hash of its session ID. Now, each process FREELIST will have very few blocks on it—the remaining free blocks are on the master FREELIST. As a process FREELIST is used, it will pull a few blocks from the master FREELIST as needed. If the master FREELIST cannot satisfy the space requirement, then Oracle will advance the HWM and add empty blocks to the master FREELIST. So, over time, the master FREELIST will fan out its storage over the many process FREELISTs (again, each of which has only a few blocks on it). So, each process will use a single process FREELIST. It will not go from process FREELIST to process FREELIST to find space. This means that if you have ten process FREELISTs on a table and the one your process is using exhausts the free buffers on its list, it will not go to another process FREELIST for space—so even if the other nine process FREELISTs have five blocks each (45 blocks in total), it will go to the master FREELIST. Assuming the master FREELIST cannot satisfy the request for a free block, it would cause the table to advance the HWM or, if the table's HWM cannot be advanced (all the space is used), to extend (to get another extent). It will then continue to use the space on its FREELIST only (which is no longer empty). There is a tradeoff to be made with multiple FREELISTs. On one hand, use of multiple FREELISTs is a huge performance booster. On the other hand, it will probably cause the table to use slightly more disk space than absolutely necessary. You will have to decide which is less bothersome in your environment.

Do not underestimate the usefulness of the FREELISTS parameter, especially since you can alter it up and down at will with Oracle 8.1.6 and later. What you might do is alter it to a large number to perform some load of data in parallel with the conventional path mode of SQL*Loader. You will achieve a high degree of concurrency for the load with minimum waits. After the load, you can reduce the value to some more reasonable day-to-day number. The blocks on the many existing FREELISTs will be merged into the one master FREELIST when you alter the space down.

Another way to solve the previously mentioned issue of buffer busy waits is to use an ASSM managed tablespace. If you take the preceding example and create the table T in an ASSM managed tablespace as follows

ops$tkyte@ORA11GR2> create tablespace assm

2 datafile size 1m autoextend on next 1m

3 segment space management auto;

Tablespace created.

ops$tkyte@ORA11GR2> create table t ( x int, y char(50) ) tablespace ASSM;

Table created.you'll find the buffer busy waits, CPU time, and elapsed time to have decreased for this case as well, similar to when we configured the perfect number of FREELISTs for a segment using MSSM —without having to figure out the optimum number of required FREELISTs:

Snapshot Snap Id Snap Time Sessions Curs/Sess Comment ~~~~~~~~ ---------- ------------------ -------- --------- ------------------ Begin Snap: 369 17-Mar-10 12:31:05 22 1.5 End Snap: 370 17-Mar-10 12:31:25 24 1.3 Elapsed: 0.33 (mins) Av Act Sess: 4.6 DB time: 1.54 (mins) DB CPU: 1.08 (mins) Top 5 Timed Events Avg %Total ~~~~~~~~~~~~~~~~~~ wait Call Event Waits Time (s) (ms) Time ----------------------------------------- ------------ ----------- ------ ------ CPU time 65 58.2 db file async I/O submit 25 17 661 14.9 log file parallel write 674 11 16 9.8 log file switch (checkpoint incomplete) 13 4 334 3.9 buffer busy waits 7,642 4 0 3.4

This is one of ASSM's main purposes: to remove the need to manually determine the correct settings for many key storage parameters. ASSM uses additional space when compared to MSSM in some cases as it attempts to spread inserts out over many blocks, but in most all cases, the nominal extra storage utilized is far outweighed by the decrease in concurrency issues. An environment where storage utilization is crucial and concurrency is not (a data warehouse pops into mind) would not necessarily benefit from ASSM managed storage for that reason.

In general, the PCTFREE parameter tells Oracle how much space should be reserved on a block for future updates. By default, this is 10 percent. If there is a higher percentage of free space than the value specified in PCTFREE, then the block is considered to be free. PCTUSED tells Oracle the percentage of free space that needs to be present on a block that is not currently free in order for it to become free again. The default value is 40 percent.

As noted earlier, when used with a table (but not an IOT, as we'll see), PCTFREE tells Oracle how much space should be reserved on a block for future updates. This means if we use an 8KB block size, as soon as the addition of a new row onto a block causes the free space on the block to drop below about 800 bytes, Oracle will use another block from the FREELIST instead of the existing block. This 10 percent of the data space on the block is set aside for updates to the rows on that block.

Note

PCTFREE and PCTUSED are implemented differently for different table types. Some table types employ both, whereas others only use PCTFREE, and even then only when the object is created. IOTs use PCTFREE upon creation to set aside space in the table for future updates, but do not use PCTFREE to decide when to stop inserting rows into a given block, for example.

The exact effect of these two parameters varies depending on whether you are using ASSM or MSSM tablespaces. When you are using MSSM, these parameter settings control when the block will be put on and taken off the FREELIST. If you are using the default values for PCTFREE (10) and PCTUSED (40), then a block will remain on the FREELIST until it is 90 percent full (10 percent free space). Once it hits 90 percent, it will be taken off the FREELIST and remain off the FREELIST until the free space on the block exceeds 60 percent of the block.

When you are using ASSM, PCTFREE still limits if a new row may be inserted into a block, but it does not control whether a block is on a FREELIST or not, as ASSM does not use FREELISTs at all. In ASSM, PCTUSED is simply ignored.

There are three settings for PCTFREE: too high, too low, and just about right. If you set PCTFREE for blocks too high, you will waste space. If you set PCTFREE to 50 percent and you never update the data, you have just wasted 50 percent of every block. On another table, however, 50 percent may be very reasonable. If the rows start out small and tend to double in size, setting PCTFREE too small will cause row migration as you update the rows.

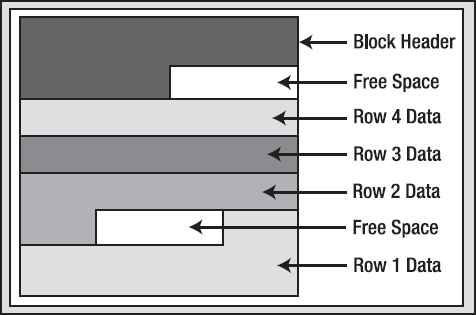

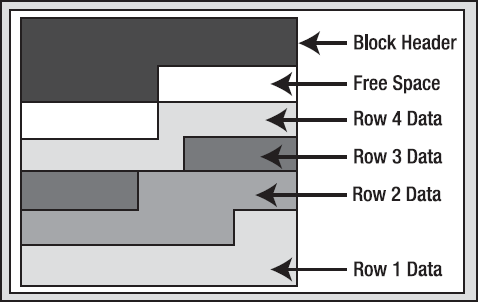

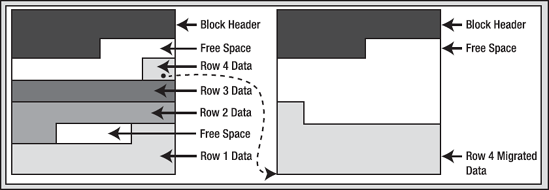

What is row migration? Row migration is when a row is forced to leave the block it was created on because it grew too large to fit on that block with the rest of the rows. To illustrate row migration, we start with a block that looks like Figure 10-3.

Approximately one-seventh of the block is free space. However, we would like to more than double the amount of space used by row 4 via an UPDATE (it currently consumes one-seventh of the block). In this case, even if Oracle coalesced the space on the block as shown in Figure 10-4, there is still insufficient room to double the size of row 4, because the size of the free space is less than the current size of row 4.

If the row fit into the coalesced space, it would have happened. This time, however, Oracle will not perform this coalescing and the block will remain as it is. Since row 4 would have to span more than one block if it stayed on this block, Oracle will move, or migrate, the row. However, Oracle cannot just move the row; it must leave behind a forwarding address. There may be indexes that physically point to this address for row 4. A simple update will not modify the indexes as well.

Note

There is a special case with partitioned tables that a rowid, the address of a row, will change. We will look at this case in Chapter 13 "Partitioning." Additionally, other administrative operations such as FLASHBACK TABLE and ALTER TABLE SHRINK may change rowids assigned to rows as well.

Therefore, when Oracle migrates the row, it will leave behind a pointer to where the row really is. After the update, the blocks might look as shown in Figure 10-5.

So, a migrated row is a row that had to move from the block it was inserted into onto some other block. Why is this an issue? Your application will never know; the SQL you use is no different. It only matters for performance reasons. If you go to read this row via an index, the index will point to the original block. That block will point to the new block. Instead of doing the two or so I/Os to read the index plus one I/O to read the table, you'll need to do yet one more I/O to get to the actual row data. In isolation, this is no big deal—you won't even notice it. However, when you have a sizable percentage of your rows in this state, with many users accessing them, you'll begin to notice this side effect. Access to this data will start to slow down (additional I/Os and the associated latching that goes with the I/O add to the access time), your buffer cache efficiency goes down (you need to buffer two blocks instead of just the one you would if the rows were not migrated), and your table grows in size and complexity. For these reasons, you generally do not want migrated rows (but do not lose sleep if a couple hundred/thousand rows in a table of thousands or more rows are migrated!).

It is interesting to note what Oracle will do if the row that was migrated from the block on the left to the block on the right in Figure 10-5 has to migrate again at some future point in time. This would be due to other rows being added to the block it was migrated to and then updating this row to make it even larger. Oracle will actually migrate the row back to the original block and, if there is sufficient space, leave it there (the row might become unmigrated). If there isn't sufficient space, Oracle will migrate the row to another block altogether and change the forwarding address on the original block. As such, row migrations will always involve one level of indirection.

So, now we are back to PCTFREE and what it is used for: it is the setting that will help you to minimize row migration and row chaining (discussed later) when set properly.

Setting PCTFREE and PCTUSED is an important—and greatly overlooked—topic. In summary, PCTUSED and PCTFREE are both crucial when using MSSM; with ASSM, only PCTFREE is. On the one hand, you need to use them to avoid too many rows from migrating. On the other hand, you use them to avoid wasting too much space. You need to look at your objects and describe how they will be used, and then you can come up with a logical plan for setting these values. Rules of thumb may very well fail you on these settings; they really need to be set based on usage. You might consider the following (keeping in mind that "high" and "low" are relative terms, and that when using ASSM, only PCTFREE applies):

High

PCTFREE, lowPCTUSED: This setting is for when you insert lots of data that will be updated, and the updates will increase the size of the rows frequently. This setting reserves a lot of space on the block after inserts (highPCTFREE) and makes it so that the block must almost be empty before getting back onto theFREELIST(lowPCTUSED).Low

PCTFREE, highPCTUSED: This setting is for if you tend to only everINSERTorDELETEfrom the table, or if you doUPDATE, theUPDATEtends to shrink the row in size.

Normally, objects are created in a LOGGING fashion, meaning all operations performed against them that can generate redo will generate it. NOLOGGING allows certain operations to be performed against that object without the generation of redo; we covered this in the Chapter 9 "Redo and Undo" in some detail. NOLOGGING affects only a few specific operations, such as the initial creation of the object, direct path loads using SQL*Loader, or rebuilds (see the SQL Language Reference Manual for the database object you are working with to see which operations apply).

This option does not disable redo log generation for the object in general—only for very specific operations. For example, if I create a table as SELECT NOLOGGING and then INSERT INTO THAT_TABLE VALUES(1), the INSERT will be logged, but the table creation might not have been (the DBA can force logging at the database or tablespace level).

Each block in a segment has a block header. Part of this block header is a transaction table. Entries will be made in the transaction table to describe which transactions have what rows/elements on the block locked. The initial size of this transaction table is specified by the INITRANS setting for the object. For tables, this defaults to 2 (indexes default to 2 as well). This transaction table will grow dynamically as needed up to MAXTRANS entries in size (given sufficient free space on the block, that is). Each allocated transaction entry consumes 23 to 24 bytes of storage in the block header. Note that as of Oracle 10g, MAXTRANS is ignored—all segments have a MAXTRANS of 255.

A heap organized table is probably used 99 percent (or more) of the time in applications. A heap organized table is the type of table you get by default when you issue the CREATE TABLE statement. If you want any other type of table structure, you need to specify that in the CREATE statement itself.

A heap is a classic data structure studied in computer science. It is basically a big area of space, disk, or memory (disk in the case of a database table, of course) that is managed in an apparently random fashion. Data will be placed where it fits best, rather than in any specific sort of order. Many people expect data to come back out of a table in the same order it was put into it, but with a heap, this is definitely not assured. In fact, rather the opposite is guaranteed: the rows will come out in a wholly unpredictable order. This is quite easy to demonstrate.

In this example, I will set up a table such that in my database I can fit one full row per block (I am using an 8KB block size). You do not need to have the case where you only have one row per block— I am just taking advantage of this to demonstrate a predictable sequence of events. The following sort of behavior (that rows have no order) will be observed on tables of all sizes, in databases with any block size:

ops$tkyte%ORA11GR2> create table t

2 ( a int,

3 b varchar2(4000) default rpad('*',4000,'*'),

4 c varchar2(3000) default rpad('*',3000,'*')

5 )

6 /

Table created.

ops$tkyte%ORA11GR2> insert into t (a) values ( 1);

1 row created.

ops$tkyte%ORA11GR2> insert into t (a) values ( 2);

1 row created.

ops$tkyte%ORA11GR2> insert into t (a) values ( 3);

1 row created.

ops$tkyte%ORA11GR2> delete from t where a = 2 ;

1 row deleted.

ops$tkyte%ORA11GR2> insert into t (a) values ( 4);

1 row created.

ops$tkyte%ORA11GR2> select a from t;

A

----------

1

4

3Adjust columns B and C to be appropriate for your block size if you would like to reproduce this. For example, if you have a 2KB block size, you do not need column C, and column B should be a VARCHAR2(1500) with a default of 1,500 asterisks. Since data is managed in a heap in a table like this, as space becomes available, it will be reused.

Note

When using ASSM or MSSM, you'll find rows end up in different places. The underlying space management routines are very different; the same operations executed against a table in ASSM and MSSM may well result in different physical order. The data will logically be the same, but it will be stored in different ways.

A full scan of the table will retrieve the data as it hits it, not in the order of insertion. This is a key concept to understand about database tables: in general, they are inherently unordered collections of data. You should also note that I do not need to use a DELETE in order to observe this effect; I could achieve the same results using only INSERTs. If I insert a small row, followed by a very large row that will not fit on the block with the small row, and then a small row again, I may very well observe that the rows come out by default in the order "small row, small row, large row." They will not be retrieved in the order of insertion—Oracle will place the data where it fits, not in any order by date or transaction.

If your query needs to retrieve data in order of insertion, you must add a column to the table that you can use to order the data when retrieving it. This column could be a number column, for example, maintained with an increasing sequence (using the Oracle SEQUENCE object). You could then approximate the insertion order using a SELECT that did an ORDER BY on this column. It will be an approximation because the row with sequence number 55 may very well have committed before the row with sequence 54, therefore it was officially first in the database.

You should think of a heap organized table as a big unordered collection of rows. These rows will come out in a seemingly random order, and depending on other options being used (parallel query, different optimizer modes, and so on), they may come out in a different order with the same query. Do not ever count on the order of rows from a query unless you have an ORDER BY statement on your query!

That aside, what is important to know about heap tables? Well, the CREATE TABLE syntax spans some 72 pages in the SQL Reference Manual provided by Oracle, so there are lots of options that go along with them. There are so many options that getting a hold on all of them is pretty difficult. The wire diagrams (or train track diagrams) alone take 18 pages to cover. One trick I use to see most of the options available to me in the CREATE TABLE statement for a given table is to create the table as simply as possible, for example:

ops$tkyte@ORA11GR2> create table t 2 ( x int primary key, 3 y date, 4 z clob 5 ) 6 / Table created.

Then, using the standard supplied package DBMS_METADATA, I query the definition of it and see the verbose syntax:

ops$tkyte%ORA11GR2> select dbms_metadata.get_ddl( 'TABLE', 'T' ) from dual;

DBMS_METADATA.GET_DDL('TABLE','T')

------------------------------------------------------------------------------

CREATE TABLE "OPS$TKYTE"."T"

( "X" NUMBER(*,0),

"Y" DATE,

"Z" CLOB,

PRIMARY KEY ("X")

USING INDEX PCTFREE 10 INITRANS 2 MAXTRANS 255 NOCOMPRESS LOGGING

TABLESPACE "USERS" ENABLE

) SEGMENT CREATION DEFERRED

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255 NOCOMPRESS LOGGING

TABLESPACE "USERS"

LOB ("Z") STORE AS BASICFILE (

TABLESPACE "USERS" ENABLE STORAGE IN ROW CHUNK 8192 RETENTION

NOCACHE LOGGING )The nice thing about this trick is that it shows many of the options for my CREATE TABLE statement. I just have to pick data types and such; Oracle will produce the verbose version for me. I can now customize this verbose version, perhaps changing the ENABLE STORAGE IN ROW to DISABLE STORAGE IN ROW, which would disable the storage of the LOB data in the row with the structured data, causing it to be stored in another segment. I use this trick myself all of the time to avoid having to decipher the huge wire diagrams. I also use this technique to learn what options are available to me on the CREATE TABLE statement under different circumstances.

Now that you know how to see most of the options available to you on a given CREATE TABLE statement, which are the important ones you need to be aware of for heap tables? In my opinion, there are three with ASSM and five with MSSM:

FREELIST: MSSM only. Every table manages the blocks it has allocated in the heap on aFREELIST. A table may have more than oneFREELIST. If you anticipate heavy insertion into a table by many concurrent users, configuring more than oneFREELISTcan have a major positive impact on performance (at the cost of possible additional storage). Refer to the previous discussion and example in the section "FREELISTS" for the sort of impact this setting can have on performance.PCTFREE: Both ASSM and MSSM. A measure of how full a block can be is made during theINSERTprocess. As shown earlier, this is used to control whether a row may be added to a block or not based on how full the block currently is. This option is also used to control row migrations caused by subsequent updates and needs to be set based on how you use the table.PCTUSED: MSSM only. A measure of how empty a block must become before it can be a candidate for insertion again. A block that has less thanPCTUSEDspace used is a candidate for insertion of new rows. Again, likePCTFREE, you must consider how you will be using your table to set this option appropriately.INITRANS: Both ASSM and MSSM. The number of transaction slots initially allocated to a block. If set too low (defaults to and has a minimum of 2), this option can cause concurrency issues in a block that is accessed by many users. If a database block is nearly full and the transaction list cannot be dynamically expanded, sessions will queue up for this block, as each concurrent transaction needs a transaction slot. If you believe you will have many concurrent updates to the same blocks, consider increasing this value.COMPRESS/NOCOMPRESS: Both ASSM and MSSM. Enables or disables compression of table data during either direct path operations or during conventional path ("normal," if you will) operations such asINSERT. Prior to Oracle 9i Release 2, this option was not available. Starting with Oracle 9i Release 2 through Oracle 10g Release 2, the option wasCOMPRESSorNOCOMPRESS, to either use or not use table compression during direct path operations only. In those releases, only direct path operations such asCREATE TABLE AS SELECT, INSERT /*+ APPEND */, ALTER TABLE T MOVE, and SQL*Loader direct path loads could take advantage of compression. Starting with Oracle 11g Release 1 and above, the options areNOLOGGING, COMPRESS FOR OLTP, and COMPRESS BASIC. NOLOGGINGdisables any compression,COMPRESS FOR OLTPenables compression for all operations (direct or conventional path), andCOMPRESS BASICenables compression for direct path operations only.

Note

LOB data that is stored out of line in the LOB segment does not make use of the PCTFREE/PCTUSED parameters set for the table. These LOB blocks are managed differently: they are always filled to capacity and returned to the FREELIST only when completely empty.

These are the parameters you want to pay particularly close attention to. With the introduction of locally managed tablespaces, which are highly recommended, I find that the rest of the storage parameters (such as PCTINCREASE, NEXT, and so on) are simply not relevant anymore.

Index organized tables (IOTs) are quite simply tables stored in an index structure. Whereas a table stored in a heap is unorganized (i.e., data goes wherever there is available space), data in an IOT is stored and sorted by primary key. IOTs behave just like "regular" tables do as far as your application is concerned; you use SQL to access them as normal. They are especially useful for information retrieval, spatial, and OLAP applications.

What is the point of an IOT? You might ask the converse, actually: what is the point of a heap organized table? Since all tables in a relational database are supposed to have a primary key anyway, isn't a heap organized table just a waste of space? We have to make room for both the table and the index on the primary key of the table when using a heap organized table. With an IOT, the space overhead of the primary key index is removed, as the index is the data, and the data is the index. The fact is that an index is a complex data structure that requires a lot of work to manage and maintain, and the maintenance requirements increase as the width of the row to store increases. A heap, on the other hand, is trivial to manage by comparison. There are efficiencies in a heap organized table over an IOT. That said, IOTs have some definite advantages over their heap counterparts. For example, I once built an inverted list index on some textual data (this predated the introduction of interMedia and related technologies). I had a table full of documents, and I would parse the documents and find words within them. My table looked like this:

create table keywords ( word varchar2(50), position int, doc_id int, primary key(word,position,doc_id) );

Here I had a table that consisted solely of columns of the primary key. I had over 100 percent overhead; the size of my table and primary key index were comparable (actually, the primary key index was larger since it physically stored the rowid of the row it pointed to, whereas a rowid is not stored in the table—it is inferred). I only used this table with a WHERE clause on the WORD or WORD and POSITION columns. That is, I never used the table—I used only the index on the table. The table itself was no more than overhead. I wanted to find all documents containing a given word (or near another word, and so on). The KEYWORDS heap table was useless, and it just slowed down the application during maintenance of the KEYWORDS table and doubled the storage requirements. This is a perfect application for an IOT.

Another implementation that begs for an IOT is a code lookup table. Here you might have ZIP_CODE to STATE lookup, for example. You can now do away with the heap table and just use an IOT itself. Anytime you have a table that you access via its primary key exclusively, it is a possible candidate for an IOT.

When you want to enforce co-location of data or you want data to be physically stored in a specific order, the IOT is the structure for you. For users of Sybase and SQL Server, this is where you would have used a clustered index, but IOTs go one better. A clustered index in those databases may have up to a 110 percent overhead (similar to the previous KEYWORDS table example). Here, we have a 0 percent overhead since the data is stored only once. A classic example of when you might want this physically co-located data would be in a parent/child relationship. Let's say the EMP table had a child table containing addresses. You might have a home address entered into the system when the employee is initially sent an offer letter for a job. Later, he adds his work address. Over time, he moves and changes the home address to a previous address and adds a new home address. Then he has a school address he added when he went back for a degree, and so on. That is, the employee has three or four (or more) detail records, but these details arrive randomly over time. In a normal heap based table, they just go anywhere. The odds that two or more of the address records would be on the same database block in the heap table are very near zero. However, when you query an employee's information, you always pull the address detail records as well. The rows that arrive over time are always retrieved together. To make the retrieval more efficient, you can use an IOT for the child table to put all of the records for a given employee near each other upon insertion, so when you retrieve them over and over again, you do less work.

An example will easily show the effects of using an IOT to physically co-locate the child table information. Let's create and populate an EMP table:

ops$tkyte%ORA11GR2> create table emp 2 as 3 select object_id empno, 4 object_name ename, 5 created hiredate, 6 owner job 7 from all_objects 8 / Table created. ops$tkyte%ORA11GR2> alter table emp add constraint emp_pk primary key(empno) 2 / Table altered. ops$tkyte%ORA11GR2> begin 2 dbms_stats.gather_table_stats( user, 'EMP', cascade=>true ); 3 end; 4 / PL/SQL procedure successfully completed.

Next, we'll implement the child table two times, once as a conventional heap table and again as an IOT:

ops$tkyte%ORA11GR2> create table heap_addresses 2 ( empno references emp(empno) on delete cascade, 3 addr_type varchar2(10), 4 street varchar2(20), 5 city varchar2(20), 6 state varchar2(2), 7 zip number,

8 primary key (empno,addr_type) 9 ) 10 / Table created. ops$tkyte%ORA11GR2> create table iot_addresses 2 ( empno references emp(empno) on delete cascade, 3 addr_type varchar2(10), 4 street varchar2(20), 5 city varchar2(20), 6 state varchar2(2), 7 zip number, 8 primary key (empno,addr_type) 9 ) 10 ORGANIZATION INDEX 11 / Table created.

I populated these tables by inserting into them a work address for each employee, then a home address, then a previous address, and finally a school address. A heap table would tend to place the data at the end of the table; as the data arrives, the heap table would simply add it to the end, due to the fact that the data is just arriving and no data is being deleted. Over time, if addresses are deleted, the inserts would become more random throughout the table. Suffice it to say, the chance an employee's work address would be on the same block as his home address in the heap table is near zero. For the IOT, however, since the key is on EMPNO, ADDR_TYPE, we'll be pretty sure that all of the addresses for a given EMPNO are located on one or maybe two index blocks together. The inserts used to populate this data were:

ops$tkyte%ORA11GR2> insert into heap_addresses 2 select empno, 'WORK', '123 main street', 'Washington', 'DC', 20123 3 from emp; 72075 rows created. ops$tkyte%ORA11GR2> insert into iot_addresses 2 select empno, 'WORK', '123 main street', 'Washington', 'DC', 20123 3 from emp; 72075 rows created.

I did that three more times, changing WORK to HOME, PREV, and SCHOOL in turn. Then I gathered statistics:

ops$tkyte%ORA11GR2> exec dbms_stats.gather_table_stats( user, 'HEAP_ADDRESSES' ); PL/SQL procedure successfully completed. ops$tkyte%ORA11GR2> exec dbms_stats.gather_table_stats( user, 'IOT_ADDRESSES' ); PL/SQL procedure successfully completed.

Now we are ready to see what measurable difference we could expect to see. Using AUTOTRACE, we'll get a feeling for the change:

ops$tkyte%ORA11GR2> set autotrace traceonly

ops$tkyte%ORA11GR2> select *

2 from emp, heap_addresses

3 where emp.empno = heap_addresses.empno

4 and emp.empno = 42;

Execution Plan

----------------------------------------------------------

Plan hash value: 4163174643

-----------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes |

-----------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 356 |

| 1 | NESTED LOOPS | | 4 | 356 |

| 2 | TABLE ACCESS BY INDEX ROWID| EMP | 1 | 43 |

|* 3 | INDEX UNIQUE SCAN | EMP_PK | 1 | |

| 4 | TABLE ACCESS BY INDEX ROWID| HEAP_ADDRESSES | 4 | 184 |

|* 5 | INDEX RANGE SCAN | SYS_C0019080 | 4 | |

-----------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

3 - access("EMP"."EMPNO"=42)

5 - access("HEAP_ADDRESSES"."EMPNO"=42)

Statistics

----------------------------------------------------------

0 recursive calls

0 db block gets

11 consistent gets

0 physical reads

0 redo size

1153 bytes sent via SQL*Net to client

419 bytes received via SQL*Net from client

2 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

4 rows processedThat is a pretty common plan: go to the EMP table by primary key; get the row; then using that EMPNO, go to the address table; and using the index, pick up the child records. We did 11 I/Os to retrieve this data. Now running the same query, but using the IOT for the addresses

ops$tkyte%ORA11GR2> select * 2 from emp, iot_addresses 3 where emp.empno = iot_addresses.empno

4 and emp.empno = 42;

Execution Plan

----------------------------------------------------------

Plan hash value: 1490857195

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 356 |

| 1 | NESTED LOOPS | | 4 | 356 |

| 2 | TABLE ACCESS BY INDEX ROWID| EMP | 1 | 43 |

|* 3 | INDEX UNIQUE SCAN | EMP_PK | 1 | |

|* 4 | INDEX RANGE SCAN | SYS_IOT_TOP_93124 | 4 | 184 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

3 - access("EMP"."EMPNO"=42)

4 - access("IOT_ADDRESSES"."EMPNO"=42)

Statistics

----------------------------------------------------------

0 recursive calls

0 db block gets

7 consistent gets

0 physical reads

0 redo size

1153 bytes sent via SQL*Net to client

419 bytes received via SQL*Net from client

2 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

4 rows processedwe did four fewer I/Os (the four should have been guessable); we skipped four TABLE ACCESS (BY INDEX ROWID) steps. The more child records we have, the more I/Os we would anticipate skipping.

So, what is four I/Os? Well, in this case it was over one-third of the I/O performed for the query, and if we execute this query repeatedly, it would add up. Each I/O and each consistent get requires an access to the buffer cache, and while it is true that reading data out of the buffer cache is faster than disk, it is also true that the buffer cache gets are not free and not totally cheap. Each will require many latches of the buffer cache, and latches are serialization devices that will inhibit our ability to scale. We can measure both the I/O reduction as well as latching reduction by running a PL/SQL block such as this:

ops$tkyte%ORA11GR2> begin 2 for x in ( select empno from emp ) 3 loop 4 for y in ( select emp.ename, a.street, a.city, a.state, a.zip 5 from emp, heap_addresses a 6 where emp.empno = a.empno

7 and emp.empno = x.empno ) 8 loop 9 null; 10 end loop; 11 end loop; 12 end; 13 / PL/SQL procedure successfully completed.

Here, we are just emulating a busy period and running the query some 45,000 times, once for each EMPNO. If we run that for the HEAP_ADRESSES and IOT_ADDRESSES tables, TKPROF shows us the following:

SELECT EMP.ENAME, A.STREET, A.CITY, A.STATE, A.ZIP

FROM EMP, HEAP_ADDRESSES A WHERE EMP.EMPNO = A.EMPNO AND EMP.EMPNO = :B1

call count cpu elapsed disk query current rows

------- ------ -------- ---------- ---------- ---------- ---------- ----------

Parse 1 0.00 0.00 0 0 0 0

Execute 72073 11.53 11.79 0 0 0 0

Fetch 72073 6.55 6.58 3641 722160 0 288292

------- ------ -------- ---------- ---------- ---------- ---------- ----------

total 144147 18.09 18.38 3641 722160 0 288292

Misses in library cache during parse: 0

Optimizer mode: ALL_ROWS

Parsing user id: 286 (recursive depth: 1)

Rows Row Source Operation

------- ---------------------------------------------------

4 NESTED LOOPS (cr=10 pr=0 pw=0 time=60 us cost=8 size=280 card=4)

1 TABLE ACCESS BY INDEX ROWID EMP (cr=3 pr=0 pw=0 time=0 us cost=2 siz...)

1 INDEX UNIQUE SCAN EMP_PK (cr=2 pr=0 pw=0 time=0 us cost=1 size=0 ...)

4 TABLE ACCESS BY INDEX ROWID HEAP_ADDRESSES (cr=7 pr=0 pw=0 time=54 us...)

4 INDEX RANGE SCAN SYS_C0019080 (cr=3 pr=0 pw=0 time=3 us cost=2 size=...)

********************************************************************************

SELECT EMP.ENAME, A.STREET, A.CITY, A.STATE, A.ZIP

FROM

EMP, IOT_ADDRESSES A WHERE EMP.EMPNO = A.EMPNO AND EMP.EMPNO = :B1

call count cpu elapsed disk query current rows

------- ------ -------- ---------- ---------- ---------- ---------- ----------

Parse 1 0.00 0.00 0 0 0 0

Execute 72073 11.61 11.74 0 0 0 0

Fetch 72073 4.70 4.78 4863 437132 0 288292

------- ------ -------- ---------- ---------- ---------- ---------- ----------

total 144147 16.32 16.52 4863 437132 0 288292

Misses in library cache during parse: 0

Optimizer mode: ALL_ROWS

Parsing user id: 286 (recursive depth: 1)Rows Row Source Operation

------- ---------------------------------------------------

4 NESTED LOOPS (cr=7 pr=3 pw=0 time=9 us cost=4 size=280 card=4)

1 TABLE ACCESS BY INDEX ROWID EMP (cr=3 pr=0 pw=0 time=0 us cost=2 size=30...)

1 INDEX UNIQUE SCAN EMP_PK (cr=2 pr=0 pw=0 time=0 us cost=1 size=0 ...)

4 INDEX RANGE SCAN SYS_IOT_TOP_93124 (cr=4 pr=3 pw=0 time=3 us cost=2 ...)Both queries fetched exactly the same number of rows, but the HEAP table performed considerably more logical I/O. As the degree of concurrency on the system goes up, we would likewise expect the CPU used by the HEAP table to go up more rapidly as well, while the query possibly waits for latches into the buffer cache. Using runstats (a utility of my own design; refer to the introductory section "Setting Up Your Environment for This Book" for details), we can measure the difference in latching. On my system, I observed the following

STAT...consistent gets 723,073 438,048 −285,025 STAT...consistent gets from ca 723,073 438,048 −285,025 STAT...no work - consistent re 362,683 77,651 −285,032 STAT...session logical reads 723,131 438,086 −285,045 STAT...consistent gets from ca 359,225 72,982 −286,243 STAT...table fetch by rowid 360,385 72,085 −288,300 STAT...buffer is not pinned co 648,695 288,324 −360,371 LATCH.cache buffers chains 1,090,022 523,161 −566,861 STAT...physical read total byt 30,703,616 40,747,008 10,043,392 STAT...physical read bytes 30,703,616 40,747,008 10,043,392 STAT...cell physical IO interc 30,703,616 40,747,008 10,043,392 Run1 latches total versus runs -- difference and pct Run1 Run2 Diff Pct 1,204,593 624,834 −579,759 192.79%

where Run1 was the HEAP_ADDRESSES table and Run2 was the IOT_ADDRESSES table. As you can see, there was a dramatic and repeatable decrease in the latching taking place, mostly due to the cache buffers chains latch (the one that protects the buffer cache). The IOT in this case would provide the following benefits:

Increased buffer cache efficiency, as any given query needs to have fewer blocks in the cache.

Decreased buffer cache access, which increases scalability.

Less overall work to retrieve our data, as it is faster.

Less physical I/O per query possibly, as fewer distinct blocks are needed for any given query and a single physical I/O of the addresses most likely retrieves all of them (not just one of them, as the heap table implementation does).

The same would be true if you frequently use BETWEEN queries on a primary or unique key. Having the data stored physically sorted will increase the performance of those queries as well. For example, I maintain a table of stock quotes in my database. Every day, for hundreds of stocks, I gather together the stock ticker, date, closing price, days high, days low, volume, and other related information. The table looks like this:

ops$tkyte@ORA11GR2> create table stocks 2 ( ticker varchar2(10), 3 day date,

4 value number, 5 change number, 6 high number, 7 low number, 8 vol number, 9 primary key(ticker,day) 10 ) 11 organization index 12 / Table created.

I frequently look at one stock at a time for some range of days (e.g., computing a moving average). If I were to use a heap organized table, the probability of two rows for the stock ticker ORCL existing on the same database block are almost zero. This is because every night, I insert the records for the day for all of the stocks. This fills up at least one database block (actually, many of them). Therefore, every day I add a new ORCL record, but it is on a block different from every other ORCL record already in the table. If I query as follows

Select * from stocks where ticker = 'ORCL' and day between sysdate-100 and sysdate;

Oracle would read the index and then perform table access by rowid to get the rest of the row data. Each of the 100 rows I retrieve would be on a different database block due to the way I load the table—each would probably be a physical I/O. Now consider that I have this same data in an IOT. That same query only needs to read the relevant index blocks, and it already has all of the data. Not only is the table access removed, but all of the rows for ORCL in a given range of dates are physically stored near each other as well. Less logical I/O and less physical I/O is incurred.

Now you understand when you might want to use IOTs and how to use them. What you need to understand next is what the options are with these tables. What are the caveats? The options are very similar to the options for a heap organized table. Once again, we'll use DBMS_METADATA to show us the details. Let's start with the three basic variations of the IOT:

ops$tkyte%ORA11GR2> create table t1 2 ( x int primary key, 3 y varchar2(25), 4 z date 5 ) 6 organization index; Table created. ops$tkyte%ORA11GR2> create table t2 2 ( x int primary key, 3 y varchar2(25), 4 z date 5 ) 6 organization index 7 OVERFLOW; Table created. ops$tkyte%ORA11GR2> create table t3 2 ( x int primary key, 3 y varchar2(25),

4 z date 5 ) 6 organization index 7 overflow INCLUDING y; Table created.

We'll get into what OVERFLOW and INCLUDING do for us, but first let's look at the detailed SQL required for the first table:

ops$tkyte%ORA11GR2> select dbms_metadata.get_ddl( 'TABLE', 'T1' ) from dual;

DBMS_METADATA.GET_DDL('TABLE','T1')

-------------------------------------------------------------------------------

CREATE TABLE "OPS$TKYTE"."T1"

( "X" NUMBER(*,0),

"Y" VARCHAR2(25),

"Z" DATE,

PRIMARY KEY ("X") ENABLE

) ORGANIZATION INDEX NOCOMPRESS PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

STORAGE(INITIAL 65536 NEXT 1048576 MINEXTENTS 1 MAXEXTENTS 2147483645

PCTINCREASE 0 FREELISTS 1 FREELIST GROUPS 1 BUFFER_POOL DEFAULT FLASH_CACHE

DEFAULT CELL_FLASH_CACHE DEFAULT)

TABLESPACE "USERS"

PCTTHRESHOLD 50This table introduces a new option, PCTTHRESHOLD, which we'll look at in a moment. You might have noticed that something is missing from the preceding CREATE TABLE syntax: there is no PCTUSED clause, but there is a PCTFREE. This is because an index is a complex data structure that isn't randomly organized like a heap, so data must go where it belongs. Unlike a heap, where blocks are sometimes available for inserts, blocks are always available for new entries in an index. If the data belongs on a given block because of its values, it will go there regardless of how full or empty the block is. Additionally, PCTFREE is used only when the object is created and populated with data in an index structure. It is not used like it is in the heap organized table. PCTFREE will reserve space on a newly created index, but not for subsequent operations on it, for much the same reason as PCTUSED is not used at all. The same considerations for FREELISTs we had on heap organized tables apply in whole to IOTs.

First, let's look at the NOCOMPRESS option. This option is different in implementation from the table compression discussed above. It works for any operation on the index organized table (as opposed to the table compression which may or may not be in effect for conventional path operations). Using NOCOMPRESS, it tells Oracle to store each and every value in an index entry (i.e., do not compress). If the primary key of the object were on columns A, B, and C, every occurrence of A, B, and C would physically be stored. The converse to NOCOMPRESS is COMPRESS N, where N is an integer that represents the number of columns to compress. This removes repeating values and factors them out at the block level, so that the values of A and perhaps B that repeat over and over are no longer physically stored. Consider, for example, a table created like this:

ops$tkyte%ORA11GR2> create table iot 2 ( owner, object_type, object_name, 3 primary key(owner,object_type,object_name) 4 ) 5 organization index 6 NOCOMPRESS

7 as 8 select distinct owner, object_type, object_name from all_objects / Table created.

It you think about it, the value of OWNER is repeated many hundreds of times. Each schema (OWNER) tends to own lots of objects. Even the value pair of OWNER,OBJECT_TYPE repeats many times, so a given schema will have dozens of tables, dozens of packages, and so on. Only all three columns together do not repeat. We can have Oracle suppress these repeating values. Instead of having an index block with values shown in Table 10-1, we could use COMPRESS 2 (factor out the leading two columns) and have a block with the values shown in Table 10-2.

Table 10-1. Index Leaf Block, NOCOMPRESS

Sys,table,t1 | Sys,table,t2 | Sys,table,t3 | Sys,table,t4 |

Sys,table,t5 | Sys,table,t6 | Sys,table,t7 | Sys,table,t8 |

... | ... | ... | ... |

Sys,table,t100 | Sys,table,t101 | Sys,table,t102 | Sys,table,t103 |

That is, the values SYS and TABLE appear once, and then the third column is stored. In this fashion, we can get many more entries per index block than we could otherwise. This does not decrease concurrency—we are still operating at the row level in all cases—or functionality at all. It may use slightly more CPU horsepower, as Oracle has to do more work to put together the keys again. On the other hand, it may significantly reduce I/O and allow more data to be cached in the buffer cache, since we get more data per block. That is a pretty good tradeoff.

Let's demonstrate the savings by doing a quick test of the preceding CREATE TABLE as SELECT with NOCOMPRESS, COMPRESS 1, and COMPRESS 2. We'll start by creating our IOT without compression:

ops$tkyte%ORA11GR2> create table iot 2 ( owner, object_type, object_name, 3 constraint iot_pk primary key(owner,object_type,object_name) 4 ) 5 organization index 6 NOCOMPRESS 7 as 8 select distinct owner, object_type, object_name

9 from all_objects 10 / Table created.

Now we can measure the space used. We'll use the ANALYZE INDEX VALIDATE STRUCTURE command for this. This command populates a dynamic performance view named INDEX_STATS, which will contain only one row at most with the information from the last execution of that ANALYZE command:

ops$tkyte%ORA11GR2> analyze index iot_pk validate structure;

Index analyzed.

ops$tkyte%ORA11GR2> select lf_blks, br_blks, used_space,

2 opt_cmpr_count, opt_cmpr_pctsave

3 from index_stats;

LF_BLKS BR_BLKS USED_SPACE OPT_CMPR_COUNT OPT_CMPR_PCTSAVE

---------- ---------- ---------- -------------- ----------------

429 3 3081451 2 33This shows our index is currently using 429 leaf blocks (where our data is) and 3 branch blocks (blocks Oracle uses to navigate the index structure) to find the leaf blocks. The space used is about 3MB (3,081,451 bytes). The other two oddly named columns are trying to tell us something. The OPT_CMPR_COUNT (optimum compression count) column is trying to say, "If you made this index COMPRESS 2, you would achieve the best compression." The OPT_CMPR_PCTSAVE (optimum compression percentage saved) is telling us if we did the COMPRESS 2, we would save about one-third of the storage and the index would consume just two-thirds the disk space it is now.

Note

The next chapter, "Indexes," covers the index structure in more detail.

To test that theory, we'll rebuild the IOT with COMPRESS 1 first:

ops$tkyte%ORA11GR2> alter table iot move compress 1;

Table altered.

ops$tkyte%ORA11GR2> analyze index iot_pk validate structure;

Index analyzed.

ops$tkyte%ORA11GR2> select lf_blks, br_blks, used_space,

2 opt_cmpr_count, opt_cmpr_pctsave

3 from index_stats;

LF_BLKS BR_BLKS USED_SPACE OPT_CMPR_COUNT OPT_CMPR_PCTSAVE

---------- ---------- ---------- -------------- ----------------

372 3 2666971 2 22As you can see, the index is in fact smaller: about 2.6MB, with far fewer leaf. But now it is saying, "You can still get another 22% off," as we didn't chop off that much yet. Let's rebuild with COMPRESS 2:

ops$tkyte%ORA11GR2> alter table iot move compress 2;

Table altered.

ops$tkyte%ORA11GR2> analyze index iot_pk validate structure;

Index analyzed.

ops$tkyte%ORA11GR2> select lf_blks, br_blks, used_space,

2 opt_cmpr_count, opt_cmpr_pctsave

3 from index_stats;

LF_BLKS BR_BLKS USED_SPACE OPT_CMPR_COUNT OPT_CMPR_PCTSAVE

---------- ---------- ---------- -------------- ----------------

286 3 2052864 2 0Now we are significantly reduced in size, both by the number of leaf blocks as well as overall used space, by about 2MB. If we go back to the original numbers

ops$tkyte%ORA11GR2> select (2/3)*3081451 from dual; (2/3)*3081451 ------------- 2054300.67

we can see the OPT_CMPR_PCTSAVE was pretty much dead-on accurate. The preceding example points out an interesting fact with IOTs. They are tables, but only in name. Their segment is truly an index segment.

I am going to defer discussion of the PCTTHRESHOLD option at this point, as it is related to the next two options for IOTs: OVERFLOW and INCLUDING. If we look at the full SQL for the next two sets of tables, T2 and T3, we see the following (I've used a DBMS_METADATA routine to suppress the storage clauses, as they are not relevant to the example):

ops$tkyte%ORA11GR2> begin

2 dbms_metadata.set_transform_param

3 ( DBMS_METADATA.SESSION_TRANSFORM, 'STORAGE', false );

4 end;

5 /

PL/SQL procedure successfully completed.

ops$tkyte%ORA11GR2> select dbms_metadata.get_ddl( 'TABLE', 'T2' ) from dual;

DBMS_METADATA.GET_DDL('TABLE','T2')

-------------------------------------------------------------------------------

CREATE TABLE "OPS$TKYTE"."T2"

( "X" NUMBER(*,0),

"Y" VARCHAR2(25),

"Z" DATE,

PRIMARY KEY ("X") ENABLE

) ORGANIZATION INDEX NOCOMPRESS PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

TABLESPACE "USERS"PCTTHRESHOLD 50 OVERFLOW

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255 LOGGING

TABLESPACE "USERS"

ops$tkyte%ORA11GR2> select dbms_metadata.get_ddl( 'TABLE', 'T3' ) from dual;

DBMS_METADATA.GET_DDL('TABLE','T3')

-------------------------------------------------------------------------------

CREATE TABLE "OPS$TKYTE"."T3"

( "X" NUMBER(*,0),

"Y" VARCHAR2(25),

"Z" DATE,

PRIMARY KEY ("X") ENABLE

) ORGANIZATION INDEX NOCOMPRESS PCTFREE 10 INITRANS 2 MAXTRANS 255 LOGGING

TABLESPACE "USERS"

PCTTHRESHOLD 50 INCLUDING "Y" OVERFLOW

PCTFREE 10 PCTUSED 40 INITRANS 1 MAXTRANS 255 LOGGING

TABLESPACE "USERS"So, now we have PCTTHRESHOLD, OVERFLOW, and INCLUDING left to discuss. These three items are intertwined, and their goal is to make the index leaf blocks (the blocks that hold the actual index data) able to efficiently store data. An index is typically on a subset of columns. You will generally find many more times the number of row entries on an index block than you would on a heap table block. An index counts on being able to get many rows per block. Oracle would spend large amounts of time maintaining an index otherwise, as each INSERT or UPDATE would probably cause an index block to split in order to accommodate the new data.

The OVERFLOW clause allows you to set up another segment (making an IOT a multisegment object, much like having a CLOB column does) where the row data for the IOT can overflow onto when it gets too large.

Note

The columns making up the primary key cannot overflow—they must be placed on the leaf blocks directly.

Notice that an OVERFLOW reintroduces the PCTUSED clause to an IOT when using MSSM. PCTFREE and PCTUSED have the same meanings for an OVERFLOW segment as they did for a heap table. The conditions for using an overflow segment can be specified in one of two ways:

PCTTHRESHOLD: When the amount of data in the row exceeds that percentage of the block, the trailing columns of that row will be stored in the overflow. So, ifPCTTHRESHOLDwas 10 percent and your block size was 8KB, any row that was greater than about 800 bytes in length would have part of it stored elsewhere, off the index block.INCLUDING: All of the columns in the row up to and including the one specified in theINCLUDINGclause are stored on the index block, and the remaining columns are stored in the overflow.

Given the following table with a 2KB block size:

ops$tkyte@ORA11GR2> create table iot 2 ( x int, 3 y date, 4 z varchar2(2000), 5 constraint iot_pk primary key (x) 6 ) 7 organization index 8 pctthreshold 10 9 overflow 10 / Table created.

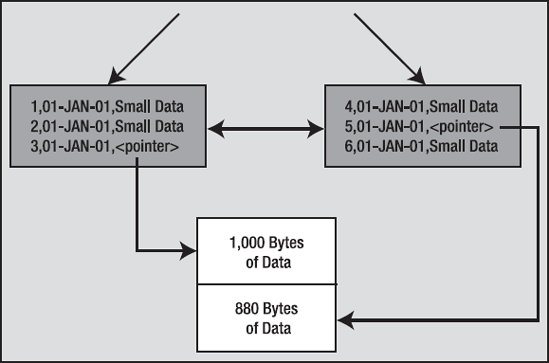

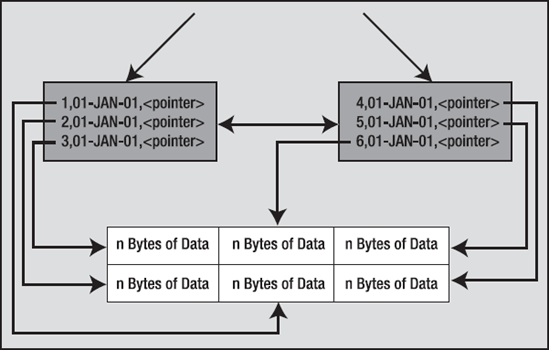

Graphically, it could look like Figure 10-6.