Physical Model Implementation Case Study

The whole difference between construction and creation is exactly this: that a thing constructed can only be loved after it is constructed; but a thing created is loved before it exists.

—Charles Dickens, writer and social critic, author of A Christmas Carol

In some respects, the hardest part of the database project is when you actually start to create code. If you really take the time to do the design well, you begin to get attached to the design, largely because you have created something that has not existed before. Once the normalization task is complete, you have pretty much everything ready for implementation, but tasks still need to be performed in the process for completing the transformation from the logical model to the physical, relational model. We are now ready for the finishing touches that will turn the designed model into something that users (or at least developers) can start using. At a minimum, between normalization and actual implementation, take plenty of time to review the model to make sure you are completely happy with it.

In this chapter, we’ll take the normalized model and convert it into the final blueprint for the database implementation. Even starting from the same logical model, different people tasked with implementing the relational database will take a subtly (or even dramatically) different approach to the process. The final physical design will always be, to some extent, a reflection of the person/organization who designed it, although usually each of the reasonable solutions “should” resemble one another at its core.

The model we have discussed so far in the book is pretty much implementation agnostic and unaffected by whether the final implementation would be on Microsoft SQL Server, Microsoft Access, Oracle, Sybase, or any other relational database management system. (You should expect a lot of changes if you end up implementing with a nonrelational engine, naturally.) However, during this stage, in terms of the naming conventions that are defined, the datatypes chosen, and so on, the design is geared specifically for implementation on SQL Server 2016 (or earlier). Each of the relational engines has its own intricacies and quirks, so it is helpful to understand how to implement on the system you are tasked with. In this book, we will stick with SQL Server 2016, noting where you would need to adjust if you are using one of the recent earlier versions, such as SQL Server 2012 or SQL Server 2014.

We will go through the following steps to transform the database from a blueprint into an actual functioning database:

- Choosing a physical model for your tables: In the section that describes this step, I will briefly introduce the choice of engine models that are available to your implementation.

- Choosing names: We’ll look at naming concerns for tables and columns. The biggest thing here is making sure to have a standard and to follow it.

- Choosing key implementation: Throughout the earlier bits of the book, we’ve made several types of key choices. In the section covering this step, we will go ahead and finalize the implementation keys for the model, discussing the merits of the different implementation methods.

- Determining domain implementation: We’ll cover the basics of choosing datatypes, nullability, and simple computed columns. Another decision will be choosing between using a domain table or a column with a constraint for types of values where you want to limit column values to a given set.

- Setting up schemas: The section corresponding to this step provides some basic guidance in creating and naming your schemas. Schemas allow you to set up groups of objects for usage and security.

- Adding implementation columns: We’ll consider columns that are common to almost every database that are not part of the logical design.

- Using Data Definition Language (DDL) to create the database: In this step’s section, we will go through the common DDL that is needed to build most every database you will encounter.

- Baseline testing your creation: Because it’s a great practice to load some data and test your complex constraints, the section for this step offers guidance on how you should approach and implement testing.

- Deploying your database: As you complete the DDL and at least some of the testing, you need to create the database for users to use for more than just unit tests. The section covering this step offers a short introduction to the process.

Finally, we’ll work on a complete (if really small) database example in this chapter, rather than continue with any of the examples from previous chapters. The example database is tailored to keeping the chapter simple and to avoiding difficult design decisions, which we will cover in the next few chapters.

Note For this and subsequent chapters, I’ll assume that you have SQL Server 2016 installed on your machine. For the purposes of this book, I recommend you use the Developer edition, which is (as of printing time) available for free as a part of the Visual Studio Dev Essentials from www.visualstudio.com/products/visual-studio-dev-essentials. The Developer Edition gives you all of the functionality of the Enterprise edition of SQL Server for developing software, which is considerable. (The Enterprise Evaluation Edition will also work just fine if you don’t have any money to spend. Bear in mind that licensing changes are not uncommon, so your mileage may vary. In any case, there should be a version of SQL Server available to you to work through the examples.)

Another possibility is Azure SQL Database (https://azure.microsoft.com/en-us/services/sql-database/), which I will also mention from time to time. Features are added to Azure faster than a book can keep up with, and Azure SQL Database will get many features before the box product that I will focus on. I will provide scripts for this chapter that will run on Azure SQL Database with the downloads for this book. Most other examples will run on Azure SQL Database as well.

The main example in this chapter is based on a simple messaging database that a hypothetical company is building for its hypothetical upcoming conference. Any similarities to other systems are purely coincidental, and the model is specifically created not to be overly functional but to be very, very small. The following are the simple requirements for the database:

- Messages can be 200 characters of Unicode text. Messages can be sent privately to one user, to everyone, or both. The user cannot send a message with the exact same text more than once per hour (to cut down on mistakes where users click Send too often).

- Users will be identified by a handle that must be 5–20 characters, and they will use their conference attendee numbers and the key value on their badges to access the system. To keep up with their own group of people, apart from other users, users can connect themselves to other users. Connections are one-way, allowing users to see all of the speakers’ information without the reverse being true.

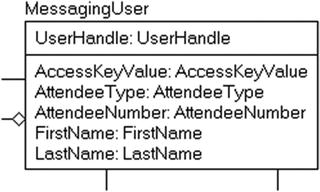

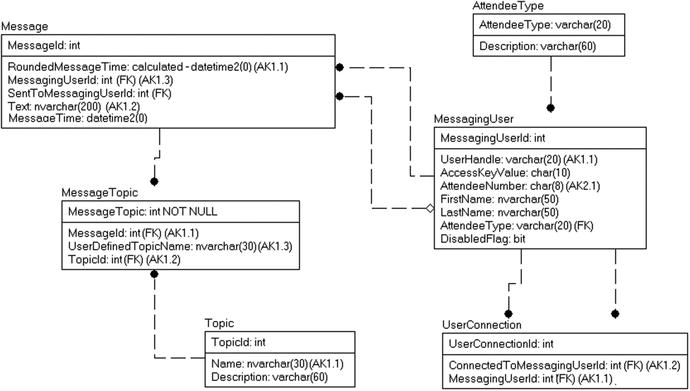

Figure 6-1 shows the logical database design for this application, on which I’ll base the physical design.

Figure 6-1. Simple logical model of conferencing message database

The following is a brief documentation of the tables and columns in the model. To keep things simple, I will expound on the needs as we get to each need individually.

- User: Represents a user of the messaging system, preloaded from another system with attendee information.

  - UserHandle: The name the user wants to be known as. Initially preloaded with a value based on the person’s first and last name, plus an integer value, changeable by the user.

  - AccessKey: A password-like value given to the users on their badges to gain access.

  - AttendeeNumber: The number that the attendees are given to identify themselves, printed on the front of their badges.

  - TypeOfAttendee: Used to give the user special privileges, such as access to speaker materials, vendor areas, and so on.

  - FirstName, LastName: Name of the user printed on the badge for people to see.

- UserConnection: Represents the connection of one user to another in order to filter results to a given set of users.

  - UserHandle: Handle of the user who is going to connect to another user.

  - ConnectedToUser: Handle of the user who is being connected to.

- Message: Represents a single message in the system.

  - UserHandle: Handle of the user sending the message.

  - Text: The text of the message being sent.

  - RoundedMessageTime: The time of the message, rounded to the hour.

  - SentToUserHandle: The handle of the user that is being sent a message.

  - MessageTime: The time the message is sent, at a grain of one second.

- MessageTopic: Relates a message to a topic.

  - UserHandle: User handle from the user who sent the message.

  - RoundedMessageTime: The time of the message, rounded to the hour.

  - TopicName: The name of the topic being sent.

  - UserDefinedTopicName: Allows the users to choose the UserDefined topic styles and set their own topics.

- Topic: Predefined topics for messages.

  - TopicName: The name of the topic.

  - Description: Description of the purpose and utilization of the topics.
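To make the RoundedMessageTime column a bit more concrete, here is a minimal sketch of deriving it from MessageTime. It assumes that “rounded to the hour” means truncating to the start of the hour, and the variable name and sample value are purely illustrative.

```sql
-- Hypothetical sample value; MessageTime is kept at a grain of one second.
DECLARE @MessageTime datetime2(0) = '2016-05-10 14:37:52';

-- Strip the time portion, then add back only the hour part.
SELECT DATEADD(HOUR, DATEPART(HOUR, @MessageTime),
               CAST(CAST(@MessageTime AS date) AS datetime2(0)))
           AS RoundedMessageTime;  -- 2016-05-10 14:00:00
```

Two messages with the same sender and text in the same hour would then share a RoundedMessageTime value, which is what lets a uniqueness rule catch the duplicate.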

Choosing a Physical Model for Your Tables

Prior to SQL Server 2014 there was simply one relational database engine housed in SQL Server. Every table worked the same way (and we liked it, consarn it!). In 2014, a second “in-memory” “OLTP” engine (also referred to as “memory optimized”) was introduced, which works very differently than the original engine internally but, for SQL programmers, works in basically the same, declarative manner. In this section I will introduce some of the differences at a high level, and will cover the differences in more detail in later chapters. For this chapter, I will also provide a script that will build the tables and code as much as possible using the in-memory engine, to show some of the differences.

Briefly, there are two engines that you can choose for your objects:

- On-Disk: The classic model that has been incrementally improved since SQL Server 7.0 was rewritten from the ground up. Data is at rest on disk, but when the query processor uses the data, it is brought into memory first, into pages that mimic the disk structures. Changes are written to memory and the transaction log and then persisted to disk asynchronously. Concurrency/isolation controls are implemented by blocking resources from affecting another connection’s resources by using locks (to signal to other processes that you are using a resource, like a table, a row, etc.) and latches (similar to locks, but mostly used for physical resources).

- In-Memory OLTP: (Many references will simply be “in-memory” for the rest of the book.) Data is always in RAM, in structures that are natively compiled at create time (if you do some digging into the SQL Server directories, you can find the C code). Changes are written to memory and the transaction log, and asynchronously written to a delta file that is used to load memory if you restart the server (there is an option to make the table nondurable; that is, if the server restarts, the data goes away). Concurrency/isolation controls are implemented using versioning (MVCC, multiversion concurrency control), so instead of locking a resource, most concurrency collisions are signaled by an isolation level failure rather than by blocking. The 2014 version was very limited, with just one unique constraint and no foreign keys or check constraints. The 2016 version is much improved, with support for most needed constraint types.

What is awesome about the engine choice is that you make the setting at a table level, so you can have tables in each model, and those tables can interact in joins, as well as interpreted T-SQL (as opposed to natively compiled T-SQL objects, which will be noted later in this section). The in-memory engine is purpose-built for much higher performance scenarios than the on-disk engine can handle, because it does not use blocking operations for concurrency control. It has two major issues for most common immediate usage:

- All of your data in this model must reside in RAM, and servers with hundreds of gigabytes or terabytes of RAM are still quite expensive.

- MVCC is a very different concurrency model than many SQL Server applications are built for.

The differences, particularly the latter one, mean that in-memory is not a simple “go faster” button.

Since objects using both engines can reside in the same database, you can take advantage of both as it makes sense. For example, you could have a table of products that uses the on-disk model because it is a very large table with very little write contention, but the table of orders and their line items may need to support tens of thousands of write operations per second. This is just one simple scenario where it may be useful for you to use the in-memory model.
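As a rough sketch of that scenario, the following shows one table in each engine and an interpreted query that joins them. The table and column names are hypothetical, and the script assumes the database already has a MEMORY_OPTIMIZED_DATA filegroup with a container added to it.

```sql
-- On-disk table (the default): large, mostly read, little write contention.
CREATE TABLE dbo.Product
(
    ProductId   int          NOT NULL CONSTRAINT PKProduct PRIMARY KEY,
    ProductName nvarchar(60) NOT NULL
);

-- Memory-optimized table for the write-heavy data. Assumes a
-- MEMORY_OPTIMIZED_DATA filegroup already exists in the database.
CREATE TABLE dbo.SalesOrder
(
    SalesOrderId int          NOT NULL
        CONSTRAINT PKSalesOrder PRIMARY KEY NONCLUSTERED,
    ProductId    int          NOT NULL,
    OrderTime    datetime2(0) NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);

-- Interpreted T-SQL can join tables from both engines.
SELECT so.SalesOrderId, p.ProductName
FROM   dbo.SalesOrder AS so
       JOIN dbo.Product AS p
           ON p.ProductId = so.ProductId;
```

Changing DURABILITY to SCHEMA_ONLY would make the in-memory table nondurable, so its rows would not survive a server restart.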

In addition to tables being in-memory, there are stored procedures, functions, and triggers that are natively compiled at create time (written using T-SQL DDL and compiled to native code) that can reference the in-memory objects (but not on-disk ones). They are limited in programming language surface, but can certainly be worth it for many scenarios. The implementation in SQL Server 2016 is less limited than that of 2014, but both support far less syntax than normal interpreted T-SQL objects.
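For a feel of what a natively compiled object looks like, here is a minimal sketch written against SQL Server 2016 syntax. The table, procedure, and bucket count are hypothetical choices for illustration, and as before the database is assumed to have a MEMORY_OPTIMIZED_DATA filegroup.

```sql
-- A nondurable, memory-optimized table (hypothetical session-state store).
CREATE TABLE dbo.SessionState
(
    SessionId  int            NOT NULL
        CONSTRAINT PKSessionState PRIMARY KEY NONCLUSTERED HASH
            WITH (BUCKET_COUNT = 100000),
    StateValue nvarchar(1000) NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY);
GO

-- Natively compiled procedure: compiled to native code at create time,
-- and able to reference only memory-optimized tables.
CREATE PROCEDURE dbo.SessionState_Insert
    @SessionId  int,
    @StateValue nvarchar(1000)
WITH NATIVE_COMPILATION, SCHEMABINDING
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT,
                   LANGUAGE = N'us_english')
    INSERT INTO dbo.SessionState (SessionId, StateValue)
    VALUES (@SessionId, @StateValue);
END;
GO
```

The ATOMIC block with its isolation level and language settings is required for natively compiled procedures, which is one visible sign of the reduced language surface.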

There are several scenarios in which you might apply the in-memory model. The Microsoft In-Memory OLTP web page (msdn.microsoft.com/en-us/library/dn133186.aspx) recommends it for the following:

- High data read or insertion rate: Because there is no structure contention, the In-Memory model can handle far more concurrent operations than the On-Disk model.

- Intensive processing: If you are doing a lot of business logic that can be compiled into native code, it will perform far better than the interpreted version.

- Low latency: Since all data is contained in RAM and the code can be natively compiled, the amount of execution time can be greatly reduced, particularly when milliseconds (or even microseconds) count for an operation.

- Scenarios with high-scale load: Such as session state management, which has very little contention, and may not need to persist for long periods of time (particularly not if the SQL Server machine is restarted).

So when you are plotting out your physical database design, it is useful to consider which model is needed for your design. In most cases, the on-disk model will be the one that you will want to use (and if you are truly unsure, start with on-disk and adjust from there). It has supported some extremely large data sets in a very efficient manner and will handle an amazing amount of throughput when you design your database properly. However, as memory becomes cheaper, and the engine approaches coding parity with the interpreted model we have had for 20 years, the in-memory model may become the default model. For now, databases that need very high throughput (for example, ticket brokers when the Force awakened) are going to be candidates for employing this model.

If you start out with the on-disk model for your tables, and during testing determine that there are hot spots in your design that could use in-memory, SQL Server provides tools to help you decide if the in-memory engine is right for your situation, which are described in the page named “Determining if a Table or Stored Procedure Should Be Ported to In-Memory OLTP” (https://msdn.microsoft.com/en-us/library/dn205133.aspx).

The bottom line at this early part of the implementation chapters of the book is that you should be aware that there are two query processing models available to you as a data architect, and you should understand the basic differences between them, so that when those differences are explained in more detail throughout the rest of the book, you already have the basics.

The examples in this chapter will be done solely in the on-disk model, because it would be the most typical place to start, considering the requirements will be for a small user community. If the user community were expanded greatly, the in-memory model would likely be useful, at least for the highest-contention areas such as creating new messages.

Note Throughout the book there will be small asides to how things may be affected if you were to employ in-memory tables, but the subject is not a major one throughout the book. The topic is covered in greater depth in Chapter 10, “Index Structures and Application,” because the internals of how these tables are indexed is important to understand; Chapter 11, “Matters of Concurrency,” because concurrency is the biggest difference; and somewhat again in Chapter 13, “Architecting Your System,” as we will discuss a bit more about when to choose the engine, and coding using it.

Choosing Names

The target database for our model is (obviously) SQL Server, so our table and column naming conventions must adhere to the rules imposed by this database system, as well as being consistent and logical. In this section, I’ll briefly cover some of the different concerns when naming tables and columns. All of the system constraints on names have been the same for the past few versions of SQL Server, going back to SQL Server 7.0.

Names of columns, tables, procedures, and so on are referred to technically as identifiers. Identifiers in SQL Server are stored in a system datatype of sysname. It is defined as a 128-character (or less, of course) string using Unicode characters. SQL Server’s rules for identifiers consist of two distinct naming methods:

- Regular identifiers: Identifiers that need no delimiter, but have a set of rules that govern what can and cannot be a name. This is the preferred method, with the following rules:

  - The first character must be a letter as defined by Unicode Standard 3.2 (generally speaking, Roman letters A to Z, uppercase and lowercase, as well as letters from other languages) or the underscore character (_). You can find the Unicode Standard at www.unicode.org.

  - Subsequent characters can be Unicode letters, numbers, the “at” sign (@), the dollar sign ($), the number sign (#), or the underscore character (_).

  - The name must not be a SQL Server reserved word. You can find a large list of reserved words in SQL Server 2016 Books Online, in the “Reserved Keywords” topic. Some of these are tough, like user, transaction, and table, as they do often come up in the real world. (Note that our original model includes the name User, which we will have to correct.) Note that some words are considered keywords but not reserved (such as description) and may be used as identifiers. (Some, such as int, would make terrible identifiers!)

  - The name cannot contain spaces.

- Delimited identifiers: These should have either square brackets ([ ]) or double quotes (") around the name; double quotes are allowed only when the SET QUOTED_IDENTIFIER option is set to ON. By placing delimiters around an object’s name, you can use any string as the name. For example, [Table Name], [3232 fjfa*&(&^(], or [Drop Database HR;] would be legal (but really annoying and dangerous) names. Names requiring delimiters are generally a bad idea when creating new tables and should be avoided if possible, because they make coding more difficult. However, they can be necessary for interacting with data from other environments. Delimiters should generally be used when scripting objects, because a name like [Drop Database HR;] can cause “problems” if you don’t.

If you need to put a closing bracket (]) in the name, you have to include two closing brackets (]]), and likewise two double quote characters for a double quote in a quote-delimited name, just like when you need to include a single quote within a string. So, the name fred]olicious would have to be delimited as [fred]]olicious]. However, if you find yourself needing to include special characters of any sort in your names, take a good long moment to consider whether you really do need this (or if you need to consider alternative employment opportunities). If you determine after some thinking that you do, please ask someone else for help naming your objects, or e-mail me at [email protected]. This is a pretty horrible thing to do to your fellow human and will make working with your objects very cumbersome. Even just including space characters is a bad enough practice that you and your users will regret it for years. Note too that [name] and [name ] are treated as different names in some contexts (see the embedded space), as will [ name].
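The delimiting and doubling rules can be seen with the built-in QUOTENAME function, which applies them for you. This is a small sketch only; the table and column names are deliberately awful, illustrative values, and the table is dropped again at the end.

```sql
SET QUOTED_IDENTIFIER ON;

-- QUOTENAME adds the delimiters and doubles any embedded closing bracket.
SELECT QUOTENAME(N'fred]olicious')   AS BracketDelimited,  -- [fred]]olicious]
       QUOTENAME(N'Table Name', '"') AS QuoteDelimited;    -- "Table Name"

-- The same name used directly, with bracket and double-quote delimiters.
CREATE TABLE dbo.[fred]]olicious] ([Column Name] int NOT NULL);
SELECT "Column Name" FROM dbo."fred]olicious";
DROP TABLE dbo.[fred]]olicious];
```

When you generate DDL from catalog data, wrapping each name in QUOTENAME is a reasonable habit, because it keeps an oddly named object from being read as code.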

Note Using policy-based management, you can create naming standard checks for whenever a new object is created. Policy-based management is a management tool rather than a design one, though it could pay to create naming standard checks to make sure you don’t accidentally create objects with names you won’t accept. In general, I find doing things that way too restrictive, because there are always exceptions to the rules and automated policy enforcement only works with a dictator’s hand. (Have you met Darth Vader, development manager? He is nice!)

While the rules for creating an object name are pretty straightforward, the more important question is, “What kind of names should be chosen?” The answer I generally give is: “Whatever you feel is best, as long as others can read it and it follows the local naming standards.” This might sound like a cop-out, but there are more naming standards than there are data architects. (On the day this paragraph was first written, I actually had two independent discussions about how to name several objects and neither person wanted to follow the same standard.) The standard I generally go with is the standard that was used in the logical model, that being Pascal-cased names, little if any abbreviation, and as descriptive as necessary. With space for 128 characters, there’s little reason to do much abbreviating. A Pascal-cased name is of the form PartPartPart, where words are concatenated with nothing separating them. Camel-cased names do not start with a capital letter, such as partPartPart.

Caution Because most companies have existing systems, it’s a must to know the shop standard for naming objects so that it matches existing systems and so that new developers on your project will be more likely to understand your database and get up to speed more quickly. The key thing to make sure of is that you keep your full logical names intact for documentation purposes.

As an example, let’s consider the name of the UserConnection table we will be building later in this chapter. The following list shows several different ways to build the name of this object:

- user_connection (or sometimes, by some awful mandate, an all-caps version USER_CONNECTION): Use underscores to separate values. Most programmers aren’t big friends of underscores, because they’re cumbersome to type until you get used to them. Plus, they have a COBOLesque quality that rarely pleases anyone.

- [user connection] or "user connection": This name is delimited by brackets or quotes. Being forced to use delimiters is annoying, and many other languages use double quotes to denote strings. (In SQL, you always use single quotes for strings.) On the other hand, the brackets [ and ] don’t denote strings, although they are a Microsoft-only convention that will not port well if you need to do any kind of cross-platform programming.

- UserConnection or userConnection: Pascal case or camelCase (respectively), using mixed case to delimit between words. I’ll use Pascal style in most examples, because it’s the style I like. (Hey, it’s my book. You can choose whatever style you want!)

- usrCnnct or usCnct: The abbreviated forms are problematic, because you must be careful always to abbreviate the same word in the same way in all your databases. You must maintain a dictionary of abbreviations, or you’ll get multiple abbreviations for the same word—for example, getting “description” as “desc,” “descr,” “descrip,” and/or “description.” Some applications that access your data may have limitations like 30 characters that make abbreviations necessary, so understand the needs.

One specific place where abbreviations do make sense is when the abbreviation is very standard in the organization. As an example, if you were writing a purchasing system and you were naming a purchase-order table, you could name the object PO, because this is widely understood. Often, users will desire this, even if some abbreviations don’t seem that obvious. Just be 100% certain, so you don’t end up with PO also representing disgruntled customers along with purchase orders.

Choosing names for objects is ultimately a personal choice but should never be made arbitrarily and should be based first on existing corporate standards, then existing software, and finally legibility and readability. The most important thing to try to achieve is internal consistency. Your goal as an architect is to ensure that your users can use your objects easily and with as little thinking about structure as possible. Even most pretty bad naming conventions will be better than having ten different good ones being implemented by warring architect/developer factions.

A particularly hideous practice that is somewhat common with people who have grown up working with procedural languages (particularly interpreted languages) is to include something in the name to indicate that a table is a table, such as tblSchool or tableBuilding. Please don’t do this (really…I beg you). It’s clear by the context what is a table. This practice, just like the other Hungarian-style notations, makes good sense in a procedural programming language where the type of object isn’t always clear just from context, but this practice is never needed with SQL tables. Note that this dislike of prefixes is just for names that are used by users. We will quietly establish prefixes and naming patterns for non-user-addressable objects as the book continues.
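If you inherit a database and want to see how widespread the practice is, a quick look at the catalog views will tell you. This is only a rough sketch: the two prefixes are the examples from the text, and the second pattern will also flag legitimate names that simply start with the word “table.”

```sql
-- List tables whose names start with a type-style prefix.
SELECT SCHEMA_NAME(t.schema_id) AS SchemaName,
       t.name                   AS TableName
FROM   sys.tables AS t
WHERE  t.name LIKE N'tbl%'
   OR  t.name LIKE N'table%'
ORDER BY SchemaName, TableName;
```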

Note There is something to be said about the quality of corporate standards as well. If you have an archaic standard, like one that was based on the mainframe team’s standard back in the 19th century, you really need to consider trying to change the standards when creating new databases so you don’t end up with names like HWWG01_TAB_USR_CONCT_T just because the shop standards say so (and yes, I do know when the 19th century was).

The naming rules for columns are the same as for tables as far as SQL Server is concerned. As for how to choose a name for a column—again, it’s one of those tasks for the individual architect, based on the same sorts of criteria as before (shop standards, best usage, and so on). This book follows this set of guidelines:

- Other than the primary key, my feeling is that the table name should rarely be included in the column name. For example, in an entity named Person, it isn’t necessary to have columns called PersonName or PersonSocialSecurityNumber. Most columns should not be prefixed with the table name other than with the following two exceptions:

  - A surrogate key such as PersonId. This reduces the need for role naming (modifying names of attributes to adjust meaning, especially used in cases where multiple migrated foreign keys exist).

  - Columns that are naturally named with the entity name in them, such as PersonNumber, PurchaseOrderNumber, or something that’s common in the language of the client and used as a domain-specific term.

- The name should be as descriptive as possible. Use few abbreviations in names, except for the aforementioned common abbreviations, as well as generally pronounced abbreviations where a value is read naturally as the abbreviation. For example, I always use id instead of identifier, first because it’s a common abbreviation that’s known to most people, and second because the surrogate key of the Widget table is naturally pronounced Widget-Eye-Dee, not Widget-Identifier.

- Follow a common pattern if possible. For example, a standard that has been attributed as coming from ISO 11179 is to have names constructed in the pattern (RoleName + Attribute + Classword + Scale) where each part is:

  - RoleName [optional]: When you need to explain the purpose of the attribute in the context of the table.

  - Attribute [optional]: The primary purpose of the column being named. If omitted, the name refers to the entity purpose directly.

  - Classword: A general suffix that identifies the usage of the column, in non-implementation-specific terms. It should not be the same thing as the datatype. For example, Id is a surrogate key, not IdInt or IdGUID. (If you need to expand or change types but not purpose, it should not affect the name.)

  - Scale [optional]: Tells the user what the scale of the data is when it is not easily discerned, like minutes or seconds; or when the typical currency is dollars and the column represents euros.

Some example names might be:

- StoreId is the identifier for the store.

- UserName is a textual string, but whether or not it is a varchar(30) or nvarchar(128) is immaterial.

- EndDate is the date when something ends and does not include a time part.

- SaveTime is the point in time when the row was saved.

- PledgeAmount is an amount of money (using a decimal(12,2), or money, or any sort of types).

- PledgeAmountEuros is an amount of money in euros.

- DistributionDescription is a textual string that is used to describe how funds are distributed.

- TickerCode is a short textual string used to identify a ticker row.

- OptInFlag is a two-value column (possibly three including NULL) that indicates a status, such as in this case if the person has opted in for some particular reason.

Many possible classwords could be used, and this book is not about giving you all the standards to follow at that level. Too many variances from organization to organization make that too difficult. The most important thing is that if you can establish a standard, make it work for your organization and follow it.

Note Just as with tables, avoid prefixes like col to denote a column as it is a really horrible practice.

I’ll use the same naming conventions for the implementation model as I did for the logical model: Pascal-cased names with a few abbreviations (mostly in the classwords, like “id” for “identifier”). Later in the book I will use a Hungarian-style prefix for objects other than tables, such as constraints, and for coded objects, such as procedures. This is mostly to keep the names unique and avoid clashes with the table names, plus it is easier to read in a list that contains multiple types of objects (the tables are the objects with no prefixes). Tables and columns are commonly used directly by users. They write queries and build reports directly using database object names and shouldn’t need to change the displayed name of every column and table.

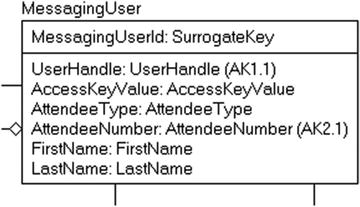

In our demonstration model, the first thing we will do is to rename the User table to MessagingUser because “User” is a SQL Server reserved keyword. While User is a more natural name than MessagingUser, it is one of the trade-offs we have to make because of the legal values of names. In rare cases, when a suitable name can’t be created, I may use a bracketed name, but even if it took me four hours to redraw graphics and undo my original choice of User as a table name, I don’t want to give you that as a typical practice. If you find you have used a reserved keyword in your model (and you are not writing a chapter in a book that is 80+ pages long about it), it is usually a very minor change.

In the model snippet in Figure 6-2, I have made that change.

Figure 6-2. Table User has been changed to MessagingUser
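As a minimal sketch of the trade-off (the single column shown is a placeholder, not the chapter's final table design), the following T-SQL shows why the rename is worth the effort: an undelimited table named User does not parse, a bracketed name works but must be delimited in every statement that touches it, and the renamed table needs no delimiters at all.

```sql
-- Does not parse, because USER is a reserved keyword in T-SQL:
-- CREATE TABLE User (UserHandle varchar(20) NOT NULL);

-- Works, but every later reference must also be bracketed.
CREATE TABLE [User] (UserHandle varchar(20) NOT NULL);
SELECT UserHandle FROM [User];
DROP TABLE [User];

-- The renamed table can be referenced without delimiters.
CREATE TABLE MessagingUser (UserHandle varchar(20) NOT NULL);
SELECT UserHandle FROM MessagingUser;
DROP TABLE MessagingUser;
```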

The next change we will make will be to a few of the columns in this table. We will start off with the TypeOfAttendee column. The standard we discussed was to use a classword at the end of the column name. In this case, Type will make an acceptable classword, as when you see AttendeeType, it will be clear what it means. The implementation will be a value of up to 20 characters.

The second change will be to the AccessKey column. Key itself would be acceptable as a classword, but it will give the implication that the value is a key in the database (a standard I have used in my data warehousing dimensional database designs). So suffixing Value to the name, giving AccessKeyValue, will make the name clearer and more distinctive. Figure 6-3 reflects the change in name.

Figure 6-3. MessagingUser table after change to AccessKey column name

The next step in the process is to choose how to implement the keys for the table. At this point, the model has one key identified for each table: the primary key. In this section, we will look at the issues surrounding key choice and, in the end, will set the keys for the demonstration model. We will look at choices for implementing primary keys and then note the choices for creating alternate keys as needed.

Primary Key

Choosing the style of implementation for primary keys is an important choice. Depending on the style you go with, the look and feel of the rest of the database project will be affected, because whatever method you go with, the primary key value will be migrated to other tables as a reference to the particular row. Choosing a primary key style is one of the most argued about topics on the forums and occasionally over dinner after a SQL Saturday event. In this book, I’ll be reasonably agnostic about the whole thing, and I’ll present several methods for choosing the implemented primary key throughout the book. In this chapter, I will use a very specific method for all of the tables, of course.

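Presumably, during the logical phase, you’ve identified the different ways to uniquely identify a row. Hence, there should be several choices for the primary key, including the following:

- Using an existing column (or set of columns)

- Deriving a new surrogate column to represent the row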

Each of these choices has pros and cons for the implementation. I’ll look at them in the following sections.

Basing a Primary Key on Existing Columns

In many cases, a table will have an obvious, easy-to-use primary key. This is especially true when talking about independent entities. For example, take a table such as product. It would often have a productNumber defined. A person usually has some sort of identifier, either government or company issued. (For example, my company has an employeeNumber that I have to put on all documents, particularly when the company needs to write me a check.)

The primary keys for dependent tables can often take the primary key of the independent tables, add one or more attributes, and (presto!) you have a primary key.

For example, I used to have a Ford SVT Focus, made by the Ford Motor Company, so to identify this particular model, I might have a row in the Manufacturer table for Ford Motor Company (as opposed to GM, for example). Then, I’d have an automobileMake with a key of manufacturerName = ’Ford Motor Company’ and makeName = ’Ford’ (as opposed to Lincoln), style = ’SVT’, and so on, for the other values. This can get a bit messy to deal with, because the key of the automobileModelStyle table would be used in many places to describe which products are being shipped to which dealership. Note that this concern is about the number of values that make up the key, not the performance of the key. Performance does tend to improve as the key gets smaller, but that depends on the total size of the value or values that make up the key as well as on the number of columns. Using three 2-byte values could be better than one 15-byte key, though it is a lot more cumbersome to join on three columns.

Note that the complexity in a real system such as this would be compounded by the realization that you have to be concerned with model year, possibly body style, different prebuilt packages of options, and so on. The key of the table may frequently have many parts, particularly in tables that are the child of a child of a child, and so on.

Basing a Primary Key on a New, Surrogate Value

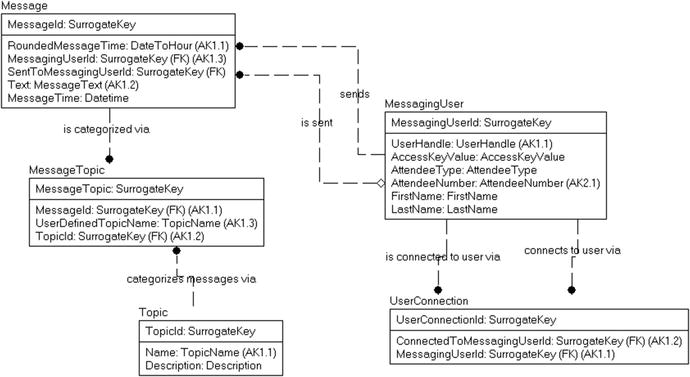

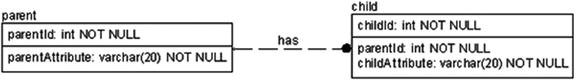

The other common key style is to use only a single column for the primary key, regardless of the size of the other keys. In this case, you’d specify that every table will have a single artificially generated primary key column and implement alternate keys to protect the uniqueness of the natural keys in your tables, as shown in Figure 6-4.

Figure 6-4. Single-column key example

Note that in this scenario, all of your relationships will be implemented in the database as nonidentifying type relationships, though you will implement them to all be required values (no NULLs). Functionally, this is the same as if the parentKeyValue was migrated from parent through child and down to grandChild, though it makes it harder to see in the model.

In the model in Figure 6-4, the most important thing you should notice is that each table has not only the primary key but also an alternate key. The term “surrogate” has a very specific meaning, even outside of computer science, and that is that it serves as a replacement. So the surrogate key for the parent object of parentKeyValue can be used as a substitute for the defined key, in this case otherColumnsForAltKey.

This method does have some useful advantages:

- Every table has a single-column primary key: It’s much easier to develop applications that use this key, because every table will have a key that follows the same pattern. It also makes code generation easier to follow, because it is always understood how the table will look, relieving you from having to deal with all the other possible permutations of key setups.

- The primary key index will be as small as possible: If you use numbers, you can use the smallest integer type possible. So if you have a max of 200 rows, you can use a tinyint; 2 billion rows, a 4-byte integer. Operations that use the index to access a row in the table will be faster. Most update and delete operations will likely modify the data by accessing the data based on simple primary keys that will use this index. (Some use a 16-byte GUID for convenience to the UI code, but GUIDs have their downfall, as we will discuss.)

- Joins between tables will be easier to code: That’s because all migrated keys will be a single column. Plus, if you use a surrogate key that is named TableName + Suffix (usually Id in my examples), there will be less thinking to do when setting up the join. Less thinking equates to fewer errors as well.

There are also disadvantages to this method, such as always having to join to a table to find out the meaning of the surrogate key value. In our example table in Figure 6-4, you would have to join from the grandChild table through the child table to get key values from parent. Another issue is that some parts of the self-documenting nature of relationships are obviated, because using only single-column keys eliminates the obviousness of all identifying relationships. So in order to know that the logical relationship between parent and grandChild is identifying, you will have to trace the relationship and look at the uniqueness constraints and foreign keys carefully.
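The following is a rough sketch of the single-column key pattern, meant to be run on its own in a scratch database. The table, column, and constraint names are hypothetical stand-ins that follow the shape of Figure 6-4 rather than reproducing it. Each table gets a surrogate primary key plus an alternate key protecting its natural key, and the final query shows both sides of the trade-off: every join is on one column, but reaching the parent's natural key from the grandchild takes two joins.

```sql
-- Hypothetical tables: surrogate primary key plus alternate (natural) key on each.
CREATE TABLE dbo.Parent
(
    ParentId   int         NOT NULL CONSTRAINT PKParent PRIMARY KEY,
    ParentName varchar(30) NOT NULL CONSTRAINT AKParent UNIQUE
);

CREATE TABLE dbo.Child
(
    ChildId   int         NOT NULL CONSTRAINT PKChild PRIMARY KEY,
    ParentId  int         NOT NULL  -- required, nonidentifying relationship
        CONSTRAINT FKChild_Parent REFERENCES dbo.Parent (ParentId),
    ChildName varchar(30) NOT NULL,
    CONSTRAINT AKChild UNIQUE (ParentId, ChildName)
);

CREATE TABLE dbo.GrandChild
(
    GrandChildId   int         NOT NULL CONSTRAINT PKGrandChild PRIMARY KEY,
    ChildId        int         NOT NULL
        CONSTRAINT FKGrandChild_Child REFERENCES dbo.Child (ChildId),
    GrandChildName varchar(30) NOT NULL,
    CONSTRAINT AKGrandChild UNIQUE (ChildId, GrandChildName)
);

-- Single-column joins, but two of them are needed to see the parent's name.
SELECT p.ParentName, c.ChildName, gc.GrandChildName
FROM   dbo.GrandChild AS gc
       JOIN dbo.Child  AS c ON c.ChildId = gc.ChildId
       JOIN dbo.Parent AS p ON p.ParentId = c.ParentId;
```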

Assuming you have chosen to use a surrogate key, the next choice is to decide what values to use for the key. Let’s look at two methods of implementing these keys, either by deriving the key from some other data or by using a meaningless surrogate value.

A popular way to define a primary key is to simply use a meaningless surrogate key like we’ve modeled previously, such as using a column with the IDENTITY property or generated from a SEQUENCE object, which automatically generates a unique value. In this case, you rarely let the user have access to the value of the key but use it primarily for programming.

It’s exactly what was done for most of the entities in the logical models worked on in previous chapters: simply employing the surrogate key while we didn’t know what the actual value for the primary key would be. This method has one nice property:

You never have to worry about what to do when the key value changes.

Once the key is generated for a row, it never changes, even if all the data changes. This is an especially nice property when you need to do analysis over time. No matter what any of the other values in the table have been changed to, as long as the row’s surrogate key value (as well as the row) represents the same thing, you can still relate it to its usage in previous times. (This is something you have to be clear about with the DBA/programming staff as well. Sometimes, they may want to delete all data and reload it, but if the surrogate changes, your link to the unchanging nature of the surrogate key is likely broken.) Consider the case of a row that identifies a company. If the company is named Bob’s Car Parts and it’s located in Topeka, Kansas, but then it hits it big, moves to Detroit, and changes the company name to Car Parts Amalgamated, only one row is touched: the row where the name is located. Just change the name, address, etc. and it’s done. Keys may change, but not primary keys. Also, if the method of determining uniqueness changes for the object, the structure of the database needn’t change beyond dropping one UNIQUE constraint and adding another.
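To make the company example concrete, here is a small sketch that assumes a hypothetical Company table (none of these names come from the demonstration model). It uses the IDENTITY property for the surrogate and a UNIQUE constraint for the natural key, then changes the natural key without touching the surrogate. The second table shows the SEQUENCE alternative, which requires SQL Server 2012 or later.

```sql
-- Hypothetical table: IDENTITY surrogate key, natural key protected separately.
CREATE TABLE dbo.Company
(
    CompanyId   int          NOT NULL IDENTITY(1,1)
        CONSTRAINT PKCompany PRIMARY KEY,
    CompanyName nvarchar(60) NOT NULL
        CONSTRAINT AKCompany_CompanyName UNIQUE,
    City        nvarchar(60) NOT NULL
);

INSERT INTO dbo.Company (CompanyName, City)
VALUES (N'Bob''s Car Parts', N'Topeka');

-- The name and location change, but CompanyId stays the same, so any
-- rows in other tables that reference CompanyId need no changes.
UPDATE dbo.Company
SET    CompanyName = N'Car Parts Amalgamated',
       City = N'Detroit'
WHERE  CompanyName = N'Bob''s Car Parts';

-- Alternative generator: a SEQUENCE object supplying the key through a default.
CREATE SEQUENCE dbo.CompanyBranch_Sequence AS int START WITH 1 INCREMENT BY 1;

CREATE TABLE dbo.CompanyBranch
(
    CompanyBranchId int          NOT NULL
        CONSTRAINT DFLTCompanyBranch_CompanyBranchId
            DEFAULT (NEXT VALUE FOR dbo.CompanyBranch_Sequence)
        CONSTRAINT PKCompanyBranch PRIMARY KEY,
    CompanyId       int          NOT NULL
        CONSTRAINT FKCompanyBranch_Company REFERENCES dbo.Company (CompanyId),
    BranchName      nvarchar(60) NOT NULL,
    CONSTRAINT AKCompanyBranch UNIQUE (CompanyId, BranchName)
);
```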

Using a surrogate key value doesn’t in any way prevent you from creating additional single part keys, like we did in the previous section. In fact, it generally demands it. For most tables, having a small code value is likely going to be a desired thing. Many clients hate long values, because they involve “too much typing.” For example, say you have a value such as “Fred’s Car Mart.” You might want to have a code of “FREDS” for it as the shorthand value for the name. Some people are even so programmed by their experiences with ancient database systems that had arcane codes that they desire codes such as “XC10” to refer to “Fred’s Car Mart.”

In the demonstration model, I set all of the keys to use natural keys based on how one might do a logical model, so in a table like MessagingUser in Figure 6-5, it uses a key of the entire handle of the user.

Figure 6-5. MessagingUser table before changing model to use surrogate key

This value is the most logical, but this name, based on the requirements (current and future), can change. Changing this to a surrogate value will make it easier to make the name change and not have to worry about existing data in this table and related tables. Making this change to the model results in the change shown in Figure 6-6, and now, the key is a value that is clearly recognizable as being associated with the MessagingUser, no matter what the uniqueness of the row may be. Note that I made the UserHandle an alternate key as I switched it from primary key.

Figure 6-6. MessagingUser table after changing model to use surrogate key

Next up, we will take a look at the Message table shown in Figure 6-7. Note that the two columns that were named UserHandle and SentToUserHandle have had their role names changed to indicate the change in names from when the key of MessagingUser was UserHandle.

Figure 6-7. Message table before changing model to use surrogate key

We will transform this table to use a surrogate key by moving all three columns to nonkey columns, placing them in a uniqueness constraint, and adding the new MessageId column. Notice, too, in Figure 6-8 that the table is no longer modeled with rounded corners, because the primary key no longer is modeled with any migrated keys in the primary key.

Figure 6-8. Message table after changing model to use surrogate key

Having a common pattern for every table is useful for programming with the tables as well. Because every table has a single-column key that isn’t updatable and is the same datatype, it’s possible to exploit this in code, making code generation a far more straightforward process. Note once more that nothing should be lost when you use surrogate keys, because a surrogate of this style only stands in for an existing natural key. Many of the object relational mapping (ORM) tools that are popular (if controversial in the database community) require a single-column integer key as their primary implementation pattern. I don’t favor forcing the database to be designed in any manner to suit client tools, but sometimes, what is good for the database is the same as what is good for the tools, making for a relatively happy ending, at least.

By implementing tables using this pattern, I’m covered in two ways: I always have a single primary key value, and I always have a key that cannot be modified, which eases the difficulty for loading a secondary copy like a data warehouse. No matter the choice of human-accessible key, surrogate keys are the style of key that I use for nearly all tables in databases I create (and always for tables with user-modifiable data, which I will touch on when we discuss “domain” tables later in this chapter). In Figure 6-9, I have completed the transformation to using surrogate keys.

Figure 6-9. Messaging Database Model progression after surrogate key choices

Keep in mind that I haven’t specified any sort of implementation details for the surrogate key at this point, and clearly, in a real system, I would already have done this during the transformation. For this chapter example, I am using a deliberately detailed process to separate each individual step, so I will put off that discussion until the DDL section of this book, where I will present code to deal with this need along with creating the objects.

Alternate Keys

In the model so far, we have already identified alternate keys as part of the model creation (MessagingUser.AttendeeNumber was our only initial alternate key), but I wanted to just take a quick stop on the model and make it clear in case you have missed it. Every table should have a minimum of one natural key; that is, a key that is tied to the meaning of what the table is modeling. This step in the modeling process is exceedingly important if you have chosen to do your logical model with surrogates, and if you chose to implement with single part surrogate keys, you should at least review the keys you specified.

A primary key that’s manufactured or even meaningless in the logical model shouldn’t be your only defined key. One of the ultimate mistakes made by people using such keys is to ignore the fact that two rows whose only difference is a system-generated value are not different. With only an artificially generated value as your key, it becomes more or less impossible to tell one row from another.

For example, take Table 6-1, a snippet of a Part table, where PartID is an IDENTITY column and is the primary key for the table.

Table 6-1. Sample Data to Demonstrate How Surrogate Keys Don’t Make Good Logical Keys

| PartID | PartNumber | Description |
|---|---|---|
| 1 | XXXXXXXX | The X part |
| 2 | XXXXXXXX | The X part |
| 3 | YYYYYYYY | The Y part |

How many individual items are represented by the rows in this table? Well, there seem to be three, but are rows with PartIDs 1 and 2 actually the same row, duplicated? Or are they two different rows that should be unique but were keyed in incorrectly? You need to consider at every step along the way whether a human being could pick a desired row from a table without knowledge of the surrogate key. This is why there should be a key of some sort on the table to guarantee uniqueness, in this case likely on PartNumber.
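A short sketch of that protection, using the columns of Table 6-1 (the datatypes and constraint names are assumptions made for illustration): with a UNIQUE constraint on PartNumber, the second row from the sample data can no longer be inserted.

```sql
CREATE TABLE dbo.Part
(
    PartID      int         NOT NULL IDENTITY(1,1)
        CONSTRAINT PKPart PRIMARY KEY,
    PartNumber  char(8)     NOT NULL
        CONSTRAINT AKPart_PartNumber UNIQUE,   -- the natural key
    Description varchar(60) NOT NULL
);

INSERT INTO dbo.Part (PartNumber, Description)
VALUES ('XXXXXXXX', 'The X part');

-- Rejected: violates AKPart_PartNumber (error 2627), so the accidental
-- duplicate in Table 6-1 cannot get into the table.
INSERT INTO dbo.Part (PartNumber, Description)
VALUES ('XXXXXXXX', 'The X part');

INSERT INTO dbo.Part (PartNumber, Description)
VALUES ('YYYYYYYY', 'The Y part');
```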

Caution As a rule, each of your tables should have a natural key that means something to the user and that can uniquely identify each row in your table. In the very rare event that you cannot find a natural key (perhaps, for example, in a table that provides a log of events that could occur in the same .000001 of a second), then it is acceptable to make up some artificial key, but usually, it is part of a larger key that helps you tell two rows apart.

In a well-designed logical model, you should not have anything to do at this point with keys that protect the uniqueness of the data from a requirements standpoint. The architect (probably yourself) has already determined some manner of uniqueness that can be implemented. For example, in Figure 6-10, a MessagingUser row can be identified by either the UserHandle or the AttendeeNumber.

Figure 6-10. MessagingUser table for review

A bit more interesting is the Message table, shown in Figure 6-11. The key consists of the RoundedMessageTime (the time of the message, rounded to the hour), the text of the message, and the UserId.

Figure 6-11. Message table for review

In the business rules, it was declared that the user could not post the same message more than once an hour. Constraints such as this are not terribly easy to implement in a simple manner, but breaking it down to the data you need to implement the constraint can make it easier. In our case, by putting a key on the message, user, and the time rounded to the hour (which we will find some way to implement later in the process), configuring the structures is quite easy.

Of course, by putting this key on the table, if the UI sends the same data twice, an error will be raised when a duplicate message is sent. This error will need to be dealt with at the client side, typically by translating the error message to something nicer.
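One possible way to implement this key, sketched here under several assumptions, is a persisted computed column that reduces the message time to the hour, combined with a UNIQUE constraint. The column names, sizes, and constraint names are illustrative only, the foreign key to MessagingUser is omitted, and the expression truncates to the start of the hour rather than rounding to the nearest hour.

```sql
CREATE TABLE dbo.Message
(
    MessageId          int           NOT NULL IDENTITY(1,1)
        CONSTRAINT PKMessage PRIMARY KEY,
    MessagingUserId    int           NOT NULL,  -- foreign key omitted in this sketch
    MessageText        nvarchar(200) NOT NULL,
    MessageTime        datetime2(0)  NOT NULL,
    -- Deterministic expression: midnight of the message date plus the hour part.
    RoundedMessageTime AS (DATEADD(HOUR, DATEPART(HOUR, MessageTime),
                           CAST(CAST(MessageTime AS date) AS datetime2(0)))) PERSISTED,
    CONSTRAINT AKMessage_TimeUserAndText
        UNIQUE (RoundedMessageTime, MessagingUserId, MessageText)
);

INSERT INTO dbo.Message (MessagingUserId, MessageText, MessageTime)
VALUES (1, N'Hello', '2016-06-01 10:05:00');

-- Same user, same text, same hour: rejected with error 2627.
INSERT INTO dbo.Message (MessagingUserId, MessageText, MessageTime)
VALUES (1, N'Hello', '2016-06-01 10:40:00');

-- Same user and text in the next hour: accepted.
INSERT INTO dbo.Message (MessagingUserId, MessageText, MessageTime)
VALUES (1, N'Hello', '2016-06-01 11:01:00');
```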

The last table I will cover here is the MessageTopic table, shown in Figure 6-12.

Figure 6-12. MessageTopic table for review

What is interesting about this table is the optional UserDefinedTopicName value. Later, when we create the tables, we will load seed data that includes a ‘UserDefined’ topic, for which I will use a surrogate key value of 0. When a row in this table references that TopicId, the UserDefinedTopicName column can be used. We will also create a check constraint on this table that tests whether the TopicId value represents the user-defined topic, to make sure that all data fits the required criteria.
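As a sketch of what such a check constraint might look like (the constraint name and column sizes are assumptions, and the foreign keys and alternate key are left out), the rule is that a name must be supplied when TopicId is 0 and must be absent otherwise:

```sql
CREATE TABLE dbo.MessageTopic
(
    MessageTopicId       int          NOT NULL IDENTITY(1,1)
        CONSTRAINT PKMessageTopic PRIMARY KEY,
    MessageId            int          NOT NULL,  -- foreign keys omitted in this sketch
    TopicId              int          NOT NULL,
    UserDefinedTopicName nvarchar(30) NULL,
    -- TopicId 0 is assumed to be the seeded user-defined topic row.
    CONSTRAINT CHKMessageTopic_UserDefinedTopicName CHECK
        ((TopicId = 0 AND UserDefinedTopicName IS NOT NULL)
          OR (TopicId <> 0 AND UserDefinedTopicName IS NULL))
);

-- Accepted: user-defined topic with a name, and a regular topic without one.
INSERT INTO dbo.MessageTopic (MessageId, TopicId, UserDefinedTopicName)
VALUES (1, 0, N'Hallway conversations'),
       (1, 2, NULL);

-- Rejected with error 547: a regular topic may not carry a user-defined name.
INSERT INTO dbo.MessageTopic (MessageId, TopicId, UserDefinedTopicName)
VALUES (2, 2, N'Not allowed here');
```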

At this point, to review, we have the model at the point in Figure 6-13.

Figure 6-13. Messaging model for review

Determining Domain Implementation

In logical modeling, the concept of domains is used to specify a template for datatypes and column properties that are used over and over again. In physical modeling, domains are used to choose the datatype to use and give us a guide as to the validations we will need to implement.

For example, in the logical modeling phase, domains are defined for such columns as name and description, which occur regularly across a database/enterprise. The reason for defining domains might not have been completely obvious at the time of logical design (it can seem like work to be a pompous data architect, rather than a programmer), but it becomes clear during physical modeling if it has been done well up front. During implementation domains serve several purposes:

- Consistency: We have used TopicName twice as a domain. This reminds us to define every column of type TopicName in precisely the same manner; there will never be any question about how to treat the column.

- Ease of implementation: If the tool you use to model and implement databases supports the creation of domain and template columns, you can simply use the template to build similar columns with the same pattern, and you won’t have to set the values over and over, which leads to mistakes! If you have tool support for property inheritance on domains, when you change a property in the definition, the values change everywhere. So if all descriptive-type columns are nullable, and all code columns are not, you can set this in one place.

- Documentation: Even if every column used a different domain and there was no reuse, the column/domain documentation would be very useful for programmers to be able to see what datatype to use for a given column. In the final section of this chapter, I will include the domain as part of the metadata I will add to the extended properties of the implemented columns.

Domains aren’t a requirement of logical or physical database design, nor does SQL Server actually make it easy for you to use them, but even if you just use them in a spreadsheet or design tool, they can enable easy and consistent design and are a great idea. Of course, consistent modeling is always a good idea regardless of whether you use a tool to do the work for you. I personally have seen a particular column type implemented in four different ways in five different columns when proper domain definitions were not available. So, tool or not, having a data dictionary that identifies columns that share a common type by definition is extremely useful.

For example, for the TopicName domain that’s used often in the Topic and MessageTopic tables in our ConferenceMessage model, the domain may have been specified by the contents of Table 6-2.

Table 6-2. Sample Domain: TopicName

| Property | Setting |
|---|---|
| Name | TopicName |
| Optional | No |
| Datatype | Unicode text, 30 characters |
| Value Limitations | Must not be empty string or only space characters |
| Default Value | n/a |

I’ll defer the CHECK constraint and DEFAULT bits until later in this chapter, where I discuss implementation in more depth. Several tables will have a TopicName column, and you’ll use this template to build every one of them, which will ensure that every time you build one of these columns it will have a type of nvarchar(30). Note that we will discuss data types and their usages later in this chapter.
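For a preview of where the domain leads, here is a minimal sketch of one column built from the TopicName template. The table shape and constraint names are assumptions. The check relies on the fact that LEN ignores trailing spaces, so a value made up only of spaces has a length of 0.

```sql
CREATE TABLE dbo.Topic
(
    TopicId   int          NOT NULL
        CONSTRAINT PKTopic PRIMARY KEY,
    TopicName nvarchar(30) NOT NULL           -- Unicode text, 30 characters, not optional
        CONSTRAINT AKTopic_TopicName UNIQUE
        CONSTRAINT CHKTopic_TopicName_NotEmpty CHECK (LEN(TopicName) > 0)
);

-- Accepted.
INSERT INTO dbo.Topic (TopicId, TopicName) VALUES (1, N'SQL Server');

-- Both rejected with error 547: empty string and spaces only.
INSERT INTO dbo.Topic (TopicId, TopicName) VALUES (2, N'');
INSERT INTO dbo.Topic (TopicId, TopicName) VALUES (3, N'   ');
```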

A second domain that is used very often in our model is SurrogateKey, shown in Table 6-3.

Table 6-3. Sample Domain: SurrogateKey

| Property | Setting |
|---|---|
| Name | SurrogateKey |
| Optional | When used for primary key, not optional, typically auto-generated. When used as a nonkey, foreign key reference, optionality determined by utilization in the relationship. |
| Datatype | int |
| Value Limitations | N/A |
| Default Value | N/A |

This domain is a bit different, in that it will be implemented exactly as specified for a primary key attribute, but when it is migrated for use as a foreign key, some of the properties will be changed. First, if using identity columns for the surrogate, the migrated column won’t have the IDENTITY property set. Second, in an optional relationship, the migrated key will allow NULLs, whereas the primary key never will. Finally, let’s add one more domain definition to our sample, the UserHandle domain, shown in Table 6-4.

Table 6-4. Sample Domain: UserHandle

| Property | Setting |
|---|---|
| Name | UserHandle |
| Optional | No |
| Datatype | Basic character set, 20 characters maximum |
| Value Limitations | Must be 5–20 simple alphanumeric characters and must start with a letter |
| Default Value | n/a |
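The SurrogateKey and UserHandle domains could translate to columns along the following lines. This is a sketch to run on its own in a scratch database, not the chapter's final DDL: the UserConnection table and all constraint names are hypothetical, and the LIKE patterns assume a case-insensitive collation. Under some collations a range such as [a-z] can also match accented letters, so a stricter rule may need an explicit binary collation.

```sql
CREATE TABLE dbo.MessagingUser
(
    -- SurrogateKey domain as a primary key: int, not optional, auto-generated.
    MessagingUserId int         NOT NULL IDENTITY(1,1)
        CONSTRAINT PKMessagingUser PRIMARY KEY,
    -- UserHandle domain: 5-20 simple alphanumeric characters, starting with a letter.
    UserHandle      varchar(20) NOT NULL
        CONSTRAINT AKMessagingUser_UserHandle UNIQUE
        CONSTRAINT CHKMessagingUser_UserHandle CHECK
            (LEN(UserHandle) >= 5
             AND UserHandle LIKE '[a-z]%'             -- starts with a letter
             AND UserHandle NOT LIKE '%[^a-z0-9]%')   -- no other kinds of characters
);

CREATE TABLE dbo.UserConnection
(
    UserConnectionId int NOT NULL IDENTITY(1,1)
        CONSTRAINT PKUserConnection PRIMARY KEY,
    -- SurrogateKey domain as a migrated key: same int type, no IDENTITY property.
    -- It is NOT NULL here because the relationship is required; an optional
    -- relationship would declare the column as NULL instead.
    MessagingUserId  int NOT NULL
        CONSTRAINT FKUserConnection_MessagingUser
            REFERENCES dbo.MessagingUser (MessagingUserId)
);

-- Accepted.
INSERT INTO dbo.MessagingUser (UserHandle) VALUES ('FredF');

-- Each rejected with error 547: too short, starts with a digit, contains a space.
INSERT INTO dbo.MessagingUser (UserHandle) VALUES ('Wil');
INSERT INTO dbo.MessagingUser (UserHandle) VALUES ('1Barney');
INSERT INTO dbo.MessagingUser (UserHandle) VALUES ('Fred F');
```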

In the next four subsections, I’ll discuss several topics concerning the implementation of domains:

- Implementing as a column or table: You need to decide whether a value should simply be entered into a column or whether to implement a new table to manage the values.

- Choosing the datatype: SQL Server gives you a wide range of datatypes to work with, and I’ll discuss some of the issues concerning making the right choice.

- Choosing nullability: You need to decide whether each column should allow NULL values, and I will apply those choices to the example model.

- Choosing the collation: The collation determines how data is sorted and compared, based on character set and language used.

Getting the domain of a column implemented correctly is an important step in getting the implementation correct. Too many databases end up with all columns with the same datatype and size, allowing nulls (except for primary keys, if they have them), and lose the integrity of having properly sized and constrained columns.

Enforce Domain in the Column, or With a Table?

Although many domains have only minimal limitations on values, often a domain will specify a fixed set of named values that a column might have that is less than can be fit into one of the base datatypes. For example, in the demonstration table MessagingUser shown in Figure 6-14, a column AttendeeType has a domain of AttendeeType.

Figure 6-14. MessagingUser table for reference

This domain might be specified as in Table 6-5.

Table 6-5. AttendeeType Domain

| Property | Setting |
|---|---|
| Name | AttendeeType |
| Optional | No |
| Datatype | Basic character set, maximum 20 characters |
| Value Limitations | Regular, Volunteer, Speaker, Administrator |
| Default Value | Regular |

The value limitation limits the values to a fixed list of values. We could choose to implement the column using a declarative control (a CHECK constraint, which we will cover in more detail later in the chapter) with a predicate of AttendeeType IN (’Regular’, ’Volunteer’, ’Speaker’, ’Administrator’) and a literal default value of ’Regular’. There are a couple of minor annoyances with this form:

- There is no place for table consumers to know the domain: Unless you have a row with one of each of the values and you do a DISTINCT query over the column (something that is generally poorly performing), it isn’t easy to know what the possible values are without either having foreknowledge of the system or looking in the metadata. If you’re doing Conference Messaging system utilization reports by AttendeeType, it won’t be easy to find out what attendee types had no activity for a time period, certainly not using a simple, straightforward SQL query that has no hard-coded values.

- Often, a value such as this could easily have additional information associated with it: For example, this domain might have information about actions that a given type of user could do. For example, if a Volunteer attendee is limited to using certain topics, you would have to manage the types in a different table. Ideally, if you define the domain value in a table, any other uses of the domain are easier to maintain.

I nearly always include tables for all domains that are essentially “lists” of items, as it is just far easier to manage, even if it requires more tables. The choice of key for a domain table can be a bit different than for most tables. Sometimes, I use a surrogate key for the actual primary key, and other times, I use a natural key. The general difference is whether or not the values are user manageable, and if the programming tools require the integer/GUID approach (for example, if the front-end code uses an enumeration that is being reflected in the table values). In the model, I have two examples of such types of domain implementations. In Figure 6-15, I have added a table to implement the domain for attendee types, and for this table, I will use the natural key.

Figure 6-15. AttendeeType domain implemented as a table

This lets an application treat the value as if it is a simple value just like if this was implemented without the domain table. So if the application wants to manage the value as a simple string value, I don’t have to know about it from the database standpoint. I still get the value and validation that the table implementation affords me, plus the ability to have a Description column describing what each of the values actually means (which really comes in handy at 12:10 AM on December the 25th when the system is crashing and needs to be fixed, all while you are really thinking about the bicycle you haven’t finished putting together).
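A sketch of the table-based approach with a natural key follows, again intended to be run on its own in a scratch database. The descriptions, the trimmed-down MessagingUser table, and the constraint names are all invented for illustration. The last query addresses the reporting problem mentioned earlier: finding attendee types with no users requires no hard-coded list of values.

```sql
CREATE TABLE dbo.AttendeeType
(
    AttendeeType varchar(20) NOT NULL
        CONSTRAINT PKAttendeeType PRIMARY KEY,   -- natural key
    Description  varchar(60) NOT NULL
);

-- Placeholder descriptions, written only for this example.
INSERT INTO dbo.AttendeeType (AttendeeType, Description)
VALUES ('Regular', 'Typical conference attendee'),
       ('Volunteer', 'Attendee who helps run the event'),
       ('Speaker', 'Attendee who presents a session'),
       ('Administrator', 'Manages the messaging system');

CREATE TABLE dbo.MessagingUser
(
    MessagingUserId int         NOT NULL IDENTITY(1,1)
        CONSTRAINT PKMessagingUser PRIMARY KEY,
    UserHandle      varchar(20) NOT NULL
        CONSTRAINT AKMessagingUser_UserHandle UNIQUE,
    -- To the application this is still a simple string value; the foreign
    -- key replaces the IN (...) predicate of a CHECK constraint.
    AttendeeType    varchar(20) NOT NULL
        CONSTRAINT DFLTMessagingUser_AttendeeType DEFAULT ('Regular')
        CONSTRAINT FKMessagingUser_AttendeeType
            REFERENCES dbo.AttendeeType (AttendeeType)
);

INSERT INTO dbo.MessagingUser (UserHandle) VALUES ('FredF');  -- defaults to Regular
INSERT INTO dbo.MessagingUser (UserHandle, AttendeeType) VALUES ('WilmaF', 'Speaker');

-- Attendee types that currently have no users, with no hard-coded values.
SELECT atype.AttendeeType, atype.Description
FROM   dbo.AttendeeType AS atype
WHERE  NOT EXISTS (SELECT *
                   FROM   dbo.MessagingUser AS mu
                   WHERE  mu.AttendeeType = atype.AttendeeType);
```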

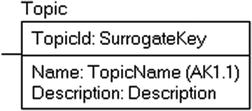

In the original model, we had the Topic table, shown in Figure 6-16, which is a domain similar to the AttendeeType but is designed to allow a user to make changes to the topic list.

Figure 6-16. Topic table for reference

The Topic entity has the special case that it can be added to by the application managers, so it will be implemented as a numeric surrogate value. We will initialize the table with a row that represents the user-defined topic that allows the user to enter their own topic in the MessageTopic table.

Choosing the Datatype

Choosing proper datatypes to match the domain chosen during logical modeling is an important task. One datatype might be more efficient than another of a similar type. For example, you can store integer data in an integer datatype, a numeric datatype, a floating-point datatype, or even a varchar(10) type, but these datatypes are certainly not alike in implementation or performance.
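As a quick, hedged illustration of how the same integer value differs by type (the variable names are arbitrary), DATALENGTH reports the bytes each value occupies, and a character type also changes comparison behavior:

```sql
DECLARE @AsInt     int         = 12345,
        @AsFloat   float       = 12345,
        @AsVarchar varchar(10) = '12345';

SELECT DATALENGTH(@AsInt)     AS IntBytes,      -- 4
       DATALENGTH(@AsFloat)   AS FloatBytes,    -- 8
       DATALENGTH(@AsVarchar) AS VarcharBytes;  -- 5, one byte per character here

-- Integers stored as character data compare as strings, not as numbers.
SELECT CASE WHEN '10' < '9' THEN 'string order' ELSE 'numeric order' END
           AS VarcharComparison,                -- string order
       CASE WHEN 10 < 9 THEN 'string order' ELSE 'numeric order' END
           AS IntComparison;                    -- numeric order
```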

Note I have broken up the discussion of datatypes into two parts. This and other sections in this chapter provide basic guidance on the datatypes that exist in SQL Server and some light discussion of what to use. Appendix A at the end of this book takes an expanded look at all of the datatypes, with examples and code snippets for each type.

It’s important to choose the best possible datatype when building the column. The following list contains the intrinsic datatypes (built-in types that are installed when you install SQL Server) and a brief explanation of each of them. As you translate domains to implementation, the first step is to see which of these types best matches the need; then we will look to constrain the data even further with additional techniques.

- Precise numeric data: Stores numeric data with no possible loss of precision.

- bit: Stores either 1, 0, or NULL; frequently used for Boolean-like columns (1 = True, 0 = False, NULL = Unknown). Up to eight bit columns can share 1 byte of storage. Some typical integer operations, like basic math, cannot be performed.

- tinyint: Non-negative values between 0 and 255 (0 to 2^8 - 1) (1 byte).

- smallint: Integers between –32,768 and 32,767 (-2^15 to 2^15 – 1) (2 bytes).

- int: Integers between -2,147,483,648 and 2,147,483,647 (–2^31 to 2^31 – 1) (4 bytes).

- bigint: Integers between –9,223,372,036,854,775,808 and 9,223,372,036,854,775,807 (–2^63 to 2^63 – 1) (8 bytes).

- decimal (or numeric, which is functionally the same in SQL Server, but decimal is generally preferred for portability): All numbers between –10^38 + 1 and 10^38 – 1 (between 5 and 17 bytes, depending on precision). Allows for fractional numbers, unlike the integer types.

- Approximate numeric data: Stores approximations of numbers, typically for scientific usage. Gives a large range of values with a high amount of precision but might lose precision of very large or very small numbers.

- float(N): Values in the range from –1.79E+308 through 1.79E+308 (storage is 4 bytes for N between 1 and 24, and 8 bytes for N between 25 and 53).

- real: Values in the range from –3.40E+38 through 3.40E+38. real is an ISO synonym for a float(24) datatype, and hence equivalent (4 bytes).

- Date and time: Stores values that deal with temporal data.

- date: Date-only values from January 1, 0001, to December 31, 9999 (3 bytes).

- time: Time-of-day-only values to 100 nanoseconds (3 to 5 bytes).

- datetime2(N): Despite the hideous name, this type will store a point in time from January 1, 0001, to December 31, 9999, with accuracy ranging from 1 second (0) to 100 nanoseconds (7) (6 to 8 bytes).

- datetimeoffset: Same as datetime2, but includes an offset for time zone (8 to 10 bytes).

- smalldatetime: A point in time from January 1, 1900, through June 6, 2079, with accuracy to 1 minute (4 bytes). (Note: It is suggested to phase out usage of this type and use the more standards-oriented datetime2, though smalldatetime is not technically deprecated.)

- datetime: Points in time from January 1, 1753, to December 31, 9999, with accuracy to 3.33 milliseconds (8 bytes). (Note: It is suggested to phase out usage of this type and use the more standards-oriented datetime2, though datetime is not technically deprecated.)

- Binary data: Strings of bits, for example, files or images. Storage for these datatypes is based on the size of the data stored.

- binary(N): Fixed-length binary data up to 8,000 bytes long.

- varbinary(N): Variable-length binary data up to 8,000 bytes long.

- varbinary(max): Variable-length binary data up to (2^31) – 1 bytes (2GB) long.

- Character (or string) data:

- char(N): Fixed-length character data up to 8,000 characters long.

- varchar(N): Variable-length character data up to 8,000 characters long.

- varchar(max): Variable-length character data up to (2^31) – 1 bytes (2GB) long.

- nchar, nvarchar, nvarchar(max): Unicode equivalents of char, varchar, and varchar(max).

- Other datatypes:

- sql_variant: Stores (pretty much) any datatype, other than CLR-based datatypes (hierarchyId, spatial types) and any types with a max length of over 8,016 bytes. (CLR is a topic I won’t hit on too much, but it allows Microsoft and you to program SQL Server objects in a .NET language. For more information, check https://msdn.microsoft.com/en-us/library/ms131089.aspx.) It’s generally a bad idea to use sql_variant for all but a few fringe uses. It is usable in cases where you don’t know the datatype of a value before storing it. The only use of this type in the book will be in Chapter 8 when we create user-extensible schemas (which is itself a fringe pattern).

- rowversion (timestamp is a synonym): Used for optimistic locking to version-stamp a row. It changes on every modification. The name of this type was timestamp in all SQL Server versions before 2000, but in the ANSI SQL standards, the timestamp type is equivalent to the datetime datatype. I’ll cover the rowversion datatype in more detail in Chapter 11, which is about concurrency. (16 years later, it is still very often referred to as timestamp, so the old name may never actually go away completely.)

- uniqueidentifier: Stores a GUID value.

- XML: Allows you to store an XML document in a column. The XML type gives you a rich set of functionality when dealing with structured data that cannot be easily managed using typical relational tables. You shouldn’t use the XML type as a crutch to violate First Normal Form by storing multiple values in a single column. I will not use XML in any of the designs in this book.

- Spatial types (geometry, geography, circularString, compoundCurve, and curvePolygon): Used for storing spatial data, like for maps. I will not be using these types in this book.

- hierarchyId: Used to store data about a hierarchy, along with providing methods for manipulating the hierarchy. We will cover more about manipulating hierarchies in Chapter 8.
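Two of the distinctions in the preceding list are easy to see in a short experiment: precise versus approximate numerics, and the configurable precision of the newer date and time types. The following is a minimal sketch with invented variable names. Since float is a binary floating-point type, the float comparison is expected to report that the sum of three 0.1 values does not equal 0.3, while the decimal comparison reports that it does.

```sql
-- Hypothetical variables holding the "same" value in approximate and precise form.
DECLARE @ApproximateValue float         = 0.1,
        @PreciseValue     decimal(10,1) = 0.1;

-- Add each value to itself three times and compare the result to 0.3.
SELECT CASE WHEN @ApproximateValue + @ApproximateValue + @ApproximateValue = 0.3
            THEN 'Equal' ELSE 'Not equal' END AS FloatComparison,
       CASE WHEN @PreciseValue + @PreciseValue + @PreciseValue = 0.3
            THEN 'Equal' ELSE 'Not equal' END AS DecimalComparison;

-- One point in time, narrowed to different precisions and parts.
DECLARE @PointInTime datetime2(7) = '2016-06-01 12:34:56.1234567';

SELECT CAST(@PointInTime AS datetime2(0)) AS ToTheSecond,
       CAST(@PointInTime AS datetime2(3)) AS ToTheMillisecond,
       CAST(@PointInTime AS date)         AS DateOnly,
       CAST(@PointInTime AS time(0))      AS TimeOnly;
```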

Choice of datatype is a tremendously important part of the process, but if you have defined the domain well, it is not that difficult a task. In the following sections, we will look at a few of the more important parts of the choice. A few of the considerations we will include are

- Deprecated or bad choice types

- Common datatype configurations

- Large-value datatype columns

- Complex datatypes

I didn’t use too many of the different datatypes in the sample model, because my goal was to keep the model very simple and not try to be an AdventureWorks-esque model that tries to show every possible type of SQL Server in one model (or even the newer WideWorldImporters database, which is less unrealistically complex than AdventureWorks and will be used in several chapters later in the book).

Deprecated or Bad Choice Types

I didn’t include several datatypes in the previous list because they have been deprecated for quite some time, and it wouldn’t be surprising if they are completely removed from the version of SQL Server after 2016 (even though I said the same thing in the previous few versions of the book, so be sure to stop using them as soon as possible). Their use was common in versions of SQL Server before 2005, but they’ve been replaced by types that are far easier to use:

- image: Replace with varbinary(max)

- text or ntext: Replace with varchar(max) and nvarchar(max)

If you have ever tried to use the text datatype in SQL code, you know it is not a pleasant thing. Few of the common text operators were implemented to work with it, and in general, it just doesn’t work like the other native types for storing string data. The same can be said with image and other binary types. Changing from text to varchar(max), and so on, is definitely a no-brainer choice.

The second group of types that are generally advised against is the two money types:

- money: Money values from –922,337,203,685,477.5808 through 922,337,203,685,477.5807 (8 bytes)

- smallmoney: Money values from –214,748.3648 through 214,748.3647 (4 bytes)

In general, the money datatype sounds like a good idea, but using it has some confusing consequences. In Appendix A, I spend a bit more time covering these consequences, but here are two problems:

- There are definite issues with rounding off, because intermediate results for calculations are calculated using only four decimal places.

- Money data input allows for including a monetary sign (such as $ or £), but inserting $100 and £100 results in the same value being represented in the variable or column.

Hence, it’s generally accepted that it’s best to store monetary data in decimal datatypes. This also gives you the ability to assign the numeric types to sizes that are reasonable for the situation. For example, in a grocery store, having the maximum monetary value of a grocery item over 200,000 dollars is probably unnecessary, even figuring for a heck of a lot of inflation. Note that in the appendix I will include a more thorough example of the types of issues you could see.
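Both problems can be seen in a few lines. The following is a minimal sketch with invented variable names and arbitrarily chosen values; it runs the same calculation once with money and once with decimal so the two results can be compared side by side, and then selects two money literals entered with different currency signs.

```sql
-- Hypothetical values; the same calculation in money and in decimal.
DECLARE @MoneyNumerator   money = 100,
        @MoneyDenominator money = 339,
        @MoneyMultiplier  money = 10000;

DECLARE @DecimalNumerator   decimal(19,4) = 100,
        @DecimalDenominator decimal(19,4) = 339,
        @DecimalMultiplier  decimal(19,4) = 10000;

-- The money quotient is held to four decimal places before it is multiplied,
-- so the two results should differ.
SELECT @MoneyNumerator / @MoneyDenominator * @MoneyMultiplier       AS MoneyResult,
       @DecimalNumerator / @DecimalDenominator * @DecimalMultiplier AS DecimalResult;

-- The currency sign is accepted on input but is not stored with the value.
SELECT $100 AS DollarInput,
       £100 AS PoundInput;
```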

Common Datatype Configurations

In this section, I will briefly cover concerns and issues relating to Boolean/logical values, large datatypes, and complex types and then summarize datatype concerns in order to discuss the most important thing you need to know about choosing a datatype.

Boolean values (TRUE or FALSE) are another of the hotly debated choices that are made for SQL Server data. SQL Server provides no Boolean datatype for columns, since every type must support NULL, and a NULL Boolean makes life far more difficult for the people who implement SQL, so a suitable datatype needs to be chosen through which to represent Boolean values. Truthfully, though, what we really want from a Boolean is the ability to say that the property of the modeled entity “is” or “is not” for some basic setting.

There are three common choices to implement a value of this sort:

- Using a bit datatype where a value of 1 means True and 0 means False: This is, by far, the most common choice because it works directly with programming languages such as the .NET languages with no translation. The check box and option controls can directly connect to these values, even though a language like VB used -1 to indicate True. It does, however, draw the ire of purists, because it is too much like a Boolean. Such columns are commonly named with “flag” as a classword, like for a special sale indicator: SpecialSaleFlag. Some people who don’t do the suffix thing as a rule often start the name off with Is, like IsSpecialSale. Microsoft uses the prefix in the catalog views quite often, like in sys.databases: is_ansi_nulls_on, is_read_only, and so on.

- A char(1) value with a domain of ’Y’, ’N’; ’T’, ’F’, or other values: This is the easiest for ad hoc users who don’t want to think about what 0 or 1 means, but it’s generally the most difficult from a programming standpoint. Sometimes, a char(3) is even better to go with ’yes’ and ’no’. These columns are usually named the same as the bit type; they just have slightly more attractive looking output.

- A full, textual value that describes the need: For example, for a preferred customer indicator, instead of PreferredCustomerFlag, you would have PreferredCustomerIndicator, with values ’Preferred Customer’ and ’Not Preferred Customer’. This is popular for reporting types of databases, for sure, and it is also more flexible when there come to be more than two values, since the database structure needn’t change if you need to add ’Sorta Preferred Customer’ to the domain of PreferredCustomerIndicator.

As an example of a Boolean column in our messaging database, I’ll add a simple flag to the MessagingUser table that tells whether the account has been disabled, as shown in Figure 6-17. As before, we are keeping things simple, and in simple cases, a simple flag might do it. But of course, in a sophisticated system, you would probably want to have more information, like who did the disabling, why they did it, when it took place, and perhaps even when it takes effect (these are all questions for design time, but it doesn’t hurt to be thorough).

Figure 6-17. MessagingUser table with DisabledFlag bit column
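As a rough illustration of the bit approach, the following sketch creates a cut-down stand-in for the MessagingUser table with a DisabledFlag column. The table name, the other columns, the constraint names, and the sample row are assumptions made for this example rather than the chapter’s final table definition.

```sql
-- Hypothetical, simplified stand-in for the MessagingUser table.
CREATE TABLE dbo.MessagingUserSketch
(
    MessagingUserId int         NOT NULL
        CONSTRAINT PKMessagingUserSketch PRIMARY KEY,
    UserHandle      varchar(20) NOT NULL,
    -- NOT NULL with a default, so the flag is always either 0 or 1.
    DisabledFlag    bit         NOT NULL
        CONSTRAINT DFLTMessagingUserSketch_DisabledFlag DEFAULT (0)
);

-- New accounts start out enabled because of the default.
INSERT INTO dbo.MessagingUserSketch (MessagingUserId, UserHandle)
VALUES (1, 'SampleUser');

-- Disable the account.
UPDATE dbo.MessagingUserSketch
SET    DisabledFlag = 1
WHERE  MessagingUserId = 1;

-- List only the accounts that are still enabled.
SELECT UserHandle
FROM   dbo.MessagingUserSketch
WHERE  DisabledFlag = 0;
```

Declaring the column NOT NULL sidesteps the three-valued logic that a nullable flag would bring with it.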

In SQL Server 2005, dealing with large datatypes changed quite a bit (and hopefully someday Microsoft will kill the text and image types for good). By using the max specifier on varchar, nvarchar, and varbinary types, you can store far more data than was possible in previous versions using a “normal” type, while still being able to deal with the data using the same functions and techniques you can on a simple varchar(10) column, though performance will differ slightly.

As with all datatype questions, use the varchar(max) types only when they’re required, and always use the smallest types possible. The larger the datatype, the more data a row can hold, and the harder it becomes to get optimal storage and retrieval times. In cases where you know you need large amounts of data or in the case where you sometimes need greater than 8,000 bytes in a column, the max specifier is a fantastic thing.
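The following minimal sketch, using an invented variable name, builds a value longer than 8,000 bytes and then applies the same string functions you would use on any varchar column.

```sql
-- Hypothetical variable. REPLICATE returns the type of its input, so the input
-- is cast to varchar(max) to get a result longer than 8,000 bytes.
DECLARE @LargeValue varchar(max) = REPLICATE(CAST('x' AS varchar(max)), 10000);

-- The usual string functions work on the large value.
SELECT LEN(@LargeValue)                AS CharacterCount,
       DATALENGTH(@LargeValue)         AS ByteCount,
       SUBSTRING(@LargeValue, 9996, 5) AS LastFiveCharacters,
       CHARINDEX('x', @LargeValue)     AS FirstMatchPosition;
```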

Note Keep on the lookout for uses that don’t meet the normalization needs, as you start to implement. Most databases have a “comments” column somewhere that morphed from comments to a semistructured mess that your DBA staff then needs to dissect using SUBSTRING and CHARINDEX functions.

There are two special concerns when using these types:

- There’s no automatic datatype conversion from the normal character types to the large-value types.