
Index Structures and Application

In this life, we have to make many choices. Some are very important choices. Some are not. Many of our choices are between good and evil. The choices we make, however, determine to a large extent our happiness or our unhappiness, because we have to live with the consequences of our choices.

— James E. Faust, American religious leader, lawyer, and politician

To me, the true beauty of the relational database engine comes from its declarative nature. As a programmer, I simply ask the engine a question, and it answers it. The questions I ask are usually pretty simple; just give me some data from a few tables, correlate it based on a column or two, do a little math perhaps, and give me back information (and naturally do it incredibly fast, if you don’t mind). While simple isn’t always the case, the engine usually obliges with an answer extremely quickly. Usually, but not always. This is where the DBA and data programmers must figure out what the optimizer is doing and help it along. If you are not a technical person, you probably think of query tuning as magic. It is not. It is a lot of complex code implementing a massive number of extremely complex algorithms that allow the engine to answer your questions in a timely manner. With every passing version of SQL Server, that code gets better at turning your request into a set of operations that gives you the answers you desire in remarkably small amounts of time. These operations will be shown to you in a query plan, which is a blueprint of the algorithms used to execute your query. I will use query plans in this chapter to show you how your design choices can affect the way work gets done.

Our part in the process of getting back lightning-fast answers to complex questions is to assist the query optimizer (which takes your query and turns it into a plan of how to run the query), the query processor (which takes the plan and uses it to do the actual work), and the storage engine (which manages IO for the whole process). We do this first by designing and implementing as close to the relational model as possible by normalizing our structures, using good set-based code (no cursors), following best practices with coding T-SQL, and so on. This is obviously a design book, so I won’t cover T-SQL coding, but it is a skill you should master. Consider Apress’s Beginning T-SQL, Third Edition, by Kathi Kellenberger (Aunt Kathi!) and Scott Shaw, or perhaps one of Itzik Ben-Gan’s Inside SQL books on T-SQL, for some deep learning on the subject. Once we have built our systems correctly, the next step is to help out by adjusting the physical structures using proper hardware laid out well on disk subsystems, filegroups, files, partitioning, and configuring our hardware to work best with SQL Server, and with the load we will be, are, and have been placing on the server. This is yet another book’s worth of information, for which I will direct you to a book I recently reviewed as a technical editor, Peter Carter’s Pro SQL Server Administration (Apress, 2015).

In this chapter we are going to focus primarily on the structures that you will be adding and subtracting regularly: indexes. Indexing is a constant balance of helping performance versus harming performance. If you don’t use indexes enough, queries will be slow, as the query processor could have to read every row of every table for every query (which, even if it seems fast on your machine, can cause the concurrency issues we will cover in Chapter 11 by forcing the query processor to lock a lot more memory than is necessary). Use too many indexes, and modifying data could take too long, as indexes have to be maintained. Balance is the key, kind of like matching the amount of fluid to the size of the glass so that you will never have to answer that annoying question about a glass that has half as much fluid as it can hold. (The answer is either that the glass is too large or the server needs to refill your glass immediately, depending on the situation.)

All plans that I present will be obtained using the SET SHOWPLAN_TEXT ON statement. When you’re doing this locally, it is almost always easier to use the graphical showplan from Management Studio. However, when you need to post the plan or include it in a document, use one of the SET SHOWPLAN_TEXT commands. You can read about this more in SQL Server Books Online. Note that the SET SHOWPLAN_TEXT command (and the other versions of SET SHOWPLAN that are available, such as SET SHOWPLAN_XML) does not actually execute the statement/batch; rather, it shows the estimated plan. If you need to execute the statement (for example, so that some dynamic SQL statements actually run and you can see their plans), you can use SET STATISTICS PROFILE ON to get the plan and some other pertinent information about what has been executed. Each of these session settings needs to be turned OFF explicitly once you have finished, or it will continue returning plans where you don’t want them.
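As a minimal sketch of how these session settings are typically used, the following batches first request an estimated plan and then an actual one. The dbo.Employee table and its LastName column are assumptions for illustration only; substitute any table that exists in your database.

```sql
-- SET SHOWPLAN_TEXT must be the only statement in its batch,
-- so every change to the setting is separated with GO.
SET SHOWPLAN_TEXT ON;
GO
-- Not executed: only the estimated plan is returned.
-- dbo.Employee is a hypothetical table used for illustration.
SELECT LastName
FROM   dbo.Employee
WHERE  LastName = 'Davidson';
GO
SET SHOWPLAN_TEXT OFF;
GO

-- SET STATISTICS PROFILE executes the statement and returns
-- the plan along with the query results.
SET STATISTICS PROFILE ON;
GO
SELECT LastName
FROM   dbo.Employee
WHERE  LastName = 'Davidson';
GO
SET STATISTICS PROFILE OFF;
GO
```

Turning each setting OFF in its own batch at the end keeps later statements in the same session from returning plans unexpectedly.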

Everything we have done so far has been centered on the idea that the quality of the data is the number one concern. Although this is still true, in this chapter, we are going to assume that we’ve done our job in the logical and implementation phases, so the data quality is covered. Slow and right is always better than fast and wrong (how would you like to get paid a week early, but only get half your money?), but the obvious goal of building a computer system is to do things right and fast. Everything we do for performance should affect only the performance of the system, not the data quality in any way.

We have technically added indexes in previous chapters, as a side effect of adding primary key and unique constraints (in that a unique index is built by SQL Server to implement the uniqueness condition). In many cases, those indexes will turn out to be almost all of what you need to make normal OLTP queries run nicely, since the most common searches that people will do will be on identifying information.

What complicates all of our choices at this point in the process in the fifth edition of this book is that Microsoft has added an additional engine to the mix, in both the box and cloud versions of the product. I have noted in Chapters 6 and onward that these different versions exist, and that some of the sample code from those chapters will have downloadable versions to show how it might be coded with the different engines. But the differences are more than code. The design of the logical database isn’t that much different, and what is put into the physical database needn’t change considerably either. But there are internal differences to how indexes work that will change some of the implementation, which I will explore in this chapter. Chapter 11 will discuss how these changes affect concurrency and isolation, and finally, Chapter 13 will discuss changes in coding you will see. For now, we will start with the more common on-disk indexes, and then we will look at the memory-optimized index technologies, including indexes on in-memory OLTP tables and columnstore indexes (which will be discussed in more detail in Chapter 14 as well).

Indexing Overview

Indexes allow the SQL Server engine to perform fast, targeted data retrieval rather than simply scanning through the entire table. A well-placed index can speed up data retrieval by orders of magnitude, while a haphazard approach to indexing can actually have the opposite effect when creating, updating, or deleting data.

Indexing your data effectively requires a sound knowledge of how that data will change over time, the sort of questions that will be asked of it, and the volume of data that you expect to be dealing with. Unfortunately, this is what makes any topic about physical tuning so challenging. To index effectively, you almost need the psychic ability to foretell the future of your exact data usage patterns. Nothing in life is free, and the creation and maintenance of indexes can be costly. When deciding to (or not to) use an index to improve the performance of one query, you have to consider the effect on the overall performance of the system.

In the upcoming sections, I’ll do the following:

- Introduce the basic structure of an index.

- Discuss the two fundamental types of indexes and how their structure determines the structure of the table.

- Demonstrate basic index usage, introducing you to the basic syntax and usage of indexes.

- Show you how to determine whether SQL Server is likely to use your index and how to see if SQL Server has used your index.

If you are producing a product for sale that uses SQL Server as the backend, indexes are truly going to be something that you could let your customers manage (unless you can truly effectively constrain how users will use your product). For example, if you sell a product that manages customers, and your basic expectation is that they will have around 1,000 customers, what happens if one wants to use it with 100,000 customers? Do you not take their money? Of course you do, but what about performance? Hardware improvements generally cannot even give linear improvement in performance. So if you get hardware that is 100 times “faster,” you would be extremely fortunate to get close to 100 times improvement. However, adding a simple index can provide 100,000 times improvement that may not even make a difference at all on the smaller data set. (This is not to pooh-pooh the value of faster hardware at all. The point is that, situationally, you get far greater gain from writing better code than you do from just throwing hardware at the problem. The ideal situation is adequate hardware and excellent code, naturally.)

Basic Index Structure

An index is a structure that SQL Server can maintain to optimize access to the physical data in a table. An index can be on one or more columns of a table. In essence, an index in SQL Server works on the same principle as the index of a book. It organizes the data from the column (or columns) of data in a manner that’s conducive to fast, efficient searching, so you can find a row or set of rows without looking at the entire table. It provides a means to jump quickly to a specific piece of data, rather than just starting on page one each time you search the table and scanning through until you find what you’re looking for. Even worse, when you are looking for one specific row (and you know that the value you are searching for is unique), unless SQL Server knows exactly how many rows it is looking for, it has no way to know if it can stop scanning data when one row has been found.

As an example, consider that you have a completely unordered list of employees and their details in a book. If you had to search this list for persons named ’Davidson’, you would have to look at every single name on every single page. Soon after trying this, you would immediately start trying to devise some better manner of searching. On first pass, you would probably sort the list alphabetically. But what happens if you needed to search for an employee by an employee identification number? Well, you would spend a bunch of time searching through the list sorted by last name for the employee number. Eventually, you could create a list of last names and the pages you could find them on and another list with the employee numbers and their pages. Following this pattern, you would build indexes for any other type of search you’d regularly perform on the list. Of course, SQL Server can page through the phone book one name at a time in such a manner that, if you need to do it occasionally, it isn’t such a bad thing, but looking at two or three names per search is always more efficient than two or three hundred, much less two or three million.

Now, consider this in terms of a table like an Employee table. You might execute a query such as the following:

SELECT LastName, <EmployeeDetails>

FROM Employee

WHERE LastName = 'Davidson';

In the absence of an index to rapidly search, SQL Server will perform a scan of the data in the entire table (referred to as a table scan) on the Employee table, looking for rows that satisfy the query predicate. A full table scan generally won’t cause you too many problems with small tables, but it can cause poor performance for large tables with many pages of data, much as it would if you had to manually look through 20 values versus 2,000. Of course, on your development box, you probably won’t be able to discern the difference between a seek and a scan (or even hundreds of scans). Only when you are experiencing a reasonably heavy load will the difference be noticed. (And as we will notice in the next chapter, the more rows SQL Server needs to touch, the more blocking you may do to other users.)

If we instead created an index on the LastName column, the index creates a structure to allow searching for the rows with the matching LastName in a logical fashion and the database engine can move directly to rows where the last name is Davidson and retrieve the required data quickly and efficiently. And even if there are ten people with the last name of ’Davidson’, SQL Server knows to stop when it hits ’Davidtown’.
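A minimal sketch of what creating such an index could look like follows. The dbo schema and the index name IX_Employee_LastName are hypothetical choices made for this illustration, not names taken from the chapter.

```sql
-- Assumes a dbo.Employee table with a LastName column already exists.
-- The index name is a hypothetical example.
CREATE NONCLUSTERED INDEX IX_Employee_LastName
    ON dbo.Employee (LastName);

-- With the index in place, an equality search on LastName can seek
-- to the first matching key and stop at the first key that sorts after it.
SELECT LastName
FROM   dbo.Employee
WHERE  LastName = 'Davidson';
```

Whether the optimizer actually chooses the index depends on the data and on the other columns the query returns, so it is worth checking the plan rather than assuming.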

Of course, as you might imagine, the engineer types who invented the concept of indexing and searching data structures don’t simply make lists to search through. Instead, most indexes are implemented using what is known as a balanced tree (B-tree) structure (some others are built using hash structures, as we cover when we get to the in-memory OLTP section, but B-trees will be overwhelmingly the norm, so they get the introduction). The B-tree index is made up of index pages structured, again, much like an index of a book or a phone book. Each index page contains the first value in a range and a pointer to the next lower page in the index. The pages on the last level in the index are referred to as the leaf pages, which contain the actual data values that are being indexed, plus either the data for the row or pointers to the data. This allows the query processor to go directly to the data it is searching for by checking only a few pages, even when there are millions of values in the index.

Figure 10-1 shows an example of the type of B-tree that SQL Server uses for on-disk indexes (memory-optimized indexes are different and will be covered later). Each of the outer rectangles is an 8KB index page, just as we discussed earlier. The three values (’A’, ’J’, and ’P’) are the index keys in this top-level page of the index. The index page has as many index keys as will fit physically on the page. To decide which path to follow to reach the lower level of the index, we have to decide if the value requested is between two of the keys: ’A’ to ’I’, ’J’ to ’P’, or greater than ’P’. For example, say the value we want to find in the index happens to be ’I’. We go to the first page in the index. The database determines that ’I’ doesn’t come after ’J’, so it follows the ’A’ pointer to the next index page. Here, it determines that ’I’ comes after ’C’ and ’G’, so it follows the ’G’ pointer to the leaf page.

Figure 10-1. Basic index structure

Each of these pages is 8KB in size. Depending on the size of the key (determined by summing the data lengths of the columns in the key, up to a maximum of 1,700 bytes for some types of indexes), it’s possible to have anywhere from 4 entries to over 1,000 on a single page. The more keys you can fit onto a single index page, the more child index pages that page can point to, so a given level of the B-tree can support more index pages. And the more pages that are linked from each level to the next, the fewer steps it takes to get from the top page of the index to the leaf.

B-tree indexes are extremely efficient, because for an index that stores only 500 different values on a page—a reasonable number for a typical index of an integer—it has 500 pointers to the next level in the index, and the second level has 500 pages with 500 values each. That makes 250,000 different pointers on that level, and the next level has up to 250,000 × 500 pointers. That’s 125,000,000 different values in just a three-level index. Change that to a 100-byte key, do the math, and you will see why smaller keys (like an integer, which is just 4 bytes) are better! Obviously, there’s overhead to each index key, and this is just a rough estimation of the number of levels in the index.
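The arithmetic above can be reproduced with a quick calculation. This is only a rough sketch: the figure of roughly 8,000 usable bytes per page used for the wider key is an assumption made for illustration, and real index pages carry additional per-key and per-page overhead.

```sql
-- Rough fan-out arithmetic only, ignoring per-key and per-page overhead.
DECLARE @keysPerPage bigint = 500;  -- the figure used above for an integer key

SELECT @keysPerPage           AS RootPagePointers,     -- 500
       POWER(@keysPerPage, 2) AS SecondLevelPointers,  -- 250,000
       POWER(@keysPerPage, 3) AS ThirdLevelValues;     -- 125,000,000

-- The same estimate for a 100-byte key.
-- Assumption: about 8,000 usable bytes per page, so roughly 80 keys fit.
DECLARE @wideKeysPerPage bigint = 8000 / 100;

SELECT POWER(@wideKeysPerPage, 3) AS ThirdLevelValuesWideKey;  -- 512,000
```

Under these assumptions, the same three levels address a few hundred times fewer values with the wider key, which is why a wide key tends to need additional levels for the same number of rows.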

Another idea that’s mentioned occasionally is how well balanced the tree is. If the tree is perfectly balanced, every index page would have exactly the same number of keys on it. Once the index has lots of data on one end, or data gets moved around on it for insertions or deletions, the tree becomes ragged, with one end having one level, and another many levels. This is why you have to do some basic maintenance on the indexes, something I have mentioned already.

This is just a general overview of what an index is, and there are several variations of types of indexes in use with SQL Server. Many use a B-Tree structure for their basis, but some use hashing, and others use columnar structures. The most important aspect to understand at this point? Indexes speed access to rows by giving you quicker access to some part of the table so that you don’t have to look at every single row and inspect it individually.

On-Disk Indexes

To understand indexes on on-disk objects, it helps to have a working knowledge of the physical structures of a database. At a high level, the storage component of the on-disk engine works with a hierarchy of structures, starting with the database, which is broken down into filegroups (with one PRIMARY filegroup always existing), and each filegroup containing a number of files. As we discussed in Chapter 8, a filegroup can contain files for filestream, which in-memory OLTP also uses; but we are going to keep this simple and just talk about simple files that store basic data. This is shown in Figure 10-2.

Figure 10-2. Generalized database and filegroup structure

You control the placement of objects that store physical data pages at the filegroup level (code and metadata are always stored on the primary filegroup, along with all the system objects). New objects created are placed in the default filegroup, which is the PRIMARY filegroup (every database has one as part of the CREATE DATABASE statement, or the first file specified is set to primary) unless another filegroup is specified in any CREATE <object> commands. For example, to place an object in a filegroup other than the default, you need to specify the name of the filegroup using the ON clause of the table- or index-creation statement:

CREATE TABLE <tableName>

(...) ON <fileGroupName>

This command assigns the table to the filegroup, but not to any particular file. There are commands to place indexes and constraints that are backed with unique indexes on a different filegroup as well. An important part of tuning can be to see if there is any pressure on your disk subsystems and, if so, possibly redistribute data to different disks using filegroups. If you want to see what files you have in your database, you can query the sys.filegroups catalog view:

USE <databaseName>;

GO

SELECT CASE WHEN fg.name IS NULL

--other, such as logs

THEN CONCAT('OTHER-',df.type_desc COLLATE database_default)

ELSE fg.name END AS file_group,

df.name AS file_logical_name,

df.physical_name AS physical_file_name

FROM sys.filegroups AS fg

RIGHT JOIN sys.database_files AS df

ON fg.data_space_id = df.data_space_id;
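The ON clause mentioned earlier can also be applied to indexes and to constraints that are backed by indexes. The following is a minimal sketch using hypothetical object names; it targets the PRIMARY filegroup only so that it runs in any database, and in practice you would substitute the name of one of your own filegroups.

```sql
-- All object names here are hypothetical examples.
CREATE TABLE dbo.FilegroupDemo
(
    FilegroupDemoId int NOT NULL
        -- The index that backs the constraint gets its own ON clause.
        CONSTRAINT PKFilegroupDemo PRIMARY KEY CLUSTERED ON [PRIMARY],
    Name varchar(50) NOT NULL
) ON [PRIMARY];

-- A nonclustered index can be placed on a filegroup independently of the table.
CREATE NONCLUSTERED INDEX IX_FilegroupDemo_Name
    ON dbo.FilegroupDemo (Name)
    ON [PRIMARY];
```

Because the leaf pages of a clustered index are the table's data pages, the filegroup of the clustered index determines where the table data lives.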

As shown in Figure 10-3, files are further broken down into a number of extents, each consisting of eight separate 8KB pages where tables, indexes, and so on are physically stored. SQL Server only allocates space in a database in units of extents. When files grow, you will notice that the size of files will be incremented only in 64KB increments.

Figure 10-3. Files and extents

Each extent in turn has eight pages that hold one specific type of data each:

- Data: Table data.

- Index: Index data.

- Overflow data: Used when a row is greater than 8,060 bytes or for varchar(max), varbinary(max), text, or image values.

- Allocation map: Information about the allocation of extents.

- Page free space: Information about what different pages are allocated for.

- Index allocation: Information about extents used for table or index data.

- Bulk changed map: Extents modified by a bulk INSERT operation.

- Differential changed map: Extents that have changed since the last database backup command. This is used to support differential backups.

In larger databases, most extents will contain just one type of page, but in smaller databases, SQL Server can place any kind of page in the same extent. When all data is of the same type, it’s known as a uniform extent. When pages are of various types, it’s referred to as a mixed extent.

SQL Server places all on-disk table data in pages, with a header that contains metadata about the page (object ID of the owner, type of page, and so on), as well as the rows of data, which is what we typically care about as programmers.

Figure 10-4 shows a typical data page from a table. The header of the page contains identification values such as the page number, the object ID of the object the data is for, compression information, and so on. The data rows hold the actual data. Finally, there’s an allocation block that has the offsets/pointers to the row data.

Figure 10-4. Data pages

Figure 10-4 also shows that there are pointers to the next and previous pages. These pointers are added when pages are ordered, such as in the pages of an index. Heap objects (tables with no clustered index) are not ordered. I will cover this in the next two sections.

The other kind of page that is frequently used that you need to understand is the overflow page. It is used to hold row data that won’t fit on the basic 8,060-byte page. There are two reasons an overflow page is used:

- The combined length of all data in a row grows beyond 8,060 bytes. In this case, data goes on an overflow page automatically, allowing you to have virtually unlimited row sizes (with the obvious performance concerns that go along with that, naturally).

- By setting the sp_tableoption setting on a table for large value types out of row to 1, all the (max) and XML datatype values are immediately stored out of row on an overflow page. If you set it to 0, SQL Server tries to place all data on the main page in the row structure, as long as it fits into the 8,060-byte row. The default is 0, because this is typically the best setting when the typical values are short enough to fit on a single page.
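As a brief sketch of the second case, the following creates a small table and then changes the option. The table dbo.DocumentStore and its columns are hypothetical names used only for this illustration.

```sql
-- Hypothetical table with one large value type column.
CREATE TABLE dbo.DocumentStore
(
    DocumentStoreId int NOT NULL
        CONSTRAINT PKDocumentStore PRIMARY KEY,
    Document varbinary(max) NULL
);

-- 1 = always store (max) values out of row; 0 (the default) = in row when they fit.
EXECUTE sp_tableoption 'dbo.DocumentStore', 'large value types out of row', 1;

-- The current setting is exposed in the sys.tables catalog view.
SELECT name, large_value_types_out_of_row
FROM   sys.tables
WHERE  name = 'DocumentStore';
```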

For example, Figure 10-5 depicts the type of situation that might occur for a table that has the large value types out of row set to 1. Here, Data Row 1 has two pointers to support two varbinary(max) columns: one that spans two pages and another that spans only a single page. Using all of the data in Data Row 1 will now require up to four reads (depending on where the actual page gets stored in the physical structures), making data access far slower than if all of the data were on a single page. This kind of performance problem can be easy to overlook, but on occasion, overflow pages will really drag down your performance, especially when other programmers use SELECT * on tables where they don’t really need all of the data.

Figure 10-5. Sample overflow pages

The overflow pages are linked lists that can accommodate up to 2GB of storage in a single column. Generally speaking, it isn’t really a very good idea to store 2GB in a single column (or even a row), but the ability to do so is available if needed.

Storing large values that are placed off of the main page will be far costlier when you need these values than if all of the data can be placed in the same data page. On the other hand, if you seldom use the data in your queries, placing them off the page can give you a much smaller footprint for the important data, requiring far less disk access on average. It is a balance that you need to take care with, as you can imagine how costly a table scan of columns that are on the overflow pages is going to be. Not only will you have to read extra pages, you’ll have to be redirected to the overflow page for every row that’s overflowed.

When you get down to the row level, the data is laid out with metadata, fixed length fields, and variable length fields, as shown in Figure 10-6. (Note that this is a generalization, and the storage engine does a lot of stuff to the data for optimization, especially when you enable compression.)

Figure 10-6. Data row

The metadata describes the row, gives information about the variable length fields, and so on. Generally speaking, since data is dealt with by the query processor at the page level, even if only a single row is needed, data can be accessed very rapidly no matter the exact physical representation.

The maximum amount of data that can be placed on a single page (including overhead from variable fields) is 8,060 bytes. As illustrated in Figure 10-5, when a data row grows larger than 8,060 bytes, the data in variable length columns can spill out onto an overflow page. A 16-byte pointer is left on the original page and points to the page where the overflow data is placed.

There is one last concept we need to discuss: page splits. When inserting or updating rows, SQL Server might have to rearrange the data on the pages due to the pages being filled up. Such rearranging can be a particularly costly operation. Consider the situation from our example shown in Figure 10-7, assuming that only three values can fit on a page.

Figure 10-7. Sample data page before page split

Say we want to add the value Bear to the page. If that value won’t fit onto the page, the page will need to be reorganized. Pages that need to be split are split into two, generally with 50% of the data on one page, and 50% on the other (there are usually more than three values on a real page). Once the page is split and its values are reinserted, the new pages would end up looking something like Figure 10-8.

Figure 10-8. Sample data page after page split

Page splits are costly operations and can be terrible for performance, because after the page split, data won’t be located on successive physical pages. This condition is commonly known as fragmentation. Page splits occur in a normal system and are simply a part of adding data to your table. However, they can occur extremely rapidly and seriously degrade performance if you are not careful. Understanding the effect that page splits can have on your data and indexes is important as you tune performance on tables that have large numbers of inserts or updates.

To tune your tables and indexes to help minimize page splits, you can use the FILLFACTOR setting of the index. When you build or rebuild an index or a table (using ALTER TABLE <tablename> REBUILD, a command that was new in SQL Server 2008), the fill factor indicates how much space is left on each page for future data. If you are inserting random values all over the structures, a common situation that occurs when you use a nonsequential uniqueidentifier for a primary key, you will want to leave adequate space on each page to cover the expected number of rows that will be created in the future. During a page split, the data page is always split approximately fifty-fifty, leaving each of the two resulting pages about half empty, and even worse, the structure becomes, as mentioned, fragmented.
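A minimal sketch of setting the fill factor during a rebuild, and then checking fragmentation afterward, might look like the following. The dbo.Employee table is a hypothetical example, and the value of 80 is an arbitrary illustration rather than a recommendation.

```sql
-- Rebuild every index on a hypothetical table, filling leaf pages to about 80 percent
-- so that roughly 20 percent of each page is left free for future rows.
ALTER INDEX ALL ON dbo.Employee
    REBUILD WITH (FILLFACTOR = 80);

-- Check fragmentation and page counts for the indexes on the table.
SELECT i.name AS index_name,
       ps.index_type_desc,
       ps.avg_fragmentation_in_percent,
       ps.page_count
FROM   sys.dm_db_index_physical_stats
           (DB_ID(), OBJECT_ID('dbo.Employee'), NULL, NULL, 'LIMITED') AS ps
       JOIN sys.indexes AS i
           ON  i.object_id = ps.object_id
           AND i.index_id = ps.index_id;
```

A lower fill factor trades extra pages (and therefore extra reads during scans) for fewer page splits, so the right value depends on how randomly new rows land in the index.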

Now that we have looked at the basic physical structures, let’s get down to the index structures that we will commonly work with. There are two different types of indexes:

- Clustered: This type orders the physical table in the order of the index.

- Nonclustered: These are completely separate structures that simply speed access.

How indexes are structured internally is based on the existence (or nonexistence) of a clustered index. For the nonleaf pages of an index, everything is the same for all indexes. However, at the leaf node, the indexes get quite different—and the type of index used plays a large part in how the data in a table is physically organized. In the upcoming sections, I’ll discuss how the different types of indexes affect the table structure and which is best in which situation.

The examples in this section on indexes will mostly be based on tables from the WideWorldImporters database you can download from Microsoft in some manner (as I am writing this chapter, it was located on GitHub).

Clustered Indexes

In the following sections, I will discuss the structure of a clustered index, followed by showing the usage patterns and examples of how these indexes are used.

Structure

A clustered index physically orders the pages of the data in the table by making the leaf pages of the index be the data pages of the table. Each of the data pages is then linked to the next page in a doubly linked list to provide ordered scanning. Hence, the records in the physical structure are sorted according to the fields that correspond to the columns used in the index. Tables with a clustered index are referred to as clustered tables.

The key of a clustered index is referred to as the clustering key. For clustered indexes that aren’t defined as unique, each record has a 4-byte value (commonly known as a uniquifier) added to each value in the index where duplicate values exist. For example, if the values were A, B, and C, you would be fine. But, if you added another value B, the values internally would be A, B + 4ByteValue, B + Different4ByteValue, and C. Clearly, it is not optimal to get stuck with 4 bytes on top of the other value you are dealing with in every level of the index, so in general, you should try to define the key columns of the clustered index on column(s) where the values are unique, and the smaller the better, as the clustering key will be employed in every other index that you place on a table that has a clustered index.

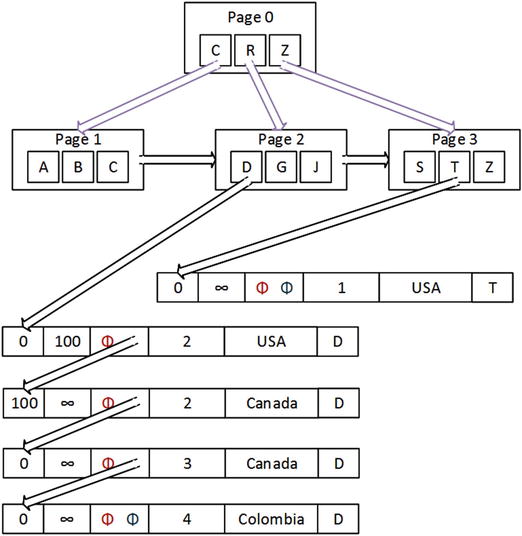

Figure 10-9 shows, at a high level, what a clustered index might look like for a table of animal names. (Note that this is just a partial example; there would likely be more second-level pages for Horse and Python at a minimum.)

Figure 10-9. Clustered index example

You can have only one clustered index on a table, because the table cannot be ordered on more than one set of columns. (Remember this; it is one of the most fun interview questions. Answering anything other than “one clustered index per table” leads to a fun line of follow-up questioning.)
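To make the one-per-table rule concrete, here is a minimal sketch using a hypothetical dbo.Animal table loosely modeled on the animal example. The table, column, and index names are assumptions made for illustration.

```sql
-- Hypothetical table and index names.
CREATE TABLE dbo.Animal
(
    AnimalId int NOT NULL,
    Name     varchar(30) NOT NULL
);

-- Declaring the clustered index UNIQUE avoids the 4-byte uniquifier.
CREATE UNIQUE CLUSTERED INDEX CIX_Animal_Name
    ON dbo.Animal (Name);

-- A second clustered index on the same table is rejected with an error,
-- so this statement is left commented out:
-- CREATE CLUSTERED INDEX CIX_Animal_AnimalId ON dbo.Animal (AnimalId);

-- Additional indexes on the table have to be nonclustered.
CREATE UNIQUE NONCLUSTERED INDEX IX_Animal_AnimalId
    ON dbo.Animal (AnimalId);
```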

A good real-world example of a clustered index would be a set of old-fashioned encyclopedias. Each book is a level of the index, and on each page, there is another level that denotes the things you can find on each page (e.g., Office–Officer). Then each topic is the leaf level of the index. These books are clustered on the topics in the encyclopedia, just as the example is clustered on the name of the animal. In essence, the entire set of books is a table of information in clustered order. And indexes can be partitioned as well. The encyclopedias are partitioned by letter into multiple books.

Now, consider a dictionary. Why are the words sorted, rather than just having a separate index with the words not in order? I presume that at least part of the reason is to let the readers scan through words they don’t know exactly how to spell, checking the definition to see if the word matches what they expect. SQL Server does something like this when you do a search. For example, back in Figure 10-9, if you were looking for a cat named George, you could use the clustered index to find rows where animal = ’Cat’, then scan the data pages for the matching pages for any rows where name = ’George’.

I must caution you that although it’s true, physically speaking, that tables are stored in the order of the clustered index, logically speaking, tables must be thought of as having no order. This lack of order is a fundamental truth of relational programming: you aren’t required to get back data in the same order when you run the same query twice. The ordering of the physical data can be used by the query processor to enhance your performance, but during intermediate processing, the data can be moved around in any manner that results in faster processing of the answer to your query. It’s true that you do almost always get the same rows back in the same order, mostly because the optimizer is almost always going to put together the same plan every time the same query is executed under the same conditions. However, load up the server with many requests, and the order of the data might change so SQL Server can best use its resources, regardless of the data’s order in the structures. SQL Server can choose to return data to us in any order that’s fastest for it. If disk drives are busy in part of a table and it can fetch a different part, it will. If order matters, use ORDER BY clauses to make sure that data is returned as you want.
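As a short illustration of that last point, the following pair of queries is a sketch against the Sales.Customers table of the WideWorldImporters sample database, assuming that database is installed and that the table has CustomerID and CustomerName columns.

```sql
-- No ORDER BY: the rows may come back in any order, even if they
-- usually appear to follow the clustered index.
SELECT CustomerID, CustomerName
FROM   Sales.Customers;

-- ORDER BY: the only way to guarantee the order of the result.
SELECT CustomerID, CustomerName
FROM   Sales.Customers
ORDER BY CustomerName;
```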

Using the Clustered Index

As mentioned earlier, the clustering key you choose has implications for the rest of your physical design. The column you use for the clustered index will become a part of every index for your table, so it has heavy implications for all indexes. Because of this, for a typical OLTP system, a very common practice is to choose a surrogate key value, often the primary key of the table, since the surrogate can be kept very small.

Using the surrogate key as the clustering key is usually a great decision, not only because it is a small key (most often, the datatype is an integer that requires only 4 bytes, or possibly less using compression), but also because it’s always a unique value. As mentioned earlier, a nonunique clustering key has a 4-byte uniquifier tacked onto its value whenever duplicates occur. It also helps the optimizer that an index has only unique values, because it knows immediately that for an equality operator, either 1 or 0 rows will match. And because the surrogate key is often used in joins, it’s helpful for the primary key to be small.


Caution Using a GUID for a surrogate key is becoming the vogue these days, but be careful. GUIDs are 16 bytes wide, which is a fairly large amount of space, but that is really the least of the problem. GUIDs are random values, in that they generally aren’t monotonically increasing, so a new GUID could sort anywhere in a list of other GUIDs and end up causing large numbers of page splits. The only way to make GUIDs a reasonably acceptable type is to use the NEWSEQUENTIALID() function (or one of your own) to build sequential GUIDs, but it only works in a default constraint on a uniqueidentifier column, and it does not guarantee sequential order relative to existing data after a reboot. Seldom will the person architecting a solution that is based on GUID surrogates want to be tied down to using a default constraint to generate surrogate values. The ability to generate GUIDs from anywhere and ensure their uniqueness is part of the lure of the siren call of the 16-byte value. In SQL Server 2012 and later, a sequence object could be used to generate guaranteed unique values in lieu of GUIDs.
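To make the two alternatives in the caution concrete, here is a small sketch. The table, constraint, and sequence names are hypothetical and exist only for illustration. The first table uses NEWSEQUENTIALID() in a default constraint, and the second uses a sequence object to feed an integer surrogate.

```sql
-- Hypothetical demo objects; none of these names come from the sample database.

-- Sequential GUIDs: NEWSEQUENTIALID() is only allowed in a DEFAULT constraint
-- on a uniqueidentifier column.
CREATE TABLE dbo.GuidKeyDemo
(
    GuidKeyDemoId uniqueidentifier NOT NULL
        CONSTRAINT DFLT_GuidKeyDemo_GuidKeyDemoId DEFAULT (NEWSEQUENTIALID())
        CONSTRAINT PK_GuidKeyDemo PRIMARY KEY CLUSTERED,
    Value varchar(20) NOT NULL
);

-- Sequence object (SQL Server 2012 and later): a 4-byte, ever-increasing key
-- that can also be fetched ahead of the INSERT with NEXT VALUE FOR.
CREATE SEQUENCE dbo.SequenceKeyDemo_Sequence AS int START WITH 1 INCREMENT BY 1;

CREATE TABLE dbo.SequenceKeyDemo
(
    SequenceKeyDemoId int NOT NULL
        CONSTRAINT DFLT_SequenceKeyDemo_SequenceKeyDemoId
            DEFAULT (NEXT VALUE FOR dbo.SequenceKeyDemo_Sequence)
        CONSTRAINT PK_SequenceKeyDemo PRIMARY KEY CLUSTERED,
    Value varchar(20) NOT NULL
);
```

Because NEXT VALUE FOR can be called outside of the default constraint, a caller can obtain the key before inserting the row, which covers part of what makes GUIDs attractive without the 16-byte random key.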

The clustered index won’t always be used for the surrogate key or even the primary key. Other possible uses can fall under the following types:

- Range queries: Having all the data in order usually makes sense when there’s data for which you often need to get a range, such as from A to F.

- Data that’s always accessed sequentially: Obviously, if the data needs to be accessed in a given order, having the data already sorted in that order will significantly improve performance.

- Queries that return large result sets: This point will make more sense once I cover nonclustered indexes, but for now, note that having the data on the leaf index page saves overhead.

The choice of how to pick the clustered index depends on several factors, such as how many other indexes will be derived from this index, how big the key for the index will be, and how often the value will change. When a clustered index value changes, every index on the table must also be touched and changed, and if the value can grow larger, well, then we might be talking page splits. This goes back to understanding the users of your data and testing the heck out of the system to verify that your index choices don’t hurt overall performance more than they help. Speeding up one query by using one clustering key could hurt all queries that use the nonclustered indexes, especially if you chose a large key for the clustered index.

Frankly, in an OLTP setting, in all but the most unusual cases, I stick with a surrogate key for my clustering key, usually one of the integer types or sometimes even the uniqueidentifier (GUID) type. I use the surrogate key because so many of the queries you do for modification (the general goal of the OLTP system) will access the data via the primary key. You then just have to optimize retrievals, which should also be of generally small numbers of rows, and doing so is usually pretty easy.

Another thing that is good about using the clustered index on a monotonically increasing value is that page splits over the entire index are greatly decreased. The table grows only on one end of the index, and while the index does need to be maintained occasionally using ALTER INDEX REORGANIZE or ALTER INDEX REBUILD, you don’t end up with page splits all over the table. You can decide which to do by using the criteria stated by SQL Server Books Online: check the fragmentation reported by the dynamic management function sys.dm_db_index_physical_stats, then use REBUILD on indexes with greater than 30% fragmentation and REORGANIZE otherwise.

Now, let’s look at an example of a clustered index in use. If you select all of the rows in a clustered table, you’ll see a Clustered Index Scan in the plan. For this we will use the Application.Cities table from WideWorldImporters, with the following structure:

CREATE TABLE Application.Cities
(
    CityID int NOT NULL CONSTRAINT PK_Application_Cities PRIMARY KEY CLUSTERED,
    CityName nvarchar(50) NOT NULL,
    StateProvinceID int NOT NULL
        CONSTRAINT FK_Application_Cities_StateProvinceID_Application_StateProvinces
            REFERENCES Application.StateProvinces (StateProvinceID),
    Location geography NULL,
    LatestRecordedPopulation bigint NULL,
    LastEditedBy int NOT NULL,
    ValidFrom datetime2(7) GENERATED ALWAYS AS ROW START NOT NULL,
    ValidTo datetime2(7) GENERATED ALWAYS AS ROW END NOT NULL,
    PERIOD FOR SYSTEM_TIME (ValidFrom, ValidTo)
) ON USERDATA TEXTIMAGE_ON USERDATA
WITH (SYSTEM_VERSIONING = ON (HISTORY_TABLE = Application.Cities_Archive));

This table has temporal extensions added to it, as covered in Chapter 8. In this version of the example database, there is currently also an index on the StateProvinceID column, which we will use later in the chapter.

CREATE NONCLUSTERED INDEX FK_Application_Cities_StateProvinceID ON Application.Cities
(
    StateProvinceID ASC
)
ON USERDATA;

There are 37,940 rows in the Application.Cities table:

SELECT *
FROM   [Application].[Cities];

The plan for this query is as follows:

|--Clustered Index Scan
     (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]))

If you query on a value of the clustered index key, the scan will likely change to a seek, and almost definitely if it is backing the PRIMARY KEY constraint. Although a scan touches all the data pages, a clustered index seek uses the index structure to find a starting place for the scan and knows just how far to scan. For a unique index with an equality operator, a seek would be used to touch one page in each level of the index to find (or not find) a single value on a single data page, for example:

SELECT *
FROM   Application.Cities
WHERE  CityID = 23629; --A favorite city of mine, indeed.

The plan for this query now does a seek:

|--Clustered Index Seek
     (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),
      SEEK:([WideWorldImporters].[Application].[Cities].[CityID]=
                CONVERT_IMPLICIT(int,[@1],0)) ORDERED FORWARD)

Note the CONVERT_IMPLICIT of the @1 value. This shows the query is being parameterized for the plan, and the variable is cast to an integer type. In this case, you’re seeking in the clustered index based on the SEEK predicate of CityID = 23629. SQL Server will create a reusable plan by default for simple queries. Any query that is executed with exactly the same format and a simple integer value would use the same plan. You can let SQL Server parameterize more complex queries as well. (For more information, look up “Simple Parameterization and Forced Parameterization” in Books Online.)
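If you want to see the plan reuse for yourself, one possible approach is to look in the plan cache after running the query a few times with different CityID values. The following is only a sketch: it assumes you have permission to view server state, and the LIKE filters are a rough way I have chosen to find the statement, not an exact match.

```sql
-- List cached plans whose text mentions the Cities table, most-used first.
-- usecounts climbing on a single entry suggests that one plan is being reused.
SELECT cp.objtype, cp.usecounts, st.text
FROM   sys.dm_exec_cached_plans AS cp
         CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS st
WHERE  st.text LIKE '%Cities%'
  AND  st.text NOT LIKE '%dm_exec_cached_plans%' -- leave out this query itself
ORDER BY cp.usecounts DESC;
```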

You can eliminate the CONVERT_IMPLICIT by explicitly casting the value in your WHERE clause, as in WHERE CityID = CAST(23629 AS int):

|--Clustered Index Seek
     (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),
      SEEK:([WideWorldImporters].[Application].[Cities].[CityID]=[@1])
      ORDERED FORWARD)

Though this is not typically done when the literal in the WHERE clause is of a complementary type, implicit conversion can be an issue when you use noncomplementary types, such as when mixing a Unicode value and a non-Unicode value, which we will see later in the chapter.

Increasing the complexity, now, we search for two rows:

SELECT *
FROM   Application.Cities
WHERE  CityID IN (23629,334);

And in this case, pretty much the same plan is used, except the seek criteria now has an OR in it:

|--Clustered Index Seek
     (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),
      SEEK:([WideWorldImporters].[Application].[Cities].[CityID]=(334)
            OR
            [WideWorldImporters].[Application].[Cities].[CityID]=(23629))
      ORDERED FORWARD)

Note that it did not create a parameterized plan this time, but a fixed one with literals for 334 and 23629. Also note that this plan will be executed as two separate seek operations. If you turn on SET STATISTICS IO before running the query:

SET STATISTICS IO ON;
GO
SELECT *
FROM   [Application].[Cities]
WHERE  CityID IN (23629,334);
GO
SET STATISTICS IO OFF;

you will see that it did two “scans,” which in STATISTICS IO output generally means any operation that probes the table, so a seek or a scan would show up the same:

Table 'Cities'. Scan count 2, logical reads 4, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

But whether any given query uses a seek or scan, or even two seeks, can be a pretty complex question. Why it is so complex will become clearer over the rest of the chapter, and it will become instantly clearer how useful a clustered index seek is in the next section on nonclustered indexes.
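Range predicates on the clustering key are another case worth trying for yourself. The following sketch uses an arbitrary range of CityID values that I picked for illustration. I would expect the plan to show a single Clustered Index Seek that finds the start of the range and then reads forward, but check the plan and the STATISTICS IO output on your own copy of the database rather than taking that as given.

```sql
SET STATISTICS IO ON;
GO
-- The boundary values are arbitrary; any contiguous range of keys will do.
SELECT CityID, CityName
FROM   Application.Cities
WHERE  CityID BETWEEN 23000 AND 23100;
GO
SET STATISTICS IO OFF;
```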

Nonclustered Indexes

Nonclustered index structures are fully independent of the underlying table. Where a clustered index is like a dictionary with the index physically linked to the table (since the leaf pages of the index are a part of the table), nonclustered indexes are more like indexes in this book. For on-disk tables, nonclustered indexes are completely separate from the data. Instead of the leaf pages having all of the data, they have just the index keys (and included values), along with pointers to the data pages, much like the index of a book contains page numbers.

Each leaf page in a nonclustered index contains some form of link to the rows on the data page. The link from the index to a data row is known as a row locator. Exactly how the row locator of a nonclustered index is structured is based on whether or not the underlying table has a clustered index.

In this section, I will start with the structure of nonclustered indexes, and then look at how these indexes are used.

Structure

The base B-tree of the nonclustered index is very much the same as the B-tree of the clustered index. The difference will be how you get to the actual data. First we will look at an abstract representation of the nonclustered index and then show the differences between the implementation of a nonclustered index when you do and do not also have a clustered index. At an abstract level, all nonclustered indexes follow the basic form shown in Figure 10-10.

Figure 10-10. Sample nonclustered index

The major difference between the two possibilities comes down to the row locator being different based on whether the underlying table has a clustered index. There are two different types of pointer that will be used:

- Tables with a clustered index: Clustering key

- Tables without a clustered index: Pointer to physical location of the data, commonly referred to as a row identifier (RID)

In the next two sections, I’ll explain these in more detail.


Tip You can place nonclustered indexes on a different filegroup than the data pages to maximize the use of your disk subsystem in parallel. Note that the filegroup you place the indexes on ought to be on a different controller channel than the table; otherwise, it’s likely that there will be minimal or no gain.

Nonclustered Index on a Clustered Table

When a clustered index exists on the table, the row locator for the leaf node of any nonclustered index is the clustering key from the clustered index. In Figure 10-11, the structure on the right side represents the clustered index, and the structure on the left represents the nonclustered index. To find a value, you start at the root node of the nonclustered index and traverse to the leaf pages. The result of the index traversal is one or more clustering keys, which you then use to traverse the clustered index to reach the data.

Figure 10-11. Nonclustered index on a clustered table

The overhead of the operation I’ve just described is minimal as long as you keep your clustering key optimal and the index maintained. While having to traverse two indexes is more work than just having a pointer to the physical location, you have to think of the overall picture. Overall, it’s better than having direct pointers to the table, because only minimal reorganization is required for any modification of the values in the table. Consider if you had to maintain a book index manually. If you used the book page as the way to get to an index value and subsequently had to add a page to the book in the middle, you would have to update all of the page numbers. But if all of the topics were ordered alphabetically, and you just pointed to the topic name, adding a topic would be easy.

The same is true for SQL Server, and the structures can be changed thousands of times a second or more. Since there is very little hardware-based information lingering in the structure when built this way, data movement is easy for the query processor, and maintaining indexes is an easy operation. Early versions of SQL Server always used physical location pointers for indexes, and this led to corruption in our indexes and tables (without a clustering key, it still uses pointers, but in a manner that reduces corruption at the cost of some performance). Let’s face it, the people with better understanding of such things also tell us that when the size of the clustering key is adequately small, this method is remarkably faster overall than having pointers directly to the table.

The primary benefit of the key structure becomes more obvious when we talk about modification operations. Because the clustering key is the same regardless of physical location, only the lowest levels of the clustered index need to know where the physical data is. Add to this that the data is organized sequentially, and the overhead of modifying indexes is significantly lowered, making all of the data modification operations far faster. Of course, this benefit is only true if the clustering key rarely, or never, changes. Therefore, the general suggestion is to make the clustering key a small, nonchanging value, such as an identity column (but the advice section is still a few pages away).
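As a small sketch of that suggestion, here is a version of the animal table from the figures. The table definition, column sizes, and index name are my own assumptions for illustration. The identity column is the clustering key, so the 4-byte AnimalId value is what the nonclustered index on Name carries as its row locator.

```sql
-- Hypothetical table modeled loosely on the animal example in the figures.
CREATE TABLE dbo.Animal
(
    AnimalId int NOT NULL IDENTITY(1,1)
        CONSTRAINT PK_Animal PRIMARY KEY CLUSTERED, -- small, never-changing key
    Name     varchar(30) NOT NULL,
    Species  varchar(30) NOT NULL
);

-- Leaf rows of this index hold Name plus the AnimalId clustering key,
-- not a physical page/row address.
CREATE NONCLUSTERED INDEX IX_Animal_Name ON dbo.Animal (Name);
```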

Nonclustered Indexes on a Heap

A heap data structure in computer science is a generally unordered binary tree structure. In SQL Server, when a table does not have a clustered index, the table is physically referred to as a heap. A more practical definition of a heap is “a group of things placed or thrown one on top of the other.” This is a great way to explain what happens in a table when you have no clustered index: SQL Server simply puts every new row on the end of the last page for the table. Once that page is filled up, it puts data on the next page or a new page as needed.

When building a nonclustered index on a heap, the row locator is a pointer to the physical page and row that contains the row. As an example, take the example structure from the previous section with a nonclustered index on the name column of an animal table, represented in Figure 10-12.

Figure 10-12. Nonclustered index on a heap

If you want to find the row where name = ’Dog’, you first find the path through the index from the top-level page to the leaf page. Once you get to the leaf page, you get a pointer to the page that has a row with the value, in this case Page 102, Row 1. This pointer consists of the page location and the record number on the page to find the row values (the pages are numbered from 0, and the offset is numbered from 1). The most important fact about this pointer is that it points directly to the row on the page that has the values you’re looking for. The pointer for a table with a clustered index (a clustered table) is different, and this distinction is important to understand because it affects how well the different types of indexes perform.

To avoid the types of physical corruption issues that, as I mentioned in the previous section, can occur when you are constantly managing pointers and physical locations, heaps use a very simple method of keeping the row pointers from getting corrupted: the pointers never change until you rebuild the table. Instead of reordering pages, or changing pointers when a row no longer fits on its page, SQL Server moves the row to a different page and leaves behind a forwarding pointer to the new page where the data is now. So if the row where name = 'Dog' had moved (for example, perhaps due to a large varchar(3000) column being updated from a data length of 10 to 3,000), you would end up with the situation illustrated in Figure 10-13, in which following the forwarding pointer adds to the number of steps required to pick up the data.

Figure 10-13. Forwarding pointer

All existing indexes that have the old pointer simply go to the old page and follow the forwarding pointer on that page to the new location of the data. If you are using heaps, which should be rare, it is important to be careful with your structures to make sure that data is rarely moved around within a heap. For example, you have to be careful if you’re often updating data to a larger value in a variable-length column that’s used as an index key, because it’s possible that a row may be moved to a different page. This adds another step to finding the data and, if the data is moved to a page on a different extent, adds another read to the database. Forwarding pointers are also followed immediately when scanning the table, which can eventually lead to horrible performance if they are not managed.

Space is typically not reused in the heap without rebuilding the table (either by selecting into another table or by using the ALTER TABLE command with the REBUILD option). In the section “Indexing Dynamic Management View Queries” later in this chapter, I will provide a query that will give you information on the structure of your index, including the count of forwarding pointers in your table.
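In the meantime, the following is a minimal sketch of how you might check a database for forwarding pointers and then rebuild an affected heap. It is not the query from the later section, and dbo.HeapDemo is a hypothetical table name. Note that forwarded_record_count is only populated in the SAMPLED or DETAILED scan modes, and that DETAILED reads every page, so try this on a test system first.

```sql
-- Forwarded record counts for every heap in the current database.
SELECT OBJECT_SCHEMA_NAME(ips.object_id) AS schema_name,
       OBJECT_NAME(ips.object_id) AS table_name,
       ips.page_count,
       ips.forwarded_record_count
FROM   sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, 'DETAILED') AS ips
WHERE  ips.index_type_desc = 'HEAP'
ORDER BY ips.forwarded_record_count DESC;

-- Rebuilding the heap removes the forwarding pointers (hypothetical table name).
ALTER TABLE dbo.HeapDemo REBUILD;
```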

Using the Nonclustered Index

After you have made the ever-important choice of what to use for the clustered index, all other indexes will be nonclustered. In this section, I will cover some of the choices of how to apply nonclustered indexes in the following areas:

- General considerations

- Composite index considerations

- Nonclustered indexes with clustered tables

- Nonclustered indexes on heaps

In reality, many of the topics in this section (composite indexes, statistics, uniqueness, and so on) pertain to clustered indexes as well. I am covering them here because you will typically make these decisions for nonclustered indexes, and choose the clustered index based on patterns of usage, most often as the PRIMARY KEY, though not always. I will discuss this in more detail in the last major section of the chapter.

General Considerations

We generally start to get the feeling that indexes are needed because queries are (or seem) slow. Naturally though, lack of indexes is clearly not the only reason that queries are slow. Here are some of the obvious reasons for slow queries:

- Extra heavy user load

- Hardware load

- Network load

- Poorly designed database

- Concurrency configuration issues (as covered in Chapter 11)

After looking for the existence of the preceding reasons, we can pull out Management Studio and start to look at the plans of the slow queries. Most often, slow queries are apparent because either |--Clustered Index Scan or |--Table Scan shows up in the query plan, and those operations take a large percentage of time to execute. Simple, right? Essentially, it is a true enough statement that index and table scans are time consuming, but unfortunately, that really doesn’t give a full picture of the process. It’s hard to make specific indexing changes before knowing about usage, because the usage pattern will greatly affect these decisions, for example:

- Is a query executed once a day, once an hour, or once a minute?

- Is a background process inserting into a table rapidly? Or perhaps inserts are taking place during off-hours?

Using Extended Events and the dynamic management views, you can watch the usage patterns of the queries that access your database, looking for slowly executing queries, poor plans, and so on. After you do this and you start to understand the usage patterns for your database, you need to use that information to consider where to apply indexes—the final goal being that you use the information you can gather about usage and tailor an index plan to solve the overall picture.

You can’t just throw around indexes to fix individual queries. Nothing comes without a price, and indexes definitely have a cost. You need to consider how indexes help and hurt the different types of operations in different ways:

- SELECT: Indexes can only have a beneficial effect on SELECT queries.

- INSERT: An index can only hurt the process of inserting new data into the table (if usually only slightly). As data is created in the table, there’s a chance that the indexes will have to be modified and reorganized to accommodate the new values.

- UPDATE: An update physically requires two or three steps: find the row(s) and change the row(s), or find the row(s), delete it (them), and reinsert it (them). During the phase of finding the row, the index is beneficial, just as for a SELECT. Whether or not it hurts during the second phase depends on several factors, for example:

    - Did the index key value change such that it needs to be moved around to different leaf nodes?

    - Will the new value fit on the existing page, or will it require a page split?

- DELETE: The delete requires two steps: to find the row and to remove it. Indexes are beneficial to find the row, but on deletion, you might have to do some reshuffling to accommodate the deleted values from the indexes.

You should also realize that for INSERT, UPDATE, or DELETE operations, if triggers on the table exist (or constraints exist that execute functions that reference tables), indexes will affect those operations in the same ways as in the list. For this reason, I’m going to shy away from any generic advice about what types of columns to index. In practice, there are just too many variables to consider.


Tip Too many people start adding nonclustered indexes without considering the costs. Just be wary that every index you add has to be maintained. Sometimes, a query taking 1 second to execute is OK when getting it down to .1 second might slow down other operations considerably. The real question lies in how often each operation occurs and how much cost you are willing to suffer. The hardest part is keeping your tuning hat off until you can really get a decent profile of all operations that are taking place.

For a good idea of how your current indexes and/or tables are currently being used, you can query the dynamic management view sys.dm_db_index_usage_stats:

SELECT CONCAT(OBJECT_SCHEMA_NAME(i.object_id),'.',OBJECT_NAME(i.object_id)) AS object_name
      , CASE WHEN i.is_unique = 1 THEN 'UNIQUE ' ELSE '' END +
                i.TYPE_DESC AS index_type
      , i.name as index_name
      , user_seeks, user_scans, user_lookups,user_updates
FROM  sys.indexes AS i
         LEFT OUTER JOIN sys.dm_db_index_usage_stats AS s
              ON i.object_id = s.object_id
                AND i.index_id = s.index_id
                AND database_id = DB_ID()
WHERE OBJECTPROPERTY(i.object_id , 'IsUserTable') = 1
ORDER BY object_name, index_name;

This query will return the name of each object, an index type, an index name, plus the number of

- User seeks: The number of times the index was used in a seek operation

- User scans: The number of times the index was scanned in answering a query

- User lookups: For clustered indexes, the number of times the index was used to resolve the row locator of a nonclustered index search

- User updates: The number of times the index was changed by a user query
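One way to put these counters to work is to look for nonclustered indexes that are written to more often than they are read. The following is a sketch along the lines of the previous query. The comparison of updates to reads is a rule of thumb of my own choosing, not a threshold from Books Online, and the counters only cover activity since the instance was last started, so a rarely run report may not be represented yet.

```sql
-- Nonclustered indexes with more modifications than reads since the last restart.
SELECT CONCAT(OBJECT_SCHEMA_NAME(i.object_id),'.',OBJECT_NAME(i.object_id)) AS object_name,
       i.name AS index_name,
       s.user_updates,
       s.user_seeks + s.user_scans + s.user_lookups AS user_reads
FROM   sys.indexes AS i
         JOIN sys.dm_db_index_usage_stats AS s
              ON i.object_id = s.object_id
                AND i.index_id = s.index_id
                AND s.database_id = DB_ID()
WHERE  OBJECTPROPERTY(i.object_id, 'IsUserTable') = 1
  AND  i.type_desc = 'NONCLUSTERED'
  AND  s.user_updates > s.user_seeks + s.user_scans + s.user_lookups
ORDER BY s.user_updates DESC;
```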

This information is very important when trying to get a feel for which indexes might need to be tuned and especially which ones are not doing their jobs because they are mostly getting updated. A particularly interesting thing to look at in an OLTP system is the user_scans on a clustered index. For example, thinking back to our queries of the Application.Cities table, the only indexes are the clustered index on the PRIMARY KEY column CityID and the nonclustered index on StateProvinceID; nothing indexes CityName. So if we query

SELECT *
FROM   Application.Cities
WHERE  CityName = 'Nashville';

the plan will be a scan of the clustered index:

|--Clustered Index Scan
     (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),
      WHERE:([WideWorldImporters].[Application].[Cities].[CityName]=(N'Nashville')))

And you will see the user_scans value increase every time you run this query. This is something we can remedy by adding a nonclustered index to this column, locating it in the USERDATA filegroup, since that is where other objects were created in the DDL I included earlier:

CREATE INDEX CityName ON Application.Cities(CityName) ON USERDATA;

Of course, there are plenty more settings when creating an index, some we will cover, some we will not. If you need to manage indexes, it certainly is a great idea to read the Books Online topic about CREATE INDEX as a start for more information.


Tip You can create indexes inline with the CREATE TABLE statement starting with SQL Server 2014.
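As a brief sketch of the inline syntax the tip refers to (the table and index names here are hypothetical), an index can be declared either as part of a column definition or as a separate element in the column list:

```sql
-- Hypothetical table showing both inline index forms (SQL Server 2014 and later).
CREATE TABLE dbo.InlineIndexDemo
(
    InlineIndexDemoId int NOT NULL
        CONSTRAINT PK_InlineIndexDemo PRIMARY KEY CLUSTERED,
    Name      nvarchar(50) NOT NULL
        INDEX IX_InlineIndexDemo_Name NONCLUSTERED,        -- column-level index
    StateCode char(2) NOT NULL,
    INDEX IX_InlineIndexDemo_StateCode_Name
        NONCLUSTERED (StateCode, Name)                     -- table-level index
);
```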

Now check the plan of the query for CityName = 'Nashville' again, and you will see:

|--Nested Loops(Inner Join,
        OUTER REFERENCES:([WideWorldImporters].[Application].[Cities].[CityID]))
     |--Index Seek(OBJECT:([WideWorldImporters].[Application].[Cities].[CityName]),
            SEEK:([WideWorldImporters].[Application].[Cities].[CityName]=N'Nashville')
            ORDERED FORWARD)
     |--Clustered Index Seek
            (OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),
             SEEK:([WideWorldImporters].[Application].[Cities].[CityID]=
                      [WideWorldImporters].[Application].[Cities].[CityID])
             LOOKUP ORDERED FORWARD)

This not only is more to format, but it looks like it should be a lot more to execute. However, this plan illustrates nicely the structure of a nonclustered index on a clustered table. The second |-- points at the improvement. To find the rows with a CityName of Nashville, the query processor did a seek in the new index. Once it had the clustering keys (the CityID values) for the Nashville rows, it used them to seek into the clustered index, joining the two structures together like two different tables, using a Nested Loops join type. For more information about nested loops and other join operators, check Books Online for “Showplan Logical and Physical Operators Reference.”
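One consequence of the clustering key being the row locator is worth trying for yourself. In the following sketch, the query asks only for columns that already live in the leaf rows of the CityName index: the index key itself and the CityID clustering key. I would expect the plan to show just the Index Seek, with no lookup into the clustered index, though you should confirm that on your own system.

```sql
-- Both requested columns are present in the nonclustered index leaf rows,
-- so the clustered index should not need to be touched.
SELECT CityID, CityName
FROM   Application.Cities
WHERE  CityName = N'Nashville';
```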

Determining Index Usefulness

It might seem at this point that all you need to do is look at the plans of queries, look for the search arguments, and put an index on the columns, then things will improve. There’s a bit of truth to this in a lot of cases, but indexes have to be useful to be used by a query. What if the index of a 418-page book has two entries:

- General Topics 1

- Determining Index Usefulness 417

This means that pages 1–416 cover general topics and pages 417-? are about determining index usefulness. This would be useless to you, unless you needed to know about index usefulness. One thing is for sure: you could determine that the index is generally useless pretty quickly. Another thing we all do with the index of a book to see if the index is useful is to take a value and look it up in the index. If what you’re looking for is in there (or something close), you go to the page and check it out.

SQL Server determines whether or not to use your index in much the same way. It has two specific measurements that it uses to decide if an index is useful: the density of values (sometimes known as the selectivity) and a histogram of a sample of values in the table to check against.

You can see these in detail for indexes by using DBCC SHOW_STATISTICS. Our table is very small, so it doesn’t need stats to decide which to use. Instead, we’ll look at the index we just created:

DBCC SHOW_STATISTICS('Application.Cities', 'CityName') WITH DENSITY_VECTOR;
DBCC SHOW_STATISTICS('Application.Cities', 'CityName') WITH HISTOGRAM;

This returns the following sets (truncated for space). The first tells us the size and density of the keys. The second shows the histogram of where the table was sampled to find representative values.

All density   Average Length Columns
------------- -------------- --------------------------
4.297009E-05  17.17427       CityName
2.635741E-05  21.17427       CityName, CityID

RANGE_HI_KEY           RANGE_ROWS    EQ_ROWS       DISTINCT_RANGE_ROWS  AVG_RANGE_ROWS
---------------------- ------------- ------------- -------------------- --------------
Aaronsburg             0             1             0                    1
Addison                123           11            70                   1.757143
Albany                 157           17            108                  1.453704
Alexandria             90            13            51                   1.764706
Alton                  223           13            122                  1.827869
Andover                173           13            103                  1.679612
.........              ...           ..            ...                  ........
White Oak              183           10            97                   1.886598
Willard                209           10            134                  1.559701
Winchester             188           18            91                   2.065934
Wolverton              232           1             138                  1.681159
Woodstock              137           12            69                   1.985507
Wynnewood              127           2             75                   1.693333
Zwolle                 240           1             173                  1.387283

I won’t cover the DBCC SHOW_STATISTICS command in great detail, but there are several important things to understand. First, consider the density of each column set. The CityName column is the only column that is actually declared in the index, but note that the output also includes the density of the index column combined with the clustering key.

Each density is calculated approximately as 1 / (number of distinct values), as shown here for the same column sets whose density I just checked:

--ISNULL is used so that NULL is counted as a value if the column allows NULLs

--the replacement value ('NotACity') must not be a real value in the column

SELECT 1.0/ COUNT(DISTINCT ISNULL(CityName,'NotACity')) AS density,

COUNT(DISTINCT ISNULL(CityName,'NotACity')) AS distinctRowCount,

1.0/ COUNT(*) AS uniqueDensity,

COUNT(*) AS allRowCount

FROM Application.Cities;

This returns the following:

density distinctRowCount uniqueDensity allRowCount

-------------------- ---------------- ----------------- -----------

0.000042970092 23272 0.000026357406 37940

You can see that the densities match. (The query’s density is in a numeric type, while the DBCC is using a float, which is why they are formatted differently, but they are the same value!) The smaller the number, the better the index, and the more likely it will be easily chosen for use. There’s no magic number, per se, but this value fits into the calculations of which way is best to execute the query. The actual numbers returned from this query might vary slightly from the DBCC value, as a sampled number might be used for the distinct count.
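As a rough sketch of how to read the density value: multiplying it by the number of rows in the table gives the average number of rows per distinct value, which is the kind of general estimate available when a specific value cannot be checked against the histogram. The literals here are simply copied from the outputs shown earlier, and the column aliases are my own:

```sql
--density from DBCC SHOW_STATISTICS multiplied by the table's row count
--approximates the average number of rows per distinct CityName
SELECT 4.297009E-05 * 37940 AS avgRowsFromDensity,
       --the same figure computed directly from the data
       COUNT(*) * 1.0
          / COUNT(DISTINCT ISNULL(CityName,'NotACity')) AS avgRowsFromData
FROM Application.Cities;
```

With the values shown above, both columns should come out at roughly 1.6 rows per city name (37940 / 23272).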

The second thing to understand in the DBCC SHOW_STATISTICS output is the histogram. Even if the density of the index isn’t low, SQL Server can check a given value (or set of values) in the histogram to see how many rows will likely be returned. SQL Server keeps statistics about columns in a table as well as in indexes, so it can make informed decisions as to how to employ indexes or table columns. For example, consider the following rows from the histogram (I have faked some of these results for demonstration purposes):

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

------------ ------------- ------------- -------------------- --------------

Aaronsburg 111 58 2 55.5

Addison 117 67 2 58.5

... ... ... ... ...

In the second row, the row values tell us the following:

- RANGE_HI_KEY: The sampled CityName values are Aaronsburg and Addison.

- RANGE_ROWS: There are 117 rows where the value is between Aaronsburg and Addison (noninclusive of the endpoints). The individual values in that range are not known. However, if a user uses Aaronsville as a search argument, the optimizer can now guess that a maximum of 117 rows would be returned (this is only an estimate, because statistics are not kept up to date as part of each transaction). This is one of the ways that the query plan gets the estimated number of rows for each step in a query and is one of the ways to determine if an index will be useful for an individual query.

- EQ_ROWS: There are exactly 67 rows where CityName = Addison.

- DISTINCT_RANGE_ROWS: For the row with Addison, it is estimated that there are two distinct values between Aaronsburg and Addison.

- AVG_RANGE_ROWS: This is the average number of duplicate values in the range, excluding the upper and lower bounds. This value is what the optimizer can expect to be the average number of rows. Note that this is calculated by RANGE_ROWS / DISTINCT_RANGE_ROWS.
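The histogram columns described in this list can also be queried as a rowset, which makes it easy to check the AVG_RANGE_ROWS arithmetic yourself. This is a minimal sketch that assumes a build of SQL Server where the sys.dm_db_stats_histogram function is available (SQL Server 2016 SP1 CU2 or later) and that the statistics object shares the index name CityName; the computedAvgRangeRows alias is my own:

```sql
SELECT h.step_number, h.range_high_key, h.range_rows, h.equal_rows,
       h.distinct_range_rows, h.average_range_rows,
       --RANGE_ROWS / DISTINCT_RANGE_ROWS, guarding against divide by zero
       CASE WHEN h.distinct_range_rows > 0
            THEN h.range_rows / h.distinct_range_rows
       END AS computedAvgRangeRows
FROM sys.stats AS s
       CROSS APPLY sys.dm_db_stats_histogram(s.object_id, s.stats_id) AS h
WHERE s.object_id = OBJECT_ID('Application.Cities')
  AND s.name = 'CityName'
ORDER BY h.step_number;
```

For steps where DISTINCT_RANGE_ROWS is 0, the computed column is NULL, while the histogram itself reports an AVG_RANGE_ROWS of 1, as you can see in the first row of the earlier output.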

One thing that having this histogram can do is allow a seemingly useless index to become valuable in some cases. For example, say you want to index a column with only two values. If the values are evenly distributed, the index would be useless. However, if there are only a few of a certain value, it could be useful (using tempdb):

USE tempdb;

GO

CREATE SCHEMA demo;

GO

CREATE TABLE demo.testIndex

(

testIndex int IDENTITY(1,1) CONSTRAINT PKtestIndex PRIMARY KEY,

bitValue bit,

filler char(2000) NOT NULL DEFAULT (REPLICATE('A',2000))

);

CREATE INDEX bitValue ON demo.testIndex(bitValue);

GO

SET NOCOUNT ON; --or you will get back 50100 1 row affected messages

INSERT INTO demo.testIndex(bitValue)

VALUES (0);

GO 50000 --runs current batch 50000 times in Management Studio.

INSERT INTO demo.testIndex(bitValue)

VALUES (1);

GO 100 --puts 100 rows into table with value 1
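Before looking at any plans, a quick check confirms that the load produced the skewed distribution we intended. This is only a sanity check, not something the optimizer uses:

```sql
--expect 50000 rows with bitValue = 0 and 100 rows with bitValue = 1
SELECT bitValue, COUNT(*) AS rowCountForValue
FROM demo.testIndex
GROUP BY bitValue
ORDER BY bitValue;
```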

You can guess that few rows will be returned if the only value desired is 1. Check the plan for bitValue = 0 (again using SET SHOWPLAN_TEXT ON, or using the GUI):

SELECT *

FROM demo.testIndex

WHERE bitValue = 0;

This shows a clustered index scan:

|--Clustered Index Scan(OBJECT:([tempdb].[demo].[testIndex].[PKtestIndex]),

WHERE:([tempdb].[demo].[testIndex].[bitValue]=(0)))

However, change the 0 to a 1, and the optimizer chooses an index seek. This means that it performed a seek into the index to the first row that had a 1 as a value and worked its way through the values:

|--Nested Loops(Inner Join, OUTER REFERENCES:

([tempdb].[demo].[testIndex].[testIndex], [Expr1003]) WITH UNORDERED PREFETCH)

|--Index Seek(OBJECT:([tempdb].[demo].[testIndex].[bitValue]),

SEEK:([tempdb].[demo].[testIndex].[bitValue]=(1)) ORDERED FORWARD)

|--Clustered Index Seek(OBJECT:([tempdb].[demo].[testIndex].[PKtestIndex]),

SEEK:([tempdb].[demo].[testIndex].[testIndex]=

[tempdb].[demo].[testIndex].[testIndex]) LOOKUP ORDERED FORWARD)

As we saw earlier, this better plan looks more complicated, but it hinges on the Index Seek operator now only needing to touch about 100 rows instead of 50,100. The Clustered Index Seek is still required for each of those rows because we chose to do SELECT * (more on how to avoid the clustered seek in the next section).

You can see why in the histogram:

UPDATE STATISTICS demo.testIndex;

DBCC SHOW_STATISTICS('demo.testIndex', 'bitValue') WITH HISTOGRAM;

This returns the following results in my test. Your actual values will likely vary.

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

------------ ------------- ------------- -------------------- -------------

0 0 49976.95 0 1

1 0 123.0454 0 1

The statistics gathered estimated that about 123 rows match for bitValue = 1. That’s because statistics gathering isn’t an exact science—it uses a sampling mechanism rather than checking every value (your values might vary as well). Check out the TABLESAMPLE clause, and you can use the same mechanisms to gather random samples of your data.
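As a small sketch of the TABLESAMPLE clause against the demo table (the 10 percent figure is an arbitrary choice of mine), note that the sample is taken by page rather than by row, so both the number of rows returned and the split between the two values will change from run to run:

```sql
--samples roughly 10 percent of the table's pages, not exactly 10 percent of rows
SELECT bitValue, COUNT(*) AS sampledRowCount
FROM demo.testIndex TABLESAMPLE (10 PERCENT)
GROUP BY bitValue
ORDER BY bitValue;
```

Run it a few times, and you will probably see some executions where no rows with a bitValue of 1 are returned at all, which is the same kind of variation that sampled statistics are subject to.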

The optimizer knew that it would be advantageous to use the index when looking for bitValue = 1, because approximately 123 rows are returned when the index key with a value of 1 is desired, but 49,977 are returned for 0. (Your try will likely return a different value. For the rows where the bitValue was 1, I got 80 in the previous edition and 137 in a different set of tests. They are all approximately the 100 that you should expect, since we specifically created 100 rows when we loaded the table.)

This simple demonstration of the histogram is one thing, but in practice, building a filtered index to optimize this query is generally the better approach. You might build an index such as this:

CREATE INDEX bitValueOneOnly

ON demo.testIndex(bitValue) WHERE bitValue = 1;

The histogram for this index is a far clearer match for the query:

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

------------ ------------- ------------- -------------------- --------------

1 0 100 0 1

Whether or not the query actually uses this index will likely depend on how badly another index would perform, which can also be dependent on a myriad of other SQL Server internals. A histogram is, however, another tool that you can use when optimizing your SQL to see what the optimizer is using to make its choices.

Tip Whether or not the histogram includes any data where the bitValue = 1 is largely a matter of chance. I have run this example several times, and one time, no rows were shown unless I used the FULLSCAN option on the UPDATE STATISTICS command (which isn’t feasible on very large tables unless you have quite a bit of time).
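On a table as small as this demo table, the FULLSCAN option is cheap to try. As a sketch, the following reads every row when rebuilding the statistics, so the histogram should then report the exact counts that were loaded rather than sampled estimates:

```sql
--read every row instead of a sample when rebuilding the statistics
UPDATE STATISTICS demo.testIndex WITH FULLSCAN;

DBCC SHOW_STATISTICS('demo.testIndex', 'bitValue') WITH HISTOGRAM;
```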

As we discussed in the “Clustered Indexes” section, queries can be for equality or inequality. For equality searches, the query optimizer will use the single point and estimate the number of rows. For inequality, it will use the starting point and ending point of the inequality and determine the number of rows that will be returned from the query.
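To see both kinds of estimate, you could compare the estimated row counts for an equality and an inequality predicate on CityName. This is a sketch only: the search values are simply taken from the histogram steps shown earlier, and SET SHOWPLAN_ALL returns the plan (including an EstimateRows column) without executing the queries:

```sql
SET SHOWPLAN_ALL ON;
GO
--equality: the estimate comes from a single point in the histogram
SELECT *
FROM Application.Cities
WHERE CityName = N'Albany';

--inequality: the estimate is built from the steps between the two endpoints
SELECT *
FROM Application.Cities
WHERE CityName >= N'Addison'
  AND CityName <= N'Albany';
GO
SET SHOWPLAN_ALL OFF;
GO
```

If your statistics look like the earlier output, the equality estimate should be close to the EQ_ROWS value for Albany, and the range estimate should be larger, since it adds in the rows that fall between the endpoints.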

Indexing and Multiple Columns

So far, the indexes I’ve talked about were on single columns, but performance-enhancing indexes are not always needed on just a single column. When multiple columns are included in the WHERE clause of a query on the same table, there are several possible ways you can enhance your queries:

- Having one composite index on all columns

- Creating covering indexes by including all columns that a query touches

- Having multiple indexes on separate columns

- Adjusting key sort order to optimize sort operations

When you include more than one column in an index, it’s referred to as a composite index. As the number of columns grows, the effectiveness of the index is reduced for the general case. The problem is that the index is sorted by the first column values first, then the second column. So the second column in the index is generally only useful if you need the first column as well (the next section on covering indexes demonstrates a way that this may not be the case, however). Even so, you will very often need composite indexes to optimize common queries when predicates on all of the columns are involved.

The order of the columns in the index is important with respect to whether a composite index can and will be used for a query. There are a couple of important considerations:

- Which column is most selective? If one column includes unique or mostly unique values, it is likely a good candidate for the first column. The key is that the first column is the one by which the index is sorted. Searching on the second column only is less valuable (though queries using only the second column can scan the index leaf pages for values).

- Which column is used most often without the other columns? One composite index can be useful to several different queries, even if only the first column of the index is all that is being used in those queries.
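To get a rough feel for the first consideration, you could compare the number of distinct values in each candidate column to the total number of rows. This sketch uses the two columns from the example that follows; the aliases are my own, and note that COUNT(DISTINCT ...) ignores NULL values:

```sql
--the closer a distinct count is to allRowCount, the more selective the column
SELECT COUNT(*) AS allRowCount,
       COUNT(DISTINCT CityName) AS distinctCityNameCount,
       COUNT(DISTINCT LatestRecordedPopulation) AS distinctPopulationCount
FROM Application.Cities;
```

This only answers the selectivity question. Which column is used most often without the other is something you can only learn from the queries your applications actually run.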

For example, consider this query (StateProvince is a more obvious choice of column, but it has an index that we will be using in a later section):

SELECT *

FROM Application.Cities

WHERE CityName = 'Nashville'

AND LatestRecordedPopulation = 601222;

The index on CityName we had is useful, but an index on LatestRecordedPopulation might also be good. It may also turn out that neither column alone provides enough of an improvement in performance. Composite indexes are great tools, but just how useful such an index will be depends entirely on how many rows are returned by CityName = 'Nashville' and LatestRecordedPopulation = 601222.

The preceding query, with the existing indexes (the clustered primary key on CityID, the index on StateProvinceID that was part of the original structure, and the index on CityName), is optimized with the same plan as before, plus the extra population predicate:

|--Nested Loops(Inner Join,

OUTER REFERENCES:([WideWorldImporters].[Application].[Cities].[CityID]))

|--Index Seek(OBJECT:([WideWorldImporters].[Application].[Cities].[CityName]),

SEEK:([WideWorldImporters].[Application].[Cities].[CityName]=N'Nashville')

ORDERED FORWARD)

|--Clustered Index Seek

(OBJECT:([WideWorldImporters].[Application].[Cities].[PK_Application_Cities]),

SEEK:([WideWorldImporters].[Application].[Cities].[CityID]=

[WideWorldImporters].[Application].[Cities].[CityID]), WHERE:([WideWorldImporters].[Application].[Cities].[LatestRecordedPopulation]=(601222))

LOOKUP ORDERED FORWARD)

Adding an index on CityName and LatestRecordedPopulation seems like a good way to further optimize the query, but first, you should look at the data for these columns (consider future usage of the index too, but existing data is a good place to start):

SELECT CityName, LatestRecordedPopulation, COUNT(*) AS [count]

FROM Application.Cities

GROUP BY CityName, LatestRecordedPopulation

ORDER BY CityName, LatestRecordedPopulation;

This returns partial results:

CityName LatestRecordedPopulation count

---------------- ------------------------ -----------

Aaronsburg 613 1

Abanda 192 1

Abbeville 419 1

Abbeville 2688 1

Abbeville 2908 1

Abbeville 5237 1

Abbeville 12257 1

Abbotsford 2310 1

Abbott NULL 2

Abbott 356 3

Abbottsburg NULL 1