There must be clear benefits to the creation and adoption of AI technologies, otherwise why would we bother to continue along this path? However, along with benefits will come certain challenges and risks, which need to be identified, recognised and addressed.

3.1 SUSTAINABILITY – HOW OUR VALUES WILL CHANGE HUMANS, SOCIETY AND ORGANISATIONS

We have previously talked about how AI will affect us as individuals in our workplace by improving efficiencies and augmenting what we do and are capable of. It will also affect us in our everyday lives, removing inequalities and allowing us to become more human with a human-centric ethical purpose, undertaking tasks that involve creativity and empathy, among others.

How will AI change our society? We need to consider what implications AI will have on wider society now and in the long term, and what freedoms and human values we are prepared to give up in return for the benefits we will enjoy. Increased use of AI and wider adoption will come with some risks and challenges, such as the potential of its weaponisation, but it may also solve climate change and world poverty. How can we balance the risks versus the benefits?

As a society, we need to start preparing now for how we manage AI today and in the future. For example, would you be prepared to give up your job to an AI entity but in return receive a basic universal income? Maybe, but would you be prepared to give up your child’s future career dreams and aspirations for short-term gains here and now? What happens if we charge off with the aim of utopia and get it wrong?

3.1.1 Intergenerational equity

Intergenerational equity is the idea of fairness or justice in relationships between generations. It is often treated as a value concept that focuses on the rights of future generations: each future generation has the right to inherit the same level of diversity in natural and cultural resources enjoyed by previous generations, along with equitable access to the use and benefits of these resources. A commonly quoted example is the current generation running up debt that future generations will have to pay off.

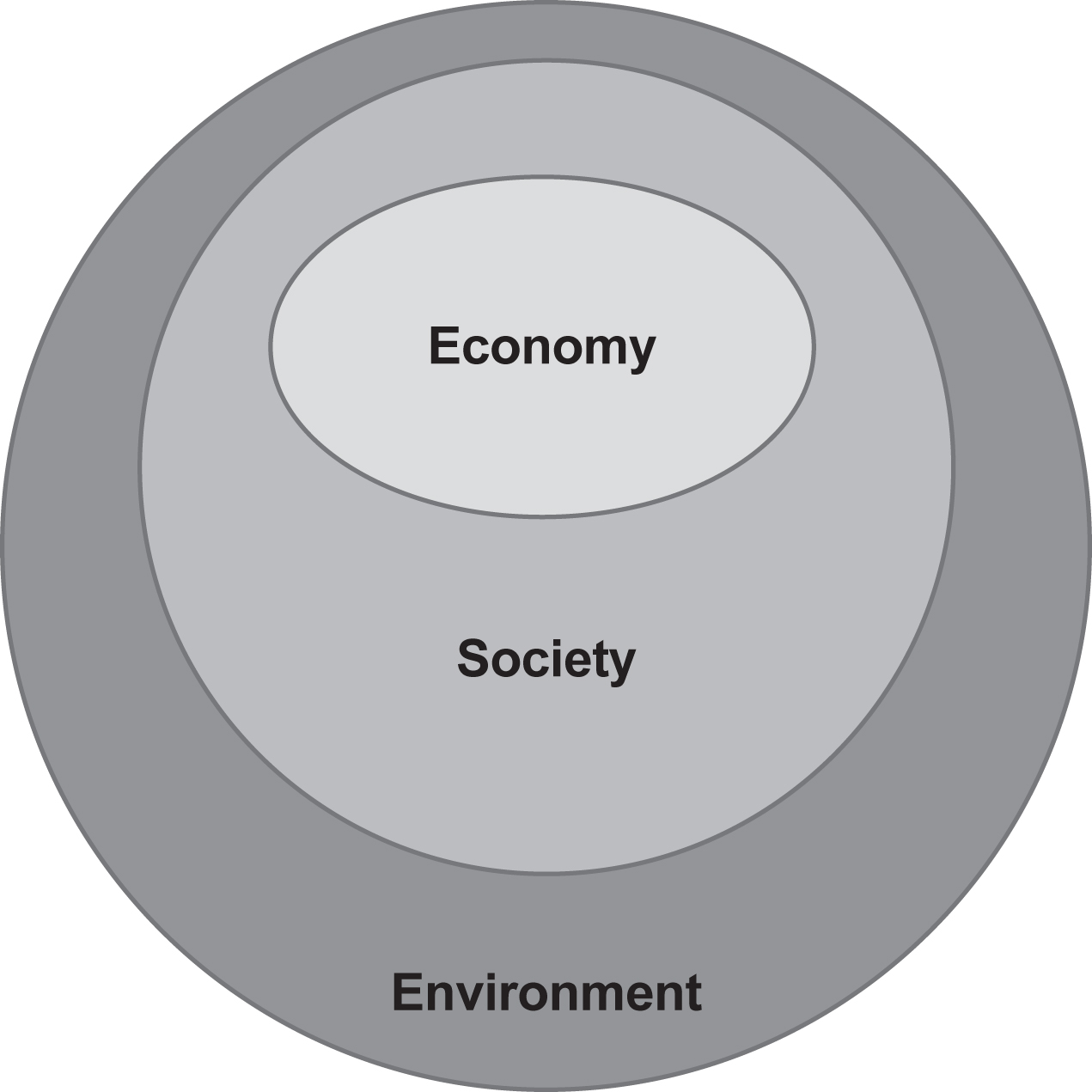

The study of intergenerational equity and view of sustainability is based on three pillars: economic, social and environmental (see Figure 3.1).

Economic – many economists have tried to predict the effect that AI will have on the economy. PwC forecast in 2017 that AI could contribute up to US$15.7 trillion to the global economy in 2030,28 more than the current output of China and India combined. Of this, US$6.6 trillion is likely to come from increased productivity and US$9.1 trillion is likely to come from consumption-side effects.

Social – in the UK during the 1970s, whole communities felt the impact of large organisations closing down operations, including dockyards, mines and airlines. AI brings with it big headlines of major disruption and potential job losses. In an era of humans and machines, the possibility exists for humans to move onto higher value work or to enrich and develop their talents while machines take away the heavy lifting, reducing the burden on human effort. Sustainability is part of the EU’s AI guidelines, in particular the human-centric ethical purpose. It is essential, therefore, that assessing the impact of AI on society is a part of that.

Environmental – the AI carbon footprint most certainly has an environmental impact, which needs to be factored into its business case. Donna Lu suggested in a 2019 article in New Scientist that ‘Creating an AI can be five times worse for the planet than a car.’29

Figure 3.1 The three pillars of sustainability

The emergence of AI and its progressively wider impact on many sectors across society requires an assessment of its effect on sustainable development. We must address concerns such as job displacement and social manipulation, design and develop AI technology safely and inclusively, and ensure equitable access to its benefits.

3.1.2 Design for sustainability

Most organisations already have policies around sustainability. AI can help to formulate sustainable processes to underpin our policies but also has to be factored into our sustainability approach. Key considerations should include:

- Be intentional about sustainability – use AI to drive organisational sustainability goals and help transition the organisation to a new ‘normal’ state rather than arrive there by accident.

- Partner with employees – engage with employees to identify repetitive processes and activities that can be replaced by AI systems, freeing them up to do higher quality work. This may seem obvious, but positive outcomes for employees still need to be demonstrated and communicated.

- Water and electricity conservation – development of smart buildings using AI-driven technologies will allow organisations to reduce their carbon footprint and save money at the same time.

- Supply chains – use AI to manage supply chains that eliminate unnecessary waste; these are known as intelligent integrated supply chains. Integrated supply chains mean we organise and coordinate more closely how we deliver something (a product or service).

- Develop a recycling programme – use AI to help reduce, reuse, recycle and repurpose products and goods.

- Chemical management – use AI to enable the safe management of harmful chemicals used in manufacturing and through product disposal, for example lithium batteries.

- Purchase only energy efficient products – use AI to identify the most energy efficient products within procurement.

- Develop sustainable work policies – such policies (e.g. working from home and using AI) can act as both a driver and an enabler of more effective work.

3.2 THE BENEFITS OF ARTIFICIAL INTELLIGENCE

There are many benefits of AI. Social media frenzy, television programmes and movies all extol the success of possible futures of AI, but we need to be practical because chasing ivory tower academic dreams won’t bring the benefits to life. As we move forward, we need to be explicit and careful about what AI and ML can achieve.

Paul R. Daugherty and H. James Wilson have written about their experience doing just that as management consultants: Human + Machine: Reimagining Work in the Age of AI.30 In their book they observe how AI is applied in many projects. They describe human-only systems, machine-only systems and human plus machine systems. They paint a picture of how the future may look, what roles humans and machines will play and what humans and machines will do together.

3.2.1 Human-only systems

What do humans do well?

- leadership and goals;

- creativity;

- empathy;

- judgement.

In Chapter 1 we marvelled at what the human being is capable of. Machines are some way off this – decades or perhaps centuries off. Humans are good at the subjective viewpoint. We are creative; for instance, only a human, in this case Einstein, could have imagined a thought experiment of travelling on a light beam. It changed our understanding and the world as we know it today.

So, humans will be the creative powerhouse. We will let machines do the heavy lifting, leaving us to do the higher value work. Ambiguity is also a role for humans – what happens if the AI is stuck because of a contradiction? It is up to a human to step in and determine the outcome. We can think of many situations where human intervention might be needed (e.g. a confused dementia patient unable to answer questions, or an ethical 50/50 contradiction). A human who is confused could easily confuse an AI system; other humans need to be on hand to support that person.

Humans must also provide the leadership; we must set the goals and the ethical standards. These will form principles, values and rights. We will rely on these in law and, where the judgement is subjective and ambiguous, it will be humans who do this high value work.

3.2.2 Machine-only systems

What do machines do well?

- monotonous tasks;

- prediction;

- iteration;

- adaption.

These four points describe what machines do well, and this is easy to see with digital computers. We use them to do monotonous tasks such as lots of accurate calculations. Predicting and iterating are useful in digital computers for the solution of our ML problems – lots of calculations, repetitive iterations, finding a solution. Adaption is a trait of future machines, when machines will adapt to the needs of the day. Machines in this sense will also adapt to extreme environments; these could be deep in the ocean or on other planets where humans are exposed to extremes. More practically, preparing food in sterile conditions or operating on humans in an operating theatre could be better done by machines.

3.2.2.1 Narrow (weak) artificial intelligence, artificial general intelligence and strong artificial intelligence

Weak or narrow AI is AI focused on a specific task.9 Popular ML of today, the capability we find on cloud digital services, is narrow AI, or narrow ML, focused on a specific task (e.g. supervised and unsupervised learning). Examples of narrow ML are support vector machines, decision trees and k-nearest neighbour algorithms. They test hypotheses based on a specific task. The learning from experience is on a specific focused task.
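To make the idea of a narrow, task-specific learner concrete, the following is a minimal sketch of a k-nearest neighbour classifier using only the Python standard library. The function name `knn_predict`, the toy data and the labels are all assumptions made for illustration; they do not come from any particular library or from the projects discussed in this chapter.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most common label among the k nearest neighbours wins
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy data invented for illustration: two well-separated clusters
train_points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
                (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
train_labels = ["small", "small", "small", "large", "large", "large"]

prediction = knn_predict(train_points, train_labels, (1.1, 1.0))
print(prediction)
```

The classifier can only answer the one question it was given examples for, which is what makes it narrow: it learns from experience on a single, focused task.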

AGI is a machine capable of learning any intellectual task that a human can. It is hypothetical and not required for AI and ML in general. This hypothetical type of AI is often the subject of speculation and sometimes feared by humans. We are nowhere near AGI, and it could be decades or even centuries before we are close to achieving it.

AGI can be taken one step further. Science fiction is fond of machines that are assumed to have consciousness; strong AI is AGI that is also conscious. Consciousness is complex and difficult. Some consider it to be the hardest problem in AI. This is covered in more detail in Chapter 1, particularly Section 1.3.8.

AGI and strong, or conscious, AI are not currently feasible and are not realistic in the foreseeable future. They are active areas of research, but not a requirement for AI or ML.

There has been considerable debate about consciousness. It is an open question and an academic exercise. One of the most thorough rejections of strong AI, at least in digital computers, is presented by Professor John Searle. He argues using the Chinese Room thought experiment that strong AI cannot occur, and states:33

Computational models of consciousness are not sufficient by themselves for consciousness. The computational model for consciousness stands to consciousness in the same way the computational model of anything stands to the domain being modelled. Nobody supposes that the computational model of rainstorms in London will leave us all wet. But they make the mistake of supposing that the computational model of consciousness is somehow conscious. It is the same mistake in both cases.

Just as Sir James Lighthill’s report on AGI started the AI ‘winter’ of the seventies and eighties,13 Professor Searle has given us an argument against the next step, strong AI. In both cases, AGI and strong AI, we should note that they are a long way off, whereas narrow, or weak, AI is here and working. Neither AGI nor strong AI is a requirement for AI. We, as humans, set the goals for our AI to achieve. Working within the EU ethical guidelines for AI points us in the right direction for our AI to have a human-centric ethical purpose and to be trustworthy and technically robust. This approach echoes the work of Professor Max Tegmark, who emphasises the need to make explicit the goals that machines will achieve.34 In other words, any explicit goal we give an AI machine should be aligned with human goals.

3.2.3 Human plus machine systems

Rather than thinking of either a machine or a human undertaking a task, surely the future is one with humans and machines in harmony? Or, as Stuart Russell emphasises, human compatible AI – this concept is a proposal to solve the AI control problem. Russell’s three principles are:1

- The machine’s only objective is to maximise the realisation of human preferences.

- The machine is initially uncertain about what those preferences are.

- The ultimate source of information about human preferences is human behaviour.

Machines designed in this way will control the development of AI. Ideally, humans will move to higher value work or have more time to enjoy being human. These principles are new, and it’s not yet clear how they will pan out; however, Stuart Russell suggests they are futureproof.

Practical examples of human plus machines can be easily imagined. A person using an exoskeleton is able to undertake tasks they weren’t previously capable of, perhaps allowing them to walk or lift heavy weights. We have search engines – academics can search literature in seconds, something that would have taken years, if not decades, 40 years ago. In medicine, brain interfaces and the study of the human brain are allowing doctors to better understand stroke recovery. Indeed, we may be able to test for consciousness in patients with whom we can’t communicate. The idea of humans and machines together gives us super-human capabilities.

3.3 THE CHALLENGES OF ARTIFICIAL INTELLIGENCE

AI is challenging because it involves learning from experience and learning is, in itself, a challenge, albeit one that humans rise to continually. Machines can help us with learning from experience.

If we consider AI or ML, we can think of a typical project having the following challenges linked to functionality, software and hardware:

- What functionality do we need to learn?

  - collect data;

  - prepare data;

  - algorithms to learn from the data;

  - present data;

  - deploy the AI (product, service, publish).

- What software do we need for the above?

  - write our own (e.g. using programming languages and libraries such as R, Python, scikit-learn, TensorFlow, C++, Objective-C, Fortran, object-oriented languages, etc.);

  - open source;

  - commercial.

- What hardware do we need for the software?

  - tablet or smartphone;

  - desktop computer;

  - computer cluster;

  - high-performance computer – cloud service;

  - actuators and sensors – interact with the environment.
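The functionality steps listed above (collect, prepare, learn, present) can be sketched as a very small pipeline. This is only an illustration under stated assumptions: the function names and the readings are hypothetical, and the learning step is a simple least-squares straight-line fit chosen for brevity rather than anything prescribed here. Deployment is left out because it depends on the product or service.

```python
from statistics import mean


def collect_data():
    """Return hypothetical (x, y) readings; None marks a failed measurement."""
    return [(1, 2.1), (2, 3.9), (3, None), (4, 8.2), (5, 9.8)]


def prepare_data(raw):
    """Drop readings with a missing value."""
    return [(x, y) for x, y in raw if y is not None]


def learn(data):
    """Fit y = slope * x + intercept by ordinary least squares."""
    xs = [x for x, _ in data]
    ys = [y for _, y in data]
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in data)
             / sum((x - x_bar) ** 2 for x in xs))
    intercept = y_bar - slope * x_bar
    return slope, intercept


def present(model):
    """Format the fitted model for a human reader."""
    slope, intercept = model
    return f"y = {slope:.2f} * x + {intercept:.2f}"


prepared = prepare_data(collect_data())
model = learn(prepared)
print(present(model))
```

Keeping each stage as a separate function makes it easier to see where the learning from experience actually happens, and to swap in open-source or commercial software for any one stage.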

These different challenges highlight the nature of AI. It draws on many subject areas and is a universal subject. Practically, we will face mathematical and engineering challenges. We must do this ethically and follow existing laws, for example data protection. Fortunately, we are most likely doing this already and, if we identify where the learning from experience is, we are some way to defining what our challenge actually is. Just defining the challenge doesn’t mean it can be solved, but it’s a start.

The subjective and human part of any project is always a challenge. We will need to find the right skills, work with and/or around organisational attitudes and possibly even overcome a fear of AI. As Robert Dilts’ logical levels of change show us (see Figure 1.2), we will need to build rapport with others and respect and understand their values to make our AI project a success. AI and ML are based on the scientific method and it’s more than likely the soft human subjective skills that will cause the most challenges.

3.3.1 General ethical challenges AI raises

As part of the EU’s guidelines on ethical AI, we must understand the ethical challenges AI raises. This is by no means a simple subject; we must consider, for example:

- How do we deal with the ethics?

- Who is responsible?

- What does the law say?

- What about human rights?

- What about machine rights?

With AGI and strong AI, robotics becomes another ethical question. General approaches to robotic AI have been published by the Engineering and Physical Sciences Research Council.36 Other organisations are preparing AI principles also; these include the International Organization for Standardization.37 Key themes coming out from various organisations include transparency, accountability and care taken when considering bias, diversity, inequalities and unemployment. The themes link back to good ethics. The EU has built the guidelines for AI around an ethical purpose that we can measure using, for example, the United Nations sustainability goals.

3.4 UNDERSTANDING OF THE RISKS AI BRINGS TO PROJECTS

If we assume that we can define a goal for our AI or ML project that has an ethical purpose, then we have to deal with the practical details of what risks AI brings to a project. This means making the goal explicit and then defining a set of tasks, again explicitly. AI projects are engineered, and we are going to have to implement AI systematically. If we are training an AI system or developing an AI algorithm, then we must deal with the mathematics and engineering of our learning from experience. This will possibly be non-linear, complex, involve statistical quantities and so on. It will be difficult to gauge the time it will take to solve this task. These types of problems are risks to your project, so you will need suitably qualified people with experience to help you.

Projects that are non-linear need an iterative approach to solve the underlying mathematical problem. This becomes more involved when the non-linear problem is statistical in nature. An example is predicting the weather. Here, forecasters run 50 simulations (sometimes a lot more) and then assess the statistics of the simulations to understand the likelihood of a particular forecast occurring. We will need to be flexible, adopting an iterative approach to solving the problem.
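The ensemble idea described above can be sketched in a few lines. This is a toy illustration, not a weather model: the logistic map stands in for a non-linear system, and the names `toy_model` and `ensemble_probability`, the noise level, the threshold and the starting value are all assumptions chosen for the example.

```python
import random


def toy_model(initial_state, steps=20, growth=3.9):
    """Iterate the logistic map, a simple non-linear system, from an initial state."""
    state = initial_state
    for _ in range(steps):
        state = growth * state * (1.0 - state)
    return state


def ensemble_probability(best_guess, n_members=50, noise=0.01,
                         threshold=0.5, seed=0):
    """Run perturbed simulations and return the fraction that exceed `threshold`."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    outcomes = []
    for _ in range(n_members):
        # Perturb the initial condition slightly and keep it in the valid range
        start = min(max(best_guess + rng.gauss(0.0, noise), 0.0), 1.0)
        outcomes.append(toy_model(start))
    exceed = sum(1 for value in outcomes if value > threshold)
    return exceed / n_members, outcomes


probability, outcomes = ensemble_probability(best_guess=0.3)
print(f"{len(outcomes)} simulations; "
      f"estimated likelihood of exceeding the threshold: {probability:.2f}")
```

Because small changes in the starting value can lead to very different end states, a single run tells us little; the spread across the 50 runs is what gives a usable likelihood.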

At every stage of an AI project we are always learning. This can be uncomfortable for some team members who are used to well-organised lists of tasks to do. More complex projects will need a team working together, more than likely in an Agile setting, where regular planning and replanning can take place with the help of a domain expert – remember, they define what is fit for purpose. Communication with stakeholders will be essential, so delivery deadlines are not imposed without a sensible understanding of the iterative approach to projects. If the AI team and stakeholders are not aware of the iterative approach, then this is a major risk to the success of the project.

3.4.1 A typical AI project team

A typical AI project team can have a range of members. It is not easy to generalise because AI is universal. What we know is that an engineered AI project team might need to be:

- ‘T shaped’ (have high-level understanding of AI or another project specific area, with an in-depth understanding of the core requirement subject area);

- collaborative;

- communicative;

- skilled;

- focused;

- of the ‘right mindset’.

Typical team members might include:

- product or service owner (business representative);

- data scientist;

- domain expert (ensuring the project is fit for purpose);

- engineer/scientist/mathematician;

- subject matter experts;

- ethicist;

- developers;

- AI trainers;

- AI testers;

- others.

3.4.2 What is ‘fit for purpose’?

Fit for purpose means the service or product is well suited to the task, goal or operation it is doing; it isn’t too complicated or too costly, and it doesn’t take too long.

A project’s output and products must be fit for purpose. EU law states that goods must be fit for purpose. The domain expert defines what is fit for purpose. Practically, the concept of fit for purpose can help us to simplify the learning from experience.

For example, if we are designing a robot that learns how to build a wall in space, the domain expert can save a lot of complication if the spatial accuracy of the robot is millimetres rather than micrometres. In science, the domain expert might specify the accuracy for a calculation to two decimal places, which could mean the ML or AI doesn’t need large computational resources.

When working in an Agile team, the domain expert will play a significant role in defining the problem and planning. The domain expert may not have an easy time defining what is fit for purpose in areas that are subjective – satisfying stakeholder expectations is easier said than done.

3.5 OPPORTUNITIES FOR AI

There are numerous opportunities for AI. ML has led the way here and has enabled the next step in AI capability. ML, remember, has been used successfully in many areas for about 80 years. The enablers of the internet, open-source software, cloud computing, the fourth industrial revolution and the IoT are all bringing the next epoch of exploration. The fifth industrial revolution takes us to extreme environments, and the exploration of space and what this has to offer. On a more practical footing, AI and ML offer opportunities in our day-to-day work. Ray Kurzweil, author of The Singularity is Near, is an optimist who looks into and describes the future – a utopian future where we will:10

- have the ability to become more human;

- have beneficial use of technologies;

- have a universally sustainable lifestyle worldwide;

- have long-term personal and societal health and wealth gains.

Future AI will give us all super-human insight as well as, perhaps, the means to deliver the utopian ideal. In particular, the singularity is the exponential growth in capability that AI will contribute to.

3.6 FUNDING AI PROJECTS – THE NASA TECHNOLOGY READINESS LEVELS

The hype of AI is not enough to write a business case or determine how you will obtain funding for a project. NASA’s Technology Readiness Levels (TRLs) provide a measure of the maturity of technology. They were developed to allow NASA to obtain technology cost-effectively from its supply chain. The concept has been adopted by the EU and others, and more details of TRLs can be found at the EU’s EARTO project. The TRLs can be described as follows:40

- TRL 1 – basic principles observed;

- TRL 2 – technology concept formulated;

- TRL 3 – experimental proof of concept;

- TRL 4 – technology validated in lab;

- TRL 5 – technology validated in relevant environment (industrially relevant environment in the case of key enabling technologies);

- TRL 6 – technology demonstrated in relevant environment (industrially relevant environment in the case of key enabling technologies);

- TRL 7 – system prototype demonstration in operational environment;

- TRL 8 – system complete and qualified;

- TRL 9 – actual system proven in operational environment (competitive manufacturing in the case of key enabling technologies, or in space).

Understanding how mature our AI project is will help us to write a business case and find funders for the project.
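For a business case, the TRL scale listed above can be held as a simple lookup so that a project's stated maturity is always reported with its description. The sketch below is an assumption-laden illustration: the names `TRL_DESCRIPTIONS`, `describe_trl` and `levels_to_go` are hypothetical helpers, and the descriptions are shortened from the list above.

```python
# Short descriptions taken from the TRL list in this section
TRL_DESCRIPTIONS = {
    1: "basic principles observed",
    2: "technology concept formulated",
    3: "experimental proof of concept",
    4: "technology validated in lab",
    5: "technology validated in relevant environment",
    6: "technology demonstrated in relevant environment",
    7: "system prototype demonstration in operational environment",
    8: "system complete and qualified",
    9: "actual system proven in operational environment",
}


def describe_trl(level):
    """Return a one-line description for a TRL between 1 and 9."""
    if level not in TRL_DESCRIPTIONS:
        raise ValueError(f"TRL must be an integer from 1 to 9, got {level!r}")
    return f"TRL {level}: {TRL_DESCRIPTIONS[level]}"


def levels_to_go(level):
    """Number of readiness levels remaining before TRL 9."""
    describe_trl(level)  # reuse the validation above
    return 9 - level


print(describe_trl(4))
print(levels_to_go(4), "levels to go")
```

Stating the current level and the remaining gap in this way gives funders a quick, shared picture of how far the technology is from operational use.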

3.7 SUMMARY

In this chapter we looked at the benefits and challenges of AI. In doing so, we emphasised what is fit for purpose, a key concept in making sure we have a realistic and credible project. The end of the chapter asked how mature the technology is that we are thinking of developing or adopting. With this knowledge, we are better placed to ask who is going to fund our AI project.