Chapter 2: Exploring the Three Compilers of Kotlin Multiplatform

In the previous chapter, we discussed that the interoperability quality of shared code is a key aspect of multiplatform development. To explore this quality, we need to examine how the three backend compilers of Kotlin – Kotlin/JVM, Kotlin/Native, and Kotlin/JS – work. This will help you set realistic expectations about the performance, future, and interoperability of Kotlin on the different platforms, so that you can make the most of KMP.

By the end of this chapter, you will have a clearer picture of how the aforementioned compilers work, what interoperability constraints you'll have when working with them, and how to leverage their power.

In this chapter, we're going to cover the following topics:

- Kotlin compilers in general

- The Kotlin/JVM compiler

- The Kotlin/Native compiler

- The Kotlin/JS compiler

Kotlin compilers in general

First, let's make sure we are on the same page and have a basic understanding of how compilers and the Kotlin compiler work in general.

A compiler is a program that translates computer code in a given programming language into machine code or lower-level code. Compilers generally consist of two components:

- Frontend

- Backend

A frontend compiler deals with programming-language specifics, such as parsing the code, verifying syntax and semantic correctness, type checking, and building up the syntax tree. Generally, there is one frontend compiler and as many backend compilers as there are targets.

A backend compiler takes the intermediate representation (IR) of the code that's produced by the frontend compiler, applies certain optimizations, and creates an executable that can be run on the specific target.

In Kotlin, there are three different backend compilers: one for each of the Java virtual machine (JVM), JavaScript (JS), and native targets. All three produce different outputs that will conform to the target platform.
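
To make the frontend/backend split more tangible, here is a deliberately tiny sketch. It is not how the Kotlin compiler is implemented; the Expr IR, the parse function, and the two emitters are all hypothetical names invented for this illustration. The point is only that one frontend produces an IR and several backends consume the same IR:

```kotlin
// Toy IR: the output of the frontend and the shared input of every backend.
sealed class Expr
data class Num(val value: Int) : Expr()
data class Add(val left: Expr, val right: Expr) : Expr()

// "Frontend": parses sources such as "1 + 2 + 3" and rejects anything
// that is not a sum of integers.
fun parse(source: String): Expr {
    val operands: List<Expr> = source.split("+").map { token ->
        val text = token.trim()
        Num(text.toIntOrNull() ?: error("Not an integer: '$text'"))
    }
    return operands.reduce { acc, next -> Add(acc, next) }
}

// "Backend" 1: emits JavaScript-like source text.
fun emitJs(expr: Expr): String = when (expr) {
    is Num -> expr.value.toString()
    is Add -> "(${emitJs(expr.left)} + ${emitJs(expr.right)})"
}

// "Backend" 2: emits instructions for an imaginary stack machine.
fun emitStackCode(expr: Expr): List<String> = when (expr) {
    is Num -> listOf("PUSH ${expr.value}")
    is Add -> emitStackCode(expr.left) + emitStackCode(expr.right) + "ADD"
}

fun main() {
    val ir = parse("1 + 2 + 3")   // one frontend pass...
    println(emitJs(ir))           // ...feeds several backends
    println(emitStackCode(ir))
}
```

Adding a third target to this sketch would only require another emit function; the parsing and validation logic would stay untouched, which is the same benefit a shared frontend gives the real compiler.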

Until now, the three Kotlin backend compilers were developed pretty much independently, without much overlap. JetBrains recently started a new direction by introducing an IR for Kotlin code, which is already adopted in Kotlin/Native.

The Kotlin/JS and Kotlin/JVM backend compilers are being migrated to this new IR infrastructure at the time of writing; hopefully, they will have more stable versions once this book has been published.

This new unified IR-based compiler means that all three backend compilers will share the same logic, thus making feature development and bug fixes easier. It also brings the possibility of multiplatform compiler extensions, which could be pretty neat.

Now, let's look at the different Kotlin backend compilers.

The Kotlin/JVM compiler

The Kotlin/JVM backend compiler translates code written in Kotlin into Java bytecode, which can be run on the JVM or, after a further conversion step, on Android. Kotlin was initially designed for the Java world, including Android, and the Kotlin/JVM compiler was the one that paved the way for the Kotlin language.

How it works

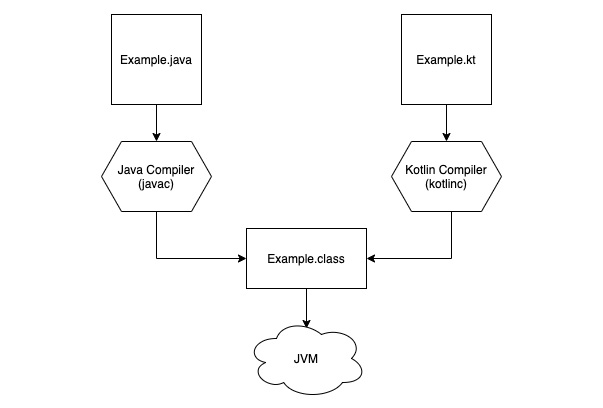

The Kotlin/JVM compiler generates the same .class executables that the Java compiler does, which is the Java bytecode that can be run on the JVM:

Figure 2.1 – How Kotlin/JVM works

This means that you can decompile the Java bytecode (the .class executables) and inspect the resulting Java code, which is quite handy if you want to see what your Kotlin code is compiled into.

So, the Kotlin/JVM value proposition was (and still is) that it provides the rich palette of language features of Kotlin and translates the code you write with it into the same Java bytecode that has seamless interoperability with any other Java code.
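
As a small illustration of this interoperability, the following sketch uses two classes from the Java standard library (ConcurrentHashMap and LocalDate) directly from Kotlin. The BirthdayBook class and its functions are hypothetical names made up for this example, and it assumes a JVM target where java.time is available:

```kotlin
import java.time.LocalDate
import java.util.concurrent.ConcurrentHashMap

// A Kotlin class that stores its data in a plain Java collection.
class BirthdayBook {
    private val birthdays = ConcurrentHashMap<String, LocalDate>()

    fun add(name: String, date: LocalDate) {
        birthdays[name] = date   // Kotlin operator syntax on top of Map.put()
    }

    // java.time is called as if it were Kotlin code; getYears() is exposed
    // as the 'years' property. Returns null for unknown names.
    fun ageOn(name: String, today: LocalDate): Int? =
        birthdays[name]?.until(today)?.years
}

fun main() {
    val book = BirthdayBook()
    book.add("Ada", LocalDate.of(1815, 12, 10))
    println(book.ageOn("Ada", LocalDate.of(1852, 11, 27)))
}
```

No wrappers or bindings are needed in either direction: because both languages end up as the same kind of bytecode, a Java class could use BirthdayBook just as easily.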

This strong interoperability feature of Kotlin/JVM has led to a rapid rise in the number of people trusting Kotlin and choosing it over Java when developing Android apps. This huge community growth and the official Google support for Kotlin have evolved to a point where many of the official Android libraries are Kotlin-first.

Android is now moving to a new UI toolkit, Jetpack Compose, where the underlying Compose compiler completely relies on the Kotlin compiler. All this means that the Google team is now even more invested in Kotlin, which can be seen in their contribution to the Kotlin/JVM compiler infrastructure as well. The Compose compiler uses a newly introduced infrastructure of the Kotlin/JVM backend compiler.

See the following talk for more information: https://www.youtube.com/watch?v=UryyHq45Y_8.

This means that the Kotlin/JVM backend compiler is currently the most supported compiler by JetBrains, Google, and the huge Android community. Things keep changing for the better, too: since Kotlin 1.5, the new unified IR-based Kotlin/JVM backend compiler has been stable and enabled by default. This means that the other backend compilers can potentially benefit from any feature and bug fixes on the Kotlin/JVM compiler.

I also have to mention that the big success of Kotlin/JVM on Android was probably also helped by the way Android runs Java executables.

Executing Java code on Android

Applications running on mobile phones have more constraints and fewer resources than applications running on server and desktop environments. Using a VM not only helped Android support a vast range of hardware but was also, in part, an optimization for the mobile environment. Dan Bornstein designed the Dalvik Virtual Machine (DVM), which is modeled on the JVM but built specifically for Android devices.

This means that running the .class Java bytecode on Android isn't exactly straightforward, because there isn't a JVM. The bytecode first needs to be translated for the DVM; this is what the d8 Dex compiler does – it takes the Java bytecode and produces Dalvik bytecode, or .dex:

Figure 2.2 – How the DVM works

So, Android needs to support any new Java version in its Dex compiler before a developer can use the features of that Java release.

The key takeaway is that, because of how Java runs on Android, Android developers have to wait for the Dex compiler to support a new Java version.

This means that there's breathing time for Kotlin: until the Dex compiler supports the new Java release, there is no point in supporting it in Kotlin, at least for Android developers.

Note that Android replaced DVM with Android Runtime (ART). DVM used just-in-time (JIT) compilation, which means that the bytecode was compiled into machine code while the app was running. ART introduced ahead-of-time (AOT) compilation, which, during the application's installation phase, takes the Dalvik bytecode, translates it into machine code, and stores it. Note that ART still includes a JIT compiler, which complements the AOT compiler by continually improving the performance of Android applications as they run. See https://source.android.com/devices/tech/dalvik/jit-compiler?hl=en and https://source.android.com/devices/tech/dalvik#AOT_compilation for more information.

Now that we've finished this small Android detour, you should have a good understanding of how the Kotlin/JVM backend compiler works, how well it is supported, and the enabling factors that led to its success.

Now, let's dive into the Kotlin/Native compiler, understand how it works, and how you can leverage it when you're trying to share code with iOS and other targets from the Apple ecosystem.

The Kotlin/Native compiler

The Kotlin/Native backend compiler is an LLVM-based compiler that compiles Kotlin code into native binaries that can be run without a VM. (LLVM originally stood for low-level virtual machine, but the expansion was officially dropped to avoid confusion, since LLVM now means more than just a virtual machine (VM); we're talking about LLVM IR, the LLVM debugger, and so on.) It can be used to compile code for embedded devices, the Android Native Development Kit (NDK), iOS, macOS, and other Apple targets.

We can immediately draw some comparisons here with Flutter, which uses the Android NDK and LLVM to compile Dart on Android and iOS, respectively; this is known to be one of the key factors of Flutter's pretty good performance compared to React Native.

One of Kotlin/Native's powers comes from the fact that it can provide two-way interoperability with the Native targets. This means that you can use C and Objective-C frameworks, Swift frameworks that expose their API to Objective-C, and static or dynamic C libraries in your shared Kotlin code (we saw this in Chapter 1, The Battle Between Native, Cross-Platform, and Multiplatform, where we wrote an actual file implementation based on NSFileHandle).

Note

The Kotlin/Native compiler can create an executable for many platforms, a static library or dynamic library with C headers for C/C++ projects, and an Apple framework for Swift and Objective-C projects.

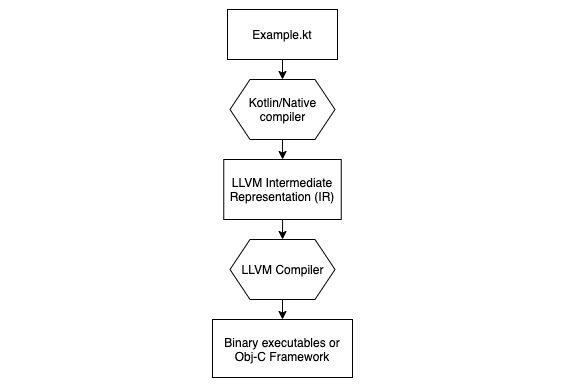

How it works

First, the Kotlin/Native compiler generates an LLVM IR of the original Kotlin code. Then, the LLVM compiler can work with this IR and create the necessary executables. This includes binaries or frameworks in the case of the Apple ecosystem:

Figure 2.3 – How Kotlin/Native works

Interoperability on iOS

Let's look at what the experience of consuming shared code written in Kotlin looks like on iOS.

With Kotlin/Native, you can generate not only binary executables but also Obj-C frameworks for Apple targets. The way this works is that Kotlin/Native compiles Kotlin directly into native code with the help of LLVM and generates some adapters/bridges to make this compiled Kotlin code accessible from Obj-C and Swift.

This means that if you're using Swift (which you most likely are), you have interoperability that looks like this: Kotlin <-> Obj-C <-> Swift.

This means that Obj-C acts as sort of a bridge between Kotlin and Swift, so to use a Swift library in Kotlin, the given library must be usable in Obj-C as well. This can be done by exporting the Swift library's API to Obj-C via @objc annotations.

Pure Swift modules, without these annotations, cannot be used, which means that you cannot base your actual implementations on modules such as SwiftUI, for example.

Note

Note that JetBrains has added direct interoperability with Swift to their roadmap, but its development is currently paused.

Once it arrives, the Kotlin/Native compiler would generate a Swift-specific adapter for the native code rather than an Obj-C one.

To get an all-around understanding of what you'll get when you compile your Kotlin code for an Obj-C framework with Kotlin/Native, the best way is to just compile your code and check out what its Obj-C representation looks like. The second best way is to check out this concise one-pager from the official documentation: https://kotlinlang.org/docs/native-objc-interop.html.

But to get a better idea of what your Kotlin code could look like on Obj-C, let's look at a relatively common example of a data class. Don't worry if you don't know about the concept of a data class; in Chapter 3, Introducing Kotlin for Swift Developers, we'll explore the core features of the Kotlin language.

Let's go with the following example data class:

// Kotlin code

data class Example(val param1: String, val param2: String)

This class will be compiled to the following Obj-C code:

// The generated Obj-C code

__attribute__((objc_subclassing_restricted))

__attribute__((swift_name("Example")))

@interface KotlinIos2Example : KotlinBase

- (instancetype)initWithParam1:(NSString *)param1

param2:(NSString *)param2

__attribute__((swift_name("init(param1:param2:)")))

__attribute__((objc_designated_initializer));

- (NSString *)component1

__attribute__((swift_name("component1()")));

- (NSString *)component2

__attribute__((swift_name("component2()")));

- (KotlinIos2Example *)doCopyParam1:(NSString *)param1

param2:(NSString *)param2

__attribute__((swift_name("doCopy(param1:param2:)")));

- (BOOL)isEqual:(id _Nullable)other

__attribute__((swift_name("isEqual(_:)")));

- (NSUInteger)hash __attribute__((swift_name("hash()")));

- (NSString *)description

__attribute__((swift_name("description()")));

@property (readonly) NSString *param1

__attribute__((swift_name("param1")));

@property (readonly) NSString *param2

__attribute__((swift_name("param2")));

@end;

It may seem that the generated Obj-C code has a lot of additional code compared to the initial Kotlin code, but essentially, this is what you get with Kotlin data classes: the compiler automatically generates the equals, hash, copy, description (toString in Kotlin), and componentN functions for you.
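
To connect the Obj-C header back to Kotlin, the following short sketch exercises the members that the compiler generates for the Example data class. Only standard Kotlin language features are used here; the values passed in are arbitrary:

```kotlin
data class Example(val param1: String, val param2: String)

fun main() {
    val first = Example("a", "b")
    val second = Example("a", "b")

    // equals() and hashCode() compare the property values, not the references.
    println(first == second)
    println(first.hashCode() == second.hashCode())

    // copy() creates a new instance with some properties replaced;
    // println() relies on the generated toString().
    println(first.copy(param2 = "c"))

    // Destructuring is backed by component1() and component2().
    val (p1, p2) = first
    println("$p1 $p2")
}
```

Each of these calls corresponds to one of the declarations in the generated header: isEqual, hash, doCopy, description, and the component functions.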

Note

The preceding Obj-C code snippet, which was generated by the Kotlin/Native compiler, is only a snapshot of the compiler's functionality at the time of writing. As the compiler evolves, the generated code may look different in future versions.

In mobile applications, efficient asynchronous programming is an important factor, so there is a huge demand for high-quality language support to ease this. The Kotlin/JVM compiler does a pretty good job of complying with the Java concurrency model.

Now, let's explore how Kotlin/Native approaches this problem.

The concurrency model

One of the biggest headaches developers have to face in the Kotlin/Native world is bumping into concurrency-related issues in their code and experiencing the strictness of the current concurrency model of Kotlin/Native. Let's quickly cover why these restrictions were introduced and why the JetBrains team is currently working on some changes.

Long story short, because of how the current automatic memory management works on Apple-based native systems (iOS, macOS, and so on), some concurrency restrictions needed to be imposed in the Kotlin/Native world. This means that while mobile developers are pretty much used to being able to share objects between threads freely and have adopted various best practices and patterns to avoid race conditions when doing so, they still have to face the really strict world of Kotlin/Native if they plan on sharing logic that involves concurrency and sharing states across multiple threads.

It is possible to write efficient mobile apps even with these restrictions, but it requires a higher level of expertise and the current model is not perfect; in some edge cases, it introduces certain memory leaks.

All this has slowed down the adoption of KMP, and KMM in particular, and pushed JetBrains toward a new solution. They've announced a new memory management infrastructure, which should enable a more performant and developer-friendly concurrency model in Kotlin/Native.

Nonetheless, the current model will still be supported, and it is necessary to understand it until the new concurrency model arrives at a stable version. We'll start learning about the current concurrency model next, but I hope that within a year or two of this book being written, all the information in this section will be outdated and that people won't need to spend as much time understanding this topic.

The current state and concurrency model

The strictness of Kotlin/Native's concurrency and state model consists of the following two main rules, where the second is connected to the first:

- Mutable states can't be shared between threads.

- States need to be made immutable to be shared between threads. Their value, since they are immutable, cannot be changed afterward.

KMP shared code, as discussed previously, can be used across multiple platforms from the JVM, Native, and JS worlds. The Kotlin/Native concurrency model, however, is enforced on Native targets only (later in this book, we'll see that this still affects how you design your shared code), and in practice, Kotlin/Native's concurrency rules are enforced at runtime.

So, for the concept of immutability, Kotlin/Native introduces frozen objects:

Frozen = Immutable

In code, this means that you need to make all the states that you want to share between threads immutable/frozen. Freezing an object can be done in Kotlin/Native using the freeze() function.

Important note

Using freeze() not only freezes/makes the object itself immutable, but the whole subgraph, including any other object that can be reached from the object where freeze() is called.

Freezing is a one-way ticket, so you can't unfreeze any object.

A common source of crashes in Kotlin/Native applications is accidentally freezing objects that weren't purposefully targeted as freezable objects.
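
The following is a minimal sketch of freezing, assuming a Kotlin/Native target that uses the concurrency model described in this section (it will not compile for the JVM or JS, and later Kotlin versions may treat these APIs differently). The freeze() function and the isFrozen property come from kotlin.native.concurrent; the Settings and AppState classes are hypothetical:

```kotlin
import kotlin.native.concurrent.freeze
import kotlin.native.concurrent.isFrozen

class Settings(var theme: String)
class AppState(val settings: Settings)

fun main() {
    val state = AppState(Settings("light"))
    println(state.isFrozen)            // state before freezing

    // Freezes the root object and everything reachable from it.
    state.freeze()

    println(state.isFrozen)            // the root object
    println(state.settings.isFrozen)   // an object in its subgraph
}
```

Note that freeze() was only called on state, yet settings is part of the same subgraph. This is exactly how objects end up frozen by accident: any object that is reachable from a frozen one is affected too.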

So far, we've discussed that Kotlin/Native enforces the aforementioned rules at runtime; this means that abusing those rules will result in runtime exceptions. Let's look at what exceptions you're likely to bump into in a multithreaded application.

IncorrectDereferenceException

This is the result of the first rule: mutable state cannot be shared across threads. Whenever you get an IncorrectDereferenceException exception, this means that an object that is unfrozen/mutable is shared across threads.

In practice, this can come up in different scenarios, such as calling a Kotlin function from Obj-C/Swift that runs on a background thread, with parameters created on another thread in Obj-C/Swift, or running shared code that was tested on the main thread only.

InvalidMutabilityException

As its name suggests, this exception is the result of the second rule: the value of an immutable object cannot be changed. This will happen any time you're trying to mutate an immutable. In other words, changing the value of a frozen object will cause an InvalidMutabilityException exception at runtime.

Unfortunately, in some cases, this is not that easy to debug because, as we mentioned previously, freeze() freezes the object itself and any other objects that the target object touches, which means you'll need to find out how or where a certain object was frozen.

A good practice is to call the ensureNeverFrozen() function on objects that you're confident should not be shared across threads. An exception will be thrown right away if the object is already frozen or later on when an attempt to freeze is made.
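
Both situations can be sketched in a few lines, again assuming a Kotlin/Native target with the concurrency model described in this section. The exception types and ensureNeverFrozen() come from kotlin.native.concurrent; the Cache class is a hypothetical example:

```kotlin
import kotlin.native.concurrent.FreezingException
import kotlin.native.concurrent.InvalidMutabilityException
import kotlin.native.concurrent.ensureNeverFrozen
import kotlin.native.concurrent.freeze

class Cache(var hits: Int = 0)

fun main() {
    // Second rule: a frozen object can no longer be mutated.
    val shared = Cache().freeze()
    try {
        shared.hits++
    } catch (e: InvalidMutabilityException) {
        println("Mutation rejected: ${e.message}")
    }

    // Guard: make the freeze() call itself fail, so the stack trace points
    // at the place where the object was frozen, not at a later mutation.
    val mainThreadOnly = Cache()
    mainThreadOnly.ensureNeverFrozen()
    try {
        mainThreadOnly.freeze()
    } catch (e: FreezingException) {
        println("Freeze rejected: ${e.message}")
    }
}
```

The second half is what makes ensureNeverFrozen() useful for debugging: instead of searching for whatever froze the object, you get an exception at the exact call that tried to.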

That is enough of the basics. Now, let's touch on some additional best practices that you can apply during development.

Making sure that developers have a unified and consistent development experience when working with KMP isn't easy because of some of the different features in the JVM, Native, and JS worlds. Some examples include reflection, the state and concurrency model, the memory model, and annotation processing.

Annotation processing and reflection are two other big topics that we need to address since a lot of the libraries that are available in the Kotlin/JVM ecosystem are dependent on them. Having a Native version of them could unlock those libraries and bring their capabilities to the KMP world.

Annotation processing

In Java, annotations became popular because a lot of code generation tools can be created using the Java annotation processor to get rid of boilerplate code. These tools include libraries such as Dagger, the dependency injection (DI) library maintained by Google, and Room, the persistence library on Android.

In the Kotlin/JVM world, this is done via the Kotlin Annotation Processing Tool (KAPT), which has two huge drawbacks:

- It has slow performance since KAPT needs to generate intermediate Java stubs, which can then be processed by the Java annotation processor.

- Since it relies on the Java annotation processor, it's impossible to build platform-agnostic libraries on top of KAPT, which could otherwise be used in KMP projects.

But the good news is that there is already a powerful alternative to KAPT. Kotlin Symbol Processing (KSP) is a new tool developed by the Google folks that offers similar functionality to KAPT. However, since it doesn't rely on Java annotations and the annotation processor, it can offer direct access to Kotlin compiler features, it's multiplatform friendly, and it's up to two times faster than KAPT.

Since Room and Moshi already provide experimental support for KSP, Android developers may have a good chance of not having to give up their current well-tried library choices if they want to build Kotlin Multiplatform apps.

In Chapter 1, The Battle Between Native, Cross-Platform, and Multiplatform, we briefly looked at the Commonizer tool. Let's dive deeper into that topic and see what problems it solves and why it was needed.

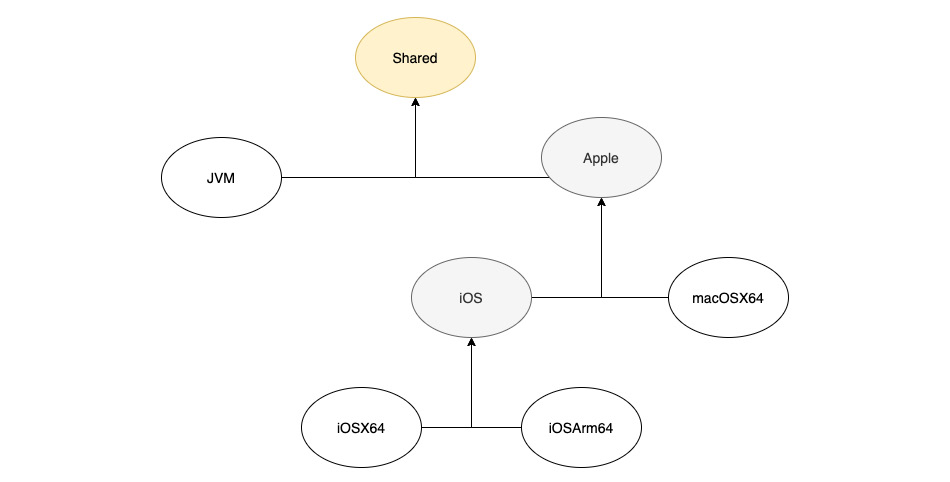

Intermediate source sets and the Commonizer

KMP's goal is to make code sharing across all targets as convenient as possible. On iOS, there are different CPU architectures. KMP supports the following targets:

- Arm64: This is a 64-bit ARM CPU architecture that's supported on iOS 7+. All devices from iOS 11 onward use this architecture.

- Arm32: This was used before Arm64.

- x64: This is the 64-bit Intel architecture, which is used by the iOS simulators.

This means that if you're developing a KMM application, you probably want to target both the Arm64 and x64 CPU architectures, and you don't want to duplicate your actual platform implementations for these targets.

Additionally, if you plan on supporting macOSX64 targets later, you may have some logic between the Apple targets that relies on the same foundation dependencies and could be shared.

In these cases, you need intermediate source sets: an iOS source set that combines the Arm64 and x64 targets, and an Apple source set that combines the iOS targets with the macOSX64 target:

Figure 2.4 – iOS and Apple intermediate source sets

As you can see, you should use the targets provided by the KMP framework and combine them flexibly. By doing this, you would create both iOS and Apple intermediate source sets and define common platform functionality for the specific target set:

Figure 2.5 – Intermediate source set dependencies

At first, this wasn't possible with the framework, so even though both your iOS and macOS targets relied on the same Foundation framework, for example, you had to duplicate actual implementations for iOSX64, iOSArm64, and macOSX64, which isn't at all scalable. The JetBrains team came up with a nice solution and even went one step further to automate things.

With the Commonizer tool, you can create the aforementioned intermediate source sets, and the tool will be smart enough to infer the common dependencies and create the related expect/actual declarations for you. It's even smarter than you'd expect because it can also infer the subtle differences between the different POSIX dependencies.

At this point, you may be wondering why we don't use it for any shared code. This is because it works with subtle or no differences at all; it was designed specifically for use cases such as the POSIX library, where there are many abstractions to make, but the actual declarations are mostly or completely the same. Also, if it did generate all the shared code, then it would lose its key multiplatform factor; that is, giving developers the flexibility to write shared code while working natively on the platform-specific questions.

If you'd like to fully understand how Commonizer works, I highly recommend this talk by Dmitry Savvinov: https://youtu.be/5QPPZV04-50.

If we'd like to achieve the intermediate source sets shown in Figure 2.4, all we have to do is add the following to Gradle (if you don't know what Gradle is, you'll find out in the next chapter, but, in brief, it's just a build tool):

val appleMain by creating {

dependsOn(commonMain)

}

val iosMain by getting {

dependsOn(appleMain)

dependencies {

// Add iOS specific dependencies

}

}

val macOSMain by getting {

dependsOn(appleMain)

dependencies {

// Add macOS specific dependencies

}

}

Note

Since providing an intermediate iOS source set for iOSArm64 and iOSX64 is so common, in that in most cases you probably don't want to write specific code for the different targets, the framework already provides an intermediate/shared source set configuration. That's why in the preceding code snippet, we don't have to manually create this iOS source set, only the Apple source set.
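
For context, here is a hedged sketch of where the preceding snippet could sit in a Gradle Kotlin DSL build file. It assumes the ios() target shortcut (which provides the shared iosMain source set mentioned in the note) and a macosX64 target that is explicitly named "macOS" so that its source set is called macOSMain; plugin setup and dependencies are omitted, and your project's target names may differ:

```kotlin
// build.gradle.kts of the shared module (plugin setup omitted)
kotlin {
    ios()               // assumption: shortcut that also creates iosMain
    macosX64("macOS")   // assumption: custom name, so the source set is macOSMain

    sourceSets {
        val commonMain by getting

        // Intermediate source set shared by all Apple targets.
        val appleMain by creating {
            dependsOn(commonMain)
        }
        val iosMain by getting {
            dependsOn(appleMain)
        }
        val macOSMain by getting {
            dependsOn(appleMain)
        }
    }
}
```

With this hierarchy, code placed in appleMain is compiled for every target below it, while iosMain and macOSMain remain available for anything that is specific to one platform.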

We could say that the Kotlin/Native backend compiler is currently going through its community test phase. It's important to understand why Kotlin/JVM became a success so that we can compare it to the current state of Kotlin/Native. By doing this, we can see what its future could look like, which gives you a better perspective of what you should expect before investing in learning the technology.

I believe that the support of the JetBrains team and the Kotlin community is really good and that because of the soundness of the KMP approach, the framework will scale well. Even though technologies come and go, the multiplatform approach, as a concept, is probably here to stay.

Before we learn how to build KMM apps in more depth, let's cover the Kotlin/JS backend compiler as well so that you have the full picture and know what to expect if you plan on targeting a JS platform as well (be it the web, Node.js, or any other JS target).

The Kotlin/JS compiler

The Kotlin/JS compiler is the final piece of the puzzle for sharing code between different platforms. There are many use cases in which you could leverage this compiler, as follows:

- Sharing code between the backend and the frontend. If your backend is written in Node.js and you'd like to share code between your backends and frontend, Kotlin/JS can be a great tool.

- Sharing code between mobile platforms and the web, which is a great way to keep your frontend in sync.

So, what do you need to know about the Kotlin/JS backend compiler?

How it works

Kotlin/JS currently targets the ECMAScript 5 (ES5) JavaScript standard. Kotlin/JS follows a process similar to the one we saw with the Kotlin/JVM and Kotlin/Native compilers, but it produces a different type of output. In short, it takes Kotlin code and translates it into JavaScript code, provided that the underlying code only uses dependencies that can run on JavaScript.

It is currently migrating to the IR compiler infrastructure, so instead of directly generating JavaScript files from the Kotlin source code, it first generates an IR, which then gets compiled to JavaScript.

Kotlin currently also has experimental support for generating .d.ts TypeScript declaration files.

If you've been wondering, Kotlin/JS also allows you to use npm dependencies in your Kotlin/JS code. It's as simple as doing the following:

dependencies {

implementation(npm("bootstrap", "5.0.1"))

}

For those unfamiliar with npm, it is the package manager of the JavaScript world; its purpose is similar to that of CocoaPods on iOS and of Gradle's dependency management on Android and Java.

As far as concurrency goes in the JS world, because of its single-threaded nature, you won't experience a strict concurrency model like the one in Kotlin/Native, and the complexity of coroutines may seem unnecessary in the Kotlin/JS world.

Coroutines are a powerful Kotlin feature, backed by the kotlinx.coroutines library, that enables you to write concurrent, asynchronous code. We'll explore them in more depth in Chapter 3, Introducing Kotlin for Swift Developers.

Summary

In this chapter, we looked at the three Kotlin compilers that enable code sharing across different platforms: Kotlin/JVM, Kotlin/Native, and Kotlin/JS.

By now, you should have a better understanding of how KMP uses these compilers, how they work, and how they can enable you to share code across different platforms.

In the next chapter, we'll provide a brief introduction to Kotlin to help bring iOS developers up to speed with the Kotlin world. Then, we'll turn to more practical things and dive into creating a KMM project.