Chapter 10. Scalar Optimizations

An optimizing compiler improves the quality of the code that it generates by applying transformations that rewrite the code. This chapter builds on the introduction to optimization provided in Chapter 8 and the material on static analysis in Chapter 9 to focus on optimization of the code for a single thread of control—so-called scalar optimization. The chapter introduces a broad selection of machine-independent transformations that address a variety of inefficiencies in the compiled code.

Keywords: Optimization, Transformation, Machine Dependent, Machine Independent, Redundancy, Dead Code, Constant Propagation

10.1. Introduction

An optimizer analyzes and transforms the code with the intent to improve its performance. The compiler uses static analyses, such as data-flow analysis (see Chapter 9) to discover opportunities for transformations and to prove their safety. These analyses are preludes to transformations—unless the compiler rewrites the code, nothing will change.

Code optimization has a history that is as long as the history of compilers. The first

fortran compiler included careful optimization with the intent to provide performance that rivaled hand-coded assembly code. Since that first optimizing compiler in the late 1950s, the literature on optimization has grown to include thousands of papers that describe analyses and transformations.

Deciding which transformations to use and selecting an order of application for them remains one of the most daunting decisions that a compiler writer faces. This chapter focuses on

scalar optimization, that is, optimization of code along a single thread of control. It identifies five key sources of inefficiency in compiled code and then presents a set of optimizations that help to remove those inefficiencies. The chapter is organized around these five effects; we expect that a compiler writer choosing optimizations might use the same organizational scheme.

Scalar Optimization

code improvement techniques that focus on a single thread of control

Conceptual Roadmap

Compiler-based optimization is the process of analyzing the code to determine its properties and using the results of that analysis to rewrite the code into a more efficient or more effective form. Such improvement can be measured in many ways, including decreased running time, smaller code size, or lower processor energy use during execution. Every compiler has some set of input programs for which it produces highly efficient code. A good optimizer should make that performance available on a much larger set of inputs. The optimizer should be robust, that is, small changes in the input should not produce wild performance changes.

An optimizer achieves these goals through two primary mechanisms. It eliminates unnecessary overhead introduced by programming language abstractions and it matches the needs of the resulting program to the available hardware and software resources of the target machine. In the broadest sense, transformations can be classified as either

machine independent or

machine dependent. For example, replacing a redundant computation with a reuse of the previously computed value is usually faster than recomputing the value; thus, redundancy elimination is considered machine independent. By contrast, implementing a character string copy operation with the “scatter-gather” hardware on a vector processor is clearly

machine dependent. Rewriting that copy operation with a call to the hand-optimized system routine

bcopy might be more broadly applicable.

Machine Independent

A transformation that improves code on most target machines is considered

machine independent.

Machine Dependent

A transformation that relies on knowledge of the target processor is considered

machine dependent.

|

Overview

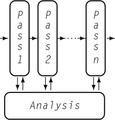

Most optimizers are built as a series of passes, as shown in the margin. Each pass takes code in

Ir form as its input. Each pass produces a rewritten version of the

Ir code as its output. This structure breaks the implementation into smaller pieces and avoids some of the complexity that arises in large, monolithic programs. It allows the passes to be built and tested independently, which simplifies development, testing, and maintenance. It creates a natural way for the compiler to provide different levels of optimization; each level specifies a set of passes to run. The pass structure allows the compiler writer to run some passes multiple times, if desirable. In practice, some passes should run once, while others might run several times at different points in the sequence.

Optimization Sequences

The choice of specific transformations and the order of their application has a strong impact on the effectiveness of an optimizer. To make the problem harder, individual transformations have overlapping effects (e.g. local value numbering versus superlocal value numbering) and individual applications have different sets of inefficiencies.

Equally difficult, transformations that address different effects interact with one another. A given transformation can create opportunities for other transformations. Symmetrically, a given transformation can obscure or eliminate opportunities for other transformations.

Classic optimizing compilers provide several levels of optimization (e.g.

-O,

−O1,

−O2, …) as one way of providing the end user with multiple sequences that they can try. Researchers have focused on techniques to derive custom sequences for specific application codes, selecting both a set of transformations and an order of application. Section 10.7.3 discusses this problem in more depth.

In the design of an optimizer, the selection of transformations and the ordering of those transformations play a critical role in determining the overall effectiveness of the optimizer. The selection of transformations determines what specific inefficiencies in the

ir program the optimizer discovers and how it rewrites the code to reduce those inefficiencies. The order in which the compiler applies the transformations determines how the passes interact.

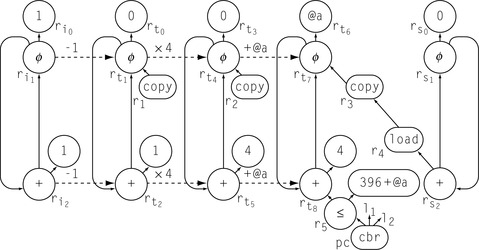

For example, in the appropriate context (

r

2 > 0 and

r

5 = 4), an optimizer might replace

mult r

2, r

5 ⇒ r

17 with

lshiftI r

2, 2 ⇒ r

17. This change replaces a multicycle integer multiply with a single-cycle shift operation and reduces demand for registers. In most cases, this rewrite is profitable. If, however, the next pass relies on commutativity to rearrange expressions, then replacing a multiply with a shift forecloses an opportunity (multiply is commutative, shift is not). To the extent that a transformation makes later passes less effective, it may hurt overall code quality. Deferring the replacement of multiplies by shifts may avoid this problem; the context needed to prove safety and profitability for this rewrite is likely to survive the intervening passes.

The first hurdle in the design and construction of an optimizer is conceptual. The optimization literature describes hundreds of distinct algorithms to improve

ir programs. The compiler writer must select a subset of these transformations to implement and apply. While reading the original papers may help with the implementation, it provides little insight for the decision process, since most of the papers advocate using their own transformations.

Compiler writers need to understand both what inefficiencies arise in applications translated by their compilers and what impact those inefficiencies have on the application. Given a set of specific flaws to address, they can then select specific transformations to address them. Many transformations, in fact, address multiple inefficiencies, so careful selection can reduce the number of passes needed. Since most optimizers are built with limited resources, the compiler writer can prioritize transformations by their expected impact on the final code.

As mentioned in the conceptual roadmap, transformations fall into two broad categories: machine-independent transformations and machine-dependent transformations. Examples of machine-independent transformations from earlier chapters include local value numbering, inline substitution, and constant propagation. Machine-dependent transformations often fall into the realm of code generation. Examples include peephole optimization (see Section 11.5), instruction scheduling, and register allocation. Other machine-dependent transformations fall into the realm the optimizer. Examples include tree-height balancing, global code placement, and procedure placement. Some transformations resist classification; loop unrolling can address either machine-independent issues such as loop overhead or machine-dependent issues such as instruction scheduling.

The distinction between the categories can be unclear. We call a transformation machine independent if it deliberately ignores target machine considerations, such as its impact on register allocation.

Chapter 8 and Chapter 9 have already presented a number of transformations, selected to illustrate specific points in those chapters. The next three chapters focus on code generation, a machine-dependent activity. Many of the techniques presented in these chapters, such as peephole optimization, instruction scheduling, and register allocation, are machine-dependent transformations. This chapter presents a broad selection of transformations, mostly machine-independent transformations. The transformations are organized around the effect that they have on the final code. We will concern ourselves with five specific effects.

■

Eliminate useless and unreachable code The compiler can discover that an operation is either useless or unreachable. In most cases, eliminating such operations produces faster, smaller code.

■

Move code The compiler can move an operation to a place where it executes fewer times but produces the same answer. In most cases, code motion reduces runtime. In some cases, it reduces code size.

■

Eliminate a redundant computation The compiler can prove that a value has already been computed and reuse the earlier value. In many cases, reuse costs less than recomputation. Local value numbering captures this effect.

■

Enable other transformations The compiler can rewrite the code in a way that exposes new opportunities for other transformations. Inline substitution, for example, creates opportunities for many other optimizations.

Optimization as Software Engineering

Having a separate optimizer can simplify the design and implementation of a compiler. The optimizer simplifies the front end; the front end can generate general-purpose code and ignore special cases. The optimizer simplifies the back end; the back end can focus on mapping the

IR version of the program to the target machine. Without an optimizer, both the front end and back end must be concerned with finding opportunities for improvement and exploiting them.

In a pass-structured optimizer, each pass contains a transformation and the analysis required to support it. In principle, each task that the optimizer performs can be implemented once. This provides a single point of control and lets the compiler writer implement complex functions once, rather than many times. For example, deleting an operation from the

IR can be complicated. If the deleted operation leaves a basic block empty, except for the block-ending branch or jump, then the transformation should also delete the block and reconnect the block's predecessors to its successors, as appropriate. Keeping this functionality in one place simplifies implementation, understanding, and maintenance.

From a software engineering perspective, the pass structure, with a clear separation of concerns, makes sense. It lets each pass focus on a single task. It provides a clear separation of concerns—value numbering ignores register pressure and the register allocator ignores common subexpressions. It lets the compiler writer test passes independently and thoroughly, and it simplifies fault isolation.

This set of categories covers most machine-independent effects that the compiler can address. In practice, many transformations attack effects in more than one category. Local value numbering, for example, eliminates redundant computations, specializes computations with known constant values, and uses algebraic identities to identify and remove some kinds of useless computations.

10.2. Eliminating Useless and Unreachable Code

Sometimes, programs contain computations that have no externally visible effect. If the compiler can determine that a given operation does not affect the program's results, it can eliminate the operation. Most programmers do not write such code intentionally. However, it arises in most programs as the direct result of optimization in the compiler and often from macro expansion or naive translation in the compiler's front end.

Two distinct effects can make an operation eligible for removal. The operation can be

useless, meaning that its result has no externally visible effect. Alternatively, the operation can be

unreachable, meaning that it cannot execute. If an operation falls into either category, it can be eliminated. The term

dead code is often used to mean either useless or unreachable code; we use the term to mean useless.

Useless

An operation is

useless if no operation uses its result, or if all uses of the result are, themselves dead.

Unreachable

An operation is

unreachable if no valid control-flow path contains the operation.

Removing useless or unreachable code shrinks the

ir form of the code, which leads to a smaller executable program, faster compilation, and, often, to faster execution. It may also increase the compiler's ability to improve the code. For example, unreachable code may have effects that show up in the results of static analysis and prevent the application of some transformations. In this case, removing the unreachable block may change the analysis results and allow further transformations (see, for example, sparse conditional constant propagation, or

sccp, in Section 10.7.1).

Some forms of redundancy elimination also remove useless code. For instance, local value numbering applies algebraic identities to simplify the code. Examples include

x + 0 ⇒ x, y × 1 ⇒ y, and

max(z, z) ⇒ z. Each of these simplifications eliminates a useless operation—by definition, an operation that, when removed, makes no difference in the program's externally visible behavior.

Because the algorithms in this section modify the program's control-flow graph (

cfg), we carefully distinguish between the terms

branch, as in an

iloccbr, and

jump, as in an

ilocjump. Close attention to this distinction will help the reader understand the algorithms.

10.2.1. Eliminating Useless Code

An operation can set a return value in several ways, including assignment to a call-by-reference parameter or a global variable, assignment through an ambiguous pointer, or passing a return value via a return statement.

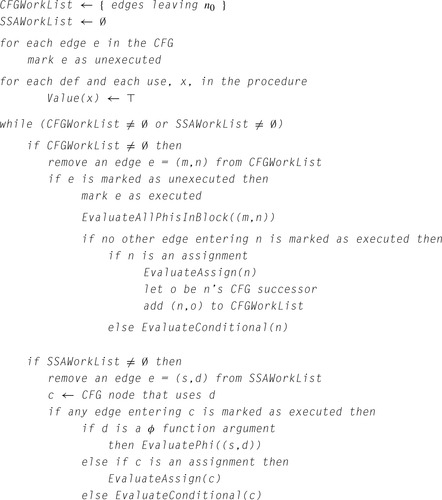

The classic algorithms for eliminating useless code operate in a manner similar to mark-sweep garbage collectors with the

IR code as data (see Section 6.6.2). Like mark-sweep collectors, they perform two passes over the code. The first pass starts by clearing all the mark fields and marking “critical” operations as “useful.” An operation is

critical if it sets return values for the procedure, it is an input/output statement, or it affects the value in a storage location that may be accessible from outside the current procedure. Examples of critical operations include a procedure's prologue and epilogue code and the precall and postreturn sequences at calls. Next, the algorithm traces the operands of useful operations back to their definitions and marks those operations as useful. This process continues, in a simple worklist iterative scheme, until no more operations can be marked as useful. The second pass walks the code and removes any operation not marked as useful.

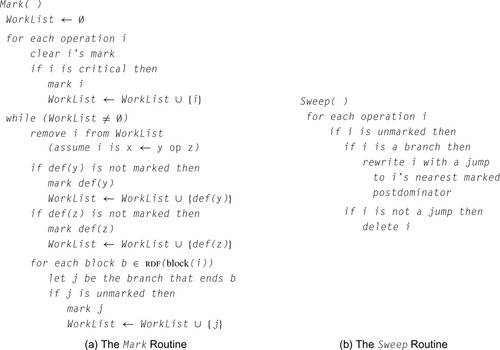

Figure 10.1 makes these ideas concrete. The algorithm, which we call

Dead, assumes that the code is in

ssa form.

ssa simplifies the process because each use refers to a single definition.

Dead consists of two passes. The first, called

Mark, discovers the set of useful operations. The second, called

Sweep, removes useless operations.

Mark relies on reverse dominance frontiers, which derive from the dominance frontiers used in the

ssa construction (see Section 9.3.2).

The treatment of operations other than branches or jumps is straightforward. The marking phase determines whether an operation is useful. The sweep phase removes operations that have not been marked as useful.

The treatment of control-flow operations is more complex. Every jump is considered useful. Branches are considered useful only if the execution of a useful operation depends on their presence. As the marking phase discovers useful operations, it also marks the appropriate branches as useful. To map from a marked operation to the branches that it makes useful, the algorithm relies on the notion of control dependence.

The definition of control dependence relies on

postdominance. In a

cfg, node

j postdominates node

i if every path from

i to the

cfg's exit node passes through

j. Using postdominance, we can define control dependence as follows: in a

cfg, node

j is control-dependent on node

i if and only if

1. There exists a nonnull path from

i to

j such that

j postdominates every node on the path after

i. Once execution begins on this path, it must flow through

j to reach the

cfg's exit (from the definition of postdominance).

2.

j does not strictly postdominate

i. Another edge leaves

i and control may flow along a path to a node not on the path to

j. There must be a path beginning with this edge that leads to the

cfg's exit without passing through

j.

Postdominance

In a

cfg,

j postdominates i if and only if every path from

i to the exit node passes through

j.

See also the definition of dominance on page 478.

In other words, two or more edges leave block

i. One or more edges leads to

j and one or more edges do not. Thus, the decision made at the branch-ending block

i can determine whether or not

j executes. If an operation in

j is useful, then the branch that ends

i is also useful.

This notion of control dependence is captured precisely by the

reverse dominance frontier of

j, denoted

rdf(

j). Reverse dominance frontiers are simply dominance frontiers computed on the reverse

cfg. When

Mark marks an operation in block

b as useful, it visits every block in

b's reverse dominance frontier and marks their block-ending branches as useful. As it marks these branches, it adds them to the worklist. It halts when that worklist is empty.

Sweep replaces any unmarked branch with a jump to its first postdominator that contains a marked operation. If the branch is unmarked, then its successors, down to its immediate postdominator, contain no useful operations. (Otherwise, when those operations were marked, the branch would have been marked.) A similar argument applies if the immediate postdominator contains no marked operations. To find the nearest useful postdominator, the algorithm can walk up the postdominator tree until it finds a block that contains a useful operation. Since, by definition, the exit block is useful, this search must terminate.

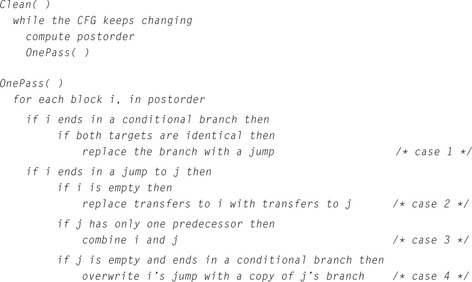

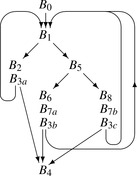

10.2.2. Eliminating Useless Control Flow

Optimization can change the

ir form of the program so that it has useless control flow. If the compiler includes optimizations that can produce useless control flow as a side effect, then it should include a pass that simplifies the

cfg by eliminating useless control flow. This section presents a simple algorithm called

Clean that handles this task.

Clean operates directly on the procedure's

cfg. It uses four transformations, shown in the margin. They are applied in the following order:

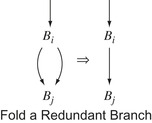

1.

Fold a Redundant Branch If

Clean finds a block that ends in a branch, and both sides of the branch target the same block, it replaces the branch with a jump to the target block. This situation arises as the result of other simplifications. For example,

B

i might have had two successors, each with a jump to

B

j. If another transformation had already emptied those blocks, then empty-block removal, discussed next, might produce the initial graph shown in the margin.

|

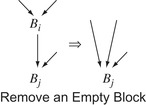

2.

Remove an Empty Block If

Clean finds a block that contains only a jump, it can merge the block into its successor. This situation arises when other passes remove all of the operations from a block

B

i. Consider the left graph of the pair shown in the margin. Since

B

i has only one successor,

B

j, the transformation retargets the edges that enter

B

i to

B

j and deletes

B

i from

B

j's set of predecessors. This simplifies the graph. It should also speed up execution. In the original graph, the paths through

B

i needed two control-flow operations to reach

B

j. In the transformed graph, those paths use one operation to reach

B

j.

|

3.

Combine Blocks If

Clean finds a block

B

i that ends in a jump to

B

j and

B

j has only one predecessor, it can combine the two blocks, as shown in the margin. This situation can arise in several ways. Another transformation might eliminate other edges that entered

B

j, or

B

i and

B

j might be the result of folding a redundant branch (described previously). In either case, the two blocks can be combined into a single block. This eliminates the jump at the end of

B

i.

|

4.

Hoist a Branch If

Clean finds a block

B

i that ends with a jump to an empty block

B

j and

B

j ends with a branch,

Clean can replace the block-ending jump in

B

i with a copy of the branch from

B

j. In effect, this hoists the branch into

B

i, as shown in the margin. This situation arises when other passes eliminate the operations in

B

j, leaving a jump to a branch. The transformed code achieves the same effect with just a branch. This adds an edge to the

cfg. Notice that

B

i cannot be empty, or else empty block removal would have eliminated it. Similarly,

B

i cannot be

B

j's sole predecessor, or else

Clean would have combined the two blocks. (After hoisting,

B

j still has at least one predecessor.)

|

Some bookkeeping is required to implement these transformations. Some of the modifications are trivial. To fold a redundant branch in a program represented with

iloc and a graphical

cfg,

Clean simply overwrites the block-ending branch with a jump and adjusts the successor and predecessor lists of the blocks. Others are more difficult. Merging two blocks may involve allocating space for the merged block, copying the operations into the new block, adjusting the predecessor and successor lists of the new block and its neighbors in the

cfg, and discarding the two original blocks.

Many compilers and assemblers have included an ad hoc pass that eliminates a jump to a jump or a jump to a branch.

Clean achieves the same effect in a systematic way.

Clean applies these four transformations in a systematic fashion. It traverses the graph in postorder, so that

B

i's successors are simplified before

B

i, unless the successor lies along a back edge with respect to the postorder numbering. In that case,

Clean will visit the predecessor before the successor. This is unavoidable in a cyclic graph. Simplifying successors before predecessors reduces the number of times that the implementation must move some edges.

In some situations, more than one of the transformations may apply. Careful analysis of the various cases leads to the order shown in Figure 10.2, which corresponds to the order in which they are presented in this section. The algorithm uses a series of

if statements rather than an

if-then-else to let it apply multiple transformations in a single visit to a block.

If the

cfg contains back edges, then a pass of

Clean may create additional opportunities—namely, unprocessed successors along the back edges. These, in turn, may create other opportunities. For this reason,

Clean repeats the transformation sequence iteratively until the

cfg stops changing. It must compute a new postorder numbering between calls to

OnePass because each pass changes the underlying graph. Figure 10.2 shows pseudo-code for

Clean.

|

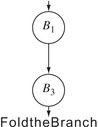

Clean cannot, by itself, eliminate an empty loop. Consider the

cfg shown in the margin. Assume that block

B2 is empty. None of

Clean's transformations can eliminate

B2 because the branch that ends

B2 is not redundant.

B2 does not end with a jump, so

Clean cannot combine it with

B3. Its predecessor ends with a branch rather than a jump, so

Clean can neither combine

B2 with

B1 nor fold its branch into

B1.

|

However, cooperation between

Clean and

Dead can eliminate the empty loop.

Dead used control dependence to mark useful branches. If

B1 and

B3 contain useful operations, but

B2 does not, then the

Mark pass in

Dead will decide that the branch ending

B2 is not useful because

B2 ∉

rdf(

B3). Because the branch is useless, the code that computes the branch condition is also useless. Thus,

Dead eliminates all of the operations in

B2 and converts the branch that ends it into a jump to its closest useful postdominator,

B3. This eliminates the original loop and produces the

cfg labelled “After Dead” in the margin.

|

In this form,

Clean folds

B2 into

B1, to produce the

cfg labelled “Remove

B2” in the margin. This action also makes the branch at the end of

B1 redundant.

Clean rewrites it with a jump, producing the

cfg labelled “Fold the Branch” in the margin. At this point, if

B1 is

B3's sole remaining predecessor,

Clean coalesces the two blocks into a single block.

|

This cooperation is simpler and more effective than adding a transformation to

Clean that handles empty loops. Such a transformation might recognize a branch from

Bi to itself and, for an empty

Bi, rewrite it with a jump to the branch's other target. The problem lies in determining when

Bi is truly empty. If

Bi contains no operations other than the branch, then the code that computes the branch condition must lie outside the loop. Thus, the transformation is safe only if the self-loop never executes. Reasoning about the number of executions of the self-loop requires knowledge about the runtime value of the comparison, a task that is, in general, beyond a compiler's ability. If the block contains operations, but only operations that control the branch, then the transformation would need to recognize the situation with pattern matching. In either case, this new transformation would be more complex than the four included in

Clean. Relying on the combination of

Dead and

Clean achieves the appropriate result in a simpler, more modular fashion.

10.2.3. Eliminating Unreachable Code

Sometimes the

cfg contains code that is unreachable. The compiler should find unreachable blocks and remove them. A block can be unreachable for two distinct reasons: there may be no path through the

cfg that leads to the block, or the paths that reach the block may not be executable—for example, guarded by a condition that always evaluates to false.

If the source language allows arithmetic on code pointers or labels, the compiler must preserve all blocks. Otherwise, it can limit the preserved set to blocks whose labels are referenced.

The former case is easy to handle. The compiler can perform a simple mark-sweep-style reachability analysis on the

cfg. First, it initializes a mark on each block to the value “unreachable.” Next, it starts with the entry and marks each

cfg node that it can reach as “reachable.” If all branches and jumps are unambiguous, then all unmarked blocks can be deleted. With ambiguous branches or jumps, the compiler must preserve any block that the branch or jump can reach. This analysis is simple and inexpensive. It can be done during traversals of the

cfg for other purposes or during

cfg construction itself.

Handling the second case is harder. It requires the compiler to reason about the values of expressions that control branches. Section 10.7.1 presents an algorithm that finds some blocks that are unreachable because the paths leading to them are not executable.

Section Review

Code transformations often create useless or unreachable code. To determine precisely which operations are dead, however, requires global analysis. Many transformations simply leave the dead operations in the

IR form of the code and rely on separate, specialized transformations, such as

Dead and

Clean, to remove them. Thus, most optimizing compilers include a set of transformations to excise dead code. Often, these passes run several times during the transformation sequence.

The three transformations presented in this chapter perform a thorough job of eliminating useless and unreachable code. The underlying analysis, however, can limit the ability of these transformations to prove that code is dead. The use of pointer-based values can prevent the compiler from determining that a value is unused. Conditional branches can occur in places where the compiler cannot detect the fact that they always take the same path; Section 10.8 presents an algorithm that partially addresses this problem.

Review Questions

1. Experienced programmers often question the need for useless code elimination. They seem certain that they do not write code that is useless or unreachable. What transformations from Chapter 8 might create useless code?

2. How might the compiler, or the linker, detect and eliminate unreachable procedures? What benefits might accrue from using your technique?

Hint: Write down the code to access

A[i, j] where

A is dimensioned

A[1:N, 1:M].

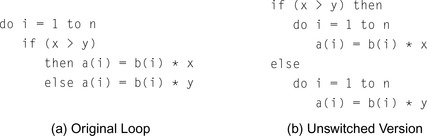

10.3. Code Motion

Moving a computation to a point where it executes less frequently than it executed in its original position should reduce the total operation count of the running program. The first transformation presented in this section,

lazy code motion, uses code motion to speed up execution. Because loops tend to execute many more times than the code that surrounds them, much of the work in this area has focused on moving loop-invariant expressions out of loops. Lazy code motion performs loop-invariant code motion. It extends the notions originally formulated in the available expressions data-flow problem to include operations that are redundant along some, but not all, paths. It inserts code to make them redundant on all paths and removes the newly redundant expression.

Some compilers, however, optimize for other criteria. If the compiler is concerned about the size of the executable code, it can perform code motion to reduce the number of copies of a specific operation. The second transformation presented in this section,

hoisting, uses code motion to reduce duplication of instructions. It discovers cases in which inserting an operation makes several copies of the same operation redundant without changing the values computed by the program.

10.3.1. Lazy Code Motion

Lazy code motion (

lcm) uses data-flow analysis to discover both operations that are candidates for code motion and locations where it can place those operations. The algorithm operates on the

ir form of the program and its

cfg, rather than on

ssa form. The algorithm use three different sets of data-flow equations and derives additional sets from those results. It produces, for each edge in the

cfg, a set of expressions that should be evaluated along that edge and, for each node in the

cfg, a set of expressions whose upward-exposed evaluations should be removed from the corresponding block. A simple rewriting strategy interprets these sets and modifies the code.

Redundant

An expression

e is

redundant at

p if it has already been evaluated on every path that leads to

p.

Partially Redundant

An expression

e is

partially redundant at

p if it occurs on some, but not all, paths that reach

p.

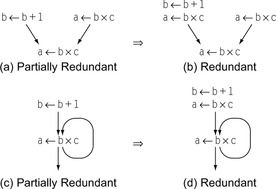

lcm combines code motion with elimination of both redundant and partially redundant computations. Redundancy was introduced in the context of local and superlocal value numbering in Section 8.4.1. A computation is

partially redundant at point

p if it occurs on some, but not all, paths that reach

p and none of its constituent operands changes between those evaluations and

p. Figure 10.3 shows two ways that an expression can be partially redundant. In Figure 10.3a,

a ← b × c occurs on one path leading to the merge point but not on the other. To make the second computation redundant,

lcm inserts an evaluation of

a ← b × c on the other path as shown in Figure 10.3b. In Figure 10.3c,

a ← b × c is redundant along the loop's back edge but not along the edge entering the loop. Inserting an evaluation of

a ← b × c before the loop makes the occurrence inside the loop redundant, as shown in Figure 10.3d. By making the loop-invariant computation redundant and eliminating it,

lcm moves it out of the loop, an optimization called

loopinvariant code motion when performed by itself.

In this context,

earliest means the position in the

cfg closest to the entry node.

The fundamental ideas that underlie

lcm were introduced in Section 9.2.4.

lcm computes both available expressions and anticipable expressions. Next,

lcm uses the results of these analyses to annotate each

cfg edge

with a set

Earliest(

i,

j) that contains the expressions for which this edge is the

earliest legal placement.

lcm then solves a third data-flow problem to find

later placements, that is, situations where evaluating an expression after its earliest placement has the same effect. Later placements are desirable because they can shorten the lifetimes of values defined by the inserted evaluations. Finally,

lcm computes its final products, two sets

Insert and

Delete, that guide its code-rewriting step.

with a set

Earliest(

i,

j) that contains the expressions for which this edge is the

earliest legal placement.

lcm then solves a third data-flow problem to find

later placements, that is, situations where evaluating an expression after its earliest placement has the same effect. Later placements are desirable because they can shorten the lifetimes of values defined by the inserted evaluations. Finally,

lcm computes its final products, two sets

Insert and

Delete, that guide its code-rewriting step.

Code Shape

lcm relies on several implicit assumptions about the shape of the code. Textually identical expressions always define the same name. Thus, each instance of

r

i + r

j always targets the same

r

k. Thus, the algorithm can use

r

k as a proxy for

r

i + r

j. This naming scheme simplifies the rewriting step; the optimizer can simply replace a redundant evaluation of

r

i + r

j with a copy from

r

k, rather create a new temporary name and insert copies into that name after each prior evaluation.

Notice that these rules are consistent with the register-naming rules described in Section 5.4.2.

lcm moves expression evaluations, not assignments. The naming discipline requires a second rule for program variables because they receive the values of different expressions. Thus, program variables are set by register-to-register copy operations. A simple way to divide the name space between variables and expressions is to require that variables have lower subscripts than any expression, and that in any operation other than a copy, the defined register's subscript must be larger than the subscripts of the operation's arguments. Thus, in

r

i + r

j ⇒ r

k, i < k and

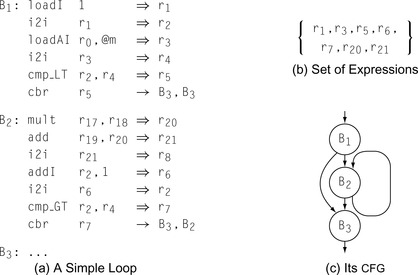

j < k. The example in Figure 10.4 has this property.

These naming rules allow the compiler to easily separate variables from expressions, shrinking the domain of the sets manipulated in the data-flow equations. In Figure 10.4, the variables are

r

2, r

4, and

r

8, each of which is defined by a copy operation. All the other names

r

1, r

3, r

5, r

6, r

7, r

20, and

r

21, represent expressions. The following table shows the local information for the blocks in the example:

| B 1 | B 2 | B 3 | |

|---|---|---|---|

| DE Expr | {r 1, r 3, r 5} | {r 7, r 20, r 21} | ∅ |

| UE Expr | {r 1, r 3} | {r 6, r 20, r 21} | ∅ |

| E xprK ill | {r 5,r 6,r 7} | {r 5,r 6,r 7} | ∅ |

DE

Expr(

b) is the set of downward-exposed expressions in block

b, UE

Expr(

b) is the set of upward-exposed expressions in

b, and E

xprK

ill(

b) is the set of expressions killed by some operation in

b. We will assume, for simplicity, that the sets for

B3 are all empty.

Available Expressions

The first step in

lcm computes available expressions, in a manner similar to that defined in Section 9.2.4.

lcm needs availability at the end of the block, so it computes A

vailO

ut rather than A

vailI

n. An expression

e is available on exit from block

b if, along every path from

n0 to

b,

e has been evaluated and none of its arguments has been subsequently defined.

lcm computes A

vailO

ut as follows:

For the example in Figure 10.4, this process produces the following sets:

| B 1 | B 2 | B 3 | |

|---|---|---|---|

| A vailO ut | {r 1, r 3, r 5} | {r 1, r 3, r 7, r 20, r 21} | … |

lcm uses the A

vailO

ut sets to help determine possible placements for an expression in the

cfg. If an expression

e ∈ A

vailO

ut(

b), the compiler could place an evaluation of

e at the end of block

b and obtain the result produced by its most recent evaluation on any control-flow path from

n0 to

b. If

e ∉ A

vailO

ut(

b), then one of

e's constituent subexpressions has been modified since

e's most recent evaluation and an evaluation at the end of block

b would possibly produce a different value. In this light, A

vailO

ut() sets tell the compiler how far forward in the

cfg it can move the evaluation of

e, ignoring any uses of

e.

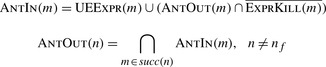

Anticipable Expressions

To capture information for backward motion of expressions,

lcm computes anticipability. Recall, from Section 9.2.4, that an expression is anticipable at point

p if and only if it is computed on every path that leaves

p and produces the same value at each of those computations. Because

lcm needs information about the anticipable expressions at both the start and the end of each block, we have refactored the equation to introduce a set A

ntI

n(

n) which holds the set of anticipable expressions for the entrance of the block corresponding to node

n in the

cfg.

lcm initializes the A

ntO

ut sets as follows:

Next, it iteratively computes A

ntI

n and A

ntO

ut sets for each block until the process reaches a fixed point.

|

For the example, this process produces the following sets:

| B 1 | B 2 | B 3 | |

|---|---|---|---|

| A ntI n | {r 1, r 3} | {r 20, r 21} | ∅ |

| A ntO ut | ∅ | ∅ | ∅ |

A

ntO

ut provides information about the safety of hoisting an evaluation to either the start or the end of the current block. If

x ∈ A

ntO

ut(

b), then the compiler can place an evaluation of

x at the end of

b, with two guarantees. First, the evaluation at the end of

b will produce the same value as the next evaluation of

x along any execution path in the procedure. Second, along any execution path leading out of

b, the program will evaluate

x before redefining any of its arguments.

Earliest Placement

Given solutions to availability and anticipability, the compiler can determine, for each expression, the earliest point in the program at which it can evaluate the expression. To simplify the equations,

lcm assumes that it will place the evaluation on a

cfg edge rather than at the start or end of a specific block. Computing an edge placement lets the compiler defer the decision to place the evaluation at the end of the edge's source, at the start of its sink, or in a new block in the middle of the edge. (See the discussion of critical edges in Section 9.3.5.)

For a

cfg edge

, an expression

e is in

Earliest(

i,

j) if and only if the compiler can legally move

e to

, an expression

e is in

Earliest(

i,

j) if and only if the compiler can legally move

e to

, and cannot move it to any earlier edge in the

cfg. The

Earliest equation encodes this condition as the intersection of three terms:

, and cannot move it to any earlier edge in the

cfg. The

Earliest equation encodes this condition as the intersection of three terms:

These terms define an earliest placement for

e as follows:

1.

e ∈ A

ntI

n(

j) means that the compiler can safely move

e to the head of

j. The anticipability equations ensure that

e will produce the same value as its next evaluation on any path leaving

j and that each of those paths evaluates

e.

2.

e ∉ A

vailO

ut(

i) shows that no prior computation of

e is available on exit from

i. Were

e ∈ A

vailO

ut(

i), inserting

e on

would be redundant.

would be redundant.

3. The third condition encodes two cases. If

e ∈ E

xprK

ill(

i), the compiler cannot move

e through block

i because of a definition in

i. If

e ∉ A

ntO

ut(

i), the compiler cannot move

e into

i because

e ∉ A

ntI

n(

k) for some edge

. If either is true, then

e can move no further than

. If either is true, then

e can move no further than

.

.

The

cfg's entry node,

n0 presents a special case.

lcm cannot move an expression earlier than

n0, so it can ignore the third term in the equation for

Earliest(

n0,

k), for any

k. The

Earliest sets for the continuing example are as follows:

| Earliest | {r 20, r 21} | ∅ | ∅ | ∅ |

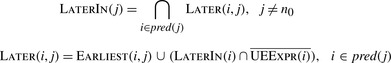

Later Placement

The final data-flow problem in

lcm determines when an earliest placement can be deferred to a later point in the

cfg while achieving the same effect.

Later analysis is formulated as a forward data-flow problem on the

cfg with a set L

aterI

n(

n) associated with each node and another set

Later(

i,

j) associated with each edge

.

lcm initializes the L

aterI

n sets as follows:

.

lcm initializes the L

aterI

n sets as follows:

Next, it iteratively computes L

aterI

n and

Later sets for each block. The computation halts when it reaches a fixed point.

|

As with availability and anticipability, these equations have a unique fixed point solution.

An expression

e ∈ L

aterI

n(

k) if and only if every path that reaches

k includes an edge

such that

e ∈

Earliest(

p,

q), and the path from

q to

k neither redefines

e's operands nor contains an evaluation of

e that an earlier placement of

e would anticipate. The

Earliest term in the equation for

Later ensures that

Later(

i,

j) includes

Earliest(

i,

j). The rest of that equation puts

e into

Later(

i,

j) if

e can be moved forward from

i, (

e ∈ L

aterI

n(

i)) and a placement at the entry to

i does not anticipate a use in

i, (

e ∉ UE

Expr(

i)).

such that

e ∈

Earliest(

p,

q), and the path from

q to

k neither redefines

e's operands nor contains an evaluation of

e that an earlier placement of

e would anticipate. The

Earliest term in the equation for

Later ensures that

Later(

i,

j) includes

Earliest(

i,

j). The rest of that equation puts

e into

Later(

i,

j) if

e can be moved forward from

i, (

e ∈ L

aterI

n(

i)) and a placement at the entry to

i does not anticipate a use in

i, (

e ∉ UE

Expr(

i)).

Given

Later and L

aterI

n sets,

e ∈ L

aterI

n(

i) implies that the compiler can move the evaluation of

e forward through

i without losing any benefit—that is, there is no evaluation of

e in

i that an earlier evaluation would anticipate, and

e ∈

Later(

i,

j) implies that the compiler can move an evaluation of

e in

i into

j.

For the ongoing example, these equations produce the following sets:

| B 1 | B 2 | B 3 | |

|---|---|---|---|

| L aterI n | ∅ | ∅ | ∅ |

| Later | {r 20, r 21} | ∅ | ∅ | ∅ |

Rewriting the Code

The final step in performing

lcm is to rewrite the code so that it capitalizes on the knowledge derived from the data-flow computations. To drive the rewriting process,

lcm computes two additional sets,

Insert and

Delete.

If

i has only one successor,

lcm can insert the computations at the end of

i. If

j has only one predecessor, it can insert the computations at the entry of

j. If neither condition applies, the edge

is a critical edge and the compiler should split it by inserting a block in the middle of the edge to evaluate the expressions in

Insert(

i,

j).

is a critical edge and the compiler should split it by inserting a block in the middle of the edge to evaluate the expressions in

Insert(

i,

j).

The

Delete set specifies, for a block, which computations

lcm should delete from the block.

Delete(

n0) is empty, of course, since no block precedes

n0. If

e ∈

Delete(

i), then the first computation of

e in

i is redundant after all the insertions have been made. Any subsequent evaluation of

e in

i that has upward-exposed uses—that is, the operands are not defined between the start of

i and the evaluation—can also be deleted. Because all evaluations of

e define the same name, the compiler need not rewrite subsequent references to the deleted evaluation. Those references will simply refer to earlier evaluations of

e that

lcm has proven to produce the same result.

For our example, the

Insert and

Delete sets are simple.

| Insert | {r 20, r 21} | ∅ | ∅ | ∅ |

| B 1 | B 2 | B 3 | |

|---|---|---|---|

| Delete | ∅ | {r 20, r 21} | ∅ |

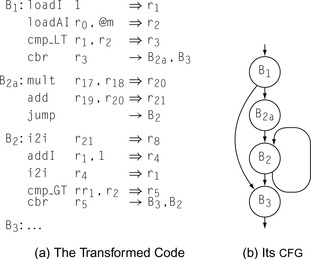

The compiler interprets the

Insert and

Delete sets and rewrites the code as shown in Figure 10.5.

lcm deletes the expressions that define

r

20 and

r

21 from

B2 and inserts them on the edge from

B1 to

B2.

Since

B1 has two successors and

B2 has two predecessors,

is a critical edge. Thus,

lcm splits the edge, creating a new block

B2a to hold the inserted computations of

r

20 and

r

21. Splitting

is a critical edge. Thus,

lcm splits the edge, creating a new block

B2a to hold the inserted computations of

r

20 and

r

21. Splitting

adds an extra

jump to the code. Subsequent work in code generation will almost certainly implement the

jump in

B2a as a fall through, eliminating any cost associated with it.

adds an extra

jump to the code. Subsequent work in code generation will almost certainly implement the

jump in

B2a as a fall through, eliminating any cost associated with it.

Coalescing

A pass that determines when a register to register copy can be safely eliminated and the source and destination names combined.

Notice that

lcm leaves the copy defining

r

8 in

B2.

lcm moves expressions, not assignments. (Recall that

r

8 is a variable, not an expression.) If the copy is unnecessary, subsequent copy coalescing, either in the register allocator or as a standalone pass, should discover that fact and eliminate the copy operation.

10.3.2. Code Hoisting

Code motion techniques can also be used to reduce the size of the compiled code. A transformation called

code hoisting provides one direct way of accomplishing this goal. It uses the results of anticipability analysis in a particularly simple way.

If an expression

e ∈ A

ntO

ut(b), for some block

b, that means that

e is evaluated along every path that leaves

b and that evaluating

e at the end of

b would make the first evaluation along each path redundant. (The equations for A

ntO

ut ensure that none of

e's operands is redefined between the end of

b and the next evaluation of

e along each path leaving

b.) To reduce code size, the compiler can insert an evaluation of

e at the end of

b and replace the first occurrence of

e on each path leaving

b with a reference to the previously computed value. The effect of this transformation is to replace multiple copies of the evaluation of

e with a single copy, reducing the overall number of operations in the compiled code.

To replace those expressions directly, the compiler would need to locate them. It could insert

e, then solve another data-flow problem, proving that the path from

b to some evaluation of

e is clear of definitions for

e's operands. Alternatively, it could traverse each of the paths leaving

b to find the first block where

e is defined—by looking in the block's UE

Expr set. Each of these approaches seems complicated.

A simpler approach has the compiler visit each block

b and insert an evaluation of

e at the end of

b, for every expression

e ∈ A

ntO

ut(

b). If the compiler uses a uniform discipline for naming, as suggested in the discussion of

lcm, then each evaluation will define the appropriate name. Subsequent application of

lcm or superlocal value numbering will then remove the newly redundant expressions.

Section Review

Compilers perform code motion for two primary reasons. Moving an operation to a point where it executes fewer times than it would in its original position should reduce execution time. Moving an operation to a point where one instance can cover multiple paths in the

cfg should reduce code size. This section presented an example of each.

lcm is a classic example of a data-flow driven global optimization. It identifies redundant and partially redundant expressions, computes the best place for those expressions, and moves them. By definition, a loop-invariant expression is either redundant or partially redundant;

lcm moves a large class of loop invariant expressions out of loops. Hoisting takes a much simpler approach; it finds operations that are redundant on every path leaving some point

p and replaces all the redundant occurrences with a single instance at

p. Thus, hoisting is usually performed to reduce code size.

The common implementation of sinking is called

cross jumping.

Review Questions

1. Hoisting discovers the situation when some expression

e exists along each path that leaves point

p and each of those occurrences can be replaced safely with an evaluation of

e at

p. Formulate the symmetric and equivalent optimization,

code sinking, that discovers when multiple expression evaluations can safely be moved forward in the code—from points that precede

p to

p.

2. Consider what would happen if you apply your code-sinking transformation during the linker, when all the code for the entire application is present. What effect might it have on procedure linkage code?

10.4. Specialization

In most compilers, the shape of the

ir program is determined by the front end, before any detailed analysis of the code. Of necessity, this produces general code that works in any context that the running program might encounter. With analysis, however, the compiler can often learn enough to narrow the contexts in which the code must operate. This creates the opportunity for the compiler to specialize the sequence of operations in ways that capitalize on its knowledge of the context in which the code will execute.

Major techniques that perform specialization appear in other sections of this book. Constant propagation, described in Sections 9.3.6 and 10.8, analyzes a procedure to discover values that always have the same value; it then folds those values directly into the computation. Interprocedural constant propagation, introduced in Section 9.4.2, applies the same ideas at the whole-program scope. Operator strength reduction, presented in Section 10.4, replaces inductive sequences of expensive computations with equivalent sequences of faster operations. Peephole optimization, covered in Section 11.5, uses pattern matching over short instruction sequences to find local improvement. Value numbering, explained in Section 8.4.1 and Section 8.5.1, systematically simplifies the

ir form of the code by applying algebraic identities and local constant folding. Each of these techniques implements a form of specialization.

Optimizing compilers rely on these general techniques to improve code. In addition, most optimizing compilers contain specialization techniques that specifically target properties of the source languages or applications that the compiler writer expects to encounter. The rest of this section presents three such techniques that target specific inefficiencies at procedure calls: tail-call optimization, leaf-call optimization, and parameter promotion.

10.4.1. Tail-Call Optimization

When the last action that a procedure takes is a call, we refer to that call as a tail call. The compiler can specialize tail calls to their contexts in ways that eliminate much of the overhead from the procedure linkage. To understand how the opportunity for improvement arises, consider what happens when

o calls

p and

p calls

q. When

q returns, it executes its epilogue sequence and jumps back to

p's postreturn sequence. Execution continues in

p until

p returns, at which point

p executes its epilogue sequence and jumps to

o's postreturn sequence.

If the call from

p to

q is a tail call, then no useful computation occurs between the postreturn sequence and the epilogue sequence in

p. Thus, any code that preserves and restores

p's state, beyond what is needed for the return from

p to

o, is useless. A standard linkage, as described in Section 6.5, spends much of its effort to preserve state that is useless in the context of a tail call.

At the call from

p to

q, the minimal precall sequence must evaluate the actual parameters at the call from

p to

q and adjust the access links or the display if necessary. It need not preserve any caller-saves registers, because they cannot be live. It need not allocate a new

ar, because

q can use

p's

ar. It must leave intact the context created for a return to

o, namely the return address and caller's

arp that

o passed to

p and any callee-saves registers that p preserved by writing them into the

ar. (That context will cause the epilogue code for

q to return control directly to

o.) Finally, the precall sequence must jump to a tailored prologue sequence for

q.

In this scheme,

q must execute a custom prologue sequence to match the minimal precall sequence in

p. It only saves those parts of

p's state that allow a return to

o. The precall sequence does not preserve callee-saves registers, for two reasons. First, the values from

p in those registers are no longer live. Second, the values that

p left in the

ar's register-save area are needed for the return to

o. Thus, the prologue sequence in

q should initialize local variables and values that

q needs; it should then branch into the code for

q.

With these changes to the precall sequence in

p and the prologue sequence in

q, the tail call avoids preserving and restoring

p's state and eliminates much of the overhead of the call. Of course, once the precall sequence in

p has been tailored in this way, the postreturn and epilogue sequences are unreachable. Standard techniques such as

Dead and

Clean will not discover that fact, because they assume that the interprocedural jumps to their labels are executable. As the optimizer tailors the call, it can eliminate these dead sequences.

With a little care, the optimizer can arrange for the operations in the tailored prologue for

q to appear as the last operations in its more general prologue. In this scheme, the tail call from

p to

q simply jumps to a point farther into the prologue sequence than would a normal call from some other routine.

If the tail call is a self-recursive call—that is,

p and

q are the same procedure—then tail-call optimization can produce particularly efficient code. In a tail recursion, the entire precall sequence devolves to argument evaluation and a branch back to the top of the routine. An eventual return out of the recursion requires one branch, rather than one branch per recursive invocation. The resulting code rivals a traditional loop for efficiency.

10.4.2. Leaf-Call Optimization

Some of the overhead involved in a procedure call arises from the need to prepare for calls that the callee might make. A procedure that makes no calls, called a leaf procedure, creates opportunities for specialization. The compiler can easily recognize the opportunity; the procedure calls no other procedures.

The other reason to store the return address is to allow a debugger or a performance monitor to unwind the call stack. When such tools are in use, the compiler should leave the save operation intact.

During translation of a leaf procedure, the compiler can avoid inserting operations whose sole purpose is to set up for subsequent calls. For example, the procedure prologue code may save the return address from a register into a slot in the

ar. That action is unnecessary unless the procedure itself makes another call. If the register that holds the return address is needed for some other purpose, the register allocator can spill the value. Similarly, if the implementation uses a display to provide addressability for nonlocal variables, as described in Section 6.4.3, it can avoid the display update in the prologue sequence.

The register allocator should try to use caller-saves registers before callee-saves registers in a leaf procedure. To the extent that it can leave callee-saves registers untouched, it can avoid the save and restore code for them in the prologue and epilogue. In small leaf procedures, the compiler may be able to avoid all use of callee-saves registers. If the compiler has access to both the caller and the callee, it can do better; for leaf procedures that need fewer registers than the caller-save set includes, it can avoid some of the register saves and restores in the caller as well.

In addition, the compiler can avoid the runtime overhead of activation-record allocation for leaf procedures. In an implementation that heap allocates

ars, that cost can be significant. In an application with a single thread of control, the compiler can allocate statically the

ar of any leaf procedure. A more aggressive compiler might allocate one static

ar that is large enough to work for any leaf procedure and have all the leaf procedures share that

ar.

If the compiler has access to both the leaf procedure and its callers, it can allocate space for the leaf procedure's

ar in each of its callers’

ars. This scheme amortizes the cost of

ar allocation over at least two calls—the invocation of the caller and the call to the leaf procedure. If the caller invokes the leaf procedure multiple times, the savings are multiplied.

10.4.3. Parameter Promotion

Ambiguous memory references prevent the compiler from keeping values in registers. Sometimes, the compiler can prove that an ambiguous value has just one corresponding memory location through detailed analysis of pointer values or array subscript values, or special case analysis. In these cases, it can rewrite the code to move that value into a scalar local variable, where the register allocator can keep it in a register. This kind of transformation is often called

promotion. The analysis to promote array references or pointer-based references is beyond the scope of this book. However, a simpler case can illustrate these transformations equally well.

Promotion

A category of transformations that move an ambiguous value into a local scalar name to expose it to register allocation

Consider the code generated for an ambiguous call-by-reference parameter. Such parameters can arise in many ways. The code might pass the same actual parameter in two distinct parameter slots, or it might pass a global variable as an actual parameter. Unless the compiler performs interprocedural analysis to rule out those possibilities, it must treat all reference parameters as potentially ambiguous. Thus, every use of the parameter requires a load and every definition requires a store.

If the compiler can prove that the actual parameter must be unambiguous in the callee, it can promote the parameter's value into a local scalar value, which allows the callee to keep it in a register. If the actual parameter is not modified by the callee, the promoted parameter can be passed by value. If the callee modifies the actual parameter and the result is live in the caller, then the compiler must use value-result semantics to pass the promoted parameter (see Section 6.4.1).

To apply this transformation to a procedure

p, the optimizer must identify all of the call sites that can invoke

p. It can either prove that the transformation applies at all of those call sites or it can clone

p to create a copy that handles the promoted values (see Section 10.6.2). Parameter promotion is most attractive in a language that uses call-by-reference binding.

Section Review

Specialization includes many effective techniques to tailor general-purpose computations to their detailed contexts. Other chapters and sections present powerful global and regional specialization techniques, such as constant propagation, peephole optimization, and operator strength reduction.

This section focused on optimizations that the compiler can apply to the code entailed in a procedure call. Tail-call optimization is a valuable tool that converts tail recursion to a form that rivals conventional iteration for efficiency; it applies to nonrecursive tail calls as well. Leaf procedures offer special opportunities for improvement because the callee can omit major portions of the standard linkage sequence. Parameter promotion is one example of a class of important transformations that remove inefficiencies related to ambiguous references.

Review Questions

1. Many compilers include a simple form of strength reduction, in which individual operations that have one constant-valued operand are replaced by more efficient, less general operations. The classic example is replacing an integer multiply of a positive number by a series of shifts and adds. How might you fold that transformation into local value numbering?

2. Inline substitution might be an alternative to the procedure-call optimizations in this section. How might you apply inline substitution in each case? How might the compiler choose the more profitable alternative?

10.5. Redundancy Elimination

A computation

x +

y is redundant at some point

p in the code if, along every path that reaches

p,

x +

y has already been evaluated and

x and

y have not been modified since the evaluation. Redundant computations typically arise as artifacts of translation or optimization.

We have already presented three effective techniques for redundancy elimination: local value numbering

lvn in Section 8.4.1, superlocal value numbering

svn in Section 8.5.1 and lazy code motion (

lcm) in Section 10.3.1. These algorithms cover the span from simple and fast

lvn to complex and comprehensive (

lcm). While all three methods differ in the scope that they cover, the primary distinction between them lies in the method that they use to establish that two values are identical. The next section explores this issue in detail. The second section presents one more version of value numbering, a dominator-based technique.

10.5.1. Value Identity versus Name Identity

lvn introduced a simple mechanism to prove that two expressions had the same value.

lvn relies on two principles. It assigns each value a unique identifying number—its value number. It assumes that two expressions produce the same value if they have the same operator and their operands have the same value numbers. These simple rules allow

lvn to find a broad class of redundant operations—any operation that produces a pre-existing value number is redundant.

With these rules,

lvn can prove that 2 +

a has the same value as

a + 2 or as 2 + b when

a and

b have the same value number. It cannot prove that

a +

a and 2 ×

a have the same value because they have different operators. Similarly, it cannot prove the

a + 0 and

a have the same value. Thus, we extend

lvn with algebraic identities that can handle the well-defined cases not covered by the original rule. The table in Figure 8.3 on page 424 shows the range of identities that

lvn can handle.

By contrast,

lcm relies on names to prove that two values have the same number. If

lcm sees

a +

b and

a +

c, it assumes that they have different values because

b and

c have different names. It has relies on a lexical comparison—name identity. The underlying data-flow analyses cannot directly accommodate the notion of value identity; data-flow problems operate a predefined name space and propagate facts about those names over the

cfg. The kind of ad hoc comparisons used in

lvn do not fit into the data-flow framework.

As described in Section 10.6.4, one way to improve the effectiveness of

lcm is to encode value identity into the name space of the code before applying

lcm.

lcm recognizes redundancies that neither

lvn nor

svn can find. In particular, it finds redundancies that lie on paths through join points in the

cfg, including those that flow along loop-closing branches, and it finds partial redundancies. On the other hand, both

lvn and

svn find value-based redundancies and simplifications that

lcm cannot find. Thus, encoding value identity into the name space allows the compiler to take advantage of the strengths of both approaches.

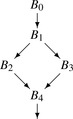

10.5.2. Dominator-Based Value Numbering

|

Chapter 8 presented both local value numbering

lvn and its extension to extended basic blocks (

Ebbs), called superlocal value numbering

svn. While

svn discovers more redundancies than

lvn, it still misses some opportunities because it is limited to

Ebbs. Recall that the

svn algorithm propagates information along each path through an

Ebb. For example, in the

cfg fragment shown in the margin,

svn will process the paths (

B0,

B1,

B2) and (

B0,

B1,

B3). Thus, it optimizes both

B2 and

B3 in the context of the prefix path (

B0,

B1). Because

B4 forms its own degenerate

Ebb,

svn optimizes

B4 without prior context.

From an algorithmic point of view,

svn begins each block with a table that includes the results of all predecessors on its

Ebb path. Block

B4 has no predecessors, so it begins with no prior context. To improve on that situation, we must answer the question: on what state could

B4 rely?

B4 cannot rely on values computed in either

B2 or

B3, since neither lies on every path that reaches

B4. By contrast,

B4 can rely on values computed in

B0 and

B1, since they occur on every path that reaches

B4. Thus, we might extend value numbering for

B4 with information about computations in

B0 and

B1. We must, however, account for the impact of assignments in the intervening blocks,

B2 or

B3.

Consider an expression,

x +

y, that occurs at the end of

B1 and again at the start of

B4. If neither

B2 or

B3 redefines

x or

y, then the evaluation of

x +

y in

B4 is redundant and the optimizer can reuse the value computed in

B1. On the other hand, if either of those blocks redefines

x or

y, then the evaluation of

x +

y in

B4 computes a distinct value from the evaluation in

B1 and the evaluation is not redundant.

Fortunately, the

ssa name space encodes precisely this distinction. In

ssa, a name that is used in some block

B

i can only enter

B

i in one of two ways. Either the name is defined by a

ϕ-function at the top of

B

i, or it is defined in some block that dominates

B

i. Thus, an assignment to

x in either

B2 or

B3 creates a new name for

x and forces the insertion of a

ϕ-function for

x at the head of

B4. That

ϕ-function creates a new

ssa name for

x and the renaming process changes the

ssa name used in the subsequent computation of

x +

y. Thus,

ssa form encodes the presence or absence of an intervening assignment in

B2 or

B3 directly into the names used in the expression. Our algorithm can rely on

ssa names to avoid this problem.

The other major question that we must answer before we can extend

svn to larger regions is, given a block such as

B4, how do we locate the most recent predecessor with information that the algorithm can use? Dominance information, discussed at length in Section 9.2.1 and Section 9.3.2, captures precisely this effect.

Dom(

B4) = {

B0,

B1,

B4}.

B4's immediate dominator, defined as the node in (

Dom(

B4) −

B4) that is closest to

B4, is

B1, the last node that occurs on all path from the entry node

B0 to

B4.

The dominator-based value numbering technique

dvnt builds on the ideas behind

svn. It uses a scoped hash table to hold value numbers.

dvnt opens a new scope for each block and discards that scope when they are no longer needed.

dvnt actually uses

ssa names as value numbers; thus the value number for an expression

ai ×

bj is the

ssa name defined in the first evaluation of

ai ×

bj. (That is, if the first evaluation occurs in

tk ←

ai ×

bj, then the value number for

ai ×

bj is

tk.)

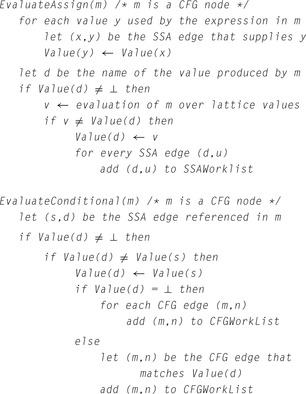

Figure 10.6 shows the algorithm. It takes the form of a recursive procedure that the optimizer invokes on a procedure's entry block. It follows both the

cfg for the procedure, represented by the dominator tree, and the flow of values in the

ssa form. For each block

B,

dvnt takes three steps: it processes the

ϕ-functions in

B, if any exist, it value numbers the assignments, and it propagates information into

B's successors and recurs on

B's children in the dominator tree.

Process the ϕ-Functions in B

dvnt must assign each

ϕ-function

p a value number. If

p is meaningless—that is, all its arguments have the same value number—

dvnt sets its value number to the value number for one of its arguments and deletes

p. If

p is redundant—that is, it produces the same value number as another

ϕ-function in

B—

dvnt assigns

p the same value number as the

ϕ-function that it duplicates.

dvnt then deletes

p.

Otherwise, the

ϕ-function computes a new value. Two cases arise. The arguments to

p have value numbers, but the specific combination of arguments have not been seen before in this block, or one or more of

p's arguments has no value number. The latter case can arise from a back edge in the

cfg.

Process the Assignments in B

Recall, from the

ssa construction, that uninitialized names are not allowed.

dvnt iterates over the assignments in

B and processes them in a manner analogous to

lvn and

svn. One subtlety arises from the use of

ssa names as value numbers. When the algorithm encounters a statement

x ←

y op z, it can simply replace

y with

VN[y] because the name in

VN[y] holds the same value as

y.

Propagate Information to B's Successors

Once

dvnt has processed all the

ϕ-functions and assignments in

B, it visits each of

B's

cfg successors

s and updates

ϕ function arguments that correspond to values flowing across the edge (

b,

s). It records the current value number for the argument in the

ϕ-function by overwriting the argument's

ssa name. (Notice the similarity between this step and the corresponding step in the renaming phase of the

ssa construction.) Next, the algorithm recurs on

B's children in the dominator tree. Finally, it deallocates the hash table scope that it used for

B.

This recursion scheme causes

dvnt to follow a preorder walk on the dominator tree, which ensures that the appropriate tables have been constructed before it visits a block. This order can produce a counterintuitive traversal; for the

cfg in the margin, the algorithm could visit

B4 before either

B2 or

B3. Since the only facts that the algorithm can use in

B4 are those discovered processing

B0 and

B1, the relative ordering of

B2,

B3, and

B4 is not only unspecified, it is also irrelevant.

|

Section Review

Redundancy elimination operates on the assumption that it is faster to reuse a value than to recompute it. Building on that assumption, these methods identify as many redundant computations as possible and eliminate duplicate computation. The two primary notions of equivalence used by these transformations are value identity and name identity. These different tests for identity produce different results.

Both value numbering and

lcm eliminate redundant computation.

lcm eliminates redundant and partially redundant expression evaluation; it does not eliminate assignments. Value numbering does not recognize partial redundancies, but it can eliminate assignments. Some compilers use a value-based technique, such as

dvnt, to discover redundancy and then encode that information into the name space for a name-based transformation such as

lcm. In practice, that approach combines the strength of both ideas.

Review Questions

1. The

dvnt algorithm resembles the renaming phase of the

ssa construction algorithm. Can you reformulate the renaming phase so that it performs value numbering as it renames values? What impact would this change have on the size of the

ssa form for a procedure?

2. The

dvnt algorithm does not propagate a value along a loop-closing edge—a back edge in the call graph.

lcm will propagate information along such edges. Write several examples of redundant expressions that a true “global” technique such as

lcm can find that

dvnt cannot.

10.6. Enabling Other Transformations