4

Costs and Benefits of Event-Processing Applications

This chapter describes methods for evaluating the costs and benefits of event-processing applications. This evaluation helps identify the range of applications for which event-processing approaches are suitable.

The costs and benefits of event-processing applications are related directly to business, because they visibly impact businesspeople as well as customers and suppliers. Therefore, costs and benefits should be evaluated by, or in conjunction with, business users. Applications that help businesspeople respond to events may change the way people work. For example, an application that detects risks and opportunities for traders in a company enables them to exploit these events and increase profits; however, a side effect of the application could be that trading patterns of different traders become increasingly similar and, as a consequence, correlations among trades increase. These types of influences are more likely to be apparent to people in the business than to IT experts.

Exploiting Events for Business Value

Millions of events are generated in enterprises every day, and many events are recorded in logs of various types. Enterprises can detect and record torrents of information generated by customers, suppliers, competitors, government agencies, and academic organizations. A study evaluating event-processing applications for a business has the benefit of identifying valuable, but unexploited, event sources inside and outside the enterprise. The study will also identify technology trends, such as decreasing costs of sensors, that allow the business to exploit entirely new sources of events. You will learn best practices for evaluating opportunities for using event-driven architecture (EDA) to solve business problems in Chapter 11. In this chapter you will learn the key categories of costs and benefits of event-processing approaches, which provide a foundation for analyzing whether EDA methods are appropriate for solving your business problems.

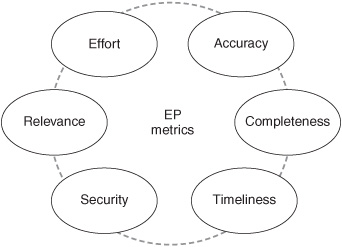

We organize the cost/benefit metrics into a collection of categories with the acronym REACTS, which stands for the following (see Figure 4-1):

![]() Relevance of information to a user

Relevance of information to a user

![]() Effort in tailoring a user’s interest profile to ensure that the user gets the information needed

Effort in tailoring a user’s interest profile to ensure that the user gets the information needed

Figure 4-1: Cost-benefit metrics for event-processing systems.

![]() Accuracy of detected events

Accuracy of detected events

![]() Completeness of detected events

Completeness of detected events

![]() Timeliness of responses

Timeliness of responses

![]() Security, safety, privacy, and provenance of information, and system reliability

Security, safety, privacy, and provenance of information, and system reliability

We first discuss each of these measures and show how designers trade off one measure against another in developing different applications.

Relevance

Information can be accurate but irrelevant. The relevance of information to a person depends, in part, on the person’s context. Information about road congestion due to an accident on a freeway in Los Angeles is relevant to people in the Los Angeles area but irrelevant to most people elsewhere. People pay attention to different issues at different times, so the relevance of information to a person changes over time.

Attention Amplification and Distraction

A benefit of a well-designed event-driven architecture (EDA) application is attention amplification: by filtering out irrelevant information and prioritizing relevant information, the application enables users to gain uninterrupted time to concentrate on important issues. Stephen R. Covey, in his popular book The 7 Habits of Highly Effective People, emphasizes the importance of prioritizing tasks so that you carry out activities that are important before those that appear urgent but are actually unimportant. A well-designed EDA application helps you do important tasks first: it acquires data from varied data sources, processes the data, identifies the information that deserves the most attention at the current time, and computes data that helps in executing responses. A poorly designed EDA application, on the other hand, is an attention distracter: it distracts agents from important activities by interrupting agents frequently to handle apparently urgent, but often irrelevant, events. Attention distracters are best turned off.

Note: A well-designed EDA application is an attention amplifier.

A precious, and increasingly scarce, resource is uninterrupted time during which you can give your undivided attention to the issues that truly matter to you. Applications that interrupt agents frequently, forcing them to pay attention to irrelevant data, create organizational attention deficit disorders with their concomitant huge costs. Critical information may not be seen if it is surrounded by irrelevant information, and systems that deliver too much irrelevant information are turned off or ignored.

The meanings of attention amplification and attention deficit are obvious when applied to human users of event-processing systems, but they also apply to software agents. Communicating irrelevant data consumes communication bandwidth and processing power unnecessarily. An important aspect of designing an event-processing application is determining what information obtained from data sources should be sent on for further processing and what should be discarded immediately.

Relevance is one of the metrics that businesses use when evaluating EDA technology. A question that you should ask when embarking on a smart systems application in general, and an EDA application in particular, is this: Will better relevance be obtained by continuing current business practices or by developing a new EDA application? The answer depends critically on how well the application is designed. Business as usual, without new technology, is better than a new system that interrupts your concentration by demanding your immediate attention for every piece of spam mail and irrelevant phone call. Well-designed EDA applications learn or can be told about your current context so that only relevant information is pushed to you.

Note: More irrelevant information, more quickly, is unproductive. An increasingly scarce resource is uninterrupted time to focus on what is important. A well-designed application enhances that precious commodity while a poorly designed one destroys it.

Enable Business Users to Tailor Systems to Their Needs

It is a best practice to allow end users (operations personnel or other business people) to control or modify the types of event notifications sent to them by the application. End users best understand tradeoffs between missing occasional critical information on the one hand and notification fatigue (weariness from getting too much irrelevant information) on the other. If an IT person or a top business executive decides which events will be pushed to the end user, their concern about the costs of missing relevant events may lead them to configure applications to push more data, including more irrelevant data, to operations personnel. Furthermore, IT staff members and senior executives cannot predict the changing contexts of the people who use EDA applications, so they may choose modalities of event notification, such as audible alarms, that are inappropriate for the current contexts of users.

Note: Enable end users to control the types of event notifications they receive.
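The idea of a user-controlled interest profile can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the `InterestProfile` class, its fields, and the event dictionary keys (`topic`, `region`, `priority`) are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class InterestProfile:
    """Hypothetical per-user profile that the end user edits directly."""
    topics: set = field(default_factory=set)    # event topics the user cares about
    regions: set = field(default_factory=set)   # locations relevant to the user's context
    min_priority: int = 1                       # suppress anything below this priority
    muted: bool = False                         # user-controlled "do not disturb" switch


def should_notify(event: dict, profile: InterestProfile) -> bool:
    """Return True only if the event is relevant to this user right now."""
    if profile.muted:
        return False
    return (
        event["topic"] in profile.topics
        and event["region"] in profile.regions
        and event["priority"] >= profile.min_priority
    )


# A commuter in Los Angeles who only wants significant traffic events.
commuter = InterestProfile(topics={"traffic"}, regions={"Los Angeles"}, min_priority=2)
events = [
    {"topic": "traffic", "region": "Los Angeles", "priority": 3},
    {"topic": "traffic", "region": "Chicago", "priority": 3},
    {"topic": "weather", "region": "Los Angeles", "priority": 1},
]
relevant = [e for e in events if should_notify(e, commuter)]
```

Only the Los Angeles traffic event passes the filter. Because the profile belongs to the user, the user can widen it, narrow it, or mute it as the context changes, without involving IT staff.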

Effort

This measure is the cost of the effort required to develop an EDA application that meets the individual needs of each user. Greater relevance is obtained by applications that are tailored by end users for their own specific needs, but this requires investment in mechanisms that enable business users to tailor IT systems and also requires each user’s time in configuring and reconfiguring systems to meet his or her individual needs. The overall effort in configuring systems falls into two broad categories: the IT and systems-development effort in implementing the initial application, and each user’s effort in tuning the application to match that user’s needs. Business and user needs change, so the effort in reconfiguring the application to suit each user will continue long after the initial design.

Effort Required to Tailor Systems for Different Business Users

Different users within an enterprise have different needs: a one-size-fits-all specification of events and responses doesn’t work. People in many roles participate in tailoring a system to fit the needs of each user. Professional services consultants provided by systems integrators and vendors tailor software to fit business needs. Technical staff do a great deal of background work and application integration to develop event-processing applications: they install sensors, tap into networks and data logs, and emit events from business processes so that those events can be captured. IT staff in the enterprise learn event specification notations provided by vendors and set up business-oriented templates for end users; power business users create their own macros; and, finally, each business user spends time learning how to use tools to tailor the system to that user’s individual needs. This effort takes time because the fundamental issue is not technology but rather understanding how to carry out business activities better. Don’t rush this part of application development.

Business Users Need to Tailor Event-Processing Applications Themselves

Business environments change and the needs of business users of event-processing applications change too. Business users must be able to tailor event-processing applications to meet their changing needs. For example, financial traders use strategies that they want to keep private, and when they change trading strategies they need to be able to reconfigure their applications themselves. Central IT organizations cannot easily tailor applications to meet each business user’s changing needs and role. This implies that traders and other business users must have notations or user interfaces that allow them to change applications to meet their needs. This implies, in turn, that business users must spend the time to learn the notation and become skilled in working with a user interface, but time spent by business users away from their businesses is expensive.

Business Dialects for Tailoring Event-Processing Applications

One way of dealing with the tradeoff between generality and ease of use is to give business users a library of specification templates that they can combine in simple ways to obtain more-complex specifications. The templates are specific to the business; thus the template library and template composition mechanisms are, in effect, a dialect for specifying event-processing applications for a particular business. Mashups in web development are an example of a technology that makes it easy to combine data from multiple tools. Integrated vertical applications for specific business problems, such as stock trading, logistics, or intrusion detection, reduce the amount of professional services and IT effort required to obtain an effective application for the business user. Nevertheless, business users need to participate in tuning the business-specific vertical application to their unique needs.
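As a rough illustration of a template library with simple composition, the following Python sketch treats each template as a function that tests an event, and provides two combinators for building more-complex specifications. The template names (`symbol_is`, `price_above`, `volume_above`), the combinators, and the event fields are assumptions invented for this example; a real business dialect would offer templates chosen by the business.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]
Template = Callable[[Event], bool]


# --- Library of simple, business-specific templates (hypothetical) ---
def symbol_is(symbol: str) -> Template:
    return lambda event: event.get("symbol") == symbol


def price_above(threshold: float) -> Template:
    return lambda event: event.get("price", 0.0) > threshold


def volume_above(threshold: int) -> Template:
    return lambda event: event.get("volume", 0) > threshold


# --- Composition mechanisms ---
def all_of(*templates: Template) -> Template:
    """Matches when every component template matches."""
    return lambda event: all(t(event) for t in templates)


def any_of(*templates: Template) -> Template:
    """Matches when at least one component template matches."""
    return lambda event: any(t(event) for t in templates)


# A business user combines templates without writing low-level code.
watch = all_of(symbol_is("XYZ"), any_of(price_above(100.0), volume_above(1_000_000)))
```

The user only chooses and combines templates; the more general, and harder to use, event specification notation stays hidden behind the library.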

Note: The time and effort required to tailor EDA applications to the needs of business users are significant; nevertheless, this investment pays off in the long run.

Event Design Is as Important as Database Design

Event design is as important, and requires as much skill, as database design for several reasons, including the following:

- Event processing will be used to repurpose “hard” infrastructure (steel pylons, wooden electric poles, transformers, and roads) for new applications. Hard infrastructure may last for 20 to 100 years, but the ways in which the infrastructure is used may need to be changed several times in that period. For example, society demands that utilities obtain energy for the electric grid from renewable but volatile sources, such as solar and wind power; this demand was not a factor when the hard infrastructure was set up. Event processing will enable hard infrastructure to be repurposed in cost-effective ways to satisfy changing needs, provided events and event-processing systems are designed well. Designers of event-processing applications require deep business and technology skills: they must be familiar with existing business infrastructure and must be able to estimate how it is likely to be repurposed in the future.
- Many EDA applications will be layered on top of existing applications. Deciding what event objects to store and what to discard requires knowledge of the business because an event object may prove to be valuable for an application that isn’t even on the drawing boards. Event objects generated by one division of an enterprise may prove to be very valuable for another division, and this inter-divisional value may not have been recognized. Recording everything, because the records may be useful to somebody at some time, is not a viable option because that requires too much storage. Designers of event-processing applications need business skill to identify business events in underlying applications that can be exploited across the enterprise now or in the future, and they need technical skill to determine event schemas and storage mechanisms.

An Example: The Smart Grid

Let’s look at the smart grid to understand the tradeoffs in the costs of configuring systems to meet each user’s specific needs. An important aspect of the smart electric grid is demand-response—a mechanism by which businesses and residents adapt demand to the availability of power in general and renewable power in particular. To understand better the importance of design and configuration effort, consider two (of the many) options that utilities can offer consumers:

- Simple option: Each year consumers select from a small number of options offered by the utility. For instance, consumers get rebates if they allow the utility to turn off some of their appliances, such as air conditioners, for a few hours a year. Consumers who sign up for rebates may override control by utilities (for example, they may override the utility’s signal to turn off an air conditioner on a hot day), but in this case consumers are charged penalties and rebates may no longer apply. Alternatively, consumers may elect to pay a higher price and not give the utility any control over their consumption of power.
- Sophisticated option: Homeowners and businesses buy power from, and sell power to, utilities from instant to instant, depending on the price of power, the ratepayer’s need for power, and the ratepayer’s ability to supply power. Homeowners and businesses may generate power using distributed energy resources such as solar and wind or by drawing power from batteries in plug-in hybrid electric vehicles or other devices. With this option, utilities or Independent System Operators (ISOs) will manage real-time markets for millions of small customers, many of whom will be both producers and consumers of power.

The effort required to enable users to customize the system to meet their requirements in the first option is minimal: customers merely check off a box on a form received by surface mail. Even for this simple situation, customers need to be educated about the penalties incurred by overriding control commands from the utility after customers sign up for rebates. The second, sophisticated option exploits smart technology more fully: it uses prices to obtain dynamic adjustment of supply and demand. The investment required in configuration technology, user interfaces, economics, psychology, and training of consumers is, however, much greater in the second option than in the first.

Note: Carefully analyze tradeoffs between the desired sophistication of a system and the effort required by business users to exploit the system effectively.

Accuracy

EDA applications are beneficial when they generate accurate responses and display accurate data. Some degree of inaccuracy is, however, likely. One type of inaccuracy is a false positive, the incorrect reporting of an event that does not actually occur. An example of a false positive is a false prediction that a tsunami will strike a beach at a specific time. An example of inaccuracy in an event parameter is a prediction that a destructive, category-five hurricane will hit a city when the actual storm that hits is only a minor tropical depression. Inappropriate decisions are made when an EDA application generates inaccurate data. A false tsunami warning results in beaches being evacuated unnecessarily, and an inaccurate prediction of a surge in the price of the stock of a company results in traders incurring losses.

Accuracy is different from relevance: information can be accurate and irrelevant. Likewise, information about a hurricane that is about to strike your home is relevant to you but can be inaccurate. Accuracy refers both to the accurate detection of an event and the execution of an appropriate response.

Costs of Inaccuracy

The costs of inaccuracy over the lifetime of an application depend on the frequency of inaccuracies and the average cost of an inaccurate response. One of the costs of inaccuracy in a newly deployed EDA application is the cost of losing customer trust; the cost of regaining end-user trust after a negative news story about the accuracy of the application can be very high. In some applications, rare but massive losses are more costly over the lifetime of the application than frequent small losses. Société Générale reported in 2008 that it lost over $7 billion due to one of its traders, and Barings Bank collapsed in 1995 after a trader lost over $1 billion. Carefully designed event-driven applications could have detected anomalous trading patterns and reduced the losses. Steps can be taken to prevent massive losses by carrying out sanity checks of proposed actions: unusual or high-risk responses are sent to another system for further approval while low-risk responses are executed directly. If an inappropriate high-cost response has been invoked, then compensatory activities are initiated.

Reducing Costs of Inaccuracy by Double Checking

For example, algorithmic trading systems are designed to reduce costs of “fat-finger” errors. A fat-finger trade is an erroneous trade in which the actual amount traded is greater than the amount that the trader intended. For example, a fat-finger error may result in a trade of a million shares of a stock when the desired trade was only a thousand shares. The name fat finger derives from errors caused by a person who accidentally types extra zeros in the amount to be traded or holds a finger on a key for too long. Some systems automatically detect and block probable fat-finger errors and, if necessary, execute compensatory actions. Such systems are compositions of two event-driven subsystems: the first generates proposals for responses, and the second filters out suspicious responses and takes compensatory actions.
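The two-stage composition can be sketched as follows. This is a simplified illustration under stated assumptions: the `Order` type, the `typical_shares` baseline, and the rule that flags any order more than ten times the typical size are hypothetical, and real systems use far richer checks.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Order:
    symbol: str
    shares: int


def sanity_check(order: Order, typical_shares: int, max_ratio: float = 10.0) -> str:
    """Second stage: decide how to route an order proposed by the first stage.

    Orders far larger than the trader's typical size are treated as
    probable fat-finger errors and held for further approval.
    """
    if order.shares > typical_shares * max_ratio:
        return "hold_for_approval"
    return "execute"


def route(proposals: List[Order], typical_shares: int) -> Tuple[List[Order], List[Order]]:
    """Split proposed orders into those executed directly and those held."""
    executed, held = [], []
    for order in proposals:
        if sanity_check(order, typical_shares) == "execute":
            executed.append(order)
        else:
            held.append(order)
    return executed, held


# The trader meant to buy 1,000 shares both times but typed extra zeros once.
proposals = [Order("XYZ", 1_000), Order("XYZ", 1_000_000)]
executed, held = route(proposals, typical_shares=1_000)
```

The low-risk order is executed immediately, while the suspicious one waits for approval. That wait is the price paid for the extra check.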

Note: Sanity checking reduces inaccuracy but slows down responses.

Costs of Inaccurate Predictions of Rare Events

The cost of inaccuracy is a fundamental problem in EDA applications that warn about rare events. When systems give alerts about rare events, the frequency of false alerts is likely to be higher, and sometimes much higher, than the frequency of the actual events. Alerts about huge trading opportunities are likely to be more frequent than actual opportunities, and alerts from sensors about emergencies in intensive care units are likely to be more frequent than actual emergencies. There is a solid business case for EDA applications that generate some amount of inaccurate data. The question for application designers is: what degree of inaccuracy is appropriate?
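A short calculation shows why false alerts tend to outnumber genuine ones when events are rare. The sketch below applies Bayes’ rule; the function name and the numbers (an event in 0.1% of monitoring periods, a detector that catches 99% of events and raises a false alert in 1% of quiet periods) are illustrative assumptions, not measurements from any real system.

```python
def fraction_of_alerts_genuine(base_rate: float,
                               detection_rate: float,
                               false_alert_rate: float) -> float:
    """Probability that an alert corresponds to a real event (Bayes' rule)."""
    true_alerts = detection_rate * base_rate             # alerts raised for real events
    false_alerts = false_alert_rate * (1.0 - base_rate)  # alerts raised when nothing happened
    return true_alerts / (true_alerts + false_alerts)


share = fraction_of_alerts_genuine(
    base_rate=0.001,        # assumed: event occurs in 0.1% of periods
    detection_rate=0.99,    # assumed: 99% of real events are detected
    false_alert_rate=0.01,  # assumed: 1% of quiet periods trigger an alert
)
print(f"{share:.1%} of alerts are genuine")
```

Under these assumptions only about 9% of alerts are genuine, even though the detector looks highly accurate when each figure is read on its own.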

Note: There is a business case for EDA applications that generate some inaccurate data; the design question is: what degree of inaccuracy is appropriate?

Tradeoffs between Accuracy and Other Metrics

The tradeoff between accuracy and other cost/benefit metrics is a complex one. Too many false alerts result in EDA applications being ignored, and this has negative consequences: first, the investment in the EDA application is wasted, and second, organizations that depend on these applications may have a false sense of security. The costs of inaccuracy are nonlinear: when genuine alarms occur only once a year, the cost of ten false alarms a day is more than ten times the cost of a single false alarm a day. Designs of EDA systems with absolutely zero inaccuracy are likely to generate information so late that it has little value, or produce fewer genuine alerts, or cost too much. Design tradeoffs are discussed in Chapter 11 on best practices.

Completeness

A system that provides only accurate information but does not provide all the information required to make decisions can be very costly. An example of incomplete information is a false negative: a response that is not executed because an event was not detected. A false positive is an erroneous signal that claims that a particular state change took place when it really didn’t, whereas a false negative is the absence of a signal that a particular state change took place when it really did take place. In the smart electric grid, for example, a false positive is a signal that the grid is overloaded when it is not, and a false negative is the absence of a signal that the grid is overloaded when it truly is overloaded. A consequence of a false positive in this application is that demand for power may be reduced by turning off appliances forcibly and unnecessarily, whereas a consequence of a false negative is a possible brownout.

Relative Costs of False Negatives and False Positives

The costs of incomplete information are, in many cases, higher than the costs of inaccurate information. The cost of a false negative—no warning—when a tsunami strikes is measured in lives lost, property destroyed, and sea water inundating agricultural fields. The cost of a false warning of a tsunami includes the costs of clearing beaches unnecessarily, the negative impact on tourism, and possibly alarm fatigue from too many false warnings. In this and many other applications (see the smart grid example), the cost of a single false negative is much higher than the cost of a single false positive.

Since the cost of each false negative is often higher than the cost of each false positive, it is tempting to turn the tradeoff dial toward generating many more false positives with the goal of reducing the likelihood of false negatives by a small amount. Don’t yield to this temptation. The total costs of false positives don’t increase linearly with the frequency of false positives—doubling the frequency more than doubles the cost.
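One way to picture this nonlinearity is with a cost function that grows faster than the alert frequency. The power-law form and the exponent of 1.5 below are purely illustrative assumptions standing in for effects such as alarm fatigue; the chapter does not specify a formula.

```python
def false_positive_cost(alerts_per_day: float,
                        unit_cost: float = 1.0,
                        fatigue_exponent: float = 1.5) -> float:
    """Assumed daily cost of false alerts.

    An exponent greater than 1 models the idea that each additional
    false alert costs more than the previous one, because users start
    to distrust and ignore the system.
    """
    return unit_cost * alerts_per_day ** fatigue_exponent


one_a_day = false_positive_cost(1)   # 1.0 cost unit
ten_a_day = false_positive_cost(10)  # about 31.6 cost units, not 10
```

With this assumed shape, ten false alerts a day cost more than three times what a linear estimate would suggest, which is why trading a small drop in false negatives for a large rise in false positives can be a poor bargain.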

Note: The cost of a single false negative—absence of critical information—is usually much higher than the cost of a single false positive—the presence of inaccurate information; however, in many systems the frequency of false positives is much higher than the frequency of false negatives.

Sanity Checks Don’t Work Well for False Negatives

The costs of false positives can be limited, in some cases, by double-checking data or sanity checking information for reasonability. A sell-off in United Airlines (UAL) shares occurred after a report about the company declaring bankruptcy appeared on a website on September 8, 2008, but shares bounced back after investors realized that the report was about the company’s 2002 bankruptcy filing. Double-checking data reduces the likelihood of responding to inaccurate data. Generally, costs of false negatives cannot be controlled by double checking or sanity checking in the same way. Suppose a company has gone bankrupt, but a trader has received no information about the bankruptcy. Usually, the trader cannot deduce that the company is bankrupt from the absence of information about bankruptcy. In general, you cannot deduce the existence of a false negative from the absence of information.

Business Cases for Responding to Frequent Events and Rare Events

Business decisions are taken in the absence of complete information. Some degree of incompleteness is inevitable. An enterprise determines whether to adopt an event-processing application, in part, by evaluating the improvement in the quality of decisions due to more complete information engendered by EDA applications. Making the business case for more complete information offered by EDA applications is easier for applications that detect and respond to events frequently. RFID (radio frequency identification) applications for handling supplies detect events continuously in regular operations, and so the business case for better information is clear. Moreover, an enterprise can measure the benefits of such an application after it is deployed and justify the investment in a quantitative fashion. The business case is more nuanced when the application detects and responds to rare, but important, events. Quantitative justification of the investment, by making measurements before and after the application is deployed, is also more difficult in these cases.

Timeliness

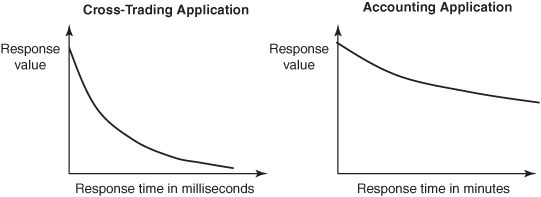

The effectiveness of a response depends on its timeliness. A tsunami warning issued after a tsunami strikes has little value. The value of a response to an event almost always decays with increased response time. The decrease in value of a response as response time increases is captured in a value-time function that plots response time on the X axis and response value on the Y axis, as shown in Figure 4-2. The value of a response drops significantly when response time increases by even a few milliseconds in some applications, while in other applications the value of a response may not decrease much even when response times increase by minutes. The costs of not dealing rapidly with threats and opportunities in milliseconds are huge in missile-defense and algorithmic-trading applications, whereas the costs of not responding in minutes, or even hours, are not high in some accounting applications. Even within a single application, such as the smart electric grid, the value of information as a function of delay varies from function to function—for instance, delays of fewer than 1 cycle (with 60 cycles per second) are beneficial for automatic circuit-switching functions, whereas delays of hours are acceptable for billing functions.

The Value of a Response Decreases with Delay

The rate at which the value of a response decays with response time depends on the maximum rate of change of the evolving threat or opportunity and the costs of not reacting to the threat or opportunity. Consider, for example, the Adverse Event Reporting System (AERS) used by the Food and Drug Administration (FDA) to monitor side effects of prescription drugs. The cost of delivery of inappropriate drugs to patients is huge, but the rate of change of the evolving threat is small—few patients are likely to be injured by inappropriate prescriptions of the drug in the next few milliseconds. Detecting possible side effects may take hours. Responses in seconds are not expected in AERS because such responses aren’t cost effective. Though faster is usually better, evaluate the costs and benefits of increased timeliness carefully before investing in faster systems.

Figure 4-2: Value-time functions.
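A value-time function can be sketched as a simple decay curve. The exponential shape, the `half_life_seconds` parameter, and the specific half-lives below are assumptions chosen for illustration; they are not taken from Figure 4-2, and real value-time functions may have quite different shapes, such as a sharp drop at a deadline.

```python
def response_value(delay_seconds: float,
                   initial_value: float,
                   half_life_seconds: float) -> float:
    """Value of a response after a given delay, assuming exponential decay.

    The value halves every `half_life_seconds`.
    """
    return initial_value * 0.5 ** (delay_seconds / half_life_seconds)


delay = 0.010  # a 10-millisecond delay

# Assumed half-lives: 5 ms for algorithmic trading, one day for billing.
trading_value = response_value(delay, initial_value=100.0, half_life_seconds=0.005)
billing_value = response_value(delay, initial_value=100.0, half_life_seconds=86_400.0)
```

Under these assumptions the same 10-millisecond delay removes three quarters of the value of a trading response but leaves the value of a billing response essentially unchanged, which is the comparison to make before paying for a faster system.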

Accuracy and completeness of event detections are critical in determining timeliness: detections based on less accurate and less complete information can be made more quickly, leaving more time to respond to events.

Note: Faster detections of events leave more time to respond to them, but detections based on more accurate and complete information require more time for evaluation and leave less time for response.

Designers developing event-processing applications today have to consider the value-time function as it is today and as it is likely to be in the future. The value of rapid responses is likely to increase as business moves faster.

Tradeoffs between Timeliness and Accuracy

An analysis of the tradeoff between the incremental costs of systems that deliver faster responses and the incremental benefits accruing from greater speed shows that reducing response times by milliseconds does have benefits in many fully automated applications such as cross-trading. In cross-trading, an asset manager matches and then executes trades directly between client buyers and client sellers of an item without first sending the requests (bids and asks) to an exchange. Regulations prohibit an asset manager from holding buy or sell requests for more than a short time before passing the requests on to an exchange. Reducing delay between the initiation of a buy or sell order and the detection of the order by the cross-trading application by even a few milliseconds is valuable. If orders can be held by the application for a few milliseconds more, then the application can hold more orders before sending them to the exchange, and this gives the application both more time to detect matches and more orders to match.

An Example of the Timeliness-Accuracy Tradeoff from Seismology

The tradeoff between timeliness and other cost/benefit measures is illustrated by the following example from seismology. Networks of sensors and accelerometers detect shaking near geological fault lines and send data electronically to sites that analyze the data and issue alerts if appropriate. When a rupture occurs along a fault line, seismic waves emanate from the points of rupture; these waves can cause intense shaking many miles from the rupture. Seismic waves move along the earth’s surface much more slowly than data sent electronically, so data from sensors can reach populated areas before intense shaking starts. Population centers, depending on where they are located, can receive warnings a few seconds to several hundred seconds before severe shaking starts. The responses to such warnings are to slow down trains, stop elevators at floors and open elevator doors, open fire station doors, and secure electric power and communication networks. The challenges in building the application include:

Completeness: Identify areas where dangerous shaking is likely to take place.

Accuracy: Issue warnings only for regions where dangerous shaking will take place.

Timeliness: Issue warnings early enough that effective responses can be executed.

The system issues a warning based on sensor data accrued over a time interval after faults start to rupture. More sensor data can be obtained and more calculations can be carried out over longer time intervals. So, the accuracy and completeness of predictions improves with more time after the initial rupture. But, the longer the system waits to make a prediction, the less time there is to respond effectively. The tradeoff is between giving earlier, possibly erroneous warnings on the one hand, and giving later, more-accurate warnings on the other. This fundamental tradeoff has to be considered in relationship to tradeoffs among other measures such as total cost, effort, and security.
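The tradeoff described above can be sketched numerically. The model below is an assumption made only for illustration: prediction accuracy is taken to approach 1 exponentially as the system waits, and the effectiveness of a response is taken to be proportional to the time left before shaking starts, up to the time the response needs. The function names, the accuracy curve, and all parameter values are hypothetical and do not describe any real early-warning system.

```python
import math


def expected_benefit(wait_s: float, lead_time_s: float,
                     accuracy_tau_s: float, response_needed_s: float) -> float:
    """Assumed benefit of issuing a warning wait_s seconds after the rupture.

    lead_time_s:       seconds between the rupture and severe shaking.
    accuracy_tau_s:    time constant of the assumed accuracy curve.
    response_needed_s: seconds needed to carry out the full response.
    """
    accuracy = 1.0 - math.exp(-wait_s / accuracy_tau_s)
    remaining_s = max(0.0, lead_time_s - wait_s)
    effectiveness = min(1.0, remaining_s / response_needed_s)
    return accuracy * effectiveness


def best_wait(lead_time_s: float, accuracy_tau_s: float,
              response_needed_s: float, step_s: float = 0.5) -> float:
    """Search a grid of waiting times for the one with the highest benefit."""
    candidates = [i * step_s for i in range(int(lead_time_s / step_s) + 1)]
    return max(candidates,
               key=lambda w: expected_benefit(w, lead_time_s,
                                              accuracy_tau_s, response_needed_s))


print(best_wait(lead_time_s=30.0, accuracy_tau_s=5.0, response_needed_s=10.0))
```

Warning immediately yields no benefit in this model because nothing is yet known, and warning at the last moment yields none because no time is left to act; the best waiting time lies between the two.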

An Example of the Timeliness-Accuracy Tradeoff from Advertising

Advertisements, or sponsored links, shown with results of web searches and e-mail are selected in a timely manner. Users get impatient after waiting for more than a few seconds; so companies that offer services, such as web search, determine within those few seconds the appropriate sponsored links to show you. The companies execute complex algorithms to maximize their revenues based on the types of contracts they have with advertisers and their estimates of your behavior. They also have to deal with the tradeoff between timeliness and optimization—more time will help them show you ads that are most meaningful to you, but if the delay is too long, you will use an alternate system.

Better Timeliness from Predictive Systems

Systems that predict and respond to situations before they become critical give more time to respond. Many event-processing applications are predictive, which is why “the predictive enterprise” is one of the management aspirations that are specifically targeted by event processing. The benefits of prediction can also be captured by the value-time function, where zero time is the point at which the event starts; positive time is the time that elapses after the event starts; and negative time is the time before the event. Predictions of future events are, however, more likely to be inaccurate and incomplete than detections of the past.

Ways to Improve Timeliness

Timeliness of event-driven applications can be improved at any stage of the process: by receiving data more quickly, by aggregating and analyzing data faster, by responding more rapidly, or by predicting events farther into the future. For example, timeliness in algorithmic trading applications can be improved by obtaining trading data directly from exchanges rather than from slower, consolidated feeds. Timeliness can also be improved by using faster message-oriented middleware (MOM) software, faster processors, and effective use of parallel systems such as networks of multiprocessor machines. Designers of event-processing systems make reasoned tradeoffs between timeliness and other parameters. These tradeoffs must consider the response time of the entire business application, not merely the time spent in a single component such as the event detector.
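The point about whole-application response time can be checked with simple arithmetic. The sketch below assumes the stages run one after another so that their latencies add. The stage names and the millisecond figures are invented for illustration.

```python
def end_to_end_ms(stage_latency_ms: dict) -> float:
    """Total response time, assuming stages run strictly in sequence."""
    return sum(stage_latency_ms.values())


def fraction_saved(stage_latency_ms: dict, stage: str, speedup: float) -> float:
    """Fraction of end-to-end time saved by making one stage `speedup` times faster."""
    improved = dict(stage_latency_ms)
    improved[stage] = improved[stage] / speedup
    before = end_to_end_ms(stage_latency_ms)
    return (before - end_to_end_ms(improved)) / before


# Hypothetical latency budget for one event, in milliseconds.
pipeline = {"acquire": 40.0, "detect": 5.0, "decide": 15.0, "respond": 140.0}

for stage in pipeline:
    saved = fraction_saved(pipeline, stage, speedup=2.0)
    print(f"doubling the speed of {stage!r} saves {saved:.1%} end to end")
```

In this assumed budget, doubling the speed of the event detector saves only a little over one percent of the total, while the same improvement in the response stage saves about a third, so investment is better directed at the stage that dominates.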

Security

Security is a concern for users of all software systems. It is, however, a particular concern for users of event-driven applications, because these applications are often critical to business. For example, electric power transmission companies that respond to possible brownouts by turning appliances on and off in homes via the smart electric grid must prevent hackers from orchestrating attacks that result in brownouts. The problem of cyber attack wasn’t as acute for utilities not so long ago when the companies’ IT networks didn’t reach homes and expose more points to attackers. Security is becoming more important and more challenging with increasingly tight integration of critical infrastructure—electric power, gas, water, roads, and air traffic—with sensors, responders, and IT woven into the infrastructure.

Note: Security is one of the biggest hurdles to the widespread deployment of EDA applications.

Event-Processing Applications and Customer Privacy

The broad area of security includes protection of privacy. Many event-driven applications acquire and store a great deal of personal information. An application that detects and responds to a customer’s location by monitoring mobile phones acquires information on where the customer has been. Cars equipped with GPS and telemonitoring devices can inform remote vehicle management sites about speeds at which cars are driven. Car insurance rates may depend on estimates of driving habits gathered from sensor data on cars. Many people don’t object to making some event types, such as car locations, public. Many people make videos public—and videos are event objects. Event objects, like diamonds, are forever. Event objects can be analyzed by everybody who can get access to them at any time—even long after the event. Business intelligence (BI) can be used to learn a great deal about a person by fusing event objects recorded over the years. Copyright laws can be used to prevent proliferation of copies of event objects, but finding and destroying all copies is an intractable task. Furthermore, copyright laws may not prevent people and companies from maintaining information deduced from analysis of event objects even if the objects themselves are protected by copyright.

Note: Protection of privacy is an increasing concern. But, event objects, like diamonds, can be forever. Event objects can be analyzed by everybody who can get access to them, even long after the event.

Smart systems are smart because they respond better to your needs and the needs of society and the environment; but responding better to your needs also requires that these systems know more about you. Consumers and regulatory agencies are already concerned with the potential invasion of privacy from smart systems. This issue will become even more critically important in the future.

Cyber-Security of Infrastructure

An attacker can find many ways to break into an EDA application. An attacker can spoof the enterprise, pretending to be a customer, or spoof the customer, pretending to be the enterprise. In the case of the smart electric grid, a customer can attempt to change the signals sent from his home to the grid to reduce his bill. More dangerously, malicious hackers can attempt to cause brownouts by sending signals that turn on appliances in homes and overload the system. Sensors and responders are becoming more widespread and more exposed; this gives malicious hackers more points at which to attack the system.

Preventing attackers from bringing down the system requires understanding the relationship between potential attacks and the underlying physical system; in the case of the electric power system, this requires understanding the effect of cyber-attack scenarios on the electromechanical system consisting of generation, transmission, distribution, and consumption systems. Cyber-physical security, that is, understanding and managing the relationship between attacks on the cyber-infrastructure and the underlying physical, biological, or social system, is becoming increasingly important as EDA becomes widespread.

Event Processing for Detecting Attacks from Within

Not all attacks come from agents outside the enterprise; losses due to rogue traders at some major institutions (such as Société Générale and Barings Bank) were due to agents within the enterprise. Detection of insider attacks requires that EDA applications detect behavior that indicates attacks, and this is difficult when the attacker knows the detection algorithms. As systems—roads, ports, oil fields, and banks—get smarter, with event processing carried out at every step, the consequences of insider attacks get ever more serious.

The area of security includes forensics (understanding what went wrong when a system was attacked or when errors were made), and BI tools operating on event-object repositories help here. An aspect of forensics is event provenance: descriptions of the data items used to generate an event object and of the process by which the event object was generated. This highlights the importance of careful design of event schemas and of the links between simple event objects and the complex event objects created from them.
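One way to picture event provenance is as links from each complex event object back to the event objects it was derived from, together with the name of the rule that produced it. The sketch below is a minimal illustration of that idea, not a schema from any particular product. The class `EventObject`, its fields, the rule names, and the sample events are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EventObject:
    event_id: str
    event_type: str
    derived_by: Optional[str] = None           # rule that produced a complex event
    sources: Tuple["EventObject", ...] = ()    # event objects it was derived from


def base_events(event: EventObject) -> List[str]:
    """Return the ids of the simple events behind an event, in first-seen order."""
    if not event.sources:
        return [event.event_id]
    ids: List[str] = []
    for source in event.sources:
        for event_id in base_events(source):
            if event_id not in ids:
                ids.append(event_id)
    return ids


# Hypothetical trail: two trades trigger a limit breach, and the breach
# plus a third trade trigger an alert.
t1 = EventObject("t1", "trade")
t2 = EventObject("t2", "trade")
t3 = EventObject("t3", "trade")
breach = EventObject("c1", "limit_breach", "exposure_rule", (t1, t2))
alert = EventObject("c2", "trading_alert", "pattern_rule", (breach, t3))

print(base_events(alert))  # ['t1', 't2', 't3']
```

A forensic investigator who starts from the alert can follow the `sources` links to see both the simple events involved and the rules applied at each step.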

System Resilience

Resilience and robustness are related to security and fall under the broad rubric of security costs and benefits. Systems with standby or surplus capacity are generally more robust. The electric grid is robust because the independent system operator (ISO) that manages the grid can call upon spinning reserves to supply additional power the instant it is needed. A fender-bender on a freeway doesn’t cause massive delays when traffic can flow along alternate lanes. A problem with an airplane doesn’t cause hours or days of delays for passengers when there are spare parts and spare planes available immediately. We are now increasingly relying on EDA to make our systems smarter and more efficient; we are using more information technology so that we can use less steel, burn less fossil fuel, build fewer power plants, and reduce our impact on the ecosystem. But this also means that we are operating critical systems closer to the edge, with less spare capacity. And that’s why EDA applications must be reliable.

Impact of Interconnectedness on Security

The demand for event processing is driven, in part, by increasing global connectedness. Event-processing applications, in turn, drive increasing connectedness. EDA applications enable traders in New York and London to respond in seconds to changes in Shanghai. Electric power grids in Europe and North America are being consolidated. Increasing connectedness can lead to greater resilience: shortages at points in the network can be supplied from surpluses at other points. Tighter coupling across larger networks can also lead to rare, but catastrophic, events on a regional or even global scale. A problem in the power grid in Ohio caused a blackout for over 50 million people in the United States and Canada on August 14, 2003. Errors may propagate and failures can cascade across interconnected networks. EDA applications can monitor for errors and respond by taking action to prevent system failure. As EDA helps supply chains, stock exchanges, power grids, energy supplies, and water resources across regions become more interdependent, careful designs and alert operations are required to reduce the likelihood of rare but cataclysmic network-wide failures.

Security problems are not new; however, smart EDA applications woven into the fabric of daily life make security problems much more severe.

Note: The cost of ensuring security is a large part of the overall cost of EDA applications, but the cost of not ensuring security is much higher.

Summary

Because EDA applications have a direct, visible impact on business, particular attention must be paid to business participation in evaluations of costs and benefits. The analysis of costs and benefits will help you determine whether to implement an EDA application for a business problem, to use alternative technologies, or to make no changes at all. The REACTS cost-benefit measures are more important in EDA applications than in other types of applications. Use the REACTS measures to help you design the best EDA application for your business problem.

All software systems have costs and benefits. The discussion in this chapter has been restricted to features that are more typical of event-processing applications than of other software applications. Be aware of one more important cost: because the discipline of business event processing is relatively new, finding people with expertise in both your business and event-processing technologies will be difficult. Chapter 11 discusses how enterprises can deal with this issue.