Introduction to DevOps

DevOps is the set of practices that emerges when you break down the barriers that traditionally separated developers (“Dev”) from IT operations (“Ops”). When these teams work in isolation, a lack of communication and coordination between them can lead to inefficiencies and delays, because the people who write code are out of sync with the people who deploy and manage it.

A successful DevOps implementation typically relies on integrating various tools or solutions, sometimes referred to as a toolchain or toolset, to eliminate manual steps. This integration helps improve visibility and efficiency, reduce errors, and scale the setup from a small team to the enterprise level for cross-functional collaboration.

DevOps emphasizes agility, meaning the ability to implement changes easily. DevOps teams focus on standardizing development environments and automating delivery processes to improve delivery predictability, efficiency, security, and maintainability. DevOps also encourages empowering teams with the autonomy to build, validate, deliver, and support their own applications.

This chapter discusses the phases of the DevOps cycle along with various solutions available for each phase to adopt DevOps practices with IBM Z. It includes the following topics:

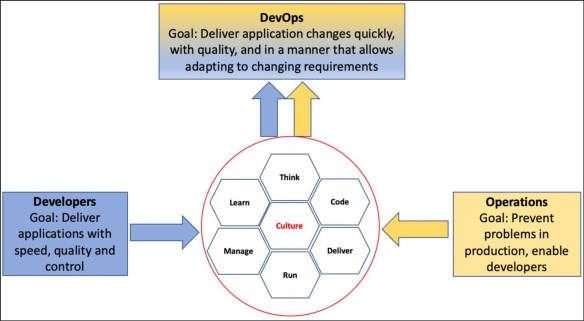

4.1 DevOps culture

A successful DevOps strategy attempts to address the previously mentioned challenges by establishing a culture that incorporates some, or all, of the following key values:

•Collective accountability: Everyone on the team must take responsibility for ensuring that software is delivered on time and meets expectations. Software delivery is everyone’s responsibility in DevOps.

•Transparency: In a DevOps culture, all team members must have constant visibility into what other members of the team are doing.

•Automation: DevOps places a priority on the use of automation to build quality into every step, ensure consistent results, and speed up delivery.

•Shared roles: Because DevOps brings together multiple teams, shared responsibilities might become more common. For example, developers might perform certain operational tasks in their test environments that were previously done by the operations team.

Figure 4-1 shows how developers and operations can come together by using DevOps culture to fulfill business requirements.

Figure 4-1 DevOps culture

4.2 DevOps on IBM Z

The flexibility, resilience, and agility that a cloud platform brings to its hosted applications make it possible to streamline an application delivery pipeline. Environments from development through testing and all the way to production can be provisioned and configured as needed, and when needed. This process minimizes the environment-related bottlenecks in the delivery process and makes for a compelling business case for cloud adoption with DevOps.

When integrating IBM Z into your hybrid cloud, it is imperative that developers and IT operations understand that the same agile processes can also be performed on IBM Z, using the same DevOps tools and with the same DevOps experience as on other platforms. A range of solutions helps integrate systems, giving developers an open and familiar development environment with enterprise-wide, platform-agnostic standardization, which in turn helps them build, test, and deploy code faster.

4.2.1 Continuous delivery and deployment with IBM Z

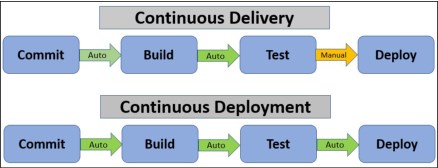

DevOps shifts the way developers deliver code. There are three key techniques in this regard:

•Continuous Integration: Developers frequently deliver their code to an integrated, common repository.

•Continuous Delivery: Code is frequently built and tested, all in an automated fashion, but a manual action is needed to deploy the code.

•Continuous Deployment: Code that passes the automated build and tests is deployed automatically to a live environment.

The goal of these techniques is to enable developers to rapidly deploy changes to an environment while ensuring that the deployed code is rigorously tested so that quality is not compromised. The need to maintain quality at velocity is why test automation is an essential component of the process. The process that begins when developers commit their code changes to a repository and ends with deployment is often represented visually and is called a pipeline.
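As a minimal illustration of the pipeline idea, the following Python sketch models a pipeline as an ordered list of stages that runs from commit to deployment and stops at the first failing stage, so code that fails a test never reaches the deploy step. The stage names and the `run_pipeline` helper are hypothetical; they are not part of Jenkins or any IBM product API.

```python
from typing import Callable, List, Tuple

# A stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and return the names of the stages that completed.

    The pipeline halts at the first failing stage, so later stages
    (for example, deployment) never run on code that failed a test.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            print(f"Stage '{name}' failed; stopping pipeline")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    pipeline: List[Stage] = [
        ("commit", lambda: True),
        ("build", lambda: True),
        ("unit-test", lambda: False),  # simulate a failing test run
        ("deploy", lambda: True),
    ]
    print(run_pipeline(pipeline))  # ['commit', 'build']
```

In a real pipeline, each action would call a build tool or test runner and the stage results would be reported by the automation server; the early exit is the property that keeps quality intact at high delivery speed.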

Figure 4-2 shows the difference between continuous delivery and continuous deployment pipelines.

Figure 4-2 Continuous delivery versus continuous deployment

Large enterprises sometimes use a combination of continuous deployment and continuous delivery. For example, developers might be encouraged to deliver their code at least once a day because a nightly build is automatically performed, followed by automatic functional tests, and finally automatic deployment into a test environment. This process describes a continuous deployment scenario.

However, the pipeline into a production environment might be gated by a manual approval so that portion is continuous delivery. Many benefits can be drawn without the need to enable automatic deployment into production.
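The mixed scenario above can be sketched in a few lines of Python, assuming each environment simply carries a flag that says whether a manual approval gate protects it. The `Environment` class, the `promote` function, and the environment names are hypothetical and for illustration only: environments without a gate receive the build automatically (continuous deployment), and promotion pauses at the first gated environment until someone approves it (continuous delivery).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Environment:
    name: str
    requires_approval: bool  # True means a manual gate (continuous delivery)


def promote(build_id: str, environments: List[Environment],
            approved_by: Optional[str] = None) -> List[str]:
    """Deploy build_id through the environments in order.

    Ungated environments are deployed automatically. Deployment stops at the
    first gated environment when no approver is supplied.
    """
    deployed: List[str] = []
    for env in environments:
        if env.requires_approval and approved_by is None:
            print(f"{build_id}: waiting for manual approval before {env.name}")
            break
        deployed.append(env.name)
    return deployed


if __name__ == "__main__":
    envs = [
        Environment("test", requires_approval=False),
        Environment("production", requires_approval=True),
    ]
    print(promote("nightly-build", envs))                     # ['test']
    print(promote("nightly-build", envs, approved_by="ops"))  # ['test', 'production']
```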

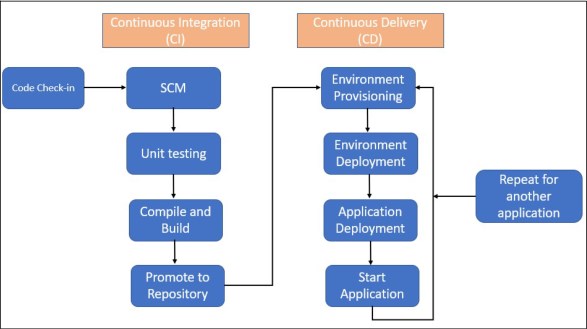

Many tasks are involved in each step of the code delivery process. Figure 4-3 shows the steps that are performed during continuous integration and continuous delivery (CI/CD).

Figure 4-3 CI/CD flow chart

Fortunately, tools are available for each step of this process. We begin our review of these tools with the first step: planning. Although we highlight their capabilities individually, many of these tools are bundled to optimize their benefits.

4.3 Analyze and plan

Before you can safely alter an application, you must understand how the application is composed, so that you know where changes must be made and how those changes affect other components of the application. Because enterprise applications are complex, this process can take time and energy. Fortunately, IBM Application Discovery and Delivery Intelligence (ADDI) makes it much easier to gather these insights.

IBM Application Discovery and Delivery Intelligence

IBM ADDI analyzes applications that are designed for IBM Z to quickly discover interdependencies. It breaks down a complex application into its various pieces and represents the pieces in a way that is approachable for developers. Developers can then easily see where their changes must be made in source code, and the effects those changes will have on other areas of the application. By using this platform, you can identify business logic within the application, and determine the extent of its implementation.

For example, ADDI can break down a complex application that was written in COBOL into its various source code modules and the CICS transactions that start each module. Any data files or database transactions are also shown, along with whether the operations that a module performs on them are reads, writes, updates, and so on. Almost immediately, you can correlate transactions to COBOL code, and COBOL code to reads of VSAM data or updates of databases.
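To make this kind of cross-reference concrete, the following Python sketch builds it by hand from a small, hypothetical inventory (the transaction IDs, program names, and data set names are invented). It is not ADDI's data model or API; it only shows the two questions such an analysis answers: which data a transaction touches, and which transactions are affected by a given operation on a given resource.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical inventory, as an analysis tool might discover it.
# CICS transaction ID -> COBOL programs it starts
TRANSACTIONS: Dict[str, List[str]] = {
    "ACCT": ["ACCTINQ"],
    "PAYM": ["PAYMENT", "ACCTUPD"],
}

# COBOL program -> list of (data resource, operation) pairs
DATA_ACCESS: Dict[str, List[Tuple[str, str]]] = {
    "ACCTINQ": [("ACCOUNTS.VSAM", "read")],
    "PAYMENT": [("PAYMENTS.DB2", "insert")],
    "ACCTUPD": [("ACCOUNTS.VSAM", "update")],
}


def data_used_by(transaction: str) -> Set[Tuple[str, str]]:
    """Return every (resource, operation) pair reachable from a transaction."""
    return {access
            for program in TRANSACTIONS.get(transaction, [])
            for access in DATA_ACCESS.get(program, [])}


def transactions_affecting(resource: str, operation: str) -> List[str]:
    """Return the transactions whose programs perform `operation` on `resource`."""
    return sorted(txn for txn in TRANSACTIONS
                  if (resource, operation) in data_used_by(txn))


if __name__ == "__main__":
    print(sorted(data_used_by("PAYM")))
    # [('ACCOUNTS.VSAM', 'update'), ('PAYMENTS.DB2', 'insert')]
    print(transactions_affecting("ACCOUNTS.VSAM", "update"))  # ['PAYM']
```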

For more information about ADDI, see this web page.

4.4 Source code managers

To fully realize agile development with multiple developers working simultaneously, it is imperative to use a source code manager (SCM). An SCM supports parallel development where each developer can easily maintain their own code streams and merge their code when it is ready to be promoted to the next phase.

SCMs also provide backup and version control of source code, which creates a safety net if something goes wrong. Although some SCMs can work with binary files, most often SCMs are designed to work with text files because source code is stored as text files. We discuss two widely used SCMs next.

Git

The de facto standard for SCMs these days is Git. It is commonly used for cloud-native development and can be used equally well for developing code on IBM Z. Git is an open source distributed version control system with several popular server-side implementations and hosting services, such as GitHub, Bitbucket, and GitLab.

For on-premises configurations, the Git server runs on Linux, which makes it a natural fit for Linux on Z; it can also run on z/OS through z/OS Container Extensions, as described in 3.1.4, “Colocation with IBM Z”. A Git client for z/OS is available from Rocket Software (see footnote 1), which makes it easy to include Git-based CI/CD pipelines on z/OS. The real power lies in the integration of Git clients with nearly every integrated development environment (IDE) you might want to use. For more information about IDEs, see 4.5.2, “Integrated development environments”.

IBM Engineering Workflow Management

IBM Engineering Workflow Management (EWM), formerly known as IBM Rational® Team Concert® (RTC), is a robust software development tool for your teams, regardless of the platform on which their applications run. The SCM server component of EWM can run on z/OS, which might be convenient for z/OS-based applications. The solution also includes the tools that agile development teams need to manage a backlog, track defects, and much more.

For more information about EWM, see this web page.

4.5 Code

Several terms are used to describe software that is written for the cloud, and it is important to understand the differences between them to choose the best offering for your business needs. Table 4-1 lists the different cloud services and strategies. It is followed by a deeper discussion of cloud-native development.

Table 4-1 Cloud services and strategies

| Cloud service/strategy | Definition |
| --- | --- |
| Cloud-native | Applications developed from the outset to operate in the cloud and take advantage of the characteristics of cloud architecture, or applications that were refactored and reconfigured to do so. Developers design cloud-native applications to be scalable, platform agnostic, and composed of microservices. |
| Cloud-ready/Cloud-enabled | An application that works in a cloud environment, or a traditional application that was reconfigured for a cloud environment. |
| Cloud-based | A service or application that is delivered over the internet “in the cloud”. It is a general term that is applied liberally to any number of cloud offerings. |
| Cloud-first | A business strategy in which organizations commit to using cloud resources first when starting new IT services, refreshing existing services, or replacing traditional technology. |

4.5.1 Cloud-native

By using cloud-native applications, you can create an open ecosystem on IBM Z for access and use by administrators, developers, and architects, with no special skills required. With an open and connected environment, developers and administrators can more seamlessly build today’s business applications. These cloud-native applications can integrate with data and are optimally deployed across and managed within the hybrid multi-cloud.

Based on individual needs per workload (resources, time, cost, and so on), you have the choice to develop cloud-native applications in the private and public cloud, or a combination of both. Cloud-native development features the following attributes:

•Architecture: The architecture is microservice-based and the microservices run in dedicated containers.

•Automation: Everything is automated, including CI/CD, APIs, and configuration management.

•DevOps: Applications are driven by DevOps practices. The individuals who build the applications also run them, so there is less “throwing applications over the wall.”

A cloud-native application includes the following foundational elements:

•DevOps

•Continuous Delivery

•Microservices

•Containers

Deploying applications is about empowering developers by supplying them with familiar tools and encouraging them to participate in an enterprise-wide, fully automated, and continuous software delivery pipeline. Across the hybrid cloud ecosystem, IBM Z is designed to provide the flexibility to deploy workloads on and off premises, with the security, availability, and reliability you expect from IBM Z.

4.5.2 Integrated development environments

IDEs are the digital home of your development teams. These suites of integrated tools allow developers to check out source code from an SCM, edit, and even run and debug their code in an intuitive experience. The goal is to enable developers to write code in parallel, receive rapid feedback on their work, deliver updates continuously, and maintain stable deployment environments.

IBM Developer for z/OS

IBM Developer for z/OS (IDz) is the premier IDE for z/OS. In addition to providing COBOL, PL/I, HLASM, Java, and C/C++ support, it includes a fully integrated debugger. IDz also offers a choice of editing styles: a graphical style for new mainframe developers who might prefer it, and a command style for experienced z/OS professionals.

Microsoft Visual Studio Code

Microsoft Visual Studio Code (VS Code) is a popular IDE that is built on open source. It is used for cloud-native development and can be extended to work with z/OS by using the IBM Z Open Editor extension. This extension is available at no cost and can be added to VS Code with just a few clicks.

The extension provides support for IBM Enterprise COBOL, PL/I, HLASM, and JCL, including syntax highlighting, real-time error checking, code completion, and more. The Zowe Explorer extension can also be added to VS Code to enable interaction with z/OS datasets, UNIX files, JES job output, and even MVS commands.

This option is excellent for “hybrid” developers who work across platforms and are familiar with VS Code from their projects on other platforms. VS Code is also widely used in schools, which makes it a great way for early professionals who are new to IBM Z to become productive quickly.

IBM Wazi Code

IBM Wazi for Red Hat CodeReady Workspaces consists of two components: Code and Sandbox. Wazi Code gives developers who are working with z/OS applications a choice of VS Code or Eclipse-based IDEs.

Although you can obtain the Z Open Editor extension for VS Code at no cost, Wazi Code extends those capabilities to include debugging by using the IBM z/OS Debugger. This feature allows developers to perform visual debugging, with variable inspection, breakpoints, and the like, in the same VS Code experience in which they write their code.

This entire experience can be hosted in Red Hat CodeReady Workspaces, which delivers it in the browser rather than as a locally installed application. For more information about the Wazi Sandbox, see 4.7, “Test”.

4.6 Build

A build is a process in which source code is combined with any required libraries, and then is compiled, packaged, and made ready for deployment. This process might sound simple, but large applications can amount to millions of lines of code that might need hours to build.

An automated build is a repeatable build process that can be performed at any time and requires no human intervention. The build utility tools that are described in this section are designed to work with IBM Z.

IBM Dependency Based Build

IBM Dependency Based Build (DBB) provides the capabilities to build z/OS applications by using scripting languages that are commonly found in CI/CD pipelines. DBB APIs are written in Java and can be called by Java applications and Java-based tools, such as Groovy scripts and Maven builds. DBB includes an installation of Apache Groovy that was modified to run on z/OS UNIX System Services so that you can run z/OS, TSO, or ISPF commands as part of your Groovy scripts.

With DBB, engineers can use Groovy scripts that were written for other platforms in the z/OS pipeline. DBB is ideal for compiling and link-editing programs. For this purpose, DBB provides a dependency scanner to analyze the relationship between source files, and a web application to store the dependency information and build reports.
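The core idea of a dependency-based build is to rebuild only what a change affects. The following Python sketch shows that idea under stated assumptions: the dependency data is a hand-written, hypothetical map of programs to the copybooks they include (copybooks can include other copybooks), and any `.cbl` file counts as a compilable program. It is not DBB's API; DBB itself is driven from Groovy or Java and stores its dependency data in its own web application.

```python
from collections import deque
from typing import Dict, Iterable, Set

# Hypothetical dependency data, like a dependency scanner might record:
# each file -> the copybooks (or other members) it includes.
DEPENDENCIES: Dict[str, Set[str]] = {
    "PAYMENT.cbl": {"ACCTREC.cpy", "PAYREC.cpy"},
    "ACCTINQ.cbl": {"ACCTREC.cpy"},
    "REPORT.cbl": {"RPTHDR.cpy"},
    "PAYREC.cpy": {"DATEFMT.cpy"},  # copybooks can include other copybooks
}


def impacted_files(changed: Iterable[str],
                   deps: Dict[str, Set[str]]) -> Set[str]:
    """Return the changed files plus every file that depends on them,
    directly or transitively (a breadth-first walk of the reversed graph)."""
    dependents: Dict[str, Set[str]] = {}
    for source, includes in deps.items():
        for inc in includes:
            dependents.setdefault(inc, set()).add(source)

    impacted: Set[str] = set(changed)
    queue = deque(impacted)
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, set()):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    return impacted


def programs_to_build(changed: Iterable[str],
                      deps: Dict[str, Set[str]]) -> Set[str]:
    """Only compilable programs (.cbl here) are rebuilt; copybooks are not."""
    return {f for f in impacted_files(changed, deps) if f.endswith(".cbl")}


if __name__ == "__main__":
    print(sorted(programs_to_build(["DATEFMT.cpy"], DEPENDENCIES)))
    # ['PAYMENT.cbl']
    print(sorted(programs_to_build(["ACCTREC.cpy"], DEPENDENCIES)))
    # ['ACCTINQ.cbl', 'PAYMENT.cbl']
```

Walking the reversed graph is what lets a change to a deeply nested copybook trigger a rebuild of only the programs that actually include it, instead of a full application build.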

DBB can also be used to add automated testing to the pipeline, or to perform any other system administration task that you might otherwise write JCL for. DBB also works well with Git and Jenkins, which are examples of an SCM and a pipeline automation tool, respectively.

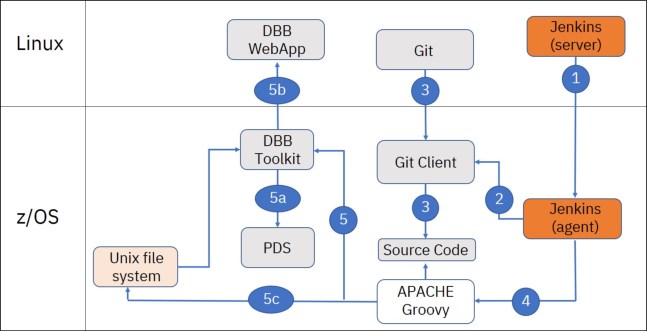

Figure 4-4 shows an example toolchain that Company A (from our example) is considering to implement DevOps principles on z/OS.

Figure 4-4 Git-based IBM Z open development open DevOps toolchain

Here, the application source code is stored in Git. DBB extracts the code from Git before it handles the compilation and generation of deployable artifacts, while Jenkins handles the pipeline and controls when DBB is run.

Figure 4-4 shows the following pathway:

1. The Jenkins server sends build commands to a remote agent.

2. The Jenkins agent issues the Git pull command to update the Git repository on z/OS.

3. The Git client automatically converts the source from UTF-8 to EBCDIC during the pull.

4. The Jenkins agent starts build scripts that contain DBB APIs to build code from the Git repository on z/OS.

5. The DBB Toolkit provides Java APIs to perform the following tasks:

– Create datasets, copy source from z/OS file system (zFS) to Partitioned Dataset (PDS), start z/OS programs, and issue ISPF and TSO commands.

– Scan and store dependency data from source files, perform dependency and impact analysis, and store build results.

– Copy logs from PDS to zFS and generate build reports, which can be saved in the build result.
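The character conversion in step 3 matters because z/OS tools expect EBCDIC text, in which characters have different byte values than in ASCII-compatible encodings such as UTF-8. As an illustration only, the following Python sketch performs that kind of conversion by using Python's built-in `cp037` codec (IBM EBCDIC US/Canada). This codec choice is an assumption: z/OS UNIX System Services commonly uses code page IBM-1047, which the Python standard library does not include, and the code page that your Git client actually uses depends on its configuration.

```python
# cp037 is used purely for illustration; it ships with Python's standard
# library, whereas IBM-1047 (common on z/OS UNIX) does not.
EBCDIC_CODEC = "cp037"


def utf8_to_ebcdic(data: bytes) -> bytes:
    """Convert UTF-8 encoded source text to EBCDIC bytes."""
    return data.decode("utf-8").encode(EBCDIC_CODEC)


def ebcdic_to_utf8(data: bytes) -> bytes:
    """Convert EBCDIC bytes back to UTF-8 (the reverse direction)."""
    return data.decode(EBCDIC_CODEC).encode("utf-8")


if __name__ == "__main__":
    print(utf8_to_ebcdic(b"HELLO").hex())  # c8c5d3d3d6 (ASCII would be 48454c4c4f)
    line = b"       MOVE 0 TO WS-COUNT."
    assert ebcdic_to_utf8(utf8_to_ebcdic(line)) == line  # lossless round trip
```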

4.7 Test

Users who are new to DevOps sometimes think DevOps is about doing less testing because code moves more quickly through the development phases and into production. The reality is that DevOps might mean conducting more testing because every code change is tested.

The key is that this testing is automated. Performing every test manually on every code change is impossible; therefore, we rely on test automation to build quality into code delivery.

In an agile environment, a significant need exists to move testing earlier in the development lifecycle, which often is referred to as a “shift left.” This shift drives a need for isolated test environments, which might conflict with the availability of development and test systems.

Traditionally, these “dev/test” environments run alongside production systems on IBM Z hardware and they are shared by teams of people. Having a limited number of environments, especially if tests for one function cannot be performed when another function is installed, can pose a challenge to software delivery. A few solutions are available to address this challenge, including the following examples that are described next:

•IBM Z Development and Test Environment

•IBM Wazi Sandbox

•IBM Wazi Virtual Test Platform

IBM Z Development and Test Environment

IBM Z Development and Test Environment (ZD&T) allows any z/OS software to run on an x86-compatible on-premises system or cloud instance. This capability is provided by an emulation layer that translates the IBM Z instruction sets and devices.

Moving dev/test to x86 frees up IBM Z capacity for production workloads while allowing greater flexibility and availability for dev/test. ZD&T provides a dev/test platform for IBM z/OS middleware, such as CICS, IMS, Db2, and other z/OS software, to run on Intel-compatible platforms without the need for IBM Z hardware. Because the environment that ZD&T provides is emulated, its performance is suitable for test workloads but not for production workloads.

IBM Wazi Sandbox

The other component of IBM Wazi for Red Hat CodeReady Workspaces, Wazi Sandbox, provides a containerized dev/test environment that is optimized to run on Red Hat OpenShift. This OpenShift support means you can run emulated z/OS for test purposes on your public cloud of choice.

As you might expect from a cloud-based solution, a dashboard provides your developers the ability to provision and deprovision their individual sandboxes as needed. Similar to ZD&T, performance for Wazi Sandbox is acceptable only for test and not production workloads.

IBM Wazi Virtual Test Platform

IBM Wazi Virtual Test Platform (VTP) provides the ability to conduct a full transaction-level test without needing middleware. Therefore, you can perform integration testing during the build process, which is a significant shift left in development. It provides full stubbing capability for the middleware, starting with CICS programs with calls to Db2, DL/I, and IBM MQSeries®, and batch programs (including calls to Db2 and DL/I).
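The principle behind middleware stubbing can be shown with Python's standard `unittest.mock` module. In this sketch, the `AccountStore` interface and the `approve_withdrawal` function are hypothetical stand-ins for a program that would normally issue a database call; the stub returns canned data, so the business logic can be tested without a running database and the calls the program made can still be checked. This is not how VTP is invoked; it only illustrates the idea of replacing a middleware dependency with a stub.

```python
from typing import Optional, Protocol
from unittest import mock


class AccountStore(Protocol):
    """Hypothetical interface standing in for a middleware call (for example, a Db2 query)."""

    def get_balance(self, account_id: str) -> Optional[int]: ...


def approve_withdrawal(store: AccountStore, account_id: str, amount: int) -> bool:
    """Business logic under test: approve only if the account exists and has funds."""
    balance = store.get_balance(account_id)
    return balance is not None and balance >= amount


if __name__ == "__main__":
    # Stub the middleware: no database is needed to exercise the logic.
    stub = mock.Mock()
    stub.get_balance.return_value = 500
    print(approve_withdrawal(stub, "A-100", 200))  # True
    print(approve_withdrawal(stub, "A-100", 900))  # False
    stub.get_balance.assert_called_with("A-100")   # verify the recorded call

    stub.get_balance.return_value = None           # simulate "row not found"
    print(approve_withdrawal(stub, "A-999", 1))    # False
```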

4.8 Provision, deploy, and release

Deploy is where tested code is moved into execution. The full power of DevOps comes together in this phase in the form of an automated pipeline. This pipeline facilitates the steady movement of code changes from source code repository to a test environment, where automated tests are routinely performed to validate the changes.

Deployment tools do more than perform orchestrated deployments; they also track which version is deployed at each stage of the build and delivery pipeline. They can also manage the configuration of every environment in each stage to which the application components must be deployed.
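The tracking role described above can be pictured as a simple inventory. The following Python sketch is a hypothetical model, not the data model of any specific deployment product: it keeps per-environment configuration (the CICS region names are invented), records which version of each component is currently deployed in each environment, and keeps an append-only history that can serve as an audit trail.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class DeploymentInventory:
    """Hypothetical record of what is deployed where, plus per-environment config."""
    config: Dict[str, Dict[str, str]] = field(default_factory=dict)
    deployed: Dict[Tuple[str, str], str] = field(default_factory=dict)
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def record(self, environment: str, component: str, version: str) -> None:
        """Record a deployment and append it to the audit history."""
        self.deployed[(environment, component)] = version
        self.history.append((environment, component, version))

    def version_in(self, environment: str, component: str) -> str:
        """Return the currently deployed version, if any."""
        return self.deployed.get((environment, component), "not deployed")


if __name__ == "__main__":
    inv = DeploymentInventory(config={
        "test": {"cics_region": "CICSTST1"},
        "prod": {"cics_region": "CICSPRD1"},
    })
    inv.record("test", "payment-app", "1.4.0")
    inv.record("test", "payment-app", "1.4.1")
    inv.record("prod", "payment-app", "1.4.0")
    print(inv.version_in("test", "payment-app"))  # 1.4.1
    print(inv.version_in("prod", "payment-app"))  # 1.4.0
    print(inv.config["prod"]["cics_region"])      # CICSPRD1
```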

IBM z/OS Cloud Broker

z/OS Cloud Broker provides access to z/OS services within private cloud platforms, where the development community can use them. It promotes a modern cloud-native experience by combining z/OS-based services and resources with your hybrid cloud environments. Developers can quickly create, modernize, deploy, and manage applications themselves, within the security of their firewall, without the need for intervention from system administrators.

z/OS Cloud Broker includes the following key features:

•Integrates z/OS resources with the Red Hat OpenShift platform while maintaining control over how these resources are used.

•Builds on the experience and trust in existing IBM Z investments.

IBM Cloud Provisioning and Management for z/OS

The IBM Cloud Provisioning and Management (CP&M) tool is used to provision z/OS software subsystems rapidly, which increases the agility of the DevOps team. It helps transform IT infrastructure by integrating z/OS into your hybrid cloud.

Software service templates that provision IBM middleware, such as CICS, Db2, IMS, IBM MQ, and WebSphere® Application Server can be created and tracked by using CP&M. It simplifies the provisioning and de-provisioning of an environment, as needed, through a self-service marketplace.

CP&M is available through IBM z/OS Management Facility (z/OSMF), which exposes tasks in the cloud provisioning category, such as resource management and software services, through Representational State Transfer (REST) APIs. Applications can use these public APIs to work with system resources and extract data.

Figure 4-5 shows an overview of CP&M for z/OS as a solution.

Figure 4-5 Cloud Provisioning and Management for z/OS workflow

For more information about CP&M, see this web page.

IBM UrbanCode Deploy

IBM UrbanCode® Deploy is a leading cross-platform deployment automation tool that is characterized as an application release automation solution with visibility, traceability, and auditing capabilities.

One of the many benefits of UrbanCode Deploy is its ability to help eliminate manual processes that are subject to human error, which, in turn, enables continuous delivery for any combination of on-premises, cloud, and mainframe applications. UrbanCode Deploy removes the need for custom scripting by providing tested integrations with many tools and technologies, such as Jenkins (which we discuss in the next section), Jira, Kubernetes, Microsoft, ServiceNow, and IBM WebSphere.

Quality checks are performed against every application before deployment. These checks are referred to as deployment approval gates. This process provides greater visibility and transparency into deployments for audit trails.

With UrbanCode® Deploy, you can establish gates that set specific conditions that must be met before an application is promoted to an environment. Gates are defined at the environment level, and each environment can have a single gate or conditional gates.

UrbanCode Deploy also aids in version control, which makes it easy to release only tested versions. It does so by providing application models and snapshots. A snapshot is a manifest of versioned components and configuration that can be promoted as a single item rather than as multiple components.
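Snapshots and gates fit together naturally: a snapshot fixes exactly which component versions move together, and a gate decides whether that snapshot may enter an environment. The following Python sketch models both loosely on the description above; the `Snapshot` class, the `GATES` table, the status names, and `promote_snapshot` are hypothetical and do not reflect UrbanCode Deploy's actual data model or API.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Snapshot:
    """Immutable manifest: the exact component versions promoted together."""
    name: str
    components: Tuple[Tuple[str, str], ...]  # (component, version) pairs


# Hypothetical gate definitions: the statuses a snapshot needs before it can
# enter each environment. An empty set means the environment has no gate.
GATES: Dict[str, FrozenSet[str]] = {
    "test": frozenset(),
    "prod": frozenset({"tests-passed", "change-approved"}),
}


def promote_snapshot(snapshot: Snapshot, statuses: Set[str],
                     environment: str) -> List[str]:
    """Return the component versions to deploy, or raise if the gate is not met.

    An unknown environment raises KeyError rather than silently passing.
    """
    missing = GATES[environment] - statuses
    if missing:
        raise PermissionError(
            f"{snapshot.name} blocked from {environment}: missing {sorted(missing)}")
    return [f"{component}:{version}" for component, version in snapshot.components]


if __name__ == "__main__":
    snap = Snapshot("release-12", (("cobol-batch", "3.2"), ("cics-ui", "1.7")))
    statuses = {"tests-passed"}
    print(promote_snapshot(snap, statuses, "test"))  # ['cobol-batch:3.2', 'cics-ui:1.7']
    try:
        promote_snapshot(snap, statuses, "prod")
    except PermissionError as err:
        print(err)  # release-12 blocked from prod: missing ['change-approved']
    statuses.add("change-approved")
    print(promote_snapshot(snap, statuses, "prod"))  # ['cobol-batch:3.2', 'cics-ui:1.7']
```

Because the snapshot is immutable, the versions that reach production are exactly the versions that passed the earlier environments; only the statuses attached to it change as it moves through the pipeline.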

If you decide to use the IBM UrbanCode Deploy product suite, you receive access to Blueprint Designer. This component provides services such as cloud orchestration, a graphical editor, infrastructure as code (IaC), and Cloud Automation Manager. Together, these Blueprint Designer services help establish a CI/CD pipeline that creates and destroys short-lived test environments so that application changes can be tested quickly.

Among the most important benefits of UrbanCode Deploy is its ability to automate software deployment through different environments and increase its velocity. It is designed to support the DevOps approach (a critical component of modernization), which enables incremental changes to be released rapidly, reliably, and repeatedly.

You can use UrbanCode Deploy as a container and it is certified to work with Red Hat OpenShift and IBM Cloud Pak® for Applications. Support for the z/OS platform is available in the form of deploying it directly with the z/OS agent or through integration with existing z/OS deployment processes, such as IBM Rational® Team Concert® Enterprise Extensions.

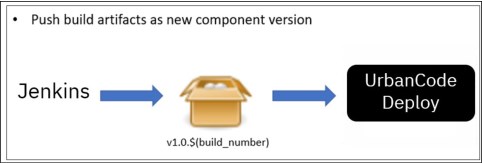

It also includes build and test tools that are used to automate application deployment to mainframe production environments. Figure 4-6 shows the IBM UrbanCode for deployment automation flow.

Figure 4-6 IBM UrbanCode Deploy

4.8.1 Continuous delivery with Jenkins and UrbanCode Deploy

UrbanCode also provides plug-ins for the Jenkins continuous integration (CI) server. Jenkins supports interactions with other technologies through a plug-in model. Installed on a Jenkins server, the Jenkins Pipeline plug-in orchestrates UrbanCode Deploy deployments as part of a CI/CD pipeline in Jenkins (see Figure 4-7).

Figure 4-7 Jenkins plug-in for IBM UrbanCode Deploy

After running a build in your existing Jenkins CI infrastructure, the UrbanCode Deploy/Jenkins plug-in enables you to publish the build result to UrbanCode Deploy and trigger an application process for deployment.

Another aspect of deployment that is covered here is the deployment of IT infrastructure services. Red Hat Ansible Engine is the component of Ansible Automation Platform that uses hundreds of modules to automate all aspects of IT environments and processes. It helps developers and IT operations teams quickly deploy IT services, applications, and environments, and automate routine activities.

Red Hat Ansible

Ansible is an automation platform that uses YAML, a simple, human-readable, and widely used open data language, to write playbooks that automate applications and IT infrastructure.

Ansible unites workflow orchestration with configuration management, provisioning, and application deployment in one easy-to-use platform. Its building blocks are called playbooks: YAML files that contain a group of tasks or instructions to accomplish a goal.

As a significant step toward enabling z/OS to participate in an Ansible-based enterprise automation strategy in the same way that the rest of your environments do, Red Hat Ansible Certified Content for IBM Z was made available for using Ansible on IBM Z. Using Ansible to automate z/OS improves consistency across hybrid multi-cloud environments and allows z/OS to participate transparently in your infrastructure.

The initial collection is designed to handle many tasks, such as working with datasets, retrieving job output, and submitting jobs on the system. More collections for various use cases are being added for z/OS and by the broader IBM Z community.

Implementing Ansible-based provisioning on z/OS can bring added value to your organization, especially when you also use Red Hat OpenShift, which was recently announced for Linux on IBM Z. With Ansible on IBM Z, you can seamlessly integrate workflow orchestration into a DevOps CI/CD pipeline, together with configuration management, provisioning, and application deployment, in a single user-friendly platform on any operating system.

Figure 4-8 shows a basic Ansible architecture.

Figure 4-8 Ansible architecture

Red Hat Ansible Certified Content for IBM Z helps you connect IBM Z to your wider enterprise automation strategy through the Ansible Automation Platform ecosystem. The IBM z/OS core collection is part of this certified content that focuses on z/OS infrastructure deployment and management. This automation content enables you to start using Ansible Automation Platform with z/OS to unite workflow orchestration in one easy-to-use platform with configuration management, provisioning, and application deployment.

IBM z/OS core collection provides sample playbooks, modules, and plug-ins in the form of Ansible Content Collections that can help accelerate your use of Ansible against z/OS inventories.

The following z/OS core modules are included in the IBM z/OS core collection:

•zos_job_submit

•zos_job_query

•zos_job_output

•zos_data_set
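Ansible modules are generally designed to be idempotent: a task describes a desired state, and running the same playbook again makes no further changes and reports nothing as changed. The following Python sketch simulates that behavior for a data set task, with an in-memory set standing in for the z/OS catalog. The `ensure_data_set` and `run_playbook` helpers and the data set names are hypothetical; they are not the collection's API. A real playbook would be written in YAML and would call a module such as zos_data_set.

```python
from typing import Dict, List, Set


def ensure_data_set(catalog: Set[str], name: str, state: str) -> Dict[str, object]:
    """Bring one data set to the desired state and report whether anything changed
    (a hypothetical simulation, not the real module)."""
    if state not in ("present", "absent"):
        raise ValueError(f"unsupported state: {state}")
    exists = name in catalog
    if state == "present" and not exists:
        catalog.add(name)
        return {"name": name, "changed": True}
    if state == "absent" and exists:
        catalog.discard(name)
        return {"name": name, "changed": True}
    return {"name": name, "changed": False}  # already in the desired state


def run_playbook(catalog: Set[str], tasks: List[Dict[str, str]]) -> List[bool]:
    """Run tasks in order and return each task's 'changed' flag."""
    return [bool(ensure_data_set(catalog, t["name"], t["state"])["changed"])
            for t in tasks]


if __name__ == "__main__":
    system = {"USER.OLD.LOADLIB"}
    tasks = [
        {"name": "USER.APP.COBOL", "state": "present"},
        {"name": "USER.OLD.LOADLIB", "state": "absent"},
    ]
    print(run_playbook(system, tasks))  # [True, True]   first run changes the system
    print(run_playbook(system, tasks))  # [False, False] second run: nothing to do
```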

For more information about how to use the playbook, see this web page.

1 https://www.rocketsoftware.com/product-categories/mainframe/git-for-zos
