Introduction

z/OS Container Extensions is an exciting new capability that is delivered as part of IBM z/OS V2R4. It is designed to run almost any Docker container that can run on Linux on IBM Z in a z/OS environment, alongside existing z/OS applications and data, without a separately provisioned Linux server.

This capability extends the strategic software stack on z/OS: developers can build new containerized applications by using Docker and Linux skills and patterns, and deploy them on z/OS without requiring any z/OS skills. In turn, data centers can operate popular open source packages, Linux applications, IBM software, and third-party software together with z/OS applications and data, leveraging industry-standard skills.

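As a new user of z/OS Container Extensions (zCX), it is important that you understand the underlying constructs that make up zCX. This chapter introduces you to those concepts and includes the following topics:

•1.1, “z/OS Container Extensions overview”

•1.2, “Container concepts”

•1.3, “zCX architecture”

•1.4, “Why use z/OS Container Extensions”

•1.5, “Additional considerations”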

1.1 z/OS Container Extensions overview

Enterprises increasingly embrace the use of hybrid workloads. Accordingly, the z/OS software ecosystem must expand and enable cloud-native workloads on z/OS. IBM z/OS Container Extensions (zCX) is a new feature of z/OS V2R4 that helps to facilitate this expansion.

zCX enables clients to deploy Linux on Z applications as Docker containers in a z/OS system to directly support workloads that have an affinity to z/OS. This is done without the need to provision a separate Linux server. At the same time, operational control is maintained within z/OS and benefits of z/OS Qualities of Service (QoS) are retained.

Linux on Z applications can run on z/OS, so you are able to use existing z/OS operations staff and reuse the existing z/OS environment.

zCX expands and modernizes the software ecosystem for z/OS by including Linux on Z applications, many of which were previously available only on a separately provisioned Linux server. Most applications (including Systems Management components and development utilities/tools) that currently run on Linux will be able to run on z/OS, but only as Docker containers.

Any image in Docker Hub that is built for the s390x architecture (the IBM Z opcode set) can be run in z/OS Container Extensions. The code is binary-compatible between Linux on Z and z/OS Container Extensions. Also, multi-platform Docker images that include an s390x variant can run within z/OS Container Extensions.
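To make the multi-platform point concrete, the following Python sketch shows roughly how a client picks the s390x variant out of a multi-platform image. It assumes the Docker manifest list format (a JSON document whose `manifests` array holds one entry per platform) or the equivalent OCI image index; for simplicity it requires an explicit `mediaType` field, and the sample digests used to exercise it are made up. The function name `select_platform` is hypothetical; real clients such as Docker Engine perform this selection for you.

```python
import json

# Media types for multi-platform images: Docker "manifest list" and OCI "image index".
MANIFEST_LIST = "application/vnd.docker.distribution.manifest.list.v2+json"
OCI_INDEX = "application/vnd.oci.image.index.v1+json"


def select_platform(index_json: str, arch: str = "s390x", os_name: str = "linux") -> str:
    """Return the digest of the per-platform manifest that matches os_name/arch."""
    index = json.loads(index_json)
    # Simplification: only accept documents that declare one of the two index types.
    if index.get("mediaType") not in (MANIFEST_LIST, OCI_INDEX):
        raise ValueError("not a multi-platform image index")
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("architecture") == arch and platform.get("os") == os_name:
            return entry["digest"]
    raise LookupError(f"no {os_name}/{arch} variant in this image")
```

When you run `docker pull` inside a zCX instance, the Docker Engine there makes this choice itself, so an image that publishes a linux/s390x entry pulls without extra options; an image with no such entry cannot run in zCX.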

In addition to open source packages, IBM plans to have IBM and third-party software available for the GA of z/OS 2.4. It is intended that clients will be able to participate with their own Linux applications, which can easily be packaged in Docker format and deployed in the same way as open source, IBM, and vendor packages.

1.2 Container concepts

As a new Docker user, you must develop an understanding of the software and hardware platform that Docker is running on. Containers are designed to make better use of hardware and the host operating system.

This section introduces the concepts behind Docker containers on IBM Z systems. This technology is transforming the application world and bringing a new vision to improve a developer’s workflow and speed up application deployment.

The use of containers quickly became widespread in early-adopter organizations around the world, and it now receives attention from large organizations. System administrators and development teams are always looking for alternatives that can provide an agile development environment, speed to build, portability, and consistency. These qualities make it possible to deploy applications quickly, even as the demand for complex applications increases.

Docker is a lightweight technology that runs multiple containers (groups of processes that run in isolation) simultaneously on a single host. As a result, the developer’s workflow improves and application deployment happens more quickly.

Docker takes virtualization a step further by virtualizing at the operating system level rather than at the hardware level. The major advantage of this technology is the ability to encapsulate an application in a container with its own operating environment along with its dependencies (code, run time, system tools, system libraries, and settings). So, you can deploy new applications without installing an operating system in a virtual machine or installing software and other dependencies. Everything that your application needs is delivered as one complete package in the form of a container.

Like a hypervisor, Docker allows resources such as network, CPU, and disk to be shared among multiple encapsulated workloads. It also provides ways to control how many resources a container can use. That way, you ensure that an application runs with all necessary resources, yet does not exhaust all resources on the host.

Figure 1-1 illustrates the difference between virtual machine and container architectures.

Figure 1-1 Virtual Machine versus Docker

VMs first gained popularity because they enabled higher server utilization and better use of hardware. However, this technology is not ideal for every use case. If you must run the same applications in different types of virtual environments, containerization is the better choice, and Docker provides this capability. The cost of making the wrong choice is significant and can delay critical deployments.

The difference is the layer at which abstraction takes place:

•VMs provide abstraction from the underlying hardware.

•Containers provide abstraction at the application layer (or operating system layer) that packages code and dependencies together.

It is common to deploy each application on its own server (or virtual server), but this approach can result in an inefficient system without financial benefits. The operational costs and the effort that is required to control such a large environment tend to exceed those of a container infrastructure.

With container technology, organizations can reduce costs, increase efficiency, and increase profits because applications can be deployed quickly to improve service.

It is easy to spin up a virtual machine to test a new application release or upgrade, or to deploy a new operating system. However, this ease can lead to virtual machine sprawl. System administrators and developers are therefore adopting container technology to combat this problem by making better use of server resources. Containers also help organizations meet growing business needs without increasing the number of servers or virtual servers, which reduces the effort that is needed to maintain the environment.

1.2.1 Why do we have containers

This section describes some reasons that application developers and system administrators are adopting container technology to deploy their applications.

No need to run a full copy of an operating system

Unlike VMs, containers run directly on the kernel of the host OS, which makes them lightweight and fast. Each container is a regular process that runs on the host Linux kernel, with its own container configuration and isolation. This design is more efficient than VMs in terms of system resources, and it creates an excellent infrastructure that maintains all configurations and application dependencies internally.

Versioning of images

Docker containers provide an efficient mechanism to manage images. With the necessary controls in place, you can easily roll back to a previous version of your Docker image. As a result, developers can efficiently analyze and solve problems within the application. This provides the flexibility that development teams need to quickly and easily test different scenarios. Chapter 6, “Private registry implementation” on page 107 provides information about how the related concept of tagging works.
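Tags are what make rollback practical: each version of an image is pushed under its own tag, and rolling back means running the previous tag again. The following Python sketch shows how an image reference of the form `registry/repository:tag` breaks down, including the defaults that Docker applies when parts are omitted (the `docker.io` registry, the `latest` tag, and the `library/` prefix for official images). It is a simplified approximation of Docker's reference rules, not its actual parser, and it ignores digest references (`name@sha256:...`); the `ImageRef` type and the registry host and image names used in the examples are hypothetical.

```python
from typing import NamedTuple

DEFAULT_REGISTRY = "docker.io"
DEFAULT_TAG = "latest"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry/repository:tag' into parts, applying Docker-style defaults.

    Simplified sketch: digest references (repo@sha256:...) are not handled.
    """
    first, sep, rest = ref.partition("/")
    # A first component containing '.' or ':' (or equal to 'localhost') names a registry host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, ref
    # The tag is whatever follows the last ':' that comes after the last '/'.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, DEFAULT_TAG
    # Single-component names on Docker Hub are official images under 'library/'.
    if registry == DEFAULT_REGISTRY and "/" not in name:
        name = "library/" + name
    return ImageRef(registry, name, tag)
```

For example, if `myreg.example.com:5000/app:1.2` misbehaves, redeploying `myreg.example.com:5000/app:1.1` rolls the application back; both references resolve to the same registry and repository and differ only in the tag.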

Agility to deploy new applications

Today, developers face challenges when they must deploy new functions into complex applications. These environments are more critical to operations, bigger (with several components), and have many online users who are connected to the system.

For these reasons, developers are transitioning from monolithic applications to distributed microservices. As a result, organizations move more quickly, meet unexpected surges in mobile and social requests, and scale their business consistently.

The rapidly changing business and technology landscape of today illustrates the necessity to migrate these types of applications from the older style of monolithic single applications to a new, microservices-based model.

Docker is gaining popularity for managing new architectures that are based on microservices. It helps to manage and deploy these architectures, and it quickly replicates components and achieves redundancy where the infrastructure requires it. Thanks to Docker, starting a containerized application can be as fast as starting a process.

Isolation

Docker containers use two Linux kernel features (cgroups and Linux namespaces) to enforce and achieve the required isolation and segregation. You can have various containers for separate applications that run different services such that one container does not affect another container, despite the fact that they run in the same Docker host. In our case, that “same Docker host” is the zCX instance.

Linux namespaces allow containers to create their own isolated environments, each with its own process namespace, user namespace, and network namespace. Therefore, each Docker container has its own workspace, much as a VM does.

With cgroups, you can manage system resources such as CPU time, system memory, and network bandwidth to prevent a container from using all resources. Beyond this feature, Docker lets you control and monitor the system and each container separately.

Figure 1-2 Linux namespaces and cgroups
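To make the cgroup limits more concrete, the following Python sketch shows the arithmetic behind two common `docker run` options: `--cpus`, which Docker translates into a CFS quota measured against a 100,000 µs scheduling period, and `--memory`, which accepts a number with a `b`, `k`, `m`, or `g` suffix (binary multiples). It illustrates the mapping only; it is not Docker's own code, and the function names are hypothetical. Inside a zCX instance, such limits would apply within the virtual CPUs and memory that are assigned to that instance.

```python
CFS_PERIOD_US = 100_000  # CFS scheduling period (microseconds) used with docker run --cpus

# Suffixes accepted by --memory, as binary multiples.
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cfs_quota(cpus: float) -> int:
    """CPU time (microseconds) a container may consume per CFS period for a --cpus value."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return round(cpus * CFS_PERIOD_US)


def memory_limit_bytes(value: str) -> int:
    """Convert a --memory style string such as '512m' or '2g' to a byte count."""
    value = value.strip().lower()
    if value and value[-1] in _UNITS:
        number, unit = value[:-1], value[-1]
    else:
        number, unit = value, "b"
    return int(float(number) * _UNITS[unit])
```

So `docker run --cpus=1.5 --memory=512m ...` would let the container use 150,000 µs of CPU time in every 100,000 µs period (the equivalent of 1.5 CPUs) and cap its memory at 512 MiB.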

Better resource utilization

Virtualization technology provides the ability for a computer system to share resources so that one physical server can act as many virtual servers. It allows you to make better use of hardware. In the same way, Docker containers can share resources and use fewer resources than a VM because they do not need to load a full copy of an operating system.

Application portability

Combining the application and all associated dependencies allows applications to be developed and compiled to run on multiple architectures. The choice of platform on which to deploy an application can now be based on factors that are inherent to the platform (and, by extension, the value that a particular platform provides to an organization) rather than on which platform a developer prefers.

Application portability provides a great deal of flexibility in deploying applications into production and in choosing which platform to use for application development.

Containers make it easier to move the contained application across systems and run it without compatibility issues.

Colocation of data

For clients that run Linux applications on x86 platforms, it might be necessary to access data that resides on traditional mainframe systems. Colocating those applications with the data can provide several advantages. Containerizing those applications and running them under zCX allows them to leverage the rich qualities of service that IBM Z and z/OS provide.

1.2.2 Docker overview

In this section, we introduce several basic Docker components, many of which we discuss later in this publication. If you are going to manage Docker in your organization, you must understand these components. A graphical overview of the components is shown in Figure 1-3.

Figure 1-3 Overview of Docker components

Docker image

A Docker image is a read-only snapshot of a container that is stored in Docker Hub or any other repository. Like a VM golden image, an image is used as a template for creating containers. An image consists of a stack of layers, each of which is stored as a tarball. Intermediate layers can be cached and shared between images, which reduces disk space.
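The following Python sketch illustrates why layering saves space. It models each layer as an uncompressed tarball that is identified by the SHA-256 digest of its bytes (the content-addressing idea that Docker uses for layers), plus a hypothetical `LayerStore` class that keeps only one copy of each digest. The helper names are hypothetical, and real image builds also involve compression, manifests, and build-cache keys that the sketch omits.

```python
import hashlib
import io
import tarfile


def make_layer(files: dict[str, bytes]) -> bytes:
    """Pack files into a deterministic, uncompressed tar archive (one layer)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for path in sorted(files):
            data = files[path]
            info = tarfile.TarInfo(name=path)  # default mtime/uid/gid are 0, so output is stable
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def layer_digest(layer: bytes) -> str:
    """Content address of a layer: identical bytes always give the same digest."""
    return "sha256:" + hashlib.sha256(layer).hexdigest()


class LayerStore:
    """Keeps each distinct layer once, keyed by its digest."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def add(self, layer: bytes) -> tuple[str, bool]:
        """Store a layer; return its digest and whether it was already cached."""
        digest = layer_digest(layer)
        cached = digest in self.blobs
        if not cached:
            self.blobs[digest] = layer
        return digest, cached
```

Two images that are built on the same base layer store that layer only once: the second `add` of an identical base layer reports it as already cached, and only the layers that differ take up new space.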

See the following resources for Docker images:

Dockerfile

A Dockerfile is a recipe file that contains instructions (or commands) and arguments that are used to define Docker images and containers. In essence, it is a file with a list of commands that run in sequence to build customized images.

Consider the following points regarding a Dockerfile:

•Includes instructions to build a container image

•Used to build an image that can be instantiated as a container or transferred to other systems

Figure 1-4 on page 7 and Figure 1-5 on page 7 show more information about a Dockerfile.

Figure 1-4 Dockerfile Architecture

```dockerfile
# Dockerfile to build a container with IBM Installation Manager (IM)
FROM sles12.1:wlp
LABEL maintainer="Wilhelm Mild <[email protected]>"

# Set the install repositories (replace the defaults)
RUN rm /etc/zypp/repos.d/*
COPY server.repo /etc/zypp/repos.d/

# Install the unzip package
RUN zypper --non-interactive ref && zypper --non-interactive install unzip

# Copy the IM package from the build context into the image
COPY IM.installer.linux.s390x_1.8.zip /tmp/IM.zip

# Expose ports for Liberty and Db2
EXPOSE 9080
EXPOSE 50000

# Unzip IM, install it, and delete all temporary files
RUN cd /tmp && unzip IM.zip && ./installc -log /opt/IM/im_install.log -acceptLicense && rm -rf /tmp/*
```

Figure 1-5 Sample Dockerfile

Docker container

A Docker container is a running instance of a Docker image and a standard unit in which the application and its dependencies reside. The image that a container runs is built from a Dockerfile, which contains instructions to include the necessary components in the image. The image is loaded and a new container is started when you run the docker run command. Two Docker containers that are running on a Docker host are shown in Figure 1-6.

Figure 1-6 Docker containers

Docker registry

A Docker registry is a repository that stores, distributes, and shares Docker images. Users store and retrieve images through the registry API by using the docker push and docker pull commands, respectively. An example of a Docker registry (Docker Hub) is shown in Figure 1-7.

Figure 1-7 Docker registry
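Behind `docker pull`, the client talks to the registry over HTTP. The following Python sketch builds the two kinds of requests that a pull mainly relies on in the Docker Registry HTTP API V2: fetching the image manifest by tag or digest, then fetching each layer (blob) by its digest. It only constructs URLs and headers; it performs no network calls and no authentication, which registries such as Docker Hub usually require. The function names, the host `registry.example.com:5000`, and the repository `zcx/demo` are hypothetical.

```python
# Media type requested for a single-platform Docker image manifest (schema 2).
MANIFEST_V2 = "application/vnd.docker.distribution.manifest.v2+json"


def manifest_request(registry: str, repository: str, reference: str) -> tuple[str, dict[str, str]]:
    """URL and headers for fetching a manifest by tag or digest from a v2 registry."""
    url = f"https://{registry}/v2/{repository}/manifests/{reference}"
    return url, {"Accept": MANIFEST_V2}


def blob_url(registry: str, repository: str, digest: str) -> str:
    """URL for downloading one layer (blob) identified by its content digest."""
    return f"https://{registry}/v2/{repository}/blobs/{digest}"
```

A push uses the same paths in the other direction: layers are uploaded under `/v2/<name>/blobs/uploads/`, and the manifest is then stored with a `PUT` to `/v2/<name>/manifests/<reference>`.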

Although you can create your own private registry to host your Docker images, some public and private registries are available on the cloud, where Docker users and partners can share images.

Public registries

Docker Hub is a popular public registry that offers the open community a place to store and share Docker images.

For more information about Docker Hub, see this website.

Private registries

If your organization needs to use Docker images privately and allow only your users to pull and push images, you can set up your own private registry. Available options for setting up a private registry are listed in Table 1-1.

Table 1-1 Private Docker registries

| Private registries | Description |
| --- | --- |
| Docker Trusted Registry (DTR) | Enables organizations to use a private internal Docker registry. It is a commercial product that is recommended for organizations that want to increase security when storing and sharing their Docker Images. |
| Open Source Registry | Available for IBM Z and it can be deployed as a Docker container on IBM Z. |

Consider the following points regarding Docker registries:

•Enable sharing and collaboration of Docker images

•Docker registries are available for private and public repositories

•Certified base images are available and signed by independent software vendors (ISVs)

Note: For more information about registries, see this website.

For details about how to implement a private registry service, see Chapter 6 of this publication.

Docker Engine

Docker Engine is the program that is used to create (build), ship, and run Docker containers. It uses a client/server architecture with a daemon that can be deployed on a physical or virtual host. The client (the docker command-line interface) communicates with the daemon to run the commands that are necessary to manage Docker containers. Docker Engine functions are shown in Figure 1-8.

Figure 1-8 Docker Engine functions
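The client/server split is visible in the Engine API itself: the docker CLI sends HTTP requests to the daemon, by default over the Unix socket `/var/run/docker.sock`. The following Python sketch, which uses only the standard library, sends a `GET` request over such a socket and decodes the JSON reply. `/version` and `/containers/json` are Engine API endpoints, but the helper names `UnixHTTPConnection` and `engine_get` are hypothetical, and access to the socket normally requires elevated privileges; within zCX, access to the Docker interfaces is governed by the instance's own security setup.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0) -> None:
        # "localhost" is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(socket_path: str, path: str):
    """Send GET <path> to the daemon listening on socket_path and return the parsed JSON."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Engine API returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host where you have access to the socket, `engine_get(DOCKER_SOCKET, "/containers/json")` returns roughly the same information that `docker ps` displays, which shows that the CLI is simply one client of the daemon's API.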

Consider the following points regarding the Docker Engine:

•Runs on any zCX instance or virtual Linux server locally.

•Uses Linux kernel cgroups and namespaces for resource management and isolation.

1.3 zCX architecture

zCX is a feature of z/OS V2R4 that provides a pre-packaged turnkey Docker environment that includes Linux and Docker Engine components and is supported directly by IBM. The initial focus is on base Docker capabilities. zCX workloads are zIIP-eligible, which gives zCX competitive price performance.

There is limited visibility into the Linux environment. Access is as defined by Docker interfaces. Also, there is little Linux administrative overhead.

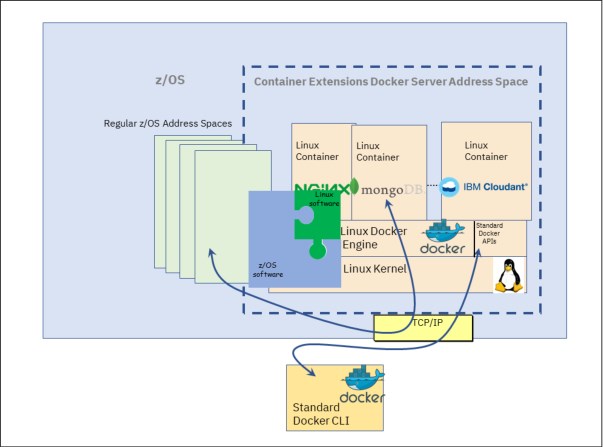

The basic architecture for zCX is described in Figure 1-9. zCX runs as an address space on z/OS that contains a Linux Kernel, Docker Engine, and the containers that can run within that instance.

Figure 1-9 zCX basic architecture

Within the zCX address space is the z/OS Linux Virtualization Layer. This layer allows virtual access to z/OS Storage and Networking. It uses the virtual interface to communicate with the Linux kernel, which allows zCX to support unmodified, open source Linux on Z kernels.

Linux storage and disk access is provided through z/OS owned and managed VSAM data sets. This allows zCX to leverage the latest I/O enhancements (for example, zHyperLinks, I/O fabric diagnostics, and so on), built-in host-based encryption, replication technologies, and IBM HyperSwap®. See Figure 1-10 for details.

Figure 1-10 Linux storage and disk access

Linux network access is provided through a high-speed virtual SAMEHOST link to the z/OS TCP/IP protocol stack. Each Linux Docker Server is represented by a z/OS owned, managed, and advertised Dynamic VIPA (DVIPA). This allows the restart of the zCX instance in another system in the sysplex. Cross memory services provide high-performance network access across z/OS applications and Linux Docker containers. To restrict external access, z/OS TCP/IP filters can be used. See Figure 1-11 for details.

Figure 1-11 Linux network access

CPU, memory, and workload management are all provided by z/OS services. Memory is provisioned through the zCX address space by using private, above-the-2-GB-bar, fixed memory that is managed by VSM (Virtual Storage Manager) and RSM (Real Storage Manager). Virtual CPUs are provisioned to each zCX address space. Each virtual CPU is a dispatchable thread (in other words, MVS TCB) within the address space. zIIP access is provided by the MVS dispatcher. Multiple Docker container instances can be hosted within a single zCX instance.

WLM policy and resource controls including service class association, goals, importance levels, tenant resource groups (including optional caps for CPU and real memory) are extended to zCX.

SMF data is available as well (types 30, 72, and so on), which enables z/OS performance management and capacity planning. Figure 1-12 provides more details.

Figure 1-12 Availability of SMF data

Multiple zCX instances can be deployed within a z/OS system. This allows for isolation of applications, different business/performance priorities (in other words, unique WLM service classes) and the capping of resources that are allocated to workloads (CPU, memory, disk, and so on). Each zCX address space has its own assigned storage, network, and memory resources. CPU resources are shared with other address spaces. Resource access can be influenced by configuration and WLM policy controls.

In summary, zCX provides “hypervisor-like” capabilities by using the z/OS Dispatcher, WLM, and VSM/RSM components to manage access to memory and CPU. The zCX virtualization layer manages the storage, network, and console access by using dedicated resources. There is no communication across zCX virtualization layer instances except through TCP/IP and disk I/O, which are mediated by z/OS. Figure 1-13 provides more details.

Figure 1-13 zCX “hypervisor-like” capabilities

1.4 Why use z/OS Container Extensions

Today’s enterprises increasingly deploy hybrid workloads. In this context, it is critical to embrace agile development practices and to leverage open source packages, Linux applications, and IBM and third-party software packages alongside z/OS applications and data. In today’s hybrid world, packaging software as container images is a best practice. zCX gives enterprises the best of both worlds: with zCX, you run Linux on Z applications, packaged as Docker containers, on z/OS alongside traditional z/OS workloads.

There are many reasons to consider using zCX, including these:

•You can integrate zCX workloads into your existing z/OS workload management, infrastructure, and operations strategy. Thus, you take advantage of z/OS strengths such as pervasive encryption, networking, high availability, and disaster recovery.

•You can architect and deploy a hybrid solution that consists of z/OS software and Linux on Z Docker containers on the same z/OS system.

•You can provide more open access to data analytics on z/OS by offering developers standard OpenAPI-compliant RESTful services.

In this way, clients take advantage of z/OS Qualities of Service, Colocation of Applications and Data, Integrated Disaster Recovery/Planned Outage Coordination, Improved Resilience, Security, and Availability.

1.4.1 Qualities of service

IBM Z and z/OS are known for having robust qualities of service (reliability, availability, scalability, security, and performance). In today’s business environment, as enterprises shift toward hybrid cloud architectures, taking advantage of these qualities helps them gain a competitive advantage.

Too often, hybrid cloud architectures span multiple computing platforms, each with different qualities of service, which leaves enterprises exposed to multiple points of failure. These exposures can lead to lost business, loss of revenue, and even tarnished reputations. The ability to run hybrid workloads within a single platform and OS can help to alleviate these exposures. With zCX, this ability can now become a reality:

•Because zCX runs under z/OS, it inherits many of the qualities of service of the z/OS platform.

•You are no longer required to manage each platform individually and then coordinate activities across those platforms.

1.4.2 Colocation of applications and data

By colocating applications and data, you can simplify many of the operational processes that would be required to manage workloads. Without colocation, events such as maintenance windows and moving workloads between CECs/sites require great coordination efforts that include non-z/OS administrators. Such efforts add complexity and increase the possibility for errors.

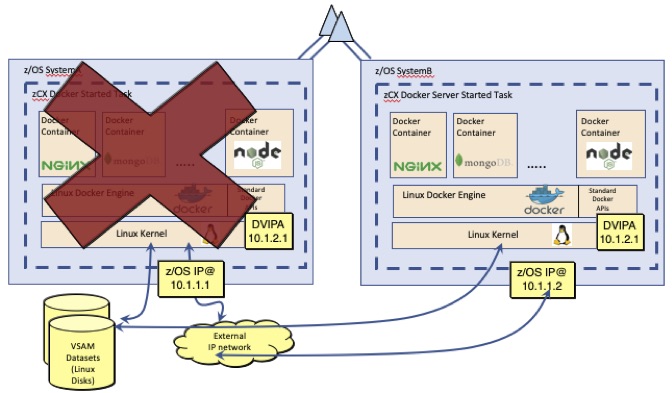

For Tier 1 applications that span both z/OS and Linux, zCX offers the ability to better integrate disaster recovery and planned outage scenarios. For example, with IBM GDPS® you can take advantage of its automatic, integrated restart capabilities to handle site switches or failures without having to coordinate activities across platforms.

Single points of failure can be eliminated by the use of HyperSwap for the VSAM data sets that zCX uses for storage. Support for Dynamic VIPAs allows for non-disruptive changes, failover, and dynamic movement of workloads.

The following figures depict some example scenarios for integrating Operations and Disaster Recovery.

In Figure 1-14, you would use automated operations facilities within a single LPAR to restart-in-place for both planned and unplanned outages.

Figure 1-14 Operations disaster recovery scenario #1

Figure 1-15 shows how you could use automated operations facilities within a single LPAR to restart-in-place or restart on another LPAR within the sysplex for both planned and unplanned outages.

Figure 1-15 Operations disaster recovery scenario #2

In the scenario shown in Figure 1-16, you would use automated operations facilities within a single LPAR to restart-in-place, restart on another LPAR within the sysplex, or restart in an alternate site leveraging GDPS (or another automated DR framework) for both planned and unplanned outages.

Figure 1-16 Operations disaster recovery scenario #3

1.4.3 Security

Data breaches are being reported with increasing frequency by organizations large and small. Such breaches lead to a loss of trust between the organization and its customers. Organizations are challenged to secure their data, and also to show auditors and government regulators that customer data is secure (especially sensitive customer data that might be used for fraud or identity theft). As a result, security is a major requirement for applications today.

Organizations are adopting container technologies to containerize their workloads and make them easier to deploy and test. Also, these technologies enable the migration of application workloads from one specific platform to another without needing code updates. Additionally, organizations are required to provide a higher level of security for mission-critical and highly sensitive data.

By building your container environment into zCX instances, you take advantage of the benefits from a hardware platform that includes specialized processors, cryptographic cards, availability, flexibility, and scalability. These systems are prepared to handle blockchain applications and also cloud, mobile, big-data, and analytics applications.

It is well known that IBM Z servers deliver industry-leading security features and reliability, with millisecond response times, hybrid cloud deployment, and performance at scale. The world’s top organizations rely on this platform to protect their data with a highly securable configuration to run mission-critical workloads.

zCX contains its own embedded Linux operating system, security mechanisms, and other features that can be configured to build a highly secure infrastructure for running container workloads. Through these benefits, zCX offers:

•Simplified, fast deployment and management of packaged solutions

•The ability to leverage security features without code changes

In the past, the manual way of individually installing applications and their dependencies could satisfy the needs of system administrators and application developers. However, the new business model (mobile, social, big data, and cloud) requires an agile development environment, speed to build, and consistency to quickly deploy complex applications. zCX therefore comes with a preinstalled operating system and application code that provide a securely configurable environment in which to deploy your container workloads.

When correctly configured, a zCX appliance makes your cloud infrastructure more secure and efficient for your Docker deployments. While zCX is a new capability on z/OS, it is still a started task address space, subject to the normal z/OS address space level security constraints.

A zCX instance runs as a standard z/OS address space. Docker containers that run inside a zCX instance run within that instance’s virtual machine, which has its own virtual addresses and has no way to address memory in other address spaces. Access to data in other address spaces is available only through TCP/IP network interfaces or virtual disk I/O.

In a zCX environment, you have access to the Docker CLI and you can deploy new containers, start/stop containers, inspect container logs, delete containers, and so on. However, you are isolated within the environment of your zCX instance and cannot affect other zCX instances, unless your installation explicitly permits such access.

There are several aspects to the security setup for a zCX environment. These range from the typical IBM RACF® requirements for z/OS user IDs, started tasks, file access, and USS (UNIX System Services) access to managing access to the Docker CLI.

In Chapter 3, “Security - Overview” on page 39, we discuss this in further detail. In Chapter 4, “Provisioning and managing your first z/OS Container” on page 47 and Chapter 9, “zCX user administration” on page 219, we provide examples of implementing different security approaches.

1.4.4 Consolidation

IBM Z servers feature a rich history of running multiple, diverse workloads, all on the same platform, with built-in security features that prevent applications from reading or writing to memory that is assigned to other applications that are running on the same box.

The IBM Z platform offers a high degree of scalability, with the ability to run many containers in a single zCX instance. The ability to scale up allows you to consolidate your x86 workloads onto IBM Z even further, without the need to keep multiple environments.

There are many reasons to containerize an application, but the most important relate to business requirements. If you have workloads that run on z/OS and parts of the same applications run on x86 servers, it makes sense to move your x86 workloads into containers under zCX. That way, the workloads are colocated and can take advantage of the qualities of service that IBM Z provides to an infrastructure. You can find examples of colocation in Chapter 8, “Integrating container applications with other processes on z/OS” on page 187.

With Docker, the application developers (or users) can pull the image from a repository and run a few command lines to get the entire application’s environment ready and accessible in just a few minutes.

1.4.5 Example use cases

There are many use cases that could be made possible by zCX. The following list gives some examples.

Note: This list does not constitute a commitment or statement of future software availability for zCX.

•Expanding the z/OS software ecosystem for z/OS applications to include support for:

– The latest microservices (Logstash, etcd, WordPress, and so on)

– NoSQL databases (MongoDB, IBM Cloudant®, and so on)

– Analytics frameworks (for example, expanding the z/OS Spark ecosystem, IBM Cognos® components)

– Mobile application frameworks (for example, MobileFirst Platform)

– Application Server environments (for example, IBM BPM, Portal Server, and so on)

– Emerging Programming languages and environments

•System Management components

– System management components in support of z/OS that are not available on z/OS

– Centralized databases for management

– Centralized UI portals for management products, as in these examples:

• IBM Tivoli® Enterprise Portal (TEPS)

• Service Management Unite (SMU)

•Open Source Application Development Utilities

– Complement existing z/OS ecosystem, Zowe, and DevOps tools

Note: Zowe is an open source project within the Open Mainframe Project that is part of The Linux Foundation.

– IBM Application Discovery server components

– IBM UrbanCode® Deploy Server

– GitLab/GitHub server

– Linux based development tools

– Linux Shell environments

– Apache Ant, Apache Maven

1.5 Additional considerations

Thanks to z/OS Container Extensions (zCX), z/OS environments can host Linux on Z applications as Docker containers for workloads that have an affinity for z/OS. z/OS Container Extensions does not replace traditional Linux on Z environments.

•If you are a client with Linux on Z installations, you might want to continue to run those installations because the gains from zCX might not be appreciable.

•If you are a z/OS client that once had a Linux on Z installation but no longer does, you should consider zCX.

•If you are a z/OS client who has never had a Linux on Z installation, then zCX is a low-effort way to try Linux on Z.
