Foreign lands

Plugging OpenCL in

13.1 Introduction

Up to this point, we have considered OpenCL in the context of the system programming languages C and C+ +; however, there is a lot more to OpenCL. In this chapter, we look at how OpenCL can be accessed from a selection of different programming language frameworks, including Java, Python, and the functional programming language Haskell.

13.2 Beyond C and C+ +

For many developers, C and C+ + are the programming languages of choice. For many others, this is not the case: for example, a large amount of the world’s software is developed in Java or Python. These high-level languages are designed with productivity in mind, often providing features such as automatic memory management, and performance has not necessarily been at the forefront of the minds of system designers. An advantage of these languages is that they are often highly portable, think of Java’s motto “write once, run everywhere,” and reduce the burden on the developer to be concerned with low-level system issues. However, it is often the case that it is not easy, sometimes it is even impossible, to get anything close to peak performance for applications written in these languages.

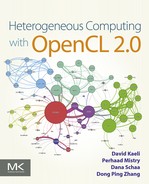

To address the performance gap and also to allow access to a wide set of libraries not written in a given high-level language, a foreign function interface (FFI) is provided to allow applications to call into native libraries written in C, C+ +, or other low-level programming languages. For example, Java provides the Java Native Interface, while Python has its own mechanism. Both Java (e.g. JOCL (Java bindings for OpenCL) [1]) and Python (e.g. PyOpenCL [2]) have OpenCL wrapper application programming interfaces (APIs) that allow the developer to directly access the compute capabilities offered by OpenCL. These models are fairly low level, and provide the plumbing between the managed runtimes and the native, unmanaged, aspects of OpenCL. To give a flavor of what is on offer, Listing 13.1 is a PyOpenCL implementation of vector addition.

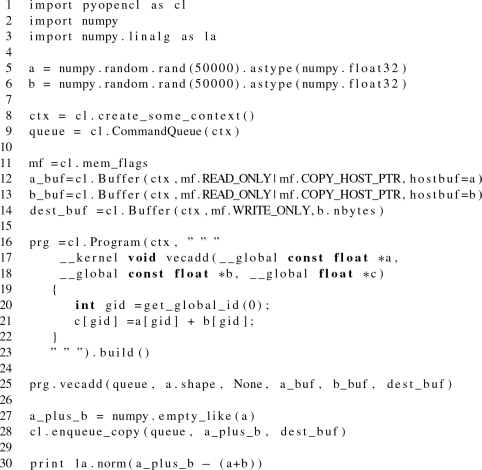

An example of moving beyond simple wrapper APIs is Aparapi [3]. Originally developed by AMD but now a popular open source project, Aparapi allows Java developers to take advantage of the computing power of graphics processing units (GPUs) and other OpenCL devices by executing data-parallel code fragments on the GPU rather than confining them to the local central processing unit (CPU). The Aparapi runtime system achieves this by converting Java bytecode to OpenCL at runtime and executing on the GPU. If for any reason Aparapi cannot execute on the GPU, it will execute in a Java thread pool. An important goal of Aparapi is to stay within the Java language both from a syntax point of view and from one of spirit. This design requirement can be seen from the source code to perform a vector addition, given in Listing 13.2, where there is no OpenCL C code or OpenCL API calls.

Instead, the Aparapi developer expresses OpenCL computations by generating instances of Aparapi classes, overriding methods that describe the functionality of a kernel that will be dynamically compiled to OpenCL at runtime from the generated Java bytecode.

Aparapi is an example of a more general concept of embedding a domain-specific language (DSL) within a hosting programming language: in this case, Java. DSLs focus on providing an interface for a domain expert, and commonly a DSL will take the form of a specific set of features for a given science domain—for example, medical imaging. In this case, the domain is that of data-parallel computations and in particular that of general-purpose computing on GPUs.

13.3 Haskell OpenCL

Haskell is a pure functional language, and along with Standard ML (SML) and its variants is one of the most popular modern functional languages. Unlike many of the other managed languages, Haskell (and SML) programming consists in describing functions, in terms of expressions, and evaluating them by application to argument expressions. In general, the model differs from imperative programming by not defining sequencing of statements and not allowing side effects. There is usually no assignment outside declarations. This is often seen as both a major advantage and a major disadvantage of Haskell. Combining side-effect free programming with Haskell’s large and often complex type system can often be an off-putting experience for the newcomer used to the imperative models of C, C+ +, or Java. However, side-effect free can be liberating in the presence of parallel programming, as in this case evaluating an expression will produce a single isolated result, which is thread-safe by definition. For this reason, Haskell has recently gained a lot of interest in the parallel programming research community. The interested reader new to Haskell would do well to read Hutton’s excellent book on programming in Haskell [4] and Meijer’s companion video series on Microsoft’s Channel 9 [5].

Owing to certain aspects of Haskell’s type system, it has proven to be an excellent platform for the design of embedded DSLs, which in turn provide abstractions that automatically compile the source code to GPUs. See, for example, Accelerate [6] or Obsidian [7] for two excellent examples of this approach. However, this is a book about low-level programming with OpenCL, and so here we stay focused, instead considering how the Haskell programmer can get direct access to the GPU via OpenCL. The benefits of accessing OpenCL via Haskell are many-fold but in particular

• OpenCL brings a level of performance to Haskell not achievable by existing CPU threading libraries;

• the high-level nature of Haskell significantly reduces the complexity of OpenCL’s host API and leads to a powerful and highly productive development environment.

There has been more than one effort to develop wrapper APIs for OpenCL in Haskell; however, we want more than a simple FFI binding for OpenCL. In particular, we want something that makes accessing OpenCL simpler, while still providing full access to the power of OpenCL. For this, we recommend HOpenCL [8], which is an open source library providing both a low-level wrapper to OpenCL and a higher-level interface that enables Haskell programmers to access the OpenCL APIs in an idiomatic fashion, eliminating much of the complexity of interacting with the OpenCL platform and providing stronger static guarantees than other Haskell OpenCL wrappers. For the remainder of this chapter, we focus on the latter higher-level API; however, the interested reader can learn more about the low-level API in the HOpenCL documentation. It should be noted that HOpenCL presently only supports the OpenCL 1.2 API. The advanced OpenCL 2.0 features such as device-queues and pipe objects have not yet been ported to HOpenCL.

As a simple illustration we again consider the vector addition described in Chapter 3. The kernel code is unchanged and is again embedded as a string, but the rest is entirely Haskell.

13.3.1 Module Structure

HOpenCL is implemented as a small set of modules all contained under the structure Langauge.OpenCL.

– Language.OpenCL.Host.Constants—defines base types for the OpenCL core API

– Langauge.OpenCL.Host.Core—defines the low-level OpenCL core API

– Language.OpenCL.GLInterop—defines the OpenGL interoperability API

– Language.OpenCL.Host—defines the high-level OpenCL API

For the most part, the following sections introduce aspects of the high-level API, and in the cases where reference to the core is necessary, it will be duly noted. For details of the low-level API, the interested reader is referred to the HOpenCL documentation [8].

13.3.2 Environments

As described in early chapters, many OpenCL functions require either a context, which defines a particular OpenCL execution environment, or a command queue, which sequences operations for execution on a particular device. In much OpenCL code, these parameters function as “line noise”—that is, technically necessary, they do not change over large portions of the code. To capture this notion, HOpenCL provides two type classes, Contextual and Queued, to qualify operations that require contexts and command queues, respectively.

In general, an application using HOpenCL will want to embed computations that are qualified into other qualified computations—for example, embedding Queued computations within Contextual computations and thus tying the knot between them. The with function is provided for this purpose:

13.3.3 Reference Counting

For OpenCL objects whose life is not defined by a single C scope, the C API provides operations for manual reference counting (e.g. clRetainContext/clReleaseContext). HOpenCL generalizes this notion with a type class LifeSpan which supports the operations retain and release:

The using function handles construction and release of new reference-counted objects. It introduces the ability to automatically manage OpenCL object lifetimes:

To simplify the use of OpenCL contexts (Context) and command queues (CommandQueue), which are automatically reference counted in HOpenCL, the operation withNew combines the behavior of the with function and the using function:

13.3.4 Platform and Devices

The API function platforms is used to discover the set of available platforms for a given system.

Unlike the C API, there is no need to call platforms twice, first to determine the number of platforms and second to get the actual list of platforms; HOpenCL manages all of the plumbing automatically. The only complicated aspect of the definition of platforms is that the result is returned within a monad m, which is constrained to be an instance of the type class MonadIO. This constraint enforces that the particular OpenCL operation happens within a monad that can perform input/output. This is true for all OpenCL actions exposed by HOpenCL, and is required to capture the fact that the underlying API may perform unsafe operations and thus needs sequencing.

After platforms have been discovered, they can be queried, using the overloaded (?) operator, to determine which implementation (vendor) the platform was defined by. For example, the following code selects the first platform and displays the vendor:

In general, any OpenCL value that can be queried by a function of the form clGetXXXInfo, where XXX is the particular OpenCL type, can be queried by an instance of the function:

For platform queries, the type of the operator (?) is

Similarly to the OpenCL C+ + wrapper API’s implementation of clGetXXXInfo, the type of the value returned by the operator (?) is dependent on the value being queried, providing an extra layer of static typing. For example, in the case of PlatformVendor, the result is the Haskell type String.

The devices function returns the set of devices associated with a platform. It takes the arguments of a platform and a device type. The device type argument can be used to limit the devices to GPUs only (GPU), CPUs only (CPU), all devices (ALL), or other options. As with platforms, the operator (?) is called to retrieve information such as name and type:

13.3.5 The Execution Environment

As described earlier, a host can request that a kernel be executed on a device. To achieve this, a context must be configured on the host that enables it to pass commands and data to the device.

Contexts

The function context creates a context from a platform and a list of devices:

If it is necessary to restrict the scope of the context—for example, to enable graphics interoperability—then properties may be passed using the contextFromProperties function:

Context properties are built with the operations noProperties, which defines an empty set of properties, and pushContextProperty, which adds a context property to an existing set. The operations noProperties and pushContextProperty are defined as part of the core API in Language.OpenCL.Host.Core:

Command queues

Communication with a device occurs by submitting commands to a command queue. The function queue creates a command queue within the current Contextual computation:

As CommandQueue is reference counted and defined within a particular Contextual computation, a call to queue will often be combined with withNew, embedding the command queue into the current context:

Buffers

The function buffer allocates an OpenCL buffer, assuming the default set of flags. The function bufferWithFlags allocates a buffer with the associated set of user-supplied memory flags (MemFlag is defined in Language.OpenCL.Host.Constants):

As buffers are associated with a Contextual computation (a Context), the using function can be used to make this association.

Data contained in host memory is transferred to and from an OpenCL buffer using the commands writeTo and readFrom, respectively:

Creating an OpenCL program object

OpenCL programs are compiled at runtime through two functions, programFromSource and buildProgram, that create a program object from the source string and build a program object, respectively:

The OpenCL kernel

Kernels are created with the function kernel:

Arguments can be individually set with the function fixArgument. However, often the arguments can be set at the point when the kernel is invoked, and HOpenCL provides the function invoke for this use case:

Additionally, it is possible to create a kernel invocation, which one can think of as a kernel closure, from a kernel and a set of arguments using the function setArgs (this can be useful in a multithreaded context):

A call to invoke by itself is not enough to actually enqueue a kernel; for this, an application of invoke is combined with the function overRange, which describes the execution domain and results in an event representing the enqueue, within the current computation:

Full source code example for vector addition

The following example source code implements the vector addition OpenCL application, originally given in Chapter 3, and is reimplemented here using HOpenCL:

This is the complete program!

13.4 Summary

In this chapter, we have shown that accessing OpenCL’s compute capabilities need not be limited to the C or the C+ + programmer. We highlighted that there are production-level bindings for OpenCL for many languages, including Java and Python, and focused on a high-level abstraction for programming OpenCL from the functional language Haskell.