5

How to Secure Compute Infrastructure

In this chapter, we will learn about the patterns that can be leveraged to secure a hybrid cloud compute infrastructure. A modern hybrid cloud infrastructure consists of the following compute types:

- Bare-metal servers

- Virtual machines (VMs)

- Containers

- Serverless

The pattern for securing compute varies with the compute type. The following diagram shows the different protection patterns for compute that will be discussed in this chapter:

Figure 5.1 – Patterns for securing cloud compute infrastructure

In the shared responsibility model for cloud security, the roles and responsibilities of the cloud provider and the consumer change based on the compute type consumed from the cloud. We will discuss patterns that provide varying degrees of isolation and security for bare-metal servers, VMs, containers, and serverless compute types.

We will cover the following main topics in this chapter:

- Securing physical (bare-metal) servers

- Trusted compute patterns

- Securing hypervisors

- Protecting VMs

- Securing containers

- Securing serverless implementations

Securing physical (bare-metal) servers

Problem

How to secure and protect bare-metal servers.

Context

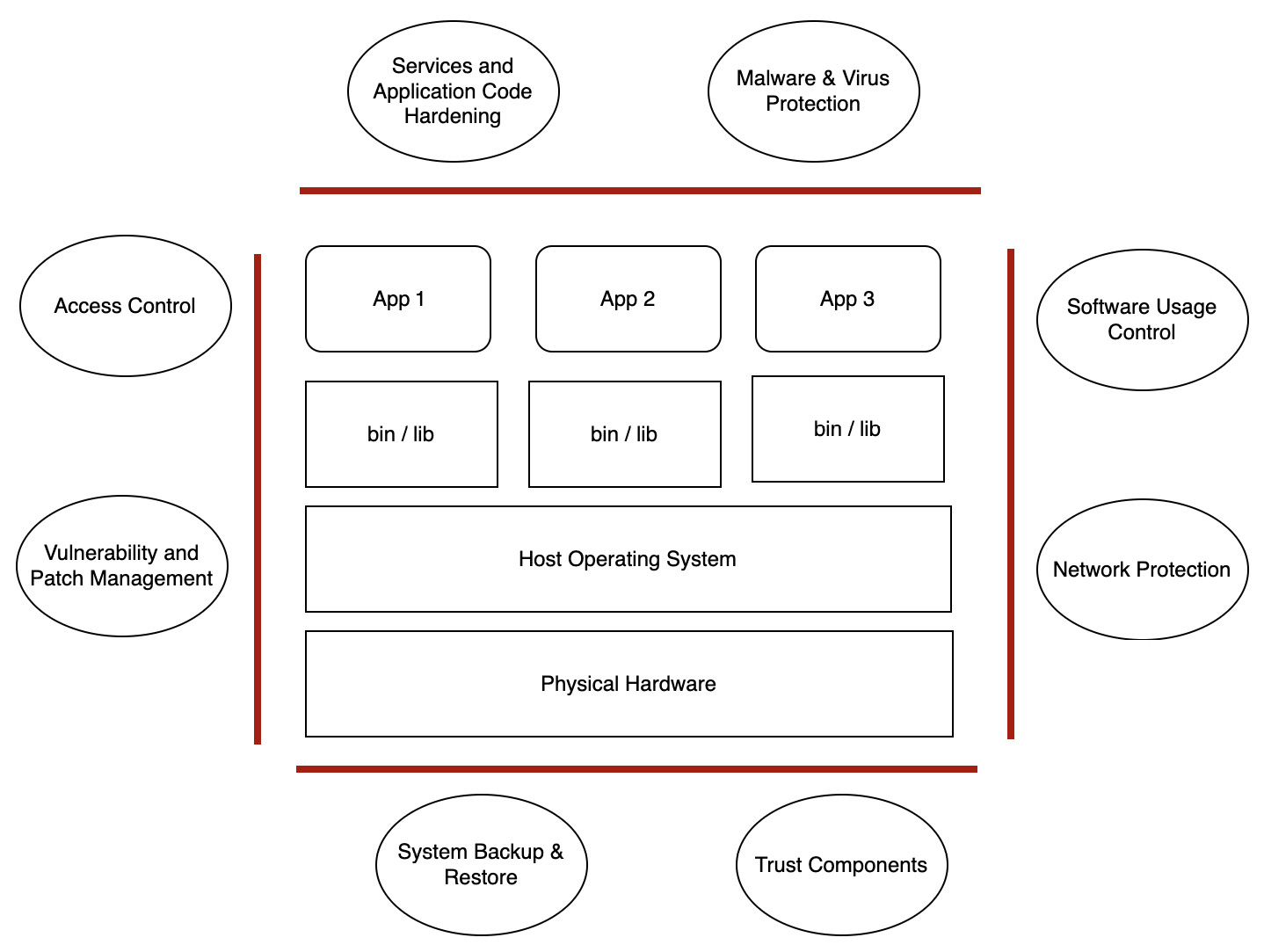

The foundation infrastructure is made up of bare-metal or physical servers. Bare-metal servers are also referred to as dedicated servers and provide maximum performance by delivering single tenancy. As shown in the diagram that follows, the security design needs to cover the different layers that make up the server. These layers include the physical hardware and the host operating system forming the bottom of the stack. The next layer is formed of the binaries and libraries that are leveraged by the operating system and hosted applications:

Figure 5.2 – Bare-metal server

The bare-metal server option provides direct root access to server resources, and the consumer can customize the environment to their needs. This flexibility, however, increases the security risk. Server security should protect critical components and services from exploits arriving through the network as well as from native attacks. For bare-metal servers, the cloud provider typically takes responsibility for the physical security of the servers but essentially none for logical security.

Solution

The primary solution pattern for securing bare-metal servers is called server hardening. Hardening is the process of improving a server’s security posture by limiting its exposure to threats and vulnerabilities, that is, by reducing its attack surface and attack vectors. In general, a single-function system is likely to be more secure than a multifunction one: the larger the vulnerability surface, the more the server is subject to threats. Hardening aims to reduce the number of attack vectors, while threat prevention and vulnerability detection measures aim, respectively, to close entry points for attack vectors and to detect them should they exist.

You can see a visual representation of this in the following diagram:

Figure 5.3 – Bare-metal protection

Let us look at the process of hardening in more detail, as follows:

- The first step of hardening is to review the users that have privileged access to the system and remove any that are unused or unwanted.

- The next step is to disable unwanted services and remove unnecessary software. This reduces the attack surface and the vulnerabilities in the bin/lib layer.

- The next logical step is to ensure that the operating system and software are free from known vulnerabilities, by setting the proper configuration and by patching. Patching is the process of fixing security vulnerabilities that could pose a risk to a business’s data and information. Vulnerability management is discussed in detail in Chapter 11.

- Network hardening ensures that the server has only the connectivity to the outside world and the internet that it actually requires. A separate private network needs to be planned for management functions. Network protection, isolation, and management are discussed in detail in the next chapter.
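The first two steps lend themselves to simple automated checks. The following Python sketch is illustrative only: it assumes the account list is available in `/etc/passwd` format and that the set of enabled services has already been collected (for example, from the init system). `ALLOWED_SERVICES` and the sample data are hypothetical.

```python
"""Illustrative host hardening audit (a sketch, not a complete tool)."""

# Hypothetical allowlist for a single-function server.
ALLOWED_SERVICES = {"sshd", "chronyd", "rsyslog"}


def privileged_accounts(passwd_text: str) -> list[str]:
    """Return accounts other than root that have UID 0 in /etc/passwd-format text."""
    flagged = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split(":")
        # /etc/passwd fields: name:password:UID:GID:GECOS:home:shell
        if len(fields) >= 3 and fields[2] == "0" and fields[0] != "root":
            flagged.append(fields[0])
    return flagged


def unexpected_services(enabled: set[str], allowed: set[str] = ALLOWED_SERVICES) -> set[str]:
    """Return enabled services that are not on the allowlist (candidates to disable)."""
    return enabled - allowed


sample_passwd = """root:x:0:0:root:/root:/bin/bash
backup_admin:x:0:0::/home/backup_admin:/bin/bash
alice:x:1000:1000::/home/alice:/bin/bash
"""
print(privileged_accounts(sample_passwd))                                  # ['backup_admin']
print(sorted(unexpected_services({"sshd", "telnet", "cups", "rsyslog"})))  # ['cups', 'telnet']
```

Flagging every non-root account with UID 0 is a deliberately conservative heuristic; a fuller review would also cover group memberships and sudo rules.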

Known uses

- Companies such as CrowdStrike and CyberArk provide a centralized management console from which administrators can monitor and protect their enterprise network and investigate and respond to incidents. The console can be deployed on-premises, in the cloud, or in a hybrid approach.

- Most large cloud service providers (CSPs) take care of patching the physical servers on their side. Many patch management tools are available from vendors such as Atera, NinjaOne, SolarWinds, SecPod, and ManageEngine.

Trusted compute patterns


Problem

How to ensure that the software runs on a trusted system.

Context

Cloud service consumers need to confirm that their software is running on trusted hardware. From the CSP’s perspective, there is a need to confirm that the software allowed on its hardware is authentic. Hardware should be able to prevent any unsigned software from running on the compute infrastructure, because malware and rootkits try to bypass initial security checks and launch themselves even before the operating system starts. In this pattern, we look at how the basic input/output system (BIOS) in a cloud-based server environment can be protected from malicious code.

Solution

The Trusted Computing Group (TCG) has developed and promoted a technology called trusted computing (TC). The key idea of TC is to provide hardware control over which software can be permitted to run on it. The mechanisms required to support this pattern include trusted platform modules (TPMs), hardware security modules (HSMs), and digital signatures (DSs). Trusted compute platforms are made available by the cloud provider with TPMs, which are HSMs that enable security assurance by validating DSs of code, starting at the BIOS using a measured boot.

You can see a visual representation of this in the following diagram:

Figure 5.4 – TC pattern

In this model, consistency is enforced by leveraging a combination of hardware and software controls. The hardware loads a unique encryption key from the HSM that is inaccessible to the rest of the system, including the owner or provider of the infrastructure. This security validation from the silicon chip and upward, combined with remote monitoring of this platform, provides cloud consumers with a means to verify that their workloads are running on trusted compute platforms that meet their security assurance requirements.
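The measured-boot chain of trust can be illustrated with the TPM extend operation, in which each stage’s measurement is folded into a platform configuration register (PCR) as new PCR = SHA-256(old PCR || SHA-256(component)). The following Python sketch simulates this with `hashlib`. The boot stage names are hypothetical, and a real remote attestation would also verify a TPM-signed quote of the PCR values, which is omitted here.

```python
import hashlib
import hmac


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM-style extend: new PCR = SHA-256(old PCR || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Measure each boot stage in order, starting from an all-zero SHA-256 PCR."""
    pcr = bytes(32)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Hypothetical boot stages; the golden value is recorded from a known-good platform.
TRUSTED_CHAIN = [b"firmware-v1.2", b"bootloader-v3", b"kernel-5.15"]
GOLDEN_PCR = measure_boot_chain(TRUSTED_CHAIN)


def attest(reported_pcr: bytes) -> bool:
    """Trust the platform only if the reported PCR matches the golden value."""
    return hmac.compare_digest(reported_pcr, GOLDEN_PCR)


tampered_chain = [b"firmware-v1.2", b"rootkit-loader", b"kernel-5.15"]
print(attest(measure_boot_chain(TRUSTED_CHAIN)))   # True
print(attest(measure_boot_chain(tampered_chain)))  # False
```

Because each value depends on every earlier one, changing any stage, or even their order, produces a different final PCR value, which is what lets a verifier detect a rootkit loaded before the operating system.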

A related topic, confidential computing, is discussed in the context of data-in-use protection in Chapter 9.

Known uses

- IBM Cloud provides enhanced security verification of compute environments by using Intel® Trusted Execution Technology (Intel TXT). TPM, also standardized as International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) 11889, is an international standard for a secure cryptoprocessor (https://en.wikipedia.org/wiki/Secure_cryptoprocessor), a dedicated microcontroller designed to secure hardware using integrated cryptographic keys. TCG has certified TPM chips manufactured by Infineon Technologies, Nuvoton, and STMicroelectronics, and has assigned TPM vendor identifiers (IDs) to Advanced Micro Devices, Atmel, Broadcom, IBM, Infineon, Intel, Lenovo, National Semiconductor, Nationz Technologies, Nuvoton, Qualcomm, Rockchip, Standard Microsystems Corporation, STMicroelectronics, Samsung, Sinosun, Texas Instruments, and Winbond (https://en.wikipedia.org/wiki/Trusted_Platform_Module).

- A reference implementation of a trusted compliant cloud based on technologies such as Intel TXT, TPM, virtualization by VMware, and cloud and data control by HyTrust is described in this document: https://builders.intel.com/docs/cloudbuilders/Trusted-compliant-cloud-a-combined-solution-for-trust-in-the-cloud-provided-by-Intel-HyTrust-and-VMware.pdf.

- Google provides Shielded VMs, which help ensure that when a VM boots, it runs code that hasn’t been compromised. Shielded VMs provide trusted firmware based on Unified Extensible Firmware Interface (UEFI) and a virtual trusted platform module (vTPM) that validates guest VM pre-boot and boot integrity and generates and protects encryption keys. Shielded VMs also include Secure Boot and Measured Boot to help protect VMs against boot- and kernel-level malware and rootkits. Integrity measurements collected as part of Measured Boot are used to identify any mismatches between the “healthy” baseline of the VM and its current runtime state.

Securing hypervisors

Problem

How to protect and secure hypervisors, the next key layer in the stack.

Context

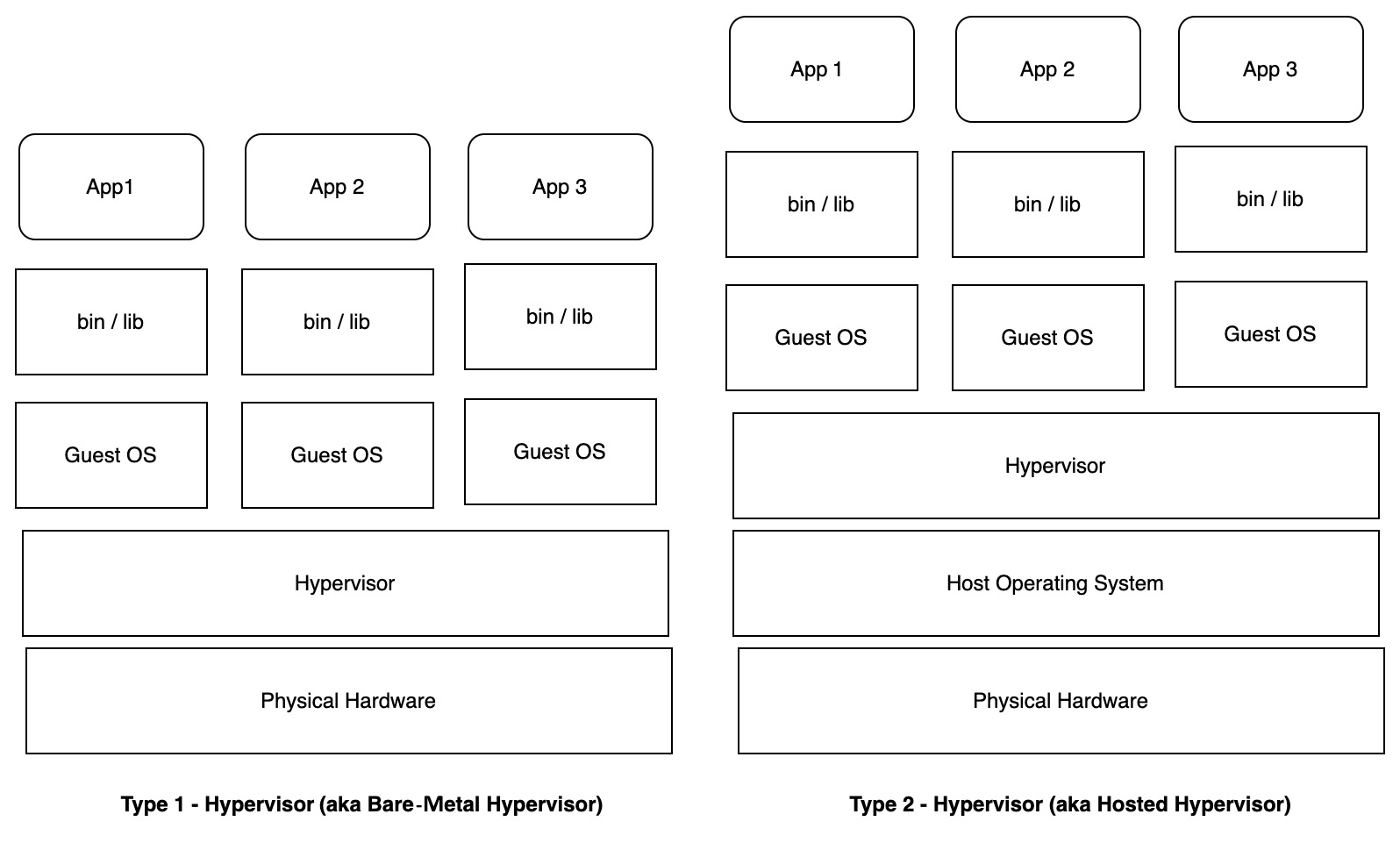

Virtualization and automation are the key enablers for the cloud. The virtualization layer sits between the physical infrastructure and the VMs. A hypervisor or VM manager is used to run numerous guest VMs and applications simultaneously on a single host machine and to provide separation between the guest VMs. As shown in the following diagram, virtualization provides a way to slice and dice physical infrastructure and provide this as VMs with variable configurations to the end users. The virtualization technology is delivered by hypervisors:

Figure 5.5 – Hypervisor types

As shown in the preceding diagram, there are two types of hypervisors. The Type 1 hypervisor is a component that sits directly on top of the bare-metal infrastructure. In the case of Type 2 hypervisors, there will be a host operating system layer in between the bare-metal and hypervisor. Depending on the type and the consumption model, the security management responsibility and protection of the stack vary. You can understand more about the hypervisor types by going through the links provided in the References section at the end of the chapter.

It is important to protect the hypervisor layer, because a compromised hypervisor can affect all guest VMs running on top of it: a breach due to any vulnerability in this layer can expose data private to the guest VMs. A hypervisor-based attack is an exploit in which an intruder takes advantage of vulnerabilities in the software that allows multiple guest operating systems to share common hardware. Here are some of the vulnerabilities and security threats applicable at the hypervisor layer:

- VM escaping–Hypervisors are designed to provide strong isolation between the host and the VMs, but an attacker who controls the operating system running inside a VM can exploit vulnerabilities in the hypervisor or its emulated devices to break this isolation. Once the isolation between VMs is broken, a guest VM might be able to control another VM or communicate with the host operating system, bypassing the hypervisor. Such an exploit gives attackers access to the host machine, from which they can launch further attacks. The VM can also take control of the compromised hypervisor, and attackers can even move VMs.

- Hypervisor management vulnerability–A hypervisor is like an operating system or platform that hosts multiple services, including VMs. Users leverage management consoles to control their VMs remotely. Any vulnerability in the client console or the server side of the hypervisor management program can provide backdoor entry to the host or platform, giving attackers easy access to break into the platform and control every VM. This type of security breach is a high risk for the virtual environment. If management is done through an administrative machine, hardening that machine is also important to prevent unauthorized access. Any major failure of the hypervisor can result in the collapse of the overall system, including the guest VMs. Enforcing security mechanisms in a virtualized environment is not a trivial task: such an environment concurrently runs multiple instances, applications, and even operating systems, which can be administered by multiple users with different access rights, on a single host or across multiple hosts. Users can also create, migrate, copy, and roll back VMs and run a multitude of applications. Another challenge is controlling both the host and the guest operating systems.

- Sniffing and spoofing–Sniffing and spoofing attacks work at the network interface layer. VMs communicate through virtual switches that form a network. Sniffing is a passive attack that captures data in transit: if not restricted, a VM can sniff packets traveling to and from other VMs on the network, or even across the whole network. Spoofing is an active attack in which an untrusted source impersonates a legitimate one, for example to establish an improper connection or to intrude.

- Hyperjacking–Hyperjacking is an attack in which a hacker takes malicious control of the hypervisor that creates the virtual environment on a VM host. The goal of the attack is to target the host operating system and run applications on the VMs while the VMs themselves remain completely oblivious to the attacker’s presence.

- VM theft–An attacker can exploit security flaws to access information on a physical machine. With this access or information, the attacker can copy or move a VM in an unauthorized manner. Such unauthorized copying or movement of VM files can cause a very high degree of loss to a cloud consumer if the VM contains sensitive files and data.

- VM sprawl–This is a situation in which the virtual environment contains many VMs without proper control or management. A legitimate VM may then not get the system resources it requires or requests (that is, memory, disks, network channels, and so on), because those resources are assigned to or locked by other VMs and are effectively lost.

Securing the hypervisor layer is the enterprise’s concern when it leverages bare-metal servers to set up its own private cloud and controls the virtualization layer. In most other cloud scenarios, the cloud operator typically takes care of hypervisor-layer hardening.
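VM sprawl can be tracked with periodic inventory reviews. The sketch below assumes a hypothetical inventory record (`VM`, with an owner and a last-activity date); it flags VMs that are unowned or idle beyond a threshold and totals the resources they hold. The 30-day threshold is an arbitrary example value.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class VM:
    name: str
    owner: Optional[str]  # None means nobody is accountable for the VM
    vcpus: int
    memory_gb: int
    last_active: date


def sprawl_candidates(inventory: list[VM], today: date, max_idle_days: int = 30) -> list[VM]:
    """Flag VMs that are unowned or have been idle longer than max_idle_days."""
    return [
        vm for vm in inventory
        if vm.owner is None or (today - vm.last_active).days > max_idle_days
    ]


def reclaimable(candidates: list[VM]) -> tuple[int, int]:
    """Total vCPUs and memory (GB) locked by the flagged VMs."""
    return sum(vm.vcpus for vm in candidates), sum(vm.memory_gb for vm in candidates)


inventory = [
    VM("web-01", "team-a", 4, 16, date(2024, 5, 30)),
    VM("test-old", "team-b", 8, 32, date(2024, 1, 2)),
    VM("orphan-7", None, 2, 8, date(2024, 5, 31)),
]
flagged = sprawl_candidates(inventory, today=date(2024, 6, 1))
print([vm.name for vm in flagged])  # ['test-old', 'orphan-7']
print(reclaimable(flagged))         # (10, 40)
```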

Solution

The same principles used for securing bare-metal servers also apply to hypervisor protection: access control, hardening of services at the hypervisor level, and vulnerability and patch management all apply to the respective hypervisor components. With regard to access control, it’s advisable to restrict copy and move operations for VMs containing critical or sensitive information. Additionally, to prevent attacks on VMs and limit VM theft, the cloud provider typically includes a firewall and an intrusion detection system (IDS) to secure and isolate VMs hosted on the same physical machine. The solution components are detailed in the following diagram:

Figure 5.6 – Securing a hypervisor
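The recommendation to restrict copy and move operations for sensitive VMs can be expressed as a small policy check. In this sketch, the classification labels, the operation names, and the `vm-data-custodian` role are all hypothetical; in practice, this logic would live in the virtualization platform’s role-based access control configuration rather than in application code.

```python
from dataclasses import dataclass, field

# Hypothetical classification labels, operation names, and approver role.
SENSITIVE_LABELS = {"confidential", "restricted"}
RESTRICTED_OPERATIONS = {"copy", "clone", "move", "export"}
APPROVER_ROLE = "vm-data-custodian"


@dataclass
class VMRecord:
    name: str
    labels: set[str] = field(default_factory=set)


def is_allowed(vm: VMRecord, operation: str, user_roles: set[str]) -> bool:
    """Deny copy/move-style operations on sensitive VMs unless the user holds the approver role."""
    sensitive = bool(vm.labels & SENSITIVE_LABELS)
    if sensitive and operation in RESTRICTED_OPERATIONS:
        return APPROVER_ROLE in user_roles
    return True  # other operations fall through to the platform's normal access control


payroll_db = VMRecord("payroll-db", {"confidential"})
print(is_allowed(payroll_db, "clone", {"vm-operator"}))     # False
print(is_allowed(payroll_db, "clone", {APPROVER_ROLE}))     # True
print(is_allowed(payroll_db, "power_on", {"vm-operator"}))  # True
```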

Known uses

The vSphere Security Configuration Guide (SCG) is the baseline for security hardening of VMware vSphere itself, and the core of VMware security best practices. Started more than a decade ago as the VMware vSphere Security Hardening Guide, it has long served as guidance for vSphere administrators looking to protect their infrastructure.

Protecting VMs

Problem

How to secure VMs.

Context

With virtualization, compute resources are made available in the cloud in the form of VMs. A VM is a server environment created within a physical computer, with its own guest operating system. The management plane of the hypervisor enables us to create and run multiple VMs. All the threats that are relevant for bare-metal servers also apply to VMs.

You can see a visual representation of this in the following diagram:

Figure 5.7 – VMs

VMs are subject to the following attacks, in addition to those that apply to bare-metal servers:

- A VM can get infected with malware or operating system rootkits at runtime. A rootkit is malicious software that gives unauthorized access to a computer; it is hard to detect and can conceal its presence within an infected system. Hackers use rootkit malware to remotely access a VM and to manipulate and steal its data.

- A malicious VM can potentially access other VMs through shared memory, network connections, and other shared resources. Attacks from the host operating system and/or co-located VMs are known as outside-VM attacks, and they are difficult to detect. In a cloud environment, multiple VMs are usually provisioned on the same physical server, and VMs sharing a server are exposed to cross-VM side-channel and covert-channel attacks. In a side-channel attack, a malicious VM breaks the isolation boundaries between VMs to access shared hardware and cache locations and extract confidential information related to the target VM.

- VM image sprawl is a situation caused by the massive duplication of VM image files. When cloud consumers create a large number of VMs from duplicated images, administration becomes enormously difficult if those VMs are infected and need security patching.
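One way to contain the administrative impact of image sprawl is to track which image each VM was created from, so that when an image is found to be vulnerable, every affected VM can be located for patching. A minimal sketch, assuming a hypothetical inventory that maps VM names to image identifiers:

```python
from collections import defaultdict


def vms_by_image(vm_images: dict[str, str]) -> dict[str, list[str]]:
    """Group VM names by the image they were created from."""
    groups = defaultdict(list)
    for vm_name, image_id in vm_images.items():
        groups[image_id].append(vm_name)
    return dict(groups)


def patch_targets(vm_images: dict[str, str], vulnerable_images: set[str]) -> list[str]:
    """Return every VM created from an image that is known to be vulnerable."""
    groups = vms_by_image(vm_images)
    return sorted(vm for image in vulnerable_images for vm in groups.get(image, []))


# Hypothetical inventory: VM name -> image identifier.
vm_images = {
    "app-01": "rhel8-base-2023q4",
    "app-02": "rhel8-base-2023q4",
    "db-01": "rhel9-base-2024q1",
    "dev-17": "rhel8-base-2023q4",
}
print(patch_targets(vm_images, {"rhel8-base-2023q4"}))  # ['app-01', 'app-02', 'dev-17']
```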

Solution

The hardening of a virtual server is similar to bare-metal server hardening. Hardening is the process by which the security of the virtual server is improved by reducing its exposure to threats and vulnerabilities.

You can see a visual representation of this in the following diagram:

Figure 5.8 – Securing VMs

Hardening includes the following:

- Removing any unnecessary usernames and logins.

- Removing unnecessary software.

- Removing any unnecessary drivers.

- Disabling any unwanted or unused services.

- Patching the VMs to bring the software up to the latest levels without any security vulnerabilities.

- Disabling or removing all unnecessary virtual hardware (central processing units (CPUs), random-access memory (RAM), and media devices) and limiting VMs’ direct access to physical resources.

- Performing integrity validation, signature checking, or encryption of the VM (guest operating system/server) to prevent unauthorized copying.

- Observing and monitoring change management procedures, remote auditing/control, and important statistics related to VM health, including the CPU utilization and overall bandwidth of both the VMs and the physical server. Where the underlying infrastructure cannot provide the capacity needed to meet VM demand, automation must be in place to migrate VMs across hosts to support the application demand.

- Depending on the shared responsibility model in the cloud, the cloud consumer may also be responsible for maintaining the underlying physical infrastructure, including its updates and security patches (for example, in a private cloud).
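Several of these hardening items can be checked automatically against a baseline. The sketch below assumes a hypothetical VM configuration dictionary and hypothetical baseline values; it does not call any real virtualization API, and a real implementation would read the configuration from the hypervisor’s management interface.

```python
# Hypothetical hardening baseline; replace with the organization's own standard.
BASELINE = {
    "forbidden_devices": {"floppy", "cdrom", "usb", "serial"},
    "max_vcpus": 8,
    "forbidden_services": {"telnet", "ftp", "rsh"},
}


def audit_vm(config: dict, baseline: dict = BASELINE) -> list[str]:
    """Compare a VM configuration (hypothetical format) with the hardening baseline."""
    findings = []
    for device in sorted(set(config.get("devices", [])) & baseline["forbidden_devices"]):
        findings.append(f"remove unnecessary virtual device: {device}")
    if config.get("vcpus", 0) > baseline["max_vcpus"]:
        findings.append(f"vCPU count {config['vcpus']} exceeds baseline of {baseline['max_vcpus']}")
    for service in sorted(set(config.get("services", [])) & baseline["forbidden_services"]):
        findings.append(f"disable unused service: {service}")
    if config.get("pending_security_patches", 0) > 0:
        findings.append(f"{config['pending_security_patches']} security patches pending")
    return findings


vm_config = {
    "name": "vm-42",
    "devices": ["disk", "nic", "cdrom", "usb"],
    "vcpus": 16,
    "services": ["sshd", "telnet"],
    "pending_security_patches": 3,
}
for finding in audit_vm(vm_config):
    print(finding)
```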

Known uses

- The tools used for VM hardening are similar to those listed in the known uses under bare-metal protection.

- Microsoft Defender for Endpoint provides endpoint security for Windows, macOS, Linux, Android, iOS, and network devices. It is delivered at cloud scale, with built-in artificial intelligence (AI), and enables the discovery of all endpoints. It offers vulnerability management, endpoint protection, endpoint detection and response (EDR), mobile threat defense, and managed hunting, all in a single, unified platform.

- Symantec provides endpoint protection that goes beyond antivirus to block advanced threats as well. Traditional antivirus does not work well in virtualized infrastructure, so some vendors, such as McAfee, provide antivirus optimized for virtual environments. This optimizes anti-malware protection for virtualized deployments, freeing hypervisor resources while ensuring that up-to-date security scans run according to policy.

Securing containers

Problem

How to secure containers.

Context

Containers use the underlying infrastructure more efficiently than VMs. Application components and all their dependencies are packed inside a container and executed in isolation from other workloads on the host.

As shown in the following diagram, containers do not have any guest operating system. Instead, the container leverages the operating system and environment of the underlying layer:

Figure 5.9 – Containers

Containers bring several advantages, an important one being build once, run anywhere. This is achieved by packing everything an application needs into a container image, which isolates the application from the server on which it runs. A container runtime (also known as a container engine, a software component deployed on the host operating system) is needed to run containers, and the image can run on any machine that has a container runtime deployed, such as a laptop or a server in a cloud environment. Containerized applications can be deployed across a cluster of servers, using container management platforms such as Kubernetes to automate the process. The security threats in a containerized environment are similar to those in a traditional deployment; however, running applications as containers changes how they are built, shipped, and run, and therefore changes the threat model. A closer look at the container threat model reveals both external and internal attackers, such as the following:

- External attackers include people or processes trying to gain access to deployments or images from outside

- Internal attackers are malicious insiders such as developers or administrators who have privileged access to the deployment as well as inadvertent actors who may have caused problems because of incorrect configuration

The routes to attacking a container-based deployment include the following:

- Exploiting vulnerable application code–Applications often use insecure or outdated libraries and packages, which tend to have many vulnerabilities that an attacker can exploit. As in any other application development project, badly written code is a security risk in container-based deployments as well and can expose the enterprise to attack.

- Gaining privileged access–Containers provide an easy way to package an application and its dependencies, but the creator of a container image often does not pay sufficient attention to configuring the image correctly. This can introduce weaknesses that an attacker can leverage to gain privileged access to the container instance. Container development also includes an important build step: attackers may try to inject a malicious library or code during the build phase and later use it as a backdoor to gain access to the container in production.

- Insecure container registry–Builds or container images are shared for deployment through container registries. Securing the container registry and controlling access to the images as part of the continuous integration/continuous deployment (CI/CD) process is an area of concern.

- Poor configuration and secrets exposure–Container deployment requires setting up secrets, ports, and underlying networks correctly. In a containerized deployment, secrets are shared with container code through several mechanisms, which makes this an area prone to attack. Examples of such secrets include passwords used to access databases, user-generated passwords, application programming interface (API) keys/credentials, and Secure Shell (SSH) keys or certificates. An incorrectly set up network configuration is another key area of vulnerability.

- Container runtime vulnerabilities–As discussed in the introduction of this section, containers run on top of a container runtime or container engine, such as runc, Docker, containerd, or CRI-O. Many of these container runtimes meet the specifications outlined by the Open Container Initiative (OCI). Following this standard promotes robustness and portability, and OCI compliance also means interoperability with container registries. Containers are not first-class objects of the operating system; they leverage Linux kernel primitives: namespaces (what you are allowed to see), control groups (cgroups: the amount of resources you are allowed to use), and Linux Security Modules (LSMs: what you are allowed to do). These kernel primitives allow you to set up secure, isolated, and metered execution environments for each process running inside the container. However, bugs in these primitives or in the runtime itself can make the container runtime vulnerable. Such vulnerabilities can be fatal if they allow a container to escape the isolation mechanisms and access the host operating system and other containers.

- Container management–Container deployment, orchestration, and management are done with tools such as Kubernetes. Complex deployments built with these tools involve many configuration and permission settings. If these are not set and managed correctly, they become a target for attackers.
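Secrets passed to containers as plain-text environment variables are one common form of the secrets exposure described above. The sketch below scans a container specification shaped like a Kubernetes container `env` list, in which each entry has either a literal `value` or a `valueFrom` reference (such as `secretKeyRef`). The name patterns are a heuristic assumption and will produce both false positives and false negatives.

```python
import re

# Heuristic patterns (an assumption, not an exhaustive rule set).
SECRET_NAME = re.compile(r"PASSWORD|PASSWD|SECRET|TOKEN|API_?KEY|PRIVATE_KEY", re.IGNORECASE)
PEM_PRIVATE_KEY = re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")


def plaintext_secrets(container_spec: dict) -> list[str]:
    """Return env var names that appear to carry secrets as literal values."""
    findings = []
    for var in container_spec.get("env", []):
        name = var.get("name", "")
        value = var.get("value")
        if value is None:
            continue  # injected by reference (e.g. valueFrom.secretKeyRef), not in plain text
        if SECRET_NAME.search(name) or PEM_PRIVATE_KEY.search(value):
            findings.append(name)
    return findings


container_spec = {
    "name": "orders-api",
    "env": [
        {"name": "DB_PASSWORD", "value": "example-only"},
        {"name": "LOG_LEVEL", "value": "info"},
        {"name": "PAYMENTS_API_KEY",
         "valueFrom": {"secretKeyRef": {"name": "payments", "key": "api-key"}}},
        {"name": "TLS_KEY", "value": "-----BEGIN RSA PRIVATE KEY-----MIIEexample"},
    ],
}
print(plaintext_secrets(container_spec))  # ['DB_PASSWORD', 'TLS_KEY']
```

Flagged values are candidates for moving into a secret store and injecting by reference, as the `PAYMENTS_API_KEY` entry already does.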

Solution

A container security pattern addresses the challenges discussed in the preceding context by following these best practices:

- Hardening the container environment–The container environment consists of the host operating environment, the container runtimes, and related services, including the container registry. The administrator needs to ensure that only the required services are running and that users and systems are given least-privilege access based on need. Avoid or shut down any additional services on these servers that are not required for their function, and limit container capabilities to what’s absolutely necessary to reduce the attack surface. The administrator also needs to lock down container privileges to prevent escalation attacks. Configuration and security reviews of both the containers and the underlying systems need to be done regularly.

- Keeping the container platform updated–Regularly patch the container platform, including all runtimes and container management tools, to keep it free from known vulnerabilities.
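Locking down container privileges and capabilities is commonly expressed in a Kubernetes container’s `securityContext`. The following sketch checks a container definition (as a Python dictionary parsed from a manifest) for the fields `privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, `readOnlyRootFilesystem`, and `capabilities`. It treats unset fields as permissive and looks only at container-level settings, not pod-level defaults; both are simplifying assumptions.

```python
def security_context_findings(container: dict) -> list[str]:
    """Flag risky or missing securityContext settings on one container definition."""
    ctx = container.get("securityContext", {})
    findings = []
    if ctx.get("privileged", False):
        findings.append("container runs in privileged mode")
    # Unset is treated as permissive here (a simplifying assumption).
    if ctx.get("allowPrivilegeEscalation", True):
        findings.append("allowPrivilegeEscalation is not false")
    if not ctx.get("runAsNonRoot", False):
        findings.append("runAsNonRoot is not true")
    if not ctx.get("readOnlyRootFilesystem", False):
        findings.append("root filesystem is writable")
    capabilities = ctx.get("capabilities", {})
    if "ALL" not in capabilities.get("drop", []):
        findings.append("capabilities are not dropped by default (drop: [ALL])")
    added = capabilities.get("add", [])
    if added:
        findings.append("extra capabilities added: " + ", ".join(added))
    return findings


hardened = {
    "name": "api",
    "securityContext": {
        "privileged": False,
        "allowPrivilegeEscalation": False,
        "runAsNonRoot": True,
        "readOnlyRootFilesystem": True,
        "capabilities": {"drop": ["ALL"]},
    },
}
risky = {"name": "debug", "securityContext": {"privileged": True, "capabilities": {"add": ["NET_ADMIN"]}}}
print(security_context_findings(hardened))    # []
print(len(security_context_findings(risky)))  # 6
```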

You can see a visual representation of such security processes in the following diagram:

Figure 5.10 – Container security

- Ensuring the enterprise only uses trusted containers–The next step is to ensure that the enterprise uses only trusted containers and downloads container images only from trustworthy repositories. The recommendation is to deploy from private registries rather than directly from untrusted sources. The organization needs to validate that each container image is free of vulnerabilities and does not contain outdated or insecure packages or components. It is also important to establish a process for reviewing reused internal components to ensure that they are safe and uncompromised, so that container images are secure. Roll back or replace high-risk containers with patched and updated ones. Unlike with the VM hardening pattern discussed earlier, bad containers can simply be thrown away and replaced with new ones without the vulnerabilities: containers are treated as immutable, so a patch takes the form of replacing the previous version with an updated one. Where possible, reduce image size and container lifetime. Unused containers should be shut down and removed, and short-lived containers are a secure design practice that enterprises can adopt.

- Automating vulnerability scanning–It’s critical to have visibility into a container’s entire life cycle, from build to deployment. Automated vulnerability scanning is recommended to ensure that no insecure container makes it into production. Scanning cannot be a one-time activity, so it needs to be integrated with the CI/CD pipeline, where a scan runs whenever content is changed or updated. When running containers fall behind on package updates, they should be replaced with rebuilt, patched images.

- Network segmentation–Isolating containers according to business requirements is good practice for reducing the blast radius of an attack, because it limits a compromise through the network layer to the minimum number of containers. Restrict container-to-container communication to the required minimum, and protect the required interactions with clear network segmentation and firewall rules. Auto-scaling can spin up additional containers at times, so segmentation and firewall rules must also apply to these new instances. Continuously monitor container network traffic for abnormal behavior and suspicious activities, and penetration-test the network configuration to ensure that security is enforced at all layers. Network security patterns are discussed in detail in the next chapter.

- Developer responsibility for security–The shift toward containers has given developers more responsibility for security. Consequently, developers need to learn what trusted container image development really means, how to properly vet code for security, how to adopt continuous security assessment, and how to minimize the attack surface of the overall container by removing unnecessary components. To truly keep containers secure, developers also need end-to-end (E2E) visibility into their entire container environment, so that they can immediately detect and block malware and other security threats before they cause significant damage. With strong attention to basic security best practices and benchmarks, administrators can make containers as secure as any other type of deployment. Today, most developers focus on the functionality and stability of their code; they also need guidance from their security focal point to ensure that their code runs in secure containers.

- Regular auditing–Regular auditing of the container environment, leveraging security benchmarking and hunting tools, is recommended.
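The trusted-container and automated-scanning practices above are often combined into a deployment gate in the CI/CD pipeline. The following sketch assumes a hypothetical private registry prefix, a list of findings already produced by some image scanner, and a policy that blocks `CRITICAL` and `HIGH` findings. It also requires images to be pinned by digest, so that the image that was scanned is the one that gets deployed.

```python
# Hypothetical policy values for illustration only.
TRUSTED_REGISTRIES = ("registry.internal.example.com/",)
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def admit(image: str, scan_findings: list[dict]) -> tuple[bool, list[str]]:
    """Decide whether an image may be deployed; return (allowed, reasons for denial)."""
    reasons = []
    if not image.startswith(TRUSTED_REGISTRIES):
        reasons.append("image is not from a trusted private registry")
    if "@sha256:" not in image:
        reasons.append("image is not pinned by digest")
    blocking = [f["id"] for f in scan_findings
                if f.get("severity", "").upper() in BLOCKING_SEVERITIES]
    if blocking:
        reasons.append("blocking vulnerabilities: " + ", ".join(blocking))
    return (not reasons, reasons)


pinned = "registry.internal.example.com/shop/cart@sha256:" + "0" * 64
print(admit(pinned, [{"id": "EXAMPLE-1", "severity": "LOW"}]))  # (True, [])
print(admit("docker.io/library/nginx:latest", [{"id": "EXAMPLE-2", "severity": "CRITICAL"}]))
```

Returning the list of reasons, rather than a bare yes/no, lets the pipeline report exactly why a build was stopped.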

Known uses

- Palo Alto Networks provides a container security solution that scans images to identify high-risk issues and prevent vulnerabilities, provides developers with trusted images, and gives runtime visibility into various containerized environments.

- Aqua provides a solution covering different aspects of container security that can be integrated with the CI/CD pipeline. This includes CI scans, Dynamic Analysis, Image Assurance, Risk Explorer, Vulnerability Shield, Runtime Policies, behavioral profiles, workloads firewall, secrets injection, auditing, and forensics.

- Red Hat Advanced Cluster Security for Kubernetes integrates with development operations (DevOps) and security tools to help mitigate threats and enforce security policies that minimize operational risk to containerized applications.

Securing serverless implementations

Problem

How to secure serverless deployments or cloud functions.

Context

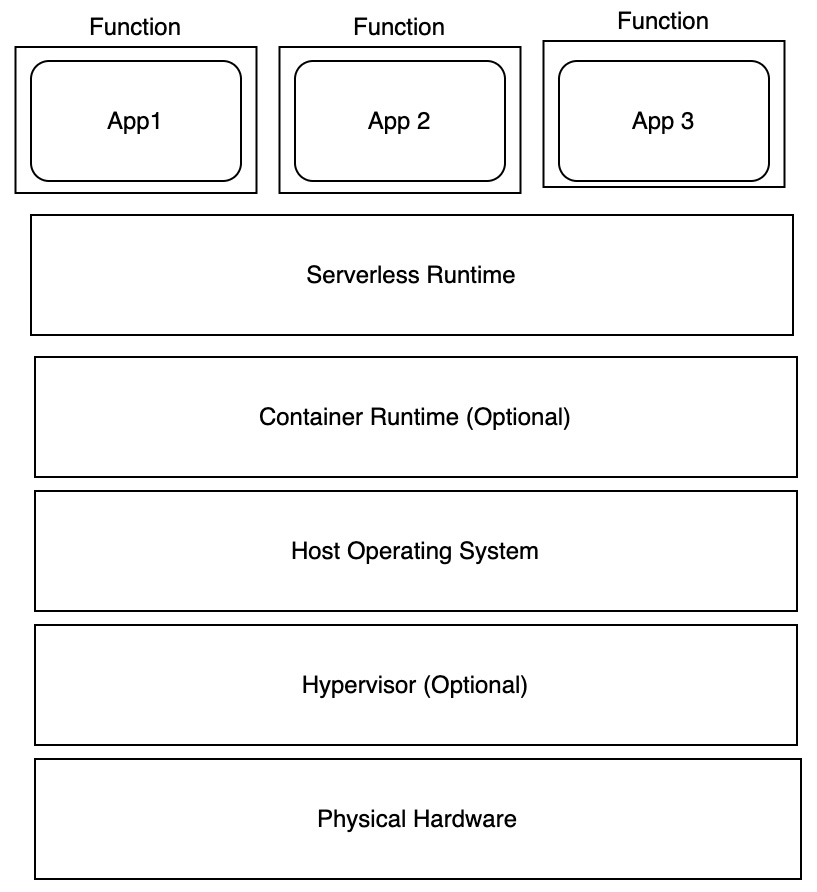

Serverless, as shown in the following diagram, is the latest of the compute type options. It is also called Function as a Service (FaaS). Amazon Web Services (AWS) Lambda, Azure Functions, IBM Cloud Functions, and Google Cloud Functions are popular examples of serverless computing models. In this model, an application is broken into separate functions that run when triggered by some action. The consumer is charged only for the processing time each function uses as it executes:

Figure 5.11 – Serverless or cloud functions

A major challenge with securing serverless functions is that they are short-lived, or ephemeral, which makes it challenging to monitor and detect malicious activity in them. Serverless functions rely on underlying components and other cloud services to execute their tasks. Cloud providers offer even less visibility into this underlying infrastructure than they do for containers, so it can be difficult to identify where and when a function runs; for example, two invocations of exactly the same function can run on completely different nodes. These dependencies can become complex and are hard to identify and harden. It is critical to ensure that cloud functions are secure, as they are heavily reused across applications: a vulnerability in one function can impact multiple services and applications. Any prominent security issue should be remediated automatically. Vulnerabilities can exist in the underlying server and runtime infrastructure as well as in the code, dependencies, and third-party libraries used by the function, and an attacker can exploit any of them to gain access to cloud resources.

Permissions and configuration settings for the functions are another area to lock down. Functions are frequently overprovisioned, especially in dev/test environments, meaning they are granted more permissions than they need to perform their task. Overprovisioned function permissions are high-risk: if an attacker gains access to the function, they can use those permissions to harm other services.
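Overprovisioning is often visible directly in the permission policy attached to a function. As a minimal sketch, assuming the function's role uses an AWS IAM-style JSON policy document (`Statement`, `Effect`, `Action`, `Resource`), the hypothetical `overbroad_statements` helper below flags `Allow` statements that use wildcard actions or an unrestricted `"*"` resource. It ignores conditions and `NotAction`, so its output is a prompt for review rather than a verdict.

```python
import json


def as_list(value):
    """IAM policy fields may hold a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overbroad_statements(policy: dict) -> list[str]:
    """Flag Allow statements with wildcard actions or an unrestricted resource."""
    findings = []
    for index, stmt in enumerate(as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements narrow access, so they are not flagged.
        for action in as_list(stmt.get("Action", [])):
            if "*" in action:
                findings.append(f"statement {index}: wildcard action '{action}'")
        for resource in as_list(stmt.get("Resource", [])):
            if resource == "*":
                findings.append(f"statement {index}: unrestricted resource '*'")
    return findings


if __name__ == "__main__":
    policy = json.loads("""
    {
      "Version": "2012-10-17",
      "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {"Effect": "Allow", "Action": ["dynamodb:GetItem"],
         "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"}
      ]
    }
    """)
    for finding in overbroad_statements(policy):
        print(finding)
```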

Solution

Because serverless is a new model for executing code, securing it also requires a paradigm shift. Traditional defense-in-depth (DiD) patterns, with firewalls surrounding applications, may not be effective for securing serverless. The organization must additionally build security around the functions themselves, while the cloud provider hardens and secures the underlying infrastructure that the functions run on. It's worth noting that in a true hybrid model, serverless computing can extend across one or more clouds as a set of microservices that cause resources to be started and, hopefully, terminated as needed.
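Building security around a function, rather than around a network perimeter, can start in the function code itself. The following is a minimal sketch of that idea, not a reproduction of any product: a hypothetical `guarded` decorator that rejects events missing required fields and emits one structured audit record per invocation, which also helps with the monitoring gap for short-lived functions discussed earlier. The `aws_request_id` attribute follows the AWS Lambda Python context object; the log field names and the `process_order` handler are assumptions.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("function-audit")


def guarded(required_fields):
    """Wrap a handler with input validation and one audit log record per call."""
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(event, context):
            start = time.monotonic()
            outcome = "error"  # Stays "error" if the handler raises.
            try:
                missing = [field for field in required_fields if field not in event]
                if missing:
                    outcome = "rejected"
                    return {"statusCode": 400, "body": json.dumps({"missing": missing})}
                result = handler(event, context)
                outcome = "ok"
                return result
            finally:
                # Runs for every invocation, including rejected and failed ones.
                logger.info(json.dumps({
                    "function": handler.__name__,
                    "request_id": getattr(context, "aws_request_id", None),
                    "outcome": outcome,
                    "duration_ms": round((time.monotonic() - start) * 1000, 2),
                }))
        return wrapper
    return decorator


@guarded(required_fields=["order_id"])
def process_order(event, context):
    # Hypothetical business logic: echo the order ID back.
    return {"statusCode": 200, "body": json.dumps({"order_id": event["order_id"]})}


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(process_order({"order_id": "A-100"}, None))
    print(process_order({}, None))
```

Keeping the audit record in a `finally` block means even failed or rejected invocations leave a trace, which matters when the function instance may disappear moments later.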

You can see a visual representation of how to secure serverless and cloud functions in the following diagram:

Figure 5.12 – Securing serverless or cloud functions

The key elements and tasks in this pattern for securing serverless functions include the following:

- Harden each function by enforcing the least-privilege access control principle, so that each function does no more and no less than what is required to execute its task.

- Scan the application code executed as part of the cloud function for code, package, or library vulnerabilities and malware. Also scan the dependent libraries that the serverless function uses indirectly.

- Ensure that the serverless environment is free from known security risks through continuous discovery, scanning, and monitoring.

- Check and validate the configuration to ensure that no sensitive data, secrets, keys, or other confidential elements are exposed through cloud functions.
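The last check in the list, making sure no secrets are exposed through a function's configuration, lends itself to automation. The sketch below assumes a configuration dictionary shaped like the one AWS Lambda reports for a function (`Environment.Variables`); the variable-name and value patterns are illustrative assumptions, not an exhaustive secret-detection rule set.

```python
import re

# Illustrative patterns only; real secret scanners use far larger rule sets.
SUSPICIOUS_NAME = re.compile(
    r"(SECRET|PASSWORD|PASSWD|TOKEN|API_?KEY|PRIVATE_?KEY)", re.IGNORECASE
)
SUSPICIOUS_VALUES = [
    ("AWS access key ID", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("PEM private key", re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")),
]


def find_exposed_secrets(function_config: dict) -> list[str]:
    """Flag environment variables whose name or value suggests a plaintext secret."""
    variables = function_config.get("Environment", {}).get("Variables", {})
    findings = []
    for name, value in variables.items():
        if SUSPICIOUS_NAME.search(name):
            findings.append(f"{name}: name suggests a secret stored in plain configuration")
            continue  # One finding per variable is enough.
        for label, pattern in SUSPICIOUS_VALUES:
            if pattern.search(value):
                findings.append(f"{name}: value matches pattern for {label}")
    return findings


if __name__ == "__main__":
    config = {
        "FunctionName": "orders-handler",
        "Environment": {"Variables": {
            "TABLE_NAME": "Orders",
            "DB_PASSWORD": "hunter2",
            "UPSTREAM_CREDENTIAL": "AKIAABCDEFGHIJKLMNOP",
        }},
    }
    for finding in find_exposed_secrets(config):
        print(finding)
```

A flagged variable is a candidate for moving into a dedicated secrets store that the function reads at runtime under its own narrowly scoped permissions.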

Known uses

- AWS describes a shared responsibility model for serverless security in which customers focus on the security of application code, the storage and accessibility of sensitive data, observing application behavior through monitoring and logging, and identity and access management (IAM) for the respective services. AWS takes care of server management and delivers flexible scaling with automated high availability (HA).

- Aqua provides tools for the secure execution of serverless functions. The tools ensure least-privilege permissions, scan for vulnerabilities, automatically deploy runtime protection, and detect behavioral anomalies.

- Imperva Serverless Protection embeds itself into the function in order to defend against new attack vectors emerging in serverless functions.

- Check Point CloudGuard uses a code-centric platform that automates security and visibility for cloud-native serverless applications from development to runtime. By analyzing serverless application code before and after deployment, organizations can maintain a continuous serverless security posture: automating application hardening, minimizing the attack surface, and simplifying governance.

Summary

In this chapter, we learned about the patterns for securing the different types of compute infrastructure. We provided context on the types of attacks that can occur at different layers of the compute infrastructure, and for each compute type in a modern hybrid cloud infrastructure (bare-metal servers, VMs, containers, and serverless), we discussed the patterns that can be used to secure it.

In the next chapter, we will learn about the patterns to secure a hybrid cloud network, which is an essential component of hybrid cloud infrastructure.

References

Refer to the following resources for more details on the topics covered in this chapter:

- IBM bare-metal servers using Intel® Trusted Execution Technology (Intel TXT): https://cloud.ibm.com/docs/bare-metal?topic=bare-metal-bm-hardware-monitroing-security-controls

- Virtual Trusted Platform Module for Shielded VMs: https://cloud.google.com/blog/products/identity-security/virtual-trusted-platform-module-for-shielded-vms-security-in-plaintext

- CyberArk endpoint protection, privilege management, and patching: https://www.cyberark.com/products/endpoint-privilege-manager

- Microsoft Defender for Endpoint: https://docs.microsoft.com/en-us/microsoft-365/security/defender-endpoint/microsoft-defender-endpoint

- McAfee Management for Optimized Virtual Environments AntiVirus: https://www.mcafee.com/enterprise/en-us/assets/data-sheets/ds-move-anti-virus.pdf

- Palo Alto Networks container security: https://www.paloaltonetworks.com/prisma/cloud/container-security

- Aqua container security: https://www.aquasec.com/products/container-security/

- Red Hat Advanced Cluster Security for Kubernetes: https://www.redhat.com/en/topics/security/container-security

- Aqua Security for Serverless Functions (FaaS): https://www.aquasec.com/products/serverless-container-functions/

- Imperva serverless security: https://www.imperva.com/products/serverless-security-protection/

- AWS shared security model for serverless: https://aws.amazon.com/blogs/architecture/architecting-secure-serverless-applications/

- Check Point CloudGuard serverless self-protection: https://www.checkpoint.com/cloudguard/serverless-security/